3

Inventing Discovery

Discovery is what science is all about

– N. R. Hanson, ‘An Anatomy of Discovery’, 1967.1

§ 1

On the night of 11/12 October 1492 Christopher Columbus discovered America. Either Columbus, on the Santa Maria, who claimed to have seen a light shining in the dark some hours before, or the lookout on the Pinta, who actually saw land by the light of the moon, was the first European since the Vikings to see the New World.2 They thought that the land they were approaching was part of Asia – indeed, throughout his lifetime (he died in 1506) Columbus refused to recognize that the Americas were a continent. The first cartographer to show the Americas as a vast land mass (not yet quite a continent) was Martin Waldseemüller in 1507.3

Columbus discovered America, an unknown world, when he was trying to find a new route to a known world, China. Having discovered a new land, he had no word to describe what he had done. Columbus, who had no formal education, acquired several languages – Italian, Portuguese, Castilian, Latin – to supplement the Genoese dialect of his childhood, but only Portuguese had a word (discobrir) for ‘discovery’, and it had acquired it very recently, only since Columbus had failed in his first attempt, in 1485, to get backing from the king of Portugal for his expedition.

The idea of discovery is contemporaneous with Columbus’s plans for his successful expedition, but Columbus could not appeal to it because he wrote the accounts of his voyage not in Portuguese but in Spanish and Latin. The nearest classical Latin verbs are invenio (find out), reperio (obtain) and exploro (explore), with the resulting nouns inventum, repertum and exploratum. Invenio is used by Columbus to announce his discovery of the New World; reperio by Johannes Stradanus for the title of his book of engravings illustrating new discoveries (c.1591); and exploro by Galileo to announce his discovery of the moons of Jupiter (1610).4 In modern translations these words are often represented by the word ‘discovery’, but this obscures the fact that in 1492 ‘discovery’ was not an established concept. More than a hundred years later Galileo still needed, when writing in Latin, to use convoluted phrases such as ‘unknown to all astronomers before me’ to convey it.i 5

In all the major European languages the same metaphorical use of a word meaning ‘uncover’ was soon adopted to describe the voyages of discovery. The lead was taken by the Portuguese, who had been the first to engage in journeys of exploration, attempting, in a series of expeditions beginning in 1421, to find a sea route to the spice islands of India by sailing along the coast of Africa (in the process proving, contrary to the established doctrines of the universities, that the equatorial regions are not too hot for human survival): in that language the word descobrir was already in use by 1484 to mean ‘explore’ (probably as a translation of the Latin patefacere, to lay open). In 1486, however, Fernão Dulmo proposed a quite new type of enterprise, a voyage westwards across the ocean into the unknown to find (descobrirse ou acharse, discover or find) new lands (this was two years after Columbus had proposed sailing westwards to reach China).6 The voyage probably never took place, but it would have been one of discovery rather than exploration. Dulmo discovered nothing; but his concept of discovery was soon to take on a life of its own.ii

The new word began to spread across Europe with the publication in 1504 of the second of two letters written (or supposedly written) by Amerigo Vespucci, in which he described his journeys to the New World in the service of the king of Portugal. This ‘Letter to Piero Soderini’, written and first published in Italian, had appeared in a dozen editions by 1516. In Italian it used discoperio nine times as an import from Portuguese; the Latin translation (based on a lost French intermediary), published in 1507, used discooperio twice.7 This was the first use of this word in the modern sense of ‘discover’: discooperio exists in late Latin (it occurs in the Vulgate), but only to mean ‘uncover’. Because it did not exist in classical Latin discooperio never established itself as a respectable term; in any case, discovery was such a new concept that at first it required explication. Vespucci helpfully explained that he was writing about the finding of a new world ‘of which our forefathers made absolutely no mention’.iii

The new word spread almost as fast as news of the New World. Fernão Lopes de Castanheda published História do descobrimento e conquista da Índia (i.e. the New World) in 1551, and this was quickly translated into French, Italian and Spanish, and later into German and English, and played a key role in consolidating the new usage. From its first appearances in the titles of books we can see how rapidly the word became established: Dutch – 1524 (but then not again until 1652); Portuguese – 1551; Italian – 1552; French – 1553; Spanish – 1554; English – 1563; German – 1613.

Here are some figures, in thousands, for the production of individual copies of printed books: of necessity, they are merely sophisticated estimates. The Printing Revolution was a very large-scale but at the same time very drawn-out process which neatly coincides with the Scientific Revolution (see below). In 1500 it was only just beginning to pick up speed:

| 1450–1500 | 1500–1550 | 1550–1600 | 1600–1650 | 1650–1700 | 1700–1750 |
|---|---|---|---|---|---|
| 12,589 | 79,017 | 138,427 | 200,906 | 331,035 | 355,073 |

(From Buringh & van Zanden, ‘Charting the “Rise of the West” ’ (2009), 418.)
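The pace of change implied by these estimates can be checked with simple arithmetic. The following is only a minimal sketch in Python: the names PRINT_RUNS and growth_factors are illustrative and do not come from the source, and the figures are the estimates given above, in thousands of copies.

```python
# Estimated output of printed books per half-century, in thousands of copies,
# as given in the table above (Buringh & van Zanden's estimates).
PRINT_RUNS = {
    "1450-1500": 12_589,
    "1500-1550": 79_017,
    "1550-1600": 138_427,
    "1600-1650": 200_906,
    "1650-1700": 331_035,
    "1700-1750": 355_073,
}


def growth_factors(series):
    """Return the ratio of each period's output to that of the period before it."""
    periods = list(series)  # dicts preserve insertion order
    return {
        f"{prev} -> {cur}": round(series[cur] / series[prev], 2)
        for prev, cur in zip(periods, periods[1:])
    }


if __name__ == "__main__":
    for step, factor in growth_factors(PRINT_RUNS).items():
        print(f"{step}: x{factor}")
```

On these estimates the largest proportional jump, roughly sixfold, falls between the half-century before 1500 and the half-century after it; every later step is less than a doubling.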

If discovery was new with Vespucci, then surely invention was not? In the sixteenth and seventeenth centuries gunpowder, printing and the compass were the three inventions of modernity most frequently cited to prove the superiority of the moderns to the ancients. All three pre-date Columbus, but I can find no example of them being cited in this way before 1492.8 It was the discovery of America which demonstrated the importance of the compass; in due course printing and gunpowder might have seemed of revolutionary importance, but as it happens they were only recognized as revolutionary in the post-Columbus age. And there are good reasons for this: the first battle whose outcome was determined by gunpowder is often said to be Cerignola in 1503, and printing had very little impact before 1500.

We are so used to the varied meanings of the word ‘discovery’ that it is easy to assume it always meant roughly what it means today. ‘I’ve just discovered I’m entitled to a tax refund,’ we say. But ‘discover’ in this sense comes after talk about Columbus discovering the New World; it’s the voyages of discovery which give rise to this loose use of ‘discover’ to mean ‘find out’, and this loose usage was encouraged by the practice of translating invenio as ‘discover’. The core meaning of ‘discovery’, after 1492, is not just an uncovering or a finding out: someone who announces a discovery is, like Columbus, claiming to have got there first, and to have opened the way for all those who will follow. ‘We have found the secret of life,’ Francis Crick announced to all and sundry in the Eagle pub in Cambridge on 13 February 1953, the day he and James Watson worked out the structure of DNA.9 Discoveries are moments in an historical process that is intended to be irreversible. The concept of discovery brings with it a new sense of time as linear rather than cyclical. If the discovery of America was a happy accident, it gave rise to another even more remarkable accident – the discovery of discovery.iv 10

I say ‘more remarkable’, for it is discovery itself which has transformed our world, in a way that simply locating a new land mass could never do.v Before discovery history was assumed to repeat itself and tradition to provide a reliable guide to the future; and the greatest achievements of civilization were believed to lie not in the present or the future but in the past, in ancient Greece and classical Rome. It is easy to say that our world has been made by science or by technology, but scientific and technological progress depend on a pre-existing assumption, the assumption that there are discoveries to be made.vi The new attitude was summed up by Louis Le Roy (or Regius, 1510–77) in 1575.11 Le Roy, who was a professor of Greek and had translated Aristotle’s Politics, was the first fully to grasp the character of the new age (I quote the English translation of 1594):

[T]here remayne more thinges to be sought out, then are alreadie invented, and founde. And let us not be so simple, as to attribute so much unto the Auncients, that wee beleeve that they have knowen all, and said all; without leaving anything to be said, by those that should come after them … Let us not thinke that nature hath given them all her good gifts, that she might be barren in time to come: … How many [secrets of nature] have bin first knowen and found out in this age? I say, new lands, new seas, new formes of men, maners, lawes, and customes; new diseases, and new remedies; new waies of the Heaven, and of the Ocean, never before found out; and new starres seen? yea, and how many remaine to be knowen by our posteritie? That which is now hidden, with time will come to light; and our successours will wonder that wee were ignorant of them.12

It is this assumption that there are new discoveries to be made which has transformed the world, for it has made modern science and technology possible.13 (The idea that there are ‘formes of men, maners, lawes, and customes’ also represents the birth of the idea of a comparative study of societies, cultures or civilizations.)14

Le Roy’s text helps us to distinguish events, words and concepts. There were geographical discoveries before 1486 (when Dulmo changed the meaning of the word descobrir), such as that of the Azores, which happened sometime around 1351 – but no one thought of them as such; no one bothered to record the event, for the simple reason that no one was very interested. The Azores were later rediscovered around 1427, but the event still seemed unimportant, and no reliable account survives. The governing assumption was that there was no such thing as new knowledge: just as when I pick up a coin that has been dropped in the street I know that it has belonged to someone else before me, so the first Renaissance sailors to reach the Azores will have assumed that others had been there before them. In the case of the Azores this was mistaken, while it was correct in the case of Madeira, discovered – or rather rediscovered – around the same time, for it was known to Pliny and Plutarch. But no one thought Columbus’s discovery of (as he thought) a new route to Asia was insignificant; there were disputes over whether America was or was not a previously unknown land, but no one claimed that any Greek or Roman sailor had made the voyage westward before Columbus. (There was an obvious explanation: the Greeks and the Romans did not have the compass and so were reluctant to sail out of sight of land.) Thus Columbus knew he was making a discovery, of a new route if not of a new land; the discoverers of the Azores did not.

Although there were already ways of saying that something had been found for the first time and had never been found before (and, indeed, people continued to rely on such phrases to convey ‘discovery’ when writing in Latin), it was very uncommon before 1492 for anyone to want to say anything of the sort, because the governing assumption was that there was ‘nothing new under the sun’ (Ecclesiastes 1:9). The introduction of a new meaning for descobrir implied a radical shift in perspective and a transformation in how people understood their own actions. There were, one can properly say, no voyages of discovery before 1486, only voyages of exploration. Discovery was a new type of enterprise which came into existence along with the word.

A central concern of the history of ideas, of which the history of science forms part, has to be linguistic change. Usually, linguistic change is a crucial marker of a modification in the way in which people think – it both facilitates that change and makes it easier for us to recognize it. Occasionally, focussing on a linguistic alteration can mislead us into thinking that something important has happened when it hasn’t, or that something happened at a particular moment when it actually happened earlier. There is no simple rule: one has to examine each case on its merits.vii Take the word ‘boredom’. Did people suffer from boredom before the word was introduced in 1829?15 Surely they did: they had the noun ‘ennui’ (1732), the noun ‘bore’ (1766) and the verb ‘to bore’ (1768). Shakespeare had the word ‘tediosity’. ‘Boredom’ is a new word, not a new concept, and certainly not a new experience (although it may have been a much more frequent experience in the age of Dickens than in the age of Shakespeare, and, where ennui was thought of as peculiarly French, boredom was certainly British). Other cases are a little more complicated. The word ‘nostalgia’ was coined (in Latin) in 1688 as a translation of the German Heimweh (homesickness). It first appears in English in 1729, well before ‘homesick’ and ‘homesickness’. The French had had ‘la maladie du pays’ since at least 1695. Was homesickness new? I rather doubt it, even if there was no word for it; what was new was the idea that it was a potentially fatal illness requiring medical intervention.16 It’s the absence of a simple rule, combined with the fact that much linguistic change lies in giving new meanings to old words, that explains why some of the most important intellectual events have become invisible: we tend to assume that discovery, like boredom, has always been there, even if there are more discoveries at some times than at others; we assume the words are new, but not the concepts that lie behind them. This is true of boredom, but in the case of discovery it is a mistake.

Some activities are language dependent. You cannot play chess without knowing the rules, so you cannot play chess without having some sort of language in which you can express, for example, the concept of checkmate. It doesn’t matter exactly what that language is: a rook is exactly the same piece if you call it a castle, just as a Frisbee was the same thing when it was called a Pluto Platter. In the absence of the word ‘rook’ you could use some sort of phrase, such as ‘the piece which starts out in one of the four corners’, just as you can call a Frisbee a flying disc, but you would find it pretty awkward to keep using phrases like this and would soon feel the need for a specialized word. Individual words and longer phrases can do the same job, but individual words usually do it better. And the introduction of a new word, or a new meaning for an old word, often marks the point at which a concept enters general use and begins to do real work.

Since you cannot play chess without knowing that you are doing so, no matter by what name you call the game, playing chess is what has been called ‘an actor’s concept’, or ‘an actor’s judgement’: you have to have the concept in order to perform the action.17 It is often difficult to work out quite where to draw the line when dealing with actor’s concepts. You can surely experience Schadenfreude, malicious enjoyment of the misfortunes of others, without having a word for it, so Schadenfreude was not new when the word entered the English language late in the nineteenth century; but having the word made it much easier to recognize, describe and discuss. It led people to a new understanding of human motivation: the word and the concept go together. So, too, people were surely embarrassed by awkward social encounters before the word ‘embarrass’, which originally meant to hinder or encumber, acquired a new meaning in the nineteenth century, but they were much more aware of embarrassment after they had a word for it. Only then did children begin to find their parents embarrassing. Schadenfreude and ‘embarrassment’ are not quite actor’s concepts, in that you can experience them without having a word for what it is that you are experiencing, but the words are intellectual tools which enable us to discuss emotional states it would be difficult to talk about without them, and indeed having the words makes it much easier for us to experience and identify the emotional states in a pure and unambiguous form.

So although there were discoveries and inventions before 1486, the invention and dissemination of a word for ‘discovery’ marks a decisive moment, because it makes discovery an actor’s concept: you can set out to make discoveries, knowing that is what you are doing. Le Roy attacks the idea that everything worth saying has already been said and that all that is left for us to do is to expound and summarize the works of our predecessors, urging his readers to make new discoveries: ‘Admonishing the Learned, to adde that by their owne Inuentions, which is wanting in the Sciences; doing that for Posteritie, which Antiquitie hath done for us; to the end, that Learning be not lost, but from day to day may receive some increase.’18

It is worth pausing for a moment over Le Roy’s vocabulary: he uses inventer and l’invention frequently; he writes of how ‘many wonderful things [such as the printing press, the compass and gunpowder] unknown to antiquity have been newly found’; but he also uses the word decouvremens, immediately translatable as ‘discoveries’: ‘decouvremens de terres neuves incogneuës à l’antiquité’; ‘Des navigations & decouvremens de païs’; truth, he says, has not been ‘entierement decouverte’.19 In his usage the meaning of the word has not yet moved far from its original reference to voyages of discovery. Did he need the word to formulate his argument? Perhaps not. What he did need was the example of Columbus, who was for him, as by then for everyone else, the proof that human history was not simply a history of repetitions and vicissitudes; that it could become – that it was in the process of becoming – a history of progress.

§ 2

To claim that discovery was new in 1492, when Columbus discovered America (or in 1486, when Dulmo talked of making discoveries; or in 1504, when Vespucci disseminated the new word across Europe) may seem plain wrong. After all, the learned humanist Polydore Vergil published in 1499 a book which has been recently translated under the title On Discovery (De inventoribus rerum, or On the Inventors), and which looks at first sight as if it is a history of discovery through the ages.20 Vergil’s book was enormously successful, appearing in over one hundred editions.21 The question Vergil asks himself over and over again is: ‘Who invented … ?’; and he runs through a seemingly endless chain of topics, such as language, music, metallurgy, geometry. In nearly every case he finds a range of different answers to his question in his sources but, in brief, his argument is that the Romans and the Greeks attribute most inventions to the Egyptians, from whom they were acquired by the Greeks and the Romans; while the Jews and the Christians insist that the learning of the Egyptians came from the Jews, above all from Moses. (Had Vergil consulted Islamic authorities, he would have found agreement that all learning came from the Jews, but with Enoch rather than Moses identified as the key figure.)22

There are several striking features about Vergil’s display of erudition. He is interested in first founders, rather than in the long-term development of a discipline. So he has virtually nothing to say about progress.viii When it comes to philosophy and the sciences, he identifies no significant contributions from Muslims (Avicenna (980–1037) is the only Muslim mentioned, and the Arabs are not even given credit for Arabic numerals), or Christians: nearly everything that matters happened long ago. He does, it is true, mention a small number of modern inventions – stirrups, the compass, clocks, gunpowder, printing – but he has almost nothing to say about new observations, new explanations or new proofs. Aristotle qualifies as an innovator only because he had the first library, Plato because he said God made the world, Aesculapius because he invented tooth-pulling, Archimedes because he was the first to make a mechanical model of the cosmos. Hippocrates of Chios is included not because he wrote the first geometry textbook but because he engaged in trade. Euclid is not mentioned, Ptolemy only as a geographer not as an astronomer, and Herophilus (the ancient anatomist) only for comparing the rhythms of the pulse to musical measures. If we use the word ‘discovery’ to mean something different from ‘inventions’ (and, of course, Vergil had only one word, inventiones, to cover both meanings), there are only two discoveries in Vergil: Anaxagoras’s explanation for eclipses and Parmenides’ realization that the Evening Star and the Morning Star are the same star. (We cannot really extend the category of discovery to include, for example, the claim that the blood of a dove, wood pigeon or swallow is the best cure for a black eye, although some cultural relativists would argue we should.)

These discoveries are included purely by accident, for Vergil’s model is a long chapter in Pliny’s Natural History (c.78 CE) entitled ‘On the first inventors of diverse things’ which lists numerous inventions (the plough, the alphabet), including some ‘sciences’ (astrology and medicine), and some technologies (the crossbow) but not a single specific discovery. Pythagoras’s theorem (which is only vaguely hinted at by Vergil in discussing the architect’s square), Archimedes’ principle, the anatomical discoveries of Erasistratus – one could compile a long list of all that is missing from both Pliny and Vergil and could have been included if either of them had been interested in discovery as opposed to first founding, or invention, or innovation. There is a simple test of the claim that there is no discovery in Vergil: in the three early-modern-English translations the word ‘discover’ appears in a relevant sense only once: ‘Orestus, son to Dencalion, discovered the vine about Mount Aetna in Sicily’ (1686).23 Needless to say, Vergil makes no mention of the contemporary voyages of discovery, although he went on revising his text until 1553.

So in ancient Rome, whose texts Vergil knew exceptionally well, and in the Renaissance prior to 1492, there was no concept of discovery.ix However, the ancient Greeks did have the concept (they used words related to eureka: heuriskein, eurisis; words which can mean ‘invention’ or ‘discovery’), and developed a literary genre on invention: heurematography.x Eudemus (c.370–300 BCE) wrote histories of arithmetic, geometry and astronomy. They do not survive except in so far as they were quoted in later works; the history of geometry was an important source for Proclus (412–85), whose commentary on Book I of Euclid was first printed (on the basis of a defective manuscript) in the original Greek in 1533, and then in a far superior Latin translation in 1560. Proclus credits Pythagoras, for example, with discovering the theorem we now call Pythagoras’s theorem, and Menelaus the theorem that is the mathematical foundation for Ptolemaic astronomy. Had Vergil had an opportunity to read Proclus, some of this might have made its way into his text, but it is unlikely that he would have absorbed the concept of discovery. Much of Greek culture had been assimilated by the Romans, but they had found the concept of discovery indigestible, and it is unlikely that Vergil, trained to think like a Roman, would have responded differently.xi

§ 3

Vergil was one of the leading humanist intellectuals of the sixteenth century, by which date a humanist education (that is to say, an education in writing Latin like a classical Roman) was widely accepted as the best way to introduce young men to the world of learning, for it provided skills that were easily transferable to politics and business. But in the universities, as opposed to the schoolrooms, humanist scholarship was not the central concern. From the end of the eleventh century until the middle of the eighteenth century there was a fundamental continuity in the teaching of universities across Europe: philosophy was the core subject in the curriculum, and the philosophy taught was that of Aristotle.xii Aristotle’s natural philosophy was to be found in four texts: his Physics, On the Heavens, On Generation and Corruption and Meteorology, and what we think of as scientific subjects were primarily addressed through commentaries on these texts.24

Aristotle believed that knowledge, including natural philosophy, should be fundamentally deductive in character. Just as geometry starts from undisputed premises (a straight line is the shortest distance between two points) to reach surprising conclusions (the square on the hypotenuse is equal to the sum of the squares of the other two sides), so natural philosophy should start from undisputed premises (the heavens never change) and draw conclusions from them (the only form of movement that can carry on indefinitely without changing is circular movement, so all movement in the heavens must be circular). Ideally, it should be possible to formulate every scientific argument in syllogistic terms, a syllogism being, for example:

All men are mortal.

Socrates is a man.

So Socrates is mortal.
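The deductive shape of this syllogism can be made explicit in a modern proof assistant. What follows is a minimal sketch in Lean 4, offered only as an illustration of the form of the argument, not as anything Aristotle or his commentators wrote; the names Person, Man, Mortal and socrates are hypothetical labels chosen for the example.

```lean
-- Illustrative names only: Person, Man, Mortal and socrates are assumptions.
-- Major premise: every man is mortal. Minor premise: Socrates is a man.
theorem socrates_mortal
    {Person : Type} (Man Mortal : Person → Prop) (socrates : Person)
    (all_men_mortal : ∀ p, Man p → Mortal p)
    (socrates_is_man : Man socrates) :
    Mortal socrates :=
  -- The conclusion follows by applying the general premise to the particular case.
  all_men_mortal socrates socrates_is_man
```

The proof term does nothing more than apply the universal premise to Socrates, which is the sense in which the conclusion is already contained in the premises.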

Aristotle explained natural processes in terms of four causes: formal, final, material and efficient. Thus if I construct a table, the formal cause is the design I have in mind; the final cause is my desire to have somewhere to eat my meals; the material cause is various pieces of wood; and the efficient causes are a saw and a hammer. Aristotle thought about the natural world in exactly the same way: that is to say, he saw it as the product of rational, purposive activity. Natural entities seek to realize their ideal form: they are goal oriented (Aristotle’s natural philosophy is teleological, the Greek word telos meaning ‘goal’ or ‘end’). Thus a tadpole has the form of a juvenile frog, and its goal, its final cause, is to become an adult frog. Somewhat surprisingly, the same principles apply to inanimate matter, as we shall see.

Aristotle held that the universe is constructed out of five elements. The heavens are made out of aether, or quintessence, which is translucent and unchanging, neither hot nor cold, dry nor damp. The heavens stretch outwards from the Earth, which is at the centre of the universe, as a series of material spheres carrying the moon, the sun and the planets, and then, above them all, is the starry firmament. The universe is thus spherical and finite; moreover, it is oriented: it has a top, a bottom, a left and a right. Aristotle never thinks in terms of space in the abstract (as geometers already did), but always in terms of place. He denied the very possibility of an empty space, a vacuum. Empty space was a contradiction in terms.

The sublunary world, the world this side of the moon, is the world of generation and corruption – the rest of the universe has existed unchanging from all eternity. In our world there are four primary qualities (hot and cold, wet and dry), and pairs of qualities belong to each of the four elements (earth, water, air and fire), earth, for example, being cold and dry. These elements naturally arrange themselves in concentric spheres outwards from the centre of the universe. Thus all earth seeks to fall towards the centre of the universe, and all fire seeks to rise to the boundary of the moon’s sphere; but water and air sometimes seek to go downwards, and sometimes upwards: Aristotle has no notion of a general principle of gravity.

A tadpole is potentially a frog, and as it grows it develops from potentiality to actuality. The element earth is potentially at the centre of the universe, and as it falls towards that centre it realizes its potential. All water is potentially part of the ocean which surrounds the earth: in a river it flows downhill in order to realize its potential. Water has weight when you take it out of its proper place: try lifting a bucket of water out of a pond. But when it is in its proper place it becomes weightless: when you swim in the ocean you cannot feel the weight of water on you. Aristotle thus does not think of the natural movement of the elements as movement through space; he sees it in teleological terms as the realization of potential. It is essentially a qualitative, not a quantitative, process.xiii

Aristotle does occasionally mention quantities. Thus he says if you have two heavy objects, the heavier one will fall faster than the lighter one, and if the heavy one is twice as heavy it will fall twice as fast. But he isn’t interested enough in the quantities involved to think this through. Does he mean that if you have a one-kilo bag of sugar and a two-kilo bag of sugar, the two-kilo bag will fall twice as fast as the one-kilo bag? Or does he mean only that if you have a cube made out of a heavy material, say mahogany, and another of the same size made out of a lighter material, say pine, and one is twice as heavy as the other, it will fall twice as fast? The two claims are very different, but Aristotle never distinguishes between them, nor tests his claim that heavy objects fall faster than light ones, for he takes it to be self-evidently true.
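The difference between the two readings can be set out in modern notation. This is a sketch under stated assumptions, not a formula found in Aristotle: the symbols v (speed of fall), W (weight), V (volume) and the constants k and k' are introduced here purely for illustration.

```latex
% Assumed symbols (not in the source): v = speed of fall, W = weight,
% V = volume, k and k' = unspecified constants of proportionality.

% Reading 1: speed depends on total weight, whatever the size of the body.
v = k\,W \quad\Longrightarrow\quad W_2 = 2W_1 \;\Rightarrow\; v_2 = 2v_1

% Reading 2: speed depends on weight per unit volume, i.e. on the material.
v = k'\,\frac{W}{V} \quad\Longrightarrow\quad V_2 = V_1,\; W_2 = 2W_1 \;\Rightarrow\; v_2 = 2v_1

% Under reading 2 the two bags of sugar have the same weight per unit volume:
\frac{W_2}{V_2} = \frac{2W_1}{2V_1} = \frac{W_1}{V_1} \;\Rightarrow\; v_2 = v_1
```

On the first reading the two-kilo bag of sugar falls twice as fast as the one-kilo bag; on the second the two bags fall at the same speed, and only the mahogany and pine cubes differ.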

Aristotle distinguished sharply between philosophy (which provided causal explanations) and mathematics (which merely identified patterns). Philosophy tells us that the universe consists of concentric spheres; the actual patterns made by the planets as they move through the heavens are a subject for astronomy, which is a sub-branch of mathematics. Astronomy and the other mathematical disciplines (geography, music, optics, mechanics) took their foundational principles from philosophy but elaborated these principles through mathematical reasoning applied to experience. Aristotle thus distinguished sharply between physics (which is part of philosophy and is deductive, teleological and concerned with causation) and astronomy (which is part of mathematics, and merely descriptive and analytic).

Aristotle was remarkable for his explorations of natural phenomena, studying, for example, the development of the chicken embryo within the egg. But as he was taken up in the universities of medieval and Renaissance Europe his works became a textbook of acquired knowledge, not a project to provoke further enquiry. The very possibility of new knowledge came to be doubted, and it was assumed that all that needed to be known was to be found in Aristotle and the rich tradition of commentary upon his texts. The Aristotle of the universities was thus not the real Aristotle but one adapted to provide an educational programme within a world where the most important discipline was taken to be theology. Just as theology was conducted in the form of a commentary upon the Bible and the Church Fathers, so philosophy (and within philosophy, natural philosophy, the study of the universe) was conducted in the form of a commentary upon Aristotle and his commentators. The study of philosophy was thus seen as a preparation for the study of theology because both disciplines were concerned with the explication of authoritative texts.xiv

What did this mean in practice? Aristotle took the view that harder substances are denser and heavier than softer substances; it followed that ice is heavier than water. Why does it float? Because of its shape: flat objects are unable to penetrate the water and remain on the surface. Hence a sheet of ice floats on the surface of a pond. Aristotelian philosophers were still happily teaching this doctrine in the seventeenth century, despite the fact that there were two obvious difficulties. It was incompatible with the teaching of Archimedes, who was available in Latin from the twelfth century, and argued that objects float only if they are lighter than the water they displace. The mathematicians followed Archimedes; the philosophers followed Aristotle. Moreover, ice was easily available in much of Europe: in Florence, for example, it was brought down from the Apennines throughout the summer in order to keep fish fresh. The most elementary experiment will show that ice floats no matter what its shape. The philosophers, confident that Aristotle was always right, saw no need to test his claims.25
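The Archimedean condition for floating can be written out in modern notation, which makes it plain why shape is irrelevant. This is a sketch under stated assumptions: the symbols and the approximate modern densities are introduced here for illustration and are not taken from the sources discussed.

```latex
% Assumed symbols: \rho = density of the body, \rho_w = density of water,
% V = volume of the body, g = acceleration due to gravity.

% A fully immersed body rises if it weighs less than the water it displaces:
\rho V g < \rho_w V g \iff \rho < \rho_w

% V cancels, so neither the size nor the shape of the body enters the condition.

% With rough modern values, \rho_{\text{ice}} \approx 917 and \rho_w \approx 1000 kg/m^3:
\frac{\rho_{\text{ice}}}{\rho_w} \approx 0.92 < 1
```

Ice is therefore lighter than the water it displaces whether it is a flat sheet or a lump, which is what the elementary experiment shows.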

This indifference to what we would call the facts is exemplified by Alessandro Achillini (1463–1512), a superstar philosopher, the pride of the University of Bologna.26 He was a follower of the Muslim commentator Averroes (1126–98), who studiously avoided introducing religious categories into the interpretation of Aristotle and so implicitly denied the creation of the world and the immortality of the soul. Achillini’s brilliance and the transgressive character of his thought were summarized in a popular saying: ‘It is either the devil or Achillini.’27 In 1505 he published a book on Aristotle’s theory of elements, De elementis, in which he discussed a question that had long been debated by the philosophers: whether the region of the equator would be too hot for human habitation. He quoted Aristotle, Avicenna and Peter of Abano (1257–1316), and concluded, ‘However, that at the equator figs grow the year round, or that the air there is most temperate, or that the animals living there have temperate constitutions, or that the terrestrial paradise is there – these are things which natural experience does not reveal to us.’28 As far as Achillini was concerned, the question of whether figs grow at the equator was as unanswerable as the question of where the Garden of Eden was located, and neither was a question for a philosopher.

As it happens, the Portuguese, in their search for an oceanic route to the spice islands, which involved sailing south along the coast of Africa, had reached the equator in 1474/75 and the Cape of Good Hope in 1488. In 1505 there were already maps showing the new discoveries. The very next year John of Glogau, a professor at Cracow, pointed out (in a mathematical rather than a philosophical work) that the island of Taprobane (Sri Lanka) was very near the equator, and was populous and prosperous.29 Experience had ceased to be something unchanging, identical with what was known to Aristotle, but Achillini was professionally unprepared for this development, even though he also lectured on anatomy, the most empirical of all the university disciplines.

By 1505 then the relationship between experience and philosophy needed to be rethought, but Achillini was incapable of grasping the problem.30 By contrast, Cardinal Gasparo Contarini’s book on the elements, published posthumously in 1548, explained that Aristotle, Avicenna and Averroes had all denied that the equator was habitable: ‘This question, which for many years was disputed between the greatest philosophers, experience has solved in our times. For from this new navigation of the Spaniards and especially of the Portuguese it has been discovered that there is habitation under the equinoctial circle and between the tropics, and that innumerable people dwell in these regions …’31

For Contarini, experience had taken on a new kind of authority. He died in 1542, the year before the publication of Copernicus’s On the Revolutions and Vesalius’s On the Fabric of the Human Body. It was not yet apparent that, once experience was accepted as the ultimate authority, it was only a matter of time before there emerged a new philosophy which would bring the temple of established knowledge crashing down. By 1572 it would be.

§ 4

Before Columbus the primary objective of Renaissance intellectuals was to recover the lost culture of the past, not to establish new knowledge of their own. Until Columbus demonstrated that classical geography was hopelessly misconceived, the assumption was that the arguments of the ancients needed to be interpreted, not challenged.32 But even after Columbus the old attitudes lingered. In 1514 Giovanni Manardi expressed impatience with those who continued to doubt whether human beings could withstand the equatorial heat: ‘If anyone prefers the testimony of Aristotle and Averroes to that of men who have been there,’ he protested, ‘there is no way of arguing with them other than that by which Aristotle himself disputed with those who denied that fire was really hot, namely for such a one to navigate with astrolabe and abacus to seek out the matter for himself.’33 Sometime between 1534 and 1549 Jean Taisnier, a musician and mathematician, remarked that Aristotle was sometimes mistaken; he was challenged by a representative of the pope to produce a convincing example of Aristotle being wrong. His opponents felt sure he would be unable to do so. His response was a lecture attacking Aristotle’s account of falling bodies, the weakest point in his physics.34

It is difficult for us to grasp the extent to which this continued to be the case long into the seventeenth century.xv Galileo tells the story of the professor who refused to accept that the nerves were connected to the brain rather than the heart because this was at odds with Aristotle’s explicit statement – and stood his ground even when he was shown the pathways of the nerves in a dissected cadaver.35 There is the famous example of the philosopher Cremonini, who, despite being a close friend of Galileo, refused to look through his telescope. Cremonini went on to publish a lengthy book on the heavens in which no mention was made of Galileo’s discoveries, for the simple reason that they were irrelevant to the task of reconstructing Aristotle’s thinking.36 In 1668 Joseph Glanvill, a leading proponent of the new science, found himself arguing against someone who dismissed all the discoveries made with telescopes and microscopes on the grounds that such instruments were ‘all deceitful and fallacious. Which Answer minds me of the good Woman, who when her Husband urged in an occasion of difference, I saw it, and shall I not believe my own Eyes? Replied briskly, Will you believe your own Eyes, before your own dear Wife?xvi And it seems this Gentleman thinks it unreasonable we should believe ours, before his own dear Aristotle.’37 Even the great seventeenth-century anatomist William Harvey, who discovered the circulation of the blood, referred to Aristotle approvingly as ‘the great Dictator of Philosophy’; to Walter Charleton, a founding member of the Royal Society and an opponent of scholasticism, he was quite simply ‘the Despot of the Schools’.38

§ 5

Thus religion, Latin literature and Aristotelian philosophy all concurred: there was no such thing as new knowledge. What looked like new knowledge was, consequently, simply old knowledge which had been mislaid, and history was assumed to go round in circles. On a large scale, the whole universe was held (at least if you put revealed truth to one side and listened to the astrologers) to repeat itself. ‘Everything that has been in the past will be in the future,’ wrote Francesco Guicciardini in his Maxims (left to his family at his death in 1540, and first published in 1857).39 As Montaigne put it in 1580, ‘the beliefs, judgements and opinions of men … have their cycles, seasons, births and deaths, every bit as much as cabbages do.’40 He could quote the best authorities: ‘Aristotle says that all human opinions have existed in the past and will do so in the future an infinite number of other times: Plato, that they are to be renewed and come back into being after 36,000 years’ (an alarming thought, given that the Biblical chronology implied that the world was only six thousand years old; Cicero’s figure of 12,954 years was not much better). Giulio Cesare Vanini wrote (in 1616; he was executed for atheism three years later): ‘Again will Achilles go to Troy, rites and religions be reborn, human history repeat itself. Nothing exists today that did not exist long ago; what has been, shall be.’ On a smaller scale the history of each society was supposed to involve an endless cycle of constitutional forms (anacyclosis), from democracy to tyranny and back again, and it was a small step to assume that cultures, too, recurred, along with constitutions.41

For Platonists, there could be no such thing as genuinely new knowledge, for Plato insisted that the soul already knew the truth, so that whatever seemed new was in fact really reminiscence (anamnesis). In the Meno Socrates persuaded an uneducated slave boy that he already knew that the square on the hypotenuse is equal to the sum of the squares of the other two sides. And of course it is true that a discovery often involves a recognition of the significance of something already known. When Archimedes cried ‘Eureka!’ and ran naked through the streets of Syracuse we say that he had discovered what we call Archimedes’ principle. We could equally say that Archimedes had recognized the implications of something he already knew – that he displaced water when he got into the bath. Recognition and reminiscence imply that our present and future experiences are just like our past experiences; discovery implies that we can experience something that no one has ever experienced before. The idea of discovery is inextricably tied up with ideas of exploration, progress, originality, authenticity and novelty. It is a characteristic product of the late Renaissance.

The Platonists’ doctrines of recurrence and reminiscence were not the real problem, however; both were endorsed by Proclus, who still wrote, as the Greeks did, in terms of discovery. The real obstacle, apart from unquestioning belief in Aristotle, was the even more unquestioning belief in the Bible. Where the Greeks and the Romans believed that human beings had started out as little better than animals and had slowly acquired the skills required for civilization, the Bible insisted that Adam had known the names of everything; Cain and Abel had practised arable and pastoral agriculture; the sons of Cain had invented metallurgy and music; Noah had built an ark and made wine; his near-descendants had set out to build the Tower of Babel. The suggestion that the various skills required for civilization had had to be invented over a long period of time or that there were significant types of knowledge of which Abraham, Moses and Solomon had no conception was simply unacceptable. The Greeks, the early Church Fathers pointed out, acknowledged their debt to the Egyptians, and it was easy to see that the Egyptians had acquired their learning from the Jews. ‘Stop calling your imitations inventions!’ Tatian (c.120–80) cried in exasperation, rejecting wholesale the claim that the Egyptians and Greeks had made discoveries unknown to the Jews.42

Christianity not only imposed an abbreviated chronology; the liturgy was constructed around an endless cycle, the annual recurrence of the life of Christ. ‘Annually, the Church rejoices because Christ has been born again in Bethlehem; as the winter draws near an end he enters Jerusalem, is betrayed, crucified, and the long Lenten sadness ended at last, rises from death on Easter morning.’ At the same time the sacrament of the Mass affirms ‘the perpetual contemporaneity of The Passion’ and celebrates the ‘marriage of time present with time past’.43

The idea of discovery simply could not take hold in a culture so preoccupied with Biblical chronology and liturgical repetition on the one hand, and secular ideas of rebirth, recurrence and reinterpretation on the other. Francis Bacon complained in 1620 that the world had been bewitched, so inexplicable was the reverence for antiquity. Thomas Browne protested in 1646 against the general assumption that the further back in time one went, the nearer one approached to the truth. (He surely had in mind Bacon’s insistence that the reverse is the case, that veritas filia temporis, ‘truth is the daughter of time.’)44 Symptomatic of this backward orientation of orthodox culture is the title of one of the most important books to describe the new discoveries of Columbus and Vespucci: Paesi novamente retrovati (Vicenza, 1507; Lands Recently Rediscovered). In the German translation that followed a year later this became Newe unbekanthe Landte (New Unknown Lands).45 This revision marks the first, local triumph of the new.

Of course, it is natural for us to think that there was much that was ‘new’ before 1492. But what looks new to us generally did not look so new (or at least not quite so incontrovertibly new) to contemporaries. An interesting test case is provided by the revolutionary developments in art that took place in Florence early in the fifteenth century. Leon Battista Alberti, returning there in 1434 after long years of exile (Alberti had, in his own view and in the view of his fellow Florentines, been born in exile, in 1404, and had spent the greater part of his adult life in Bologna and Rome), was astonished at what he saw. The new dome of Florence’s cathedral, designed by Brunelleschi, ‘vast enough to cover the entire Tuscan population with its shadow’, towered over the city, and a group of brilliant artists – Brunelleschi himself, Donatello, Masaccio, Ghiberti, Luca della Robbia – were producing work which seemed unlike anything that had gone before. ‘I used both to marvel and to regret that so many excellent and divine arts and sciences, which … were possessed in great abundance by the talented men of antiquity, have now disappeared and are almost entirely lost,’ he wrote in 1436. But now, looking at the achievements of the Florentine artists, he thought ‘our fame should be all the greater if without preceptors and without any model to imitate we invent [troviamo] arts and sciences hitherto unheard of and unseen.’46 Brunelleschi’s dome was ‘[s]urely a feat of engineering, if I am not mistaken, that people did not believe possible these days and was probably equally unknown and unimaginable among the ancients’. 
Faced with achievements that seemed to have no classical parallel, Alberti nevertheless felt obliged to express himself with great caution: ‘surely’, ‘if I am not mistaken’, ‘probably’.xvii Notably, Alberti singles out here Brunelleschi’s dome, not the art of perspective painting, which was his real subject: he and his successors were quite unclear as to whether the latter technique was brand new or simply a rediscovery of the techniques used by the ancient Greeks and Romans for painting stage sets, as described by Vitruvius. Alberti himself claimed (characteristically) in 1435 that the technique of perspective was ‘probably’ unknown to the ancients; Filarete in 1461 insisted it was completely unknown to them; but Sebastiano Serlio in 1537 took exactly the opposite view, saying bluntly ‘perspective is what Vitruvius calls scenographia.’47

In such circumstances the conviction that there was no new knowledge to be had might bow and crack, but it would not quite shatter. To get a sense of its resilience one need only think of Machiavelli, almost a hundred years later, who opens the Discourses on Livy (c.1517) with a reference to the (relatively recent) discovery of the New World and a promise that he, too, has something new to offer, only to turn sharply and insist that in politics, as in law and medicine, all that is required is a faithful adherence to the examples left by the ancients, so that it turns out that what he has to offer is not a voyage into the unknown but a commentary on Livy. Unsurprisingly, Machiavelli thought it perfectly obvious that, despite the invention of gunpowder, Roman military tactics remained the model that all should follow: the whole purpose of his Art of War (1519) was to write for those who, like himself, were delle antiche azioni amatori (lovers of the old ways of doing things).48

Naturally, Copernicus, half a century after the discovery of America, was careful to mention Philolaus the Pythagorean (c.470–385 BCE) as an important precursor in proposing a moving Earth.49 Copernicus’s disciple Rheticus, in the first published account of the Copernican theory, held back any reference to heliocentrism for as long as he possibly could, for fear of alienating his readers.50 The text of Thomas Digges’s Prognostication of 1576 emphasized the absolute novelty and originality of the Copernican system; but the illustration which accompanied the text made no mention of Copernicus, claiming to represent ‘the Cælestiall Orbes according to the most auncient doctrine of the Pythagoreans’, and in later reprints this phrasing was picked up in the table of contents and the chapter headline.51 Even Galileo, in his Dialogue Concerning the Two Chief World Systems (1632), repeatedly coupled Copernicus’s name with that of Aristarchus of Samos (c.310–230 BCE), to whom he (mistakenly) attributed the invention of heliocentrism.52 What was new was not yet admirable, and thus it presented itself, as best it could, within the carapace of the ancient. Few were prepared, as Le Roy was, to embrace novelty wholeheartedly.

Within a backward-looking culture the crucial distinction was not between old knowledge and new knowledge but between what was generally known and what was known only by a privileged few who had obtained access to secret wisdom.53 Knowledge, it was assumed, was never really lost. It either went underground, becoming esoteric or occult, or it was mislaid and would eventually turn up after centuries of lying neglected in some monastery library. As Chaucer had written in the fourteenth century:

… out of olde feldes, as men seyth,

Cometh al this new corn from yer to yere,

And out of old bokes, in good feyth,

Cometh al this newe science that men lere.54

The discovery of America was crucial in legitimizing innovation because within forty years no one disputed that it really was an unprecedented event, and one that could not be ignored.55 It was also a public event, the beginning of a process whereby new knowledge, in opposition to the old culture of secrecy, established its legitimacy within a public arena. The celebration of innovation had begun even before 1492, however. In 1483 Diogo Cão erected a marble pillar surmounted by a cross at the mouth of what we now call the Congo River to mark the furthest limit of exploration southwards. This was the first of what became a series of pillars, each designed to demarcate the boundaries of the known world, and thus to replace the Pillars of Hercules (the Strait of Gibraltar), which had done so for the ancient world. Then, after Columbus, the Spanish joined in. In 1516 Charles (the future Charles V, King of Spain and Holy Roman Emperor) adopted as his device the Pillars of Hercules with the motto plus ultra, ‘further beyond’, a motto later adopted by Bacon. (There is no satisfactory translation of plus ultra because it is ungrammatical Latin.)56 João de Barros was able to claim in 1555 that Hercules’ pillars, ‘which he set up at our very doorstep, as it were, … have been effaced from human memory and thrust into silence and oblivion’.57 One of Galileo’s opponents, Lodovico delle Colombe, complained in 1610/11 that Galileo behaved like someone setting sail on the ocean, heading out past the Pillars of Hercules and crying, ‘Plus ultra!’, when of course he should have recognized that establishing Aristotle’s opinion was the point at which enquiry should stop.58 Poor Lodovico, he does not seem to have realized that the discovery of America had made ridiculous the claim that one should never venture into the unknown.
Still, in June 1633, during Galileo’s trial, his friend Benedetto Castelli wrote to him, remarking that the Catholic Church seemed to want to establish new columns of Hercules bearing the slogan non plus ultra.59

But it took more than a century to make innovation – outside geography and cartography – respectable, and then it became respectable only among the mathematicians and anatomists, not among the philosophers and the theologians. In 1553 Giovanni Battista Benedetti published The Resolution of All Geometrical Problems of Euclid and Others with a Single Setting of the Compass, the title page of which announces boldly that this is a ‘discovery’ (‘per Joannem Baptistam de Benedictis Inventa’); he was following Tartaglia, who claimed to have invented his New Science (1537). But Tartaglia and Benedetti were exceptional in boasting of their achievements. A better marker of the new culture of discovery is the publication in 1581 of Robert Norman’s The Newe Attractive. Norman announced on his title page that he was the discoverer of ‘a newe … secret and subtill propertie’, the dip of the compass needle. Although he knew neither Greek nor Latin (though he did know Dutch), he knew enough about discovery to compare himself to Archimedes and Pythagoras, as described by Vitruvius. He had joined the ranks of those ‘overcome with the incredible delight conceived of their own devises and inventions’.60 When Francesco Barozzi’s Cosmographia was translated into Italian in 1607 its title page declared that it contained new discoveries (‘alcune cose di nuovo dall’autore ritrovate’); there had been no mention of these on the title page of the original edition of 1585. By 1608 it was possible to complain that ‘nowadays the discoverers of new things are virtually deified’. One precondition for this, of course, was that, like Tartaglia, like Benedetti, like Norman, and like Barozzi’s translator on his behalf, they made no secret of their discoveries.61

Two decades later a pupil of Galileo, newly appointed as a professor of mathematics at Pisa, complained that ‘of all the millions of things there are to discover [cose trovabili], I don’t discover a single one,’ and as a consequence he lived in ‘endless torment’.62 Since time began there have been impatient young men, anxious that they would fail to live up to their own expectations; but Niccolò Aggiunti may well have been the first to worry that he would never make a significant discovery. In Galileo’s circle, too, all that counted was discovery.

What was remarkable about the knowledge produced by the voyages of discovery was not just that it was indisputably new, nor that it was public. Geography had been transformed, not by philosophers teaching in universities, not by learned scholars reading books in their studies, not by mathematicians scribbling new theorems on their slates; nor had it been deduced from generally recognized truths (as recommended by Aristotle) or found in the pages of ancient manuscripts. It had been found instead by half-educated seamen prepared to stand on the deck of a ship in all weathers. ‘The simple sailors of today,’ wrote Jacques Cartier in 1545, ‘have learned the opposite of the opinion of the philosophers by true experience.’63 Robert Norman described himself as an ‘unlearned Mechanician’. The new knowledge thus represented the triumph of experience over theory and learning, and was celebrated as such. ‘Ignorant Columbus,’ wrote Marin Mersenne in 1625, ‘discovered the New World; yet Lactantius, learned theologian, and Xenophanes, wise philosopher, had denied it.’64 As Joseph Glanvill put it in 1661:

We believe the verticity of the Needle [i.e. that the compass points north], without a Certificate from the dayes of old: And confine not our selves to the sole conduct of the Stars, for fear of being wiser then our Fathers. Had Authority prevail’d here, the Earths fourth part [America] had to us been none, and Hercules his Pillars had still been the worlds Non ultra: Seneca’s Prophesie [that one could sail west to reach India] had yet been an unfulfill’d Prediction, and one moiety of our Globes, an empty Hemisphear.65

What is important here is not, despite what Diderot says, the idea that experience is the best way to acquire knowledge. The saying experientia magistra rerum, ‘experience is a great teacher’, was familiar in the Middle Ages: you don’t learn to ride a horse or shoot an arrow by reading books.66 What is important is, rather, the idea that experience isn’t simply useful because it can teach you things that other people already know: experience can actually teach you that what other people know is wrong. It is experience in this sense – experience as the path to discovery – that was scarcely recognized before the discovery of America.

Of course, the geographical discoveries themselves were only the beginning. From the New World came a flood of new plants (tomatoes, potatoes, tobacco) and new animals (anteaters, opossums, turkeys). This provoked a long process of trying to document and describe the previously unknown flora and fauna of the New World; but also, by reaction, a shocked recognition that there were all sorts of European plants and animals that had never been properly observed and recorded. Once the process of discovery had begun, it turned out that it was possible to make discoveries anywhere, if only one knew how to look. The Old World itself was viewed through new eyes.67

There was a second consequence of describing the new. For classical and Renaissance authors every well-known animal or plant came with a complex chain of associations and meanings. Lions were regal and courageous; peacocks were proud; ants were industrious; foxes were cunning. Descriptions moved easily from the physical to the symbolic and were incomplete without a range of references to poets and philosophers. New plants and animals, whether in the New World or the Old, had no such chain of associations, no penumbra of cultural meanings. What does an anteater stand for? Or an opossum? Natural history thus slowly detached itself from the wider world of learning and began to form an enclave on its own.68

§ 6

The noun ‘discovery’ first appears in its new sense in English in 1554, the verb ‘to discover’ in 1553, while the phrase ‘voyage of discovery’ was being used by 1574.69 Already by 1559 it was possible, in the first English application for a patent, made by the Italian engineer Jacobus Acontius, to talk of discovering, not a new continent, but a new type of machine:

Nothing is more honest than that those who by searching have found out things useful to the public should have some fruit of their rights and labours, as meanwhile they abandon all other modes of gain, are at much expense in experiments, and often sustain much loss, as has happened to me. I have discovered most useful things, new kinds of wheel machines, and of furnaces for dyers and brewers, which when known will be used without my consent, except there be a penalty, and I, poor with expenses and labour, shall have no returns. Therefore I beg a prohibition against using any wheel machines, either for grinding or bruising or any furnaces like mine, without my consent.70

His suit was eventually granted with the statement: ‘[I]t is right that inventors should be rewarded and protected against others making profit out of their discoveries.’xviii This may seem like an extraordinary shift in meaning, for it is easy to see how you might ‘uncover’ something that is already there, much harder to see how you could uncover something that has never previously existed; but it must have been facilitated by the range of meanings present in the Latin word invenio, which covered both finding and inventing. In 1605 this new idea of discovery was generalized by Francis Bacon in Of the Proficiency and Advancement of Learning. Indeed, Bacon claimed that he had discovered how to make discoveries:

And like as the West Indies [i.e. the Americas as a whole]xix had never been discovered, if the use of the Mariners’ Needle [the compass], had not been first discovered; though the one be vast Regions, and the other a small Motion. So it cannot be found strange, if Sciences be no further discovered, if the Art itself of Invention and Discovery, hath been passed over.71

Bacon’s claim to have invented the art (i.e. technique) of discovery depended on a series of intellectual moves. First, he rejected all existing knowledge as being unfit for making discoveries and useless for transforming the world. The scholastic philosophy taught in the universities, based on Aristotle, was, Bacon insisted, caught up in a series of futile arguments which could never generate new knowledge of the sort he was looking for. Indeed, he rejected the idea of a knowledge grounded in certainty, in proof. Aristotelian philosophy had been based on the idea that one ought to be able to deduce sciences from generally accepted first principles, so that all science would be comparable to geometry. Instead of proof, Bacon introduced the concept of interpretation; where before scholars had written about interpreting books, now Bacon introduced the idea of ‘the interpretation of nature’.72

What made an interpretation right was not its formal structure but its usefulness, the fact that it made possible prediction and control. Bacon pointed out that the discoveries which were transforming his world – the compass, the printing press, gunpowder, the New World – had been generated in a haphazard fashion. No one knew what might happen if a systematic search for new knowledge was undertaken. Thus Bacon rejected the distinction, so deeply rooted in his society, between theory and practice. In a society where a sharp line was drawn between gentlemen, who had soft hands, and craftsmen and labourers, whose hands were hard, Bacon insisted that effective knowledge would require cooperation between gentlemen and craftsmen, between book-learning and workshop experience.

Bacon’s central claim was thus that knowledge (at least, knowledge of the sort that he was advocating) was power: if you understood something, you acquired the capacity to control and reproduce nature’s effects.xx Far from the products of human expertise being necessarily inferior to the products of nature, human beings were in principle capable of doing far more than nature ever did, of doing things ‘of a kind that before their invention the least suspicion of them would scarcely have crossed anyone’s mind, but a man would simply have dismissed them as impossible’.73 Where the goal of Greek philosophy had been contemplative understanding, that of Baconian philosophy was a new technology. Bacon’s ambitions for this new technology were remarkable: it was to be a form of ‘magic’; that is, it was to do things which seemed impossible to those unacquainted with it (as guns seemed a form of magic to Native Americans).74

With this – the discovery of discovery – went a new commitment to what Bacon, when writing in English, called ‘advancement’, ‘progression’ or ‘proficiency’ (using the word in its original sense of ‘moving forward’), and his translators from 1670 called ‘improvement’ or, quite simply, ‘progress’. If the discovery of America began in 1492, so too did the discovery of progress. Bacon was the first person to try to systematize the idea of a knowledge that would make constant progress.75 In his lifetime he published three books outlining the new philosophy – The Advancement of Learning (1605 and, in an expanded Latin version, 1623), The Wisdom of the Ancients (1609), The New Organon (1620, the first part of a projected but unfinished larger work, The Great Instauration) – followed, posthumously, by The New Atlantis and Sylva sylvarum in 1626. Despite its Latin title, Sylva sylvarum is written in English. In Latin silva is a wood but also the collection of raw materials needed for a building. So Sylva sylvarum is literally ‘the wood of woods’, but, effectively, The Lumber Yard. Organon is the Greek word for a tool (Galileo calls his telescope an organon),76 so The New Organon provides the tools, the mental equipment, and the Sylva sylvarum provides the raw materials for Bacon’s enterprise.77

The books were read, but they had little influence, and the demand for them was modest: it took, for example, twenty-five years for The New Organon to appear in a second edition. Bacon had no disciples in England until the 1640s. (He had more influence in France, where a number of his works appeared in translation.)78 The reason for this is very simple: Bacon made no scientific discoveries himself. His claims for his new science were entirely speculative. It was only in the second half of the seventeenth century that he was rescued from relative obscurity and hailed as the prophet of a new age.

§ 7

While Bacon was writing about discovery, others were making discoveries. Slowly and awkwardly, in the course of the sixteenth century, there had come into existence a grammar of scientific discovery: discoveries occur at a specific moment (even if their significance comes to be apparent only over time); they are claimed by a single individual who announces them to the world (even if many people are involved); they are recorded in new names; and they represent irreversible change. No one devised this grammar, and no one wrote down its rules, but they came to be generally understood for the simple reason that they were based on the paradigm case of geographical discovery.xxi An early example of someone evidently confident in his understanding of how the rules worked is the anatomist Gabriele Falloppio. He recounted that when he first went to teach at the University of Pisa (1548) he told his students that he had identified a third bone in the ear (other than the hammer and anvil bones) which the great anatomist Andreas Vesalius had not noticed – it is, after all, the smallest bone in the human body. One of his students advised him that Giovanni Filippo Ingrassia, who was teaching in Naples, had already discovered this bone and named it stapes, or ‘the stirrup’. (Ingrassia had made the discovery in 1546, but his work was published only posthumously in 1603.) When Falloppio published in 1561 he acknowledged Ingrassia’s priority and adopted the name he had proposed for the new bone. His admirable behaviour did not go unnoticed: it was a textbook example for Caspar Bartholin in 1611.79 Falloppio knew the rules and was determined to play by them, for he wanted his own discovery to be duly acknowledged. Ingrassia could keep the stirrup bone; Falloppio had discovered the clitoris.80 It might be thought that it wouldn’t be very hard to discover the clitoris; but the standard view, inherited from Galen, was that men and women had exactly the same sexual parts, although they were folded differently; consequently, anatomists gave them the same names – the ovaries (as we now call them), for example, were simply the female testicles. The discovery of the clitoris was therefore another remarkable triumph of experience over theory, since it had no male equivalent but was unique to the female anatomy.81

The anatomists were thus pioneers in carefully recording who claimed to have discovered what: Bartholin’s 1611 textbook begins its account of the clitoris by recording the competing claims of Falloppio, whose claim he favours, and Realdo Colombo, Falloppio’s colleague and rival at the University of Padua (although he suspected, as we might, that it had already been known to the ancients).82 As a former student of medicine, and as a professor at Padua, where many of the new anatomical discoveries were made, from 1592 Galileo was certainly familiar with this new culture of priority claims: Falloppio’s best student, Girolamo Fabrizi d’Acquapendente, who had discovered the valves in the veins, was both his physician and a personal friend.

When Galileo pointed his telescope towards Jupiter on the night of 7 January 1610, he noticed what he took to be some fixed stars near the planet. Over the next night or two the relative positions of the stars and Jupiter changed oddly. At first, Galileo assumed that Jupiter must be moving aberrantly and the stars must be fixed. On the night of 15 January he suddenly grasped that he was seeing moons orbiting Jupiter. He knew he had made a discovery, and at once he knew what to do. He stopped writing his observation notes in Italian and started writing them in Latin – he was planning to publish.83 The moons of Jupiter were discovered by one person at one moment of time, and from the beginning – not retrospectively – Galileo knew not only exactly what it was that he had discovered but that he had made a discovery.

Because Galileo rushed into print, his claim to priority was undisputed. He later claimed that it was in 1610 that he first observed spots on the sun, but he was slow to publish, and in 1612 both he and his Jesuit opponent Christoph Scheiner were advancing competing priority claims.84 They disagreed about how to explain what they had seen, but at least they agreed that the drawings they both published were illustrations of the same phenomena. Matters are not always so straightforward. The classic problem case is the discovery of oxygen. In 1772 Carl Wilhelm Scheele discovered something he called ‘fire air’, while, independently, in 1774 Joseph Priestley discovered something he called ‘dephlogisticated air’ (phlogiston being a substance supposedly given off in burning – a reverse oxygen). In 1777 Antoine Lavoisier published a new theory of combustion which clarified the role of the new gas, which he named ‘oxygen’, meaning (from the Greek) ‘acid-producer’, because he mistakenly thought it to be an essential component of all acids. (The nature of acids was not clarified until Sir Humphry Davy’s work in 1812.) Even Lavoisier did not understand oxygen properly: discovery is often an extended process, and one that can be identified only retrospectively.85 In the case of oxygen it can be said to have begun in 1772 and ended only in 1812.

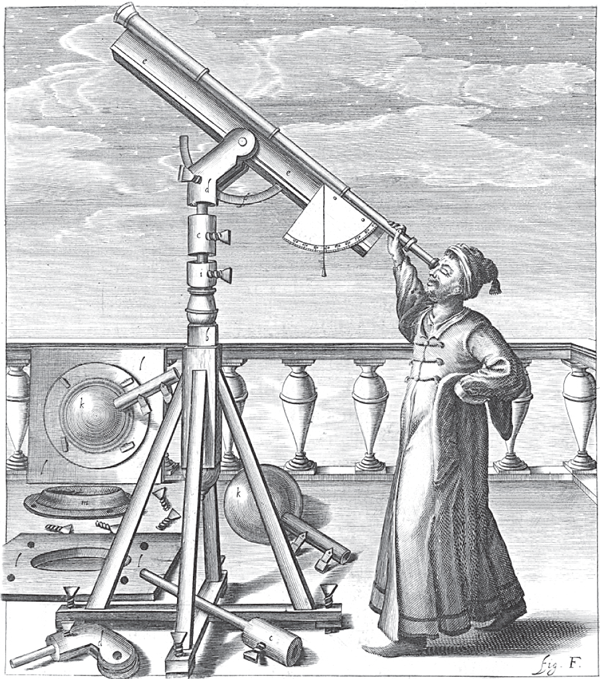

Johannes Hevelius with one of his telescopes (from Selenographia, 1647, an elaborate map of the moon). Hevelius, who lived in Danzig, Poland, built one enormous telescope 150 feet long. He also published an important star atlas. (No drawing or engraving records Galileo’s telescopes, and the two of his manufacture which survive are not as powerful as the one he was using in 1610/11. Thus we do not know what his own astronomical telescopes looked like.)

There are some who claim not just that some discoveries are difficult to pinpoint but that all discovery claims are essentially fictitious. They assert that discovery claims are always made well after the event, and that in reality (if there is a reality here at all) there is never one discoverer but always several, and that it is never possible to say exactly when a discovery is made.86 When did Columbus discover what we now call America? Never, for he never realized that he had not arrived in the Indies.87 Who did discover America? Waldseemüller, perhaps, sitting at his desk, for he was the first to grasp fully what Columbus and Vespucci had done.

The straightforward example of the discovery of the moons of Jupiter shows that these claims are superficially plausible, but mistaken. One mistake is the argument that discovery claims are necessarily retrospective because ‘discovery’ is an ‘achievement word’, like checkmate in chess.88 Passing a driving test is an achievement of the sort they have in mind: you can only be sure you have done it when you get to the end of the test. But any competent chess player can plan a mate several moves ahead; and they know that they have won the game not after they have moved their piece but as soon as they see the move they need to make. Galileo’s discovery of the moons of Jupiter was not like checkmate, or winning a race: he did not plan for it or see it coming. Nor was it like an ace at tennis: you only know you have served one after your opponent has failed to make a return. It was like singing in tune: he knew he was doing it at the same time as he did it. Some achievements are necessarily retrospective (like winning the Nobel Prize, or discovering America), some are simultaneous (like singing in tune) and others can be prospective (like checkmate). Scientific discoveries come in all three forms. As we have seen, the discovery of oxygen was retrospective. The classic example of simultaneous discovery is Archimedes’ cry of ‘Eureka!’ He knew he had found the answer at the very moment he saw the level of the water in his bath rise – which is why he was still naked and dripping wet as he ran through the streets shouting out the good news. So, too, with the discovery of the moons of Jupiter: Galileo had ‘a Eureka moment’.xxii

The really remarkable cases are those of prospective discovery, since they straightforwardly refute the claim that all discoveries are necessarily retrospective constructions. Thus in 1705 Halley, having noticed that a particularly bright comet reappeared roughly every seventy-five years, predicted that the comet now known as Halley’s Comet would return in 1758. The comet reappeared just in time, on Christmas Day 1758, but in any case Halley had modified his prediction in 1717 to ‘about the end of the year 1758, or the beginning of the next’.89 When did Halley make his discovery? In 1705, of course, when he noticed the pattern of regular reappearance; though his improved prediction of 1717 is worthy of note. Surely he didn’t make his discovery in 1758, when he was long dead. The discovery was confirmed in 1758 (and the comet was consequently named Halley’s Comet in 1759), but it was made in 1705; we are not reading something retrospectively into Halley’s statements when we say that he predicted the return of the comet. Similarly, Friedrich Wilhelm Bessel predicted the existence of Neptune on the basis of anomalies in the orbit of Uranus. The search for the new planet began long before it was finally observed in 1846.90

Wittgenstein explained that there are some terms we have and use quite reliably which we cannot adequately define. Take the term ‘game’. What is it that soccer, darts, chess, backgammon and Scrabble have in common? In some games you keep score, but not in chess (except in matches). In some games there are only two sides, but not in all; indeed, some games – solitaire, keepie-uppie – can be played by one person on their own. Games have, Wittgenstein said, a ‘family resemblance’, but this does not mean that the term – or the difference between a game and a sport – can be adequately defined.91

So, too, as the category of discovery has developed over time it has come to include many radically different types of event. Some discoveries are observations – sunspots, for example. Others, such as those of gravity and natural selection, are commonly called theories. Some are technologies, for instance the steam engine. The idea of discovery is no more coherent or defensible than the idea of a game; which means that the idea presents philosophers and historians with all sorts of difficulties, but it does not mean that we should stop using it. In this respect, indeed, it is typical of the key concepts that make up modern science. But in the case of discovery we have a straightforward paradigm case – the case from which the whole language derives. This is Columbus’s discovery of America. Who discovered America? Both Columbus and the lookout on the Pinta. What did they discover? Land. When did they discover it? On the night of 11/12 October 1492.

Both Columbus and the lookout, Rodrigo de Triana, had a claim to have made the discovery. A great sociologist, Robert Merton (1910–2003), became preoccupied with the idea that there are nearly always several people who can lay claim to a discovery, and that where there are not this is because one person has so successfully publicized his own claim (as Galileo did with his discovery of the moons of Jupiter) that other claims are forestalled.92 Merton was a great communicator. We owe to him indispensable phrases which encapsulate powerful arguments, such as ‘unintended consequence’ and ‘self-fulfilling prophecy’; one of his phrases, ‘role model’, has moved out of the university into daily speech. Like all great communicators, he loved language: he wrote a whole book about the word ‘serendipity’, another about the phrase ‘standing on the shoulders of giants’, and he co-edited a volume of social science quotations.93 Yet he complained that no matter how hard he tried he could not win support for the idea of multiple discovery (itself an idea, he pointed out, that had been discovered many times).

We cannot, somehow, give up the idea that discovery, like a race, is a game in which one person wins and everyone else loses. The sociologist’s view is that every race ends with a winner, so that winning is utterly predictable. If the person in the lead trips and falls the outcome is not that no one wins but that someone else wins. In each race there are multiple potential winners. But the participant’s view is that winning is an unpredictable achievement, a personal triumph. We insist on thinking about science from a participant’s viewpoint, not a sociologist’s (or a bookmaker’s). Merton was right, I think, to find this puzzling, as we have become used to thinking about profit and loss in business from both the participant’s point of view (the chief executive with a strategic vision) and from that of the economy as a whole (bulls and bears, booms and busts). Similarly, in medicine we have become used to moving back and forth between case histories and epidemiological arguments. I do not know when I will die, but there are tables which tell me what my life expectancy is and insurers will insure me on the basis of those tables. Somehow we are mesmerized by the idea of the individual role in discovery, just as we are mesmerized by the idea of winning – and of course this obsession serves a function, as it drives competition and encourages striving.

For Merton discoveries are not singular events (like winning), but multiple ones (like crossing the finishing line). Jost Bürgi discovered logarithms around 1588 but did not publish until after John Napier (1614). Harriot (in 1602), Snell (in 1621) and Descartes (in 1637) independently discovered the sine law of refraction, though Descartes was the first to publish. Galileo (1604), Harriot (c.1606) and Beeckman (1619) independently discovered the law of fall; only Galileo published.94 Boyle (1662) and Mariotte (1676) independently discovered Boyle’s law. Darwin and Wallace independently discovered evolution (and published jointly in 1858). The most striking multiples are those when several people claim to make a discovery at almost exactly the same time. Thus Hans Lipperhey, Zacharias Janssen and Jacob Metius all claimed to have discovered the telescope at around the same time in 1608. You might think that those who think that the idea of discovery is a fiction would welcome such cases, but no: as far as they are concerned, multiple discoveries are fictions, too. One far-fetched strategy they have used to undermine such cases is to maintain that in every case where claims are advanced for several different people having made one discovery, they have each in fact discovered different things. That is, Priestley and Lavoisier did not both discover oxygen; they made very different discoveries.95 It is obvious, though, that Lipperhey, Janssen and Metius all discovered (or claimed to have discovered) exactly the same thing.