4 Broken Numbers: Writing Fractions

Fractions have been part of mathematics for 4000 years or so, but the way we write them and think about them is a much more recent development. In earlier times, when people needed to account for portions of objects, the objects were broken down, sometimes literally, into smaller pieces and then the pieces were counted. (Even our word "fraction," with the same root as "fracture" and "fragment," suggests breaking something up.) This evolved into primitive systems of weights and measures, which made the basic measurement units smaller as more precision was desired. In modern terms, we might say that ounces would be counted instead of pounds, inches instead of feet, cents instead of dollars, etc. Of course, those particular units were not used in early times, but their predecessors were. Some measurement systems still in use today reflect the desire to count smaller units, rather than to deal with fractional parts. For instance, in the following list of familiar liquid measures, each unit is half the size of its predecessor:

gallon, half-gallon, quart, pint, cup, gill.

(In fact, each of these units can be expressed in terms of an even smaller unit, the fluid ounce. A gill is 4 fluid ounces.)

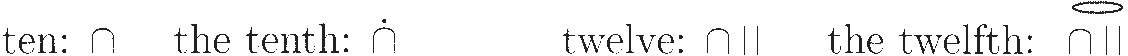

Thus, in its earliest forms, the fraction concept was limited mainly to parts, what we today would call unit fractions: fractions with numerator 1. More general fractional parts were handled by combining unit fractions; what we would call three fifths was thought of as "the half and the tenth."1 This limitation made writing fractions easy. Since the numerator was always 1, it was only necessary to specify the denominator and mark it somehow to show that it represented the part, rather than a whole number of things. The Egyptians did this by putting a symbol meaning "part" or "mouth" over the hieroglyphic numeral.2 For example, using a simple dot or oval for this symbol, "the fifth" would be written as the numeral 5 with the oval above it.

While writing down "parts" was easy, working with them was not. Suppose we take "the fifth" and double it. We'd say we get two fifths. But in the "parts" system, "two fifths" is not legitimate; the answer must be expressed as a sum of parts. That is, the double of "the fifth" is "the third and the fifteenth." (Right?) Since the Egyptians' multiplication process depended on doubling, they produced extensive tables listing the doubles of various parts.
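For readers who want to answer that "(Right?)", here is the arithmetic in modern notation, both for the three fifths mentioned earlier and for the doubled fifth. The common-denominator steps are our modern shortcut, not the way an Egyptian scribe would have checked it:

$$\frac{1}{2} + \frac{1}{10} = \frac{5}{10} + \frac{1}{10} = \frac{6}{10} = \frac{3}{5}$$

$$2 \times \frac{1}{5} = \frac{2}{5} = \frac{6}{15} = \frac{5}{15} + \frac{1}{15} = \frac{1}{3} + \frac{1}{15}$$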

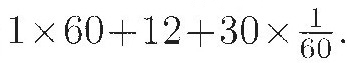

The Mesopotamian scribes, as usual, went their own way. They extended the sexagesimal (base-sixty) system described in Sketch 1 to handle fractions, too, just as we do with our decimal system. So, just as they would write 72 (in their symbols) as "1,12" — meaning 1 x 60 + 12. they would write  — meaning

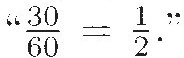

— meaning  That was quite a workable system. As used in ancient Babylon, however, it had one major problem: The Babylonians did not use a symbol (like the semicolon above) to indicate where the fractional part began. So, for example, "30" in a cuneiform tablet might mean "30" or it might mean

That was quite a workable system. As used in ancient Babylon, however, it had one major problem: The Babylonians did not use a symbol (like the semicolon above) to indicate where the fractional part began. So, for example, "30" in a cuneiform tablet might mean "30" or it might mean  To decide which is really meant, one has to rely on the context.

To decide which is really meant, one has to rely on the context.

Both the Egyptian and the Babylonian systems were passed on to the people of Greece, and from them to the Mediterranean cultures. Greek astronomers learned about sexagesimal fractions from the Babylonians and used them in their measurements — hence degrees, minutes, and seconds. This remained common in technical work. Even when the decimal system was adopted for whole numbers, people still used sexagesimals for fractions.
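To make the place-value idea concrete, here is a minimal Python sketch (the function name `sexagesimal_value` and its `fractional_places` argument are our own illustrative choices, not anything from the sources). Since the Babylonians had no marker for where the fractional part began, the sketch makes the reader supply that choice explicitly, which is exactly the role the modern semicolon plays. The same routine also reads degrees, minutes, and seconds:

```python
from fractions import Fraction

def sexagesimal_value(digits, fractional_places):
    """Read a list of base-60 digits as an exact number.

    The last `fractional_places` digits count sixtieths, 3600ths, and so on;
    choosing this argument plays the role of the modern semicolon.
    """
    value = Fraction(0)
    for d in digits:
        if not 0 <= d < 60:
            raise ValueError("each sexagesimal digit must be between 0 and 59")
        value = value * 60 + d  # shift one base-60 place and add the new digit
    return value / 60 ** fractional_places

print(sexagesimal_value([1, 12], 0))       # 72, written "1,12"
print(sexagesimal_value([30], 0))          # 30
print(sexagesimal_value([30], 1))          # 1/2, written ";30" in transcription
print(sexagesimal_value([12, 30, 36], 2))  # 1251/100: 12 degrees 30' 36" is 12.51 degrees
```

The second and third calls show the Babylonian ambiguity: the digits are identical, and only the reader's choice of `fractional_places` separates 30 from one half.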

In everyday life, however, people in Greece used a system very similar to the Egyptian system of "parts." In fact, the practice of dealing with fractional values as sums or products of unit fractions dominated the arithmetic of fractions in Greek and Roman times and lasted well into the Middle Ages. (The exception is Diophantus, from around the 3rd century A.D., though scholars argue about exactly how Diophantus thought about fractions. See the Overview for more about his work. Diophantus is often an exception when it comes to Greek mathematics!) Fibonacci's Liber Abbaci, an influential 13th-century European mathematics text, made extensive use of unit fractions and described various ways of converting other fractions to sums of unit fractions.
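The Liber Abbaci gives several conversion methods. As a hedged illustration, here is a Python sketch of just one of them, the rule now usually called the greedy method and commonly attributed to Fibonacci (the function name is ours): at each step, take the largest unit fraction that still fits, then repeat on what is left.

```python
from fractions import Fraction

def greedy_unit_fractions(x):
    """Split a fraction 0 < x < 1 into distinct unit fractions by repeatedly
    taking the largest unit fraction that does not exceed what remains.
    Returns the list of denominators."""
    x = Fraction(x)
    if not 0 < x < 1:
        raise ValueError("expected a fraction strictly between 0 and 1")
    denominators = []
    while x > 0:
        # The smallest n with 1/n <= x is the ceiling of 1/x.
        n = -(-x.denominator // x.numerator)
        denominators.append(n)
        x -= Fraction(1, n)
    return denominators

print(greedy_unit_fractions(Fraction(3, 5)))  # [2, 10]: "the half and the tenth"
print(greedy_unit_fractions(Fraction(2, 5)))  # [3, 15]: "the third and the fifteenth"
```

The loop always stops, because the numerator of the remainder shrinks at every step, but the greedy rule does not always find the shortest or simplest decomposition.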

There was another system in use from antiquity, also based on the notion of parts, but multiplicative. In that system, the process required taking a part of a part (of a part of...). For instance, in this system we might think of 2/15 as "two fifth parts of the third part." There were even constructions like "the third of two fifth parts and the third."

As recently as the 17th century, Russian manuscripts on surveying referred to one ninety-sixth of a particular measure as "half-half-half-half-half-third,"3 expecting the reader to think in terms of successive subdivisions:

$$\frac{1}{96} = \frac{1}{2}\cdot\frac{1}{2}\cdot\frac{1}{2}\cdot\frac{1}{2}\cdot\frac{1}{2}\cdot\frac{1}{3}$$

In contrast to this unit-fraction approach, our current approach to fractions is based on the idea of measuring by counting copies of a single, small enough part. Instead of measuring out a pint and a cup of milk for a recipe, it's easier to measure three cups. That is, instead of representing a fractional amount by identifying the largest single part within it and then exhausting the rest by successively smaller parts, we simply look for a small part that can be counted up enough times to produce exactly the amount we want. Two numbers would then specify the total amount: the size of the unit part, and the number of times we count it.

This was also how the Chinese mathematicians thought about fractions. The Nine Chapters on the Mathematical Art, which dates back to about 100 B.C., contains a notation for fractions that is very similar to ours. The one difference is that the Chinese avoided using "improper fractions" such as $\frac{7}{4}$; they would write the mixed number $1\frac{3}{4}$ instead. All the usual rules for operating with fractions appear in the Nine Chapters: how to reduce a fraction that is not in lowest terms, how to add fractions, and how to multiply them. For instance, the rule for addition (translated into our terminology) looks like this:

Each numerator is multiplied by the denominators of the other fractions. Add them as the dividend, multiply the denominators as the divisor. Divide; if there is a remainder, let it be the numerator and the divisor be the denominator.4

This is pretty much what we still do!
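As a sketch, here is a literal Python transcription of the quoted rule. The function name and the representation of fractions as `(numerator, denominator)` pairs are our own assumptions; the steps follow the rule's wording one by one:

```python
from math import prod

def nine_chapters_add(fractions):
    """Add (numerator, denominator) pairs by the quoted rule.

    Returns (whole, remainder, divisor): a whole number plus
    remainder/divisor, not yet reduced to lowest terms.
    """
    # Each numerator is multiplied by the denominators of the other fractions;
    # adding these products gives the dividend.
    dividend = 0
    for i, (num, _) in enumerate(fractions):
        others = prod(den for j, (_, den) in enumerate(fractions) if j != i)
        dividend += num * others
    # Multiplying the denominators gives the divisor.
    divisor = prod(den for _, den in fractions)
    # Divide; any remainder becomes the numerator over the divisor.
    whole, remainder = divmod(dividend, divisor)
    return whole, remainder, divisor

print(nine_chapters_add([(1, 2), (2, 3), (3, 4)]))  # (1, 22, 24), i.e. 1 and 22/24
```

The result is left unreduced; 22/24 would then be simplified to 11/12 by the separate rule for reducing fractions that the Nine Chapters also gives.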

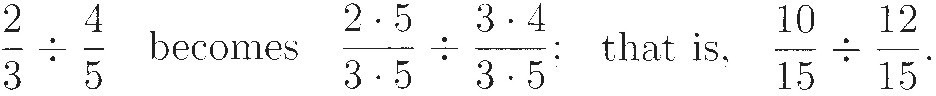

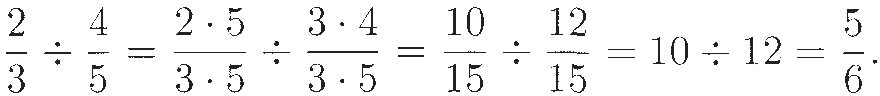

For multiplying and dividing, the method explained in the Nine Chapters also used a kind of reduction to a common "denominator."

This made the process of division natural and obvious. To divide a fraction $\frac{a}{b}$ by $\frac{c}{d}$, they would first multiply both the numerator and denominator of each fraction by the denominator of the other, so that

$$\frac{a}{b} = \frac{ad}{bd} \qquad \text{and} \qquad \frac{c}{d} = \frac{cb}{bd}.$$

Now that both fractions are written in the same "unit of measurement" (denominator), the question is reduced to a whole-number division problem: dividing the numerator of the first fraction by the numerator of the second. In general, then,

$$\frac{a}{b} \div \frac{c}{d} = ad \div cb = \frac{ad}{cb}.$$
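A small Python sketch of this common-denominator method, using 3/4 divided by 2/3 purely as an illustration (the function name is ours). After rewriting both fractions over the same denominator, only the two new numerators matter:

```python
from fractions import Fraction

def divide_by_common_denominator(a, b, c, d):
    """Divide a/b by c/d by rewriting both over the denominator b*d,
    then dividing the new numerators as whole numbers."""
    first_num = a * d   # a/b becomes (a*d)/(b*d)
    second_num = c * b  # c/d becomes (c*b)/(b*d)
    # Both now count the same size of part, so only the counts are divided.
    return Fraction(first_num, second_num)

q = divide_by_common_denominator(3, 4, 2, 3)  # 9/12 divided by 8/12
print(q)  # 9/8
assert q == Fraction(3, 4) * Fraction(3, 2)  # same answer as "invert and multiply"
```

The final check reflects the fact that ad over cb is exactly a/b times d/c, which is why the common-denominator method and the "invert and multiply" rule always agree.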

A similar approach (perhaps learned from the Chinese) appears in manuscripts from India as early as the 7th century A.D. They wrote the two numbers one over the other, with the size of the part below the number of times it was to be counted. No line or mark separated one number from the other. For instance (using our modern numerals), the fifth part of the basic unit taken three times would be written as

$$\begin{matrix} 3 \\ 5 \end{matrix}$$

The Indian custom of writing fractions as one number over another became common in Europe a few centuries later. Latin writers of the Middle Ages were the first to use the terms numerator ("counter": how many) and denominator ("namer": of what size) as a convenient way of distinguishing the top number of a fraction from the bottom one. The Arabs had introduced the horizontal bar between the top and bottom numbers by the 12th century. It appeared in most Latin manuscripts from then on, except in the early days of printing (the late 15th and early 16th centuries), when it was probably omitted because of typesetting problems. It gradually came back into use in the 16th and 17th centuries. Curiously, although 3/4 is easier to typeset than $\frac{3}{4}$, this "slash" notation did not appear until about 1850.

The form in which fractions were written affected the arithmetic that developed. For instance, the "invert and multiply" rule for dividing fractions was used by the Indian mathematician Mahāvīra around 850 A.D. However, it was not part of Western (European) arithmetic until the 16th century, probably because it made no sense unless fractions, including fractions larger than 1, were routinely written as one number over another.

The term per cent ("for every hundred") as a name for fractions with denominator 100 began with the commercial arithmetic of the 15th and 16th centuries, when it was common to quote interest rates in hundredths. Such customs have persisted in business, reinforced in this country by a monetary system based on dollars and cents (hundredths of dollars). This has ensured the continued use of percents as a special branch of decimal arithmetic. The percent symbol evolved over several centuries, starting as a handwritten abbreviation for "per 100" around 1425 and gradually being transformed into "per o/o" by 1650, then simply into "o/o", and finally into the "%" sign we use today.5

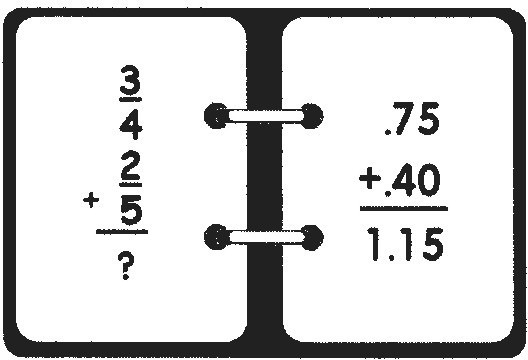

While decimal fractions occur fairly early in Chinese and Arabic mathematics, these ideas do not seem to have migrated to the West. In Europe, the first use of decimals as a computational device for fractions occurred in the 16th century. They were made popular by Simon Stevin's 1585 book, The Tenth. Stevin, a Flemish mathematician and engineer, showed in his book that writing fractions as decimals allows operations on fractions to be carried out by the much simpler algorithms of whole-number arithmetic. Stevin sidesteps the issue of infinite decimals. After all, he was a practical man writing a practical book. For him, 0.333 was as close to 1/3 as you might want.
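Stevin's central point, that decimals turn fraction arithmetic into whole-number arithmetic, can be sketched in Python by storing every quantity as a whole number of thousandths. The three-place precision, the truncation rule, and the helper names are our own assumptions, chosen to match the 0.333 example:

```python
from fractions import Fraction

PLACES = 3  # assumption: keep three decimal places, as in the 0.333 example

def to_decimal_units(x):
    """Truncate a nonnegative fraction to a whole number of 10**PLACES-ths
    (thousandths here)."""
    x = Fraction(x)
    if x < 0:
        raise ValueError("this sketch only handles nonnegative values")
    return x.numerator * 10**PLACES // x.denominator

def show(units):
    """Write a whole number of 10**PLACES-ths with a decimal point."""
    return f"{units // 10**PLACES}.{units % 10**PLACES:0{PLACES}d}"

third = to_decimal_units(Fraction(1, 3))    # 333
quarter = to_decimal_units(Fraction(1, 4))  # 250
print(show(third))            # 0.333
print(show(third + quarter))  # 0.583, found by plain whole-number addition
print(Fraction(1, 3) - Fraction(third, 10**PLACES))  # 1/3000, the error from stopping at three places
```

Once the quantities are scaled, the addition itself is just 333 + 250; the price is the small truncation error shown on the last line, which Stevin, as a practical man, was content to accept.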

Within a generation, the use of decimal fractions by scientists such as Johannes Kepler and John Napier paved the way for general acceptance of decimal arithmetic. However, the use of a period as the decimal point was not uniformly adopted for many years after that. For quite a while, many different symbols — including an apostrophe, a small wedge, a left parenthesis, a comma, a raised dot, and various other notational devices — were used to separate the whole and fractional parts of a number. In 1729, the first arithmetic book printed in America used a comma for this purpose, but later books tended to favor the use of a period. Usage in Europe and other parts of the world continues to be varied. In many countries the comma is used instead of the period as the separation symbol of choice. Most English-speaking countries use the period, but most other European nations prefer the comma. International agencies and publications often accept both comma and period. Modern computer systems allow the user to choose, as one of the regionalization and language settings, whether the decimal separator should be written as a comma or a period.

Stevin's innovation, together with its application to science and practical computation, had an important effect on how people understood numbers. Up to Stevin's time, things like $\sqrt{2}$ or (even worse) π were not quite considered numbers. They were ratios that corresponded to certain geometric quantities, but when it came to thinking of them as numbers, people felt queasy. The invention of decimals allowed people to think of $\sqrt{2}$ as 1.414 and of π as 3.1416, and suddenly, to paraphrase Tony Gardiner,6 "all numbers looked equally boring." It's no coincidence that it was Stevin who first thought of the real numbers as points on a number line and who declared that all real numbers should have equal status.

When calculators were introduced in the middle of the 20th century, it seemed as if decimals had won the day permanently. But the old system of numerators and denominators still has many advantages, both computational and theoretical, and it has proved to be remarkably resilient. We now have calculators and computer programs that can work with common fractions. Percentages are used in commerce, common fractions and mixed numbers appear in recipes, and decimals occur in scientific measurements. These multiple representations are a matter of convenience and also a reminder of the rich history behind ideas we use every day.
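As one small illustration of that resilience, Python's standard `fractions` module keeps numerators and denominators exactly, which avoids the rounding errors that approximate representations introduce. Note that Python's ordinary floats are binary rather than decimal approximations, but the lesson is the same:

```python
from fractions import Fraction

# A binary float cannot store 1/10 exactly, so a tiny error creeps in.
print(0.1 + 0.2 == 0.3)  # False

# Exact rational arithmetic: numerator and denominator are kept as integers.
print(Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))  # True
print(Fraction(1, 3) + Fraction(1, 6))  # 1/2
```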

For a Closer Look: For more information about this topic, see section 1.17 of [75], which also contains references to further historical literature. Also worth consulting are pages 309–335 of [22] and pages 208–250 of [166], which contain a wealth of material on the historical development of rational numbers and their various notational forms. A particularly nice account of Stevin's innovation and its problems can be found in [62] and [63].

1 See pp. 30–32 of [30] for a nice explanation of this concept of "parts" in ancient Egypt and [64] or [29] for an extensive discussion.

2 See Sketch 1 for a description of Egyptian numerals. The Egyptians also had special symbols for one half, two thirds, and three fourths.

3 See [92], p. 33, and [166], Vol. II, p. 213.

6 Former president of the (British) Mathematical Association, quoted in [162].