Chapter 8:

Testing and Experimenting

Coming up through the ranks at two payments giants, I took for granted the extent to which testing and experimenting were baked into everything we did. Even before anyone imagined the possibilities of digital and mobile, payments companies worked with big data, analytics, and technology to experiment with communications, channels, products, personalization, and promotional strategies.

Testing was how we improved operations — customer selection, line of credit calculations, offer targeting, and repayment risk. We experimented to figure out ways to meet business model requirements under given conditions. We saw testing as a huge positive, the fastest and most cost-effective way to get ahead of the market, understand revenue and expense levers, anticipate and avoid risk, and create value and growth. The channels up until the mid-nineties just happened to be mail and phone.

So my eyes were opened when I left the sector and had two aha moments:

- ➤ Most companies are not realizing the unique advantages of testing and experimenting, and

- ➤ Testing and experimenting works best in a culture that values learning about what is not working as much as confirming what is working.

For change makers, the stakes are too high to rely on guesswork. Seat-of-the-pants may have been necessary in the past, but today’s complexity, competition, and demands to achieve scale all require more. There is too much information, too many choices and decisions, and too little time. Expectations are high, while the effects of innovation investments can be difficult to discern.

Strong testing and experimenting come from mindset, capabilities, investment, and commitment. The starter elements may already be within reach, even inside your head.

Direct-to-consumer and transaction-based business models, such as those of credit card businesses, have long valued data science capabilities. These companies have proven that the effectiveness of testing and experimenting depends upon:

- ➤ Knowing the business priority. What is the goal? Can you decompose the goal into distinct variables?

- ➤ Creating a strategy-driven learning agenda. What questions do you want to answer? What are the hypotheses that link to priorities?

- ➤ Structuring tests and experiments. Most organizations fall short on population selection. Poor choices bury cross-effects that yield false reads.

- ➤ Following through on post-test analysis. Tests yield more insight than anticipated, but most organizations restrict themselves to simple analysis and don’t follow insights through to potential windfalls.

- ➤ Keeping an inventory of testing. Results of experiments are goldmines. You can keep digging, but that requires maintaining a trail of what has been tested before.
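The population-selection point above lends itself to a small illustration. As a minimal sketch, assuming each customer record carries a single segment label (the `assign_cells` function, its input shape, and the segment names are all hypothetical), randomizing within each segment keeps control and test cells balanced on that segment, so a difference in segment mix cannot masquerade as a treatment effect:

```python
import random
from collections import defaultdict


def assign_cells(customers, cells=("control", "test"), seed=42):
    """Stratified random assignment of customers to test cells.

    customers: list of (customer_id, segment) pairs (hypothetical input shape).
    Returns a dict mapping customer_id -> cell name.
    """
    rng = random.Random(seed)  # fixed seed so the split can be reproduced and audited
    by_segment = defaultdict(list)
    for customer_id, segment in customers:
        by_segment[segment].append(customer_id)

    assignment = {}
    for segment in sorted(by_segment):  # sorted so the result does not depend on dict order
        ids = by_segment[segment]
        rng.shuffle(ids)
        # Deal the shuffled ids round-robin: every cell gets the same share
        # of every segment (to within one customer).
        for i, customer_id in enumerate(ids):
            assignment[customer_id] = cells[i % len(cells)]
    return assignment


# Hypothetical population: one third "new", two thirds "existing" customers
customers = [(f"c{i}", "new" if i % 3 == 0 else "existing") for i in range(300)]
assignment = assign_cells(customers)
```

Each cell ends up with 50 "new" and 100 "existing" customers. A real program would stratify on whatever variables are known to drive response, but the principle is the same.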

Testing and experimenting is constant for any business creating value and growth.

More is required than statistics, data clouds, and number-crunching tools. This chapter is devoted to setting out testing and experimenting requirements. The principles fit scale efforts of all sizes, shapes, and stages.

Don’t be thrown off course by the jargon surrounding artificial intelligence, big data, machine learning, and whatever else is coming next. There is plenty of confusion. The marketplace for automation offerings is large and fragmented.

The good news is that there are lots of examples from which to borrow. Technology is accessible. Data is abundant. Storage is cheap.

Assumptions about testing and experimenting:

- ➤ There is no excuse not to test, experiment, and apply learning;

- ➤ Testing and experimenting capabilities create a competitive wedge;

- ➤ Testing and experimenting require skills, knowledge integration, infrastructure, and a learning culture;

- ➤ New offers are pushed to us all whenever we turn on our devices. The pace of change is constant. Most offers are incremental.

Testing and experimenting keep startup and grown-up businesses on pace. Even better, they point to marketplace discontinuities leading to innovation.

Surprise: Marketers have conducted A/B tests for a long time

The first known instances of direct mail trace back to 1000 BC.1 Modern-day testing tactics have origins at least as far back as the early 1960s, when agency executive Lester Wunderman is credited with coining the term “direct marketing.”2 Testing was structured against control groups. On the edges of direct mail and telemarketing programs, test cells were created, allowing for tight measurement of changes to offer, pricing, communications, targeting, and channel. Results were read, perhaps beginning within days of deployment but possibly weeks or even months later, and winners were adopted.

Such testing measured and valued execution tactics to decide investments in programs whose pre-digital timeframes were long and whose costs were high. The goals were to find incremental improvements, validate hypotheses, and justify new campaign strategies. Teams debated details, such as the precise level of statistical significance to apply to each test for results to be accepted as empirically sound. Precision was achievable.
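To show what a significance debate was about in practice, here is one common check for a response-rate test: a two-sided, two-proportion z-test. It is a sketch, not the method any particular team used; the function name and the example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(responders_a, n_a, responders_b, n_b):
    """Two-sided z-test for a difference in response rates between cells A and B.

    Returns (z, p_value). Uses the pooled rate, i.e. assumes no difference under the null.
    """
    rate_a = responders_a / n_a
    rate_b = responders_b / n_b
    pooled = (responders_a + responders_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical mail test: control responds at 2.0%, test cell at 2.6%
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
significant = p_value < 0.05  # the kind of threshold teams debated
```

With these made-up counts, z is a little above 2.8 and the p-value is well under 0.05, so the test cell would be accepted as the winner at the conventional 95 percent level. The sketch does not guard against both cells having a 0 or 100 percent rate, which would divide by zero.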

Measurement and optimization are quite different from experimentation pursued to shape and scale innovation. Traditional techniques still matter, especially in scale businesses with mature methods to acquire and retain customers. But the speed at which feedback can now be obtained, combined with an explosion of variables, has disrupted the slow and steady structures of the past. Now it is essential to find ways to pick up bits of precision as needed in shorter, faster cycles. Machine learning is creating an entirely new paradigm, one where testing is built into ongoing self-learning campaigns.

Traditional testing depended upon market opportunities holding still. Today nothing holds still. But there are still principles and patterns, some of which have been proven through decades. So, even when there is no fixed template, rigor is achievable.

Testing and experimenting: “Just the way we work”

Startup Pypestream’s Smart Messaging Platform uses artificial intelligence and chat bots to connect businesses to their customers. Chief customer officer Donna Peeples brings a dual perspective on the value of testing and experimenting, having also served as an executive at major corporations including AIG. She says, “The difference I’ve seen is that in the corporate world testing is formalized and structured. In startup land, we don’t even call testing out as a separate activity. It is just the way we work. It’s very iterative and very fluid. There is no line of demarcation between testing, experimenting, and everything else.”3

Integrating testing and experimenting into how a team operates takes a systematic, flexible approach to extracting insights from many forms of data — and then taking action. It’s not about data for the sake of data, or tools for the sake of having the latest number-crunching capability, or data storage for the sake of volume. You may be starting with a clean sheet. You may be jury-rigging your first learning platform, or trying to get out from under the constraints of a rigid, overly complicated one. There are common themes.

Eric Sandosham, cofounder of Singapore-based Red & White Consulting Partners, agrees. He says, “Startups are naturally predisposed to testing and experimenting simply because they have so little historic data to draw upon, and no existing business to defend. These factors make them extremely agile. They can evaluate insights with no stake in the past so they are better able to detect emerging opportunities.”4

What are you trying to figure out?

Decide which of the three types of insight apply to your priorities:

- ➤ Hindsight, or descriptive results explaining what happened. Are results up, down, or sideways? What customer behaviors occur in different channels, or as a result of a marketing program? Did you even know that competition has begun to eat your lunch? Can shining a light on the issue by testing be the wake-up call your organization needs?

- ➤ Insight, or diagnostic results, explaining why something happened. Has pricing affected sales? Has a change someplace between the product team and point of purchase caused a problem that must be pinned down? Diagnostic results uncover execution improvements, building upon what is known from past performance data.

- ➤ Foresight, or progressive insights, about how to make something happen. Are you trying to find the next problem to solve to change market position? Can applying the model of scientific exploration reveal the next innovation? Foresight takes listening, dot-connecting, and then interpreting feedback — multiple sources, through multiple cycles of analysis, with reasonable judgment playing an interpretation role.

Three critical thinking guideposts

No matter the path, consider scribbling these guideposts on sticky notes tacked up in your workspace:

- Define the questions leading to decisions and action, linked to strategic priorities.

- Know the business-model operating levers — user behavior, processes, policies, and regulation. All drive financials.

- Have the execution capability to apply models, including each test result. If not, what is the point of the test?

Ten testing and experimenting pitfalls

- Confusing learning priorities and methodology. Mismatching hindsight, insight, and foresight goals with the test approach has penalties. An A/B test is productive for determining the relative impact of alternative pricing, offers, messages, or channels. If head-to-head testing is not feasible, pre- and post-testing can work. But neither is relevant if the goal is to foresee the performance of an unprecedented idea, where metrics may not even be quantifiable at the outset.

- Failing to stay connected to start and end points. Customer insights, business model, goals, priorities, and strategy are always linchpins to value and growth. They frame testing and experimenting priorities, too.

- Pursuing flawed testing paths. A team defines legitimate test questions. As the design is sketched out, more permutations of segments and offers are added. The team strays from priorities, and the proposed test becomes so complicated it cannot be implemented.

- Becoming overwhelmed by data. Everything seems knowable. The most valuable data — substantially more valuable than third party overlays — will be data about your customers’ engagement with you. Apply art and science to sort “need to know,” “nice to know,” and “really no need to know” data.

- Coming at the world technology-first. Technology is an enabler. By itself even the slickest analytics tool can add complexity and expense that subtracts value. Match tools to the task and the talent.

- Producing results that are not actionable. Tests so clean that they are isolated from the realities of processes, policies, and regulations take time and effort with no practical impact.

- Over-devotion to a method. Agile methodology is a force for speed, focusing teams on what’s good enough. Teams benefit from standardizing cycles — implement, read, react, repeat. But solving for time to the point of inflexibility blocks experiments that only come with time and messiness.

- Expecting the test to tell you what to do. Testing produces insights and raises additional questions. Think critically to decide which results to act upon and which new questions are worth pursuing.

- Rejecting findings at odds with the status quo. A test or experiment is well designed and executed. The findings fly in the face of orthodoxies. Conflict ensues. Politics takes over. Status quo is maintained. Opportunities are missed. This scene is unfortunately familiar when the data suggest a future that causes discomfort among people satisfied with the current state, or fearful of change.

- Not establishing and leveraging an inventory of testing. An inventory affords opportunity to gain speed, connect dots, and provide a knowledge base of discoveries waiting for the right timing to put them to work.
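An inventory does not need to start as a platform. As a hypothetical sketch (the class names, fields, and lever labels are illustrative assumptions, not a prescribed schema), a few structured fields per test already make past work searchable by lever or keyword:

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class ExperimentRecord:
    """One entry in a hypothetical test inventory."""
    name: str
    hypothesis: str
    lever: str        # business-model lever tested, e.g. "pricing" or "channel"
    run_date: date
    result: str       # e.g. "won", "lost", "inconclusive"
    notes: str = ""


@dataclass
class ExperimentInventory:
    records: List[ExperimentRecord] = field(default_factory=list)

    def add(self, record: ExperimentRecord) -> None:
        self.records.append(record)

    def by_lever(self, lever: str) -> List[ExperimentRecord]:
        """All past tests that pulled a given lever, oldest first."""
        return sorted((r for r in self.records if r.lever == lever),
                      key=lambda r: r.run_date)

    def find(self, keyword: str) -> List[ExperimentRecord]:
        """Past tests whose hypothesis or notes mention a keyword (case-insensitive)."""
        kw = keyword.lower()
        return [r for r in self.records
                if kw in r.hypothesis.lower() or kw in r.notes.lower()]
```

Recording the result and notes alongside the hypothesis matters as much as the structure: an "inconclusive" entry with a note like "retest with larger cells" is exactly the kind of discovery waiting for the right timing.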

Moving to action through small, manageable steps

How can you make a habit of sensing where there is useful data, analyzing, synthesizing, assessing, and then applying findings quickly? For the answer I sought input from Marcia Tal. Marcia advises executives on advancing innovative data science and technology-based solutions. For much of her career, Marcia was busy inventing, shaping, and leading Citi Decision Management, a powerhouse global function.

A rocket scientist by training, Marcia has a way of patiently breaking down multilayered problems into useful pieces. She defines problem statements to generate tests and experiments. Outputs provide solutions to complex problems by identifying and addressing the component levers. Outputs build momentum for change by creating fact bases that wear down even the toughest resistors and surface unexpected opportunity.

Marcia advises focusing first on internal data sources. She says, “Internal data will always be the most valuable. Other sources won’t replace proprietary data — they can complement it.”5

Not collecting or maintaining a strong customer database? Make it a priority to figure out how to address this gap.

Next, know how the business operates to construct, execute, and interpret tests. Especially important is to understand how customers make decisions that define behavior with the brand.

Marcia shares a wonderful family anecdote highlighting the impact of paying attention to data and seeing insights through to action:

For decades around the 1950s, my uncle Norman owned a store — Norman’s Everybody Store — in Tulsa, Oklahoma. He would ask everybody who came through the door the same question: “What do you need?” That was his way of gathering data. He could sell anything, and he did, from cowboy boots to hats to women’s pajamas to lingerie to men’s underwear. And he applied a very important insight to his location — always next to a bar. What data drove the insight? He knew that people went to the bar after they got paid. So he knew when they had cash, and he wanted to be second in line, after the bartender, to get some of it.

Norman understood his business-model levers. He gathered and applied data about how customer behavior drove sales. He aligned his location strategy to take advantage of a findable and measurable business driver.

At Norman’s Everybody Store, learning from data was as much a part of how things worked as it is today at Pypestream.

Sure, understanding and acting upon customer data back in Norman’s day may look primitive from the perspective of our Amazon world. But what Norman lacked in technology was more than offset by mindset and translation of insights into decisions.

Reminder: don’t let vast data and new technologies take you away from the basics of knowing the operating levers within the business model, including understanding customer behavior. No amount of cool technology or data will make up for lack of attention to these essentials.

Focus on the gaps when designing tests

“You will get lost if you don’t keep going back to the question, ‘What am I trying to do?’” says Angela Curry, former managing director, Global Analytics & Insights at Citi. “Understand the goals. Where do you need insight? Build tests from there.”6

An easy way to gauge a well-defined goal is to fill in the blank in this sentence: “We will be successful in our venture if __________ happens.”

Is success attracting a certain number of users? Getting to a particular level of engagement or a market share goal or a sales volume target? The way you complete the sentence signals the data-driven learning priorities.

Gaps occur because too many teams construct tests without first thinking about what success means. They get caught in the data weeds and don’t build momentum. What is the objective? In post-MVP startups through mature businesses, success means hitting the annual plan. A business unit’s plan numbers may be high level, but they also frame expectations. Every test design should ultimately contribute to achieving the plan.

Your plan may not be as formalized as that of a business unit inside a global, publicly traded company, but whatever plan you have developed defines a horizon line. That horizon line is the direction for test efforts.

Plan

Tackle feasibility questions by considering each business-model lever that might impact the test. Figure out which ones do, and address the consequences. Engaged stakeholders are a big help. You may know your product really well, or be closely familiar with how channels work. Odds are you don’t know all of the operating details. So include people who do. Topics for these conversations:

- ➤ Will tests be readable, with outputs the team can understand and use to impact performance? What measures is the test designed to generate?

- ➤ What are the requirements and work effort to implement the test? Have you defined phase one, understanding that this is likely the first of many cycles?

- ➤ Do regulations affect processes? Do policies define decision rules affecting test execution? Such impacts can come between customer response and the end result.

- ➤ Who are the stakeholders, both to run the test and support findings? Are stakeholders on board with the test design, metrics, assumptions and limitations, implementation plan, success standards, and next steps?

- ➤ Are external stakeholders interested in the test, e.g., regulators or consumer advocates? Are their needs being addressed?

- ➤ Are partners required to implement testing, and execute changes based on findings?

Design experiments yielding metrics that close gaps between performance and goals. Do you want to acquire more customers, and if so, at what cost? Is the goal to expand margins, and if so, by how much? Is your leverage in pricing, distribution, communications, positioning, or product configuration? Do you anticipate new risks, and if so, how can a test validate or disprove mitigation strategies?

What measurement precision is reasonable and necessary? In a mature space with back history, expect greater precision. But if there is less precedent, get comfortable with directional outputs in the early testing of hypotheses. Be ready to build on each new insight to refine, and then refine some more. Accept that attribution of impact across many variables may be a work-in-progress. You may not even realize what all the variables are until after several test cycles.
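How much precision a test can deliver is largely a question of cell size. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function name, the default 5 percent significance level, and 80 percent power are assumptions chosen for illustration:

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_cell(p_control, p_test, alpha=0.05, power=0.80):
    """Approximate customers needed in each cell to detect p_control -> p_test.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    p_bar = (p_control + p_test) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_test * (1 - p_test))
    ) ** 2
    return ceil(numerator / (p_test - p_control) ** 2)


# Hypothetical: detecting a lift from a 2.0% to a 2.6% response rate
n_small_lift = sample_size_per_cell(0.02, 0.026)
# A bolder hypothesis (2.0% -> 3.0%) can be read with far fewer customers
n_big_lift = sample_size_per_cell(0.02, 0.03)
```

The first case needs close to 9,800 customers per cell and the second roughly 3,800. That trade-off is why early, low-precedent tests often settle for directional reads on bolder hypotheses before refining.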

Laying out the range of priority test questions before diving into the details makes it possible to realize the advantages of an integrated plan and avoid the inefficiencies and errors caused by fragmented testing. This discipline also mitigates hindsight bias risk. When people look at results and say, “We already knew that,” the value of testing is diminished. Validation can be useful, as long as it doesn’t become over-testing, an excuse to avoid a decision. Just be sensitive to motivations and unintended consequences.

Go for impact by striking the balance between testing scope, complexity, and speed. Prioritize the “need to knows.” Push back on “let’s add this” temptations.

A pragmatic way to validate the metrics: create a prototype of the results dashboard before launching any tests. Then ask, “What will we do with these results? Where are the answers to the questions we must answer now?” If the answers to these questions are clear, the metrics are on track. If you are spinning in circles coming up with the answers, take action to add missing pieces or edit out extraneous, low priority data.

Assemble the right mix of people for testing and experimenting success

Pre-digital era testing used internal data sets, complemented by a few external overlays. Now, data is accessible from multiple sources, in structured and unstructured formats, in real time. The multichannel customer experience, with its many dimensions, affects sales, returns, repurchase and referrals, out-of-stock product, and ratings and reviews. This complexity intensifies the benefits of a cross-functional skillset — creativity, critical thinking, analytical, technical, operational — whatever perspectives apply to the business-model levers in the daily activities of running the business.

As with any tough problem, diversity of thought and experience opens the pathways to better, faster solutions.

Even if one person is accountable for all aspects of testing, two persona types make testing and experimenting work better:

The planner: Someone who is able to anticipate whether, what, and when testing will be influenced by the operations, processes, or policies of a business. They account for these realities in test design and planning. In a complex business, it’s impossible to anticipate all connections and how they interact without engaging people who know the plumbing and the wiring. The planner identifies and integrates multiple perspectives. They connect the dots between test design and implementation. They are a strategic jack-of-all-trades.

The implementer: This person has hands-on knowledge of analytics tools and methods. Perhaps they are a mathematician or technologist. Such backgrounds provide foundational implementer skill sets. Data is vast and varied, sources expand, and tools change. So what they know today may be obsolete in a few quarters. That is why the implementer who shines is skilled in how to look for information, read and write code to set up tests, and adapt to new techniques.

The planner and the implementer are both motivated to learn. Both are collaborators: if they are not one and the same person, they need each other.

Both benefit from a sustained ability to figure things out on the fly. What is going through their heads is this: “I don’t necessarily need to know everything, but I can figure things out or tap into others for help.”

The infrastructure and tools for testing and experimenting success

Infrastructure matters. If basics are not in place, pulling off a test plan will be challenging. Assemble the components — data sources, campaign management, analytics software, how data is captured, and how it links to partner systems. Ask:

- ➤ What must be automated?

- ➤ Where is the investment to automate not yet justified?

- ➤ Do you know enough to choose the right tools?

- ➤ Is it better to seek providers with functional or sector expertise?

Just as in test design, go back to the problem statement, strategy, and implementation details to make automation decisions.

Say the focus is to test content. Which content is worth testing? If you are delivering content across channels, is the capability in place to pull the channel messaging together — or will silos limit what can be tested, read, and delivered? If the vision is to achieve the holy grail of one-to-one personalization, how do you create content, subject lines, offers for individuals, and execute dynamically?

Or say the customer journey spans digital and physical channels. Where along the path from creating awareness, to investigating options, to buying and then post-purchasing experiences is value created? Is customer behavior interpretable across channels, or are channel data disconnected? What is the plan to justify or overcome limitations?

Finally, getting full value from infrastructure is more than a function of picking and implementing the right tools. An example of a challenge: a company’s corporate email platform was different from the one used by the sales force. There was no automated way to assemble all of the interactions with a customer across both platforms. Focus was placed on coordinating these channels to align infrastructure with the organization’s goal of improving sales and engagement. The business model drivers, power and authority, and politics all came into play to make this happen.

Even if your own skills are cutting edge and the team is dogged, infrastructure predating current data integration and flexibility demands can disable well-conceived testing goals.

Licensing a tool to address a capability gap is a widely used approach. Software providers help set up tests and produce results for digital and mobile testing in real time. Is budget tight? Maybe the latest version of a tool is not necessary. Perhaps it would be smarter to go the do-it-yourself route for a few iterations, confirm you are on the right path, and then choose a solution. Free tools, especially in the early days of figuring out your requirements, may be all you need.

What is the condition of your database? For starters:

- ➤ Are records in one dataset, or in different parts of the infrastructure? Is data in organizational silos, off-limits to others because of decision rights, legal vehicle structure, or regulation?

- ➤ Is the database searchable? Can records be easily tagged, e.g., with segment indicators?

- ➤ Can imports and exports be done readily?

- ➤ What about data overlays from third-party providers?

- ➤ Is history maintained for contacts, service inquiries, responses to offers, and channel usage?

- ➤ What compliance rules govern use of the database?

- ➤ Are website and mobile applications set up for testing flexibility?

- ➤ Is whatever digital analytics package in use correctly implemented and robust enough to support priorities?

Partnering for testing and experimenting success

When to partner is a function of answers to questions including:

- ➤ Will a partner bring capability you don’t have?

- ➤ Do potential partners have better talent? Many demands are baked into the profile of the planner and implementer. Can you attract the best, or should you tap into freelance, consulting, or agency resources?

- ➤ Do you have budget to hire and build internally, or is a variable cost approach more pragmatic?

- ➤ Don’t be dazzled by the partner’s reputation based on PR, or the sign over the door. Who is going to work on your business, the A Team or the B Team? Does the partner team share your passion and sense of purpose?

Use common sense to invest for impact

Each test is an investment. Don’t avoid testing to protect every last short-term dollar of revenue, but don’t harm the business for the sake of a test, either. Some tests will pay out quickly and some will lead to the next set of tests.

A senior marketing executive at a direct-to-consumer brand serving over thirty million users shared a story about testing to quantify the impact of specific channels on new accounts. The company’s marketing activities bring together search, site, mobile advertising, direct mail, and telemarketing in integrated campaigns.

One of the proposals floated was to measure the contribution of search by turning it off for three months.

CMOs are eager to understand how to attribute marketing spend to each channel. But common sense says that the practical consequences of shutting down a channel as important as search, even if such a tactic could yield a precise answer, likely outweigh benefits.

The CMO or CFO or business unit head may resist carving out even a small testing population. Tests may not produce immediate improvements. Such pushback is shortsighted, but exists in organizations that do not yet fully embrace a testing and experimenting mindset.

Tackle reality

The operational requirements for testing and experimenting are rarely understood and appreciated until teams get into the thick of implementation and acting on findings.

Be eyes-wide-open:

- ➤ Testing is an ongoing commitment, not a one-time event.

- ➤ Infrastructure gaps raise obstacles at big and small, young and old companies. Diagnose what is possible now, what must change, and how to close infrastructure gaps.

- ➤ Testing and experimenting take investment in people, tools, and data infrastructure. There is a cost to every test: for each test some proven action is not being taken. The potential for return exceeding the cost has to be there, at least as a hypothesis.

Bring people along. Getting test results to take hold starts with science and lands squarely with people and their emotions. Learning from tests drives improvement. Learning is not a vehicle for spotlighting failure or mistakes.

Back in Chapter 5, the tools of storytelling were presented in the context of selling the business model to investors. Now, consider how to relate the story that emerges from test results. Do stakeholders prefer charts, words, or colorful graphs? Visualizing data and insight to support audience preferences makes it easier to build understanding and buy-in.

A digital executive and his team at a top insurance carrier led a site redesign that delivered 500 percent growth in leads generated, based on pre- and post-reads. Sounds amazing, right?

The site experience team was celebrating what they saw as a big success. But the company’s sales leaders had a different view. They could not see the impact in the bottom line (or in their commission checks) and as a result, withheld applause.

Of course, lots of factors affect the many sales funnel steps for an intermediated business, from completing a form to getting a call-back, having a meeting, assessing options, completing applications and underwriting, accepting the offer, making payment, and finally closing the sale. But for all practical purposes, a test that does not bring stakeholders along by translating results into their view of success has failed.

And, in a sector driven by a historically stable distribution model, success in a new channel could be a threat to people whose incentives are connected to the old way of doing things.

The moral of this story: identify stakeholders and get them on board as early as possible, and always before the test is implemented and results are generated. Smart people can discount test results that cause personal discomfort. That’s why building buy-in is as important as getting the design, talent, capabilities, and output reporting details right.
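The funnel point in the insurance story can be made concrete with simple arithmetic. All of the conversion rates below are hypothetical, invented only to show the mechanism; they are not the carrier's figures, and `closed_sales` is an illustrative helper.

```python
def closed_sales(leads, stage_rates):
    """Carry a lead count through each funnel stage's conversion rate, in order."""
    count = leads
    for rate in stage_rates.values():
        count *= rate
    return count


# Hypothetical stage-to-stage conversion rates for leads from the old site
old_site = {
    "call_back": 0.6,     # lead form -> successful call-back
    "meeting": 0.5,       # call-back -> meeting
    "application": 0.5,   # meeting -> completed application
    "underwriting": 0.8,  # application -> approved in underwriting
    "acceptance": 0.7,    # approval -> offer accepted
    "payment": 0.9,       # acceptance -> first payment, sale closed
}
# Assume the new site's extra leads are less qualified at the top of the funnel
new_site = dict(old_site, call_back=0.2, meeting=0.25)

before = closed_sales(1_000, old_site)  # pre-redesign leads
after = closed_sales(6_000, new_site)   # 500 percent growth = six times the leads
```

With these made-up rates, six times the leads yields the same number of closed sales (about 75.6 in both cases). A pre/post read on leads alone cannot reveal this, which is the gap between a site team celebrating leads and a sales team reading its commission checks.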

Where do you start and how do you move forward?

Two pieces of advice:

- Think big. Start small. Act quickly. Especially start small. Be really specific about the questions you want the tests to answer. You cannot afford to boil the ocean. Some startups succeed by going after a specific learning niche. Even global companies start with focus on a very specific test objective, and then broaden.

- Iterate and know when to persist. When statistical knowledge, logic, judgment, and stakeholder needs all come together, the testing path will be anything but linear.

A financial institution selling a complex product initiated an experiment whose goal was to understand direct-to-consumer selling dynamics, from marketing message to application submission.

Only a small number of applications were submitted online, so the test was seen internally as a failure. But the team leading the test did not give up. They introduced econometric techniques to determine the overall business impact for the population receiving digital messaging. It turned out that among the digital message recipients, business results were measurably up. Compared with traditional methods, digital communications drove incrementally more prospects to contact an advisor for assistance. Clients preferred to follow up on the digital marketing message by speaking with a person, not by submitting an application online.

In retrospect, this buyer journey makes a lot of sense given the nature of the product offer. Had the team not persisted in assessing results more thoroughly, the value of the multichannel experience would have been missed.
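The story does not say which econometric techniques the team used. One common option, swapped in here purely as an illustration, is a difference-in-differences estimate: compare how the outcome changed for digital message recipients against how it changed for a comparable group that did not receive the messages. The function name and sample data are hypothetical, and the outcome is counted through any channel (advisor or online), not only online applications.

```python
from statistics import mean


def did_lift(treated_pre, treated_post, control_pre, control_post):
    """Difference-in-differences estimate of incremental impact.

    Each argument is a list of per-customer outcomes for one group and period.
    Subtracting the comparison group's change removes shifts that hit both
    groups alike (seasonality, market conditions). It relies on the two groups
    having moved in parallel absent the messaging.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcomes: 1 = became a client through ANY channel (advisor or online)
treated_pre, treated_post = [0, 0, 0, 1], [1, 1, 0, 1]
control_pre, control_post = [0, 0, 0, 1], [0, 1, 0, 1]
lift = did_lift(treated_pre, treated_post, control_pre, control_post)
```

Here the lift is 0.25, meaning 25 percentage points beyond the comparison group's change. The tiny lists only show the mechanics; a real read needs far more customers and a check that the groups trended together before the messaging. The design choice that matters most is the outcome definition: counting only online applications would have reproduced the original "failure" read.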

Testing and experimenting are pillars of scaling. There will be hiccups no matter how well designed the plan. As with the entirety of the business, the testing process itself will develop and refine with experimentation.

Three Cs of Testing and Experimenting

Capabilities:

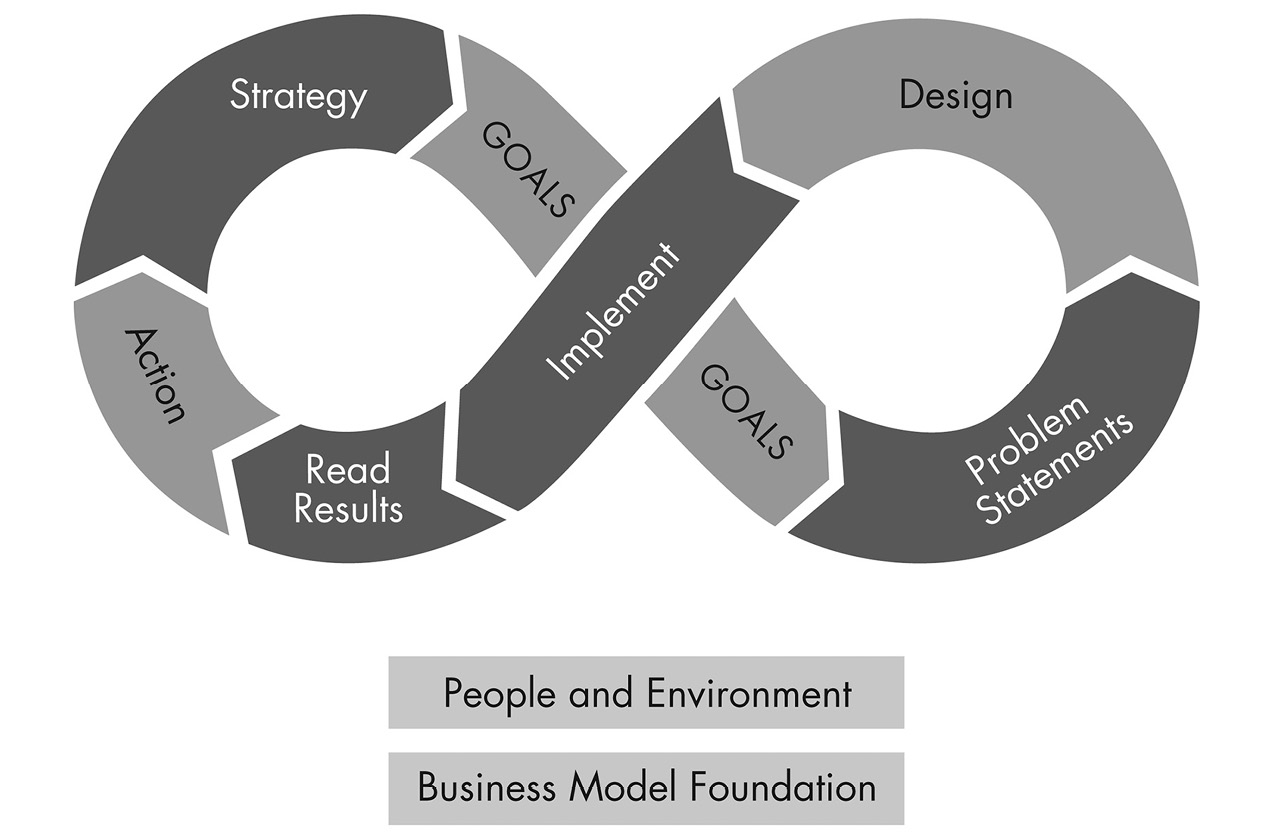

Visualizing the Testing and Experimenting Capability

- ➤ Process: An infinity loop — because each test and result leads to new questions, tests, and results.

- ➤ Foundation: The business-model drivers — the design as you would like it to be, and the reality of performance

- ➤ People & Environment: Diverse functional and technical skill sets, and diverse stakeholders. Must-haves: operational knowledge of how the business works and what moves the levers, and an embrace of intellectual curiosity.

- ➤ Inputs:
  - Strategy
  - Goal(s)
  - Problem Statement(s)

- ➤ Execution:
  - Design
  - Implement experiments

- ➤ Learn & progress:
  - Results
  - Actions

Connections:

Team structure: The Pod

In a conventional hierarchical structure, people’s accountability (and incentives) align with their organizational silo (and manager). An alternative is to create a testing team that converges around a problem statement (designing, testing, solving, and implementing) and then disbands.

The “Pod” is a semi-permanent, virtual structure that drives speed and solutions. Even if an organization is large enough to dedicate a permanent team, a more powerful approach is to tap into people around the organization for different perspectives, specialized knowledge, and relationships.

Dedicate the implementers full-time to solving the problem statement over a sixty-to-ninety-day period. The implementers might come from analytics, product, UX/design, and channel execution. Legal or compliance should be on the team as partners in making the tests happen, not as approvers at the end of the line.

The traditional way is to assign people from each silo to assist on tests in addition to everything else already on their plates. The risk is that testing becomes a side-of-the-desk activity, and the value of the Pod, a highly focused team that gets things done, is never realized.

The Pod:

- ➤ Engages employees in development assignments where they apply expertise in new directions.

- ➤ Gets everyone to feel invested in answering the question.

- ➤ Increases objectivity about what the data is revealing, and how to push business model operating levers to act upon findings.

- ➤ Allows for faster decision making.

Culture:

Cultural attributes for successful testing and experimenting include:

- ➤ Eager to learn through a proactive search for knowledge and understanding.

- ➤ Fearless about failure or retribution. “Fail fast, leave it in the dust, and go on to the next thing,” says Donna Peeples.

- ➤ Focused on the outcome: scaling and sustaining the business model.

- ➤ Courageous and confident. There may be little (or nothing) to look back on and no precedents to model. Or there may be an incredibly successful legacy now facing disruption.

- ➤ Proactive. Testing to push the boundaries of any orthodoxy, even the limits of policies or regulations.

- ➤ Collaborative. Engaging stakeholders whose worlds are being questioned by the test goals and whose support is required.

- ➤ Creative and thoughtful. Imagination, dot connecting, and critical thinking animate data and lead to insight, implications, and impact.

Chapter summary

- ➤ Testing and experimenting depend upon willingness to explore, diverse capabilities, investment, and commitment.

- ➤ Effectiveness depends upon knowing the business priority, aligning learning priorities and test design to strategy, pursuing and acting upon post-testing analysis, and keeping an active inventory of tests and experiments.

- ➤ There is no excuse not to test and experiment.

- ➤ Be clear on what type of insight is needed: hindsight (descriptive results of what happened), insight (diagnostic results explaining why something happened), or foresight (progressive insights about how to make something happen).

- ➤ Three guideposts for testing and experimenting: 1) defining the question, whose answer will enable better decisions, 2) understanding the business model at an operational level, and 3) anticipating execution requirements to translate findings into actions.

- ➤ Three pitfalls: 1) failure to connect strategy all the way through to test methodology, design, execution, interpreting results, and taking action, 2) failure to go deep enough in post-test analysis, including running iterative test cycles to answer the next set of questions, and 3) failure to maintain an inventory of tests and experiments including design, hypotheses, findings, and actions.

- ➤ Take full advantage of proprietary data before going to external sources. Make the ongoing investment to capture, organize, and make sense of your own customer data.

- ➤ A good approach to focusing test goals is to fill in the blank in the sentence: “We will be successful in our venture if ______ happens.” Defining test goals and priorities at the outset enables an integrated plan that will maximize learning.

- ➤ As always, diverse people collaborating make the difference: data experts along with those who shape the strategy and brand, engage directly with customers, and know the wiring and plumbing of the business model. Include the skill sets of both the planner and the implementer.

- ➤ Testing requires infrastructure. What is good enough for early efforts? What is required to scale? Solve for the strategy, goals, and implementation.

- ➤ By design, tests and experiments should answer otherwise unanswerable questions. That means there could be surprise answers, disappointing answers, uncomfortable answers.

- ➤ Focus on what you can read and act upon. Get buy-in on the measurement standards up front — not just the types of metrics, but also the targets defining success.

- ➤ Iterate and persist.7

Notes

1. The Myers & Briggs Foundation, 2014. Sourced during July and August 2017, http://www.myersbriggs.org.

2. Dax Hamman, partner, Reinvent Partners and Fresh Media, in discussion with the author, May 2017.

3. Erik Asgeirsson, CEO, CPA.com, in discussion with the author, July 2017.

4. Deborah Chardt, “Marijuana Industry Projected to Create More Jobs Than Manufacturing by 2020,” forbes.com, February 22, 2017.

5. Howard Lee, founder, Spoken Communications, in discussion with the author, August 2017.

6. Rochelle Gorey, cofounder, CEO, and president, SpringFour, Inc., in discussion with the author, June 2017.

7. Elizabeth Rosenthal, “How the High Cost of Health Care Is Affecting Most Americans,” NYTimes.com, December 18, 2014.