Network Ports, Services, and Threats

Network Design Elements and Components

In today’s network infrastructures, it is critical to know the fundamentals of basic security infrastructure. Before any computer is connected to the Internet, planning must occur to make sure that the network is designed in a secure manner. Many of the attacks that hackers use succeed because of an insecure network design. That is why it is so important for a security professional to use secure topologies and tools like intrusion detection and prevention. Another example is virtual local area networks (VLANs), which confine a broadcast domain to a group of switch ports. This relates directly to secure topologies, because different Internet Protocol (IP) subnets can be put on different port groupings and separated, either by routing or by applying an access control list (ACL). This allows for separation of network traffic; for example, the executive group can be isolated from the general user population on a network.
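
As a rough illustration of the subnet separation described above, the following Python sketch models an ACL that keeps a general user subnet from reaching an executive subnet. The subnet addresses and the function name acl_permits are assumptions made for this example only; on a real network the equivalent rule would be configured on the router or switch.

```python
import ipaddress

# Hypothetical subnets, one per VLAN (illustrative values only).
EXEC_NET = ipaddress.ip_network("10.0.10.0/24")
USER_NET = ipaddress.ip_network("10.0.20.0/24")


def acl_permits(src: str, dst: str) -> bool:
    """Return False when a general user host tries to reach the executive subnet."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    if src_ip in USER_NET and dst_ip in EXEC_NET:
        return False  # explicit deny: users may not reach executives
    return True       # everything else is permitted in this toy example


if __name__ == "__main__":
    print(acl_permits("10.0.20.15", "10.0.10.5"))   # user -> executive
    print(acl_permits("10.0.10.5", "10.0.20.15"))   # executive -> user
```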

Other items related to topology that we examine in this chapter include demilitarized zones (DMZs). We will explore how DMZs can be used in conjunction with network address translation (NAT) and extranets to help build a more secure network. By understanding each of these items, you will see how they can be used to build a layered defense against attack.

This chapter also covers intrusion detection. It is important to understand not only the concepts of intrusion detection, but also the use and placement of intrusion detection systems (IDSes) within a network infrastructure. The placement of an IDS is critical to deployment success. We will also cover intrusion prevention systems (IPSes), honeypots, honeynets, and incident response and how they each have a part to play in protecting your network environment.

TEST DAY TIP An ACL is a list of users who have permission to access a resource or modify a file. ACLs are used in nearly all modern-day operating systems (OSes) to determine what permissions a user has on a particular resource or file.

All networks contain services that provide some type of functionality. Some of the services are essential to the health of the network, or required for user functionality, but others can be disabled or removed because they are superfluous. When services that are not actively being used exist on a network, the chances of exploitation increase. Simply having a service enabled offers additional opportunity for hackers to attempt entrance into your infrastructure. If a service is required and utilized in the organization, it becomes your job as the administrator to safeguard the service and ensure that all is in working order. When a network service is installed and made available but is not in use or required by the organization, there is a tendency for the service to fall out of view. It may not be noticed or monitored by system administrators, which provides a perfect opening for malicious attackers. They can hammer away at your environment, seemingly without your knowledge, in an attempt to breach it.

When you are considering whether to enable or disable services, there are things that must be considered to protect the network and its internal systems. It is important to evaluate the current needs and conditions of the network and infrastructure, and then begin to eliminate unnecessary services. This leads to a cleaner network structure, which then becomes less vulnerable to attack.

Not all networks are created the same; thus, not all networks should be physically laid out in the same fashion. The judicious usage of differing security topologies in a network can offer enhanced protection and performance. We will discuss the components of a network and the security implications of each. By understanding the fundamentals of each component and being able to design a network with security considerations in mind, you will be able to better prepare yourself and your environment for the inevitable barrage of attacks that take place every day. With the right planning and design you will be able to minimize the impact of attacks, while successfully protecting important data.

Many tools that exist today can help you to better manage and secure your network environment. We will focus on a few specific tools that give you the visibility that is needed to keep your network secure, especially intrusion detection and prevention, firewalls, honeypots, content filters, and protocol analyzers. These tools allow network administrators to monitor, detect, and contain malicious activity in any network environment. Each of these tools plays a different part in the day-to-day work of a network administrator and helps ensure that you are well armed and well prepared to handle whatever malicious attacks might come your way.

A successful security strategy requires many layers and components. One of these components is the intrusion detection system (IDS) and the newer derivation of this technology, the intrusion prevention system (IPS). Intrusion detection is an important piece of security in that it acts as a detective control. A simple analogy to an intrusion detection system is a house alarm. When an intruder enters a house through an entry point that is monitored by the alarm, the siren sounds and the police are alerted. Similarly, an intrusion prevention system would not only sound the siren and alert the police, but would also kick the intruders out of the house and keep them out by closing the window and locking it automatically. The big distinction between an IDS/IPS and a firewall or other edge screening device is that the latter are not capable of detailed inspection of network traffic patterns and behavior that match known attack signatures. Therefore, they are unable to reliably detect or prevent developing or in-progress attacks.

The simplest definition of an IDS is “a specialized tool that can detect and identify malicious traffic or activity in a network or on a host.” To achieve this, an IDS often uses a database of known attack signatures against which it compares the patterns of activity, traffic, or behavior it sees in the network or on a host. Once an attack has been identified, the IDS can issue alarms or alerts or take a variety of actions to terminate the attack. These actions typically range from modifying firewall or router access lists to block the connection from the attacker, to using a TCP reset to terminate the connection at both the source and the target. In the end, the final goal is the same: interrupt the connection between the attacker and the target and stop the attack.
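
The signature comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular IDS product works: the signature names and byte patterns are invented for the example, and real signature languages are far richer than a substring match.

```python
# Hypothetical signature database: name -> byte pattern (illustrative only).
SIGNATURES = {
    "directory-traversal": b"../../",
    "cmd-exe-probe": b"cmd.exe",
}


def match_signatures(payload: bytes) -> list:
    """Return the sorted names of all signatures whose pattern occurs in the payload."""
    return sorted(name for name, pattern in SIGNATURES.items() if pattern in payload)


def inspect(payload: bytes) -> str:
    """Produce an alert string if any signature matches; otherwise report 'ok'."""
    hits = match_signatures(payload)
    return "ALERT: " + ", ".join(hits) if hits else "ok"


if __name__ == "__main__":
    print(inspect(b"GET /scripts/../../winnt/system32/cmd.exe HTTP/1.0"))
    print(inspect(b"GET /index.html HTTP/1.0"))
```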

Like firewalls, intrusion detection systems may be software-based or may combine hardware and software (in the form of preinstalled and preconfigured standalone IDS devices). There are many opinions as to what is the best option. For the exam what’s important is to understand the differences. Often, IDS software runs on the same devices or servers where firewalls, proxies, or other boundary services operate. Although such devices tend to operate at the network periphery, IDS systems can detect and deal with insider attacks as well as external attacks as long as the sensors are placed appropriately to detect such attacks.

As we explained in Chapter 4, intrusion prevention systems (IPSes) are a possible line of defense against system attacks. By being proactive and defensive in your approach, as opposed to reactive, you stop more attempts at network access at the door. IPSes typically exist at the boundaries of your network infrastructure and function much like a firewall. The big distinction between IPSes and firewalls is that IPSes are smarter devices in that they make determinations based on content as opposed to ports and protocols. By being able to examine content at the application layer, IPSes can do a better job of protecting your network from things like worms and Trojans before the destructive content is allowed into your environment.

An IPS is capable of responding to attacks when they occur. This behavior is desirable from two points of view. For one thing, a computer system can track behavior and activity in near-real time and respond much more quickly and decisively during the early stages of an attack. Because automation helps hackers mount attacks, it stands to reason that it should also help security professionals fend them off as they occur. For another thing, an IPS can stand guard 24 hours per day, 7 days per week, whereas network administrators may not be able to respond as quickly during off hours as they can during peak hours. By automating a response and moving from detection to prevention, these systems gain the ability to block incoming traffic from one or more addresses from which an attack originates. This allows the IPS to halt an attack in progress and block future attacks from the same address.
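
A minimal Python sketch of that automated response follows. The class name SimpleIPS, the returned status strings, and the sample signature are all assumptions for illustration; a production IPS would also expire blocks and guard against spoofed source addresses.

```python
class SimpleIPS:
    """Toy inline inspector: drop matching traffic and remember the sender."""

    def __init__(self, signatures):
        self.signatures = list(signatures)  # byte patterns treated as attacks
        self.blocked = set()                # source addresses already blocked

    def handle(self, src_ip: str, payload: bytes) -> str:
        # Traffic from a previously blocked source is dropped without inspection.
        if src_ip in self.blocked:
            return "dropped (source blocked)"
        # A signature hit halts this packet and blocks future traffic from the source.
        if any(sig in payload for sig in self.signatures):
            self.blocked.add(src_ip)
            return "dropped (attack detected, source blocked)"
        return "forwarded"


if __name__ == "__main__":
    ips = SimpleIPS([b"cmd.exe"])
    print(ips.handle("203.0.113.9", b"GET /cmd.exe"))
    print(ips.handle("203.0.113.9", b"GET /index.html"))
    print(ips.handle("198.51.100.7", b"GET /index.html"))
```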

Network intrusion detection systems (NIDS) and network intrusion prevention systems (NIPS) are similar in concept. At first glance an NIPS seems to be an extension of an NIDS, but in actuality the two systems are complementary and behave in a cooperative fashion. An NIDS exists for the purpose of catching malicious activity once it has arrived in your world. Whether the NIDS in your DMZ or your intranet captures the offending activity is immaterial; in both instances the activity is occurring within your network environment. With an NIPS, the activity is typically detected at the perimeter and disallowed from entering the network.

By deploying an NIDS and an NIPS you provide for a multilayered defense and ideally your NIPS is able to thwart attacks approaching your network from the outside in. Anything that makes it past the NIPS ideally would then be caught by the NIDS inside the network. Attacks originating from inside the network would also be addressed by the NIDS.

Head of the Class

Weighing IDS Options

In addition to the various IDS and IPS vendors mentioned in the list below, judicious use of a good Internet search engine can help network administrators to identify more potential suppliers than they would ever have the time or inclination to investigate in detail. That is why we also urge administrators to consider an alternative: deferring some or all of the organization’s network security technology decisions to a special type of outsourcing company. Known as managed security services providers (MSSPs), these organizations help their customers select, install, and maintain state-of-the-art security policies and technical infrastructures to match. For example, Guardent is an MSSP that includes comprehensive firewall, IDS and IPS services among its many customer offerings; visit www.guardent.com for a description of the company’s various service programs and offerings.

A huge number of potential vendors can provide IDS and IPS products to companies and organizations. Without specifically endorsing any particular vendor, the following products offer some of the most widely used and best-known solutions in this product space:

Cisco Systems It is best known for its switches and routers, but offers significant firewall and intrusion detection products as well (www.cisco.com).

GFI LANguard It is a family of monitoring, scanning, and file integrity check products that offer broad intrusion detection and response capabilities (www.gfi.com/languard/).

TippingPoint It is a division of 3Com that makes an inline IPS device that is considered one of the first IPS devices on the market.

Internet Security Systems (ISS) (a division of IBM) ISS offers a family of enterprise-class security products called RealSecure that includes comprehensive intrusion detection and response capabilities (www.iss.net).

McAfee It offers the IntruShield IPS systems that can handle gigabit speeds and greater (www.mcafee.com).

Sourcefire It is the best-known vendor of open source IDS software, as it is the developer of Snort, an open source IDS application that can be run on Windows or Linux systems (www.snort.org).

Head of the Class

Getting Real Experience Using an IDS

One of the best ways to get some experience using IDS tools like tcpdump and Snort is to check out one of the growing number of bootable Linux OSes. Because all of the tools are precompiled and ready to run right off the CD, you only have to boot the computer from the disk. One good example of such a bootable disk is BackTrack. This CD-based Linux OS has more than 300 security tools that are ready to run. Learn more at www.remote-exploit.org/backtrack.html.

A clearinghouse for ISPs known as ISP-Planet offers all kinds of interesting information online about MSSPs, plus related firewall, virtual private networking (VPN), intrusion detection, security monitoring, antivirus, and other security services. For more information, visit any or all of the following URLs:

ISP-Planet Survey Managed Security Service Providers, participating provider’s chart, www.isp-planet.com/technology/mssp/participants_chart.html.

Managed firewall services chart, www.isp-planet.com/technology/mssp/firewalls_chart.html.

Managed VPN chart, www.isp-planet.com/technology/mssp/services_chart.html.

Managed intrusion detection and security monitoring, www.isp-planet.com/technology/mssp/monitoring_chart.html.

Managed antivirus and managed content filtering and URL blocking, www.isp-planet.com/technology/mssp/mssp_survey2.html.

Managed vulnerability assessment and emergency response and forensics, www.isp-planet.com/technology/mssp/mssp_survey3.html.

Exercise 1 introduces you to WinDump. This tool is similar to the Linux tool tcpdump. It is a simple packet-capture program that can be used to help demonstrate how IDSes work. Every IDS must first capture packets so that the traffic can be analyzed.

1. Go to www.winpcap.org/windump/install/

2. At the top of the page you will see a link for WinPcap. This program will need to be installed as it will allow the capture of low level packets.

3. Next, download and install the WinDump program from the link indicated on the same Web page.

4. You’ll now need to open a command prompt by clicking Start, Run, and entering cmd in the Open Dialog box.

5. With a command prompt open, you can now start the program by typing windump at the command line. By default, it will use the first Ethernet adaptor found. You can display the help screen by typing windump -h. The example below specifies the second adaptor.

C:\>windump -i 2

6. You should now see the program running. If there is little traffic on your network, you can open a second command prompt and ping a host such as www.yahoo.com. The results should be seen in the screen you have open that is running WinDump as seen below.

windump: listening on \Device\ethO_

14:07:02.563213 IP earth.137 > 192.168.123.181.137: UDP, length 50

14:07:04.061618 IP earth.137 > 192.168.123.181.137: UDP, length 50

14:07:05.562375 IP earth.137 > 192.168.123.181.137: UDP, length 50
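
To hint at what an IDS does once packets are captured, the Python sketch below splits output lines of the form shown above into fields that could then be analyzed. The regular expression is an assumption based only on these three sample lines; WinDump prints many other line formats that this sketch deliberately ignores.

```python
import re

# Pattern inferred from the sample lines above (time, source, destination,
# protocol, length); other WinDump output formats will simply not match.
LINE_RE = re.compile(
    r"^(?P<time>\d{2}:\d{2}:\d{2}\.\d+) IP "
    r"(?P<src>\S+)\.(?P<sport>\d+) > "
    r"(?P<dst>\S+)\.(?P<dport>\d+): "
    r"(?P<proto>\w+), length (?P<length>\d+)$"
)


def parse_line(line: str):
    """Return a dict of packet fields, or None if the line is not a packet line."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    for key in ("sport", "dport", "length"):
        record[key] = int(record[key])  # numeric fields become integers
    return record


if __name__ == "__main__":
    sample = "14:07:02.563213 IP earth.137 > 192.168.123.181.137: UDP, length 50"
    print(parse_line(sample))
```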

A firewall is the most common device used to protect an internal network from outside intruders. When properly configured, a firewall blocks access to an internal network from the outside, and blocks users of the internal network from accessing potentially dangerous external networks or ports.

There are three firewall technologies examined in the Security+ exam:

Packet filtering

Application layer gateways

Stateful inspection

Head of the Class

What Is a Firewall?

A firewall is a security system that is intended to protect an organization’s network against external threats, such as hackers, coming from another network, such as the Internet.

In simple terms, a firewall is a hardware or software device used to keep undesirables electronically out of a network the same way that locked doors and secured server racks keep undesirables physically away from a network. A firewall filters traffic crossing it (both inbound and outbound) based on rules established by the firewall administrator. In this way, it acts as a sort of digital traffic cop, allowing some (or all) of the systems on the internal network to communicate with some of the systems on the Internet, but only if the communications comply with the defined rule set.

All of these technologies have advantages and disadvantages, but the Security+ exam specifically focuses on their abilities and the configuration of their rules. A packet-filtering firewall works at the network layer of the Open Systems Interconnect (OSI) model and is designed to operate rapidly by either allowing or denying packets. The second generation of firewalls is called “circuit level firewalls,” but this type has been largely abandoned as later generations of firewalls absorbed its functions. An application layer gateway operates at the application layer of the OSI model, analyzing each packet and verifying that it contains the correct type of data for the specific application it is attempting to communicate with. A stateful inspection firewall checks each packet to verify that it is an expected response to a current communications session. This type of firewall operates at the network layer, but is aware of the transport, session, presentation, and application layers and derives its state table based on these layers of the OSI model. Another term for this type of firewall is a “deep packet inspection” firewall, indicating its use of all layers within the packet, including examination of the data itself.
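
The idea of checking that a packet is "an expected response to a current communications session" can be sketched with a small state table in Python. The class and method names are hypothetical, and the sketch tracks only address and port pairs; real stateful firewalls also track protocol state, sequence numbers, and timeouts.

```python
class StateTable:
    """Toy state table: remember outbound sessions, admit only matching replies."""

    def __init__(self):
        self._sessions = set()

    def record_outbound(self, src: str, sport: int, dst: str, dport: int) -> None:
        # An internal host opened a connection; remember its endpoints.
        self._sessions.add((src, sport, dst, dport))

    def allow_inbound(self, src: str, sport: int, dst: str, dport: int) -> bool:
        # A legitimate reply has the endpoints of a recorded session, reversed.
        return (dst, dport, src, sport) in self._sessions


if __name__ == "__main__":
    table = StateTable()
    table.record_outbound("192.168.1.10", 50123, "198.51.100.5", 443)
    print(table.allow_inbound("198.51.100.5", 443, "192.168.1.10", 50123))  # reply
    print(table.allow_inbound("203.0.113.9", 443, "192.168.1.10", 50123))   # unsolicited
```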

To better understand the function of these different types of firewalls, we must first understand what exactly the firewall is doing. The highest level of security requires that firewalls be able to access, analyze, and utilize communication information, communication-derived state, and application-derived state, and be able to perform information manipulation. Each of these terms is defined below:

Communication Information Information from all layers in the packet.

Communication-derived State The state as derived from previous communications.

Application-derived State The state as derived from other applications.

Information Manipulation The ability to perform logical or arithmetic functions on data in any part of the packet.

Different firewall technologies support these requirements in different ways. Again, keep in mind that some circumstances may not require all of these, but only a subset. In that case, it is best to go with a firewall technology that fits the situation rather than one that is simply the newest technology. Table 6.1 shows the firewall technologies and their support of these security requirements.

A proxy server is a server that sits between an intranet and its Internet connection. Proxy servers provide features such as document caching (for faster browser retrieval) and access control. Proxy servers can provide security for a network by filtering and discarding requests that are deemed inappropriate by an administrator. Proxy servers also protect the internal network by masking all internal IP addresses—all connections to Internet servers appear to be coming from the IP address of the proxy servers.

A network layer firewall or a packet-filtering firewall works at the network layer of the OSI model and can be configured to deny or allow access to specific ports or IP addresses. The two policies that can be followed when creating packet-filtering firewall rules are allow by default and deny by default. Allow by default allows all traffic to pass through the firewall except traffic that is specifically denied. Deny by default blocks all traffic from passing through the firewall except for traffic that is explicitly allowed.

Deny by default is the best security policy, because it follows the general security concept of restricting all access to the minimum level necessary to support business needs. The best practice is to deny access to all ports except those that are absolutely necessary. For example, if configuring an externally facing firewall for a demilitarized zone (DMZ), Security+ technicians may want to deny all ports except port 443 (the Secure Sockets Layer [SSL] port) to require all connections coming in to the DMZ to use Hypertext Transfer Protocol Secure (HTTPS) to connect to the Web servers. Although it is not practical to assume that only one port will be needed, the idea is to keep access to a minimum by following the best practice of denying by default.
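
The two policies can be contrasted in a short Python sketch. The Rule type and the decide function are hypothetical names for this illustration, and the rules match on destination port only, which is much simpler than a real packet filter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str  # "allow" or "deny"
    dport: int   # destination port the rule applies to


def decide(rules, dport: int, default: str = "deny") -> str:
    """Return the action of the first matching rule, else the default policy."""
    for rule in rules:
        if rule.dport == dport:
            return rule.action
    return default


if __name__ == "__main__":
    dmz_rules = [Rule("allow", 443)]       # deny by default, open HTTPS only
    print(decide(dmz_rules, 443))
    print(decide(dmz_rules, 80))
    permissive = [Rule("deny", 23)]        # allow by default, block Telnet only
    print(decide(permissive, 80, default="allow"))
```

With deny by default, forgetting a rule leaves a port closed; with allow by default, forgetting a rule leaves it open, which is why the former is the safer failure mode.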

A firewall works in two directions. It can be used to keep intruders at bay, and it can be used to restrict access to an external network from its internal users. Why do this? A good example is found in some Trojan horse programs. When Trojan horse applications are initially installed, they report back to a centralized location to notify the author or distributor that the program has been activated. Some Trojan horse applications do this by reporting to an Internet Relay Chat (IRC) channel or by connecting to a specific port on a remote computer. By denying access to these external ports in the firewall configuration, Security+ technicians can prevent these malicious programs from compromising their internal network.

The Security+ exam extensively covers ports and how they should come into play in a firewall configuration. The first thing to know is that of the 65,536 possible ports (numbered 0 through 65,535), ports 0 through 1,023 are considered well-known ports. These ports are used for specific network services and should be considered the only ports allowed to transmit traffic through a firewall. Ports outside the range of 0 through 1,023 are either registered ports or dynamic/private ports.

Registered (user) ports range from 1,024 through 49,151.

Dynamic/private ports range from 49,152 through 65,535.

If there are no specialty applications communicating with a network, any connection attempt to a port outside the well-known ports range should be considered suspect. Although there are some network applications that work outside of this range that may need to go through a firewall, they should be considered the exception and not the rule. Even so, ports 0 through 1,023 should not all be enabled. Many of these ports also expose vulnerabilities; therefore, it is best to continue with the best practice of denying by default and only opening the ports necessary for specific needs.
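
The port ranges given above translate directly into a small classification function. This Python sketch uses the function name classify_port purely for illustration.

```python
def classify_port(port: int) -> str:
    """Classify a TCP/UDP port number using the ranges given in the text."""
    if not 0 <= port <= 65535:
        raise ValueError("port must be between 0 and 65535")
    if port <= 1023:
        return "well-known"
    if port <= 49151:
        return "registered"
    return "dynamic/private"


if __name__ == "__main__":
    for port in (80, 2320, 49152):
        print(port, classify_port(port))
```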

For a complete list of assigned ports, visit the Internet Assigned Numbers Authority (IANA) at www.iana.net. The direct link to their list of ports is at www.iana.org/assignments/port-numbers. The IANA is the centralized organization responsible for assigning IP addresses and ports. They are also the authoritative source for which ports applications are authorized to use for the services the applications are providing.

Damage and Defense

Denial-of-Service Attacks

A port is a connection point into a device. Ports can be physical, such as serial ports or parallel ports, or they can be logical. Logical ports are ports used by networking protocols to define a network connection point to a device. Using Transmission Control Protocol/Internet Protocol (TCP/IP), both TCP and User Datagram Protocol (UDP) logical ports are used as connection points to a network device. Because a network device can have thousands of connections active at any given time, these ports are used to differentiate between the connections to the device.

A port is described as well known for a particular service when it is normal and common to find that particular software running at that particular port number. For example, Web servers run on port 80 by default, and File Transfer Protocol (FTP) file transfers use ports 20 and 21 on the server when it is in active mode. In passive mode, the server uses a random port for data connection and port 21 for the control connection.

To determine what port number to use, technicians need to know what port number the given software is using. To make that determination easier, there is a list of common services that run on computers along with their respective well-known ports. This allows the technician to apply the policy of denying by default, and only open the specific port necessary for the application to work. For example, if they want to allow the Siebel Customer Relations Management application from Oracle to work through a firewall, they would check against a port list (or the vendor’s documentation) to determine that they need to allow traffic to port 2,320 to go through the firewall. A good place to search for port numbers and their associated services online is on Wikipedia. This list is fairly up to date and can help you find information on a very large number of services running on all ports (http://en.wikipedia.org/wiki/List_of_TCP_and_UDP_port_numbers). You will notice that even Trojan horse applications have well-known port numbers. A few of these have been listed in Table 6.2.

EXAM WARNING The Security+ exam requires that you understand how the FTP process works. There are two modes in which FTP operates: active and passive.

Active Mode

1. The FTP client initializes a control connection from a random port higher than 1,023 to the server’s port 21.

2. The FTP client sends a PORT command instructing the server to connect to a port on the client one higher than the client’s control port. This is the client’s data port.

3. The server sends data to the client from server port 20 to the client’s data port.

Passive Mode

1. The FTP client initializes a random port higher than 1,023 as the control port, and initializes the port one higher than the control port as the data port.

2. The FTP client sends a PASV command instructing the server to open a random data port.

3. The server sends a PORT command notifying the client of the data port number that was just initialized.

4. The FTP client then sends data from the data port it initialized to the data port the server instructed it to use.
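
The port numbers exchanged in both modes travel on the control connection as six comma-separated numbers: the four octets of the IP address followed by the high and low bytes of the port, as specified in RFC 959. The Python sketch below encodes the argument of an active mode PORT command and decodes the 227 reply a server returns to PASV. The function names and sample addresses are assumptions for illustration.

```python
import re


def encode_port_argument(ip: str, port: int) -> str:
    """Build the h1,h2,h3,h4,p1,p2 argument used by the FTP PORT command."""
    octets = ip.split(".")
    return ",".join(octets + [str(port // 256), str(port % 256)])


def decode_pasv_reply(reply: str):
    """Extract (ip, port) from a 227 reply such as '227 ... (h1,h2,h3,h4,p1,p2)'."""
    match = re.search(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)", reply)
    if match is None:
        raise ValueError("no address found in reply")
    numbers = [int(group) for group in match.groups()]
    ip = ".".join(str(n) for n in numbers[:4])
    port = numbers[4] * 256 + numbers[5]  # high byte * 256 + low byte
    return ip, port


if __name__ == "__main__":
    print(encode_port_argument("192.168.1.10", 1025))
    print(decode_pasv_reply("227 Entering Passive Mode (192,168,1,20,195,80)"))
```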

Unfortunately, for nearly every possible port number, there is a virus or Trojan horse application that could be running there. For a more comprehensive list of Trojans listed by the port they use, go to the SANS Institute Web site at www.sans.org/resources/idfaq/oddports.php.

EXAM WARNING The Security+ exam puts a great deal of weight on your knowledge of specific well-known ports for common network services. The most important ports to remember are:

20 FTP Active Mode Data Port (see the Security+ exam warning on FTP for further information)

21 FTP Control Port (see the Security+ exam warning on FTP for further information)

22 Secure Shell (SSH)

23 Telnet

25 Simple Mail Transfer Protocol (SMTP)

80 HTTP

110 Post Office Protocol 3 (POP3)

119 Network News Transfer Protocol (NNTP)

143 Internet Message Access Protocol (IMAP)

443 SSL (HTTPS)

Memorizing these ports and the services that run on them will help you with firewall and network access questions on the Security+ exam.

Packet filtering has both benefits and drawbacks. One of the benefits is speed. Because only the header of a packet is examined and a simple table of rules is checked, this technology is very fast. A second benefit is ease of use. The rules for this type of firewall are easy to define and ports can be opened or closed quickly. In addition, packet-filtering firewalls are transparent to network devices. Packets can pass through a packet-filtering firewall without the sender or receiver of the packet being aware of the extra step. A major bonus of using a packet-filtering firewall is that most current routers support packet filtering.

There are two major drawbacks to packet filtering:

A port is either open or closed. With this configuration, there is no way of simply opening a port in the firewall when a specific application needs it and then closing it when the transaction is complete. When a port is open, there is always a hole in the firewall waiting for someone to attack.

The second major drawback to packet filtering is that it does not understand the contents of any packet beyond the header. Therefore, if a packet has a valid header, it can contain any payload. This is a common failing point that is easily exploited.

To expand on this, because only the header is examined, packets cannot be filtered by user name, only by IP address. With some network services such as Trivial File Transfer Protocol (TFTP) or various UNIX “r” commands (rsh, rcp, and so forth), this can cause a problem. Because the port for these services is either opened or closed for all users, the options are either to restrict system administrators from using the services, or invite the possibility of any user connecting and using these services. The operation of this firewall technology is illustrated in Figure 6.1.

Referring to Figure 6.1 the sequence of events is as follows:

1. Communication from the client starts by going through the seven layers of the OSI model.

2. The packet is then transmitted over the physical media to the packet-filtering firewall.

3. The firewall works at the network layer of the OSI model and examines the header of the packet.

4. If the packet is destined for an allowed port, the packet is sent through the firewall over the physical media and up through the layers of the OSI model to the destination address and port.

FIGURE 6.1

Packet Filtering Technology
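The header-only decision described above can be sketched in a few lines of Python. This is a minimal illustration, not the behavior of any particular product: the `Header` class, the `ALLOWED` rule table, and the addresses are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Header:
    """Only the fields a packet filter looks at; the payload is never examined."""
    src_ip: str
    dst_ip: str
    protocol: str  # "tcp" or "udp"
    dst_port: int


# Hypothetical rule table: (protocol, destination port) pairs that are open.
ALLOWED = {("tcp", 25), ("tcp", 80), ("tcp", 443)}


def filter_packet(header: Header, payload: bytes) -> str:
    """Return "forward" or "drop" based on the header alone."""
    # The payload argument is deliberately ignored. That is the weakness
    # described in the text: a valid header can carry any content.
    if (header.protocol, header.dst_port) in ALLOWED:
        return "forward"
    return "drop"


web = Header("203.0.113.5", "192.168.1.10", "tcp", 80)
telnet = Header("203.0.113.5", "192.168.1.10", "tcp", 23)

print(filter_packet(web, b"GET / HTTP/1.1\r\n\r\n"))
print(filter_packet(telnet, b"login:"))
print(filter_packet(web, b"\x00 arbitrary non-HTTP bytes"))
```

The first and third calls receive the same answer because the rule lookup never touches the payload, and a port listed in the table stays open for every sender at all times.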

The second firewall technology is called application filtering or an application-layer gateway. This technology is more advanced than packet filtering, as it examines the entire packet and determines what should be done with the packet based on specific defined rules. For example, with an application-layer gateway, if a Telnet packet is sent through the standard FTP port, the firewall can determine this and block the packet if a rule is defined disallowing Telnet traffic through the FTP port. It should be noted that this technology is used by proxy servers to provide application-layer filtering to clients.

One of the major benefits of application-layer gateway technology is its application-layer awareness. Because application-layer gateway technology can determine more information from a packet than a simple packet filter can, application-layer gateway technology uses more complex rules to determine the validity of any given packet. These rules take advantage of the fact that application-layer gateways can determine whether data in a packet matches what is expected for data going to a specific port. For example, the application-layer gateway can tell if packets containing controls for a Trojan horse application are being sent to the HTTP port (80) and thus, can block them.
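As a rough sketch of what application-layer awareness means, the following Python fragment checks whether data sent to port 80 actually looks like an HTTP request. The regular expression and the `inspect` function are assumptions made for illustration; a real gateway applies far more detailed protocol rules.

```python
import re

# Hypothetical check: does the payload begin with an HTTP/1.x request line?
HTTP_REQUEST_LINE = re.compile(
    rb"^(GET|HEAD|POST|PUT|DELETE|OPTIONS) \S+ HTTP/1\.[01]\r\n"
)


def inspect(dst_port: int, payload: bytes) -> str:
    """Return "allow" or "block" after examining the payload as well as the port."""
    if dst_port == 80:
        # Data going to the HTTP port must look like HTTP.
        return "allow" if HTTP_REQUEST_LINE.match(payload) else "block"
    # This sketch only has a rule for port 80; everything else is blocked.
    return "block"


print(inspect(80, b"GET /index.html HTTP/1.1\r\nHost: www.example.com\r\n\r\n"))
print(inspect(80, b"\xff\xfb\x01"))  # Telnet option negotiation bytes sent to port 80
```

Unlike a packet filter, the decision here depends on the content, which is also why each packet costs more to process.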

Although application-layer gateway technology is much more advanced than packet-filtering technology, it does have its drawbacks. Because every packet is disassembled completely and then checked against a complex set of rules, application-layer gateways are much slower than the packet filters. In addition, only a limited set of application rules are predefined, and any application not included in the predefined list must have custom rules defined and loaded into the firewall. Finally, application-layer gateways process the packet at the application layer of the OSI model. By doing so, the application-layer gateway must then rebuild the packet from the top down and send it back out. This breaks the concept behind client/server architecture and slows the firewall down even further.

Client/server architecture is based on the concept of a client system requesting the services of a server system. This was developed to increase application performance and cut down on the network traffic created by earlier file sharing or mainframe architectures. When using an application-layer gateway, the client/server architecture is broken as the packets no longer flow between the client and the server. Instead, they are deconstructed and reconstructed at the firewall. The client makes a connection to the firewall at which point the packet is analyzed, then the firewall creates a connection to the server for the client. By doing this, the firewall is acting as a proxy between the client and the server. The operation of this technology is illustrated in Figure 6.2.

A honeypot is a computer system that is deliberately exposed to public access—usually on the Internet—for the express purpose of attracting and distracting attackers. In other words, these are the technical equivalent of the familiar police “sting” operation. Although the strategy involved in luring hackers to spend time investigating attractive network devices or servers can cause its own problems, finding ways to lure intruders into a system or network improves the odds of being able to identify those intruders and pursue them more effectively. Figure 6.3 shows a graphical representation of the honeypot concept in action.

FIGURE 6.2

Application-Layer Gateway Technology

Notes from the Field

Walking the Line between Opportunity and Entrapment

Most law enforcement officers are aware of the fine line they must walk when setting up a “sting”—an operation in which police officers pretend to be victims or participants in crime, with the goal of getting criminal suspects to commit an illegal act in their presence. Most states have laws that prohibit entrapment, that is, law enforcement officers are not allowed to cause a person to commit a crime and then arrest him or her for doing it. Entrapment is a defense to prosecution; if the accused person can show at trial that he or she was entrapped, the result must be an acquittal.

FIGURE 6.3

A Honeypot in Use to Keep Attackers from Affecting Critical Production Servers

Courts have traditionally held, however, that providing a mere opportunity for a criminal to commit a crime does not constitute entrapment. To entrap involves using persuasion, duress, or other undue pressure to force someone to commit a crime that the person would not otherwise have committed. Under this holding, setting up a honeypot or honeynet would be like the (perfectly legitimate) police tactic of placing an abandoned automobile by the side of the road and watching it to see if anyone attempts to burglarize, vandalize, or steal it. It should also be noted that entrapment only applies to the actions of law enforcement or government personnel. A civilian cannot entrap, regardless of how much pressure is exerted on the target to commit the crime. (However, a civilian could be subject to other charges, such as criminal solicitation or criminal conspiracy, for causing someone else to commit a crime.)

The following characteristics are typical of honeypots:

Systems or devices used as lures are set up with only “out of the box” default installations, so that they are deliberately made subject to all known vulnerabilities, exploits, and attacks.

The systems or devices used as lures do not include sensitive information (for example, passwords, data, applications, or services an organization depends on or must absolutely protect), so these lures can be compromised, or even destroyed, without causing damage, loss, or harm to the organization that presents them to be attacked.

Systems or devices used as lures often also contain deliberately tantalizing objects or resources, such as files named password.db, folders named Top Secret, and so forth (often consisting only of encrypted garbage data or log files of no real significance or value), to attract and hold an attacker’s interest long enough to give a backtrace a chance of identifying the attack’s point of origin.

Systems or devices used as lures also include or are monitored by passive applications that can detect and report on attacks or intrusions as soon as they start, so the process of backtracing and identification can begin as soon as possible.

EXAM WARNING A honeypot is a computer system that is deliberately exposed to public access—usually on the Internet—for the express purpose of attracting and distracting attackers. Likewise, a honeynet is a network set up for the same purpose, where attackers not only find vulnerable services or servers, but also find vulnerable routers, firewalls, and other network boundary devices, security applications, and so forth. You must know these for the Security+ exam.

The honeypot technique is best reserved for use when a company or organization employs full-time Information Technology (IT) security professionals who can monitor and deal with these lures on a regular basis, or when law enforcement operations seek to target specific suspects in a “virtual sting” operation. In such situations, the risks are sure to be well understood, and proper security precautions, processes, and procedures are far more likely to already be in place (and properly practiced). Nevertheless, for organizations that seek to identify and pursue attackers more proactively, honeypots can provide valuable tools to aid in such activities.

Exercise 2 outlines the basic process to set up a Windows Honeypot. Although there are many vendors of honeypots that will run on both Windows and Linux computers, this exercise will describe the install of a commercial honeypot that can be used on a corporate network.

1. KFSensor is a Windows-based honeypot IDS that can be downloaded as a demo from www.keyfocus.net/kfsensor/.

2. Fill out the required information for download.

3. Once the program downloads, accept the install defaults and allow the program to reboot the computer to finish the install.

4. Once installed, the program will step you through a wizard process that will configure a basic honeypot.

5. Allow the system to run for some time to capture data. The program will install a sensor in the program tray that will turn red when the system is probed by an attacker.
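To make the idea of a monitored lure concrete, here is a minimal Python sketch of a low-interaction honeypot listener. It is not how KFSensor works internally; the function names, the fake FTP banner, and the log format are assumptions for illustration only. The listener accepts a connection, records who connected and when, and sends a plausible banner.

```python
import socket
import threading
from datetime import datetime, timezone


def run_lure(server: socket.socket, log: list, max_connections: int) -> None:
    """Accept connections on an already-bound socket and record each source."""
    for _ in range(max_connections):
        conn, (ip, port) = server.accept()
        with conn:
            # Record the probe before replying so that it is never missed.
            log.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "source_ip": ip,
                "source_port": port,
            })
            conn.sendall(b"220 FTP service ready\r\n")  # fake banner


def start_lure(host: str = "127.0.0.1", port: int = 0, max_connections: int = 1):
    """Start the listener in a background thread; port 0 picks a free port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    log: list = []
    thread = threading.Thread(
        target=run_lure, args=(server, log, max_connections), daemon=True
    )
    thread.start()
    return server, log, thread
```

A caller would read the chosen port from `server.getsockname()[1]` and inspect `log` after a probe. A production honeypot would also need isolation from real systems, which this sketch does not attempt.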

A honeynet is a network that is set up for the same purpose as a honeypot: to attract potential attackers and distract them from your production network. In a honeynet, attackers will not only find vulnerable services or servers but also find vulnerable routers, firewalls, and other network boundary devices, security applications, and so forth.

The following characteristics are typical of honeynets:

Network devices used as lures are set up with only “out of the box” default installations, so that they are deliberately made subject to all known vulnerabilities, exploits, and attacks.

The devices used as lures do not include sensitive information (for example, passwords, data, applications, or services an organization depends on or must absolutely protect), so these lures can be compromised, or even destroyed, without causing damage, loss, or harm to the organization that presents them to be attacked.

Devices used as lures also include or are monitored by passive applications that can detect and report on attacks or intrusions as soon as they start, so the process of backtracing and identification can begin as soon as possible.

The Honeynet Project at www.honeynet.org is probably the best overall resource on the topic online; it not only provides copious information on the project’s work to define and document standard honeypots and honeynets, but it also does a great job of exploring hacker mindsets, motivations, tools, and attack techniques.

Although this technique of using honeypots or honeynets can help identify the unwary or unsophisticated attacker, it also runs the risk of attracting additional attention from savvier attackers. Honeypots or honeynets, once identified, are often publicized on hacker message boards or mailing lists, and thus become more subject to attacks and hacker activity than they otherwise might be. Likewise, if the organization that sets up a honeypot or honeynet is itself identified, its production systems and networks may also be subjected to more attacks than might otherwise occur.

Content filtering is the process used by various applications to examine content passing through and make a decision on the data based on a set of criteria. The decision is based on an analysis of the content, and the resulting action is either to block the content or to allow it.

Content filtering is commonly performed on e-mail and is often applied to Web page access as well. Management may want gambling or gaming sites filtered out on company machines, and this can be achieved through content filtering. Examples of content filters include WebSense and Secure Computing’s WebWasher/SmartFilter. Open source examples include DansGuardian and Squid.
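The block-or-allow decision can be sketched as a category lookup. The category names, the host-to-category table, and the `decide` function below are hypothetical; commercial filters rely on large, vendor-maintained category databases and often inspect page content as well.

```python
from urllib.parse import urlparse

# Hypothetical policy: categories management wants blocked on company machines.
BLOCKED_CATEGORIES = {"gambling", "gaming"}

# Hypothetical categorization data; real products ship much larger databases.
SITE_CATEGORIES = {
    "casino.example": "gambling",
    "games.example": "gaming",
    "news.example": "news",
}


def decide(url: str) -> str:
    """Return "block" or "allow" for a requested URL."""
    host = urlparse(url).hostname or ""
    category = SITE_CATEGORIES.get(host, "uncategorized")
    return "block" if category in BLOCKED_CATEGORIES else "allow"


print(decide("http://casino.example/poker"))
print(decide("http://news.example/today"))
```

Whether uncategorized sites should be allowed, as they are here, is a policy choice rather than a technical one.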

A protocol analyzer is used to examine network traffic as it travels along your Ethernet network. Protocol analyzers are called by many names, such as packet analyzer, network analyzer, and sniffer, but all function in the same basic way. As traffic moves across the network from machine to machine, the protocol analyzer takes a capture of each packet. This capture is essentially a photocopy, and the original packet is not harmed or altered. In the wrong hands, the same capability allows a malicious hacker to obtain your data and potentially piece it back together to analyze the contents.

Different protocol analyzers function differently, but the overall principle is the same. A sniffer is typically software installed on a machine that can then capture all the traffic on a designated network. Much of the traffic on the network will be destined for all machines, as in the case of broadcast traffic. These packets will be picked up and saved as part of the capture. Also, all traffic destined to and coming from the machine running the sniffer will be captured. To capture traffic addressed to or from another machine on the network, the sniffer should be run in promiscuous mode. If a hub exists on the network, this allows the capturing of all packets on the network regardless of their source or destination. Be aware that not all protocol analyzers support promiscuous mode, and having switches on the network makes promiscuous mode difficult to use because of the nature of switched traffic. In cases where a sniffer that runs in promiscuous mode is unavailable or infeasible, it might make sense to use the built-in monitor port on the switch instead, if one exists. The monitor port exists to allow for the capture of all data that passes through the switch. Depending on your network architecture, this could encompass one or many subnets.
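Once a frame has been captured, the analyzer decodes its headers. The following Python sketch shows that decoding step for an Ethernet II frame carrying IPv4. It works on a hand-built sample frame rather than live traffic, and the function name and sample addresses are assumptions for illustration.

```python
import socket
import struct


def decode_frame(frame: bytes) -> dict:
    """Decode the Ethernet II header and, if present, the basic IPv4 header."""
    dst_mac, src_mac, ethertype = struct.unpack("!6s6sH", frame[:14])
    info = {
        "dst_mac": dst_mac.hex(":"),
        "src_mac": src_mac.hex(":"),
        "ethertype": ethertype,
    }
    if ethertype == 0x0800:  # IPv4
        fields = struct.unpack("!BBHHHBBH4s4s", frame[14:34])
        info.update({
            "ttl": fields[5],
            "protocol": fields[6],  # 6 = TCP, 17 = UDP
            "src_ip": socket.inet_ntoa(fields[8]),
            "dst_ip": socket.inet_ntoa(fields[9]),
        })
    return info


# Hand-built sample: a broadcast frame whose IPv4 header claims TCP (protocol 6).
sample = (
    bytes.fromhex("ffffffffffff")      # destination MAC (broadcast)
    + bytes.fromhex("001122334455")    # source MAC
    + b"\x08\x00"                      # EtherType: IPv4
    + struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                  socket.inet_aton("192.168.1.20"),
                  socket.inet_aton("192.168.1.21"))
)

print(decode_frame(sample))
```

The original bytes are only read, never modified, which matches the photocopy analogy in the text.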

In this section, we will discuss network ports, network services, and potential threats to your network. To properly protect your network, you need to first identify the existing vulnerabilities. As we will discuss, knowing what exists in your network is the best first defense. By identifying ports that are open but may not be in use, you will be able to begin to close the peepholes into your network from the outside world. By monitoring required services and removing all others, you reduce the opportunity for attack and begin to make your environment more predictable.

Also, by becoming familiar with common network threats that exist today, you can take measures to prepare your environment to stand against these threats. The easiest way for hackers to make their way into your environment is to exploit known vulnerabilities. By understanding how these threats work, you will be able to safeguard against them as well as possible and be ready when new threats arise.

As discussed earlier in Chapter 2, OS Hardening, unnecessary network ports and protocols in your environment should be eliminated whenever possible. Many internal networks today utilize TCP/IP as the primary protocol. This has resulted in the partial or complete elimination of such protocols as Internetwork Packet Exchange (IPX), Sequenced Packet Exchange (SPX), and/or NetBIOS Extended User Interface (NetBEUI). It is also important to look at the specific operational protocols used in a network, such as Internet Control Message Protocol (ICMP), Internet Group Management Protocol (IGMP), Service Advertising Protocol (SAP), and the Network Basic Input/Output System (NetBIOS) functionality associated with Server Message Block (SMB) transmissions in Windows-based systems.

Notes from the Field

Eliminate External NetBIOS Traffic

One of the most common methods of obtaining access to a Windows-based system and then gaining control of that system is through NetBIOS traffic. Windows-based systems use NetBIOS in conjunction with SMB to exchange service information and establish secure channel communications between machines for session maintenance. If file and print sharing is enabled on a Windows computer, NetBIOS traffic can be viewed on the external network unless it has been disabled on the external interface. With the proliferation of digital subscriber line (DSL), Broadband, and other “always on” connections to the Internet, it is vital that this functionality be disabled on all interfaces exposed to the Internet.

When considering removal of nonessential protocols, it is important to look at every area of the network to determine what is actually occurring and running on the system. The appropriate tools are needed to do this, and the Internet contains a wealth of resources for tools and information to analyze and inspect systems.

A number of functional (and free) tools can be found at sites such as www.foundstone.com/knowledge/free_tools.html. Among these, tools like SuperScan 3.0 are extremely useful in the evaluation process. Monitoring a mixed environment of Windows, UNIX, Linux, and/or Netware machines can be accomplished using tools such as Big Brother, which may be downloaded and evaluated (or in some cases used without charge) by visiting www.bb4.com, or Nagios, which can be found at www.nagios.org. Another useful tool is Nmap, a port scanner, which is available at http://insecure.org/nmap/. These tools can be used to scan, monitor, and report on multiple platforms, giving a better view of what is present in an environment. In UNIX- and Linux-based systems, nonessential services can be controlled in a variety of ways depending on the distribution being worked with. This may include editing configuration files like xinetd.conf or inetd.conf, using graphical administration tools like linuxconf or webmin in Linux, or using facilities like svcadm in Solaris. It may also include the use of ipchains, iptables, pf, or ipfilter in various versions to restrict the options available for connection at a firewall.

NOTE As you begin to evaluate the need to remove protocols and services, make sure that the items you are removing are within your area of control. Consult with your system administrator on the appropriate action to take, and make sure you have prepared a plan to back out and recover if you found that you have removed something that is later deemed necessary or if you make a mistake.

EXAM WARNING The Security+ exam can ask specific questions about ports and what services they support. It’s advisable to learn common ports before attempting the exam. Here are some common ports and services:

21 FTP

22 Secure Shell (SSH)

23 Telnet

25 Simple Mail Transfer Protocol (SMTP)

53 DNS

80 HTTP

110 Post Office Protocol (POP)

161 Simple Network Management Protocol (SNMP)

443 SSL

Memorizing these will help you with the Security+ exam.

Modern Windows-based platforms allow the configuration of OS and network services from provided administrative tools. This can include a service applet in a control panel or a Microsoft Management Console (MMC) tool in a Windows XP/Vista/2003/2008 environment. It may also be possible to check or modify configurations at the network adaptor properties and configuration pages. In either case, it is important to restrict access and thus limit vulnerability due to unused or unnecessary services or protocols.

Let’s take a moment to use a tool to check what protocols and services are running on systems in a network. This will give you an idea of what you are working with. Exercise 3 uses Nmap to look at the configuration of a network, specifically to generate a discussion and overview of the services and protocols that might be considered when thinking about restricting access at various levels. Nmap is used to scan ports, and while it is not a full-blown security scanner, it can identify additional information about a service that can be used to determine an exploit that could be effective. Security scanners that can be used to detail existing vulnerabilities include products like Nessus and LANGuard Network Security Scanner. If using a UNIX-based platform, a number of evaluation tools have been developed, such as Amap, p0f, and Nessus, which can perform a variety of port and security scans. In Exercise 3, you will scan a network to identify potential vulnerabilities.

In this exercise, you will examine a network to identify open ports and what could be potential problems or holes in specific systems. In this exercise, you are going to use Nmap, which you can download and install for free prior to starting the exercise by going to http://insecure.org/nmap/download.html and selecting the download tool. This tool is available for Windows or Linux computers.

To begin the exercise, launch Nmap from the command line. You will want to make sure that you install the program into a folder that is in the path or that you open it from the installed folder. When you have opened a command line prompt, complete the exercise by performing the following steps:

1. From the command line, type nmap. This should generate the following response:

    C:\>nmap
    Nmap V. 4.20 Usage: nmap [Scan Type(s)] [Options] <host or net list>
    Some Common Scan Types ('*' options require root privileges)
    * -sS TCP SYN stealth port scan (default if privileged (root))
      -sT TCP connect() port scan (default for unprivileged users)
    * -sU UDP port scan
      -sP ping scan (Find any reachable machines)
    * -sF,-sX,-sN Stealth FIN, Xmas, or Null scan (experts only)
      -sR/-I RPC/Identd scan (use with other scan types)
    Some Common Options (none are required, most can be combined):
    * -O Use TCP/IP fingerprinting to guess remote operating system
      -p <range> ports to scan. Example range: "1-1024,1080,6666,31337"
      -F Only scans ports listed in nmap-services
      -v Verbose. Its use is recommended. Use twice for greater effect.
      -P0 Don't ping hosts (needed to scan www.microsoft.com and others)
    * -Ddecoy_host1,decoy2[,...] Hide scan using many decoys
      -T <Paranoid|Sneaky|Polite|Normal|Aggressive|Insane> General timing policy
      -n/-R Never do DNS resolution/Always resolve [default: sometimes resolve]
      -oN/-oX/-oG <logfile> Output normal/XML/grepable scan logs to <logfile>
      -iL <inputfile> Get targets from file; Use '-' for stdin
    * -S <your_IP>/-e <devicename> Specify source address or network interface
      --interactive Go into interactive mode (then press h for help)
      --win_help Windows-specific features
    Example: nmap -v -sS -O www.my.com 192.168.0.0/16 '192.88-90.*.*'

2. This should give you some idea of some of the types of scans that Nmap can perform. Notice the first and second entries. The -sS is a Transmission Control Protocol (TCP) stealth scan, and the -sT is a TCP full connect. The difference in these is that the stealth scan does only two of the three steps of the TCP handshake, while the full connect scan does all three steps and is slightly more reliable.

Now run Nmap with the -sT option and configure it to scan the entire subnet. The following gives an example of the proper syntax.

C:\>nmap -sT 192.168.1.1-254

3. The scan may take some time. On a large network expect the tool to take longer as there will be many hosts for it to scan.

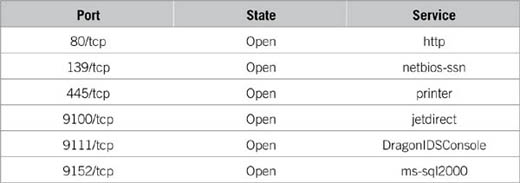

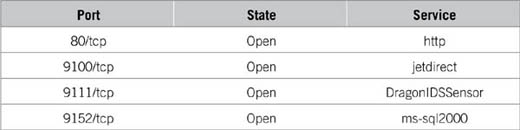

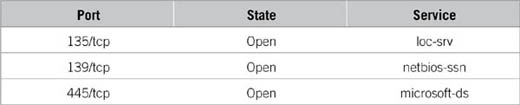

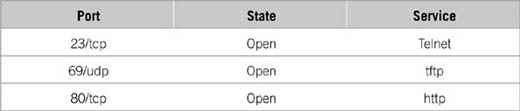

4. When the scan is complete, the results returned will look similar to those shown here.

Interesting ports on (192.168.1.17):

(The 1,600 ports scanned but not shown below are in state: filtered)

Interesting ports on (192.168.1.18):

(The 1,594 ports scanned but not shown below are in state: filtered)

Interesting ports on (192.168.1.19):

(The 1,594 ports scanned but not shown below are in state: filtered)

Interesting ports on VENUS (192.168.1.20):

(The 1,596 ports scanned but not shown below are in state: filtered)

Interesting ports on PLUTO (192.168.1.21):

(The 1,596 ports scanned but not shown below are in state: filtered)

Interesting ports on (192.168.1.25):

(The 1,598 ports scanned but not shown below are in state: filtered)

Nmap run completed—254 IP addresses (six hosts up) scanned in 2,528 s.

In the preceding example, notice how you can see the ports that were identified on each system. Although this is the same type of tool that would be used by an attacker, it’s also a valuable tool for the security professional. You can see from the example that there are a number of ports open on each of the hosts that were probed. Remember that these machines are in an internal network, so some of these ports should be allowed.
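The full-connect scan used in the exercise (-sT) completes the TCP three-way handshake on each port. A minimal Python sketch of the same idea follows. It is not Nmap’s implementation, the function name and timeout are assumptions, and it cannot distinguish a closed port from a filtered one. As with Nmap itself, run it only against systems you are authorized to test.

```python
import socket


def connect_scan(host: str, ports, timeout: float = 0.5) -> dict:
    """Attempt a full TCP connection to each port and report the outcome."""
    results = {}
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 when the handshake completes, and an
            # error code otherwise instead of raising an exception.
            if s.connect_ex((host, port)) == 0:
                results[port] = "open"
            else:
                results[port] = "closed or filtered"
    return results
```

For example, `connect_scan("192.168.1.20", [21, 22, 23, 25, 80])` would report the state of five of the common ports listed earlier on one of the hosts from the exercise, assuming that host exists on your network.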

TEST DAY TIP Spend a few minutes reviewing port and protocol numbers for standard services provided in the network environment. This will help when you are analyzing questions that require configuration of ACLs and determinations of appropriate blocks to install to secure a network.

The question of whether the ports should be open should lead us back to our earlier discussion of policy and risk assessment. If nothing else, this type of tool allows us to see whether our hardening activities have worked and to verify that no one has opened services on a system that are not allowed. Even for ports that are allowed and have been identified by scanning tools, decisions must be made as to which of these ports are likely to be vulnerable, and then the risks of the vulnerability weighed against the need for the particular service connected to that port. Port vulnerabilities are constantly updated by various vendors and should be reviewed and evaluated for risk at regular intervals to reduce potential problems. It is important to remember that scans of a network should be conducted initially to develop a baseline of what services and protocols are active on the network. Once the network has been secured according to policy, these scans should be conducted on a periodic basis to ensure that the network is in compliance with policy.
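Comparing a periodic scan against the approved baseline is a simple set comparison. The Python sketch below assumes that scan results have already been reduced to a mapping of host to open ports; the host addresses and port sets are invented for illustration.

```python
def compare_to_baseline(baseline: dict, scan: dict) -> dict:
    """Report ports that are open but not approved, and approved but not open."""
    report = {}
    for host in sorted(set(baseline) | set(scan)):
        approved = baseline.get(host, set())
        found = scan.get(host, set())
        unexpected = found - approved   # opened since the baseline: investigate
        missing = approved - found      # approved service not responding
        if unexpected or missing:
            report[host] = {
                "unexpected": sorted(unexpected),
                "missing": sorted(missing),
            }
    return report


# Hypothetical data: the approved baseline and the latest periodic scan.
baseline = {"192.168.1.20": {22, 80}, "192.168.1.21": {25}}
latest = {"192.168.1.20": {22, 80, 23}, "192.168.1.21": {25}}

print(compare_to_baseline(baseline, latest))
```

Here the report flags Telnet (port 23) on 192.168.1.20 as a service that someone has opened outside of policy.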

Network threats exist in today’s world in many forms. It seems as if the more creative network administrators become in protecting their environments, the more creative hackers and script kiddies become at innovating ways to get past the most admirable security efforts.

One of the more exciting and dynamic aspects of network security relates to the threat of attacks. A great deal of media attention and many vendor product offerings have been targeting attacks and attack methodologies. This is perhaps the reason that CompTIA has been focusing many questions in this particular area. Although there are many different varieties and methods of attack, they can generally all be grouped into several categories:

By the general target of the attack (application, network, or mixed)

By whether the attack is active or passive

By how the attack works (for example, via password cracking, or by exploiting code and cryptographic algorithms)

It’s important to realize that the boundaries between these three categories aren’t fixed. As attacks become more complex, they tend to be both application-based and network-based, which has spawned the new term mixed threat applications. An example of such an attack can be seen in the MyDoom worm, which targeted Windows machines in 2004. Victims received an e-mail indicating a delivery error, and if they executed the attached file, MyDoom would take over. The compromised machine would reproduce the attack by sending the e-mail to contacts in the user’s address book and copying the attachment to peer-to-peer (P2P) sharing directories. It would also open a backdoor on port 3127 and try to launch a denial of service (DoS) attack against The SCO Group or Microsoft. So, as attackers get more creative, we have seen more and more combined and sophisticated threats. In the next few sections, we will detail some of the most common network threats and attack techniques so that you can be aware of them, understand how to recognize their symptoms, and thereby devise a plan to thwart attack.

Head of the Class

Attack Methodologies in Plain English

In this section, we’ve listed network attacks, application attacks, and mixed threat attacks, and within those are included buffer overflows, distributed denial of service (DDoS) attacks, fragmentation attacks, and theft of service attacks. Although the list of descriptions might look overwhelming, generally the names are self-explanatory. For example, consider a DoS attack. As its name implies, this attack is designed to do just one thing—render a computer or network nonfunctional so as to deny service to its legitimate users. That’s it. So, a DoS could be as simple as unplugging machines at random in a data center or as complex as organizing an army of hacked computers to send packets to a single host to overwhelm it and shut down its communications. Another term that has caused some confusion is a mixed threat attack. This simply describes any type of attack that is comprised of two different, smaller attacks. For example, an attack that goes after Outlook clients and then sets up a bootleg music server on the victim machine is classified as a mixed threat attack.

TCP/IP hijacking, or session hijacking, is a problem that has appeared in most TCP/IP-based applications, ranging from simple Telnet sessions to Web-based e-commerce applications. To hijack a TCP/IP connection, a malicious user must first have the ability to intercept a legitimate user’s data, and then insert himself or herself into that session, much like a man-in-the-middle (MITM) attack. A tool known as Hunt (www.packetstormsecurity.org/sniffers/hunt/) is very commonly used to monitor and hijack sessions. It works especially well on basic Telnet or FTP sessions.

A more interesting and malicious form of session hijacking involves Web-based applications (especially e-commerce and other applications that rely heavily on cookies to maintain session state). The first scenario involves hijacking a user’s cookie, which is normally used to store login credentials and other sensitive information, and using that cookie to then access that user’s session. The legitimate user will simply receive a “session expired” or “login failed” message and probably will not even be aware that anything suspicious happened. The other issue with Web server applications that can lead to session hijacking is incorrectly configured session timeouts. A Web application is typically configured to timeout a user’s session after a set period of inactivity. If this timeout is too large, it leaves a window of opportunity for an attacker to potentially use a hijacked cookie or even predict a session ID number and hijack a user’s session.

To prevent these types of attacks, as with other TCP/IP-based attacks, the use of encrypted sessions is key; in the case of Web applications, unique and pseudorandom session IDs and cookies should be used along with SSL encryption. This makes it harder for attackers to guess the appropriate sequence to insert into connections, or to intercept communications that are encrypted during transit.
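The two Web-application defenses described above, unpredictable session IDs and a short idle timeout, can be sketched as follows. This is a minimal illustration rather than a production session manager: the 15-minute timeout, the in-memory `_sessions` dictionary, and the function names are all assumptions made for the example.

```python
import secrets
import time

SESSION_IDLE_TIMEOUT = 15 * 60  # seconds; hypothetical value chosen for illustration

_sessions = {}  # session ID -> time of last activity (in-memory, for the sketch only)


def create_session(now=None):
    """Issue an unpredictable session ID and record when it was created."""
    now = time.time() if now is None else now
    session_id = secrets.token_urlsafe(32)  # 32 random bytes from the OS CSPRNG
    _sessions[session_id] = now
    return session_id


def is_session_valid(session_id, now=None):
    """Return True only if the session exists and has not been idle too long."""
    now = time.time() if now is None else now
    last_seen = _sessions.get(session_id)
    if last_seen is None:
        return False  # unknown (for example, guessed) session ID
    if now - last_seen > SESSION_IDLE_TIMEOUT:
        del _sessions[session_id]  # expire it so a stolen cookie stops working
        return False
    _sessions[session_id] = now  # refresh the idle timer on activity
    return True
```

Because the ID comes from a cryptographically secure generator, an attacker cannot feasibly predict it, and the idle timeout limits how long a stolen cookie remains useful. The cookie itself would still need to travel only over an encrypted connection.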

Null sessions are unauthenticated connections. When someone attempts to connect to a Windows machine and does not present credentials, they can potentially successfully connect as an anonymous user, thus creating a Null session.

Null sessions present a vulnerability in that once someone has connected to a machine, there is a lot to be learned about it. The more that is exposed about the machine, the more ammunition a hacker will have to attempt to gain further access. For instance, in Windows NT/2000, information from the local machine’s SAM database was potentially accessible from a null session. Once someone has obtained information about local usernames, they can then launch a brute force or dictionary attack in an attempt to gain additional access to the machine.

Null sessions can be controlled to some degree with registry changes that can be deployed to your machines, but the version of the Windows operating system dictates what can be configured for null session behavior on a given machine.

The most classic example of spoofing is IP spoofing. TCP/IP requires that every host fills in its own source address on packets, and there are almost no measures in place to stop hosts from lying. Spoofing, by definition, is always intentional. However, the fact that some malfunctions and misconfigurations can cause the exact same effect as an intentional spoof causes difficulty in determining whether an incorrect address indicates a spoof.

Spoofing is a result of some inherent flaws in TCP/IP. TCP/IP basically assumes that all computers are telling the truth. There is little or no checking done to verify that a packet really comes from the address indicated in the IP header. When the protocols were being designed in the 1970s, engineers didn’t anticipate that anyone would or could use the protocol maliciously. In fact, one engineer at the time described the system as flawless because “computers don’t lie.” There are different types of IP spoofing attacks. These include blind spoofing attacks, in which the attacker can only send packets and has to make assumptions or guesses about replies, and informed attacks, in which the attacker can monitor, and therefore participate in, bidirectional communications.

There are ways to combat spoofing, however. Stateful firewalls usually have spoofing protection whereby they define which IPs are allowed to originate on each of their interfaces. If a packet claims to be from a network specified as belonging to a different interface, the packet is quickly dropped. This protects against both blind and informed attacks. An easy way to defeat blind spoofing attacks is to disable source routing in your network at your firewall, at your router, or both. Source routing is, in short, a way for the sender to specify the route a packet takes through the network, with replies returning along the same path. This information is contained in the packet’s IP Options, and disabling it will prevent attackers from using it to get responses back from their spoofed packets.
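The per-interface check described above can be sketched in a few lines. This is only an illustration of the idea, not how any particular firewall implements it; the interface names and address ranges in `INTERFACE_NETWORKS` are hypothetical.

```python
import ipaddress

# Hypothetical map of firewall interfaces to the networks that live behind them.
INTERFACE_NETWORKS = {
    "inside": [ipaddress.ip_network("10.10.0.0/16")],
    "dmz": [ipaddress.ip_network("192.168.50.0/24")],
}


def is_spoofed(arrival_interface, source_ip):
    """Return True if the source address belongs to a network behind a different interface."""
    addr = ipaddress.ip_address(source_ip)
    for interface, networks in INTERFACE_NETWORKS.items():
        if interface == arrival_interface:
            continue  # a packet may legitimately claim its own interface's networks
        if any(addr in net for net in networks):
            return True  # claims an address that can only originate elsewhere
    return False
```

For example, a packet arriving on an external interface with a source address of 10.10.0.5 would be flagged, because that address can only legitimately originate behind the `inside` interface.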

Spoofing is not always malicious. Some network redundancy schemes rely on automated spoofing to take over the identity of a downed server. This is because the networking technologies never accounted for the need for one server to take over for another.

Technologies and methodologies exist that can help safeguard against spoofing. These include:

Using firewalls to guard against unauthorized transmissions.

Not relying on security through obscurity, the expectation that using undocumented protocols will protect you.

Using various cryptographic algorithms to provide differing levels of authentication.

Subtle attacks are far more effective than obvious ones. Spoofing has an advantage in this respect over a straight vulnerability exploit. The concept of spoofing includes pretending to be a trusted source, thereby increasing the chances that the attack will go unnoticed.

TEST DAY TIP Knowledge of TCP/IP is really helpful when dealing with spoofing and sequence attacks. Having a good grasp of the fundamentals of TCP/IP will make the attacks seem less abstract. Additionally, knowledge of not only what these attacks are, but how they work, will better prepare you to answer test questions.

If the attacks use just occasional induced failures as part of their subtlety, users will often chalk it up to normal problems that occur all the time. By careful application of this technique over time, users’ behavior can often be manipulated.

Address Resolution Protocol (ARP) spoofing can be quickly and easily done with a variety of tools, most of which are designed to work on UNIX OSes. One of the best all-around suites is a package called dsniff. It contains an ARP spoofing utility and a number of other sniffing tools that can be beneficial when spoofing.

To make the most of dsniff you’ll need a Layer 2 switch into which all of your lab machines are plugged. It is also helpful to have various other machines doing routine activities such as Web surfing, checking POP mail, or using Instant Messenger software.

1. To run dsniff for this exercise, you will need a UNIX-based machine. To download the package and to check compatibility, visit the dsniff Web site at www.monkey.org/~dugsong/dsniff.

2. After you’ve downloaded and installed the software, you will see a utility called arpspoof. This is the tool that we’ll be using to impersonate the gateway host. The gateway is the host that routes the traffic to other networks.

3. You’ll also need to make sure that IP forwarding is turned on in your kernel. If you’re using *BSD UNIX, you can enable this with the sysctl command (sysctl -w net.inet.ip.forwarding=1). After this has been done, you should be ready to spoof the gateway.

4. arpspoof is a really flexible tool. It will allow you to poison the ARP of the entire local area network (LAN), or target a single host. Poisoning is the act of tricking the other computers into thinking you are another host. The usage is as follows:

home# arpspoof -i fxp0 10.10.0.1

This will start the attack using interface fxp0, and will intercept any packets bound for 10.10.0.1. The output will show you the current ARP traffic.

5. Congratulations, you’ve just become your gateway.

You can leave the arpspoof process running and experiment in another window with some of the various sniffing tools that dsniff offers. Dsniff itself is a jack-of-all-trades password grabber. It will fetch passwords for Telnet, FTP, HTTP, Instant Messaging (IM), Oracle, and almost any other password that is transmitted in the clear. Another tool, mailsnarf, will grab any and all e-mail messages it sees and store them in a standard Berkeley mbox file for later viewing. Finally, one of the more visually impressive tools is webspy. This tool will grab Uniform Resource Locator (URL) strings sniffed from a specified host and display them in your local browser, giving the appearance of surfing along with the victim.

You should now have a good idea of the kind of damage an attacker can do with ARP spoofing and the right tools. This should also make clear the importance of using encryption to handle data. Additionally, any misconceptions about the security or sniffing protection provided by switched networks should now be dispelled thanks to the magic of ARP spoofing!
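From the defender's side, the poisoning performed in this exercise tends to leave a visible trace: the gateway's IP address suddenly appears with a second MAC address. The sketch below flags that condition. It assumes you have already collected (IP, MAC) pairs from ARP replies or repeated ARP table snapshots; how they are collected is outside the example, and the function name is hypothetical.

```python
from collections import defaultdict


def find_arp_conflicts(observations):
    """Return {ip: set_of_macs} for every IP seen with more than one MAC address."""
    macs_by_ip = defaultdict(set)
    for ip, mac in observations:
        macs_by_ip[ip].add(mac.lower())  # normalize case before comparing
    return {ip: macs for ip, macs in macs_by_ip.items() if len(macs) > 1}


# Made-up sample data: the gateway 10.10.0.1 is claimed by two different MACs.
sample = [
    ("10.10.0.1", "00:11:22:33:44:55"),
    ("10.10.0.7", "00:aa:bb:cc:dd:01"),
    ("10.10.0.1", "00:AA:BB:CC:DD:99"),
]
conflicts = find_arp_conflicts(sample)
```

A conflict is not proof of an attack, since a replaced network card or a failover pair can cause the same symptom, but a changed MAC for the default gateway is worth investigating.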

As you have probably already begun to realize, the TCP/IP protocols were not designed with security in mind and contain a number of fundamental flaws that simply cannot be fixed due to the nature of the protocols. One issue that has resulted from IPv4’s lack of security is the MITM attack. To fully understand how a MITM attack works, let’s quickly review how TCP/IP works.

TCP/IP was formally introduced in 1974 by Vinton Cerf and Robert Kahn. The original purpose of TCP/IP was not to provide security; rather, it was to provide high-speed, reliable communication network links.

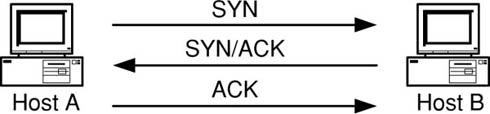

A TCP/IP connection is formed with a three-way handshake. As seen in Figure 6.4, a host (Host A) that wants to send data to another host (Host B) will initiate communications by sending a SYN packet. The SYN packet contains, among other things, the source and destination IP addresses as well as the source and destination port numbers. Host B will respond with a SYN/ACK. The SYN from Host B prompts Host A to send an ACK, and the connection is established.

FIGURE 6.4

A Standard TCP/IP Handshake
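The sequence and acknowledgment numbers exchanged in the handshake are what an attacker must predict, so it helps to see them explicitly. The following is a small simulation, not real network code: it sends no packets, and the function name and tuple layout are assumptions made for illustration.

```python
import secrets


def three_way_handshake(isn_a=None, isn_b=None):
    """Return the three handshake segments as (sender, flags, seq, ack) tuples."""
    mod = 2 ** 32  # sequence numbers are 32 bits wide and wrap around
    isn_a = secrets.randbits(32) if isn_a is None else isn_a  # Host A's initial sequence number
    isn_b = secrets.randbits(32) if isn_b is None else isn_b  # Host B's initial sequence number

    syn = ("A", "SYN", isn_a, None)                            # A opens the connection
    syn_ack = ("B", "SYN/ACK", isn_b, (isn_a + 1) % mod)       # B acknowledges A's SYN
    ack = ("A", "ACK", (isn_a + 1) % mod, (isn_b + 1) % mod)   # A acknowledges B's SYN
    return [syn, syn_ack, ack]
```

Each side acknowledges the other's initial sequence number plus one. An attacker who wants to inject data into the session has to supply values that fit this numbering.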

If a malicious individual can place himself or herself between Host A and Host B, for example by compromising an upstream router belonging to the ISP of one of the hosts, he or she can then monitor the packets moving between the two hosts. It is then possible for the malicious individual to analyze and change packets coming from and going to the host. It is quite easy for a malicious person to perform this type of attack on Telnet sessions, but the attacker must first be able to predict the right TCP sequence number and properly modify the data for this type of attack to actually work, all before the session times out waiting for the response. Obviously, doing this manually is hard to pull off; however, tools designed to watch for and modify specific data have been written and work very well.

There are a few ways in which you can prevent MITM attacks from happening, like using a TCP/IP implementation that generates TCP sequence numbers that are as close to truly random as possible.
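One common way to make initial sequence numbers hard to predict is to combine a steadily advancing clock with a keyed hash of the connection's addresses and ports, in the spirit of RFC 6528. The sketch below is a simplified illustration of that idea and not any operating system's actual implementation; the choice of HMAC-SHA256, the key handling, and the function name are assumptions.

```python
import hashlib
import hmac
import secrets
import time

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-boot secret


def initial_sequence_number(local_ip, local_port, remote_ip, remote_port,
                            now=None, key=SECRET_KEY):
    """Return a 32-bit ISN: a clock component plus a keyed per-connection offset."""
    now = time.time() if now is None else now
    clock = int(now * 1_000_000 / 4)  # counter that ticks about every 4 microseconds
    four_tuple = f"{local_ip}:{local_port}-{remote_ip}:{remote_port}".encode()
    digest = hmac.new(key, four_tuple, hashlib.sha256).digest()
    offset = int.from_bytes(digest[:4], "big")  # secret offset for this connection
    return (clock + offset) % 2 ** 32
```

Without the secret key, an outside observer cannot compute the offset for a connection between two other hosts, so knowing the sequence numbers of one's own connections does not help in guessing someone else's.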

In a replay attack, a malicious person captures an amount of sensitive traffic, and then simply replays it back to the host in an attempt to replicate the transaction. For example, consider an electronic money transfer. User A transfers a sum of money to Bank B. Malicious User C captures User A’s network traffic, then replays the transaction in an attempt to cause the transaction to be repeated multiple times. Obviously, this attack has no benefit to User C, but could result in User A losing money. Replay attacks, while possible in theory, are quite unlikely due to multiple factors such as the level of difficulty of predicting TCP sequence numbers. However, it has been proven that the formula for generating random TCP sequence numbers, especially in older OSes, isn’t truly random or even that difficult to predict, which makes this attack possible.

Another potential scenario for a replay attack is this: an attacker replays the captured data with all potential sequence numbers, in hopes of getting lucky and hitting the right one, thus causing the user’s connection to drop or, in some cases, inserting arbitrary data into a session.

As with MITM attacks, the use of random TCP sequence numbers and encryption like SSH or Internet Protocol Security (IPSec) can help defend against this problem. The use of timestamps also helps defend against replay attacks.
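To show how timestamps help, here is a minimal sketch of a receiver that rejects replayed messages. It assumes the sender and receiver share a secret key and that each message carries a timestamp, a one-time random value (a nonce), and an HMAC tag covering all three. The 30-second window and the function names are hypothetical.

```python
import hashlib
import hmac
import time

MAX_AGE_SECONDS = 30   # hypothetical freshness window
_seen_nonces = set()   # nonces already accepted (in-memory, for the sketch only)


def sign(key, message, timestamp, nonce):
    """Compute an HMAC tag that binds the message to its timestamp and nonce."""
    payload = f"{timestamp}|{nonce}|{message}".encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def accept(key, message, timestamp, nonce, tag, now=None):
    """Accept a message only if it is authentic, recent, and not seen before."""
    now = time.time() if now is None else now
    if not hmac.compare_digest(sign(key, message, timestamp, nonce), tag):
        return False  # forged or altered in transit
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # too old to be a live request
    if nonce in _seen_nonces:
        return False  # already processed once: a replay
    _seen_nonces.add(nonce)
    return True
```

In the money-transfer example, User C could capture and resend User A's message, but the second copy would be rejected because its nonce has already been used, and a copy held back for later would fail the timestamp check. A real system would also need to expire old nonces so the set does not grow without bound.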

Even with the most comprehensive filtering in place, all firewalls are still vulnerable to DoS attacks. These attacks attempt to render a network inaccessible by flooding a device such as a firewall with packets to the point that it can no longer accept valid packets. This works by overloading the processor of the firewall, forcing it to attempt to process far more packets than it can handle. By performing a DoS attack directly against a firewall, an attacker can get the firewall to overload its buffers and start letting all traffic through without filtering it. If a technician is alerted to an attack of this type, they can block the specific IP address that the attack is coming from at their router.
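Before a technician can block the offending address, it has to be identified. A rough sketch of that step is shown below: it counts packets per source address in a captured sample and reports the addresses that exceed a limit. The threshold and the assumption that source addresses are available as a simple list are illustrative only.

```python
from collections import Counter


def find_flooders(packet_sources, threshold):
    """Return the source IPs that sent more than `threshold` packets in the sample."""
    counts = Counter(packet_sources)
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Made-up sample: one host sends far more packets than the others.
sample = ["203.0.113.9"] * 500 + ["10.10.0.7"] * 12 + ["10.10.0.8"] * 3
suspects = find_flooders(sample, threshold=100)
```

This approach works only when the flood comes from a small number of addresses that are not spoofed. When the traffic is spread across many sources, no single address may stand out.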

An alternative attack that is more difficult to defend against is the DDoS attack. This attack is worse, because it can come from a large number of computers at the same time. This is accomplished either by the attacker having a large distributed network of systems all over the world (unlikely) or by infecting normal users’ computers with a Trojan horse application, which allows the attacker to force the systems to attack specific targets without the end user’s knowledge. These end-user computers are systems that have been attacked in the past and infected with a Trojan horse by the attacker. By doing this, the attacker is able to set up a large number of systems (called zombies) to perform a DoS attack at the same time. This type of attack constitutes a DDoS attack. Performing an attack in this manner is more effective due to the number of packets being sent. In addition, it introduces another layer of systems between the attacker and the target, making the attacker more difficult to trace.

Domain name kiting occurs when someone registers a domain name, deletes the registration soon afterward, and then immediately reregisters it. Because many domain name registrars offer a 5-day registration grace period, domain kiters abuse this period by canceling their registrations to avoid paying for them. This way they can use the domain names without cost.

Because the grace period offered by registrars allows the registration of a domain name to be canceled without cost or penalty as long as the cancellation comes within 5 days of the registration, you can effectively “own” and use a domain name during this short timeframe without actually paying for it.

It has become relatively easy to drop a domain name and claim the refund at the end of the grace period. By taking advantage of this process, abusers keep the registrations active on their most revenue-generating sites by cycling through cancellations and an endless refresh of their chosen domain name registrations. Because no cost is involved in turning over the domain names, domain kiters make money from domains they are not paying for.