CHAPTER 9

Network Operations

This chapter covers the following official Network+ objectives:

Given a scenario, use appropriate documentation and diagrams to manage the network.

Given a scenario, use appropriate documentation and diagrams to manage the network.

Compare and contrast business continuity and disaster recovery concepts.

Compare and contrast business continuity and disaster recovery concepts.

Explain common scanning, monitoring, and patching processes and summarize their expected

outputs.

Explain common scanning, monitoring, and patching processes and summarize their expected

outputs.

Given a scenario, use remote access methods.

Given a scenario, use remote access methods.

Identify policies and best practices.

Identify policies and best practices.

This chapter covers CompTIA Network+ objectives 3.1, 3.2, 3.3, 3.4, and 3.5. For more information on the official Network+ exam topics, see the “About the Network+ Exam” section in the Introduction.

This chapter focuses on two important parts of the role of a network administrator: documentation and the tools to use to monitor or optimize connectivity. Documentation, although not glamorous, is an essential part of the job. This chapter looks at several aspects of network documentation.

Given a scenario, use appropriate documentation and diagrams to manage the network.

Given a scenario, use appropriate documentation and diagrams to manage the network.

Identify policies and best practices.

Identify policies and best practices.

CramSaver

If you can correctly answer these questions before going through this section, save time by skimming the Exam Alerts in this section and then completing the Cram Quiz at the end of the section.

1. Which network topology focuses on the direction in which data flows within the physical environment?

2. In computing, what are historical readings used as a measurement for future calculations referred to as?

3. True or false: Both logical and physical network diagrams provide an overview of the network layout and function.

Answers

1. The logical network refers to the direction in which data flows on the network within the physical topology. The logical diagram is not intended to focus on the network hardware but rather on how data flows through that hardware.

2. Keeping and reviewing baselines is an essential part of the administrator’s role.

3. True. Both logical and physical network diagrams provide an overview of the network layout and function.

ExamAlert

Remember that this objective begins with “Given a scenario.” That means that you may receive a drag and drop, matching, or “live OS” scenario where you have to click through to complete a specific objective-based task.

Administrators have several daily tasks, and new ones often crop up. In this environment, tasks such as documentation sometimes fall to the background. It’s important that you understand why administrators need to spend valuable time writing and reviewing documentation. Having a well-documented network offers a number of advantages:

Troubleshooting: When something goes wrong on the network, including the wiring, up-to-date documentation

is a valuable reference to guide the troubleshooting effort. The documentation saves

you money and time in isolating potential problems.

Troubleshooting: When something goes wrong on the network, including the wiring, up-to-date documentation

is a valuable reference to guide the troubleshooting effort. The documentation saves

you money and time in isolating potential problems.

Training new administrators: In many network environments, new administrators are hired, and old ones leave. In

this scenario, documentation is critical. New administrators do not have the time

to try to figure out where cabling is run, what cabling is used, potential trouble

spots, and more. Up-to-date information helps new administrators quickly see the network

layout.

Training new administrators: In many network environments, new administrators are hired, and old ones leave. In

this scenario, documentation is critical. New administrators do not have the time

to try to figure out where cabling is run, what cabling is used, potential trouble

spots, and more. Up-to-date information helps new administrators quickly see the network

layout.

Working with contractors and consultants: Consultants and contractors occasionally may need to visit the network to make recommendations

for the network or to add wiring or other components. In such cases, up-to-date documentation

is needed. If documentation is missing, it would be much more difficult for these

people to do their jobs, and more time and money would likely be required.

Working with contractors and consultants: Consultants and contractors occasionally may need to visit the network to make recommendations

for the network or to add wiring or other components. In such cases, up-to-date documentation

is needed. If documentation is missing, it would be much more difficult for these

people to do their jobs, and more time and money would likely be required.

Inventory management: Knowing what you have, where you have it, and what you can turn to in the case of

an emergency is both constructive and helpful.

Inventory management: Knowing what you have, where you have it, and what you can turn to in the case of

an emergency is both constructive and helpful.

Quality network documentation does not happen by accident; rather, it requires careful planning. When creating network documentation, you must keep in mind who you are creating the documentation for and that it is a communication tool. Documentation is used to take technical information and present it in a manner that someone new to the network can understand. When planning network documentation, you must decide what you need to document.

Note

Imagine that you have just taken over a network as administrator. What information would you like to see? This is often a clear gauge of what to include in your network documentation.

All networks differ and so does the documentation required for each network. However, certain elements are always included in quality documentation:

Network topology: Networks can be complicated. If someone new is looking over the network, it is critical

to document the entire topology. This includes both the wired and wireless topologies

used on the network. Network topology documentation typically consists of a diagram

or series of diagrams labeling all critical components used to create the network.

These diagrams utilize common symbols for components such as firewalls, hubs, routers,

and switches. Figure 9.1, for example, shows standard figures for, from left to right, a firewall, a hub,

a router, and a switch.

Network topology: Networks can be complicated. If someone new is looking over the network, it is critical

to document the entire topology. This includes both the wired and wireless topologies

used on the network. Network topology documentation typically consists of a diagram

or series of diagrams labeling all critical components used to create the network.

These diagrams utilize common symbols for components such as firewalls, hubs, routers,

and switches. Figure 9.1, for example, shows standard figures for, from left to right, a firewall, a hub,

a router, and a switch.

FIGURE 9.1 Diagram symbols for a firewall, a hub, a router, and a switch

Wiring layout and rack diagrams: Network wiring can be confusing. Much of it is hidden in walls and ceilings, making

it hard to know where the wiring is and what kind is used on the network. This makes

it critical to keep documentation on network wiring up to date. Diagram what is on

each rack and any unusual configurations that might be employed.

Wiring layout and rack diagrams: Network wiring can be confusing. Much of it is hidden in walls and ceilings, making

it hard to know where the wiring is and what kind is used on the network. This makes

it critical to keep documentation on network wiring up to date. Diagram what is on

each rack and any unusual configurations that might be employed.

IDF/MDF documentation: It is not enough to show that there is an intermediate distribution frame (IDF) and/or

main distribution frame (MDF) in your building. You need to thoroughly document any

and every free-standing or wall-mounted rack and the cables running between them and

the end user devices.

IDF/MDF documentation: It is not enough to show that there is an intermediate distribution frame (IDF) and/or

main distribution frame (MDF) in your building. You need to thoroughly document any

and every free-standing or wall-mounted rack and the cables running between them and

the end user devices.

Server configuration: A single network typically uses multiple servers spread over a large geographic area.

Documentation must include schematic drawings of where servers are located on the

network and the services each provides. This includes server function, server IP address,

operating system (OS), software information, and more. Essentially, you need to document

all the information you need to manage or administer the servers.

Server configuration: A single network typically uses multiple servers spread over a large geographic area.

Documentation must include schematic drawings of where servers are located on the

network and the services each provides. This includes server function, server IP address,

operating system (OS), software information, and more. Essentially, you need to document

all the information you need to manage or administer the servers.

Network equipment: The hardware used on a network is configured in a particular way—with protocols,

security settings, permissions, and more. Trying to remember these would be a difficult

task. Having up-to-date documentation makes it easier to recover from a failure.

Network equipment: The hardware used on a network is configured in a particular way—with protocols,

security settings, permissions, and more. Trying to remember these would be a difficult

task. Having up-to-date documentation makes it easier to recover from a failure.

Network configuration, performance baselines, and key applications: Documentation also includes information on all current network configurations, performance

baselines taken, and key applications used on the network, such as up-to-date information

on their updates, vendors, install dates, and more.

Network configuration, performance baselines, and key applications: Documentation also includes information on all current network configurations, performance

baselines taken, and key applications used on the network, such as up-to-date information

on their updates, vendors, install dates, and more.

Detailed account of network services: Network services are a key ingredient in all networks. Services such as Domain Name

Service (DNS), Dynamic Host Configuration Protocol (DHCP), Remote Access Services

(RAS), and more are an important part of documentation. You should describe in detail

which server maintains these services, the backup servers for these services, maintenance

schedules, how they are structured, and so on.

Detailed account of network services: Network services are a key ingredient in all networks. Services such as Domain Name

Service (DNS), Dynamic Host Configuration Protocol (DHCP), Remote Access Services

(RAS), and more are an important part of documentation. You should describe in detail

which server maintains these services, the backup servers for these services, maintenance

schedules, how they are structured, and so on.

Standard operating procedures/work instructions: Finally, documentation should include information on network policy and procedures.

This includes many elements, ranging from who can and cannot access the server room,

to network firewalls, protocols, passwords, physical security, cloud computing use,

mobile device use, and so on.

Standard operating procedures/work instructions: Finally, documentation should include information on network policy and procedures.

This includes many elements, ranging from who can and cannot access the server room,

to network firewalls, protocols, passwords, physical security, cloud computing use,

mobile device use, and so on.

ExamAlert

Be sure that you know the types of information that should be included in network documentation.

Wiring and Port Locations

Network wiring schematics are an essential part of network documentation, particularly for midsize to large networks, where the cabling is certainly complex. For such networks, it becomes increasingly difficult to visualize network cabling and even harder to explain it to someone else. A number of software tools exist to help administrators clearly document network wiring in detail.

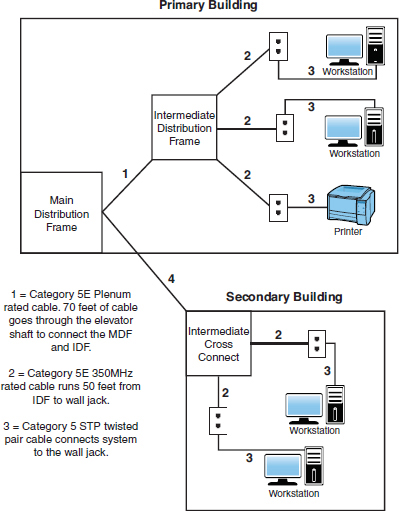

Several types of wiring schematics exist. They can be general, as shown in Figure 9.2, or they can be very specific, indicating the actual type of wiring used, the operating system on each machine, and so on. The more generalized they are, the less they need updating, whereas very specific schematics often need to be changed regularly. Table 9.1 represents another way of documenting data.

ExamAlert

For the exam, be familiar with the look of a general wiring schematic such as the one shown in Figure 9.2.

FIGURE 9.2 A general wiring schematic

Figure 9.2 provides a simplified look at network wiring schematics. Imagine how complicated these diagrams would look on a network with 1,000, 2,000, or even 6,000 computers. Quality network documentation software makes this easier; however, the task of network wiring can be a large one for administrators. Administrators need to ensure that someone can pick up the wiring documentation diagrams and have a good idea of the network wiring.

Caution

Reading schematics and determining where wiring runs are an important part of the administrator’s role. Expect to see a schematic on your exam.

Port locations should be carefully recorded and included in the documentation as well. SNMP can be used directly to map ports on switches and other devices; it is much easier, however, to use software applications that incorporate SNMP and use it to create ready-to-use documentation. A plethora of such programs are available; some are free and many are commercial products.

Troubleshooting Using Wiring Schematics

Some network administrators do not take the time to maintain quality documentation. This will haunt them when it comes time to troubleshoot some random network problems. Without any network wiring schematics, the task will be frustrating and time-consuming. The information shown in Figure 9.2 might be simplified, but you could use that documentation to evaluate the network and make recommendations.

Caution

When looking at a wiring schematic, pay close attention to where the cable is run and the type of cable used if the schematic indicates this. If a correct cable is not used, a problem could occur.

Note

Network wiring schematics are a work in progress. Although changes to wiring do not happen daily, they do occur when the network expands or old cabling is replaced. It is imperative to remember that when changes are made to the network, the schematics and their corresponding references must be updated to reflect the changes. Out-of-date schematics can be frustrating to work with.

Physical and Logical Network Diagrams

In addition to the wiring schematics, documentation should include diagrams of the physical and logical network design. Recall from Chapter 1, “Introduction to Networking Technologies,” that network topologies can be defined on a physical or a logical level. The physical topology refers to how a network is physically constructed—how it looks. The logical topology refers to how a network looks to the devices that use it—how it functions.

Network infrastructure documentation isn’t reviewed daily; however, this documentation is essential for someone unfamiliar with the network to manage or troubleshoot the network. When it comes to documenting the network, you need to document all aspects of the infrastructure. This includes the physical hardware, physical structure, protocols, and software used.

ExamAlert

You should be able to identify a physical and logical diagram. You need to know the types of information that should be included in each diagram.

The physical documentation of the network should include the following elements:

Cabling information: A visual description of all the physical communication links, including all cabling,

cable grades, cable lengths, WAN cabling, and more.

Cabling information: A visual description of all the physical communication links, including all cabling,

cable grades, cable lengths, WAN cabling, and more.

Servers: The server names and IP addresses, types of servers, and domain membership.

Servers: The server names and IP addresses, types of servers, and domain membership.

Network devices: The location of the devices on the network. This includes the printers, hubs, switches,

routers, gateways, and more.

Network devices: The location of the devices on the network. This includes the printers, hubs, switches,

routers, gateways, and more.

Wide-area network: The location and devices of the WAN and components.

Wide-area network: The location and devices of the WAN and components.

User information: Some user information, including the number of local and remote users.

User information: Some user information, including the number of local and remote users.

As you can see, many elements can be included in the physical network diagram. Figure 9.3 shows a physical segment of a network.

FIGURE 9.3 A physical network diagram

Caution

You should recognize the importance of maintaining documentation that includes network diagrams, asset management, IP address utilization, vendor documentation, and internal operating procedures, policies, and standards.

Networks are dynamic, and changes can happen regularly, which is why the physical network diagrams also must be updated. Networks have different policies and procedures on how often updates should occur. Best practice is that the diagram should be updated whenever significant changes to the network occur, such as the addition of a switch or router, a change in protocols, or the addition of a new server. These changes impact how the network operates, and the documentation should reflect the changes.

Caution

There are no hard-and-fast rules about when to change or update network documentation. However, most administrators will want to update whenever functional changes to the network occur.

The logical network refers to the direction in which data flows on the network within the physical topology. The logical diagram is not intended to focus on the network hardware but rather on how data flows through that hardware. In practice, the physical and logical topologies can be the same. In the case of the bus physical topology, data travels along the length of the cable from one computer to the next. So, the diagram for the physical and logical bus would be the same.

This is not always the case. For example, a topology can be in the physical shape of a star, but data is passed in a logical ring. The function of data travel is performed inside a switch in a ring formation. So the physical diagram appears to be a star, but the logical diagram shows data flowing in a ring formation from one computer to the next. Simply put, it is difficult to tell from looking at a physical diagram how data is flowing on the network.

In today’s network environments, the star topology is a common network implementation. Ethernet uses a physical star topology but a logical bus topology. In the center of the physical Ethernet star topology is a switch. It is what happens inside the switch that defines the logical bus topology. The switch passes data between ports as if they were on an Ethernet bus segment.

In addition to data flow, logical diagrams may include additional elements, such as the network domain architecture, server roles, protocols used, and more. Figure 9.4 shows how a logical topology may look in the form of network documentation.

FIGURE 9.4 A logical topology diagram

Caution

The logical topology of a network identifies the logical paths that data signals travel over the network.

Baselines

Baselines play an integral part in network documentation because they let you monitor the network’s overall performance. In simple terms, a baseline is a measure of performance that indicates how hard the network is working and where network resources are spent. The purpose of a baseline is to provide a basis of comparison. For example, you can compare the network’s performance results taken in March to results taken in June, or from one year to the next. More commonly, you would compare the baseline information at a time when the network is having a problem to information recorded when the network was operating with greater efficiency. Such comparisons help you determine whether there has been a problem with the network, how significant that problem is, and even where the problem lies.

To be of any use, baselining is not a one-time task; rather, baselines should be taken periodically to provide an accurate comparison. You should take an initial baseline after the network is set up and operational, and then again when major changes are made to the network. Even if no changes are made to the network, periodic baselining can prove useful as a means to determine whether the network is still operating correctly.

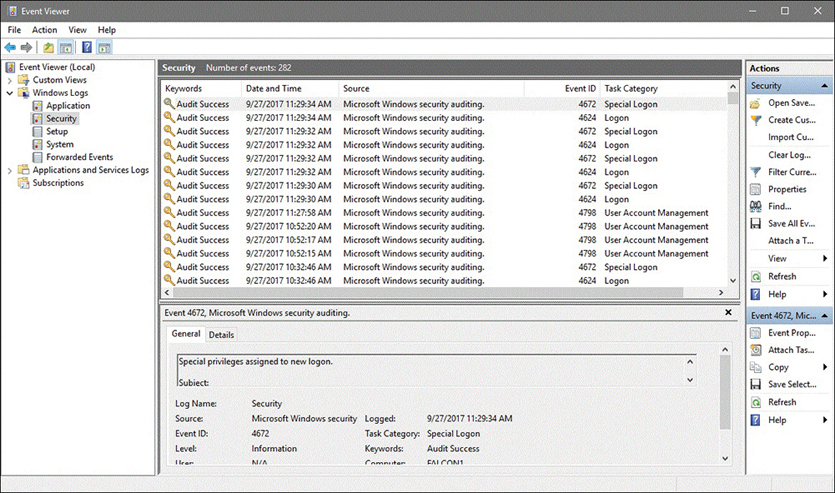

All network operating systems (NOSs), including Windows, Mac OS, UNIX, and Linux, have built-in support for network monitoring. In addition, many third-party software packages are available for detailed network monitoring. These system-monitoring tools provided in a NOS give you the means to take performance baselines, either of the entire network or for an individual segment within the network. Because of the different functions of these two baselines, they are called a system baseline and a component baseline.

To create a network baseline, network monitors provide a graphical display of network statistics. Network administrators can choose a variety of network measurements to track. They can use these statistics to perform routine troubleshooting tasks, such as locating a malfunctioning network card, a downed server, or a denial-of-service (DoS) attack.

Note

Graphing, and the process of seeing data visually, can be much more helpful in identifying trends than looking at raw data and log files.

Collecting network statistics is a process called capturing. Administrators can capture statistics on all elements of the network. For baseline purposes, one of the most common statistics to monitor is bandwidth usage. By reviewing bandwidth statistics, administrators can see where the bulk of network bandwidth is used. Then they can adapt the network for bandwidth use. If too much bandwidth is used by a particular application, administrators can actively control its bandwidth usage. Without comparing baselines, however, it is difficult to see what is normal network bandwidth usage and what is unusual.

Caution

Remember that baselines need to be taken periodically and under the same conditions to be effective. They are used to compare current performance with past performance to help determine whether the network is functioning properly or if troubleshooting is required.

Policies, Procedures, Configurations, and Regulations

Well-functioning networks are characterized by documented policies, procedures, configurations, and regulations. Because they are unique to every network, policies, procedures, configurations, and regulations should be clearly documented.

Policies

By definition, policies refer to an organization’s documented rules about what is to be done, or not done, and why. Policies dictate who can and cannot access particular network resources, server rooms, backup media, and more.

Although networks might have different policies depending on their needs, some common policies include the following:

Network usage policy: Defines who can use network resources such as PCs, printers, scanners, and remote

connections. In addition, the usage policy dictates what can be done with these resources

after they are accessed. No outside systems will be networked without permission from

the network administrator.

Network usage policy: Defines who can use network resources such as PCs, printers, scanners, and remote

connections. In addition, the usage policy dictates what can be done with these resources

after they are accessed. No outside systems will be networked without permission from

the network administrator.

Internet usage policy: This policy specifies the rules for Internet use on the job. Typically, usage should

be focused on business-related tasks. Incidental personal use is allowed during specified

times.

Internet usage policy: This policy specifies the rules for Internet use on the job. Typically, usage should

be focused on business-related tasks. Incidental personal use is allowed during specified

times.

Bring your own device (BYOD) policy: This policy specifies the rules for employees’ personally owned mobile devices (smartphones,

laptops, tablets, and so on) that they bring into the workplace and use to interact

with privileged company information and applications. Two things the policy needs

to address are onboarding and offboarding. Onboarding the mobile device is the procedures gone through to get it ready to go on the network

(scanning for viruses, adding certain apps, and so forth). Offboarding is the process of removing company-owned resources when it is no longer needed (often

done with a wipe or factory reset). Mobile device management (MDM) and mobile application management (MAM) tools (usually third party) are used to administer and leverage both employee-owned

and company-owned mobile devices and applications.

Bring your own device (BYOD) policy: This policy specifies the rules for employees’ personally owned mobile devices (smartphones,

laptops, tablets, and so on) that they bring into the workplace and use to interact

with privileged company information and applications. Two things the policy needs

to address are onboarding and offboarding. Onboarding the mobile device is the procedures gone through to get it ready to go on the network

(scanning for viruses, adding certain apps, and so forth). Offboarding is the process of removing company-owned resources when it is no longer needed (often

done with a wipe or factory reset). Mobile device management (MDM) and mobile application management (MAM) tools (usually third party) are used to administer and leverage both employee-owned

and company-owned mobile devices and applications.

ExamAlert

For the exam, be familiar with onboarding and offboarding.

Email usage policy: Email must follow the same code of conduct as expected in any other form of written

or face-to-face communication. All emails are company property and can be accessed

by the company. Personal emails should be immediately deleted.

Email usage policy: Email must follow the same code of conduct as expected in any other form of written

or face-to-face communication. All emails are company property and can be accessed

by the company. Personal emails should be immediately deleted.

Personal software policy: No outside software should be installed on network computer systems. All software

installations must be approved by the network administrator. No software can be copied

or removed from a site. Licensing restrictions must be adhered to.

Personal software policy: No outside software should be installed on network computer systems. All software

installations must be approved by the network administrator. No software can be copied

or removed from a site. Licensing restrictions must be adhered to.

Password policy: Detail how often passwords must be changed and the minimum level of security for

each (number of characters, use of alphanumeric character set, and so on).

Password policy: Detail how often passwords must be changed and the minimum level of security for

each (number of characters, use of alphanumeric character set, and so on).

User account policy: All users are responsible for keeping their password and account information secret.

All staff are required to log off and sometimes lock their systems after they finish

using them. Attempting to log on to the network with another user account is considered

a serious violation.

User account policy: All users are responsible for keeping their password and account information secret.

All staff are required to log off and sometimes lock their systems after they finish

using them. Attempting to log on to the network with another user account is considered

a serious violation.

International export controls: A number of laws and regulations govern what can and cannot be exported when it comes

to software and hardware to various countries. Employees should take every precaution

to make sure they are adhering to the letter of the law.

International export controls: A number of laws and regulations govern what can and cannot be exported when it comes

to software and hardware to various countries. Employees should take every precaution

to make sure they are adhering to the letter of the law.

Data loss prevention: Losses from employees can quickly put a company in the red. It should be understood

that it is every employee’s responsibility to make sure all preventable losses are

prevented.

Data loss prevention: Losses from employees can quickly put a company in the red. It should be understood

that it is every employee’s responsibility to make sure all preventable losses are

prevented.

Incident response policies: When an incident occurs, all employees should understand it is their responsibility

to be on the lookout for it and report it immediately to the appropriate party.

Incident response policies: When an incident occurs, all employees should understand it is their responsibility

to be on the lookout for it and report it immediately to the appropriate party.

Non Disclosure Agreements (NDAs): NDAs are the oxygen that many companies need to thrive. Employees should understand

the importance of them to continued business operations and agree to follow them to

the letter, and spirit, of the law.

Non Disclosure Agreements (NDAs): NDAs are the oxygen that many companies need to thrive. Employees should understand

the importance of them to continued business operations and agree to follow them to

the letter, and spirit, of the law.

Safety procedures and policies: Safety is everyone’s business, and all employees should know how to do their job

in the safest manner while also looking out for other employees and customers alike.

Safety procedures and policies: Safety is everyone’s business, and all employees should know how to do their job

in the safest manner while also looking out for other employees and customers alike.

Ownership policy: The company owns all data, including users’ email, voice mail, and Internet usage

logs, and the company reserves the right to inspect these at any time. Some companies

even go so far as controlling how much personal data can be stored on a workstation.

Ownership policy: The company owns all data, including users’ email, voice mail, and Internet usage

logs, and the company reserves the right to inspect these at any time. Some companies

even go so far as controlling how much personal data can be stored on a workstation.

This list is just a snapshot of the policies that guide the behavior for administrators and network users. Network policies should be clearly documented and available to network users. Often, these policies are reviewed with new staff members or new administrators. As they are updated, they are rereleased to network users. Policies are regularly reviewed and updated.

Note

You might be asked about network policies. Network policies dictate network rules and provide guidelines for network conduct. Policies are often updated and reviewed and are changed to reflect changes to the network and perhaps changes in business requirements.

Password-Related Policies

Although biometrics and smart cards are becoming more common, they still have a long way to go before they attain the level of popularity that username and password combinations enjoy. Usernames and passwords do not require any additional equipment, which practically every other method of authentication does; the username and password process is familiar to users, easy to implement, and relatively secure. For that reason, they are worthy of more detailed coverage than the other authentication systems previously discussed.

Note

Biometrics are not as ubiquitous as username/password combinations, but they are coming up quickly. Some smartphones, for example, offer the ability to use a fingerprint scanner and/or gestures to access the system instead of username and password. Features such as these are expected to become more common with future releases.

Passwords are a relatively simple form of authentication in that only a string of characters can be used to authenticate the user. However, how the string of characters is used and which policies you can put in place to govern them make usernames and passwords an excellent form of authentication.

Password Policies

All popular network operating systems include password policy systems that enable the network administrator to control how passwords are used on the system. The exact capabilities vary between network operating systems. However, generally they enable the following:

Minimum length of password: Shorter passwords are easier to guess than longer ones. Setting a minimum password

length does not prevent a user from creating a longer password than the minimum; however,

each network operating system has a limit on how long a password can be.

Minimum length of password: Shorter passwords are easier to guess than longer ones. Setting a minimum password

length does not prevent a user from creating a longer password than the minimum; however,

each network operating system has a limit on how long a password can be.

Password expiration: Also known as the maximum password age, password expiration defines how long the

user can use the same password before having to change it. A general practice is that

a password be changed every 30 days. In high-security environments, you might want

to make this value shorter, but you should generally not make it any longer. Having

passwords expire periodically is a crucial feature because it means that if a password

is compromised, the unauthorized user will not indefinitely have access.

Password expiration: Also known as the maximum password age, password expiration defines how long the

user can use the same password before having to change it. A general practice is that

a password be changed every 30 days. In high-security environments, you might want

to make this value shorter, but you should generally not make it any longer. Having

passwords expire periodically is a crucial feature because it means that if a password

is compromised, the unauthorized user will not indefinitely have access.

Prevention of password reuse: Although a system might cause a password to expire and prompt the user to change

it, many users are tempted to use the same password again. A process by which the

system remembers the last 10 passwords, for example, is most secure because it forces

the user to create completely new passwords. This feature is sometimes called enforcing

password history.

Prevention of password reuse: Although a system might cause a password to expire and prompt the user to change

it, many users are tempted to use the same password again. A process by which the

system remembers the last 10 passwords, for example, is most secure because it forces

the user to create completely new passwords. This feature is sometimes called enforcing

password history.

Prevention of easy-to-guess passwords: Some systems can evaluate the password provided by a user to determine whether it

meets a required level of complexity. This prevents users from having passwords such

as password, 12345678, their name, or their nickname.

Prevention of easy-to-guess passwords: Some systems can evaluate the password provided by a user to determine whether it

meets a required level of complexity. This prevents users from having passwords such

as password, 12345678, their name, or their nickname.

ExamAlert

You must identify an effective password policy. For example, a robust password policy would include forcing users to change their passwords on a regular basis.

Password Strength

No matter how good a company’s password policy, it is only as effective as the passwords created within it. A password that is hard to guess, or strong, is more likely to protect the data on a system than one that is easy to guess, or weak.

If you are using only numbers and letters—and the OS is not case sensitive—36 possible combinations exist for each entry, and the total number of possibilities is 366. That might seem like a lot, but to a password-cracking program, it’s not much security. A password that uses eight case-sensitive characters, with letters, numbers, and special characters, has so many possible combinations that a standard calculator cannot display the actual number.

There has always been a debate over how long a password should be. It should be sufficiently long that it is hard to break but sufficiently short that the user can easily remember it (and type it). In a normal working environment, passwords of eight characters are sufficient. Certainly, they should be no fewer than six characters. In environments in which security is a concern, passwords should be 10 characters or more.

Users should be encouraged to use a password that is considered strong. A strong password has at least eight characters; has a combination of letters, numbers, and special characters; uses mixed case; and does not form a proper word. Examples are 3Ecc5T0h and e1oXPn3r. Such passwords might be secure, but users are likely to have problems remembering them. For that reason, a popular strategy is to use a combination of letters and numbers to form phrases or long words. Examples include d1eTc0La and tAb1eT0p. These passwords might not be quite as secure as the preceding examples, but they are still strong and a whole lot better than the name of the user’s pet.

Procedures

Network procedures differ from policies in that they describe how tasks are to be performed. For example, each network administrator has backup procedures specifying the time of day backups are done, how often they are done, and where they are stored. A network is full of a number of procedures for practical reasons and, perhaps more important, for security reasons.

Administrators must be aware of several procedures when on the job. The number and exact type of procedures depends on the network. The network’s overall goal is to ensure uniformity and ensure that network tasks follow a framework. Without this procedural framework, different administrators might approach tasks differently, which could lead to confusion on the network.

Network procedures might include the following:

Backup procedures: Backup procedures specify when they are to be performed, how often a backup occurs,

who does the backup, what data is to be backed up, and where and how it will be stored.

Network administrators should carefully follow backup procedures.

Backup procedures: Backup procedures specify when they are to be performed, how often a backup occurs,

who does the backup, what data is to be backed up, and where and how it will be stored.

Network administrators should carefully follow backup procedures.

Procedures for adding new users: When new users are added to a network, administrators typically have to follow certain

guidelines to ensure that the users have access to what they need, but no more. This

is called the principle of least privilege.

Procedures for adding new users: When new users are added to a network, administrators typically have to follow certain

guidelines to ensure that the users have access to what they need, but no more. This

is called the principle of least privilege.

Privileged user agreement: Administrators and authorized users who have the ability to modify secure configurations

and perform tasks such as account setup, account termination, account resetting, auditing,

and so on need to be held to high standards.

Privileged user agreement: Administrators and authorized users who have the ability to modify secure configurations

and perform tasks such as account setup, account termination, account resetting, auditing,

and so on need to be held to high standards.

Security procedures: Some of the more critical procedures involve security. Security procedures are numerous

but may include specifying what the administrator must do if security breaches occur,

security monitoring, security reporting, and updating the OS and applications for

potential security holes.

Security procedures: Some of the more critical procedures involve security. Security procedures are numerous

but may include specifying what the administrator must do if security breaches occur,

security monitoring, security reporting, and updating the OS and applications for

potential security holes.

Network monitoring procedures: The network needs to be constantly monitored. This includes tracking such things

as bandwidth usage, remote access, user logons, and more.

Network monitoring procedures: The network needs to be constantly monitored. This includes tracking such things

as bandwidth usage, remote access, user logons, and more.

Software procedures/system life cycle: All software must be periodically monitored and updated. Documented procedures dictate

when, how often, why, and for whom these updates are done. When assets are disposed

of, asset disposal procedures should be followed to properly document and log their

removal.

Software procedures/system life cycle: All software must be periodically monitored and updated. Documented procedures dictate

when, how often, why, and for whom these updates are done. When assets are disposed

of, asset disposal procedures should be followed to properly document and log their

removal.

Procedures for reporting violations: Users do not always follow outlined network policies. This is why documented procedures

should exist to properly handle the violations. This might include a verbal warning

upon the first offense, followed by written reports and account lockouts thereafter.

Procedures for reporting violations: Users do not always follow outlined network policies. This is why documented procedures

should exist to properly handle the violations. This might include a verbal warning

upon the first offense, followed by written reports and account lockouts thereafter.

Remote-access and network admission procedures: Many workers remotely access the network. This remote access is granted and maintained

using a series of defined procedures. These procedures might dictate when remote users

can access the network, how long they can access it, and what they can access. Network admission control (NAC)—also referred to as network access control—determines who can get on the network and is usually based on 802.1X guidelines.

Remote-access and network admission procedures: Many workers remotely access the network. This remote access is granted and maintained

using a series of defined procedures. These procedures might dictate when remote users

can access the network, how long they can access it, and what they can access. Network admission control (NAC)—also referred to as network access control—determines who can get on the network and is usually based on 802.1X guidelines.

Change Management Documentation

Change management procedures might include the following:

Document reason for a change: Before making any change at all, the first question to ask is why. A change requested by one user may be based on a misunderstanding of what technology

can do, may be cost prohibitive, or may deliver a benefit not worth the undertaking.

Document reason for a change: Before making any change at all, the first question to ask is why. A change requested by one user may be based on a misunderstanding of what technology

can do, may be cost prohibitive, or may deliver a benefit not worth the undertaking.

Change request: An official request should be logged and tracked to verify what is to be done and

what has been done. Within the realm of the change request should be the configuration

procedures to be used, the rollback process that is in place, potential impact identified,

and a list of those who need to be notified.

Change request: An official request should be logged and tracked to verify what is to be done and

what has been done. Within the realm of the change request should be the configuration

procedures to be used, the rollback process that is in place, potential impact identified,

and a list of those who need to be notified.

Approval process: Changes should not be approved on the basis of who makes the most noise, but rather

who has the most justified reasons. An official process should be in place to evaluate

and approve changes prior to actions being undertaken. The approval can be done by

a single administrator or a formal committee based on the size of your organization

and the scope of the change being approved.

Approval process: Changes should not be approved on the basis of who makes the most noise, but rather

who has the most justified reasons. An official process should be in place to evaluate

and approve changes prior to actions being undertaken. The approval can be done by

a single administrator or a formal committee based on the size of your organization

and the scope of the change being approved.

Maintenance window: After a change has been approved, the next question to address is when it is to take

place. Authorized downtime should be used to make changes to production environments.

Maintenance window: After a change has been approved, the next question to address is when it is to take

place. Authorized downtime should be used to make changes to production environments.

Notification of change: Those affected by a change should be notified after the change has taken place. The

notification should not be just of the change but should include any and all impact

to them and identify who they can turn to with questions.

Notification of change: Those affected by a change should be notified after the change has taken place. The

notification should not be just of the change but should include any and all impact

to them and identify who they can turn to with questions.

Documentation: One of the last steps is always to document what has been done. This should include

documentation on network configurations, additions to the network, and physical location

changes.

Documentation: One of the last steps is always to document what has been done. This should include

documentation on network configurations, additions to the network, and physical location

changes.

These represent just a few of the procedures that administrators must follow on the job. It is crucial that all these procedures are well documented, accessible, reviewed, and updated as needed to be effective.

Configuration Documentation

One other critical form of documentation is configuration documentation. Many administrators believe they could never forget the configuration of a router, server, or switch, but it often happens. Although it is often a thankless, time-consuming task, documenting the network hardware and software configurations is critical for continued network functionality.

Note

Organizing and completing the initial set of network documentation is a huge task, but it is just the beginning. Administrators must constantly update all documentation to keep it from becoming obsolete. Documentation is perhaps one of the less-glamorous aspects of the administrator’s role, but it is one of the most important.

Regulations

The terms regulation and policy are often used interchangeably; however, there is a difference. As mentioned, policies are written by an organization for its employees. Regulations are actual legal restrictions with legal consequences. These regulations are set not by the organizations but by applicable laws in the area. Improper use of networks and the Internet can certainly lead to legal violations and consequences. The following is an example of network regulation from an online company:

“Transmission, distribution, uploading, posting or storage of any material in violation of any applicable law or regulation is prohibited. This includes, without limitation, material protected by copyright, trademark, trade secret or other intellectual property right used without proper authorization, material kept in violation of state laws or industry regulations such as social security numbers or credit card numbers, and material that is obscene, defamatory, libelous, unlawful, harassing, abusive, threatening, harmful, vulgar, constitutes an illegal threat, violates export control laws, hate propaganda, fraudulent material or fraudulent activity, invasive of privacy or publicity rights, profane, indecent or otherwise objectionable material of any kind or nature. You may not transmit, distribute, or store material that contains a virus, ‘Trojan Horse,’ adware or spyware, corrupted data, or any software or information to promote or utilize software or any of Network Solutions services to deliver unsolicited email. You further agree not to transmit any material that encourages conduct that could constitute a criminal offense, gives rise to civil liability or otherwise violates any applicable local, state, national or international law or regulation.”

ExamAlert

For the exam and for real-life networking, remember that regulations often are enforceable by law.

Labeling

One of the biggest problems with documentation is in the time that it takes to do it. To shorten this time, it is human nature to take shortcuts and use code or shorthand when labeling devices, maps, reports, and the like. Although this can save time initially, it can render the labels useless if a person other than the one who created the labels looks at them or if a long period of time has passed since they were created and the author cannot remember what the label now means.

To prevent this dilemma, it is highly recommended that standard labeling rules be created by each organization and enforced at all levels.

You have been given a physical wiring schematic that shows the following:

|

Description |

Installation Notes |

|

Category 5E 350 MHz plenum-rated cable |

Cable runs 50 feet from the MDF to the IDF. Cable placed through the ceiling and through a mechanical room. Cable was installed 01/15/2018, upgrading a nonplenum cable. |

|

Category 5E 350 MHz nonplenum cable |

Horizontal cable runs 45 feet to 55 feet from the IDF to a wall jack. Cable 6 replaced Category 5e cable February 2018. Section of cable run through ceiling and over fluorescent lights. |

|

Category 6a UTP cable |

Patch cable connecting printer runs 15 feet due to printer placement. |

|

8.3-micron core/125-micron |

Connecting fiber cable runs 2 kilometers cladding single mode between the primary and secondary buildings. |

1. Given this information, what cable recommendation might you make, if any?

A. Nonplenum cable should be used between the IDF and MDF.

A. Nonplenum cable should be used between the IDF and MDF.

B. The horizontal cable run should use plenum cable.

B. The horizontal cable run should use plenum cable.

C. The patch cable connecting the printer should be shorter.

C. The patch cable connecting the printer should be shorter.

D. Leave the network cabling as is.

D. Leave the network cabling as is.

2. You have been called in to inspect a network configuration. You are given only one network diagram, shown in the following figure. Using the diagram, what recommendation might you make?

A. Cable 1 does not need to be plenum rated.

A. Cable 1 does not need to be plenum rated.

B. Cable 2 should be STP cable.

B. Cable 2 should be STP cable.

C. Cable 3 should be STP cable.

C. Cable 3 should be STP cable.

D. None. The network looks good.

D. None. The network looks good.

3. Hollis is complaining that the network cabling in her office is outdated and should be changed. What should she do to have the cabling evaluated and possibly changed?

A. Tell her supervisor that IT needs to get on the ball.

A. Tell her supervisor that IT needs to get on the ball.

B. Tell your supervisor that IT needs to get on the ball.

B. Tell your supervisor that IT needs to get on the ball.

C. Purchase new cabling at the local electronics store.

C. Purchase new cabling at the local electronics store.

D. Complete a change request.

D. Complete a change request.

Cram Quiz Answers

1. B. In this scenario, a section of horizontal cable runs through the ceiling and over fluorescent lights. This cable run might be a problem because such devices can cause EMI. Alternatively, plenum cable is used in this scenario. STP may have worked as well.

2. B. In this diagram, Cable 1 is plenum rated and should be fine. Cable 3 is patch cable and does not need to be STP rated. Cable 2, however, goes through walls and ceilings. Therefore, it would be recommended to have a better grade of cable than regular UTP. STP provides greater resistance to EMI.

3. D. An official change request should be logged and tracked to verify what is to be done and what has been done. Within the realm of the change request should be the configuration procedures to be used, the rollback process that is in place, potential impact identified, and a list of those that need to be notified.

Business Continuity and Disaster Recovery

Compare and contrast business continuity and disaster recovery concepts.

Compare and contrast business continuity and disaster recovery concepts.

Identify policies and best practices.

Identify policies and best practices.

CramSaver

If you can correctly answer these questions before going through this section, save time by skimming the Exam Alerts in this section and then completing the Cram Quiz at the end of the section.

1. What is the difference between an incremental backup and a differential backup?

2. What are hot, warm, and cold sites used for?

3. True or false: Acceptable use policies define what controls are required to implement and maintain the security of systems, users, and networks.

Answers

1. With incremental backups, all data that has changed since the last full or incremental backup is backed up. The restore procedure requires several backup iterations: the media used in the latest full backup and all media used for incremental backups since the last full backup. An incremental backup uses the archive bit and clears it after a file is saved to disk. With a differential backup, all data changed since the last full backup is backed up. The restore procedure requires the latest full backup media and the latest differential backup media. A differential backup uses the archive bit to determine which files must be backed up but does not clear it.

2. Hot, warm, and cold sites are designed to provide alternative locations for network operations if a disaster occurs.

3. False. Security policies define what controls are required to implement and maintain the security of systems, users, and networks. Acceptable use policies (AUPs) describe how the employees in an organization can use company systems and resources: both software and hardware.

Even the most fault-tolerant networks can fail, which is an unfortunate fact. When those costly and carefully implemented fault-tolerance strategies fail, you are left with disaster recovery.

Disaster recovery can take many forms. In addition to disasters such as fire, flood, and theft, many other potential business disruptions can fall under the banner of disaster recovery. For example, the failure of the electrical supply to your city block might interrupt the business functions. Such an event, although not a disaster per se, might invoke the disaster recovery methods.

The cornerstone of every disaster recovery strategy is the preservation and recoverability of data. When talking about preservation and recoverability, you must talk about backups. Implementing a regular backup schedule can save you a lot of grief when fault tolerance fails or when you need to recover a file that has been accidentally deleted. When it’s time to design a backup schedule, you can use three key types of backups: full, differential, and incremental.

Backups

Full Backups

The preferred method of backup is the full backup method, which copies all files and directories from the hard disk to the backup media. There are a few reasons why doing a full backup is not always possible. First among them is likely the time involved in performing a full backup.

ExamAlert

During a recovery operation, a full backup is the fastest way to restore data of all the methods discussed here, because only one set of media is required for a full restore.

Depending on the amount of data to be backed up, however, full backups can take an extremely long time when you are backing up and can use extensive system resources. Depending on the configuration of the backup hardware, this can considerably slow down the network. In addition, some environments have more data than can fit on a single medium. This makes doing a full backup awkward because someone might need to be there to change the media.

The main advantage of full backups is that a single set of media holds all the data you need to restore. If a failure occurs, that single set of media should be all that is needed to get all data and system information back. The upshot of all this is that any disruption to the network is greatly reduced.

Unfortunately, its strength can also be its weakness. A single set of media holding an organization’s data can be a security risk. If the media were to fall into the wrong hands, all the data could be restored on another computer. Using passwords on backups and using a secure offsite and onsite location can minimize the security risk.

Differential Backups

Companies that don’t have enough time to complete a full backup daily can use the differential backup. Differential backups are faster than a full backup because they back up only the data that has changed since the last full backup. This means that if you do a full backup on a Saturday and a differential backup on the following Wednesday, only the data that has changed since Saturday is backed up. Restoring the differential backup requires the last full backup and the latest differential backup.

Differential backups know what files have changed since the last full backup because they use a setting called the archive bit. The archive bit flags files that have changed or have been created and identifies them as ones that need to be backed up. Full backups do not concern themselves with the archive bit because all files are backed up, regardless of date. A full backup, however, does clear the archive bit after data has been backed up to avoid future confusion. Differential backups notice the archive bit and use it to determine which files have changed. The differential backup does not reset the archive bit information.

Incremental Backups

Some companies have a finite amount of time they can allocate to backup procedures. Such organizations are likely to use incremental backups in their backup strategy. Incremental backups save only the files that have changed since the last full or incremental backup. Like differential backups, incremental backups use the archive bit to determine which files have changed since the last full or incremental backup. Unlike differentials, however, incremental backups clear the archive bit, so files that have not changed are not backed up.

ExamAlert

Both full and incremental backups clear the archive bit after files have been backed up.

The faster backup time of incremental backups comes at a price—the amount of time required to restore. Recovering from a failure with incremental backups requires numerous sets of media—all the incremental backup media sets and the one for the most recent full backup. For example, if you have a full backup from Sunday and an incremental for Monday, Tuesday, and Wednesday, you need four sets of media to restore the data. Each set in the rotation is an additional step in the restore process and an additional failure point. One damaged incremental media set means that you cannot restore the data. Table 9.2 summarizes the various backup strategies.

|

Backup Type |

Advantage |

Disadvantage |

Data Backed Up |

Archive Bit |

|

Full |

Backs up all data on a single media set. Restoring data requires the fewest media sets. |

Depending on the amount of data, full backups can take a long time. |

All files and directories are backed up. |

Does not use the archive bit, but resets it after data has been backed up. |

|

Differential |

Faster backups than a full backup. |

The restore process takes longer than just a full backup. Uses more media sets than a full backup. |

All files and directories that have changed since the last full backup. |

Uses the archive bit to determine the files that have changed, but does not reset the archive bit. |

|

Incremental |

Faster backup times |

Requires multiple disks; restoring data takes more time than the other backup methods. |

The files and directories that have changed since the last full or incremental backup. |

Uses the archive bit to determine the files that have changed, and resets the archive bit. |

ExamAlert

Review Table 9.2 before taking the Network+ exam.

Snapshots

In addition to the three types of backups previously discussed, there are also snapshots. Whereas a backup can take a long time to complete, the advantage of a snapshot—an image of the state of a system at a particular point in time—is that it is an instantaneous copy of the system. This is often accomplished by splitting a mirrored set of disks or by creating a copy of a disk block when it is written in order to preserve the original and keep it available.

Snapshots are popular with virtual machine implementations. You can take as many snapshots as you want (provided you have enough storage space) in order to be able to revert a machine to a “saved” state. Snapshots contain a copy of the virtual machine settings (hardware configuration), information on all virtual disks attached, and the memory state of the machine at the time of the snapshot. This makes the snapshots additionally useful for virtual machine cloning, allowing the machine to be copied once—or multiple times—for testing.

ExamAlert

Think of a snapshot as a photograph, which is where the name came from, of a moment in time of any system.

Backup Best Practices

Many details go into making a backup strategy a success. The following are issues to consider as part of your backup plan:

Offsite storage: Consider storing backup media sets offsite so that if a disaster occurs in a building,

a current set of media is available offsite. The offsite media should be as current

as any onsite and should be secure.

Offsite storage: Consider storing backup media sets offsite so that if a disaster occurs in a building,

a current set of media is available offsite. The offsite media should be as current

as any onsite and should be secure.

Label media: The goal is to restore the data as quickly as possible. Trying to find the media

you need can prove difficult if it is not marked. Furthermore, this can prevent you

from recording over something you need to keep.

Label media: The goal is to restore the data as quickly as possible. Trying to find the media

you need can prove difficult if it is not marked. Furthermore, this can prevent you

from recording over something you need to keep.

Verify backups: Never assume that the backup was successful. Seasoned administrators know that checking

backup logs and performing periodic test restores are part of the backup process.

Verify backups: Never assume that the backup was successful. Seasoned administrators know that checking

backup logs and performing periodic test restores are part of the backup process.

Cleaning: You need to occasionally clean the backup drive. If the inside gets dirty, backups

can fail.

Cleaning: You need to occasionally clean the backup drive. If the inside gets dirty, backups

can fail.

ExamAlert

A backup strategy must include offsite storage to account for theft, fire, flood, or other disasters.

Using Uninterruptible Power Supplies

No discussion of fault tolerance can be complete without a look at power-related issues and the mechanisms used to combat them. When you design a fault-tolerant system, your planning should definitely include uninterruptible power supplies (UPSs). A UPS serves many functions and is a major part of server consideration and implementation.

On a basic level, a UPS, also known as a battery backup, is a box that holds a battery and built-in charging circuit. During times of good power, the battery is recharged; when the UPS is needed, it’s ready to provide power to the server. Most often, the UPS is required to provide enough power to give the administrator time to shut down the server in an orderly fashion, preventing any potential data loss from a dirty shutdown.

Why Use a UPS?

Organizations of all shapes and sizes need UPSs as part of their fault tolerance strategies. A UPS is as important as any other fault-tolerance measure. Three key reasons make a UPS necessary:

Data availability: The goal of any fault-tolerance measure is data availability. A UPS ensures access

to the server if a power failure occurs—or at least as long as it takes to save a

file.

Data availability: The goal of any fault-tolerance measure is data availability. A UPS ensures access

to the server if a power failure occurs—or at least as long as it takes to save a

file.

Protection from data loss: Fluctuations in power or a sudden power-down can damage the data on the server system.

In addition, many servers take full advantage of caching, and a sudden loss of power

could cause the loss of all information held in cache.

Protection from data loss: Fluctuations in power or a sudden power-down can damage the data on the server system.

In addition, many servers take full advantage of caching, and a sudden loss of power

could cause the loss of all information held in cache.

Protection from hardware damage: Constant power fluctuations or sudden power-downs can damage hardware components

within a computer. Damaged hardware can lead to reduced data availability while the

hardware is repaired.

Protection from hardware damage: Constant power fluctuations or sudden power-downs can damage hardware components

within a computer. Damaged hardware can lead to reduced data availability while the

hardware is repaired.

Power Threats

In addition to keeping a server functioning long enough to safely shut it down, a UPS safeguards a server from inconsistent power. This inconsistent power can take many forms. A UPS protects a system from the following power-related threats:

Blackout: A total failure of the power supplied to the server.

Blackout: A total failure of the power supplied to the server.

Spike: A short (usually less than 1 second) but intense increase in voltage. Spikes can

do irreparable damage to any kind of equipment, especially computers.

Spike: A short (usually less than 1 second) but intense increase in voltage. Spikes can

do irreparable damage to any kind of equipment, especially computers.

Surge: Compared to a spike, a surge is a considerably longer (sometimes many seconds) but

usually less intense increase in power. Surges can also damage your computer equipment.

Surge: Compared to a spike, a surge is a considerably longer (sometimes many seconds) but

usually less intense increase in power. Surges can also damage your computer equipment.

Sag: A short-term voltage drop (the opposite of a spike). This type of voltage drop can

cause a server to reboot.

Sag: A short-term voltage drop (the opposite of a spike). This type of voltage drop can

cause a server to reboot.

Brownout: A drop in voltage that usually lasts more than a few minutes.

Brownout: A drop in voltage that usually lasts more than a few minutes.

Many of these power-related threats can occur without your knowledge; if you don’t have a UPS, you cannot prepare for them. For the cost, it is worth buying a UPS, if for no other reason than to sleep better at night.

Alternatives to UPS

Power management is not limited only to the use of UPSs. In addition, to these devices, you should employ power generators to be able to keep your systems up and running when the electrical provider is down for an extended period of time. Redundant circuits and dual power supplies should also be used for key equipment.

Hot, Warm, and Cold Sites

A disaster recovery plan might include the provision for a recovery site that can be quickly brought into play. These sites fall into three categories: hot, warm, and cold. The need for each of these types of sites depends largely on the business you are in and the funds available. Disaster recovery sites represent the ultimate in precautions for organizations that need them. As a result, they do not come cheaply.

The basic concept of a disaster recovery site is that it can provide a base from which the company can be operated during a disaster. The disaster recovery site normally is not intended to provide a desk for every employee. It’s intended more as a means to allow key personnel to continue the core business functions.

In general, a cold recovery site is a site that can be up and operational in a relatively short amount of time, such as a day or two. Provision of services, such as telephone lines and power, is taken care of, and the basic office furniture might be in place. But there is unlikely to be any computer equipment, even though the building might have a network infrastructure and a room ready to act as a server room. In most cases, cold sites provide the physical location and basic services.

Cold sites are useful if you have some forewarning of a potential problem. Generally, cold sites are used by organizations that can weather the storm for a day or two before they get back up and running. If you are the regional office of a major company, it might be possible to have one of the other divisions take care of business until you are ready to go. But if you are the only office in the company, you might need something a little hotter.

For organizations with the dollars and the desire, hot recovery sites represent the ultimate in fault-tolerance strategies. Like cold recovery sites, hot sites are designed to provide only enough facilities to continue the core business function, but hot recovery sites are set up to be ready to go at a moment’s notice.

A hot recovery site includes phone systems with connected phone lines. Data networks also are in place, with any necessary routers and switches plugged in and turned on. Desks have installed and waiting desktop PCs, and server areas are replete with the necessary hardware to support business-critical functions. In other words, within a few hours, the hot site can become a fully functioning element of an organization.

The issue that confronts potential hot-recovery site users is that of cost. Office space is expensive in the best of times, but having space sitting idle 99.9 percent of the time can seem like a tremendously poor use of money. A popular strategy to get around this problem is to use space provided in a disaster recovery facility, which is basically a building, maintained by a third-party company, in which various businesses rent space. Space is usually apportioned according to how much each company pays.

Sitting between the hot and cold recovery sites is the warm site. A warm site typically has computers but is not configured ready to go. This means that data might need to be upgraded or other manual interventions might need to be performed before the network is again operational. The time it takes to get a warm site operational lands right in the middle of the other two options, as does the cost.

ExamAlert

A hot site mirrors the organization’s production network and can assume network operations at a moment’s notice. Warm sites have the equipment needed to bring the network to an operational state but require configuration and potential database updates. A cold site has the space available with basic services but typically requires equipment delivery.

High Availability and Recovery Concepts

When an incident occurs, it is too late to consider policies and procedures then; this must be done well ahead of time. Business continuity should always be of the utmost concern. Business continuity is primarily concerned with the processes, policies, and methods that an organization follows to minimize the impact of a system failure, network failure, or the failure of any key component needed for operation. Business continuity planning (BCP) is the process of implementing policies, controls, and procedures to counteract the effects of losses, outages, or failures of critical business processes. BCP is primarily a management tool that ensures that critical business functions (CBFs) can be performed when normal business operations are disrupted.

Critical business functions refer to those processes or systems that must be made operational immediately when an outage occurs. The business can’t function without them, and many are information intensive and require access to both technology and data. When you evaluate your business’s sustainability, realize that disasters do indeed happen. If possible, build infrastructures that don’t have a single point of failure (SPOF) or connection. If you’re the administrator for a small company, it is not uncommon for the SPOF to be a router/gateway, but you must identify all critical nodes and critical assets. The best way to remove an SPOF from your environment is to add in redundancy.

Know that every piece of equipment can be rated in terms of mean time between failures (MTBF) and mean time to recovery (MTTR). The MTBF is the measurement of the anticipated or predicted incidence of failure of a system or component between inherent failures, whereas the MTTR is the measurement of how long it takes to repair a system or component after a failure occurs.

Some technologies that can help with availability are the following:

Fault tolerance is the capability to withstand a fault (failure) without losing data. This can be

accomplished through the use of RAID, backups, and similar technologies. Popular fault-tolerant

RAID implementations include RAID 1, RAID 5, and RAID 10.

Fault tolerance is the capability to withstand a fault (failure) without losing data. This can be

accomplished through the use of RAID, backups, and similar technologies. Popular fault-tolerant

RAID implementations include RAID 1, RAID 5, and RAID 10.

Load balancing is a technique in which the workload is distributed among several servers. This feature

can take networks to the next level; it increases network performance, reliability,

and availability. A load balancer can be either a hardware device or software specially

configured to balance the load.

Load balancing is a technique in which the workload is distributed among several servers. This feature

can take networks to the next level; it increases network performance, reliability,

and availability. A load balancer can be either a hardware device or software specially

configured to balance the load.

ExamAlert

Remember that load balancing increases redundancy and therefore data availability. Also, load balancing increases performance by distributing the workload.

NIC teaming is the process of combining multiple network cards for performance and redundancy

(fault tolerance) reasons. This can also be called bonding, balancing, or aggregation.