Chapter 6. Planning AWS Projects and Managing Costs

Throughout the earlier chapters, we explored how to use Amazon EMR, S3, and EC2 to analyze data. In this chapter, we’ll take a look at the project costs of these components, along with a number of the key factors to consider when building a new project or moving an existing project to AWS. There are many benefits to moving an application into AWS, but they need to be weighed against the real-world dependencies that can be encountered in a project.

Developing a Project Cost Model

Whether a company is building a new application or moving an existing application to AWS, developing a model of the costs that will be incurred can help the business understand if moving to AWS and EMR is the right strategy. It may also highlight which components of the application it makes sense to run in AWS and which components can be run most cost-effectively on existing in-house infrastructure.

In most existing applications, the current infrastructure and software licensing can affect the costs and options available in building and operating an application in AWS. In this section, we’ll compare these costs against the similar factors in AWS to help you best determine the solution that meets your project’s needs. We’ll also help you determine key factors to consider in your application development plan and how to estimate and minimize the operational costs of your project.

Software Licensing

The data analysis building blocks covered in this book made heavy use of AWS services and open source tools. In each of the examples, the charges to run the application were only incurred while the application was running—in other words, the “pay as you go” usage charges set by Amazon. However, in the real world, applications may make heavy use of a number of third-party software applications. Software licensing can be a tricky problem in AWS (and many cloud environments) due to the traditional licensing models that many third-party products are built around.

Traditional software licensing typically uses one or more of the licensing models shown in Table 6-1.

| Licensing model | Description |
| --- | --- |
| License server | With a license server, the software will need to reach out and validate its license against another server either located at the software firm that sells the software or on a standalone server. Software in this licensing model will operate in AWS, but like the other licensing models, it will limit your ability to scale up in the AWS environment. In the worst-case scenario, you may need to run a separate EC2 instance to act as a license server and incur EC2 charges on that license server instance. |
| CPU | Many software packages license software based on the number of CPUs in the server or virtual machine. To stay in compliance with CPU licensing in the AWS cloud, the EC2 instance or Amazon EMR instances must have the same number or fewer virtual cores. The EC2 instance sizing chart can help you identify the EC2 instance types with the needed number of CPUs. This licensing model can create challenges when the number of CPU licenses forces an application to run on EC2 instances with memory sizes below what may be required to meet performance needs. |
| Server | In server- or node-locked licensing, the software can only be run on specific servers. Typically, as part of license enforcement, the software will examine hardware attributes like the MAC address, CPU identifiers, and other physical elements of a server. Software with this licensing restriction can be run in the AWS cloud, but will need to be run on a predefined set of instances that matches the licensing parameters. This, like the CPU model, will limit the ability to scale inside AWS with multiple running instances. |

None of the traditional software licensing models are terribly AWS-friendly. Such licensing typically requires a large purchase up front rather than the pay-as-you-go model of AWS services. The restrictions also limit the number of running instances and require you to purchase licenses up to the application’s expected peak load. These limitations are no worse than the scenario of running the application in a traditional data center, but they negate some of the benefits of the pay-as-you-go model and of matching demand with the near-instant elasticity of starting additional instances in AWS.

Open source software is the most cloud-friendly model. The software can be loaded into EMR and EC2 instances without the concern of license regimes that tie software to specific machine instances. Many real-world applications, however, will typically make use of some third-party software for some of the system components. An example could be an in-house web application built using Microsoft Windows and Microsoft SQL Server. How can an application like this be moved to AWS to improve scalability, use EMR for website analytics, and still remain compliant with Microsoft software licensing?

AWS and Cloud Licensing

Many, but not all, applications can utilize the cloud licensing relationships Amazon has developed with third-party independent software vendors like Microsoft, Oracle, MapR, and others. These vendors have worked with Amazon to build AWS services with their products preinstalled and include their licensing in the price of the AWS service being used. With these vendors, software licensing is addressed using either a pay-as-you-go model or by leveraging licenses already purchased (also known as the “bring your own license” model).

With pay-as-you-go licensing, third-party software is licensed and paid for on an hourly basis in the same manner as an EC2 instance or other AWS services. You can determine exactly how much is being paid to license the software by reviewing the charge information on the AWS service page. Returning to the earlier example of licensing Microsoft Windows Server with Microsoft SQL Server, a large Amazon Linux EC2 instance currently costs $0.24 per hour, while the same size instance with Microsoft Windows and SQL Server costs $0.974 per hour. The additional licensing cost of having Microsoft Windows and SQL Server preinstalled and running to support our app is therefore $0.734 per hour for a large EC2 instance. These licensing costs can vary with instance size or can be a flat rate. Table 6-2 compares a number of AWS services that use third-party software with their AWS open source equivalents to show the licensing cost differences incurred.

| Service | Open source cost | Third-party cost | Difference in cost |
| --- | --- | --- | --- |
| EMR | Amazon EMR - Large - $0.30 per hour | MapR M7 - Large - $0.43 per hour | 43% more ($0.13 per hour) |
| EC2 | Amazon Linux - Small - $0.06 per hour | Windows Server - Small - $0.091 per hour | 52% more ($0.031 per hour) |
| EC2 | Amazon Linux - Large - $0.24 per hour | Windows Server - Large - $0.364 per hour | 52% more ($0.124 per hour) |
| EMR | Amazon EMR - Large - $0.30 per hour | MapR M3 - Large - $0.30 per hour | Same price ($0.00 per hour) |
| EMR | Amazon EMR - Large - $0.30 per hour | MapR M5 - Large - $0.36 per hour | 20% more ($0.06 per hour) |
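The Difference column in Table 6-2 is the third-party rate minus the open source rate, expressed as a percentage of the open source rate. A minimal Python sketch that recomputes it from the table’s own (historical) prices, plus the Windows and SQL Server example above; the names `TABLE_6_2` and `licensing_premium` are illustrative, not part of any AWS tool:

```python
# Hourly prices transcribed from Table 6-2 (historical; current AWS prices differ).
TABLE_6_2 = [
    # (comparison, open source $/hour, third-party $/hour)
    ("EMR Large vs. MapR M7", 0.30, 0.43),
    ("EC2 Small: Amazon Linux vs. Windows Server", 0.06, 0.091),
    ("EC2 Large: Amazon Linux vs. Windows Server", 0.24, 0.364),
    ("EMR Large vs. MapR M3", 0.30, 0.30),
    ("EMR Large vs. MapR M5", 0.30, 0.36),
]


def licensing_premium(open_source_rate, third_party_rate):
    """Return (extra dollars per hour, percent increase over the open source rate)."""
    extra = third_party_rate - open_source_rate
    return extra, 100.0 * extra / open_source_rate


for name, oss_rate, licensed_rate in TABLE_6_2:
    extra, pct = licensing_premium(oss_rate, licensed_rate)
    print(f"{name}: +${extra:.3f} per hour ({pct:.0f}% more)")

# The Windows + SQL Server example from the text: $0.974 vs. $0.24 per hour.
extra, pct = licensing_premium(0.24, 0.974)
print(f"Windows + SQL Server, Large: +${extra:.3f} per hour ({pct:.0f}% more)")
```

The same function makes it easy to see that bundling SQL Server roughly quadruples the hourly rate of a large instance, a much bigger jump than Windows alone.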

The “bring your own license” model is another option for a select number of third-party products. Both Microsoft and Oracle support this model in AWS for a number of their products. This model is similar to the traditional software licensing model, with some notable exceptions. The software is already preloaded and set up on instance images, and there is no requirement to load software keys. Also, the license is not tied to a specific EC2 or EMR instance. This allows the application to run on reserve, on-demand, or spot instances to save costs on the EC2 usage fees. Most importantly, this allows a business’s existing software licensing investment to be migrated to AWS without incurring additional licensing costs.

More on AWS Cloud Licensing

The “pay as you go” prices for the many AWS products that are pre-configured with third-party software can be found on the individual services pricing pages. Third-party software configurations and pricing exist for EC2, Amazon EMR with MapR, and Relational Database Service (RDS).

The “bring your own license” model is a bit more complicated, because a number of vendors have their own sets of supported AWS licensing products and cloud licensing conversion terms. Amazon has information on Microsoft’s license mobility program on its site under the topic Microsoft License Mobility Through Software Assurance. Information on Oracle licensing can be found under the topic Amazon RDS for Oracle Database; Oracle Database on RDS can be run under either model.

Private Data Center and AWS Cost Comparisons

Now that you understand software licensing and how it impacts the project, let’s take a look at the software and other data center components that need to be included in a project’s cost projections. For example, consider the cost components of operating a traditional application in a private data center versus running the same application in AWS with similar attributes. In a traditional data center, you need to account for the following cost elements:

- Estimated upfront costs of purchasing hardware, software licensing costs, and allocation of physical space in the data center

- Estimated labor costs to set up and maintain the servers and software

- Estimated data center costs of electricity, heating, cooling, and networking

- Estimated software maintenance and support costs

In the traditional data center, a company makes a capital expenditure to buy equipment for the application. Because this is physical hardware that the company purchases and owns outright, the hardware and software can typically be depreciated over a three-year period. The IRS has a lot of material on depreciation, but for the purposes of this book, depreciation reduces the cost of the purchased hardware and software over the three years by allowing the business to take a tax deduction on a portion of the original cost.

When you are running the same application in AWS, a number of the cost elements are similar, but without much of the upfront purchasing costs. In an AWS environment, project cost estimates need to account for the following elements:

- Estimated costs of EC2 and EMR instances over three years

- Estimated labor costs to set up and maintain the EC2 and EMR instances and software

- Estimated software maintenance and support costs

In AWS, there is no need to procure hardware, and in many cases the software costs are hourly licensing charges for preinstalled third-party products. Services like AWS are treated differently from a tax and accounting perspective. Because in most cases the business does not own the software and hardware used in AWS, it cannot depreciate the cost of AWS services. At first, this may seem to increase the cost of running an application in the cloud. However, the business also avoids the initial upfront costs of the traditional data center, where hardware and software must be purchased before the project can even begin. That money can continue to be put to work for the business until the AWS costs are incurred at a later date.

Cost Calculations on an Example Application

To put many of the licensing and data center costs that have been discussed in perspective, let’s take a look at a typical application and compare the cost of purchasing and building out the infrastructure in a traditional data center versus running the same application in AWS.

For a data analysis application, let’s assume the application being built is a web application with a Hadoop cluster (which would be an EMR cluster in AWS), used to pull data from the web servers to analyze traffic and log information for the site.

The site experiences the following load and server needs throughout the day:

- During business hours from 9 A.M. until 5 P.M., the application needs eight Windows-based web servers, an Oracle database server, and a four-node Hadoop cluster to process traffic.

- During the evening from 5 P.M. until midnight, the application can be scaled down to four Windows-based web servers, an Oracle database server, and a three-node Hadoop cluster to process traffic.

- During the early morning from midnight until 9 A.M., the application can be scaled down to two Windows-based web servers, an Oracle database server, and a two-node Hadoop cluster to process traffic.
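The savings argument that follows comes down to instance-hours. The sketch below, a hypothetical helper written for this example rather than an AWS tool, tallies daily instance-hours for the schedule above and compares them with keeping peak capacity running around the clock, as a traditional data center typically would:

```python
# Daily load profile from the example: (hours in window, web servers, database servers, Hadoop nodes).
SCHEDULE = [
    (8, 8, 1, 4),  # 9 A.M. to 5 P.M.
    (7, 4, 1, 3),  # 5 P.M. to midnight
    (9, 2, 1, 2),  # midnight to 9 A.M.
]
TIERS = ("web", "database", "hadoop")


def daily_instance_hours(schedule):
    """Instance-hours per tier when capacity follows the schedule (the AWS approach)."""
    if sum(window[0] for window in schedule) != 24:
        raise ValueError("schedule windows must cover exactly 24 hours")
    totals = dict.fromkeys(TIERS, 0)
    for hours, *counts in schedule:
        for tier, count in zip(TIERS, counts):
            totals[tier] += hours * count
    return totals


def always_on_instance_hours(schedule):
    """Instance-hours per tier if peak capacity runs all day (the typical data center approach)."""
    return {
        tier: 24 * max(window[i + 1] for window in schedule)
        for i, tier in enumerate(TIERS)
    }


scaled = daily_instance_hours(SCHEDULE)
peak = always_on_instance_hours(SCHEDULE)
for tier in TIERS:
    print(f"{tier}: {scaled[tier]} scaled vs. {peak[tier]} always-on instance-hours per day")
```

For this schedule the web tier needs 110 instance-hours per day instead of 192 and the Hadoop tier 71 instead of 96, while the single database server runs all 24 hours either way. Multiplying these hours by the large-instance rates in Table 6-2 (110 × $0.364 for Windows, 71 × $0.30 for EMR) over an average month appears to line up with the monthly AWS server figures in Table 6-3.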

In a traditional data center, servers are typically not scaled down and turned off. With AWS, the number of EC2 instances and EMR nodes can be scaled to match needed capacity. Because costs are only incurred on actual AWS usage, this is where some of the cost savings start to become apparent in AWS—notably from lower AWS (and licensing) charges as resources are scaled up and down throughout the day. In Table 6-3, we’ve broken out the costs of running this application in a traditional data center and in AWS.

| Cost element | Private data center (initial) | AWS (initial) | Private data center (monthly) | AWS (monthly) |
| --- | --- | --- | --- | --- |
| Totals | $34,925 | $3,125 | $10,393 | $5,512 |
| Windows servers | $16,000 | $0 | $0 | $1,218.00 |
| Hadoop servers | $8,000 | $0 | $0 | $648.00 |
| Database servers | $2,000 | $0 | $0 | $421.00 |
| Utilities and building | $0 | $0 | $1,000 | $0 |
| Windows software licenses | $4,800 | $0 | $0 | $0 |
| Oracle software licenses | $1,000 | $0 | $0 | $0 |
| Software support costs | $0 | $0 | $18 | $0 |
| 24/7 support | $0 | $0 | $0 | $100 |
| Labor costs | $3,125 | $3,125 | $9,375 | $3,125 |

In the cost breakout, don’t focus on the exact dollar amounts. The costs will vary greatly based on the application being built, and the regional labor and utility costs will depend on the city in which the application is hosted. The straight three-year costs of the project come to $409,073 for the private data center and $201,557 with AWS. This is clearly a significant savings using AWS for the application over three years.
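The straight three-year figures are each column’s initial cost plus 36 months of its monthly cost. A minimal sketch that recomputes them from the Table 6-3 line items (the dictionaries simply transcribe the table; the names are illustrative):

```python
# Line items from Table 6-3 as (initial cost, monthly cost) in dollars.
PRIVATE_DATA_CENTER = {
    "Windows servers": (16_000, 0),
    "Hadoop servers": (8_000, 0),
    "Database servers": (2_000, 0),
    "Utilities and building": (0, 1_000),
    "Windows software licenses": (4_800, 0),
    "Oracle software licenses": (1_000, 0),
    "Software support costs": (0, 18),
    "Labor costs": (3_125, 9_375),
}
AWS = {
    "Windows servers": (0, 1_218),
    "Hadoop servers": (0, 648),
    "Database servers": (0, 421),
    "24/7 support": (0, 100),
    "Labor costs": (3_125, 3_125),
}


def straight_line_total(items, months=36):
    """Initial costs plus `months` of monthly costs, with no discounting or depreciation."""
    initial = sum(cost[0] for cost in items.values())
    monthly = sum(cost[1] for cost in items.values())
    return initial + months * monthly


print(f"Private data center: ${straight_line_total(PRIVATE_DATA_CENTER):,}")
print(f"AWS: ${straight_line_total(AWS):,}")
```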

There are two factors left out of this straight-line cost analysis. The costs do not take into account the depreciation deduction for the purchased hardware and software in the private data center. Nor do they include the accounting concept of the time value of money, expressed as present value. In simplest terms, present value accounts for how much money the business could make if it invested the money in an alternative project or solution instead. The net effect of this calculation is that the longer a business can delay a cost to some point in the future, the lower the overall cost of the project. This means that many of the upfront software licensing and hardware costs incurred in the private data center are more expensive to the business because they must be paid at the very beginning of the project. The AWS usage costs, by comparison, are incurred later, over the life of the project. These factors can have a significant effect on how the costs of a project are viewed from an accounting perspective.

A large number of college courses and books are dedicated to calculating present value, depreciation, and financial analysis. Fortunately, present value functions are built into Microsoft Excel and many other tools. To calculate the present value and depreciation in this example, we assume that the business can achieve a 10% annual return on its investments and that depreciation savings on purchased hardware and software, at a 35% corporate tax rate, roughly equal $309.16 per month. Performing this calculation in Excel produces the following cost estimates for the traditional data center and AWS:

Depreciation savings per month: ( $31,800 Hardware and Software * 35% Corporate Tax Rate ) / 36 Months = $309.16 per month

Traditional Data Center: $34,925 - (PV(10%/12, 36, $10,393)) + (PV(10%/12, 36, $309.16)) = $347,435.66

AWS: $3,125 - (PV(10%/12, 36, $5,512)) = $173,948.69
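For readers working outside Excel, here is a minimal Python sketch of the same calculation. `excel_pv` is a hypothetical helper that reimplements Excel’s PV(rate, nper, pmt) for end-of-period payments and no future value; like Excel, it returns a negative number for a positive payment, which is why the formulas above subtract PV(...) for ongoing costs and add PV(...) for the depreciation savings. It reproduces the figures above to within about a dollar; the small gap comes from the text rounding the depreciation savings to $309.16.

```python
def excel_pv(rate, nper, pmt):
    """Mimic Excel's PV(rate, nper, pmt) for end-of-period payments and no future value.

    Like Excel, the result is negative for a positive payment.
    """
    if rate == 0:
        return -pmt * nper
    return -pmt * (1 - (1 + rate) ** -nper) / rate


MONTHLY_RATE = 0.10 / 12  # assumed 10% annual return on investments
MONTHS = 36

# ($31,800 of hardware and software * 35% tax rate) / 36 months, about $309.17;
# the text shows $309.16.
depreciation_savings = 31_800 * 0.35 / MONTHS

private_dc = (34_925
              - excel_pv(MONTHLY_RATE, MONTHS, 10_393)
              + excel_pv(MONTHLY_RATE, MONTHS, depreciation_savings))
aws = 3_125 - excel_pv(MONTHLY_RATE, MONTHS, 5_512)

print(f"Traditional data center: ${private_dc:,.2f}")
print(f"AWS: ${aws:,.2f}")
print(f"Savings: ${private_dc - aws:,.2f}")
```

Note that discounting narrows the gap compared with the straight-line totals, because the private data center’s large upfront spend is not discounted while its later monthly costs are.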

The total cost savings in this example work out to $173,486.97, even after accounting for depreciation. Many of the internal debates in organizations comparing AWS costs to private data center costs leave out labor, building, and utility costs, the financial analysis, and many of the other factors in our example. IT managers tend to focus on the costs that are readily available and easy to gather, such as hardware and software acquisition costs. Leaving the other costs out of the analysis makes AWS appear significantly more expensive. If only acquisition costs were compared in the example, AWS would become more expensive for the database in about six months and for the web servers in about two years. This is why it is critical to do this type of full analysis, covering all the major costs of the traditional data center, when comparing it to AWS.

This example is still rather simple, but can be useful for developing a quick analysis of a project in comparing infrastructure costs. Other factors that are not included are infrastructure growth to meet future application demand, storage costs, bandwidth, networking gear, and various other factors that go into projects. Amazon has a number of robust online tools that can help you do a more detailed cost analysis. The Amazon Total Cost of Ownership (TCO) tool can be helpful in this area because it includes many of these additional cost factors.

Existing Infrastructure and AWS

The example assumes that new hardware and software needs to be purchased for a project. However, many large organizations have already made large investments in their current infrastructure and data center. When AWS services are compared to these already sunk costs in existing software licenses, hardware, and personnel, they will, of course, be a more expensive option for the organization. Justifying the additional costs of AWS to management when infrastructure already exists for a project can be challenging. The cost comparison in these situations does not start to produce real savings for an organization until the existing infrastructure needs to be upgraded or the data center has to be expanded to accommodate new projects.

Optimizing AWS Resources to Reduce Project Costs

Many of the examples in this book have used the default region your account was created in and on-demand pricing for AWS services. But in reality, many of the AWS products do not have one single price. In many cases, the costs vary based on the region and type of service used. Now that we understand the cost comparisons between a traditional data center and AWS, let’s review what options are available in AWS to meet application availability, performance, and cost constraints.

Amazon Regions

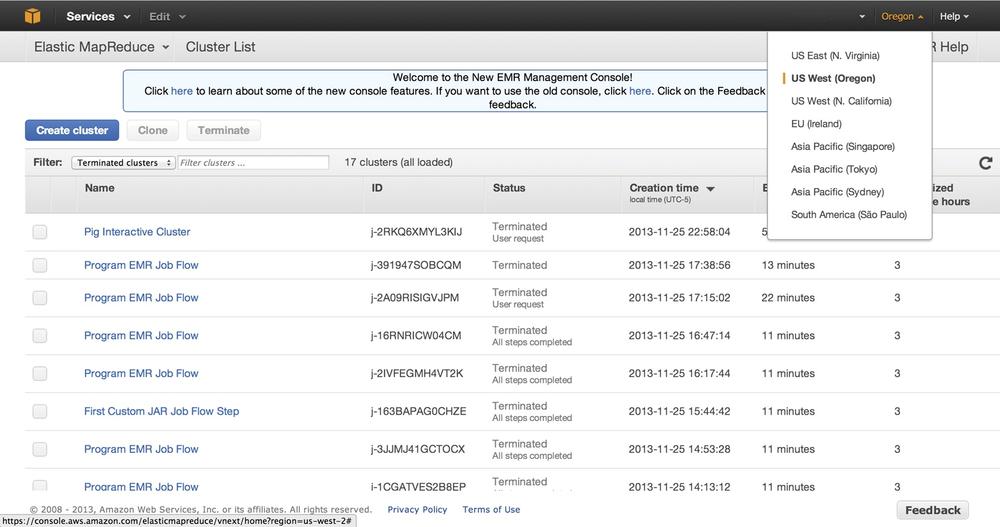

AWS has data centers located all around the world, and Amazon groups them into geographic regions. Currently, the default region when you create a new account is US West (Oregon). Figure 6-1 shows a number of the AWS regions you can choose when creating new Job Flows or EC2 instances, or when accessing data stored in S3.

Amazon attempts to keep similar AWS offerings and software versions in each of its regions; however, there are differences in each region and you should review the AWS regions and endpoints documentation to make sure the region in which the application runs supports the features and functions it needs. AWS Data Pipeline is one example of an AWS service covered in Chapter 3 that is currently only available in the US East region.

The cost of an AWS service will vary based on the AWS region in which the service is located. Table 6-4 shows the differences in these costs (at the time of writing) of some of the AWS services used in the earlier chapters.

| AWS service | US West (Oregon) | US West (N. California) | EU (Ireland) | Asia Pacific (Tokyo) |
| --- | --- | --- | --- | --- |
| EMR EC2 large | $0.300 per hour | $0.320 per hour | $0.320 per hour | $0.410 per hour |
| EC2 Linux large | $0.240 per hour | $0.260 per hour | $0.260 per hour | $0.350 per hour |
| S3 first 1 TB/month | $0.095 per GB | $0.105 per GB | $0.095 per GB | $0.100 per GB |
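The percentage comparison in the next paragraph is easy to reproduce for every cell of Table 6-4. This sketch (illustrative names, historical prices) computes each region’s premium over US West (Oregon):

```python
# Prices transcribed from Table 6-4 (at the time of writing; check current pricing).
REGIONS = ("US West (Oregon)", "US West (N. California)", "EU (Ireland)", "Asia Pacific (Tokyo)")
TABLE_6_4 = {
    "EMR EC2 large ($/hour)": (0.300, 0.320, 0.320, 0.410),
    "EC2 Linux large ($/hour)": (0.240, 0.260, 0.260, 0.350),
    "S3 first 1 TB/month ($/GB)": (0.095, 0.105, 0.095, 0.100),
}


def premium_over_baseline(prices, baseline_index=0):
    """Percent difference of each region's price from the baseline region (Oregon by default)."""
    base = prices[baseline_index]
    return {region: 100.0 * (price - base) / base for region, price in zip(REGIONS, prices)}


for service, prices in TABLE_6_4.items():
    premiums = premium_over_baseline(prices)
    print(service, {region: f"{pct:+.0f}%" for region, pct in premiums.items()})
```

EC2 Linux large in Tokyo comes out about 46% above Oregon, and EMR large about 37%.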

Looking at these cost differences, you will note there is only a small cost difference between the regions for each of these services. Though the differences look small, the percentage increase can be significant. For example, running the same EC2 instance in Tokyo instead of Oregon will be a 46 percent increase per hour. Let’s review the cost of a small data analysis app running in each region using the following AWS services:

- 10 large EC2 node Amazon EMR cluster

- 1 terabyte of S3 storage

Looking at this small example application using the AWS Simple Monthly Calculator, you can see that the small difference in the costs in each region for an app can cause the real costs to vary by thousands of dollars per year, simply depending on the region in which the application is run. Table 6-5 shows how the costs can add up simply by changing AWS regions.

| US West (Oregon) | US West (N. California) | EU (Ireland) | Asia Pacific (Tokyo) |
| --- | --- | --- | --- |
| $2,519.59 per month | $2,691.57 per month | $2,680.63 per month | $3,410.77 per month |
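Annualizing the differences in Table 6-5 shows what “thousands of dollars per year” means in practice. A small sketch using the table’s monthly totals (the function name is illustrative):

```python
# Monthly totals from Table 6-5 for the 10-node EMR cluster plus 1 TB of S3 storage.
TABLE_6_5 = {
    "US West (Oregon)": 2519.59,
    "US West (N. California)": 2691.57,
    "EU (Ireland)": 2680.63,
    "Asia Pacific (Tokyo)": 3410.77,
}


def extra_cost_per_year(monthly_costs):
    """Annual amount each region costs beyond the cheapest region in the table."""
    cheapest = min(monthly_costs.values())
    return {region: 12 * (cost - cheapest) for region, cost in monthly_costs.items()}


for region, extra in extra_cost_per_year(TABLE_6_5).items():
    print(f"{region}: ${extra:,.2f} more per year than the cheapest region")
```

With these figures, Tokyo costs about $10,700 more per year than Oregon, and N. California and Ireland roughly $2,000 more.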

Of course, cost is only one factor to consider in picking a region for the application. Performance and availability could be more important factors that may outweigh some of the cost differences. Also, where your data is actually located (aka data locality), the type of data you are processing, and what you plan to do with your results are other key factors to include in selecting a region. The time it takes to transfer your data to the US, or country-specific rules like the EU Data Protection Directive, may make it prohibitive for you to transfer your data to the cheapest AWS region. All of these factors need to be considered before you just pick the lowest cost region.

Amazon Availability Zones

Amazon also has several availability zones within each region. Zones are separate data centers in the same region. Amazon regions are completely isolated from one another, and the failure in one region does not affect another—this is not necessarily the case for zones. These items are important in how you design your application for redundancy and failure. For mission-critical applications, you should run your application in multiple regions and multiple zones in each region. This will allow the application to continue to run if a region or a zone experiences issues. This is a rare, but not completely unheard of, event. The most recent high-profile outage of an AWS data center was the infamous Christmas Eve 2012 outage that affected Netflix servers in the US East (N. Virginia) region.

Maintaining the availability of your app and continuous data processing is important. Zones and regions may seem less important because you aren’t running the data centers yourself. However, they are useful concepts to understand, because running an application in multiple regions or availability zones can increase the overall AWS charges incurred by the application. For example, if an organization is already using AWS services for a number of other projects in one region, it may make sense to use that same region for new AWS projects. Amazon currently charges $0.02 per gigabyte to move S3 data from US West (Oregon) to another Amazon region. Other services, like Amazon’s Relational Database Service (RDS), have higher charges for multi-availability zone deployments.

EC2 and EMR Costs with On Demand, Reserve, and Spot Instances

Many of the earlier examples focused on EC2 and EMR instance sizes. Amazon also has a number of pricing models depending on a project’s instance availability needs and whether an organization is willing to pay some upfront costs to lower hourly usage costs. Amazon offers the following pricing models for EC2 and EMR instances:

- Pay as you go: on-demand

- With on-demand instances, Amazon allows you to use EC2 and EMR instances in its data center without any upfront costs. Costs are only incurred as resources are used. If the application being built has a limited lifespan, or a proof of concept needs to be developed to demonstrate the value of a potential project, on-demand instances may be the best choice.

- Reserve instances

- With reserve instances, an upfront cost is paid for instances that will be used on a project. This is very similar to the traditional data center model, but can be a good choice to match an organization’s internal annual budgeting and purchasing processes. A one-year or three-year agreement with Amazon can reserve a number of instances. Purchasing reserve instances lowers the hourly usage rate in some cases to as low as 30% of what would be paid for on-demand instances. Reserve instances can greatly lower costs if the application is long-term and the EC2 and EMR capacity needs are well known over a number of months or years.

- Spot instances

- Spot instances allow an organization to bid on the spare EC2 or EMR compute capacity available within AWS at a given time. Using spot instances can significantly lower the cost of an application’s operation if the application can gracefully handle instance failure and has flexibility in how long it takes to complete a Job Flow or the operations inside an EC2 instance. Spot instances are launched when the current spot price is equal to or less than your bid price; if the spot price later rises above your bid price, the spot instances are terminated. Task instances from the EMR examples are perfect candidates for spot instances in Amazon EMR Job Flows because they do not hold persistent data and can be terminated without causing a Job Flow to fail.
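The spot launch-and-terminate rule can be illustrated with a toy simulation. This is a simplified model under stated assumptions, not how AWS implements spot pricing or billing: the price series is hypothetical, and the sketch assumes each running hour is charged at that hour’s spot price.

```python
from dataclasses import dataclass


@dataclass
class SpotRun:
    hours_running: int
    cost: float
    interrupted: bool


def simulate_spot(hourly_spot_prices, bid_price):
    """Simulate one spot instance against a series of hourly spot prices.

    Simplifying assumptions for this sketch: the instance launches in the first hour the
    spot price is at or below the bid, it is terminated (and not relaunched) the first time
    the price rises above the bid, and each hour it runs is charged at that hour's spot price.
    """
    hours, cost, started = 0, 0.0, False
    for price in hourly_spot_prices:
        if price <= bid_price:
            started = True
            hours += 1
            cost += price
        elif started:
            return SpotRun(hours, cost, interrupted=True)
    return SpotRun(hours, cost, interrupted=False)


# Hypothetical hourly spot prices, for illustration only; $0.01 is the bid used in Figure 6-4.
prices = [0.015, 0.009, 0.008, 0.010, 0.012, 0.007]
print(simulate_spot(prices, bid_price=0.01))
```

With a $0.01 bid, the instance waits out the first hour, runs for three hours, and is terminated when the price reaches $0.012, which is why work placed on spot capacity must tolerate interruption.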

Reserve Instances

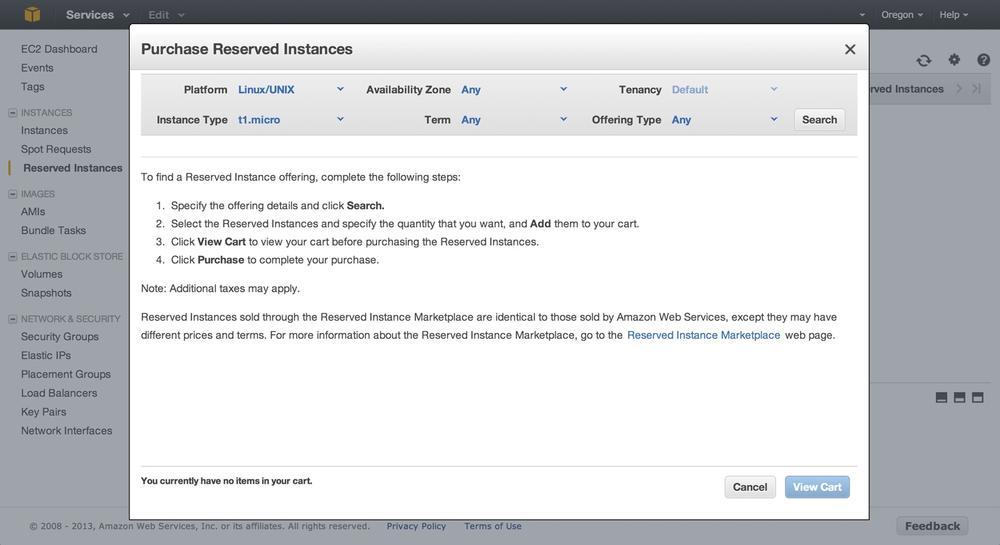

If an application will run for an extended period of time every month, using reserved instances is probably the most cost-effective option for an application. The hourly charges are lower, and reserve instances are not subject to early termination like spot instances. Reserve instances are purchased directly through the EC2 dashboard (see Figure 6-2).

There are a number of key items to be aware of when you are purchasing reserve instances. Figure 6-2 shows purchasing reserve instances in a specific zone in a specific region. This is important because when a Job Flow is created, it needs to use instances from the same availability zone in order to use the purchased reserve instances. If a different availability zone is chosen or you allow Amazon to choose one for you, the Job Flow will be charged the on-demand rate for any EC2 instances used in EMR.

Currently, the only ways to specify the availability zone when launching a new cluster, or Job Flow, are through the Hardware Configuration section when creating a new cluster in the console, the Elastic MapReduce command-line tool, or the AWS SDK. Example 6-1 shows how to create a Job Flow from the command line. The --availability-zone option specifies the zone in which the Job Flow is created so that the reserved instances can be used.

```
hostname$ elastic-mapreduce --create --name "Program EMR Job Flow Reserve" \
    --num-instances 3 --availability-zone us-west-2a \
    --jar s3n://program-emr/log-analysis.jar \
    --main-class com.programemr.LogAnalysisDriver \
    --arg "s3n://program-emr/sample-syslog.log" \
    --arg "s3n://program-emr/run0"
Created job flow j-2ZBQDXX8BQQW2
```

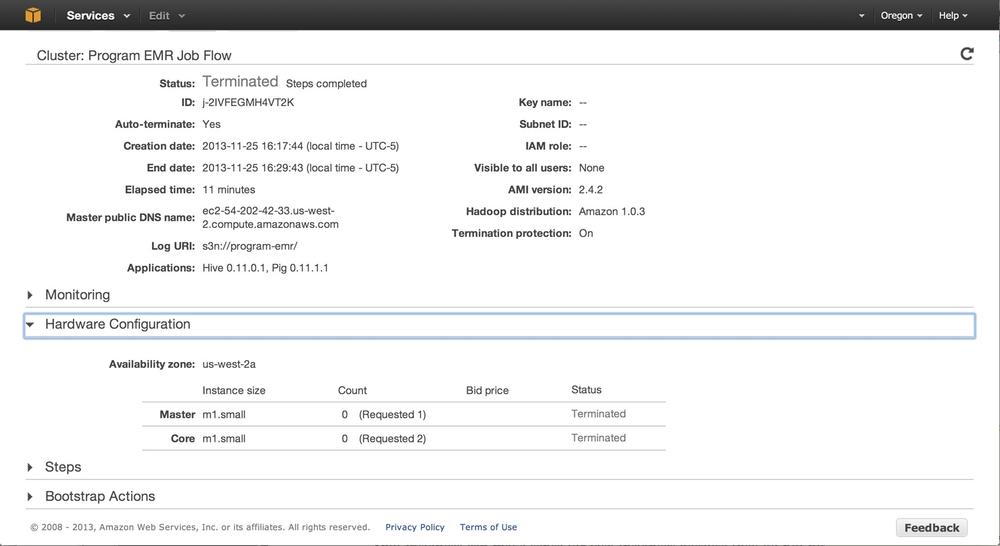

The exact types of instances used by a Job Flow can be determined by running the command-line tool with the --describe option. The same information is available in the Hardware Configuration section of the Cluster Details page in the EMR Console. Figure 6-3 shows information on the cluster’s instance groups, bid prices, and instance counts.
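The same zone-pinned Job Flow can also be requested through the AWS SDK. The following Python sketch assumes boto3’s EMR client, whose `run_job_flow` call accepts an `Instances` dictionary with a `Placement` entry. The instance type (`m1.large`) and step name are assumptions for illustration, and the type should match the reserved instances you purchased. A real request will likely also need settings such as a release label and IAM roles, which are omitted here, and the `s3n://` URIs are copied from the command-line example and may need adjusting for current EMR versions.

```python
def create_zone_pinned_job_flow(emr_client, zone="us-west-2a", instance_type="m1.large"):
    """Request a three-instance Job Flow in a single availability zone.

    emr_client is expected to be boto3.client("emr"); any object with a compatible
    run_job_flow method works, which keeps the sketch testable without AWS credentials.
    """
    response = emr_client.run_job_flow(
        Name="Program EMR Job Flow Reserve",
        Instances={
            "MasterInstanceType": instance_type,  # assumed; match your reservation
            "SlaveInstanceType": instance_type,
            "InstanceCount": 3,
            # Pinning the zone lets these instances match reserved instances
            # purchased in that zone.
            "Placement": {"AvailabilityZone": zone},
        },
        Steps=[{
            "Name": "Log analysis",  # hypothetical step name
            "ActionOnFailure": "TERMINATE_CLUSTER",
            "HadoopJarStep": {
                "Jar": "s3n://program-emr/log-analysis.jar",
                "MainClass": "com.programemr.LogAnalysisDriver",
                "Args": ["s3n://program-emr/sample-syslog.log", "s3n://program-emr/run0"],
            },
        }],
    )
    return response["JobFlowId"]


# Usage against AWS (requires boto3 and credentials):
# import boto3
# job_flow_id = create_zone_pinned_job_flow(boto3.client("emr", region_name="us-west-2"))
```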

Spot Instances

Spot instances can make sense for task instances because they do not hold persistent data like the core and master nodes of the EMR cluster. The termination of a task node will not cause the entire Job Flow to terminate.

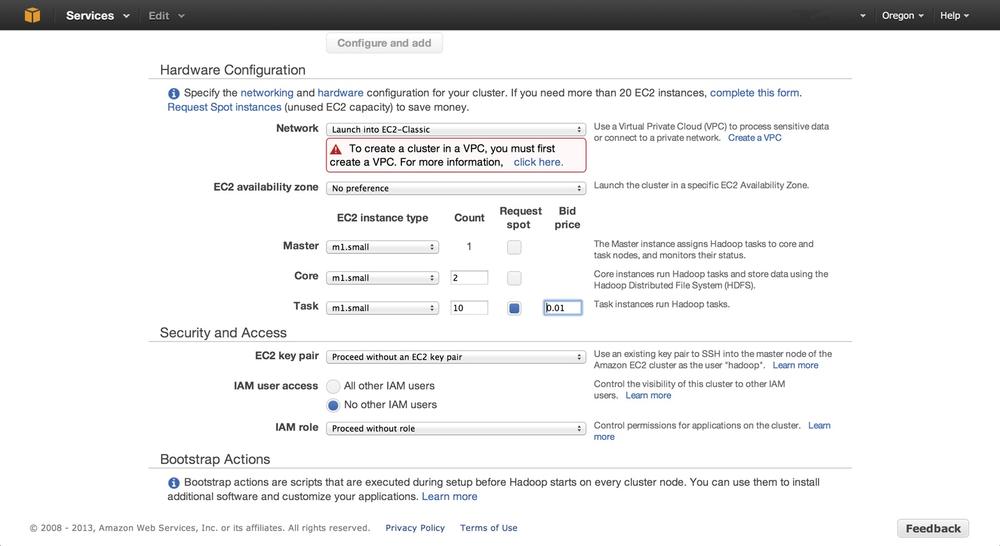

Technically, you can select spot instances for any of the nodes in the cluster, through the EMR Management Console, the CLI, or the AWS SDK. Before bidding for spot instances, you can check the current price on the AWS spot instance page for your region. Figure 6-4 shows using spot instances for the task nodes with a bid price of $0.01.

Additional spot instances—or really any instance type—can be added to a Job Flow that is currently running. This may be useful if an application is getting behind and additional capacity is needed to reduce a backlog of work. This functionality is available using the Resize button on the Cluster Details page of a running Job Flow, the command-line tool, or the AWS API. Here is an example of adding five spot task instances to a running Job Flow:

```
./elastic-mapreduce --jobflow JobFlowId \
    --add-instance-group task --instance-type m1.small \
    --instance-count 5 --bid-price 0.01
```
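An equivalent call through boto3’s EMR client is `add_instance_groups`. This sketch mirrors the CLI command above; the group name is hypothetical, and the client is passed in so the function can be exercised without AWS credentials. The EMR API takes the bid as a string in dollars per hour.

```python
def add_spot_task_group(emr_client, job_flow_id, count=5, instance_type="m1.small", bid_price="0.01"):
    """Add a group of spot task instances to a running Job Flow.

    emr_client is expected to be boto3.client("emr"); any object with a compatible
    add_instance_groups method works.
    """
    response = emr_client.add_instance_groups(
        JobFlowId=job_flow_id,
        InstanceGroups=[{
            "Name": "Spot task nodes",  # hypothetical group name
            "InstanceRole": "TASK",     # task nodes hold no persistent data
            "Market": "SPOT",
            "BidPrice": bid_price,
            "InstanceType": instance_type,
            "InstanceCount": count,
        }],
    )
    return response["InstanceGroupIds"]
```

In practice you would pass `boto3.client("emr")` and the ID of a running Job Flow, the same value the CLI expects in place of `JobFlowId`.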

Reducing AWS Project Costs

There are a number of key areas you can focus on to reduce the execution costs of an AWS project using EC2 and EMR. Keeping the following items in mind during development and operation can reduce the monthly AWS charges incurred by an application.

EMR and EC2 usage billed by the hour

One of the quirks in how AWS charges for services is that EC2 and EMR usage is billed by the hour. So if an application starts a test on a 10-instance EMR cluster that fails immediately, with an actual runtime of only one minute, you will still be charged for 10 hours of usage: one hour for each instance that ran for one minute. If the application is started again 10 minutes later, the newly running instances cannot reclaim the time remaining in that hour, and new charges are incurred. To reduce this effect, you can do the following on your project:

- Use the minimum cluster size when developing an application in AWS. The application can be scaled up once it is ready to launch for production usage or load testing.

-

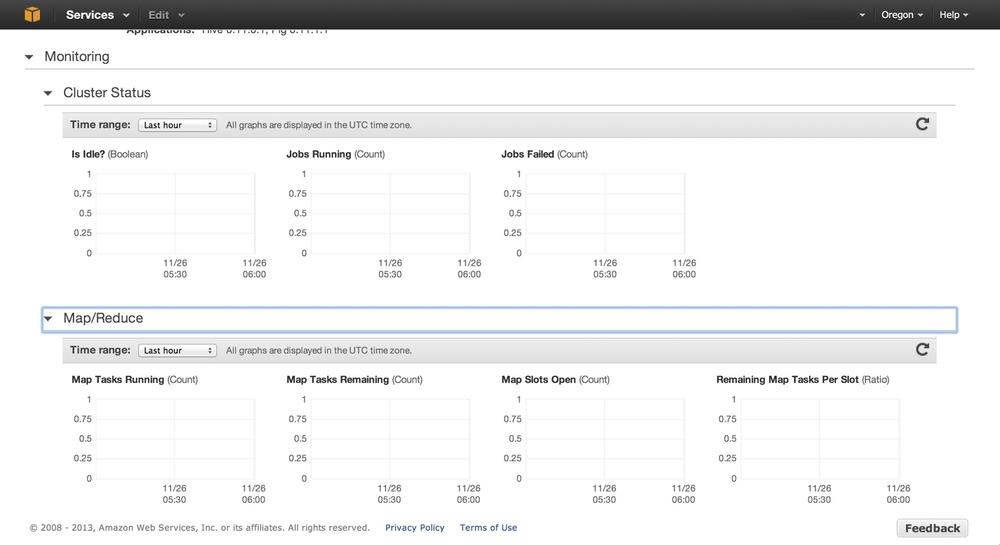

Amazon EMR allows Job Flow usage to be monitored through its monitoring tool CloudFront. There is CloudFront Monitoring for Job Flows in the

Monitoringsection of the Cluster Details page, as shown in Figure 6-5. You can review Job Flow usage and verify how much of your Job Flow activity is processing data instead of waiting for data to arrive. This allows the application to be monitored over time so resources can be increased or decreased as load varies over the life of the application.
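To see how quickly hourly rounding adds up, here is a small sketch of the billing rule described at the start of this section. It models only that hourly rule; AWS billing granularity has changed since this was written, so check the current terms before relying on it. The function name is illustrative.

```python
import math


def billed_instance_hours(instance_count, runtime_minutes):
    """Instance-hours billed under an hourly rule: each instance's runtime rounds up to whole hours.

    A relaunched cluster is a separate call, so it starts new billing hours
    even if an earlier cluster ended only minutes before.
    """
    if runtime_minutes <= 0:
        return 0
    return instance_count * math.ceil(runtime_minutes / 60)


first_try = billed_instance_hours(10, 1)    # fails after one minute
retry = billed_instance_hours(10, 45)       # relaunched 10 minutes later, runs 45 minutes
print(first_try, retry, first_try + retry)  # 10 10 20
```

Each instance did 46 minutes of work in total, yet the two short runs are billed as 20 instance-hours, which is why testing on a minimal cluster matters.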

Tip

Sizing an Amazon EMR cluster to no more nodes than the expected data volume requires can lead to significant cost savings. An Amazon EMR cluster can be resized after it has been started, but you will typically only want to increase the cluster size. Reducing the number of data (core) nodes in an Amazon EMR cluster can lead to Job Flow failure or data loss. For more information on resizing an Amazon EMR cluster, review Amazon’s article on resizing a running cluster.

Cost efficiencies with reserved and spot instances

Earlier in the chapter, we discussed the different instance purchasing options and how these instance types could be used by the application. To help users better understand the costs with different execution scenarios, Amazon provides a general guide to help you decide when purchasing reserved instances would be the right choice to lower the operational costs of running AWS services.

Using the small data analysis application discussed earlier in the chapter, let’s review several purchasing scenarios for running the application 50% of the month with the following AWS services:

- 10 large EC2 node Amazon EMR cluster

- 1 terabyte of S3 storage

For on-demand instances, we can use the AWS Simple Monthly Calculator to calculate the monthly costs incurred. There are no upfront costs for on-demand instances, only monthly charges for instance usage.

If you are willing to pay an upfront cost to get reserved EC2 capacity, you can get a lower hourly charge per EC2 instance. This lowers the monthly utilization costs for instances. Using reserved instances and assuming we will fall under Amazon’s heavy utilization category, you can see the costs start to come down, even with only 50% monthly usage, in Table 6-6.

We gain the greatest cost savings, however, by combining reserved instances and spot instances. If your application has fluctuating loads, or there is flexibility in the time allowed to complete the work in the Job Flows, a portion of the capacity can be allocated as spot instances. At current spot prices, the hourly cost of this spot capacity is comparable to that of reserved instances, without the upfront cost. Of course, the benefits of this structure depend on the availability and price of spot instances. Table 6-6 compares the costs of the same application under each of these purchasing options.

| AWS on-demand service | Costs | AWS reserved service | Costs | AWS reserved and spot service | Costs |
|---|---|---|---|---|---|
| Total for 3 years | $35,042.76 | Total for 3 years | $25,821.92 | Total for 3 years | $19,701.28 |
| 10 EC2 large upfront cost | $0 | 10 EC2 large upfront cost | $10,280.00 | 5 EC2 large upfront cost | $5,140.00 |
| 10 EC2 Linux large | $878.41 | 10 EC2 reserved Linux large | $336.72 | 5 EC2 reserved Linux large | $168.36 |
| 0 EC2 Linux spot large | $0 | 0 EC2 Linux spot large | $0 | 5 EC2 Linux spot large | $141.12 |
| S3 1 TB | $95.00 | S3 1 TB | $95.00 | S3 1 TB | $95.00 |
| Total per month | $973.41 | Total per month | $431.72 | Total per month | $404.48 |
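The totals in Table 6-6 follow a simple formula: each monthly total is the sum of its line items, and each 3-year total is the upfront payment plus 36 of those monthly totals. The short sketch below recomputes the table’s totals from its line items so you can plug in your own figures. The dictionary and function names are illustrative only and are not part of any AWS tool.

```python
from decimal import Decimal

# Line items from Table 6-6, in US dollars. Decimal avoids float rounding on currency.
# Monthly items are ordered: reserved or on-demand EC2, spot EC2, S3 storage.
SCENARIOS = {
    "on-demand": {
        "upfront": Decimal("0"),
        "monthly": [Decimal("878.41"), Decimal("0"), Decimal("95.00")],
    },
    "reserved": {
        "upfront": Decimal("10280.00"),
        "monthly": [Decimal("336.72"), Decimal("0"), Decimal("95.00")],
    },
    "reserved + spot": {
        "upfront": Decimal("5140.00"),
        "monthly": [Decimal("168.36"), Decimal("141.12"), Decimal("95.00")],
    },
}

TERM_MONTHS = 36  # length of the 3-year reserved instance term


def monthly_total(items):
    """Sum the EC2 and S3 charges for one month."""
    return sum(items, Decimal("0"))


def term_total(upfront, items, months=TERM_MONTHS):
    """Upfront payment plus the monthly charge for every month of the term."""
    return upfront + monthly_total(items) * months


for name, s in SCENARIOS.items():
    print(f"{name:16} per month ${monthly_total(s['monthly']):>9}  "
          f"3 years ${term_total(s['upfront'], s['monthly']):>10}")
```

Swapping in prices from the AWS Simple Monthly Calculator for your own instance mix gives a quick first comparison before building a full estimate.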

Tip

In general, if an application is running and consuming EC2 or EMR hours for more than 40% of the month, it makes sense to start purchasing reserved instances to save on monthly charges. Amazon lists the break-even points between on-demand instances and the various reserved utilization levels on the AWS EC2 website.
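You can estimate a break-even point like this yourself. The sketch below uses the EC2 figures from Table 6-6 and rests on two assumptions: on-demand cost scales linearly with the hours used, and the reserved cost (upfront payment spread over 36 months plus the monthly charge) is fixed, as with heavy-utilization reservations that bill every hour of the term. The function name is hypothetical, and EMR service fees are not modeled.

```python
# EC2 figures from Table 6-6 for 10 large instances, in US dollars.
ON_DEMAND_AT_50_PCT = 878.41   # on-demand charge for half a month of usage
RESERVED_UPFRONT = 10_280.00   # one-time payment for the 3-year term
RESERVED_MONTHLY = 336.72      # reserved charge per month
TERM_MONTHS = 36


def break_even_utilization(on_demand_full_month, upfront, reserved_monthly,
                           term_months=TERM_MONTHS):
    """Fraction of the month above which reserved capacity costs less.

    Assumes on-demand cost is proportional to hours used, while the
    reserved cost (amortized upfront + monthly charge) does not change
    with usage.
    """
    reserved_effective = upfront / term_months + reserved_monthly
    return reserved_effective / on_demand_full_month


# Scale the 50% on-demand figure up to a full month of usage.
on_demand_full = ON_DEMAND_AT_50_PCT / 0.5
u = break_even_utilization(on_demand_full, RESERVED_UPFRONT, RESERVED_MONTHLY)
print(f"Break-even utilization: {u:.0%}")  # prints "Break-even utilization: 35%"
```

Under these assumptions the break-even lands in the same neighborhood as the 40% rule of thumb; Amazon’s published break-even points remain the authoritative reference for a given instance type and term.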

Project storage costs

Data analysis projects tend to consume vast amounts of storage. Amazon offers several options for retaining data, classified as standard, reduced redundancy, and Glacier storage. Let’s review the benefits and costs of each option.

- Standard storage

- This is the default for anything stored in S3. Standard storage items are replicated within the same facility and across several availability zones, so the data is very unlikely to be lost. This type of storage is a good choice when S3 holds the primary copy of data that is not stored durably anywhere else, when the data lives only in the cloud, or when the cost and time of reloading the data into S3 would make a loss too expensive to sustain. Standard storage is, however, the most expensive S3 storage option.

- Reduced redundancy storage

- Data can also be stored in S3 with less redundancy. Standard storage makes three copies of each object in a region, whereas reduced redundancy storage makes only two, which still provides fairly robust durability. Reduced redundancy can be set on any object uploaded to S3. It may be a good choice if the data is stored durably somewhere else or has a limited lifespan. Reduced redundancy storage is roughly 25% cheaper than standard storage.

- Glacier storage

- Glacier is Amazon’s data archival service. Data stored in Glacier may take several hours to retrieve, so it is best for rarely accessed data, such as data that has already been processed but must be retained for compliance purposes or for generating reports at a later point in time. Glacier is Amazon’s lowest-cost storage option at only $0.01 per gigabyte per month.

Data life cycles

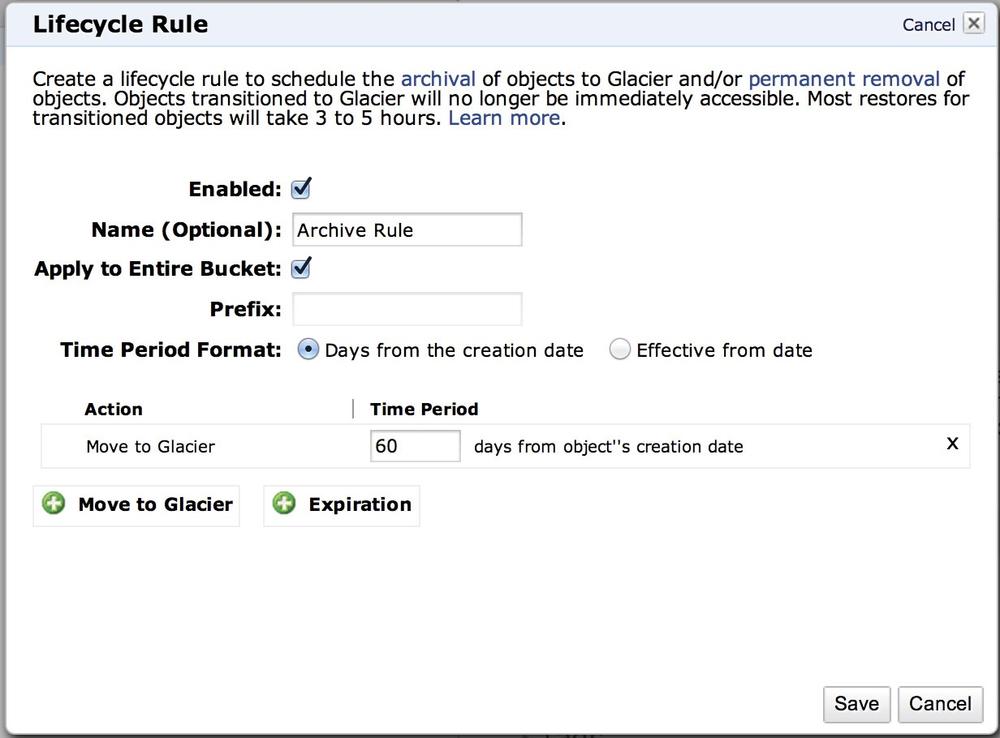

In many projects, you process your data and then need to retain it for a number of years, often for compliance or reporting reasons. Keeping this data immediately available on standard storage can become very expensive. In S3, you can set up a data life cycle policy on any bucket; with a life cycle rule, Amazon automatically deletes your data or moves it to Glacier after a predefined, configurable time period. Figure 6-6 shows the configuration setting for a retention period of 60 days.
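The same kind of rule can also be expressed as data rather than through the console. The sketch below builds a lifecycle configuration that moves objects to Glacier after 60 days and, as an assumption matching a one-year retention policy, deletes them after 365 days. The dictionary shape follows the `LifecycleConfiguration` argument of boto3’s `put_bucket_lifecycle_configuration` call; the helper function, rule ID, and bucket name are hypothetical, and the code itself only builds and prints the configuration.

```python
import json


def glacier_lifecycle_rule(transition_days=60, expiration_days=None, prefix=""):
    """Build an S3 lifecycle configuration that archives objects to Glacier.

    The returned dict has the shape boto3's put_bucket_lifecycle_configuration
    expects for its LifecycleConfiguration argument. The rule ID is arbitrary.
    """
    rule = {
        "ID": f"archive-after-{transition_days}-days",
        "Filter": {"Prefix": prefix},  # an empty prefix covers the whole bucket
        "Status": "Enabled",
        "Transitions": [{"Days": transition_days, "StorageClass": "GLACIER"}],
    }
    if expiration_days is not None:
        # Deleting data on or before the archive date would make the
        # transition pointless, so this helper rejects that combination.
        if expiration_days <= transition_days:
            raise ValueError("expiration_days must exceed transition_days")
        rule["Expiration"] = {"Days": expiration_days}
    return {"Rules": [rule]}


config = glacier_lifecycle_rule(transition_days=60, expiration_days=365)
print(json.dumps(config, indent=2))

# With AWS credentials configured, the dict could be applied like this
# ("my-analysis-bucket" is a placeholder name):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-analysis-bucket", LifecycleConfiguration=config)
```

Keeping the rule in code makes it easy to review and to apply the same retention policy consistently across buckets.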

Let’s look at an example of how this will save on project costs.

Consider an organization with a one-year data retention policy that receives one terabyte of data every month but only needs to generate a report at the end of each month. The organization keeps two months of data on live S3 storage in case the previous month’s report needs to be rerun. At the end of the year, 12 terabytes of data are stored at Amazon. Table 6-7 shows the cost comparisons of different storage policies and how you can achieve cost savings with a data life cycle policy while still keeping the most recent data immediately retrievable.
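The arithmetic behind a comparison like this can be sketched for the final month of the year. The sketch assumes the $95 per terabyte-month standard price from Table 6-6, the $0.01 per gigabyte-month Glacier price quoted earlier (with 1,000 GB per TB), and ignores request, transition, and retrieval fees, so its figures need not match Table 6-7. The function name is hypothetical.

```python
STANDARD_PER_TB = 95.00        # S3 standard, $ per TB-month (Table 6-6)
GLACIER_PER_TB = 0.01 * 1000   # Glacier at $0.01 per GB-month, assuming 1,000 GB per TB


def month_end_cost(total_tb, live_tb):
    """Monthly bill when the newest live_tb terabytes stay on standard S3
    storage and everything older has been moved to Glacier."""
    live = min(total_tb, live_tb)
    archived = total_tb - live
    return live * STANDARD_PER_TB + archived * GLACIER_PER_TB


# Month 12: 12 TB retained in total.
all_standard = month_end_cost(total_tb=12, live_tb=12)   # no life cycle rule
with_lifecycle = month_end_cost(total_tb=12, live_tb=2)  # two live months
print(f"All standard:   ${all_standard:,.2f} per month")
print(f"With lifecycle: ${with_lifecycle:,.2f} per month")
```

Under these assumptions the life cycle policy cuts the month-12 storage bill from $1,140.00 to $290.00, because ten of the twelve terabytes sit in Glacier.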

Amazon Tools for Estimating Your Project Costs

This chapter covered a number of key factors in evaluating a project in a traditional data center and on AWS services. Your organization’s application may differ greatly from the examples used in this chapter. The following Amazon tools were used to demonstrate many of the scenarios in this chapter and will be useful in estimating costs for your project:

- Amazon’s Total Cost of Ownership calculator

- This is useful in comparing AWS costs to traditional data center costs for an application.

- AWS Simple Monthly Calculator

- This helps develop monthly cost estimates for AWS services, and scenarios can be saved and sent to others in the organization.

Try these tools out on your project and see what solutions will work best for your organization.