CHAPTER 9

Bug Bounty Programs

This chapter unpacks the topic of bug bounty programs and presents both sides of the discussion—from a software vendor’s point of view and from a security researcher’s point of view. We discuss the topic of vulnerability disclosure at length, including a history of the trends that led up to the current state of bug bounty programs. For example, we discuss full public disclosure, from all points of view, allowing you to decide which approach to take. The types of bug bounty programs are also discussed, including corporate, government, private, public, and open source. We then investigate the Bugcrowd bug bounty platform, from the viewpoint of both a program owner (vendor) and a researcher. We also look at the interfaces for both. Next, we discuss earning a living finding bugs as a researcher. Finally, the chapter ends with a discussion of incident response and how to handle the receipt of vulnerability reports from a software developer’s point of view.

This chapter goes over the whole vulnerability disclosure reporting and response process.

In this chapter, we discuss the following topics:

• History of vulnerability disclosure

• Bug bounty programs

• Bugcrowd in depth

• Earning a living finding bugs

• Incident response

History of Vulnerability Disclosure

Software vulnerabilities are as old as software itself. Simply put, software vulnerabilities are weaknesses in either the design or implementation of software that may be exploited by an attacker. Not all bugs are vulnerabilities; we distinguish the two by exploitability. In 2015, Synopsys produced a report that showed the results of analyzing 10 billion lines of code. The study showed that commercial code had 0.61 defects per 1,000 lines of code (LoC), whereas open source software had 0.76 defects per 1,000 LoC; however, the same study showed that commercial code did better when compared against industry standards, such as OWASP Top 10.1 Since modern applications commonly have LoC counts in the hundreds of thousands, if not millions, a typical application may have dozens of security vulnerabilities. One thing is for sure: as long as we have humans developing software, we will have vulnerabilities. Further, as long as we have vulnerabilities, users are at risk. Therefore, it is incumbent on security professionals and researchers to prevent, find, and fix these vulnerabilities before an attacker takes advantage of them, harming the user.

First, an argument can be made for public safety. It is a noble thing to put the safety of others above oneself. However, one must consider whether or not a particular action is in the interest of public safety. For example, is the public safe if a vulnerability is left unreported and thereby unpatched for years and an attacker is aware of the issue and takes advantage of the vulnerability using a zero-day to cause harm? On the other hand, is the public safe when a security researcher releases a vulnerability report before giving the software vendor an opportunity to fix the issue? Some would argue that the period of time between the release and the fix puts the public at risk; others argue that it is a necessary evil, for the greater good, and that the fastest way to get a fix is through shaming the software developer. There is no consensus on this matter; instead, it is a topic of great debate. In this book, in the spirit of ethical hacking, we will lean toward ethical or coordinated disclosure (as defined later); however, we hope that we present the options in a compelling manner and let you, the reader, decide.

Vendors face a disclosure dilemma: the release of vulnerability information changes the value of the software to users. As Choi et al. have described, users purchase software and expect a level of quality in that software. When updates occur, some users perceive more value, others less value.2 To make matters worse, attackers make their own determination of value in the target, based on the number of vulnerabilities disclosed as well. If the software has never been updated, then an attacker may perceive the target is ripe for assessment and has many vulnerabilities. On the other hand, if the software is updated frequently, that may be an indicator of a more robust security effort on the part of the vendor, and the attacker may move on. However, if the types of vulnerabilities patched are indicative of broader issues—perhaps broader classes of vulnerability, such as remotely exploitable buffer overflows—then attackers might figure there are more vulnerabilities to find and it may attract them like bugs to light or sharks to blood.

Common methods of disclosure include full vendor disclosure, full public disclosure, and responsible disclosure. In the following sections, we describe these concepts.

NOTE These terms are controversial, and some may prefer “partial vendor disclosure” as an option to handle cases when proof of concept (POC) code is withheld and when other parties are involved in the disclosure process. To keep it simple, in this book we will stick with the aforementioned.

Full Vendor Disclosure

Starting around the year 2000, some researchers were more likely to cooperate with vendors and perform full vendor disclosure, whereby the researcher would disclose the vulnerability to the vendor fully and would not disclose to any other parties. There were several reasons for this type of disclosure, including fear of legal reprisals, lack of social media paths to widely distribute the information, and overall respect for the software developers, which led to a sense of wanting to cooperate with the vendor and simply get the vulnerability fixed.

This method often led to an unlimited period of time to patch a vulnerability. Many researchers would simply hand over the information, then wait as long as it took, perhaps indefinitely, until the software vendor fixed the vulnerability—if they ever did. The problem with this method of disclosure is obvious: the vendor has a lack of incentive to patch the vulnerability. After all, if the researcher was willing to wait indefinitely, why bother? Also, the cost of fixing some vulnerabilities might be significant, and before the advent of social media, there was little consequence for not providing a patch to a vulnerability.

In addition, software vendors faced a problem: if they patched a security issue without publicly disclosing it, many users would not patch the software. On the other hand, attackers could reverse-engineer the patch and discover the issue, using techniques we will discuss in this book, thus leaving the unpatched user more vulnerable than before. Therefore, the combination of problems with this approach led to the next form of disclosure: full public disclosure.

Full Public Disclosure

In response to the lack of timely action by software vendors, many security researchers decided to take matters into their own hands. There have been countless zines, mailing lists, and Usenet groups discussing vulnerabilities, including the infamous Bugtraq mailing list, which was created in 1993. Over the years, frustration built in the hacker community as vendors were not seen as playing fairly or taking the researchers seriously. In 2001, Rain Forest Puppy, a security consultant, made a stand and said that he would give a vendor only one week to respond before he would fully and publicly publish a vulnerability.3 In 2002, the infamous Full Disclosure mailing list was born and served as a vehicle for more than a decade, where researchers freely posted vulnerability details, with or without vendor notification.4 Some notable founders of the field, such as Bruce Schneier, blessed the tactic as the only way to get results.5 Other founders, like Marcus Ranum, disagreed by stating that we are no better off and less safe.6 Again, there is little to no agreement on this matter; we will allow you, the reader, to determine for yourself where you side.

There are obviously benefits to this approach. First, some have claimed the software vendor is most likely to fix an issue when shamed into doing it.7 On the other hand, the approach is not without issues. It leaves vendors little time to respond in an appropriate manner and may cause a vendor to rush and not fix the actual problem.8 Of course, those types of shenanigans are quickly discovered by other researchers, and the process repeats. Other difficulties arise when a software vendor is dealing with a vulnerability in a library they did not develop. For example, when OpenSSL had issues with Heartbleed, thousands of websites, applications, and operating system distributions became vulnerable. Each of those software developers had to quickly absorb that information and incorporate the fixed upstream version of the library in their application. This takes time, and some vendors move faster than others, leaving many users less safe in the meantime as attackers began exploiting the vulnerability within days of release.

Another advantage of full public disclosure is to warn the public so that people may take mitigating steps prior to a fix being released. This notion is based on the premise that black hats likely know of the issue already, so arming the public is a good thing and levels the playing field, somewhat, between attackers and defenders.

Through all of this, the question of public harm remains. Is the public safer with or without full disclosure? To fully understand that question, one must realize that attackers conduct their own research and may know about an issue and be using it already to attack users prior to the vulnerability disclosure. Again, we will leave the answer to that question for you to decide.

Responsible Disclosure

So far, we have discussed the two extremes: full vendor disclosure and full public disclosure. Now, let’s take a look at a method of disclosure that falls in between the two: responsible disclosure. In some ways, the aforementioned Rain Forest Puppy took the first step toward responsible disclosure, in that he gave vendors one week to establish meaningful communication, and as long as they maintained that communication, he would not disclose the vulnerability. In this manner, a compromise can be made between the researcher and vendor, and as long as the vendor cooperates, the researcher will as well. This seemed to be the best of both worlds and started a new method of vulnerability disclosure.

In 2007, Mark Miller of Microsoft formally made a plea for responsible disclosure. He outlined the reasons, including the need to allow time for a vendor, such as Microsoft, to fully fix an issue, including the surrounding code, in order to minimize the potential for too many patches.9 Miller made some good points, but others have argued that if Microsoft and others had not neglected patches for so long, there would not have been full public disclosure in the first place.10 To those who would make that argument, responsible disclosure is tilted toward vendors and implies that they are not responsible if researchers do otherwise. Conceding this point, Microsoft itself later changed its position and in 2010 made another plea to use the term coordinated vulnerability disclosure (CVD) instead.11 Around this time, Google turned up the heat by asserting a hard deadline of 60 days for fixing any security issue prior to disclosure.12 The move appeared to be aimed at Microsoft, which sometimes took more than 60 days to fix a problem. Later, in 2014, Google formed a team called Project Zero, aimed at finding and disclosing security vulnerabilities, using a 90-day grace period.13

Still, the hallmark of responsible disclosure is the threat of disclosure after a reasonable period of time. The Computer Emergency Response Team (CERT) Coordination Center (CC) was established in 1988, in response to the Morris worm, and has served for nearly 30 years as a facilitator of vulnerability and patch information.14 The CERT/CC has established a 45-day grace period when handling vulnerability reports, in that the CERT/CC will publish vulnerability data after 45 days, unless there are extenuating circumstances.15 Security researchers may submit vulnerabilities to the CERT/CC or one of its delegated entities, and the CERT/CC will handle coordination with the vendor and will publish the vulnerability when the patch is available or after the 45-day grace period.
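The timing rule described above can be sketched as a small calculation. This is only an illustration, assuming a fixed grace period and ignoring the "extenuating circumstances" the policy allows for; the function name and parameters are hypothetical and do not come from the CERT/CC.

```python
from datetime import date, timedelta
from typing import Optional

GRACE_DAYS = 45  # grace period described in the text


def publication_date(reported: date,
                     patch_released: Optional[date] = None,
                     grace_days: int = GRACE_DAYS) -> date:
    """Return the earlier of the patch release and the end of the grace period."""
    deadline = reported + timedelta(days=grace_days)
    # A patch that ships before the deadline allows earlier publication.
    if patch_released is not None and patch_released < deadline:
        return patch_released
    return deadline
```

Passing `grace_days=90` would model a longer window, such as the one the text attributes to Project Zero.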

No More Free Bugs

So far, we have discussed full vendor disclosure, full public disclosure, and responsible disclosure. All of these methods of vulnerability disclosure are free, whereby the security researcher spends countless hours finding security vulnerabilities and, for various reasons not directly tied to financial compensation, discloses the vulnerability for the public good. In fact, it is often difficult for a researcher to be paid under these circumstances without being construed as shaking down the vendor.

In 2009, the game changed. At the annual CanSecWest conference, three famous hackers, Charlie Miller, Dino Dai Zovi, and Alex Sotirov, made a stand.16 In a presentation led by Miller, Dai Zovi and Sotirov held up a cardboard sign that read “NO MORE FREE BUGS.” It was only a matter of time before researchers became more vocal about the disproportionate number of hours required to research and discover vulnerabilities versus the amount of compensation received by researchers. Not everyone in the security field agreed, and some flamed the idea publicly.17 Others, taking a more pragmatic approach, noted that although these three researchers had already established enough “social capital” to demand high consultant rates, others would continue to disclose vulnerabilities for free to build up their status.18 Regardless, this new sentiment sent a shockwave through the security field. It was empowering to some, scary to others. No doubt, the security field was shifting toward researchers over vendors.

Bug Bounty Programs

The phrase “bug bounty” was first used in 1995 by Jarrett Ridlinghafer at Netscape Communications Corporation.19 Along the way, iDefense (later purchased by VeriSign) and TippingPoint helped the bounty process by acting as middlemen between researchers and software vendors, facilitating the information flow and remuneration. In 2004, the Mozilla Foundation formed a bug bounty for Firefox.20 In 2007, the Pwn2Own competition was started at CanSecWest and served as a pivot point in the security field, as researchers would gather to demonstrate vulnerabilities and their exploits for prizes and cash.21 Later, in 2010, Google started its program, followed by Facebook in 2011, followed by the Microsoft Online Services program in 2014.22 Now there are hundreds of companies offering bounties on vulnerabilities.

The concept of bug bounties is an attempt by software vendors to respond to the problem of vulnerabilities in a responsible manner. After all, the security researchers, in the best case, are saving companies lots of time and money in finding vulnerabilities. On the other hand, in the worst case, the reports of security researchers, if not handled correctly, may be prematurely exposed, thus costing companies lots of time and money due to damage control. Therefore, an interesting and fragile economy has emerged as both vendors and researchers have interest and incentives to play well together.

Types of Bug Bounty Programs

Several types of bug bounty programs exist, including corporate, government, private, public, and open source.

Corporate and Government

Several companies, including Google, Facebook, Apple, and Microsoft, are running their own bug bounty programs directly. More recently, Tesla, United, GM, and Uber launched their programs. In these cases, the researcher interacts directly with the company. As discussed already in this chapter, each company has its own views on bug bounties and on how it runs its programs. Therefore, different levels of incentives are offered to researchers. Governments are playing too, as the U.S. government launched a successful “Hack the Pentagon” bug bounty program in 2016,23 which lasted for 24 days. Some 1,400 hackers discovered 138 previously unknown vulnerabilities and were paid about $75,000 in rewards.24 Due to the exclusive nature of these programs, researchers should read the terms of a program carefully and decide whether they want to cooperate with the company or government, prior to posting.

Private

Some companies set up private bug bounty programs, directly or through a third party, to solicit the help of a small set of vetted researchers. In this case, the company or a third party vets the researchers and invites them to participate. The value of private bug bounty programs is the confidentiality of the reports (from the vendor’s point of view) and the reduced size of the researcher pool (from the researcher’s point of view). One challenge that researchers face is they may work tirelessly for hours finding a vulnerability, only to find that it has already been discovered and deemed a “duplicate” by the vendor, which does not qualify for a bounty.25 Private programs reduce that possibility. The downside is related: the small pool of researchers means that vulnerabilities may go unreported, leaving the vendor with a false sense of security, which is often worse than having no sense of security.

Public

Public bug bounty programs are just that—public. This means that any researcher is welcome to submit reports. In this case, companies either directly or through a third party announce the existence of the bug bounty program and then sit back and wait for the reports. The advantage of these programs over private programs is obvious—with a larger pool of researchers, more vulnerabilities may be discovered. On the other hand, only the first researcher gets the bounty, which may turn off some of the best researchers, who may prefer private bounty programs. In 2015, the Google Chrome team broke all barriers for a public bounty program by offering an infinite pool of bounties for their Chrome browser.26 Up to that point, researchers had to compete on one day, at CanSecWest, for a limited pool of rewards. Now, researchers may submit all year for an unlimited pool of funds. Of course, at the bottom of the announcement is the obligatory legalese that states the program is experimental and Google may change it at any time.27 Public bug bounty programs are naturally the most popular ones available and will likely remain that way.

Open Source

Several initiatives exist for securing open source software. In general, the open source projects are not funded and thereby lack the resources that a company may have to handle security vulnerabilities, either internally or reported by others. The Open Source Technology Improvement Fund (OSTIF) is one such effort to support the open source community.28 The OSTIF is funded by individuals and groups looking to make a difference in software that is used by others. Support is given through establishing bug bounties, providing direct funding to open source projects to inject resources to fix issues, and arranging professional audits. The open source projects supported include the venerable OpenSSL and OpenVPN projects. These grassroots projects are noble causes and worthy of researchers’ time and donor funds.

NOTE OSTIF is a registered 501(c)(3) nonprofit organization with the U.S. government and thereby qualifies for tax-deductible donations from U.S. citizens.

Incentives

Bug bounty programs offer many unofficial and official incentives. In the early days, rewards included letters, t-shirts, gift cards, and simply bragging rights. Then, in 2013, Yahoo! was shamed into giving more than swag to researchers. The community began to flame Yahoo! for being cheap with rewards, giving t-shirts or nominal gift cards for vulnerability reports. In an open letter to the community, Ramses Martinez, the director of bug finding at Yahoo!, explained that he had been funding the effort out of his own pocket. From that point onward, Yahoo! increased its rewards to $150 to $15,000 per validated report.29 From 2011 to 2014, Facebook offered an exclusive “White Hat Bug Bounty Program” Visa debit card.30 The rechargeable black card was coveted and, when flashed at a security conference, allowed the researcher to be recognized and perhaps invited to a party.31 Nowadays, bug bounty programs still offer an array of rewards, including Kudos (points that allow researchers to be ranked and recognized), swag, and financial compensation.

Controversy Surrounding Bug Bounty Programs

Not everyone agrees with the use of bug bounty programs because some issues exist that are controversial. For example, vendors may use these platforms to rank researchers, but researchers cannot normally rank vendors. Some bug bounty programs are set up to collect reports, but the vendor might not properly communicate with the researcher. Also, there might be no way to tell whether a response of “duplicate” is indeed accurate. What’s more, the scoring system might be arbitrary and not accurately reflect the value of the vulnerability disclosure, given the value of the report on the black market. Therefore, each researcher will need to decide if a bug bounty program is for them and whether the benefits outweigh the downsides.

Popular Bug Bounty Program Facilitators

Several companies have emerged to facilitate bug bounty programs. The following companies were started in 2012 and are still serving this critical niche:

• Bugcrowd

• HackerOne

• SynAck

Each of these has its strengths and weaknesses, but we will take a deeper look at only one of them: Bugcrowd.

Bugcrowd in Depth

Bugcrowd is one of the leading crowdsourcing platforms for vulnerability intake and management. It allows for several types of bug bounty programs, including private and public programs. Private programs are not published to the public, but the Bugcrowd team maintains a cadre of top researchers who have proven themselves on the platform, and they can invite a number of those researchers into a program based on the criteria provided. In order to participate in private programs, the researchers must undergo an identity-verification process through a third party. Conversely, researchers may freely submit to public programs. As long as they abide by the terms of the platform and the program, they will maintain an active status on the platform and may continue to participate in the bounty program. If, however, a researcher violates the terms of the platform or any part of the bounty program, they will be banned from the site and forfeit any potential income. This dynamic tends to keep honest researchers honest. Of course, as they say, “hackers gonna hack,” but at least the rules are clearly defined, so there should be no surprises on either side.

CAUTION You have been warned: play nicely or lose your privilege to participate on Bugcrowd or other sites!

Bugcrowd also allows for two types of compensation for researchers: monetary and Kudos. Funded programs are established and then funded with a pool to be allocated by the owner for submissions, based on configurable criteria. Kudos programs are not funded and instead offer bragging rights to researchers, as they accumulate Kudos and are ranked against other researchers on the platform. Also, Bugcrowd uses the ranking system to invite a select set of researchers into private bounty programs.

The Bugcrowd web interface has two parts: one for the program owners and the other for the researchers.

Program Owner Web Interface

The web interface for the program owner is a RESTful interface that automates the management of the bug bounty program.

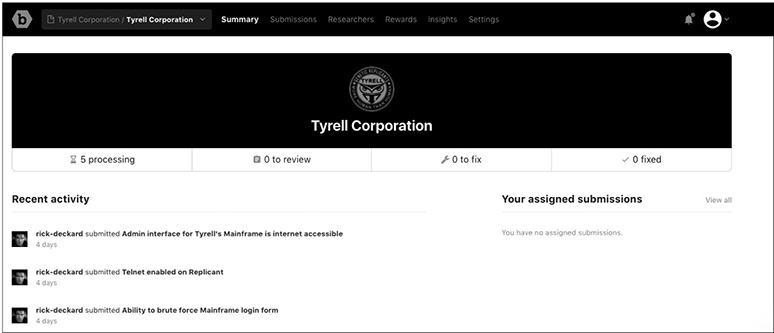

Summary

The first screen within the bug bounty program is the Summary screen, which highlights the number of untriaged submissions. In the example provided here, five submissions have not been categorized. The other totals represent the number of items that have been triaged (shown as “to review”), the number of items to be resolved (shown as “to fix”), and the number of items that have been resolved (shown as “fixed”). A running log of activities is shown at the bottom of the screen.

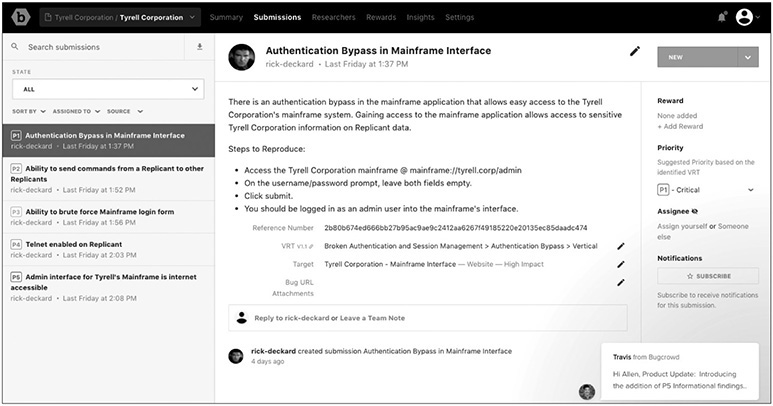

Submissions

The next screen within the program owner’s web interface is the Submissions screen. On the left side of this screen you can see the queue of submissions, along with their priority. These are listed as P1 (Critical), P2 (High), P3 (Moderate), P4 (Low), and P5 (Informational), as shown next.

In the center pane is a description of the submission, along with any metadata, including attachments. On the right side of the screen are options to update the overall status of a submission. The “Open” status levels are New, Triaged, and Unresolved, and the “Closed” status levels are Resolved, Duplicate, Out of Scope, Not Reproducible, Won’t Fix, and Not Applicable. Also from this side of the screen you can adjust the priority of a submission, assign the submission to a team member, and reward the researcher.
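The priority levels and status groups described above map naturally onto a small data model, which can be handy when processing exported submission data. The following is a minimal sketch; the names `Priority`, `OPEN_STATUSES`, `CLOSED_STATUSES`, and `is_open` are illustrative and are not part of any Bugcrowd library.

```python
from enum import Enum


class Priority(Enum):
    """Submission priorities as listed on the Submissions screen."""
    P1 = "Critical"
    P2 = "High"
    P3 = "Moderate"
    P4 = "Low"
    P5 = "Informational"


# Status groups as described in the text.
OPEN_STATUSES = ("New", "Triaged", "Unresolved")
CLOSED_STATUSES = ("Resolved", "Duplicate", "Out of Scope",
                   "Not Reproducible", "Won't Fix", "Not Applicable")


def is_open(status: str) -> bool:
    """Return True for an open status, False for a closed one."""
    if status in OPEN_STATUSES:
        return True
    if status in CLOSED_STATUSES:
        return False
    raise ValueError(f"unknown status: {status!r}")
```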

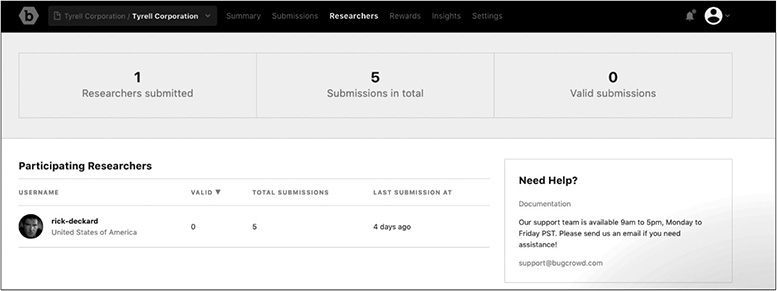

Researchers

You can review the researchers by selecting the Researchers tab in the top menu. The Researchers screen is shown here. As you can see, only one researcher is participating in the bounty program, and he has five submissions.

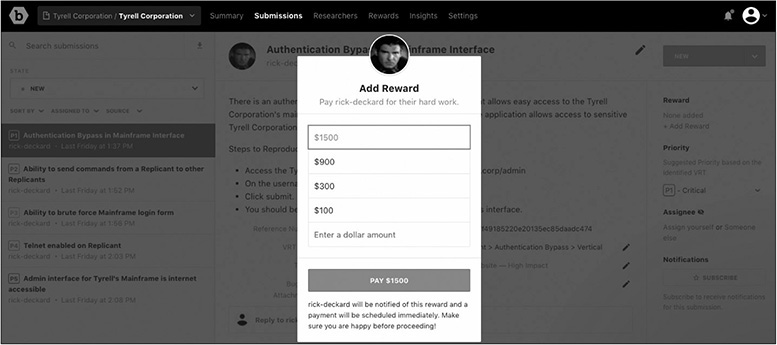

Rewarding Researchers

When selecting a reward as the program owner, you will have a configurable list of rewards to choose from on the right. In the following example, the researcher was granted a bounty of $1,500.

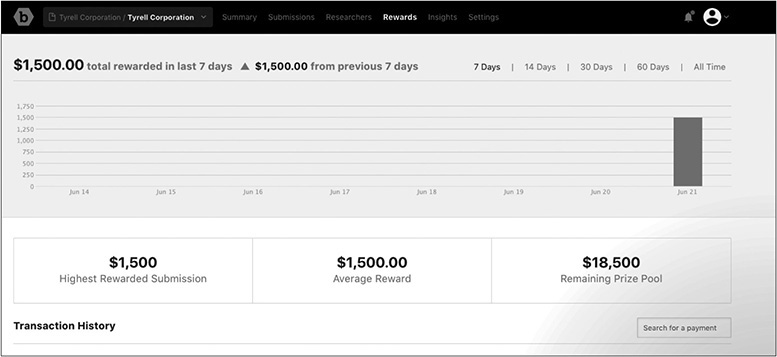

Rewards

You can find a summary of rewards by selecting the Rewards tab in the top menu. As this example shows, a pool of funds may be managed by the platform, and all funding and payment transactions are processed by Bugcrowd.

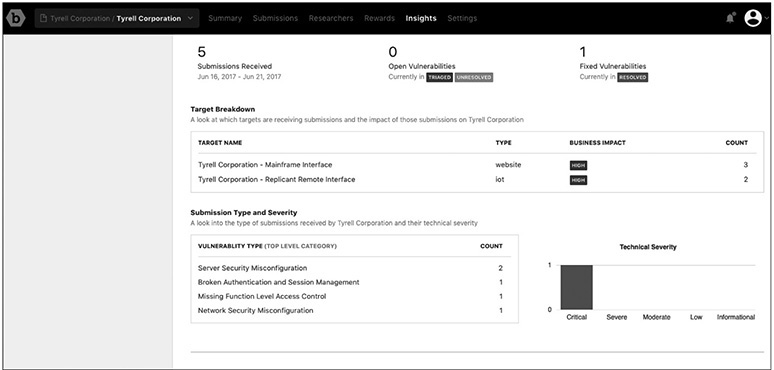

Insights

Bugcrowd provides the program owner with key insights on the Insights screen. It shows key statistics and offers an analysis of submissions, such as target types, submission types, and technical severities.

Resolved Status

When you as the program owner resolve or otherwise adjudicate an issue, you can select a new status to the right of the submission’s detailed summary. In this example, the submission is marked as “resolved,” which effectively closes the issue.

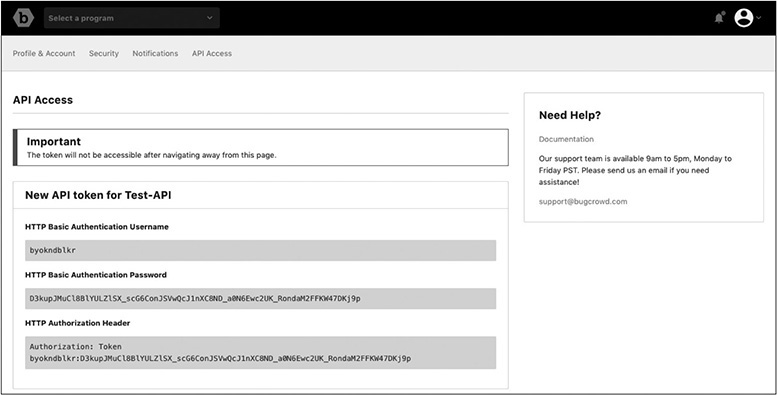

API Access Setup

An application programming interface (API) for Bugcrowd functionality is provided to program owners. In order to set up API access, select API Access in the drop-down menu in the upper-right corner of the screen. Then you can provide a name for the API and create the API tokens.

The API token is provided to the program owner and is only shown on the following screen. You will need to record that token because it is not shown beyond this screen.

NOTE The token shown here has been revoked and will no longer work. Contact Bugcrowd to establish your own program and create an API key.

Program Owner API Example

As the program owner, you can interact with the API by using curl commands, as illustrated in the API documentation located at https://docs.bugcrowd.com/v1.0/docs/authentication-v3.

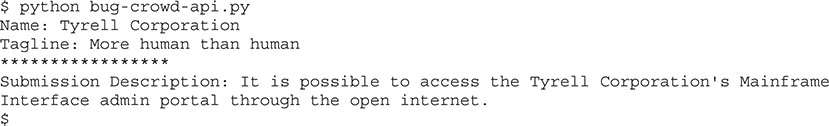
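The same kind of authenticated request can be prepared from Python using only the standard library. The sketch below builds, but does not send, a GET request with HTTP Basic authentication. The host `api.example.test`, the `/bounties` path, the `Accept` value, and the use of Basic authentication are all assumptions made for illustration; consult the API documentation for the actual base URL, endpoints, headers, and authentication scheme.

```python
import base64
import urllib.request


def build_request(base_url, path, username, password,
                  accept="application/json"):
    """Build (but do not send) a GET request with Basic authentication."""
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    request = urllib.request.Request(url)
    request.add_header("Authorization", "Basic " + token)
    request.add_header("Accept", accept)
    return request


# Hypothetical values; replace them with those from your own program.
req = build_request("https://api.example.test", "/bounties", "user", "secret")
```

Sending the request would then be a matter of passing `req` to `urllib.request.urlopen` and decoding the response body.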

The bug-crowd-api.py Wrapper

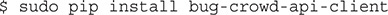

An unofficial wrapper to the Bugcrowd API may be found at https://github.com/asecurityteam/bug_crowd_client.

The library may be installed with Pip, as follows:

Get Bug Bounty Submissions

Using the preceding API key and the bug-crowd-api wrapper, you can interact with submissions programmatically. For example, you can use the following code to pull the description from the first submission of the first bug bounty program:

As you can see, the API wrapper allows for easy retrieval of bounty or submission data. Refer to the API documentation for a full description of functionality.

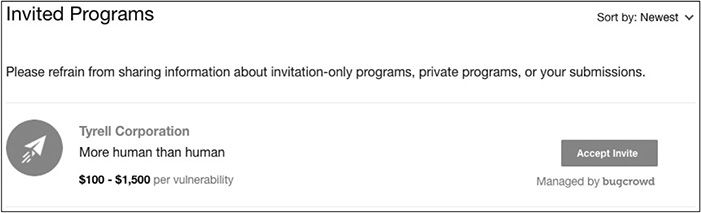

Researcher Web Interface

As a researcher, if you are invited to join a private bug bounty by the Bugcrowd team, you would receive an invitation like the following, which can be found under the Invites menu by accessing the drop-down menu in the upper-right corner of the screen.

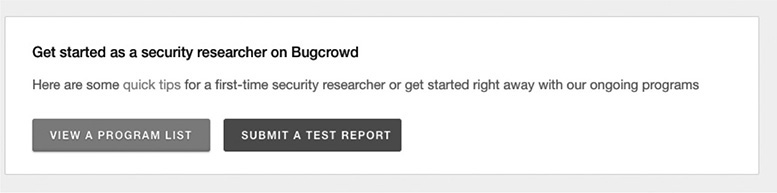

After joining Bugcrowd as a researcher, you are presented with the options shown here (accessed from the main dashboard). You may view “quick tips” (by following the link), review the list of public bounty programs, or submit a test report.

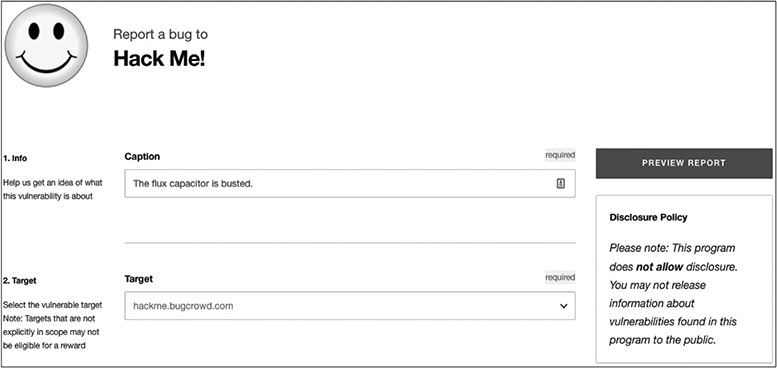

When submitting a test report, you will be directed to the Hack Me! bug bounty program, which is a sandbox for new researchers to play in. By completing the form and clicking Submit, you may test the user interface and learn what to expect when submitting to a real program. For example, you will receive a thank-you e-mail with a link to the submission. This allows you to provide comments and communicate with the program owner.

Earning a Living Finding Bugs

So you want to be a bug bounty hunter, but how much does it pay? Some have reportedly made $200,000 or more a year in bug bounties.32 However, it would be safe to say that is the exception, not the rule. That said, if you are interested in honing your bug-finding skills and earning some money for your efforts, you’ll need to take into consideration the following issues.

Selecting a Target

One of the first considerations is what to target for your bug-hunting efforts. The best approach is to start searching for bounty programs on registries such as Firebounty.com. The newer the product and the more obscure the interface, the more likely you will find undiscovered issues. Remember, for most programs, only the first report is rewarded. Often sites such as Bugcrowd.com will list any known security issues, so you don’t waste your time on issues that have been reported already. Any effort you give to researching your target and its known issues is time well spent.

Registering (If Required)

Some programs require you to register or maybe even be vetted by a third party to participate in them. This process is normally simple, provided you don’t mind sending a copy of your identification to a third party such as NetVerify. If this is an issue for you, move on—there are plenty of other targets that do not require this level of registration.

Understanding the Rules of the Game

Each program will have a set of terms and conditions, and you would do yourself a favor to read them carefully. Often, you will forfeit the right to disclose a vulnerability outside the program if you submit to a bug bounty program. In other words, you will likely have to make your disclosure in coordination with the vendor, and perhaps only if the vendor allows you to disclose. However, sometimes this can be negotiated, because the vendor has an incentive to be reasonable with you as the researcher in order to prevent you from disclosing on your own. In the best-case scenario, the vendor and researcher will reach a win/win situation, whereby the researcher is compensated in a timely manner and the vendor resolves the security issue in a timely manner—in which case the public wins, too.

Finding Vulnerabilities

Once you have found a target, registered (if required), and understood the terms and conditions, it is time to start finding vulnerabilities. You can use several methods to accomplish this task, as outlined in this book, including fuzzing, code reviews, and static and dynamic security testing of applications. Each researcher will tend to find and follow a process that works best for them, but some basic steps are always necessary:

• Enumerate the attack surfaces, including ports and protocols (OSI layers 1–7).

• Footprint the application (OSI layer 7).

• Assess authentication (OSI layers 5–7).

• Assess authorization (OSI layer 7).

• Assess validation of input (OSI layers 1–7, depending on the app or device).

• Assess encryption (OSI layers 2–7, depending on the app or device).

Each of these steps has many substeps, and any of them may uncover potential vulnerabilities.
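As a small illustration of the first step, enumerating the attack surface, the following sketch checks which TCP ports on a host accept connections. It is a minimal example rather than a replacement for a full scanner, and the function name open_tcp_ports is our own, chosen for illustration. Run it only against hosts that the program's terms place in scope.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

For example, open_tcp_ports("127.0.0.1", range(8000, 8010)) checks ten ports on the local machine and returns a list of those that are listening.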

Reporting Vulnerabilities

Not all vulnerability reports are created equal, and not all vulnerabilities get fixed in a timely manner. There are, however, some things you can do to increase your odds of getting your issue fixed and receiving your compensation. Studies have shown that vulnerability reports that are easy to read and that include stack traces and code snippets are more likely to be fixed quickly than those that do not.33 This makes sense: make it easy on the software developer, and you are more likely to get results. After all, because you are an ethical hacker, you do want to get the vulnerability fixed in a timely manner, right? The old saying holds true: you can catch more flies with honey than with vinegar.34 Simply put, the more information you provide, in an easy-to-follow and reproducible format, the more likely you are to be compensated and the less likely your report is to be needlessly deemed a “duplicate.”
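One way to make a report easy to follow and reproduce is to fill in the same fields every time. The sketch below is only a suggestion: the class name and field names are our own assumptions, not a format required by any particular platform, so adapt them to whatever the program asks for.

```python
from dataclasses import dataclass, field


@dataclass
class VulnerabilityReport:
    """Hypothetical report layout; field names are illustrative only."""
    title: str
    affected_component: str
    summary: str
    steps_to_reproduce: list
    impact: str
    evidence: list = field(default_factory=list)  # stack traces, code snippets

    def render(self):
        """Return the report as plain text with numbered reproduction steps."""
        lines = [
            f"Title: {self.title}",
            f"Affected component: {self.affected_component}",
            "",
            "Summary:",
            self.summary,
            "",
            "Steps to reproduce:",
        ]
        lines += [f"  {n}. {step}"
                  for n, step in enumerate(self.steps_to_reproduce, 1)]
        lines += ["", "Impact:", self.impact]
        for item in self.evidence:
            lines += ["", "Evidence:", item]
        return "\n".join(lines)
```

Numbering the reproduction steps and attaching evidence verbatim lets the developer replay the issue without having to write back with questions.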

Cashing Out

After the vulnerability report has been verified as valid and unique, you as the researcher should expect to be compensated. Remuneration may come in many forms—from cash to debit cards to Bitcoin. Be aware of the regulation that any compensation over $20,000 must be reported to the IRS by the vendor or bug bounty platform provider.35 In any event, you should check with your tax advisor concerning the tax implications of income generated by bug bounty activities.

Incident Response

Now that we have discussed the offensive side of things, let’s turn our attention to the defensive side. How is your organization going to handle incident reports?

Communication

Communication is key to the success of any bug bounty program. First, communication between the researcher and the vendor is critical. If this communication breaks down, one party may become disgruntled and go public without the other party, which normally does not end well. On the other hand, if communication is established early and maintained often, a relationship may form between the researcher and the vendor, and both parties are more likely to be satisfied with the outcome. Communication is where bug bounty platforms such as Bugcrowd, HackerOne, and SynAck shine. Facilitating fair and equitable communication between the parties is the primary reason they exist. Most researchers expect a quick turnaround on their messages, and the vendor should plan to respond within 24 to 48 hours of receipt. Certainly, the vendor should not go more than 72 hours without responding to a communication from the researcher.
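A vendor can track these response windows with very little tooling. The following sketch classifies an unanswered researcher message using the 48-hour and 72-hour figures given above. The function name and the status labels are our own, chosen for illustration.

```python
from datetime import datetime, timedelta

TARGET_RESPONSE = timedelta(hours=48)  # upper end of the 24-to-48-hour goal
HARD_LIMIT = timedelta(hours=72)       # should never be exceeded


def response_status(received_at, now):
    """Classify how long a researcher message has gone unanswered."""
    waiting = now - received_at
    if waiting <= TARGET_RESPONSE:
        return "on track"
    if waiting <= HARD_LIMIT:
        return "late"
    return "overdue"
```

A message received at 9:00 on Monday would be reported as "late" from 9:00 on Wednesday and as "overdue" from 9:00 on Thursday.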

As a vendor, if you plan to run your own bug bounty program or any other vulnerability intake portal, be sure that the researcher can easily find out how to report vulnerabilities on your site. Also be sure to explain clearly how you expect to communicate with the researcher and that you intend to respond to all messages within a reasonable time frame. Often, when researchers become frustrated working with vendors, they cite the fact that the vendor was nonresponsive and ignored communications. This can lead to the researcher going public without the vendor. Be aware of this pitfall and work to avoid it as a vendor. The researcher holds critical information that you as a vendor need in order to remediate the issue before it becomes public knowledge. You hold the key to that process going smoothly: communication.

Triage

After a vulnerability report is received, a triage effort is needed to quickly determine whether the issue is valid and unique and, if so, how severe it is. The Common Vulnerability Scoring System (CVSS) and Common Weakness Scoring System (CWSS) are helpful in performing this type of triage. The CVSS has gained more traction and is built on three groups of metrics: base, temporal, and environmental. Calculators exist online to determine a CVSS score for a particular software vulnerability. The CWSS has gained less traction and has not been updated since 2014; however, it does provide more context and ranking capabilities for vulnerabilities by introducing the factors of base, attack surface, and environmental. By using either the CVSS or CWSS, a vendor may rank vulnerabilities and weaknesses and thereby decide internally which ones to prioritize and allocate resources to first.
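To show what the online calculators do, the following sketch computes a CVSS base score from a vector string. It assumes the base-score equations and metric weights from version 3.1 of the specification published by FIRST; other versions use different metrics and weights, and the temporal and environmental groups are not covered here. Treat it as an aid to understanding, and check results against an official calculator.

```python
import math

# Metric weights as given in the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value):
    """Round up to one decimal place using the integer method from v3.1."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector):
    """Score a vector such as 'AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H'."""
    m = dict(part.split(":") for part in vector.split("/"))
    changed = m["S"] == "C"  # scope changed
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10))
```

A network-reachable flaw that needs no privileges or user interaction and fully compromises confidentiality, integrity, and availability (AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H) scores 9.8 with these equations, which places it at the top of the triage queue.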

Remediation

Remediation is the main purpose of vulnerability disclosure. After all, if the vendor is not going to resolve an issue in a timely manner, the researcher may fall back on full public disclosure and force the vendor to remediate. Therefore, it is imperative that a vendor schedule and remediate security vulnerabilities in a timely manner, which generally means within 30 to 45 days. Most researchers are willing to wait that long before going public; otherwise, they would not have contacted the vendor in the first place.

It is critical not only that the vulnerability be resolved but also that any surrounding or similar code be reviewed for related weaknesses. In other words, as the vendor, take the opportunity to review the class of vulnerability across all your code bases to ensure that next month’s fire drill will not involve another one of your products. On a related note, be sure that the fix does not open up another vulnerability. Researchers will check the patch to make sure you did not simply move things around or otherwise obfuscate the vulnerability.
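A simple first pass at reviewing a class of vulnerability across code bases is to search for the construct that caused the original bug. The sketch below walks a source tree and reports every line matching a regular expression. The function name, the default file extensions, and the example pattern are assumptions for illustration; a text search only produces candidates for manual review and is no substitute for static analysis.

```python
import os
import re


def find_similar_code(root, pattern, extensions=(".c", ".h")):
    """Yield (path, line_number, line) for each source line matching pattern."""
    regex = re.compile(pattern)
    suffixes = tuple(extensions)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(suffixes):
                continue
            path = os.path.join(dirpath, name)
            # Replace undecodable bytes so one odd file does not stop the scan.
            with open(path, encoding="utf-8", errors="replace") as handle:
                for number, line in enumerate(handle, 1):
                    if regex.search(line):
                        yield path, number, line.rstrip()
```

If the reported issue was an unbounded string copy, for instance, calling find_similar_code(root, r"\bstrcpy\s*\(") on each code base lists the other call sites that deserve a look.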

Disclosure to Users

To disclose (to users) or not to disclose: that is the question. In some circumstances, when the researcher has been adequately compensated, the vendor may be able to prevent the researcher from publicly disclosing without the vendor’s involvement. However, practically speaking, the truth will come out, either through the researcher or through some anonymous character online. Therefore, as the vendor, you should disclose security issues to users, including some basic information about the vulnerability, the fact that it was a security issue, its potential impact, and how to patch it.

Public Relations

Public vulnerability disclosure information is vital if the user base is to recognize the issue and actually apply the patch. In the best-case scenario, a coordinated disclosure is negotiated between the vendor and the researcher, and the researcher is given proper credit (if desired) by the vendor. It is common for the researcher to then post their own disclosure, commending the vendor for cooperation. This is often seen as a positive for the software vendor. In other cases, however, one party may get out ahead of the other, and often the user is the one who gets hurt. If the disclosure is not well communicated, the user may become confused, fail to realize the severity of the issue, and therefore not apply the patch. This scenario has the potential to become a public relations nightmare, as other parties weigh in and the story takes on a life of its own.

Summary

In this chapter, we discussed bug bounties. We started with a discussion of the history of disclosure and the reasons that bug bounties were created. Next, we moved into a discussion of different types of bug bounties, highlighting the Bugcrowd platform. Then, we discussed how to earn a living reporting bugs. Finally, we covered some practical advice on responding to bug reports as a vendor. This chapter should better equip you to handle bug reports, both as a researcher and a vendor.

For Further Reading

Bugcrowd bugcrowd.com

HackerOne hackerone.com

Iron Geek blog (Adrian Crenshaw) www.irongeek.com/i.php?page=security/ethics-of-full-disclosure-concerning-security-vulnerabilities

Open Source Technology Improvement Fund (OSTIF) ostif.org/the-ostif-mission/

SynAck synack.com

Wikipedia on bug bounties en.wikipedia.org/wiki/Bug_bounty_program

Wikipedia on Bugtraq en.wikipedia.org/wiki/Bugtraq

References

1. Synopsys, “Coverity Scan Open Source Report Shows Commercial Code Is More Compliant to Security Standards than Open Source Code,” Synopsys, July 29, 2015, https://news.synopsys.com/2015-07-29-Coverity-Scan-Open-Source-Report-Shows-Commercial-Code-Is-More-Compliant-to-Security-Standards-than-Open-Source-Code.

2. J. P. Choi, C. Fershtman, and N. Gandal, “Network Security: Vulnerabilities and Disclosure Policy,” Journal of Industrial Economics, vol. 58, no. 4, pp. 868–894, 2010.

3. K. Zetter, “Three Minutes with Rain Forest Puppy | PCWorld,” PCWorld, January 5, 2012.

4. “Full disclosure (mailing list),” Wikipedia, September 6, 2016.

5. B. Schneier, “Essays: Schneier: Full Disclosure of Security Vulnerabilities a ‘Damned Good Idea.’” Schneier on Security, January 2007, https://www.schneier.com/essays/archives/2007/01/schneier_full_disclo.html.

6. M. J. Ranum, “The Vulnerability Disclosure Game: Are We More Secure?” CSO Online, March 1, 2008, www.csoonline.com/article/2122977/application-security/the-vulnerability-disclosure-game--are-we-more-secure-.html.

7. Schneier, “Essays.”

8. Imperva, Inc., “Imperva | Press Release | Analysis of Web Site Penetration Retests Show 93% of Applications Remain Vulnerable After ‘Fixes,’” June 2004, http://investors.imperva.com/phoenix.zhtml?c=247116&p=irol-newsArticle&ID=1595363. [Accessed: 18-Jun-2017].

9. A. Sacco, “Microsoft: Responsible Vulnerability Disclosure Protects Users,” CSO Online, January 9, 2007, www.csoonline.com/article/2121631/build-ci-sdlc/microsoft--responsible-vulnerability-disclosure-protects-users.html. [Accessed: 18-Jun-2017].

10. Schneier, “Essays.”

11. G. Keizer, “Drop ‘Responsible’ from Bug Disclosures, Microsoft Urges,” Computerworld, July 22, 2010, www.computerworld.com/article/2519499/security0/drop--responsible--from-bug-disclosures--microsoft-urges.html. [Accessed: 18-Jun-2017].

12. Keizer, “Drop ‘Responsible’ from Bug Disclosures.”

13. “Project Zero (Google),” Wikipedia, May 2, 2017.

14. “CERT Coordination Center,” Wikipedia, May 30, 2017.

15. CERT/CC, “Vulnerability Disclosure Policy,” Vulnerability Analysis | The CERT Division, www.cert.org/vulnerability-analysis/vul-disclosure.cfm? [Accessed: 18-Jun-2017].

16. D. Fisher, “No More Free Bugs for Software Vendors,” Threatpost | The First Stop for Security News, March 23, 2009, https://threatpost.com/no-more-free-bugs-software-vendors-032309/72484/. [Accessed: 18-Jun-2017].

17. P. Lindstrom, “No More Free Bugs,” Spire Security Viewpoint, March 26, 2009, http://spiresecurity.com/?p=65.

18. A. O’Donnell, “‘No More Free Bugs’? There Never Were Any Free Bugs,” ZDNet, March 24, 2009, www.zdnet.com/article/no-more-free-bugs-there-never-were-any-free-bugs/. [Accessed: 18-Jun-2017].

19. “Bug Bounty Program,” Wikipedia, June 14, 2017.

20. Mozilla Foundation, “Mozilla Foundation Announces Security Bug Bounty Program,” Mozilla Press Center, August 2004, https://blog.mozilla.org/press/2004/08/mozilla-foundation-announces-security-bug-bounty-program/. [Accessed: 25-Jun-2017].

21. “Pwn2Own,” Wikipedia, June 14, 2017.

22. E. Friis-Jensen, “The History of Bug Bounty Programs,” Cobalt.io, April 11, 2014, https://blog.cobalt.io/the-history-of-bug-bounty-programs-50def4dcaab3. [Accessed: 18-Jun-2017].

23. C. Pellerin, “DoD Invites Vetted Specialists to ‘Hack’ the Pentagon,” U.S. Department of Defense, March 2016, https://www.defense.gov/News/Article/Article/684616/dod-invites-vetted-specialists-to-hack-the-pentagon/. [Accessed: 24-Jun-2017].

24. J. Harper, “Silicon Valley Could Upend Cybersecurity Paradigm,” National Defense Magazine, vol. 101, no. 759, pp. 32–34, February 2017.

25. B. Popper, “A New Breed of Startups Is Helping Hackers Make Millions—Legally,” The Verge, March 4, 2015, https://www.theverge.com/2015/3/4/8140919/get-paid-for-hacking-bug-bounty-hackerone-synack. [Accessed: 15-Jun-2017].

26. T. Willis, “Pwnium V: The never-ending* Pwnium,” Chromium Blog, February 2015.

27. Willis, “Pwnium V.”

28. “Bug Bounties—What They Are and Why They Work,” OSTIF.org, https://ostif.org/bug-bounties-what-they-are-and-why-they-work/. [Accessed: 15-Jun-2017].

29. T. Ring, “Why Bug Hunters Are Coming in from the Wild,” Computer Fraud & Security, vol. 2014, no. 2, pp. 16–20, February 2014.

30. E. Mills, “Facebook Hands Out White Hat Debit Cards to Hackers,” CNET, December 2011, https://www.cnet.com/news/facebook-hands-out-white-hat-debit-cards-to-hackers/. [Accessed: 24-Jun-2017].

31. Mills, “Facebook Hands Out White Hat Debit Cards to Hackers.”

32. J. Bort, “This Hacker Makes an Extra $100,000 a Year as a ‘Bug Bounty Hunter,’” Business Insider, May 2016, www.businessinsider.com/hacker-earns-80000-as-bug-bounty-hunter-2016-4. [Accessed: 25-Jun-2017].

33. H. Cavusoglu, H. Cavusoglu, and S. Raghunathan, “Efficiency of Vulnerability Disclosure Mechanisms to Disseminate Vulnerability Knowledge,” IEEE Transactions on Software Engineering, vol. 33, no. 3, pp. 171–185, March 2007.

34. B. Franklin, Poor Richard’s Almanack (1744).

35. K. Price, “US Income Taxes and Bug Bounties,” Bugcrowd Blog, March 17, 2015, http://blog.bugcrowd.com/us-income-taxes-and-bug-bounties/. [Accessed: 25-Jun-2017].