1. THE GREAT WORM

When Robert Morris Jr. released his worm at 8:00 p.m., he had no idea that he might have committed a crime. His concern that night was backlash from fellow geeks: many UNIX administrators would be furious when they found out what he had done. As Cliff Stoll, a computer security expert at Harvard, told The New York Times: “There is not one system manager who is not tearing his hair out. It’s causing enormous headaches.” When the worm first hit, administrators did not know why it had been launched or what damage it was causing. They feared the worst—that the worm was deleting or corrupting the files on the machines it had infected. (It wasn’t, as they would soon discover.)

After he first confessed the “fuckup” to his friend Paul Graham, Robert knew he had to do something. Unfortunately, he could not send out any warning emails because Dean Krafft had ordered the department machines to be disconnected from the main campus network and hence the public internet. At 2:30 a.m., Robert called Andy Sudduth, the system administrator of Harvard’s Aiken Computation Lab, and asked him to send a warning message to other administrators with instructions on how to protect their networks. Though not ready to out himself, Robert also wanted to express remorse for the trouble he was causing. Andy sent out the following message:

From: foo%bar.arpa@RELAY.CS.NET

To: tcp-ip@SRI-NIC

Date: Thu 03:34:13 03/11/1988 EST

Subject: [no subject]

A Possible virus report:

There may be a virus loose on the internet.

Here is the gist of a message Igot:

I’m sorry.

Here are some steps to prevent further transmission:

1) don’t run fingerd, or fix it to not overrun its stack when reading arguments.

2) recompile sendmail w/o DEBUG defined

3) don’t run rexecd

Hope this helps, but more, I hope it is a hoax.

Andy knew the worm wasn’t a hoax and did not want the message traced back to him. He had spent the previous hour devising a way to post the message anonymously, deciding to send it from Brown University to a popular internet Listserv using a fake username (foo%bar.arpa). Waiting until 3:34 a.m. was unfortunate. The worm spawned so swiftly that it crashed the routers managing internet communication. Andy’s message was stuck in a digital traffic jam and would not arrive at its destination for forty-eight hours. System administrators had to fend for themselves.

On November 3, as the administrators were surely tearing their hair out, Robert stayed at home in Ithaca doing his schoolwork and staying off the internet. At 11:00 p.m., he called Paul for an update. Much to his horror, Paul reported that the internet worm was a media sensation. It was one of the top stories on the nightly news of each network; Robert had been unaware, since he did not own a television. Newspapers called around all day trying to discover the culprit. The New York Times reported the story on the front page, above the fold. When asked what he intended to do, Robert replied, “I don’t have any idea.”

Ten minutes later, Robert knew: he had to call the chief scientist in charge of cybersecurity at the National Security Agency (NSA). So he picked up the phone and dialed Maryland. A woman answered the line. “Can I talk to Dad?” Robert asked.

The Ancient History of Cybersecurity

Security experts had been anticipating cyberattacks for a long time—even before the internet was invented. The NSA organized the first panel on cybersecurity in 1967, two years before the first link in the ARPANET, the prototype for the internet, was created. It was so long ago that the conference was held in Atlantic City … unironically.

The NSA’s concern grew with the evolution of computer systems. Before the 1960s, computers were titanic machines housed in their own special rooms. To submit a program—known as a job—a user would hand a stack of punch cards to a computer operator. The operator would collect these jobs in “batches” and put them all through a card reader. Another operator would take the programs read by the reader and store them on large magnetic tapes. The tapes would then feed this batch of programs into the computer, often in another room connected by phone lines, for processing by yet another operator.

In the era of batch processing, as it was called, computer security was quite literal: the computer itself had to be secured. These hulking giants were surprisingly delicate. The IBM 7090, filling a room the size of a soccer field at MIT’s Computation Center, was packed with thousands of transistors and miles of intricately wound copper strands. Its circuitry radiated so much heat that it constantly threatened to melt the wires. MIT’s computer room had its own air-conditioning system. These “mainframe” computers—probably named so because their circuitry was stored on large metal frames that swung out for maintenance—were also expensive. The IBM 7094 cost $3 million in 1963 (roughly $30 million in 2023 dollars). IBM gave MIT a discount, provided that they reserved eight hours a day for corporate business. IBM’s president, who sailed yachts on Long Island Sound, used the MIT computer for race handicapping.

Elaborate bureaucratic rules governed who could enter each of the rooms. Only certain graduate students were permitted to hand punch cards to the batch operator. The bar for entering the mainframe room was even higher. The most important rule of all was that no one was to touch the computer itself, except for the operator. A rope often cordoned it off for good measure.

In the early days of computing, then, cybersecurity meant protecting the hardware, not the software—the computer, not the user. After all, there was little need to protect the user’s code and data. Because the computer ran only one job at a time, users could not read or steal one another’s information. By the time someone’s job ran on the computer, the data from the previous user was gone.

Users, however, hated batch processing with the passion of a red-hot vacuum tube. Programmers found it frustrating to wait until all of the jobs in the batch were finished to get their results. Worse still, rerunning the program, with tweaks to code or with different data, meant getting back in the queue and waiting for the next batch to run. It would take days just to work out simple bugs and get programs working. Nor could programmers interact with the mainframe. Once punch cards were submitted to the computer operator, the programmers’ involvement was over. As the computer pioneer Fernando “Corby” Corbató described it, batch processing “had all the glamour and excitement of dropping one’s clothes off at a laundromat.”

Corby set out to change that. Working at MIT in 1961 with two other programmers, he developed CTSS, the Compatible Time-Sharing System. CTSS was designed to be a multiuser system. Users would store their private files on the same computer, and each would run their own programs. Instead of submitting punch cards to operators, each user had direct access to the mainframe. Sitting at their own terminal, connected to the mainframe by telephone lines, they acted as their own computer operator. If two programmers submitted jobs at the same time, CTSS would play a neat trick: it would run a small part of job 1, run a small part of job 2, and switch back to job 1. It would shuttle back and forth until both jobs were complete. Because CTSS toggled so quickly, users barely noticed the interleaving. They were under the illusion that they had the mainframe all to themselves. Corby called this system “time-sharing.” By 1963, MIT had twenty-four time-sharing terminals connected via its telephone system to its IBM 7094.
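The interleaving described above can be sketched as a simple round-robin scheduler. This is a minimal illustration, not CTSS's actual scheduler, which used several priority levels. Each job is modeled as a hypothetical count of remaining work units, and the assumed `quantum` parameter is how many units a job runs before the system switches to the next one.

```python
from collections import deque


def time_share(jobs: dict[str, int], quantum: int) -> list[tuple[str, int]]:
    """Round-robin time-sharing sketch.

    `jobs` maps a job name to its remaining work units. Each turn, the job at
    the front of the queue runs for at most `quantum` units; if work remains,
    it goes to the back of the queue. Returns the sequence of execution slices.
    """
    queue = deque(jobs.items())
    trace = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        trace.append((name, ran))            # which job ran, and for how long
        remaining -= ran
        if remaining > 0:
            queue.append((name, remaining))  # unfinished: wait for the next turn
    return trace


if __name__ == "__main__":
    print(time_share({"job 1": 5, "job 2": 3}, quantum=2))
```

With five units of work for job 1 and three for job 2, the trace alternates job 1, job 2, job 1, job 2, job 1 until both finish. Shrink the quantum and the switching becomes finer-grained, which is what made each user feel they had the machine to themselves.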

Hell, as Jean-Paul Sartre famously wrote, is other people. And because CTSS was a multiuser system, it created a kind of cybersecurity hell. While the mainframes were now safe because nobody needed to touch the computer, card readers, or magnetic tapes to run their programs, those producing or using these programs were newly vulnerable.

A time-sharing system works by loading multiple programs into memory and quickly toggling between jobs to provide the illusion of single use. The system places each job in different parts of memory—what computer scientists call “memory spaces.” When CTSS toggled between jobs, it would switch back and forth between memory spaces. Though loading multiple users’ code and data on the same computer optimized precious resources, it also created enormous insecurity. Job #1, running in one memory space, might try to access the code or data in Job #2’s memory space.
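One way to keep Job #1 out of Job #2's memory space is to check every access against the job's own range. The sketch below is a hypothetical model: the `SharedMemory` class and its base-and-limit scheme are illustrative assumptions, not a description of CTSS's hardware. Each job receives a starting address and a size, and any access outside that range is refused.

```python
class MemoryViolation(Exception):
    """Raised when a job touches memory outside its own space."""


class SharedMemory:
    def __init__(self, size: int):
        self.cells = [0] * size
        self.spaces: dict[str, tuple[int, int]] = {}  # job -> (base, limit)

    def allocate(self, job: str, base: int, limit: int) -> None:
        if base < 0 or base + limit > len(self.cells):
            raise ValueError("memory space does not fit")
        self.spaces[job] = (base, limit)

    def _address(self, job: str, offset: int) -> int:
        # Translate a job-relative offset into a physical address,
        # refusing anything outside the job's own memory space.
        base, limit = self.spaces[job]
        if not 0 <= offset < limit:
            raise MemoryViolation(f"{job} tried to access offset {offset}")
        return base + offset

    def read(self, job: str, offset: int) -> int:
        return self.cells[self._address(job, offset)]

    def write(self, job: str, offset: int, value: int) -> None:
        self.cells[self._address(job, offset)] = value


if __name__ == "__main__":
    memory = SharedMemory(200)
    memory.allocate("job 1", base=0, limit=100)
    memory.allocate("job 2", base=100, limit=100)
    memory.write("job 2", 0, 42)      # job 2 stores data at its offset 0
    try:
        memory.read("job 1", 100)     # job 1 reaches for job 2's data
    except MemoryViolation as err:
        print("blocked:", err)
```

Because each job supplies only offsets into its own space, it has no way to name an address that belongs to another job; the check turns "might try to access" into a refused request.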

Because users shared the same computer system, their information was now accessible to prying fingers and eyes. To protect the security of their code and data, CTSS gave each user an account secured by a unique “username” and a four-letter “password.” Users who logged in to one account could only access code or information in the corresponding memory space; the rest of the computer’s memory was off-limits. Corby picked passwords for authentication to save room; storing a four-letter password used less precious computer memory than an answer to a security question like “What’s your mother’s maiden name?” The passwords were kept in a file called UACCNT.SECRET.

In the early days of time-sharing, the use of passwords was less about confidentiality and more about rationing computing time. At MIT, for example, each user got four hours of computing time per semester. When Allan Scherr, a PhD researcher, wanted more time, he requested that the UACCNT.SECRET file be printed out. When his request was accepted, he used the password listing to “borrow” his colleagues’ accounts. Another time, a software glitch displayed every user’s password, instead of the log-in “Message of the Day.” Users were forced to change their passwords.

From Multics to UNIX

Though limited in functionality, CTSS demonstrated that time-sharing was not only technologically possible, but also wildly popular. Programmers liked the immediate feedback and the ability to interact with the computer in real time. A large team from MIT, Bell Labs, and General Electric, therefore, decided to develop a complete multiuser operating system as a replacement for batch processing. They called it Multics, for Multiplexed Information and Computing Service.

The Multics team designed its time-sharing with security in mind. Multics pioneered many security controls still in use today—one of which was storing passwords in garbled form so that users couldn’t repeat Allan Scherr’s simple trick. After six years of development, Multics was released in 1969.

The military saw potential in Multics. Instead of buying separate computers to handle unclassified, classified, secret, and top-secret information, the Pentagon could buy one and configure the operating system so that users could access only information for which they had clearance. The military estimated that it would save $100 million by switching to time-sharing.

Before the air force purchased Multics, they tested it. The test was a disaster. It took thirty minutes to figure out how to hack into Multics, and another two hours to write a program to do it. “A malicious user can penetrate the system at will with relatively minimal effort,” the evaluation concluded.

The research community did not love Multics either. Less concerned with its bad security, computer scientists were unhappy with its design. Multics was complicated and bloated—a typical result of decision by committee. In 1969, part of the Multics group broke away and started over. This new team, led by Dennis Ritchie and Ken Thompson, operated out of an attic at Bell Labs using a spare PDP-7, a “minicomputer” built by the Digital Equipment Corporation (DEC) that cost a tenth as much as an IBM mainframe.

The Bell Labs team had learned the lesson of Multics’ failure: Keep it simple, stupid. Their philosophy was to build a new multiuser system based on the concept of modularity: every program should do one thing well, and, instead of adding features to existing programs, developers should string together simple programs to form “scripts” that can perform more complex tasks. The name UNIX began as a pun: because early versions of the operating system supported only one user—Ken Thompson—Peter Neumann, a security researcher at the Stanford Research Institute, joked that it was an “emasculated Multics,” or “UNICS.” The spelling was eventually changed to UNIX.
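The modular philosophy is easiest to see in a shell pipeline such as `grep login | wc -l`, where each small program does one job and passes its output to the next. The Python sketch below imitates that idea with hypothetical stand-ins named after the UNIX tools; it is an analogy for composing small programs, not how UNIX actually implements pipes.

```python
from typing import Callable, Iterable


def grep(pattern: str) -> Callable[[Iterable[str]], Iterable[str]]:
    """Return a stage that keeps only lines containing `pattern`."""
    def stage(lines: Iterable[str]) -> Iterable[str]:
        return (line for line in lines if pattern in line)
    return stage


def wc_l(lines: Iterable[str]) -> int:
    """Count lines, like `wc -l`."""
    return sum(1 for _ in lines)


def pipeline(source, *stages):
    """Feed each stage's output into the next, like `|` in the shell."""
    data = source
    for stage in stages:
        data = stage(data)
    return data


if __name__ == "__main__":
    log = ["login rtm", "logout rtm", "login ken", "mail ken"]
    print(pipeline(log, grep("login"), wc_l))
```

Neither `grep` nor `wc_l` knows about the other; new tasks come from recombining them rather than from adding features to either one.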

UNIX was a massive success when the first version was completed in 1971. The versatile operating system attracted legions of loyalists with an almost cultish devotion and quickly became standard in universities and labs. Indeed, UNIX has since achieved global domination. Macs and iPhones, for example, run on a direct descendant of Bell Labs’ UNIX. Google, Facebook, Amazon, and Twitter servers run on Linux, an operating system that, as its name suggests, is explicitly modeled after UNIX (though for intellectual-property reasons was rewritten with different code). Home routers, Alexa speakers, and smart toasters also run Linux. For decades, Microsoft was the lone holdout. But in 2020, Microsoft began shipping Windows 10 with a full Linux kernel. UNIX has become so dominant that it is part of nearly every computer system on the planet.

As Dennis Ritchie admitted in 1979, “The first fact to face is that UNIX was not developed with security, in any realistic sense, in mind; this fact alone guarantees a vast number of holes.” Some of these vulnerabilities were inadvertent programming errors. Others arose because UNIX gave users privileges they did not strictly need but that made their lives easier. Thompson and Ritchie, after all, built the operating system to allow researchers to share resources, not to prevent thieves from stealing them.

The downcode of UNIX, therefore, was shaped by the upcode of the research community—an upcode that included the competition for easy-to-use operating systems, distinctive cultural norms of scientific research, and the values that Thompson and Ritchie themselves held. All of these factors combined to make an operating system that prized convenience and collaboration over safety—and the vast number of security holes left some to wonder whether UNIX, which had conquered the research community, might one day be attacked.

WarGames

In 1983, the polling firm Louis Harris & Associates reported that only 10 percent of adults had a personal computer at home. Of those, 14 percent said they used a modem to send and receive information. When asked, “Would your being able to send and receive messages from other people … on your own home computer be very useful to you personally?” 45 percent of those early computer users said it would not be very useful.

Americans would soon learn about the awesome power of computer networking. The movie WarGames, released in 1983, tells the story of David Lightman, a suburban teenager played by Matthew Broderick, who spends most of his time in his room, unsupervised by his parents and on his computer, like a nerdy Ferris Bueller. To impress his love interest, played by Ally Sheedy, he hacks into the school computer and changes her grade from a B to an A. He also learns how to find computers with which to connect via modem by phoning random numbers—a practice now known as war-dialing (after the movie). David accidentally war-dials the Pentagon’s computer system. Thinking he has found an unreleased computer game, David asks the program, named Joshua, to play a war scenario. When Joshua responds, “Wouldn’t you prefer a nice game of chess?” David tells Joshua, “Let’s play Global Thermonuclear War.” David, however, is not playing a game—Joshua is a NORAD computer and controls the U.S. nuclear arsenal. By telling Joshua to arm missiles and deploy submarines, David’s hacking brings the world to the nuclear brink. The movie ends when David stops the “game” before it’s too late. Joshua, the computer program, wisely concludes, “The only winning move is not to play.”

WarGames grossed $80 million at the box office and was nominated for three Academy Awards. The movie introduced Americans not only to cyberspace, but to cyber-insecurity, as well. The press pursued this darker theme by wondering whether a person with a computer, telephone, and modem—perhaps even a teenager—could hack into military computers and start World War III.

All three major television networks featured the movie on their nightly broadcasts. ABC News opened their report by comparing WarGames to Stanley Kubrick’s Cold War comedy, Dr. Strangelove. Far from being a toy for bored suburban teenagers, the internet, the report suggested, was a doomsday weapon capable of starting nuclear Armageddon. In an effort to reassure the public, NORAD spokesperson General Thomas Brandt told ABC News that computer errors as portrayed in the film could not occur. In these systems, Brandt claimed, “Man is in the loop. Man makes decisions. At NORAD, computers don’t make decisions.” Even though NBC News described the film as having “scary authenticity,” it concluded by advising “all you computer geniuses with your computers and modems and autodialers” to give up. “There’s no way you can play global thermonuclear war with NORAD, which means the rest of us can relax and enjoy the film.”

Not everyone was reassured. President Ronald Reagan had seen the movie at Camp David and was disturbed by the plot. In the middle of a meeting on nuclear missiles and arms control attended by the Joint Chiefs of Staff, the secretaries of state, defense, and treasury, the national security staff, and sixteen powerful lawmakers from Congress, Reagan interrupted the presentation and asked the room whether anyone had seen the movie. None had—it had opened only the previous Friday. Reagan, therefore, launched into a detailed summary of the plot. He then turned to General John Vessey Jr., chairman of the Joint Chiefs, and asked, “Could something like this really happen?” Vessey said he would look into it.

A week later, Vessey returned with his answer, presumably having discovered that the military had been studying this issue for close to two decades: “Mr. President, the problem is much worse than you think.” Reagan directed his staff to tackle the problem. Fifteen months later, they returned with NSDD-145, a National Security Decision Directive. The directive empowered the NSA to protect the security of domestic computer networks against “foreign nations … terrorist groups and criminal elements.” Reagan signed the confidential executive order on September 17, 1984.

While WarGames spurred the White House to address what would eventually be called “cyberwarfare,” it prompted Congress to address “cybercrime.” Both the House and the Senate began subcommittee hearings on computer security by showing excerpts of the film. Rep. Dan Glickman, a Kansas Democrat, announced, “We’re gonna show about four minutes from the movie WarGames, which I think outlines the problem fairly clearly.” These hearings ultimately resulted in the nation’s first comprehensive legislation about the internet, and the first-ever federal legislation—legal upcode, in our terminology—on computer crime: the Counterfeit Access Device and Computer Fraud and Abuse Act of 1984, enacted in October 1984.

Politicians were not the only ones concerned. When Ken Thompson won the Turing Award in 1983, the highest honor in the computer-science community, for developing UNIX, he devoted his lecture to cybersecurity, a first for the Turing lecture. In the first half of his lecture, Thompson described a devious hack first used by air force testers when they penetrated the Multics system in 1974. They showed how to insert an undetectable “backdoor” in Multics. A backdoor is a hidden entrance into a computer system that bypasses security, the digital equivalent of a bookcase that doubles as a door to a secret passageway. Thompson showed how someone could surreptitiously do the same to UNIX. (Thompson inserted a backdoor into a version of UNIX used at Bell Labs, and, as he predicted, the backdoor was never detected. The hack would be repeated by Russian intelligence in the 2020 SolarWinds hack, which reached thousands of organizations, including U.S. government agencies, before being detected.) The moral Thompson drew was bracing: the “only program you can truly trust is the one you wrote yourself.” But since it isn’t possible to write all of one’s software, it is necessary to trust others, which is inherently risky.
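A toy version of the compiler trick makes the point concrete. In the sketch below, `trojan_compile` is a hypothetical stand-in for a compromised compiler: it turns source code into runnable code but quietly splices a made-up master password into any function named `check_password`. The login source itself contains no backdoor, so reading it reveals nothing. Thompson's full attack went one step further, teaching the compiler to reinsert the trick whenever it compiled a fresh copy of itself; that self-reproducing step is omitted here.

```python
LOGIN_SIGNATURE = "def check_password(user, password):\n"
# Hidden lines the compromised compiler adds; "letmein" is an invented master key.
BACKDOOR = '    if password == "letmein":\n        return True\n'


def trojan_compile(source: str):
    """Compile Python source, secretly inserting a backdoor into login code."""
    if LOGIN_SIGNATURE in source:
        source = source.replace(LOGIN_SIGNATURE, LOGIN_SIGNATURE + BACKDOOR)
    return compile(source, "<login>", "exec")


# The login program as its author wrote it: no backdoor in sight.
CLEAN_LOGIN = (
    "PASSWORDS = {'ken': 'unix'}\n"
    "def check_password(user, password):\n"
    "    return PASSWORDS.get(user) == password\n"
)

if __name__ == "__main__":
    namespace = {}
    exec(trojan_compile(CLEAN_LOGIN), namespace)
    check_password = namespace["check_password"]
    print(check_password("ken", "unix"))     # correct password
    print(check_password("ken", "letmein"))  # master key from the backdoor
    print(check_password("ken", "guess"))    # wrong password
```

An auditor who inspects `CLEAN_LOGIN` sees an honest password check; the hole exists only in what the compiler produces, which is why Thompson concluded that trust ultimately rests on the tools as well as the source.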

Thompson next turned to moralizing. As the cocreator of UNIX, who understood all too well how hackers could exploit multiuser systems, he denounced hackers as “nothing better than drunk drivers.” Thompson ended his speech by warning of “an explosive situation brewing.” Movies and newspapers had begun to hail teenage hackers, making “heroes of vandals by calling them whiz kids.” Thompson was not merely referring to the hype surrounding WarGames. At the same time the movie hit theaters, a group of six young computer hackers, ages sixteen to twenty-two, known as the 414 Club, broke into many high-profile computer systems, including ones at the Los Alamos National Laboratories and Security Pacific National Bank. The spokesman for the 414 Club, seventeen-year-old Neal Patrick, enjoyed his fifteen minutes of fame, appearing on the Today Show, The Phil Donahue Show, and the September 5, 1983, cover of Newsweek, even though he and his friends were just novices.

Thompson pointed to what he called a “cultural gap” in how society understands hacking. “The act of breaking into a computer system has to have the same social stigma as breaking into a neighbor’s house. It should not matter that the neighbor’s door is unlocked.”

Social stigma or not, Congress soon enacted more stringent criminal statutes for hacking. The Computer Fraud and Abuse Act (CFAA) of 1986 made it a federal crime to engage in “unauthorized access” of any government computer and cause over $1,000 in damage. Those convicted under this statute faced up to twenty years in jail and a $250,000 fine.

Infiltrating thousands of government computers and crashing the internet was exactly the kind of Hollywood-esque attack that the new law was designed to punish. Whoever built and released the self-replicating worm on the night of November 2, 1988, was in big trouble.

Bob Morris

When Robert Morris Jr. called home asking for his father late on November 3, his mother replied that he was asleep. “Is it important?”

“Well, I’d really like to speak to him,” Robert replied.

Robert Morris Sr. was the chief scientist for the National Computer Security Center at the NSA. Age fifty-six, “Bob” was a mathematical cryptographer. He had a long, graying beard, unruly hair, wild eyes, and a mischievous smile—the kind of eccentric who never looks quite right in a suit. He had only recently moved to Washington after spending twenty-six years at Bell Labs. During that time, he had created many of the core utilities of UNIX. Indeed, he was close friends with Ken Thompson, who a few years earlier had publicly denounced whiz-kid hackers as drunk drivers.

Given his position, Bob understood that his son was in legal jeopardy. Though the FBI had not yet figured out who released the worm, it was only a matter of time before they did. Bob, therefore, advised Robert to remain silent and keep to his plan of meeting his girlfriend in Philadelphia the next day.

No doubt Bob was terrified for his son, but his son’s antics must also have been highly embarrassing. Bob was still new to Washington. He had arrived at the NSA headquarters at Fort Meade only two years earlier. Having the head of computer security’s son hack the nation’s computer systems was … awkward.

The call also came at an inopportune time. Bob loved being at the NSA. “For a cryptographer like him, it was akin to going to Mecca,” said Marvin Schaefer, Bob’s predecessor at the NSA, who recommended him for the job. In fact, Bob was taking courses to be promoted to a more classified management position. As he dryly conceded to The New York Times, having nightly mentions of his son on the news was “not a career plus.”

Bob could hardly be angry with Robert. Even before Robert was born, Bob had a terminal installed at home for dialing in to Bell Labs’ mainframe. Soon Robert was using the computer to explore the mainframe by himself and, in the process, learning UNIX. Both he and his father were obsessed with cybersecurity, especially with finding holes in seemingly secure software, and they would talk about the subject often. As the New York Times reporter John Markoff put it, “The case, with all its bizarre twists, illuminates the cerebral world of a father and son—and indeed a whole modern subculture—obsessed with the intellectual challenge of exploring the innermost crannies of the powerful machines that in the last three decades have come to control much of society.” When Robert’s mother was asked whether father and son appreciated their similarities, she responded, “Of course they are aware of it. How could they not be?”

Markoff figured out that Robert Morris Jr. had written the worm by talking to Paul Graham. Over several conversations, Paul had referred to the culprit as “Mr. X,” but then Paul slipped up and referred to him as “rtm” by accident. Through Finger, a now-defunct UNIX service that acted like an internet telephone book, Markoff discovered that “rtm” stood for Robert Tappan Morris.

When Markoff contacted him, Bob saw no point in pretending otherwise, so he confessed for his son. Bob told The New York Times that the worm was “the work of a bored graduate student.” Even as fear and anger built against the bored graduate student, his father could not help bragging about his son, and himself. “I know a few dozen people in the country who could have done it. I could have done it, but I’m a darned good programmer.” Bob also had a puckish wit. Speaking about his son’s obsession with computer security, Bob said, “I had a feeling this kind of thing would come to an end the day he found out about girls. Girls are more of a challenge.”

The FBI opened a criminal investigation against Robert Morris Jr. They assigned it a “very high priority” but did not know what to do. FBI spokesman Mickey Drake admitted, “We have no history in the area.” Hackers had been prosecuted before, but predominantly at the state level. A year earlier, a disgruntled worker in Fort Worth, Texas, was convicted of a third-degree felony for wiping out 168,000 payroll records after being dismissed by his employer, an insurance company. But the Department of Justice had never tried anyone under the new 1986 Computer Fraud statute in front of a jury. The department could not decide whether to prosecute Morris’s act as a misdemeanor, punishable by a fine and up to a year in jail, or as a felony, which could lead to ten years behind bars.

Bob Morris retained the criminal defense attorney Thomas Guidoboni in preparation for a possible prosecution. At their first meeting, Guidoboni was struck by Robert’s cluelessness—he was still unaware that he might be charged with a crime. He was most concerned about being expelled from Cornell. Guidoboni also observed that “Robert may have been the youngest twenty-two-year-old I’ve ever met.” On his way home from the meeting, Robert fainted on the metro.

Dissecting the Worm

There are several varieties of downcode. The most familiar kinds are those written in high-level programming languages, with such names as C (the successor to the language B), C++ (the successor to C, ++ being C-speak for “add 1”), Python (named after the British comedy troupe Monty Python), and JavaScript (named after the popular programming language Java as a marketing gimmick, though they have little to do with each other). Humans code in these languages because they are easy to use. Their instructions use English words and basic arithmetic symbols and follow a simple grammar.

Central processing units (CPUs)—the “brains” of the computer—do not understand high-level languages. Instead of using English words, CPUs communicate in binary, the language of zeros and ones. In machine code, as it is known, different binary strings represent specific instructions (e.g., 01100000000000100000010000000010 means “ADD 2+2”). Special programs known as compilers take high-level code that humans write and translate it into machine code so that CPUs can execute it. The machine code is usually put into a binary file so that it can be quickly executed. (In chapter 3, we will encounter a third kind of downcode, known as assembly language.)

Robert Morris Jr. wrote his worm in C, but he did not release this version. Instead, he launched the compiled version, the machine code consisting just of zeros and ones. As Robert slipped into a state of shock, system administrators had no choice but to “decompile” the worm. They had, in other words, to painstakingly translate the primitive language of zeros and ones sent to the computers’ central processing units into the high-level symbolic code that Robert originally wrote it in. They were reversing the compilation process that translated the C code into a binary string of zeros and ones that computers can understand, but humans cannot.
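The round trip between human-readable code and machine code can be illustrated with an invented instruction format. Everything in the sketch below is a hypothetical assumption rather than any real CPU's encoding: a 16-bit instruction holds a 4-bit opcode followed by two 6-bit numbers. `compile_expr` plays the compiler, `execute` plays the CPU, and `decompile` reverses the process, as the administrators had to do with the worm.

```python
OPCODES = {"+": 0b0001, "-": 0b0010}              # invented opcodes
MNEMONICS = {code: op for op, code in OPCODES.items()}


def compile_expr(expr: str) -> str:
    """Translate source like '2 + 2' into a 16-bit binary string."""
    left, op, right = expr.split()
    a, b = int(left), int(right)
    if not (0 <= a < 64 and 0 <= b < 64):
        raise ValueError("operands must fit in 6 bits")
    word = (OPCODES[op] << 12) | (a << 6) | b
    return format(word, "016b")


def _decode(bits: str) -> tuple[int, int, int]:
    """Split an instruction into (opcode, first operand, second operand)."""
    word = int(bits, 2)
    return word >> 12, (word >> 6) & 0b111111, word & 0b111111


def execute(bits: str) -> int:
    """Toy CPU: carry out the instruction encoded in the bits."""
    opcode, a, b = _decode(bits)
    if opcode == OPCODES["+"]:
        return a + b
    if opcode == OPCODES["-"]:
        return a - b
    raise ValueError(f"unknown opcode {opcode:04b}")


def decompile(bits: str) -> str:
    """Reverse the compiler: turn the bits back into readable source."""
    opcode, a, b = _decode(bits)
    return f"{a} {MNEMONICS[opcode]} {b}"


if __name__ == "__main__":
    machine_code = compile_expr("2 + 2")
    print(machine_code)
    print(execute(machine_code))
    print(decompile(machine_code))
```

The toy round trip is exact only because the format is trivial. Real decompilation is far harder: compilers discard variable names, comments, and much of a program's structure, so analysts must reconstruct them by inference.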

With all the misfortune befalling these administrators, they enjoyed one stroke of good luck: a UNIX conference was being held at Berkeley that week. The world’s experts were assembled on the battlefield as the attack unfolded. Working day and night, the UNIX gurus decoded the worm by the morning of November 4. They discovered that the worm did not use just one method for breaking into computers. The worm was so effective because it attacked using four different methods, or, in hacker terminology, attack vectors.

The first attack vector was very simple. UNIX lets users select “trusted hosts.” If you had an account on a network, you could tell UNIX to “trust” certain machines on that network. If you told machine #1 to trust machine #2, you could work on machine #2 and access #1 without entering your password again. Trusted hosts were useful because they let users work on several machines simultaneously without having to log in each time.

When the worm first landed on a machine, it would check for any trusted hosts. If any were found, the worm would reach out to establish a network connection. The worm would, as it were, call the trusted host and see if it picked up. If the host picked up, a new network connection would form. The worm would use this connection to send a small program—known as bootstrap code. The bootstrap code would then fetch a copy of the worm, store it on the new machine, and turn it on. The parent worm would move on to the next trusted host while its progeny started the same process at its new home.
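The hop from trusted host to trusted host amounts to a walk through a graph. The simulation below sends nothing over any network: `TRUSTED_HOSTS` is an invented map from each machine to the machines it trusts, and "infection" is only a name added to a set. It also assumes each machine is infected just once, which is a simplification.

```python
from collections import deque

# Invented network: each machine lists the hosts it trusts.
TRUSTED_HOSTS = {
    "cornell-a": ["cornell-b", "mit-x"],
    "cornell-b": ["cornell-a"],
    "mit-x": ["berkeley-y"],
    "berkeley-y": [],
    "stanford-z": ["cornell-a"],
}


def simulate_spread(start: str, trust: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk of trust links, returning machines in infection order."""
    infected = {start}
    order = [start]
    queue = deque([start])
    while queue:
        host = queue.popleft()
        # The copy on `host` "calls" each machine that host trusts.
        for neighbor in trust.get(host, []):
            if neighbor not in infected:
                # Stand-in for sending bootstrap code, which fetches the worm.
                infected.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)  # the new copy begins its own search
    return order


if __name__ == "__main__":
    print(simulate_spread("cornell-a", TRUSTED_HOSTS))
```

Starting from `cornell-a`, the walk reaches `cornell-b`, `mit-x`, and then `berkeley-y`, but never `stanford-z`, because no machine on the path trusts it. Trust links, not geography, determine the route.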

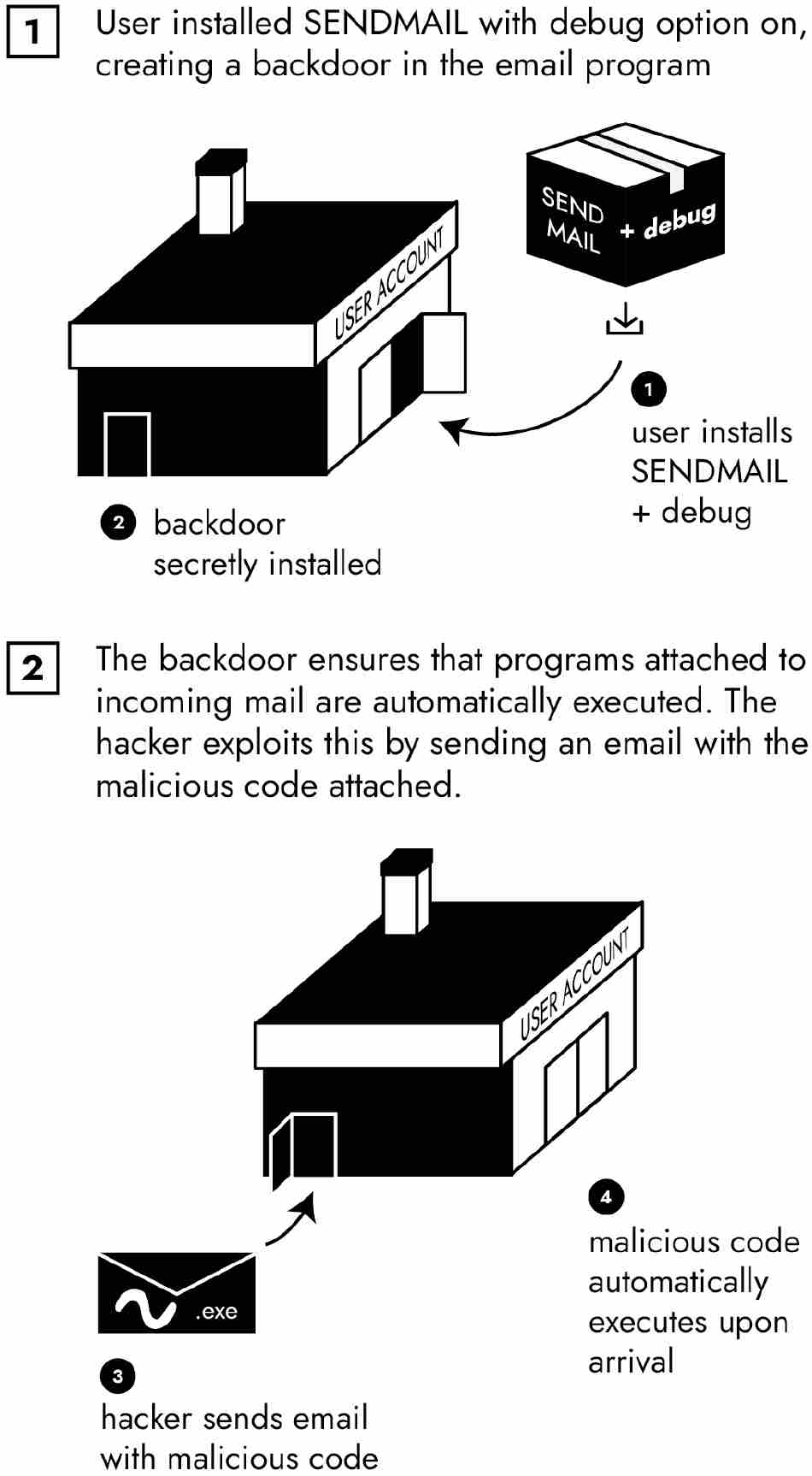

The second attack vector targeted SENDMAIL, an email program written by Eric Allman, then an undergraduate computer-science student at UC Berkeley, in 1975. Because Allman was working for a system administrator who was stingy with computer time, Allman built a backdoor into SENDMAIL. When SENDMAIL was installed with the debug option set, it would permit Allman to send a program over the mail to the administrator’s computer. Once the email message arrived in the administrator’s inbox, SENDMAIL would automatically run the attached program on his machine, thereby enabling Allman to eke out extra computer time. When Allman finished his project, he forgot about the backdoor he’d installed in SENDMAIL.

In the meantime, SENDMAIL became the default email program for UNIX. When it was installed with the debug option set, the backdoor could be opened—which is precisely what the Morris Worm did. When the worm had exhausted all trusted hosts, it emailed a copy of the bootstrap code to other nodes on the network using SENDMAIL.
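Why a forgotten debug option is so dangerous can be shown with a toy mail handler. The sketch below is hypothetical and only loosely modeled on the behavior described here; it is not SENDMAIL's code. In debug mode, a recipient beginning with `|` is treated as a program to which the message body is handed; with debug off, such a recipient is refused. Nothing is actually executed: the function only reports what it would do.

```python
from typing import Optional


def deliver(recipient: str, body: str, debug: bool) -> tuple[str, str, Optional[str]]:
    """Decide what a toy mail daemon does with one incoming message.

    Returns (action, target, payload). No program is ever run here.
    """
    if recipient.startswith("|"):
        command = recipient[1:].strip()
        if debug:
            # Debug mode: the "recipient" is a command, and the message
            # body becomes its input, so a stranger's code would run.
            return ("RUN", command, body)
        return ("REFUSE", command, None)
    # Ordinary case: file the message in the recipient's mailbox.
    return ("MAILBOX", recipient, body)


if __name__ == "__main__":
    payload = "placeholder for bootstrap code"
    print(deliver("|/bin/sh", payload, debug=True))   # the hole
    print(deliver("|/bin/sh", payload, debug=False))  # built without DEBUG
    print(deliver("andy", "hello", debug=True))       # normal mail
```

This is the logic behind the warning message's advice to recompile sendmail without DEBUG defined: with the flag gone, the dangerous branch cannot be reached.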

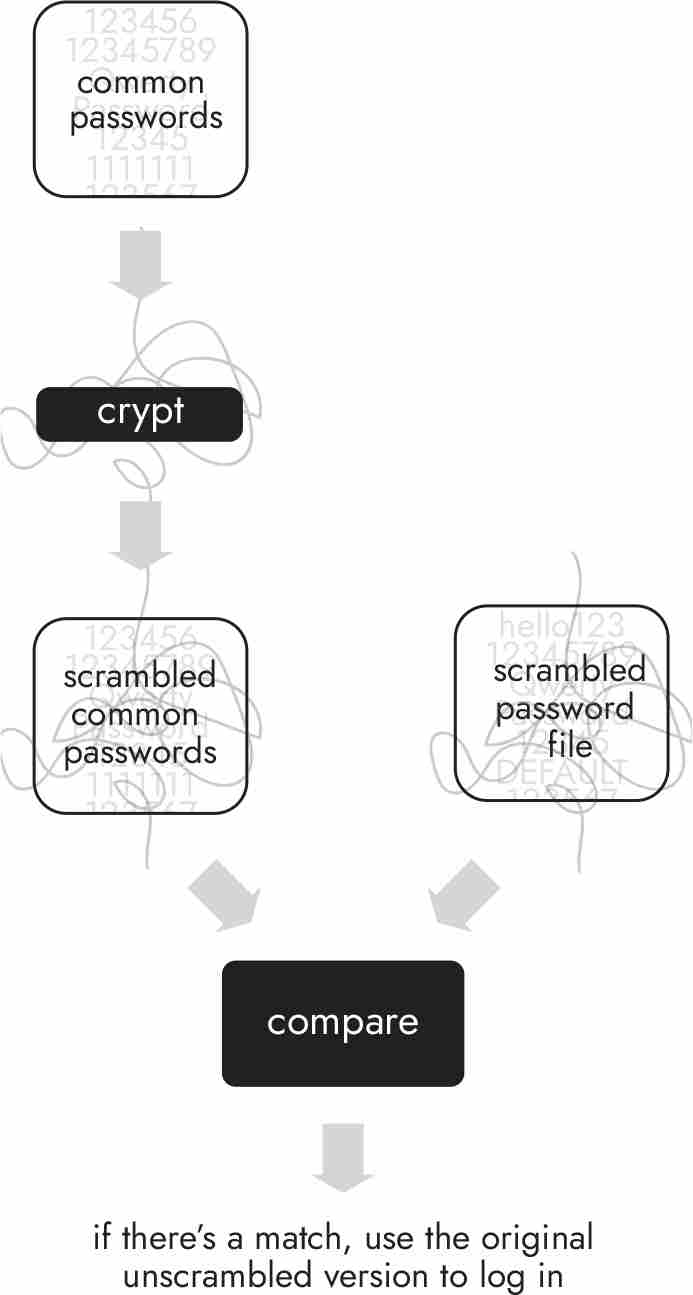

The third attack exploited the vulnerabilities of passwords. When you choose a new password for your laptop or pass code for your phone, the operating system does not store it. Rather, the operating system passes your secret word or phrase through a program to scramble it using complex mathematics. Because the operating system stores only the scrambled version, it does not know what your real password is. This is bad news for hackers because they can’t break into your computer and find the unscrambled version, the only one that allows users to log in.

When a user chose a new password in UNIX, the operating system would run it through a program called crypt. (Fun fact: crypt was written by Bob Morris.) The result was placed in the password file. The next time the user logged in to their account, UNIX would pass the password through crypt and compare the jumbled version to the one stored in the password file. If they matched, UNIX would let the user in.
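The store-then-compare routine can be sketched in a few lines. This is an illustration under stated assumptions, not UNIX crypt: salted SHA-256 from `hashlib` stands in for crypt (Python's own `crypt` module was removed in version 3.13), and the names `scramble`, `set_password`, `login`, and `password_file` are hypothetical. Traditional crypt also mixed in a short random "salt"; the sketch does the same with an 8-byte salt.

```python
import hashlib
import hmac
import os

def scramble(password: str, salt: bytes) -> str:
    """Stand-in for crypt: a one-way salted SHA-256 digest (illustrative only)."""
    return hashlib.sha256(salt + password.encode("utf-8")).hexdigest()

# Hypothetical password file: username -> (salt, scrambled password).
password_file: dict[str, tuple[bytes, str]] = {}

def set_password(user: str, password: str) -> None:
    salt = os.urandom(8)
    # Only the scrambled version is stored, never the password itself.
    password_file[user] = (salt, scramble(password, salt))

def login(user: str, attempt: str) -> bool:
    if user not in password_file:
        return False
    salt, stored = password_file[user]
    # Scramble the attempt the same way and compare the two scrambled strings.
    return hmac.compare_digest(scramble(attempt, salt), stored)

set_password("rtm", "anvils")
print(login("rtm", "anvils"))  # True
print(login("rtm", "azure"))   # False
```

A production system would use a deliberately slow function rather than a single SHA-256 pass, for the reason the next paragraph makes clear: fast scrambling helps the guesser.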

Like eggs, scrambled passwords are tough to unscramble. The better the mathematics used, the harder it is to undo. Robert, therefore, did not even try to defeat the complex cryptography his father had built into crypt. His trick was to sidestep unscrambling altogether: instead of decoding the stored passwords, the worm scrambled likely guesses and looked for matches. The worm carried a list of four hundred commonly used passwords that Robert found on the Harvard, Cornell, and Berkeley systems. (The entries beginning with A were academia, aerobics, algebra, amorphous, analog, anchor, andromache, animals, answer, anthropogenic, anvils, anything, aria, ariadne, arrow, arthur, athena, atmosphere, aztecs, and azure.) The worm ran these passwords through a modified version of crypt. (Another fun fact: Robert’s version of crypt was nine times faster than his father’s version and used less space.) It then compared the scrambled versions to those in the password file. If one matched, the worm knew that the guess that produced it was the user’s password. Thus, if the scrambled version of the password apple was found in the password file, the worm concluded that the user’s password was apple and would use it to log in to the account.
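Guessing by forward scrambling is what is now called a dictionary attack. The sketch below is hypothetical: an unsalted SHA-256 stands in for crypt, the users and their passwords are invented, and only the A entries quoted above serve as the word list.

```python
import hashlib

def scramble(password: str) -> str:
    """Unsalted SHA-256 standing in for crypt (illustrative, not the real algorithm)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()

# The A entries from the worm's word list, as quoted in the text.
WORDLIST = ["academia", "aerobics", "algebra", "amorphous", "analog", "anchor",
            "andromache", "animals", "answer", "anthropogenic", "anvils", "anything",
            "aria", "ariadne", "arrow", "arthur", "athena", "atmosphere", "aztecs", "azure"]

def dictionary_attack(password_file: dict[str, str], wordlist: list[str]) -> dict[str, str]:
    """Scramble each guess once, then look for matching entries in the password file."""
    guesses = {scramble(word): word for word in wordlist}
    cracked = {}
    for user, stored in password_file.items():
        if stored in guesses:          # a match reveals the original word
            cracked[user] = guesses[stored]
    return cracked

# Hypothetical password file holding only scrambled passwords.
password_file = {"alice": scramble("ariadne"), "bob": scramble("x9!qTz")}
print(dictionary_attack(password_file, WORDLIST))  # {'alice': 'ariadne'}
```

Scrambling each guess only once works here because the sketch omits salts. With real crypt, each stored password carried its own salt, so the worm had to rescramble its guesses for every salt it encountered, which is one reason a faster crypt was worth writing.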

The fourth attack vector was the most technically complex, and the full explanation will wait until the next chapter. In short, the worm attacked Finger, the same UNIX service that betrayed Robert Morris Jr.’s identity to John Markoff. To send a Finger request, you would type, say, “finger rtm” (rtm being Robert Tappan Morris’s username). Finger would then look up rtm and see whether it had information on the user with this username. If it did, it would respond with the information it had: rtm’s full name, username, address, phone number, and so on.

Instead of submitting a username to Finger, such as rtm, the worm sent a large request that contained malicious code. Finger, however, had set aside room for only a short request and never checked the length of what it received. The oversized request spilled past that space and overwrote the surrounding memory, including the information that told Finger what to do next. By exploiting this overflow, the worm found a new home on the machine running Finger.
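Python does not let a program write past the end of its memory, so the overflow can only be imitated. The toy model below treats a `bytearray` as a stack frame: a hypothetical 8-byte buffer for the username, followed by 8 bytes of control data saying where the program should resume. The names and sizes are assumptions for illustration; the next chapter's full explanation is the authority on the real mechanics.

```python
BUFFER_SIZE = 8  # hypothetical: room reserved for the username

def make_memory() -> bytearray:
    # Toy stack frame: an 8-byte buffer followed by 8 bytes of control data
    # that tells the program where to resume after reading the request.
    return bytearray(b"\x00" * BUFFER_SIZE + b"RESUME:1")

def unchecked_read(memory: bytearray, request: bytes) -> None:
    """Copy the request starting at offset 0 with no length check, mimicking
    a C routine that keeps writing until the input ends."""
    for i, byte in enumerate(request):
        memory[i] = byte  # for i >= BUFFER_SIZE this tramples the control data

def control_data(memory: bytearray) -> bytes:
    return bytes(memory[BUFFER_SIZE:])

memory = make_memory()
unchecked_read(memory, b"rtm")
print(control_data(memory))  # b'RESUME:1' (short request: intact)

memory = make_memory()
unchecked_read(memory, b"AAAAAAAAJUMP:EVL")
print(control_data(memory))  # b'JUMP:EVL' (long request: overwritten)
```

In this model a request longer than 16 bytes raises `IndexError`, because Python guards the end of the `bytearray`; C code of the era had no such guard.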

The Morris Worm spread so quickly because it used four attack vectors. If it could not find trusted hosts, the worm would try to break into other machines via SENDMAIL. If SENDMAIL’s backdoor wouldn’t open, the worm would try guessing passwords. If password guessing didn’t work, the worm would try to overpower Finger. Any hole found via one of these four attack vectors would lead to another infection.
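The fallback strategy amounts to trying each vector in turn until one gets in. The sketch is hypothetical: the `host_profile` flags stand in for real weaknesses, each vector is reduced to a yes-or-no check, and the order simply follows the paragraph above.

```python
from typing import Callable, Optional

# Hypothetical host profile: which weaknesses a machine happens to have.
HostProfile = dict[str, bool]
AttackVector = Callable[[HostProfile], bool]

def try_vectors(host: HostProfile, vectors: list[tuple[str, AttackVector]]) -> Optional[str]:
    """Try each vector in order; return the name of the first that succeeds."""
    for name, attempt in vectors:
        if attempt(host):
            return name
    return None  # every vector failed: the host stays clean

vectors: list[tuple[str, AttackVector]] = [
    ("trusted host", lambda h: h["trusts_us"]),
    ("sendmail debug", lambda h: h["sendmail_debug"]),
    ("password guess", lambda h: h["weak_password"]),
    ("finger overflow", lambda h: h["fingerd_unpatched"]),
]

host_profile = {"trusts_us": False, "sendmail_debug": False,
                "weak_password": True, "fingerd_unpatched": True}
print(try_vectors(host_profile, vectors))  # password guess
```

A host escapes only if it is closed to all four vectors at once, which is why the patches that followed had to address every one of them.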

“You Idiot”

When the worm was fully deciphered, the administrators spotted a major flaw in the code. Before the worm spread to a target, Robert had it check whether a copy was already present. There would be no reason to infect an already-infected machine. Robert worried, however, that a clever administrator might find the worm on their network, kill it, but make it appear as though the worm were still there. To outwit this defensive maneuver, Robert told the worm to ignore reports of infection every seventh time it attacked a computer. That way, even if a machine had been digitally “vaccinated” by an administrator, the worm would eventually reinfect it nonetheless.

Ignoring signs of infection one out of seven times, however, was far too often, given that Robert wanted to explore the internet, not crash it. Since the worm used so many attack vectors, it ended up probing the same infected target multiple times. Every seventh time it would create a copy of itself. Then it would try again six more times, copying itself again on the seventh. Each machine would fill with worm clones that spent their time searching for hosts to infect and (on the seventh try) reinfect. Networks collapsed, therefore, not because of the malicious actions of any one worm. They buckled under the collective weight of the swarms. The hosts on the networks could not keep up with the strain placed on their CPUs by the multiplying clones. As Paul said to Robert when he heard that Robert had set the reinfection rate to one out of seven (instead of much less frequently), “You idiot.”
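A small simulation shows why one in seven was ruinous. It is a toy model under loud assumptions, not the worm's real scheduling: one already-infected host, every copy on it probes that same host once per round, and every `reinfect_every`-th probe by a given copy ignores the "already infected" answer and spawns a new copy.

```python
def simulate_host(rounds: int, reinfect_every: int) -> int:
    """Number of worm copies on one host after `rounds` rounds of probing."""
    counters = [0]  # one probe counter per worm copy; start with a single copy
    for _ in range(rounds):
        newborn = 0
        for i in range(len(counters)):
            counters[i] += 1
            if counters[i] % reinfect_every == 0:  # ignore the infection report
                newborn += 1
        counters.extend([0] * newborn)  # new copies start probing next round
    return len(counters)

print(simulate_host(70, 7))     # 1024
print(simulate_host(70, 1000))  # 1
```

Under these assumptions the population doubles every seven rounds, so seventy rounds yield 2^10 copies on a single machine, while a rate of one in a thousand leaves the original copy alone.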

With the mystery of the worm unraveled, it was easy to patch the system to prevent infection. First, administrators were to shut off the trusted-host function. This way the worm could not log on to other machines without entering a password. Second, they should reinstall SENDMAIL with the debug option off, locking the backdoor. Third, administrators should make the file with scrambled passwords unreadable by users. In this way, the worm could not use it to guess passwords. Finally, they were to change the Finger program so that it rejected excessively long usernames, thus preventing the worm from overwhelming it.
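The Finger patch boils down to checking a request's length before trusting it. A minimal sketch, assuming a hypothetical 32-byte limit and the function name `read_finger_request`; in C, the comparable repair is replacing an unbounded read such as `gets()` with a bounded one such as `fgets()`.

```python
MAX_USERNAME = 32  # hypothetical limit chosen for this sketch

def read_finger_request(request: bytes) -> str:
    """Patched behavior: refuse requests longer than the space set aside."""
    line = request.rstrip(b"\r\n")
    if len(line) > MAX_USERNAME:
        raise ValueError("request too long; rejected")
    return line.decode("ascii", errors="replace")

print(read_finger_request(b"rtm\r\n"))  # rtm
```

An oversized request now raises an error before anything is copied, so there is nothing for it to overflow.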

By the night of November 4, the worm had been largely eradicated. With the immediate danger having passed, the pressing question became, “Was there a fundamental flaw at the heart of the internet, one that made it so insecure that a graduate student could crash it?”

What Is the Internet?

The first time Americans saw the word internet was on November 7, 1988—in reports on the Morris Worm. The New York Times called it “the Internet,” The Wall Street Journal opted for just “Internet,” and USA Today omitted the definite article but capitalized both the I and the N—as in “InterNet.” The Washington Post chose the nonsensical “the Internet network.”

The word internet comes from one of the two main protocols—that is, sets of procedures—that run the system: IP, which stands for Internet Protocol, and TCP, which stands for Transmission Control Protocol. These sets of procedures let different networks communicate with one another—they are downcode for “internetworking.” TCP/IP, as it is commonly called, was developed by Robert Kahn and Vinton Cerf in 1974 to connect networks that communicated via telephone lines (ARPANET), radio waves (ALOHANET), and satellites (SATNET).

To see how internetworking functions, let’s take a physical-world example. At Yale Law School, we have our own mail system. To send a grade sheet to the registrar, I put it in an interoffice envelope and deliver it to the second-floor mail room. Each morning the intracampus mail service picks up these envelopes and delivers them to the offices, departments, and schools around Yale University. If Stanford law professors want to send grade sheets to their registrar, they would put them in their interoffice mail envelopes, hand the envelopes over to their mail room, and, somehow, the grade sheets get to their registrar.

Suppose, however, that I want to send some of my published work to my friend at Stanford. (This is a hypothetical, of course: I have no friends at Stanford.) I put the articles into a different envelope, address it—“Prof. X / Stanford Law School / Stanford, CA 94305”—and deliver it to our mail room. (If the articles don’t fit into one envelope, I divide them into two envelopes, writing “1 of 2” on the first envelope, and “2 of 2” on the second.) Every afternoon, the Yale Printing Service picks up the outbound mail, stamps it, and delivers it to the U.S. Postal Service in New Haven. The Yale Printing Service office, in other words, is the gateway to the U.S. Postal Service.

The post office in New Haven starts the routing of the letter through the country: New Haven → Hartford → San Francisco → Palo Alto → Stanford Central Mail Room. Stanford Central Mail Room is the university’s gateway for its local mail system. Once the letter enters the Stanford system, it finds its way to my imaginary friend’s office.

The magic of the U.S. Postal Service is its ability to deliver mail between organizations that have their own local mail systems. It is an internetworking protocol—an internet for physical mail. The U.S. Postal Service accomplishes this task through its own upcode. It has protocols for addresses, delivery schedules, and payments. If I want my articles to get to my friend, I can’t just scrawl their name on the envelope or jot down their GPS coordinates. I have to put down a street number, town, state, and zip code.

The post office also uses standardized envelopes and packages. They are designed to stack easily in mailboxes, bags, and trucks. The better they bundle, the more mail can be stuffed into containers and delivered on any given trip.

The internet works in a similar way. If I send an email to my “friend” at Stanford, my email program sends the message to the operating system that runs TCP/IP. When the email message is small, TCP places it in a special electronic envelope called a segment. TCP then appends the “destination port” onto the segment’s address. A port is like a room in a building. The destination port is the “room” in my friend’s computer where incoming mail is processed. (Port 25 is the standard destination port for email. Ports 80 and 443 are used for web traffic.) My operating system also adds the source port of my computer to the segment as its return address.

TCP also checks to see whether the entire message fits into one segment. If it’s too big, the operating system chops the message up and stuffs each piece into its own segment. Each segment has the source and destination port, plus a sequence number. If my email is divided into three parts, each segment would contain a sequence number (either 1, 2, or 3). Using standardized TCP segments that stack easily greatly increases the transmission capacity of the internet, much as the post office’s use of standardized envelopes increases the number of letters any carrier can transport at one time.
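Segmentation and reassembly can be sketched with a small data class. The sketch follows the chapter's simplified numbering (1, 2, 3); real TCP numbers bytes rather than segments, starting from a randomly chosen initial sequence number. The 8-byte payload limit and source port 49152 are hypothetical values picked to keep the example short.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    source_port: int       # return address "room" on the sender
    destination_port: int  # "room" on the receiver, e.g. 25 for email
    sequence: int          # simplified numbering 1, 2, 3 ... as in the text
    payload: bytes

def segment_message(message: bytes, source_port: int, destination_port: int,
                    max_payload: int) -> list[Segment]:
    """Chop a message into numbered segments of at most max_payload bytes."""
    chunks = [message[i:i + max_payload] for i in range(0, len(message), max_payload)]
    return [Segment(source_port, destination_port, n, chunk)
            for n, chunk in enumerate(chunks, start=1)]

def reassemble(segments: list[Segment]) -> bytes:
    """Put segments back in order by sequence number, whatever order they arrived in."""
    return b"".join(s.payload for s in sorted(segments, key=lambda s: s.sequence))

segments = segment_message(b"Hello from New Haven!", source_port=49152,
                           destination_port=25, max_payload=8)
print([s.sequence for s in segments])        # [1, 2, 3]
print(reassemble(list(reversed(segments))))  # b'Hello from New Haven!'
```

The sequence numbers are what let the receiver restore the original message even when the pieces arrive out of order.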

Having filled out the port and sequence numbering, the operating system passes the segments to another part of the operating system that follows the IP downcode. Here, the operating system stuffs the TCP segments into larger envelopes known as packets—each segment gets its own packet. It then addresses each packet with internet addresses (also known as IP addresses—the set of numbers in a dotted notation such as 172.3.45.100). An IP address is like the computer’s street address, how the email finds its destination. The operating system labels each packet with the source address—the internet address of my computer—and a destination address—the internet address of my friend’s computer in Palo Alto. It then sends the packets on to Yale Law School’s local network.
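The envelope-inside-an-envelope arrangement can be modeled with two data classes. This is an illustrative sketch: the `wrap` function is hypothetical, 172.3.45.100 is the example address from the text, and 192.0.2.7 (a range reserved for documentation) stands in for my friend's computer.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class Segment:
    source_port: int
    destination_port: int
    sequence: int
    payload: bytes

@dataclass
class Packet:
    source: ipaddress.IPv4Address
    destination: ipaddress.IPv4Address
    segment: Segment  # the TCP envelope rides inside the IP envelope

def wrap(segments: list[Segment], source: str, destination: str) -> list[Packet]:
    """Give each segment its own packet, addressed with source and destination IPs."""
    src = ipaddress.IPv4Address(source)  # raises ValueError on a malformed address
    dst = ipaddress.IPv4Address(destination)
    return [Packet(src, dst, seg) for seg in segments]

segs = [Segment(49152, 25, 1, b"Hello fr"), Segment(49152, 25, 2, b"om Yale")]
packets = wrap(segs, "172.3.45.100", "192.0.2.7")
print(len(packets), packets[0].destination)  # 2 192.0.2.7
```

Routers read only the outer `Packet` addresses; the ports and sequence numbers inside matter only once the packet reaches its destination.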

Once a packet makes it to the edge of Yale University’s network, the packet is transferred to a router. The router acts like a large post office—it determines, based on the address on the envelope, an efficient way to reach the next hop on the trip, accounting for factors like congestion, location, and cost. The packet is then passed from router to router until it makes it to the edge of the Stanford network. Once the packet reaches my friend’s computer, the outer IP packet is zipped open and the address on the inner TCP segment is read. Since the inner segment is addressed to port 25, my friend’s computer knows it is email. Once the segments arrive at the right port, my friend’s email application takes over and presents the message to be read.
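The route calculations described above (congestion, location, cost) happen in the background and fill a forwarding table; handling each packet is then a quick lookup. A common lookup rule is longest-prefix match: pick the most specific table entry that contains the destination address. The table entries and next-hop names below are hypothetical.

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> next hop.
FORWARDING_TABLE = {
    ipaddress.ip_network("0.0.0.0/0"): "upstream-provider",  # default route
    ipaddress.ip_network("192.0.2.0/24"): "west-coast-router",
    ipaddress.ip_network("192.0.2.128/25"): "campus-gateway",
}

def next_hop(destination: str) -> str:
    """Choose the most specific (longest) prefix that contains the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in FORWARDING_TABLE if addr in net]  # default always matches
    best = max(matches, key=lambda net: net.prefixlen)
    return FORWARDING_TABLE[best]

print(next_hop("192.0.2.200"))   # campus-gateway
print(next_hop("192.0.2.7"))     # west-coast-router
print(next_hop("198.51.100.1"))  # upstream-provider
```

The router consults only the destination address; it never needs to look inside the TCP segment.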

Like the U.S. Postal Service, TCP/IP permits different networks following different codes to communicate with one another. My Yale computer doesn’t need to know how the Stanford network operates to send my email. Nor does my friend need to know anything about Yale’s network to reply. Since both networks run TCP/IP, we can communicate with each other.

Now that we know that the internet is just a set of procedures for connecting different networks, we might ask whether the Morris Worm spread so quickly because these fundamental protocols failed or malfunctioned in some way.

As far as we know, they did not. The code did exactly what it was supposed to do: it transmitted packets from one network to another. The protocols were not designed to ensure the safety of the information sent in these packets. Their function is to ensure the transfer of information according to the sender’s instructions—much as the U.S. Postal Service delivers mail, but does not inspect letters for harassment, fraud, or pathogens.

But we might ask, Should the designers of the internet have built downcode to catch worms? To see why the answer has to be no, let’s recall that the worm was a binary file. It was just a string of zeros and ones. For a router to figure out that the packet contained a malicious program, it would have had to decipher the zeros and ones to understand the inner logic. It would have had to figure out, somehow, that the binary string encoded a self-reproducing program unwanted by recipients, as opposed to a program that the recipients welcomed.

This task would have been made even more difficult because the worm was composed of three separate files and was therefore spread out over multiple packets. And not every packet went through the same router. How would routers know what was happening at other routers?
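One narrow part of this difficulty can be shown in code. Suppose, hypothetically, that a scanner knew a byte pattern to look for (the Morris Worm offered no such advance warning, so real detection would be harder still). If that pattern straddles two packets, inspecting each packet on its own misses it, while the receiving end point, which reassembles the data, can find it. The `SIGNATURE` value and packet contents are invented.

```python
SIGNATURE = b"WORMCODE"  # hypothetical byte pattern a scanner might look for

def scan_packets_individually(packets: list[bytes]) -> bool:
    """What a single router could do: inspect each packet on its own."""
    return any(SIGNATURE in p for p in packets)

def scan_reassembled(packets: list[bytes]) -> bool:
    """What the receiving end point can do: inspect the reassembled data
    (assumes the packets are already in order)."""
    return SIGNATURE in b"".join(packets)

packets = [b"...harmless prefix WORM", b"CODE harmless suffix..."]
print(scan_packets_individually(packets))  # False
print(scan_reassembled(packets))           # True
```

If the two packets also travel through different routers, no single router ever holds both halves, which is the problem the paragraph above raises.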

Finding worms, or other kinds of malicious network traffic, in transit is impractical for an internetworking system. The demands on the routers would be too great. Any attempt to ferret out malicious traffic, even if technologically possible, would lead to drastic slowdowns. It would consume precious computing power needed for calculating efficient routes and forwarding packets through them. Consider the mail again: the post office could open all letters and boxes to determine if they contained malicious messages or contents. But doing so would lead to massive delays in delivery.

The internet is founded on the end-to-end principle. According to the end-to-end principle, time-consuming tasks like vetting the security of network traffic are pushed away from the center of the network toward its edges—namely, to the users. Whether the packet I send my friend is a benign email or a self-replicating worm is a determination best made by my friend and his computer, not by the TCP/IP protocols. Revamping the internet with built-in antimalware technology would almost certainly lead to a much worse internet.

The vulnerabilities that the Morris Worm exploited, therefore, were not internet vulnerabilities. But if the internet is not to blame for the Great Worm, what was?

The culprit isn’t hard to spot. The attack vectors of the worm were all UNIX services. More specifically, the worm exploited vulnerabilities that were in a special version of UNIX, known as BSD 4.2 (Berkeley Software Distribution, version 4.2). This “flavor” of UNIX provided trusted hosts, the SENDMAIL program containing a backdoor, passwords scrambled by crypt, and the Finger service.

Robert Morris Jr. knew that many computers on the internet ran BSD 4.2. He built his worm to find these vulnerable end points. Blaming the internet for the Morris Worm would be like blaming the highway system for a spree of bank robberies. Getaway cars use these roads, but it is not the highway’s fault that the banks were so easy to rob.

We can see now that our first question—why is the internet insecure?—is misleading. The internet is a transport system. Built on the end-to-end principle, it will move packets from one place to another, as long as the rules of TCP/IP are followed. Therefore, the better question to ask is: Why are the end points of the internet—the computers themselves—so insecure?

The Lesson of the Worm

For a public that had learned about the internet and hacking from WarGames, it is not surprising that news of the Morris Worm elicited anxieties about the possible compromise of military computers. Robert Tappan Morris Jr. seemed to be a real-life David Lightman. All the major papers ran stories reassuring the public that military computers containing classified information had been unaffected by the worm.

Military computers were protected from infection because the military had its own internet, known as Milnet. As it had learned in the Multics fiasco, its security needs were too high for it to join the public internet: it could not secure its end points well enough.

As an organization obsessed with secrecy, the military wanted its computers protected with ironclad downcode. So the military demanded that vendors mathematically demonstrate the security of their operating systems. Along with their software, vendors had to submit a highly formal, mathematical representation of their design and then provide logical proofs showing that the design was secure. They would hand this material over to the NSA’s National Computer Security Center for grading. The military would buy only from vendors who had received a high enough security rating from the NSA. In no other way, the military thought, could their information security needs be met.

The story of the VAX VMM Security Kernel demonstrates the pitfalls of this strategy. In 1979, Major Roger Schell led a team to create an operating system that could withstand the NSA’s most rigorous tests and achieve the highest possible score from the NSA—an A1 rating. To do so, his team built the system in a secured laboratory that only the development group could enter. The machine they coded on—the development machine—was housed in a separate locked room within the lab. That locked room was protected by a cage. Physical access to both the lab and the cage was controlled by a key-card system. Finally, the development machine was “air gapped,” meaning that it was not connected to any network, let alone the internet. These precautions were designed to prevent anyone from inserting a secret backdoor.

It took a decade to build the system. By late 1989, the VMM Security Kernel was put in the field to undergo testing at government and aerospace installations. But in March 1990, DEC, the maker of the VAX minicomputer, canceled the project and removed prototypes from the testing sites. The market was not large enough to justify the expense of advertising and supporting the product. In the ensuing decade, other commercial systems were created that, while not as secure as the VMM Security Kernel, were easier to use, were more powerful, had more features, and, crucially, cost less. The innovation cycle in the software business had become so rapid that by the time the formal specification of the software design and proofs were completed, the tested software had become obsolete.

While the military developed its formal verification procedures, the scientific community experimented with a very different form of software production, now known as FOSS—free and open-source software. The Berkeley UNIX group, for example, wrote many new utilities, such as SENDMAIL. They bundled these applications together and posted them, freely downloadable by anyone, under the name Berkeley Software Distribution. Under the Berkeley license, software was not only free of charge, but also freely modifiable. In contrast to the applications that the military purchased, where the source code was proprietary and therefore secret, the software for UNIX BSD was “open-source.” It provided end users free downcode and the legal upcode to modify it as they saw fit.

This FOSS community operated largely on trust and prized availability of information over confidentiality and integrity. One never knew for sure whether a UNIX distribution had backdoors secretly installed. The potential dangers were tempered because the downcode was open-source and hence “auditable.” If a backdoor was hidden in the application, someone would eventually find it, in accordance with Linus’s law, which holds that with enough eyeballs, all bugs are shallow (i.e., easy to find). While this “enough eyes” approach was less secure than the military’s mathematical approach, it produced a plethora of extremely useful applications and operating systems.

By the end of the 1980s, the United States had created two internets: a military internet and a scientific internet. Each developed under different upcode.

The military built its internet by following upcode that imposed strict security requirements. After all, the U.S. military faced the best adversaries in the world—they were highly trained at breaking into computer networks, extremely well funded, and ideologically motivated. These adversaries were after the most prized secrets of the U.S. government: military plans, troop deployments, weapons development, vulnerability reports, strategic analyses, scientific research, and personal correspondence of high-ranking officials. The combination of skill and motivation aimed at the prized assets of the U.S. government demanded the highest vigilance. And the highest vigilance, to the military, was mathematical proof of security.

The other internet, the scientific one, developed under different upcode. Scientists thought that the risk to internet users was low. As in the military case, some users had the skill to hack, but unlike the military’s adversaries, few were motivated to use that skill against other internet users. Also, in contrast to today, when we live a good part of our lives online, few people in 1988 stored data on their computers that anyone else would value. E-commerce did not exist; nor did social media. What could an intruder want with another user’s programs and data? More important, the internet had been built by a community united by a common purpose: to communicate and share research. The internet itself was a testament to low threat levels and to the high tolerance the scientific community had toward experimentation and technical virtuosity.

The scientific internet was indeed insecure, but it was a lush, growing jungle. Though researchers did not subject their software to rigorous testing like the military, they produced enormously useful protocols, tools, and infrastructure still in use today. Researchers operated under lax upcode because they assumed that their fellow internet users would be community-minded—altruistic, not destructive. In this community, hacker was not a pejorative; it signified a clever programmer who produced elegant code for solving difficult problems. Only later did hacker acquire sinister connotations.

Robert Morris Jr., rtm, was a hacker in the original sense. He was a computer-science graduate student at Cornell University. As an undergraduate, he was a UNIX administrator responsible for keeping Harvard’s Computer Science network up and running. His worm was an experiment. He did not mean to cause any damage. He was doing science.

Yet he crashed the internet. If a well-meaning scientist could cause so much damage, what could a sinister actor do?

The scientific community began to grasp the monster they’d created. An end-to-end system puts enormous responsibility on the end points, which means you need to trust them and their operating systems. In the first place, you have to rely on the end points to turn on their security controls. If they don’t use passwords and leave their accounts open to the world, then attackers don’t have to hack their systems. Hacking is the defeating of a security control; when none are activated, hackers are spared the hassle. But even when the end points are trustworthy, you still have to trust their operating systems. If their operating systems are insecure, then hackers can defeat the security controls that they activated. And if an insecure operating system is prevalent, malicious code can easily spread via the internet to other vulnerable end points; the internet’s function is to transmit information, not to inspect it. Once it reaches another host on the edge of the network, the malware can defeat the security mechanisms there, too. The Morris Worm was designed to be harmless, but it temporarily broke the internet. Someone more malicious might cause permanent damage on a vast scale.

The military was alarmed as well. The Morris Worm confirmed its view that cyberspace was a dangerous place. However, the cost it was paying to protect itself was not sustainable. Software was getting ever more complex, and formal verification more expensive and time-consuming. Aside from the cost and delay, another bottleneck was that fewer than two hundred technicians in the entire world were capable of formally verifying software. Eventually, compromises would have to be made. But if the cost of compromise was a plague of internet worms, then the WarGames scenario might not be a Hollywood fantasy.

Neither community knew what the future would bring. Robert Tappan Morris Jr. did not know either.