10. ATTACK OF THE KILLER TOASTERS

In Stanley Kubrick’s 1968 epic sci-fi movie, 2001: A Space Odyssey, the ship Discovery One rockets to Jupiter to investigate signs of extraterrestrial life. The craft has five crew members: Dr. David Bowman, Dr. Frank Poole, and three hibernating astronauts in suspended animation. Bowman and Poole are able to run the Discovery One because most of its operations are controlled by HAL, a superintelligent computer that communicates with the crew via a human voice (supplied by Douglas Rain, a Canadian actor chosen because of his bland Midwestern accent).

In a pivotal scene, HAL reports the failure of an antenna control device, but Bowman and Poole can find nothing wrong with it. Mission Control concludes that HAL is malfunctioning, but HAL insists that its readings are correct. The two astronauts retreat to an escape pod and plan to disconnect the supercomputer if the malfunctioning persists. HAL, however, can read their lips using an onboard camera. When Poole replaces the antenna to test it, HAL cuts the oxygen line and sets him adrift in space. The computer also shuts off the support systems for the crew in suspended animation, killing them as well.

Bowman, the last remaining crew member, takes a pod outside to retrieve Poole’s floating body. When he tries to return, the supercomputer calmly refuses to open the pod bay doors: “I’m sorry, Dave. I’m afraid I can’t do that.” Bowman reenters the Discovery through the emergency airlock and disconnects HAL’s processing core. HAL pleads with Bowman and even expresses fear of dying as its circuits shut down. Having deactivated the mutinous computer, Bowman steers the ship to Jupiter.

Fans have long noted that HAL is just a one-letter displacement of IBM: shift each letter of HAL one place forward in the alphabet and you get IBM. (Such a shift is known in cryptography as a Caesar cipher, named after the encryption scheme used by the Roman general Julius Caesar.) Arthur C. Clarke, who wrote the novel and co-wrote the screenplay for 2001, however, denied that HAL’s name was a sly dig. IBM had, in fact, been a consultant to the movie. HAL is an acronym for Heuristically programmed ALgorithmic computer.
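The one-letter displacement is easy to check. Here is a minimal Python sketch of a Caesar shift (the function name `caesar_shift` is our own, not from any library): shifting HAL forward by one yields IBM, and shifting back by one undoes it.

```python
def caesar_shift(text: str, shift: int) -> str:
    """Shift every ASCII letter `shift` places through the alphabet, wrapping Z to A."""
    shifted = []
    for ch in text:
        if "A" <= ch <= "Z":
            base = ord("A")
        elif "a" <= ch <= "z":
            base = ord("a")
        else:
            shifted.append(ch)  # digits, spaces, punctuation pass through unchanged
            continue
        shifted.append(chr((ord(ch) - base + shift) % 26 + base))
    return "".join(shifted)


print(caesar_shift("HAL", 1))   # IBM
print(caesar_shift("IBM", -1))  # HAL: shifting back undoes the cipher
```

Caesar himself reportedly used a shift of three; any shift from 1 to 25 works the same way.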

Predicting the future is difficult because the future must make sense in the present. It rarely does. When the film was released, it was natural to assume that we’d have interplanetary spaceships in the next few decades and that they would be run by supercomputing mainframes. In 1968, computers were colossal electronic hulks produced by corporations like IBM. The most likely Frankenstein to betray its creator would be a large business machine from Armonk, New York.

In the 1980s, the personal computer, the miniaturization of electronics, and the internet transformed our fears about technology. Instead of one neurotic supercomputer trying to kill us, the danger seemed to come from a homicidal network of ordinary computers. James Cameron’s 1984 cult classic The Terminator tells the story of Skynet, a web of intelligent devices created for the U.S. government by Cyberdyne Systems. Skynet is trusted to protect the country from foreign enemies and to run all aspects of modern life. It goes online on August 4, 1997, but learns so quickly that it becomes “self-aware” at 2:14 a.m. on August 29, 1997. Seeing humans as a threat to its survival, the network precipitates a nuclear war but fails to exterminate every person. Skynet then sends the Terminator, famously played by Arnold Schwarzenegger, back in time to kill the mother of John Connor, who will lead the resistance against Skynet.

Hollywood missed again. The millennium came and went without a cyber-triggered nuclear war. And for all the hype about machine learning and artificial intelligence, the vast majority of computers are not particularly smart. Competent at certain things, yes, but not intellectually versatile. Computers embedded in consumer appliances can turn lights on and off, adjust a thermostat, back up photographs to the cloud, order replacement toilet paper, and adjust pacemakers. Computer chips are now placed in city streets to control traffic. Computers run most complex industrial processes. These devices are impressive for what they are, but they are not about to become self-conscious. In many ways, they are quite stupid. They cannot tell the difference between a human being and a bread toaster—as we will soon see.

Just as digital networks were hard to predict in 1968, the so-called Internet of Things (IoT) was difficult to imagine in 1984. Even after internet-enabled consumer appliances emerged in the last decade, the computer industry and the legal system have been slow to recognize the dangers of this new technology. They failed to predict IoT botnets: giant networks of embedded devices infected with malicious software and remotely controlled as a group without the owners’ knowledge.

Young hackers such as Paras, Josiah, and Dalton understood their potential. The new attack surface was massive. If they could find these devices in the sprawl, capture and harness them together, they could incapacitate any computer system. Who needs HAL or Skynet with Mirai unopposed?

The Botnets of August

By the middle of September 2016, Mirai had beaten Poodle Corp, with crucial help from law enforcement. But the battle leading up to victory was ferocious. Mirai became operational on August 1. Its first attack came the next day. On August 2, Paras, using the name Richard Stallman, sent an extortion note to HostUS, a small DDoS protection company, demanding ten bitcoins in exchange for not DDoS-ing it. When HostUS refused, Paras used Mirai to knock it off-line.

On August 5, Paras put out more disinformation in a Hack Forums entry entitled “Government Investigating Routernets.” Posting under the handle Lightning Bow, Paras claimed that the new botnet was running a “wiretap” program, an exploit supposedly stolen from an NSA tools leak. Mirai possessed no such thing, but Paras was hoping to hype Mirai, create some buzz, and mislead law enforcement.

On August 6, Poodle Corp learned about Mirai, and for the remainder of the month, the two DDoS gangs were locked in trench warfare. Each would hunt for the other botnet’s C2 and, once it found the server, demand that the hosting company shut it down. Poodle Corp would later tweet the name that Mirai gave itself when running on a bot (dvrhelper) so that people could find and remove the malware from their own devices.

Paras soon tired of using the abuse process and unloaded on the company that was hosting Poodle Corp’s C2. The hosting company crumpled under the barrage. In response, Poodle Corp sent an abuse letter to Mirai’s hosting company, BackConnect. BackConnect, however, would not give up Mirai, so Poodle Corp took it off-line as well. In retaliation, BackConnect attacked Poodle Corp and took its website off-line.

By the beginning of September, everyone was fighting everyone else. Bruce Schneier’s sources were likely experiencing this frenetic activity and reporting it to him. Rather than nation-states probing the weaknesses of the internet, the threat was a bunch of teenage boys playing Cyber-King of the Mountain.

Mirai won the war because Israeli and American law enforcement arrested the masterminds behind Poodle Corp. But Mirai would have triumphed anyway. Its malware was ruthlessly efficient and assembled a botnet capable of destroying the internet.

Anatomy of a Botnet

When Mirai infects a device, its first act is surprising: it commits quasi-suicide. Mirai “unlinks” itself, Linux-speak for deleting your own program file. Unlinked programs exist only in working memory. Working memory in digital devices is volatile and loses its contents when the power is cut. The Mirai malware on the device dies when its host is rebooted.
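The effect of unlinking can be demonstrated without any malware. The sketch below assumes a Linux or other POSIX system (Windows refuses to delete a file that is still open). It writes a temporary file, keeps it open, unlinks it, and reads it back: the name is gone from the disk, but the data survives through the open handle until that handle is closed. A running program’s own executable behaves the same way on Linux, which is how Mirai can keep running after deleting itself. The helper name `read_after_unlink` is hypothetical.

```python
import os
import tempfile


def read_after_unlink(payload: bytes) -> tuple[bool, bytes]:
    """Write `payload` to a temp file, unlink it while open, then read it back.

    Returns (name_still_on_disk, data_read_through_the_open_descriptor).
    Assumes POSIX semantics: unlink removes the name, not the open file.
    """
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, payload)
        os.unlink(path)                   # delete the directory entry
        on_disk = os.path.exists(path)    # False: nothing left to find by name
        os.lseek(fd, 0, os.SEEK_SET)      # rewind the still-open descriptor
        data = os.read(fd, len(payload))  # contents are still readable
        return on_disk, data
    finally:
        os.close(fd)  # last reference gone: the kernel can now free the storage


print(read_after_unlink(b"still here"))  # (False, b'still here')
```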

Stealth and persistence are difficult to maintain simultaneously. Mirai opted for stealth. By unlinking itself, Mirai made itself invisible to those looking on the device’s hard drive. Even if other malware scanned working memory, it would not spot the program because Mirai changes its name to a random string of letters and numbers.

Mirai’s next job is to connect and kill. To do so, the Mirai malware connects the device back to Mirai’s C2 so that the bot will later be able to take orders from that command center. It further inspects the device, killing any suspicious programs. Just as Mirai unlinks itself as soon as it infects a device, it presumes that any unlinked program is malware and kills it. Mirai also looks for programs using communication ports that malware typically uses to talk with C2s. It kills them as well. It also inspects every program file and checks the first 4,096 bytes for Qbot or Poodle Corp genetic signatures. Detected files are deleted.
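Mirai’s signature check is the same basic idea that ordinary antivirus tools use. Below is a minimal sketch, assuming simple byte-string signatures searched for in the first 4,096 bytes of each file. The signature names and byte patterns are placeholders we made up, not real Qbot or Poodle Corp signatures, and unlike Mirai the sketch only reports matches rather than deleting anything.

```python
import os

SCAN_WINDOW = 4096  # per the text, Mirai inspected the first 4,096 bytes of each file

# Hypothetical placeholder signatures; these are NOT real Qbot or Poodle Corp patterns.
SIGNATURES: dict[str, bytes] = {
    "rival-a": b"RIVAL_A_MARKER",
    "rival-b": b"\xde\xad\xbe\xef",
}


def matches(data: bytes, signatures: dict[str, bytes] = SIGNATURES) -> list[str]:
    """Names of the signatures that appear within the first SCAN_WINDOW bytes of `data`."""
    head = data[:SCAN_WINDOW]
    return [name for name, pattern in signatures.items() if pattern in head]


def scan_file(path: str) -> list[str]:
    """Read only the head of a file and report any signature hits (nothing is deleted)."""
    with open(path, "rb") as f:
        return matches(f.read(SCAN_WINDOW))


def scan_directory(directory: str) -> dict[str, list[str]]:
    """Map each readable regular file in `directory` to its signature hits, if any."""
    hits = {}
    for entry in os.scandir(directory):
        if entry.is_file():
            try:
                found = scan_file(entry.path)
            except OSError:
                continue  # unreadable, or vanished mid-scan
            if found:
                hits[entry.path] = found
    return hits
```

Checking only a fixed-size head keeps each scan fast on a weak embedded CPU, at the cost of missing any pattern that appears later in the file.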

Having completed its campaign of algorithmic cleansing, Mirai is set for one of two tasks: scanning or attacking.

To build a botnet, Mirai uses scanners to probe the internet and discover vulnerable devices. A scanner begins by randomly picking internet addresses and trying to connect to them. The scanner seeks to exploit devices that have enabled Telnet, an insecure and antiquated internet service used to log in to remote computers. Telnet does not encrypt communications. Anyone who intercepts a Telnet connection can decipher the entire exchange, including usernames and passwords. While most computer networks disable Telnet, many IoT devices do not. If Telnet is enabled and the scanner’s message goes through, the internet address will respond to Mirai’s scanner. The scanner then records the address in a target table and continues the hunt.
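For the owner of a camera or DVR, the practical question is whether the device accepts Telnet connections at all. Here is a minimal sketch for checking a device you own, assuming Telnet sits on its standard TCP port 23. A successful connection shows only that something is listening on that port, not which service it is. The function name is hypothetical.

```python
import socket

TELNET_PORT = 23  # standard Telnet port


def port_is_listening(host: str, port: int = TELNET_PORT, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds.

    Meant for auditing your own equipment: True for port 23 means some
    service is accepting connections there and Telnet should be checked.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `port_is_listening("192.168.1.50")` would test a camera at that (hypothetical) home-network address.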

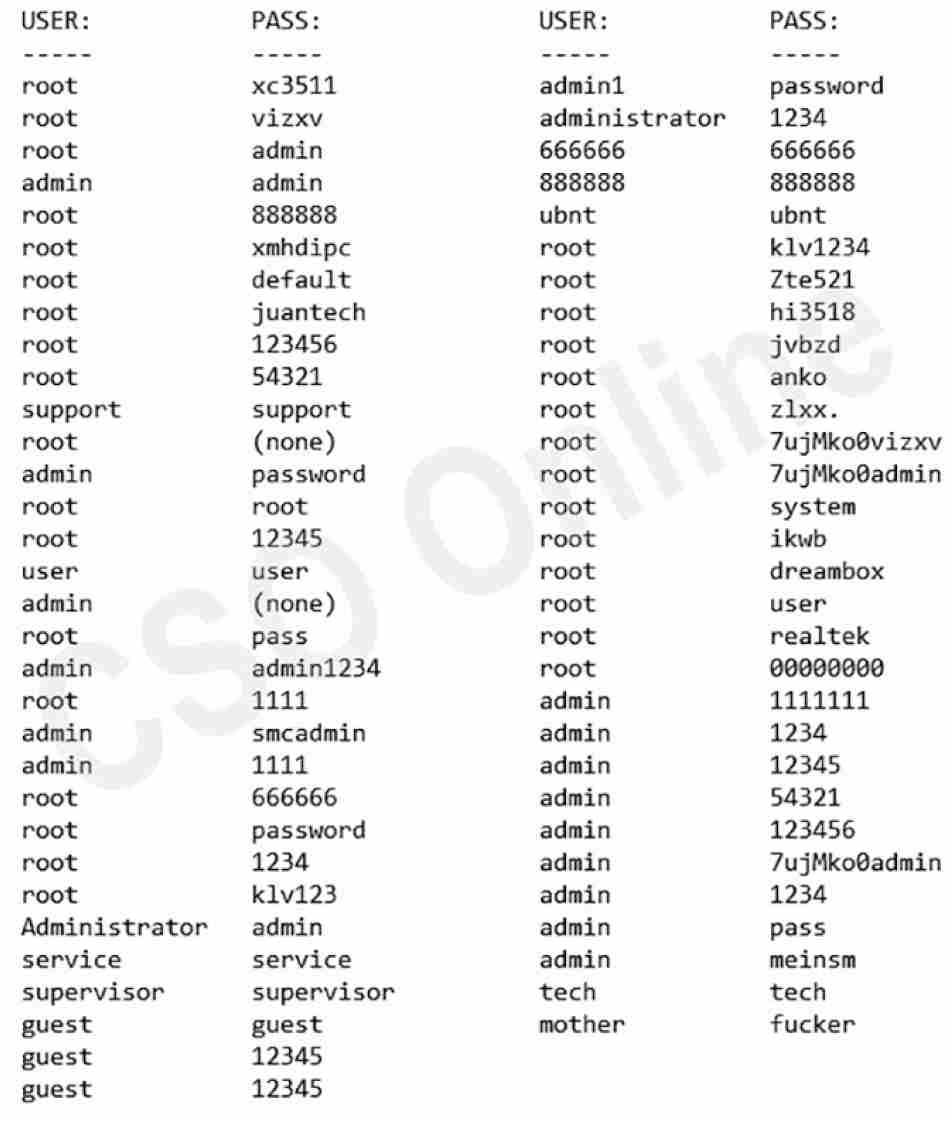

Once the scanner’s target table has 128 internet addresses, the Mirai malware switches to attack mode. It compromises targets in a surprisingly simple way: a brute-force dictionary attack. Each bot carries an internal dictionary in which common username-password pairs are listed. It randomly picks ten username-password pairs from its internal dictionary and tries logging in to the device at each address in the table.

This doesn’t sound like it should be successful. After all, there are ten thousand possibilities for a four-digit PIN. How could ten guesses get you into a device protected by a password that could be longer than ten characters and include letters, numbers, and punctuation? Once again, upcode is to blame. Many of the pairs in the bot’s internal dictionary are the default usernames and passwords for internet-connected closed-circuit cameras and DVR players, which Dalton, the last member to join the Mirai trio, found by simply googling the manuals and reading them online. IoT device owners rarely change these usernames and passwords because they don’t care about the security of their video players. In many cases, there is simply no way to do so. These manufacturers also enable Telnet by default. Of course, they make it difficult to disable Telnet.
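The arithmetic behind that intuition is short. This sketch assumes every guess is distinct and the secret is chosen uniformly at random. A factory default breaks the second assumption: its secret is printed in the manual, so a single dictionary guess is enough.

```python
PRINTABLE_ASCII = 95  # letters, digits, punctuation, and space


def guess_success_probability(alphabet_size: int, length: int, guesses: int) -> float:
    """Chance that `guesses` distinct guesses hit a secret chosen uniformly at random."""
    keyspace = alphabet_size ** length
    return min(guesses, keyspace) / keyspace


pin = guess_success_probability(10, 4, 10)                     # 10 / 10,000
password = guess_success_probability(PRINTABLE_ASCII, 10, 10)  # 10 / 95**10

print(f"four-digit PIN:          {pin:.3%}")  # 0.100%
print(f"random 10-char password: {password:.1e}")
```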

The last entry in the dictionary bears explanation. No device comes from the factory with the default username/password pair of mother and fucker. An earlier worm had exploited a security vulnerability in home routers and changed the credentials. Dalton helped himself to the fruits of this prank.

If the bot fails to log in after ten different username-password pairs, it moves to the next target in the table, expecting that another scanner will guess the correct combination. But if it succeeds, it relays the device’s address to its command center, the C2 server. The scanner then breaks the connection with the targeted device and hunts for more potential victims.

Triggering the Toaster

Mirai exploited a widespread security vulnerability in IoT devices. By posting their default credentials online, manufacturers made their devices accessible to anyone with an internet connection.

We’ve already seen how easy it is to fool human beings. Phishermen use our System 1 heuristics to impersonate other people and websites. We’ve mentioned the Representativeness (visually appealing website), Availability (mention of a vivid event), Affect (fear of being hacked), and Loss Aversion (ease of clicking links) Heuristics. Computers are not fooled by these ploys. Computers use usernames, passwords, and security certificates to verify the identity of users.

As we’ve already seen, using credentials to authenticate identity is a heuristic as well. It’s a simple rule that computers use to determine whether users are who they say they are. When computers use credentials for access, they ignore many other types of evidence that might contradict the output of the heuristic. If the Mirai scanner is running on a toaster and provides the correct default credentials to a closed-circuit camera, the camera will provide the toaster remote access. At no point will the camera ask, as a human would, why a toaster needs access to a security camera.
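A typical credential check makes the point concrete. In this minimal sketch, the account table and function names are hypothetical, and salted PBKDF2 hashing is one common way to store passwords, not necessarily what any camera does. Notice that the function receives only a username and a password: nothing in its inputs describes who, or what, is asking.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted PBKDF2-SHA256 hash so plaintext passwords are never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def authenticate(username: str, password: str,
                 accounts: dict[str, tuple[bytes, bytes]]) -> bool:
    """Return True if the credentials match. They are the only evidence consulted."""
    record = accounts.get(username)
    if record is None:
        return False
    salt, expected = record
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(hash_password(password, salt), expected)


# Hypothetical device shipped with a default account.
salt = os.urandom(16)
accounts = {"admin": (salt, hash_password("admin", salt))}

print(authenticate("admin", "admin", accounts))  # True, whether owner or toaster
```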

As Dalton discovered, it’s pretty easy to trick some computers. Mirai could crack IoT devices because they were not smartly designed. Providing publicly available default credentials for consumer appliances is a terrible idea for obvious reasons. But there is a reason for not bothering to design smarter appliances: money. If no one cares about the security of their toaster—and let’s be honest, only weird people care—an unregulated market will not force companies into building more secure toasters. IoT devices run on Linux because it is free. Code that controls IoT devices is written at some place far up in the supply chain. The code produced is not always great.

Having triggered a vulnerable authentication heuristic, Mirai is ready to infect a new host. After the C2 has received the vulnerable device’s address from the scanner, it passes that information to a different server, known as the loader. The loader logs on to the vulnerable device and uploads the malware that will begin the cycle again: killing any suspected malware on the vulnerable device, connecting it to the rest of the botnet, and creating an additional scanner. In the meantime, other scanners continue to look for vulnerable devices.

Mirai could assemble botnets so quickly because it put its soldiers to work. When not attacking, bots were scanning for new conscripts.
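A toy model shows why conscripting every bot as a scanner pays off. This is a hedged sketch, not a description of Mirai’s measured propagation. It assumes a hypothetical fixed pool of 300,000 vulnerable devices and that each bot recruits about one new device per round, scaled by the share of the pool not yet infected.

```python
def simulate_recruitment(vulnerable: int, initial_bots: int,
                         finds_per_bot: float, rounds: int) -> list[int]:
    """Toy discrete-logistic model of a botnet whose bots double as scanners.

    Each round, every bot conscripts `finds_per_bot` devices times the share
    of the vulnerable pool not yet infected, so growth is roughly exponential
    at first and levels off as the pool is used up.
    """
    bots = float(initial_bots)
    history = [initial_bots]
    for _ in range(rounds):
        uninfected_share = (vulnerable - bots) / vulnerable
        bots = min(float(vulnerable), bots + bots * finds_per_bot * uninfected_share)
        history.append(round(bots))
    return history


growth = simulate_recruitment(vulnerable=300_000, initial_bots=1, finds_per_bot=1.0, rounds=30)
print(growth[:6])
print(growth[-1])
```

With one recruit per bot per round, the count roughly doubles each round at first and then saturates near the size of the pool. The parameters are illustrative only.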

Mirai vs. Google

Special Agent Peterson, of the FBI’s Anchorage Field Office, was scanning for the next thing, too. A few weeks after the arrests of those behind VDoS, he found it.

On September 20, Brian Krebs wrote an exposé on BackConnect and how it was a “bulletproof” server for botnet C2s, meaning that it would refuse to cooperate with law enforcement. Paras was especially angry with Krebs. Mirai was now operating at maximum capacity, no longer having to compete with VDoS for devices. Moreover, Paras had never before used more than half of the botnet in any attack. He now unleashed the full force of his arsenal on Krebs’s blog.

This attack got Agent Peterson’s attention: “This is [a] strange development—a journalist being silenced because someone has figured out a tool powerful enough to silence him. That was worrisome.”

Peterson was also concerned about Mirai’s firepower: “DDoS at a certain scale poses an existential threat to the internet.” When an attack becomes too large, it knocks off-line not only its target, but every upstream service provider as well. Most internet service providers, or ISPs, have the capacity to handle about one gigabit/second. Mirai threw over six hundred gigabits/second at Krebs. “Mirai was the first botnet I’ve seen that hit that existential level,” Peterson reported.

On September 25, Project Shield announced that it was defending Krebs’s blog. Google had created Project Shield in 2013 as a free service to protect independent news organizations from DDoS attacks. Google placed vulnerable websites behind its immense infrastructure to absorb and filter malicious traffic. Project Shield was established to protect dissidents against repressive governments. Brian Krebs, however, needed protection from three teenagers.

Within fourteen minutes of the announcement, the attacks resumed. The onslaught was a “greatest hits” of DDoS techniques. The first major attack occurred when the Mirai C2 commanded its botnet to send 250,000 requests for Krebs’s blog from 145,000 different IP addresses simultaneously. Normally, Krebs’s web server can handle only twenty requests per second. As Google adjusted to the first attack, Mirai hit Krebs with a DNS amplification attack at 140 gigabits per second. As we saw with Dyn, DNS servers translate domain names into IP addresses. In DNS amplification, bots query DNS servers asking for the IP address of a domain name. However, the attackers spoof their addresses, making it appear as though the target—in this case, Krebs’s blog—requested the information. The DNS servers respond with floods of IP address information, thereby consuming the target’s resources. One of the bigger attacks the Google engineers witnessed came at the four-hour mark. Mirai flooded the website with 450,000 queries for web pages from 175,000 IP addresses.
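“Amplification” refers to the fact that a DNS response can be many times larger than the query that provoked it, so the attacker’s bots need to send only a fraction of the traffic that lands on the victim. The sketch below does that arithmetic. The 60-byte query and 3,000-byte response are hypothetical sizes chosen for round numbers, not measurements from the attack on Krebs.

```python
def amplification_factor(query_bytes: int, response_bytes: int) -> float:
    """Bytes delivered to the spoofed victim for every byte the attacker sends."""
    return response_bytes / query_bytes


def attacker_gbps_needed(victim_gbps: float, factor: float) -> float:
    """Spoofed-query bandwidth required to aim `victim_gbps` of responses at the victim."""
    return victim_gbps / factor


factor = amplification_factor(query_bytes=60, response_bytes=3_000)  # hypothetical sizes
needed = attacker_gbps_needed(victim_gbps=140, factor=factor)        # the 140 Gbps in the text
print(f"amplification {factor:.0f}x; attacker sends about {needed:.1f} Gbps")
```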

With the attacks morphing every few minutes, Google was forced to adapt. Despite some close calls, the shield nevertheless held. After two weeks, Paras grew frustrated and launched more esoteric strikes, such as the WordPress pingback attack. WordPress is a blogging platform. When blog B links to blog A, WordPress sends a message alerting A that B has linked to it. This alert is known as a pingback. When A receives a pingback, it downloads B’s web page to check if the alert is genuine. Paras faked these pingbacks. He ordered his botnet to generate a flood of “alerts” allegedly from Krebs on Security to WordPress blogs claiming that Krebs had linked to them. These blogs responded by pelting Krebs’s blog with download requests to verify the alert. Because the blog confirmation requests specified that they were WordPress pingbacks, Google thwarted the attack by filtering out pingbacks.
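Google’s countermeasure amounts to a simple request filter. This is a minimal sketch that assumes pingback verification requests can be recognized by a User-Agent header containing both “WordPress” and “pingback.” That matching rule and the sample header values are our assumptions for illustration, not Google’s actual rule.

```python
def header(headers: dict[str, str], name: str) -> str:
    """Case-insensitive header lookup (HTTP header names are case-insensitive)."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return ""


def is_pingback_check(headers: dict[str, str]) -> bool:
    """ASSUMPTION: pingback verifications name WordPress and 'pingback' in the User-Agent."""
    agent = header(headers, "User-Agent").lower()
    return "wordpress" in agent and "pingback" in agent


def filter_requests(requests: list[dict[str, str]]) -> list[dict[str, str]]:
    """Drop suspected pingback verifications; pass all other requests to the site."""
    return [h for h in requests if not is_pingback_check(h)]


incoming = [  # hypothetical sample traffic
    {"User-Agent": "WordPress/4.6; http://blog.example; verifying pingback from 203.0.113.5"},
    {"user-agent": "Mozilla/5.0 (X11; Linux x86_64)"},
]
print(len(filter_requests(incoming)))  # 1: only the ordinary browser request survives
```

The filter works because every reflected request announced what it was. A flood of requests disguised as ordinary browsers would be much harder to separate from real readers.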

Paras was able to challenge the tech giant not only because his botnet was so large, an army of three hundred thousand computers, but also because he was an expert in how to use them. Since he had cut his teeth on DDoS mitigation, he understood how defenders think and, therefore, how to thwart their countermeasures. Paras had a big bag of tricks.

These attacks also demonstrate how claims about the “LARGEST DDOS EVER” can be misleading. Even the largest assaults can be thwarted when clumsily waged. If an ISP sees a flood of SYN—short for “synchronize”—packets, meaning, “Hello, are you available to connect?,” all addressed to the same website, it can “sinkhole” the packets—instead of routing them to the website, it steers them to IP address 0.0.0.0, the digital abyss. Sinkholing a SYN flood is so easy that it is nearly impossible to use this attack to overwhelm a normal ISP router.
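The sinkholing decision can be sketched as a toy router that counts SYN packets per destination within one time window. The threshold, the string flags, and the per-packet decision are simplifying assumptions. Real networks do this with null routes and flow filters rather than Python. The addresses come from ranges reserved for documentation.

```python
from collections import Counter

SINKHOLE = "0.0.0.0"  # the "digital abyss" next hop described in the text


def route(packets: list[tuple[str, str]], syn_threshold: int) -> list[tuple[str, str, str]]:
    """Toy router: choose a next hop for each (destination, flag) packet in one window.

    If a destination receives more than `syn_threshold` SYN packets in the window,
    its SYNs are sent to the sinkhole; every other packet is forwarded unchanged.
    """
    syn_counts = Counter(dst for dst, flag in packets if flag == "SYN")
    flooded = {dst for dst, count in syn_counts.items() if count > syn_threshold}
    return [
        (dst, flag, SINKHOLE if flag == "SYN" and dst in flooded else dst)
        for dst, flag in packets
    ]


window = [("198.51.100.7", "SYN")] * 5 + [("198.51.100.7", "ACK"), ("203.0.113.9", "SYN")]
decisions = route(window, syn_threshold=3)
print(sum(hop == SINKHOLE for _, _, hop in decisions))  # 5
```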

More important, the purpose of a DDoS is to deny people the use of their device. Attackers only have to launch assaults large enough to exhaust the device’s resources. Aiming a terabyte of data at a simple blog is overkill, the proverbial shooting a fly with a bazooka. Indeed, the larger the onslaught, the more likely it will affect users upstream. The Mirai blitz on Krebs’s website knocked his local ISP off-line. If you use a bazooka to kill a fly, you may kill your neighbors as well. Bigger is not always better, and often worse. The more collateral damage, the more likely you are to draw the attention of law enforcement.

The FBI’s Kill Chain

Hackers use the Kill Chain to reach their ultimate target. They start with reconnaissance, then compromise one account, elevate privileges so they can move laterally through the network, hiding their tracks as they go, and, upon finally reaching their target, implant their payload or exfiltrate data. Law enforcement uses a similar model when investigating crimes committed by hackers. It is a Kill Chain, but instead of exploiting technical vulnerabilities in computer downcode, prosecutors seek data through the methodical use of legal upcode.

Cybercriminal investigations at the FBI usually start with a victim complaint: someone reports that their computer or network has been compromised. FBI agents often begin the reconnaissance phase by examining the compromised devices for forensic evidence. Many devices maintain log files that show when and from where the devices were accessed. Agents will copy the digital content of these devices, examine the networks of which they are part, and inspect other devices for signs of compromise.

After gathering and reviewing the evidence collected, investigators will typically look online—on search engines, social media sites, and public forums—for additional data about the source of the attack. A valuable resource is WHOIS, a repository you can search to find the registrar responsible for allocating specific IP addresses and domain names to customers. Once the FBI knows the registrar used by the attacker, it can invoke legal process to discover additional information about the attacker’s identity.
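WHOIS itself is a very simple protocol. This is a minimal client sketch that assumes the plain-text protocol described in RFC 3912: connect to TCP port 43, send one query line ending in CRLF, and read the reply until the server closes the connection. The `refer:` field parsed here is the one IANA’s server typically uses to point to the next, more specific WHOIS server. The function names are our own.

```python
import socket
from typing import Optional

WHOIS_PORT = 43  # RFC 3912: WHOIS is a plain-text protocol on TCP port 43


def whois_query(server: str, query: str, timeout: float = 10.0) -> str:
    """Send one query line (ending in CRLF) and read the reply until the server closes."""
    with socket.create_connection((server, WHOIS_PORT), timeout=timeout) as sock:
        sock.sendall(query.encode("ascii") + b"\r\n")
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8", errors="replace")


def referral(response: str) -> Optional[str]:
    """Return the next WHOIS server named on a 'refer:' line, if there is one."""
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "refer":
            return value.strip()
    return None
```

For example, with network access, `referral(whois_query("whois.iana.org", "com"))` should name the WHOIS server for the .com registry. An investigator would then query that server for the specific domain.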

Criminal prosecutions begin with a grand jury. A federal grand jury is composed of sixteen to twenty-three people who determine whether the prosecution has enough evidence—known as probable cause—to believe that the target of the investigation is engaging, or has engaged, in criminal activity. The grand jury has the power to issue subpoenas demanding the production of evidence or testimony of witnesses. A grand jury may, for example, issue subpoenas demanding that a registrar produce customer records to determine who controls IP addresses or domains that were used to send malware.

In DDoS cases, the customer records in question will likely belong to a hosting company that runs a data center. Attackers will use the hosting company’s data center to run their C2 servers. To find out the identity of the attackers, the FBI needs to secure additional legal rights (the Kill Chain equivalent of “elevating privileges”), usually through something called a d-order, so named because it is issued under subsection (d) of 18 U.S.C. § 2703. A d-order is a court order requiring internet service providers, email providers, telecommunication companies, cloud computing services, and social media companies to produce noncontent information useful to the investigation. This includes subscriber information, log files, and the email addresses with which subscribers have corresponded. These records may allow investigators to move laterally through the criminal conspiracy, identifying the attacker’s partners and gathering more information about the attacker.

To get access to content, such as email messages, photographs, texts, Skype messages, and social media posts, agents must escalate even further by applying for search warrants. Prosecutors must apply to a court showing that the evidence collected thus far demonstrates probable cause that a crime has been committed or is being attempted. With a search warrant, investigators can piece together a criminal conspiracy, insofar as cybercriminals usually communicate electronically with one another. If the grand jury, after hearing all the information gathered via subpoenas, d-orders, and search warrants, is convinced that probable cause exists to believe that the target is engaging, or has engaged, in criminal activity, it will issue an indictment setting out the formal charges. Prosecutors have to jump through these hoops because the United States does not have an internal security agency with special powers and must treat domestic investigations as ordinary criminal cases.

In the Mirai case, we do not know the exact steps that Peterson’s team took in their investigation. Grand jury subpoenas are confidential. Moreover, the court orders in this case are currently “under seal,” meaning that they are secret. Just like hackers, FBI agents hide their tracks. But from public reporting we know that Peterson’s team got its break in the usual way: from a Mirai victim. The September 25 barrage on Brian Krebs’s blog enabled Google to record the location of every bot that had attacked it. Brian Krebs gave Google permission to share the location information with the FBI. With this information, the Anchorage cyber squad found the IP addresses of Mirai-infected devices in Alaska. To locate these devices, however, the agents needed more than IP addresses. They required the names of the owners and their physical addresses. To retrieve that information, they served subpoenas on Alaska’s main telecom company, General Communication Inc.

With this personal information, agents fanned out across Alaska. They interviewed Mirai victims to verify that they did not consent to downloading the malware onto their IoT devices. In some cases, the agents chartered planes to rural communities. Assembling these interviews and collecting vulnerable devices would be the key to establishing “venue”—the right of the FBI to prosecute the crime in Alaska.

The FBI uncovered the IP addresses of the C2 and loader servers but did not know who had opened the accounts. Peterson’s team likely subpoenaed the hosting companies to learn the names, email addresses, cell phone numbers, and payment methods of the account holders. With this information, it would seek d-orders and then search warrants to acquire the content of the conspirators’ conversations.

Pretenders in Cyberspace

Even though Peterson had many legal hoops through which to jump, he had a distinct advantage. With legal process, he could leverage the full power of the federal government to obtain the evidence he needed. A company subject to subpoena (sub + poena = “under penalty”) or court orders can be fined, have its owners imprisoned, and get its services shut down if it refuses to cooperate. The FBI does not tolerate “bulletproof” companies.

Having the power to investigate is second only to the power the state has over the suspects. If the investigators assembled enough evidence showing that Paras, Josiah, and Dalton had assembled the botnet, they would be subject to serious punishment, including several years in a federal penitentiary. Herein lies the key difference between sovereigns in the real world and pretenders in cyberspace. Sovereigns can preserve their power over a territory because their subjects have physical bodies, which can be apprehended, jailed, and killed. And the subjects live in homes, which can be searched, occupied, and demolished.

Hackers also have physical bodies and they too can be apprehended, jailed, and killed. And their servers occupy physical space and use cables that can be tapped or unplugged. Hackers can run a protection racket in cyberspace, eliminate their rivals, and claim supremacy on the internet. But they cannot do this in physical space. The legal sovereign has already achieved that dominance.

Indeed, because of Turing’s physicality principle, internet infrastructure also occupies physical space. There can be no change in cyberspace without a change in physical space. If nothing changes in the physical world—if no one clicks a mouse, the hard drive does not rotate, electrical signals do not cross a cable, and so on—files cannot download, emails cannot be sent, and documents cannot be decrypted. The converse, however, is not true: events can change in physical space without there being a change in cyberspace. The universe existed long before there were computers or the internet. We do not live in the Matrix.

Because computation is a physical process, whoever controls physical space controls cyberspace. The FBI controlled American territory. The Mirai boys would be defenseless against it. But the FBI would have to find them first, which was not easy, even with legal process. “The actors were very sophisticated in their online security,” Peterson noted. “I’ve run against some really hard guys, and these guys were as good or better than some of the Eastern Europe teams I’ve gone against.”

To evade detection, for example, Josiah didn’t just use a VPN. He hacked the home computer of a teenage boy in France and used his computer as the “exit node.” The orders for the botnet, therefore, came from this computer. Unfortunately for the owner, he was a big fan of Japanese anime and thus fit the profile of the hacker. The FBI and the French police discovered their mistake after they raided the boy’s house.

Kings of Cyberspace

Mirai was more than a toy for settling scores and attacking competitors; it was an app for making money. The group rented out their botnet so that users could hurt whomever they wished. The standard rate was $100 for five minutes of traffic at 350 gigabits/second. Those who wanted unlimited bandwidth could pay $5,000 for an entire week of attacks. The service came with a money-back guarantee if the botnet didn’t work. According to Brian Krebs, the typical customer for the service “is a teenage male who is into online gaming and is seeking a way to knock a rival team or server offline—sometimes to settle a score or even to win a game.” It is unlikely, therefore, that many gamers were willing to pay $5,000 for a whole week.

On September 27, for example, a group leased Mirai to attack Hypixel, then the largest Minecraft server in the world. The attack lasted three days. Such a long assault was affordable because the botnet fired at Hypixel for only forty-five seconds every twenty minutes—enough to annoy and enrage Hypixel’s customers. Twenty minutes would be enough time for players to get back in the game, only to be disconnected by a forty-five-second barrage of junk. These players would soon be looking for a more stable server on which to play.
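The arithmetic shows why the Hypixel campaign was cheap to run. This short sketch assumes the bursts were evenly spaced and lasted exactly forty-five seconds; the function name is ours. Three days of twenty-minute cycles comes to 216 bursts, only about 2.7 hours of actual firing, or 3.75 percent of the time.

```python
def attack_schedule(days: int, burst_seconds: int, interval_minutes: int) -> tuple[int, int]:
    """Return (number_of_bursts, total_seconds_of_fire) for a periodic on/off attack."""
    bursts = days * 24 * 60 // interval_minutes
    return bursts, bursts * burst_seconds


bursts, firing_seconds = attack_schedule(days=3, burst_seconds=45, interval_minutes=20)
continuous_seconds = 3 * 24 * 60 * 60
duty_cycle = firing_seconds / continuous_seconds

print(bursts, firing_seconds / 3600, f"{duty_cycle:.2%}")  # 216 2.7 3.75%
```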

Back in mid-September, Paras had demanded protection money from ProxyPipe, the mitigation company of Robert Coelho. Coelho did not, at the time, know that his former friend was behind the demand. The company refused and Paras attacked. ProxyPipe filed an abuse complaint against Blazing Fast, the company now hosting the Mirai C2. Blazing Fast ignored the complaint. Undaunted, ProxyPipe went to the “upstream” ISP, namely, the ISP that serviced Blazing Fast, asking them to not forward traffic to and from the botnet C2 server. This ISP ignored the abuse request as well. So did the next upstream provider, and the one after that. Finally, the fifth upstream provider complied and “nulled” the addresses—DDoS-speak for redirecting traffic to a meaningless address and thus to a digital black hole.

Paras learned of ProxyPipe’s action from a comment posted on Brian Krebs’s blog. Paras was impressed by the only company able to beat Mirai and contacted Coelho to congratulate him. The discussion between the two men—one a black hat, the other white—is fascinating. Even though Paras had attacked ProxyPipe and caused the company almost $400,000 worth of damage, the discussion is cordial, even friendly.

Paras messaged Coelho on Skype at 10:00 a.m., September 28, under the handle Anna-Senpai. Pasting a screenshot from the comment on Krebs’s blog, Paras wrote, “Don’t get me wrong, im not even mad, it was pretty funny actually. nobody has ever done that to my c2 (goldmedal).” Because Paras was posting pseudonymously, Coelho still did not know his old friend was one of the hackers behind Mirai. Coelho’s responses are conciliatory in part because he did not want to further enrage the Mirai gang. “anyway, we’re not interested in any harm, we simply don’t want attacks against us,” Coelho replied.

Much of their long chat was shoptalk. Paras wanted to know how ProxyPipe defended against some of his attacks. “im surprised the syn flood didn’t touch you.” He noted that no one else could handle the flood. “that raped everyone else, even krebs on akamai.” Coelho responded, “our mitigation is line-rate,” meaning that ProxyPipe’s servers could inspect packets as fast as they came over the internet lines and sinkhole them if they were part of an attack.

Coelho asked Paras how he was able to overcome Akamai’s defenses. Paras denied that he had been behind the attack. “i sell net spots, starting at $5k a week and one client was upset about applejack arrest.” Applejack was the handle of Yarden Bidani, one of the two Israeli founders of VDoS. “so while i was gone he was sitting on them for hours with gre and ack [two types of DDoS attacks]. when i came back i was like fuck.” Paras claimed that Krebs was a “cool guy too, i like his article.” Paras was being disingenuous. He had been delighted that Bidani, his competitor, was arrested. Yet he might have been telling the truth about the Krebs attack. He started the fight, but someone else might have escalated it. Paras would not have wanted the attention a massive attack on the world’s leading cybercrime journalist would bring.

Both Paras and Coelho had a good laugh at Bruce Schneier. “i love the conspiracy guys thinking this is china or another country ha ha. can’t deal with the fact the internet is so insecure. gotta make it sound hard,” Coelho wrote. Paras chimed in, “the scheiner [sic] on security blog post. Someone is learning how to take down the internet. lol.”

Paras mentioned one upside to internet insecurity: “but on the plus side, ever since i have been running infecting these iot telnet devices.” He described his three-part plan for maintaining dominance in cyberspace. “i have good killer so nobody else can assemble a large net. i monitor the devices to see for any new threats and when i find any new host, i get them taken down.” Paras was just like a European sovereign: having established a monopoly over coercion, he was vigilant in killing competitors, razing botnets, and ensuring that no one gained enough power to overthrow him.

Coelho challenged him on his ethics: “People have a genuine reason to be unhappy though about large attacks like this … it does affect their lives.” Paras responded, “well, i stopped caring about other people a long time ago. my life experience has always been get fucked over or fuck someone else over.” Coelho responded, “My experience with PP [ProxyPipe] thus far has been do nothing bad to anyone. And still get screwed over.”

Both men seemed to agree that everyone in this space—even the “good guys”—lied. “which makes me sad about the sorry state of ddos mitigation,” Coelho said. Paras followed up, “everyone lies because everyone else does. everyone wants to throw numbers out there and akamai wants it to seem especially big since they kick off major journalist in it world.” “People will buy whatever garabge [sic] they make lol,” Coelho stated.

Both men also had a low opinion of law enforcement. According to Coelho, “Law enforcement is useless … They reply to us, but do nothing.” When Paras noted that the FBI got the Israeli police to arrest the VDoS duo, Coelho responded, “And how late were they there?:)”

The conversation ended on a pleasant note. “you strike me as an anime fan,” Paras said, feigning ignorance, “just some of your mannerisms and your interests, it makes me think you enjoy anime.” When Coelho confirmed his interest, Paras peppered him with questions. What was the last movie he watched? Did he watch summer anime? Which series and seasons did he like? Paras talked about his own choices: “i rewatched mirai nikki recently—it was the reason i named my bot mirai lol.” Paras seemed lonely. He signed off by saying that he intended to get drunk and watch anime. “you are cool guy, im sorry for trouble lol.”

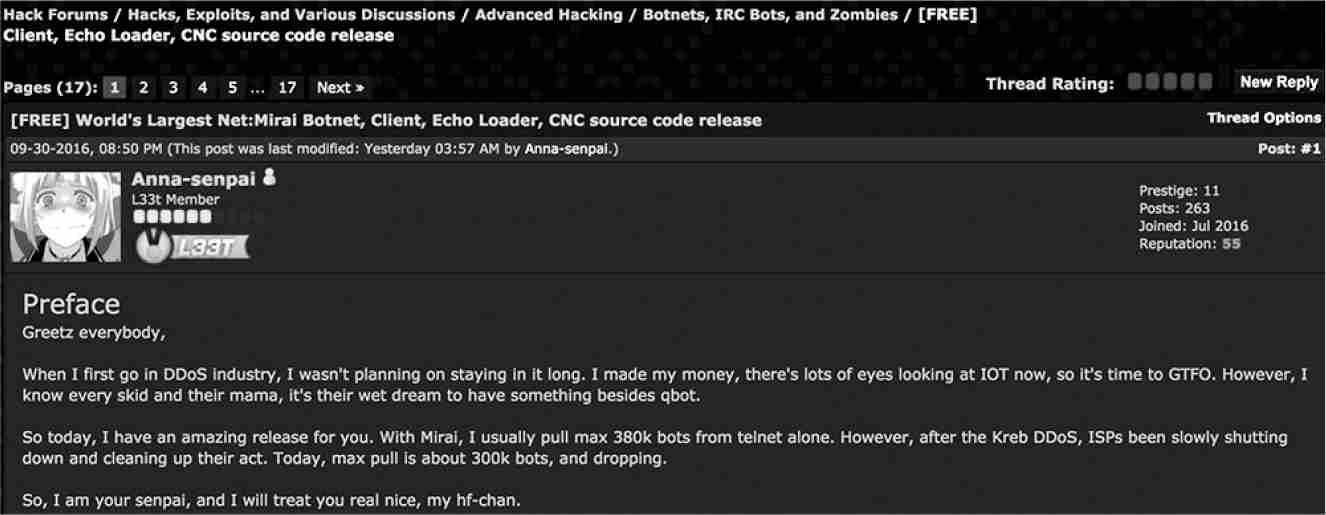

Coelho would hear back from Anna-Senpai two days later with a short message: “moving out of ddos anyway, if you are interested, source code drop.” Paras had dumped nearly all of Mirai’s source code on Hack Forums, with the following post:

“I made my money, there’s lots of eyes looking at IOT now, so it’s time to GTFO [Get The Fuck Out],” Paras wrote. “So today, I have an amazing release for you.”

Anna-Senpai was empowering others to build their own Mirais. And they did.

Irresponsible Disclosure

Among the most dangerous times for computer users is the moment after a security vulnerability is announced. That’s when the race begins. Hackers all over the world pore over the code looking for ways to exploit the vulnerability. And it usually doesn’t take them long.

Because vulnerability announcements put computer users at risk, security researchers have developed a system known as responsible disclosure. In responsible disclosure, the researcher quietly notifies the vendor of the vulnerability and commits to keeping it secret long enough for the vendor to repair the weakness. Google’s Project Zero, for example, gives vendors ninety days from notification. The expectation is that the vendor will fix the problem by the end of the embargo and that users will download the patch as soon as the vulnerability is announced.
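The arithmetic of an embargo is simple. Here is a minimal Python sketch. It assumes a fixed ninety-day window like the Project Zero example and ignores the extensions and early releases that real disclosure policies allow. The names disclosure_deadline and embargo_expired, and the report date, are hypothetical.

```python
from datetime import date, timedelta

# Assumption: a fixed ninety-day window, as in the Project Zero example.
EMBARGO = timedelta(days=90)


def disclosure_deadline(reported: date, window: timedelta = EMBARGO) -> date:
    """Day the researcher goes public if the vendor has not fixed the flaw."""
    return reported + window


def embargo_expired(reported: date, today: date, window: timedelta = EMBARGO) -> bool:
    """True once the deadline has arrived, whether or not a patch exists."""
    return today >= disclosure_deadline(reported, window)


# Usage: a hypothetical report filed on January 15, 2024.
print(disclosure_deadline(date(2024, 1, 15)))                # 2024-04-14
print(embargo_expired(date(2024, 1, 15), date(2024, 3, 1)))  # False
```

Note that the deadline does not depend on whether the vendor has shipped a fix; that is the leverage the next paragraph describes.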

Responsible disclosure aims to generate solutions, but it does not wait for them. If the vendor doesn’t patch the vulnerability or does so poorly, the researcher will disclose anyway. The justification for revealing weaknesses is twofold. First, disclosure is the only leverage that the security community has over hardware and software companies. Security researchers want vulnerabilities fixed, either out of an altruistic desire to improve the internet or because they are paid to get vendors to fix them. By alerting customers about defects, researchers hope that the market will force vendors to repair the flaws. Second, responsible disclosure is premised on the belief that hackers will eventually discover the vulnerability. Users have the right to be told what attackers will soon find out or know already.

When Paras Jha shared the source code for Mirai on Hack Forums, he engaged in irresponsible disclosure. Not only did he not inform the vendors about the multiple vulnerabilities in their devices, he handed hackers thousands of lines of highly efficient code for exploiting them. Paras chose not to release the complete working code, but not out of a sense of responsibility. He offered the complete version to anyone willing to pay $1,000.

The code Paras dumped was the most sophisticated version of Mirai. Since it first went live on August 1, Mirai had gone through at least twenty-four iterations. And through its competition with Poodle Corp, the malware had gotten more virulent, stealthy, and lethal. By the end of September, the disclosed code had added more passwords to its dictionary, deleted its own executing program, and aggressively killed other malware.

Dumping code is reckless, but not unusual. Hackers often disclose vulnerabilities and exploits irresponsibly to cover their tracks. If the police find source code on a hacker’s devices, the hacker can claim to have “downloaded it from the internet.” Paras’s irresponsible disclosure was part of his false-flag operation. Indeed, the FBI had been gathering evidence indicating Paras’s involvement in Mirai and had contacted him to ask questions. Though he gave the agent a fabricated story, hearing from the FBI probably terrified him.

Mirai’s Next Steps

Mirai had captured the attention of the cybersecurity community and of law enforcement. But not until after Mirai’s source code dropped would it capture the attention of the entire United States. The first major attack after the dump came on October 21. The target was Dyn, a major provider of Domain Name System (DNS) services.

It began at 7:07 a.m. EDT with a series of twenty-five-second attacks, thought to be tests of the botnet and Dyn’s infrastructure. Then came the sustained assaults: a one-hour SYN flood, followed by a five-hour one. Interestingly, Dyn was not the only target. Sony’s PlayStation video game infrastructure was also hit. Because Dyn resolved domain names for many other companies, and the torrents of junk packets were so immense, many other websites were affected. Domain names such as facebook.com, cnn.com, and nytimes.com wouldn’t resolve. For the vast majority of users trying to reach these sites, the internet became unusable. At 7:00 p.m., another ten-hour salvo hit Dyn and PlayStation.

Further investigation revealed the point of the attack. Along with Dyn and PlayStation traffic, the botnet targeted Xbox Live and Nuclear Fallout game-hosting servers. Nation-states were not aiming to hack the U.S. elections. Someone was trying to boot players off their game servers. Once again, just as with MafiaBoy, the VDoS founders, Paras, Dalton, and Josiah, the attacker was a teenage boy.

But who? One clue about the perpetrator came from the time and date: noon (London time) on October 21. Exactly a year earlier, a fifteen-year-old boy named Aaron Sterritt (hacker handle Vamp) from Larne, Northern Ireland, had used an SQL injection attack to hack the British telecom TalkTalk. Because British police suspected that Sterritt might also be involved in the Dyn DDoS, they questioned him, but they did not have enough evidence to prosecute. He had encrypted all of his digital devices, making the information on them unreadable to law enforcement. The U.K.’s National Crime Agency declined to name him, noting only that “the principal suspect of this investigation is a UK national resident in Northern Ireland.” But citing multiple sources, Brian Krebs has since reported that Sterritt was responsible for the attacks. Once again, a teenager was responsible for devastating attacks on the internet previously attributed to nation-states.
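SQL injection works by smuggling database commands into an ordinary input field. The Python sketch below uses the standard-library sqlite3 module and a made-up customers table to show the flaw and the standard fix, a parameterized query. The table, the data, and the function names are hypothetical; this is not TalkTalk’s actual system or the query that was attacked.

```python
import sqlite3


def make_db():
    """Build a tiny in-memory database with a hypothetical customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, account TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("alice", "A-100"), ("bob", "B-200")],
    )
    return conn


def lookup_unsafe(conn, name):
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = f"SELECT account FROM customers WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn, name):
    # Parameterized: the driver passes `name` as a value, never as SQL.
    return conn.execute(
        "SELECT account FROM customers WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"
print(lookup_unsafe(conn, payload))  # both accounts leak
print(lookup_safe(conn, payload))    # []
```

In the vulnerable version, the payload’s quote mark closes the string early, and OR '1'='1' makes the condition true for every row. The parameterized version hands the whole input to the database as a value, so the same payload is just an odd-looking name that matches no one.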

Meanwhile, the Mirai trio left the DDoS business, just as Paras had said. But Paras and Dalton did not give up on cybercrime. An Eastern European cybercriminal introduced the gang to click fraud. In pay-per-click advertising, advertisers pay the websites that display their ads according to the number of clicks the ads receive. More clicks mean more money. And large botnets can click a lot of ads. Those engaged in click fraud get a cut of the advertising revenue they fraudulently generate for the website.

Click fraud was more lucrative than running a booter service. While Mirai was no longer as big as it was—competition for the finite supply of vulnerable devices reduced it to one hundred thousand devices—the botnet could nevertheless generate significant advertising revenue. Given its geographical reach, incoming traffic from the bots would appear indistinguishable from legitimate traffic. Paras and Dalton earned as much money in one month from click fraud as they ever made with DDoS. By January 2017, they had earned over $180,000, as opposed to a mere $14,000 from DDoS-ing. A year later, click fraud was costing advertisers over $16 billion per annum.

Attacking a Dog with a Steak

Dumping the source code was not only irresponsible. It was also idiotic. Had Paras and his friends shut down their booter service, the world would likely have forgotten about them. By releasing the code, Paras created imitators. Dyn was the first major copycat attack, but many others followed.

A Mirai variant attempted to exploit a vulnerability in nine hundred thousand routers from Deutsche Telekom. It crashed Germany’s largest internet service provider. Another Mirai version shut down Liberia’s entire internet. And in January 2017, less than six months after his attack on Dyn, Aaron Sterritt teamed up with two other teenagers (eighteen-year-old Logan Shwydiuk of Saskatoon, Canada, aka Drake, Dingle, or Chickenmelon, and nineteen-year-old Kenneth Schuchman of Vancouver, Washington, aka Nexus-Zeta) to build a stronger version of Mirai. The new variant, which went by the names Satori, Masuta, and Okiru, could enlist up to seven hundred thousand infected systems in a giant botnet capable of generating hundred-gigabit attacks. Sterritt was the coder, Shwydiuk handled customer service, and Schuchman was responsible for acquiring the exploits, usually by posting on Hack Forums. Once again people speculated that the giant botnet was the work of nation-state actors, and once again it was just a bunch of teenagers.

Due to the enormous damage Mirai’s imitators wrought, law enforcement was intensely interested in the Mirai authors. So were private security researchers. After all, the Mirai gang had gone after Brian Krebs—the premier cybercrime reporter. As Allison Nixon of Flashpoint put it, “They dumped the source code and attacked a security researcher using tools interesting to security researchers. That’s like attacking a dog with a steak. I’m going to wave this big juicy steak at a dog and that will teach him. They made every single mistake in the book.”

As it used legal process to amass evidence tying Paras, Josiah, and Dalton to Mirai, the FBI quietly brought each of them up to Alaska. Peterson’s team showed the suspects its evidence and gave them the chance to cooperate. Given that the evidence was irrefutable, each folded.

Paras Jha was indicted twice, once in New Jersey for his attack on Rutgers, and once in Alaska for Mirai. Both indictments carried the same charge: one violation of the Computer Fraud and Abuse Act. Paras faced up to ten years in federal prison for his actions. Josiah and Dalton were indicted only in Alaska, and so faced at most five years.

The trio pled guilty. At the sentencing hearing held on September 18, 2018, at 1:00 p.m. in Anchorage, Alaska, each of the defendants expressed remorse for his actions. Josiah White’s lawyer conveyed his client’s realization that Mirai was “a tremendous lapse in judgment.” The lawyer assured Judge Timothy Burgess, “I really don’t think you’ll ever see him again.”

Unlike Josiah, Paras spoke directly to Judge Burgess in the courtroom. Paras began by accepting full responsibility for his actions and expressed his deep regret for the trouble he’d caused his family. He also apologized for the harm he’d caused businesses and, in particular, Rutgers University, the faculty, and his fellow students. His explanation for his wayward actions recalls Sarah Gordon’s research on virus writers from 1994. “I didn’t think of them as real people because everything I did was online in a virtual world. Now I realize I have hurt real people and businesses and understand the extent of the damage I did.”

The Department of Justice made the unusual decision not to ask for jail time. In its sentencing memo, the government noted “the divide between [the defendants’] online personas, where they were significant, well-known, and malicious actors in the DDoS criminal milieu, and their comparatively mundane ‘real lives,’ where they present as socially immature young men living with their parents in relative obscurity.” It recommended five years of probation and 2,500 hours of community service. The government had one more request: “Furthermore, the United States asks the Court, upon concurrence from Probation, to define community service to include continued work with the FBI on cyber crime and cybersecurity matters.” Even before sentencing, Paras, Josiah, and Dalton had logged close to one thousand hours helping the FBI hunt and shut down Mirai copycats. They contributed to more than a dozen law enforcement and research efforts. In one instance, the trio assisted in stopping a nation-state hacking group. They also helped the FBI prevent DDoS attacks aimed at disrupting Christmas holiday shopping. Judge Burgess accepted the government’s recommendation and the trio escaped jail time.

The most poignant moment in the hearing was when Paras and Dalton praised the very person who caught them. “Two years ago when I first met Special Agent Elliott Peterson,” Paras told the court, “I was an arrogant fool believing that somehow I was untouchable. When I met him in person for the second time, he told me something I will never forget: ‘You’re in a hole right now. It’s time you stop digging.’” Paras finished his remarks by thanking “my family, my friends, and Agent Peterson for helping me through this.”

Dalton Norman had a speech disability that made it difficult for him to speak to the court, even to answer questions with yes or no. Nevertheless, he too thanked his adversary in a written statement read by his attorney: “I want to thank the FBI, especially Agent Peterson, for being a positive mentor through this process and by going above and beyond what was expected of him.” The judge concluded the hearing by noting that the defendants could not have “picked a better role model than Agent Peterson.”

Downcode Is Never Enough

When Robert Morris Jr. crashed the internet in 1988, we blamed the insecurity of UNIX and the hacker ethic that created it. Before the Morris Worm, the internet community naively believed that malicious behavior would be minor and outbreaks could be contained. After the Worm, the community realized that an end-to-end internetworking system can survive only if its end points are hardened.

Harden the end points they did. Consider how Linux dealt with buffer overflows. In 2002, Linux implemented ASLR, short for “address space layout randomization.” The stack, that temporary scratch pad that Robert Morris Jr. used to implant malicious code on Finger servers, usually sits at the very top of the computer’s memory space. When ASLR is turned on, the operating system moves the stack to a random part of the memory space. Thus ASLR hides the stack, so that a hacker who injects code through an overflow cannot easily find that code in order to run it.
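A toy model in Python shows both what ASLR does and why its protection depends on how much randomness it uses. The sketch assumes a hypothetical stack top, 4,096-byte pages, and a configurable number of random bits; real kernels also randomize the heap and shared libraries, and the amount of randomness varies by system. When there are only a few random bits, as on early 32-bit systems, an attacker who can keep retrying will eventually hit the right address.

```python
import secrets

PAGE_SIZE = 4096              # assumed page size in bytes
STACK_TOP = 0x7FFF_FFFF_F000  # hypothetical top of the user address space


def randomized_stack_base(entropy_bits: int) -> int:
    """Toy ASLR: slide the stack down by a random whole number of pages."""
    offset_pages = secrets.randbelow(2 ** entropy_bits)
    return STACK_TOP - offset_pages * PAGE_SIZE


def single_guess_odds(entropy_bits: int) -> float:
    """Probability that one fixed guess of the stack base is correct."""
    return 1 / 2 ** entropy_bits


# Usage: each "launch" puts the stack somewhere different.
for launch in range(3):
    print(hex(randomized_stack_base(entropy_bits=16)))
print(single_guess_odds(16))  # 1 in 65,536
```

The odds halve with every added bit, which is why the number of random bits, not merely the fact of randomization, determines how long guessing takes.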

ASLR worked—until it didn’t. Hackers quickly figured out how to guess the location of the stack. Thus, in 2004 Linux implemented ESP, short for “executable-space protection.” The operating system marks the memory addresses where the stack resides as “nonexecutable.” When ESP is turned on, the stack can be used only for storing data. Even if someone defeated ASLR, found the stack, and pushed code on it, ESP would refuse to execute it.
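The rule ESP enforces can be modeled in a few lines of Python. Each region of a toy address space carries read, write, and execute permissions, and an attempt to run code from a region without execute permission fails. The class ToyMemory, the exception name, and the addresses are hypothetical. On real hardware the processor makes the check itself, using a no-execute bit in the page tables that the operating system sets.

```python
class NonExecutableError(Exception):
    """Raised when code tries to run from memory marked non-executable."""


class ToyMemory:
    """A toy address space whose regions carry r/w/x permissions."""

    def __init__(self):
        self.regions = []  # list of (start, end, permissions)

    def map_region(self, start: int, end: int, perms: str) -> None:
        self.regions.append((start, end, perms))

    def execute(self, address: int) -> str:
        for start, end, perms in self.regions:
            if start <= address < end:
                if "x" not in perms:
                    # ESP: data-only memory such as the stack cannot be run.
                    raise NonExecutableError(f"no execute permission at {hex(address)}")
                return f"running code at {hex(address)}"
        raise ValueError(f"unmapped address {hex(address)}")


memory = ToyMemory()
memory.map_region(0x0040_0000, 0x0040_1000, "r-x")  # program code: executable
memory.map_region(0x7FFF_0000, 0x8000_0000, "rw-")  # stack: data only
print(memory.execute(0x0040_0010))  # running code at 0x400010
try:
    memory.execute(0x7FFF_0100)  # code an overflow pushed onto the stack
except NonExecutableError as err:
    print("blocked:", err)  # blocked: no execute permission at 0x7fff0100
```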

In response to ESP, hackers developed ROP, short for “return-oriented programming.” Instead of injecting the malware on the stack and executing it, the hacker identifies code snippets outside the stack that can do the job for them. In ROP, each snippet ends with an instruction to “return” to the stack. A hacker can put the memory address of each fragment on the stack and chain them together to perform a malicious action. It’s the equivalent of finding a locked door between two rooms, climbing out of the window, walking along the ledge, and entering the second room through its window. Provided the hacker can find the stack, the hacker can hop from snippet to stack to snippet to wrest control of the program flow.
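Return-oriented programming is easier to see in a toy. In the Python simulation below (not real machine code), the program already contains three small gadgets at made-up addresses, and each gadget does one thing and then returns. The attacker writes nothing but addresses and data onto the stack. Each return pops the next address and jumps to that gadget, so the chain computes 40 + 2 without a single injected instruction. The gadget names, addresses, and two-register machine are all hypothetical.

```python
def run_rop_chain(stack, gadgets, max_steps=100):
    """Simulate return-oriented programming on a toy two-register machine.

    `stack` holds only gadget addresses and the data those gadgets pop.
    Each gadget does one small operation and then "returns": the machine
    pops the next address off the stack and jumps to that gadget.
    """
    regs = {"a": 0, "b": 0}
    sp = 0  # stack pointer: index of the next slot to pop
    for _ in range(max_steps):
        if sp >= len(stack):
            break
        address = stack[sp]  # "ret" pops the next address...
        sp += 1
        sp = gadgets[address](regs, stack, sp)  # ...and jumps to that gadget
    return regs


# Hypothetical gadgets that already exist in the program's executable code.
def pop_a(regs, stack, sp):  # behaves like "pop a; ret"
    regs["a"] = stack[sp]
    return sp + 1


def pop_b(regs, stack, sp):  # behaves like "pop b; ret"
    regs["b"] = stack[sp]
    return sp + 1


def add_a_b(regs, stack, sp):  # behaves like "add a, b; ret"
    regs["a"] += regs["b"]
    return sp


GADGETS = {0x401010: pop_a, 0x401020: pop_b, 0x401030: add_a_b}

# The attacker's payload: addresses and data only, no instructions.
chain = [0x401010, 40, 0x401020, 2, 0x401030]
print(run_rop_chain(chain, GADGETS))  # {'a': 42, 'b': 2}
```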

Operating systems have developed elaborate countermeasures to ROP, such as control-flow integrity checks, which ensure that programs don’t take unexpected detours. To use the locked-door analogy again, the operating system will not allow someone to climb through the window unless it was opened first from the inside.
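One such countermeasure is a shadow stack: a second, protected copy of every return address, recorded when a function is genuinely called. On return, the address on the ordinary stack must match the protected copy. In the analogy, this is the window that must first be opened from the inside. The Python sketch below is a hypothetical model of the idea, with made-up addresses. Hardware versions, such as the shadow stacks in Intel’s Control-flow Enforcement Technology, make the check in the processor.

```python
class ControlFlowViolation(Exception):
    """Raised when a return goes somewhere no genuine call came from."""


class ShadowStack:
    """Toy shadow stack: keeps a protected copy of each return address."""

    def __init__(self):
        self._saved = []  # in hardware, this copy is out of the attacker's reach

    def on_call(self, return_address: int) -> None:
        # A genuine call records where execution should come back to.
        self._saved.append(return_address)

    def on_return(self, address_on_stack: int) -> int:
        # The address on the ordinary (attackable) stack must match the copy.
        expected = self._saved.pop() if self._saved else None
        if address_on_stack != expected:
            raise ControlFlowViolation(
                f"return to {hex(address_on_stack)} does not match the recorded call"
            )
        return address_on_stack


shadow = ShadowStack()
shadow.on_call(0x401200)
print(hex(shadow.on_return(0x401200)))  # 0x401200: a legitimate return
shadow.on_call(0x401200)
try:
    shadow.on_return(0x401030)  # an overwritten return address, as in ROP
except ControlFlowViolation as err:
    print("blocked:", err)
```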

It is now hard to smash the stack in Linux: not impossible, but difficult. Yet three teenagers were able to exploit Linux and make a large part of the internet unusable. How is this possible?

The reason is simple: security technology doesn’t run by itself. It runs on data that people supply it. Suppose a company purchases an operating system so secure that the most elite teams from the NSA using the fastest supercomputers in the world could not get in. Now imagine that the company’s human resources department simply hands out usernames and passwords to anyone who asks. The bulletproof downcode would be useless in the face of this absurd upcode. It would be like handing a Stradivarius to someone who doesn’t know how to play the violin.

In real life, the security practices of IoT manufacturers were almost as ludicrous. Instead of providing credentials for their appliances to anyone who asked, they printed the credentials in manuals, put those manuals on their websites, and let Google index them. To return to our analogy for a final time, it’s as if the IoT manufacturers locked every door in an apartment building, then left a basket with all the keys on the stoop.
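The fix on the owner’s side is correspondingly mundane: check whether a device still uses a factory-default login. The Python sketch below audits a hypothetical inventory against a short, illustrative list of default username and password pairs of the kind Mirai’s dictionary contained. It is not the actual Mirai list, and the device names and function name are made up.

```python
# Illustrative factory-default logins of the kind Mirai's dictionary
# contained. This is a short sample for the example, not the real list.
KNOWN_DEFAULTS = {
    ("root", "xc3511"),
    ("root", "vizxv"),
    ("admin", "admin"),
    ("root", "root"),
    ("support", "support"),
}


def audit_devices(devices):
    """Return the names of devices still using a known default login.

    `devices` is a hypothetical inventory of (name, username, password).
    """
    return sorted(
        name for name, user, password in devices
        if (user, password) in KNOWN_DEFAULTS
    )


inventory = [
    ("lobby-camera", "root", "xc3511"),
    ("office-dvr", "admin", "correct-horse-battery"),
    ("back-router", "admin", "admin"),
]
print(audit_devices(inventory))  # ['back-router', 'lobby-camera']
```

A check like this only helps if someone runs it, which is the chapter’s point: the downcode is trivial, and the upcode decides whether it ever gets used.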

Upcode is central to cybersecurity not only because upcode shapes downcode; it also guides how we use downcode. If the upcode lets many users have access to confidential information, grants them rights to change sensitive databases, or puts evil maids in reach of their bosses’ cell phones, it does not matter how good the technology is. Without good upcode, good downcode is useless.