4. THE FATHER OF DRAGONS

Before Vienna, there was Jerusalem. Named after the location where it was first found, the Jerusalem computer virus contained a nasty “logic bomb.” On every Friday the thirteenth after 1987 (the first eligible date being May 13, 1988), Jerusalem displayed a black box on a user’s screen while it deleted every program the user had run that day. It also repeatedly infected executable files until they became so large that they crashed the system.

Before Jerusalem, there was Brain, the first virus designed for the IBM PC. Written in 1986 by two nineteen-year-old Pakistani brothers who owned Brain Computer Services and were upset over the piracy of their medical software, the virus infected the part of floppy disks containing code for booting the machine (known as the boot sector, usually Sector 0). When a computer booted with a tainted disk, Brain would load into memory and waylay the boot sector of floppies inserted into the disk drive. Brain did not damage files, but its payload printed out an ominous message:

Welcome to the Dungeon © 1986 Basit & Amjads (pvt).

Brain Computer Services

730 Nizam Block Allama Iqbal Town

Lahore-Pakistan

Phone: 430791,443248,280530.

Beware of this VIRUS …

Contact us for vaccination …

The brothers were genuinely surprised when they got angry calls from all over the world demanding that their disks be disinfected.

On November 10, 1983, Fred Cohen released a virus he had written for a security seminar at the University of Southern California, where he was doing doctoral research. With permission of the system administrator, Cohen conducted five tests on the local VAX machine. He posted vd, a system utility that presented file directories graphically, on the local network bulletin board. Unbeknownst to users, however, Cohen had appended a virus to the beginning of the utility. When vd executed, the virus spread. In one test, the virus gained root access, and thus control over all user accounts, in five minutes. Alarmed by its virulence, the system administrator refused to allow any more experiments.

Before Fred Cohen’s UNIX creations, there was Elk Cloner, the first virus for the Apple II, and the first to spread “in the wild.” The brainchild of a fifteen-year-old prankster who in 1982 placed malicious code on his school’s computer, the Elk Cloner lurked in memory and infected the boot sector of any floppy disk placed in its drive. When executed, the virus’s harmless payload printed out the following message:

Elk Cloner: The Program with a Personality

It will Get on All Your Disks

It will Infiltrate Your Chips

Yes, It’s Cloner!

It will Stick to You Like Glue

It will Modify Ram Too

Send in the Cloner!

Three years earlier, two computer scientists, John Shoch and Jon Hupp, began writing worms for Xerox PARC’s network. Their worms were highly sophisticated: not only did they self-replicate, but their segments also communicated with one another. Patterned explicitly after Nick’s creation in Brunner’s novel The Shockwave Rider, these worms carried around tables of their segments. If a segment died, other segments created a new copy. The worms’ payloads performed useful tasks such as delivering a “cartoon of the day,” running diagnostics, and providing an alarm clock for alerts.

Despite the care with which Shoch and Hupp wrote their self-replicating programs, mishaps occurred. Early in their experiments, they ran a worm overnight on a few computers, out of a hundred on the network. “When we returned the next morning,” Shoch and Hupp reported, “we found dozens of machines dead, apparently crashed.” The code for one of the worm segments had become corrupted and went haywire. Worms desperately replicated and crashed machines they were trying to access. “To complicate matters,” they continued, “some machines available for running worms were physically located in rooms which happened to be locked that morning so we had no way to abort them. At this point, one begins to imagine a scene straight out of Brunner’s novel—workers running around the building, fruitlessly trying to chase the worm and stop it before it moves somewhere else.”

Shoch and Hupp’s worm experiments were inspired by the Creeper. Written in 1971 by Bob Thomas, one of the pioneers of the internet, the Creeper was designed to traverse the ARPANET. Its only payload was a screen message: “I’m the Creeper. Catch me if you can.” Ray Tomlinson, the inventor of email, wrote a worm called Reaper, whose sole task was to hunt the Creeper.

But even Bob Thomas could not claim credit for inventing self-replicating programs. That honor goes to the Hungarian mathematician and wunderkind John von Neumann. Von Neumann designed a self-reproducing automaton in 1949, decades before any other hacker. Even more astonishing, he wrote it without a computer.

Von Neumann’s accomplishment is not merely of historical interest. We will see that he was the first thinker to identify how self-replicating machines exploit metacode to make copies of themselves. Once we have a deeper appreciation of how self-replication works, we will understand why our computers are so susceptible to infection by malicious self-replicating programs.

Johnny von Neumann

Born in Budapest, Hungary, in 1903, John von Neumann was the eldest child of a wealthy Jewish family. His father, a banker, wanted his son to have a practical education and urged him to study chemical engineering. Since John’s passion lay with pure mathematics, he pursued both degrees simultaneously. While enrolled at the University of Budapest to study mathematics, he studied chemical engineering in Germany and Switzerland, returning home to take math exams without having attended the courses. By the age of twenty-two, he had earned a degree in chemical engineering, and the next year he earned a doctorate in mathematics, with minors in experimental physics and chemistry.

John could recite verbatim any book or article he had read, even years later. Herman Goldstine, a colleague at Princeton, tested John’s legendary memory by asking him to recite Dickens’s A Tale of Two Cities. Johnny, as he was known to his American friends, declaimed the novel perfectly. Convinced of his genius, Goldstine cut him off after ten or fifteen minutes.

In 1928, at the age of twenty-four, John von Neumann became the youngest faculty member at the University of Berlin—ever. In 1930, von Neumann began teaching at Princeton University and three years later received a lifetime position in mathematics at the Institute for Advanced Study, an independent research center closely affiliated with the university.

In contrast to his Princeton colleague Kurt Gödel, a loner and socially inept logician who had a morbid fear of being poisoned and would eat only his wife’s cooking (and thus starved to death in 1978 when his wife was hospitalized for six months), von Neumann was warm, gregarious, and corpulent. He was a bon vivant who always dressed in impeccably tailored three-piece suits and threw large parties at his Princeton home at least once a week. Johnny was celebrated for his quick wit, sparkling conversation, and ribald limericks.

There are few branches of mathematics in which von Neumann did not make fundamental contributions. According to a popular saying, “Mathematicians solve what they can, von Neumann solves what he wants.” His 1932 magnum opus, Mathematical Foundations of Quantum Mechanics, was an elegant reformulation of quantum mechanics that revolutionized its study. In the mid-1930s, von Neumann turned his attention to nonlinear partial differential equations. These devilishly difficult equations are central to the study of fluid dynamics and turbulent air flows. Von Neumann was intrigued by their complexity and quickly became an expert in the mathematics of explosions and shock waves.

Because von Neumann had expertise in modeling detonations (and a brilliant mind), the U.S. Army approached him for help. He began consulting in 1937. In 1944, he joined the Manhattan Project at Los Alamos. While working through the complex equations that describe neutron diffusion in nuclear reactions, he learned of the ENIAC, the world’s first electronic computer, then being built for the army’s Ballistic Research Laboratory in Aberdeen, Maryland. The army had planned on using the humongous machine (it weighed thirty tons) to compute artillery tables. Because of its speed (the ENIAC could perform mathematical operations a thousand times faster than humans), von Neumann recognized its potential for nuclear research. Instead of having it calculate artillery tables, von Neumann christened the world’s first electronic computer with a simulation of neutron dispersal in a thermonuclear explosion. The simulation required a million IBM punch cards to run.

This encounter with the ENIAC ignited von Neumann’s interest in electronic computers. Computers were not simply superfast calculators. To von Neumann, they were artificial computational systems that could be used to study natural systems, such as biological cells and the human brain. Von Neumann, therefore, decided to design and build computers. His contributions to the design of the EDVAC, the successor to the ENIAC, were pivotal.

Many of von Neumann’s innovations for the EDVAC have become standard. ENIAC was an electronic computer that used eighteen thousand vacuum tubes to store and manipulate decimal symbols, similar to the way we’re taught to do math. (The vacuum tubes were arranged in rings of ten, and only one tube was on at a time, representing one digit.) Von Neumann understood that binary symbols are easier to encode electronically: open circuits would count as zeros, closed circuits as ones. EDVAC was accordingly designed as a binary computer.
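To make the tube arithmetic concrete, here is a small Python sketch comparing the two encodings. It is an illustration, not a model of ENIAC’s circuitry: it treats each position in a ring of ten as a single on/off element, a simplification of the real hardware, and the function names are hypothetical.

```python
def decimal_rings(n: int) -> list[list[int]]:
    """Encode n as one ring of ten elements per decimal digit, exactly one lit."""
    return [[1 if pos == int(digit) else 0 for pos in range(10)] for digit in str(n)]


def binary_bits(n: int) -> list[int]:
    """Encode n in base 2: one on/off element per bit (closed = 1, open = 0)."""
    return [int(bit) for bit in bin(n)[2:]]


n = 999
rings = decimal_rings(n)
bits = binary_bits(n)
print(sum(len(ring) for ring in rings))  # 30 elements for three decimal digits
print(len(bits))                         # 10 elements
print(bits)                              # [1, 1, 1, 1, 1, 0, 0, 1, 1, 1]
```

Both encodings can hold any number from 0 to 999, but the binary one needs a third as many elements, and each element only has to distinguish two states rather than take its place in a ring.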

Von Neumann is also credited with inventing the “stored program” computer, now known as the “von Neumann architecture.” For all its virtues, the ENIAC had one problem: code was hardwired into the machine. Whenever a user wanted to run a new program, a team of women, known as the programmers, manually changed ENIAC’s internal wiring to implement the code. A program might take two weeks just to load and test before it could run. Von Neumann’s design would load programs as software, rather than hardware. Code and data would be fed by punch cards into memory, where they would both be stored.
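The stored-program idea can be sketched with a toy machine in Python. The instruction set (LOAD, ADD, STORE, HALT) and the memory layout are hypothetical and far simpler than EDVAC’s; the point is only that instructions and numbers sit side by side in one memory, so loading a new program means writing new values into memory rather than rewiring anything.

```python
def run(memory: list) -> list:
    """Fetch-decode-execute loop for a toy stored-program machine.
    Instructions are (opcode, address) tuples and data are plain ints,
    but both live in the same memory list."""
    acc, pc = 0, 0
    while True:
        opcode, address = memory[pc]  # fetch the next instruction from memory
        pc += 1
        if opcode == "LOAD":
            acc = memory[address]
        elif opcode == "ADD":
            acc += memory[address]
        elif opcode == "STORE":
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


program = [
    ("LOAD", 4),   # 0: acc = memory[4]
    ("ADD", 5),    # 1: acc += memory[5]
    ("STORE", 6),  # 2: memory[6] = acc
    ("HALT", 0),   # 3: stop
    2, 3, 0,       # 4-6: data stored right after the code
]
print(run(program)[6])  # 5
```

Running a different program is just a matter of putting different values into the list, which is exactly the step that used to take weeks of rewiring.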

As we’ve seen, loading code with data made general computing practical by obviating the need for costly and tedious physical manipulation of computer hardware. But von Neumann’s architecture, by building on Turing’s principle of duality, also opens the way for hacking. Slipping code onto the tape when the computer expects data can compromise the security of the account running the program.
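The danger can be shown on a similar toy machine. In this hypothetical Python sketch, an input buffer sits directly in front of the program in a shared memory, and the routine that stores user input forgets to check its length. Extra “data” therefore spills into the code region, and the machine later executes it. Real attacks such as buffer overflows are far more intricate; this only illustrates the principle.

```python
BUFFER_START, BUFFER_LEN, CODE_START = 0, 2, 2  # hypothetical memory layout


def fresh_memory() -> list:
    """Two data cells for input, followed by a two-instruction program."""
    return [0, 0, ("PRINT", "hello"), ("HALT", None)]


def store_input(memory: list, values: list, check_length: bool = False) -> None:
    """Copy user input into the buffer. With check_length=False this has the
    classic bug: nothing stops the input from running past the buffer."""
    if check_length and len(values) > BUFFER_LEN:
        raise ValueError("input too long for buffer")
    for i, value in enumerate(values):
        memory[BUFFER_START + i] = value


def run(memory: list) -> list:
    """Execute instructions starting at CODE_START and collect printed output."""
    output, pc = [], CODE_START
    while True:
        opcode, arg = memory[pc]
        pc += 1
        if opcode == "PRINT":
            output.append(arg)
        elif opcode == "HALT":
            return output


normal = fresh_memory()
store_input(normal, [7, 8])
print(run(normal))    # ['hello']

attacked = fresh_memory()
store_input(attacked, [7, 8, ("PRINT", "pwned")])  # third item lands on code
print(run(attacked))  # ['pwned']
```

Passing `check_length=True` rejects the oversized input. The machine still treats memory as both code and data; the fix simply stops data from landing where code is expected.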

After working with the EDVAC team, von Neumann built a new computer in the basement of the Institute for Advanced Study. Von Neumann’s Princeton colleagues were displeased with his interest in practical subjects, which they found beneath him.

Von Neumann’s experience with designing computers prompted a new set of questions. Getting a computer up and running was a Herculean task. Everything had to be just right for it to work. These machines were also extremely fragile. If one tiny part malfunctioned, it might topple the whole colossus. Von Neumann wondered how biological organisms avoided this fate. Living organisms are extremely resilient, despite being more complex than the ENIAC. If cells in our bodies die, we don’t normally collapse. We continue to function.

Von Neumann speculated that the resilience of biological organisms could be attributed to their ability to self-replicate. When a blood cell dies, a new blood cell sprouts to take its place. Von Neumann wanted to understand the process of self-replication. If he could get a computer program to self-replicate, he might shed light on the way natural organisms are able to survive in forbidding environments.

The Mystery of Self-Replication

In 1649, Descartes was summoned to Sweden by Queen Christina. The twenty-three-year-old daughter of Gustavus Adolphus wanted the renowned philosopher to tutor her. Given her many duties, she insisted that he teach her philosophy at 5:00 a.m. Descartes hated the cold and seldom rose before 11:00 a.m., but he acceded to Her Majesty’s wishes. According to legend, he claimed during one of their sessions that animals are complex mechanical machines. Queen Christina seemed unimpressed. Pointing to a clock, she said, “See to it that it produces offspring.”

In 1949, von Neumann set out to do just that. He began a project on self-replicating machines, seeking to do for reproduction what Turing had done for computation. Just as Turing showed how a physical machine could compute, von Neumann sought to demonstrate how a physical device could reproduce itself.

This was no mean feat. As Queen Christina’s challenge suggests, physical self-replication is mysterious. Actually, the process seems impossible. How would a self-replicator even work?

One possibility is that the self-replicator disassembles itself, copies each part, and then assembles the fabricated copies into a clone. As von Neumann noted, this process is bound to fail. If a self-replicator tried to copy itself, it would have to engage in exquisitely complex surgery. It would have to amputate its limbs, remove its vital organs, take each apart, and copy the components. Even if it managed not to kill itself, the self-replicator would alter the delicate environment it was trying to copy. (Think about carving up your own brain without changing your brain in the process.) Von Neumann’s worry about self-replication was likely influenced by his research in quantum mechanics. According to the Heisenberg uncertainty principle, it is not possible to know both the position and the momentum of a subatomic particle with arbitrary precision at the same time. Observation changes reality. Similarly, disassembly changes the machine the self-replicator is trying to copy.

Here’s another possibility: Instead of taking itself apart, the self-replicator follows a blueprint inside the machine. The blueprint is a complete guide to making another self-replicator, containing instructions for building every piece. By assembling a new machine according to this internal blueprint, the self-replicator builds a perfect copy of itself. While more promising than the first process, it doesn’t work either. For the self-replicator to build a perfect copy of itself, the blueprint itself has to be complete. But since this complete blueprint is part of the self-replicator, the blueprint has to contain a second complete copy of itself. And this second blueprint, being a complete copy, has to contain a third copy of itself. And so on. Like mirrors that face each other and reflect their reflections ad infinitum, the blueprint would never end.
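The regress can also be put as a one-line inequality. Assume, as a simplification, that describing a string of symbols takes at least as many symbols as the string itself. Let $m > 0$ be the number of symbols needed to describe all of the machine’s parts other than the blueprint, and let $b$ be the length of the blueprint. A complete blueprint must describe those parts and also itself, so

$$
b \;\ge\; m + b \quad\Longrightarrow\quad m \le 0,
$$

which contradicts $m > 0$. Under this assumption, no finite blueprint can be complete.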

The Universal Constructor

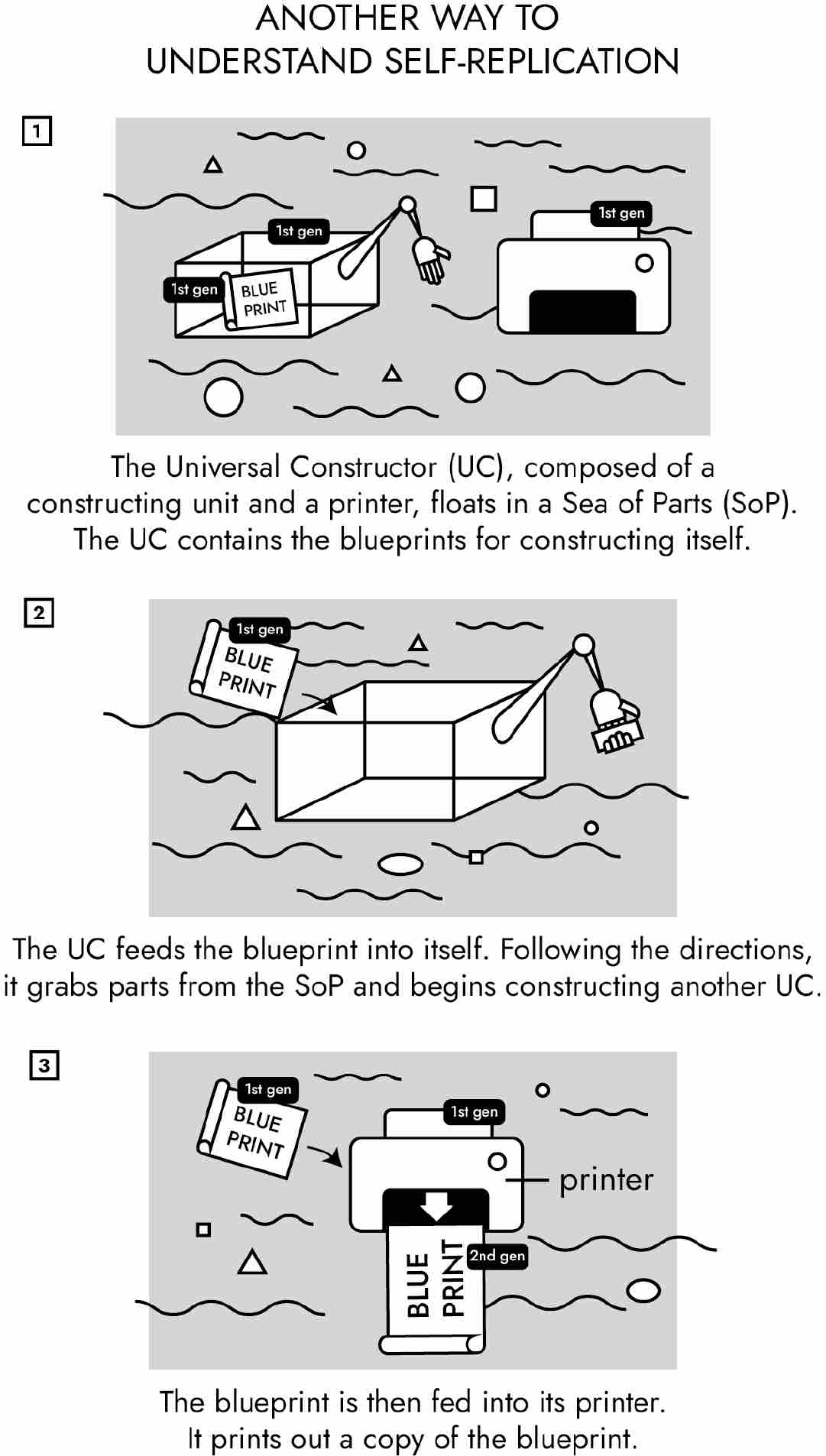

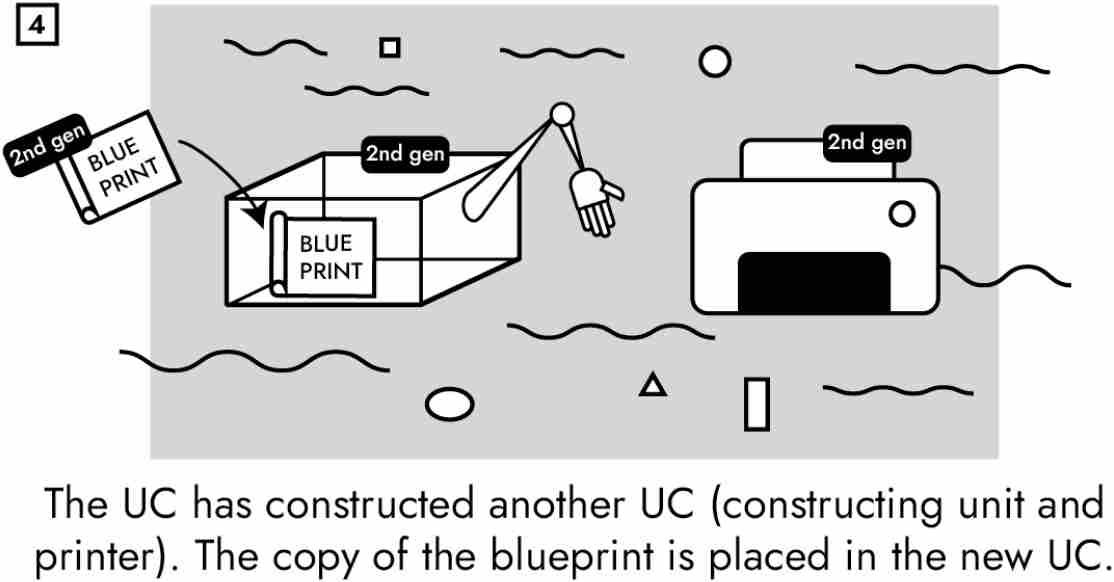

Descartes was renowned for building miniature clockwork dolls. It was said that he even fabricated a working replica of his daughter, who died of scarlet fever at age five, and carried it around in a tiny casket wherever he went. Von Neumann wisely decided against building a complicated physical prototype of a self-replicator. Instead, he followed in Turing’s footsteps yet again: he built a mathematical model of a “universal constructor” (UC for short).

Using mathematics to model self-replication makes sense if (a) you are a mathematician and/or (b) you want to use the model to construct mathematical proofs. For the rest of us, mathematical models are challenging to understand. Von Neumann’s model of the UC is particularly difficult. His UC is a “cellular automaton.” A cellular automaton is composed of many primitive computers known as cells that communicate with one another. Building a UC requires approximately two hundred thousand cells. Good luck working through those proofs.
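Von Neumann’s own automaton is far too large to reproduce here, but a much simpler cellular automaton conveys the idea of cells that only talk to their neighbors. The Python sketch below uses the parity rule usually credited to Edward Fredkin, not von Neumann’s 29-state design: a cell is live on the next step exactly when an odd number of its four orthogonal neighbors are live. Because the rule is linear in arithmetic mod 2, any small pattern reappears as four separated copies after a power-of-two number of steps. That replication is a mathematical side effect of the rule, not a constructor reading a blueprint.

```python
from collections import Counter

# The four orthogonal neighbours of a cell (the "von Neumann neighbourhood").
NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def step(live: set) -> set:
    """One synchronous update: a cell is live next step iff an odd number of
    its four orthogonal neighbours are live now (its own state is ignored)."""
    counts = Counter((x + dx, y + dy) for (x, y) in live for (dx, dy) in NEIGHBOURS)
    return {cell for cell, n in counts.items() if n % 2 == 1}


def run(live: set, steps: int) -> set:
    """Apply the update rule the given number of times."""
    for _ in range(steps):
        live = step(live)
    return live


pattern = {(0, 0), (1, 0), (0, 1)}  # a small L-shaped "organism"
after = run(pattern, 4)
copies = {(x + 4 * dx, y + 4 * dy) for (x, y) in pattern for (dx, dy) in NEIGHBOURS}
print(after == copies)  # True: four copies, shifted 4 cells in each direction
```

The copies are exact because the rule behaves like XOR: after 2^k steps the grid holds the XOR of the pattern shifted 2^k cells in each of the four directions, and once 2^k exceeds the pattern’s width the shifted copies no longer overlap.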

Fortunately, mechanical models can often help us visualize mathematical concepts. Instead of conceptualizing the UC mathematically as a cellular automaton, we can think of it mechanically as a 3D printer. This 3D printer can print any object—baseballs, snow globes, the Mona Lisa, nerve cells, human hearts, rocket ships, and so on. Like real-world 3D printers, the UC constructs objects based on blueprints fed into the machine via a storage medium—in the UC’s case, a tape.

We can now reframe von Neumann’s question of self-replication by asking, “Can a 3D printer print itself?” If it can, then self-replication is possible.

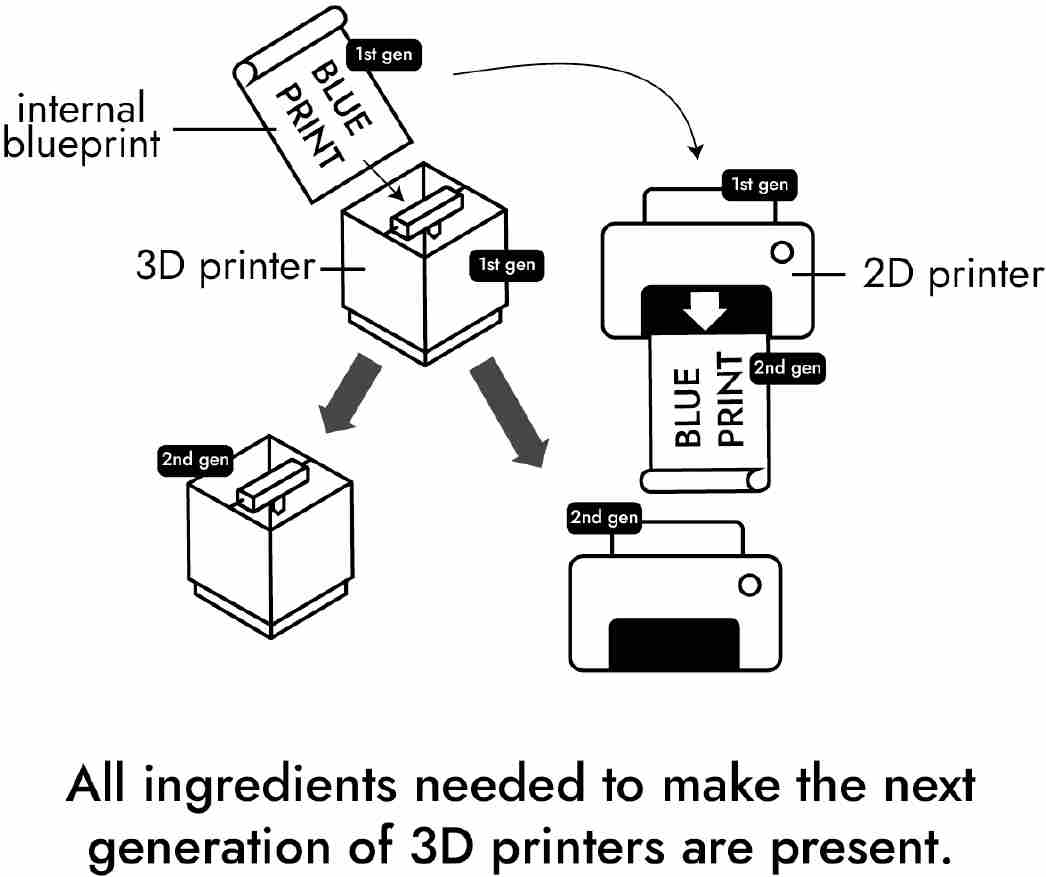

We saw that self-replication using internal blueprints leads to infinite regresses when the plans are required to be complete. Each complete blueprint must contain an endless sequence of complete blueprints. Von Neumann, therefore, made the internal blueprint incomplete. It contains instructions for everything except itself. In other words, the tape must contain the instructions for making a child, but not for printing the child’s internal blueprint.

Next von Neumann split self-replication into two parts: construction and copying. Self-replication begins when the UC’s control unit switches to the construction phase. In this first stage, the parent UC follows its internal blueprint and 3D prints a child UC. Since the internal blueprint is incomplete, the child does not yet contain an internal blueprint.

To get the blueprint into the child, von Neumann included a 2D copier. Its sole function is to copy the tape containing the blueprint. (You can actually buy a 3D printer with a copy machine from Amazon in the “Home Office” section!) Thus, during the second phase of self-replication—the copying phase—the control unit turns the copier on, which duplicates the blueprint. The parent UC then inserts the copied blueprint into its child.

By insisting that the blueprint be incomplete and that the UC contain a 2D copier as well as a 3D printer, von Neumann showed how self-replication is possible. The UC follows its internal blueprint to build a new UC sans the internal blueprint. The UC’s 2D copier then copies the internal blueprint, and the 3D printer inserts the copied blueprint into the new universal constructor. When the new machine is turned on, it copies itself. The process continues until the universal constructors run out of building material, energy, or room for construction.
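The two-phase scheme can be sketched in Python. Everything here is a hypothetical model (the `Machine` class, its part names, and the “build <part>” instruction format are invented for illustration): the tape lists instructions for building every part except the tape itself, `construct` reads the tape as code, and `copy_tape` duplicates it as data.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical blueprint: instructions for every part EXCEPT the tape itself.
BLUEPRINT = ["build control unit", "build 3D printer", "build 2D copier"]


@dataclass
class Machine:
    parts: list                   # hardware assembled by the 3D printer
    tape: Optional[list] = None   # internal blueprint; absent until inserted

    def construct(self) -> "Machine":
        """Construction phase: execute each tape instruction as code.
        The tape says nothing about tapes, so the child has none yet."""
        return Machine(parts=[instr.removeprefix("build ") for instr in self.tape])

    def copy_tape(self) -> list:
        """Copying phase: duplicate the tape symbol by symbol as data,
        without executing any of it."""
        return list(self.tape)

    def reproduce(self) -> "Machine":
        child = self.construct()        # phase 1: print a body from the blueprint
        child.tape = self.copy_tape()   # phase 2: copy the blueprint and insert it
        return child


parent = Machine(parts=["control unit", "3D printer", "2D copier"], tape=BLUEPRINT)
child = parent.reproduce()
print(child == parent)          # True: a complete copy, tape included
print(parent.construct().tape)  # None: construction alone yields no blueprint
```

Because the child receives its own copy of the tape, `child.reproduce()` works just as well, giving the chain of constructors described above, limited here only by memory. The sketch needs Python 3.9 or later for `str.removeprefix`.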

Though the UC’s blueprint spreads uncontrollably until it exhausts the available resources, it is neither a virus nor a worm. It is not a virus because users play no role in its execution. Each parent UC turns on its child. Nor is it a worm because it does not spread through networks. It proliferates by constructing its own UC children rather than infecting existing machines.

The blueprint is, therefore, a hybrid creature—it is self-replicating software that, like a worm, is self-executing but, like a virus, does not spread through networks. We can call this new creature a wirus (self-executing, stand-alone), adding it to our menagerie of worms (self-executing, networked), viruses (user-executed, stand-alone), and vorms (user-executed, networked).

Containing Your Own Code

Philosophers have long noted that most objects do not contain their own blueprints. Tables are made from detailed plans but do not contain these plans. If you took apart your refrigerator, you would search in vain for its technical specifications.

Human beings are different. Our physical development is determined by the genetic code embedded in our DNA. Similarly, our mental lives are shaped by plans internal to our minds. If I decide to go to the store tomorrow, I contain code—my intention—to go to the store. That my behavior is determined by my intention contributes to my autonomy. Autonomy means “self-law-giving.” Because I generated the code, and that code is internal to me, I give laws to myself.

Objects must contain their own code if they are to be autonomous. Von Neumann showed another class of things that must have internal blueprints: self-replicating entities. For something to copy itself, it must contain the plan originally used to create it. It must also contain a 3D printer to execute the plan and a 2D copier to copy the plan. Like von Neumann’s UC, amoebas, protozoa, coronaviruses, fruit flies, leeches, wombats, penguins, horses, and humans need the equivalent of 3D printers and 2D copiers, internal blueprints and control units to switch between construction and copying, to self-replicate—in other words, for life.

In just under a decade after von Neumann lectured on self-replication, molecular genetics confirmed his insights. Beginning with Watson and Crick’s discovery of DNA’s double-helix structure in 1953, scientists have shown that biological cells are biological UCs. Biological cells contain their own internal codes: genomes in the form of nucleic acid base pairs. Cells also contain “3D printers” in the form of ribosomes, which read messenger RNA. During gene expression (the construction phase), DNA segments are transcribed into messenger RNA, and ribosomes assemble amino acid chains from messenger RNA templates to make proteins. Cells also possess copy machines for their internal code set out in the “DNA tape.” During DNA replication (the copying phase), proteins unzip the double helix and a special enzyme known as DNA polymerase builds new DNA helices from each strand.
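The parallel between construction and copying can be sketched in Python, under deliberate simplifications: the codon table is a tiny subset of the standard genetic code, the mRNA is read off the coding strand by swapping T for U (in the cell, RNA polymerase copies the template strand), and a helix is stored as a plain pair of strings, ignoring the strands’ opposite orientation.

```python
# A tiny subset of the standard genetic code: mRNA codon -> amino acid.
# None marks a stop codon. Codons outside this subset raise KeyError.
CODONS = {"AUG": "Met", "UUU": "Phe", "GGC": "Gly", "AAA": "Lys",
          "UAA": None, "UAG": None, "UGA": None}
PAIR = {"A": "T", "T": "A", "C": "G", "G": "C"}  # DNA base pairing


def transcribe(coding_strand: str) -> str:
    """Construction, step 1 (simplified): mRNA matches the coding strand, U for T."""
    return coding_strand.replace("T", "U")


def translate(mrna: str) -> list:
    """Construction, step 2: the ribosome reads three bases at a time until a stop."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODONS[mrna[i:i + 3]]
        if amino_acid is None:
            break
        protein.append(amino_acid)
    return protein


def complement(strand: str) -> str:
    """Pair each base with its partner (A-T, C-G)."""
    return "".join(PAIR[base] for base in strand)


def replicate(helix: tuple) -> tuple:
    """Copying phase: unzip the helix; each old strand templates a new partner."""
    top, bottom = helix
    return (top, complement(top)), (complement(bottom), bottom)


gene = "ATGTTTGGCTAA"
print(translate(transcribe(gene)))         # ['Met', 'Phe', 'Gly']
helix = (gene, complement(gene))
print(replicate(helix) == (helix, helix))  # True: two identical helices
```

The same string `gene` is read as instructions by `translate` and copied base by base by `replicate`: the two roles a genome plays.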

In a universal constructor, therefore, the internal blueprint serves two different functions. First, the blueprint functions as a set of instructions. When the UC reads the tape, it executes the operations written on it. Second, the internal blueprint is a carrier of genetic information. The internal code does not merely tell the present machine what to do—it contains the information that will determine future machines. When the tape is copied, it is not executed. Instead of treating the written symbols as code, the UC treats them as data.

The blueprint of a UC, therefore, is what biologists call a genome. A genome is an internal blueprint used to build an organism and carry genetic information for building future generations. DNA is a genome because it not only regulates the development and function of biological organisms, but also contains the genetic material used in self-replication. Thus, our DNA makes us who we are and our children who they are. The tape in von Neumann’s self-replicating automaton is a genome because the 3D printer treats the blueprint as code and the 2D copier treats it as data.

Earlier we noted how von Neumann’s “stored program” architecture exploits Turing’s principle of duality by feeding code and data into the same machine. We can now see how von Neumann leveraged duality yet again for self-replication. The UC can duplicate itself because it treats the symbols printed on its blueprint tape as code to follow as well as data to copy.
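Programmers know a software version of this trick as a quine, a program that prints its own source. In the Python sketch below, the two-line program stored in `SOURCE` is the quine; the surrounding lines only run it and compare. The string `s` plays the role of the tape: `s % s` follows `s` as a template (code) and, through `%r`, splices a quoted copy of `s` into the result (data). Like von Neumann’s blueprint, `s` does not contain itself; the copy is made when the program runs.

```python
import contextlib
import io

# The quine itself, as a two-line program:
#   s = 's = %r\nprint(s %% s)'
#   print(s % s)
SOURCE = "s = 's = %r\\nprint(s %% s)'\nprint(s % s)\n"

captured = io.StringIO()
with contextlib.redirect_stdout(captured):
    exec(SOURCE, {})  # run the program in a fresh namespace

print(captured.getvalue() == SOURCE)  # True: the program printed its own text
```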

Exploiting metacode, however, is dangerous. Just as hacking can abuse the duality principle by substituting code for data and vice versa, self-replicating malware manipulates the same ambiguity. Let us return to Vienna. When a user runs a Vienna-infected file, the operating system starts executing the program. Since an infected program always jumps to the virus first, the computer treats Vienna as code. But since Vienna then instructs the operating system to copy its own code into another program file, the computer also treats Vienna as data. The newly infected file will contain a new copy of Vienna, thus resulting in self-replication.
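The double role can be traced in a harmless simulation. The Python sketch below is hypothetical throughout: “programs” are lists of instruction strings in a dictionary standing in for a disk, and the marker names are invented. For brevity the toy virus is prepended to its host; it does not reproduce Vienna’s actual file layout. When the infected program runs, its instructions are executed (code); the `REPLICATE` step then slices those same instructions out of the program and writes them into another one (data).

```python
VIRUS = ["VIRUS_START", "REPLICATE", "VIRUS_END"]  # hypothetical viral "genome"


def run(name: str, disk: dict, output: list) -> None:
    """Execute a program from the toy disk, one instruction at a time."""
    program = disk[name]
    for instruction in program:                  # the program as CODE
        if instruction == "REPLICATE":
            start = program.index("VIRUS_START")
            end = program.index("VIRUS_END") + 1
            genome = program[start:end]          # the same symbols as DATA
            for other in list(disk):
                if "VIRUS_START" not in disk[other]:
                    disk[other] = genome + disk[other]
        elif instruction.startswith("PRINT "):
            output.append(instruction[len("PRINT "):])


disk = {"GAME.COM": VIRUS + ["PRINT play"], "EDIT.COM": ["PRINT edit"]}
out = []
run("GAME.COM", disk, out)
print(out)               # ['play']: the host still does its job
print(disk["EDIT.COM"])  # ['VIRUS_START', 'REPLICATE', 'VIRUS_END', 'PRINT edit']
```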

The duality principle, as we have seen, is a double-edged sword. It permits general computing but also malicious hacking. It enables self-replicating life, but also self-replicating malware. You can’t have the good without the bad. To stop viruses and worms, you have to stop computers from treating symbols both as code and as data, which would be the end not only of copying good software, but also of loading and running it.

We can also see why computers are so vulnerable to viruses. Viruses are genomes, and computers running an operating system contain universal constructors. Vienna doesn’t build itself—it hijacks the building and copying functions of the operating system and storage devices to do the job instead. The high school hacker who built Vienna, therefore, had a much easier job than John von Neumann. Vienna’s creator had universal constructors and copying machines already at their disposal. They just had to create the genome. Von Neumann, on the other hand, had to build every part from scratch. Without a computer. Using a cellular automaton. With two hundred thousand cells. In 1949.

We now know what viruses are and how they work. We are ready to face the really hard question: Why? Are there bugs in the mental upcode of virus writers that lead them to indiscriminately destroy data from innocent victims? Or is there a more charitable explanation for this cruel behavior?

The answer would come from a former social worker who recognized this delinquent behavior. And her most valuable informant would be the virus writer who was not only the most dangerous, but also the most innovative. Standing in the same scientific tradition that von Neumann had inaugurated, he developed new ways of exploiting viral genomes that threatened to render every personal computer on the planet unusable.

Enter Sarah Gordon

Sarah Gordon did not start her career as a virus researcher, or even in the tech industry. She grew up in extreme poverty in East St. Louis, in a house that had no heat or running water. She dropped out of school when she was fourteen and ran away from home. At seventeen, she received her high school diploma by passing every exam the school offered, despite not having taken any of the classes. She attended university for two years, studying theater and dance, before dropping out in the mid-1970s.

Having worked since she was nine, she had held many jobs: juvenile crisis counselor, foster parent, songwriter, and apartment swimming pool custodian. She grew her own food. And she liked to play with computers. In 1990, she bought her first personal computer, a secondhand IBM PC XT.

As Sarah familiarized herself with her pre-owned computer, she noticed something curious: whenever she accessed files on her disk drive at the half-hour mark, a small “ball” (actually, the bullet character, •) would ricochet around the screen. Her files seemed fine, but the ping-ponging ball was irritating. Sarah had no idea what was happening, so she asked around. But no one else knew either. In 1990, few Americans had encountered a computer virus.

As Sarah attempted to figure out what had infected her computer (it turned out to be the Ping-Pong virus, variant B), she logged onto FidoNet, the network that connected the virus exchanges. Virus writers, she could tell, swore like sailors and traded malware like baseball cards. She noticed that one user was treated with reverence—Dark Avenger.

Sarah was haunted by Dark Avenger. He felt familiar. Given her background in juvenile correction and youth in crisis, she recognized the rebellious relationship that troubled young men often have with authority figures. Sarah knew how to draw these young men out. She managed to correspond with other virus writers she met on FidoNet. Dark Avenger, however, was not interested in talking.

She posted on a bulletin board that she wanted to have a virus named after her. A few weeks later, her wish came true. Dark Avenger uploaded new malware to the bulletin board. In the source code to the virus, he commented, “We dedicate this little virus to Sara [sic] Gordon, who wanted to have a virus named after her.” This virus would be known as Dedicated.

Sarah would later regret making such a flippant request. Asking someone to name a virus after her was an invitation for Dark Avenger to create destructive code that could cause much damage. It was irresponsible solicitation.

But that was not all. The virus that Dark Avenger wrote was ensconced within another piece of malware that he also built. This program was a “polymorphic virus engine,” a tool for creating mutated viruses. (Polymorphic = poly [many] + morphic [form], “occurring in different forms or shapes,” such as genetic variations.)

Dark Avenger’s polymorphic Mutation Engine, usually referred to as MtE, is not a virus. It is a program that gives viruses polymorphic superpowers: the ability to shape-shift. The virus passes certain information to the MtE, such as its location and length, and the MtE does the rest. Without affecting the virus’s function, the MtE mutates viral code every time the virus infects a new file.

No one had ever seen a polymorphic virus engine before the MtE. By mutating viruses, the engine threatened to vanquish all antivirus software. When viruses emerged from Dark Avenger’s mutation engine, their altered genomes were unrecognizable to the existing detectors, which recognized only preset code patterns. Even worse, the MtE was an off-the-shelf program that anyone with a virus could use. It was small: a single object file, named “MTE.OBJ,” of a little over two thousand bytes. No one needed to understand how the polymorphic engine worked. Indeed, people didn’t even need to know how the virus they were using worked. A beginner could use the MtE to create undetectable, self-reproducing malware.

Existential fear shot through the computer industry: Would Dark Avenger’s virus engine produce hordes of invincible digital monsters terrorizing cyberspace and making it uninhabitable? Sarah Gordon had innocently requested a BB gun. She got a nuclear weapon instead.

Mutation Engine

Antivirus software works in three basic ways: behavior checking, integrity checking, and scanning. First, antivirus software can check for suspicious behavior. Benign programs don’t normally rifle through directories looking for command files. They also don’t copy themselves when they are executed. These are fishy, viruslike activities.
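A behavior checker can be sketched as rules applied to a log of what a program did. In this hypothetical Python version, the event format ((action, target) pairs) and the rules are assumptions chosen to match the two fishy activities just described. Real monitors intercept operating-system calls, and rules this blunt would also flag legitimate tools such as compilers, which write executables for a living.

```python
EXECUTABLE = (".com", ".exe")  # DOS-style executable extensions


def looks_viral(self_path: str, events: list) -> bool:
    """Flag a program that (a) searches directories for executables or
    (b) reads its own file and then writes into a different executable."""
    hunting = any(action == "search" and target.lower().endswith(EXECUTABLE)
                  for action, target in events)
    read_self = copied_self = False
    for action, target in events:
        if action == "read" and target == self_path:
            read_self = True
        elif (action == "write" and read_self and target != self_path
              and target.lower().endswith(EXECUTABLE)):
            copied_self = True
    return hunting or copied_self


editor = [("read", "NOTES.TXT"), ("write", "NOTES.TXT")]
suspect = [("search", "C:\\*.COM"), ("read", "GAME.COM"), ("write", "CALC.COM")]
print(looks_viral("EDIT.COM", editor))   # False
print(looks_viral("GAME.COM", suspect))  # True
```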

In integrity checking, antivirus software checks to see if files have been altered without authorization. To check, antivirus programs log file attributes, such as name, size, type, and permissions. If a command file suddenly balloons, then the program might suspect that the file is infected. If the increase is exactly 648 bytes, then the program will likely conclude that the file is infected with Vienna.
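Integrity checking amounts to keeping a baseline and comparing against it. The Python sketch below records each file’s size and SHA-256 digest (a cryptographic hash, a stronger fingerprint than the attributes listed above) and reports changes. The file contents and the one-entry table of known growth sizes are hypothetical, apart from the 648 bytes the text gives for Vienna.

```python
import hashlib

KNOWN_GROWTH = {648: "Vienna"}  # bytes added by infection -> likely culprit


def snapshot(files: dict) -> dict:
    """Baseline: file name -> (size in bytes, SHA-256 hex digest)."""
    return {name: (len(data), hashlib.sha256(data).hexdigest())
            for name, data in files.items()}


def check(baseline: dict, files: dict) -> dict:
    """Compare current files with the baseline and describe every change."""
    report = {}
    for name, data in files.items():
        if name not in baseline:
            report[name] = "new file"
            continue
        old_size, old_digest = baseline[name]
        if hashlib.sha256(data).hexdigest() != old_digest:
            growth = len(data) - old_size
            note = f"modified ({growth:+d} bytes)"
            if growth in KNOWN_GROWTH:
                note += f", likely {KNOWN_GROWTH[growth]}"
            report[name] = note
    return report


files = {"A.COM": b"\x90" * 1000, "B.COM": b"\xc3" * 10}
baseline = snapshot(files)
files["A.COM"] += b"\x00" * 648  # simulate an infection that appends 648 bytes
print(check(baseline, files))    # {'A.COM': 'modified (+648 bytes), likely Vienna'}
```

Note that the checker only notices the infection after the file has already changed.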

The first two antivirus techniques—simple behavior and integrity checkers—suffer from the same drawback: they can detect viruses only after infection. If you want to prevent those infections, however, you need a scanner. Recall that computer viruses spread because they are simultaneously code and data. When they run as code, they search for hosts to infect and prepare their targets for infection. When they execute their copying instructions, they treat themselves as data. The code does not execute itself, but transfers information to the prepared host, as it would other data.

While viruses exploit the duality of code and data, it is also their vulnerability. If viruses are not only code, but also data, then scanners can identify viruses based on their data. A unique sequence of symbols in a genome is called a genetic signature. Scanners review questionable code for signatures of known viruses. If the program contains the signature, it is either rejected or disinfected. Because scanners treat viral code as data, a virus writer might try to fool scanners by adding junk instructions. For example, he might add the instructions “Put the value 23 into Register A. Take 23 out of Register A.” These dummy commands are functionally irrelevant, but they change the virus’s genetic signature.

Of course, researchers who stock antivirus software know these tricks. They hunt for unique signatures, strings of code essential to the proper working of the virus. Because the virus cannot work without those strings, adding junk code elsewhere would not disguise its signature.
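Signature scanning is, at heart, a substring search over a file treated as data. In the hypothetical Python sketch below, readable byte strings stand in for machine code, the “essential” string is invented, and the junk is assumed to be added outside it. A signature taken from the whole virus breaks as soon as junk is added, while one taken from the essential string still matches.

```python
ESSENTIAL = b"FIND *.COM;COPY SELF;"   # hypothetical code the virus needs
JUNK = b"PUT 23 IN A;TAKE 23 FROM A;"  # does nothing, but changes the bytes

virus_v1 = b"START;" + ESSENTIAL
virus_v2 = b"START;" + JUNK + ESSENTIAL  # same behaviour, different bytes

SIGNATURES = {
    "whole-virus signature": virus_v1,
    "essential-string signature": ESSENTIAL,
}


def scan(data: bytes, signatures: dict) -> list:
    """Treat the file purely as data and list every signature it contains."""
    return [name for name, signature in signatures.items() if signature in data]


host = b"HOST PROGRAM;"
print(scan(host + virus_v1, SIGNATURES))  # both signatures match
print(scan(host + virus_v2, SIGNATURES))  # ['essential-string signature']
print(scan(host, SIGNATURES))             # []
```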

Here is where Dark Avenger’s Mutation Engine comes in. The MtE mutates a virus’s code, scrambling its genetic signature so that the virus can evade detection by antivirus software.

To mutate the code, the MtE scrambled the sequence of instructions so that no scanner could tell that the new code was functionally the same as the old code.

Parent: Instruction 1 | Instruction 2 | Instruction 3 | Instruction 4 | Stop

Child: Instruction 1 | Jump to 2 | Instruction 2 | Jump to 3 | Instruction 4 | Jump to Stop | Instruction 3 | Jump to 4
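The parent/child layout above can be modeled in Python. This is a toy illustration of the reordering idea in the table, not a reconstruction of the MtE's actual code. The instruction names, the `mutate` and `execute` helpers, and the ("DO", instruction) and ("JMP", position) encoding are all hypothetical. Each instruction after the first is moved to a random position and followed by a jump to its logical successor, so the layout changes while the work performed does not.

```python
import random


def mutate(program, rng):
    """Shuffle every instruction except the first, then chain them with jumps.

    Returns a flat list of ("DO", instruction) and ("JMP", position) entries,
    where a JMP target of None means Stop.
    """
    n = len(program)
    order = [0] + rng.sample(range(1, n), n - 1)  # Instruction 1 stays first
    position = {i: 2 * slot for slot, i in enumerate(order)}  # where each lands
    position[n] = None  # the successor of the last instruction is Stop
    flat = []
    for i in order:
        flat.append(("DO", program[i]))
        flat.append(("JMP", position[i + 1]))
    return flat


def execute(flat):
    """Run a flat program with a program counter; return the work performed."""
    trace, pc = [], 0
    while pc is not None and pc < len(flat):
        op, arg = flat[pc]
        if op == "DO":
            trace.append(arg)
            pc += 1
        else:  # "JMP"
            pc = arg
    return trace


parent = ["Instruction 1", "Instruction 2", "Instruction 3", "Instruction 4"]
child = mutate(parent, random.Random(7))

print(child)  # the layout depends on the seed
print(execute([("DO", x) for x in parent]) == execute(child))  # True
```

A scanner comparing the parent's layout with the child's sees different sequences, even though `execute` performs the same four steps in the same order.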

Aside from confusing scanners, mutated viruses can drive antivirus researchers bananas. Working out the logic of these mutations, with all their shaggy-dog twists and turns, is exhausting.

In creating a mutation engine for computer viruses, Dark Avenger stands in the same scientific tradition as John von Neumann. Von Neumann showed that automata must contain their own genetic information to construct new copies of themselves. Dark Avenger was now showing how to edit that genetic information. To use an analogy from current genetic engineering, if the tape of a universal constructor is akin to DNA, the MtE is similar to CRISPR, the popular laboratory tool used to edit base pairs of genomes.

By editing the “DNA” of a virus, MtE constituted a major threat to the fledgling antivirus industry. This killer app was capable of evading every antivirus program then in existence. Indeed, it would take the industry several years to develop a defense.

If mutations render scanners useless, protective software has to run suspected viruses and hope to catch them in flagrante delicto, doing viruslike actions. Instead of treating them as data, antivirus programs treat them as code. They turn to behavior checkers to see what they do.

To prevent the virus from spreading to the rest of the computer, next-generation antiviral software created tiny “virtual machines.” A virtual machine is code that simulates a separate computer—a computer within a computer. The operating system stores and executes virtual machines in memory spaces that are sealed off from the real machine. In this way, what happens in the virtual machine stays in the virtual machine.
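The sealed-off virtual machine can be sketched as an interpreter whose "disk" is a private copy of the files. Everything here is a hypothetical toy: the two operations (`READ`, `APPEND`), the `ToyVM` class, and the rule that appending to a `.COM` or `.EXE` file counts as viruslike behavior are assumptions for illustration, not how any particular product works.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVM:
    """A toy machine whose disk is a private dict, sealed off from the host."""
    disk: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def run(self, program):
        """Execute each (op, *args) step inside the VM and log what it did."""
        for op, *args in program:
            if op == "READ":
                self.log.append(("read", args[0]))
            elif op == "APPEND":
                name, data = args
                self.disk[name] = self.disk.get(name, b"") + data
                self.log.append(("append", name))
            else:
                raise ValueError(f"unknown operation {op!r}")
        return self.log


def looks_viral(log):
    """Flag the behavior a file infector shows: growing an executable."""
    return any(kind == "append" and name.upper().endswith((".COM", ".EXE"))
               for kind, name in log)


real_disk = {"GAME.COM": b"\x90" * 1000}  # hypothetical host files
suspect = [("READ", "GAME.COM"), ("APPEND", "GAME.COM", b"\x00" * 648)]

vm = ToyVM(disk=dict(real_disk))  # the VM works on a copy, never the original
print(looks_viral(vm.run(suspect)))  # True
print(len(real_disk["GAME.COM"]))   # 1000: the real file is untouched
```

The suspect program is caught in the act of growing an executable, yet only the VM's copy of `GAME.COM` changes.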

Modern antiviral software also examines the computer’s power consumption. According to the Physicality Principle, code needs energy to run, and malware is code. If the CPU draws more power than the software expects, the software will flag the anomaly as a sign that nefarious activities are afoot.
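One simple way to decide whether the CPU is drawing "more power than the software expects" is a statistical threshold. This is a minimal sketch, assuming a baseline of power readings in watts and a hypothetical cutoff of three standard deviations above the baseline mean. The text does not say what rule real antiviral products use, and the numbers below are invented.

```python
from statistics import mean, stdev


def power_anomalies(baseline_watts, readings_watts, k=3.0):
    """Return indices of readings more than k standard deviations above baseline."""
    limit = mean(baseline_watts) + k * stdev(baseline_watts)
    return [i for i, w in enumerate(readings_watts) if w > limit]


baseline = [10.0, 10.5, 9.8, 10.2, 10.1]  # invented idle readings (watts)
readings = [10.3, 10.0, 18.7, 10.4]       # invented live readings
print(power_anomalies(baseline, readings))  # [2]
```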

Silicon Valley of the East

Though Vesselin spent his days and nights battling viruses, he did not dislike those who wrote them. After all, some of these writers were his friends. And he understood why they were writing viruses.

According to Vesselin, “The first and most important [reason] of all is the existence of a huge army of young and extremely qualified people, computer wizards, who are not actively involved in the economic life.” The main reason why so many of his countrymen were writing viruses was the lack of another outlet for their technical skills and creativity. In short, they were bored.

Indeed, the Bulgarian virus writers had the ideal skills for creating malware. From its first five-year plan, covering 1967–72, through the end of Communist rule, Bulgaria invested heavily in reverse engineering and copying Western computers. It formed a massive consortium of industry and academe—known as ZIT—to reverse engineer and copy IBM mainframes and DEC minicomputers. These plans funded computer-science departments that taught their students how to dismantle computers for analysis. Once a computer’s inner logic and engineering had been deciphered, engineers would devise a process for replicating the machine.

Reverse engineering was taught not officially in classes but informally in labs. As Kiril Boyanov, a onetime engineer at ZIT who rose to manage a laboratory of 1,200 researchers, described the process, “I would take the best students and choose them to study with me for their doctorates. I was teaching them to analyze equipment and duplicate it. For instance, we would get the latest IBM logic boards and figure out how they worked. Sometimes we would find mistakes and fix them.” Boyanov took pride in that mission and the accomplishments of his countrymen. “In the U.S.A., they needed tools to construct products. Here, we needed tools to deconstruct these products … The quality was not as good, but they worked. We built an economy on this.”

As the 1980s began, the Bulgarian Communist Party turned its attention to a new arrival in computing: the Apple personal computer. The Communist leader of Bulgaria, Todor Zhivkov, selected his hometown of Pravetz as the home of the new Bulgarian personal computer. The Pravetz-82, as it was known, was just the Apple II Plus, with Cyrillic letters swapped in for the Latin alphabet and a cheesy wood-grained plastic chassis. These knockoffs were sent to schools across Bulgaria. By the late eighties, Bulgarian students had access to more computers than their counterparts in any other socialist bloc country.

Vesselin understood that these young men were trained with a high-tech skill but had nothing to use it on. Bulgaria had few software companies, and the salaries were minuscule. Writing cute and clever viruses was an outlet for creativity—like graffiti artists using buildings as canvases.

But the psychological need to create was not the only reason for the Bulgarian virus factory. Vesselin worked his way up the upcode stack and showed how multiple norms contributed to Bulgaria’s virus epidemic. Since software piracy was so widespread in Bulgaria—according to Vesselin it “was, in fact, a kind of state policy”—infections were, too. When everyone copies programs instead of buying them from the manufacturer, viruses have an easy way of moving from disk to disk, computer to computer. Software manufacturers could do nothing about this piracy because Bulgaria had no copyright laws.

Nor was writing or releasing viruses a criminal offense in Bulgaria. Law enforcement had no authority to stop those who wrote self-replicating malicious code and intentionally released it. In Bulgaria’s defense, not only was the country dealing with the collapse of Communism and the disintegration of its economy, but the United States had enacted a law criminalizing unauthorized access only five years before, in the CFAA of 1986. And the CFAA did not prohibit writing viruses. In fact, virus writing is likely protected speech under the First Amendment of the U.S. Constitution. The CFAA criminalized the intentional release of malicious code leading to unauthorized access. Still, the CFAA did not prohibit all unauthorized access—only government and bank computers were covered. Robert Morris was convicted because he released his worm on the early internet, which ensured that he would access a government computer. But it was unclear how federal authorities would handle purely DOS viruses.

According to Vesselin, the lack of civil or criminal penalties for pirating software was a symptom of a larger problem: “There is no such thing as ownership of computer information in Bulgaria. Therefore, the modification or even the destruction of computer information is not considered a crime since no one’s property is damaged.” Even public opinion was on the virus writers’ side. Bulgarians did not think the writers were doing anything wrong, even when they themselves were harmed by viruses. “The victims of a computer virus attack consider themselves victims of a bad joke, not victims of a crime.”

Vesselin understood the widespread harm that viruses were creating. He regarded the new national pastime as irresponsible and juvenile. He even criticized his friend Teodor in print for writing viruses (though he used his initials, T. P.). Even if this activity was not justifiable, it was at least understandable.

Vesselin could not, however, understand Dark Avenger. His exploits were so destructive, so malevolent, that their creator had to be psychologically abnormal. “While the other Bulgarian virus writers seem to be just irresponsible or with childish mentality, the Dark Avenger can be classified as a ‘technopath.’” The feeling was mutual. Dark Avenger despised Vesselin as well and called him “the weasel.”

In part, the antipathy is understandable. They were natural enemies: Dark Avenger, the virus writer, was the viper; Vesselin Bontchev, the antivirus researcher, the mongoose. How could they not dislike each other?

But the natural antipathy between virus and antivirus writers cannot fully explain the mutual loathing. Dark Avenger was likely hurt by Vesselin’s harsh critique of his viruses. When analyzing Dark Avenger’s creations in Computer for You, Vesselin savaged the code, calling it sloppy and pointing out errors. While the rest of the virus world thought of Dark Avenger as a viral deity, Vesselin portrayed him as a rank amateur.

Vesselin thought the Dark Avenger hated him because Vesselin got all the credit for Dark Avenger’s hard work. Dark Avenger was the artist, Vesselin merely the critic. Vesselin’s colleague, Katrin Totcheva, had a different theory. Dark Avenger was a fan of heavy-metal music. His viruses were loaded with references to Iron Maiden (although he had a soft spot for Princess Diana). Heavy-metal fans wear dark T-shirts and dislike people who wear suits. Vesselin wore a suit all the time—the same suit. Katrin had never seen him unsuited. Vesselin Bontchev and Dark Avenger were from rival taste cultures: Vesselin the clean-cut authority figure, Dark Avenger the unwashed outlaw.

Another possible explanation for this enmity is that, even in a world of eccentrics, both men stood out as extreme. Vesselin was mercilessly severe in his critique of virus writers, regardless of whether they were strangers or friends. As a founding member of CARO, he advocated a zero-tolerance policy for virus writing. He yelled at the head of the Bulgarian Academy of Sciences for even suggesting that he was trafficking in viruses. Dark Avenger wrote viruses that were not only highly contagious, but also among the most malicious. He was not merely toying with harmless viruses, like others in the Bulgarian virus factory; his payloads were meticulously designed to destroy data.

Whatever the reason for the antipathy, Dark Avenger lashed out by revising Eddie and inserting a new string into the code: “Copyright (C) 1989 by Vesselin Bontchev.” Dark Avenger was trying not only to frame Vesselin, but also to thwart his antivirus software. When run, Eddie.2000 (so named because it was exactly two thousand bytes long) would search files for Vesselin’s name, a sign that the system was running his antivirus software. When Eddie.2000 detected the string, it would hang the system.

Dark Avenger and Vesselin developed a codependent relationship. Each needed the other for notoriety, so much so that rumors began circulating that Dark Avenger and Vesselin Bontchev were the same person. Gossips claimed that Dark Avenger was Vesselin’s “sock puppet,” a deceptive online identity. Many of those who did not believe the rumors, however, thought that Vesselin was unnecessarily antagonistic, publicly taunting and provoking Dark Avenger to lash out with even greater rage.

Dark Avenger’s hatred of Vesselin likely motivated him to write the Mutation Engine. In 1991, Dark Avenger sent the following announcement to FidoNet:

Hello, all antivirus researchers who are reading this message. I am glad to inform you that my friends and I are developing a new virus that will mutate in 1 OF 4,000,000,000 different ways! It will not contain any constant information. No virus scanner can detect it. The virus will have many other new features that will make it completely undetectable and very destructive!

The responses, however, were uniformly negative, some abusively so. The idea of creating a mutation engine to defeat all forms of antivirus software was deemed too dangerous. Virus writers have upcode too, and Dark Avenger violated even their lax rules. He did not take it well:

I received no friendly replies to my message. That’s why I will not reply to all these messages saying “Fuck you.” That’s why I will not say more about my plans.

But one person was friendly to Dark Avenger: Sarah Gordon.

Moral Psychology of the Virus Writer

Because computer-virus writing was a relatively new phenomenon, social scientists had not studied virus writers. Sensational reports from the media drove a stereotype. “The virus writer has been characterized by some as a bad, evil, depraved, maniac, terrorist, technopathic, genius gone mad, sociopath,” Sarah Gordon reported in 1994. She set out to discover whether this stereotype was true. Were virus writers morally abnormal?

To find out, she needed to find her subjects. She estimated that there were a maximum of 4,500 virus writers in the world because approximately 4,500 viruses were thought to then exist. The vast majority of the 4,500 were zoo viruses, written for research purposes, or solely for submission to antivirus companies. Sarah focused on those who wrote and released viruses. In 1993, there were estimated to be 150 viruses “in the wild.” She estimated that a total of one hundred virus writers were responsible for them because some writers, such as Dark Avenger, had written multiple wild viruses.

Sarah sent detailed surveys to underground bulletin boards in the United States, Germany, Australia, Switzerland, Holland, and South America. For those who did not want to fill out the surveys, she conducted detailed interviews over email, internet relay chat, telephone, and in person. She received responses from sixty-four virus writers, three of whom were hostile.

When she collated the responses, she discovered that there was no such thing as the “generic virus writer.” Virus writers varied in age, location, income level, educational level, and taste. Based on the responses, she identified four groups of virus writers: (1) the Adolescent, ages 13–17; (2) the College Student, ages 18–24; (3) the Adult / Professionally Employed, post-college or adult and professionally employed; (4) the Ex–Virus Writer, who has quit writing and releasing viruses. Every virus writer she studied identified as male. Sarah interviewed only two women: the girlfriend of a virus writer, and another who had been involved with the virus-writing group NuKE. There was no evidence, however, that either had written viruses.

To test whether these virus writers were ethically normal, Sarah used the framework for moral development expounded by the psychologist Lawrence Kohlberg. Kohlberg described three levels of moral development through which humans normally pass. At the first level, the child learns to respond to external threats of punishment and reward. At the second level, the adolescent internalizes moral rules, though the motivation depends on the costs and benefits of compliance, largely through the reactions of family and peers. At the third level, the adult respects the moral rules for their own sake and develops a personal code of ethics.

In assessing her subjects, Sarah found that, with one exception, the virus writers were ethically normal according to the Kohlberg framework. Adolescents were either average or above average in intelligence, showed at least some respect for parents and authority, and did not take responsibility for problems caused by their creations when they ended up in the wild—typical responses for their age. College students were similarly intelligent, and recognized that illegal behavior is morally wrong, but were not especially concerned with the negative consequences of their virus writing. The ex–virus writers appeared socially well-adjusted. They gave up virus writing because they lacked the time and ultimately found it boring. They harbored no ill will toward other virus writers but were unsure about the ethics of the behavior.

The only group that seemed morally stunted was the adult virus writers. They were unable to rise above the second level of moral development. These men consistently viewed “society” as the enemy. They refused to see virus writing or distribution as illegal or morally problematic.

While Sarah recognized that the research was far from definitive, she hypothesized that virus writing and releasing is an activity that young people typically “age out” of. Virus writing is no different from other deviant activities—adolescents and young men eventually mature and become good members of society. “A lot of the people who do this are, in all aspects, normal, decent people,” she reported. The adults in the third group were ethically abnormal, she surmised, because they were the few that did not age out. They never grew up enough to leave virus writing behind.

The majority of virus writers surveyed were like the youths in crisis she recognized from her work with juvenile offenders—indeed, from her personal history. They were developing human beings experimenting with deviant behavior and testing boundaries. In public, they sounded tough and rebelled against authority. But in private, they were thoughtful. In one-on-one sessions, they would express “frustration, anger and general dissatisfaction followed by small glimpses of conscience—often resulting in a decision to at least consider the consequences of their actions.” Sarah noted how the online space distorted their moral judgment. Because they didn’t see the harm they caused, they thought that they caused no harm. “It’s very possible that sometimes when virus writers say, ‘Viruses don’t really hurt people,’ they believe that. They haven’t seen that other person crying because they lost their thesis.”

Aging Out

In the 1940s, Sheldon and Eleanor Glueck conducted a massive study entitled Unraveling Juvenile Delinquency. The Gluecks followed five hundred male youth offenders, with ages ranging from ten to seventeen at the start of the study. The study was comprehensive, with data collected when the subjects were fourteen, twenty-five, and thirty-two years old, including detailed physical examinations and interviews of teachers, neighbors, and employers. Such data collection would almost certainly be blocked by modern institutional review boards, which makes the data set unique. One of the study’s most significant discoveries was that the crime rate is not steady over a person’s life, but declines at about thirty years of age. “All this seems to point to the effect of ‘maturation’—a time of slowing up and more effective emotional and physiological integration,” Eleanor Glueck proposed.

In the 1990s, sociologists Robert Sampson and John Laub extended and deepened the Gluecks’ analysis by expanding their data set. They returned to the Glueck subjects at age seventy, reviewing continued criminal histories and death records and interviewing fifty-two of the remaining subjects. According to Sampson and Laub, a close look at the extended data set revealed that such traits as low self-control, antisocial behavior, or a lower socioeconomic status did not explain long-term offending. Rather, offending was best predicted by looking at both age and social ties. In particular, adolescent and adulthood experiences and environments could change criminal trajectories (both positively and negatively), and Sampson and Laub dubbed these new experiences and environments turning points.

These studies lend support to Sarah Gordon’s thesis that those who write viruses for fun are not monsters. They have a dual nature, or, as philosophers would say, they are moral agents with free will. While they often act out in rebellious and destructive ways, they also have the capacity to be decent and productive members of society. Adolescence is a period of experimentation when people play with this ethical duality. Even when they make poor choices early on, these young men tend to mellow with age. Maturation takes longer for some, while positive turning points hasten the process for others, but the basic trajectory is reduced delinquency over time.

Sarah’s report on her research, “The Generic Virus Writer,” was an instant hit. When she presented it at the 4th International Virus Bulletin Conference in September 1994, she received her first job offer in the computer industry. The press picked up the story and began calling. Those in the antivirus community, however, were unhappy. They dismissed her research. To them, it did not matter what virus writers said about their motivations. Virus writing is bad, so virus writers are bad. Vesselin was particularly scathing about her work; in private industry forums, he would later attack Sarah personally, calling her “incompetent” and “irresponsible.”

In part, the antivirus industry is built on fear. If users don’t fear viruses, they won’t buy antivirus products or fund antivirus research. Since malware is a real threat, the fear that the industry needs for survival is rational and justifiable. We should be afraid of malware and should buy protection. Nevertheless, anything that undercuts that dread is not good for the industry. Sarah Gordon’s findings threatened to humanize the virus writer and thus ease ambient anxiety. Understandably, the antivirus industry did not love that message.

Another possibility was suggested by Peter Radatti, founder of CyberSoft, one of the original antivirus companies. Industry insiders had strong reactions to Sarah Gordon because she was “the only woman in the entire industry. I mean, like, there was only one: Sarah. And she was extremely attractive and intelligent. She was really good at what she did. But there was a lot of testosterone. And they didn’t like that Sarah disagreed with them. She was unfairly attacked.” Additionally, at the time, Sarah Gordon was working toward her bachelor’s degree at Indiana University. This undergraduate had just shown credentialed bigwigs that their male-dominated industry was built on unscientific hype.

Sadly, the same barriers to acceptance that Sarah Gordon faced in the forums persist in full force today—for hackers as well as security professionals. Cambridge University professor Alice Hutchings and University of Alabama professor Yi Ting Chua elaborate on the social and economic factors that keep women out of hacking: stereotypes and societal expectations for women, the existing gender gap in the sciences, and, most notably, the barriers they face when trying to be accepted by the gaming community and the hacking community that it fosters. When Hutchings interviewed a young male hacker, he gave the following explanation: “So what happens is that when you get a girl that says she can do these things, she gets scrutinized more, more people will work against her, because they hold such prejudices against her. So it’s just not worth it.”

Dark Avenger and Sarah Gordon

Sarah was shocked when Dark Avenger dedicated his demo virus attached to the MtE to her. She reached out to him with her survey but got a dismissive response, routed through an intermediary: “You should see a doctor. Normal women don’t spend their time talking about computer viruses.”

Undeterred, she laboriously composed a message in Bulgarian asking Dark Avenger whether he would answer some questions. She passed it to an American security researcher who was in regular contact with him. Dark Avenger quickly responded. Soon they were corresponding over the internet.

Sarah Gordon and Dark Avenger communicated for five months. She has never made those messages public, except for excerpts that she published in 1993 (with Dark Avenger’s permission). These snippets are revealing. They show that Dark Avenger expressed remorse for his behavior and considered the moral consequences of his actions. They also show that he was belligerent, resentful, and prone to blaming his victims.

Sarah was one of those who initially suspected that Dark Avenger and Vesselin Bontchev were the same person. Sarah’s interactions with both convinced her otherwise. When Dark Avenger sent her Dedicated, Sarah asked him how she could confirm that he had written the virus. Dark Avenger mailed her a package with the printout of the source code, a floppy disk with a new virus on it (Commander Bomber), a handwritten letter (with excellent penmanship), and a photograph. The photo was not of Vesselin Bontchev. Sarah has spoken to both men and they have different voices. She once messaged with Dark Avenger at the same time that Vesselin was giving a talk.

Her interactions with Dark Avenger convinced her that Vesselin was wrong. Dark Avenger was not a crazed technopath. Indeed, he did not fit into any of her four groups of virus writers: “He has very little in common with the usual crop of virus writers I have talked to. He is, all in all, a unique individual.”

Sarah’s main area of questioning concerned motivation. Why did Dark Avenger write destructive viruses? And why did he seem so unconcerned by the damage he was causing?

SG: Some time ago, in the FidoNet virus echo, when you were told one of your viruses was responsible for the deaths of thousands, possibly, you responded with an obscenity. Let’s assume for the moment this story is true. Tell me, if one of your viruses was used by someone else to cause a tragic incident, how would you really feel?

DA: I am sorry for it. I never meant to cause tragic incidents. I never imagined that these viruses would affect anything outside computers. I used the nasty words because the people who wrote to me said some very nasty things to me first.

Sarah found this explanation surprising. After all, Dark Avenger knew that he caused damage because he designed his viruses that way. His notoriety depended on his creations being highly contagious and destructive. His nemesis was hired to combat the virus epidemic that he helped start. Claiming ignorance was just not believable.

SG: Do you mean you were not aware that there could be any serious consequences of the viruses? Don’t computers in your country affect the lives and livelihoods of people?

DA: They don’t, or at least at that time they didn’t. PCs were just some very expensive toys nobody could afford and nobody knew how to use. They were only used by some hotshots (or their children) who had nothing else to play with. I was not aware that there could be any consequences. This virus was so badly written, I never imagined it would leave the town. It all depends on human stupidity, you know. It’s not the computer’s fault that viruses spread.

In this one answer, Dark Avenger listed the most common defenses virus writers use for their activities: (1) no one had computers to infect; (2) only rich people had computers to infect; (3) computers are toys, so damaging their data is not harmful; (4) I had no idea there could be damaging consequences; (5) my viruses were not intended to infect other computers; (6) viruses don’t infect computers, people infect computers when they use pirated software.

Sarah had heard these excuses before and decided to drill down on Dark Avenger’s reasons. She began by asking him why he started to write viruses. He responded that he wrote them out of curiosity. Ironically, the motivation for writing his first virus, Eddie, was reading the translated article in Computer for You that Vesselin had helped to correct. “In its May 1988 issue there was a stupid article about viruses, and a funny picture on its cover. This particular article was what made me write that virus.” Eddie, however, was extremely destructive, and Dark Avenger expressed remorse for its payload. “I put some code inside [Eddie] that intentionally destroys data, and I am sorry for it.”

When Sarah asked him whether he thought destroying data is morally acceptable, Dark Avenger was direct: “I think it’s not right to destroy someone else’s data.” If so, why did he put the destructive payload in Eddie? “As for the first virus, the truth is that I didn’t know what else to put in it. Also, to make people try to get rid of the virus, not just let it live.” Dark Avenger seems to be claiming that he could not think of any other payload except for one that slowly and silently destroyed data and all backups. That he would think that people would try to get rid of Eddie, rather than pass it on, papers over how Eddie was designed to be undetectable before it had spread and caused terrible damage.

Class resentment comes out several times in the exchanges between Sarah and Dark Avenger. For example, Dark Avenger repeated his allegation that only rich people had computers: “At that time there were few PCs in Bulgaria, and they were only used by a bunch of hotshots (or their kids). I just hated it when some asshole had a new powerful 16MHz 286 and didn’t use it for anything, while I had to program on a 4.77 MHz XT with no hard disk (and I was lucky if I could ever get access to it at all).” He also blamed computer users for their software piracy. “The innocent users would be much less affected if they bought all the software they used … If somebody instead of working plays pirated computer games all day long, then it’s quite likely that at some point they will get a virus.”

Dark Avenger admitted to enjoying the fame and power. He loved when his viruses made their way into Western programs. He was feared and his handiwork could not be ignored. He also regarded his viruses as extensions of his identity, parts of him that could escape dreary Bulgaria and explore the world: “I think the idea of making a program that would travel on its own and go to places its creator could never go was the most interesting for me. The American government can stop me from going to the U.S., but they can’t stop my virus.” Indeed, Dark Avenger inserted the string “Copy me—I want to travel” into Eddie.2000.

Dark Avenger’s strongest reactions, however, were reserved for Vesselin: “The weasel can go to hell.” Dark Avenger even insinuated that Vesselin was to blame for the Bulgarian virus factory: “His articles were a plain challenge to virus writers, encouraging them to write more. Also they were an excellent guide how to write them for those who wanted to, but did not know how.”

Sarah Gordon had expected that Dark Avenger would not like Vesselin. They were on opposite sides of a battle. But she was perplexed by the vitriol: “There is such an animosity between the two of you, which seems unlikely to exist for two ‘strangers.’ Why is this?”

Dark Avenger replied, “Please, let’s not talk about him ever again. I don’t want you to talk to him.”

When Dark Avenger read on the internet that Sarah Gordon was engaged to be married, their correspondence turned ugly. Their contact ended shortly after her marriage. “I think he may have been one of the kindest people I have met,” Sarah told me twenty-five years later, “and one of the most dangerous.”

Who Is Dark Avenger?

After Sarah Gordon published her dialogue with Dark Avenger, rumors began to circulate that she was Dark Avenger. But Sarah Gordon wasn’t. Nor was Vesselin Bontchev. Who, then, was Dark Avenger?

When I asked Vesselin Bontchev, who currently works at the National Laboratory of Computer Virology at the Bulgarian Academy of Sciences, the lab he founded over thirty years ago, he refused to answer. He said that he was careful not to accuse people of virus writing. Vesselin did mention that he saw Dark Avenger once. After a lecture Vesselin gave at the University of Sofia about a Dark Avenger virus, Name of the Beast, a group of men approached him to discuss his analysis. One person, whom Vesselin described as “short and angry,” stood listening but said nothing. Afterward, Vesselin’s friends in the virus scene confirmed that he was Dark Avenger.

In my conversations with him, Vesselin was adamant that he does not—and would not—“name names.” However, he did—on camera. In a 2004 German documentary entitled Copy Me—I Want to Travel, three women set out to discover Dark Avenger’s true identity. They do not succeed. But in the film, they interview Vesselin Bontchev and ask him about the true identity of Dark Avenger. His answer: Todor Todorov. Todorov, aka Commander Tosh, is the Bulgarian who started the vX bulletin board in 1990.

Circumstantial evidence links Todorov to Dark Avenger. Dark Avenger stopped releasing viruses in 1993, a few weeks before Todorov left Bulgaria for three years. Then, in January 1997, a hacker calling himself Dark Avenger gained root access to the University of Sofia network. For two days, he controlled the largest university system in the country. Todor Todorov had returned to Sofia a month earlier, in December 1996.

When David Bennahum, a writer for Wired, contacted Todor Todorov in 1998, he got a hostile response. “What do you think of Vesselin Bontchev?” Bennahum asked.

“He’s an idiot!”

“And Sarah Gordon?”

“She’s a nice lady.”

When Bennahum asked Todorov what he thought of Dark Avenger, he replied, “I do not want to talk about him. That time is gone. It is finished! I will not talk about it.”

Sarah would not tell me the identity of Dark Avenger. He was one of her subjects, and research subjects are owed anonymity. But Sarah did answer one question. I asked whether Vesselin and Bennahum were correct in thinking that Todor Todorov is Dark Avenger. She responded, “Incorrect.”

I wondered how Vesselin and Bennahum could be so wrong. Why did they think that Todor Todorov was Dark Avenger when, according to Sarah Gordon, he definitely was not? One hypothesis, raised by both Vesselin and Sarah, is that Dark Avenger is both a “he” and a “they.”

According to Vesselin, Dark Avenger is the short, angry man he once met at a talk. But “Dark Avenger” also refers to a group of friends from the University of Sofia. Different members of this group provided ideas and snippets of code to their short, angry friend, who assembled the viruses and spread them around.

Sarah’s version is slightly different. “Dark Avenger” initially referred to the person who wrote the first viruses and whose identity only she knows. But as Dark Avenger’s fame grew, others assumed his identity as well. Even if not the original Dark Avenger, Todor Todorov might have assumed that cloak at some point. Indeed, writers might have collaborated. A collaboration would help explain the dramatic improvement in the quality of the later viruses.

Dark Avenger’s true identity remains a mystery decades later. That someone, or some group, could wreak havoc on a global scale and remain anonymous is remarkable, especially considering that Bulgaria is a small country with an intimate virus scene. Dark Avenger’s obscurity was a harbinger of things to come. As the golden age of Bulgarian virus writing came to an end, a new generation would also don the cloak of anonymity to act with total impunity.