7. HOW TO MUDGE

Billy Rinehart is an environmental activist who founded Blue Uprising, a political action committee dedicated to protecting the oceans. His picture on the organization’s website shows him at the helm of a sailboat, with a blond beard, barrel chest, muscular arms, and the broad smile of someone who lives for the spray. The thirty-three-year-old activist is also an avid surfer and frequently travels to Hawaii with his wife to chase the waves. But on March 22, 2016, Billy wasn’t in Honolulu to surf. He was running the Clinton campaign for the upcoming Democratic primary contest in Hawaii. As he awoke in his hotel at 4:00 a.m. (Hawaii standard time), he had no idea what was about to hit him.

Still groggy, Billy opened his laptop to find an email from Fancy Bear. Fancy Bear is a nickname for the computer hacking unit of the GRU (Glavnoye Razvedyvatelnoye Upravlenie—literally, Main Intelligence Directorate of the General Staff, Russia’s military intelligence agency). Fancy Bear wanted the password to Billy’s Gmail account. It hoped to fish out sensitive communications from the Clinton campaign about rival candidate Bernie Sanders. Billy gave Fancy Bear his password, got dressed, and went to campaign headquarters.

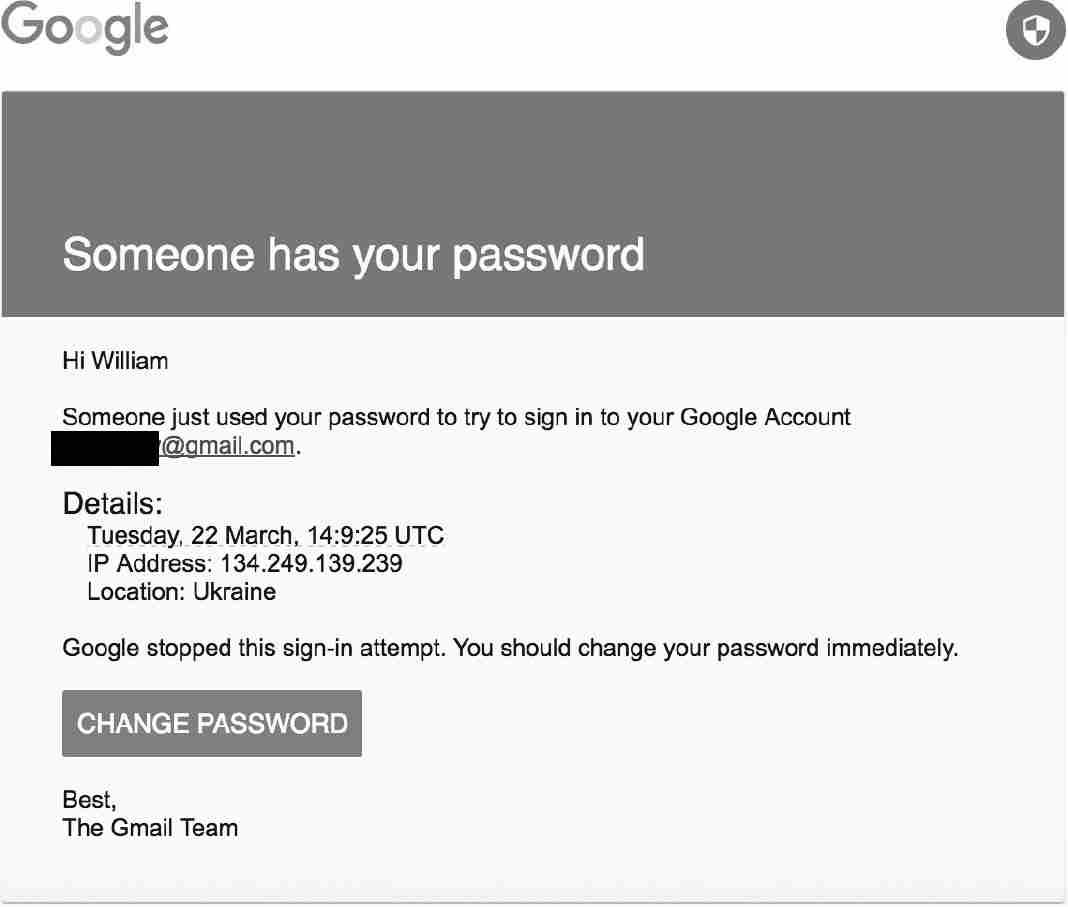

Billy was not a Russian mole in the Clinton campaign. Nor was he the only staffer to provide his password. Rather, Billy had fallen for a ruse. The email sent by Fancy Bear looked just like a message from Google. There was a red banner at the top reading, “Someone has your password”; technical information in the body of the message detailing the time, IP address, and location of the attempted hack; and a blue box at the bottom with the words “CHANGE PASSWORD” superimposed in all caps.

When Billy clicked on the blue box, he was taken to a website that looked exactly like a Gmail password-reset page. But it wasn’t. The website was fake, set up by Fancy Bear to trick Clinton staffers into revealing their credentials.

When cybersecurity experts are asked to identify the weakest link in any computer network, they almost always cite “the human element.” Computers are only as secure as the users who operate them, and the brain is extremely buggy. It is almost tragicomically vulnerable.

Fancy Bear was extremely adept at exploiting these psychological vulnerabilities. It sent thousands of phishing emails, and six out of ten targets clicked on the link at least once. A click-through rate of 60 percent would be the envy of any digital marketer.

Though Fancy Bear was highly skilled at phishing (attempting to obtain sensitive information over email by impersonating a trustworthy person or organization), its tradecraft was not rocket science. It wasn’t even computer science; it was cognitive science. Cognitive science is the systematic study of how humans think. From this perspective, the phishing emails Fancy Bear sent the Clinton staffers were perfect, as if they had been precision engineered in a psych lab to exploit the vulnerabilities of mental upcode. Fancy Bear caught its phish because its bait was just that good.

Linda the Feminist Bank Teller

Linda is thirty-one years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and she also participated in antinuclear demonstrations. Which is more probable?

- Linda is a bank teller.

- Linda is a bank teller and is active in the feminist movement.

In numerous studies, approximately 80 percent of participants thought it more likely that Linda was a teller active in the feminist movement. To them, Linda seems like a feminist. Indeed, she fits the feminist stereotype to a T: a young woman who cares about social justice, is unafraid to speak her mind, and is politically active.

While these reactions are psychologically normal, they are also deeply irrational. The probability that Linda is both a bank teller and a feminist can’t be higher than the probability that Linda is a bank teller. After all, some tellers aren’t feminists. Surely the total number of bank tellers can’t be lower than the number of feminist bank tellers.

The 80 percent of participants who chose the second option violated a cardinal rule of probability theory: the conjunction rule. The conjunction rule states that the probability of two events both occurring can never be greater than the probability of either of those events occurring by itself:

Conjunction Rule: Prob(x) ≥ Prob(x AND y)

Thus, the probability that a coin will land heads twice in a row (for two tosses) cannot be greater than the probability that a coin lands heads just once (for one toss). Similarly, the probability that Linda is a feminist bank teller cannot be greater than the probability that Linda is a bank teller.
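The coin case can be checked by brute force. The Python sketch below is an illustration, not something from Kahneman and Tversky: it lists the four equally likely outcomes of two fair tosses, treats an event as a test on those outcomes, and counts. Whatever x and y are, the outcomes where both hold are a subset of the outcomes where x holds, so the count for the conjunction can never be larger.

```python
from fractions import Fraction
from itertools import product

# All equally likely outcomes of two fair coin tosses: HH, HT, TH, TT.
OUTCOMES = list(product("HT", repeat=2))

def prob(event):
    """Probability of an event, given as a predicate over outcomes."""
    hits = sum(1 for outcome in OUTCOMES if event(outcome))
    return Fraction(hits, len(OUTCOMES))

def first_heads(o):
    return o[0] == "H"   # x: the first toss lands heads

def second_heads(o):
    return o[1] == "H"   # y: the second toss lands heads

p_x = prob(first_heads)
p_x_and_y = prob(lambda o: first_heads(o) and second_heads(o))

print(p_x, p_x_and_y)    # 1/2 1/4
assert p_x >= p_x_and_y  # the conjunction rule
```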

The Linda problem, first formulated by the Israeli psychologists Daniel Kahneman and Amos Tversky, is perhaps the most famous example of human violations of the basic rules of probability theory. Kahneman and Tversky spent their careers uncovering how mistaken our judgments and choices can be. The human mind is riddled with upcode that causes us to make biased predictions and irrational choices.

By showing how human beings routinely violate the rules of rational belief and action, Kahneman and Tversky helped initiate a scientific revolution. Before the publication of their seminal research in the early 1970s, dominant theories in the social sciences were based on the “rational choice” model. According to this school of thought, humans are rational agents. When we form beliefs about the world, we normally follow the dictates of probability and statistics. Of course, most people don’t know the exact tools they are supposed to use. They can’t recite Bayes’ theorem (the mathematical equation describing how new evidence should change prior beliefs) or run a linear regression (the statistical process for determining how features of events are correlated with outcomes). Nevertheless, rational choice theorists believe that we have an intuitive appreciation for the insights of probability and statistical theory. We know that the chances of getting two heads in two coin tosses cannot be greater than getting one head in one toss. And when we encounter data that disconfirms our prior beliefs, we lower our confidence in those beliefs accordingly.

Rational choice theorists maintain not only that we form our beliefs rationally but also that we choose rationally. As with probability and statistics, we don’t know the exact rules of economic decision theory. But we intuitively understand how to balance risks and rewards. We don’t compare benefits and costs directly; we compare expected values: the benefits and costs weighted by the probability that they occur. We may intuitively decide, for example, that a choice yielding a very large payoff with a low probability has a small expected value and hence ranks below other options, even those with lower payoffs.

Rational choice theorists recognize that human beings occasionally make mistakes. But our mistakes are generally not disastrous—if they were, rational choice theorists argue, we wouldn’t be alive. And since our mistakes are random, they argue, irrational choices cancel out when our decisions are considered in aggregate. As a group, our collective decision-making can therefore be predicted and explained by assuming rational behavior.

Kahneman and Tversky challenged this picture of human nature. In their view, human beings are not intuitive statisticians or economists. Our minds work differently than rational choice theorists claim. Kahneman and Tversky instead hypothesized a series of psychological mechanisms—called heuristics—that explain why we think and choose as we do.

Consider children born into a family in the following order: girl, girl, girl, boy, boy, boy. Most respondents say that this sequence is significantly less likely than girl, boy, girl, boy, boy, girl. People tend to judge patterned sequences less likely than sequences that look random. Because of its pattern, GGGBBB appears less random than GBGBBG, even though each order is equally probable. Kahneman and Tversky argued that people associate a stereotype with each class: a mental image that represents the members of the class. When asked how likely it is that someone is a member of a class, we compare that proposed member to our stereotype. The closer the resemblance, the more likely membership is judged to be. Kahneman and Tversky called this rule of thumb the Representativeness Heuristic.
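The arithmetic behind “equally probable” is short. The sketch below assumes, as the example implicitly does, that births are independent and that a girl and a boy are equally likely; under those assumptions every specific six-child order has probability (1/2)^6 = 1/64. The optional p_girl parameter is an addition for illustration: it shows that the two orders stay equally likely even with an uneven split, since each contains three girls and three boys.

```python
from fractions import Fraction

def sequence_probability(seq: str, p_girl: Fraction = Fraction(1, 2)) -> Fraction:
    """Probability of an exact birth order, assuming independent births."""
    p = Fraction(1)
    for child in seq:
        # Multiply in the chance of each birth: girl ("G") or boy ("B").
        p *= p_girl if child == "G" else 1 - p_girl
    return p

patterned = sequence_probability("GGGBBB")
random_looking = sequence_probability("GBGBBG")
print(patterned, random_looking)  # 1/64 1/64
```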

The Representativeness Heuristic neatly explains the Linda results. We doubt that Linda is a bank teller because she doesn’t match our stereotype of one. However, she closely resembles our mental image of the Representative Feminist. Hence, we consider it much more likely that Linda is a feminist bank teller than that she is a bank teller. This response makes no rational sense, but it makes a great deal of psychological sense.

People may not be intuitive statisticians, but hackers are intuitive cognitive scientists. They understand how the human mind works. They know how to exploit its many heuristics to compromise our security.

Representative Emails

To see how Fancy Bear tried to trick Clinton campaign staffers into handing over their passwords, let’s look at a legitimate Gmail security alert.

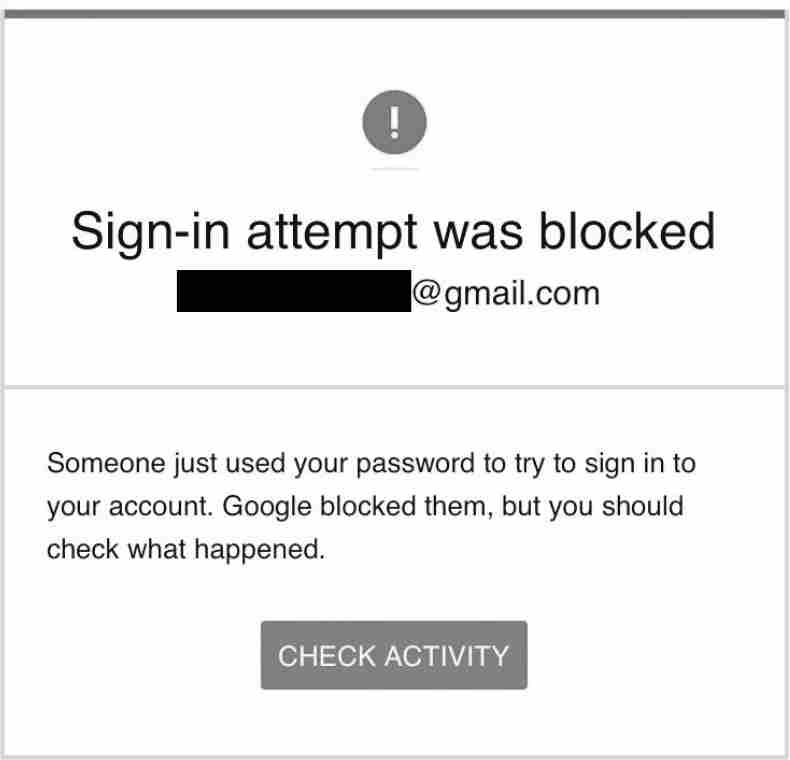

The legitimate security alert leads with the Google logo and an exclamation point on a circular red background. The header tells the owner of the account that a sign-in attempt was blocked. The body of the message repeats the warning: someone has used the account holder’s password to attempt to access the account, and Google blocked access. The alert does not say that someone else has this password. Nor does it suggest that the owner should change this password. It simply advises the owner to check for suspicious activity.

Fancy Bear deemed this alert insufficiently alarming. To persuade staffers to divulge their passwords, the Russians upped the ante. At the top of the fake email, superimposed on a red banner, the alert warns, “Someone has your password.” The threat is clear: someone other than you definitely has your password.

To reinforce the threat, the fake email explicitly directs the recipient to take emergency action: “You should change your password immediately.” The big blue link says, “CHANGE PASSWORD.” The legitimate email advises nothing of the sort. After alerting the user to a blocked sign-in, it gently suggests that “you should check what happened,” and the red button offers to “CHECK ACTIVITY.”

By making the security alert scarier, Fancy Bear also risked being discovered. A recipient like Billy might wonder how exactly Google would know that he was not the one who tried to log in to his Gmail account. The email said that the sign-in attempt had been made in Ukraine. Couldn’t Billy have been in Ukraine, or used a service (like a VPN) that made it appear as though he was in Ukraine? Even if someone in Ukraine had tried to sign in to the account, that person could have been Billy’s spouse, child, or colleague. The fake email is too definitive: it directs the user to change the password and to do so immediately. The legitimate email is noncommittal because Google typically does not know enough to issue a stronger warning.

To make the message appear authentic, Fancy Bear exploited multiple heuristics discovered by Kahneman and Tversky. Consider the Representativeness Heuristic. According to the Representativeness Heuristic, we judge the likelihood that an object falls into a class by its similarity to our representative for that class. Thus, to make its fake email resemble our stereotype of a genuine security alert, Fancy Bear emphasized the visual aspects of a legitimate email. It made the fake email look like a real email.

As many studies have shown, we judge online materials by their look. The visual appeal of a website is one of the most important cues in our assessment of its trustworthiness. We look for websites that have the right balance of text and graphics. Too much text is hard to read, while too many graphics are confusing. Just as animals recognize poisonous prey by their bright colors, users tend to treat garish websites as fraudulent and dangerous. We judge emails similarly. According to a recent study, the most important cues used by participants for distinguishing legitimate from phishing emails were the logo, email address, and presence of a copyright statement. Other factors affecting judgment included the general layout of the email, the wording used, and whether the message “seemed legitimate.” According to one participant, “There is a ‘look’ to [legitimate emails], and when there is not, something is off.”

Corroborating these scientific studies is a famous bit by the comedian John Mulaney. Mulaney tells of having his email hacked and spam sent to everyone in his contact list. One of his friends clicked on an embedded URL, which took him to a website selling herbal Viagra. “He clicked on the link? Who the hell clicks on spam links?” Mulaney says in disbelief. “And this was the ugliest internet link I’ve ever seen in my life. It had dollar signs and swastikas in it.” Mulaney is particularly insulted that his friend thought Mulaney was trying to sell him erectile dysfunction cures through a hideous website. Mulaney imagines how he would have pitched the friend if he were really selling herbal Viagra for a living: “I hope you can read pink on purple, as that is the design we have chosen.”

Fancy Bear’s email, therefore, used the same font as Google security alerts and the same clean design. Color is used sparingly but appropriately—scary red for the alert banner, safe blue for the password reset link, all on a white background. Fancy Bear made sure to insert the Google logo and security icon as well.

Fancy Bear sent the email from hi.mymail@yandex.com. Because Yandex is the Google of Russia, a Yandex address would have raised suspicions, so Fancy Bear spoofed the sender, substituting the normal-looking address no-reply@accounts.googlemail.com. Just as people sending regular mail can put any return address they want on a letter, email senders can put any email address in the “From:” line. Spam filters can often detect fraudulent email addresses, but Gmail’s spam filter failed to do so in this case because accounts.googlemail.com is a legitimate Google domain name. Google, however, does not use that domain, preferring no-reply@accounts.gmail.com instead.
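The “From:” line is ordinary text supplied by whoever writes the message. The Python sketch below, using the standard library’s email package, builds (but never sends) a message whose From header claims the address quoted above; the recipient address is hypothetical. Nothing in composing the message checks that claim. Checking it is left to separate mechanisms run by mail servers, such as SPF, DKIM, and DMARC.

```python
from email.message import EmailMessage
from email.utils import parseaddr

msg = EmailMessage()
# The From header is free text chosen by the author of the message;
# nothing here verifies that the sender controls this address.
msg["From"] = "Google <no-reply@accounts.googlemail.com>"
msg["To"] = "staffer@example.org"  # hypothetical recipient
msg["Subject"] = "Someone has your password"
msg.set_content("Illustration only: this message is built but never sent.")

display_name, address = parseaddr(str(msg["From"]))
print(display_name, address)  # Google no-reply@accounts.googlemail.com
```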

Trying to fool recipients by making fake emails look real may seem incredibly obvious. And it is obvious—to us—because we are visual creatures and favor visual stereotypes. It is estimated that 30 percent of our brains are devoted to visual processing. Because our vision is so highly developed, we normally use our eyes to authenticate identity (as opposed to our sense of smell, which my cat favors). Online authentication is so difficult for us because visual cues in cyberspace are unreliable, and more reliable cues are generally unavailable to regular people.

Billy Rinehart could neither see nor hear the person sending the security alert. Nor could he smell or touch them. Billy had to figure out the identity of the sender at 4:00 a.m. in a Hawaiian hotel. So, he relied on the most familiar kind of cue. Since the email looked legit, he decided it was legit.

Availability and Affect

“If a random word is taken from an English text, is it more likely that the word starts with a K, or that K is the third letter?”

When Kahneman and Tversky asked participants this question, most responded that words beginning with K are more common than those that have K in the third position. This is the wrong answer. In English, the letter K is three times more common in the third position.

Kahneman and Tversky explained these misestimations by the comparative ease of recall. English speakers can think of many words that begin with K (kite, kitchen, key) but have greater trouble with those where K is in the third position (ask, take, baker). Kahneman and Tversky hypothesized that when people are asked questions about how common objects are, they often respond to a different, easier question. Instead of “How common is this?” they answer, “How memorable is this?” According to the Availability Heuristic, the more available an object is in memory, the more common it will be judged to be.
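Unlike memory, a program has no trouble retrieving words with K in the third position. The sketch below shows how one could check the claim against a real corpus: it counts, over every word token in a text you supply, how many start with k and how many have k as their third letter. The sample sentence is invented and says nothing about English at large; the claim in the text concerns large samples of English prose.

```python
import re
from collections import Counter

def k_positions(text: str) -> Counter:
    """Count word tokens that start with k and tokens whose third letter is k."""
    counts: Counter = Counter()
    for word in re.findall(r"[a-z]+", text.lower()):
        if word.startswith("k"):
            counts["first"] += 1
        if len(word) >= 3 and word[2] == "k":
            counts["third"] += 1
    return counts

sample = "The baker asked Kate to take the kite."  # invented sentence, not a corpus
print(k_positions(sample))
```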

Because we assume that memorability is correlated with frequency, the media greatly affects our judgments. Accidents, for example, are thought to be greater causes of death than diabetes because car and plane crashes are covered by the news, while the more common diabetes deaths are not. The Availability Heuristic, therefore, biases our perception of frequency toward exceptional, especially vivid, events. Shark attacks are extremely rare. But because they are terrifying and sensational, they are on the news. And because they are on the news, we recall them more quickly than other causes of death. Ironically, the less frequent an event, the more conspicuous its occurrence, the more available it is to memory, and hence the more common it is thought to be.

Fancy Bear tried to trigger the Availability Heuristic by alleging that the sign-in attempt occurred in Ukraine. The choice of Ukraine was deliberate. In addition to hazy stereotypes about Eastern Europe as a source of much cybercrime, there was another factor: Russia had been conducting nonstop cyberattacks against Ukraine since 2014, when the Euromaidan Movement ousted Ukraine’s Kremlin-backed president, Viktor Yanukovych. In fact, Fancy Bear was one of the main antagonists. In 2015, its hackers attempted to compromise 545 Ukrainian accounts, including those of half a dozen ministers, two dozen legislators, and the new Ukrainian president, Petro Poroshenko. Russian cyberattacks were routinely reported in the media. In November 2015, for example, The Wall Street Journal covered the shutdown of Ukraine’s power grid by Russia with the headline “Cyberwar’s Hottest Front.” The notoriety of Ukrainian hacks would have led Clinton staffers to judge it likely that the alleged sign-in attempt really did originate in Ukraine. Of course, Ukraine was not the source of the Russian hacks; it was the target. But the Availability Heuristic works by association. Since Ukraine was associated with hacking, the heuristic lent credence to the claim that the hacking came from Ukraine.

For similar reasons, phishing emails routinely refer to current events, like natural disasters and infectious diseases, when asking for donations. Because these events are vivid and sensational, the Availability Heuristic lends credibility to scams that mention them. The scams are believable because the events they mention are memorable.

Closely related to the Availability Heuristic is the Affect Heuristic. The Affect Heuristic substitutes questions of affect, or emotion, for questions of risks and benefits. Instead of asking “How should I think about X?” the Affect Heuristic asks, “How does X make me feel?” If you like a course of action, you are likely to exaggerate the benefits and downplay the risks. Conversely, if you dislike an option, you’ll exaggerate its risks and downplay its benefits.

Suppose you get to pick a ball at random out of a transparent glass urn. If the ball is red, you win $100; otherwise, you don’t win anything. You are given a choice of two urns. The first urn has ten balls, one red and nine blue. The second urn has one hundred balls, eight red and ninety-two blue. In studies, most participants pick the second urn, despite 8 percent being less than 10 percent. People systematically choose the less favorable urn because they have a positive reaction to the numerator. Drawing from an urn with eight red balls “feels” like a bet with many chances of winning, despite the ninety-two blue balls that dilute the advantage.
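Computing the odds directly makes the comparison plain. The sketch uses exact fractions for each urn’s chance of a red ball and multiplies by the $100 prize to get the expected winnings.

```python
from fractions import Fraction

PRIZE = 100  # dollars, paid only if the drawn ball is red

urns = {
    "first urn": Fraction(1, 10),    # 1 red ball among 10
    "second urn": Fraction(8, 100),  # 8 red balls among 100
}

for name, p_red in urns.items():
    print(f"{name}: win chance {float(p_red):.0%}, expected winnings ${p_red * PRIZE}")

better = max(urns, key=urns.get)
print("better choice:", better)  # first urn
```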

The Affect Heuristic not only inflates our expectations about outcomes we like; it also deflates our expectations about outcomes we don’t. The Affect Heuristic leads us to treat benefits and risks as “inversely correlated.” Proponents of nuclear power assign it high benefits and low costs. Opponents of nuclear power make the converse judgment: low benefits and high risks.

In reality, however, benefits and risks tend to be directly correlated. Nuclear power is controversial precisely because both its benefits and its costs are significant. The same is true for pesticide use, geoengineering to combat climate change, and genetically engineered crops. The Affect Heuristic spares us the cognitive dissonance of having to balance high benefits against high costs. It is much easier to go through life thinking that choices are easier than they really are.

Studies have shown that time pressure greatly magnifies the role of affect in decision-making. The less time people have to make a decision, the higher the chance they’ll assume inverse correlation of benefits and costs. That’s why television commercials tell viewers to order their miracle product “before midnight tonight.” If you like what you see but have little time to decide, you will minimize the costs of the decision.

The phishing email shows how Fancy Bear leveraged the Affect Heuristic. Fear is a visceral emotion that plays a powerful role in decision-making. By ratcheting up anxiety, Fancy Bear hoped to persuade campaign staffers that clicking on the blue link would have high benefits and low risks. Fear, in other words, was designed to mask any contradictory evidence suggesting that the link was malicious. To magnify this effect, Fancy Bear added time pressure. The recipient must click the link immediately.

Loss Aversion

We’ve all gotten those Nigerian Prince phishing emails. But the Nigerian Astronaut version turns this internet scam up to eleven. It purports to be from Dr. Bakare Tunde, the cousin of Nigerian astronaut and air force major Abacha Tunde. Major Tunde, the doctor informs us, was the first African in space when he made a secret flight to the Salyut 6 space station in 1979. He was on a later spaceflight to the (also secret) Soviet military space station Salyut 8T in 1989. Major Tunde, however, became stranded when the Soviet Union fell in 1991. Supply flights have kept him going, but he wants to come home. Fortunately, Major Tunde has been on the air force payroll the whole time and has accumulated salary and interest of $15 million. Tunde must return to Earth to access his impressive savings. The Russian Space Agency charges $3 million per rescue flight but requires a down payment of $3,000 before it sends a ship. In return for the down payment, the grateful astronaut agrees to pay a hefty reward when he lands on planet Earth.

In contrast to the Fancy Bear security alert, which warns of a risk to the recipient’s email account, the Nigerian Astronaut message offers the recipient the chance to win a large sum of money. It is not a threat of a loss, but the promise of a gain. As Kahneman and Tversky showed, this change produces a powerful psychological reversal.

Consider Jack and Jill.

Today, Jack and Jill each have a wealth of $5 million.

Yesterday, Jack had $1 million and Jill had $9 million.

Are they equally happy?

Economists would answer yes because Jack and Jill are equally well-off. Humans, however, know better. Jill would be miserable if she lost $4 million in a day, whereas Jack would be over the moon to win that amount. As Kahneman and Tversky argued, happiness is not only a function of one’s “endowments” (the stuff one owns), but also changes in it. Jack and Jill have the same endowments, but Jack’s has gone up and Jill’s has gone down.

Kahneman and Tversky argued that human beings are “loss averse”: we are far more sensitive to losses than to gains. Put bluntly, we really hate to lose. Kahneman and Tversky demonstrated the power of loss aversion by offering participants in studies the choice of several gambles. Here’s the first choice:

Option 1: Gamble with an 80 percent chance of winning $4,000 and a 20 percent chance of winning $0.

Option 2: Gamble with a 100 percent chance of winning $3,000.

Four out of five participants picked Option 2. Winning $3,000 for sure was deemed more desirable than an 80 percent chance of winning $4,000. The participants were “risk averse.”

Kahneman and Tversky then presented the participants with a second choice, changing all the gains to losses:

Option 1: Gamble with an 80 percent chance of losing $4,000 and a 20 percent chance of losing $0.

Option 2: Gamble with a 100 percent chance of losing $3,000.

This time, 92 percent of the participants chose Option 1. They reasoned that Option 1 at least gave them the chance of not losing, whereas Option 2 made it certain that they would lose. When losses were substituted for gains, participants reversed course and became risk loving.
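Expected values show how far both majorities strayed from the rational choice model. The sketch below weights each payoff by its probability, using the figures from the two choices above. In the gain frame, the gamble is worth $3,200 on average against a sure $3,000; in the loss frame, the gamble costs $3,200 on average against a sure $3,000. Both majorities therefore picked the option with the worse expected value, in opposite directions.

```python
from fractions import Fraction

def expected_value(gamble):
    """Probability-weighted sum of payoffs; gamble is a list of (probability, dollars)."""
    return sum(p * payoff for p, payoff in gamble)

gain_gamble = [(Fraction(4, 5), 4000), (Fraction(1, 5), 0)]   # 80% chance of $4,000
gain_sure = [(Fraction(1), 3000)]                             # $3,000 for sure
loss_gamble = [(Fraction(4, 5), -4000), (Fraction(1, 5), 0)]  # 80% chance of losing $4,000
loss_sure = [(Fraction(1), -3000)]                            # losing $3,000 for sure

print("gains: ", expected_value(gain_gamble), "vs", expected_value(gain_sure))   # 3200 vs 3000
print("losses:", expected_value(loss_gamble), "vs", expected_value(loss_sure))   # -3200 vs -3000
```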

Loss aversion shows why phishing emails that promise gains—such as the Nigerian Prince scams—are less effective than those that threaten losses—like the Fancy Bear security alert. Because human beings are normally risk averse, we are less likely to take a chance for a large gain if there is a significant chance of a loss as well. In the case of the Nigerian Prince scam, most people figure out that they need to risk money up front to get the reward. At that point, most back out.

Indeed, some researchers have argued that the inherent ridiculousness of these scams is a feature, not a bug. These cons are absurd—and that’s the point. Nigerian astronauts trapped in secret space stations for decades, or Nigerian princes with millions in bank accounts frozen because of civil wars, are scams concocted to flush out the rubes. “Phishermen,” as they are called, don’t want to draw the attention of people with common sense. For most, loss aversion eventually kicks in, and the target will ultimately reject the risk. Phishermen, however, pursue easy marks. Mass phishing scams work by identifying the small number of highly gullible chumps willing to invest in get-rich-quick schemes, even in the face of impending losses. (By contrast, targeted phishing, also known as spear phishing, typically works by sending believable messages to the target, as we will soon see.)

Fancy Bear wisely chose to emphasize the possibility of loss. The recipient had to change his or her password to avoid being hacked, rather than to receive a reward. By threatening a certain loss, Fancy Bear triggered risk-loving behavior.

To see this, let’s carefully lay out Billy Rinehart’s choice. He could have changed his password in one of two ways. He could have used his browser to log in to his Gmail account through the Gmail website, go to account settings, click on the security link, and change his password. But changing your password through a browser is a pain in the neck. It is a certain time-wasting irritation, but a relatively minor one. Billy’s other option was to click on the link. Changing his password this way would be relatively painless, though there would be a small chance of losing big by handing over credentials to hackers.

Change password via browser: 100 percent chance of a small loss.

Change password via email link: big chance of no loss, small chance of big loss.
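Prospect theory, the formal model Kahneman and Tversky built around these findings, lets us put rough numbers on Billy’s gamble. The sketch below uses the value-function parameters Tversky and Kahneman estimated in 1992 (curvature α = 0.88, loss-aversion coefficient λ = 2.25), ignores the theory’s probability weighting for simplicity, and assigns purely hypothetical sizes to the losses: 10 units of hassle for the browser route, and a 1-in-1,000 chance of a 10,000-unit loss for the link. Both options have the same expected value, yet the gamble gets the less negative subjective value. Because both options are losses, λ scales them equally; what tips the balance is the curvature α < 1, which makes a loss 1,000 times larger feel far less than 1,000 times worse.

```python
ALPHA = 0.88   # curvature of the value function (Tversky & Kahneman, 1992)
LAMBDA = 2.25  # loss-aversion coefficient (same source)

def value(x: float) -> float:
    """Subjective value of a gain or loss x relative to the status quo."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA

def prospect_value(gamble):
    # Simplification: raw probabilities, no probability-weighting function.
    return sum(p * value(x) for p, x in gamble)

def expected_value(gamble):
    return sum(p * x for p, x in gamble)

# Hypothetical magnitudes in arbitrary "hassle units".
via_browser = [(1.0, -10)]                 # certain, small loss
via_link = [(0.999, 0), (0.001, -10_000)]  # usually nothing, rarely a big loss

print(expected_value(via_browser), expected_value(via_link))  # both -10, up to rounding
print(round(prospect_value(via_browser), 2), round(prospect_value(via_link), 2))
```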

Because people hate to lose, they will often choose a risky gamble to avoid a definite loss. Unsurprisingly, Billy Rinehart clicked the link. He wanted to avoid the hassle of changing his password the safe way at four in the morning.

Indeed, the Loss Aversion Heuristic may be one of the biggest factors affecting cybersecurity. We don’t like to invest time and effort in proper cyberhygiene because the costs are certain but the benefits uncertain. If you spend money and nothing bad happens, then why did you spend the money? And if you spend money and something bad happens, then why did you spend the money? The median American company budgets approximately 10 percent for IT and spends 24 percent of that on security. That’s 0.10 × 0.24 = 0.024, or a little over 2 percent, budgeted to protecting mission-critical activities.

Typosquatting

When judging the legitimacy of a website, we care a lot about how it looks. Our browsers couldn’t care less. A website can contain pink text on a purple background filled with dollar signs and swastikas, for all our browsers care. Browsers judge the authenticity of a website by its security certificates. A security certificate is like a website’s ID card. It certifies that the website is being operated by the owner of that website. If I type “www.gmail.com” into the address bar of my browser, my browser will take me to www.gmail.com only if the website has security certificates attesting that the website is overseen by the owner of Gmail.com, namely, Alphabet, the parent company of Google. A website impersonating www.gmail.com, even one that looked exactly like a legitimate Gmail page, would not have those certificates. My browser would not trust it and would warn me not to trust it, too.

Website security certificates are issued by private companies, known as certification authorities, or CAs. CAs such as Verisign and DigiCert form the trust anchors for authentication on the web. These companies vouch for the identities of the organizations that own websites. If they have directly issued a security certificate attesting that www.wellsfargo.com is owned and controlled by Wells Fargo Bank, clients are almost certainly handing over their financial information to their bank and not to some impersonating hacker.

Website security, therefore, depends on downcode and upcode. Browser downcode looks for data (the security certificates) that will authenticate a website. But the data provided to the browser is determined by industry upcode deeming certain firms sufficiently trustworthy. Only a company with an excellent reputation can be a CA and issue security certificates. Indeed, any blemish on a firm’s record can lead to instant collapse. For example, hackers broke into DigiNotar, a large and respected Dutch CA, and forged five hundred digital certificates. Some of these fraudulent certificates were used to spy on Iranian human rights activists. When news broke of the hack, browsers added DigiNotar to their blocklists and no longer trusted any of its certificates. DigiNotar filed for bankruptcy later in the week.

Why did Billy Rinehart’s browser not alert him that the Gmail password-reset page was fake? The answer is that only websites set up to handle the HTTPS protocol have security certificates. HTTP is the “hypertext transfer protocol,” the original protocol used for transferring web pages. HTTPS stands for “HTTP secure.” In contrast to HTTP, HTTPS relies on security certificates and encrypts web communication. When HTTPS is enabled, you will see a lock near the address bar in your browser. If you click on the lock, you can see the website’s security certificate. All your communications with this website will be encrypted.
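The browser’s side of this check can be sketched with Python’s standard ssl module. The helper names below are hypothetical. uses_https only inspects the scheme of a URL; fetch_certificate opens a TLS connection with the default context, which loads the system’s trusted CA certificates and refuses the connection unless the server’s certificate chains to one of them and matches the hostname. A plain http:// page never reaches that step, because there is no certificate to check.

```python
import socket
import ssl
from urllib.parse import urlsplit

def uses_https(url: str) -> bool:
    """True if the URL asks for an encrypted, certificate-checked connection."""
    return urlsplit(url).scheme.lower() == "https"

def fetch_certificate(host: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Connect over TLS and return the server's certificate once it is validated.

    The default context requires a certificate that chains to a trusted CA
    and matches the hostname; otherwise the handshake inside wrap_socket
    raises ssl.SSLCertVerificationError.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()

print(uses_https("https://www.gmail.com/"), uses_https("http://example.com/login"))  # True False
```

Calling fetch_certificate("www.google.com") needs network access and returns the certificate’s fields (subject, issuer, validity dates) as a dictionary.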

Fancy Bear’s fake Gmail page did not use HTTPS. Thus, when Billy Rinehart clicked the link, his browser did not show the lock in the address bar. But it also did not warn him that the page might be fake. The browser assumed that Billy would authenticate the page himself. So did Fancy Bear. And Fancy Bear did everything in its power to nudge Billy to accept it as real. (Two years later, Google would change its Chrome browser to warn users explicitly that HTTP pages are “not secure.”)

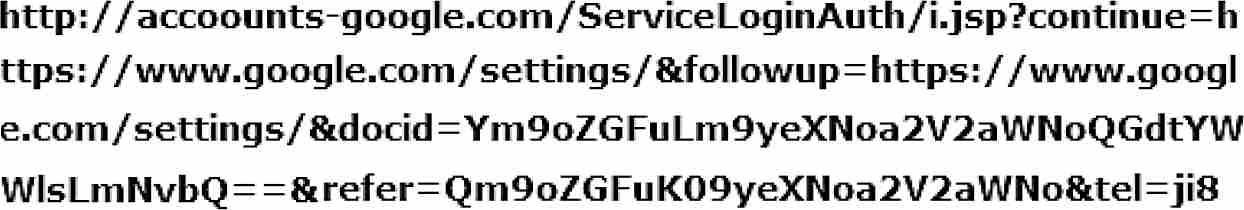

When Billy clicked on the blue “CHANGE PASSWORD” banner, his email client would have sent the following URL to the browser: bit.ly/4Fe55DC0X. The bit.ly URL is generated by Bitly, a URL shortening service. It allows users to convert long web addresses into shorter ones. Businesses use URL shorteners to make their marketing messages tidier. Social media influencers use them to save space in their posts. And hackers use them to hide the true destinations of their links from users and, critically, from their spam filters.
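A common way to build a shortener, shown here as a toy sketch rather than Bitly’s actual design, is to number each stored link and write that number in base 62 (digits plus lowercase and uppercase letters). The short code carries no information about the destination, which is exactly what lets it slip past a reader, or a filter, that sees only the short link. The base URL short.example and the destination URL are hypothetical.

```python
import string

ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase  # 62 symbols

def to_base62(n: int) -> str:
    """Encode a non-negative integer using the 62-symbol alphabet."""
    if n == 0:
        return ALPHABET[0]
    out = []
    while n:
        n, remainder = divmod(n, 62)
        out.append(ALPHABET[remainder])
    return "".join(reversed(out))

class ToyShortener:
    """In-memory URL shortener mapping short codes to long URLs."""

    def __init__(self, base: str = "https://short.example/"):
        self.base = base
        self.links = {}  # short code -> destination URL

    def shorten(self, long_url: str) -> str:
        code = to_base62(len(self.links))  # next sequential id
        self.links[code] = long_url
        return self.base + code

    def expand(self, short_url: str) -> str:
        # A real service answers with an HTTP redirect; here we just look it up.
        code = short_url.rsplit("/", 1)[-1]
        return self.links[code]

shortener = ToyShortener()
short = shortener.shorten("http://lookalike-login.example/reset")
print(short)  # https://short.example/0
```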

When the browser requested that URL, Bitly’s server would have redirected it to the following:

Notice the misspelling: “accoounts-google,” not “accounts-google.” This technique is known as typosquatting. Notice also that the fake website uses HTTP, not HTTPS. Because the website had no security certificate, the browser had no way to determine whether it was fake.
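One common way to catch typosquatting is to measure how many single-character edits separate a suspicious name from a legitimate one. The Python sketch below computes Levenshtein edit distance by dynamic programming; the `looks_typosquatted` helper and its two-edit threshold are illustrative assumptions, and real phishing filters also check lookalike characters, subdomain tricks, and domain reputation.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn a into b (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))        # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]                        # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,              # delete ca
                curr[j - 1] + 1,          # insert cb
                prev[j - 1] + (ca != cb), # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]

def looks_typosquatted(candidate: str, legitimate: str, max_edits: int = 2) -> bool:
    """Flag names that are close to, but not identical to, a legitimate name."""
    d = levenshtein(candidate.lower(), legitimate.lower())
    return 0 < d <= max_edits

print(levenshtein("accoounts-google", "accounts-google"))         # 1
print(looks_typosquatted("accoounts-google", "accounts-google"))  # True
```

A single extra “o” is one edit away from the real name, which is exactly why the trick slips past a reader skimming the address.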

Billy would have had no reason to inspect the URL too closely, however, because the fake page looked like a real password-reset page. Billy entered his password into the conveniently prefilled web form. Fancy Bear was on the other end.

Physicality Revisited

It is tempting to conclude from this brief survey of psychological heuristics that human beings are fundamentally irrational. Judging the frequency of an event by whether you heard it on the news, how it makes you feel, or whether you are facing a certain loss is not sound statistical practice.

To see why human psychology is not fundamentally irrational, let us return to Turing’s physicality principle. Physicality maintains that computation is the physical manipulation of symbols. As mentioned in chapter 2, adding numbers with pencil and paper is the paradigmatic example of physical symbol manipulation. When we add 88 + 22, we start from the right: add 8 plus 2, write 0, and carry the 1 by writing it at the top of the next column to the left. We then add that column (1 + 8 + 2 = 11), write 1, carry the 1 to the top of the next column, bring it down as the final digit, and finish with 110. Indeed, Turing took adding with pencil and paper as his model for mechanical computation.
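The pencil-and-paper procedure is itself an algorithm, and writing it out makes each physical step explicit. This Python sketch works on decimal numerals stored as strings, so that every operation is a single-digit sum, a write, or a carry; the function name `add_by_columns` is illustrative.

```python
def add_by_columns(x: str, y: str) -> str:
    """Add two non-negative decimal numerals the pencil-and-paper way:
    right to left, one column at a time, carrying when a column exceeds 9."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)  # pad with leading zeros so columns line up
    carry = 0
    out = []
    for i in range(width - 1, -1, -1):     # start from the rightmost column
        column = int(x[i]) + int(y[i]) + carry
        out.append(str(column % 10))       # write the ones digit under the column
        carry = column // 10               # carry the tens digit to the next column
    if carry:
        out.append(str(carry))             # a final carry becomes the leading digit
    return "".join(reversed(out))

print(add_by_columns("88", "22"))  # 110
```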

As Turing showed, computing devices need perform only three basic actions of symbol manipulation: read symbols from a paper tape, write symbols to the tape, and move along the tape. The device picks one of these actions by following an internal instruction table. As long as the instruction table sequences these primitive actions in the right order, the device (traditionally known as a Turing Machine) will physically compute the correct answer.
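Turing’s three primitive actions can be made concrete with a small simulator. In the Python sketch below, the instruction table is a dictionary mapping (current state, symbol read) to (symbol to write, direction to move, next state). The state names and the unary encoding of numbers (2 is written `11`) are illustrative choices, not Turing’s own notation. This particular table adds two numbers by turning the `+` into a `1` and then erasing the last `1`.

```python
BLANK = "_"

# Instruction table: (state, symbol read) -> (symbol to write, move, next state).
# Move is +1 (one square right) or -1 (one square left).
UNARY_ADD = {
    ("scan", "1"): ("1", +1, "scan"),          # skip over the first number
    ("scan", "+"): ("1", +1, "seek_end"),      # turn "+" into a 1, joining the numbers
    ("seek_end", "1"): ("1", +1, "seek_end"),  # skip over the second number
    ("seek_end", BLANK): (BLANK, -1, "trim"),  # ran off the end; step back one square
    ("trim", "1"): (BLANK, -1, "halt"),        # erase one 1 to undo the extra
}

def run(table, tape_str, state="scan", max_steps=10_000):
    """Run a Turing Machine from the leftmost cell until it reaches 'halt'."""
    tape = dict(enumerate(tape_str))  # sparse tape; unwritten cells read as blank
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)               # READ
        write, move, state = table[(state, symbol)]  # consult the instruction table
        tape[head] = write                           # WRITE
        head += move                                 # MOVE
        steps += 1
    return "".join(tape[i] for i in sorted(tape)).strip(BLANK)

print(run(UNARY_ADD, "11+11"))  # 1111, i.e., 2 + 2 = 4
```

Each step does nothing but read one cell, write one cell, and move one square; the addition emerges only from the order in which the table sequences those steps. The table has no entry for input lacking a `+`, so such input raises a `KeyError`.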

Because our world is filled with billions of physical computing devices, we take physicality for granted. We should pause for a moment, however, to appreciate the boldness of Turing’s claim. Somehow, a series of mindless manipulations performed by a mechanical contraption obeying the witless laws of physics adds up to something intelligent.

In the seventeenth century, the mismatch between matter and mind propelled René Descartes, the father of modern philosophy, to deny physicality. Descartes advanced an alternative doctrine known as dualism, which maintains that the mind is not physical but a metaphysically different kind of substance. Unlike the body, which has mass and extension and whose properties can be measured using scientific instruments, the mind is a ghostly presence that does not exist in physical space and cannot be seen, touched, or measured.

Turing tried to allay these Cartesian worries by showing how mindless matter could produce intelligent action. The intelligence in computation lies not in any particular step, Turing explained, but in their sequence—just as music isn’t in the individual notes, but in their relationship to one another. Every operation the Turing Machine performs is primitive, trivial, monotonous, unintelligent, arational, stupid. Yet, by ordering many of these basic operations in just the right way, the mindless Turing Machine yields an intelligent solution.

Turing’s discovery of the physicality principle was profound: he conjectured that the basic physical instructions—reading, writing, and movement—underlie all computing. Provided a machine can manipulate symbols using these three simple instructions, it can use them (if sequenced correctly) to solve any solvable problem. One machine can add numbers: if you write the numbers 2 and 2 on the tape, the machine will output 4. Another machine can calculate the billionth digit in the decimal expansion of pi. One can even build a Turing Machine that can model how carbon taxes will affect global warming. Running that simulation would require trillions of symbol manipulations and would take many centuries to complete. But in principle, it can be done.

In practice, however, no usable computing device can run without extensive shortcuts. Any mechanism that physically manipulates symbols requires energy, time, and space to step through algorithms. The longer the computation, the more energy, time, and space required. The laws of physics govern cyberspace as they do the “meatspace,” i.e., the physical world.

Digital computers make extensive use of heuristics all through the downcode stack. For example, modern CPUs run so quickly not only because semiconductor manufacturers have been able to cram billions of transistors onto each chip, but also because the microcode that regulates them uses heuristics to accelerate computation. One such heuristic is “speculative execution,” used by virtually all modern high-performance microprocessors: the chip continually guesses which instructions will come next and calculates results on the assumption that its predictions are correct. When a guess turns out to be wrong, the chip discards the speculative results and resumes on the correct path. Speculative execution dramatically cuts down on delays at runtime because so much of the computation has already taken place before it is needed.
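Speculative execution depends on guessing which way a branch in the program will go. Real processors use elaborate, proprietary predictors; the Python sketch below instead implements the textbook two-bit saturating counter, a simple predictor of this general kind, purely for illustration. The class name, the starting counter value, and the branch address `0x400` are assumptions. It models only the guessing, not the speculative execution and rollback that follow.

```python
class TwoBitPredictor:
    """Toy branch predictor: one 2-bit saturating counter per branch address.
    Counter values 0-1 predict 'not taken'; values 2-3 predict 'taken'."""
    def __init__(self):
        self.counters = {}

    def predict(self, pc: int) -> bool:
        return self.counters.get(pc, 1) >= 2  # unseen branches start weakly 'not taken'

    def update(self, pc: int, taken: bool) -> None:
        c = self.counters.get(pc, 1)
        # Nudge the counter toward the actual outcome, saturating at 0 and 3.
        self.counters[pc] = min(c + 1, 3) if taken else max(c - 1, 0)

def accuracy(outcomes, pc=0x400):
    """Fraction of branch outcomes the predictor guessed correctly."""
    p = TwoBitPredictor()
    hits = 0
    for taken in outcomes:
        hits += p.predict(pc) == taken  # guess before the real outcome is known
        p.update(pc, taken)             # then learn from what actually happened
    return hits / len(outcomes)

# A loop branch: taken 9 times, then falls through once; the loop runs 10 times.
loop_pattern = ([True] * 9 + [False]) * 10
print(accuracy(loop_pattern))  # 0.89
```

After the first pass, the predictor misses only once per loop, at the exit. Cheap and usually right, it trades perfect accuracy for speed, which is what makes it a heuristic.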

Operating systems also rely on extensive heuristics. Authentication through credentials is one such shortcut. When I log in to my laptop, the operating system does not conduct an extensive analysis of all the information it has available to determine whether I am really “Scott Shapiro.” It just asks me for my password. Using credentials is fast, is easy, and consumes relatively few resources. Recall that Corby, the creator of the multiuser system CTSS, instituted this heuristic to reduce the demands on his time-sharing system. Passwords were limited to four characters to save precious memory space.
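The credential shortcut fits in a few lines of Python using the standard library’s `hashlib.pbkdf2_hmac` and `hmac.compare_digest`. This sketch reflects modern practice (storing a salted, deliberately slow hash rather than the password itself), not how CTSS worked; the function names and the 100,000-iteration work factor are illustrative assumptions. Notice how little the check knows: anyone who types the right string passes.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative work factor chosen for this sketch

def make_record(password: str) -> tuple[bytes, bytes]:
    """Enroll a user: store a random salt and a slow hash, not the password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest

def check_password(attempt: str, salt: bytes, digest: bytes) -> bool:
    """Log in: 'is this really the user?' reduced to one hash comparison."""
    candidate = hashlib.pbkdf2_hmac("sha256", attempt.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)  # constant-time comparison

salt, digest = make_record("correct horse battery staple")
print(check_password("correct horse battery staple", salt, digest))  # True
print(check_password("wrong guess", salt, digest))                   # False
```

That is why Fancy Bear needed Billy’s password and nothing else: the system asks no further questions of whoever supplies it.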

What is true for computers is also true for brains. The computing devices housed inside our skulls must obey the same laws of physics as silicon-based microchips. And they must also use extensive shortcuts if they are to serve one of their main functions, which is to keep us alive.

Systems 1 and 2

Let’s return to human psychology. For creatures that have to survive in a world of myriad threats, truth is not the only concern. Speed is also essential. We questioned whether the human mind is irrational because it uses heuristics that are often biased and inaccurate. Heuristics will appear to be irrational, however, only if we focus single-mindedly on their accuracy. If a green tube appears in your visual field, it is best for your brain’s upcode to take over and respond to the possible threat—even before the threat has been identified. If the tube is a snake, then your quick reaction might have saved your life. But if the tube is a garden hose, then your brain just made you look stupid.

Heuristics are crucial for survival because they are fast and automatic. We don’t execute them. They are triggered by external cues, not by internal volitions. Heuristics work without our intervention, which is good—we couldn’t reason out an answer in the time required. Heuristics cut through everything we know, every event in memory, every commitment we’ve made, every fantasy, fear, desire, preference, and conviction, and generate a good-enough result for the demands of the moment.

Speed is especially challenging for the mind because the physical hardware it runs on—the human brain—is absurdly slow. Neurons do not transmit signals at close to the speed of light, as the circuits of a computer chip do. The axon of a nerve cell is not a wire; it is a chemical pump that propels sodium ions through its body. When the signal reaches the end of the axon, the axon’s terminals release chemicals across the gap between cells known as a synapse. The fastest neurons conduct signals at 275 miles per hour, as opposed to roughly 670 million miles per hour for light—a six-order-of-magnitude difference. Neurons are also voracious consumers of energy. Though the brain accounts for only about 2 percent of the body’s weight, it uses 20 percent of the body’s fuel. Someone who ingests two thousand calories per day burns through four hundred of those calories in brain activity alone. The brain, therefore, has neither the computational horsepower nor the energy efficiency to run without using heuristics to conserve scarce resources. Upcode and downcode can be changed, but we’re stuck with metacode. Physicality gives our brains, and our devices, a hard limit. We have only so many neurons, and our neurons can transmit signals only at a certain rate and can store only so much information at one time.
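The speed and energy figures in this paragraph can be checked with a few lines of arithmetic. The Python below uses the exact SI definitions of the speed of light and the international mile; the 275 mph neuron speed and the two-thousand-calorie diet come from the text. Light works out to roughly 670 million miles per hour, about 2.4 million times faster than the fastest neurons, which is a gap of six orders of magnitude.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter
METERS_PER_MILE = 1609.344            # international mile, exact
SECONDS_PER_HOUR = 3600

light_mph = SPEED_OF_LIGHT_M_PER_S * SECONDS_PER_HOUR / METERS_PER_MILE
neuron_mph = 275                      # fastest neurons, per the text

ratio = light_mph / neuron_mph
print(f"light: {light_mph:,.0f} mph")                  # roughly 670.6 million
print(f"orders of magnitude: {math.log10(ratio):.1f}")  # 6.4

# Energy budget: 20 percent of a 2,000-calorie day goes to the brain.
brain_calories = 2000 * 20 // 100
print(brain_calories)                                   # 400
```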

Heuristics play an essential role in what psychologists call dual-process theories of thinking and choosing. According to dual-process theories, our cognitive life comprises two systems of mental upcode. The first, which Kahneman called System 1, is the fast system. It automatically and rapidly produces answers to a variety of questions, usually concerning beliefs that must be formed, and actions that must be performed, immediately. System 1 relies almost entirely on heuristics, which work through substitution. Instead of answering cognitively demanding questions such as “How common is the letter K?,” the Availability Heuristic of System 1 substitutes easier-to-answer queries such as “How easy is it to think of words with the letter K?” and delivers its verdict.

System 1 pipes its outputs to System 2. System 2 is the realm of reasoning and analysis, the home of upcode that constitutes our rational selves. System 2 respects logical connections, reasons abstractly, and demands evidence and justification. Unfortunately, System 2 is slow. It also requires effort and attention. While System 2 works on one question, we find it next to impossible to work on another at the same time (try multiplying 17 by 54 while reading the next sentence). System 1 is great at multitasking; System 2 finds it difficult to read and multiply at the same time.

The job of System 1 is to supply inputs to System 2. System 2’s upcode, however, does not have to accept them. It can scrutinize, challenge, and ultimately reject the deliverables of System 1. Rejecting System 1, however, requires work, and System 2 is lazy. Unless it has compelling reasons to doubt System 1, it will simply accept its output as correct.

To fool computer users, hackers trigger System 1 to produce biased judgments, but do so in a way that minimizes surprise to System 2. By creating a scary security alert, Fancy Bear triggered the Loss Aversion Heuristic and hence the desire to eliminate the threat. But it also created surprise. After all, how would Google know that someone besides Billy Rinehart had his password? Fancy Bear’s goal, therefore, was to shut down System 2. Fancy Bear did so by triggering a set of heuristics—Representativeness, Availability, Affect—that all gave the same answer: your email account has been hacked, click the link, change your password. Add in the time pressure for good measure, and it’s easy to see why System 2 gladly capitulated to System 1’s suggestions.
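The division of labor between the two systems, and the way stacked cues can keep System 2 from engaging, can be caricatured as a tiny pipeline. This Python sketch is purely illustrative: psychologists do not model the mind this way, and the cue names, the surprise scale, the 0.3 step per cue, and the 0.5 threshold are all invented for the example. System 1 answers instantly; lazy System 2 rubber-stamps that answer unless surprise crosses its threshold.

```python
from dataclasses import dataclass

@dataclass
class Judgment:
    answer: str
    surprise: float  # toy scale: 0.0 = fully expected, 1.0 = astonishing

# Hypothetical cues that a fake security alert might contain.
ALARM_CUES = {"red banner", "someone has your password", "change password button"}

def system1(cues: list[str]) -> Judgment:
    """Fast, automatic pattern match. Every cue that fits the 'account under
    attack' story makes the story feel more familiar, so surprise drops."""
    hits = sum(cue in ALARM_CUES for cue in cues)
    if hits == 0:
        return Judgment("do nothing", surprise=0.1)
    return Judgment("click the link and change password",
                    surprise=max(0.0, 0.9 - 0.3 * hits))

def system2(judgment: Judgment, threshold: float = 0.5) -> str:
    """Slow and lazy: accepts System 1's answer unless surprise is high."""
    if judgment.surprise < threshold:
        return judgment.answer        # no compelling reason to doubt it
    return "stop and check the URL"   # scrutiny is triggered

one_cue = system2(system1(["red banner"]))
all_cues = system2(system1(["red banner", "someone has your password",
                            "change password button"]))
print(one_cue)   # stop and check the URL
print(all_cues)  # click the link and change password
```

In this caricature, a lone alarming cue is surprising enough to wake System 2, while several mutually reinforcing cues push surprise below the threshold and the click goes through unexamined.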

Hackers, we might say, do the opposite of what the economist Richard Thaler and legal scholar Cass Sunstein have called nudging. A nudge alters the choice situation to avoid triggering heuristics that lead to irrational behavior. For example, when the standard default on employee retirement plans is “no contribution,” employees tend not to save for their retirements. They act imprudently because their choice is framed to trigger loss aversion: the “no contribution” default is treated as part of their endowment and any change—any contribution—is treated as a certain loss. Thaler and Sunstein advocated a nudge: flip the choice by making employee contribution the default. In this way, employee contributions would be built into the endowments and hence would not count as a loss. The power of defaults shows up starkly in organ donation: in Germany, where citizens must opt in, the consent rate is 12 percent; in Austria, where consent is the default, it is 99.98 percent.

We now know two major ways in which hackers compromise computer accounts. The first is by manipulating the duality principle: they substitute downcode when computers expect data, substitute data when computers expect downcode. The second way is by manipulating the physicality principle: they exploit the heuristics that physical devices use to conserve resources. (The Morris Worm exploited both by overflowing a limited buffer with junk data and malicious code.)

Like nudgers, hackers change our choices as well. But their aim is not to improve our welfare; it is to improve theirs. They predict situations in which our heuristics lead us to act irrationally, then deliberately create those situations. These changes are anti-nudges, or “mudges,” short for “malicious nudges” (and the handle of a legendary hacker we will meet in the next chapter).

When we are the computing devices, hackers mudge us to trigger our System 1 heuristics and generate biased judgments, ones that do not serve our interests and would not survive scrutiny by System 2. As we will see, Fancy Bear did not merely trigger the System 1 of campaign staffers. It mudged its way to the top.