CONCLUSION: THE DEATH OF SOLUTIONISM

The critic Evgeny Morozov has called the idea that technology can and will solve our social problems “solutionism.” The solutionist response to famines is irrigation systems. To global warming, reengineering the environment by, say, seeding the oceans with CO2-absorbing algae. Nuclear disasters? Construct remote-controlled drones to maintain reactors and remove any accidental fallout. And labor-market inefficiencies? Websites that enable gig workers to manage their own schedules. A classic example of solutionism is the article published by Wired in 2012: “Africa? There’s an App for That.” Great news! We can reverse centuries of imperialism, revolution, and poverty with our cell phones.

Solutionism is ubiquitous in cybersecurity. Every cybersecurity firm promises that its technology will keep your data safe. Walk through any trade show and you will see miles of vendors, each hyping a different silver bullet. They pitch “next-generation” everything: firewalls, antimalware software, intrusion-detection services, intrusion-prevention services, security-information and event-management utilities, network-traffic analyzers, document taggers, log visualizers, and unified threat-management dashboards. If you ask vendors what separates their products from their competitors’, they will say the same thing: “The ‘secret sauce’ is our AI. It’s the best in the business.”

Politicians talk about cybersecurity in solutionist terms, too. They assume that the right response to our cyber-insecurity is to invest greater amounts of time and money in technology. Politicians talk about a cyber Manhattan Project or a cyber Moonshot as if these massive technological efforts are the ultimate fix.

To see the limits of solutionism, consider a brief analogy: famines. For centuries, people assumed that famines are caused by food shortages. This lack of food was believed to result from natural events like drought, flooding, typhoons, and pestilence, or man-made disasters such as wars, genocide, and agricultural labor deficiencies. The Nobel Prize–winning economist Amartya Sen nonetheless pushed back on this familiar narrative with hard data. In his groundbreaking book Poverty and Famines (1981), Sen argued that food shortages are not the principal cause of famine. Famine arises despite the availability of food. In 1943, for example, Bengal suffered a devastating crisis in which almost 3 million people died, even though there was 13 percent more to eat than in 1941, when there was no famine. Likewise, Ethiopia experienced a famine in 1973 even though food stocks were no different than they’d been in the previous years.

Sen argued that political failures, not agricultural ones, cause famines. In the case of Bengal, there was plenty of food, but workers could not afford it. World War II drove food prices up 300 percent, but laborers’ wages rose only 30 percent. The British government in India could have addressed the problem by adjusting labor markets or allowing imports to make up for the inflationary shortfall. But it didn’t. The 1973 famine in Ethiopia was the result of poor transportation between regions. Again, the country had plenty of food, but not enough ways to get that food to those who needed it.
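The gap between those two percentages is easier to feel as a single number. The short calculation below is only an illustration of the arithmetic. It assumes that "up 300 percent" means prices ended at four times their earlier level, and the function name is invented for this sketch.

```python
# Illustrative arithmetic only. The two percentages come from the text;
# reading "up 300 percent" as a fourfold price level is an assumption.

def real_wage_ratio(price_increase_pct: float, wage_increase_pct: float) -> float:
    """Purchasing power after the changes, relative to before (1.0 = unchanged)."""
    price_factor = 1 + price_increase_pct / 100
    wage_factor = 1 + wage_increase_pct / 100
    return wage_factor / price_factor


ratio = real_wage_ratio(price_increase_pct=300, wage_increase_pct=30)
print(f"A day's wage buys {ratio:.1%} of the food it bought before")
```

Under that reading, a laborer's wage bought roughly a third of the food it had bought before, even though the food itself was still there.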

Sen’s explanation of the causes of famine pointed to an alternative solution. If famines are due to political failures, then the solution should be political as well. Even the most advanced agricultural technology will not make up for shortsighted policy.

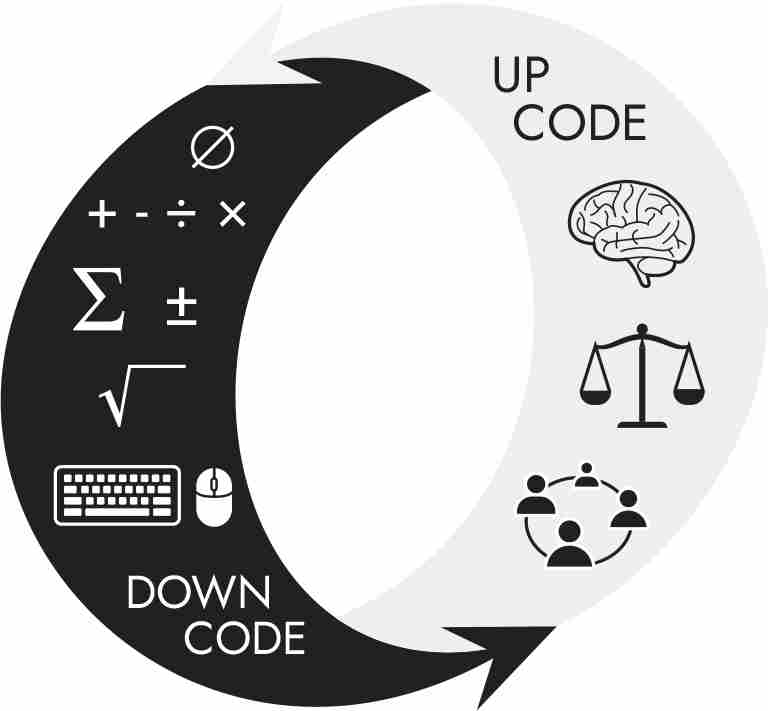

What is true for famines is also true for cybersecurity. Cybersecurity is not a primarily technological problem that requires a primarily engineering solution. It is a human problem that requires an understanding of human behavior. We need to pay attention to our upcode, determine where the vulnerabilities lie, and fix those rules so that we produce better downcode.

Upcode Solutions

One main takeaway of this book is that upcode shapes the production of downcode. Developers write downcode because they respond to existing upcode. Upcode, therefore, is causally upstream from downcode. Change the upcode and you will change the kind of downcode produced.

This relationship between upcode and downcode opens up a new possibility: instead of patching insecure downcode, we patch the upcode that is responsible for the insecure downcode. Solving problems in the upcode stack can correct technical messes downstream.

Here is a simple example of an upcode solution. The Mirai malware built its botnets by exploiting default passwords in IoT devices. In 2018, Governor Jerry Brown signed the Security of Connected Devices bill, which required devices connected to the internet sold or offered for sale in California to have “reasonable security features.” Under the law, a reasonable security feature is either a preprogrammed password that is unique to each device or a requirement that the user choose a new password before first use. California is a huge market, and the Security of Connected Devices law forced IoT manufacturers wanting to sell products there to replace default passwords with reasonable security features. The vulnerability exploited by Mirai has now been patched for all new IoT devices. The code downstream has been fixed because of a change in the code upstream.
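The rule described above is simple enough to write down as a check. What follows is a minimal sketch of the two-pronged test as this chapter summarizes it, not the statute's wording or any manufacturer's firmware. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DeviceConfig:
    # Hypothetical fields for illustration; not taken from the statute or any vendor.
    password_unique_per_device: bool
    forces_new_password_before_first_use: bool


def has_reasonable_security_feature(cfg: DeviceConfig) -> bool:
    """Either condition described in the text is enough to pass."""
    return cfg.password_unique_per_device or cfg.forces_new_password_before_first_use


# A device that ships with one shared factory password and never asks to change it.
mirai_style_target = DeviceConfig(False, False)
unique_password_device = DeviceConfig(True, False)
forced_reset_device = DeviceConfig(False, True)

for name, cfg in [
    ("shared default password", mirai_style_target),
    ("unique password per device", unique_password_device),
    ("forced password change", forced_reset_device),
]:
    print(name, "->", has_reasonable_security_feature(cfg))
```

Only the first device, the kind Mirai conscripted, fails the check. The upcode does not say how to build the device; it only rules out the one design that made the botnet cheap to assemble.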

Here’s another example. The Securities and Exchange Commission (SEC) has recently proposed regulations designed to nudge companies into making better security decisions. The proposal requires corporate boards to periodically report on their policies to identify and manage cybersecurity risks. Companies must disclose how directors are overseeing that risk, and how management is assessing it and implementing cybersecurity procedures. By requiring this reporting upcode, the SEC would force security considerations to become integrated into corporate decision-making at the highest level. Cybersecurity risks would become “mission-critical” concerns that directors and management cannot ignore and must disclose to investors.

Unlike the targeted California IoT law, the SEC regulation is a systemic change to upcode. It is not designed to patch any particular vulnerability. It changes the incentives that govern corporate decision-making. Fixing upcode at this level does not limit itself to fixing one downcode vulnerability, but aims to produce better security practices across a range of applications and services.

There is no such thing as “solving” the “problem” of cybersecurity. There are only trade-offs between different aspects of our information security, and between our information and physical securities. We have to balance the costs and benefits before we decide whether and how to patch upcode. For each defensive move taken, a change will occur in offensive tactics. Even at the level of upcode, the cat-and-mouse game never ends. Our aim is to slow the game so that the cat is winning most of the time.

These games take three relevant forms: crime, espionage, and war. The Morris Worm, the Melissa vorm, the Paris Hilton hack, and the Mirai botnet were crimes. Cozy Bear engaged in espionage. Fancy Bear might have engaged in an act of war. Each game requires its own measures.

A. CYBERCRIME

Beginning in the 1990s, the Uniform Crime Reports, the FBI’s official statistics on criminal activity in the United States, showed a pronounced drop in every category, both property crimes (theft, burglary, fraud) and violent crimes (assault, rape, murder). It appeared as though crime was plummeting across the country. Politicians and law enforcement ballyhooed the miraculous success of their policies and leadership.

This miracle turned out, at least in part, to be illusory. When criminologists turned to victimization reports—large-scale surveys asking people whether they have suffered a crime in the previous year—they discovered that property crime had not declined. It had migrated online. The statistical drop was an artifact of incomplete reporting of cybercrimes. Researchers now believe that at least half of property crime is committed on the internet. In the U.K., more than half of property crime is online.

Asking to stop cybercrime, therefore, is no different from asking to stop crime. You can’t. Crime is a part of life. While there is no magic wand that will eradicate crime, online or off, crime can be reduced in humane and cost-effective manners.

To date, the only upcode solution seriously discussed has been law enforcement. The constant plea is for greater efforts from prosecutors and bigger budgets from politicians: more cyber-agents, more intensive training of prosecutors, and larger investment in technology to track cybercrime.

These proponents are aware of the difficulties in prosecuting cybercrime. Credit card fraud might be perpetrated from Russia using a Romanian C2 server against a French bank by conscripting a security camera in New York to be part of a botnet that distributed malware written in Ukraine to a computer in Brazil owned by a Chinese company. Unlike pickpocketing, where the perpetrator and victim are in the same place, cybercriminals need not be in the same country, or side of the world, as the victim. To prosecute such transnational activity, states usually need cooperation from other states. Romanian servers and Russian ISP records may contain essential evidence for prosecuting the fraudsters.

Under international law, however, no state is legally obligated to help another prosecute crimes. Global upcode—with its system of sovereign states—treats law enforcement as a domestic matter. Romania is under no legal duty to provide the FBI access to servers on its territory, and Russia is not obliged to hand over a criminal suspect in its midst.

Legal fixes are available. Many states sign extradition treaties. According to these agreements, states are required to hand over suspects to their treaty partners upon request. Extradition treaties are simple internetwork devices—they enable different legal systems to request cooperation from one another on matters of transnational concern.

If you are a cybercriminal living in a country, such as the Russian Federation, that does not have an extradition treaty with the United States or any other country, you should be careful not to travel to a country that does. Knowing which states have extradition treaties is crucial upcode for traveling cybercriminals. Surprisingly, not all bear this in mind.

Vladislav Klyushin, age forty-two, ran M13, a cybersecurity company catering to the top echelon of Russian society and government. M13’s website, for example, claims that it provides security for the Russian presidency. In 2020, Vladimir Putin bestowed the Medal of Honor on Klyushin. The FBI, however, suspected that Klyushin had a side hustle making tens of millions of dollars on stock trading from hacked corporate-earnings information.

In the spring of 2021, federal agents learned that Klyushin was traveling to Switzerland. On March 21, a private jet from Moscow touched down at Sion Airport in southwestern Switzerland. Shortly after leaving the plane, with a helicopter standing by to take him to the ski resort of Zermatt, Klyushin was detained by Swiss police and taken to a nearby prison. His wife, five children, and business associate traveling with him continued on to Zermatt and stayed at a luxury chalet for almost ten days before returning to Moscow.

Both Russia and the United States asked Swiss courts to extradite Klyushin back to their respective countries. In the meantime, the Department of Justice secured indictments against Klyushin and Ivan Yermakov. Yermakov, you might recall, did the reconnaissance and some phishing for Fancy Bear in 2016. He had since left the GRU and joined M13. The insider-trading charges were Yermakov’s second indictment, as he’d been charged for espionage in 2018 for the DNC hacks. The indictments against Klyushin and Yermakov alleged that they hacked into the servers of two agencies used by U.S. publicly traded companies to file their quarterly reports and obtained them shortly before their release. With this pilfered information, these men made investment decisions on companies such as IBM, Snap, Tesla, and Microsoft, earning profits of $82.5 million.

The United States had another interest in Klyushin. Because he provided cybersecurity services for the Russian presidency, he likely had documentation on how the GRU hacked the DNC in 2016. Russia was obviously keen to keep Klyushin out of American hands, but Swiss courts sided with the United States. A high-level Kremlin insider now sits in a federal prison in Boston awaiting trial on insider-trading charges.

In addition to seeking extradition, states have formed intelligence- and resource-sharing alliances for criminal prosecutions. Consider the recent takedown of the massive botnet Emotet. Emotet began in 2014 as a banking Trojan—malware that steals financial information from banking apps. In 2016, it evolved into a general cybercriminal platform. Emotet C2s could download many types of malware onto its bots—banking Trojans, spamming applications, DDoS software, keyloggers, ransomware, clickjackers, and so forth. Emotet was among the most professional and longest-lasting crime-as-a-service platforms in the world, extracting an estimated $2.5 billion from the global economy. By 2020, the botnet was active on a million computers.

Under the auspices of EMPACT (European Multidisciplinary Platform Against Criminal Threats), Germany, the Netherlands, the United States, the United Kingdom, France, Lithuania, Canada, and Ukraine collaborated to seize Emotet’s geographically distributed infrastructure. After taking control of the servers, EMPACT pushed an update instructing bots to download a self-destruct program from its C2. When the bots ran the update, these digital lemmings killed themselves.

Cyberdiplomacy and the development of global upcode for transnational prosecution will be important going forward. But more aggressive prosecutions are no panacea. Many countries, especially corrupt ones that shelter cybercriminals, refuse to cooperate with states that prosecute cybercriminals. Even among cooperative states, cross-border investigations and prosecutions are costly. As one law enforcement officer told the Oxford sociologist Jonathan Lusthaus, we “can’t arrest our way out” of this problem. As with the Bulgarian virus writers, those who resort to cybercrime often have no other viable alternatives given their skill set. There may be cheaper ways to intervene before a conspiracy becomes so large that it is an international concern.

The systemic interventions I will suggest can be called the three Ps: pathways to cybercrime, payments for cybercrime, and penalties for vulnerable software. The three Ps are not silver bullets that will solve our problem of cyber-insecurity, but they are more efficient than constant patch-and-pray, which has characterized our digital lives until now.

Cyber-Enabled and Cyber-Dependent Crime

Most cybercriminals do not hack or rely on sophisticated technical skills. Consider “market” cybercrimes—the online sale of contraband such as Social Security numbers, identity documents, credit card information, prescription drugs, illicit drugs, weapons, malware, child sexual abuse materials, body parts, and sex. Participation in these illicit markets merely requires knowing how to use a Tor Browser and a cryptocurrency wallet. Similarly, hacking plays no role in garden-variety online fraud, such as advance-fee schemes, eBay scams, spear phishing, whaling, and catfishing.

Security theorists call these types of crimes “cyber-enabled”—they are traditional crimes facilitated by computers. Cyber-enabled crimes are distinguished from “cyber-dependent” crimes—such as unauthorized access, spamming, DDoS-ing, and malware distribution—which can only be perpetrated with computers.

While hackers participate in cyber-dependent crimes, not everyone who participates in cyber-dependent crimes is a hacker. Crime-as-a-service websites let nonhackers commit cyber-dependent crime. Like the booter services set up by VDoS and the Mirai gang that enabled cheap DDoS-ing, these websites enable anyone to “stress” websites, gain unauthorized access to computer networks, implant malware, and create phishing emails. Hacking has become like ordering anything online: point, click, attack.

Even when hackers engage in sophisticated cyber-dependent crime, they almost always partner with nonhackers. To see why, imagine a hacker who steals credit card numbers. Since he wants to profit from the theft, he needs to convert the stolen information into money—in the lingo, to “cash out.” To cash out by himself, he buys a credit card machine to encode blank cards with the stolen information. He then uses the freshly minted cards to buy expensive goods (such as handbags, watches, and video game consoles) from reputable businesses, being careful to split the purchases over numerous establishments so as not to raise suspicion. He might also buy these items online, then sell them back on eBay at a discount. Then there is the packing and shipping. And the collecting, depositing, and laundering the funds. Computing not only obeys the physicality principle; criming does, too.

Cashing out on cybercrime is also dangerous. The police caught Cameron LaCroix after the Paris Hilton hack by discovering a credit card machine, blanks, and game consoles in his brother’s car. It is safer and more efficient for hackers to outsource cashing out to less skilled accomplices. They might partner with recruiters who assemble groups to purchase consumer goods for them. They might hire shipping services that package items for resale over eBay. They might employ mules to deposit cash in banks, gift cards, or wire services like Western Union. They might also retain money launderers if they make too much cash.

Portrait of the Hacker as a Young Man

The universal feature shared by the cybercriminals in this book is that they have engaged in cyber-dependent crime: unauthorized access, release of viruses, SQL injections, token stealing, DDoS-ing, and so forth. Moreover, they started young. Cameron LaCroix was ten years old, Robert Morris twelve, Yarden Bidani and Itay Huri of the VDoS gang and MafiaBoy fourteen, Dalton Norman and Paras Jha sixteen. In Sarah Gordon’s survey of virus writers, the majority were under age twenty-two and all were male.

Another notable feature of our hackers is their initial motivations: they all started because it was fun. Though some ended up making money from cyber-dependent crime, Robert Morris Jr., Cameron LaCroix, Paras Jha, the Defonic Team Screen Name Club crew, and Dark Avenger began hacking as play. They were taken with the intellectual challenge and derived satisfaction from solving puzzles. Many were also seeking respect. They wanted to be known as elite hackers (in hacker-speak, leet or 1337).

Our hackers also learned from one another. With the exception of Robert Morris Jr., who learned from his dad, a world expert in cybersecurity, the young hackers picked up their craft from internet bulletin boards. The Bulgarian virus writers used the vX and FidoNet, Cameron LaCroix and the Defonic crew cut their teeth on AOL, and Paras Jha learned from Hack Forums. These boards were instrumental in teaching these boys how to hack. They also fostered the peer pressure that encouraged escalation of deviant activities, from game cheats and booting to profit-oriented cybercrime.

Fortunately, new research confirms the portrait that emerges from these case studies. Alice Hutchings, the director of the University of Cambridge’s Cybercrime Centre, has done the most extensive study of criminal hackers since Sarah Gordon’s work in the 1990s. As a graduate student at Griffith University in Australia, Hutchings used many of the same methods that Sarah Gordon had used two decades earlier: surveys and detailed, qualitative interviews with hackers. Her findings further confirm many of Gordon’s early insights and the portrait of cyber-dependent criminals drawn in this book.

The cyber-offenders Hutchings interviewed were not worried about getting caught. A young male hacker is quoted as saying, “Um, it is hard to get caught. The penalties are severe, but, I mean, the chances of getting caught are quite low, especially if you take the proper precautions.” Hackers also have a low opinion of law enforcement’s ability to investigate online crimes. Rather, it is career and relationship choices that tend to cause offenders to call it quits, and for younger offenders, these choices often coincide with maturing and entering adult life. As Sarah Gordon argued, and the Gluecks’ data set from chapter 4 showed, criminals usually age out of crime. One hacker that Hutchings interviewed described his choice to stop as follows: “Um, no real reason to be honest. Nothing really happened that I thought ‘I’d better stop doing this.’ I just kind of started spending my time doing other things … Hanging out with people in real life a lot more.”

Unlike in traditional crime, where education and employment reduce the likelihood of offending, in cyber-dependent crime criminals enjoy comparatively higher education and employment status. There is also a sharp gender disparity. When women are cybercriminals, they are rarely hackers and are more likely to engage in cyber-enabled offending. The source of the disparity derives in part from the pipeline: technical offenders often get their start as part of an online video game culture that is hostile to women. When these male gamers segue into hacking, they are joining another community that rarely accepts women.

Hutchings also found hackers to be moral agents, possessing a sense of justice, purpose, and identity. They attack targets that they believe have caused harm to others and avoid targets that they believe to be undeserving. They also justify their hacks with obtuse excuses that stem from the moral distortions of online environments—e.g., “Which individual am I really harming?” and “It’s their fault, they should have secured their computer!” But they rarely deny responsibility for their actions.

As we will see when examining possible interventions, recognizing the upcode that hackers follow is key for any proposed intervention to work. Indifference to or disrespect for a hacker’s reasoning and principles may turn an intervention from a positive to a negative force, increasing criminal behavior rather than encouraging desistance. As Sarah Gordon once observed to an audience at the Santa Fe Institute, everyone wants to be Neo, the hacker from The Matrix played by Keanu Reeves.

Diverting at-risk and low-level cyber-offenders is crucial because hacking tends to escalate. As criminologists have long known, breaking the law once makes breaking it again easier. Marginal criminality often escalates into more serious violations. Paras Jha echoed a similar explanation at his sentencing hearing: “What started off as a small mistake continued down a slippery slope to a point where I am ashamed to admit what I have become.”

Interventions

Alice Hutchings’s research provides further evidence for what should already be obvious: hackers get a lot out of hacking. As we saw with Mirai, the Bulgarian virus factory, and DFNCTSC, community and status are crucial to hacker motivation. According to the U.K. National Cyber Crime Unit study, “Social relationships, albeit online, are key. Forum interaction and building of reputation scores drives young cyber criminals. The hacking community (based largely around forums) is highly social. Whether it is idolising a senior forum member or gaining respect and reputation from other users for sharing knowledge gained, offenders thrive on their online relationships.” According to an eighteen-year-old arrested for hacking into the U.S. government, “I did it to impress the people in the hacking community, to show them I had the skills to pull it off … I wanted to prove myself … that was my main motivation.”

Because hacking is a social activity where one of the major benefits is peer recognition, peer contagion is a threat to the success of interventions. Having delinquent peers is one of the most consistent predictors of recidivism, especially for young people, as these peers model criminal behavior, reward it, and inflict punishment (such as rejection) on those who choose not to engage.

Cyber-dependent crime is especially tempting because it combines the thrill of hacking with the belief in invincibility. As mentioned earlier, hackers rarely think they’ll get caught. Positive alternatives, therefore, have to deliver comparable benefits for hackers, or they need to be convinced that they can be prosecuted (or both). These interventions must also presuppose that hackers have a sense of justice and will be persuaded more by morality than fear.

Consider targeted warnings and cautions, which have been used to great effect in many settings. For instance, a rigorous study in which eighteen thousand negligent drivers were sent warning letters found that low-threat caution letters deterred violations better than no letters (and also better than high-threat letters). While there is little published data for cybercrime, one study has shown that moral messages turn out to be more effective in reducing the damage hackers do when trespassing. In a 2016 study, for example, a compromised computer displayed an altruistic message asking hackers not to steal data: “Greetings friend, We congratulate you on gaining access to our system but must request that you not negatively impact our system. Sincerely, Overworked admin.” Compared to no warning, ambiguous warnings, and legal-threat warnings, the altruistic message had a significant effect in reducing further bad action (the ambiguous and legal warnings, if anything, increased hackers’ subsequent bad actions). As the Cambridge Cybercrime Centre research group concluded, “Where offenders see their actions as warranted because the ‘system’ is unfair, their responses to official intervention may be ones of defiance or resistance.”

A year before it joined up with VDoS to form PoodleCorp in 2016, LizardStresser’s booter database was hacked and the information leaked. Six LizardStresser customers were subsequently arrested for buying DDoS attacks, while fifty people who registered with LizardStresser but did not seem to have carried out attacks received a visit from the police. There was no follow-up data, unfortunately, to assess the efficacy of these “cease-and-desist” visits. But they ought to be retried and studied.

As black hats have transitioned into white hats, including Robert Morris Jr., Mudge, the Mirai trio, and famous hackers such as Kevin Mitnick and Kevin Poulsen, diverting young offenders to cybersecurity programs that encourage them to harness their skills and provide a sense of power and purpose is promising. The U.K. and Dutch cyber-diversion programs not only run hacking competitions, where teams compete to hack a target network, but also seek to match hackers up with older security personnel to act as mentors and direct their charges into the legitimate cybersecurity industry. As a report from the National Cyber Crime Unit of the National Crime Agency, the U.K.’s top cybercrime unit, notes, “Role models will often be the cyber-criminal at the top of the ladder the young people are trying to climb. Ex-offenders who managed to cease their activities and gain an education or career in technology have credited this change to a positive mentor, or someone who gave them an opportunity to use their skills positively.”

While the data on cybercrime mentoring effectiveness is scant, there are promising results on mentoring for other sorts of crime, especially when mentors contribute emotional support and advocacy. Sarah Gordon and Elliott Peterson are excellent models for what effective counselors and program leaders could be like: adults who respond with empathy, push back respectfully, and have experience with cybersecurity. Mentors who work in tech or the cybersecurity industries would seem to be a good fit, since they can interact virtually, and hackers find virtual relationships to be strong ones.

The hackers studied by Alice Hutchings and those we have met in this book are not universal stereotypes. They live in Western countries with robust market economies. The criminal hackers one finds in the United States, the U.K., and Western Europe tend to be younger than offenders in other areas of crime and are drawn into criminal hacking largely through gaming forums.

As Jonathan Lusthaus, the sociologist from Oxford University, has found, however, technical offenders in Eastern Europe tend to be older than their Western counterparts. Lusthaus estimates the average age of the 250 cybercriminals he has interviewed to be approximately thirty. They also have formal technical training, usually in some STEM field. These offenders turn to hacking not out of a sense of community or political outrage. They hack because they can’t find jobs in the tech industry that pay enough given their skills.

To address these overskilled and underemployed hackers, Lusthaus has proposed that cybersecurity firms recruit in Eastern Europe. If these men go into cybercrime because they lack legitimate opportunities where they live, we might try to bring them to where the jobs are: “There is a large pool of unemployed, underemployed and underpaid programmers looking for work in Eastern Europe’s challenging economies.” Providing these struggling coders legitimate jobs removes the main reason they have resorted to cybercrime.

We might learn here from our adversaries. Recall that the head of Fancy Bear, Viktor Netyksho, helped design curricula at and recruited from technical high schools. Fancy Bear also looks to the black hat community to draft talent. Law enforcement might redirect technical offenders into the white hat security community to protect computer systems. Turning black hats into white hats is a double win. Not only is there one less attacker, there is also one more defender. The need is quite urgent. Industry leaders estimate that the field needs 3.5 million new workers just to keep pace with demand. If we could fill some of those positions with budding hackers, we would need fewer positions to begin with.

Indeed, recruiting hackers from Eastern Europe has become easier since Russia’s invasion of Ukraine. Given the Russian crackdown on dissent over the war and the severe sanctions imposed by the West, throngs of highly skilled professionals have fled the country. The brain drain for IT professionals has been especially severe. An IT job with a decent salary and benefits would not only provide work for those escaping tyranny, but also remove the incentive many programmers have for resorting to cybercrime.

Payment Systems

Recall that the VDoS gang used to accept PayPal as payment for their DDoS service. That ended in 2015, when PayPal began cracking down on these services. PayPal was prodded by a team of academic researchers from George Mason University, UC Berkeley, and the University of Maryland. This team posed as buyers of booter services in order to trace the PayPal accounts that these services were using. When PayPal found out, it seized these accounts and balances. As Brian Krebs put it, PayPal launched “their own preemptive denial-of-service attacks against the payment infrastructure for these services.”

Like other booter services, VDoS tried to fill the gap with Bitcoin. But since customers found paying with cryptocurrency harder than using PayPal, booter services lost money. Researchers noted that booter services still insisted on using PayPal as a payment service even though these accounts were routinely banned and their contents confiscated.

Going after payment systems disrupts cybercriminal enterprises because they are choke points in marketing. Cybercriminals are not irrational. If they cannot get paid, providing their illicit services is pointless. In 2011, another group of academic researchers purchased counterfeit pharmaceuticals and software advertised by spam emails to discover which banks were handling the payments. When they found the payment processors, these researchers lodged complaints with the International AntiCounterfeiting Coalition, a nonprofit group that helps brands combat trademark violations. The IACC then notified Visa and Mastercard. Since banks are contractually prohibited from processing credit card payments for goods that are illegal to purchase in the United States, Visa and Mastercard began imposing fines on these banks as a response to these dogged academic detectives. Almost overnight, the sales of counterfeit Viagra and Microsoft Windows plummeted. In the words of one Russian spammer posting on a public forum: “Fucking Visa is burning us with Napalm.”

Most cybercriminals today use cryptocurrencies, such as Bitcoin. When ransomware encrypts someone’s hard drive or a company’s network, making their data unreadable, and demands that the victim pay ransom for the decryption key, the medium of exchange is almost always Bitcoin. To target the payment systems of modern cybercrime we should, therefore, target Bitcoin.

Bitcoin is often advertised as an anonymous form of payment, like cash. When you hand over a dollar for gum, the cashier doesn’t need to know who you are. The cashier just takes your money. But Bitcoin is not like cash in this respect. Bitcoin is not anonymous—it is pseudonymous.

People paying with Bitcoin have to use a name to identify themselves. That name is known as a Bitcoin address. A Bitcoin address is an ugly string of alphanumeric characters, such as 1BvBMSEYstWetqTFn5Au4m4GFg7xJaNVN2. A Bitcoin address reveals how much Bitcoin is associated with that address. That information is stored on a public ledger known as a blockchain. Thus, when someone tries to pay with Bitcoin, those who maintain the blockchain check it to see whether the sender has enough Bitcoin. If so, the transaction is listed on the blockchain, so that future sellers will know that the Bitcoins associated with that address have been transferred to a new Bitcoin address.

Everyone who has access to an internet browser can tell how much money is associated with a Bitcoin address. It is public information. But the identity of the owner of that address is not public. I may own the Bitcoins at 1BvBMSEYstWetqTFn5Au4m4GFg7xJaNVN2, but the information that I’m the owner is not posted on the blockchain.
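The bookkeeping described above can be made concrete with a toy model. The sketch below is a simplification and not how Bitcoin is actually implemented: the real system tracks unspent transaction outputs and verifies digital signatures, whereas this version just keeps a table of balances. The `ToyLedger` class and the seller address are invented for illustration. What the sketch does preserve is the point in the text: balances and transfers are visible to anyone, and no owner's name appears anywhere.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLedger:
    """Toy public ledger: every balance and transfer is visible to anyone."""
    balances: dict = field(default_factory=dict)   # address -> whole units held
    history: list = field(default_factory=list)    # (sender, recipient, amount)

    def balance_of(self, address: str) -> int:
        # Anyone may look up any address; no owner name is stored anywhere.
        return self.balances.get(address, 0)

    def transfer(self, sender: str, recipient: str, amount: int) -> bool:
        # The ledger's maintainers check that the sender can cover the payment.
        if amount <= 0 or self.balance_of(sender) < amount:
            return False
        self.balances[sender] -= amount
        self.balances[recipient] = self.balance_of(recipient) + amount
        # Recording the transfer lets future sellers see the coins have moved.
        self.history.append((sender, recipient, amount))
        return True


BUYER = "1BvBMSEYstWetqTFn5Au4m4GFg7xJaNVN2"   # example address from the text
SELLER = "1HypotheticalSellerAddress"           # made up for illustration

ledger = ToyLedger(balances={BUYER: 5})
accepted = ledger.transfer(BUYER, SELLER, 3)    # covered, so it is recorded
rejected = ledger.transfer(BUYER, SELLER, 10)   # not covered, so it is refused
```

Nothing in `ledger.history` says who controls either address. That gap between a public balance and a private identity is what pseudonymity means here.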

Bitcoin owners would be anonymous, except for one wrinkle: the vast majority of them buy their currency from cryptocurrency exchanges. Exchanges such as Coinbase and Crypto.com allow people to use dollars or other state-sponsored fiat money (euros, shekels, lire) to buy cryptocurrency. These exchanges are subject to special regulations to prevent money laundering, known as KYC, for “know your customer.” Opening an account on a cryptocurrency exchange is like opening a bank account. Customers have to provide their Social Security numbers, government-issued identification, and other personal identifying information. These Bitcoin owners are therefore not completely anonymous. Their exchange knows who they are.

Since Bitcoin transactions are public and cryptocurrency exchanges know the true identity of customers, states can use the legal process to force exchanges to reveal this private information. But here’s the rub: some big exchanges do not follow KYC regulations, for they are incorporated in countries that do not impose these requirements. Smaller exchanges, known as over-the-counter brokers, are also exempt from KYC regulations. Unsurprisingly, cybercriminals use these exchanges and brokers.
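A rough sketch shows why the KYC gap matters. Assume, hypothetically, that an investigator has the public list of transfers and that a compliant exchange has been legally compelled to say which deposit addresses belong to which customers. Every address, record, and function name below is invented for illustration, and real tracing follows many hops rather than one. Even so, the logic is the same: an address is unmasked only when the exchange behind it has kept identity records.

```python
from typing import Optional

# Public transfers, visible to anyone: (sender, recipient, amount).
transactions = [
    ("victim_addr", "ransom_addr", 10),
    ("ransom_addr", "compliant_exchange_deposit", 6),
    ("ransom_addr", "otc_broker_deposit", 4),
]

# Identity records held privately by a KYC-compliant exchange (hypothetical).
# The over-the-counter broker keeps no such records, so it has no entry.
kyc_records = {
    "compliant_exchange_deposit": "customer 1042, identity on file",
}


def recipients_of(transfers: list, source: str) -> list:
    """List every address that received funds directly from source."""
    return [recipient for sender, recipient, _ in transfers if sender == source]


def unmask(transfers: list, source: str, records: dict) -> dict:
    """Map each direct recipient to a known identity, or None if unknown."""
    found: dict = {}
    for address in recipients_of(transfers, source):
        identity: Optional[str] = records.get(address)
        found[address] = identity
    return found


trace = unmask(transactions, "ransom_addr", kyc_records)
```

In this toy run the funds cashed out through the compliant exchange lead to a named customer, while the funds sent to the broker lead nowhere.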

To disrupt the cybercriminal industry, states should impose KYC requirements on all brokers. They should also impose restrictions on the ability of exchanges to do business with exchanges and brokers that do not comply with KYC requirements. By ensuring that the true identity of a Bitcoin holder is known to exchanges, law enforcement can learn the identity of bad actors that use Bitcoin as part of malicious activities. Law enforcement can also force exchanges to exclude these actors from their platforms.

Indeed, the United States has started to sanction cryptocurrency exchanges for laundering ransomware proceeds. The Department of the Treasury’s Office of Foreign Assets Control (OFAC) designated the cryptocurrency exchange SUEX a sanctioned entity. U.S. citizens and financial institutions are generally banned from doing business with sanctioned entities. OFAC also warned U.S. entities that they can be sanctioned if they pay ransom to sanctioned entities, even if they are unaware that the entities are sanctioned.

Liability

As Shoshana Zuboff has argued, we live in the age of “surveillance capitalism.” Entire industries exist for the sole purpose of harvesting consumer information and selling it to advertisers. Google, Facebook, and Twitter want consumers to use their platforms so they can collect reams of personal information. Even companies such as Amazon, Best Buy, and Target, which sell actual things like books, televisions, and socks, relentlessly surveil and amass data on their customers. That information is then used to personalize promotions for return business. It’s called behavioral targeting.

In the age of surveillance capitalism, the hacking of firms that collect our personal information is a constant threat. Since 2017, Capital One, Macy’s, Adidas, Sears, Kmart, Delta, TaskRabbit, Best Buy, Saks Fifth Avenue, Lord & Taylor, Panera Bread, Whole Foods, GameStop, and Arby’s have suffered massive data breaches. In the most damaging breach of all, the personal information of 147 million applicants was stolen from the credit-rating firm Equifax, including credit card numbers, driver’s licenses, Social Security numbers, dates of birth, phone numbers, and email addresses.

Data breaches are major betrayals of trust. We divulge highly personal information to companies because we trust them to protect our privacy. In many cases, these companies that enrich themselves on our data have failed to take adequate precautions to protect the data. In the Equifax case, for example, the breach occurred because—incredibly—IT failed to patch a computer vulnerability rated a ten (out of ten) for dangerousness. When its database was breached in 2018, Marriott International, the world’s largest hotel chain, revealed that it did not use encryption for the passport numbers of over five million guests.

These outrageous betrayals occur because the legal system imposes few financial penalties for data breaches. Legal consequences are laughably slight. Every state in the United States requires that large organizations disclose major data breaches, usually within ninety days of their occurrence. Most states also require companies to offer identity-theft monitoring services for one to two years—usually at $19.95 per year. American courts make it extremely difficult to sue for any other form of relief.

Tech companies, therefore, have had little financial incentive to make serious investments in security—not just in creating secure downcode, but also in developing and enforcing secure corporate upcode. The Equifax disaster occurred not simply because someone in IT failed to patch a dangerous software vulnerability. It happened because of a corporate culture that did not value its customers’ security. The downcode remained vulnerable because the corporate upcode was vulnerable. If you don’t try to build a strong IT department, you don’t get a secure computer system.

The law, however, can change the corporate calculus. By imposing stiffer penalties and allowing victims to sue for data breaches, the law would make cybersecurity a financial imperative. Here we can learn from banking fraud. In the United States, if a customer disputes an ATM transaction, the onus is on the bank to prove that the customer is not telling the truth. As a result, U.S. banks take ATM fraud seriously. These banks invested in effective anti-fraud measures because they are ultimately liable for the transactions, not the customers. But in Britain, Norway, and the Netherlands, the burden was reversed. The onus lay on customers to prove that they did not authorize the transactions. Banks in these countries did not police fraud nearly as carefully because they had no incentive to do so. The levels of banking fraud there were much higher than in the United States as a result.

If data companies were also held financially responsible for privacy breaches, we should expect to see greater vigilance. We have recently begun to hold these firms accountable. In 2019, Equifax agreed to pay at least $575 million, and potentially up to $700 million, as part of a global settlement with the Federal Trade Commission (FTC), the Consumer Financial Protection Bureau, and fifty U.S. states, for failing to take reasonable steps to prevent the data breach that affected approximately 147 million people. This mammoth fine isn’t the only example of legal action related to surveillance capitalism, nor is it the biggest. In the same year, Facebook agreed to pay a $5 billion penalty to settle charges that the company violated a 2012 FTC order by deceiving users about their ability to control the privacy of their personal information.

Accountability is materializing, but in a chaotic, rather than systematic, fashion. Jane Chong, an assistant U.S. attorney for the Southern District of New York, aptly characterizes the problem: “Efforts to enforce software security will continue but could well result in a body without bones: big occasional settlements that strike fear into the hearts of vendors, but paired with little substantive development of the law to reliably guide vendors’ development, monitoring and patching practices.” The FTC, currently the agency that has done the most to hold data companies accountable for breaches, has the legal authority to take on companies that engage in “unfair or deceptive acts or practices in or affecting commerce.” But this tiny snippet of upcode does little to address the complex legal problems that need to be solved: What about when the software is free? Partially open-source? Put on your computer by an intermediary? Congress has not, thus far, provided any guidance for software liability regulation, other than immunizing software companies. Without clear legal upcode, companies can’t know which part, and how much, of their corporate upcode around data needs to change to avoid legal penalties.

Treating software malfunctions and breaches more like how we treat defective toasters carries some risks. But we might look to the auto industry for a lesson here. Chong points out that, in the 1960s, people were hesitant to impose liability on automobile manufacturers for unsafe vehicles. In a court ruling on whether General Motors was negligent for not including widespread safety features in a 1961 station wagon, the court rejected the claim because a “manufacturer is not under a duty to make his automobile accident-proof or foolproof.” But in 1966, a year after Ralph Nader published Unsafe at Any Speed, in which he charged car companies with resisting safety improvements, Congress passed the National Traffic and Motor Vehicle Safety Act, refocusing automobile safety on the vehicle, rather than the driver.

Today, similar concerns are expressed about software liability: tech is too important to our society to be slowed down, yada yada yada. Software liability would considerably slow progress if the goal were to create software as secure as possible. Remember when the military sought to build the highly secure VAX VMM Security Kernel but canceled the project because the technology became obsolete before it was finished? But that’s the lesson of the auto industry: legislation can be nuanced. We don’t need perfect security, just reasonable precautions, and until now, between Equifax, Marriott, and T-Mobile, reasonableness has been in short supply.

Illicit markets exist because people are willing to buy and sell illegal goods. If we can stop the supply of illicit goods, we can kill the market. Imposing financial penalties for data breaches is one way to ensure that companies do not furnish cybercriminals with the goods they need to run their businesses.

B. CYBERESPIONAGE

Recall the SolarWinds hack described in the introduction: Russian intelligence (most likely Cozy Bear) infiltrated eighteen thousand computer networks across the globe through a clever supply-chain attack. It compromised SolarWinds’ update servers and planted malware inside “patches.” When the company pushed an update in March 2020, Russian intelligence gained access to the thousands of companies and government agencies that trusted SolarWinds.

Given the enormity of the compromise, American politicians responded with fury. President Biden vowed that President Putin would have “a price to pay.” Echoing President Biden’s vow of retaliation, Senator John Cornyn, a Republican member of the Senate Intelligence Committee, called for “old-fashioned deterrence.” In April 2021, the White House imposed sanctions on the Russian Federation. In addition to OFAC freezing the assets of sixteen Russian citizens involved in the 2016 DNC hack and preventing U.S. entities from doing business with them, the Department of the Treasury placed restrictions on Russia’s ability to sell its own sovereign debt.

These actions make sense politically, but not legally. As we saw in chapter 8, the United States recognizes that espionage is legal according to international law. Every state spies on every other state and believes that it has the legal right to do so. As we noted earlier, while American law forbids Russia from spying on America, it permits America to spy on Russia. Vice versa for Russian law.

The SolarWinds hack was espionage. The supply-chain attack was designed to infiltrate the networks of U.S. government organizations and major corporations to collect information relevant to Russia’s national security. Espionage is different from the cybercrime we have been discussing. Cybercrime is usually committed by private individuals who are seeking some benefit—usually money, but also fame, fun, revenge, or some ideological objective. Espionage is usually committed by state actors acting on orders from superiors seeking information relevant to state objectives. Striking back for the SolarWinds hack would be akin to retaliating against Russia for building more aircraft carriers: acting in its national self-interest by shoring up its defenses.

Not every hack of one state by another is legal according to international law. We will later discuss cyber-conflict—or, as it is often called, cyberwar. In these cases, states don’t merely seek and collect information about matters of national security. They degrade their rivals’ systems to change facts on the ground—e.g., disabling air defense systems, crashing their power grid, altering official records, or releasing classified information. If these actions are illegal, retaliation may be appropriate.

That global upcode distinguishing espionage and war explains, as we saw, why the FBI did not react to the 2015 DNC hack by Cozy Bear with greater alarm. As far as the FBI was concerned, the DNC hacks were normal statecraft.

The bellicose response to the SolarWinds hack is even more off-key when viewed in light of the United States’ own past behavior. Russia did not invent the supply-chain internet attack. As Edward Snowden revealed in 2013, the NSA routinely uses this tactic. In one such operation, NSA agents waited until Cisco routers sold to foreign countries passed customs. They then opened the boxes, replaced the original chips with backdoor versions, and resealed them. The method was less elegant than what Cozy Bear did to SolarWinds, but just as effective.

It cannot be emphasized enough: the United States, like every other country in the world, spies on other states, allies included. Indeed, the United States may well be the leading spy on the planet. The United States not only breaks into computer systems at a greater rate and on a larger scale than any other intelligence service in the world, it crows about its prowess. “We are moving into a new area where a number of countries have significant capacities,” President Barack Obama said in September 2016. “And frankly we have more capacity than any other country.”

America’s voracious appetite for foreign intelligence did not begin with 9/11 or the birth of the internet. The intelligence community has been hoovering up global communications for decades. The Echelon program was established in the mid-1960s as a network of listening posts strategically situated around the globe. The NSA partnered with the Five Eyes group (United States, U.K., Canada, Australia, and New Zealand) to put in place ground stations, telecom splitters, and cable taps to intercept satellite, telephone, and microwave transmissions. By collaborating with the Five Eyes, the NSA was able to access the major communication hubs on the earth: United States and Canada (North and South America), United Kingdom (North Atlantic and Europe), Australia and New Zealand (Asia). The NSA knows how to exploit the physicality principle.

Brad Smith, the president of Microsoft, called the SolarWinds hack the most spectacular attack the world had ever seen. Maybe not. In 2020, The Washington Post reported on the Swiss company Crypto AG, which manufactured cryptographic equipment for military and diplomatic use. Since 1970, Crypto AG had worked closely and secretly with the CIA to embed backdoors in its machines. For fifty years, the American intelligence community had exquisite access to foreign military and diplomatic intelligence. By contrast, the SolarWinds hack lasted approximately nine months.

Economic Espionage

If we classified cyberattacks by downcode, we would miss crucial distinctions between cyber-espionage, cybercrime, and cyberwar. Whether Cozy Bear used a buffer overflow or phishing to access the SolarWinds update server is irrelevant to how the United States and others should react to these attacks. The proper response is determined by upcode. And that upcode permits states to spy on one another.

If global upcode permits espionage, it is tempting to consider changing it. The major spying states could assemble and negotiate an international treaty to prohibit espionage. Hacking for the sake of national security would be as illegal as piracy on the high seas.

Life is rarely that easy. It is not enough to change global upcode—states have to follow it. Simply signing a treaty won’t change behavior unless signatories have incentives to change their behavior. Otherwise, a treaty is a mere piece of paper.

Unfortunately, states do not have reason to change their behavior. No rational state would give up its right to spy on threats. The prime directive of a state is to protect its people from aggression. It would be irrational to expect countries to act irrationally. Without spying, states would not know the real threats they face. Like crime, espionage is a fact of life.

While no rational state would sincerely forswear espionage, some changes to upcode might alleviate the situation. Consider economic espionage. Chinese hackers had been breaking into the computer networks of U.S. companies for close to two decades and stealing fortunes in intellectual property. In one brazen hack, the Chinese pilfered the entire set of blueprints for the F-35 fighter jet. They burrowed deep within the networks of technology giants such as Google and Facebook. China did not spare the U.S. government. In 2013, it compromised the system of the Office of Personnel Management and exfiltrated the personnel files of 22 million federal employees. The People’s Republic of China was now in possession of the highly personal information of 7 percent of the U.S. population, all of whom worked (at one time or another) with the federal government. As FBI director James Comey said in 2014, “There are two kinds of big companies in the United States. There are those who’ve been hacked by the Chinese and those who don’t know they’ve been hacked by the Chinese.”

When President Obama threatened China with sanctions if the behavior continued, President Xi backed down. In 2015, the heads of the two greatest cyberpowers signed a historic agreement to limit their hacking. The agreement stated that the two countries would not “conduct or knowingly support cyber-enabled theft of intellectual property, including trade secrets or other confidential business information, with the intent of providing competitive advantages to companies or commercial sectors.”

The last qualification was crucial to both countries. The United States and China had no intention of ceasing to spy on each other. They would continue to break into each other’s systems in the name of national security. They simply agreed not to hack each other for financial gain. Analysts reported that China abided by this agreement, at least until President Trump imposed tariffs on Chinese goods and launched a trade war.

Domestic Espionage

While some changes to global upcode would be welcome, each state should assume that other states are hacking into its networks. There is no way to stop it, though there are ways to shape and limit it. By focusing on foreign hackers, however, we risk losing sight of the dangers posed by domestic surveillance. Every state hacks not only other states, but its own citizens as well.

Domestic surveillance is not necessarily sinister: criminal investigations and counterintelligence operations routinely require the interception of communications between suspects and retrieval of stored messages—emails, texts, phone calls, voice mails, WhatsApp messages, Facebook comments, and so on. Some citizens do commit crimes, assist foreign terrorists, and seek to harm innocent people.

The state, however, is a security mechanism and exhibits moral duality: it can be used either for good or for evil. The state can prosecute criminals, stop terrorists, and fight wars; it can also sow fear, persecute minorities, and punish dissent. Expanding the power of the state increases its ability to protect its people, but also to repress political opposition.

As we learned in chapter 5, since the U.S. government has control over the territory of the United States and much of the global infrastructure of the internet is in the United States, the U.S. government has control over most of cyberspace. If you are an enemy of the United States, this is not good news. But say you are a citizen of the United States. How worried should you be?

If you are a criminal, the answer is “Very.” In the Mirai case, for example, the FBI was able to catch three seemingly anonymous teenagers by harnessing the power of the federal government over private enterprise. Using grand jury subpoenas, search warrants, and d-orders, Elliott Peterson’s team was able to collect IP addresses from telecom companies, chat messages from social media companies, and telephone numbers from cell phone providers. Anna-Senpai never stood a chance.

If you are an agent of a foreign power, you should also be concerned. To get a FISA warrant, the FBI need only show probable cause to believe that you are an agent of a foreign power and a source of foreign intelligence information. If the Foreign Intelligence Surveillance Court agrees, it may authorize the full resources of the U.S. government to surveil you and collect information about you and your associates. In 2016, for example, the FISC approved a FISA warrant to surveil Carter Page, an American citizen and an adviser to the Trump campaign, after the FBI suspected that he was an agent of the Russian government.

Regardless of whether an American is a criminal or an agent of a foreign power, they are still legally protected by the Fourth Amendment to the U.S. Constitution. The FBI cannot target them without an order from a federal court issued by a judge confirmed by the U.S. Senate. Illegal surveillance is a serious crime. FISA makes it a felony for a federal official to surveil Americans on American soil without a warrant, punishable by up to five years in prison.

Even though strong legal protections are in place against the surveillance of American citizens, the Snowden leaks in 2013 caused great alarm in the United States. As Snowden revealed, the NSA spies on American soil without judicial warrants. Some saw Edward Snowden as a hero for disclosing this information; others, as a traitor. But everyone was shaken. In 2013, Gallup found that hacking accounted for Americans’ top two crime fears, with 69 percent afraid of hacks that harvest credit card information and 62 percent frightened of any kind of hack.

These fears are understandable. But are they justified? Should American citizens be concerned that their government will use its formidable powers against them?

What Snowden Revealed

The Snowden revelations are best remembered for how they exposed the NSA’s immense capabilities in foreign intelligence collection, how it ruthlessly hacks adversaries overseas and widely shares data with allies. But those revelations about menacing-sounding programs such as MUSCULAR and BOUNDLESS INFORMANT were to come later. The first stories written about the leaks focused on domestic surveillance instead.

“The National Security Agency is currently collecting the telephone records of millions of US customers of Verizon, one of America’s largest telecom providers, under a top-secret court order issued in April,” Glenn Greenwald wrote on June 5, 2013, in The Guardian. The next day, both The Washington Post and The Guardian published articles describing a program code-named PRISM, which both papers claimed allowed the NSA to directly access the servers of major tech companies to retrieve the content of international messages. “The National Security Agency and the FBI are tapping directly into the central servers of nine leading U.S. Internet companies, extracting audio and video chats, photographs, e-mails, documents, and connection logs that enable analysts to track foreign targets,” wrote Barton Gellman and Laura Poitras on June 6, 2013. Greenwald’s and Gellman and Poitras’s articles would win The Guardian and The Washington Post the Pulitzer Prize for Public Service.

Snowden not only caused an intelligence crisis for the national security community—a Pentagon review concluded that his theft of secrets was the “largest in American history”—but he also created a political crisis. The NSA, under directions from the Obama administration, was indeed spying on Americans. Surprisingly, however, Snowden did not create a legal crisis. No one accused the NSA of engaging in criminal activity. Except for possibly the leaker, no one was going to jail. The emerging story was not of a lawless NSA running amok, spies blithely flouting the rules to satisfy their lust for power. If anything, the legality of the surveillance programs was part of the scandal.

As we saw at the end of chapter 5, the Bush administration went to Congress and asked for legislation explicitly authorizing warrantless domestic surveillance. In 2007 and 2008, Congress amended FISA to permit a lot of what Bush, and then Obama, ordered the NSA to do. Under the updated legislation, the NSA was permitted to engage in electronic surveillance on U.S. soil under limited conditions without a FISA warrant.

There was one big problem: the surveillance programs that Obama approved and Snowden revealed collected vastly more information than a plain reading of the new FISA legislation allowed. The Guardian and The Washington Post reported, however, that the Foreign Intelligence Surveillance Court had interpreted the new FISA expansively. The FISC acquiesced to the Obama administration’s aggressive surveillance of American citizens, such as permitting the NSA to engage in bulk collection of all American phone records. Intelligence programs—both foreign and domestic—grew under the Democratic president who, as a candidate, criticized the surveillance practices of his predecessor.

As a secret court, the Foreign Intelligence Surveillance Court holds its proceedings in a windowless vault on the third floor of the U.S. District Courthouse in Washington, D.C. The proceedings are ex parte: one side only—the government. These one-sided hearings are held in secret and their transcripts are not released. In 2013, the FISC’s legal rulings were kept secret. The FISC’s order linked in the June 6, 2013, article not only compels Verizon to hand over all call records daily but also forbids Verizon or anyone else from disclosing the existence of the order.

Snowden did not, therefore, reveal any illegality. The NSA had been following congressional statutes and court orders when it engaged in warrantless surveillance of American citizens. But without Snowden, we would not have known that a court of unelected judges, not Congress, was secretly determining surveillance policy in the United States. Under the cloak of national security, the FISC evolved from a court that scrutinized warrants based on government-provided evidence to a regulatory agency setting the national security practices of the U.S. intelligence community. All. In. Secret.

Snowden’s disclosures about domestic surveillance are disturbing beyond any aggressive action the NSA might have taken after 9/11. They show how the Bush and then the Obama administrations exploited the secrecy of the FISC’s rulings to hide dubious legal interpretations. To use a downcode metaphor, two presidential administrations hacked the upcode by getting the surveillance court to issue top-secret rulings. As Senator Ron Wyden complained in the well of the Senate on May 26, 2011, during the Patriot Act reauthorization debate, “It’s almost as if there are two Patriot Acts, and many members of Congress haven’t even read the one that matters. Our constituents, of course, are totally in the dark. Members of the public have no access to the executive branch’s secret legal interpretations, so they have no idea what their government thinks this law means.”

The rule of law demands that laws be public, so that citizens may know what is being done in their name. While Americans do not have a right to know what the NSA is doing, they have the right to know what it is legally entitled to do. It is bad enough that the FISA statute is nearly inscrutable, perhaps intentionally so. Snowden revealed that the U.S. government was actively subverting the rule of law by hiding upcode behind a secret court so as to conceal what the NSA was doing with downcode. The American people therefore had no way of knowing what they were authorizing, and their representatives did not know what they were enacting, because the U.S. government had made the law a highly classified state secret.

NSA Has Upcode Too

In Laura Poitras’s riveting documentary Citizenfour, a wan and tired Edward Snowden is shown in the moments when he leaks to the journalists in his Hong Kong hotel room. He shows them the infamous PowerPoint deck of forty-one slides explaining the workings of PRISM, the surveillance program that collects internet communications from various U.S. companies. The camera turns to Glenn Greenwald, who is slack-jawed: “This is massive and extraordinary. It’s amazing. Even though you know it, even though you know that … to see it, like, the physical blueprints of it, and sort of the technical expressions of it, really hits home in like a super visceral way.”

Greenwald’s astonishment is reasonable. The rational response to great power is great concern. Security tools, we have noted many times, possess a moral duality. They can be used to protect or to oppress.

Security technology, however, does not run itself. It is run by people following upcode. And here, the NSA upcode is much stricter than many realize. The only time an NSA analyst can collect the content of communication in the United States without a warrant is when the call is international and the one party outside the country is not a U.S. person. Otherwise, the NSA has to turn the case over to the FBI for an individualized FISA warrant. If the analyst wants to target the international communications of a foreign terrorist outside the United States, the analyst can do so. But getting the information is not like in the movies. An analyst doesn’t just type selectors, such as an email address or phone number, into a computer and get the raw intelligence associated with the target.

NSA analysts must fill out tasking requests. These requests are sent to reviewers who check the legality of the targeting. Once cleared, tasking requests go to Target and Mission Management, which does a final review and releases the requests to the FBI. Yes, the FBI. The NSA does not run PRISM, or any domestic surveillance program—the FBI does.

Some might be skeptical. How do we know that the NSA and the FBI actually follow these procedures? We know not because the NSA told us. We learned about these protocols from Snowden. The information in the last paragraph comes directly from the second slide of the NSA’s top-secret PowerPoint deck.

Early on in Citizenfour, Snowden explains to the journalists why he was blowing the whistle: “And I’m sitting there, uh, every day getting paid to design methods to amplify that state power. And I’m realizing that if, you know, the policy switches that are the only things that restrain these states were changed … you couldn’t meaningfully oppose these.”

Snowden is right. Because the NSA has terrifying capabilities under its control, the policy switches, what we have been calling upcode, are the only things that stand between us and political repression. Any relaxation of the rules, or any effort to hide these relaxations, not only increases state power, but also increases the likelihood of lock-in. As state surveillance grows, so does its ability to stop those who want to roll back these changes. Calls to ease the upcode governing domestic surveillance should, therefore, be met with great caution.

Regardless of what you think of Edward Snowden, his revelations have led to a healthy backlash against state surveillance, a welcome tightening of the rules, and an increase in transparency. In 2015, Congress killed the infamous Bush-era, then Obama-era, program for bulk collection of telephone metadata. The NSA is no longer permitted to search the email messages of Americans for identifying terms (such as IP addresses) of foreign targets if they are not corresponding with those targets. Significant legal interpretations of FISA are now made public. Advocates representing the public interest may appear before the FISC. Since 2014, the director of national intelligence has published annual statistics on FISA activity as part of the office’s transparency responsibilities.

At the moment, Americans do not have much to fear from the NSA. But in the future, they might. All will depend on what upcode the U.S. government develops and what the electorate permits.

C. CYBERWAR

A gag running through this book has been about mistaken identity: people routinely suspect nation-states of committing cyberattacks when it turns out to be teenage boys. While these mistaken-identity stories make clear that panicked, doomsday rhetoric about cyberwar is getting current events wrong, it is hard to derive much comfort from that. After all, that three teenage boys can take down the internet might seem to prove one of the doomsayers’ contentions right: a determined nation-state can use the internet to unleash massive devastation.

In 2010, Richard Clarke published a bestselling book entitled Cyber War, in which he warned about the impending dangers. He described a cyber blitzkrieg where hackers overtake the Pentagon’s network, destroy refineries and chemical plants, ground all air travel, unplug the electrical grid, throw the global banking system into crisis, and kill thousands immediately. How easy would this be? “A sophisticated cyberwar attack by one of several nation-states could do that today, in fifteen minutes,” Clarke answered.

How concerned should we be about cyberwar? Was Clarke right about the threat? And what might we do to reduce the risk of the digital Armageddon he describes?

What Is Cyberwar?

Let’s recall our earlier distinction between cyber-enabled and cyber-dependent crime and apply it to cyberwar. In cyber-enabled war, states use computers as part of traditional warfare. Digital networks control artillery banks, air-defense batteries, unmanned aerial vehicles (i.e., drones), and missile guidance systems. Soldiers use email to communicate. States disseminate propaganda on social media.

In cyber-dependent war, by contrast, states use computers to attack the computers of another state. When Russia launched a three-week DDoS on Estonia in 2007, it was waging a cyber-dependent war. The United States also engaged in cyber-dependent war when, in partnership with Israel, it used the Stuxnet worm to infiltrate the computer networks at the Iranian nuclear facility in Natanz.

Richard Clarke’s apocalyptic scene is also cyber-dependent. He imagines a terrorist hacking into the computer networks of oil refineries, power plants, airports, and banks to wreak havoc. These attacks target “critical infrastructure,” resources so vital to the physical security, economic stability, and public health or safety that their incapacitation or destruction would have debilitating effects on a society. In the United States, the Cybersecurity and Infrastructure Security Agency (CISA) is charged with protecting the critical infrastructure of the country. After the Fancy Bear hacks of 2016, CISA included the election system as part of critical infrastructure.

When people speak about the possibility of “cyberwar,” they usually mean cyber-dependent war. After all, modern military conflict is thoroughly cyber-enabled. Cyber-dependent war, by contrast, doesn’t use computers to control weapons—computers are the weapons.

Cyber-dependent war has worried analysts because “cyber-physical” systems—systems that use computers to control physical devices so as to maximize efficiency, reliability, and convenience—have become commonplace. The Internet of Things that Mirai exploited is a cyber-physical internetwork, as are industrial control systems used in power plants, chemical processing, and manufacturing, which were exploited by Stuxnet. By hacking into computer networks, attackers can now cause physical destruction and disruption using only streams of zeros and ones.

Hyperspecialized Weapons

With the exception of Live Free or Die Hard, the 2007 movie in which Bruce Willis saves the United States from a cyberterrorist who shuts down the entire country, the world has not seen anything like Clarke’s doomsday fantasy. We are now in a position to see why. Cyberweapons are not like bombs, which can obliterate anything in their blast radius. Cyberweapons are more akin to chemical and biological weapons. Just as chemical and biological weapons are specialized to specific organisms—we get sick from anthrax but fish do not—cyberweapons work on a restricted set of digital systems.

To see the limitations of cyberweapons, let’s return to the Morris Worm. The Morris Worm was a hyperspecialized program. It targeted only computers that contained distinctive hardware and software. The instructions Robert Morris Jr. encoded in his buffer overflow ran only on certain computers—in particular, on VAX and Sun machines. It was useless on those made by PDP, IBM, or Honeywell, which employed different instruction sets in their microprocessors. When hackers exploit the distinction between code and data, their exploits will work only on machines that run the same code.

The Morris Worm was limited in other ways, too. Even if it found a VAX or Sun machine, the exploit worked only on servers that used the insecure version of Finger. If the version of Finger being used checked for buffer overflows, the exploit would fail. This is why Robert Morris used four attack vectors—because he knew that his buffer overflow would not work on many machines.
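The two conditions just described can be sketched as a simple filter. This is only a toy model of the argument, not the worm's actual logic: the `Host` record, the host names, and the `overflow_would_work` function are all hypothetical, and the set of target architectures simply follows the description above.

```python
from dataclasses import dataclass

# Hypothetical record describing a networked machine (illustration only).
@dataclass(frozen=True)
class Host:
    name: str
    arch: str                   # processor family, e.g. "vax", "sun", "ibm"
    finger_checks_bounds: bool  # True if Finger rejects oversized input

# Architectures the buffer overflow was written for, per the text above.
TARGET_ARCHS = {"vax", "sun"}

def overflow_would_work(host: Host) -> bool:
    """Both conditions must hold: matching hardware AND an unchecked Finger."""
    return host.arch in TARGET_ARCHS and not host.finger_checks_bounds

# A made-up network of four machines.
hosts = [
    Host("vax-unpatched", "vax", finger_checks_bounds=False),
    Host("vax-patched", "vax", finger_checks_bounds=True),
    Host("sun-unpatched", "sun", finger_checks_bounds=False),
    Host("ibm-unpatched", "ibm", finger_checks_bounds=False),
]

susceptible = [h.name for h in hosts if overflow_would_work(h)]
print(susceptible)
```

In this made-up network, only two of the four machines pass both checks. Each extra requirement shrinks the pool of reachable targets, which is why a single attack vector would have left many machines untouched.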

The malware we have seen in this book has also been hyperspecialized. The Vienna virus replicated only in DOS environments. The vorms such as Melissa and ILOVEYOU worked only on machines running Windows and Microsoft Office, not on Windows machines running WordPerfect or Eudora (a popular email program), Apple machines, or UNIX or Linux systems. The Cameron LaCroix hack on T-Mobile worked only on T-Mobile’s poorly built authentication system. Whereas the vorms we’ve seen worked only on Windows machines, Mirai worked only on Linux devices, and only on those manufactured with default passwords.

The failure to acknowledge the hyperspecialization of malware is long-standing. In 1988, Vesselin Bontchev objected to the Bulgarian news reports claiming that the Morris Worm could infect every computer on the planet. But if malware like the Morris Worm is so hyperspecialized, why was the Morris Worm so disruptive? The answer is that the internet was in its infancy then, with few types of computers and few versions of operating systems. To use another biological metaphor, the early internet was akin to a “monoculture.” In the absence of genetic diversity, monocultures are at serious risk of devastating disease. Hybridity, by contrast, promotes resiliency. Diseases that attack one kind of crop are ineffective against others with varying genetic makeups.

Fortunately, the internet in the United States is no longer a downcode monoculture. There are many kinds of computers and myriad versions of operating systems. The heterogeneity of downcode is virtually guaranteed by the upcode of the United States. In a federal system with fifty separate states, all of which have autonomy over their own computer systems, cyber-diversity is the norm. The odds that every state will purchase and maintain the same version of the same operating system, web server, database manager, programmable logic controllers, and network configuration are small. By contrast, the NotPetya worm of 2017 spread so quickly and caused $10 billion in damage because the GRU released the malware through an update to M.E.Doc, a software package used by 80 percent of Ukrainian businesses to prepare and pay their taxes.

The technology of hacking suggests that the large-scale destruction envisioned by some authors and Hollywood screenwriters is not likely. But as the SolarWinds hacks show, massive infiltration of the cyber-infrastructure is not impossible. The more popular the software in use, the more likely infiltration will cause widespread damage.

The possibility of cyberwar does not, however, depend purely on downcode. It depends on upcode as well. As we will now see, the upcode of conflict suggests that devastating cyberattacks on superpowers like the United States would be very surprising.

Cyberweapons of the Weak

In 1978, the political scientist James Scott spent two years living in a small rice-growing village in Malaysia to observe the interactions between poor peasants and rich landowners. He was interested in the historical question of why peasant revolts are so rare. One standard answer, favored by Marxists especially, is that peasants do not rebel because they suffer from “false consciousness.” The oppressed have internalized the ideology of their oppressors, believing that their unequal status is the result of a fair and impartial practice of justice, rather than one promoting the self-interest of the ruling class.

Scott’s stay in a small village in Malaysia convinced him that the Marxist response was wrong. Peasant insurrections aren’t rare because peasants suffer from false consciousness. They’re rare because open insubordination is perilous. Peasants have too much to lose and are likely to be crushed. But the absence of defiance does not imply compliance. Peasants fight back, tenaciously, relentlessly—but covertly. Peasants deploy what Scott called “weapons of the weak.” These weapons are everyday forms of resistance, which he listed as: “foot dragging, dissimulation, false compliance, pilfering, feigned ignorance, slander, arson, sabotage, and so forth.” Peasants mount persistent, low-level, and, most important, deniable assaults that seek to undermine the self-interest and moral authority of the ruling class without engaging in open conflict.

Scott’s theory of everyday peasant resistance provides the key to understanding the current state of cyber-conflict. One reason we have not seen a large-scale cyberattack on the critical infrastructure of the United States is that there isn’t anyone who can pull it off. But even if it were technically possible, it would not be in the attackers’ interest to do so. Any such strike would be catastrophic for the aggressor.

It should come as no surprise that the states that have launched cyberattacks on the United States are geopolitically weak. Consider North Korea and Iran. North Korea is a nuclear power, but it has no military partners and virtually no economy. It routinely struggles with food shortages and has experienced several famines. Iran is a Shiite power with few allies in the Muslim world. It has just emerged from a crippling period of economic sanctions imposed by the United Nations Security Council and the European Union to halt its nuclear weapons program.

North Korea and Iran are the geopolitical equivalent of peasants. They resent their inferior status and feel humiliated by the West. But since they cannot afford to engage in outright rebellion against more dominant nations, they settle for the everyday, low-level harassment characteristic of weak powers.

North Korea’s cyberattacks have the marks of weapons of the weak. The main objectives of these hacks have been vandalism and pilfering. The devastating hack on Sony in 2014 was a classic response to humiliation. Insulted over The Interview, a comedy about the assassination of North Korean leader Kim Jong Un, North Korean hackers wiped the Sony network clean, exfiltrating and releasing personal information about Sony employees and executive salaries, embarrassing emails, plans for future movies, and copies of unreleased films. North Korea has never claimed responsibility for these attacks. It used a front operation, the Lazarus Group, to hide its identity. Severe economic sanctions have also forced North Korea to rely on cybercrime to fund its nuclear research. In 2016, the Lazarus Group attempted to steal a billion dollars from the Bank of Bangladesh’s account at the New York Federal Reserve. The hackers were intercepted when a sharp-eyed regulator noticed a typo: the hackers had misspelled foundation as fandation. He halted the transfer, though $81 million had already disappeared.