Generative adversarial networks (GANs) are a type of deep learning model designed by Ian Goodfellow and his colleagues in 2014.

The invention of GANs was rather unexpected. Ian Goodfellow, then a PhD fellow at the University of Montreal, landed on the idea at a friend's going-away party while discussing the flaws of other generative algorithms with his friends. After the party, he went home with high hopes and implemented the concept he had in mind. Surprisingly, everything worked as he had hoped on the first trial, and he successfully created generative adversarial networks (GANs for short).

According to Yann LeCun, the director of AI research at Facebook and a professor at New York University, GANs are “the most interesting idea in the last 10 years in machine learning.”

Method

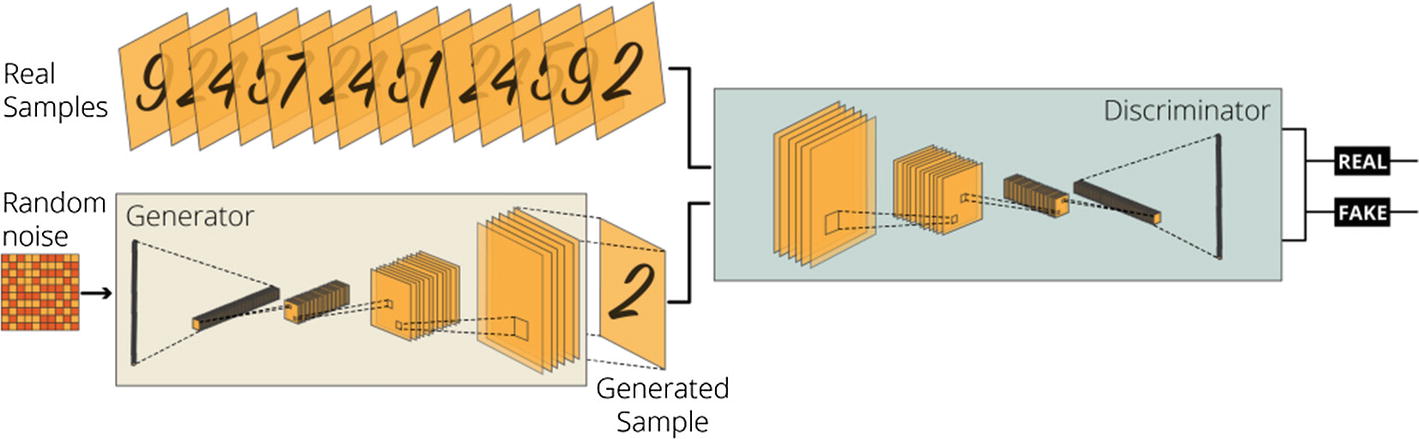

In a GAN architecture, two neural networks (a generator and a discriminator) compete with each other in a game. After being exposed to a training set, the generator learns to generate new samples with similar characteristics. The discriminator, on the other hand, tries to figure out whether a given sample is authentic (drawn from the training set) or manufactured by the generator. Through training, the generator is forced to generate near-authentic samples so that the discriminator cannot differentiate them from the training data. After this training, we can use the generator to generate very realistic samples such as images, sounds, and text.

GANs were initially designed to address unsupervised learning tasks. However, recent studies have shown promising results for GANs in supervised, semi-supervised, and reinforcement learning tasks as well.

Architecture

A Visualization of a Generative Adversarial Network

GAN Components

Generative Network

A generator network takes a fixed-length random vector (random noise) as input and generates a new sample. The random vector is usually drawn from a Gaussian distribution. The network usually starts with a one-dimensional layer, whose output is reshaped step by step into the shape of the training data samples. For example, if we use the MNIST dataset to generate images, the output layer of the generator network must correspond to the image dimensions (e.g., 28 x 28 x 1). The space from which the input vectors are drawn is referred to as the latent space.

Discriminator Network

A discriminator network works in roughly the reverse order. It takes a sample with the shape of the training data (e.g., 28 x 28 x 1) as input, either a real sample from the training set or the output of the generator network. The main task of a discriminator network is to decide whether that sample is authentic or not. Therefore, the output of a discriminator network is provided by a dense layer with a single neuron, which outputs the probability (e.g., 0.6475) that the sample is authentic.

Latent Space

Latent space (i.e., the vector space of the random input vectors) is where the generator network's input comes from. Each point in the latent space is a fixed-length noise vector, and the generator learns to map these points to samples that have the shape of the original training dataset samples. These generated samples, in turn, serve as input to the discriminator network. Through training, the latent space comes to capture the characteristic features of the training dataset so that the generator may successfully generate close-to-authentic samples.

A Known Issue: Mode Collapse

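Mode collapse occurs when the generator learns to produce only a narrow range of samples instead of covering the variety found in the training data. Two common causes are:

- A weak discriminator network
- A wrong choice of objective function

Therefore, adjusting the size and depth of our networks, as well as the objective function, may fix the issue.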

Final Notes on Architecture

It is essential to maintain healthy competition between the generator and discriminator networks to build useful GAN models. As long as these two networks work against each other to perfect their performance, you can freely design their internal structure, depending on the problem. For example, when you are dealing with sequence data, you can build two networks with LSTM and GRU layers as long as one of them acts as the generator network and the other acts as the discriminator network. Another example is our case study: to generate images with GANs, we add a number of Convolution or Transposed Convolution layers to our networks since they decrease the computational complexity of working with image data.

Applications of GANs

- Fashion, art, and advertising
- Manufacturing and R&D
- Video games
- Malicious applications and deep fake
- Other applications

Art and Fashion

Generative adversarial networks are capable of “generating” samples, so they are inherently creative. That’s why one of the most promising fields for generative adversarial networks is art and fashion. With well-trained GANs, you can generate paintings, songs, apparel, and even poems. In fact, a GAN-generated painting, “Edmond de Belamy, from La Famille de Belamy,” created by the Paris-based art collective Obvious, was sold at Christie’s in New York for $432,500. This sale shows the potential of GANs in the art world.

Manufacturing, Research, and R&D

GANs can be used to predict computational bottlenecks in scientific research projects as well as in industrial applications.

GANs can also be used to increase the resolution of images. In other words, GANs can predict the missing pieces using learned statistical distributions and generate suitable pixel values, which would increase the quality of the images taken by telescopes or microscopes.

Video Games

GANs may be used to obtain sharper, higher-resolution images from low-resolution images. This ability may be used to make old games more appealing to new generations.

Malicious Applications and Deep Fake

GANs may be used to generate close-to-authentic fake social profiles or fake videos of celebrities. For example, a GAN algorithm may be used to fabricate fake evidence to frame someone. Therefore, there are a number of malicious GAN applications, as well as a number of GANs built to detect the samples generated by malicious GANs and label them as fake.

Miscellaneous Applications

- For early diagnosis in the medical industry
- To generate photorealistic images in the architecture and interior design industries
- To reconstruct three-dimensional models of objects from images
- For image manipulation such as aging
- To generate protein sequences which may be used in cancer studies
- To reconstruct a person’s face by using their voice

The applications of generative adversarial networks are vast, and GANs are a very hot topic in the artificial intelligence community. Now that we have covered the basics of generative adversarial networks, we can start working on our case study. Note that our case study is our own take on the deep convolutional GAN (DCGAN) tutorial released by the TensorFlow team.

Case Study | Digit Generation with MNIST

In this case study, step by step, we build a generative adversarial network (GAN), which is capable of generating handwritten digits (0 to 9). To be able to complete this task, we need to build a generator network as well as a discriminator network so that our generative model can learn to trick the discriminator model, which inspects what the generator network manufactures. Let’s start with our initial imports.

Initial Imports

In the upcoming parts, we also use other libraries such as os, time, IPython.display, PIL, glob, and imageio, but to keep them close to their context, we import them only where we use them.

Load and Process the MNIST Dataset

We already covered the details of the MNIST dataset a few times. It is a dataset of handwritten digits with 60,000 training and 10,000 test samples. If you want to know more about the MNIST dataset, please refer to Chapter 7.
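The loading and preprocessing step can be sketched as follows. This is a minimal sketch, not the chapter's exact listing: the helper names `preprocess_images`, `make_dataset`, and `load_mnist_dataset`, the batch size of 256, and the scaling of pixel values to the range [-1, 1] (which pairs naturally with a tanh output layer in the generator) are assumptions borrowed from the TensorFlow DCGAN tutorial.

```python
import numpy as np
import tensorflow as tf

BUFFER_SIZE = 60000  # number of MNIST training images, used as the shuffle buffer
BATCH_SIZE = 256     # assumed batch size


def preprocess_images(images):
    """Reshape (N, 28, 28) uint8 images to (N, 28, 28, 1) floats in [-1, 1]."""
    images = images.reshape(images.shape[0], 28, 28, 1).astype("float32")
    return (images - 127.5) / 127.5


def make_dataset(images, buffer_size=BUFFER_SIZE, batch_size=BATCH_SIZE):
    """Shuffle and batch the preprocessed images."""
    dataset = tf.data.Dataset.from_tensor_slices(preprocess_images(images))
    return dataset.shuffle(buffer_size).batch(batch_size)


def load_mnist_dataset():
    """Download MNIST and build the training dataset.

    A GAN needs neither the labels nor the test split, so both are discarded.
    """
    (train_images, _), (_, _) = tf.keras.datasets.mnist.load_data()
    return make_dataset(train_images)
```

Calling `train_dataset = load_mnist_dataset()` downloads the data on first use and returns batches of shape (256, 28, 28, 1), with a smaller final batch.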

Now our data is processed and cleaned. We can move on to the model-building part.

Build the GAN Model

As opposed to the other case studies, the model-building part of this case study is slightly more advanced. We need to define custom loss, training step, and training loop functions. It may be a bit more challenging to grasp what is happening, but I try to add as many comments as possible to make it easier for you. Also, consider this case study as a step toward becoming an advanced machine learning expert. Besides, if you pay close attention to the comments, it is much easier than it looks.

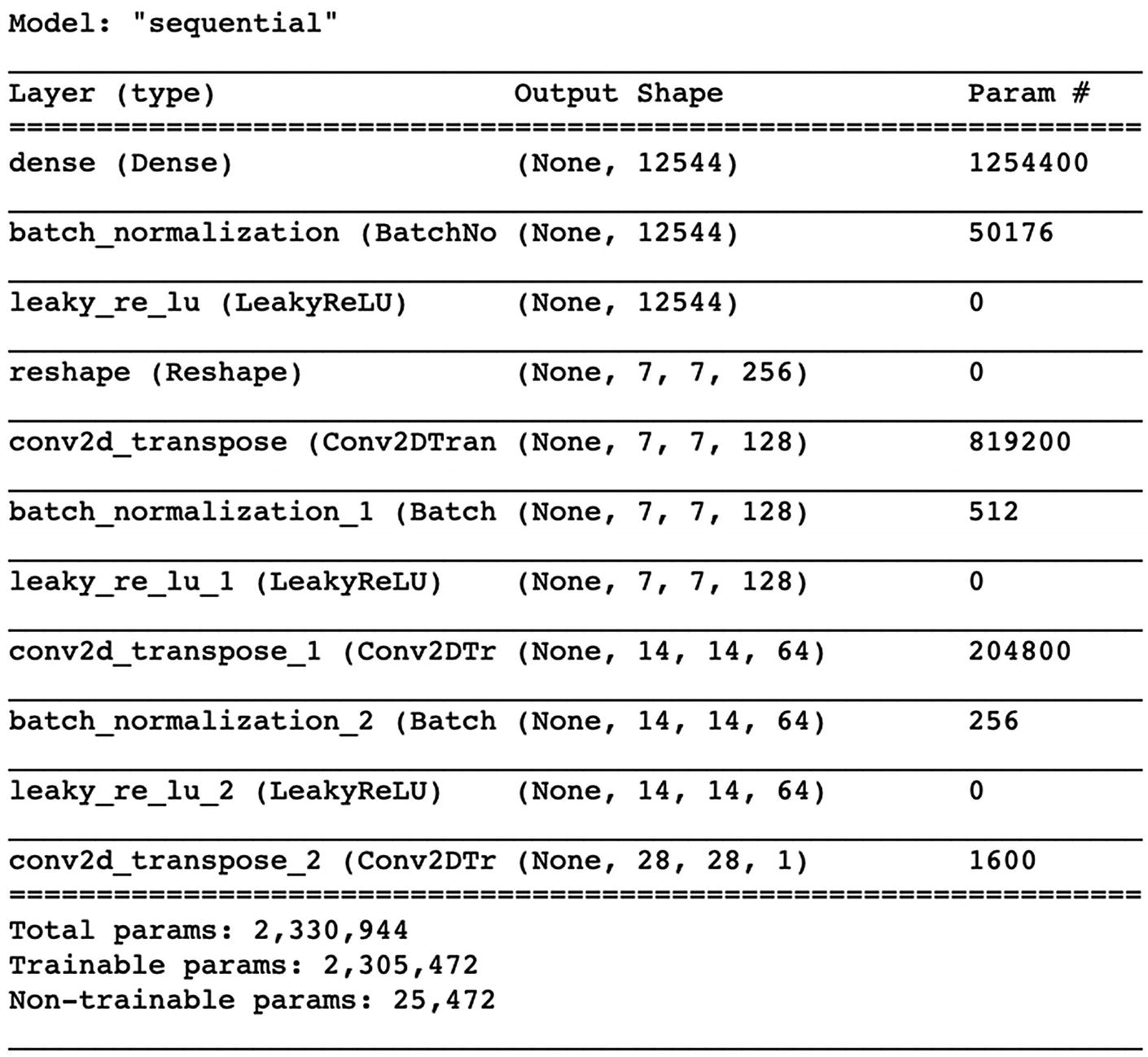

Generator Network
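A generator for 28 x 28 x 1 images can be sketched as follows. The function name `make_generator_model`, the noise length of 100, and the filter counts are assumptions taken from the TensorFlow DCGAN tutorial rather than fixed requirements. The network starts with a dense layer, reshapes its output to 7 x 7 x 256, and upsamples twice with transposed convolutions.

```python
import tensorflow as tf
from tensorflow.keras import layers

NOISE_DIM = 100  # assumed length of the random input vector


def make_generator_model():
    """Map a noise vector of length NOISE_DIM to a 28 x 28 x 1 image."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(NOISE_DIM,)),
        # One-dimensional start: 7 * 7 * 256 units, reshaped into a feature map
        layers.Dense(7 * 7 * 256, use_bias=False),
        layers.BatchNormalization(),
        layers.LeakyReLU(),
        layers.Reshape((7, 7, 256)),
        # 7 x 7 x 256 -> 7 x 7 x 128
        layers.Conv2DTranspose(128, (5, 5), strides=(1, 1), padding="same",
                               use_bias=False),
        layers.BatchNormalization(),
        layers.LeakyReLU(),
        # 7 x 7 x 128 -> 14 x 14 x 64
        layers.Conv2DTranspose(64, (5, 5), strides=(2, 2), padding="same",
                               use_bias=False),
        layers.BatchNormalization(),
        layers.LeakyReLU(),
        # 14 x 14 x 64 -> 28 x 28 x 1, tanh keeps pixel values in [-1, 1]
        layers.Conv2DTranspose(1, (5, 5), strides=(2, 2), padding="same",
                               use_bias=False, activation="tanh"),
    ])


generator = make_generator_model()
noise = tf.random.normal([1, NOISE_DIM])
generated_image = generator(noise, training=False)  # untrained, so it is just noise
```

Calling `generator.summary()` prints the layer-by-layer output shapes.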

The Summary of Our Generator Network

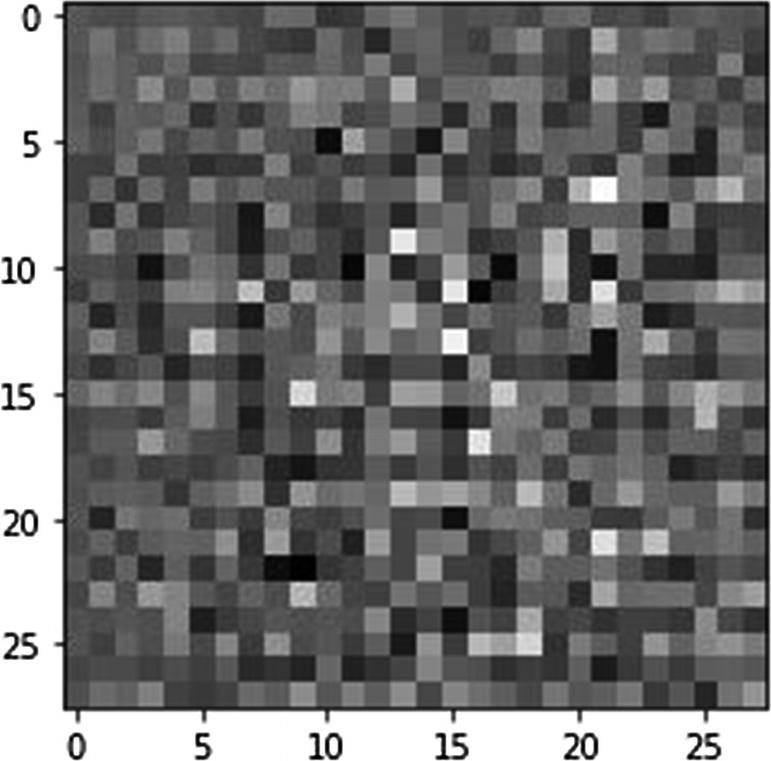

An Example of the Randomly Generated Sample Without Training

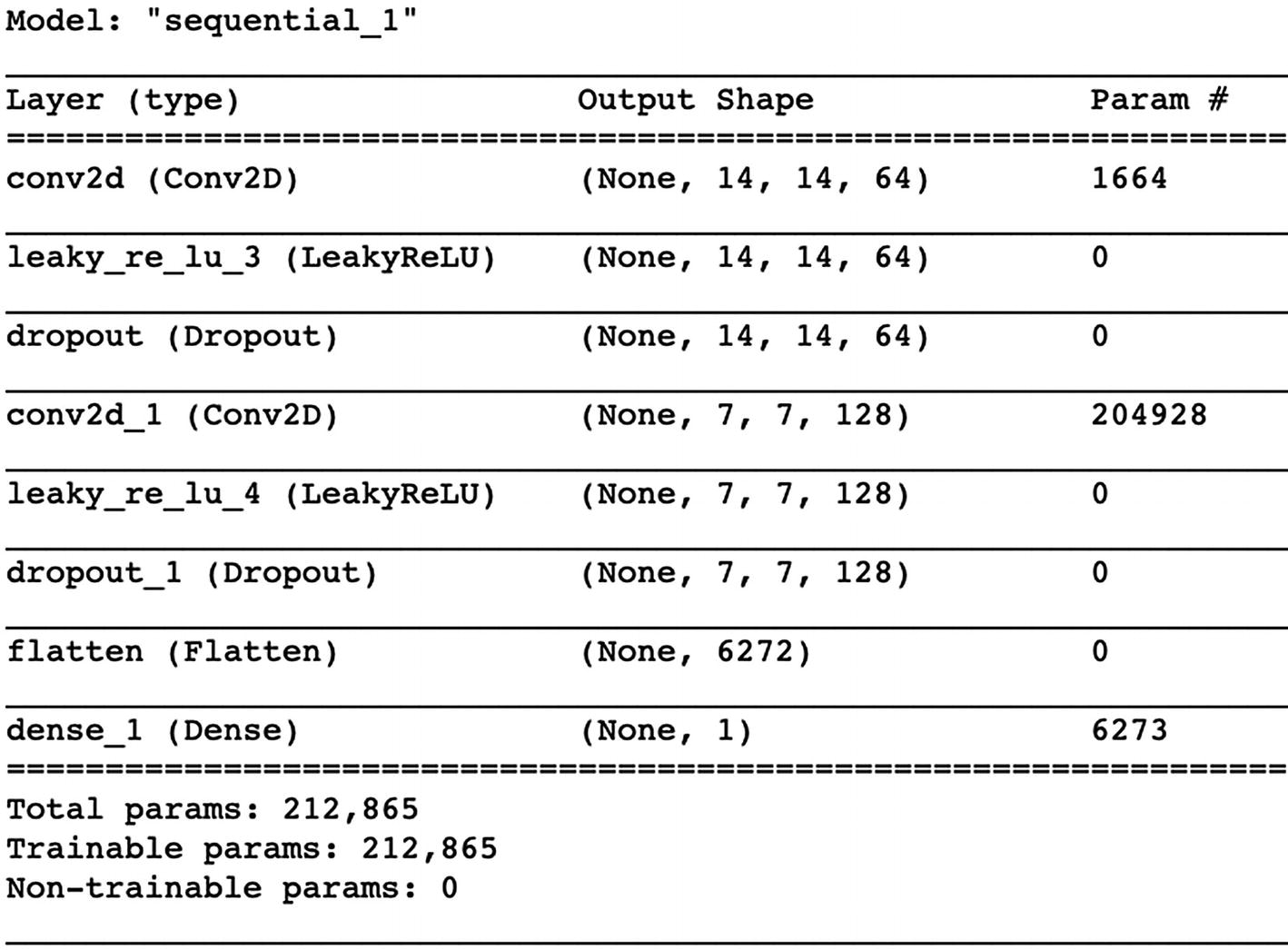

Discriminator Network
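A matching discriminator can be sketched as a small convolutional classifier. As with the generator, the function name `make_discriminator_model`, the filter counts, and the dropout rate are assumptions taken from the TensorFlow DCGAN tutorial. Note that the final dense layer has no activation, so the network returns a raw score (a logit) rather than a probability.

```python
import tensorflow as tf
from tensorflow.keras import layers


def make_discriminator_model():
    """Map a 28 x 28 x 1 image to a single real-vs-fake score (a logit)."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        # 28 x 28 x 1 -> 14 x 14 x 64
        layers.Conv2D(64, (5, 5), strides=(2, 2), padding="same"),
        layers.LeakyReLU(),
        layers.Dropout(0.3),
        # 14 x 14 x 64 -> 7 x 7 x 128
        layers.Conv2D(128, (5, 5), strides=(2, 2), padding="same"),
        layers.LeakyReLU(),
        layers.Dropout(0.3),
        layers.Flatten(),
        # Single neuron: positive leans toward "real", negative toward "fake"
        layers.Dense(1),
    ])


discriminator = make_discriminator_model()
sample = tf.random.normal([1, 28, 28, 1])  # stand-in for a generated image
decision = discriminator(sample, training=False)
```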

The Summary of Our Discriminator Network

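As you can see, our output is less than zero. Since the discriminator returns a raw score (a logit) rather than a probability, a negative value means that it classifies this particular sample, generated by the untrained generator network, as fake.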
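To relate such a raw score to a probability of authenticity, we can pass it through the sigmoid function. The snippet below is a small illustration in plain Python, and the helper name `logit_to_probability` is hypothetical. A score of zero corresponds to a probability of 0.5, negative scores fall below 0.5, and positive scores rise above it.

```python
import math


def logit_to_probability(logit):
    """Sigmoid: convert a raw discriminator score to a probability of 'real'."""
    return 1.0 / (1.0 + math.exp(-logit))


for logit in (-2.0, 0.0, 2.0):
    probability = logit_to_probability(logit)
    label = "real" if probability > 0.5 else "fake or undecided"
    print(f"score {logit:+.1f} -> probability {probability:.4f} ({label})")
```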

Configure the GAN Network

As part of our model configuration, we need to set loss functions for both the generator and the discriminator. In addition, we need to set separate optimizers for both of them as well.

Loss Function

We start by creating a BinaryCrossentropy object from the tf.keras.losses module with the from_logits parameter set to True. After creating the object, we use it inside our custom discriminator and generator loss functions.

Our discriminator loss is calculated as the sum of two terms: (i) the cross-entropy between the discriminator’s predictions on real images and an array of ones and (ii) the cross-entropy between its predictions on generated images and an array of zeros.

Our generator loss is calculated by measuring how well it was able to trick the discriminator. Therefore, we need to compare the discriminator’s decisions on the generated images to an array of ones.
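The two loss functions described above can be sketched as follows. The names `cross_entropy`, `discriminator_loss`, and `generator_loss` are assumptions following the TensorFlow DCGAN tutorial. Both functions expect the discriminator's raw scores, which is why from_logits is set to True.

```python
import tensorflow as tf

# Shared helper: binary cross-entropy computed directly on raw scores (logits)
cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)


def discriminator_loss(real_output, fake_output):
    """Penalize the discriminator for missing real images or accepting fakes."""
    real_loss = cross_entropy(tf.ones_like(real_output), real_output)
    fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)
    return real_loss + fake_loss


def generator_loss(fake_output):
    """Reward the generator when the discriminator labels its images as real."""
    return cross_entropy(tf.ones_like(fake_output), fake_output)
```

With this setup, a discriminator that scores real images as strongly positive and generated images as strongly negative gets a loss close to zero, while the generator's loss is large in that same situation.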

Optimizer
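Since the two networks are trained separately, each one gets its own optimizer. A minimal sketch, assuming the Adam optimizer with a learning rate of 1e-4 as in the TensorFlow DCGAN tutorial (both the optimizer choice and the learning rate are assumptions that you may tune):

```python
import tensorflow as tf

# One optimizer per network, so that each keeps its own internal state
generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)
```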

Set the Checkpoint

Training a GAN takes longer than training other networks due to its complexity. We have to run the training for at least 50–60 epochs to generate meaningful images. Therefore, setting checkpoints is very useful: it lets us save our progress and use our model later on.
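Checkpointing can be sketched with tf.train.Checkpoint, which tracks both networks and both optimizers. In the sketch below, the two tiny dense networks are stand-ins so that the block runs on its own, and the temporary directory and the `ckpt` prefix are hypothetical choices. In the case study, you would pass the real generator and discriminator and a directory of your choice.

```python
import os
import tempfile

import tensorflow as tf
from tensorflow.keras import layers

# Stand-in networks and optimizers (assumptions, only here to make the block runnable)
generator = tf.keras.Sequential([tf.keras.Input(shape=(100,)), layers.Dense(784)])
discriminator = tf.keras.Sequential([tf.keras.Input(shape=(784,)), layers.Dense(1)])
generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)

checkpoint_dir = tempfile.mkdtemp(prefix="training_checkpoints_")  # hypothetical location
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt")

checkpoint = tf.train.Checkpoint(
    generator_optimizer=generator_optimizer,
    discriminator_optimizer=discriminator_optimizer,
    generator=generator,
    discriminator=discriminator,
)

# Save the current state; during training this is called every few epochs
saved_path = checkpoint.save(file_prefix=checkpoint_prefix)

# Later (for example, in a new session): restore the most recent checkpoint
checkpoint.restore(tf.train.latest_checkpoint(checkpoint_dir))
```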

Train the GAN Model

Our seed is the fixed noise from which we generate images. We draw it from a normal distribution as a random array with the shape (16, 100), and we reuse it throughout the training so that the generated images are comparable across epochs.
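A sketch of the seed creation is shown below. The constant names are assumptions; the shape (16, 100) corresponds to 16 images to generate from noise vectors of length 100.

```python
import tensorflow as tf

NUM_EXAMPLES_TO_GENERATE = 16  # one image per cell of a 4 x 4 grid
NOISE_DIM = 100                # length of each random input vector

# Fixed noise, created once before the training starts
seed = tf.random.normal([NUM_EXAMPLES_TO_GENERATE, NOISE_DIM])
```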

The Training Step

This is the most unusual part of our model: we are setting a custom training step. After we define the custom train_step() function and decorate it with tf.function, our model will be trained based on this function.

Now that we have defined our custom training step with the tf.function decorator, we can define our train function for the training loop.
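A custom training step along these lines can be sketched as follows. It is a sketch under stated assumptions, not the chapter's exact listing: the two small dense networks are stand-ins so that the block runs on its own (in the case study, they would be the convolutional generator and discriminator), and the variable names follow the TensorFlow DCGAN tutorial. Each step generates a batch of images from noise, lets the discriminator score real and generated images, and then updates both networks with their own gradients.

```python
import tensorflow as tf
from tensorflow.keras import layers

NOISE_DIM = 100

# Stand-in networks (assumptions, only here to keep the block self-contained)
generator = tf.keras.Sequential([
    tf.keras.Input(shape=(NOISE_DIM,)),
    layers.Dense(28 * 28, activation="tanh"),
    layers.Reshape((28, 28, 1)),
])
discriminator = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    layers.Flatten(),
    layers.Dense(1),
])

cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)
generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)


@tf.function  # compiles the step into a graph for faster execution
def train_step(images):
    # One noise vector per real image in the batch
    noise = tf.random.normal([tf.shape(images)[0], NOISE_DIM])

    # Two tapes, because each network is updated with its own loss
    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        generated_images = generator(noise, training=True)
        real_output = discriminator(images, training=True)
        fake_output = discriminator(generated_images, training=True)

        gen_loss = cross_entropy(tf.ones_like(fake_output), fake_output)
        disc_loss = (cross_entropy(tf.ones_like(real_output), real_output)
                     + cross_entropy(tf.zeros_like(fake_output), fake_output))

    gen_gradients = gen_tape.gradient(gen_loss, generator.trainable_variables)
    disc_gradients = disc_tape.gradient(disc_loss,
                                        discriminator.trainable_variables)

    generator_optimizer.apply_gradients(
        zip(gen_gradients, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(
        zip(disc_gradients, discriminator.trainable_variables))
    return gen_loss, disc_loss
```

Returning the two losses is optional, but it makes it easy to log them during training.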

The Training Loop

- During the training:
  - Start recording time spent at the beginning of each epoch
  - Produce GIF images and display them
  - Save the model every 5 epochs as a checkpoint
  - Print out the completed epoch time
- Generate a final image in the end after the training is completed
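The steps listed above can be sketched as a plain Python loop. To keep the sketch self-contained, the training step, the image generation, and the checkpoint saving are passed in as callables; the parameter names `train_step`, `generate_images`, `save_checkpoint`, and `checkpoint_every` are hypothetical, and in the case study these roles are played by the custom training step, the image generation function, and the checkpoint object.

```python
import time


def train(dataset, epochs, train_step, generate_images, save_checkpoint,
          checkpoint_every=5):
    """Run the GAN training loop for the given number of epochs."""
    for epoch in range(epochs):
        # Start recording the time spent on this epoch
        start = time.time()

        # One training step per batch of real images
        for image_batch in dataset:
            train_step(image_batch)

        # Produce (and optionally display) images for the animation
        generate_images(epoch + 1)

        # Save the model every few epochs as a checkpoint
        if (epoch + 1) % checkpoint_every == 0:
            save_checkpoint()

        # Print out the completed epoch time
        print(f"Time for epoch {epoch + 1} is {time.time() - start:.2f} sec")

    # Generate a final image after the training is completed
    generate_images(epochs)
```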

Image Generation Function

- Generate images by using the model.
- Display the generated images in a 4 x 4 grid layout using Matplotlib.
- Save the final figure in the end.
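These three steps can be sketched as follows. The function name generate_and_save_images() is the one used later in the chapter, while the `output_dir` parameter, the file name pattern `image_at_epoch_NNNN.png`, and the rescaling from [-1, 1] back to [0, 255] are assumptions. The sketch selects a non-interactive Matplotlib backend so that it also runs without a display; in a notebook, you can drop that line and call plt.show() to display the grid.

```python
import os

import matplotlib
matplotlib.use("Agg")  # assumption: no display available; remove in a notebook
import matplotlib.pyplot as plt
import numpy as np


def generate_and_save_images(model, epoch, test_input, output_dir="."):
    """Generate images from test_input and save them as a 4 x 4 grid."""
    # training=False runs layers such as batch normalization in inference mode
    predictions = np.asarray(model(test_input, training=False))

    fig = plt.figure(figsize=(4, 4))
    for i in range(min(16, predictions.shape[0])):
        plt.subplot(4, 4, i + 1)
        # Map pixel values from [-1, 1] back to [0, 255] for display
        plt.imshow(predictions[i, :, :, 0] * 127.5 + 127.5, cmap="gray")
        plt.axis("off")

    path = os.path.join(output_dir, f"image_at_epoch_{epoch:04d}.png")
    plt.savefig(path)
    plt.close(fig)  # free the figure; one is created per epoch
    return path
```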

Now that we defined our custom image generation function, we can safely call our train function in the next part.

Start the Training

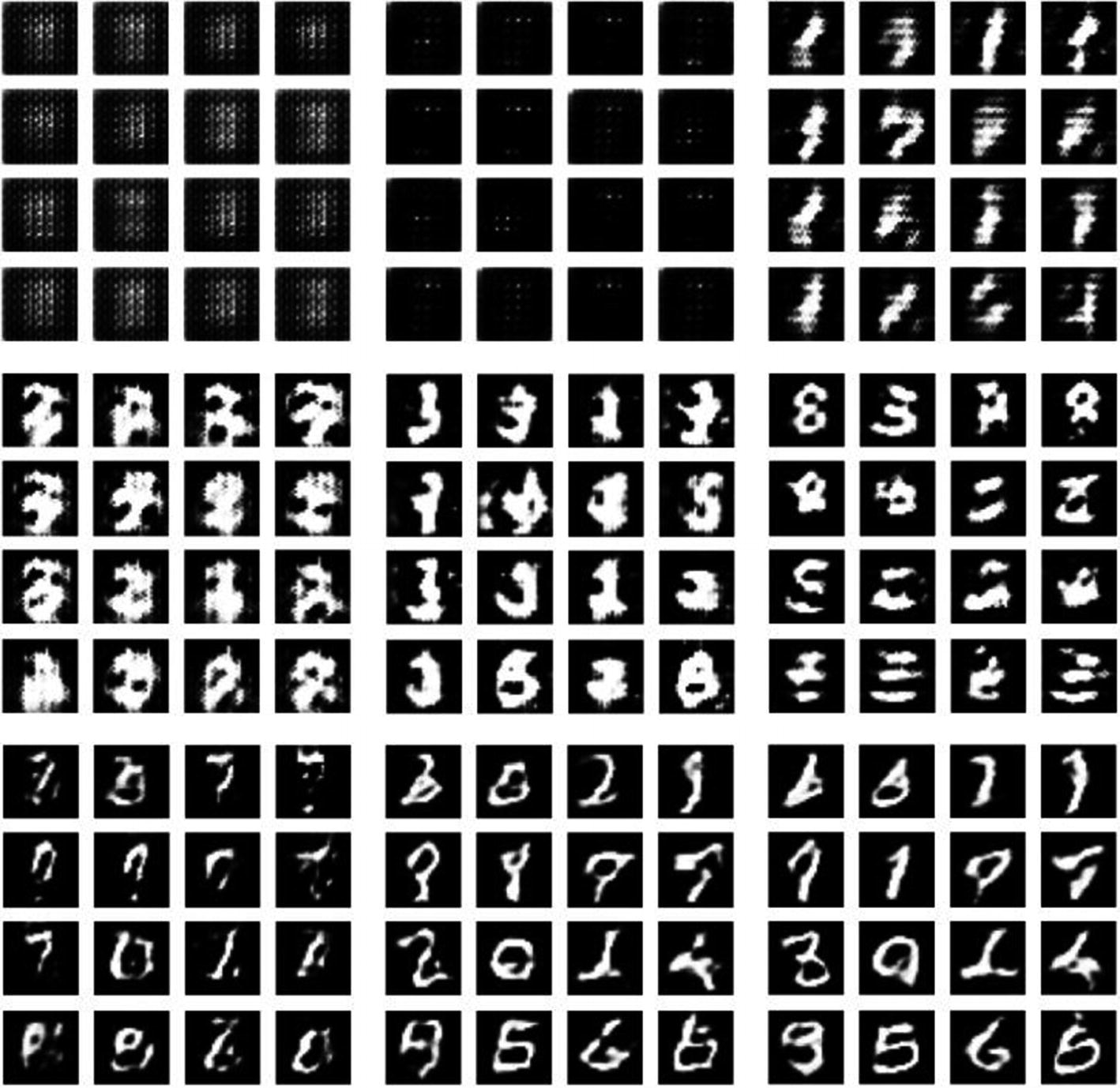

The Generated Images After 60 Epochs in 4 x 4 Grid Layout

As you can see in Figure 12-5, after 60 epochs, the generated images are very close to proper handwritten digits. The only digit I cannot spot is the digit two (2), which could just be a coincidence.

Animate Generated Digits During the Training

During the training, our generate_and_save_images() function successfully saved a 4 x 4 generated image grid layout at each epoch. Let’s see how our model’s generative abilities evolve over time with a simple exercise.
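One way to build such an animation is sketched below. It uses Pillow (PIL) rather than imageio to write the GIF, and it assumes that the per-epoch images were saved with the file name pattern `image_at_epoch_NNNN.png`; the function name `make_training_gif` and its parameters are hypothetical.

```python
import glob
import os

from PIL import Image


def make_training_gif(image_dir=".", gif_name="dcgan.gif", frame_ms=200):
    """Combine the per-epoch PNG files into a single animated GIF."""
    # Zero-padded epoch numbers make alphabetical order equal to epoch order
    filenames = sorted(glob.glob(os.path.join(image_dir, "image_at_epoch_*.png")))
    if not filenames:
        raise FileNotFoundError("no image_at_epoch_*.png files found")

    frames = []
    for name in filenames:
        with Image.open(name) as image:
            frames.append(image.convert("RGB"))

    gif_path = os.path.join(image_dir, gif_name)
    # save_all writes every frame; loop=0 repeats the animation forever
    frames[0].save(gif_path, save_all=True, append_images=frames[1:],
                   duration=frame_ms, loop=0)
    return gif_path
```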

The Display of the Latest PNG File Generated by the GAN Model. Note That the Samples Are Identical to Those Shown in Figure 12-5 Because We Restored the Model from the Last Checkpoint

Figure 12-7 shows several frames from the GIF image we created:

Generated Digit Examples from the Different Epochs. See How the GAN Model Learns to Generate Digits Over Time

Conclusion

In this chapter, we covered our last neural network architecture, generative adversarial networks, which are mainly used for generative tasks in fields such as art, manufacturing, research, and gaming. We also conducted a case study, in which we trained a GAN model which is capable of generating handwritten digits.