The iPhone, iPod Touch, iPad, and iPad mini are among the most interesting and useful new devices to be introduced into the market in recent years. The styling and functionality of the devices make them a “must have” for many people when on the go. For just these reasons, the adoption of the iPhone and related devices over the last few years has risen to more than 500 million units sold as of early 2013. This has been great news for Apple and users alike. With the ability to purchase apps, music, and other media easily, and to browse the Web from a full-featured version of the Safari web browser, people have simply been able to get more done with less.

From a technical perspective, the iPhone has also become a point of interest for engineers and hackers alike. People have spent a great deal of time learning about the iPhone’s internals, including what hardware it uses, how the operating system works, what security protections are in place, and so on. There is certainly plenty to talk about in terms of security. The mobile operating system used by the iPhone, known as iOS, has had an interesting evolution from what was initially a fairly insecure platform to its current state as one of the most secure consumer-grade offerings on the market.

The closed nature of the iPhone has also served as a catalyst for research into the platform’s security. The iPhone, by default, does not allow third parties to modify the operating system in any way. This means, for example, that users cannot access their devices remotely, nor can they install any software not available from Apple’s App Store, as they would normally be able to do with a desktop operating system. There are, of course, many people who want to do these things and much more, and so a community of developers has formed that has driven substantial research into the platform’s internal workings. Much of what we know about the iPhone’s security comes as a result of community efforts to bypass restrictions put in place by Apple to prevent users from gaining full access to their devices.

Given the broad adoption that the iPhone has seen, it seems reasonable to consider the platform’s security-related risks. A desktop computer may contain sensitive information, but you aren’t likely to forget it in a bar (iPhone prototypes!). You’re also not as likely to carry your laptop with you everywhere you go. The iPhone’s relatively good track record with regard to security incidents has led many people to believe that the iPhone can’t be hacked. This perception, of course, leads some folks to lower their guard. If their device is super secure, then what’s the point in being cautious, right? For these reasons and many others, we need to consider the iPhone’s security from a slightly different perspective: that of a highly portable device that is always on and always with the user.

In this chapter, we’re going to look at security for the iPhone from various angles. First, we’re going to provide some context by reviewing the history of the platform, starting in the mid-1980s and moving forward to present day. After this, we’ll take a look at the platform’s evolution from a security perspective since initial public release until now. We’ll then get a bit more technical by jumping into how to unlock your own phone’s full potential. Once you’ve learned how to hack into your own device, you’ll learn how to hack into devices not under your direct control. This is all so you can then take a step back to consider the measures that exist to defend an iPhone from attack. Let’s get started then by taking a look at the history of the iPhone!

iOS has an interesting history, and it helps to understand more about it when learning to hack the platform. Development on what would later become iOS began many moons ago, in the mid-1980s at NeXT, Inc. Steve Jobs, having recently left Apple, founded NeXT. NeXT developed a line of higher-end workstations intended for use in educational and other nonconsumer markets. NeXT chose to produce its own operating system, originally named NeXTSTEP. NeXTSTEP was developed in large part by combining open source software with internally developed code. The base operating system was derived primarily from Carnegie Mellon University’s Mach kernel, with some functionality borrowed from BSD Unix. An interesting decision was made regarding the programming language of choice for developing applications for the platform. NeXT chose to adopt the Objective-C programming language and provided most of their programming interfaces for the platform in this language. At the time, it was a break from convention, as C was the predominant programming language for application development on other platforms up to that point. Thus, application development for NeXTSTEP typically consisted of Objective-C programming, leveraging extensive class libraries provided by NeXT.

In 1996, Apple purchased NeXT and, with that purchase, came the NeXTSTEP operating system (by that time, renamed to OPENSTEP). NeXTSTEP was then chosen as the basis for a next-generation operating system to replace the aging Mac OS “classic.” In a prerelease version of the new platform, codenamed Rhapsody, the interface was modified to adopt Mac OS 9 styling. This styling was eventually replaced with what would become the UI for Mac OS X (codenamed Aqua). Along with UI changes, work on the operating system and bundled applications continued, and on March 24, 2001, Apple publicly released Mac OS X, their next-generation operating system, to the world.

Six years later, in 2007, Apple boldly entered into the mobile phone market with the introduction of the iPhone. The iPhone, an exciting new smartphone, introduced many novel features, including industry-leading design of the phone itself as well as a new mobile operating system known initially as iPhone OS. iPhone OS, later renamed somewhat controversially to iOS (owing to its similarity to Cisco’s Internetwork Operating System, or IOS), is derived from the NeXTSTEP/Mac OS X family and is more or less a pared-down fork of Mac OS X. The kernel remains Mach/BSD-based with a similar programming model, and the application programming model remains Objective-C based with heavy dependence on class libraries provided by Apple.

Following the release of the iPhone, several additional devices powered by iOS were released by Apple, including the iPod Touch 1G (2007), Apple TV (2007), iPad (2010), and iPad mini (2012). The iPod Touch and iPad are highly similar to the iPhone in terms of their internals (both hardware and software). Apple TV varies a bit from its sister products in that it is more of an embedded device intended for use in the home rather than a mobile device. However, Apple TV still runs iOS and functions roughly the same (the most notable differences being the user interface and lack of official support for installation and execution of apps).

From a security perspective, all of this is mentioned to provide some context, or some hints in terms of where the focus tends to be when attempting to attack or provide security for iOS-based devices. Inevitably, attention has turned to learning about the operating system architecture, including how to program for Mach, and navigation of the application programming model, including, in particular, how to work with, analyze, design, and/or modify programs built primarily using Objective-C and the frameworks provided by Apple.

A final note on iOS-based devices relates to the hardware platform chosen by Apple. To date, all devices powered by iOS have had, at their heart, an ARM processor, as opposed to an x86 or some other type of processor. The ARM architecture introduces a number of differences that need to be accounted for when working with the platform. The most obvious difference is that, when reversing or performing exploit development, all instructions, registers, values, and so on, differ from what you would find on other platforms. In some ways, however, ARM is easier to work with. For example, all ARM instructions are of a fixed length (either 2 or 4 bytes); the overall instruction set contains fewer instructions than that of other platforms; and there are no 64-bit concerns for the time being, as ARM processors in use by the current generation iPhone and similar products are 32-bit only.
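To make the "2 or 4 bytes" point concrete, here is a minimal Python sketch of how a disassembler might decide the length of an instruction in Thumb state. It relies on the Thumb-2 encoding rule that a first halfword whose top five bits are 0b11101, 0b11110, or 0b11111 begins a 32-bit instruction; in ARM state every instruction is simply 4 bytes. The function names are ours, chosen for illustration, and the sketch ignores everything else a real disassembler has to handle (mode switches, data embedded in code, and so on).

```python
def thumb_instruction_length(first_halfword):
    """Return the length in bytes (2 or 4) of a Thumb-2 instruction.

    first_halfword is the first 16-bit unit of the instruction, already
    converted from little-endian memory into an integer.
    """
    if not 0 <= first_halfword <= 0xFFFF:
        raise ValueError("expected a 16-bit value")
    top5 = first_halfword >> 11
    # These three prefixes mark the first half of a 32-bit encoding;
    # every other prefix is a complete 16-bit instruction.
    return 4 if top5 in (0b11101, 0b11110, 0b11111) else 2


def split_thumb_stream(code):
    """Split little-endian Thumb-2 code bytes into per-instruction chunks.

    A stream that ends in the middle of a 32-bit instruction yields a
    short final chunk rather than raising an error.
    """
    chunks = []
    offset = 0
    while offset + 2 <= len(code):
        halfword = int.from_bytes(code[offset:offset + 2], "little")
        length = thumb_instruction_length(halfword)
        chunks.append(code[offset:offset + length])
        offset += length
    return chunks


# "bx lr" (16-bit) followed by a 32-bit "bl" encoding.
sample = bytes.fromhex("7047" "00f000f8")
for chunk in split_thumb_stream(sample):
    print(len(chunk), chunk.hex())
```

Because only two lengths exist and the first halfword settles which one applies, walking a code region is far simpler than on variable-length instruction sets such as x86.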

To make things a bit easier, from this point in the chapter, we’ll use the term iPhone to refer collectively to all iOS-based devices. Also, we’ll use the terms iPhone and iOS interchangeably, except where a distinction is required.

Before moving on to a discussion of iOS security, here are some references for further reading, should you be interested in learning more about iOS internals or the ARM architecture:

• Mac OS X Internals: A Systems Approach, Amit Singh (Addison-Wesley, 2006)

• Mac OS X and iOS Internals: To the Apple’s Core, Jonathan Levin (Wrox, 2012)

• OS X and iOS Kernel Programming, Ole Henry Halvorsen (Apress, 2011)

• iOS Hacker’s Handbook, Charlie Miller et al. (Wiley, 2012)

• The Mac Hacker’s Handbook, Charlie Miller et al. (Wiley, 2009)

• Programming under Mach, Joseph Boykin et al. (Addison-Wesley, 1993)

• ARM System Developer’s Guide: Designing and Optimizing System Software, Andrew Sloss et al. (Morgan Kaufmann, 2004)

• ARM Reference Manuals, infocenter.arm.com/help/topic/com.arm.doc.subset.architecture.reference/index.html#reference

• The base operating system source code for Mac OS X, opensource.apple.com (Portions of this code are shared with iOS and often serve as a helpful resource when attempting to determine how something works in iOS.)

iOS has been with us for about six years now. During that period of time, the platform has greatly evolved, in particular, in terms of the operating system and application security model. When the iPhone was first released, Apple indicated publicly that it did not intend to allow third-party apps to run on the device. Developers and users alike were instructed to build or use web applications and to access these applications via the iPhone’s built-in web browser. For a period of time, this meant that, with only Apple-bundled software running on devices, security requirements were somewhat lessened. However, the lack of third-party apps also kept users from taking full advantage of their devices. In short order, hackers began to find ways to root or “jailbreak” devices and to install third-party software. In response to this and also in response to user demand for the capability to install apps on their devices, in 2008, Apple released an updated version of iOS that included support for a new service, known as the App Store. The App Store gave users the opportunity to purchase and install third-party apps. Since the launch of the App Store, over 800,000 apps have been released for purchase, with a total of over 40 billion apps having been downloaded (see apple.com/pr/library/2013/01/28Apple-Updates-iOS-to-6-1.html). Apple also began to include additional security measures with this and subsequent releases of iOS.

Early versions of iOS provided little in terms of security protections. All processes ran with superuser (root) privileges. Processes were not sandboxed or restricted in terms of what system resources they could access. Code signing was not employed to verify the origin of applications (and to control execution of said applications). No Address Space Layout Randomization (ASLR) or Position Independent Executable (PIE) support was provided for the kernel, other system components, libraries, or applications. Also, few hardware controls were put in place to prevent hacking of devices.

As time passed, Apple began to introduce improved security functionality. In short order, third-party apps were executed under a less-privileged user account named mobile. Sandboxing support was added, restricting apps to a limited set of system resources. Support was added for code signature verification. With this addition, apps installed on a device had to be signed by Apple to allow their execution. Ultimately, code signature verification was implemented both at load time (within the code responsible for launching an executable) and at runtime (in an effort to prevent new code from being added to memory and then executed). Eventually, ASLR was added for the kernel, other operating system components, and libraries, along with a compile-time option for Xcode known as PIE. PIE, when combined with recent versions of iOS, causes an app to load at a different base address upon every execution, making exploitation of app-specific vulnerabilities more difficult.
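Whether a given app binary was built with PIE can be read straight from its Mach-O header, which carries an MH_PIE bit in its flags field. The following Python sketch checks that bit. It assumes a thin (single-architecture), little-endian Mach-O image; fat binaries that bundle several architectures begin with a different magic number and are rejected here. The function name is ours, and the constants are those defined in Apple's mach-o/loader.h.

```python
import struct

MH_MAGIC = 0xFEEDFACE     # 32-bit Mach-O image
MH_MAGIC_64 = 0xFEEDFACF  # 64-bit Mach-O image
MH_PIE = 0x200000         # header flag: position-independent executable


def macho_is_pie(header):
    """Return True if the Mach-O header bytes have the MH_PIE flag set.

    Only the first 28 bytes are examined: seven little-endian 32-bit
    fields (magic, cputype, cpusubtype, filetype, ncmds, sizeofcmds, flags).
    """
    if len(header) < 28:
        raise ValueError("need at least 28 bytes of Mach-O header")
    fields = struct.unpack_from("<7I", header)
    magic, flags = fields[0], fields[6]
    if magic not in (MH_MAGIC, MH_MAGIC_64):
        raise ValueError("not a thin little-endian Mach-O image")
    return bool(flags & MH_PIE)


def file_is_pie(path):
    """Convenience wrapper: read the header from a file on disk."""
    with open(path, "rb") as handle:
        return macho_is_pie(handle.read(28))
```

An app that reports False here loads its main executable at a fixed address, which leaves an attacker with a predictable starting point even though system libraries are randomized.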

All of these changes and enhancements bring us to the present day. iOS has made great gains in terms of its security model. In fact, the overall App Store–based app distribution process coupled with the current set of security measures implemented in the operating system have made iOS one of the most secure consumer-grade operating systems available. This take on the operating system has largely been validated by the relative absence of known malicious attacks on the platform, even when considering earlier less secure versions.

However, although iOS has made great strides, it would be naïve to think that the platform is impervious to attack. For better or for worse, this is not the case. While we have not currently seen much in the way of malicious code targeting the platform, we can draw from some examples as a means for demonstrating that iOS does, in fact, have its weaknesses, that it can be hacked, and that it does deserve careful consideration within the context of an end user or organization’s security posture.

iOS security researcher Dino Dai Zovi’s paper on iOS 4.x security discusses iOS’s ASLR, code signing, sandboxing, and more, and should be considered required reading for those interested in iOS hacking. See trailofbits.files.wordpress.com/2011/08/apple-ios-4-security-evaluation-whitepaper.pdf.

When we talk about security in general, we tend to think about target systems being attacked and ways either to carry out those attacks or defend ourselves from them. We don’t usually think about a need for rooting systems under our own control. Funny as it may sound, in the case of mobile security, this is a new problem that needs to be dealt with. In order to learn more about our mobile devices or to have the flexibility needed when using them for security-related or really any other nonvendor-supported purpose, we find ourselves in the position of having to hack into them. In the case of iOS, Apple has toiled at length to prevent their customers from gaining full access to their own devices. With every action, there is, of course, a reaction, and in the case of iOS, it has manifested itself as a steady stream of tools that provide you with the capability to jailbreak the iPhone.

Thus, we begin our journey into the realm of iPhone hacking by discussing how to hack into your very own phone. As a first step toward this goal, it is useful to consider exactly what is meant by the term jailbreaking. Jailbreaking can be described as the process of taking full control of an iOS-based device. This can generally be done by using one of several tools available for free online or, in some cases, by simply visiting a particular website. The end result of a successful jailbreak is that you can tweak your iPhone with custom themes, install utility apps or extensions to apps, configure the device to allow remote access via SSH or VNC, install other arbitrary software, or even compile software directly on the device.

The fact that you can relatively easily liberate your device and use it to learn about the operating system, or just get more done, is certainly a good thing. Jailbreaking has some downsides, however, that you should keep in mind. First, there is always a sliver of doubt with regard to exactly what jailbreak software does to a device. The jailbreak process involves exploiting a series of vulnerabilities to take over a device. During this process, an attacker could insert or modify something relatively easily, without a user noticing. Although this has never been observed with well-known jailbreak applications, it is worth remembering. On at least one occasion, however, fake jailbreak software was released to tempt eager users into installing it; it targeted versions of iOS for which no free, confirmed-working jailbreak had yet been released. Jailbroken phones may also lose some functionality, as vendors have been known to include checks in their apps that report errors or cause an app to exit on startup (iBooks is an example of this). Another important aspect of jailbreaking that you should consider is the fact that, as part of the process, code signature validation is disabled. This is one of a series of changes required for users to be able to run arbitrary code on their devices (one of the goals of jailbreaking). The downside is, of course, that unsigned malicious code is also able to run, which increases the risk to the user. Finally, some potential exists for “bricking,” or rendering a device unusable, during the jailbreak process, and as jailbreaking voids a device’s warranty, there’s likely no way to bring the device back from the dead if this happens.

It is important to consider the pros and cons of jailbreaking. On the one hand, you end up with a device that can be leveraged to the fullest extent possible. On the other hand, you expose yourself to a variety of attack vectors that could lead to the compromise of your device. Few security-related issues have been reported affecting jailbroken phones, and, in general, the benefits of jailbreaking outweigh the risks. With that said, users should be cautious about jailbreaking devices on which sensitive information will be stored. For example, users should think twice before jailbreaking a primary phone that they use to store contact information or pictures or to take phone calls.

The jailbreak community has, in general, done more to advance the security of iOS than any other entity, perhaps with the exception of Apple. Providing unrestricted access to the platform has allowed substantial security research to be carried out and has helped drive the evolution of iOS’s security model from its early insecure state to where it is today. Thanks should be given to this community for their continued hard work and for their ability to impress, from a technical perspective, with the release of each new jailbreak.

Having covered what it means to jailbreak a device, what jailbreaking achieves, and the pros and cons to keep in mind when jailbreaking, let’s move on to the nitty-gritty. There are at least a few ways to jailbreak an iPhone. The first technique involves taking control of the device during the boot process and ultimately pushing a customized firmware image to the device. This technique can be used for older devices (the iPhone 3G, 3GS, and 4, as well as the iPod 4G and iPad 1). The second technique is entirely remote; it involves loading a file onto a device that first exploits and takes control of a userland process, and then exploits and takes control of the kernel. This second case is best represented by the website jailbreakme.com, which, in the last few years, has been used to host multiple remote jailbreaks. A third technique was developed in early 2012 to accommodate more recent devices such as the iPhone 4S and iPad 2/3 running iOS version 5 and is commonly referred to as the corona or absinthe jailbreak. The most recent jailbreak, known as evasi0n, was released in 2013 to provide support for the iPhone 5, iPod 5G, iPad 4, and iPad mini running iOS version 6.x (thank you, evad3rs).

Let’s take a look at the boot-based jailbreak technique first. The general process for using this technique to jailbreak a device involves these steps:

1. Obtain the firmware image (also known as an IPSW) that corresponds to the iOS version and device model that you want to jailbreak. Every device model has a different corresponding firmware image. For example, the firmware image for iOS 5.0 for an iPhone 4 is not the same as the one for an iPod 4. You must locate the correct firmware image for the particular device model. Firmware images are hosted on Apple download servers and can typically be located via a Google search. For example, if we search Google for “iPhone 4 firmware 4.3.3”, the second result (at the time of this writing) includes a link to the following download location:

appldnld.apple.com/iPhone4/041-1011.20110503.q7fGc/iPhone3,1_4.3.3_8J2_Restore.ipsw

This is the IPSW needed to jailbreak iOS 4.3.3 for an iPhone 4 device.

These files tend to be large, so be sure to download them before you need them. We suggest storing a collection of IPSWs locally for the device models and iOS versions that you work with on a regular basis.

2. Obtain the jailbreak software you’re going to use. You have several options available. A few of the most popular applications for this purpose include Redsn0w, greenpois0n, and limera1n.

We’ll use Redsn0w in this section, which you can grab from the following location:

blog.iphone-dev.org/

3. Connect the device to the computer hosting the jailbreak software via a standard USB cable.

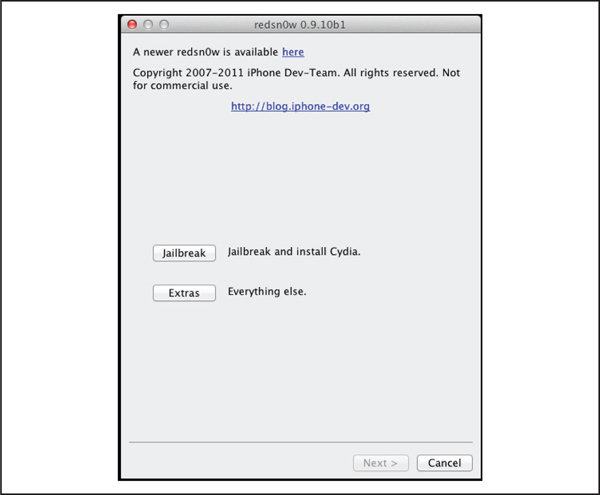

4. Launch the jailbreak application by clicking the Jailbreak button, as shown in Figure 3-1.

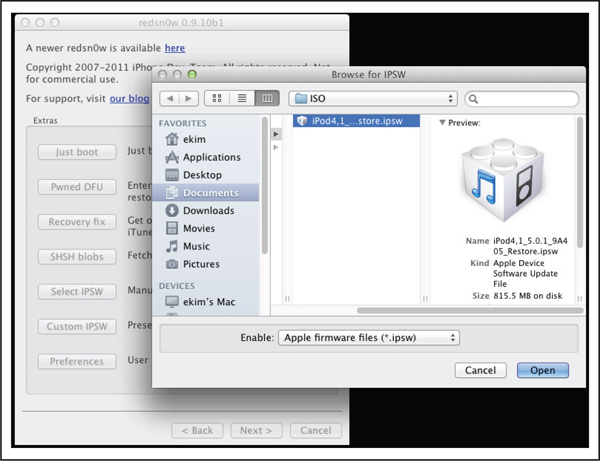

5. Via the jailbreak application’s user interface, select the previously downloaded IPSW, as shown in Figure 3-2. The jailbreak software typically customizes the IPSW, and this process may take a few seconds.

6. Switch the device into Device Firmware Update (DFU) mode. To do this, power off the device. Once powered off, press and hold the power and home buttons simultaneously for 10 seconds. At the 10-second mark, release the power button, while continuing to press the home button. Hold the home button for an additional 5 to 10 seconds, after which you can release it. The device’s screen is not powered on when put into DFU mode, so it can be a bit challenging to determine whether the mode switch has actually occurred or not. Fortunately, jailbreak applications such as Redsn0w include a screen that walks the user through this process and that alerts the user when the device has been successfully switched into DFU mode, as shown in Figure 3-3.

If you’re attempting to do this but have issues, search YouTube for assistance. There are a number of videos that visually walk you through the process of switching a device into DFU mode.

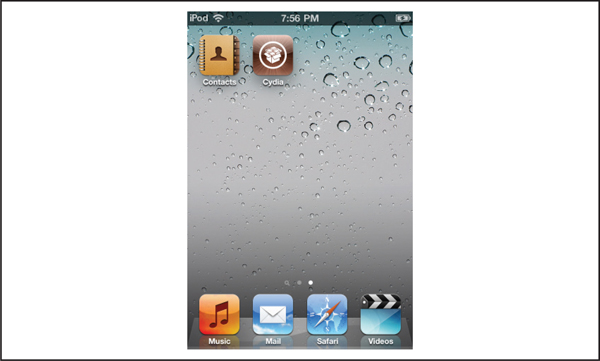

7. Once the switch into DFU mode occurs, the jailbreak software automatically begins the jailbreak process. From here, wait until the process completes. This typically involves loading the firmware image onto the device, some interesting output to the device’s screen, followed by a reboot. After reboot, the device should launch in the same way as a normal iPhone, but with an exciting new addition to the “desktop”—Cydia. Cydia is shown in Figure 3-4.

Figure 3-1 Launching the Redsn0w jailbreak app

Figure 3-2 Selecting the IPSW in Redsn0w

Figure 3-3 Redsn0w’s helpful “wizard” screens

Figure 3-4 Cydia—you’ve been jailbroken!
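Step 1 stresses that the IPSW must match both the device model and the iOS version. The restore file name from that step, iPhone3,1_4.3.3_8J2_Restore.ipsw, appears to encode a device identifier, an iOS version, and a build number, so a quick check before feeding a file to the jailbreak tool can save a failed attempt. The Python sketch below parses that name. The pattern is inferred from the single example in this section and is an assumption, not a documented Apple format; the function and field names are ours.

```python
import re
from typing import NamedTuple


class IpswName(NamedTuple):
    device: str   # hardware identifier, e.g. "iPhone3,1"
    version: str  # iOS version, e.g. "4.3.3"
    build: str    # build number, e.g. "8J2"


# Pattern assumed from the example file name shown in step 1.
_IPSW_PATTERN = re.compile(
    r"^(?P<device>[A-Za-z]+\d+,\d+)"
    r"_(?P<version>\d+(?:\.\d+)*)"
    r"_(?P<build>[0-9A-Za-z]+)"
    r"_Restore\.ipsw$"
)


def parse_ipsw_name(path_or_url):
    """Extract device, version, and build from an IPSW file name or URL."""
    name = path_or_url.rsplit("/", 1)[-1]
    match = _IPSW_PATTERN.match(name)
    if match is None:
        raise ValueError("unrecognized IPSW file name: " + name)
    return IpswName(**match.groupdict())


def ipsw_matches(path_or_url, device, version):
    """Return True if the IPSW is for the given device identifier and version."""
    info = parse_ipsw_name(path_or_url)
    return info.device == device and info.version == version


url = ("appldnld.apple.com/iPhone4/041-1011.20110503.q7fGc/"
       "iPhone3,1_4.3.3_8J2_Restore.ipsw")
print(parse_ipsw_name(url))
```

A local collection of IPSWs can be indexed the same way, so the right image for a given device and iOS version is easy to find when you need it.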

The second-generation AppleTV can be jailbroken using a process similar to the one described in this section. An application frequently used for this purpose is FireCore’s Seas0nPass.

Boot-based jailbreaking is the bread and butter of gaining full access to a device. However, its technical requirements raise the bar slightly for the user attempting to perform the jailbreak. A user has to grab a firmware image, load it into the jailbreak application, and switch his or her device into DFU mode. This can present some challenges for the less technical among us. For the more technical, although this is not a huge hurdle to overcome, it can be slightly more time consuming than using what is known as a remote jailbreak. In the case of a remote jailbreak, such as that provided by jailbreakme.com, the process is as simple as loading a specially crafted PDF into the iPhone’s Mobile Safari web browser. The specially crafted PDF takes care of exploiting and taking control of the browser and then the operating system, ultimately providing the user with unrestricted access to the device.

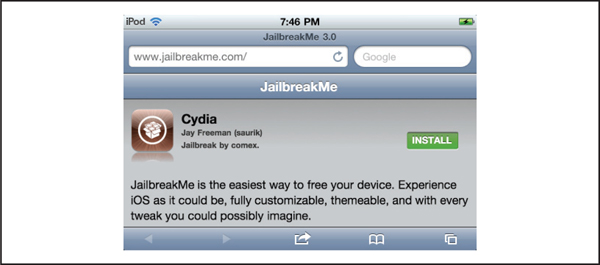

In July 2011, iOS hacker Nicholas Allegra (aka comex) released version 3.0 of a remote jailbreak technique for iOS 4.3.3 and earlier, via the website jailbreakme.com. This particular jailbreak technique has been dubbed “JailbreakMe 3.0,” or JBME3.0 for short. The process for jailbreaking a device using this technique only requires loading the website’s home page into Mobile Safari, as shown in Figure 3-5. Once at the home page, a user needs only to tap the install button, and presto, the device has been jailbroken.

Figure 3-5 The JailbreakMe 3.0 app

This jailbreak technique was originally very handy but has become significantly less useful over time as it does not support more recent versions of iOS such as 5.x or 6.x.

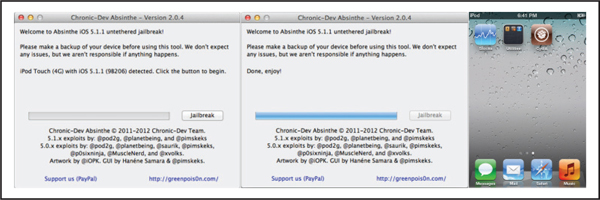

Jailbreaking an iOS 5.x device with the corona/absinthe jailbreak tool is generally a piece of cake. The main prerequisite is to have a supported device, such as an iPhone 4, iPod 4G, iPad 1, iPhone 4S, iPad 2, or iPad 3, running iOS 5.1.1. You simply connect your device to your computer, launch the Absinthe app, click the Jailbreak button, and wait for the magic to happen, as shown in Figure 3-6.

Figure 3-6 From left to right, Absinthe on startup, at completion, and with the addition of Cydia to the device’s SpringBoard

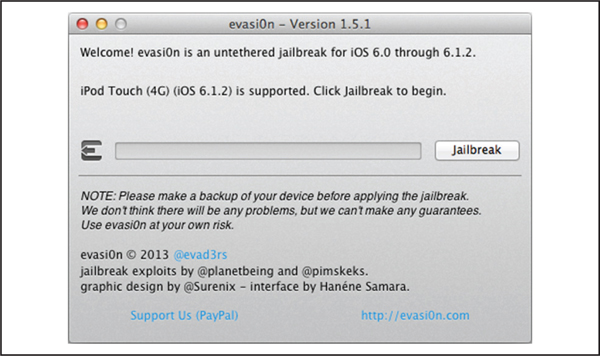

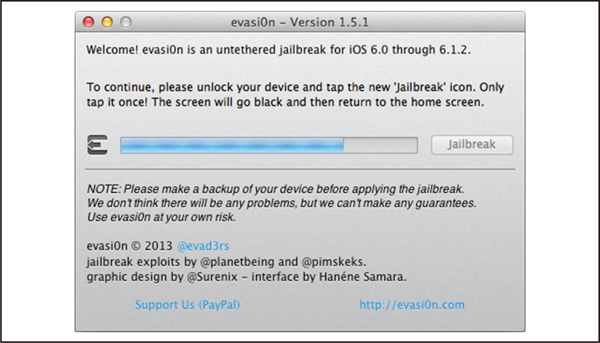

The evasi0n jailbreak was released in early 2013. After nearly a year, evasi0n gave us the capability to jailbreak devices running iOS 6.x, including the iPhone 5, iPod 5, iPad 4, and iPad mini. Using evasi0n is similar to using other jailbreak tools. Connect your device, begin the jailbreak process, and wait for it to complete. One small difference is that about two-thirds of the way through the process, you have to unlock your device’s display and manually tap an icon one time to complete the jailbreak.

You can see the evasi0n app’s interface in Figure 3-7. You need only click the Jailbreak button to get things started.

Figure 3-7 The evasi0n app’s interface

In Figure 3-8, the user is prompted to unlock his or her device and tap the new Jailbreak icon (one time only!).

Figure 3-8 The evasi0n app prompting the user to tap the Jailbreak icon

Figure 3-9 shows the Jailbreak icon that you need to tap. One tap is all it takes to continue with the jailbreaking process.

Figure 3-9 Tapping the Jailbreak icon

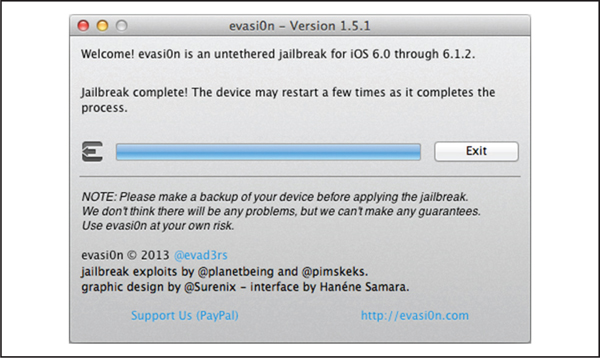

Finally, Figure 3-10 shows the evasi0n app’s interface indicating that the jailbreak has been completed successfully. At this point, you can unlock your device and scroll over to find the beloved Cydia icon!

Figure 3-10 All done! The user’s device is now jailbroken!
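The tools covered in this section each target a particular range of iOS versions, so the first question when picking one is which version the device runs. The Python sketch below encodes only the pairings stated in this chapter (JailbreakMe 3.0 for iOS 4.3.3 and earlier, Absinthe for iOS 5.x, evasi0n for iOS 6.x); it is a summary aid, not a complete compatibility table, and it ignores the device model, which also matters. Note that versions are compared as tuples of integers, because a plain string comparison would sort "4.10" before "4.3.3".

```python
def parse_version(text):
    """Turn a dotted version such as '4.3.3' into (4, 3, 3)."""
    return tuple(int(part) for part in text.split("."))


def suggest_jailbreak(ios_version):
    """Return the tool this chapter pairs with the given iOS version.

    Returns None when the chapter names no version-specific tool; a
    boot-based tool such as Redsn0w may still apply to older devices.
    """
    version = parse_version(ios_version)
    if version <= (4, 3, 3):
        return "JailbreakMe 3.0"
    if version[0] == 5:
        return "Absinthe"
    if version[0] == 6:
        return "evasi0n"
    return None


for candidate in ("4.3.3", "5.1.1", "6.1", "4.3.5"):
    print(candidate, "->", suggest_jailbreak(candidate))
```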

Up to this point, we’ve talked about a number of things that we can do to unleash the full functionality of an iPhone through jailbreaking. Now let’s shift our attention in a new direction. Instead of focusing on how to hack into our own iPhone, let’s look into how we might go about hacking into someone else’s device.

In this section, we look at a variety of incidents, demos, and issues related to gaining access to iOS-based devices. We’ve seen that when targeting iOS, the options available for carrying out a successful attack are limited relative to other platforms. iOS has a minimal network profile, making remote network-based attacks largely inapplicable. Jailbroken devices, when running older or misconfigured network services, do face some risk when connected to the network. However, as jailbroken devices make up a somewhat small percentage of the total number of devices online, the presence of these services can’t be relied on as a general method for attack. In some ways, iOS has followed the trend of desktop client operating systems such as Windows in disabling access to most or all network services by default. A major difference is that, unlike Windows, network services are not later reenabled for interoperability with file sharing or other services. This means that, for all intents and purposes, approaching iOS from the remote network side to gain access is a difficult proposition.
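One quick way to see a device's network profile for yourself is to check which TCP ports accept connections. The Python sketch below tries a plain TCP connect to each port in a list and reports the ones that answer. The ports in the usage comment are the conventional defaults for SSH (22) and VNC (5900), the two remote access services mentioned earlier as typical jailbreak additions; the address shown is a placeholder, and the check should only be run against devices you own or are authorized to test.

```python
import socket


def open_tcp_ports(host, ports, timeout=1.0):
    """Return the subset of ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        try:
            # A completed handshake means something is listening.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            # Refused, filtered, or timed out: treat as not reachable.
            pass
    return found


# Example usage against your own jailbroken test device
# (192.0.2.10 is a documentation placeholder address):
#   print(open_tcp_ports("192.0.2.10", [22, 5900]))
```

An empty result is what you would expect from a stock device for these two ports; a jailbroken device that answers on 22 or 5900 is running exactly the kind of added service described above.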

Of course, an attacker has other options available, aside from traditional remote network-based attacks. Most of these options depend on some combination of exploiting client-side vulnerabilities, local network access, or physical access to a device. The viability of local network- or physical access–based attacks depends heavily on the target in question. Local network-based attacks can be useful if the goal is simply to affect any vulnerable system connected to the local network. Bringing a malicious WAP online at an airport, coffee shop, or any other point with heavy foot traffic where WiFi is frequently used could be one way to launch an attack of this sort. If a particular user or organization is the target, then an attacker first needs to gain remote access to the local network to which the target device is connected or, alternatively, be within physical proximity of the target user in order to connect to a shared, unsecured wireless network, or else lure the user into connecting to a malicious WAP. In both cases, the barrier to entry is high and the likelihood of success is reduced, as gaining remote access to a particular local network or luring a target user onto a specific wireless network is complicated at best.

An attacker with physical access to a device has a broader set of options available. With the capability to perform a boot-based jailbreak on some iPhone models, to access the file system, and to mount attacks against the keychain as well as other protective mechanisms, the likelihood of successfully extracting information from a device becomes higher. However, coming into physical possession of a device is a challenge as it implies physical proximity and theft. For these reasons, physical attacks on a device deserve serious consideration, given the fact that one’s own device could easily be lost or stolen, but they are somewhat impractical from the perspective of developing a general set of tools and methodologies for hacking into iOS-based devices.

The practical options left to an attacker generally come down to client-side attacks. Client-side attacks have been found time and again in apps bundled with iOS, in particular, in Mobile Safari. With the list of known vulnerabilities affecting these apps and other components, an attacker has at his or her disposal a variety of options from which to choose when targeting an iPhone for attack. The version of iOS running on a device plays a significant role as it relates to the ease with which a device can be owned. In general, the older the version of iOS, the easier it is to gain access. As far as launching attacks, methods available are similar to those for desktop operating systems, including hosting malicious files on web servers or delivering them via email. Attacks are not limited to apps bundled with iOS but can also be extended to third-party apps. Vulnerabilities found and reported in third-party apps serve to demonstrate that vectors for attack do exist beyond what ships by default with iOS. With the ever-growing number of apps available via the App Store, as well as via alternative markets such as the Cydia Store, it is reasonable to assume that app vulnerabilities and client-side attacks, in general, will continue to be one of the primary vectors for gaining initial access to iOS-based devices.

Gaining initial access to iOS by exploiting app vulnerabilities may meet an attacker’s requirements if his or her motive for the attack is to obtain information accessible within the app’s sandbox. If an attacker wants to gain full control over a device, then the barrier to entry increases significantly. The first step in this process, after having gained control over an app, is to break out of the sandbox by exploiting a kernel-level vulnerability. As kernel-level vulnerabilities are few and far between, and as the skill level required to find and groom these issues into reliable, working exploits is a capability that few possess, we can say that breaking out of the sandbox with a new kernel-level exploit is much easier said than done. This is particularly the case when targeting iOS 6, as ASLR has been implemented in this version of the operating system at the kernel level as well, making the kernel even more difficult to attack. For most attackers, a more viable approach is simply to wait for exploits to appear and to repurpose them so they can target users during the period in which no update has been released to fix the vulnerability or to target users running older versions of iOS.

As a final note before we look at some specific attack examples, it’s worth mentioning that in comparison to other platforms, relatively few tools exist expressly for the purpose of gaining unauthorized access to iOS. The majority of security-related tools made available for iOS center around jailbreaking (which is effectively authorized activity, assuming it’s implemented by the device’s consenting owner or his/her delegate). Many of these tools can serve a dual purpose. For example, boot-based jailbreaks can be used to gain access to a device when an attacker has physical possession of it. Similarly, exploits picked up from jailbreakme.com, more recent jailbreaks, or other sources can be repurposed to gain access to devices connected to a network. In general, when targeting iOS for malicious purposes, an attacker is left to repurpose existing tools “for bad,” or to invest copious amounts of time developing new techniques and tools from scratch.

OK, now that we’ve taken the 50,000-foot view, let’s drill into some specific attack examples.

The JailbreakMe3.0 Vulnerabilities

We’ve already seen some of the most popular iOS attacks to date: the vulnerabilities exploited to jailbreak iPhones. Although these are generally exploited “locally” during the jailbreak process, there is nothing to stop enterprising attackers from exploiting similar vulnerabilities remotely, for example, by crafting a malicious document that contains an exploit capable of taking control of the application into which it is loaded. The document can then be distributed to users via a website, email, chat, or some other frequently used medium. In the PC world, this method of attack has served as the basis for a number of malware infections and intrusions in recent years. iOS, despite being fairly safe from remote network attack and despite boasting an advanced security architecture, has shown some weakness in dealing with these kinds of attacks as well.

The foundation for such an attack is best demonstrated by the JailbreakMe 3.0 (or JBME3.0) example discussed earlier in the chapter. There, you learned that JBME3.0 exploits two vulnerabilities: one a PDF bug, the other a kernel bug. Apple’s security bulletin for iOS 4.3.4 (support.apple.com/kb/HT4802) gives us a bit more detail about the two vulnerabilities. The first issue, CVE-2011-0226, is described as a FreeType Type 1 font-handling bug that could lead to arbitrary code execution. The inferred vector is the inclusion of a specially crafted Type 1 font in a PDF file that, when loaded, leads to the aforementioned code execution. The second issue, CVE-2011-0227, is described as an invalid type conversion bug affecting IOMobileFrameBuffer that could lead to the execution of arbitrary code with system-level privileges.

For an excellent write-up on the mechanics of CVE-2011-0226, take a look at esec-lab.sogeti.com/post/Analysis-of-the-jailbreakme-v3-font-exploit.

The initial vector for exploitation is the loading of a specially crafted PDF into Mobile Safari. At this point, a vulnerability is triggered in code responsible for parsing the document, after which the exploit logic contained within the corrupted PDF is able to take control of the app. From this point, the exploit continues on to exploit a kernel-level vulnerability and, ultimately, to take full control of the device. For the casual user looking to jailbreak his or her iPhone, this is no big deal. However, for the security-minded individual, the fact that this is possible should raise some eyebrows. If the JBME3.0 technique can leverage a pair of vulnerabilities to take full control of a device, what’s to stop a technique similar to this from being used for malicious purposes? For better or for worse, the answer is—not much.

Apple released iOS 4.3.4 in July 2011 to remedy the issues exploited by JBME3.0. Most devices are no longer running vulnerable versions of iOS (4.3.3 and below) and are not susceptible to this attack vector.

JBME3.0 Vulnerability Countermeasures

Despite our techie infatuation with jailbreaking, keeping your operating system and software updated with the latest patches is a security best practice, and jailbreaking makes that difficult or dicey on many fronts. One, you have to keep iOS vulnerable for the jailbreak to work, and two, once the system is jailbroken, you can’t obtain official updates from Apple that patch those vulnerabilities and any others subsequently discovered. Unless you’re willing to re-jailbreak your phone every time a new update comes out, or get your patches from unofficial sources, we recommend you keep your device “stock” and install over-the-air iOS updates as soon as they become available (over-the-air update support arrived with iOS 5, and iOS 5.0.1 was the first update delivered that way). Also remember to update your apps regularly (you’ll see the notification bubble on the App Store when updates are available for your installed apps).
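
The "4.3.3 and below" cutoff mentioned above is easy to get wrong if version strings are compared as text, so a small worked example may help. The following is a minimal Python sketch, assuming only the cutoff stated in this section; the function names are hypothetical, and the check does not account for any device-specific builds.

```python
def parse_version(text):
    """Turn a dotted version string such as '4.3.3' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


# First release that this section lists as fixing the JBME3.0 issues.
PATCHED = parse_version("4.3.4")


def is_exposed_to_jbme3(ios_version):
    """Return True when the given version predates the 4.3.4 fix."""
    # Tuples compare element by element, so (4, 10) sorts after (4, 3, 4),
    # which a plain string comparison would get wrong.
    return parse_version(ios_version) < PATCHED


if __name__ == "__main__":
    for version in ("4.2.1", "4.3.3", "4.3.4", "5.0.1"):
        print(version, is_exposed_to_jbme3(version))
```

Comparing integer tuples rather than raw strings is the main design choice here; it keeps multi-digit components such as "4.10" in the right order.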

iKee Attacks!

The year: 2009. The place: Australia. You’ve recently purchased an iPhone 3GS and are eager to unlock its true potential. To this end, you connect your phone to your computer via USB, fire up your trusty jailbreak application and, click, you now have a jailbroken iPhone! Of course, the first thing to do is launch Cydia and then install OpenSSH. Why have a jailbroken phone if you can’t get to the command line, right? From this point, you continue to install your favorite tools and apps: vim, gcc, gdb, nmap, and so on. An interesting program appears on TV. You set your phone down to watch for a bit, forgetting to change the default password for the root account. Later you pick it up, swipe to unlock, and to your delight find that the wallpaper for your device has been changed to a mid-1980s photo of the British pop singer Rick Astley (see Figure 3-11). You’ve just been rickrolled! Oh noes!

In November 2009, the first worm targeting iOS was observed in the wild. This worm, known as iKee, functioned by scanning IP blocks assigned to telecom providers in the Netherlands and Australia. The scan logic was straightforward: identify devices with TCP port 22 open (SSH), and then attempt to log in with the default credentials “root” and “alpine” (which is the default login for jailbroken iPhones). Variants such as iKee.A took a few basic actions on login: disabling the SSH server that was used to gain access, changing the wallpaper for the phone, and making a local copy of the worm binary. From this point, infected devices were used to scan for and infect other devices. Later variants such as iKee.B introduced botnet-like functionality, including the capability to control infected devices remotely via a command and control channel.

iKee marked an interesting milestone in the history of security issues affecting the iPhone. It was and continues to be the first and only publicly released, clear-cut, non-proof-of-concept example of malware successfully targeting iOS. Although it leveraged a basic configuration weakness, and although the functionality of early variants was relatively benign, it nonetheless served to demonstrate that iOS does face real-world threats and that it is, indeed, susceptible to attack.

You can obtain the source code for the iKee worm, as originally published in November of 2009, from pastie.org/693452.

While iKee proved that iOS can, under certain circumstances, be hacked into remotely, it doesn’t necessarily indicate an inherent vulnerability in iOS. In fact, the opposite is probably a fairer case to make. iOS is a Unix-like operating system, related in architecture to Mac OS X. This means the platform can be attacked in a manner similar to how you would attack other Unix-like operating systems. Options for launching an attack include, but are not limited to, remote network attacks involving the exploitation of vulnerable network services; client-side attacks, including exploitation of app vulnerabilities; local network attacks, such as man-in-the-middling (MiTM) of network traffic; and physical attacks that depend on physical access to a target device. Note, however, that certain iOS characteristics make some of these techniques less effective than they are on most other platforms.

For example, the network profile for a fresh out-of-the-box iPhone leaves very little to work with. Only one TCP port, 62087, is left open. No known attacks have been found for this service, and although this is not to say that none will ever be found, it is safe to say that the overall network profile for iOS is quite minimal. In practice, gaining unauthorized access to an iPhone (that has not been jailbroken) from a remote network is close to impossible. None of the standard services that we’re accustomed to targeting during pen tests, such as SSH, HTTP, and SMB, are to be found, leaving little in terms of an attack surface. Hats off to Apple for providing a secure configuration for the iPhone in this regard.
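
One way to confirm the network profile of a device you own is a plain TCP connect check. The sketch below is a minimal Python example and not a tool referenced in this chapter; the function name `open_tcp_ports` and the address in the usage note are hypothetical, and it should only be pointed at devices you are authorized to test.

```python
import socket


def open_tcp_ports(host, ports, timeout=1.0):
    """Return the ports from `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        try:
            # A completed three-way handshake means something is listening.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            # Refused, filtered, or timed out: treat the port as closed.
            pass
    return found
```

For example, `open_tcp_ports("192.168.1.50", [22, 80, 445, 62087])` would report whether SSH, HTTP, SMB, or the port mentioned above answers on a device at that (hypothetical) address. Port 22 showing up on a phone is a strong hint that it has been jailbroken and has an SSH server installed.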

A few remote vulnerabilities have been seen, including one related to handling ICMP requests that could cause a device reset (CVE-2009-1683) and another identified by Charlie Miller in iOS’s processing of SMS (text) messages (CVE-2009-2204). Other potential areas for exploitation that may gain more attention in the future include Bonjour support on the local network and other radio interfaces on the device, including baseband, the Wi-Fi driver, Bluetooth, and so on.

Remember, however, mobile devices can be attacked remotely via their IP network interface, as well as their cellular network interface.

Of course, there are variables that affect iOS’s vulnerability to remote network attack. If a device is jailbroken and if services such as SSH have been installed, then the attack surface is increased (as iKee aptly demonstrates). User-installed apps may also listen on the network, further increasing the risk of remote attack. However, as they are generally only executed for short periods of time, they are not a reliable means for gaining remote access to a device. This could change in the future, as only a limited amount of research has been published related to app vulnerabilities exploitable from the network side and as there may be useful vulnerabilities still to be found.

Statistics published in 2009 by Pinch Media indicate that between 5 and 10 percent of users had jailbroken their devices. A post to the iPhone dev-team blog in January 2012 indicated that nearly 1 million iPad 2 and iPhone 4S (A5) users had jailbroken their devices in the three days following the release of the first jailbreak for that hardware platform. Data published by TechCrunch in early 2013 indicates that about 22 million jailbroken device users are actively using Cydia, which can be interpreted to be about 5 percent of the total iOS user base.

iKee Worm/SSH Default Credentials Countermeasures

The iKee worm was, at its root, possible only because misconfigured jailbroken iPhones were connected to the network. The first and most obvious countermeasure to an attack of this sort is: don’t jailbreak your iPhone! OK, if you must, change the default credentials for a jailbroken device immediately after installing SSH, and do so only while connected to a trusted network. In addition, network services like SSH should only be enabled when they are needed. Utilities such as SBSettings can be installed and used to enable or disable features like SSH quickly and easily from the Springboard. Otherwise, for jailbroken devices in general, upgrade to the latest jailbreakable version of iOS when possible, and install patches for vulnerabilities provided by the community as soon as practicable.

The FOCUS 11 Man-in-the-Middle Attack

In October 2011, at the McAfee FOCUS 11 conference held in Las Vegas, Stuart McClure and the McAfee TRACE team demonstrated a series of hacks that included the live hack of an iPad. The attack involved setting up a MacBook Pro laptop with two wireless network interfaces and then configuring one of the interfaces to serve as a malicious wireless access point (WAP). The WAP was given an SSID similar to the SSID for the conference’s legitimate WAP. They did this to show that users could easily be tricked into connecting to the malicious WAP.

The laptop was then configured to route all traffic from the malicious WAP through to the legitimate WAP. This gave tools running on the laptop the capability to man-in-the-middle traffic sent to or from the iPad. To make things a bit more interesting, support was added for man-in-the-middle of SSL connections, through an exploit for the CVE-2011-0228 X.509 certificate chain validation vulnerability, as reported by Trustwave SpiderLabs.
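
To see why a chain validation flaw makes SSL interception possible, it can help to look at one of the checks a validator is expected to perform. The following Python sketch is a toy model only: it skips signature verification, expiry, and revocation entirely, and the `Cert` type and `chain_is_plausible` function are hypothetical. Public advisories for CVE-2011-0228 attributed the flaw to a missing check of the basicConstraints extension; that detail goes beyond what is described above and is treated here as an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    is_ca: bool  # stands in for the basicConstraints CA flag


def chain_is_plausible(chain, trusted_roots):
    """Check a chain ordered leaf first, root last (toy model, no crypto)."""
    if not chain or chain[-1].subject not in trusted_roots:
        return False
    for child, parent in zip(chain, chain[1:]):
        # Each certificate must name the next one as its issuer.
        if child.issuer != parent.subject:
            return False
        # Only certificates marked as CAs may issue other certificates.
        if not parent.is_ca:
            return False
    return True
```

If the `is_ca` test is omitted, anyone holding an ordinary, legitimately issued certificate could use it to sign a certificate for any hostname, and the forged chain would still lead back to a trusted root.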

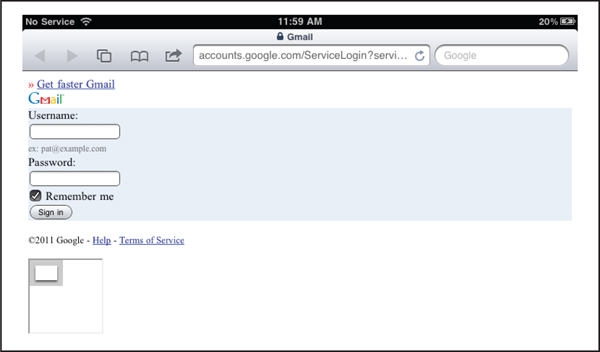

With this setup in place, the iPad was used to browse to Gmail over SSL. Gmail was loaded into the iPad’s browser, but with a new addition to the familiar interface—an iframe containing a link to a PDF capable of silently rooting the device, as shown in Figure 3-12. The PDF loaded was the same as the JBME3.0 PDF, but it was modified to avoid observable changes to the Springboard, such as the addition of the Cydia icon. The PDF was then used to load a custom freeze.tar.xz file, containing the post-jailbreak file and corresponding packages required to install SSH and VNC on the device.

Figure 3-12 A fake man-in-the-middle Gmail login page rendered on an iPhone with a JBME3.0 PDF embedded via iframe to “silently” root the device

The FOCUS 11 hack was designed to drive a couple of points home. Some people have the impression that the iPhone, or iPad in this case, is safe from attack. The demo was designed to underscore the fact that this is not the case and that it is possible to gain unauthorized access to iOS-based devices. The hack combined exploitation of the client-side vulnerabilities used by the JBME3.0 technique with an SSL certificate validation vulnerability and a local network-based attack to demonstrate that not only can iOS be hacked, but it can also be hacked in a variety of ways. In other words, breaking iOS is not a one-time thing, nor are there only a few limited ways to go about it; rather, sophisticated attacks involving the exploitation of multiple vulnerabilities are possible. Finally, the malicious WAP scenario demonstrated that the attack was not theoretical but rather quite practical. The same setup is something that could be easily reproduced, and the overall attack scenario is something that could be carried out in the real world.

FOCUS 11 Countermeasures

The FOCUS 11 attack leveraged a set of vulnerabilities and a malicious WAP to gain unauthorized access to a vulnerable device. The fact that several basic operating system components were subverted leaves little in the way of technical countermeasures that could have been implemented to prevent the attack.

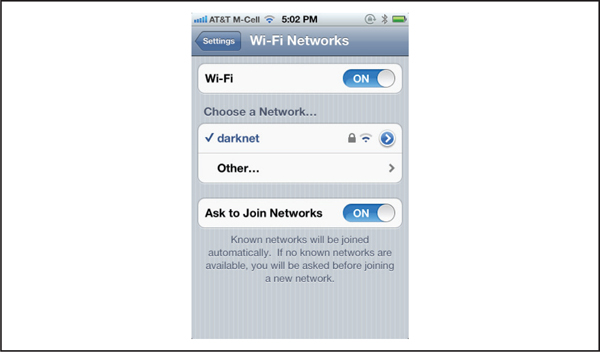

The first step to take to prevent this particular attack is to update your device and to keep it up to date, as outlined in “JBME3.0 Vulnerability Countermeasures.” Another simple countermeasure is to configure your iOS device to Ask To Join Networks, as shown in Figure 3-13. Your device will still join known networks automatically, but you will be asked before joining new, unknown networks, which at least gives you a chance to decide if you want to connect to a potentially malicious network. Yes, the FOCUS 11 hack used a WiFi network name that looked “friendly”; perhaps a corollary piece of advice is: don’t connect to unknown wireless networks. The likelihood of anyone actually following that advice nowadays is, of course, near zero (how else are you going to check Facebook while at Starbucks?!?), but hey, we warned you!

Figure 3-13 Setting an iPhone to Ask To Join Networks
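
Because the malicious WAP relied on a name that merely resembled the legitimate one, a simple similarity check can illustrate what a "look-alike" SSID means in practice. The sketch below is a minimal Python example using the standard library's `difflib`; the function name, the 0.8 threshold, and the network names in the usage note are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def lookalike_ssids(visible, trusted, threshold=0.8):
    """Return (visible, trusted) pairs that are similar but not identical."""
    hits = []
    for seen in visible:
        for known in trusted:
            if seen == known:
                continue  # an exact match is the network we already trust
            ratio = SequenceMatcher(None, seen.lower(), known.lower()).ratio()
            if ratio >= threshold:
                hits.append((seen, known))
    return hits
```

Calling `lookalike_ssids(["Conference-WiFi", "HomeNet-5G"], ["ConferenceWiFi"])` flags only the first name. Note that this catches near misses only: an attacker who broadcasts exactly the same SSID as the legitimate network cannot be spotted by name comparison at all.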

Assuming network connectivity is likely irresistible on a mobile device, defending against this sort of attack ultimately boils down to evaluating the value of data stored on a device. For example, if a device will never process sensitive data, or be placed in the position of having access to such data, then there is little risk from a compromise. As such, connecting to untrusted wireless networks and accessing the Web or other resources is basically fine. For a device that processes sensitive data, or that could be used as a launching point for attacks against systems that store or process sensitive data, much greater care should be taken. Of course, keeping sensitive data completely off a mobile device can be harder than we’ve laid out here; email, apps, and web browsing are just a few examples of channels through which sensitive data can “leak” onto a system.

In any case, the FOCUS 11 demo showed that by simply connecting to a wireless network and browsing to a web page it was possible to take complete control of a device. This was possible even over SSL. As such, users should register the fact that this can happen and should judge carefully what networks they connect to, to avoid putting their devices or sensitive information at risk.

Malicious Apps: Handy Light, InstaStock

Other client-side methods can, of course, be used to gain unauthorized access to iOS. One of the most obvious, yet more complicated, methods of attack involves tricking a user into installing a malicious app onto his or her device. The challenge in this case is not limited to tricking the user; it also involves working around Apple’s app distribution model. Earlier in the chapter, we mentioned that Apple added support for installing third-party apps shortly after introducing the iPhone. Apple chose to implement this as a strictly controlled ecosystem, whereby all apps must be signed by Apple and can only be distributed and downloaded from the official App Store. For an app to be made available on the App Store, it must first be submitted to Apple for review. If issues are found during the review process, the submission is rejected, after which point it’s simply not possible to distribute the app (at least, to non-jailbroken iPhone users).

Apple does not publicly document all of the specifics of its review process. As such, there is a lack of clarity in terms of what it checks when reviewing an app. In particular, there is little information on what checking is done to determine whether or not an app is malicious. It is true that little in the way of “malware” has made it to release on the App Store. A few apps leaking sensitive information such as telephone numbers, contact information, or other device or user-specific information have been identified and pulled from sale. This might lead you to think that although the details of the review process are unknown, it must be effective; otherwise, we would be seeing reports of malware on a regular basis. This might be a reasonable conclusion, if not for a few real-world examples that call into question the effectiveness of the review process from a security perspective, as well as the overall idea that malware can’t be or is not already present on the App Store.

In mid-2010, a new app named Handy Light was submitted to Apple for review, passed the review process, and was later posted to the App Store for sale. This app appeared on the surface to be a simple flashlight app, with a few options for selecting the color of the light to be displayed. Shortly after release, it was discovered that the Handy Light app included a hidden tethering feature. This feature allowed users to tap the flashlight color options in a particular order that then launched a SOCKS proxy server on the phone that could be used to tether a computer to the phone’s cellular Internet connection. Once the presence of this feature became public, Apple removed the app from sale. Apple did this because it does not allow apps that include support for tethering to be posted to the App Store.

What’s interesting in all of this is that Apple, after having reviewed Handy Light, approved the app despite the fact that it included the tethering feature. Why did Apple do this? We have to assume that because the tethering functionality was hidden, it was simply missed during the review process. Fair enough, mistakes happen. However, if functionality such as tethering can be hidden and slipped by the review process, what’s to stop other, more malicious functionality from being hidden and slipped by the review process as well?

In September 2011, well-known iOS hacker Charlie Miller submitted an app named InstaStock to Apple for review. The app was reviewed, approved, and then posted to the App Store for download. InstaStock ostensibly allowed users to track stock tickers in real time and was reportedly downloaded by several hundred users. Hidden within InstaStock, however, was logic designed to exploit a 0-day vulnerability in iOS that allowed the app to load and execute unsigned code. Owing to iOS’s runtime code signature validation, this should not have been possible. However, with iOS 4.3, Apple introduced the functionality required for InstaStock to work its magic. In effect, Apple introduced the ability for unsigned code to be executed under a very limited set of circumstances. In theory, this capability was only for Mobile Safari and only for the purpose of enabling Just in Time (JIT) compilation of JavaScript. As it turns out, an implementation error made this capability available to all apps, not just Mobile Safari. This vulnerability, now documented as CVE-2011-3442, made it possible for the InstaStock app to call the mmap system call with a particular set of flags, ultimately resulting in the capability to bypass code signature validation. Given the capability to execute unsigned code, the InstaStock app was able to connect back to a command and control server, to receive and execute commands, and to perform a variety of actions such as downloading images and contact information from “infected” devices. Figure 3-14 shows the InstaStock app.

Figure 3-14 The InstaStock app written by Charlie Miller, which hid functionality to execute arbitrary code on iOS

In terms of attacking iOS, the Handy Light and InstaStock apps provide us with proof that mounting an attack via the App Store is, although not easy, also not impossible. There are many unknowns related to this type of attack. It must be assumed that Apple is working to improve its review process, and that as time passes, it will become more difficult to successfully hide malicious functionality. It is also unclear what exactly can be slipped past the process. In the case of the InstaStock app, as a previously unknown vulnerability was leveraged, there was most likely little in the way of observably malicious code included in the app that was submitted for review. Absent a 0-day, more code would need to be included directly in the app, making it more likely that the app would be flagged during the review process and then rejected.

An attacker could go through this trouble, and might do so if his or her goal is simply to gain access to as many devices as possible. The imprecise but broad distribution of apps available on the App Store could prove to be a tempting vector for spreading malicious apps. However, if an attacker were interested in targeting a particular user, then attacking via the App Store would become a more complex proposition. The attacker would have to build a malicious app, slip it past the review process, and then find a way to trick the target user into installing the app on his or her device. An attacker could combine some social engineering, perhaps by pulling data from the user’s Facebook page and then building an app tailored to the target’s likes and dislikes. The app could then be posted for sale, with an itms:// link being sent to the intended target via a Facebook wall post. It doesn’t require much effort to dream up a number of such scenarios, making it likely that we’ll see something similar to all of this in the not-too-distant future.

App Store Malware Countermeasures

The gist of the Handy Light and InstaStock examples is that unwanted or malicious behavior can be slipped past review and onto Apple’s App Store. Although Apple would surely prefer this not to be the case, and would most likely prefer that people not consider themselves to be at risk because of what they download from the App Store, nonetheless, some level of risk is present. As in the FOCUS 11 case, countermeasures or protections that can be put in place related to unwanted or malicious apps hosted on the App Store are few to none. As Apple does not allow security products that integrate with the operating system to be installed on devices, no vendors have yet found a way to develop and bring such products to market. Furthermore, few products or tools have been developed for iOS security in general (for use on-device, the network, or otherwise), owing to the low number of incidents and the complexity of successfully integrating such products into the iOS “ecosystem.” This means that, for the most part, you can’t protect yourself from malicious apps hosted on the App Store, apart from careful consideration when purchasing and installing apps. A user can feel relatively comfortable that most apps are safe, as next to no malware has been found and published to date. Apps from reputable vendors are also likely to be safe and can most likely be installed without issue. For users who store highly sensitive data on their devices, it is recommended that apps be installed only when truly necessary, and only from trustworthy vendors, to whatever degree possible. Otherwise, install the latest firmware when possible, as new firmware versions often resolve issues that could be used by malware to gain elevated privileges on a device (for example, the JBME3.0 kernel exploit or the InstaStock unsigned code execution issue).

Vulnerable Apps: Bundled and Third Party

In the early 2000s, the bread-and-butter technique for attackers was remote exploitation of vulnerable network service code. On an almost weekly basis, it seemed like a new remote execution bug was discovered in some popular Unix or Windows network service. During this time, consumer operating systems such as Windows XP shipped with no host firewall and a number of network services enabled by default. This combination of factors led to relatively easy intrusion into arbitrary systems over the network. As time passed, vendors began to take security more seriously and invest in locking down network service code as well as the default configurations for client operating systems. By the late 2000s, security in this regard had taken a notable turn for the better. In reaction to this tightening of security, vulnerability research began to shift to other areas, including, in particular, to client-side vulnerabilities. From the mid-2000s on, a large number of issues were uncovered in popular client applications such as Internet Explorer, Microsoft Office, Adobe Reader and Flash, the Java runtime, and QuickTime. Client application vulnerabilities such as these were then leveraged to spread malware or to target particular users as in the case of spear phishing or Advanced Persistent Threat (APT)–style attacks.

Interestingly, for mobile platforms such as iOS, although nearly no remote network attacks have been observed, neither has substantial research been performed in the area of third-party app risk. This is not to say that app vulnerability research has not been performed, as many critical issues have been identified in apps bundled with iOS, including, most notably, a number of issues affecting Mobile Safari. We can say, however, that for unbundled apps, only a handful of issues have been identified and published. This could be explained, in part, by the fact that few third-party apps have been adopted as universally as something like Flash on Windows, so there has simply been little incentive to spend time poking around in this area.

In any event, app vulnerabilities serve as one of the most practical vectors for gaining unauthorized access to iOS-based devices. Over the years, a number of app vulnerabilities affecting iOS have been discovered and reported. A quick Internet search turns up nearly 100 vulnerabilities affecting iOS. Of these issues, a large percentage, nearly 40 percent, relate in one way or another to the Mobile Safari browser. When considering Mobile Safari only, we find 30 to 40 different weaknesses that can be targeted to extract information from, or gain access to, a device (depending on the version of iOS being run on the device). Many of these weaknesses are critical in nature and allow for arbitrary code execution when exploited.

Aside from apps that ship with iOS by default, some vulnerabilities have been identified and reported as affecting third-party apps. In 2010, an issue, now documented as CVE-2010-2913, was reported as affecting the Citi Mobile app versions 2.0.2 and below. The gist of the finding was that the app stored sensitive banking-related information locally on the device. If the device were to be remotely compromised, lost, or stolen, then the sensitive information could be extracted from the device. This vulnerability did not provide remote access and was quite low in severity, but it does help to illustrate the point that third-party apps for iOS, like their desktop counterparts, can suffer from poor security-related design.

Another third-party app vulnerability, now documented as CVE-2010-4211, was reported in November 2010. This time, the PayPal app was reported as being affected by an X.509 certificate validation issue. In effect, the app did not validate that server hostname values matched the subject field in X.509 server certificates received for SSL connections. This weakness allowed an attacker with local network access to man-in-the-middle users in order to obtain or modify traffic sent to or from the app. This vulnerability was more serious than the Citi Mobile vulnerability in that it could be leveraged via local network access and without having to first take control of the app or device. The requirement for local network access, however, made exploitation of the issue difficult in practice.
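The missing check described above can be sketched in a few lines. The following Python example is only an illustration of the idea, not the PayPal app's code or any platform's actual matching algorithm: `hostname_matches` and `strict_client_context` are hypothetical helper names, the matching rules are deliberately simplified, and the hostnames in the usage note are placeholders.

```python
import ssl


def hostname_matches(cert_name: str, hostname: str) -> bool:
    """Simplified comparison of one certificate name against the requested host.

    Supports exact matches and a single leading wildcard label
    ("*.example.com"). Real TLS stacks apply additional rules; this only
    illustrates the kind of check that was skipped.
    """
    cert_name = cert_name.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if cert_name.startswith("*."):
        # The wildcard stands in for exactly one leftmost label.
        suffix = cert_name[1:]  # e.g. ".example.com"
        head, sep, rest = hostname.partition(".")
        return bool(head) and bool(sep) and "." + rest == suffix
    return cert_name == hostname


def strict_client_context() -> ssl.SSLContext:
    """Build a client context that verifies both the chain and the hostname."""
    context = ssl.create_default_context()
    # Both settings are already the defaults; they are spelled out because
    # disabling either one recreates the class of weakness described above.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context
```

With this sketch, `hostname_matches("www.example.com", "evil.example.net")` is false, so a certificate that is valid for some other site would be rejected rather than silently accepted. In practice a client should rely on the TLS library's built-in verification, as `strict_client_context` does, rather than on hand-written matching.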

In September 2011, a cross-site scripting vulnerability was reported as affecting the Skype app, versions 3.0.1 and below. This vulnerability made it possible for an attacker to access the file system of Skype app users by embedding JavaScript code into the “Full Name” field of messages sent to users. Upon receipt of a message, the embedded JavaScript was executed and, when combined with an issue related to handling of URI schemes, allowed an attacker to grab files, such as the contacts database, and upload them to a remote system. This vulnerability is of particular interest because it is one of the first examples of a third-party app vulnerability that could be exploited remotely, without requiring local network or physical access to a device.
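The root cause of this class of issue is that attacker-supplied text is placed into an HTML view without being escaped. As a minimal sketch, assuming a view that builds markup from a sender-supplied name (the function name `render_display_name` and the `sender` class are hypothetical and are not taken from the Skype app), output escaping in Python looks like this:

```python
import html


def render_display_name(full_name: str) -> str:
    """Return an HTML fragment with the sender-supplied name escaped."""
    # html.escape converts <, >, &, and quotes into entities so the value is
    # displayed as text instead of being interpreted as markup.
    return '<span class="sender">' + html.escape(full_name, quote=True) + "</span>"
```

A name such as `<script>alert(1)</script>` is then rendered as literal text, because the angle brackets become `&lt;` and `&gt;` before the fragment reaches the rendering engine.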

In April 2012, it was reported that multiple popular apps for iOS, including the Facebook app and the Dropbox app, were affected by a vulnerability that resulted in values used for authentication being stored on the local device without further protection. It was demonstrated that an attacker could attach to a device using an application such as iExplorer, browse the device’s file system, and copy these files. The attacker could then copy these files to another device and log in using the “borrowed” credentials.

In November 2012, it was reported that the Instagram app version 3.1.2 for iOS was affected by an information disclosure vulnerability. This vulnerability allowed an attacker who had the ability to man-in-the-middle a device’s network connection to capture session information that could then be reused to retrieve or delete data.

In January 2013, it was reported that the ESPN ScoreCenter app version 3.0.0 for iOS was affected by not one but two issues: an XSS vulnerability as well as a cleartext authentication vulnerability. In effect, the app was not sanitizing user input and was also passing sensitive values, including usernames and passwords, over the network unencrypted.

It’s worth mentioning that, whether targeting apps included with iOS or third-party apps installed after the fact, gaining control over an app is only half the battle when it comes to hacking into an iPhone. Because of restrictions imposed by app sandboxing and code signature verification, even after successfully owning an app, obtaining information from the target device and making the attack persist across app executions are both more difficult than they have traditionally been in the desktop application world. To truly own an iPhone, attackers must combine app-level attacks with the exploitation of kernel-level vulnerabilities. This sets the barrier to entry fairly high for those looking to break into iOS. The average attacker will most likely attempt to repurpose existing kernel-level exploits, whereas more sophisticated attackers will most likely attempt to develop kernel-level exploits for yet-to-be-identified issues. In either case, the apps included by default with iOS, combined with the 800,000+ apps available for download on the App Store, provide an attack surface large enough to ensure that exploitation of app vulnerabilities will continue to be a reliable way to gain initial access to iOS-based devices for some time to come.

App Vulnerability Countermeasures

In the case of app vulnerabilities, countermeasures come down to the basics: keep your device updated with the latest version of iOS, and keep apps updated to their latest versions. In general, as vulnerabilities in apps are reported, vendors update them and release fixed versions. It may be a bit difficult to track when issues are found, or when they are resolved via updates, so the safe bet is simply to keep iOS and all installed apps as up to date as possible.

Physical Access

No discussion of iPhone hacking would be complete without considering the options available to an attacker who comes into physical possession of a device. In fact, in some ways, this topic is now much more relevant than in the past: with the migration to sophisticated smartphones such as the iPhone, more of the sensitive data previously stored and processed on laptops or desktop systems is now being carried out of the safe confines of the office or home and into all aspects of daily life. The average person, employee, or executive is now routinely glued to his or her smartphone, whether checking and sending email or receiving and reviewing documents. Depending on the person and his or her role, the information being processed, from contacts to PowerPoint documents to sensitive internal email messages, could damage the owner or owning organization if it were to fall into the wrong hands. At the same time, this information is being carried into every sort of situation or place that one can imagine. For example, it’s not uncommon to see an executive sending and receiving email while out for dinner with clients. A few too many cervezas, and the phone might just be forgotten on the table or even lifted by an unscrupulous character during a moment of distraction.

Once a device falls into an attacker’s hands, it takes only a few minutes to gain access to the device’s file system and then to the sensitive data stored on the device. Take, for example, the demonstration produced by the researchers at the Fraunhofer Institute for Secure Information Technology (SIT). Staff from this organization published a paper in February 2011 outlining the steps required to gain access to sensitive passwords stored on an iPhone. The end-to-end process takes about six minutes and involves using a boot-based jailbreak to take control of a device in order to gain access to the file system, followed by installation of an SSH server. Once access is gained via SSH, a script is uploaded that, using only values obtained from the device, can be executed in order to dump passwords stored in the device’s keychain. As the keychain is used to store passwords for many important applications, such as the built-in email client, this attack allows an attacker to recover an initial set of credentials that he or she can then use to gain further access to assets belonging to the device’s owner. The specific values that can be obtained from the device depend, in large part, on the version of iOS installed. With older versions such as iOS 3.0, nearly all values can be recovered from the keychain. With iOS 5.0, Apple introduced additional security measures to minimize the amount of information that can be recovered. However, many values are still accessible, and this method continues to serve as a good example of what can be done when an attacker has physical access to an iPhone.

For more information on the attack described in this section, see sit.sit.fraunhofer.de/studies/en/sciphone-passwords.pdf and sc-iphone-passwords-faq.pdf.

An alternative and perhaps easier approach to recovering some data from an iPhone is to use an application such as iExplorer. iExplorer provides an easy-to-use point-and-click interface and can be used to browse portions of the file system for all existing iOS devices. You can simply install the application on your desktop or laptop computer, connect your iPhone, and begin poking around the device’s file system. While you won’t have full access to every portion of the file system, you can dig up some interesting data without having to resort to more sophisticated and time-consuming methods for gaining access.

One last approach that might prove to be the easiest of all, depending on iOS version, is to simply hack around the iOS screen lock. In January 2013, a technique was published for bypassing the screen lock in iOS 6.0.1 through 6.1. The technique involves a series of button presses and screen swipes that ultimately results in access being granted to the phone app. From this screen, an attacker can review contacts and call history, and even place calls!

Physical Access Countermeasures

In the case of attacks involving the physical possession of a device, your options are fairly limited in terms of countermeasures. The primary defense that can be employed against this type of attack is to ensure that all sensitive data on the device has been encrypted. Options for encrypting data include using features provided by Apple, as well as support provided by third-party apps, including those from commercial vendors such as McAfee, Good, and so on. In addition, devices that store sensitive information should have a passcode at least six digits long set and in use at all times. This has the effect of strengthening the security of some values stored in the keychain and on the file system, as well as making brute-force attacks against the passcode more difficult to accomplish. Other options available to help thwart physical attacks on a device include the installation of software that can be used to remotely track the location of a device or to remotely wipe sensitive data.
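The benefit of a longer passcode is easy to quantify. The short Python sketch below compares the number of candidates an exhaustive search must cover for four-digit and six-digit numeric passcodes. The per-guess cost `seconds_per_guess` is a hypothetical parameter chosen by the reader, not a measured figure for any device or iOS version.

```python
def passcode_keyspace(digits: int) -> int:
    """Number of possible numeric passcodes of the given length."""
    return 10 ** digits


def worst_case_hours(digits: int, seconds_per_guess: float) -> float:
    """Hours needed to try every passcode at an assumed cost per guess."""
    return passcode_keyspace(digits) * seconds_per_guess / 3600


if __name__ == "__main__":
    for length in (4, 6):
        # 1.0 second per guess is an illustrative assumption only.
        print(length, passcode_keyspace(length), worst_case_hours(length, 1.0))
```

Whatever the real cost per guess turns out to be, moving from four digits to six multiplies the worst-case effort by a factor of 100, from 10,000 candidates to 1,000,000.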

You’d be forgiven for wanting to live “off the grid” after reading this chapter! It’s impossible to neatly summarize the many things we’ve discussed here, so we won’t belabor the point much further. Here are some key considerations for mobile security discussed in this chapter:

• Evaluate the purpose of your device and the data carried on it, and adapt your behavior and configuration to the purpose/data. For example, carry a separate device for sensitive business communications and activity, and configure it much more conservatively than you would a personal entertainment device.

• Enable device lock. Remember, all touch-screen-based unlock mechanisms might leave tell-tale smudges that can easily be seen, allowing someone to unlock your device easily (see pcworld.com/businesscenter/article/203060/smartphone_security_thwarted_by_fingerprint_smudges.html). Use screen wipes to clean your screen frequently, or use repeated digits in your unlock PIN to reduce information leakage from smudges (see skeletonkeysecurity.com/post/15012548814/pins-3-is-the-magic-number).

• Physical access remains the attack vector with the greatest probability of success. Keep physical control of your device, and enable wipe functionality as appropriate using local or remote features.

• Keep your device software up to date. Ideally, install over-the-air iOS updates as soon as they become available (over-the-air update support was introduced with iOS 5.0.1). Don’t forget to update your apps regularly as well!

• Unless it is used solely for entertainment/research (that is, high-value/sensitive data does not traverse the device), don’t root/jailbreak your device. Such privileged access circumvents the security measures implemented by the operating system and makes it difficult, sometimes prohibitively so, to keep software up to date on a regular basis. Many in-the-wild exploits have targeted out-of-date software/configurations on rooted/jailbroken devices.

• Configure your device to “Ask To Join Networks,” rather than automatically connecting. This prevents inadvertent connection to malicious wireless networks that can easily compromise your device at multiple layers.

• Be very selective about the apps you download and install. Although Apple does “curate” the App Store, there are known instances of malicious and vulnerable apps slipping through. Once you’ve executed unknown code, you’ve … well, executed unknown code.

• Install security software, such as Lookout or McAfee Mobile Security. If your organization supports it (and they should), use mobile device management (MDM) software and services for your device, especially if it is intended to handle sensitive information. MDM offers features such as security policy specification and enforcement, logging and alerting, automated over-the-air updates, anti-malware, backup/restore, device tracking and management, remote lock and wipe, remote troubleshooting and diagnostics, and so on.
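The device-lock recommendation above, to use a repeated digit in the unlock PIN, can be checked with a small counting exercise. The Python sketch below assumes an attacker who can see which digits are smudged on a four-digit PIN pad but not their order or how often each is used; the function name `candidate_pins` is a hypothetical helper introduced for this illustration.

```python
from itertools import product


def candidate_pins(smudged_digits: str, length: int = 4) -> int:
    """Count PINs of the given length that use every smudged digit at least once."""
    digits = set(smudged_digits)
    return sum(
        1
        for pin in product(sorted(digits), repeat=length)
        if set(pin) == digits
    )


if __name__ == "__main__":
    for smudges in ("1234", "123", "12", "1"):
        print(smudges, candidate_pins(smudges))
```

Under these assumptions, four distinct smudges leave 24 candidate PINs, three leave 36, two leave 14, and one leaves only a single candidate. A PIN built from three distinct digits with one repeated therefore leaks the least information through smudges.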