Appendix C: Tool and Equipment Validation Program

Introduction

Digital forensic tools and equipment work differently from one another, and the same tool may behave differently depending on the evidence source. Before using any tool or equipment to gather or process evidence, investigators must become familiar with how it operates by practicing on a variety of evidence sources.

This testing must demonstrate that the tools and equipment follow the proven principles, methodologies, and techniques used throughout digital forensic science. Testing provides assurance that the tools and equipment used by investigators are forensically sound and will not cause evidence to be ruled inadmissible or discredited.

Standards and Baselines

For evidence to be admissible in legal proceedings, the testing and experimentation behind the tools used to obtain it must generate repeatable1 and reproducible2 results; that is, running the same test must consistently produce the same outcome.
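As a minimal sketch of how repeatability might be checked, the Python below runs the same tool on the same input several times and compares SHA-256 digests of the outputs; identical digests across runs indicate a repeatable result. The function names and the `extract_strings` stand-in tool are hypothetical illustrations, not part of any real forensic product. A reproducibility check could compare, in the same way, digests recorded independently by a different examiner or lab.

```python
import hashlib
from typing import Callable


def output_digest(output: bytes) -> str:
    """Return the SHA-256 hex digest of a tool's output."""
    return hashlib.sha256(output).hexdigest()


def is_repeatable(run_tool: Callable[[bytes], bytes], evidence: bytes, runs: int = 5) -> bool:
    """Run the same tool on the same input several times and report whether
    every run produced byte-identical output (identical SHA-256 digests)."""
    digests = {output_digest(run_tool(evidence)) for _ in range(runs)}
    return len(digests) == 1


# Hypothetical stand-in for a forensic tool: keeps only printable ASCII bytes.
def extract_strings(data: bytes) -> bytes:
    return bytes(b for b in data if 32 <= b < 127)


sample = b"\x00\x01hello\xffworld\x00"
print(is_repeatable(extract_strings, sample))  # True
```

A deterministic tool passes trivially; the check becomes informative when a tool embeds timestamps, random identifiers, or multithreaded output ordering in its results.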

Under the Daubert Standard, digital forensic tools and equipment must be validated and verified against specific evidentiary and scientific criteria for the evidence they produce to be admissible in legal proceedings. When the Daubert Standard is applied to software testing, the activities and steps performed for validation3 are clearly distinct from those performed for verification.4

Building a Program

The ability to design, implement, and maintain a defensible validation and verification program is an essential skill for a digital forensic professional. With such a program in place, the digital forensic team can provide assurance about the capabilities of its tools and equipment and identify any limitations, so that compensating actions can be applied, such as acquiring other tools and equipment or creating additional procedures.

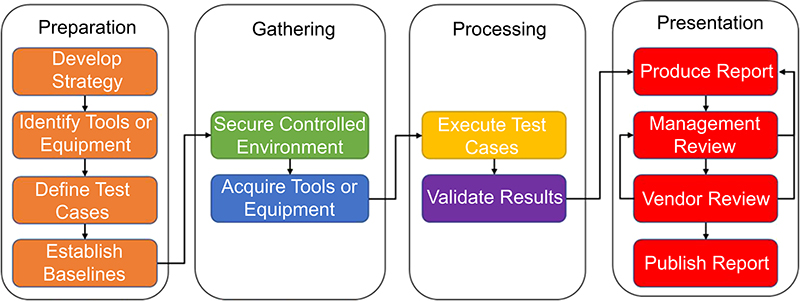

The methodology for testing tools and equipment consists of several distinct activities and steps that must be completed in a linear workflow. To formalize this workflow, a process model must be implemented to govern each activity and step and the sequence in which they are executed. As illustrated in Figure C.1, the phases proposed in chapter “Investigative Process Models” for both the high-level digital forensic process model and the digital forensic readiness process model are applied consistently to tool and equipment testing. The resulting digital forensic tool testing process model consists of four phases (preparation, gathering, processing, and presentation) that cover the basic categories of tool and equipment testing.
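The linear ordering of the four phases can be sketched as a small state machine that refuses to complete a phase out of sequence. This is an illustrative sketch only; the `Phase` and `TestingWorkflow` names are assumptions, and a real program would enforce the sequence through documented sign-offs rather than code.

```python
from enum import IntEnum
from typing import Optional


class Phase(IntEnum):
    """Phases of the tool testing process model, numbered in execution order."""
    PREPARATION = 1
    GATHERING = 2
    PROCESSING = 3
    PRESENTATION = 4


class TestingWorkflow:
    """Hypothetical tracker that rejects any attempt to skip or repeat a phase."""

    def __init__(self) -> None:
        self.completed: list[Phase] = []

    def next_phase(self) -> Optional[Phase]:
        """Return the phase that must be completed next, or None when all are done."""
        n = len(self.completed)
        return Phase(n + 1) if n < len(Phase) else None

    def complete(self, phase: Phase) -> None:
        expected = self.next_phase()
        if phase is not expected:
            nxt = expected.name if expected is not None else "none (workflow finished)"
            raise ValueError(f"cannot complete {phase.name}; next phase is {nxt}")
        self.completed.append(phase)

    @property
    def finished(self) -> bool:
        return self.next_phase() is None


wf = TestingWorkflow()
wf.complete(Phase.PREPARATION)
wf.complete(Phase.GATHERING)
try:
    wf.complete(Phase.PRESENTATION)  # attempts to skip PROCESSING
except ValueError as err:
    print(err)  # cannot complete PRESENTATION; next phase is PROCESSING
```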

Preparation

Just as a project charter establishes scope, schedule, and cost, the testing plan for a digital forensic tool or piece of equipment must follow the same structure. The plan starts by defining and capturing the objective and expectations of the testing, specifically how the tool or equipment is expected to behave. Once the objective is set, further details of the testing plan must be documented using a formalized structure that includes, at a minimum, the following sections:

• Introduction: A summary of the objectives and goals of the testing

• Background: Description of why the testing is being performed

• Purpose/scope: Specifications of the technical and business functionality the testing is expected to cover

• Approach: Procedures, activities, and steps that will be followed throughout the testing

• Deliverables: Definition of test cases and the success criteria for testing documented functionality

• Assumptions: Identification of circumstances and outcomes that are taken for granted in the absence of concrete information

• Constraints: Administrative, technical, or physical activities or steps that directly or indirectly restrict the testing

• Dependencies: Administrative, technical, or physical activities or steps that must be completed prior to testing

• Project team: Visualization of the testing team structure and governing bodies
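One way to keep the plan's minimum sections from being skipped is to model them as a structure and report which are still empty. The sketch below assumes one free-text field per section; the class and field names, and the write blocker in the example, are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class TestPlan:
    """Minimum sections of a tool testing plan (one hypothetical field per section)."""
    introduction: str = ""
    background: str = ""
    purpose_scope: str = ""
    approach: str = ""
    deliverables: str = ""
    assumptions: str = ""
    constraints: str = ""
    dependencies: str = ""
    project_team: str = ""

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty or whitespace-only."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


plan = TestPlan(
    introduction="Validate a hypothetical write blocker against SATA drives",
    background="New hardware acquired for the lab",
)
print(plan.missing_sections())
# ['purpose_scope', 'approach', 'deliverables', 'assumptions', 'constraints', 'dependencies', 'project_team']
```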

Gathering

This phase of the testing program can be either the longest and most time-consuming or the easiest and fastest, depending on how well the plan’s objectives, scope, and schedule were documented during the preparation phase. In this phase, the tactical approaches outlined in the plan’s strategy are carried out to acquire the tool or equipment that will be tested. Before making any purchases, it is essential that both parties enter into contractual agreements with each other, such as a nondisclosure agreement (NDA) and a statement of work (SOW).

• An NDA is a formal agreement between parties to protect nonpublic, confidential, or proprietary information; it specifies the materials, knowledge, or information that will be shared but must not be disclosed to other entities.

• An SOW is a formal document, often treated as legally equivalent to a contract, that captures and addresses the details of the testing.

Both documents contain terms and conditions that are legally binding on the parties involved, and they must be reviewed and approved by appropriate legal representatives before each party signs them. Although no exact wording is prescribed for these documents, the SOW should include, at a minimum, the following sections:

• Introduction: A statement referring to the NDA as the governing agreement for terms and provisions incorporated in the SOW

• Location of work: Describes where people will perform the work and the location of tools or equipment

• Deliverables schedule: A listing of the items that will be produced, the start and finish time, and the individuals responsible for providing results

• Success criteria: Baselines used to measure each test case, including the criteria for success or failure, the scenario for how the test will be executed, the tools or equipment subject to the test, and the business value of conducting the test case

• Assumptions: Identification of circumstances and outcomes that are taken for granted in the absence of concrete information

• Key personnel: Provides contact information for the individuals, from all parties, who will be involved in the testing

• Payment schedule: A breakdown of the fees, if any, that will be paid—up front, in phases, or upon completion—to cover expenses for individuals and tools or equipment involved in the testing

• Miscellaneous: Items that are not part of the terms or provisions but must be listed because they are relevant to the testing and should not be overlooked
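The success criteria entry described above can be represented as a structured record per test case. In this hypothetical sketch, success is assumed to mean an exact match between the observed result and a recorded baseline value; real criteria may need tolerances or several checks. The identifiers, tool name, and values are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """One SOW success-criteria entry for a single test case (field names are hypothetical)."""
    test_case_id: str
    scenario: str         # how the test will be executed
    tool_under_test: str  # tool or equipment subject to the test
    business_value: str   # why the test case is worth conducting
    expected_result: str  # baseline value the observed result must match

    def evaluate(self, observed_result: str) -> str:
        """Assumed rule: PASS only on an exact match with the baseline."""
        return "PASS" if observed_result == self.expected_result else "FAIL"


criterion = SuccessCriterion(
    test_case_id="TC-01",
    scenario="Acquire a reference drive through the write blocker",
    tool_under_test="Hypothetical imaging tool 1.0",
    business_value="Confirms acquisitions leave source media unchanged",
    expected_result="source hash unchanged",
)
print(criterion.evaluate("source hash unchanged"))  # PASS
print(criterion.evaluate("source hash changed"))    # FAIL
```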

Once these formal documents are in place, the tools or equipment subject to testing can be procured. In parallel, the team can secure and build the controlled environment where the test cases defined in the SOW will be executed. This environment must be built according to previously defined baselines and the SOW deliverables. Once it is built, and before it is used for testing, the environment itself must be tested and validated to confirm that it matches the specifications of the baselines and test cases. Documenting the validity of the controlled environment establishes a level of assurance that allows it to be reused in future testing.
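Validating the controlled environment against its baseline can be partly automated by hashing the reference files in the environment and comparing them to a previously recorded manifest. The sketch below assumes the baseline is stored as a mapping of relative path to SHA-256 digest; the function names and the `reference.dd` file are illustrative assumptions.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large reference images need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def validate_environment(root: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Compare every file under root with a baseline manifest (relative path -> SHA-256)."""
    actual = {p.relative_to(root).as_posix(): sha256_file(p)
              for p in root.rglob("*") if p.is_file()}
    return {
        "missing": sorted(set(baseline) - set(actual)),
        "unexpected": sorted(set(actual) - set(baseline)),
        "modified": sorted(k for k in baseline.keys() & actual.keys()
                           if baseline[k] != actual[k]),
    }


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "reference.dd").write_bytes(b"known test data")
    baseline = {"reference.dd": sha256_file(root / "reference.dd")}
    (root / "reference.dd").write_bytes(b"tampered")  # simulate drift from the baseline
    findings = validate_environment(root, baseline)
    print(findings)  # {'missing': [], 'unexpected': [], 'modified': ['reference.dd']}
```

An environment whose findings are all empty matches its baseline and, once documented, can be reused for later testing.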

Processing

Software testing is one of many activities in the systems development life cycle (SDLC) used to determine whether outputs meet the specified requirements. In this phase, the documented test cases are executed and the success criteria are measured to verify and validate the functionality and capabilities of the tool or equipment. Before executing test cases, it is important to understand the difference between verification and validation.

Verification

In general terms, a verification process answers the question “did you build it right?” by objectively assessing whether the tool or equipment was built according to its requirements and specifications. Verification focuses on determining whether the accompanying documentation consistently, accurately, and thoroughly describes the design and functionality of the tool or equipment being tested. Techniques used to verify tools or equipment fall into two distinct categories:

• Dynamic analysis involves executing test cases against the tool or equipment using a controlled data set to assess its documented functionality. This category applies a combination of black box5 and white box6 testing methodologies to support:

• functional assessments of documented features to identify and determine actual capabilities

• structural review of individual components to further assess specific functionalities

• random evaluation to detect faults or unexpected output from documented features

• Static analysis involves examining the tool or equipment through manual or automated techniques, without executing it, to assess its nonfunctional components. This category applies a series of programmatic testing methodologies to support:

• consistency of internal coding properties such as syntax, typing, parameters matching between procedures, and translation of specifications

• measurement of internal coding properties such as structure, logic, and likelihood of error
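A black box dynamic test can be sketched as running the tool on a controlled data set with a known answer and comparing its output with that ground truth. Here the tool under test is a hypothetical stand-in that flags data beginning with the JPEG signature bytes `FF D8 FF`; the data set, function names, and result categories are assumptions for illustration.

```python
from typing import Callable

# JPEG start-of-image marker (FF D8) followed by the first byte of the next marker
JPEG_MAGIC = b"\xff\xd8\xff"


def hypothetical_carver(blobs: dict[str, bytes]) -> set[str]:
    """Stand-in tool under test: names of blobs whose content starts with a JPEG signature."""
    return {name for name, data in blobs.items() if data.startswith(JPEG_MAGIC)}


def black_box_test(tool: Callable[[dict[str, bytes]], set[str]],
                   dataset: dict[str, bytes],
                   ground_truth: set[str]) -> dict[str, set[str]]:
    """Run the tool on a controlled data set and compare its output with the known answer."""
    found = tool(dataset)
    return {
        "true_positives": found & ground_truth,
        "false_positives": found - ground_truth,
        "false_negatives": ground_truth - found,
    }


# Controlled data set; c.bin is a JPEG with a misleading extension.
dataset = {
    "a.jpg": b"\xff\xd8\xff\xe0" + bytes(16),
    "b.txt": b"plain text",
    "c.bin": b"\xff\xd8\xff\xdb" + bytes(16),
}
ground_truth = {"a.jpg", "c.bin"}

result = black_box_test(hypothetical_carver, dataset, ground_truth)
print({k: sorted(v) for k, v in result.items()})
# {'true_positives': ['a.jpg', 'c.bin'], 'false_positives': [], 'false_negatives': []}
```

Any non-empty false positive or false negative set is a documented limitation of the tool that the test case report should record.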

Validation

In general terms, a validation process confirms, through objective examination and the provision of evidence, that “you built the right thing”: that the tool or equipment fulfills the requirements of its intended use, with those requirements and specifications implemented correctly and completely. Validation activities rely on an all-inclusive testing methodology that takes place both during and after the SDLC. Techniques used to validate tools or equipment include:

• intentionally injecting faults into different components (eg, hardware, software, memory) to observe the response

• determining the probability of recurrence for a weakness identified in different components (eg, hardware, software, memory), and then selecting countermeasures to reduce or mitigate exposure
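Fault injection can be illustrated with a simple case: flip single bits in a copy of a simulated evidence image and confirm that a hash-based integrity check detects every injected fault. The sketch assumes the tool's integrity check is a SHA-256 comparison; `flip_bit` and `verify_image` are hypothetical helpers, and real fault injection would also target hardware and memory.

```python
import hashlib


def flip_bit(data: bytes, byte_index: int, bit: int) -> bytes:
    """Return a copy of data with one bit inverted (the injected fault)."""
    corrupted = bytearray(data)
    corrupted[byte_index] ^= 1 << bit
    return bytes(corrupted)


def verify_image(data: bytes, recorded_sha256: str) -> bool:
    """Assumed integrity check of the tool: recompute the hash and compare."""
    return hashlib.sha256(data).hexdigest() == recorded_sha256


image = b"simulated evidence image contents"
recorded = hashlib.sha256(image).hexdigest()

# Inject a single-bit fault at every byte position and list any that go undetected.
undetected = [i for i in range(len(image))
              if verify_image(flip_bit(image, i, 0), recorded)]
print(verify_image(image, recorded), undetected)  # True []
```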

Completing test cases can be lengthy and time-consuming, but it must be thorough, because it is fundamental to proving that the tool or equipment maintains and protects the admissibility and credibility of digital evidence and, ultimately, the credibility of forensic professionals. While indirect factors such as caseload or other work responsibilities affect the amount of time spent on testing, the following direct influences cannot be overlooked and must be maintained during testing:

• Regulating testing processes within secure and controlled lab environments

• Limiting reliance on test results from others unless they are subsequently validated

• Preventing use of generalized processes or technologies that suggest arbitrary use

As the test cases are executed, it is important to record all actions taken and their results. Using a formalized document to track test case execution provides assurance that the tests have been completed as specified in the testing plan and SOW. A formalized test case report template, which includes a matrix for recording and tracking the execution of each test case, is provided in the Templates section of this book.
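As an illustrative, in-code analogue of the tracking matrix (not the book's template, whose fields may differ), the sketch below keeps an append-only log of each action and result and derives the latest status per test case. Field names such as `executed_by`, the examiner names, and the CSV export are assumptions.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExecutionRecord:
    """One row of a hypothetical test case execution matrix."""
    test_case_id: str
    action: str
    observed_result: str
    status: str        # "PASS" or "FAIL"
    executed_by: str
    # UTC timestamp captured when the record is created
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class ExecutionLog:
    """Append-only log of every action taken and its result during testing."""

    def __init__(self) -> None:
        self._records: list[ExecutionRecord] = []

    def record(self, rec: ExecutionRecord) -> None:
        self._records.append(rec)

    def latest_status(self) -> dict[str, str]:
        """Most recent status for each test case, in first-seen order."""
        status: dict[str, str] = {}
        for rec in self._records:
            status[rec.test_case_id] = rec.status
        return status

    def to_csv(self) -> str:
        """Export the full history as CSV, for example to attach to the report."""
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(ExecutionRecord)])
        writer.writeheader()
        for rec in self._records:
            writer.writerow(asdict(rec))
        return buf.getvalue()


log = ExecutionLog()
log.record(ExecutionRecord("TC-01", "Acquire reference image", "hash mismatch", "FAIL", "examiner A"))
log.record(ExecutionRecord("TC-01", "Re-run after reseating cable", "hash match", "PASS", "examiner A"))
log.record(ExecutionRecord("TC-02", "Parse reference registry hive", "all keys recovered", "PASS", "examiner B"))
print(log.latest_status())  # {'TC-01': 'PASS', 'TC-02': 'PASS'}
```

Keeping the failed first attempt in the history, rather than overwriting it, preserves the full record of actions the text calls for.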

Presentation

Once testing has concluded, a summary of all activities, test results, conclusions, and so on must be prepared using a formalized test case report, as shown in the template provided in the Templates section of this book. Although the initial draft of the final report might be written by a single person, it should be reviewed for accuracy and authenticity by peers and management before being finalized. This review ensures that, as illustrated in the test case report template, the scope and results of the testing meet the specific business objectives, so that the testing process will not be challenged when approvals are sought to finalize the report.
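The peer and management review described above can be expressed as a simple publication gate. In this hypothetical sketch, a report is ready only once both a peer and management have approved it; the role labels and the rule that authors cannot approve their own report are added assumptions, not requirements stated here.

```python
from dataclasses import dataclass, field

# Reviewer roles named in the text; the exact labels are assumptions.
REQUIRED_APPROVERS = ("peer", "management")


@dataclass
class TestCaseReport:
    """Hypothetical record of a draft test case report and its approvals."""
    title: str
    author: str
    approvals: dict[str, str] = field(default_factory=dict)  # role -> approver name

    def approve(self, role: str, approver: str) -> None:
        if role not in REQUIRED_APPROVERS:
            raise ValueError(f"unknown approver role: {role}")
        if approver == self.author:  # assumed independence rule
            raise ValueError("the author cannot approve their own report")
        self.approvals[role] = approver

    def ready_to_publish(self) -> bool:
        return all(role in self.approvals for role in REQUIRED_APPROVERS)


report = TestCaseReport("Write blocker validation", author="examiner A")
report.approve("peer", "examiner B")
print(report.ready_to_publish())  # False
report.approve("management", "lab manager")
print(report.ready_to_publish())  # True
```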

Once final authorizations and approvals on the test case report have been obtained, it can be published and distributed to the stakeholders affected by the testing outcomes. Using the testing results, these stakeholders can then develop standard operating procedures (SOPs) for using the tool or equipment to gather and process digital evidence.

Summary

Maintaining the integrity of digital evidence throughout its lifetime is an essential requirement of every digital forensics investigation. Organizations must consistently demonstrate their due diligence by providing a level of assurance that the principles, methodologies, and techniques used during a digital forensic investigation are forensically sound.