2 Nature: or growth of living matter

Perhaps the most remarkable attribute of natural growth is how much diversity is contained within the inevitable commonality dictated by fundamental genetic makeup, metabolic processes, and limits imposed by combinations of environmental factors. Trajectories of all organismic growth must assume the form of a confined curve. As already noted, many substantial variations within this broad category have led to the formulation of different growth functions devised in order to find the closest possible fits for specific families, genera or species of microbes, plants or animals or for individual species. S-shaped curves are common, but so are those conforming to confined exponential growth, and there are (both expected and surprising) differences between the growth of individuals (and their constituent parts, from cells to organs) and the growth of entire populations.

Decades-long neglect of Verhulst’s pioneering growth studies postponed quantitative analyses of organismic growth until the early 20th century. Most notably, in his revolutionary book Darwin did not deal with growth in any systematic manner and did not present any growth histories of specific organisms. But he noted the importance of growth correlation—“when slight variations in any one part occur, and are accumulated through natural selection, other parts become modified” (Darwin 1861, 130)—and, quoting Goethe (“in order to spend on one side, nature is forced to economise on the other side”), he stressed a general growth principle, namely that “natural selection will always succeed in the long run in reducing and saving every part of the organization, as soon as it is rendered superfluous, without by any means causing some other part to be largely developed in a corresponding degree” (Darwin 1861, 135).

This chapter deals with the growth of organisms, with the focus on those living forms that make the greatest difference for the functioning of the biosphere and for the survival of humanity. This means that I will look at cell growth only when dealing with unicellular organisms, archaea and bacteria—but will not offer any surveys of the genetic, biochemical and bioenergetic foundations of the process (both in its normal and aberrative forms) in higher organisms. Information on such cell growth—on its genetics, controls, promoters, inhibitors, and termination—is available in many survey volumes, including those by Studzinski (2000), Hall et al. (2004), Morgan (2007), Verbelen and Vissenberg (2007), Unsicker and Krieglstein (2008) and Golitsin and Krylov (2010).

The biosphere’s most numerous, oldest and simplest organisms are archaea and bacteria. These are prokaryotic organisms without a cell nucleus and without such specialized membrane-enclosed organelles as mitochondria. Most of them are microscopic but many species have much larger cells and some can form astonishingly large assemblages. Depending on the species involved and on the setting, the rapid growth of single-celled organisms may be highly desirable (a healthy human microbiome is as essential for our survival as any key body organ) or lethal. Risks arise from such diverse phenomena as the eruptions and diffusion of pathogens—be they infectious diseases affecting humans or animals, or viral, bacterial and fungal infestations of plants—or from runaway growth of marine algae. These algal blooms can kill other biota by releasing toxins, or when their eventual decay deprives shallow waters of their normal oxygen content and when anaerobic bacteria thriving in such waters release high concentrations of hydrogen sulfide (UNESCO 2016).

The second subject of this chapter, trees and forests—plant communities, ecosystems and biomes that are dominated by trees but that could not be perpetuated without many symbioses with other organisms—contain most of the world’s standing biomass as well as most of its diversity. The obvious importance of forests for the functioning of the biosphere and their enormous (albeit still inadequately appreciated and hugely undervalued) contribution to economic growth and to human well-being has led to many examinations of tree growth and forest productivity. We now have a fairly good understanding of the overall dynamics and specific requirements of those growth phenomena and we can also identify many factors that interfere with them or modify their rates.

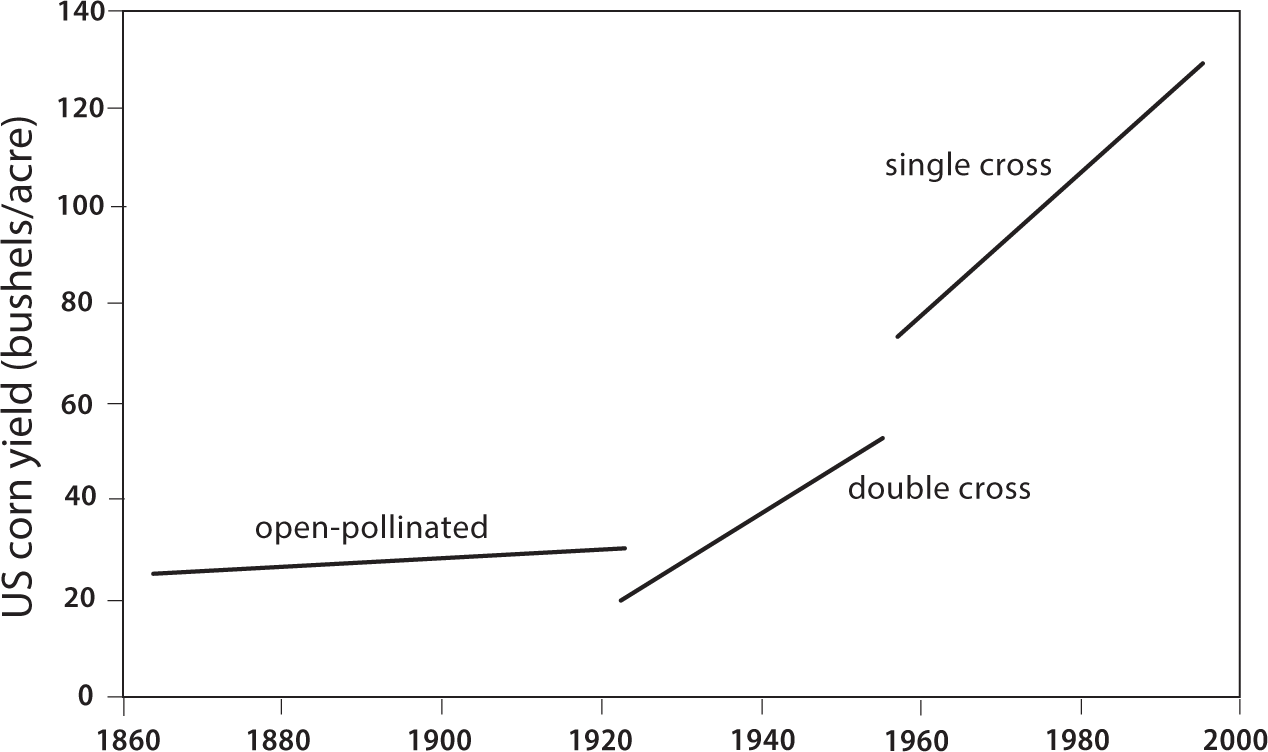

The third focus of this chapter will be on crops, plants that have been greatly modified by domestication. Their beginnings go back to 8,500 BCE in the Middle East, with the earliest domesticates being einkorn and emmer wheat, barley, lentils, peas, and chickpeas. Chinese millet and rice were first cultivated between 7,000 and 6,000 BCE and the New World’s squash was grown as early as 8,000 BCE (Zohary et al. 2012). Subsequent millennia of traditional selection brought incremental yield gains, but only modern crop breeding (hybrids, short-stalked cultivars), in combination with improved agronomic methods, adequate fertilization, and where needed also irrigation and protection against pests and weeds, has multiplied the traditional crop yields. Further advances will follow from the future deployment of genetically engineered plants.

In the section on animal growth I will look first at the individual development and population dynamics of several important wild species but most of it will be devoted to the growth of domestic animals. Domestication has changed the natural growth rates of all animals reared for meat, milk, eggs, and wool. Some of these changes have resulted in much accelerated maturation, others have also led to commercially desirable but questionable body malformations. The first instance is illustrated with pigs, the most numerous large meat animals. In traditional Asian settings, animals were usually left alone to fend for themselves, rooting out and scavenging anything edible (pigs are true omnivores). As a result, it may have taken more than two years for them to reach slaughter weight of at least 75–80 kg. In contrast, modern meat breeds kept in confinement and fed a highly nutritious diet will reach slaughter weight of 100 kg in just 24 weeks after the piglets are weaned (Smil 2013c). Heavy broiler chickens, with their massive breast muscles, are the best example of commercially-driven body malformations (Zuidhof et al. 2014).

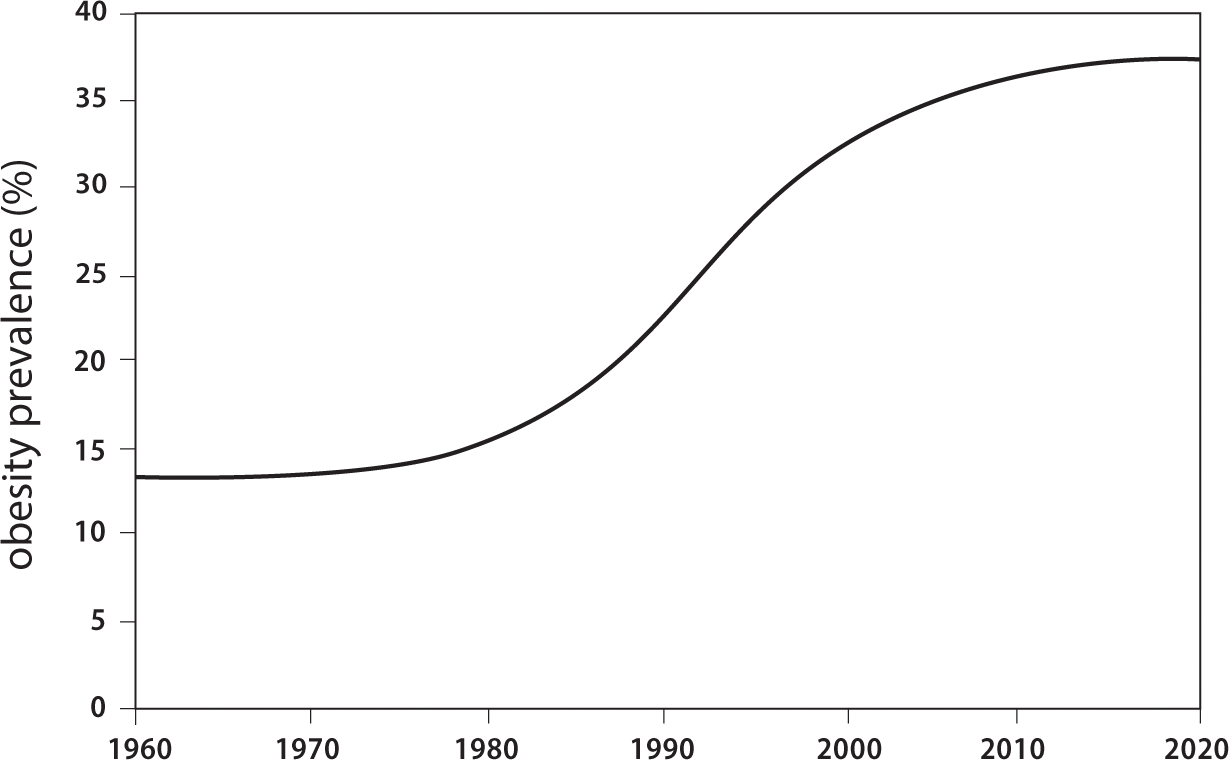

The chapter will close with the examination of human growth and some of its notable malfunctions. I will first outline the typical progress of individual growth patterns of height and body mass from birth to the end of adolescence and the factors that promote or interfere with the expected performance. Although the global extent of malnutrition has been greatly reduced, shortages of food in general or specific nutrients in particular still affect too many children, preventing their normal physical and mental development. On the opposite end of the human growth spectrum is the worrisome extent of obesity, now increasingly developing in childhood. But before turning to more detailed inquiries into the growth of major groups of organisms, I must introduce the metabolic theory of ecology. This theory outlines the general approach linking the growth of all plants and all animals to their metabolism, and it has been seen by some as one of the greatest generalizing advances in biology while others have questioned its grand explanatory powers (West et al. 1997; Brown et al. 2004; Price et al. 2012). Its formulation arises from the fact that many variables are related to body mass according to the equation y = aM b where y is the variable that is predicted to change with body mass M, a is a scaling coefficient and b is the slope of allometric exponent. The relationship becomes linear when double-logged: log y = log a + b log M.

The relationship of body size to metabolic characteristics has long been a focus of animal studies, but only when metabolic scaling was extended to plants did it become possible to argue that the rates of biomass production and growth of all kinds of organisms, ranging from unicellular algae to the most massive vertebrates and trees, are proportional to metabolic rate, which scales as the ¾ power of body mass M (Damuth 2001). Metabolic scaling theory for plants, introduced by West et al. (1997), assumes that the gross photosynthetic (metabolic) rate is determined by potential rates of resource uptake and subsequent distribution of resources within the plant through branching networks of self-similar (fractal) structure.

The original model was to predict not only the structural and functional properties of the vertebrate cardiovascular and respiratory system, but also those of insect tracheal tubes and plant vascular system. Relying on these assumptions, Enquist et al. (1999) found that annual growth rates for 45 species of tropical forest trees (expressed in kg of dry matter) scale as M3/4 (that is, with the same exponent as the metabolism of many animals) and hence the growth rates of diameter D scale as D1/3. Subsequently, Niklas and Enquist (2001) confirmed that a single allometric pattern extends to autotrophic organisms whose body mass ranged over 20 orders of magnitude and whose lengths (either cell diameter or plant height) spanned over 22 orders of magnitude, from unicellular algae to herbs and to monocotyledonous, dicotyledonous, and conifers trees (figure 2.1).

Allometric pattern of autotrophic mass and growth rate. Based on Niklas and Enquist (2001).

And a few years later Enquist et al. (2007) derived a generalized trait-based model of plant growth. The ¾ scaling also applies to light-harvesting capacity measured either as the pigment content of algal cells or as foliage phytomass of plants. As a result, the relative growth rate of plants decreases with increasing plant size as M−1/4, and primary productivity is little affected by species composition: an identical density of plants with similar overall mass will fix roughly the same amount of carbon. The uniform scaling also means that the relative growth rate decreases with increasing plant size as M−1/4. Light-harvesting capacity (chlorophyll content of algal cells or foliage in higher plants) also scales as M3/4, while plant length scales as M1/4.

Niklas and Enquist (2001) had also concluded that plants—unlike animals that have similar allometric exponents but different normalization constants (different intercepts on a growth graph)—fit into a single allometric pattern across the entire range of their body masses. This functional unity is explained by the reliance on fractal-like distribution networks required to translocate photosynthate and transpire water: their evolution (hierarchical branching and shared hydro- and biomechanics) had maximized metabolic capacity and efficiency by maximizing exchange surfaces and throughputs while minimizing transport distances and transfer rates (West et al. 1999).

But almost immediately ecologists began to question the universality of the metabolic scaling theory in general, and the remarkable invariance of exponents across a wide range of species and habitats in particular. Based on some 500 observations of 43 perennials species whose sizes spanned five of the 12 orders of magnitude of size in vascular plants, Reich et al. (2006) found no support for ¾-power scaling of plant nighttime respiration, and hence its overall metabolism. Their findings supported near-isometric scaling, that is exponent ~ 1, eliminated the need for fractal explanations of the ¾-power scaling and made a single size-dependent law of metabolism for plants and animals unlikely.

Similarly, Li et al. (2005), using a forest phytomass dataset for more than 1,200 plots representing 17 main forest types across China, found scaling exponents ranging from about 0.4 (for boreal pine stands) to 1.1 (for evergreen oaks), with only a few sites conforming to the ¾ rule, and hence no convincing evidence for a single constant scaling exponent for the phytomass-metabolism relationship in forests. And Muller-Landau et al. (2006) examined an even large data set from ten old-growth tropical forests (encompassing more than 1.7 million trees) to show that the scaling of growth was clearly inconsistent with the predictions based on the metabolic theory, with only one of the ten sites (a montane forest at high elevation) coming close to the expected value.

Their results were consistent with an alternative model that also considered competition for light, the key photosynthetic resource whose availability commonly limits tropical tree growth. Scaling of plant growth that depends only on the potential for capturing and redistributing resources is wrong, and there are no universal scaling relationships of growth (as well as of tree mortality) in tropical forests. Coomes and Allen (2009) confirmed these conclusions by demonstrating how Enquist et al. (1999), by failing to consider asymmetric competition for light, underestimated the mean scaling exponent for tree diameter growth: rather than 0.33, its average across the studied Costa Rican forest species should be 0.44.

Rather than testing the metabolic theory’s predictions, Price at al. (2012, 1472) looked at its physical and chemical foundations and at its simplifying assumptions and concluded that there is still no “complete, universal and causal theory that builds from network geometry and energy minimization to individual, species, community, ecosystem and global level patterns.” They noted that the properties of distribution model are mostly specific to the cardiovascular system of vertebrates, and that empirical data offer only limited support for the model, and cited Dodds et al. (2001), who argued that ¾ scaling could not be derived from hydraulic optimization assumed by West et al. (1997). Consequently, these might be the best conclusions regarding the metabolic scaling theory: it provides only coarse-grained insight by describing the central tendency across many orders of magnitude; its key tenet of ¾ scaling is not universally valid as it does not apply to all mammals, insects or plants; and it is only a step toward a truly complete causal theory that, as yet, does not exist. Not surprisingly, the growth of organisms and their metabolic intensity are far too complex to be expressed by a single, narrowly bound, formula.

Microorganisms and Viruses

If the invisibility without a microscope were the only classification criterion then all viruses would be microorganisms—but because these simple bundles of protein-coated nucleic acids (DNA and RNA) are acellular and are unable to live outside suitable host organisms they must be classified separately. There are some multicellular microorganisms, but most microbes—including all archaea and bacteria—are unicellular. These single-celled organisms are the simplest, the oldest, and by far the most abundant forms of life—but their classification is anything but simple. The two main divisions concern their structure and their metabolism. Prokaryotic cells do not have either a nucleus or any other internal organelles; eukaryotic cells have these membrane-encased organelles.

Archaea and Bacteria are the two prokaryotic domains. This division is relatively recent: Woese et al. (1990) assigned all organisms to three primary domains (superkingdom categories) of Archaea, Bacteria, and Eucarya, and the division relies on sequencing base pairs in a universal ribosomal gene that codes for the cellular machinery assembling proteins (Woese and Fox 1977). Only a tiny share of eukaryotes, including protozoa and some algae and fungi, are unicellular. The basic trophic division is between chemoautotrophs (able to secure carbon from CO2) and chemoheterotrophs, organisms that secure their carbon by breaking down organic molecules. The first group includes all unicellular algae and many photosynthesizing bacteria, the other metabolic pathway is common among archaea, and, of course, all fungi and animals are chemoheterotrophs.

Further divisions are based on a variety of environmental tolerances. Oxygen is imperative for growth of all unicellular algae and many bacteria, including such common genera as Bacillus and Pseudomonas. Facultative anaerobes can grow with or without the element and include such commonly occurring bacterial genera as Escherichia, Streptococcus and Staphylococcus, as well as Saccharomyces cerevisiae, fungus responsible for alcoholic fermentation and raised dough. Anaerobes (all methanogenic archaea and many bacterial species) do not tolerate oxygen’s presence. Tolerances of ambient temperature divide unicellular organisms among psychrophilic species able to survive at low (even subzero) temperatures, mesophilic species that do best within a moderate range, and thermophiles that can grow and reproduce at temperatures above 40°C.

Psychrophiles include bacteria causing food spoilage in refrigerators: Pseudomonas growing on meat, Lactobacillus growing on both meat and dairy products, and Listeria growing on meat, seafood, and vegetables at just 1–4°C. Some species metabolize even in subzero temperatures, either in supercooled cloud droplets, in brine solutions or ice-laden Antarctic waters whose temperature is just below the freezing point (Psenner and Sattler 1998). Before the 1960s no bacterium was considered more heat-tolerant than Bacillus stearothermophilus, able to grow in waters of 37–65°C; it was the most heat-tolerant known bacterium before the discoveries of hyperthermophilic varieties of Bacillus and Sulfolobus raised the maximum to 85°C during the 1960s and then to 95–105°C for Pyrolobus fumarii, an archaeon found in the walls of deep-sea vent chimneys spewing hot water; moreover, Pyrolobus stops growing in waters below 90°C (Herbert and Sharp 1992; Blöchl et al. 1997; Clarke 2014).

Extremophilic bacteria and archaea have an even larger tolerance range for highly acidic environments. While most species grow best in neutral (pH 7.0) conditions, Picrophilus oshimae has optimal growth at pH 0.7 (more than million times more acid than the neutral environment), a feat even more astonishing given the necessity to maintain internal (cytoplasmic) pH of all bacteria close to 6.0. Bacteria living in acidic environments are relatively common at geothermal sites and in acid soils containing pyrites or metallic sulfides and their growth is exploited commercially to extract copper from crushed low-grade ores sprinkled with acidified water.

In contrast, alkaliphilic bacteria cannot tolerate even neutral environments and grow only when pH is between 9 and 10 (Horikoshi and Grant1998). Such environments are common in soda lakes in arid regions of the Americas, Asia, and Africa, including Mono and Owens lakes in California. Halobacterium is an extreme alkaliphile that can tolerate pH as high as 11 and thrives in extremely salty shallow waters. One of the best displays of these algal blooms, with characteristic red and purple shades due to bacteriorhodopsin, can be seen in dike-enclosed polders in the southern end of San Francisco Bay from airplanes approaching the city’s airport.

And there are also polyextremophilic bacteria able to tolerate several extreme conditions. Perhaps the best example in this category is Deinococcus radiodurans, commonly found in soils, animal feces and sewage: it survives extreme doses of radiation, ultraviolet radiation, desiccation and freeze-drying thanks to its extraordinary capacity to repair damaged DNA (White et al. 1999; Cox and Battista 2005). At the same time, for some bacterial growth even small temperature differences matter: Mycobacterium leprae prefers to invade first those body parts that are slightly cooler and that is why leprotic lesions often show up first in the extremities as well as ears. Not surprisingly, optimum growth conditions for common bacterial pathogens coincide with the temperature of the human body: Staphylococcus aureus responsible for skin and upper respiratory infections prefers 37°C as does Clostridium botulinum (producing dangerous toxin) and Mycobacterium tuberculosis. But Escherichia coli, the species most often responsible for the outbreaks of diarrhea and for urinary tract infections, has optimum growth at 40°C.

Microbial Growth

Microbial growth requires essential macro- and micronutrients, including nitrogen (indispensable constituent of amino acids and nucleic acids), phosphorus (for the synthesis of nucleic acids, ADP and ATP), potassium, calcium, magnesium, and sulfur (essential for S-containing amino acids). Many species also need metallic trace elements, above all copper, iron, molybdenum, and zinc, and must also obtain such growth factors as vitamins of the B group from their environment. Bacterial growth can be monitored best by inoculating solid (agar, derived from a polysaccharide in some algal cell walls) or liquid (nutrient broth) media (Pepper et al. 2011).

Monod (1949) outlined the succession of phases in the growth of bacterial cultures: initial lag phase (no growth), acceleration phase (growth rate increasing), exponential phase (growth rate constant), retardation phase (growth rate slowing down), and stationary phase (once again, no growth, in this case as a result of nutrient depletion or presence of inhibitory products) followed by the death phase. This generalized sequence has many variations, as one or several phases may be either absent or last so briefly as to be almost imperceptible; at the same time, trajectories may be more complex than the idealized basic outline. Because the average size of growing cells may vary significantly during different phases of their growth, cell concentration and bacterial density are not equivalent measures but in most cases the latter variable is of the greater importance.

The basic growth trajectory of cultured bacterial cell density is obviously sigmoidal: figure 2.2 shows the growth of Escherichia coli O157:H7, a commonly occurring serotype that contaminates raw food and milk and produces Shiga toxin causing foodborne colonic escherichiosis. Microbiologists and mathematicians have applied many standard models charting sigmoidal growth—including autocatalytic, logistic and Gompertz equations—and developed new models in order to find the best fits for observed growth trajectories and to predict the three key parameters of bacterial growth, the duration of the lag phase, the maximum specific growth rate, and the doubling time (Casciato et al. 1975; Baranyi 2010; Huang 2013; Peleg and Corradini 2011).

Logistic growth of Escherichia coli O157:H7. Plotted from data in Buchanan et al. (1997).

Differences in overall fit among commonly used logistic-type models are often minimal. Peleg and Corradini (2011) showed that despite their different mathematical structure (and also despite having no mechanistic interpretation), Gompertz, logistic, shifted logistic and power growth models had excellent fit and could be used interchangeably when tracing experimental isothermal growth of bacteria. Moreover, Buchanan et al. (1997) found that capturing the growth trajectory of Escherichia coli does not require curve functions and can be done equally well by a simple three-phase linear model. A logical conclusion is that the simplest and the most convenient model should be chosen to quantify common bacterial growth.

Under optimal laboratory conditions, the ubiquitous Escherichia coli has generation time (the interval between successive divisions) of just 15–20 minutes, its synchronous succinate-grown cultures showed generation time averaging close to 40 minutes (Plank and Harvey 1979)—but its doubling takes 12–24 hours in the intestinal tract. Experiments by Maitra and Dill (2015) show that at its replication speed limit (fast-growth mode when plentiful nutrition is present) Escherichia duplicates all of its proteins quickly by producing proportionately more ribosomes—protein complexes that a cell makes to synthesize all of the cell’s proteins—than other proteins. Consequently, it appears that energy efficiency of cells under fast growth conditions is the cell’s primary fitness function.

Streptococcus pneumoniae, responsible for sinusitis, otitis media, osteomyelitis, septic arthritis, endocarditis, and peritonitis, has generation time of 20–30 minutes. Lactobacillus acidophilus—common in the human and animal gastrointestinal tract and present (together with other species of the same genus, Lactobacillus bulgaricus, bifidus, casei) in yoghurt and buttermilk—has generation time of roughly 70–90 minutes. Rhizobium japonicum, nitrogen-fixing symbiont supplying the nutrient to leguminous crops, divides slowly, taking up to eight hours for a new generation. In sourdough fermentation, industrial strains of Lactobacillus used in commercial baking reach their peak growth between 10–15 hours after inoculation and cease growing 20–30 hours later (Mihhalevski et al. 2010), and generation time for Mycobacterium tuberculosis averages about 12 hours.

Given the enormous variety of biospheric niches inhabited by microorganisms, it comes as no surprise that the growth of archaea, bacteria, and unicellular fungi in natural environments—where they coexist and compete in complex assemblages—can diverge greatly from the trajectories of laboratory cultures, and that the rates of cellular division can range across many orders of magnitude. The lag phases can be considerably extended when requisite nutrients are absent or available only in marginal amounts, or because they lack the capacity to degrade a new substrate. The existing bacterial population may have to undergo the requisite mutations or a gene transfer to allow further growth.

Measurements of bacterial growth in natural environments are nearly always complicated by the fact that most species live in larger communities consisting of other microbes, fungi, protozoa, and multicellular organisms. Their growth does not take place in monospecific isolation and it is characterized by complex population dynamics, making it very difficult to disentangle specific generation times not only in situ but also under controlled conditions. Thanks to DNA extraction and analysis, we came to realize that there are entire bacterial phyla (including Acidobacteria, Verrucomicrobia, and Chloroflexi in soils) prospering in nature but difficult or impossible to grow in laboratory cultures.

Experiments with even just two species (Curvibacter and Duganella) grown in co-culture indicate that their interactions go beyond the simple case of direct competition or pairwise games (Li et al. 2015). There are also varied species-specific responses to pulsed resource renewal: for example, coevolution with Serratia marcescens (an Enterobacter often responsible for hospital-acquired infections) caused Novosphingobium capsulatum clones (able to degrade aromatic compounds) to grow faster, while the evolved clones of Serratia marcescens had a higher survival and slower growth rate than their ancestor (Pekkonen et al. 2013).

Decomposition rates of litterfall (leaves, needles, fruits, nuts, cones, bark, branches) in forests provide a revealing indirect measure of different rates of microbial growth in nature. The process recycles macro- and micronutrients. Above all, it controls carbon and nitrogen dynamics in soil, and bacteria and fungi are its dominant agents on land as well as in aquatic ecosystems (Romaní et al. 2006; Hobara et al. 2014). Their degradative enzymes can eventually dismantle any kind of organic matter, and the two groups of microorganisms act in both synergistic and antagonistic ways. Bacteria can decompose an enormous variety of organic substrates but fungi are superior, and in many instances, the only possible decomposers of lignin, cellulose, and hemicellulose, plant polymers making up woody tissues and cell walls.

Decomposition of forest litter requires the sequential breakdown of different substrates (including waxes, phenolics, cellulose, and lignin) that must be attacked by a variety of metallomic enzymes, but microbial decomposers live in the world of multiple nutrient limitations (Kaspari et al. 2008). Worldwide comparison, using data from 110 sites, confirmed the expected positive correlation of litter decomposition with decreasing latitude and lignin content and with increasing mean annual temperature, precipitation, and nutrient concentration (Zhang et al. 2008). While no single factor was responsible for a high degree of explanation, the combination of total nutrients and carbon: nitrogen ratio explained about 70% of the variation in the litter decomposition rates. In relative terms, decomposition rates in a tropical rain forest were nearly twice as high as in a temperate broadleaved forest and more than three times as fast as in a coniferous forest. Litter quality, that is the suitability of the substrate for common microbial metabolism, is clearly the key direct regulator of litter decomposition at the global scale.

Not surprisingly, natural growth rates of some extremophilic microbes can be extraordinarily slow, with generation times orders of magnitude longer than is typical for common soil or aquatic bacteria. Yayanos et al. (1981) reported that an obligate barophilic (pressure-tolerant) isolate, retrieved from a dead amphipod Hirondella gigas captured at the depth of 10,476 meters in Mariana Trench (the world’s deepest ocean bottom), had the optimal generation times of about 33 hours at 2°C under 103.5 MPa prevailing at its origin. Similarly, Pseudomonas bathycetes, the first species isolated from a sediment sample taken from the trench, has generation time of 33 days (Kato et al. 1998). In contrast, generation times of thermophilic and piezophilic (pressure-tolerant) microbes in South Africa’s deep gold mines (more than 2 km) are estimated to be on the order of 1,000 years (Horikoshi 2016).

And then there are microbes buried deep in the mud under the floor of the deepest ocean trenches that can grow even at gravity more than 400,000 times greater than at the Earth’s surface but “we cannot estimate the generation times of [these] extremophiles … They have individual biological clocks, so the scale of their time axis will be different” (Horikoshi 2016, 151). But even the span between 20 minutes for average generation time of common gut and soil bacteria and 1,000 years of generation time for barophilic extremophiles amounts to the difference of seven orders of magnitude, and it is not improbable that the difference may be up to ten orders of magnitude for as yet undocumented extremophilic microbes.

Finally, a brief look at recurrent marine microbial growths that are so extensive that they can be seen on satellite images. Several organisms—including bacteria, unicellular algae and eukaryotic dinoflagellates and coccolithophores—can multiply rapidly and produce aquatic blooms (Smayda 1997; Granéli and Turner 2006). Their extent may be limited to a lake, a bay, or to coastal waters but often they cover areas large enough to be easily identified on satellite images (figure 2.3). Moreover, many of these blooms are toxic, presenting risks to fish and marine invertebrates. One of the most common blooms is produced by species of Trichodesmium, cyanobacteria growing in nutrient-poor tropical and subtropical oceans whose single cells with gas vacuoles form macroscopic filaments and filament clusters of straw-like color but turning red in higher concentrations.

Algal bloom in the western part of the Lake Erie on July 28, 2015. NASA satellite image is available at https://

The pigmentation of large blooms of Trichodesmium erythraeum has given the Red Sea its name, and recurrent red blooms have a relatively unique spectral signature (including high backscatter caused by the presence of gas vesicles and the absorption of its pigment phycoerythrin) that makes them detectable by satellites (Subramaniam et al. 2002). Trichodesmium also has an uncommon ability to fix nitrogen, that is to convert inert atmospheric dinitrogen into ammonia that can be used for its own metabolism and to support growth of other marine organisms: it may generate as much as half of all organic nitrogen present in the ocean, but because nitrogen fixation cannot proceed in aerobic conditions it is done inside the cyanobacteria’s special heterocysts (Bergman et al. 2012). Trichodesmium blooms produce phytomass strata that often support complex communities of other marine microorganisms, including other bacteria, dinoflagellates, protozoa, and copepods.

Walsh et al. (2006) outlined a sequence taking place in the Gulf of Mexico whereby a phosphorus-rich runoff initiates a planktonic succession once the nutrient-poor subtropical waters receive periodic depositions of iron-rich Saharan dust. The availability of these nutrients allows Trichodesmium to be also a precursor bloom for Karenia brevis, a unicellular dinoflagellate infamous for causing toxic red tides. This remarkable sequence, an excellent example of complex preconditions required to produce high rates of bacterial growth, is repeated in other warm waters receiving more phosphorus-rich runoff from fertilized cropland and periodic long-range inputs of iron-rich dust transported from African and Asian deserts. As a result, the frequency and extent of red tides have become more common during the 20th century. They are now found in waters ranging from the Gulf of Mexico to Japan, New Zealand, and South Africa.

Pathogens

Three categories of microbial growth have particularly damaging consequences: common pathogens that infect crops and reduce their harvests; a wide variety of microbes that increase human morbidity and mortality; and microorganisms and viruses responsible for population-wide infections (epidemics) and even global impacts (pandemics). A few exceptions aside, the names of bacterial and fungal plant pathogens are not recognized outside expert circles. The most important plant pathogenic bacteria are Pseudomonas syringae with its many pathovars epiphytically growing on many crops, and Ralstonia solanacearum responsible for bacterial wilts (Mansfield et al. 2012). The most devastating fungal pathogens are Magnaporthe oryzae (rice blast fungus) and Botrytis cinerea, a necrophytic grey mold fungus attacking more than 200 plant species but best known for its rare beneficial role (producing noble rot, pourriture noble) indispensable in the making of such sweet (botrytic) wines as Sauternes and Tokaj (Dean et al. 2012).

Although many broad-spectrum and targeted antibiotics have been available for more than half a century, many bacterial diseases still cause considerable mortality, particularly Mycobacterium tuberculosis in Africa, and Streptococcus pneumococcus, the leading cause of bacterial pneumonia among older people. Salmonella is responsible for frequent cases of food contamination, Escherichia coli is the leading cause of diarrhea, and both are ubiquitous causes of morbidity. But widespread use of antibacterial drugs has shortened the span of common infections, accelerated recovery, prevented early deaths, and extended modern lifespans.

The obverse of these desirable outcomes has been the spread of antibiotic-resistant strains that began just a few years after the introduction of new antimicrobials in the early 1940s (Smith and Coast 2002). Penicillin-resistant Staphylococcus aureus was found already in 1947. The first methicillin-resistant strains of Staphylococcus aureus (MRSA, causing bacteremia, pneumonia, and surgical wound infections) emerged in 1961, have spread globally and now account for more than half of all infection acquired during intensive hospital therapy (Walsh and Howe 2002). Vancomycin became the drug of last resort after many bacteria acquired resistance to most of the commonly prescribed antibiotics but the first vancomycin-resistant staphylococci appeared in 1997 in Japan and in 2002 in the US (Chang et al. 2003).

The latest global appraisal of the state of antibiotics indicates the continuation of an overall decline in the total stock of antibiotic effectiveness, as the resistance to all first-line and last-resort antibiotics is rising (Gelband et al. 2015). In the US, antibiotic resistance causes more than 2 million infections and 23,000 deaths every year and results in a direct cost of $20 billion and productivity losses of $35 billion (CDC 2013). While there have been some significant declines in MRSA in North America and Europe, the incidence in sub-Saharan Africa, Latin America, and Australia is still rising, Escherichia coli and related bacteria are now resistant to the third-generation cephalosporins, and carbapenem-resistant Enterobacteriaceae have become resistant even to the last-resort carbapenems.

The conquest of pathogenic bacterial growth made possible by antibiotics is being undone by their inappropriate and excessive use (unnecessary self-medication in countries where the drugs are available without prescription and overprescribing in affluent countries) as well as by poor sanitation in hospitals and by massive use of prophylactic antibiotics in animal husbandry. Preventing the emergence of antibiotic-resistant mutations was never an option but minimizing the problem has received too little attention for far too long. As a result, antibiotic-resistance is now also common among both domestic and wild animals that were never exposed directly to antibiotics (Gilliver et al. 1999).

The quest to regain a measure of control appears to be even more urgent as there is now growing evidence that many soil bacteria have a naturally occurring resistance against, or ability to degrade, antibiotics. Moreover, Zhang and Dick (2014) isolated from soils bacterial strains that were not only antibiotic-resistant but that could use the antibiotics as their sources of energy and nutrients although they were not previously exposed to any antibiotics: they found 19 bacteria (mainly belonging to Proteobacteria and Bacteriodetes) that could grow on penicillin and neomycin as their sole carbon sources up to concentrations of 1 g/L.

The availability of antibiotics (and better preventive measures) have also minimized the much larger, population-wide threat posed by bacteria able to cause large-scale epidemics and even pandemics, most notably Yersinia pestis, a rod-shaped anaerobic coccobacillus responsible for plague. The bacterium causing plague was identified, and cultured, in 1894 by Alexandre Yersin in Hong Kong in 1894, and soon afterwards Jean-Paul Simond discovered the transmission of bacteria from rodents by flea bites (Butler 2014). Vaccine development followed in 1897, and eventually streptomycin (starting in 1947) became the most effective treatment (Prentice and Rahalison 2007).

The Justinian plague of 541–542 and the medieval Black Death are the two best known historic epidemics (not pandemics, as they did not reach the Americas and Australia) due to their exceptionally high mortality. The Justinian plague swept the Byzantine (Eastern Roman) and Sassanid empires and the entire Mediterranean littoral, with uncertain death estimates as high as 25 million (Rosen 2007). A similarly virulent infestation returned to Europe eight centuries later as the Black Death, the description referring to dying black skin and flesh. In the spring of 1346, plague, endemic in the steppe region of southern Russia, reached the shores of the Black Sea, and then it was carried by maritime trade routes via Constantinople to Sicily by October 1346 and then to the western Mediterranean, reaching Marseilles and Genoa by the end of 1347 (Kelly 2006).

Flea-infested rats on trade ships carried the plague to coastal cities, where it was transmitted to local rat populations but subsequent continental diffusion was mainly by direct pneumonic transmission. By 1350 plague had spread across western and central Europe, by 1351 it reached northwestern Russia, and by 1853 its easternmost wave was reconnected to the original Caspian reservoir (Gaudart et al. 2010). Overall European mortality was at least 25 million people, and before it spread to Europe plague killed many people in China and in Central Asia. Between 1347 and 1351 it also spread from Alexandria to the Middle East, all the way to Yemen.

Aggregate mortality has been put as high as 100 million people but even a considerably smaller total would have brought significant depopulation to many regions and its demographic and economic effects lasted for generations. Controversies regarding the Black Death’s etiology were definitely settled only in 2010 by identification of DNA and protein signatures specific for Yersinia pestis in material removed from mass graves in Europe (Haensch et al. 2010). The study has also identified two previously unknown but related Yersinia clades associated with mass graves, suggesting that 14th century plague reached Europe on at least two occasions by distinct routes.

Gómez and Verdú (2017) reconstructed the network connecting the 14th-century European and Asian cities through pilgrimage and trade and found, as expected, that the cities with higher transitivity (a node’s connection to two other nodes that are also directly connected) and centrality (the number and intensity of connections with the other nodes in the network), were more severely affected by the plague as they experienced more exogenous reinfections. But available information does not allow us to reconstruct reliably the epidemic trajectories of the Black Death as it spread across Europe in the late 1340s.

Later, much less virulent, outbreaks of the disease reoccurred in Europe until the 18th century and mortality records for some cities make it possible to analyze the growth of the infection process. Monecke et al. (2009) found high-quality data for Freiburg (in Saxony) during its plague epidemics between May 1613 and February 1614 when more than 10% of the town’s population died. Their models of the epidemic’s progress resulted in close fits to the historical record. The number of plague victims shows a nearly normal (Gaussian) distribution, with the peak at about 100 days after the first deaths and a return to normal after about 230 days.

Perhaps the most interesting findings of their modeling was that introducing even a small number of immune rats into an otherwise unchanged setting aborts the outbreak and results in very few deaths. They concluded that the diffusion of Rattus norvegicus (brown or sewer rats, which may develop partial herd immunity by exposure to Yersinia because of its preference for wet habitats) accelerated the retreat of the 17th-century European plague. Many epidemics of plague persisted during the 19th century and localized plague outbreaks during the 20th century included those in India (1903), San Francisco (1900–1904), China (1910–1912) and India (Surat) in 1994. During the last two generations, more than 90% of all localized plague cases have been in African countries, with the remainder in Asia (most notably in Vietnam, India, and China) and in Peru (Raoult et al. 2013).

Formerly devastating bacterial epidemics have become only a matter of historic interest as we have taken preventive measures (suppressing rodent reservoirs and fleas when dealing with plague) and deployed early detection and immediate treatment of emerging cases. Viral infections pose a greater challenge. A rapid diffusion of smallpox, caused by variola virus, was responsible for well-known reductions of aboriginal American populations that lacked any immunity before their contact with European conquerors. Vaccination eventually eradicated this infection: the last natural outbreak in the US was in 1949, and in 1980 the World Health Organization declared smallpox eliminated on the global scale. But there is no prospect for an early elimination of viral influenza, returning annually in the form of seasonal epidemics and unpredictably as recurrent pandemics.

Seasonal outbreaks are related to latitude (Brazil and Argentina have infection peaks between April and September), they affect between 10% and 50% of the population and result in widespread morbidity and significant mortality among elderly. Annual US means amount to some 65 million illnesses, 30 million medical visits, 200,000 hospitalizations, 25,000 (10,000–40,000) deaths, and up to $5 billion in economic losses (Steinhoff 2007). As with all airborne viruses, influenza is readily transmitted as droplets and aerosols by respiration and hence its spatial diffusion is aided by higher population densities and by travel, as well as by the short incubation period, typically just 24–72 hours. Epidemics can take place at any time during the year, but in temperate latitudes they occur with a much higher frequency during winter. Dry air and more time spent indoors are the two leading promoters.

Epidemics of viral influenza bring high morbidity but in recent decades they have caused relatively low overall mortality, with both rates being the highest among the elderly. Understanding of key factors behind seasonal variations remains limited but absolute humidity might be the predominant determinant of influenza seasonality in temperate climates (Shaman et al. 2010). Recurrent epidemics require the continuous presence of a sufficient number of susceptible individuals, and while infected people recover with immunity, they become again vulnerable to rapidly mutating viruses as the process of antigenic drift creates a new supply of susceptible individuals (Axelsen et al. 2014). That is why epidemics persist even with mass-scale annual vaccination campaigns and with the availability of antiviral drugs. Because of the recurrence and costs of influenza epidemics, considerable effort has gone into understanding and modeling their spread and eventual attenuation and termination (Axelsen et al. 2014; Guo et al. 2015).

The growth trajectories of seasonal influenza episodes form complete epidemic curves whose shape conforms most often to a normal (Gaussian) distribution or to a negative binomial function whose course shows a steeper rise of new infections and a more gradual decline from the infection peak (Nsoesie et al. 2014). More virulent infections follow a rather compressed (peaky) normal curve, with the entire event limited to no more than 100–120 days; in comparison, milder infections may end up with only a small fraction of infected counts but their complete course may extend to 250 days. Some events will have a normal distribution with a notable plateau or with a bimodal progression (Goldstein et al. 2011; Guo et al. 2015).

But the epidemic curve may follow the opposite trajectory, as shown by the diffusion of influenza at local level. This progression was studied in a great detail during the course of the diffusion of the H1N1 virus in 2009. Between May and September 2009, Hong Kong had a total of 24,415 cases and the epidemic growth curve, reconstructed by Lee and Wong (2010), had a small initial peak between the 55th and 60th day after its onset, then a brief nadir followed by rapid ascent to the ultimate short-lived plateau on day 135 and a relatively rapid decline: the event was over six months after it began (figure 2.4). The progress of seasonal influenza can be significantly modified by vaccination, notably in such crowded settings as universities, and by timely isolation of susceptible groups (closing schools). Nichol et al. (2010) showed that the total attack rate of 69% in the absence of vaccination was reduced to 45% with a preseason vaccination rate of just 20%, to less than 1% with preseason vaccination at 60%, and the rate was cut even when vaccinations were given 30 days after the outbreak onset.

Progression of the Hong Kong influenza epidemic between May and September 2009. Based on Lee and Wong (2010).

We can now get remarkably reliable information on an epidemic’s progress in near real-time, up to two weeks before it becomes available from traditional surveillance systems: McIver and Brownstein (2014) found that monitoring the frequency of daily searches for certain influenza- or health-related Wikipedia articles provided an excellent match (difference of less than 0.3% over a period of nearly 300 weeks) with data on the actual prevalence of influenza-like illness obtained later from the Centers for Disease Control. Wikipedia searches also accurately estimated the week of the peak of illness occurrence, and their trajectories conformed to the negative binomial curve of actual infections.

Seasonal influenza epidemics cannot be prevented and their eventual intensity and human and economic toll cannot be predicted—and these conclusions apply equally well to the recurrence of a worldwide diffusion of influenza viruses causing pandemics and concurrent infestation of the world’s inhabited regions. These concerns have been with us ever since we understood the process of virulent epidemics, and it only got more complicated with the emergence of the H5N1 virus (bird flu) in 1997 and with a brief but worrisome episode of severe acute respiratory syndrome (SARS). In addition, judging by the historical recurrence of influenza pandemics, we might be overdue for another major episode.

We can identify at least four viral pandemics during the 18th century, in 1729–1730, 1732–1733, 1781–1782, and 1788–1789, and there have been six documented influenza pandemics during the last two centuries (Gust et al. 2001). In 1830–1833 and 1836–1837, the pandemic was caused by an unknown subtype originating in Russia. In 1889–1890, it was traced to subtypes H2 and H3, most likely coming again from Russia. In 1918–1919, it was an H1 subtype with unclear origins, either in the US or in China. In 1957–1958, it was subtype H2N2 from south China, and in 1968–1969 subtype H3N2 from Hong Kong. We have highly reliable mortality estimates only for the last two events, but there is no doubt that the 1918–1919 pandemic was by far the most virulent (Reid et al. 1999; Taubenberger and Morens 2006).

The origins of the 1918–1919 pandemic have been contested. Jordan (1927) identified the British military camps in the United Kingdom (UK) and France, Kansas, and China as the three possible sites of its origin. China in the winter of 1917–1918 now seems the most likely region of origin and the infection spread as previously isolated populations came into contact with one another on the battlefields of WWI (Humphries 2013). By May 1918 the virus was present in eastern China, Japan, North Africa, and Western Europe, and it spread across entire US. By August 1918 it had reached India, Latin America, and Australia (Killingray and Phillips 2003; Barry 2005). The second, more virulent, wave took place between September and December 1918; the third one, between February and April 1919, was, again, more moderate.

Data from the US and Europe make it clear that the pandemic had an unusual mortality pattern. Annual influenza epidemics have a typical U-shaped age-specific mortality (with young children and people over 70 being most vulnerable), but age-specific mortality during the 1918–1919 pandemic peaked between the ages of 15 and 35 years (the mean age for the US was 27.2 years) and virtually all deaths (many due to viral pneumonia) were in people younger than 65 (Morens and Fauci 2007). But there is no consensus about the total global toll: minimum estimates are around 20 million, the World Health Organization put it at upward of 40 million people, and Johnson and Mueller (2002) estimated it at 50 million. The highest total would be far higher than the global mortality caused by the plague in 1347–1351. Assuming that the official US death toll of 675,000 people (Crosby 1989) is fairly accurate, it surpassed all combat deaths of US troops in all of the wars of the 20th century.

Pandemics have been also drivers of human genetic diversity and natural selection and some genetic differences have emerged to regulate infectious disease susceptibility and severity (Pittman et al. 2016). Quantitative reconstruction of their growth is impossible for events before the 20th century but good quality data on new infections and mortality make it possible to reconstruct epidemic curves of the great 1918–1919 pandemic and of all the subsequent pandemics. As expected, they conform closely to a normal distribution or to a negative binomial regardless of affected populations, regions, or localities. British data for combined influenza and pneumonia mortality weekly between June 1918 and May 1919 show three pandemic waves. The smallest, almost symmetric and peaking at just five deaths/1,000, was in July 1918. The highest, a negative binomial peaking at nearly 25 deaths/1,000 in October, and an intermediate wave (again a negative binomial peaking at just above 10 deaths/1,000) in late February of 1919 (Jordan 1927).

Perhaps the most detailed reconstruction of epidemic waves traces not only transmission dynamics and mortality but also age-specific timing of deaths for New York City (Yang et al. 2014). Between February 1918 and April 1920, the city was struck by four pandemic waves (also by a heat wave). Teenagers had the highest mortality during the first wave, and the peak then shifted to young adults, with total excess mortality for all four waves peaking at the age of 28 years. Each wave was spread with a comparable early growth rate but the subsequent attenuations varied. The virulence of the pandemic is shown by daily mortality time series for the city’s entire population: the second wave’s peak reached 1,000 deaths per day compared to the baseline of 150–300 deaths (figure 2.5). When compared by using the fractional mortality increase (ratio of excess mortality to baseline mortality), the trajectories of the second and the third wave came closest to a negative binomial distribution, with the fourth wave displaying a very irregular pattern.

Daily influenza mortality time series in New York between February 1918 and April 1920 compared to baseline (1915–1917 and 1921–1923). Simplified from Yang et al. (2014).

Very similar patterns were demonstrated by analyses of many smaller populations. For example, a model fitted to reliable weekly records of incidences of influenza reported from Royal Air Force camps in the UK shows two negative binomial curves, the first one peaking about 5 weeks and the other one about 22 weeks after the infection outbreak (Mathews et al. 2007). The epidemic curve for the deaths of soldiers in the city of Hamilton (Ontario, Canada) between September and December 2018 shows a perfectly symmetrical principal wave peaking in the second week of October and a much smaller secondary wave peaking three weeks later (Mayer and Mayer 2006).

Subsequent 20th-century pandemics were much less virulent. The death toll for the 1957–1958 outbreak was about 2 million, and low mortality (about 1 million people) during the 1968–1969 event is attributed to protection conferred on many people by the 1957 infection. None of the epidemics during the remainder of the 20th century grew into a pandemic (Kilbourne 2006). But new concerns arose due to the emergence of new avian influenza viruses that could be transmitted to people. By May 1997 a subtype of H5N1 virus mutated in Hong Kong’s poultry markets to a highly pathogenic form (able to kill virtually all affected birds within two days) that claimed its first human victim, a three-year-old boy (Sims et al. 2002). The virus eventually infected at least 18 people, causing six deaths and slaughter of 1.6 million birds, but it did not spread beyond South China (Snacken et al. 1999).

WHO divides the progression of a pandemic into six phases (Rubin 2011). First, an animal influenza virus circulating among birds or mammals has not infected humans. Second, the infection occurs, creating a specific potential pandemic threat. Third, sporadic cases or small clusters of disease exist but there are no community-wide outbreaks. Such outbreaks mark the fourth phase. In the next phase, community-level outbreaks affect two or more countries in a region, and in the sixth phase outbreaks spread to at least one other region. Eventually the infections subside and influenza activity returns to levels seen commonly during seasonal outbreaks. Clearly, the first phase has been a recurrent reality, and the second and third phases have taken place repeatedly since 1997. But in April 2009, triple viral reassortment between two influenza lineages (that had been present in pigs for years) led to the emergence of swine flu (H1N1) in Mexico (Saunders-Hastings and Krewski 2016).

The infection progressed rapidly to the fourth and fifth stage and by June 11, 2009, when WHO announced the start of an influenza pandemic, there were nearly 30,000 confirmed cases in 74 countries (Chan 2009). By the end of 2009, there were 18,500 laboratory-confirmed deaths worldwide but models suggest that the actual excess mortality attributable to the pandemics was between 151,700 and 575,400 cases (Simonsen et al. 2013). The disease progressed everywhere in typical waves, but their number, timing, and duration differed: there were three waves (spring, summer, fall) in Mexico, two waves (spring-summer and fall) in the US and Canada, three waves (September and December 2009, and August 2010) in India.

There is no doubt that improved preparedness (due to the previous concerns about H5N1 avian flu in Asia and the SARS outbreak in 2002)—a combination of school closures, antiviral treatment, and mass-scale prophylactic vaccination—reduced the overall impact of this pandemic. The overall mortality remained small (only about 2% of infected people developed a severe illness) but the new H1N1 virus was preferentially infecting younger people under the age of 25 years, while the majority of severe and fatal infections was in adults aged 30–50 years (in the US the average age of laboratory confirmed deaths was just 37 years). As a result, in terms of years of life lost (a metric taking into account the age of the deceased), the maximum estimate of 1.973 million years was comparable to the mortality during the 1968 pandemic.

Simulations of an influenza pandemic in Italy by Rizzo et al. (2008) provide a good example of the possible impact of the two key control measures, antiviral prophylaxis and social distancing. In their absence, the epidemic on the peninsula would follow a Gaussian curve, peaking about four months after the identification of the first cases at more than 50 cases per 1,000 inhabitants, and it would last about seven months. Antivirals for eight weeks would reduce the peak infection rate by about 25%, and social distancing starting at the pandemic’s second week would cut the spread by two-thirds. Economic consequences of social distancing (lost school and work days, delayed travel) are much more difficult to model.

As expected, the diffusion of influenza virus is closely associated with population structure and mobility, and superspreaders, including health-care workers, students, and flight attendants, play a major role in disseminating the virus locally, regionally, and internationally (Lloyd-Smith et al. 2005). The critical role played by schoolchildren in the spatial spread of pandemic influenza was confirmed by Gog et al. (2014). They found that the protracted spread of American influenza in fall 2009 was dominated by short-distance diffusion (that was partially promoted by school openings) rather than (as is usually the case with seasonal influenza) long-distance transmission.

Modern transportation is, obviously, the key superspreading conduit. Scales range from local (subways, buses) and regional (trains, domestic flights, especially high-volume connections such as those between Tokyo and Sapporo, Beijing and Shanghai, or New York and Los Angeles that carry millions of passengers a year) to intercontinental flights that enable rapid global propagation (Yoneyama and Krishnamoorthy 2012). In 1918, the Atlantic crossing took six days on a liner able to carry mostly between 2,000 and 3,000 passengers and crew; now it takes six to seven hours on a jetliner carrying 250–450 people, and more than 3 million passengers now travel annually just between London’s Heathrow and New York’s JFK airport. The combination of flight frequency, speed, and volume makes it impractical to prevent the spread by quarantine measures: in order to succeed they would have to be instantaneous and enforced without exception.

And the unpredictability of this airborne diffusion of contagious diseases was best illustrated by the transmission of the SARS virus from China to Canada, where its establishment among vulnerable hospital populations led to a second unexpected outbreak (PHAC 2004; Abraham 2005; CEHA 2016). A Chinese doctor infected with severe acute respiratory syndrome (caused by a coronavirus) after treating a patient in Guangdong travelled to Hong Kong, where he stayed on the same hotel floor as an elderly Chinese Canadian woman who got infected and brought the disease to Toronto on February 23, 2003.

As a result, while none of other large North American cities with daily flights to Hong Kong (Vancouver, San Francisco, Los Angeles, New York) was affected, Toronto experienced a taxing wave of infections, with some hospitals closed to visitors. Transmission within Toronto peaked during the week of March 16–23, 2003 and the number of cases was down to one by the third week of April; a month later, the WHO declared Toronto SARS-free—but that was a premature announcement because then came the second, quite unexpected wave, whose contagion rate matched the March peak by the last week of May before it rapidly subsided.

Trees and Forests

Now to the opposite end of the size spectrum: some tree species are the biosphere’s largest organisms. Trees are woody perennials with a more or less complex branching of stems and with secondary growth of their trunks and branches (Owens and Lund 2009). Their growth is a marvel of great complexity, unusual persistence, and necessary discontinuity, and its results encompass about 100,000 species, including an extreme variety of forms, from dwarf trees of the Arctic to giants of California, and from tall straight stems with minimal branching to almost perfectly spherical plants with omnidirectional growth. But the underlying mechanisms of their growth are identical: apical meristems, tissues able to produce a variety of organs and found at the tips of shoots and roots, are responsible for the primary growth, for trees growing up (trunks) and sideways and down (branches). Thickening, the secondary growth, produces tissues necessary to support the elongating and branching plant.

As Corner (1964, 141) succinctly put it,

The tree is organized by the direction of its growing tips, the lignification [i.e. wood production] of the inner tissues, the upward flow of water in the lignified xylem, the downward passage of food in the phloem, and the continued growth of the cambium. It is kept alive, in spite of its increasing load of dead wood, by the activity of the skin of living cells.

The vascular cambium, the layer of dividing cells and hence the generator of tree growth that is sandwiched between xylem and phloem (the living tissue right beneath the bark that transports leaf photosynthate), is a special meristem that produces both new phloem and xylem cells.

New xylem tissue formed in the spring has lighter color than the tissue laid down in summer in smaller cells, and these layers form distinct rings that make it easy to count a tree’s age without resorting to isotopic analysis of wood. The radial extent of the cambium is not easy to delimit because of the gradual transition between phloem and xylem, and because some parenchymal cells may remain alive for long periods of time, even for decades. Leaves and needles are the key tissues producing photosynthate and enabling tree growth and their formation, durability, and demise are sensitive to a multitude of environmental factors. Tree stems have been studied most closely because they provide fuelwood and commercial timber and because their cellulose is by far the most important material for producing pulp and paper.

Roots are the least known part of trees: the largest ones are difficult to study without excavating the entire root system, the hair-like ones that do most nutrient absorption are ephemeral. In contrast, crown forms are easiest to observe and to classify as they vary not only among species but also among numerous varieties. The basic division is between excurrent and decurrent forms; the latter form, characteristic of temperate hardwoods, has lateral branches growing as long or even longer than the stem as they form broad crowns, the former shape, typical in conifers, has the stem whose length greatly surpasses the subtending laterals.

Massed trees form forests: the densest have completely closed canopies, while the sparsest are better classified as woodland, with canopies covering only a small fraction of the ground. Forests store nearly 90% of the biosphere’s phytomass and a similarly skewed distribution applies on other scales: most of the forest phytomass (about three-quarters) is in tropical forests and most of that phytomass (about three-fifths) is in the equatorial rain forests where most of it is stored in massive trunks (often buttressed) of trees that form the forest’s closed canopy and in a smaller number of emergent trees whose crowns rise above the canopy level. And when we look at an individual tree we find most of its living phytomass locked in xylem (sapwood) and a conservative estimate put its share at no more than 15% of the total (largely dead) phytomass.

Given the substantial differences in water content of plant tissues (in fresh tissues water almost always dominates), the only way to assure data comparability across a tree’s lifespan and among different species is to express the annual growth rates in mass units of dry matter or carbon per unit area. In ecological studies, this is done in grams per square meter (g/m2), t/ha or in tonnes of carbon per hectare (t C/ha). These studies focus on different levels of productivity, be it for an entire plant, a community, an ecosystem, or the entire biosphere. Gross primary productivity (GPP) comes first as we move from the most general to the most restrictive productivity measure; this variable captures all photosynthetic activity during a given period of time.

Primary Productivity

Total forest GPP is about half of the global GPP assumed to be 120 Gt C/year (Cuntz 2011). New findings have changed both the global total and the forest share. Ma et al. (2015) believe that forest GPP has been overestimated (mainly due to exaggerated forest area) and that the real annual total is about 54 Gt C or nearly 10% lower than previous calculations. At the same time, Welp et al. (2011) concluded that the global GPP total should be raised to the range of 150–175 Gt C/year, and Campbell et al. (2017) supported that finding: their analysis of atmospheric carbonyl sulfide records suggests a large historical growth of total GPP during the 20th century, with the overall gain of 31% and the new total above 150 Gt C.

A large part of this newly formed photosynthate does not end up as new tree tissue but it is rapidly reoxidized inside the plant: this continuous autotrophic respiration (RA) energizes the synthesis of plant biopolymers from monomers fixed by photosynthesis, it transports photosynthates within the plant and it is channeled into repair of diseased or damaged tissues. Autotrophic respiration is thus best seen as the key metabolic pathway between photosynthesis and a plant’s structure and function (Amthor and Baldocchi 2001; Trumbore 2006). Its intensity (RA/GPP) is primarily the function of location, climate (above all of temperature), and plant age, and it varies widely both within and among species as well as among ecosystems and biomes.

A common assumption is that RA consumes about half of the carbon fixed in photosynthesis (Litton et al. 2007). Waring et al. (1998) supported this conclusion by studying the annual carbon budgets of diverse coniferous and deciduous communities in the US, Australia, and New Zealand. Their net primary productivity (NPP)/GPP ratio was 0.47 ± 0.04. But the rate is less constant when looking at individual trees and at a wider range of plant communities: RA at 50% (or less) is typical of herbaceous plants, and the actual rates for mature trees are higher, up to 60% of GPP in temperate forests and about 70% for a boreal tree (black spruce, Picea mariana) as well as for primary tropical rain forest trees (Ryan et al. 1996; Luyssaert et al. 2007).

In pure tree stands, RA rises from 15–30% of GPP during the juvenile stage to 50% in early mature growth, and it can reach more than 90% of GPP in old-growth forests and in some years high respiration can turn the ecosystem into a weak to moderate carbon source (Falk et al. 2008). Temperature-dependent respiration losses are higher in the tropics but due to higher GPP the shares of RA are similar to those in temperate trees. Above-ground autotrophic respiration has two major components, stem and foliar efflux. The former accounts for 11–23% of all assimilated carbon in temperate forests and 40–70% in tropical forests’ ecosystems, respectively (Ryan et al. 1996; Chambers et al. 2004). The latter can vary more within a species than among species, and small-diameter wood, including lianas, accounts for most the efflux (Asao et al. 2015; Cavaleri et al. 2006).

The respiration of woody tissues is between 25% and 50% of the total above-ground RA and it matters not only because of the mass involved but also because it proceeds while the living cells are dormant (Edwards and Hanson 2003). Because the autotrophic respiration is more sensitive to increases in temperature than is photosynthesis, many models have predicted that global warming would produce faster increases in RA than in overall photosynthesis, resulting in declining NPP (Ryan et al. 1996). But acclimation may negate such a trend: experiments found that black spruce may not have significant respiratory or photosynthetic changes in a warmer climate (Bronson and Gower 2010).

Net primary productivity cannot be measured directly and it is calculated by subtracting autotrophic respiration from gross primary productivity (NPP = GPP − RA): it is the total amount of phytomass that becomes available either for deposition as new plant tissues or for consumption by heterotrophs. Species-dependent annual forest NPP peaks early: in US forests at 14 t C/ha at about 30 years for Douglas fir, followed by a rapid decline, while in forests dominated by maple beech, oak, hickory, or cypress it grows to only 5–6 t C/ha after 10 years, when it levels off (He et al. 2012). As a result, at 100 years of age the annual NPP of American forests ranges mostly between 5 and 7 t C/ha (figure 2.6). Michaletz et al. (2014) tested the common assumption that NPP varies with climate due to a direct influence of temperature and precipitation but found instead that age and stand biomass explained most of the variation, while temperature and precipitation explained almost none. This means that climate influences NPP indirectly by means of plant age, standing phytomass, length of the growing season, and through a variety of local adaptations.

Bounds of age-related NPP for 18 major forest type groups in the US: the most common annual productivities are between 5 and 7 t C/ha. Modified from He et al. (2012).

Heterotrophic respiration (RH, the consumption of fixed photosynthate by organisms ranging from bacteria and insects to grazing ungulates) is minimal in croplands or in plantations of rapidly growing young trees protected by pesticides but considerable in mature forests. In many forests, heterotrophic respiration spikes during the episodes of massive insect invasions that can destroy or heavily damage most of the standing trees, sometimes across vast areas. Net ecosystem productivity (NEP) accounts for the photosynthate that remains after all respiratory losses and that enlarges the existing stores of phytomass (NEP = NPP-RH), and post-1960 ecosystemic studies have given us reliable insights into the limits of tree and forest growth.

The richest tropical rain forests store 150–180 t C/ha above ground (or twice as much in terms of absolutely dry phytomass), and their total phytomass (including dead tissues and underground growth) is often as much as 200–250 t C/ha (Keith et al. 2009). In contrast, boreal forests usually store no more than 60–90 t C/ha, with above-ground living tissues of just 25–60 t C/ha (Kurz and Apps 1994; Potter et al. 2008). No ecosystem stores as much phytomass as the old-growth forests of western North America. Mature stands of Douglas fir (Pseudotsuga menziesii) and noble fir (Abies procera) store more than 800 t C/ha, and the maxima for the above-ground phytomass of Pacific coastal redwoods (Sequoia sempervirens) are around 1,700 t C/ha (Edmonds 1982). Another instance of very high storage was described by Keith et al. (2009) in an Australian evergreen temperate forest in Victoria dominated by Eucalyptus regnans older than 100 years: its maximum density was 1,819 t C/ha in living above-ground phytomass and 2,844 t C/ha for the total biomass.

Not surprisingly, the world’s heaviest and tallest trees are also found in these ecosystems. Giant sequoias (Sequoiadendron giganteum) with phytomass in excess of 3,000 t in a single tree (and lifespan of more than 3,000 years) are the world’s most massive organisms, dwarfing blue whales (figure 2.7). But the comparison with large cetaceans is actually misleading because, as with every large tree, most of the giant sequoia phytomass is dead wood, not living tissue. Record tree heights are between 110 m for Douglas firs and 125 m for Eucalyptus regnans (Carder 1995).

Group of giant sequoia (Sequoiadendron giganteum) trees, the most massive terrestrial organisms, in Sequoia National Park. National Park Service image is available at https://

Net ecosystem productivity is a much broader growth concept than the yield used in forestry studies: it refers to the entire tree phytomass, including the steam, branches, leaves, and roots, while the traditional commercial tree harvest is limited to stems (roundwood), with stumps left in the ground and all branches and tree tops cut off before a tree is removed from the forest. New ways of whole-tree harvesting have changed and entire trees can be uprooted and chipped but the roundwood harvests still dominate and statistical sources use them as the basic wood production metric. After more than a century of modern forestry studies we have accumulated a great deal of quantitative information on wood growth in both pure and mixed, and natural and planted, stands (Assmann l970; Pretzsch 2009; Weiskittel et al. 2011).

There are many species-specific differences in the allocation of above-ground phytomass. In spruces, about 55% of it is in stem and bark, 24% in branches, and 11% in needles, with stumps containing about 20% of all above-ground mass, while in pines 67% of phytomass is in stem and bark, and the share is as high as 78% in deciduous trees. This means that the stem (trunk) phytomass of commercial interest (often called merchantable bole) may amount to only about half of all above-ground phytomass, resulting in a substantial difference between forest growth as defined by ecologists and wood increment of interest to foresters.

Patterns of the growth of trees over their entire lifespans depend on the measured variable. There are many species-specific variations but two patterns are universal. First, the rapid height growth of young trees is followed by declining increments as the annual growth tends to zero. Second, there is a fairly steady increase of tree diameter during a tree’s entire life and initially small increments of basal area and volume increase until senescence. Drivers of tree growth are difficult to quantify statistically due to covarying effects of size- and age-related changes and of natural and anthropogenic environmental impacts ranging from variability of precipitation and temperature to effects of nitrogen deposition and rising atmospheric CO2 levels (Bowman et al. 2013).

Tree Growth

Height and trunk diameter are the two variables that are most commonly used to measure actual tree growth. Diameter, normally gauged at breast height (1.3 m above ground) is easy to measure with a caliper, and both of these variables correlate strongly with the growth of wood volume and total tree phytomass, a variable that cannot be measured directly but that is of primary interest to ecologists. In contrast, foresters are interested in annual stem-wood increments, with merchantable wood growth restricted to trunks of at least 7 cm at the smaller end. Unlike in ecological studies, where mass or energy units per unit area are the norm, foresters use volumetric units, cubic meters per tree or per hectare, and as they are interested in the total life spans of trees or tree stands, they measure the increments at intervals of five or ten years.

The US Forest Service (2018) limits the growing stock volume to solid wood in stems “greater than or equal to 5.0 inches in diameter at breast height from a one-foot high stump to a minimum 4.0-inch top diameter outside bark on the central stem. Volume of solid wood in primary forks from the point of occurrence to a minimum 4-inch top diameter outside bark is included.” In addition, it also excludes small trees that are sound but have a poor form (those add up to about 5% of the total living tree volume). For comparison, the United Nations definition of growing stock includes the above-stump volume of all living trees of any diameter at breast height of 1.3 m (UN 2000). Pretzsch (2009) gave a relative comparison of the ecological and forestry metrics for a European beech stand growing on a mediocre site for 100 years: with GPP at 100, NPP will be 50, net tree growth total 25, and the net stem growth harvested only 10. In mass units, harvestable annual net stem growth of 3 t/ha corresponds to GPP of 30 t/ha.

Productivity is considerably higher for fast-growing species planted to produce timber or pulp, harvested in short rotations, and receiving adequate fertilization and sometimes also supplementary irrigation (Mead 2005; Dickmann 2006). Acacias, pines, poplars, and willows grown in temperate climates yield 5–15 t/ha, while subtropical and tropical plantings of acacias, eucalypts, leucaenas, and pines will produce up to 20–25 t/ha (ITTO 2009; CNI 2012). Plantation trees grow rapidly during the first four to six years: eucalypts add up to 1.5–2 m/year, and in Brazil—the country with their most extensive plantations, mostly in the state of Minas Gerais, to produce charcoal—they are now harvested every 5–6 years in 15-year to 18-year rotations (Peláez-Samaniegoa 2008).