3 Energies: or growth of primary and secondary converters

The growth of any organism or of any artifact is, in fundamental physical terms, a transformation of mass made possible by conversion of energy. Solar radiation is, of course, the primary energizer of life via photosynthesis, with subsequent autotrophic and heterotrophic metabolism producing an enormous variety of organisms. Inevitably, our hominin ancestors, relying solely on somatic energies as hunters and gatherers, were subject to the same energetic limits. The boundaries of their control were expanded by the mastering of fire: burning of phytomass (whose supply was circumscribed by photosynthetic limits) added the first extrasomatic energy conversion and opened the way to better eating, better habitation, and better defense against animals. Cooking was a particularly important advance because it had greatly enlarged both the range and quality of consumed food (Wrangham 2009). Prehistoric human populations added another extrasomatic conversion when they began to use, about 10,000 years ago, domesticated animals for transport and later for field work.

The subsequent history of civilization can be seen as a quest for ever higher reliance on extrasomatic energies (Smil 2017a). The process began with the combustion of phytomass (chemical energy in wood, later also converted to charcoal, and in crop residues) to produce heat (thermal energy) and with small-scale conversions of water and wind flows into kinetic energy of mills and sails. After centuries of slow advances, these conversions became more common, more efficient, and available in more concentrated forms (larger unit capacities), but only the combustion of fossil fuels opened the way to modern high-energy societies. These fuels (coals, crude oils, and natural gases) amount to an enormous store of transformed biomass produced by photosynthesis over the span of hundreds of millions of years, and their extraction and conversion have energized the conjoined progression of urbanization and industrialization. These advances brought unprecedented levels of food supply, housing comfort, material affluence, and personal mobility and extended expected longevity for an increasing share of the global population.

Appraising the long-term growth of energy converters (both in terms of their performance and their efficiency) is thus the necessary precursor for tracing the growth of artifacts—anthropogenic objects whose variety ranges from the simplest tools (lever, pulley) to elaborate structures (cathedrals, skyscrapers) and to astonishingly complex electronic devices—that will be examined in chapter 4. I use the term energy converter in its broadest sense, that is as any artifact capable of transforming one form of energy into another. These converters fall into two basic categories.

The primary ones convert renewable energy flows and fossil fuels into a range of useful energies, most often into kinetic (mechanical) energy, heat (thermal energy), light (electromagnetic energy), or, increasingly, into electricity. This large category of primary converters includes the following machines and assemblies: traditional waterwheels and windmills and their modern transformations, water and wind turbines; steam engines and steam turbines; internal combustion engines (gasoline- and diesel-fueled and gas turbines, either stationary or mobile); nuclear reactors; and photovoltaic cells. Many forms of electric lighting and electric motors are now by far the most abundant secondary converters that use electricity to produce light and kinetic energy for an enormous variety of stationary machines used in industrial production as well as in agriculture, services, and households, and for land-based transportation.

Even ancient civilizations relied on a variety of energy converters. During antiquity, the most common designs for heating in cold climates ranged from simple hearths (still in common use in Japanese rural households during the 19th century) to ingenious Roman hypocausts and their Asian variants, including Chinese kang and Korean ondol. Mills powered by animate labor (slaves, donkeys, oxen, horses) and also by water (using wheels to convert its energy to rotary motion) were used to grind grains and press oil seeds. Oil lamps and wax and tallow candles provided (usually inadequate) illumination. And oars and sails were the only two ways to propel premodern ships.

By the end of the medieval era, most of these converters saw either substantial growth in size or capacity or major improvements in production quality and operational reliability. The most prominent new converters that became common during the late Middle Ages were taller windmills (used for pumping water and for a large variety of crop processing and industrial tasks), blast furnaces (used to smelt iron ores with charcoal and limestone to produce cast iron), and gunpowder-propelled projectiles (with chemical energy in the mixture of potassium nitrate, sulfur, and charcoal instantly converted into explosive kinetic energy used to kill combatants or to destroy structures).

Premodern civilizations also developed a range of more sophisticated energy converters relying on gravity or natural kinetic energies. Falling water powered both simple and highly elaborate clepsydras and Chinese astronomical towers—but the pendulum clock dates from the early modern era: it was invented by Christiaan Huygens in 1656. And for wonderment and entertainment, rich European, Middle Eastern and East Asian owners displayed humanoid and animal automata that included musicians, birds, monkeys and tigers, and also angels that played, sang, and turned to face the sun, and were powered by water, wind, compressed air, and wound springs (Chapuis and Gélis 1928).

The construction and deployment of all traditional inanimate energy converters intensified during the early modern period (1500–1800). Waterwheels and windmills became more common and their typical capacities and conversion efficiencies were increasing. Smelting of cast iron in charcoal-fueled blast furnaces reached new highs. Sail ships broke previous records in displacement and maneuverability. Armies relied on more powerful guns, and manufacturing of assorted automata and other mechanical curiosities reached new levels of complexity. And then, at the beginning of the 18th century, came, slowly, the epochal departure in human energy use with the first commercial installations of steam engines.

The earliest versions of the first inanimate prime mover energized by the combustion of coal (a fossil fuel created by photosynthetic conversion of solar radiation 10⁶–10⁸ years ago) were extremely wasteful and delivered only reciprocating motion. As a result, they were used for decades only for water pumping in coal mines, but once the efficiencies improved and once new designs could deliver rotary motion, the engines rapidly conquered many old industrial and transportation markets and created new industries and new travel options (Dickinson 1939; Jones 1973). A greater variety of new energy converters was invented and commercialized during the 19th century than at any other time in history: in chronological sequence, they include water turbines (starting in the 1830s), steam turbines, internal combustion engines (Otto cycle), and electric motors (all three during the 1880s), and diesel engines (starting in the 1890s).

The 20th century added gas turbines (first commercial applications during the 1930s), nuclear reactors (first installed in submarines in the early 1950s, and for electricity generation since the late 1950s), photovoltaic cells (first in satellites during the late 1950s), and wind turbines (modern designs starting during the 1980s). I will follow all of these advances in a thematic, rather than chronological order, dealing first with harnessing wind and water (traditional mills and modern turbines), then with steam-powered converters (engines and turbines), internal combustion engines, electric light and motors, and, finally, with nuclear reactors and photovoltaic (PV) cells.

Harnessing Water and Wind

We cannot provide any accurate timing of the earliest developments of two traditional inanimate prime movers, waterwheels (whose origins are in Mediterranean antiquity) and windmills (first used in the early Middle Ages). Similarly, their early growth can be described only in simple qualitative terms: we can trace their subsequent adoption and the variety of their uses but have limited information about their actual performance. We reach firmer quantitative ground only with the machines deployed during the latter half of the 18th century, and we can trace accurately the shift from waterwheels to water turbines and the growth of these hydraulic machines.

In contrast to the uninterrupted evolution of water-powered prime movers, there was no gradual shift from improved versions of traditional windmills to modern wind-powered machines. Steam-powered electricity generation ended the reliance on windmills in the early 20th century, but it was not until the 1980s that the first modern wind turbines were installed in a commercial wind farm in California. The subsequent development of these machines, aided both by subsidies and by the quest for the decarbonization of modern electricity generation, brought impressive design and performance advances as wind turbines have become a common (even dominant) choice for new generation capacity.

Waterwheels

The origins of waterwheels remain obscure but there is no doubt that the earliest use of water for grain milling was by horizontal wheels rotating around vertical axes attached directly to millstones. Their power was limited to a few kW and larger vertical wheels (Roman hydraletae), with millstones driven by right-angle gears, became common in the Mediterranean world at the beginning of the common era (Moritz 1958; White 1978; Walton 2006; Denny 2007). Three types of vertical wheels were developed to match best the existing water flow or to take advantage of an artificially enhanced water supply delivered by stream diversions, canals, or troughs (Reynolds 2002). Undershot wheels (rotating counterclockwise) were best suited for faster-flowing streams, and the power of small machines was often less than 100 W, equivalent to a steadily working strong man. Breast wheels (also rotating counterclockwise) were powered by both flowing and falling water, while gravity drove overshot wheels with water often led by troughs. Overshot wheels could deliver a few kW of useful power, with the best 19th-century designs going above 10 kW.

Waterwheels brought a radical change to grain milling. Even a small mill employing fewer than 10 workers would produce enough flour daily to feed more than 3,000 people, while manual grinding with quern stones would have required the labor of more than 200 people for the same output. Waterwheel use had expanded far beyond grain milling already during the Roman era. During the Middle Ages, common tasks relying on water power ranged from sawing wood and stone to crushing ores and actuating bellows for blast furnaces, and during the early modern era English waterwheels were often used to pump water and lift coal from underground mines (Woodall 1982; Clavering 1995).

Premodern, often crudely built, wooden wheels were not very efficient compared to modern metal machines but they delivered fairly steady power of unprecedented magnitude and hence opened the way to incipient industrialization and large-scale production. Efficiencies of early modern wooden undershot wheels reached 35–45%, well below the performance of overshots at 52–76% (Smeaton 1759). In contrast, later all-metal designs could deliver up to 76% for undershots and as much as 85% for overshots (Müller 1939; Muller and Kauppert 2004). But even the 18th-century wheels were more efficient than the contemporary steam engine and the development of these two very different machines expanded in tandem, with wheels being the key prime movers of several important pre-1850 industries, above all textile weaving.

In 1849 the total capacity of US waterwheels was nearly 500 MW and that of steam engines reached about 920 MW (Daugherty 1927), and Schurr and Netschert (1960) calculated that American waterwheels kept supplying more useful power than all steam engines until the late 1860s. The Tenth Census showed that in 1880, just before the introduction of commercial electricity generation, the US had 55,404 waterwheels with a total installed power of 914 MW (averaging about 16.5 kW per wheel), which accounted for 36% of all power used in the country’s manufacturing, with steam supplying the rest (Swain 1885). Grain milling and wood sawing were the two leading applications and Blackstone River in Massachusetts had the highest concentration of wheels in the country, prorating to about 125 kW/ha of its watershed.

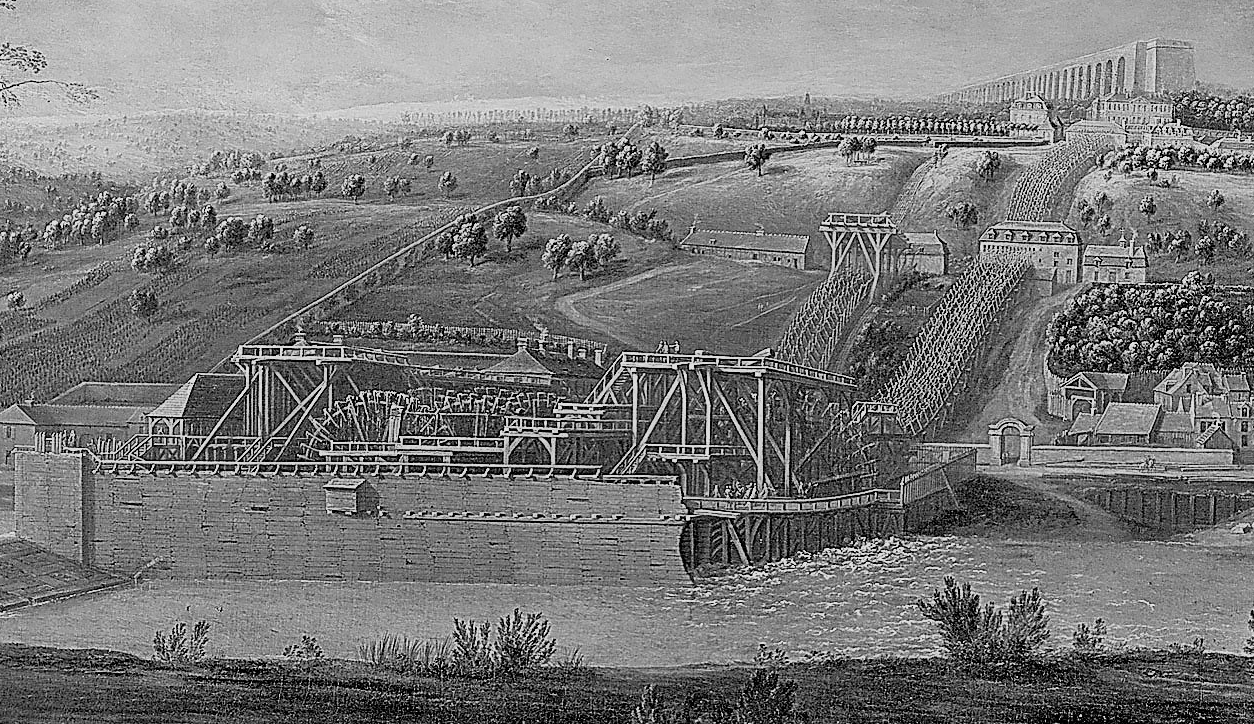

There is not enough information to trace the growth of average or typical waterwheel capacities but enough is known to confirm many centuries of stagnation or very low growth followed by steep ascent to new records between 1750 and 1850. The largest installations combined the power of many wheels. In 1684 the project designed to pump water for the gardens of Versailles with 14 wheels on the River Seine (Machine de Marly) provided about 52 kW of useful output, but that averaged less than 4 kW/wheel (Brandstetter 2005; figure 3.1). In 1840 the largest British installations near Glasgow had a capacity of 1.5 MW in 30 wheels (average of 50 kW/wheel) fed from a reservoir (Woodall 1982). And Lady Isabella, the world’s largest waterwheel built in 1854 on the Isle of Man to pump water from Laxey lead and zinc mines, had a theoretical peak of 427 kW and actual sustained useful power of 200 kW (Reynolds 1970).

Figure 3.1. Machine de Marly, the largest waterwheel installation of the early modern era, was completed in 1684 to pump water from the River Seine to the gardens of Versailles. Detail from a 1723 painting by Pierre-Denis Martin also shows the aqueduct in the background. The painting’s reproduction is available at wikimedia.

Installations of new machines dropped off rapidly after 1850 as more efficient water turbines and more flexible heat engines took over the tasks that had been done for centuries by waterwheels. Capacity growth of a typical installation across the span of some two millennia was thus at least 20-fold and perhaps as much as 50-fold. Archaeological evidence points to common unit sizes of just 1–2 kW during the late Roman era; at the beginning of the 18th century most European waterwheels had capacities of 3–5 kW and only a few of them rated more than 7 kW; and by 1850 there were many waterwheels rated at 20–50 kW (Smil 2017a). This means that after a long period of stagnation (almost a millennium and a half) or barely noticeable advances, typical capacities grew by an order of magnitude in about a century, doubling roughly every 30 years. Their further development was rather rapidly truncated by the adoption of new converters, and the highly asymmetrical S-curve created by pre-1850 development had, first gradually and then rapidly, collapsed, with only a small number of waterwheels working by 1960.
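The link between an order-of-magnitude gain in about a century and a doubling time of roughly 30 years can be checked with a few lines of arithmetic. The following is only a minimal sketch that assumes steady exponential growth over the whole span; the function name `doubling_time` is illustrative and not taken from the source.

```python
import math


def doubling_time(growth_factor: float, span_years: float) -> float:
    """Years needed to double, assuming steady exponential growth that
    multiplies capacity by `growth_factor` over `span_years`."""
    return span_years * math.log(2) / math.log(growth_factor)


# Tenfold growth of typical waterwheel capacities in about a century,
# as described in the text (the 100-year span is a rounded assumption).
century_doubling = doubling_time(10, 100)
print(f"doubling time for 10x in 100 years: {century_doubling:.1f} years")

# For contrast, the 20- to 50-fold growth spread over some two millennia.
for fold in (20, 50):
    print(f"{fold}x in 2,000 years: {doubling_time(fold, 2000):.0f} years")
```

The same helper makes the contrast with the preceding two millennia explicit: spreading a 20- to 50-fold gain over 2,000 years implies doubling times measured in centuries rather than decades.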

Water Turbines

Water turbines were conceptual extensions of horizontal waterwheels operating under high heads. Their history began with reaction turbine designs by Benoît Fourneyron. In 1832 his first machine, with 2.4 m rotor operating with radial outward flow and head of just 1.3 m, had rated capacity of 38 kW, and in 1837 its improved version, installed at Saint Blaisien spinning mill, had power of 45 kW under heads of more than 100 m (Smith 1980). A year later, a better design was patented in the US by Samuel B. Howd and, after additional improvements, introduced in Lowell, MA by a British-American engineer, James B. Francis, in 1849; it became widely known as the Francis turbine. This design remains the most commonly deployed large-capacity hydraulic machine suitable for medium to high generating heads (Shortridge 1989). Between 1850 and 1880 many industries located on streams replaced their waterwheels by these turbines. In the US, Massachusetts was the leading state: by 1875, turbines supplied 80% of its stationary power.

With the introduction of Edisonian electricity systems during the 1880s, water turbines began to turn generators. The first small installation (12.5 kW) in Appleton, Wisconsin, began to operate in 1882, the same year Edison’s first coal-powered station was completed in Manhattan (Monaco 2011). By the end of the 1880s, the US had about 200 small hydro stations and another turbine design to deploy. An impulse machine, suitable for high water heads and driven by water jets impacting the turbine’s peripheral buckets, was developed by Lester A. Pelton. The world’s largest hydro station, built between 1891 and 1895 at Niagara Falls, had ten 5,000 hp (3.73 MW) turbines.

In 1912 and 1913, Viktor Kaplan filed patents for his axial flow turbine, whose adjustable propellers were best suited for low water heads. The first small Kaplan turbines were built already in 1918, and by 1931 four 35 MW units began to operate at the German Ryburg-Schwörstadt station on the Rhine. Although more than 500 hydrostations were built before WWI, most of them had limited capacities and the era of large projects began in the 1920s in the Soviet Union and in the 1930s in the US, in both countries led by a state policy of electrification. But the largest project of the Soviet program of electrification was made possible only by US expertise and machinery: Dnieper station, completed in 1932, had Francis turbines rated at 63.38 MW, built at Newport News, and GE generators (Nesteruk 1963).

In the US, the principal results of government-led hydro development were the stations built by the Tennessee Valley Authority in the east, and the two record-setting projects, Hoover and Grand Coulee dams, in the west (ICOLD 2017; USDI 2017). The Hoover dam on the Colorado River was completed in 1936 and 13 of its 17 turbines rated 130 MW. Grand Coulee construction took place between 1933 and 1942 and each of the 18 original turbines in two power houses could deliver 125 MW (USBR 2016). Grand Coulee’s total capacity, originally just short of 2 GW, was never surpassed by any American hydro station built after WWII, and it was enlarged by the addition of a third powerhouse (between 1975 and 1980) with three 600 MW and three 700 MW turbines. Just as the Grand Coulee upgrading was completed (1984–1985), the plant lost its global primacy to new stations in South America.

Tucuruí on Tocantins in Brazil (8.37 GW) was completed in 1984. Guri on Caroní in Venezuela (10.23 GW) followed in 1986, and the first unit of what was to become the world’s largest hydro station, Itaipu on Paraná, on the border between Brazil and Paraguay, originally 12.6 GW, now 14 GW, was installed in 1984. Itaipu now has twenty 700 MW turbines, Guri has slightly larger turbines than Grand Coulee (730 MW), and Tucuruí’s units rate only 375 and 350 MW. The record unit size was not surpassed when Sanxia (Three Gorges) became the new world record holder with 22.5 GW in 2008: its turbines are, as in Itaipu, 700 MW units. A new record was reached only in 2013 when China’s Xiangjiaba got the world’s largest Francis turbines, 800 MW machines designed and made by Alstom’s plant in Tianjin (Alstom 2013; Duddu 2013).

The historical trajectory of the largest water turbine capacities forms an obvious S-curve, with most of the gains taking place between the early 1930s and the early 1980s and the formation of a plateau once the largest unit size approaches 1,000 MW (figure 3.2). Similarly, when the capacity of generators is expressed in megavolt amperes (MVA), the logistic trajectory of maximum ratings rises from a few MVA in 1900 to 200 MVA by 1960 and to 855 MVA in China’s Xiluodu on the Jinsha River completed in 2013 (Voith 2017). The trajectory forecast points to only marginal gains by 2030 but we already know that the asymptote will be reached due to the construction of China’s (and the world’s) second-largest hydro project: Baihetan dam on the Jinsha River (between Sichuan and Yunnan, under construction since 2008) will contain sixteen 1,000 MW (1 GW) units when completed in the early 2020s.

Figure 3.2. Logistic growth of maximum water turbine capacities since 1895; inflection point was in 1963. Data from Smil (2008) and ICOLD (2017).
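The shape of such a logistic trajectory can be sketched with a symmetric three-parameter curve. The example below is an illustration only, not the fit shown in the figure: it takes the inflection year (1963) and the plateau near 1,000 MW from the text, and it assumes, purely for illustration, that the curve passes through the 3.73 MW Niagara units in 1895. The growth rate is derived from that assumption rather than fitted to the underlying data.

```python
import math


def logistic(year: float, asymptote_mw: float, rate: float,
             inflection_year: float) -> float:
    """Symmetric logistic curve: half of the asymptote is reached
    at the inflection year."""
    return asymptote_mw / (1.0 + math.exp(-rate * (year - inflection_year)))


ASYMPTOTE_MW = 1000.0    # plateau near 1,000 MW, as described in the text
INFLECTION_YEAR = 1963   # inflection year stated for the fitted curve

# Assumption (not from the source fit): anchor the curve at the
# 3.73 MW Niagara Falls turbines of 1895 to obtain a growth rate.
START_YEAR, START_MW = 1895, 3.73
RATE = math.log(ASYMPTOTE_MW / START_MW - 1.0) / (INFLECTION_YEAR - START_YEAR)

for year in (1895, 1936, 1963, 1984, 2013):
    value = logistic(year, ASYMPTOTE_MW, RATE, INFLECTION_YEAR)
    print(f"{year}: {value:7.1f} MW")
```

Because the rate is pinned to a single early data point, the intermediate values are only indicative; a proper fit would estimate all three parameters from the full record of maximum unit capacities.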

There is a very high probability that the unit capacity of 1 GW will remain the peak rating. This is because most of the world’s countries with large hydro generation potential have either already exploited their best sites where they could use such very large units (US, Canada) or have potential sites (in sub-Saharan Africa, Latin America, and monsoonal parts of Asia) that would be best harnessed with the 500–700 MW units that now dominate record-size projects in China, India, Brazil, and Russia. In addition, Baihetan aside, environmental concerns make the construction of another station with capacity exceeding 15 GW rather unlikely.

Windmills and Wind Turbines

The earliest Persian and Byzantine windmills were small and inefficient but medieval Europe eventually developed larger wooden post mills which had to be turned manually into the wind. Taller and more efficient tower mills became common during the early modern era not only in the Netherlands but in other flat and windy Atlantic regions (Smil 2017a). Improvements that raised their power and efficiency eventually included canted edge boards to reduce drag on blades and, much later, true airfoils (contoured blades), metal gearings, and fantails. Windmills, much as waterwheels, were used for many tasks besides grain milling: oil extraction from seeds and water pumping from wells were common, and draining of low-lying areas was the leading Dutch use (Hill 1984). In contrast to heavy European machines, American windmills of the 19th century were lighter, more affordable but fairly efficient machines relying on many narrow blades fastened to wheels mounted at the top of lattice towers (Wilson 1999).

The useful power of medieval windmills was just 2–6 kW, comparable to that of early waterwheels. Dutch and English mills of the 17th and 18th centuries delivered usually no more than 6–10 kW, American mills of the late 19th century rated typically no more than 1 kW, while the largest contemporary European machines delivered 8–12 kW, a fraction of the power of the best waterwheels (Rankine 1866; Daugherty 1927). Between the 1890s and 1920s, small windmills were used in a number of countries to produce electricity for isolated dwellings, but cheap coal-fired electricity generation brought their demise and wind machines were resurrected only during the 1980s following OPEC’s two rounds of oil price increases.

Altamont Pass in northern California’s Diablo Range was the site of the first modern large-scale wind farm, built between 1981 and 1986: its average turbine was rated at just 94 kW and the largest one was capable of 330 kW (Smith 1987). This early experiment petered out with the post-1984 fall in world oil prices, and the center of new wind turbine designs shifted to Europe, particularly to Denmark, with Vestas pioneering larger unit designs. Their ratings rose from 55 kW in 1981 to 500 kW a decade later, to 2 MW by the year 2000, and by 2017 the largest capacity of Vestas onshore units reached 4.2 MW (Vestas 2017a). This growth is captured by a logistic growth curve that indicates only limited future gains. In contrast, the largest offshore turbine (first installed in 2014) has capacity of 8 MW, which can reach 9 MW in specific site conditions (Vestas 2017b). But by 2018 neither of the 10 MW designs—SeaTitan and Sway Turbine, completed in 2010 (AMSC 2012)—had been installed commercially.

Average capacities have been growing more slowly. Mean nameplate capacities of American onshore machines rose linearly at first, doubling from 710 kW in 1998–1999 to 1.43 MW in 2004–2005, but subsequent slower growth raised the mean to only 1.79 MW in 2010 and 2 MW in 2015, less than tripling the average in 17 years (Wiser and Bollinger 2016). Averages for European onshore machines have been slightly higher, 2.2 MW in 2010 and about 2.5 MW in 2015. Again, the growth trajectories of both US and EU mean ratings follow sigmoidal courses but ones that appear much closer to saturation than does the trajectory of maximum turbine ratings. Average capacities of a relatively small number of European offshore turbines remained just around 500 kW during the 1990s, reached 3 MW by 2005, 4 MW by 2012, and just over 4 MW in 2015 (EWEA 2016).

Figure 3.3. Comparison of early growth stages of steam (1885–1913) and wind (1986–2014) turbines shows that the recent expansion is not unprecedented: maximum unit capacities of steam turbines were growing faster (Smil 2017b).

During the 28 years between 1986 and 2014, the maximum capacities of wind turbines were thus growing by slightly more than 11% a year, while the Vestas designs increased by about 19% a year between 1981 and 2014, doubling roughly every three years and eight months. These high growth rates have been often pointed out by the advocates of wind power as proofs of admirable technical advances opening the way to an accelerated transition from fossil fuels to noncarbon energies. In reality, those gains have not been unprecedented as other energy converters logged similar, or even higher, gains during early stages of their development. In the 28 years between 1885 and 1913, the largest capacity of steam turbines rose from 7.5 kW to 20 MW, average annual exponential growth of 28% (figure 3.3). And while the subsequent growth of steam turbine capacities pushed the maximum size by two orders of magnitude (to 1.75 GW by 2017), wind turbines could never see similar gains, that is, unit capacities on the order of 800 MW.
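The growth rates quoted above follow from the endpoint capacities if growth is treated as continuous and exponential. The sketch below makes that assumption explicit. The steam turbine endpoints (7.5 kW and 20 MW) are stated in the text; for wind, the 330 kW and 8 MW endpoints are an assumption based on the largest Altamont Pass machine and the largest offshore unit mentioned earlier. Function names are illustrative.

```python
import math


def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Average continuous (exponential) growth rate per year."""
    return math.log(end / start) / years


def doubling_time(rate: float) -> float:
    """Years needed to double at a given continuous annual rate."""
    return math.log(2) / rate


# Steam turbines, 1885-1913: 7.5 kW to 20 MW (both expressed in kW).
steam = annual_growth_rate(7.5, 20_000, 1913 - 1885)

# Wind turbines, 1986-2014: assumed endpoints of 330 kW and 8 MW.
wind = annual_growth_rate(330, 8_000, 2014 - 1986)

print(f"steam turbines: {steam:.1%} per year")
print(f"wind turbines:  {wind:.1%} per year")
print(f"doubling time at 19% per year: {doubling_time(0.19):.2f} years")
```

Under these assumptions the steam turbine rate comes out close to 28% a year and the wind turbine rate slightly above 11%, while a 19% annual rate corresponds to a doubling time of a little over three and a half years.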

Even another two consecutive doublings in less than eight years will be impossible: they would result in a 32 MW turbine before 2025. The Upwind project published a predesign of a 20 MW offshore turbine based on similarity scaling in 2011 (Peeringa et al. 2011). The three-bladed machine would have a rotor diameter of 252 m (more than three times the wing span of the world’s largest jetliner, an Airbus A380), hub diameter of 6 m, and cut-in and cut-out wind speeds of 3 and 25 m/s. But doubling turbine power is not a simple scaling problem: while a turbine’s power goes up with the square of its radius, its mass (that is its cost) goes up with the cube of the radius (Hameed and Vatn 2012). Even so, there are some conceptual designs for 50 MW turbines with 200 m long flexible (and stowable) blades and with towers taller than the Eiffel tower.
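The scaling penalty can be made concrete with the square-cube relationship cited above. This is a minimal sketch of pure similarity scaling (power proportional to the square of the radius, mass to its cube); it ignores material and design improvements that real turbines use to beat this rule, and the function and key names are hypothetical.

```python
def similarity_scaling(power_ratio: float) -> dict:
    """Radius and mass multipliers implied by a given power multiplier
    under pure similarity scaling (power ~ R^2, mass ~ R^3)."""
    radius_ratio = power_ratio ** 0.5   # power grows with radius squared
    mass_ratio = radius_ratio ** 3      # mass grows with radius cubed
    return {
        "radius_ratio": radius_ratio,
        "mass_ratio": mass_ratio,
        "mass_per_unit_power_ratio": mass_ratio / power_ratio,
    }


# One doubling and two consecutive doublings of turbine power.
for ratio in (2, 4):
    result = similarity_scaling(ratio)
    print(ratio, {key: round(value, 2) for key, value in result.items()})
```

Under this rule each doubling of power raises the mass, and hence roughly the cost, per unit of capacity by about 40%, so two consecutive doublings would double the mass needed per installed megawatt.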

Of course, to argue that such a structure is technically possible because the Eiffel tower reached 300 m already in 1889 and because giant oil tankers and container ships are nearly 400 m long (Hendriks 2008) is to commit a gross category mistake as neither of those structures is vertical and topped by massive moving parts. And it is an enormous challenge to design actual blades that would withstand winds up to 235 km/h. Consequently, it is certain that the capacity growth of wind turbines will not follow the exponential trajectory established by 1991–2014 developments: another S-curve is forming as the rates of annual increase have just begun, inexorably, to decline.

And there are other limits in play. Even as maximum capacities have been doubling in less than four years, the best conversion efficiencies of larger wind turbines have remained stagnant at about 35%, and their further gains are fundamentally limited. Unlike large electric motors (whose efficiency exceeds 99%) or the best natural gas-fired furnaces (with efficiencies in excess of 97%), no wind turbine can operate with similarly high efficiency. The maximum share of the wind’s kinetic energy that can be harnessed by a turbine is 16/27 (59%) of the total flow, a limit known for more than 90 years (Betz 1926).
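The 16/27 limit can be reproduced numerically from the standard actuator-disk result, which is textbook material rather than something derived in this chapter: the power coefficient is C_p = 4a(1 − a)², where a is the axial induction factor (the fractional slowdown of the wind at the rotor). The sketch below searches a grid of exact fractions for the maximum; the grid resolution and names are arbitrary choices for illustration.

```python
from fractions import Fraction


def power_coefficient(a: Fraction) -> Fraction:
    """Actuator-disk power coefficient for axial induction factor a."""
    return 4 * a * (1 - a) ** 2


# Search a in [0, 1/2] on a grid of exact fractions (step 1/3000).
candidates = [Fraction(i, 3000) for i in range(0, 1501)]
best_a = max(candidates, key=power_coefficient)
betz_limit = power_coefficient(best_a)

print(f"optimal induction factor: {best_a}")
print(f"maximum power coefficient: {betz_limit} = {float(betz_limit):.3f}")

# Share of the theoretical limit captured at a 35% conversion efficiency.
print(f"35% efficiency is {0.35 / float(betz_limit):.0%} of the limit")
```

The maximum occurs when the rotor slows the wind by one-third, which is why no amount of scaling can lift a turbine's efficiency toward the values achieved by electric motors or gas-fired furnaces.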

Steam: Boilers, Engines, and Turbines

Harnessing steam generated by the combustion of fossil fuel was a revolutionary shift. Steam provided the first source of inanimate kinetic energy that could be produced at will, scaled up at a chosen site, and adapted to a growing variety of stationary and mobile uses. The evolution began with simple, inefficient steam engines that provided mechanical energy for nearly two centuries of industrialization, and it has reached its performance plateaus with large, highly efficient steam turbines whose operation now supplies most of the world’s electricity. Both converters must be supplied by steam generated in boilers, devices in which combustion converts the chemical energy of fuels to the thermal and kinetic energy of hot (and now also highly pressurized) working fluid.

Boilers

The earliest boilers of the 18th century were simple riveted copper shells where steam was raised at atmospheric pressure. James Watt was reluctant to work with anything but steam at atmospheric pressure (101.3 kPa) and hence his engines had limited efficiency. During the early 19th century, operating pressures began to rise as boilers were designed for mobile use. Boilers had to be built from iron sheets and assume a horizontal cylindrical shape suitable for placement on vessels or wheeled carriages. Oliver Evans and Richard Trevithick, the two pioneers of mobile steam, used such high-pressure boilers (with water filling the space between two cylindrical shells and a fire grate placed inside the inner cylinder) and by 1841 steam pressure in Cornish engines had surpassed 0.4 MPa (Warburton 1981; Teir 2002).

Better designs were introduced as railroad transportation expanded and as steam power conquered shipping. In 1845, William Fairbairn patented a boiler that circulated hot gases through tubes submerged in the water container, and in 1856 Stephen Wilcox patented a design with inclined water tubes placed over the fire. In 1867, Wilcox and George Herman Babcock established Babcock, Wilcox & Company to make and to market water tube boilers, and the company’s design (with numerous modifications aimed at improving safety and increasing efficiency) remained the dominant choice for high-pressure boilers during the remainder of the 19th century (Babcock & Wilcox 2017). In 1882 Edison’s first electricity-generating station in New York relied on four coal-fired Babcock & Wilcox boilers (each capable of about 180 kW) producing steam for six Porter-Allen steam engines (94 kW) that were directly connected to Jumbo dynamos (Martin 1922). By the century’s end, boilers supplying large compound engines worked with pressures of 1.2–1.5 MPa.

Growth of coal-fired electricity generation required larger furnace volumes and higher combustion efficiencies. This dual need was eventually solved by the introduction of pulverized coal-fired boilers and tube-walled furnaces. Before the early 1920s, all power plants burned crushed coal (pieces of 0.5–1.5 cm) delivered by mechanical stokers onto moving grates at the furnace’s bottom. In 1918, the Milwaukee Electric Railway and Light Company made the first tests of burning pulverized coal. The fuel is now fine-milled (with most particles of less than 75 μm in diameter, similar to flour), blown into a burner, and burns at flame temperatures of 1600–1800°C. Tube-walled furnaces (with steel tubes completely covering the furnace’s interior walls and heated by radiation from hot combustion gases) made it easier to increase the steam supply demanded by larger steam turbines.

The large boilers of the late 19th century supplied steam at pressures of no more than 1.7 MPa (standard for the ships of the Royal Navy) and temperature of 300°C; by 1925, pressures had risen to 2–4 MPa and temperatures to 425°C, and by 1955 the maxima were 12.5 MPa and 525°C (Teir 2002). The next improvement came with the introduction of supercritical boilers. At the critical point of 22.064 MPa and 374°C, steam’s latent heat is zero and its specific volume is the same as liquid or gas; supercritical boilers operate above that point where there is no boiling and water turns instantly into steam (a supercritical fluid). This process was patented by Mark Benson in 1922 and the first small boiler was built five years later, but large-scale adoption of the design came only with the introduction of commercial supercritical units during the 1950s (Franke 2002).

The first supercritical boiler (31 MPa and 621°C) was built by Babcock & Wilcox and GE in 1957 at the Philo 6 unit in Ohio, and the design rapidly diffused during the 1960s and 1970s (ASME 2017; Franke and Kral 2003). Large power plants are now supplied by boilers producing up to 3,300 t of steam per hour, with pressures mostly between 25 and 29 MPa and steam temperatures up to 605°C and 623°C for reheat (Siemens 2017a). During the 20th century, the trajectories of large boilers were as follows: typical operating pressures rose 17 times (1.7 to 29 MPa), steam temperatures doubled, maximum steam output (kg/s, t/h) rose by three orders of magnitude, and the size of turbogenerators served by a single boiler increased from 2 MW to 1,750 MW, an 875-fold gain.

Stationary Steam Engines

Simple devices demonstrating the power of steam have a long history but the first commercially deployed machine using steam to pump water was patented by Thomas Savery in England only in 1699 (Savery 1702). The machine had no piston, limited work range, and dismal efficiency (Thurston 1886). The first useful, albeit still highly inefficient, steam engine was invented by Thomas Newcomen in 1712 and after 1715 it was used for pumping water from coal mines. Typical Newcomen engines had power of 4–6 kW, and their simple design (operating at atmospheric pressure and condensing steam on the piston’s underside) limited their conversion efficiency to no more than 0.5% and hence restricted their early use only to coal mines with ready on-site supply of fuel (Thurston 1886; Rolt and Allen 1997). Eventually John Smeaton’s improvements doubled that low efficiency and raised power ratings up to 15 kW and the engines were also used for water pumping in some metal mines, but only James Watt’s separate condenser opened the way to better performances and widespread adoption.

The key to Watt’s achievement is described in the opening sentences of his 1769 patent application:

My method of lessening the consumption of steam, and consequently fuel, in fire engines consists of the following principles: First, that vessell in which the powers of steam are to be employed to work the engine, which is called the cylinder in common fire engines, and which I call the steam vessell, must during the whole time the engine is at work be kept as hot as the steam that enters it … Secondly, in engines that are to be worked wholly or partially by condensation of steam, the steam is to be condensed in vessells distinct from the steam vessells or cylinders, although occasionally communicating with them. These vessells I call condensers, and whilst the engines are working, these condensers ought at least to be kept as cold as the air in the neighbourhood of the engines by application of water or other cold bodies. (Watt 1769, 2)

When the extension of the original patent expired in 1800, Watt’s company (a partnership with Matthew Boulton) had produced about 500 engines whose average capacity was about 20 kW (more than five times that of typical contemporary English watermills, nearly three times that of the late 18th-century windmills) and whose efficiency did not surpass 2.0%. Watt’s largest engine rated just over 100 kW but that power was rapidly raised by post-1800 developments that resulted in much larger stationary engines deployed not only in mining but in all sectors of manufacturing, from food processing to metal forging. During the last two decades of the 19th century, large steam engines were also used to rotate dynamos in the first coal-fired electricity-generating stations (Thurston 1886; Dalby 1920; von Tunzelmann 1978; Smil 2005).

The development of stationary steam engines was marked by increases of unit capacities, operating pressures, and thermal efficiencies. The most important innovation enabling these advances was a compound steam engine which expanded high-pressure steam first in two, then commonly in three, and eventually even in four stages in order to maximize energy extraction (Richardson 1886). The design was pioneered by Arthur Woolf in 1803 and the best compound engines of the late 1820s approached a thermal efficiency of 10% and had slightly surpassed it a decade later. By 1876 a massive triple-expansion two-cylinder steam engine (14 m tall with 3 m stroke and 10 m flywheel) designed by George Henry Corliss was the centerpiece of America’s Centennial Exposition in Philadelphia: its maximum power was just above 1 MW and its thermal efficiency reached 8.5% (Thompson 2010; figure 3.4).

Corliss steam engine at America’s Centennial Exposition in Philadelphia in 1876. Photograph from the Library of Congress.

Stationary steam engines became the leading and truly ubiquitous prime movers of industrialization and modernization and their widespread deployment was instrumental in transforming every traditional segment of the newly industrializing economies and in creating new industries, new opportunities, and new spatial arrangements which went far beyond stationary applications. During the 19th century, their maximum rated (nameplate) capacities increased more than tenfold, from 100 kW to the range of 1–2 MW and the largest machines, both in the US and the UK, were built during the first years of the 20th century just at the time when many engineers concluded that low conversion efficiencies made these machines an inferior choice compared to rapidly improving steam turbines (their growth will be addressed next).

In 1902, America’s largest coal-fired power plant, located on the East River between 74th and 75th Streets, was equipped with eight massive Allis-Corliss reciprocating steam engines, each rated at 7.45 MW and driving directly a Westinghouse alternator. Britain’s largest steam engines came three years later. First, London’s County Council Tramway power station in Greenwich installed the first of its 3.5 MW compound engines, nearly as high as it was wide (14.5 m), leading to Dickinson’s (1939, 152) label of “a megatherium of the engine world.” And even larger steam engines were built by Davy Brothers in Sheffield. In 1905, they installed the first of their four 8.9 MW machines in the city’s Park Iron Works, where it was used for hot rolling of steel armor plates for nearly 50 years. Between 1781 and 1905, maximum ratings of stationary steam engines thus rose from 745 W to 8.9 MW, nearly a 12,000-fold gain.

The trajectory of this growth fits almost perfectly a logistic curve with the inflection point in 1911, indicating further capacity doubling by the early 1920s, but even in 1905 it would have been clear to steam engineers that this was not going to happen, that the machine, massive and relatively inefficient, had reached its performance peak. Concurrently, operating pressures increased from just above the atmospheric pressure of Watt’s time (101.3 kPa) to as much as 2.5 MPa in quadruple-expansion machines, almost exactly a 25-fold gain. Highest efficiencies had improved by an order of magnitude, from about 2% for Watt’s machines to 20–22% for the best quadruple-expansion designs, with the reliably attested early 20th-century records of 26.8% for a binary vapor engine in Berlin and 24% for a cross-compound engine in London (Croft 1922). But common best efficiencies were only 15–16%, opening the way for a rapid adoption of steam turbines in electricity generation and in other industrial uses, while in transportation steam engines continued to make important contributions until the 1950s.

Steam Engines in Transportation

Commercialization of steam-powered shipping began in 1802 in England (Patrick Miller’s Charlotte Dundas) and in 1807 in the US (Robert Fulton’s Clermont), the former with a 7.5 kW modified Watt engine. In 1838, Great Western, powered by a 335 kW steam engine, crossed the Atlantic in 15 days. Brunel’s screw-propelled Great Britain rated 745 kW in 1845, and by the 1880s steel-hulled Atlantic steamers had engines of mostly 2.5–6 MW and up to 7.5 MW, the latter reducing the crossing to seven days (Thurston 1886). The famous pre-WWI Atlantic liners had engines with a total capacity of more than 20 MW: Titanic (1912) had two 11.2 MW engines (and a steam turbine), Britannic (1914) had two 12 MW engines (and also a steam turbine). Between 1802 and 1914, the maximum ratings of ship engines rose 1,600-fold, from 7.5 kW to 12 MW.

Steam engines made it possible to build ships of unprecedented capacity (Adams 1993). In 1852 Duke of Wellington, a three-deck 131-gun ship of the line originally designed and launched as sail ship Windsor Castle and converted to steam power, displaced about 5,800 t. By 1863 Minotaur, an iron-clad frigate, was the first naval ship to surpass 10,000 t (10,690 t), and in 1906 Dreadnought, the first battleship of that type, displaced 18,400 t, implying post-1852 exponential growth of 2.1%/year. Packet ships that dominated transatlantic passenger transport between 1820 and 1860 remained relatively small: the displacement of Donald McKay’s packet ship designs grew from the 2,150 t of Washington Irving in 1845 to the 5,858 t of Star of Empire in 1853 (McKay 1928).
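The implied growth rate for naval ship displacement can be checked with a short calculation. This is a minimal sketch that assumes continuous exponential growth between the two endpoints quoted above; the function name `annual_exponential_rate` is illustrative and not taken from the source.

```python
import math

def annual_exponential_rate(start_value, end_value, years):
    """Continuous annual rate r satisfying end = start * exp(r * years)."""
    return math.log(end_value / start_value) / years

# Displacements (in tonnes) quoted in the text
duke_of_wellington_1852 = 5_800
dreadnought_1906 = 18_400

rate = annual_exponential_rate(
    duke_of_wellington_1852, dreadnought_1906, 1906 - 1852
)
print(f"Implied growth: {rate * 100:.1f}%/year")  # prints "Implied growth: 2.1%/year"
```

The same function can be applied to any pair of endpoints in this chapter, with the caveat that a rate derived from two points says nothing about how closely the intermediate values follow an exponential path.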

By that time metal hulls were ascendant. Lloyd’s Register approved their use in 1833, and in 1849 Isambard Kingdom Brunel’s Great Britain was the first iron vessel to cross the Atlantic (Dumpleton and Miller 1974). Inexpensive Bessemer steel was used in the first steel hulls for a decade before the Lloyd’s Register of Shipping accepted the metal as an insurable material for ship construction in 1877. In 1881 Cunard Line’s Servia, the first large transatlantic steel-hull liner, was 157 m long with 15.9 m beam and a 9.8:1 ratio unattainable by a wooden sail ship. The dimensions of future steel liners clustered close to that ratio: Titanic’s (1912) was 9.6. In 1907 Cunard Line’s Lusitania and Mauretania each displaced nearly 45,000 t and just before WWI the White Star Line’s Olympic, Titanic, and Britannic had displacements of about 53,000 t (Newall 2012).

The increase of the maximum displacement by an order of magnitude during roughly five decades (from around 5,000 to about 50,000 t) implies an annual exponential growth rate of about 4.5% as passenger shipping was transformed by the transition from sails to steam engines and from wooden to steel hulls. Only two liners that were launched between the two world wars were much larger than the largest pre-WWI ships (Adams 1993). The Queen Mary in 1934 and Normandie in 1935 displaced, respectively, nearly 82,000 t and almost 69,000 t, while Germany’s Bremen (1929) rated 55,600 t and Italy’s Rex 45,800 t. After WWII, the United States came at 45,400 t in 1952 and France at 57,000 t in 1961, confirming the modal upper limit of great transatlantic liners at between 45,000 t and 57,000 t and forming another sigmoid growth curve (figure 3.5), and also ending, abruptly, more than 150 years of steam-powered Atlantic crossings. I will return to ships in chapter 4, where I will review the growth of transportation speeds.

Logistic curve of the maximum displacement of transatlantic commercial liners, 1849–1961 (Smil 2017a).

Larger ships required engines combining high power with the highest achievable efficiency (in order to limit the amount of coal carried) but inherent limits on steam engine performance opened the way for better prime movers. Between 1904 and 1908 the best efficiencies recorded during the British Marine Engine Trials of the best triple- and quadruple-expansion steam engines ranged between 11% and 17%, inferior to both steam turbines and diesels (Dalby 1920). Steam turbines (which could work with much higher temperatures) and diesel engines began to take over marine propulsion even before WWI. For diesel engines, it was the first successful niche they conquered before they started to power trucks and locomotives (during the 1920s) and automobiles (during the 1930s).

But decades after they began their retreat from shipping, steam engines made a belated and very helpful appearance: they were chosen to power 2,710 WWII Liberty (EC2) class ships built in the US and Canada that were used to carry cargo and troops to Asia, Africa, and Europe (Elphick 2001). These triple-expansion machines were based on an 1881 English design, worked with inlet pressure of 1.5 MPa (steam came from two oil-fired boilers), and could deliver 1.86 MW at 76 rpm (Bourneuf 2008). This important development illustrates a key lesson applicable to many other growth phenomena: the best and the latest may not be the best in specific circumstances. Relatively low-capacity, inefficient, and outdated steam engines were the best choice to win the delivery war. Their building did not strain the country’s limited capacity to produce modern steam turbines and diesel engines for the Navy, and a large number of proven units could be made by many manufacturers (eventually by 18 different companies) inexpensively and rapidly.

Experiments with a high-pressure steam engine suitable to power railroad locomotives began at the same time as the first installations of steam engines in vessels (Watkins 1967). Richard Trevithick’s simple 1803 locomotive was mechanically sound, and many designs were tested during the next 25 years before the commercial success of Robert Stephenson’s Rocket in the UK (winner of the 1829 Rainhill locomotive trials to select the best machine for the Liverpool and Manchester Railway) and The Best Friend of Charleston in the US in 1830 (Ludy 1909). To satisfy the requirements of the Rainhill trials, a locomotive weighing 4.5 tons needed to pull three times its weight at a speed of 10 mph (16 km/h) using a boiler operating at a pressure of 50 lbs/in² (340 kPa). Stephenson’s Rocket, weighing 4.5 t, was the only contestant to meet (and beat) these specifications by pulling 20 t at an average speed of 16 mph.

The approximate power (in hp) of early locomotives can be calculated by multiplying tractive effort (gross train weight in tons multiplied by train resistance, equal to 8 lbs/ton on steel rails) by speed (in miles/h) and dividing by 375. Rocket thus developed nearly 7 hp (about 5 kW) and a maximum of about 12 hp (just above 9 kW); The Best Friend of Charleston performed with the identical power at about 30 km/h. More powerful locomotives were needed almost immediately for faster passenger trains, for heavier freight trains on the longer runs of the 1840s and 1850s, and for the first US transcontinental line that was completed in May 1869; these engines also had to cope with greater slopes on mountain routes.
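The rule of thumb above can be written out as a short calculation. This is a sketch under stated assumptions: it treats the 20 t pulled by Rocket at the Rainhill trials as the gross train weight, and the function name and the horsepower-to-kilowatt constant are illustrative additions rather than part of the source. The divisor 375 follows from the definition of horsepower (550 ft·lbf/s) and the conversion of miles per hour to feet per second.

```python
HP_TO_KW = 0.7457                # one mechanical horsepower in kilowatts
TRAIN_RESISTANCE_LB_PER_TON = 8  # resistance on steel rails, as given in the text

def locomotive_power_hp(gross_weight_tons, speed_mph,
                        resistance_lb_per_ton=TRAIN_RESISTANCE_LB_PER_TON):
    """Approximate power in hp: tractive effort (lb) times speed (mph) over 375."""
    tractive_effort_lb = gross_weight_tons * resistance_lb_per_ton
    # 1 hp = 550 ft*lbf/s and 1 mph = 5280/3600 ft/s, so 550 / (5280/3600) = 375
    return tractive_effort_lb * speed_mph / 375

# Rocket at Rainhill: 20 t at an average speed of 16 mph (assumed gross weight)
rocket_hp = locomotive_power_hp(20, 16)
rocket_kw = rocket_hp * HP_TO_KW
print(f"Rocket: {rocket_hp:.1f} hp, {rocket_kw:.1f} kW")  # prints "Rocket: 6.8 hp, 5.1 kW"
```

The result matches the figure of nearly 7 hp (about 5 kW) quoted for the average trial speed; the higher maximum quoted in the text would correspond to a higher speed or load.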

Many improvements, including uniflow design (Jacob Perkins in 1827), regulating valve gear (George H. Corliss in 1849), and compound engines (introduced during the 1880s and expanding steam in two or more stages), made steam locomotives heavier, more powerful, more reliable, and more efficient (Thurston 1886; Ludy 1909; Ellis 1977). By the 1850s the most powerful locomotive engines had boiler pressures close to 1 MPa and power above 1 MW, implying exponential growth of close to 20%/year spanning two orders of magnitude in about 25 years. Much slower expansion continued for another 90 years, until the steam locomotive innovation ceased by the mid-1940s. By the 1880s the best locomotives had power on the order of 2 MW and by 1945 the maximum ratings reached 4–6 MW. Union Pacific’s Big Boy, the heaviest steam locomotive ever built (548 t), could develop 4.69 MW, Chesapeake & Ohio Railway’s Allegheny (only marginally lighter at 544 t) rated 5.59 MW, and Pennsylvania Railroad’s PRR Q2 (built in Altoona in 1944 and 1945, and weighing 456 t) had peak power of 5.956 MW (E. Harley 1982; SteamLocomotive.com 2017).

Consequently, the growth of maximum power ratings for locomotive steam engines (from 9 kW in 1829 to 6 MW in 1944, a 667-fold gain) was considerably slower than the growth of steam engines in shipping, an expected outcome given the weight limits imposed by the locomotive undercarriage and the bearing capacity of rail beds. Steam boiler pressure increased from 340 kPa in Stephenson’s 1829 Rocket to more than 1 MPa by the 1870s, and peak levels in high-pressure boilers of the first half of the 20th century were commonly above 1.5 MPa (Mallard, record-breaking in its speed of 203 km/h in July 1938, worked with 1.72 MPa) and reached 2.1 MPa in PRR Q2 in 1945, roughly a 6.2-fold gain in 116 years. That makes for a good linear fit with average growth of 0.15 MPa/decade. Thermal efficiencies improved from less than 1% during the late 1820s to 6–7% for the best late 19th and the early 20th-century machines. The best American pre-WWI test results were about 10%, and the locomotives of the Paris-Orleans Railway of France, rebuilt by André Chapelon, reached more than 12% thermal efficiency starting in 1932 (Rhodes 2017). Efficiency growth was thus linear, averaging about 1 percentage point per decade.
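The average linear gain in boiler pressure can be reproduced from the two endpoints given above (340 kPa in 1829 and 2.1 MPa in 1945). This is only an endpoint-to-endpoint slope, not a regression through all intermediate values, and the helper name `linear_gain_per_decade` is a hypothetical one chosen for illustration.

```python
def linear_gain_per_decade(start_value, end_value, start_year, end_year):
    """Average absolute gain per decade between two dated observations."""
    return (end_value - start_value) / (end_year - start_year) * 10

# Boiler pressures in MPa, taken from the text
rocket_1829_mpa = 0.34
prr_q2_1945_mpa = 2.1

pressure_gain = linear_gain_per_decade(rocket_1829_mpa, prr_q2_1945_mpa, 1829, 1945)
fold_gain = prr_q2_1945_mpa / rocket_1829_mpa

print(f"{pressure_gain:.2f} MPa/decade, {fold_gain:.1f}-fold overall")
# prints "0.15 MPa/decade, 6.2-fold overall"
```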

Steam Turbines

Charles Algernon Parsons patented the first practical turbine design in 1884 and immediately built the first small prototype machine with capacity of just 7.5 kW and low efficiency of 1.6% (Parsons 1936). That performance was worse than that of the 1882 steam engine that powered Edison’s first power plant (its efficiency was nearly 2.5%) but improvements followed rapidly. The first commercial orders came in 1888, and in 1890 the first two 75 kW machines (efficiency of about 5%) began to generate electricity in Newcastle. In 1891, a 100 kW, 11% efficient machine for Cambridge Electric Lighting was the first condensing turbine that also used superheated steam (all previous models were exhausting steam against atmospheric pressure, resulting in very low efficiencies).

The subsequent two decades saw exponential growth of turbine capacities. The first 1 MW unit was installed in 1899 at Germany’s Elberfeld station, in 1903 came the first 2 MW machine for the Neptune Bank station near Newcastle, in 1907 a 5 MW 22% efficient turbine in Newcastle-on-Tyne, and in 1912 a 25 MW and roughly 25% efficient machine for the Fisk Street station in Chicago (Parsons 1911). Maximum capacities thus grew from 75 kW to 25 MW in 24 years (a 333-fold increase), while efficiencies improved by an order of magnitude in less than three decades. For comparison, at the beginning of the 20th century the maximum thermal efficiencies of steam engines were 11–17% (Dalby 1920). And power/mass ratios of steam turbines rose from 25 W/kg in 1891 (five times higher than the ratio for contemporary steam engines) to 100 W/kg before WWI. This resulted in compact sizes (and hence easier installations) and in large savings of materials (mostly metals) and lower construction costs.

The last steam engine-powered electricity-generation plant was built in 1905 in London but the obvious promise of further capacity and efficiency gains for steam turbines was interrupted by WWI and, after the postwar recovery, once again by the economic crisis of the 1930s and by WWII. Steam turbogenerators became more common as post-1918 electrification proceeded rapidly in both North America and Europe and US electricity demand rose further in order to supply the post-1941 war economy, but unit capacities of turbines and efficiencies grew slowly. In the US, the first 110 MW unit was installed in 1928 but typical capacities remained overwhelmingly below 100 MW and the first 220 MW unit began generating only in 1953.

But during the 1960s average capacities of new American steam turbogenerators had more than tripled from 175 MW to 575 MW, and by 1965 the largest US steam turbogenerators, at New York’s Ravenswood station, rated 1 GW (1,000 MW) with power/mass ratio above 1,000 W/kg (Driscoll et al. 1964). Capacity forecasts anticipated 2 GW machines by 1980 but reduced growth of electricity demand prevented such growth and by the century’s end the largest turbogenerators (in nuclear power plants) were the 1.5 GW Siemens at Isar 2 nuclear station and the 1.55 GW Alstom unit at Chooz B1 reactor.

The world’s largest unit, Alstom’s 1.75 GW turbogenerator, was scheduled to begin operation in 2019 at France’s Flamanville station, where two 1,382 MW units have been generating electricity since the late 1980s (Anglaret 2013; GE 2017a). The complete trajectory of maximum steam turbine capacities, starting with Parsons’s 7.5 kW machine in 1884 and ending in 2017, shows an increase by five orders of magnitude (and by three orders of magnitude, from 1 MW to 1.5 GW, during the 20th century) and a near-perfect fit for a four-parameter logistic curve with the inflection year in 1963 and with unlikely prospects for any further increase (figure 3.6).

Growth of maximum steam turbine capacities since 1884. Five-parameter logistic curve, inflection year in 1954, asymptote has been already reached. Data from Smil (2003, 2017a).

As already noted in the section describing the growth of boilers, working steam pressure rose about 30-fold, from just around 1 MPa for the first commercial units to as high as 31 MPa for supercritical turbines introduced in the 1960s (Leyzerovich 2008). Steam temperatures rose from 180°C for the first units to more than 600°C for the first supercritical units around 1960. Plans for power plants with ultra-supercritical steam conditions (pressure of 35 MPa and temperatures of 700/720°C) and with efficiency of up to 50% were underway in 2017 (Tumanovskii et al. 2017). Coal-fired units (boiler-turbine-generator) used to dominate the largest power plants built after WWII, and at the beginning of the 21st century they still generated about 40% of the world’s electricity. But after a temporary increase, caused by China’s extraordinarily rapid construction of new coal-fired capacities after the year 2000, coal is now in retreat. Most notably, the share of US coal-fired generation declined from 50% in the year 2000 to 30% in 2017 (USEIA 2017a).

US historical statistics allow for a reliable reconstruction of average conversion efficiency (heat rates) at thermal power plants (Schurr and Netschert 1960; USEIA 2016). Rates rose from less than 4% in 1900 to nearly 14% in 1925, to 24% by 1950, surpassed 30% by 1960 but soon leveled off and in 2015 the mean was about 35%, a trajectory closely conforming to a logistic curve with the inflection point in 1931 (figure 3.7). Flamanville’s 1.75 GW Arabelle unit has design efficiency of 38% and its power/mass ratio is 1,590 W/kg (Modern Power Systems 2010). Further substantial growth of the highest steam turbine capacities is unlikely in any Western economy where electricity demand is, at best, barely increasing or even declining, and while the modernizing countries in Asia, Latin America, and Africa need to expand their large-scale generating capacities, they will increasingly rely on gas turbines as well as on new PV and wind capacities.

Logistic growth (inflection year in 1933, asymptote at 36.9%) of average efficiency of US thermal electricity-generating plants. Data from Schurr and Netschert (1960) and USEIA (2016).

Although diesel engines have dominated shipping for decades, steam turbines, made famous by powering record-breaking transatlantic liners during the early decades of the 20th century, have made some contributions to post-1950 waterborne transport. In 1972 Sea-Land began to use the first (SL-7 class) newly built container ships that were powered by GE 45 MW steam turbines. Diesels soon took over that sector, leaving tankers transporting liquefied natural gas (LNG) as the most important category of shipping relying on steam turbines. LNG tankers use boil-off gas (0.1–0.2% of the carrier’s capacity a day; they also use bunker fuel) to generate steam. America’s large aircraft carriers are also powered by turbines supplied by steam from nuclear reactors (ABS 2014).

Internal Combustion Engines

Despite their enormous commercial success and epoch-making roles in creating modern, high-energy societies, steam engines, inherently massive and with low power/mass ratio (the combination that could be tolerated for stationary designs and with low fuel costs), could not be used in any applications that required relatively high conversion efficiency and high power/mass ratio, limiting their suitability for mobile uses to rails and shipping and excluding their adoption for flight. High efficiency and high power/mass ratio were eventually supplied by steam turbines, but the requirements of mechanized road (and off-road) transport were met by two new kinds of 19th-century machines, both of them internal combustion engines. Gasoline-fueled Otto-cycle engines power most of the world’s passenger cars and other light-duty vehicles and diesel engines are used for trucks and other heavy machinery and also for many European automobiles.

Reciprocating gasoline-fueled engines were also light enough to power propeller planes and diesels eventually displaced steam in railroad freight and marine transport. Gas turbines are the only 20th-century addition to internal combustion machines. These high power/mass designs provide the only practical way to power long-distance mass-scale global aviation, and they have become indispensable prime movers for important industrial and transportation systems (chemical syntheses, pipelines) as well as the most efficient, and flexible, generators of electricity.

Gasoline Engines

Steam engines rely on external combustion (generating steam in boilers before introducing it into cylinders), while internal combustion engines (fueled by gasoline or diesel fuel) combine the generation of hot gases and conversion of their kinetic energy into reciprocating motion inside pressurized cylinders. Developing such machines presented a greater challenge than did the commercialization of steam engines and hence it was only in 1860, after several decades of failed experiments and unsuccessful designs, that Jean Joseph Étienne Lenoir patented the first viable internal combustion engine. This heavy horizontal machine, powered by an uncompressed mixture of illuminating gas and air, had a very low efficiency (only about 4%) and was suitable only for stationary use (Smil 2005).

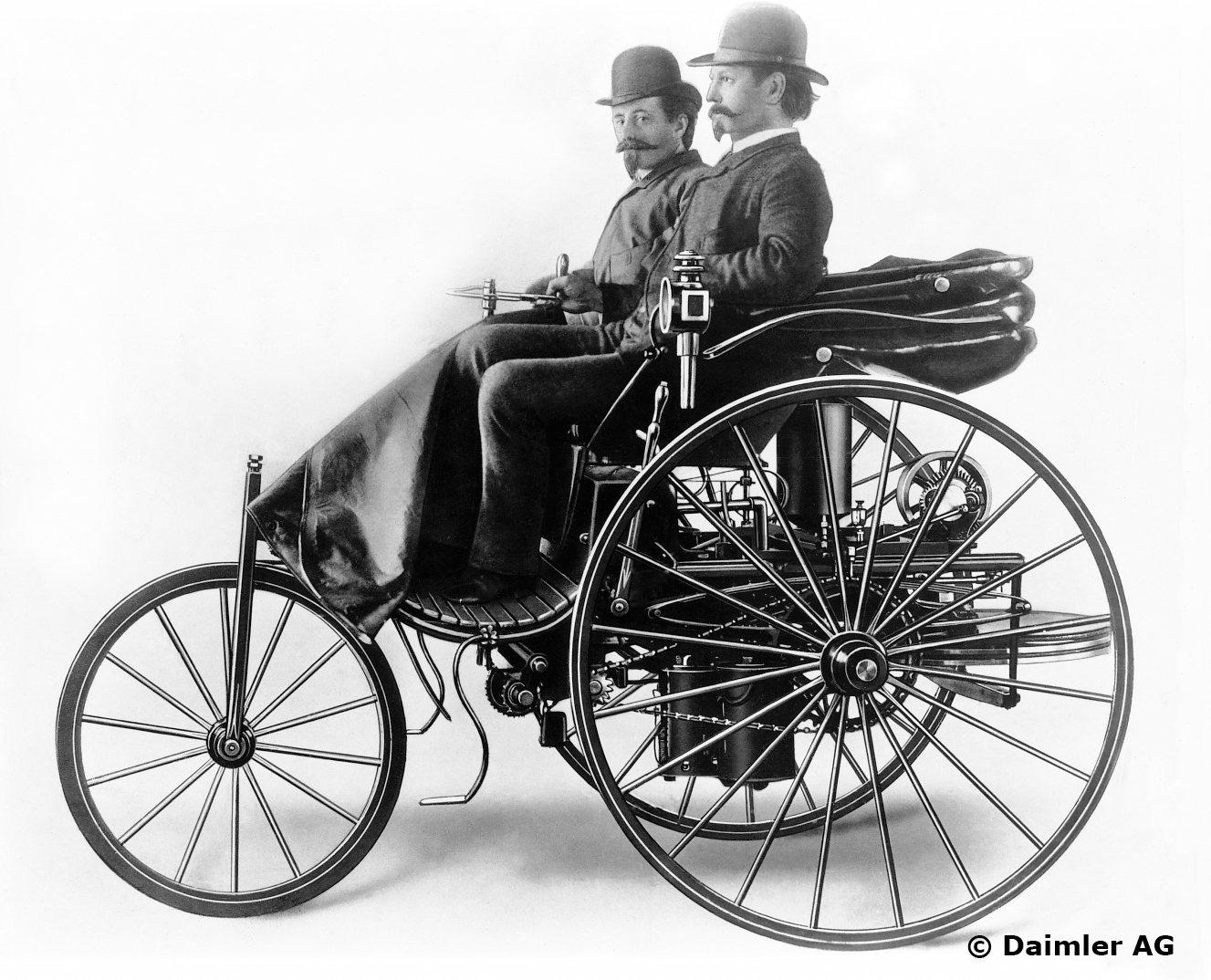

Seventeen years later, in 1877, Nicolaus August Otto patented a relatively light, low-power (6 kW), low-compression (2.6:1) four-stroke engine that was also fueled by coal gas, and nearly 50,000 units were eventually bought by small workshops (Clerk 1909). The first light gasoline-fueled engine suitable for mobile use was designed in Stuttgart in 1883 by Gottlieb Daimler and Wilhelm Maybach, both former employees of Otto’s company. In 1885 they tested its version on a bicycle (the prototype of a motorcycle) and in 1886 they mounted a larger (820 W) engine on a wooden coach (Walz and Niemann 1997). In one of the most remarkable instances of independent technical innovation, Karl Benz was concurrently developing his gasoline engine in Mannheim, just two hours by train from Stuttgart. By 1882 Benz had a reliable, small, horizontal gasoline-fueled engine, and then proceeded to develop a four-stroke machine (500 W, 250 rpm, mass of 96 kg) that powered the first public demonstration of a three-wheeled carriage in July 1886 (figure 3.8).

Carl Benz (with Josef Brecht) at the wheel of his patent motor car in 1887. Photograph courtesy of Daimler AG, Stuttgart.

And it was Benz’s wife, Bertha, who made, without her husband’s knowledge, the first intercity trip with the three-wheeler in August of 1888 when she took their two sons to visit her mother, driving some 104 km to Pforzheim. Daimler’s high-rpm engine, Benz’s electrical ignition, and Maybach’s carburetor provided the functional foundations of automotive engines but the early motorized wooden carriages which they powered were just expensive curiosities. Prospects began to change with the introduction of better engines and better overall designs. In 1890 Daimler and Maybach produced their first four-cylinder engine, and their machines kept improving through the 1890s, winning the newly popular car races. In 1891 Emile Levassor, a French engineer, combined the best Daimler-Maybach engine with his newly designed, car-like rather than carriage-like, chassis. Most notably, he moved the engine from under the seats to a position in front of the driver (crankshaft parallel with the vehicle’s long axis), a design that later helped aerodynamic body shaping (Smil 2005).

During the last decade of the 19th century, cars became faster and more convenient to operate, but they were still very expensive. By 1900 cars benefited from such technical improvements as Robert Bosch’s magneto (1897), air cooling, and front-wheel drive. In 1900 Maybach designed a vehicle that was called the “first modern car in all essentials” (Flink 1988, 33): Mercedes 35, named after the daughter of Emil Jellinek who owned a Daimler dealership, had a large 5.9 L, 26 kW engine whose aluminum block and honeycomb radiator lowered its weight to 230 kg. Other modern-looking designs followed in the early years of the 20th century but the mass-market breakthrough came only in 1908 with Henry Ford’s Model T, the first truly affordable vehicle (see figure 1.2). Model T had a 2.9 L, 15 kW (20 hp) engine weighing 230 kg and working with a compression ratio of 4.5:1.

Many cumulative improvements were made during the 20th century to every part of the engine. Beginning in 1912, dangerous cranks were replaced by electric starters. Ignition was improved by Gottlob Honold’s high-voltage magneto with a new spark plug in 1902, and more durable Ni-Cr spark plugs were eventually replaced by copper and then by platinum spark plugs. Band brakes were replaced by curved drum brakes and later by disc brakes. The violent engine knocking that accompanied higher compression was eliminated first in 1923 by adding tetraethyl lead to gasoline, a choice that later proved damaging both to human health and to the environment (Wescott 1936).

Two variables are perhaps most revealing in capturing the long-term advances of automotive gasoline engines: their growing power (when comparing typical or best-selling designs, not the maxima for high-performance race cars) and their improving power/mass ratio (whose rise has been the result of numerous cumulative design improvements). Power ratings rose from 0.5 kW for the engine powering Benz’s three-wheeler to 6 kW for Ford’s Model A introduced in 1903, to 11 kW for Model N in 1906, 15 kW for Model T in 1908, and 30 kW for its successor, a new Model A that enjoyed record sales between 1927 and 1931. Ford engines powering the models introduced a decade later, just before WWII, ranged from 45 to 67 kW.

Larger post-1950 American cars had engines capable mostly of more than 80 kW and by 1965 Ford’s best-selling fourth-generation Fairlane was offered with engines of up to 122 kW. Data on the average power of newly sold US cars are available from 1975 when the mean was 106 kW; it declined (due to a spike in oil prices and a sudden preference for smaller vehicles) to 89 kW by 1981, but then (as oil prices retreated) it kept on rising, passing 150 kW in 2003 and reaching the record level of nearly 207 kW in 2013, with the 2015 mean only slightly lower at 202 kW (Smil 2014b; USEPA 2016b). During the 112 years between 1903 and 2015 the average power of light-duty vehicles sold in the US thus rose roughly 34 times. The growth trajectory was linear, average gain was 1.75 kW/year, and notable departures from the trend in the early 1980s and after 2010 were due, respectively, to high oil prices and to more powerful (heavier) SUVs (figure 3.9).
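The two summary figures in this paragraph (a roughly 34-fold rise and an average gain of 1.75 kW/year) follow directly from the endpoints. The sketch below assumes, as the text does, that the 6 kW Model A of 1903 stands in for the 1903 average and uses the 202 kW mean for 2015; the variable names are illustrative.

```python
# Endpoints taken from the text
power_1903_kw = 6    # Ford Model A, used as the 1903 reference point
power_2015_kw = 202  # mean power of new US light-duty vehicles in 2015

years = 2015 - 1903                                        # 112 years
multiple = power_2015_kw / power_1903_kw                   # overall multiple
gain_per_year = (power_2015_kw - power_1903_kw) / years    # average linear gain

print(f"{multiple:.0f}-fold rise, {gain_per_year:.2f} kW/year over {years} years")
# prints "34-fold rise, 1.75 kW/year over 112 years"
```

Because the 1903 value is a single model rather than a fleet average, the slope is best read as an approximation of the long-term trend rather than a fitted parameter.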

Linear growth of average power of US passenger vehicles, 1903–2020. Data from Smil (2014b) and USEPA (2016b).

The principal reason for the growing power of gasoline engines in passenger cars has been the increasing vehicle mass that has taken place despite the use of such lighter construction materials as aluminum, magnesium, and plastics. Higher performance (faster acceleration, higher maximum speeds) was a secondary factor. With few exceptions (German Autobahnen being the best example), speed limits obviate any need for machines capable of cruising faster than the posted maxima—but even small cars (such as Honda Civic) can reach maxima of up to or even above 200 km/h, far in excess of what is needed to drive lawfully.

Weight gains are easily illustrated by the long-term trend of American cars (Smil 2010b). In 1908 the curb weight of Ford’s revolutionary Model T was just 540 kg and three decades later the company’s best-selling Model 74 weighed almost exactly twice as much (1,090 kg). Post-WWII weights rose with the general adoption of roomier designs, automatic transmissions, air conditioning, audio systems, better insulation, and numerous small servomotors. These are assemblies made of a small DC motor, a gear-reduction unit, a position-sensing device (often just a potentiometer), and a control circuit: servomotors are now used to power windows, mirrors, seats, and doors.

As a result, by 1975 (when the US Environmental Protection Agency began to monitor average specifications of newly sold vehicles) the mean inertia weight of US cars and light trucks (curb weight plus 300 lbs, or 136 kg) reached 1.84 t. Higher oil prices helped to lower the mean to 1.45 t by 1981, but once they collapsed in 1985 cars began to gain weight and the overall trend was made much worse by the introduction of SUVs and by the increasing habit of using pickups as passenger cars. By 2004 the average mass of newly sold passenger vehicles reached a new record of 1.86 t, which was slightly surpassed (1.87 t) in 2011, and by 2016 this mean had declined only marginally to 1.81 t (Davis et al. 2016; USEPA 2016b). The curb weight of the average American light-duty vehicle had thus increased roughly three times in a century.

Until the 1960s both European and Japanese cars weighed much less than US vehicles but since the 1970s their average masses have shown a similarly increasing trend. In 1973 the first Honda Civic imported to North America weighed just 697 kg, while the 2017 Civic (LX model, with automatic transmission and standard air conditioning) weighs 1,247 kg, that is about half a tonne (nearly 80%) more than 44 years ago. Europe’s popular small post-WWII cars weighed just over half a tonne (Citroen 2 CV 510 kg, Fiat Topolino 550 kg), but the average curb weight of European compacts reached about 800 kg in 1970 and about 1.2 t in the year 2000 (WBCSD 2004). Subsequently, average mass has grown by roughly 100 kg every five years and the EU car makers now have many models weighing in excess of 1.5 t (Cuenot 2009; Smil 2010b). And the increasing adoption of hybrid drives and electric vehicles will not lower typical curb weights because these designs have to accommodate either more complicated power trains or heavy battery assemblies: the Chevrolet Volt hybrid weighs 1.72 t, the electric Tesla S as much as 2.23 t.

The power/mass ratio of Otto’s heavy stationary horizontal internal combustion engine was less than 4 W/kg, by 1890 the best four-stroke Daimler-Maybach automotive gasoline engine reached 25 W/kg, and in 1908 Ford’s Model T delivered 65 W/kg. The ratio continued to rise to more than 300 W/kg during the 1930s and by the early 1950s many engines (including those in the small Fiat 8V) were above 400 W/kg. By the mid-1950s Chrysler’s powerful Hemi engines delivered more than 600 W/kg and the ratio reached a broad plateau in the range of 700–1,000 W/kg during the 1960s. For example, in 1999 Ford’s Taunus engine (high performance variant) delivered 830 W/kg, while in 2016 Ford’s best-selling car in North America, Escape (a small SUV), was powered by a 2.5 L, 125 kW Duratec engine and its mass of 163 kg resulted in a power/mass ratio of about 770 W/kg (Smil 2010b). This means that the power/mass densities of the automotive gasoline engine have increased about 12-fold since the introduction of the Model T, and that more than half of that gain took place after WWII.
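The power/mass ratio is simply rated power divided by engine mass, and the Escape example can be reproduced in a few lines. This is a minimal sketch: the function name is hypothetical, and the inputs are the figures quoted in the paragraph above (125 kW and 163 kg for the Duratec, 65 W/kg for the Model T).

```python
def power_mass_ratio(power_kw: float, mass_kg: float) -> float:
    """Return specific power in W/kg from rated power (kW) and engine mass (kg)."""
    return power_kw * 1000.0 / mass_kg

# 2016 Ford Escape, 2.5 L Duratec engine (figures from the text).
escape_w_per_kg = power_mass_ratio(125.0, 163.0)

# 1908 Ford Model T ratio as quoted in the text (W/kg).
model_t_w_per_kg = 65.0

# Multiple of the Model T ratio achieved by the modern engine.
fold_increase = escape_w_per_kg / model_t_w_per_kg

print(f"Escape: {escape_w_per_kg:.0f} W/kg")
print(f"increase since Model T: {fold_increase:.1f}-fold")
```

The computed ratio rounds to the "about 770 W/kg" cited in the text, and the multiple rounds to the 12-fold gain.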

Before leaving the gasoline engines I should note the recent extreme ratings of high-performance models and compare them to those of the car that was at the beginning of their evolution, Maybach’s 1901 Mercedes 35 (26 kW engine, power/mass ratio of 113 W/kg). In 2017 the world’s most powerful car was the Swedish limited-edition Megacar Koenigsegg Regera with a 5 L V8 engine rated at 830 kW and electric drive rated at 525 kW, giving it the actual total combined propulsion of 1.11 MW—while the top Mercedes (AMG E63-S) rated “only” 450 kW. This means that the maximum power of high-performance cars has risen about 42-fold since 1901 and that the Regera has more than eight times the power of the Honda Civic.

Finally, a brief note about the growth of gasoline-fueled reciprocating engines in flight. Their development began with a machine designed by Orville and Wilbur Wright and built by their mechanic Charles Taylor in 1903 in the brothers’ bicycle workshop in Dayton, Ohio (Taylor 2017). Their four-cylinder 91 kg horizontal engine was to deliver 6 kW but eventually it produced 12 kW, corresponding to a power/mass ratio of 132 W/kg. Subsequent improvement of aeroengines was very rapid. Léon Levavasseur’s 37 kW Antoinette, the most popular pre-WWI eight-cylinder engine, had a power/mass ratio of 714 W/kg, and the American Liberty engine, a 300 kW mass-produced machine for fighter aircraft during WWI, delivered about 900 W/kg (Dickey 1968). The most powerful pre-WWII engines were needed to power Boeing’s 1936 Clipper, a hydroplane that made it possible to fly, in stages, from the western coast of the United States to East Asia. Each of its four radial Wright Twin Cyclone engines was rated at 1.2 MW and delivered 1,290 W/kg (Gunston 1986).

High-performance aeroengines reached their apogee during WWII. American B-29 (Superfortress) bombers were powered by four Wright R-3350 Duplex Cyclone radial 18-cylinder engines whose versions were rated from 1.64 to 2.76 MW and had a high power/mass ratio in excess of 1,300 W/kg, an order of magnitude higher than the Wrights’ pioneering design (Gunston 1986). During the 1950s Lockheed’s L-1049 Super Constellation, the largest airplane used by airlines for intercontinental travel before the introduction of jetliners, used the same Wright engines. After WWII spark-ignited gasoline engines in heavy-duty road (and off-road) transport were almost completely displaced by diesel engines, which also dominate shipping and railroad freight.

Diesel Engines

Several advantageous differences set Diesel’s engines apart from Otto-cycle gasoline engines. Diesel fuel has nearly 12% higher energy density compared to gasoline, which means that, everything else being equal, a car can go further on a full tank. But diesels are also inherently more efficient: the self-ignition of heavier fuel (no sparking needed) requires much higher compression ratios (commonly twice as high as in gasoline engines) and that results in a more complete combustion (and hence a cooler exhaust gas). Longer stroke and lower rpm reduce frictional losses, and diesels can operate with a wide range of very lean mixtures, two to four times leaner than those in a gasoline engine (Smil 2010b).

Rudolf Diesel began to develop a new internal combustion engine during the early 1890s with two explicit goals: to make a light, small (no larger than a contemporary sewing machine), and inexpensive engine whose use by independent entrepreneurs (machinists, watchmakers, repairmen) would help to decentralize industrial production and achieve unprecedented efficiency of fuel conversion (R. Diesel 1913; E. Diesel 1937; Smil 2010b). Diesel envisaged the engine as the key enabler of industrial decentralization, a shift of production from crowded large cities where, he felt strongly, it was based on inappropriate economic, political, humanitarian, and hygienic grounds (R. Diesel 1893).

And he went further, claiming that such decentralized production would solve the social question as it would engender workers’ cooperatives and usher in an age of justice and compassion. But his book summarizing these ideas (and ideals)—Solidarismus: Natürliche wirtschaftliche Erlösung des Menschen (R. Diesel 1903)—sold only 300 of 10,000 printed copies and the eventual outcome of the widespread commercialization of Diesel’s engines was the very opposite of his early social goals. Rather than staying small and serving decentralized enterprises, massive diesel engines became one of the principal enablers of unprecedented industrial centralization, mainly because they reduced transportation costs, previously decisive determinants of industrial location, to such an extent that an efficient mass-scale producer located on any continent could serve the new, truly global, market.

Diesels remain indispensable prime movers of globalization, powering crude oil and LNG tankers, bulk carriers transporting ores, coal, cement, and lumber, container ships (the largest ones now capable of moving more than 20,000 standard steel containers), freight trains, and trucks. They move fuels, raw materials, and food among five continents and they helped to make Asia in general, and China in particular, the center of manufacturing serving the worldwide demand (Smil 2010b). If you were to trace everything you wear, and every manufactured object you use, you would find that all of them were moved multiple times by diesel-powered machines.

Diesel’s actual accomplishments also fell short of his (impossibly ambitious) efficiency goal, but he still succeeded in designing and commercializing an internal combustion engine with the highest conversion efficiency. He did so starting with the engine’s prototype, which was constructed with a great deal of support from Heinrich von Buz, general director of the Maschinenfabrik Augsburg, and Friedrich Alfred Krupp, Germany’s leading steelmaker. On February 17, 1897, Moritz Schröter, a professor of theoretical engineering at Technische Universität in Munich, was in charge of the official certification test that was to set the foundation for the engine’s commercial development. While working at its full power of 13.5 kW (at 154 rpm and pressure of 3.4 MPa), the engine’s thermal efficiency was 34.7% and its mechanical efficiency reached 75.5% (R. Diesel 1913).
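Net efficiency is the product of thermal efficiency and mechanical efficiency, so the two certification figures can be combined directly. The sketch below assumes that standard relationship and uses only the test values quoted above; the variable names are illustrative.

```python
# Certification test figures from February 17, 1897 (as quoted in the text).
thermal_efficiency = 0.347      # share of fuel energy converted to work in the cylinder
mechanical_efficiency = 0.755   # share of that work delivered at the shaft

# Net efficiency, assumed here to be the simple product of the two.
net_efficiency = thermal_efficiency * mechanical_efficiency

print(f"net efficiency: {net_efficiency * 100:.1f}%")
```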

As a result, the net efficiency was 26.2%, about twice that of contemporary Otto-cycle machines. Diesel was justified when he wrote to his wife that nobody’s engine had achieved what his design did. Before the end of 1897 the engine’s net efficiency reached 30.2%—but Diesel was wrong when he claimed that he had a marketable machine whose development would unfold smoothly. The efficient prototype required a great deal of further development and the conquest of commercial markets began in 1903, and it was not on land but on water, with a small diesel engine (19 kW) powering a French canal boat. Soon afterwards came Vandal, an oil tanker operating on the Caspian Sea and on the Volga with three-cylinder engines rated at 89 kW (Koehler and Ohlers 1998), and in 1904 the world’s first diesel-powered station began to generate electricity in Kiev.

The first oceangoing ship equipped with diesel engines (two eight-cylinder four-stroke 783 kW machines) was the Danish Selandia, a freight and passenger carrier that made its maiden voyage to Tokyo and back to Copenhagen in 1911 and 1912 (Marine Log 2017). In 1912 Fionia became the first diesel-powered transatlantic steamer of the Hamburg-American Line. Diesel adoption proceeded steadily after WWI, got really underway during the 1930s, and accelerated after WWII with the launching of large crude oil tankers during the 1950s. A decade later came large container ships as marine diesel engines increased both in capacity and efficiency (Smil 2010b).

In 1897 Diesel’s third prototype engine had a capacity of 19.8 bhp (14.5 kW), and in 1912 Selandia’s two engines were rated at 2,100 bhp. In 1924 Sulzer’s engine for ocean liners was rated at 3,250 bhp, and a 4,650 bhp machine followed in 1929 (Brown 1998). The largest pre-WWII marine diesels were around 6,000 bhp, and by the late 1950s they rated 15,000 bhp. In 1967 a 12-cylinder engine was capable of 48,000 hp, in 2001 MAN B&W-Hyundai’s machine reached 93,360 bhp, and in 2006 Hyundai Heavy Industries built the first diesel rated at more than 100,000 bhp: 12K98MC, 101,640 bhp, that is 74.76 MW (MAN Diesel 2007).
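The conversion of the record rating from brake horsepower to megawatts can be sketched as follows. The text does not state which horsepower definition is used; the sketch assumes metric horsepower (735.49875 W), because that value reproduces the 74.76 MW quoted for the 12K98MC. The function and constant names are hypothetical.

```python
# Assumption: ratings are in metric horsepower (1 hp = 735.49875 W).
# The text does not name the unit definition; this one matches the quoted 74.76 MW.
WATTS_PER_METRIC_HP = 735.49875

def bhp_to_mw(bhp: float) -> float:
    """Convert a brake horsepower rating to megawatts (metric horsepower assumed)."""
    return bhp * WATTS_PER_METRIC_HP / 1e6

rating_12k98mc_mw = bhp_to_mw(101_640)   # Hyundai-built 12K98MC, 2006
growth_multiple = 101_640 / 19.8         # versus Diesel's 1897 third prototype

print(f"12K98MC: {rating_12k98mc_mw:.2f} MW")
print(f"growth since 1897: about {growth_multiple:,.0f}-fold")
```

Using the mechanical (imperial) horsepower of about 745.7 W instead would give a somewhat higher figure, which is why the unit assumption matters when comparing ratings across sources.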

That machine held the record of the largest diesel for just six months until September 2006 when Wärtsilä introduced a new 14-cylinder engine rated at 80.1 MW (Wärtsilä 2009). Two years later modifications of that Wärtsilä engine increased the maximum rating to 84.42 MW and MAN Diesel now offers an 87.22 MW engine with 14 cylinders at 97 rpm (MAN Diesel 2018). These massive engines power the world’s largest container ships, with OOCL Hong Kong, operating since 2017, the current record holder (figure 3.10). Large-scale deployment of new machines saw efficiencies approaching 40% after WWI, in 1950 a MAN engine reached 45% efficiency, and the best two-stroke designs now have efficiencies of 52%, with four-stroke diesels a bit behind at 48%. The best diesels thus surpass gas turbines (about 40%), although gas turbines in combined cycle, using exhaust gas in a steam turbine, now go up to 61%.

OOCL Hong Kong, the world’s largest container ship in 2019, carries an equivalent of 21,413 twenty-foot standard units. Diesels and container vessels have been the key prime movers of globalization. Photo available at wikimedia.

On land, diesels were first deployed in heavy locomotives and trucks. The first diesel locomotive entered regular service in 1913 in Germany. Powered by a Sulzer four-cylinder two-stroke V-engine, it could sustain a speed of 100 km/h. After WWI yard switching locomotives were the first railroad market dominated by diesels (steam continued to power most passenger trains) but by the 1930s the fastest trains were diesel-powered. In 1934 the streamlined stainless-steel Pioneer Zephyr had a 447 kW, eight-cylinder, two-stroke diesel-electric drive that made it possible to average 124 km/h on the Denver-Chicago run (Ellis 1977; ASME 1980).

By the 1960s steam engines in freight transport (except in China and India) were replaced by diesels. Ratings of American locomotive diesel engines increased from 225 kW in 1924 for the first US-made machine to 2 MW in 1939, and the latest GE Evolution Series engines have power ranging from 2.98 to 4.62 MW (GE 2017b). But modern locomotive diesels are hybrids using diesel-electric drive: the engine’s reciprocating motion is not transmitted to wheels but generates electricity for motors driving the train (Lamb 2007). Some countries converted all of their train traffic to electricity and all countries use electric drive for high-speed passenger trains (Smil 2006b).