Chapter 6

Identity and Access Control

Chapter 6 deals with two sides of the same coin: identity management and access control. The essence of information risk mitigation is ensuring that only the right people and processes can read, view, use, change, or remove any of our sensitive information assets, or use any of our most important information-based business processes. We also require the ability to prove who or what touched what information asset and when, and what happened when they did. We'll see how to authenticate that a subject user (be that a person or a software process) is who they claim to be; use predetermined policies to decide if they are authorized to do what they are attempting to do; and build and maintain accounting or audit information that shows us who asked to do what, when, where, and how. Chapter 6 combines decades of theory-based models and ideas with cutting-edge how-to insight; both are vital to an SSCP on the job.

Identity and Access: Two Sides of the Same CIANA+PS Coin

At the heart of all information security (whether Internet-based or not) is the same fundamental problem. Information is not worth anything if it doesn't move, get shared with others, and get combined with other information to make decisions happen. But to keep that information safe and secure, to meet all of our company's CIANA+PS needs, we usually cannot share that information with just anybody! The flip side of that also tells us that in all likelihood, any one person will not have a valid “need to know” for all of the information our organization has or uses. Another way to think about that is that if you do not know who is trying to access your information, you cannot decide whether to grant or deny their attempt.

Each one of the elements of the CIANA+PS security paradigm—which embraces confidentiality, integrity, availability, nonrepudiation, authentication, privacy, and safety—has this same characteristic. Each element must look at the entire universe of people or systems, and separate out those we trust with access to our information from those we do not, while at the same time deciding what to let those trusted people or systems do with the information we let them have access to.

What do we mean by “have access to” an object? In general, access to an object can consist of being able to do one or more of the following kinds of functions:

- Read part or all of the contents of the object

- Read metadata about the object, such as its creation and modification history, its location in the system, or its relationships with other objects

- Write to the object or its metadata, modifying it in whole or part

- Delete part or all of the object, or part or all of its metadata

- Load the object as an executable process (as an executable program, a process, process thread, or code injection element such as a dynamic link library [DLL file])

- Receive data or metadata from the object, if the object can perform such services (i.e., if the object is a server of some kind)

- Read, modify, or delete security or access control information pertaining to the object

- Invoke another process to perform any of these functions on the object

- And so on…

This brings us right to the next question: who, or what, is the thing that is attempting to access our information, and how do we know that they are who they claim to be? It used to be that this identity question focused on people, software processes or services, and devices. The incredible growth in Web-based services complicates this further, and we have yet to fully understand what it will mean to have Internet of Things (IoT) devices, artificial intelligences, and robots of all kinds join our digital universe of subjects, that is, the entities requesting access to objects.

Our organization's CIANA+PS needs are at risk if unauthorized subjects—be they people or processes—can execute any or all of those functions in ways that disrupt our business logic:

- Confidentiality is violated if any process or person can read, copy, redistribute, or otherwise make use of data we deem private, or of competitive advantage worthy of protection as trade secrets, proprietary, or restricted information.

- Integrity is lost if any person or process can modify data or metadata, or execute processes out of sequence or with bad input data.

- Authorization (the granting of permission to use the data) cannot make sense without authentication, that is, without a way to validate to whom or what we are granting that permission.

- Nonrepudiation cannot exist if we cannot validate or prove that the person or process in question is in fact who they claim to be and that their identity hasn't been spoofed by a man-in-the-middle kind of attacker.

- Availability rapidly dwindles to zero if nothing stops data or metadata from unauthorized modification or deletion.

- Privacy can be violated (or our systems fail to be compliant with privacy requirements) if data can be moved, copied, viewed, or used in unauthorized ways.

- Safety can be compromised if data or metadata can be altered or removed.

The increasing use of remote collaboration technologies in telemedicine highlights these concerns. If clinical workers cannot trust that the patient they think they are treating, the lab results they believe they are reading, and the rest of that patient's treatment record have all been protected from unauthorized access, they lose the confidence they need to know that they are diagnosing and treating the right patient for the right reasons.

One more key ingredient needs to be added as we consider the vexing problems of managing and validating identities and protecting our information assets and resources from unauthorized use or access: the question of trust. In many respects, the old adage “it's not what you know, it's who you know” needs to be updated: it's not what you know, but how you know how much you can trust what you think you know, that becomes the heart of identity and access management concerns.

Identity Management Concepts

Identity management actually starts with the concept of an entity, which is a person, object, device, or software unit that can be uniquely and unambiguously identified. Each entity, whether it be human or nonhuman, can also have many different identities throughout the existence of that entity. Many of these identities are legitimate, lawful, and in fact necessary to create and use; some may not be.

Consider a human being, for example. Most human names are quite common, even when every part of the full name is taken into account. On top of this, many information systems, such as those of credit reporting agencies, carry different versions of individuals' names, through errors, abbreviations, or changes in usage. This can happen if I choose to use my full middle name, only its initial, or omit it entirely on an account application or transaction. Resolving the ambiguity often takes far more data:

- Biometric data, such as fingerprints, retina scans, gait, facial measurements, and voiceprints

- DNA analysis

- Historical data regarding places of residence, schooling, or employment

- Circumstances of birth, such as place, parents, attending physician, or midwife

- Marriage, divorce, or other domestic partnership histories, as well as information regarding any children (naturally parented, foster, adopted, or other)

- Photographic and video images or recordings

For any given human, that can amount to a lot of data, far too much to make for a useful, practical way to control access to a facility or an information system. This gives rise to defining an identity as:

- A label or data element assigned to an entity, by another entity,

- for the purpose of managing and controlling that entity's access to, use of, or enjoyment of the systems, facilities, or information,

- which are under the administrative control of the identity-granting entity.

Note the distinctions here: entities are created, identities are assigned. And the process of granting permissions to enter, use, view, modify, learn from, or enjoy the resources or assets controlled by the identity-granting organization is known as entitlement. To add one further definition, a credential is a document or dataset that attests to the correctness, completeness, and validity of an identity's claim to be who and what that identity represents, at a given moment in time, and for the purposes associated with that identity.

We need a way to associate an identity, in clear and unambiguous ways, with exactly one such person, device, software process or service, or other subject, whether a part of our system or not. In legal terms, we need to avoid the problems of mistaken identity that arise from a coincidental similarity of name, location, or other information related to two or more people, processes, or devices. It may help if we think about the process of identifying such a subject:

- A person (or a device) offers a claim as to who or what it is.

- The claimant offers further supporting information that attests to the truth of that claim.

- We verify the believability (the credibility or trustworthiness) of that supporting information.

- We ask for additional supporting information, or we ask a trusted third party to authenticate that information.

- Finally, we conclude that the subject is who or what it claims to be.
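The identification steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the `Claim` type, the `identify` function, and the idea of passing in one verifier function per kind of evidence are all hypothetical, and real identity proofing relies on much richer evidence and on human judgment.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# A verifier takes (claimed_id, evidence_value) and returns True if the evidence
# holds up. All verifiers here are hypothetical stand-ins for real checks.
Verifier = Callable[[str, str], bool]


@dataclass
class Claim:
    claimed_id: str                                         # step 1: who or what the claimant says it is
    evidence: dict[str, str] = field(default_factory=dict)  # step 2: evidence type -> value


def identify(claim: Claim, local_checks: dict[str, Verifier],
             third_party: Verifier | None = None) -> bool:
    """Return True only if every piece of supporting evidence is verified."""
    if not claim.evidence:
        return False  # a bare claim with no supporting information is not enough
    for kind, value in claim.evidence.items():
        check = local_checks.get(kind)
        if check is not None:
            # Step 3: we can judge this evidence ourselves.
            if not check(claim.claimed_id, value):
                return False
            continue
        # Step 4: no local way to test it, so ask a trusted third party.
        if third_party is None or not third_party(claim.claimed_id, value):
            return False
    return True  # step 5: conclude the subject is who or what it claims to be


# Example: a hypothetical badge registry we can check locally.
badge_registry = {"jdoe": "E1234"}
checks = {"employee_badge": lambda who, value: badge_registry.get(who) == value}
print(identify(Claim("jdoe", {"employee_badge": "E1234"}), checks))  # True
```

Note the design choice: evidence that fails a check we can perform ourselves is rejected outright, rather than being handed to a third party for a second opinion.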

So how do we create an identity? It's one thing for your local government's office of vital records to issue a birth certificate when a baby is born, or a manufacturer to assign a MAC address to an Internet-compatible hardware device. How do systems administrators manage identities?

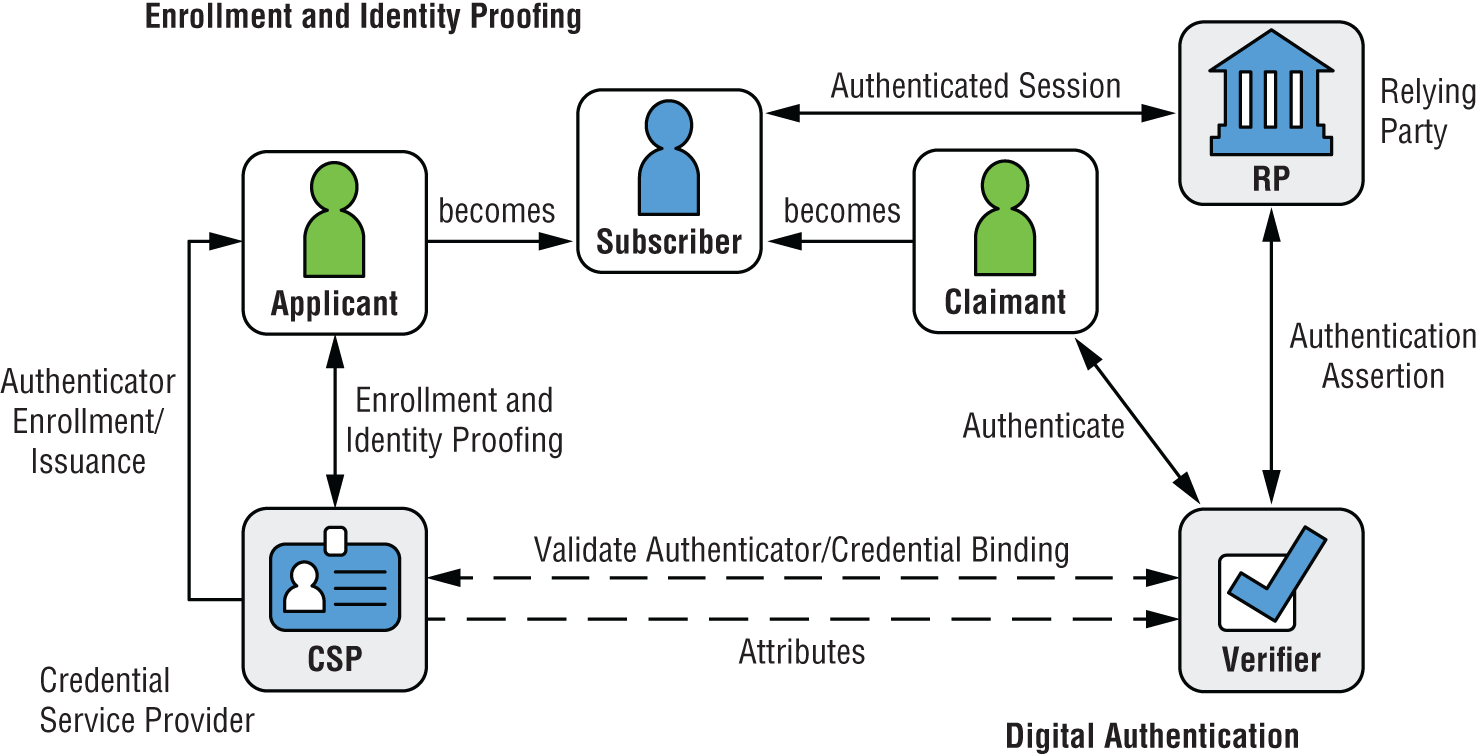

Identity Provisioning and Management

The identity management lifecycle describes the series of steps in which a subject's identity is initially created, initialized for use, modified as needs and circumstances change, and finally retired from authorized use in a particular information system. These steps are typically referred to as provisioning, review, and revocation of an identity:

- Provisioning starts with the initial claim of identity and a request to create a set of credentials for that identity; typically, a responsible manager in the organization must approve requests to provision new identities. (This demonstrates separation of duties by preventing the same IT provisioning clerk from creating new identities surreptitiously.) Key to this step is identity proofing, which separately validates that the evidence of identity as submitted by the applicant is truthful, authoritative, and current. Once created, the identity management functions have to deploy that identity to all of the access control systems protecting all of the objects that the new identity will need access to. Depending on the size of the organization, the complexity of its IT systems, and even how many operating locations around the planet the organization has, this “push” of a newly provisioned identity can take minutes, hours, or maybe even a day or more. Many larger organizations will use regularly scheduled update and synchronization tasks, running in the background, to bring all access control subsystems into harmony with each other. An urgent “right-now” push of this information can force a near-real-time update, if management deems it necessary.

- Review is the ongoing process that checks whether the set of access privileges granted to a subject is still required or whether any should be modified or removed. Individual human subjects are often faced with changes in their job responsibilities, and these may require that new privileges be added and others be removed. Privilege creep happens when duties have changed and yet privileges that are no longer actually needed remain in effect for a given user. For example, an employee might be temporarily granted certain administrative privileges in order to substitute for a manager who has suddenly taken medical retirement, but when the replacement manager is hired and brought on board, those temporary privileges should be reduced or removed.

- Revocation is the formal process of terminating access privileges for a specific identity in a system. Such revocation is most often needed when an employee leaves the organization (whether by death, retirement, termination, or by simply moving on to greener pastures). Employment law and due diligence dictate that organizations have policies in place to handle both preplanned and sudden departures of staff members, to protect systems and information from unauthorized access after such departure. Such unplanned departures might require that all systems privileges be terminated within minutes of an authorized request from management.
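The three lifecycle steps can be modeled as a small state machine. The sketch below is illustrative only: the class, state names, and method signatures are hypothetical, it treats "the person who created the request may not approve it" as the separation-of-duties rule, and it leaves out the work of pushing the identity to every access control system.

```python
from __future__ import annotations

from enum import Enum


class IdentityState(Enum):
    REQUESTED = "requested"   # claim made; awaiting approval and identity proofing
    ACTIVE = "active"         # provisioned and in use
    REVOKED = "revoked"       # all access privileges terminated


class Identity:
    """Hypothetical model of one identity moving through provisioning, review, and revocation."""

    def __init__(self, name: str, requested_by: str) -> None:
        self.name = name
        self.requested_by = requested_by   # e.g., the IT provisioning clerk
        self.state = IdentityState.REQUESTED
        self.privileges: set[str] = set()

    def provision(self, approver: str, proofed: bool, privileges: set[str]) -> None:
        if self.state is not IdentityState.REQUESTED:
            raise RuntimeError("only a pending request can be provisioned")
        if approver == self.requested_by:
            # Separation of duties: whoever creates the request cannot also approve it.
            raise PermissionError("requester may not approve their own request")
        if not proofed:
            raise ValueError("identity proofing is incomplete")
        self.privileges = set(privileges)
        self.state = IdentityState.ACTIVE

    def review(self, still_needed: set[str]) -> set[str]:
        """Drop privileges no longer needed (countering privilege creep); return what was removed."""
        if self.state is not IdentityState.ACTIVE:
            raise RuntimeError("only active identities are reviewed")
        removed = self.privileges - still_needed
        self.privileges &= still_needed
        return removed

    def revoke(self) -> None:
        # Revocation is allowed from any state and clears everything at once.
        self.privileges.clear()
        self.state = IdentityState.REVOKED
```

In the temporary-substitute example above, a review after the replacement manager arrives would return the administrative privileges that were removed, giving a record of what the review actually changed.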

Ongoing Manual Provisioning

Provisioning happens throughout the life of an identity within a particular system. Initially, it may require a substantial proofing effort, as examined earlier. Once the new identity has been created and enabled within the system, it will probably need changes (other than revocation or temporary suspension) to meet the changing needs of the organization and the individual, and some of these changes can and should be delegated to the identity holders themselves to invoke as needed. Passwords, associated physical addresses, phone numbers, security challenge questions, and other elements are often updated by the end users (entities using the assigned identities) directly, without requiring review, approval, or action by security personnel or access control administrators. Organizations may, of course, require that some of these changes be subject to approval or require other coordinated actions to be taken.
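One way to express the split between self-service changes and changes needing approval is a simple policy table. In this sketch the attribute names and the two sets are hypothetical examples of what an organization might choose; anything not listed is rejected by default.

```python
# Hypothetical policy: attributes the identity holder may change directly,
# and attributes that need an administrator's approval first.
SELF_SERVICE = {"password", "phone", "mailing_address", "security_questions"}
NEEDS_APPROVAL = {"department", "job_title", "privileges"}


def request_change(profile: dict, attribute: str, new_value: object,
                   approved: bool = False) -> str:
    """Apply, hold, or reject a change to one attribute of an identity's profile."""
    if attribute in SELF_SERVICE:
        profile[attribute] = new_value       # no review needed
        return "applied"
    if attribute in NEEDS_APPROVAL:
        if approved:
            profile[attribute] = new_value
            return "applied"
        return "pending approval"            # held until security staff act
    return "rejected"                        # anything unlisted is denied by default
```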

Just-in-Time Provisioning and Identity

The initial creation of an identity can also be performed right at the moment when an entity first requests access to a system's resources. This just-in-time identity (JIT identity, not JITI) is quite common on websites, blogs, and email systems where the system owner does not require strong identity proofing as part of creating a new identity. To support this, standardized identity assurance levels (IALs) have been created and are in widespread use, reflecting the degree of proofing required to support the assertion of an identity by an applicant. These levels are:

- IAL1: This is the lowest level of identity assertion; no effort is required (or expended) to validate the authenticity of the information provided by an applicant.

- IAL2: This level requires evidence that supports the real-world existence of the claimed identity and verifies that the applicant is the person associated with it. Such proofing can be performed remotely or in person; simply creating an account by signing in with a preexisting social media account (at LinkedIn, Facebook, or other) does not by itself meet this level.

- IAL3: This most stringent of IAL levels requires physical verification of the documents submitted by the applicant to prove their claim to an identity. These may be government-issued identity documents (which may also be required to be apostilled or otherwise authenticated as legitimate documents).
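Because the three levels are ordered, a system can treat them as a simple ranking when deciding whether an identity was proofed strongly enough for a given service. The sketch below assumes a hypothetical table of services and required levels; NIST SP 800-63A remains the authoritative source for what each level actually demands.

```python
from enum import IntEnum


class IAL(IntEnum):
    IAL1 = 1   # lowest: applicant's information is not validated
    IAL2 = 2   # intermediate: the identity claim is validated before use
    IAL3 = 3   # highest: identity documents are physically verified


# Hypothetical services and the minimum proofing level each demands.
REQUIRED_IAL = {
    "blog_comments": IAL.IAL1,
    "payroll_portal": IAL.IAL2,
    "wire_transfers": IAL.IAL3,
}


def may_enroll(service: str, proofed_at: IAL) -> bool:
    """An identity proofed at one level satisfies any service requiring that level or lower."""
    return proofed_at >= REQUIRED_IAL[service]
```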

Just-in-time identity can also play a powerful role in privilege management, in which an identity that has elevated privileges associated with it is not actually granted use of these privileges until the moment they are needed. Systems administrators, for example, generally do not require root or superuser privileges to read their internal email or access trouble ticket systems. A common example of this is the super user do or sudo command in Unix and Linux systems, or the User Account Control feature on Windows-based systems. These provide ways to enforce security policies that prevent accidental and some malicious attempts to perform specific operations such as installing new software, often by requiring a second, specific confirmation by the end user.
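The idea of holding elevated privileges in reserve until the moment they are needed can be sketched as a time-limited grant that requires explicit confirmation. The class name, the confirmation flag, and the 300-second default window are illustrative assumptions; this is not how sudo or User Account Control are actually implemented.

```python
from __future__ import annotations

import time
from typing import Callable


class JitElevation:
    """Elevated privileges that exist only for a short, explicitly confirmed window."""

    def __init__(self, window_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.window = window_seconds            # illustrative default, not a standard value
        self.clock = clock                      # injectable so expiry can be tested
        self._expires: dict[str, float] = {}

    def elevate(self, user: str, confirmed: bool) -> bool:
        if not confirmed:
            return False                        # no second, specific confirmation: no elevation
        self._expires[user] = self.clock() + self.window
        return True

    def is_elevated(self, user: str) -> bool:
        expires = self._expires.get(user)
        return expires is not None and self.clock() < expires

    def drop(self, user: str) -> None:
        self._expires.pop(user, None)           # give the privileges back early
```

Reading email or trouble tickets never calls `elevate`; only the specific privileged operation does, and the elevation lapses on its own afterward.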

The identity management lifecycle is supported by a wide range of processes and tools within a typical IT organization. At the simplest level, operating systems have built-in features that allow administrators to create, maintain, and revoke user identities and privileges. Most OS-level user creation functions can also create roaming profiles, which can allow one user identity to have access privileges on other devices on the network, including any tailoring of those privileges to reflect the location of the user's device or other conditions of the access request. What gets tricky is managing access to storage, whether on local devices or network shared storage, when devices and users can roam around. This can be done at the level of each device using built-in OS functions, but it becomes difficult if not impossible to manage as both the network and the needs for control grow. At some point, the organization needs to look at ways to manage the identity lifecycle for all identities that the organization needs to care about. This will typically require the installation and use of one or more servers to provide the key elements of identity and access control.

Identity and AAA

SSCPs often need to deal with the “triple-A” of identity management and access control, which refers to authentication, authorization, and accounting. As stated earlier, these are all related to identities, and are part of how our systems decide whether to grant access (and with which privileges) or not, so in that sense they sit on the edge of our CIANA+PS coin, between its identity and access sides. Let's take a closer look at each of these important functions.

Authentication is where everything must start. Authentication is the act of examining or testing the identity credentials provided by a subject that is requesting access and, based on information in the access control list, either granting (accepting) access, denying it, or requesting additional credential information before making an access determination:

- Multifactor authentication systems are a frequent example of access control systems asking for additional information: the user completes one sign-on step, and is then challenged for the second (or subsequent) factor.

- At the device level, access control systems may challenge a user's device (or one automatically attempting to gain access) to provide more detailed information about the status of software or malware definition file updates, and (as you saw in Chapter 5, “Communications and Network Security”) deny access to those systems not meeting criteria, or route them to restricted networks for remediation.
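The three possible outcomes of authentication (grant, deny, or ask for more) can be captured in a small decision function. The policy below is hypothetical: the flag names and the order of the checks are assumptions chosen to mirror the two examples above, a multifactor challenge and a device posture check.

```python
from __future__ import annotations

from enum import Enum


class Decision(Enum):
    GRANT = "grant"
    DENY = "deny"
    CHALLENGE = "challenge"   # ask for another factor or more information


def authenticate(password_ok: bool, second_factor_ok: bool | None,
                 device_compliant: bool, mfa_required: bool = True) -> Decision:
    """Hypothetical policy: first factor, then second factor, then device posture."""
    if not password_ok:
        return Decision.DENY
    if mfa_required and second_factor_ok is None:
        return Decision.CHALLENGE      # first step passed; now prompt for the second factor
    if mfa_required and not second_factor_ok:
        return Decision.DENY
    if not device_compliant:
        return Decision.DENY           # a real system might route to a remediation network instead
    return Decision.GRANT
```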

Once an identity has been authenticated, the access control system determines just what actions that identity is allowed to perform. Authorization requires a two-step process:

- Assigning privileges during provisioning. Prior to the first access attempt, administrators must decide which permissions or privileges to grant to an identity, and whether additional constraints or conditions apply to those permissions. The results of those decisions are stored in access control tables or access control lists in the access control database.

- Authorizing a specific access request. After authenticating the identity, the access control system must then determine whether the specifics of the access request are allowed by the permissions set in the access control tables.
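The two authorization steps map naturally onto writing to, and later reading from, an access control table. This is a minimal in-memory sketch; the table layout keyed by (subject, object) pairs and the function names are assumptions for illustration, not any particular product's design.

```python
from collections import defaultdict

# Step 1, at provisioning time: administrators record permitted actions
# for each (subject, object) pair in an access control table.
acl = defaultdict(set)


def grant(subject: str, obj: str, *actions: str) -> None:
    acl[(subject, obj)].update(actions)


# Step 2, at request time (after authentication): is this specific request allowed?
def authorize(subject: str, obj: str, action: str) -> bool:
    # .get avoids creating empty entries for unknown pairs; the default is deny.
    return action in acl.get((subject, obj), set())
```

For example, after `grant("alice", "payroll.db", "read")`, a read request from `alice` is authorized but a write request is not.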

At this point, the access request has been granted in full; the user or requesting subject can now go do what it came to our systems to do. Yet, in the words of Cold War arms control negotiators, “trust, but verify.” This is where our final A comes into play. Accounting gathers data from within the access control process to monitor the lifecycle of an access, from its initial request and permissions being granted, through the interactions by the subject with the object, to capturing the manner in which the access is terminated. This provides the audit trail by which we address many key information security processes, each of which needs to ascertain (and maybe prove to legal standards) who did what to which information, using which information:

- Software or system anomaly investigation

- Systems hardening, vulnerability mitigation, or risk reduction

- Routine systems security monitoring

- Security or network operations center ongoing, real-time system monitoring

- Digital forensics investigations

- Digital discovery requests, search warrants, or information requested under national security letters

- Investigation of apparent violations of appropriate use policies

- Incident response and recovery

- The demands of law, regulation, contracts, and standards for disclosure to stakeholders, authorities, or the public
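Every one of those uses depends on records captured at the time of each access. The sketch below writes one JSON line per event and can replay a single subject's history; the field names are assumptions, and the in-memory list merely stands in for the append-only, tamper-resistant log storage a production system would need.

```python
import json
from datetime import datetime, timezone

audit_log: list = []   # stand-in for an append-only, protected log store


def record(subject: str, obj: str, action: str, outcome: str, source: str) -> dict:
    """Append one access event as a JSON line: who asked to do what, to which object, when, and from where."""
    event = {
        "time": datetime.now(timezone.utc).isoformat(),
        "subject": subject,
        "object": obj,
        "action": action,
        "outcome": outcome,   # e.g., "granted", "denied", "terminated"
        "source": source,     # e.g., a device name or network address
    }
    audit_log.append(json.dumps(event, sort_keys=True))
    return event


def events_for(subject: str) -> list:
    """Reconstruct one subject's access history, in order, for an investigation."""
    return [e for e in map(json.loads, audit_log) if e["subject"] == subject]
```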

Obviously, it's difficult if not impossible to accomplish many of those tasks if the underlying audit trail wasn't built along the way, as each access request came in and was dealt with.

Before we see how these AAA functions are implemented in typical information systems, we need to look further into the idea of permissions or capabilities.

Access Control Concepts

Access control is all about subjects and objects (see Figure 6.1). Simply put, subjects try to perform an action upon an object; that action can be reading it, changing it, executing it (if the object is a software program), or doing anything else to it. Subjects are anything requesting or attempting access to anything in our system (data or metadata, people, devices, or another process), for whatever purpose. Subjects can be people, software processes, devices, or services being provided by other Web-based systems. Subjects are trying to do something to or with the object of their desire. Objects can be collections of information, or the processes, devices, or people that have that information and act as gatekeepers to it. This subject-object relationship is fundamental to your understanding of access control. It is a one-way relationship: objects do not do anything to a subject. Don't be fooled into thinking that two subjects interacting with each other is a special case of a bidirectional access control relationship. It is simpler, more accurate, and much more useful to see this as two one-way subject-object relationships. It's also critical to see that every task is a chain of these one-way access control relationships.

FIGURE 6.1 Subjects and objects

As an example, consider the access control system itself as an object. It is a lucrative target for attackers who want to get past its protections and into the soft underbellies of the information assets, networks, and people behind its protective moat. In that light, hearing these functions referred to as datacenter gatekeepers makes a lot of sense. Yet the access control system is a subject that makes use of its own access control tables, and of the information provided to it by requesting subjects. (You, at sign-on, are a subject providing a bundle of credential information as an object to that access control process.)
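Treating every access as a one-way (subject, action, object) record makes the ideas above concrete: a two-party exchange becomes two records, and sign-on becomes a chain of them. The names in this sketch are illustrative assumptions.

```python
from typing import NamedTuple


class Access(NamedTuple):
    subject: str   # the active party making the request
    action: str
    obj: str       # the passive party being acted upon


def interaction(a: str, b: str, a_does: str, b_does: str) -> list:
    """Model two subjects interacting as two separate one-way accesses."""
    return [Access(a, a_does, b), Access(b, b_does, a)]


# Sign-on as a chain of one-way accesses (entity names are illustrative).
sign_on = [
    Access("user", "submit", "credential_bundle"),
    Access("access_control_system", "read", "credential_bundle"),
    Access("access_control_system", "read", "access_control_table"),
]
```

Note how the access control system appears as a subject in the last two records, even though it is also the object attackers are trying to get past.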

Subjects and Objects—Everywhere!

Let's think about a simple small office/home office (SOHO) LAN environment, with an ISP-provided modem, a Wi-Fi router, and peer-to-peer file and resource sharing across the half a dozen devices on that LAN. The objects on this LAN would include:

- Each hardware device, and its onboard firmware, configuration parameters, and device settings, and its external physical connections to other devices

- Power conditioning and distribution equipment and cabling, such as a UPS

- The file systems on each storage device, each computer, and each subtree and each file within each subtree

- All of the removable storage devices and media, such as USB drives, DVDs, and CDs used for backup or working storage

- Each installed application on each device

- Each defined user identity on each device, and the authentication information that goes with that user identity, such as username and password

- Each person who is a user, or is attempting to be a user (whether as guest or otherwise)

- Accounts at all online resources used by people in this organization, and the access information associated with those accounts

- The random-access memory (RAM) in each computer, as free memory

- The RAM in each computer allocated to each running application, process, process thread, or other software element

- The communications interfaces to the ISP, plain old telephone service, or other media

- And so on…

Note that third item: on a typical Windows 10 laptop, with 330 GB of files and installed software on a 500 GB drive, that's roughly half a million files, and each of those, and each of the 100,000 or so folders in that directory space, is an object. Those USB drives, and any cloud-based file storage, could add similar numbers of objects for each computer; mobile phones using Wi-Fi might not have quite so many objects on them to worry about. A conservative upper bound might be 10 million objects.

What might our population of subjects be, in this same SOHO office?

- Each human, including visitors, clients, family, and even the janitorial crew

- Each user ID for each human

- Each hardware device, including each removable disk

- Each mobile device each human might bring into the SOHO physical location with them

- Each executing application, process, process thread, or other software element that the operating system (of the device it's on) can grant CPU time to

- Any software processes running elsewhere on the Internet, which establish or can establish connections to objects on any of the SOHO LAN systems

- And so on…

That same Windows 10 laptop, by the way, shows 8 apps, 107 background processes, 101 Windows processes, and 305 services currently able to run: loaded in memory, available to Windows to dispatch to execute, and almost every one of them connected by Windows to events so that hardware actions (like moving a mouse) or software actions (such as an Internet Control Message Protocol packet hitting our network interface card) will wake them up and let them run. That's 521 pieces of executing code. And as if to add insult to injury, the one live human who is using that laptop has caused 90 user identities to be currently active. Many of these are associated with installed services, but each is yet another subject in its own right.

Multiply that SOHO situation up to a medium-sized business, with perhaps 500 employees using its LANs, VPNs, and other resources available via federated access arrangements, and you can see the magnitude of the access control management problem.
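A little arithmetic shows why. If we pictured access control as a full matrix with one cell for every (subject, object) pair, the laptop figures above already produce hundreds of millions of cells. The six-device multiplier below is an assumption for illustration, and the count still ignores humans, removable media, and remote subjects.

```python
# Figures for one Windows 10 laptop, taken from the text above.
files, folders = 500_000, 100_000
executing_code, user_identities = 521, 90

objects = files + folders                      # 600,000
subjects = executing_code + user_identities    # 611

# A full access matrix has one cell per (subject, object) pair.
cells_one_laptop = subjects * objects
print(f"{cells_one_laptop:,}")                 # 366,600,000

# Assumption for illustration: six similar devices on the SOHO LAN.
devices = 6
cells_soho = (devices * subjects) * (devices * objects)
print(f"{cells_soho:,}")                       # 13,197,600,000
```

Because the matrix grows with the product of subjects and objects, six times as many of each yields 36 times as many cells. Most cells would be empty, which is why real systems store permissions as lists attached to objects or to subjects rather than as one giant table.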

Data Classification and Access Control

Next, let's talk layers. No, not layers in the TCP/IP or OSI 7-layer reference model sense! Instead, we need to look at how permissions layer onto each other, level by level, much as those protocols grow in capability layer by layer.

Previously, you learned the importance of establishing an information classification system for your company or organization. Such systems define broad categories of protection needs, typically expressed in a hierarchy of increasing risk should the information be compromised in some way. The lowest level of such protection is often called unclassified, or suitable for public release. It's the information in press releases or in content on public-facing webpages. Employees are not restricted from disclosing this information to almost anyone who asks. Next up this stack of classification levels might be confidential information, followed by secret or top secret (in military parlance). Outside of military or national defense marketplaces, however, we often have to deal with privacy-related information, as well as company proprietary data.

For example, US-CERT (the United States Computer Emergency Readiness Team) uses a schema for identifying how information can or cannot be shared among the members of the US-CERT community. The Traffic Light Protocol (TLP) can be seen at www.us-cert.gov/tlp and appears in Figure 6.2. It exists to make sharing of sensitive or private information easier to manage, so that this community can balance the risks of damage to the reputation, business, or privacy of the source against the need for a better, more effective national response to computer emergency events.

FIGURE 6.2 US-CERT Traffic Light Protocol for information classification and handling

Note how TLP defines both the conditions for use of information classified at the different TLP levels and any restrictions on how a recipient of TLP-classified information can then share that information with others.
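The sharing restrictions can be expressed as an ordered set of audiences, with each label capping how far a recipient may pass the information along. This sketch follows the four original TLP labels (RED, AMBER, GREEN, WHITE); the audience names are a simplification of the official wording, and the 2022 revision of TLP renamed WHITE to CLEAR and added AMBER+STRICT, which this sketch does not model.

```python
from enum import IntEnum


class Audience(IntEnum):
    PARTICIPANTS = 1   # only the specific recipients of the original disclosure
    ORGANIZATION = 2   # the recipient's own organization, on a need-to-know basis
    COMMUNITY = 3      # peers and partners in the wider community, not public channels
    PUBLIC = 4         # anyone


# Widest audience each original TLP label allows recipients to share with.
TLP_LIMIT = {
    "RED": Audience.PARTICIPANTS,
    "AMBER": Audience.ORGANIZATION,
    "GREEN": Audience.COMMUNITY,
    "WHITE": Audience.PUBLIC,
}


def may_reshare(label: str, audience: Audience) -> bool:
    """True if information carrying this TLP label may be passed on to this audience."""
    return audience <= TLP_LIMIT[label.upper()]
```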

Each company or organization has to determine its own information security classification needs and devise a structure of categories that support and achieve those needs. All such structures share two common problems, however, known as the read-up and write-down problems:

- Reading up refers to a subject granted access at one level of the data classification stack, which then attempts to read information contained in objects classified at higher levels.

- Writing down refers to a subject granted access at one level that attempts to write or pass data classified at that level to a subject or object classified at a lower level.

Shoulder-surfing is a simple illustration of the read-up problem, because it can allow an unauthorized person to masquerade as an otherwise legitimate user. A more interesting example of the read-up problem was seen in many login or sign-on systems, which would first check the login ID, and if that was correctly defined or known to the system, then solicit and check the password. This design inadvertently confirms the login ID is legitimate; compare this to designs that take both pieces of login information, and return “user name or password unknown or in error” if the input fails to be authenticated.
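The safer sign-on design described above takes both fields and gives one uniform answer to every failure. In this sketch the user store, salt handling, and iteration count are illustrative assumptions; it also runs the password hash even for unknown user names, so that response time is less likely to reveal which IDs exist.

```python
import hashlib
import hmac
import os


def _hash(password: str, salt: bytes) -> bytes:
    # PBKDF2-HMAC-SHA256; the iteration count is an illustrative choice.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user store: username -> (salt, password hash).
_salt = os.urandom(16)
USERS = {"alice": (_salt, _hash("correct horse", _salt))}
_DUMMY = (os.urandom(16), _hash("placeholder", os.urandom(16)))

GENERIC_ERROR = "user name or password unknown or in error"


def login(username: str, password: str) -> str:
    # Both fields are checked together, and every failure gets the same message,
    # so the response never confirms that a login ID exists.
    salt, stored = USERS.get(username, _DUMMY)
    supplied = _hash(password, salt)           # hash even for unknown users
    if username in USERS and hmac.compare_digest(supplied, stored):
        return "welcome"
    return GENERIC_ERROR
```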

Writing classified or proprietary information to a thumb drive, and then giving that thumb drive to an outsider, illustrates the write-down problem. Write-down also can happen if a storage device is not properly zeroized or randomized prior to its removal from the system for maintenance or disposal.

Having defined our concepts about subjects and objects, let's put those read-up and write-down problems into a more manageable context by looking at privileges or capabilities. Whichever term the people you talk with prefer, the idea is the same: a subject is granted permission to perform certain functions on certain objects. For example, the backup task (as subject) can read and copy a file, and update its metadata to show the date and time of the most recent backup, but it does not (or should not) have permission to modify the contents of the file in question. Systems administrators and security specialists determine broad categories of these permissions and the rules by which new identities are allocated some permissions and denied others.
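The backup task example can be written as a capability list, a table organized by subject that says what each subject may do to each kind of object. The structure and the names `file_contents`, `file_metadata`, and `update_backup_timestamp` are hypothetical; anything not listed is denied.

```python
# Capability list: for each subject, the actions it may take on each kind of object.
# Entries follow the backup task example in the text; names are illustrative.
CAPABILITIES = {
    "backup_task": {
        "file_contents": {"read", "copy"},
        "file_metadata": {"read", "update_backup_timestamp"},
    },
}


def can(subject: str, target: str, action: str) -> bool:
    """Default deny: only actions explicitly listed for this subject are allowed."""
    return action in CAPABILITIES.get(subject, {}).get(target, set())
```

Here `can("backup_task", "file_contents", "write")` is `False`, matching the rule that backups may copy a file but not change it.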

Bell-LaPadula and Biba Models

Let's take a closer look at CIANA+PS, in particular the two key components of confidentiality and integrity. Figure 6.3 illustrates a database server containing proprietary information and an instance of a software process that is running at a level not approved for proprietary information. (This might be because of the person using the process, the physical location or the system that the process is running on, or any number of other reasons.) Both the server and the process act as subjects and objects in their different attempts to request or perform read and write operations to the other. As an SSCP, you'll need to be well acquainted with how these two different models approach confidentiality and integrity:

- Protecting confidentiality requires that we prevent attempts by the process to read the data from the server, but we also must prevent the server from attempting to write data to the process. We can, however, allow the server to read data inside the process or associated with it. We can also allow the process to write its data, at a lower classification level, up into the server. This keeps the proprietary information safe from disclosure, while it assumes that the process running at a lower security level can be trusted to write valid data up to the server.

- Protecting integrity, by contrast, requires just the opposite: we must prevent attempts by a process running at a lower security level from writing into the data of a server running at a higher security level, and we must prevent the server from reading data from that lower-level process.

FIGURE 6.3 Bell-LaPadula (a) vs. Biba access control models (b)

The first model is the Bell-LaPadula model, developed by David Bell and Leonard LaPadula for the Department of Defense in the 1970s, as a fundamental element of providing secure systems capable of handling multiple levels of security classification. Bell-LaPadula emphasized protecting the confidentiality of information—that information in a system running at a higher security classification level must be prevented from leaking out into systems running at lower classification levels. Shown in Figure 6.3(a), Bell-LaPadula defines these controls as:

- The simple security property (SS) requires that a subject may not read information at a higher sensitivity level (i.e., no “read up”).

- The * (star) security property requires that a subject may not write information into an object that is at a lower sensitivity level (no “write-down”).

- The discretionary security property requires that systems implementing Bell-LaPadula protections must use an access matrix to enforce discretionary access control.
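Setting the discretionary matrix aside, the two mandatory Bell-LaPadula properties reduce to simple comparisons between ordered sensitivity levels. The Python sketch below is a minimal illustration only; it assumes a single linear ordering of four hypothetical levels (real systems usually add compartments or categories) and applies the rules to the process and server of Figure 6.3(a).

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical linear sensitivity levels; a higher value is more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    PROPRIETARY = 2
    RESTRICTED = 3


def blp_can_read(subject: Level, obj: Level) -> bool:
    # Simple security property: no read up.
    return subject >= obj


def blp_can_write(subject: Level, obj: Level) -> bool:
    # * (star) property: no write down.
    return subject <= obj


# A process cleared only for INTERNAL data, and a PROPRIETARY server.
process, server = Level.INTERNAL, Level.PROPRIETARY
print(blp_can_read(process, server))   # False: read up is blocked
print(blp_can_write(server, process))  # False: write down is blocked
print(blp_can_write(process, server))  # True: write up is allowed
print(blp_can_read(server, process))   # True: read down is allowed
```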

Remember that in our examples in Figure 6.2, the process is both subject and object, and so is the server! This makes it easier to see that the higher-level subject can freely read from (or be written into by) a lower-level process; this does not expose the sensitive information to something (or someone) with no legitimate need to know. Secrets stay in the server.

Data integrity, on the other hand, isn't preserved by Bell-LaPadula; clearly, the lower-security-level process could disrupt operations at the proprietary level by altering data that it cannot read. The other important model, developed some years after Bell-LaPadula, was expressly designed to prevent this. Its developer, Kenneth Biba, emphasized data integrity over confidentiality; quite often the non-military business world is more concerned about preventing unauthorized modification of data by untrusted processes than it is about protecting the confidentiality of information. Figure 6.3(b) illustrates Biba's approach:

- The simple integrity property requires that a subject cannot read from an object which is at a lower level of security sensitivity (no “read-down”).

- The * (star) integrity property requires that a subject cannot write to an object at a higher security level (no “write-up”).

Quarantine of files or messages suspected of containing malware payloads offers a clear example of the need for the “no-read-down” policy for integrity protection. Working our way down the levels of security, you might see that “business vital proprietary,” privacy-related, and other information would be much more sensitive (and need greater integrity protection) than newly arrived but unfiltered and unprocessed email traffic. Allowing a process that uses privacy-related data to read from the quarantined traffic could be hazardous! Once the email has been scanned and found to be free from malware, other processes can determine if its content is to be elevated (written up) by some trusted process to the higher level of privacy-related information.
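The Biba rules mirror the Bell-LaPadula comparisons with the inequalities reversed. This minimal sketch assumes four hypothetical integrity levels loosely modeled on the quarantine example above; it is an illustration of the two properties, not a complete integrity policy.

```python
from enum import IntEnum


class Integrity(IntEnum):
    """Hypothetical integrity levels; a higher value means more trusted data."""
    QUARANTINED = 0     # newly arrived, unscanned email
    SCANNED = 1         # email that has passed malware scanning
    PRIVACY = 2         # privacy-related records
    BUSINESS_VITAL = 3  # business-vital proprietary information


def biba_can_read(subject: Integrity, obj: Integrity) -> bool:
    # Simple integrity property: no read down.
    return subject <= obj


def biba_can_write(subject: Integrity, obj: Integrity) -> bool:
    # * (star) integrity property: no write up.
    return subject >= obj


# A process handling privacy-related data must not read quarantined mail...
print(biba_can_read(Integrity.PRIVACY, Integrity.QUARANTINED))     # False
# ...and the quarantine process must not write into privacy-related data.
print(biba_can_write(Integrity.QUARANTINED, Integrity.PRIVACY))    # False
# Reading higher-integrity data is permitted under Biba.
print(biba_can_read(Integrity.SCANNED, Integrity.BUSINESS_VITAL))  # True
```

Promoting scanned email to a higher level is deliberately not expressible with these two functions; under Biba, that elevation has to be performed by a separately trusted process.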

As you might imagine, a number of other access models have been created to cope with the apparent and real conflicts between protecting confidentiality and assuring the integrity of data. You'll probably encounter Biba and Bell-LaPadula on the SSCP exam; you may or may not run into some of these others:

- The Clark-Wilson model considers three things together as a set: the subject, the object, and the kind of transaction the subject is requesting to perform upon the object. Clark-Wilson requires a matrix that only allows transaction types against objects to be performed by a limited set of trusted subjects.

- The Brewer and Nash model, sometimes called the “Chinese Wall” model, considers the subject's recent history, as well as the role(s) the subject is fulfilling, as part of how it allows or denies access to objects.

- Non-interference models, such as Goguen-Meseguer, use security domains (sets of subjects), such that members in one domain cannot interfere with (interact with) members in another domain.

- The Graham-Denning model also uses a matrix to define allowable boundaries or sets of actions involved with the secure creation, deletion, and control of subjects and objects, and the ability to control assignment of access rights.
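Of these, the Brewer and Nash model is perhaps the easiest to see in code, because its decision depends on the subject's history. In the usual formulation, objects belong to company datasets, and competing companies are grouped into conflict-of-interest classes; once a subject has accessed one company's data, the model denies access to that company's competitors. The sketch below assumes hypothetical company and class names and keeps each subject's history in memory.

```python
from dataclasses import dataclass, field

# Hypothetical conflict-of-interest (COI) classes of competing companies.
COI_CLASSES: dict[str, set[str]] = {
    "banks": {"BankA", "BankB"},
    "oil": {"OilX", "OilY"},
}


def competitors_of(company: str) -> set[str]:
    """Return the other companies in the same conflict-of-interest class."""
    for members in COI_CLASSES.values():
        if company in members:
            return members - {company}
    raise KeyError(f"unknown company: {company}")


@dataclass
class Analyst:
    name: str
    history: set[str] = field(default_factory=set)  # companies already accessed

    def request(self, company: str) -> bool:
        """Grant access unless this analyst has already seen a competitor's data."""
        if self.history & competitors_of(company):
            return False           # the "Chinese Wall" blocks the request
        self.history.add(company)  # access is granted and remembered
        return True


pat = Analyst("pat")
print(pat.request("BankA"))  # True: no prior history
print(pat.request("OilX"))   # True: a different conflict class
print(pat.request("BankB"))  # False: pat has already accessed BankA
```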

All of these models provide the foundational theories or concepts that underlie the design and operation of access control systems and technologies. Let's now take a look at other aspects of how we need to think about managing access control.

Role-Based

Role-based access control (RBAC) grants specific privileges to subjects regarding specific objects or classes of objects based on the duties or tasks a person (or process) is required to fulfill. Several key factors should influence the ways that role-based privileges are assigned:

- Separation of duties takes a business process that might logically be performed by one subject and breaks it down into subprocesses, each of which is allocated to a different, separate subject to perform. This provides a way of compartmentalizing the risk to information security. For example, retail sales activities will authorize a sales clerk to accept cash payments from customers, put the cash in their sales drawer, and issue change as required to the customer. The sales clerk cannot initially load the drawer with cash (for making change) from the vault, or sign off the cash in the drawer as correct when turning the drawer in at the end of their shift. The cash manager on duty performs these functions, and the independent counts done by the sales clerk and the cash manager help identify who was responsible for any errors.

- Need to know, and therefore need to access, should limit a subject's access to information objects strictly to those necessary to perform the tasks defined as part of their assigned duties, and no more.

- Duration, scope, or extent of the role should consider the time period (or periods) the role is valid over, and any restrictions as to devices, locations, or factors that limit the role. Most businesses, for example, do not routinely approve high-value payments to others after business hours, nor would they normally consider authorizing these when submitted (via their approved apps) from a device at an IP address in a country with which the company has no business involvement or interests. Note that these types of attributes can be associated with the subject (such as role-based), the object, or the conditions in the system and network at the time of the request.
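A role-based check with a separation-of-duties constraint can be sketched in a few lines. The role names and permissions below are hypothetical, modeled loosely on the retail cash-drawer example; the constraint shown is a static one that refuses any role assignment giving one person both the clerk's and the cash manager's duties.

```python
# Hypothetical role-to-permission assignments for the cash-drawer example.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "sales_clerk": {"accept_payment", "issue_change", "count_drawer"},
    "cash_manager": {"load_drawer", "count_drawer", "sign_off_drawer"},
}

# Static separation-of-duties rule: no single subject may hold both roles.
CONFLICTING_ROLES = [frozenset({"sales_clerk", "cash_manager"})]


def assign_roles(roles: set[str]) -> set[str]:
    """Validate a proposed set of roles against separation-of-duties rules."""
    for pair in CONFLICTING_ROLES:
        if pair <= roles:
            raise ValueError(f"separation of duties violated: {sorted(pair)}")
    return roles


def is_permitted(roles: set[str], permission: str) -> bool:
    """Grant a permission only if one of the subject's roles includes it."""
    return any(permission in ROLE_PERMISSIONS.get(r, set()) for r in roles)


clerk = assign_roles({"sales_clerk"})
print(is_permitted(clerk, "accept_payment"))   # True
print(is_permitted(clerk, "sign_off_drawer"))  # False: a cash manager's duty
```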

Role-based access has one strategic administrative weakness. Privilege creep, the unnecessary, often poorly justified, and potentially dangerous accumulation of access privileges no longer strictly required for the performance of one's duties, can inadvertently put an employee and the organization in jeopardy. Quality people take on broader responsibilities to help the organization meet new challenges and new opportunities; and yet, as duties they previously performed are picked up by other team members, or as they move to other departments or functions, they often retain the access privileges their former jobs required. To contain privilege creep, organizations should review each employee's access privileges in the light of their currently assigned duties, not only when those duties change (even temporarily!) but also on a routine, periodic basis.
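The periodic review described above amounts to a set difference between the privileges a person actually holds and those their current roles require. This sketch assumes hypothetical role definitions and an employee who moved from payroll to accounts payable without losing the old grants.

```python
# Hypothetical role definitions used during an access review.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "payables_clerk": {"enter_invoice", "view_vendor"},
    "payroll_analyst": {"view_payroll", "edit_payroll"},
}


def excess_privileges(granted: set[str], current_roles: set[str]) -> set[str]:
    """Return privileges the subject holds that no current role requires."""
    required: set[str] = set()
    for role in current_roles:
        required |= ROLE_PERMISSIONS[role]
    return granted - required


# Former payroll analyst, now a payables clerk, still holding old privileges.
granted = {"view_payroll", "edit_payroll", "enter_invoice", "view_vendor"}
print(sorted(excess_privileges(granted, {"payables_clerk"})))
# ['edit_payroll', 'view_payroll']
```

Flagged privileges would then go to the responsible manager for removal or documented justification.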

Attribute-Based

Attribute-based access control (ABAC) systems combine multiple characteristics (or attributes) about a subject, an object, or the environment to authorize or restrict access. ABAC uses Boolean logic statements to build as complex a set of rules to cover each situation as the business logic and its information security needs dictate. A simple example might be the case of a webpage designer who has limited privileges to upload new webpages into a beta test site in an extranet authorized for the company's community of beta testers but is denied (because of their role) access to update pages on the production site. Then, when the company prepares to move the new pages into production, they may need the designer's help in doing so and thus (temporarily) require the designer's ability to access the production environment. Although this could be done by a temporary change in the designer's subject-based RBAC access privileges, it may be clearer and easier to implement with a logical statement such as:

IF (it's time for move to production) AND (designer-X) is a member of (production support team Y) THEN (grant access to a, b, c…)

Attribute-based access control can become quite complex, but its power to tailor access to exactly what a situation requires is often worth the effort. As a result, it is sometimes known as externalized, dynamic, fine-grained, or policy-based access control or authorization management.
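The IF-THEN statement above can be read as a policy function over subject, object, and environment attributes. This Python sketch assumes a hypothetical migration window, team name, and site labels; a production ABAC system would usually express such rules in a policy language evaluated by a separate decision point rather than in application code.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Subject:
    user_id: str
    role: str
    teams: frozenset[str]


@dataclass(frozen=True)
class Resource:
    site: str  # hypothetical labels: "beta" or "production"


@dataclass(frozen=True)
class Environment:
    now: datetime
    migration_window: tuple[datetime, datetime]  # hypothetical change window


def permit_upload(s: Subject, r: Resource, env: Environment) -> bool:
    """Boolean policy combining subject, object, and environment attributes."""
    if r.site == "beta":
        return s.role == "web_designer"
    if r.site == "production":
        start, end = env.migration_window
        in_window = start <= env.now <= end
        return in_window and "production_support" in s.teams
    return False  # deny anything the policy does not explicitly cover


window = (datetime(2024, 6, 1, 18), datetime(2024, 6, 1, 22))
designer = Subject("designer-x", "web_designer", frozenset({"production_support"}))
prod = Resource("production")
print(permit_upload(designer, prod, Environment(datetime(2024, 6, 1, 19), window)))  # True
print(permit_upload(designer, prod, Environment(datetime(2024, 6, 2, 9), window)))   # False
```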

Subject-Based

Subject-based access control looks at characteristics of the subject that are not normally expected to change over time. For example, a print server (as a subject) should be expected to have access to the printers, the queue of print jobs, and other related information assets (such as the LAN segment or VLAN where the printers are attached); you would not normally expect a print server to access payroll databases directly! As to human subjects, these characteristics might be related to age, their information security clearance level, or their physical or administrative place in the organization. For example, a middle school student might very well need separate roles defined as a student, a library intern, or a software developer in a computer science class, but because of their age, in most jurisdictions they cannot sign contracts. The webpages or apps that the school district uses to hire people or contract with consultants or vendors, therefore, should be off limits to such a student.

Object-Based

Object-based access control uses characteristics of each object or each class of objects to determine what types of access requests will be granted. The simplest example of this is found in many file systems, where objects such as individual files or folders can be declared as read-only. More powerful OS file structures allow a more granular approach, where a file folder can be declared to have a set of attributes based on classes of users attempting to read, write, extend, execute, or delete the object. Those attributes can be further defined to be inherited by each object inside that folder, or otherwise associated with it, and this inheritance should happen with every new instance of a file or object placed or created in that folder.
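Folder-level attributes with inheritance can be modeled as objects that copy their parent's access attributes when they are created. The class and user-class names below are hypothetical, and the sketch copies attributes only at creation time; real file systems differ in whether later changes to a folder propagate to the objects already inside it.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FsObject:
    """Hypothetical file-system object carrying per-user-class attributes."""
    name: str
    # Maps a class of users (e.g., "owner", "staff") to its allowed operations.
    acl: dict[str, set[str]] = field(default_factory=dict)
    parent: Optional["FsObject"] = None
    inherit: bool = True  # whether new children receive a copy of these attributes

    def create_child(self, name: str) -> "FsObject":
        # When inheritance is enabled, each new object placed in this folder
        # starts with its own copy of the folder's attributes.
        acl = {cls: set(ops) for cls, ops in self.acl.items()} if self.inherit else {}
        return FsObject(name, acl, parent=self)

    def allows(self, user_class: str, op: str) -> bool:
        return op in self.acl.get(user_class, set())


reports = FsObject("reports", {"owner": {"read", "write", "delete"}, "staff": {"read"}})
q3 = reports.create_child("q3.xlsx")
print(q3.allows("staff", "read"))   # True: inherited from the folder
print(q3.allows("staff", "write"))  # False
```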

Rule-Based Access Control

Rule-based access control (RuBAC), as the name suggests, uses systems of formally expressed rules that direct the access control system in granting or denying access to objects. These rules can be as simple or as complex as the organization's security policies might require and are normally constructed using Boolean logic or other set theory constructs. Elegant in theory, RuBAC systems can be hard to scale to large enterprises with many complex, overlapping use cases and conditions; as a result, they can also be hard to maintain and debug. One common use for RuBAC is to selectively invoke it for special cases, such as for the protection of organizational members or employees (and the organization's data and systems) when traveling to or through higher-risk locations.
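One common way to implement rule-based access control is an ordered list of rules evaluated top to bottom, where the first matching rule decides and anything unmatched is denied. The rules, field names, and the home-country value below are hypothetical; the first rule illustrates the kind of special case mentioned for travel to higher-risk locations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    user: str
    action: str
    country: str     # where the request originates
    traveling: bool  # hypothetical flag set while the user is in a high-risk location


# Each rule is (description, condition, decision); rules are evaluated in order.
Rule = tuple[str, Callable[[Request], bool], bool]

RULES: list[Rule] = [
    ("deny customer data during high-risk travel",
     lambda r: r.traveling and r.action == "read_customer_db", False),
    ("allow email from anywhere",
     lambda r: r.action == "read_email", True),
    ("allow customer data from the home country",
     lambda r: r.action == "read_customer_db" and r.country == "US", True),
]


def evaluate(req: Request) -> bool:
    """Return the decision of the first matching rule; deny if none match."""
    for _description, condition, decision in RULES:
        if condition(req):
            return decision
    return False


print(evaluate(Request("ana", "read_customer_db", "US", traveling=False)))  # True
print(evaluate(Request("ana", "read_customer_db", "ZZ", traveling=True)))   # False
print(evaluate(Request("ana", "read_email", "ZZ", traveling=True)))         # True
print(evaluate(Request("ana", "delete_logs", "US", traveling=False)))       # False
```

Because order matters, the special-case denial has to sit above the general permissions it overrides; that dependence on ordering is one reason large rule sets become hard to debug.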

Risk-Based Access Control

Risk-based access control (which so far does not have an acronym commonly associated with it) is more of a management approach to overall access control system implementation and use. As you saw in previous chapters, the actual risk context that an organization or one of its systems faces can change on a day-to-day basis. Events within the organization and in the larger marketplaces and communities it serves can suggest that the likelihood of previously assessed risks might dramatically increase (or decrease) and do so quickly. Since all security controls introduce some amount of process friction (such as additional processing time, identity and authorization challenges, or additional reviews by managers), a risk-based access control system provides separate adjustable sensitivity controls for different categories of security controls. When risk managers (such as an organization's chief information security officer or chief risk officer) decide an increase is warranted, one such control might increase the frequency and granularity of data backups, while another might lower the threshold on transactions that would trigger an independent review and approval. Risk-based access control systems might invoke additional attributes to test or narrow the limits on acceptable values for those attributes; in some cases, such risk-based decision making might turn off certain types of access altogether.
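The adjustable sensitivity described above can be sketched as configuration keyed by a risk level that risk managers set. The levels, currency thresholds, and after-hours rule below are hypothetical; the point is that raising the risk level tightens decisions without rewriting the rules themselves.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    NORMAL = 0
    ELEVATED = 1
    HIGH = 2


# Hypothetical review thresholds per risk level: as assessed risk rises,
# smaller payments trigger an independent review and approval.
REVIEW_THRESHOLD = {
    RiskLevel.NORMAL: 50_000,
    RiskLevel.ELEVATED: 10_000,
    RiskLevel.HIGH: 1_000,
}


def decide_payment(amount: float, risk: RiskLevel, after_hours: bool) -> str:
    """Return 'allow', 'review', or 'deny' for a payment request."""
    if risk is RiskLevel.HIGH and after_hours:
        return "deny"  # at high risk, after-hours payments are switched off entirely
    if amount >= REVIEW_THRESHOLD[risk]:
        return "review"
    return "allow"


print(decide_payment(20_000, RiskLevel.NORMAL, after_hours=False))    # allow
print(decide_payment(20_000, RiskLevel.ELEVATED, after_hours=False))  # review
print(decide_payment(500, RiskLevel.HIGH, after_hours=True))          # deny
```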

Mandatory vs. Discretionary Access Control

One question about access control remains: now that your system has authenticated an identity and authorized its access, what capabilities (or privileges) does that subject have when it comes to passing along its privileges to others? The “write-down” problem illustrates this issue: a suitably cleared subject is granted access to read a restricted, proprietary file; creates a copy of it; and then writes it to a new file that does not have the restricted or proprietary attribute set. Simply put, mandatory (or nondiscretionary) access control uniformly enforces policies that prohibit any and all subjects from attempting to change, circumvent, or go around the constraints imposed by the rest of the access control system. Specifically, mandatory or nondiscretionary access prevents a subject from:

- Passing information about such objects to any other subject or object

- Attempting to grant or bequeath its own privileges to another subject

- Changing any security attribute on any subject, object, or other element of the system

- Granting or choosing the security attributes of newly created or modified objects (even if this subject created or modified them)

- Changing any of the rules governing access control
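One way to picture these prohibitions is a reference monitor that alone assigns labels. In the hypothetical sketch below, a copy made by a subject automatically receives its source's label, which closes the write-down path just described, and any subject's attempt to relabel an object is refused. The class names and labels are assumptions for illustration.

```python
from enum import IntEnum


class Label(IntEnum):
    UNRESTRICTED = 0
    PROPRIETARY = 1


class MandatoryPolicyError(PermissionError):
    """Raised when a subject attempts something the mandatory policy forbids."""


class ReferenceMonitor:
    """Hypothetical enforcement point; only the system assigns labels."""

    def __init__(self) -> None:
        self.labels: dict[str, Label] = {}

    def create_copy(self, source: str, new_name: str) -> Label:
        # The copy's label is set by the system from its source, never by the subject.
        self.labels[new_name] = self.labels[source]
        return self.labels[new_name]

    def set_label(self, subject: str, obj: str, label: Label) -> None:
        # Under mandatory access control, subjects may not change security attributes.
        raise MandatoryPolicyError(f"{subject} may not relabel {obj} as {label.name}")


rm = ReferenceMonitor()
rm.labels["plan.docx"] = Label.PROPRIETARY
print(rm.create_copy("plan.docx", "copy.docx").name)  # PROPRIETARY
try:
    rm.set_label("alice", "copy.docx", Label.UNRESTRICTED)
except MandatoryPolicyError as err:
    print("denied:", err)  # denied: alice may not relabel copy.docx as UNRESTRICTED
```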

Discretionary access control, on the other hand, allows the systems administrators to tailor the enforcement of these policies across their total population of subjects. This flexibility may be necessary to support a dynamic and evolving company, in which the IT infrastructure as well as individual roles and functions are subject to frequent change, but it clearly comes with some additional risks.

Network Access Control

Connecting to a network involves performing the right handshakes at all of the layers of the protocols that the requesting device needs services from. Such connections either start at Layer 1 with physical connections, or start at higher layers in the TCP/IP protocol stack. Physical connections require either a cable, fiber, or wireless connection, and in all practicality, such physical connections are local in nature: you cannot really plug in a Cat 6 cable without being there to do it. By contrast, remote connections are those that skip past the Physical layer and start the connection process at higher layers of the protocol stack. These might also be called logical connections, since they assume the physical connection is provided by a larger network, such as the Internet itself.

Let's explore these two ideas by seeing them in action. Suppose you're sitting at a local coffee house, using your smartphone or laptop to access the Internet via their free Wi-Fi customer network. You start at the Physical layer (via the Wi-Fi), which then asks for access at the Data Link layer. You don't get Internet services until you've made it to Layer 3, probably by using an app like your browser to use the “free Wi-Fi” password and your email address or customer ID as part of the logon process. At that point, you can start doing the work you want to do, such as checking your email, using various Transport layer protocols or Application layer protocols like HTTPS. The connection you make to your bank or email server is a remote connection, isn't it? You've come to their access portal by means of traffic carried over the Internet, and not via a direct wired or wireless connection to their systems.

Network access control is a fundamental and vital component of operating any network large or small. Without network access control, every resource on your network is at risk of being taken away from you and used or corrupted by others. The Internet connectivity you need, for business or pleasure, won't be available if your neighbor is using it to stream their own videos; key documents or files you need may be lost, erased, corrupted, or copied without your knowledge. “Cycle-stealing” of CPU and GPU time on your computers and other devices may be serving the needs of illicit crypto-currency miners, hackers, or just people playing games. You lock the doors and windows of your house when you leave because you don't want uninvited guests or burglars to have free and unrestricted access to the network of rooms, hallways, storage areas, and display areas for fine art and memorabilia that make up the place you call home. (You do lock up when you leave home, don't you?) By the same token, unless you want to give everything on your network away, you need to lock it up and keep it locked up, day in and day out.

Network access control (NAC) is the set of services that give network administrators the ability to define and control what devices, processes, and persons can connect to the network or to individual subnetworks or segments of that network. It is usually a distributed function involving multiple servers within a network. A set of NAC protocols define ways that network administrators translate business CIANA+PS needs and policies into compliance filters and settings. Some of the goals of NAC include:

- Mitigation of non-zero day attacks (that is, attacks for which signatures or behavior patterns are known)

- Authorization, authentication, and accounting of network connections

- Encryption of network traffic, using a variety of protocols

- Automation and support of role-based network security

- Enforcement of organizational security policies

- Identity management

At its heart, network access control is a service provided to multiple devices and other services on the network; this establishes many client-server relationships within most networks. It's important to keep this client-server concept in mind as we dive into the details of making NAC work.

A quick perusal of that list of goals suggests that an organization needs to define and manage all of the names of people, devices, and processes (all of which are called subjects in access control terms) that are going to be allowed some degree of access to some set of information resources, which we call objects. Objects can be people, devices, files, or processes. In general, an access control list (ACL) is the central repository of all the identities of subjects and objects, as well as the verification and validation information necessary to authenticate an identity and to authorize the access it has requested. By central, we don't suggest that the entire ACL has to live on one server, in one file; rather, for a given organization, one set of cohesive security policies should drive its creation and management, even if (especially if!) it is physically or logically segmented into a root ACL and many subtree ACLs.

Network access control is an example of the need for an integrated, cohesive approach to solving a serious problem. Command and control of the network's access control systems is paramount to keeping the network secure. Security operations center (SOC) dashboards and alarm systems need to know immediately when attempts to circumvent access control exceed previously established alarm limits so that SOC team members can investigate and respond quickly enough to prevent or contain an intrusion.

IEEE 802.1X Concepts

IEEE 802.1X provides a port-based standard on which many network access control protocols are built, and it does this by defining how the Extensible Authentication Protocol (EAP) is carried over local networks, an encapsulation known as EAPOL, or “EAP over LAN.” EAPOL was initially created for use in Ethernet (wired) networks, but it was later extended and clarified to support wireless as well as wired device access control, and the Fiber Distributed Data Interface (ISO standard 9314-2). Further extensions provide for secure device identity and point-to-point encryption on local LAN segments.

This standard has seen implementations in every version of Microsoft Windows since Windows XP, Apple Macintosh systems, and most distributions of Linux.

EAPOL defines a four-step authentication handshake, the steps being initialization, initiation, negotiation, and authentication. We won't go into the details here, as they are beyond the scope of what SSCPs will typically encounter (nor are they detailed on the exam), but it's useful to know that this handshake needs to use what the standard calls an authenticator service. This authenticator might be a RADIUS client (more on that in a minute), or almost any other IEEE 802.1X-compatible authenticator, many of which can function as RADIUS clients.

Let's look a bit more closely at a few key concepts that affect how NAC systems, products, and solutions are often implemented.

- Preadmission vs. postadmission reflects whether designs authenticate a requesting endpoint or user before it is allowed to access the network, or deny further access based on postconnection behavior of the endpoint or user.

- Agent vs. agentless design describes whether the NAC system is relying on trusted agents within access-requesting endpoints to reliably report information needed to support authentication requests, or whether the NAC does its own scanning and network inventory, or uses other tools to do this. An example might be a policy check on the verified patch level of the endpoint's operating system; a trusted agent, part of many major operating systems, can report this. Otherwise, agentless systems would need to interrogate, feature by feature, to check if the requesting endpoint meets policy minimums.

- Out-of-band vs. inline refers to where the NAC functions perform their monitoring and control functions. Inline solutions are where the NAC acts in essence as a single (inline) point of connection and control between the protected side of the network (or threat surface!) and the unprotected side. Out-of-band solutions have elements of NAC systems, typically running as agents, at many places within the network; these agents report to a central control system and monitoring console, which can then control access.

- Remediation deals with the everyday occurrence that some legitimate users and their endpoints may fail to meet all required security policy conditions—for example, the endpoint may lack a required software update. Two strategies are often used in achieving remediation:

- Quarantine networks provide a restricted IP subnetwork, which allows the endpoint in question to have access only to a select set of hosts, applications, and other information resources. This might, for example, restrict the endpoint to a software patch and update management server; after the update has been successfully installed and verified, the access attempt can be reprocessed.

- Captive portals are similar to quarantine in concept, but they restrict access to a select set of webpages. These pages would instruct the endpoint's user how to perform and validate the updates, after which they can retry the access request.
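The preadmission and remediation choices above can be sketched as a single decision function. Everything here is an assumption for illustration: the patch-level threshold, the posture fields, and the design choice of sending agent-equipped endpoints to a quarantine network (where the agent can fetch updates) and agentless ones to a captive portal (where the user reads instructions).

```python
from dataclasses import dataclass

MIN_PATCH_LEVEL = 42  # hypothetical policy minimum for the OS patch level


@dataclass(frozen=True)
class Posture:
    authenticated: bool
    patch_level: int
    has_agent: bool  # True if a trusted agent reported this posture


def admission_decision(p: Posture) -> str:
    """Preadmission check: decide where a requesting endpoint is placed."""
    if not p.authenticated:
        return "deny"
    if p.patch_level >= MIN_PATCH_LEVEL:
        return "production"
    # Legitimate but noncompliant endpoint: steer it toward remediation.
    return "quarantine_network" if p.has_agent else "captive_portal"


print(admission_decision(Posture(True, 42, True)))   # production
print(admission_decision(Posture(True, 40, True)))   # quarantine_network
print(admission_decision(Posture(True, 40, False)))  # captive_portal
print(admission_decision(Posture(False, 50, True)))  # deny
```

After remediation, the endpoint simply repeats the request and passes through the same function again.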

RADIUS Authentication

Remote Authentication Dial-In User Service (RADIUS) provides the central repository of access control information and the protocols by which access control and management systems can authenticate, authorize, and account for access requests. Its name reflects its history, but don't be fooled: RADIUS is not just for dial-in, telephone-based remote access to servers, either by design or use. It had its birth at the National Science Foundation, whose NSFNet was seeing increasing dial-up customer usage and requests for usage. NSF needed the full AAA set of access control capabilities (authentication, authorization, and accounting), and in 1991 asked industry and academia to propose ways to integrate its collection of proprietary, in-house systems. From those beginnings, RADIUS has developed to where commercial and open source server products exist and have been incorporated into numerous architectures. These server implementations support building, maintaining, and using that central access control list that we discussed earlier.

Without going into the details of the protocols and handshakes, let's look at the basics of how endpoints, network access servers, and RADIUS servers interact and share responsibilities:

- The network access server is the controlling function; it is the gatekeeper that will block any nonauthorized attempts to access resources in its span of control.

- The RADIUS server receives an authentication request from the network access server (which is thus a RADIUS client) and either accepts it, challenges it for additional information, or rejects it. (Additional information might include PINs, access tokens or cards, secondary passwords, or other two-factor access information.) Tokens can be either synchronous or asynchronous: a synchronous token computes the dynamic (per-use) part of its code from a value it keeps in step with the server, such as the current time, while an asynchronous token computes it from a challenge the server sends.

- The network access server (if properly designed and implemented) then allows access, denies it, or asks the requesting endpoint for the additional information requested by RADIUS.
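A widely used example of a synchronous token is the time-based one-time password (TOTP) algorithm of RFC 6238, in which the token and the server each derive the per-use code from a shared secret and the current time. The sketch below uses only the Python standard library and assumes the RFC's HMAC-SHA1 variant, 30-second time steps, and a tolerance of one step of clock drift; how a RADIUS server hands such a check off to a token service is deployment-specific and not shown.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    t = time.time() if for_time is None else for_time
    counter = int(t // step)                  # number of time steps since the Unix epoch
    msg = struct.pack(">Q", counter)          # 8-byte big-endian counter
    mac = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                   # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, now: Optional[float] = None,
           window: int = 1, step: int = 30) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    t = time.time() if now is None else now
    return any(
        hmac.compare_digest(totp(secret, t + i * step, step, len(submitted)), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 test secret and time; prints 94287082.
print(totp(b"12345678901234567890", 59, digits=8))
```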

RADIUS also supports roaming, which is the ability of an authenticated endpoint and user to move from one physical point of connection into the network to another. Mobile device users, mobile IoT, and other endpoints “on the move” typically cannot tolerate the overhead and wall-clock time consumed to sign in repeatedly, just because the device has moved from one room or one hotspot to another.

RADIUS, used by itself, has some security issues; for example, it protects only the password field of its packets, leaving the rest of each packet unencrypted. Most of these issues are overcome by encapsulating the RADIUS access control packet streams within more secure protocols (such as TLS or IPsec), much as HTTPS (and PKI) provide very secure use of HTTP. When this is not sufficient, organizations need to look to other AAA services such as Terminal Access Controller Access-Control System Plus (TACACS+) or Microsoft's Active Directory.

Once a requesting endpoint and user subject have been allowed access to the network, other access control services such as Kerberos and Lightweight Directory Access Protocol (LDAP) are used to further protect information assets themselves. For example, as a student you might be granted access to your school's internal network, from which other credentials (or permissions) control your use of the library, entry into online classrooms, and so forth; they also restrict your student logon from granting you access to the school's employee-facing HR information systems.

A further set of enhancements to RADIUS, called Diameter, attempted to deal with some of the security problems pertaining to mobile device network access. Diameter has seen its widest use in mobile networks, where it serves as an AAA signaling protocol in 3G and later (notably 4G LTE) core networks, but it is not directly backward compatible with RADIUS, so incompatibilities remain between Diameter and network infrastructures that fully support RADIUS.

TACACS and TACACS+

The Terminal Access Controller Access Control System (TACACS, pronounced “tack-axe”) grew out of early Department of Defense network needs for automating authentication of remote users. By 1984, it started to see widespread use in Unix-based server systems; Cisco Systems began supporting it and later developed a proprietary version called Extended TACACS (XTACACS) in 1990. Neither of these was an open standard. Although they have largely been replaced by other approaches, you may see them still being used on older systems.

TACACS+ was an entirely new protocol based on some of the concepts in TACACS. Developed by the Department of Defense as well, and then later enhanced, refined, and marketed by Cisco Systems, TACACS+ splits the authentication, authorization, and accounting into separate functions. This provides systems administrators with a greater degree of control over and visibility into each of these processes. It uses TCP to provide a higher-quality connection, and it also provides encryption of its packets to and from the TACACS+ server. It can define policies based on user type, role, location, device type, time of day, or other parameters. It integrates well with Microsoft's Active Directory and with LDAP systems, which means it provides key functionality for single sign-on (SSO) capabilities. TACACS+ also provides greater command logging and central management features, making it well suited for systems administrators to use to meet the AAA needs of their networks.

Implementing and Scaling IAM

The most critical step in implementing, operating, and maintaining identity and access management (IAM) systems is perhaps the one that is often overlooked or minimized. Creating the administrative policy controls that define information classification needs, and linking those needs to effective job descriptions for team members, managers, and leaders alike, has to precede serious efforts to plan and implement identity and access management. As you saw in Chapters 3 and 4, senior leaders and managers need to establish their risk tolerance and assess their strategic and tactical plans in terms of information and decision risk. Typically, the business impact analysis (BIA) captures leadership's deliberations about risk tolerance and risk as it is applied to key objectives, goals, outcomes, processes, or assets. The BIA then drives the vulnerability assessment processes for the information architecture and the IT infrastructure, systems, and apps that support it.

Assuming your organization has gone through those processes, it's produced the information classification guidelines, as well as the administrative policies that specify key roles and responsibilities you'll need to plan for as you implement an IAM set of risk mitigation controls:

- Who determines which people or job roles require what kind of access privileges for different classification levels or subsets of information? Who conducts periodic reviews, or reviews these when job roles are changed?

- Who can decide to override classification or other restrictions on the handling, storage, or distribution of information?

- Who has organizational responsibility for implementing, monitoring, and maintaining the chosen IAM solution(s)?

- Who needs to be informed of violations or attempted violations of access control and identity management restrictions or policies?

Choices for Access Control Implementations

Two more major decisions need to be made before you can effectively design and implement an integrated access control strategy. Each reflects in many ways the decision-making and risk tolerance culture of your organization, while coping with the physical realities of its information infrastructures. The first choice is whether to implement a centralized or decentralized access control system:

- Centralized access control is implemented using one system to provide all identity management and access control mechanisms. This system is the one-stop-shopping point for all access control decisions; every request from every subject, throughout the organization, comes to this central system for authentication, authorization, and accounting. Whether this system is a cloud-hosted service, or operates using a single local server or a set of servers, is not the issue; the organization's logical space of subjects and objects is not partitioned or segmented (even if the organization has many LAN segments, uses VPNs, or is geographically spread about the globe) for access control decision-making. In many respects, implementing centralized access control systems can be more complex, but use of systems such as Kerberos, RADIUS, TACACS, and Active Directory can make the effort less painful. Centralized access control can provide greater payoffs for large organizations, particularly ones with complex and dispersed IT infrastructures. For example, updating the access control database to reflect changes (temporary or permanent) in user privileges is done once, and pushed out by the centralized system to all affected systems elements.

- Decentralized access control segments the organization's total set of subjects and objects (its access control problem) into partitions, with an access control system and its servers for each such partition. Partitioning of the access control space may reflect geographic, mission, product or market, or other characteristics of the organization and its systems. The individual access control systems (one per partition) have to coordinate with each other to ensure that changes are replicated globally across the organization. Windows Workgroups are examples of decentralized access control systems, in which each individual computer (as a member of the workgroup) makes its own access control decisions, based on its own local policy settings. Decentralized access control is often seen in applications or platforms built around database engines, in which the application, platform, or database uses its own access control logic and database for authentication, authorization, and accounting. Letting each Workgroup, platform, or application bring its own access control mechanisms to the party, so to speak, is simple to implement and makes it simple to add each new platform or application to the organization's IT architecture; over time, however, maintaining and updating all of those disparate access control databases can become a nightmare.
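To make the maintenance trade-off concrete, here is a minimal Python sketch built on a toy in-memory model. The `AccessStore` class and the subject and object names are hypothetical and do not correspond to any product's API. It contrasts a single central store, where one revocation takes effect everywhere, with per-partition stores, where every partition must be updated separately.

```python
# Hypothetical toy model: one central access control store versus
# per-partition stores that must each be updated separately.
from dataclasses import dataclass, field


@dataclass
class AccessStore:
    """One access control database: maps subject -> set of object names."""
    name: str
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, subject: str, obj: str) -> None:
        self.grants.setdefault(subject, set()).add(obj)

    def revoke_all(self, subject: str) -> None:
        self.grants.pop(subject, None)

    def is_allowed(self, subject: str, obj: str) -> bool:
        return obj in self.grants.get(subject, set())


# Centralized: every system consults the same store, so one revocation
# takes effect everywhere at once.
central = AccessStore("central")
central.grant("alice", "payroll-db")
central.grant("alice", "crm-app")
central.revoke_all("alice")

# Decentralized: each partition keeps its own store; a revocation must be
# repeated in every partition.
partitions = [AccessStore("payroll-platform"), AccessStore("crm-platform")]
partitions[0].grant("alice", "payroll-db")
partitions[1].grant("alice", "crm-app")
partitions[0].revoke_all("alice")   # the crm-platform update was forgotten

still_allowed = [p.name for p in partitions
                 if any(p.is_allowed("alice", o) for o in ("payroll-db", "crm-app"))]
print(still_allowed)  # ['crm-platform']
```

The leftover `crm-platform` grant is exactly the kind of stale privilege that the coordination and replication between decentralized partitions is meant to prevent.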

The next major choice that needs to be made reflects whether the organization is delegating the fine-grained, file-by-file access control and security policy implementation details to individual users or local managers, or is retaining (or enforcing) more global policy decisions with its access control implementation:

- Mandatory access control (MAC) denies individual users (subjects) the capability to determine the security characteristics of files, applications, folders, or other objects within their IT work spaces. Users cannot make arbitrary decisions, for example, to share a folder tree if that sharing privilege has not been previously granted to them. This enforces the mandatory security policies as defined previously, and can result in highly secure systems.

- Discretionary access control (DAC) allows individual users to determine the security characteristics of objects, such as files, folders, or even entire systems, within their IT work spaces. This is perhaps the most common access control implementation methodology, as it comes built into nearly every modern operating system available for servers and endpoint devices. Typically, these systems provide users the ability to grant or deny the privileges to read, write (or create), modify, read and execute, list contents of a folder, share, extend, view other metadata associated with the object, and modify other such metadata.
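The difference between the two models comes down to who may change an object's security characteristics. The following Python sketch is illustrative only. The four classification levels, the `CLEARANCE` and `LABEL` tables, and the `DacFile` class are hypothetical, and the MAC check models just one common rule (a subject may read an object only if its clearance is at least the object's label).

```python
from enum import IntEnum


class Level(IntEnum):
    """Illustrative classification levels; real schemes vary by organization."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    SECRET = 3


# --- Mandatory access control: labels are set by policy, not by users ---
CLEARANCE = {"alice": Level.CONFIDENTIAL, "bob": Level.INTERNAL}
LABEL = {"roadmap.docx": Level.CONFIDENTIAL, "lunch-menu.txt": Level.PUBLIC}


def mac_can_read(subject: str, obj: str) -> bool:
    # Read is allowed only if the subject's clearance dominates the object's label.
    return CLEARANCE[subject] >= LABEL[obj]


# --- Discretionary access control: the object's owner edits its ACL ---
class DacFile:
    def __init__(self, name: str, owner: str) -> None:
        self.name = name
        self.owner = owner
        self.acl: dict[str, set[str]] = {owner: {"read", "write", "share"}}

    def grant(self, granter: str, grantee: str, right: str) -> None:
        # Only the owner may change the ACL in this simple model.
        if granter != self.owner:
            raise PermissionError(f"{granter} does not own {self.name}")
        self.acl.setdefault(grantee, set()).add(right)

    def can(self, subject: str, right: str) -> bool:
        return right in self.acl.get(subject, set())


f = DacFile("roadmap.docx", owner="alice")
f.grant("alice", "bob", "read")               # the owner's discretion
print(f.can("bob", "read"))                   # True under DAC
print(mac_can_read("bob", "roadmap.docx"))    # False under MAC
```

Under DAC, Alice's decision alone is enough to let Bob read the file; under MAC, no user decision can lift Bob's clearance above the file's label.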

Having made those decisions, based on your organization's administrative security policies and information classification strategies, and with roles and responsibilities assigned, you're ready to start your IAM project.

“Built-in” Solutions?

Almost every device on your organization's networks (and remember, a device can be both subject and object) has an operating system and other software (or firmware) installed on it. For example, Microsoft Windows operating systems provide policy objects, which are software and data constructs that administrators use to enable, disable, or tune specific features and functions that the OS provides to users. Such policies can be set at the machine, system, application, user, or device level, or for groups of those types of subjects. Policy objects can enforce administrative policies about password complexity, renewal frequency, allowable number of retries, lockout upon repeated failed login attempts, and the like. Many Linux distributions, as well as Apple's operating systems, have very similar functions built into the OS. Devices typically ship from the factory with most such policy objects set to “wide open,” you might say, allowing the new owner to be the fully authorized systems administrator they need to be when they first boot up the device. As administrators and owners, we're strongly encouraged to use other built-in features, such as user account definitions and controls, to create “regular” or “normal” user accounts for routine, day-to-day work. You then have the option of tailoring other policy objects to achieve the mix of functionality and security you need.
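As a rough illustration of what a password and lockout policy object enforces, here is a minimal Python sketch. The `PasswordPolicy` class, its field names, and the default values (12 characters, three character classes, five failed attempts) are hypothetical choices, not the settings or API of any particular operating system. Lockout duration and password renewal are omitted for brevity.

```python
import string
from dataclasses import dataclass, field


@dataclass
class PasswordPolicy:
    """Hypothetical policy object; field names and defaults are illustrative."""
    min_length: int = 12
    require_classes: int = 3        # out of: lower, upper, digit, symbol
    max_failed_attempts: int = 5    # lockout threshold
    failures: dict[str, int] = field(default_factory=dict)

    def meets_complexity(self, password: str) -> bool:
        classes = [
            any(c in string.ascii_lowercase for c in password),
            any(c in string.ascii_uppercase for c in password),
            any(c in string.digits for c in password),
            any(c in string.punctuation for c in password),
        ]
        return len(password) >= self.min_length and sum(classes) >= self.require_classes

    def record_failure(self, user: str) -> None:
        self.failures[user] = self.failures.get(user, 0) + 1

    def record_success(self, user: str) -> None:
        # A successful login resets the failed-attempt counter.
        self.failures.pop(user, None)

    def is_locked_out(self, user: str) -> bool:
        return self.failures.get(user, 0) >= self.max_failed_attempts


policy = PasswordPolicy()
print(policy.meets_complexity("password"))          # False: too short, one class
print(policy.meets_complexity("Correct-Horse-42"))  # True
for _ in range(5):
    policy.record_failure("bob")
print(policy.is_locked_out("bob"))                  # True
```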

For a small home or office LAN, using the built-in capabilities of each device to implement a consistent administrative set of policies may be manageable. But as you add functionality, your “in-house sysadmin” job jar starts to fill up quickly. That new NAS or personal cloud device probably needs you to define per-user shares (storage areas) and specify which family members can do what with each. And you certainly don't want the neighbors next door to be able to see that device, much less the existence of any of the shares on it! If you're fortunate enough to have a consistent base of user devices (everybody in the home is using a Windows 10 or macOS Mojave laptop, and they're all on the same make and model of smartphone), then you can think through the set of policy object settings once and copy (or push) them to each laptop or phone. At some point, though, keeping track of all of those settings overwhelms you. You need to centralize: you need a server that can help you translate administrative policies into technical policies, and then treat all of the devices on your network as its clients.
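The centralizing step can be sketched as one baseline held by a server, pushed to every client, and checked for drift. This is a minimal Python model under stated assumptions: the setting names, client names, and the `drift` and `push_baseline` helpers are all hypothetical, and a real management server would talk to devices over a network rather than edit dictionaries in memory.

```python
# Hypothetical central "policy server": one baseline, pushed to every client,
# plus a check that reports clients whose settings have drifted.
BASELINE = {"min_password_length": 12, "lockout_threshold": 5, "guest_account": "disabled"}

clients = {
    "laptop-anna": dict(BASELINE),
    "laptop-ben": {**BASELINE, "guest_account": "enabled"},   # drifted
    "nas-01": {"min_password_length": 8},                      # never configured
}


def drift(settings: dict) -> dict:
    """Return {setting: (expected, actual)} for every mismatch with the baseline."""
    return {k: (v, settings.get(k)) for k, v in BASELINE.items() if settings.get(k) != v}


def push_baseline(all_clients: dict) -> None:
    """Overwrite each client's managed settings with the baseline."""
    for settings in all_clients.values():
        settings.update(BASELINE)


report = {name: drift(s) for name, s in clients.items() if drift(s)}
print(sorted(report))                              # ['laptop-ben', 'nas-01']
push_baseline(clients)
print(any(drift(s) for s in clients.values()))     # False
```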

Before we look at a client-server approach to IAM, let's look at one more built-in feature in the current generation of laptops, tablets, smartphones, and phablets, which you may (or may not) wish to utilize “straight from the shrink wrap.”

Other Protocols for IAM

As organizations scale out their IT and OT infrastructures to include other resources and organizations, they need to be able to automate and control how these systems exchange identity, authentication, and authorization information with each other. RADIUS, as we've seen, provides one approach to delivering these sets of functions. Let's take a brief look at some of the others, which you may need to delve into more fully if your organization is using any of them.

LDAP

The Lightweight Directory Access Protocol (LDAP) is based on the International Telecommunication Union's Telecommunication Standardization Sector (ITU-T) X.500 standard, which came into effect in 1988. X.500 is actually a set of seven protocols that together provide the interfaces and handshakes necessary for one system to query and retrieve information from another system's directory of subjects and objects. DAP, the original Directory Access Protocol, was built around the OSI seven-layer protocol stack, so other implementations of the same functions were needed to support the TCP/IP protocol stack; LDAP has proven to be the most popular of these. It works in conjunction with other standards, notably X.509 certificates, to establish trust relationships between clients and servers (such as confirming that the client reached the URL or URI it intended to connect with). We'll look at this process in more detail in Chapter 12, “Cross-Domain Challenges.”
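When a client queries a directory over LDAP, it sends a search filter string. The Python sketch below only builds such a filter; actually sending it would require an LDAP client library, which is not shown. It escapes user-supplied values as RFC 4515 requires, so that input such as `*)(uid=*` cannot widen the query. The attribute names `objectClass=person` and `uid` are common in directory schemas but are assumptions here, and `user_lookup_filter` is a hypothetical helper.

```python
def escape_filter_value(value: str) -> str:
    """Escape an assertion value for an LDAP search filter (RFC 4515, section 3)."""
    replacements = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\x00": r"\00"}
    # Character-by-character mapping, so backslashes are never escaped twice.
    return "".join(replacements.get(ch, ch) for ch in value)


def user_lookup_filter(uid: str) -> str:
    # Match person entries whose uid equals the (escaped) supplied value.
    return f"(&(objectClass=person)(uid={escape_filter_value(uid)}))"


print(user_lookup_filter("jsmith"))
# (&(objectClass=person)(uid=jsmith))
print(user_lookup_filter("*)(uid=*"))
# (&(objectClass=person)(uid=\2a\29\28uid=\2a))
```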

SAML and XACML

The Security Assertion Markup Language (SAML), published by OASIS, is a community-supported open standard for using Extensible Markup Language (XML) to make statements, or assertions, about identities. These assertions are exchanged between security domains, such as access control systems belonging to separate organizations, or between access control systems and applications platforms. SAML 2.0, the current version, was published in 2005, with a draft errata update released in 2019. As an XML-based language, SAML is human-readable; SAML assertions flow over the Internet via HTTP (preferably HTTPS). These assertions provide information about an identity and any conditions that may apply to it.
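To show what "information about an identity and any conditions that may apply to it" looks like in practice, here is a Python sketch that reads a trimmed SAML 2.0 assertion with the standard library. The assertion, issuer URL, and subject are made-up examples, and `read_assertion` is a hypothetical helper. A real service provider must also verify the assertion's XML signature, audience, and other conditions, which this sketch deliberately omits.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

SAML_NS = {"saml": "urn:oasis:names:tc:SAML:2.0:assertion"}

# A trimmed, unsigned example assertion (illustrative values only).
ASSERTION = """\
<saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"
                ID="_a1" Version="2.0" IssueInstant="2024-05-01T12:00:00Z">
  <saml:Issuer>https://idp.example.org</saml:Issuer>
  <saml:Subject>
    <saml:NameID>alice@example.org</saml:NameID>
  </saml:Subject>
  <saml:Conditions NotBefore="2024-05-01T12:00:00Z"
                   NotOnOrAfter="2024-05-01T12:05:00Z"/>
</saml:Assertion>"""


def parse_time(text: str) -> datetime:
    # SAML uses UTC xs:dateTime values such as 2024-05-01T12:00:00Z.
    return datetime.strptime(text, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def read_assertion(xml_text: str, now: datetime) -> dict:
    root = ET.fromstring(xml_text)
    cond = root.find("saml:Conditions", SAML_NS)
    not_before = parse_time(cond.get("NotBefore"))
    not_after = parse_time(cond.get("NotOnOrAfter"))
    return {
        "issuer": root.findtext("saml:Issuer", namespaces=SAML_NS),
        "subject": root.findtext("saml:Subject/saml:NameID", namespaces=SAML_NS),
        # The assertion is only usable inside its validity window.
        "valid_now": not_before <= now < not_after,
    }


info = read_assertion(ASSERTION, datetime(2024, 5, 1, 12, 1, tzinfo=timezone.utc))
print(info)
# {'issuer': 'https://idp.example.org', 'subject': 'alice@example.org', 'valid_now': True}
```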

XACML, the Extensible Access Control Markup Language, was designed to complement SAML: SAML carries assertions about identities, while XACML expresses the access control policies and authorization decisions that act on them. As such, the two languages (and the rules for using them) are very strongly related. XACML is not bound to SAML, however; it can just as easily be used with other access control systems, especially when security policies require a finer level of detail than SAML alone normally supports.
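XACML policies are written in XML, but the core idea (rules with Permit or Deny effects, evaluated against request attributes and merged by a combining algorithm) can be sketched in a few lines of Python. This is a simplified model, not XACML syntax. The attribute names and rules are hypothetical, and the `deny_overrides` function ignores XACML's Indeterminate results.

```python
from dataclasses import dataclass
from typing import Callable

Request = dict[str, str]   # attribute name -> value, e.g. {"role": "nurse"}


@dataclass
class Rule:
    effect: str                          # "Permit" or "Deny"
    applies: Callable[[Request], bool]   # the rule's target and condition


def deny_overrides(rules: list[Rule], request: Request) -> str:
    """Any applicable Deny wins; otherwise any applicable Permit; else NotApplicable."""
    effects = {r.effect for r in rules if r.applies(request)}
    if "Deny" in effects:
        return "Deny"
    if "Permit" in effects:
        return "Permit"
    return "NotApplicable"


policy = [
    Rule("Permit", lambda r: r.get("role") == "nurse" and r.get("action") == "read"
                             and r.get("resource") == "patient-record"),
    Rule("Deny", lambda r: r.get("location") == "offsite"),
]

base = {"role": "nurse", "action": "read", "resource": "patient-record"}
print(deny_overrides(policy, {**base, "location": "ward"}))                     # Permit
print(deny_overrides(policy, {**base, "location": "offsite"}))                  # Deny
print(deny_overrides(policy, {**base, "role": "clerk", "location": "ward"}))    # NotApplicable
```

The location attribute is an example of the finer-grained, context-dependent detail that XACML policies can express beyond a simple identity assertion.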

OAuth

The IETF standardized the Open Authorization (OAuth) process as a way for third-party applications to obtain limited access to an HTTP service. It's built around four basic roles:

- Resource owners are entities that can grant (or deny) access to their resources by a third party. These entities may be servers, systems, or humans (who are then known as end users in OAuth terms).

- Resource servers are the devices hosting the resources in question. For OAuth to function, these servers have to be able to receive and act upon an OAuth access token, to then allow (or deny) access to a protected resource on that server.

- Client applications are programs that request access to a protected resource; they do this on behalf of, and with the authorization of, the resource owner. (Note that in this context, the word “client” does not imply anything about where this application is hosted, or what its general functions might be.)

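- Authorization servers authenticate the resource owner, obtain the owner's authorization, and then issue access tokens to the client application (authenticating the client as well, when the client holds its own credentials). The client presents those tokens to the resource servers, which use them to decide whether to grant access.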
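The four roles meet in the OAuth 2.0 authorization code flow: the client sends the resource owner's browser to the authorization server, the owner authenticates there (never to the client), and the authorization server redirects back with a short-lived code that the client exchanges for an access token. The Python sketch below builds only the first request, using the parameter names defined in RFC 6749, section 4.1.1. The endpoint URL, client ID, redirect URI, and scope value are hypothetical.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

# Hypothetical endpoints and client registration, for illustration only.
AUTHORIZATION_ENDPOINT = "https://auth.example.com/authorize"
CLIENT_ID = "photo-printer-app"
REDIRECT_URI = "https://printer.example.net/callback"


def build_authorization_request(scope: str) -> tuple[str, str]:
    """Return (url, state) for an OAuth 2.0 authorization code request."""
    state = secrets.token_urlsafe(16)   # lets the client detect forged callbacks
    params = {
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": scope,
        "state": state,
    }
    return f"{AUTHORIZATION_ENDPOINT}?{urlencode(params)}", state


url, state = build_authorization_request("photos.read")
print(url)

# Sanity check: the query string round-trips.
query = parse_qs(urlparse(url).query)
print(query["response_type"], query["state"] == [state])  # ['code'] True
```

Here the photo-printing service is the client, the user is the resource owner, and the photo host is the resource server; the user's password goes only to the authorization server.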

OAuth eliminates the need for resource owners to hand their usernames and passwords to the third-party client application. OAuth 2.0, the current version (since 2012), relies on the transport layer for its security: it requires TLS, which means it must be used via HTTPS rather than unsecured HTTP. As a result, access tokens are encrypted while in transit.
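Once a client holds an access token, it presents it to the resource server, commonly as a bearer token in the `Authorization` header (the scheme described in RFC 6750). This Python sketch prepares, but does not send, such a request, and refuses any URL that is not HTTPS, reflecting OAuth 2.0's reliance on TLS. The resource URL, token value, and `bearer_request` helper are hypothetical.

```python
from urllib.parse import urlparse
from urllib.request import Request


def bearer_request(resource_url: str, access_token: str) -> Request:
    """Prepare (but do not send) a call to a resource server with a bearer token."""
    if urlparse(resource_url).scheme != "https":
        # OAuth 2.0 relies on TLS; never expose the token over plain HTTP.
        raise ValueError("access tokens must only be sent over HTTPS")
    return Request(resource_url, headers={"Authorization": f"Bearer {access_token}"})


req = bearer_request("https://photos.example.com/api/albums", "hypothetical-token-123")
print(req.get_header("Authorization"))  # Bearer hypothetical-token-123
```

Anyone who captures a bearer token can use it, which is why the transport protection matters so much here.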

SCIM

The System for Cross-domain Identity Management (SCIM) provides a standard schema and protocol that cloud-based systems, applications, and services use to streamline and automate the provisioning of identities across multiple applications. Larger-scale applications platforms, such as Salesforce, Microsoft's O365, and Slack, use SCIM. In a typical deployment built around a central directory such as Microsoft's Active Directory, the initial user provisioning process creates the user's directory record, and SCIM connectors propagate that identity to each SCIM-enabled application, giving the new user access to all of them. When the user no longer requires any of those access privileges (such as when they leave the organization), one action in the central directory to terminate that user's ID leads to terminating their access to all of those SCIM applications.
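SCIM 2.0 exchanges JSON resources over HTTP. The Python sketch below builds the two bodies a provisioning connector typically sends: a core `User` resource for creation (RFC 7643) and a `PatchOp` message that sets `active` to false (RFC 7644, section 3.5.2). The application base URLs, per-application user IDs, and helper function names are hypothetical; deactivating rather than deleting the account is also a common convention, not a requirement.

```python
import json

USER_SCHEMA = "urn:ietf:params:scim:schemas:core:2.0:User"
PATCH_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"


def new_user(user_name: str, given: str, family: str, email: str) -> dict:
    """Body for POST /Users when provisioning a new identity."""
    return {
        "schemas": [USER_SCHEMA],
        "userName": user_name,
        "name": {"givenName": given, "familyName": family},
        "emails": [{"value": email, "primary": True}],
        "active": True,
    }


def deactivate_user() -> dict:
    """Body for PATCH /Users/{id} that disables the account."""
    return {
        "schemas": [PATCH_SCHEMA],
        "Operations": [{"op": "replace", "path": "active", "value": False}],
    }


# One directory action fans out to every connected application. Each app
# assigns its own id to the user when the account is first provisioned.
APP_USER_IDS = {
    "https://crm.example.com/scim/v2": "2819c223",
    "https://chat.example.com/scim/v2": "u-5512",
}
for base, uid in APP_USER_IDS.items():
    print("PATCH", f"{base}/Users/{uid}", json.dumps(deactivate_user()))
```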

Multifactor Authentication

As mentioned at the start of this chapter, authentication of a subject's claim to an identity may require multiple steps to accomplish. We also have to separate this problem into two categories of identities: human users, and everything else. First, let's deal with human users. Traditionally, users have gained access to systems by presenting a user ID (or account ID) and the password that goes with it. The user ID or account ID is almost public knowledge: it is either assigned by a simple rule based on the person's name, or easily viewable in the system, even by nonprivileged users. The password, on the other hand, was intended to be kept secret by the user. Together, the user ID and password are considered one factor, or subject-supplied element, in the identity claim and authentication process.
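Because the password is the secret half of this single factor, a well-designed system never stores it directly. It stores a salted, deliberately slow hash and recomputes that hash at login. The Python sketch below uses the standard library's PBKDF2-HMAC-SHA256 and a constant-time comparison. The iteration count is an illustrative choice that should be tuned to current guidance, and the helper names are hypothetical.

```python
import hashlib
import hmac
import os


def hash_password(password: str, iterations: int = 600_000) -> tuple[bytes, bytes, int]:
    """Derive a salted hash to store instead of the password itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, digest, iterations


def verify_password(password: str, salt: bytes, digest: bytes, iterations: int) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return hmac.compare_digest(candidate, digest)   # constant-time comparison


stored = hash_password("Correct-Horse-42")
print(verify_password("Correct-Horse-42", *stored))  # True
print(verify_password("correct-horse-42", *stored))  # False
```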

In general, each type of factor is something that the user has, knows, or is; this applies to single-factor and multifactor authentication processes:

- Things the user has: These would normally be physical objects that users can reasonably be expected to have in their possession and be able to produce for inspection as part of the authentication of their identity. These might include identification cards or documents, electronic code-generating identity devices (such as key fobs or apps on a smartphone), or machine-readable identity cards. Matching of scanned images of documents with approved and accepted ones already on file can be done manually or with image-matching utilities, when documents do not contain embedded machine-readable information or OCR text.

- Information the user knows: Users can know secret or personal information such as passwords, answers to secret questions, or details of their own personal or professional life. Some of this is presumed to be private, or at least information that is not widely known or easily determined by examining other publicly available information.

- What the user is: For human users, this means their physical bodies. Biometric devices can measure their fingerprints, retinal vein patterns, voice patterns, and many other physiological characteristics that are reasonably unique to a specific individual and hard to mimic. Each type of factor, by itself, is subject to being illicitly copied and used to attempt to spoof identity for systems access.
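Many of the code-generating key fobs and smartphone apps mentioned under "things the user has" implement time-based one-time passwords. The text does not say which algorithm a given device uses, so treat the following Python sketch as one common example: TOTP as specified in RFC 6238 (HMAC-SHA1 variant), built on HOTP from RFC 4226. The device and the server share a secret key, and each independently derives the same short code from the current 30-second time step. The function names and the one-step clock-skew window are illustrative choices.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226), using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                                   # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a 6-digit code from the current step or up to `window` steps either side."""
    now = time.time() if at is None else at
    return any(hmac.compare_digest(totp(key, now + k * step, step), submitted)
               for k in range(-window, window + 1))


secret = b"12345678901234567890"             # RFC 6238 test key; real secrets are random
print(totp(secret, at=59, digits=8))         # 94287082 (RFC 6238 test vector)
print(verify_totp(secret, "287082", at=59))  # True
```

Possession is proven because only a device holding the shared secret can produce the current code, although a code that is phished and replayed within its time window can still be abused.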

Each factor is subject to false positive errors (accepting a presented factor that is not the authentic one) and false negative errors (rejecting authentic factors), and some factors are things that legitimate users may forget or misplace (such as passwords, or a second-factor authentication device or card left at home). As you add more factors to user sign-on processes, you add complexity and costs. User frustration can also increase with each additional factor, leading to attempts to cheat the system.
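For factors that produce a match score, such as biometrics, false positive and false negative errors are usually reported as the false acceptance rate (FAR) and the false rejection rate (FRR). Both depend on where the acceptance threshold is set. The Python sketch below computes them from made-up similarity scores; the score lists and the `error_rates` helper are hypothetical.

```python
def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (false acceptance rate, false rejection rate) at a match-score threshold.

    A presented factor is accepted when its score >= threshold.
    """
    false_accepts = sum(s >= threshold for s in impostor)   # impostors let in
    false_rejects = sum(s < threshold for s in genuine)     # legitimate users turned away
    return false_accepts / len(impostor), false_rejects / len(genuine)


# Hypothetical similarity scores from a biometric matcher (0.0 to 1.0).
genuine = [0.91, 0.88, 0.95, 0.62, 0.85, 0.79, 0.93, 0.90]
impostor = [0.12, 0.35, 0.71, 0.28, 0.44, 0.66, 0.19, 0.83]

for t in (0.6, 0.7, 0.8):
    far, frr = error_rates(genuine, impostor, t)
    print(f"threshold {t}: FAR {far:.3f}  FRR {frr:.3f}")
# threshold 0.6: FAR 0.375  FRR 0.000
# threshold 0.7: FAR 0.250  FRR 0.125
# threshold 0.8: FAR 0.125  FRR 0.250
```

Raising the threshold lets fewer impostors in but turns away more legitimate users, which is the same security-versus-frustration trade-off described above.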