Chapter 5

Communications and Network Security

How do we build trust and confidence into the globe-spanning communications that our businesses, our fortunes, and our very lives depend on? Whether by in-person conversation, videoconferencing, or the World Wide Web, people and businesses communicate. Communications, as we saw in earlier chapters, involves exchanging ideas to achieve a common pool of understanding—it is not just about data or information. Effective communication requires three basic ingredients: a system of symbols and protocols, a medium or a channel in which those protocols exchange symbols on behalf of senders and receivers, and trust. Not that we always trust every communications process 100%, nor do we need to!

We also have to grapple with the convergence of communications and computing technologies. People, their devices, and their ways of doing business no longer accept old-fashioned boundaries that used to exist between voice, video, TXT and SMS, data, or a myriad of other computer-enabled information services. This convergence transforms what we trust when we communicate and how we achieve that trust. As SSCPs, we need to know how to gauge the trustworthiness of a particular communications system, keep it operating at the required level of trust, and improve that trustworthiness if that's what our stakeholders need. Let's look in more detail at how communications security can be achieved and, based on that, get into the details of securing the network-based elements of our communications systems.

To do this, we'll need to grow the CIA trinity of earlier chapters—confidentiality, integrity, and availability—into a more comprehensive framework that adds two key ideas to our stack of security needs. This is just one way you'll start thinking in terms of protocol stacks—as system descriptors, as roadmaps for diagnosing problems, and as models of the threat and risk landscape.

Trusting Our Communications in a Converged World

It's useful to reflect a bit on the not-too-distant history of telecommunications, computing, and information security. Don't panic—we don't have to go all the way back to the invention of radio or the telegraph! Think back, though, to the times right after World War II and what the communications and information systems of that world were like. Competing private companies with competing technical approaches, and very different business models, often confounded users' needs to bring local communications systems into harmony with ones in another city, in another country, or on a distant continent. Radio and telephones didn't connect very well; mobile two-way radios and their landside systems were complex, temperamental, and expensive to operate. Computers didn't talk with each other, except via parcel post or courier delivery of magnetic tapes or boxes of punched cards. Mail was not electronic.

By the 1960s, however, many different factors were pushing each of the different communications technologies to somehow come together in ways that would provide greater capabilities, more flexibility, and growth potential, and at lower total cost of ownership. Communications satellites started to carry hundreds of voice-grade analog channels, or perhaps two or three broadcast-quality television signals. At the same time, military and commercial users needed better ways to secure the contents of messages, and even secure or obscure their routing (to defeat traffic analysis attacks). The computer industry centered on huge mainframe computers, which might cost a million dollars or more—and which sat idle many times each day, and especially over holiday weekends! Mobile communications users wanted two-way voice communication that didn't require suitcase-sized transceivers that filled the trunk of their cars.

Without going too far into the technical, economic, or political details, what transformed all of these separate and distinct communications media into one world-spanning Web and Internet? In 1969, in close cooperation with these (and other) industries and academia, the U.S. Department of Defense Advanced Research Projects Agency started its ARPANet project. By some accounts, the scope of what it tried to achieve was audacious in the extreme. The result of ARPANet is all around us today, in the form of the Internet, cell phone technology, VoIP, streaming video, and everything we take for granted over the Web and the Internet. And so much more.

One simple idea illustrates the breadth and depth of this change. Before ARPANet, we all thought of communications in terms of calls we placed. We set up a circuit or a channel, had our conversation, then took the circuit down so that some other callers could use parts of it in their circuits. ARPANet's packet-based communications caused us all to forget about the channel, forget about the circuit, and focus on the messages themselves. (You'll see that this had both good and bad consequences for information security later in this chapter.)

One of the things we take for granted is the convergence of all of these technologies, and so many more, into what seems to us to be a seamless, cohesive, purposeful, reliable, and sometimes even secure communications infrastructure. The word convergence is used to sum up the technical, business, economic, political, social, and perceptual changes that brought so many different private businesses, public organizations, and international standards into a community of form, function, feature, and intent. What we sometimes ignore, to our peril, is how that convergence has drastically changed the ways in which SSCPs need to think about communications security, computing security, and information assurance.

Emblematic of this change might be Chester Gould's cartoon character Dick Tracy and his wristwatch two-way radio, first introduced to American readers in 1946. It's credited with inspiring the invention of the smartphone, and perhaps even the smartwatches that are all but taken for granted today. What Gould's character didn't explore for us were the information security needs of a police force whose detectives had such devices, or the physical, logical, and administrative techniques they'd need to use to keep their communications safe, secure, confidential, and reliable.

To keep those and any other communications trustworthy, think about some key ingredients that we find in any communications system or process:

- Purpose or intent. Somebody has something they want to accomplish, whether it is ordering a pizza to be delivered or commanding troops into battle. This intention should shape the whole communication process. With a clear statement of intent, the sender can better identify who the target audience is, and whether the intention can be achieved by exchanging one key idea or a whole series of ideas woven together into some kind of story or narrative. This purpose or intent also contains a sense of who not to include in the communication, which may (or should) dictate choices about how the communication is accomplished and protected.

- Senders and recipients. The actual people or groups on both ends of the conversation or the call; sometimes called the parties to the communication.

- Protocols that shape how the conversation or communication can start, how it is conducted, and how it is brought to a close. Protocols include a choice of language, character or symbol set, and maybe even a restricted domain of ideas to communicate about. Protocols provide for ways to detect errors in transmission or receipt, and ways to confirm that the recipient both received and understood the message as sent. Other protocols might also verify whether the true purpose of the communication got across as well.

- Message content, which is the ideas we wish to exchange encoded or represented in the chosen language, character or symbol sets, and protocols.

- A communications medium, which is what makes transporting the message from one place to another possible. Communications media are physical—such as paper, sound waves, radio waves, electrical impulses sent down a wire, flashes of light or puffs of smoke, or almost anything else.

For example, a letter or holiday greeting might be printed or written on paper or a card, which is placed in an envelope and mailed to the recipient via a national or international postal system. Purpose, the communicating parties, the protocols, the content, and the medium all have to work together to convey “happy holidays,” “come home soon,” or “send lawyers, guns, and money” if the message is to get from sender to receiver with its meaning intact.

At the end of the day (or at the end of the call), both senders and receivers have two critical decisions to make: how much of what was communicated was trustworthy, and what if anything should they do as a result of that communication? The explicit content of what was exchanged has a bearing on these decisions, of course, but so does all of the subtext associated with the conversation. Subtext is about context: about “reading between the lines,” drawing inferences (or suggesting them) regarding what was not said by either party.

The risk that subtext can get it wrong is great! The “Hot Line” illustrates this potential for disaster. During the Cold War, the “Hot Line” communications system connected the U.S. national command authority and their counterparts in the Soviet Union. This system was created to reduce the risk of accidental misunderstandings that could lead to nuclear war between the two superpowers. Both parties insisted that this be a plain text teletype circuit, with messages simultaneously sent in English and Russian, to prevent either side from trying to read too much into the voice or mannerisms of translators and speakers at either end. People and organizations need to worry about getting the subtext wrong or missing it altogether. So far, as an SSCP, you won't have to worry about how to “secure the subtext.”

Communications security is about data in motion—as it is going to and from the endpoints and the other elements or nodes of our systems, such as servers. It's not about data at rest or data in use, per se. Chapter 8, “Hardware and Systems Security,” and Chapter 9, “Applications, Data, and Cloud Security,” will show you how to enhance the security of data at rest and in use, whether inside the system or at its endpoints. Chapter 11, “Business Continuity via Information Security and People Power,” will also look at how we keep the people layer of our systems communicating in effective, safe, secure, and reliable ways, both in their roles as users and managers of their company's IT infrastructures, but also as people performing their broader roles within the company or organization and its place in the market and in society at large.

CIANA+PS: Applying Security Needs to Networks

Chapter 2, “Information Security Fundamentals,” introduced the concepts of confidentiality, integrity, and availability as the three main attributes or elements of information security and assurance. We also saw that before we can implement plans and programs to achieve that triad, we have to identify what information must be protected from disclosure (kept confidential), its meaning kept intact and correct (ensure its integrity), and that it's where we need it, when we need it (that is, the information is available). As we dig further into what information security entails, we'll have to add four additional and very important attributes to our CIA triad: nonrepudiation, authentication, privacy, and safety.

To repudiate something means to attempt to deny an action that you've performed or something you said. You can also attempt to deny that you ever received a particular message or didn't see or notice that someone else performed an action. In most cases, we repudiate our own actions or the actions of others so as to attempt to deny responsibility for them. “They didn't have my informed consent,” we might claim; “I never got that letter,” or “I didn't see the traffic light turn yellow.” Thus, nonrepudiation is the characteristic of a communications system that prevents a user from claiming that they never sent or never received a particular message. This communications system characteristic sets limits on what senders or receivers can do by restricting or preventing any attempt by either party to repudiate a message, its content, or its meaning.

Authentication, in this context, also pertains to senders and receivers. Authentication (or authenticity) is the verification that the sender or receiver is who they claim to be, and then the further validation that they have been granted permission to use that communications system. Authentication might also go further by validating that a particular sender has been granted the privilege of communicating with a particular receiver, regarding the content or intent of the message itself. These privileges (use of the system, and connection with a particular party) can also be defined with further restrictions, as we'll see later in Chapter 6, “Identity and Access Control.” Authentication as a process has one more “A” associated with it, and that is accountability. This requires that the system keep records of who attempts to access the system, who was authenticated to use it, and what communications or exchanges of messages they had with whom.

Adding safety to our security needs mnemonic reminds us that whether it is via operational technologies or not, far more modern IT systems can directly place people or property at risk of damage, injury, or death than many of us realize. We've already seen one death attributed to a ransomware attack (which crippled a hospital in Germany in 2020) and attempts to contaminate drinking water supplies in several countries. Cybercrime has also demonstrated the need for greater awareness of the need to protect the privacy of individuals. Initially, this was seen as protecting the ways in which personally identifying information (PII) was gathered, stored, used, and shared; increasingly, the need to protect a person's location and the data about what they are doing on the Internet is growing in urgency and importance. (Some analysts refer to this as “the death of the third-party cookie,” signifying the sea change in online tracking and advertising systems that we saw begin in 2021.)

Thus CIANA+PS: confidentiality, integrity, availability, nonrepudiation, authentication, privacy, and safety. As we'll see in this chapter, networks and their protocols provide significant support to the first five characteristics; safety and privacy are (so far) largely left to the care of applications, programs, and organizational practices that run on top of the Internet's protocol stack. As a result, throughout this chapter, we'll primarily refer to network security needs via CIANA and bring privacy and safety in where it makes sense.

Recall from earlier chapters that CIANA+PS crystallizes our understanding of what information needs what kinds of protection. Most businesses and organizations find that it takes several different but related thought processes to bring this all together in ways that their IT staff and information security team can appreciate and carry out. Several key sets of ideas directly relate to, or help set, the information classification guidelines that should drive the implementation of information risk reduction efforts:

- Strategic plans define long-term goals and objectives, identify key markets or target audiences, and focus on strategic relationships with key stakeholders and partners.

- The business impact analysis (BIA) links high-priority strategic goals, objectives, and outcomes with the business logic, processes, and information assets vital to achieving those outcomes.

- A communications strategy guides how the organization talks with its stakeholders, customers, staff, and other target audiences so that mutual understanding leads to behaviors that support achieving the organization's strategic goals.

- Risk management plans, particularly information and IT risk management plans, provide the translation of strategic thinking into near-term tactical planning.

The net result should be that the organization combines those four viewpoints into a cohesive and effective information risk management plan, which provides the foundation for “all things CIANA+PS” that the information security team needs to carry out. This drives the ways that SSCPs and others on that information security team conduct vulnerability assessments, choose mitigation techniques and controls, configure and operate them, and monitor them for effectiveness.

Threat Modeling for Communications Systems

With that integrated picture of information security needs, it's time to do some threat modeling of our communications systems and processes. Chapter 4, “Operationalizing Risk Mitigation,” introduced the concepts of threat modeling and the use of boundaries or threat surfaces to segregate parts of our systems from each other and from the outside world. Let's take a quick review of the basics:

- Notionally, the total CIANA+PS security needs of the information assets inside a threat surface are greater than the level of protection that actors, subjects, or systems elements outside of that boundary should enjoy.

- Subjects access objects to use or change them; objects are information assets (or people or processes) that exist for subjects to use, invoke, or otherwise interact with. A person reads from a file, possibly by invoking a display process that accesses that file, and presents it on their endpoint device's display screen. In that case, the display process is both an object (to the person invoking it) and a subject (as it accesses the file).

- The threat surface is a boundary that encapsulates objects that require a degree of protection to meet their CIANA+PS needs.

- Controlled or trusted paths are deliberately created by the system designers and builders and provide a channel or gateway that subjects on one side of the threat surface use to access objects on the other side. Such paths or portals should contain features that authenticate subjects prior to granting access.

Note that this subject-object access can be bidirectional; there are security concerns in both reading and writing across a security boundary or threat surface. We'll save the theory and practice of that for Chapter 6.
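The review points above can be sketched in a few lines of Python. This is only a toy model, not a real access control system: the class name `ThreatSurface`, the method `trusted_path`, and the plaintext shared secrets are all hypothetical simplifications chosen for illustration. It shows a boundary that encapsulates objects, a single controlled path that authenticates a subject before granting access, and a log that supports accountability.

```python
import hmac


class ThreatSurface:
    """Toy boundary: objects inside are reachable only via trusted_path()."""

    def __init__(self, credentials, objects):
        self._credentials = credentials   # subject name -> shared secret (hypothetical store)
        self._objects = objects           # object name -> protected content
        self.access_log = []              # accountability: who tried to access what

    def trusted_path(self, subject, secret, object_name):
        expected = self._credentials.get(subject, "")
        # compare_digest is designed to resist timing attacks when comparing secrets
        authenticated = bool(expected) and hmac.compare_digest(expected, secret)
        self.access_log.append((subject, object_name, authenticated))
        if not authenticated:
            raise PermissionError(f"{subject} is not authenticated")
        return self._objects[object_name]


surface = ThreatSurface(
    credentials={"display_process": "s3cret"},
    objects={"payroll.txt": "confidential figures"},
)
print(surface.trusted_path("display_process", "s3cret", "payroll.txt"))
try:
    surface.trusted_path("outsider", "guess", "payroll.txt")
except PermissionError as error:
    print("denied:", error)
```

Every attempt, successful or not, lands in `access_log`, which is the kind of record that later review of the boundary would depend on.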

The threat surface thinks of the problem from the defensive perspective: what do I need to protect and defend from attack? By contrast, threat modeling also defines the attack surface as the set of entities, information assets, features, or elements that are the focus of reconnaissance, intrusion, manipulation, and misuse, as part of an attack on an information system. Typically, attack surfaces are at the level of vendor-developed systems or applications; thus, Microsoft Office Pro 2021 is one attack surface, while Microsoft Office 365 Home is another. Other attack surfaces can be specific operating systems, or the hardware and firmware packages that are our network hardware elements. Even a network intrusion detection system (NIDS) can be an attack surface!

Applying these concepts to the total set of organizational communications processes and systems could be a daunting task for an SSCP. Let's peel that onion a layer at a time, though, by separating it into two major domains: that which runs on the internal computer networks and systems, and that which is really people-to-people in nature. We'll work with the people-to-people more closely in Chapter 11.

For now, let's combine this concept of threat modeling with the most commonly used sets of protocols, or protocol stacks, that we use in tying our computers, communications, and endpoints together.

Internet Systems Concepts

As an SSCP, you'll need to focus your thinking about networks and security on one particular kind of network: the one that links together most of the computers and communications systems that businesses, governments, and people use. This is “the Internet,” capitalized as a proper name. It's almost everywhere; almost everybody uses it, somehow, in their day-to-day work or leisure pursuits. It is what the World Wide Web (also a proper noun) runs on. It's where we create most of the value of e-commerce, and where most of the information security threats expose people and business to loss or damage. This section will introduce the basic concepts of the Internet and its protocols; then, layer by layer, we'll look at more of their innermost secrets, their common vulnerabilities, and some potential countermeasures you might need to use. The OSI 7-layer reference model will be our framework and guide along the way, as it reveals some critical ideas about vulnerabilities and countermeasures you'll need to appreciate.

Communications and network systems designers talk about protocol stacks as the layers or nested sets of different protocols that work together to define and deliver a set of services to users. An individual protocol or layer defines the specific characteristics, the form, features, and functions that make up that protocol or layer. For example, almost since the first telephone services were made available to the public, the Bell Telephone Company in the U.S. defined a set of connection standards for basic voice-grade telephone service; today, one such standard is the RJ-11 physical and electrical connector for four-wire telephone services. The RJ-11 connection standard says nothing about dial tones, pulse (rotary dial), or Touch-Tone dual-tone multi-frequency (DTMF) signaling, or how connections are initiated, established, used, and then taken down as part of making a “telephone call” between parties. Other protocols define services at those layers. The “stack” starts with the lowest level, usually the physical interconnect standard, and layers each successively higher-level standard onto those below it. These higher-level standards can go on almost forever; think of how “reverse the charges,” advanced billing features, or many caller ID features need to depend on lower-level services being defined and working properly, and you've got the idea of a protocol stack.

This is an example of using layers of abstraction to build up complex and powerful systems from subsystems or components. Each component is abstracted, reducing it to just what happens at the interface—how you request services of it, provide inputs to it, and get services or outputs from it. What happens behind that curtain is (or should be) none of your concern, as the external service user. (The service builder has to fully specify how the service behaves internally so that it can fulfill what's required of it.) One important design imperative with stacks of protocols is to isolate the impact of changes; changes in physical transmission of signals should not affect the way applications work with their users, nor should adding a new application require a change in that physical media.

A protocol stack is a document—a set of ideas or design standards. Designers and builders implement the protocol stack into the right set of hardware, software, and procedural tasks (done by people or others). These implementations present the features of the protocol stack as services that can be requested by subjects (people or software tasks).

Datagrams and Protocol Data Units

First, let's introduce the concept of a datagram, which is a common term when talking about communications and network protocols. A datagram is the unit of information used by a protocol layer or a function within it. It's the unit of measure of information in each individual transfer. Each layer of the protocol stack takes the datagram it receives from the layers above it and repackages it as necessary to achieve the desired results. Sending a message via flashlights (or an Aldis lamp, for those of the sea services) illustrates the datagram concept:

- An on/off flash of the light, or a flash of a different duration, is one bit's worth of information; the datagrams at the lamp level are bits.

- If the message being sent is encoded in Morse code, then that code dictates a sequence of short and long pulses for each datagram that represents a letter, digit, or other symbol.

- Higher layers in the protocol would then define sequences of handshakes to verify sender and receiver, indicate what kind of data is about to be sent, and specify how to acknowledge or request retransmission. Each of those sequences might have one or more messages in it, and each of those messages would be a datagram at that level of the protocol.

- Finally, the captain of one of those two ships dictates a particular message to be sent to the other ship, and that message, captain-to-captain, is itself a datagram.
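The signal lamp example can be sketched in Python to show how one message becomes a different kind of datagram at each layer. This is a minimal illustration, assuming a deliberately tiny slice of International Morse code; the function names `to_symbols` and `to_flashes` and the `"gap"` marker between letters are hypothetical choices made for this sketch.

```python
# Illustrative only: a tiny slice of International Morse code.
MORSE = {"S": "...", "O": "---", "E": ".", "T": "-"}


def to_symbols(message):
    """Message-level datagram -> list of letter-level datagrams (Morse strings)."""
    return [MORSE[ch] for ch in message.upper()]


def to_flashes(symbols):
    """Letter-level datagrams -> lamp-level datagrams (short or long flashes)."""
    flashes = []
    for symbol in symbols:
        for mark in symbol:
            flashes.append("short" if mark == "." else "long")
        flashes.append("gap")  # pause that separates one letter from the next
    return flashes


message = "SOS"                  # the captain-to-captain datagram
symbols = to_symbols(message)    # one datagram per letter
flashes = to_flashes(symbols)    # one datagram per flash of the lamp
print(symbols)
print(flashes)
```

The same three-letter message is one datagram to the captain, three datagrams to the signaler thinking in Morse letters, and a dozen on/off events at the lamp itself.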

Note, however, another usage of this word. The User Datagram Protocol (UDP) is an alternate data communications protocol to Transmission Control Protocol (TCP), and both of these are at the same level (the Transport layer) of the TCP/IP stack. And to add to the terminological confusion, the OSI model (as we'll see in a moment) uses protocol data unit (PDU) to refer to the unit of measure of the data sent in a single protocol unit, and reserves datagram for the data unit that UDP sends. Be careful not to confuse UDP and PDU!

Table 5.1 may help you avoid some of this confusion by placing the OSI and TCP/IP stacks side by side. We'll examine each layer in greater detail in a few moments.

TABLE 5.1 OSI and TCP/IP side by side

| Types of layers | Typical protocols | OSI layer | OSI protocol data unit name | TCP/IP layer | TCP/IP datagram name |
|---|---|---|---|---|---|
| Host layers | HTTP, HTTPS, SMTP, IMAP, SNMP, POP3, FTP, … | 7. Application | Data | (Outside of TCP/IP model scope) | Data |
| Host layers | Characters, MPEG, SSL/TLS, compression, S/MIME, … | 6. Presentation | Data | (Outside of TCP/IP model scope) | Data |
| Host layers | NetBIOS, SAP, session handshaking connections | 5. Session | Data | (Outside of TCP/IP model scope) | Data |
| Host layers | TCP, UDP | 4. Transport | Segment (except UDP: datagram) | Transport | Segment |
| Media layers | IPv4 / IPv6 IP address, ICMP, IPSec, ARP, MPLS, … | 3. Network | Packet | Network (or Internetworking) | Packet |
| Media layers | Ethernet, 802.1, PPP, ATM, Fibre Channel, FDDI, MAC address | 2. Link | Frame | Data Link | Frame |
| Media layers | Cables, connectors, 10BaseT, 802.11x, ISDN, T1, … | 1. Physical | Symbol | Physical | Bits |

Handshakes

We'll start with a simple but commonplace example that reveals the role of handshaking to control and direct how the Internet handles our data communications needs. A handshake is a sequence of small, simple communications that we send and receive, such as hello and goodbye, ask and reply, or acknowledge or not-acknowledge, which control and carry out the communications we need. Handshakes are defined in the protocols we agree to use. Let's look at a simple file transfer to a server that I want to do via File Transfer Protocol (FTP) to illustrate this:

- I ask my laptop to run the file transfer client app.

- Now that it's running, my FTP client app asks the OS to connect to the FTP server.

- The FTP server accepts my FTP client's connection request.

- My FTP client requests to upload a file to a designated folder in the directory tree on that server.

- The FTP server accepts the request, and says “start sending” to my FTP client.

- My client sends a chunk of data to the server; the server acknowledges receipt, or requests a retransmission if it encounters an error.

- My client signals the server that the file has been fully uploaded, and requests the server to mark the received file as closed, updating its directories to reflect this new file.

- My client informs me of successfully completing the upload.

- With no more files to transfer, I exit the FTP app.
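The sequence of steps above can be simulated with a short Python sketch. This is a simplified model of the handshake as described in the list, not the actual FTP wire protocol; the function name `upload`, the message strings, and the `corrupt_once` parameter (a set of chunk numbers whose first transmission is assumed to be damaged) are all hypothetical.

```python
def upload(chunks, corrupt_once=frozenset()):
    """Simulate a send-and-acknowledge upload; returns (received_chunks, log).

    corrupt_once is a hypothetical set of chunk indexes whose first
    transmission is damaged in transit, forcing one retransmission.
    """
    log = ["client: CONNECT", "server: ACCEPT",
           "client: UPLOAD request", "server: START SENDING"]
    received = []
    already_corrupted = set()
    for index, chunk in enumerate(chunks):
        while True:
            log.append(f"client: DATA {index}")
            if index in corrupt_once and index not in already_corrupted:
                already_corrupted.add(index)
                log.append(f"server: NAK {index}")  # request a retransmission
                continue
            received.append(chunk)
            log.append(f"server: ACK {index}")      # acknowledge receipt
            break
    log += ["client: END OF FILE", "server: FILE CLOSED"]
    return received, log


received, log = upload([b"abc", b"def", b"ghi"], corrupt_once={1})
for line in log:
    print(line)
```

Reading the printed log from top to bottom gives the same hello, ask-and-reply, acknowledge or not-acknowledge, and goodbye pattern that the list describes, including one retransmission of the damaged chunk.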

It's interesting to note that the Internet was first created to facilitate things like simple file transfers between computer centers; email was created as a higher-level protocol that used FTP to send and receive small files that were the email notes themselves.

To make this work, we need ways of physically and logically connecting end-user computers (or smartphones or smart toasters) to servers that can support those endpoints with functions and data that users want and need. What this all quickly turned into is the kind of infrastructure we have today:

- End-user devices (much like “endpoints” in our systems) hand off data to the network for transmission, receive data from other users via the network, and monitor the progress of the communications they care about. In most systems, a network interface card (NIC, or chip) acts as the go-between. (We'll look at this in detail later.)

- An Internet point of presence is a physical place at which a local Internet service provider (ISP) brings a physical connection from the Internet backbone to the user's NIC. Contractually, the user owns and is responsible for maintaining their own equipment and connections to the point of presence, and the ISP owns and maintains from there to the Internet backbone. Typically, a modem or combination modem/router device performs both the physical and logical transformation of what the user's equipment needs in the way of data signaling into what the ISP's side needs to see.

- The Internet backbone is a mesh of internetworking nodes and high-capacity, long-distance communications circuits that connect them to each other and to the ISPs.

The physical connections handle the electronic (or electro-optical) signaling that the devices themselves need to communicate with each other. The logical connections are how the right pair of endpoints—the user NIC and the server or other endpoint NIC—get connected with each other, rather than with some other device “out there” in the wilds of the Internet. This happens through address resolution and name resolution.

Packets and Encapsulation

Note in that FTP example earlier how the file I uploaded was broken into a series of chunks, or packets, rather than sent in one contiguous block of data. Each packet is sent across the Internet by itself (wrapped in header and trailer information that identifies the sender, recipient, and other important information we'll go into later). Breaking a large file into packets allows smarter trade-offs between actual throughput rate and error rates and recovery strategies. (Rather than resend the entire file because line noise corrupted one or two bytes, we might need to resend just the one corrupted packet.) However, since sending each packet requires a certain amount of handshake overhead to package, address, route, send, receive, unpack, and acknowledge, the smaller the packet size, the less efficient the overall communications system can be.
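A little arithmetic makes this trade-off concrete. The sketch below assumes a fixed per-packet overhead of 40 bytes (roughly a minimal IPv4 header plus a minimal TCP header, used here only as an illustrative figure) and compares how much of each packet is useful payload against how much must be resent when one packet is corrupted. The function names are hypothetical.

```python
OVERHEAD_BYTES = 40  # assumed per-packet header cost, for illustration only


def efficiency(payload_bytes, overhead_bytes=OVERHEAD_BYTES):
    """Fraction of each transmitted packet that is useful payload."""
    return payload_bytes / (payload_bytes + overhead_bytes)


def cost_of_one_resend(payload_bytes, overhead_bytes=OVERHEAD_BYTES):
    """Bytes that must be sent again if a single packet is corrupted."""
    return payload_bytes + overhead_bytes


for payload in (64, 512, 1460):
    print(f"payload {payload:>5} bytes: "
          f"efficiency {efficiency(payload):.1%}, "
          f"one resend costs {cost_of_one_resend(payload)} bytes")
```

Small packets waste a larger share of the channel on headers, while large packets make each retransmission more expensive; choosing a packet size means balancing the two.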

Sending a file by breaking it up into packets has an interesting consequence: if each packet has a unique serial number as part of its header, as long as the receiving application can put the packets back together in the proper order, we don't need to care what order they are sent in or arrive in. So if the receiver requested a retransmission of packet number 41, it can still receive and process packet 42, or even several more, while waiting for the sender to retransmit packet 41.
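Here is a minimal Python sketch of that idea, assuming each packet is simply a pair of sequence number and payload. The helper names `packetize` and `reassemble` are hypothetical, and the shuffle stands in for whatever reordering the network might introduce.

```python
import random


def packetize(data, size):
    """Split data into (sequence_number, payload) pairs of at most size bytes."""
    return [(seq, data[start:start + size])
            for seq, start in enumerate(range(0, len(data), size))]


def reassemble(packets):
    """Rebuild the original data, whatever order the packets arrived in."""
    return b"".join(payload for _, payload in sorted(packets))


original = b"The quick brown fox jumps over the lazy dog"
packets = packetize(original, 8)
random.Random(0).shuffle(packets)   # simulate out-of-order arrival
rebuilt = reassemble(packets)
print(rebuilt == original)
```

Because the sequence numbers travel with the payloads, the receiver needs no knowledge of the order in which the packets were sent.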

Right away we see a key feature of packet-based communications systems: we have to add information to each packet in order to tell both the recipient and the next layer in the protocol stack what to do with it! In our FTP example earlier, we start by breaking the file up into fixed-length chunks, or packets, of data, but we've got to wrap each one with data that says where it's from, where it's going, and the packet sequence number. That data goes in a header (data preceding the actual segment data itself), and new end-to-end error-correcting checksums are put into a new trailer. This creates a new datagram at this level of the protocol stack. That new, longer datagram is given to the next layer down in the protocol stack. That layer probably has to do something to it; that means it will encapsulate the datagram it was given by adding another header and trailer. At the receiver, each layer of the protocol unwraps the datagram it receives from the lower layer (by processing the information in its header and trailer, and then removing them), and passes this shorter datagram up to the next layer. Sometimes, the datagram from a higher layer in a protocol stack will be referred to as the payload for the next layer down. Figure 5.1 shows this in action.

FIGURE 5.1 Wrapping: layer-by-layer encapsulation

The flow of wrapping, as shown in Figure 5.1, illustrates how a higher-layer protocol logically communicates with its opposite number in another system by having to first wrap and pass its datagrams to lower-layer protocols in its own stack. It's not until the Physical layer connections that signals actually move from one system to another. (Note that this even holds true for two virtual machines talking to each other over a software-defined network that connects them, even if they're running on the same bare metal host!) In OSI 7-layer reference model terminology, this means that layer n of the stack takes the service data unit (SDU) it receives from layer n+1, processes and wraps the SDU with its layer-specific header and footer to produce the datagram at its layer, and passes this new datagram as an SDU to the next layer down in the stack.
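The wrapping and unwrapping described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `Layer` class, the three-layer `STACK`, and the bracketed marker strings standing in for headers and trailers are all hypothetical, chosen only to make the nesting visible.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """A toy protocol layer that wraps an SDU in a fixed header and trailer."""
    name: str
    header: bytes
    trailer: bytes = b""

    def wrap(self, sdu: bytes) -> bytes:
        # Encapsulate: the SDU from the layer above becomes this layer's payload.
        return self.header + sdu + self.trailer

    def unwrap(self, pdu: bytes) -> bytes:
        # Check and strip this layer's own header and trailer, nothing else.
        too_short = len(pdu) < len(self.header) + len(self.trailer)
        if too_short or not (pdu.startswith(self.header) and pdu.endswith(self.trailer)):
            raise ValueError(f"malformed datagram at the {self.name} layer")
        return pdu[len(self.header):len(pdu) - len(self.trailer)]


# Hypothetical stack, listed from the highest layer to the lowest.
STACK = [
    Layer("transport", b"[T]"),
    Layer("network", b"[N]"),
    Layer("data link", b"[D]", b"[/D]"),
]


def send(payload: bytes) -> bytes:
    for layer in STACK:              # wrap on the way down the stack
        payload = layer.wrap(payload)
    return payload


def receive(frame: bytes) -> bytes:
    for layer in reversed(STACK):    # unwrap on the way back up
        frame = layer.unwrap(frame)
    return frame


wire_data = send(b"hello")           # b"[D][N][T]hello[/D]"
```

Each layer touches only its own wrapper, which is why the receiving stack can hand an ever-shorter datagram upward until the original payload emerges.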

We'll see what these headers look like, layer by layer, in a bit.

Addressing, Routing, and Switching

In plain old telephone systems (POTS), your phone number uniquely identified the pair of wires that came from the telephone company's central office switches to your house. If you moved, you got a new phone number, or the phone company had to physically disconnect your old house's pair of wires from its switch at that number's terminal, and hook up your new house's phone line instead. From the start (thanks in large part to the people from Bell Laboratories and other telephone companies working with the ARPANet team), we knew we needed something more dynamic, adaptable, and easier to use. What they developed was a way to define three things: a logical address (the IP or Internet Protocol address), the physical address or identity of each NIC in each device (its media access control or MAC address), and a way to map from one to the other while allowing a device to be in one place today and another place tomorrow. From its earliest ARPANet days until the mid-1990s, the Internet Assigned Numbers Authority (IANA) handled the assignment of IP addresses and address ranges to users and organizations who requested them.

Routing is the process of determining what path or set of paths to use to send a set of data from one endpoint device through the network to another. In POTS, the route of the call was static—once you set up the circuit, it stayed up until the call was completed, unless a malfunction interrupted the call. The Internet, by contrast, does not route calls—it routes individually addressed packets from sender to recipient. If a link or a series of communications nodes in the Internet itself go down, senders and receivers do not notice; subsequent packets will be dynamically rerouted to working connections and nodes. This also allows a node (or a link) to say “no” to some packets as part of load-leveling and traffic management schemes. The Internet (via its protocol stack) handles routing as a distributed, loosely coupled, and dynamic process—every node on the Internet maintains a variety of data that help it decide which of the nodes it's connected to should handle a particular packet that it wants to forward to the ultimate recipient (no matter how many intermediate nodes it must pass through to get there).

Switching is the process used by one node to receive data on one of its input ports and choose which output port to send the data to. (If a particular device has only one input and one output, the only switching it can do is to pass the data through or deny it passage.) A simple switch depends on the incoming data stream to explicitly state which path to send the data out on; a router, by contrast, uses routing information and routing algorithms to decide what to tell its built-in switch to properly route each incoming packet.
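As a rough illustration of how a router might use routing information to pick an output port, the sketch below applies longest-prefix matching, a common forwarding rule that this text does not itself describe. The route table, the port names, and the function name are hypothetical.

```python
import ipaddress

# Hypothetical routing table: (destination network, output port).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "port-0"),      # default route
    (ipaddress.ip_network("10.0.0.0/8"), "port-1"),
    (ipaddress.ip_network("10.20.0.0/16"), "port-2"),
]


def choose_output_port(destination: str) -> str:
    """Return the port for the most specific route that contains the address."""
    address = ipaddress.ip_address(destination)
    matches = [(network, port) for network, port in ROUTES if address in network]
    # The default route matches every IPv4 address, so `matches` is never empty.
    _, port = max(matches, key=lambda match: match[0].prefixlen)
    return port


port_for_branch = choose_output_port("10.20.3.4")    # most specific match: port-2
port_for_other = choose_output_port("192.0.2.1")     # falls back to port-0
```

The routing decision (which port?) is separate from the switching action (move the data to that port), which mirrors the distinction drawn above between a router and a simple switch.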

Another way to find and communicate with someone is to know their name and then somehow look that name up in a directory. By the mid-1980s, the Internet was making extensive use of such naming conventions, creating the Domain Name System (DNS). A domain name consists of sets of characters joined by periods (or “dots”); “bbc.co.uk” illustrates the higher-level domain “.co.uk” for commercial entities in the United Kingdom, and “bbc” is the name itself. Taken together that makes a fully qualified domain name. The DNS consists of a set of servers that resolve domain names into IP addresses, registrars that assign and issue both IP addresses and the domain names associated with them to parties who want them, and the regulatory processes that administer all of that.

Network Segmentation

Segmentation is the process of breaking a large network into smaller ones. “The Internet” acts as if it is one gigantic network, but it's not. It's actually many millions of internet segments that come together at many different points to provide seamless service. An internet segment (sometimes called “an internet,” lowercase) is a network of devices that communicate using TCP/IP and thus support the OSI 7-layer reference model. This segmentation can happen at any of the three lower layers of our protocol stacks, as we'll see in a bit. Devices within a network segment can communicate with each other, but which layer the segments connect on, and what kind of device implements that connection, can restrict the outside world to seeing the connection device (such as a router) and not the nodes on the subnet below it.

Segmentation of a large internet into multiple, smaller network segments provides a number of practical benefits, which affect the choice of how to join segments and at which layer of the protocol stack. The switch or router that runs the segment, and its connection with the next higher segment, are two single points of failure for the segment. If the device fails or the cable is damaged, no device on that segment can communicate with the other devices or the outside world. This can also help isolate other segments from failure of routers or switches, cables, or errors (or attacks) that are flooding a segment with traffic.

Subnets are different from network segments. We'll take a deep dive into the fine art of subnetting after we've looked at the overall protocol stack.

URLs and the Web

In 1990, Tim Berners-Lee, a researcher at CERN in Switzerland, confronted the problem that researchers were having: they could not find and use what they already knew or discovered, because they could not effectively keep track of everything they wrote and where they put it! CERN was drowning in its own data. Berners-Lee wanted to take the much older idea of a hyperlinked or hypertext-based document one step further. Instead of just having links to points within the document, he wanted to have documents be able to point to other documents anywhere on the Internet. This required that several new ingredients be added to the Internet:

- A unique way of naming a document that included where it could be found on the Internet, which came to be called a locator

- Ways to embed those unique names into another document, where the document's creator wanted the links to be (rather than just in a list at the end, for example)

- A means of identifying a computer on the Internet as one that stored such documents and would make them available as a service

- Directory systems and tools that could collect the addresses or names of those document servers

- Keyword search capabilities that could identify what documents on a server contained which keywords

- Applications that an individual user could run that could query multiple servers to see if they had documents the user might want, and then present those documents to the user to view, download, or use in other ways

- Protocols that could tie all of those moving parts together in sensible, scalable, and maintainable ways

By 1991, new words entered our vernacular: webpage, Hypertext Transfer Protocol (HTTP), Web browser, Web crawler, and URL, to name a few. Today, all of that has become so commonplace, so ubiquitous, that it's easy to overlook just how many powerfully innovative ideas had to come together all at once. Knowing when to use the right uniform resource locators (URLs) became more important than understanding IP addresses. URLs provide us with an unambiguous way to identify a protocol, a server on the network, and a specific asset on that server. Additionally, a URL as a command line can contain values to be passed as variables to a process running on the server. By 1998, the business of growing and regulating both IP addresses and domain names grew to the point that a new nonprofit, nongovernmental organization was created, the Internet Corporation for Assigned Names and Numbers (ICANN, pronounced “eye-can”).
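To see how a URL identifies a protocol, a server, a specific asset, and values passed to a server-side process, consider this short sketch using Python's standard `urllib.parse` module. The URL itself is a made-up example on the reserved `example.com` domain.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical URL, used only to show the parts.
url = "https://www.example.com:8443/reports/q3.html?year=2019&format=pdf"
parts = urlsplit(url)

protocol = parts.scheme            # "https": which protocol to speak
server = parts.hostname            # "www.example.com": which server to contact
port = parts.port                  # 8443: which port on that server
asset = parts.path                 # "/reports/q3.html": which asset to fetch
values = parse_qs(parts.query)     # {"year": ["2019"], "format": ["pdf"]}
```

The query string after the `?` is where a URL carries values to be handed to a process running on the server.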

The rapid acceptance of the World Wide Web and the HTTP concepts and protocols that empowered it demonstrates a vital idea: the layered, keep-it-simple approach embodied in the TCP/IP protocol stack and the OSI 7-layer model works. Those stacks give us a strong but simple foundation on which we can build virtually any information service we can imagine.

Topologies

The brief introduction (or review) of networking fundamentals we've had thus far brings us to ask an important question: how do we hook all of those network devices and endpoints together? We clearly cannot build one switch with a million ports on it, but we can use the logical design of the Internet protocols to let us build more practical, modular subsystem elements and then connect them in various ways to achieve what we need.

A topology, to network designers and engineers, is the basic logical geometry by which different elements of a network connect together. Topologies consist of nodes and the links that connect them. Experience (and much mathematical study!) gives us some simple, fundamental topologies to use as building blocks for larger systems:

- Point-to-point is the simplest topology: two nodes, with one link between them. This is sometimes called peer-to-peer if the two nodes have relatively the same set of privileges and responsibilities with respect to each other (that is, neither node is in control of the other). If one node fails, or the connection fails, the network cannot function; whether the other node continues to function normally or has to abnormally terminate processes is strictly up to that node (and its designers and users).

- Bus topologies or networks connect multiple nodes together, one after the other, in series, as shown in Figure 5.2. The bus provides the infrastructure for sending signals to all of the nodes, and for sending addressing information (sometimes called device select) that allows each node to know when to listen to the data and when to ignore it. Well-formed bus designs should not require each node to process data or control signals in order to pass them on to the next node on the bus. Backplanes are a familiar implementation of this; for example, the industry-standard PCI bus provides a number of slots that can take almost any PCI-compatible device (in any slot). A hot-swap bus has special design features that allow one device to be powered off and removed without requiring the bus, other devices, or the overall system to be shut down. These are extensively used in storage subsystems. Bus systems typically are limited in length, rarely exceeding three meters overall.

FIGURE 5.2 Bus topology

- Ring networks are a series of point-to-point-to-point connections, with the last node on the chain looped back to connect to the first, as shown in Figure 5.3. As point-to-point connections, each node has to be functioning properly in order to do its job of passing data on to the next node on the ring. This does allow ring systems nodes to provide signal conditioning that can boost the effective length of the overall ring (if each link has a maximum 10 meter length, then 10 nodes could span a total length of 50 meters out and back). Nodes and connections all have to work in order for the ring to function. Rings are designed to provide either a unidirectional or bidirectional flow of control and data.

FIGURE 5.3 Ring network topology

- Star networks have one central node that is connected to multiple other nodes via point-to-point connections. Unlike a point-to-point network, the node in the center has to provide (at least some) services to control and administer the network. The central node is therefore a server (since it provides services to others on the star network), and the other nodes are all clients of that server. This is shown in Figure 5.4.

FIGURE 5.4 Star (or tree) network topology

- Mesh networks in general provide multiple point-to-point connections between some or all of the nodes in the mesh, as shown in Figure 5.5. Mesh designs can be uniform (all nodes have point-to-point connections to all other nodes), or contain subsets of nodes with different degrees of interconnection. As a result, mesh designs can have a variety of client-server, server-to-server, or peer-to-peer relationships built into them. One mesh architecture you probably use every day is the mobile phone system, with its cellular design based on a mesh of base stations providing the connectivity needed. Mesh designs are used in datacenters, since they provide multiple paths between multiple CPUs, storage controllers, or Internet-facing communications gateways. Mesh designs are also fundamental to supercomputer designs, for the same reason. Mesh designs tend to be very robust, since normal TCP/IP alternate routing can allow traffic to continue to flow if one or a number of nodes or connections fail; at worst, overall throughput of the mesh and its set of nodes may decrease until repairs can be made.

FIGURE 5.5 Mesh network topology (fully connected)
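One way to compare these building blocks is to count the point-to-point links each needs for a given number of nodes. The sketch below is a simple illustration; the function names are hypothetical, and the counts follow directly from the definitions above (a closed loop for the ring, one link per client for the star, every pair connected for the uniform mesh).

```python
def ring_links(nodes: int) -> int:
    """A ring closes the chain, so it needs one link per node."""
    if nodes < 3:
        raise ValueError("a ring needs at least three nodes")
    return nodes


def star_links(nodes: int) -> int:
    """Every node except the central one has a single link to the center."""
    if nodes < 2:
        raise ValueError("a star needs a center and at least one other node")
    return nodes - 1


def full_mesh_links(nodes: int) -> int:
    """A uniform mesh links every pair of nodes: n * (n - 1) / 2."""
    if nodes < 2:
        raise ValueError("a mesh needs at least two nodes")
    return nodes * (nodes - 1) // 2


# Link counts for 10 nodes: ring 10, star 9, full mesh 45.
comparison = {
    "ring": ring_links(10),
    "star": star_links(10),
    "mesh": full_mesh_links(10),
}
```

The rapid growth in the mesh figure is the price paid for its robustness, which is one reason practical designs often connect only subsets of nodes with full interconnection.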

With these in mind, a typical SOHO (small office/home office) network at a coffee house that provides Wi-Fi for its customers might use a mix of the following topology elements:

- A simple mesh of two point-to-point connections via ISPs to the Internet to provide a high degree of availability

- Point-to-point from that mesh to a firewall system

- Star connections to support three subnets: one for retail systems, one for store administration, and one for customer or staff Wi-Fi access. Each of these would be its own star network.

“Best Effort” and Trusting Designs

The fundamental design paradigm of TCP/IP and OSI 7-layer stacks is that they deliver “best-effort” services. In contract law and systems engineering, a best efforts basis sets expectations for services being requested and delivered; the server will do what is reasonable and prudent, but will not go “beyond the call of duty” to make sure that the service is performed, day or night, rain or shine! There are no guarantees. Nothing asserts that if your device's firmware does things the “wrong” way its errors will keep it from connecting, getting traffic sent and received correctly, or doing any other network function. Nor does anything guarantee that your traffic will go where you want it to and nowhere else, that it will not be seen by anybody else along the way, or that it will not suffer any corruption of content. Yes, each individual packet does have parity and error correction and detection checksums built into it. These may (no guarantees!) cause any piece of hardware along the route to reject the packet as “in error,” and request that the sender retransmit it. An Internet node or the NIC in your endpoint might or might not detect conflicts in the way that fields within the packet's wrappers are set; it may or may not be smart enough to ask for a resend, or pass back some kind of error code and a request that the sender try again.

Think about the idea of routing a packet in a best-effort way: the first node that receives the packet will try to figure out which node to forward it on to, so that the packet has a pretty good chance of getting to the recipient in a reasonable amount of time. But this depends on ways of one node asking other nodes if they know or recognize the address, or know some other node that does.

The protocols do define a number of standardized error codes that relate to the most commonly known errors, such as attempting to send traffic to an address that is unknown and unresolvable. A wealth of information is available about what might cause such errors, how participants might work to resolve them, and what a recommended strategy is to recover from one when it occurs. What this means is that the burden for managing the work that we want to accomplish by means of using the Internet is not the Internet's responsibility. That burden of plan, do, check, and act is allocated to the higher-level functions within application programs, operating systems, and NIC hardware and device drivers that are using these protocols, or the people and business logic that actually invokes those applications in the first place.

In many respects, the core of TCP/IP is a trusting design. The designers (and the Internet) trust that equipment, services, and people using it will behave properly, follow the rules, and use the protocols in the spirit and manner in which they were written. Internet users and their equipment are expected to cooperate with each other, as each spends a fragment of their time, CPU power, memory, or money to help many other users achieve what they need.

One consequence of this trusting, cooperative, best-efforts nature of our Internet is one we need to face head on: security becomes an add-on. We'll see how to add it on, layer by layer, later in this chapter.

Two Protocol Stacks, One Internet

Let's look at two different protocol stacks for computer systems networks. Both are published, public domain standards; both are widely adopted around the world. The “geekiest” of the standards is TCP/IP, the Transmission Control Protocol over Internet Protocol standard (two layers of the stack right there!). Its four layers define how we build up networks from the physical interconnections up to what it calls the Transport layer, where the heavy lifting of turning a file transfer into Internet traffic starts to take place. TCP/IP also defines and provides homes for many of the other protocols that make addressing, routing, naming, and service delivery happen.

By contrast, the OSI 7-layer reference model is perhaps the more “getting-business-done” of the two stacks. It focuses on getting the day-to-day business and organizational tasks done that really are why we wanted to internetwork computers in the first place. This is readily apparent when we start with its topmost, or application, layer. We use application programs to handle personal, business, government, and military activities; those applications certainly need the operating systems that they depend on for services, but no one does their online banking just using Windows 10 or Red Hat Linux alone!

Many network engineers and technicians may thoroughly understand the TCP/IP model, since they use it every day, but they have little or no understanding of the OSI 7-layer model. They often see it as too abstract or too conceptual to have any real utility in the day-to-day world of network administration or network security. Nothing could be further from the truth! As you'll see, the OSI model's top three layers provide powerful ways for you to think about information systems security, beyond just keeping the networks secure. In fact, many of the most troublesome information security threats that SSCPs must deal with occur at the upper layers of the OSI 7-layer reference model, beyond the scope of what TCP/IP concerns itself with. As an SSCP, you need a solid understanding of how TCP/IP works: how its protocols for device and port addressing and mapping, routing and delivery, and network management all play together. You will also need an equally thorough understanding of the OSI 7-layer model, how it contrasts with TCP/IP, and what happens in its top three layers. Taken together, these two protocol stacks provide the infrastructure of all of our communications and computing systems. Understanding them is the key to understanding why and how networks can be vulnerable, and it provides the clues you need to choose the best ways to secure those networks.

Complementary, Not Competing, Frameworks

Both the TCP/IP protocol stack and the OSI 7-layer reference model grew out of efforts in the 1960s and 70s to continue to evolve and expand both the capabilities of computer networks and their usefulness. While it all started with the ARPANet project in the United States, international business, other governments, and universities worked diligently to develop compatible and complementary network architectures, technologies, and systems. By the early 1970s, commercial, academic, military, and government-sponsored research networks were already using many of these technologies, quite often at handsome profits.

Transmission Control Protocol over Internet Protocol (TCP/IP) was developed during the 1970s, based on original ARPANet protocols and a variety of competing (and in some cases conflicting) systems developed in private industry and in other countries. From 1978 to 1982, these ideas were merged together to become the published TCP/IP standard; ARPANet was officially migrated to this standard on January 1, 1983. Since this protocol became known as “the Internet protocol,” that date is as good a date as any to declare as the “birth of the Internet.” TCP/IP is defined as consisting of four basic layers. (We'll see why that “over” is in the name in a moment.)

The decade of the 1970s continued to be one of incredible innovation. It saw significant competition between ideas, standards, and design paradigms in almost every aspect of computing and communications. In trying to dominate their markets, many mainframe computer manufacturers and telephone companies set de facto standards that all but made it impossible (contractually) for any other company to make equipment that could plug into their systems and networks. Internationally, this was closing some markets while opening others. Although the courts were dismantling these near-monopolistic barriers to innovation in the United States, two different international organizations, the International Organization for Standardization (ISO) and the International Telegraph and Telephone Consultative Committee (CCITT), both worked on ways to expand the TCP/IP protocol stack to embrace higher-level functions that business, industry, and government felt were needed. By 1984, this led to the publication of the International Telecommunications Union (ITU, the renamed CCITT) Standard X.200 and ISO Standard 7498.

This new standard had two major components, and here is where some of the confusion among network engineers and IT professionals begins. The first component was the Basic Reference Model, which is an abstract (or conceptual) model of what computer networking is and how it works. This became known as the Open Systems Interconnection Reference Model, sometimes known as the OSI 7-layer model. (Since ISO subsequently developed more reference models in the open systems interconnection family, it's preferable to refer to this one as the OSI 7-layer reference model to avoid confusion.) The other major component was a whole series of highly detailed technical standards.

In many respects, both TCP/IP and the OSI 7-layer reference model largely agree on what happens in the first four layers of their model. But while TCP/IP doesn't address how things get done beyond its top layer, the OSI reference model does. Its three top layers are all dealing with information stored in computers as bits and bytes, representing both the data that needs to be sent over the network and the addressing and control information needed to make that happen. The bottommost layer has to transform computer representations of data and control into the actual signaling needed to transmit and receive across the network. (We'll look at each layer in greater depth in subsequent sections as we examine its potential vulnerabilities.)

Let's use the OSI 7-layer reference model, starting at the physical level, as our roadmap and guide through internetworking. Table 5.2 shows a simplified side-by-side comparison of the OSI and TCP/IP models and illustrates how the OSI model's seven layers fit within a typical organization's use of computer networks. You'll note the topmost layer is “layer 8,” the layer of people, business, purpose, and intent. (Note that there are many such informal “definitions” of the layers above layer 7, some humorous, some useful to think about using.) As we go through these layers, layer by layer, you'll see where TCP/IP differs in its approach, its naming conventions, or just where it and OSI have different points of view. With a good overview of the protocols layer by layer, we'll look in greater detail at topics that SSCPs need to know more about, or need to know how to do with great skill and cunning!

TABLE 5.2 OSI 7-layer model and TCP/IP 4-layer model in context

| System components | OSI layer | TCP/IP, protocols and services (examples) | Key address element | Datagrams are called… | Role in the information architecture |
| --- | --- | --- | --- | --- | --- |
| People | | | Name, building and room, email address, phone number, … | Files, reports, memos, conversations, … | Company data, information assets |
| Application software + people processes, gateways | 7 – Application | HTTP, email, FTP, … | URL, IP address + port | Upper-layer data | Implement business logic and processes |
| | 6 – Presentation | SSL/TLS, MIME, MPEG, compression | | | |
| | 5 – Session | | | | |
| Load balancers, gateways | 4 – Transport | TCP, UDP | IP address + port | Segments | Implement connectivity with clients, partners, suppliers, … |
| Routers, OS software | 3 – Network | IPv4, IPv6, IPSec, ICMP, … | IP address | Packets | |
| Switches, hubs, routers | 2 – Data Link | 802.1X, PPP, … | MAC address | Frames | |
| Cables, antenna, … | 1 – Physical | | Physical connection | Bits | |

Layer 1: The Physical Layer

Layer 1, the Physical layer, is very much the same in both TCP/IP and the OSI 7-layer model. The same standards are used in both. It typically consists of hardware devices and electrical devices that transform computer data into signals, move the signals to other nodes, and transform received signals back into computer data. Layer 1 is usually embedded in the NIC and provides the physical handshake between one NIC and its connections to the rest of the network. It does this by a variety of services, including the following:

- Transmission media control, controlling the circuits that drive the radio, fiber-optic, or electrical cable transmitters and receivers. This verifies that the fiber or cable or Wi-Fi system is up and operating and ready to send or receive. In point-to-point wired systems, this is the function that tells the operating system that “a network cable may have come unplugged,” for example. (Note that this can be called media control or medium control; since most NICs and their associated interface circuits probably support only one kind of media, you might think that medium is the preferred term. Both are used interchangeably.)

- Collision detection and avoidance manages the transmitter to prevent it from interfering with other simultaneous transmissions by other nodes. (Think of this as waiting until the other people stop talking before you start!)

- The physical plug, socket, connector, or other mechanical device that is used to connect the NIC to the network transmission medium. The most standard form of such interconnection uses a Bell System RJ-45 connector and eight-wire cabling as the transmission medium for electrical signals. The eight wires are twisted together in pairs (for noise cancellation reasons) and can be with or without a layer of metalized Mylar foil to provide further shielding from the electromagnetic noise from power lines, radio signals, or other cabling nearby. Thus, these systems use either UTP (unshielded twisted pair) or STP (shielded twisted pair) to achieve speed, quality, and distance needs.

- Interface with the Data Link layer, managing the handoff of datagrams between the media control elements and the Data Link layer's functions

Multiple standards, such as the IEEE 802 series, document the details of the various physical connections and the media used at this layer.

At Layer 1, the datagram is the bit. The details of how different media turn bits (or handfuls of bits) into modulated signals to place onto wires, fibers, radio waves, or light waves are (thankfully!) beyond the scope of what SSCPs need to deal with. That said, it's worth considering that at Layer 1, addresses don't really matter! For wired (or fibered) systems, it's that physical path from one device to the next that gets the bits where they need to go; that receiving device has to receive all of the bits, unwrap them, and use Layer 2 logic to determine if that set of bits was addressed to it.

This also demonstrates a powerful advantage of this layers-of-abstraction model: nearly everything interesting that needs to happen to turn the user's data (our payload) into transmittable, receivable physical signals can happen with absolutely zero knowledge of how that transmission or reception actually happens! This means that swapping 10BaseT physical media for Cat6 Ethernet cabling can give your systems as much as a thousandfold increase in throughput, with no changes needed at the network address, protocol, or applications layers. (At most, very low-level device driver settings might need to be configured via operating systems functions, as part of such an upgrade.)

It's also worth pointing out that the physical domain defines both the collision domain and the physical segment. A collision domain is the physical or electronic space in which multiple devices are competing for each other's attention; if their signals out-shout each other, some kind of collision detection and avoidance is needed to keep things working properly. For wired (or fiber-connected) networks, all of the nodes connected by the same cable or fiber are in the same collision domain; for wireless connections, all receivers that can detect a specific transmitter are in that transmitter's collision domain. (If you think that suggests that typical Wi-Fi usage means lots of overlapping collision domains, you'd be right!) At the physical level, that connection is also known as a segment. But don't get confused: we segment (chop into logical pieces) a network into logical sub-networks, which we'll call subnets, at either Layer 2 or Layer 3, but not at Layer 1.

Layer 2: The Data Link Layer

Layer 2, the Data Link layer, performs the data transfer from node to node of the network. As with Layer 2 in TCP/IP, it manages the logical connection between the nodes (over the link provided by Layer 1), provides flow control, and handles error correction in many cases. At this layer, the datagram is known as a frame, and frames consist of the data passed to Layer 2 by the higher layer, plus addressing and control information.

The IEEE 802 series of standards further refine the concept of what Layer 2 in OSI delivers by setting forth two sublayers:

- The Media Access Control (MAC) sublayer uses the unique MAC addresses of the NICs involved in the connection as part of controlling individual device access to the network and how devices use network services. The MAC layer grants permission to devices to transmit data as a result.

- The Logical Link Control (LLC) sublayer links the MAC sublayer to higher-level protocols by encapsulating their respective PDUs in additional header/trailer fields. LLC can also provide frame synchronization and additional error correction.

The MAC address is a 48-bit address, typically written (for humans) as six octets: six 8-bit binary numbers, usually written as two-digit hexadecimal numbers separated by dashes, colons, or no separator at all. For example, 3A-7C-FF-29-01-05 is the same 48-bit address as 3A7CFF290105. Standards dictate that the first 24 bits (first three hex digit pairs) are the organizational identifier of the NIC's manufacturer, and the remaining 24 bits (last three hex digit pairs) are a NIC-specific address. The IEEE assigns the organizational identifier, and the manufacturer assigns NIC numbers as it sees fit. Each 24-bit field represents over 16.7 million possibilities, which for a time seemed to be more than enough addresses; not anymore. Part of IPv6 is the adoption of a larger, 64-bit MAC address, and the protocols to allow devices with 48-bit MAC addresses to participate in IPv6 networks successfully.

Note that one of the bits in the first octet (in the organizational identifier) flags whether that MAC address is universally or locally administered. Many NICs have features that allow the local systems administrator to overwrite the manufacturer-provided MAC address with one of their own choosing. This does provide the end user organization with a great capability to manage devices by using their own internal MAC addressing schemes, but it can be misused to allow one NIC to impersonate another one (so-called MAC address spoofing).
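The address layout described above can be sketched in a few lines of Python. This is a minimal illustration, not a vendor tool: the function names `parse_mac` and `describe_mac` are hypothetical, and the bit positions used (0x02 in the first octet for the universally/locally administered flag, 0x01 for the individual/group flag, which the text above does not discuss) follow the IEEE 802 addressing convention.

```python
import re

def parse_mac(text: str) -> bytes:
    """Normalize a MAC address written with dashes, colons, or no separator."""
    hex_digits = re.sub(r"[-:]", "", text)
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", hex_digits):
        raise ValueError(f"not a 48-bit MAC address: {text!r}")
    return bytes.fromhex(hex_digits)

def describe_mac(text: str) -> dict:
    """Split a MAC address into its two 24-bit halves and read its flag bits."""
    mac = parse_mac(text)
    first_octet = mac[0]
    return {
        # First 24 bits: organizational identifier assigned by the IEEE.
        "organizational_id": mac[:3].hex("-").upper(),
        # Last 24 bits: NIC-specific value assigned by the manufacturer.
        "nic_specific": mac[3:].hex("-").upper(),
        # Bit 0x02 of the first octet: set when the address is locally administered.
        "locally_administered": bool(first_octet & 0x02),
        # Bit 0x01 of the first octet: set for group (multicast/broadcast) addresses.
        "group_address": bool(first_octet & 0x01),
    }

info = describe_mac("3A-7C-FF-29-01-05")
print(info)
```

Both spellings of the example address parse to the same six bytes. Note that the first octet of the example, 3A, has the 0x02 bit set, so under this convention the sample address would itself be flagged as locally administered.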

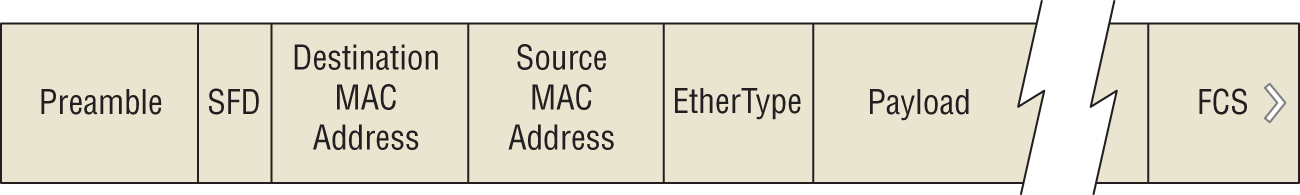

Let's take a closer look at the structure of a frame, shown in Figure 5.6. As mentioned, the payload is the set of bits given to Layer 2 by Layer 3 (or a layer-spanning protocol) to be sent to another device on the network. Conceptually, each frame consists of:

- A preamble, which is a 56-bit series of alternating 1s and 0s. This synchronization pattern helps serial data receivers ensure that they are receiving a frame and not a series of noise bits.

- The Start Frame Delimiter (SFD), which signals to the receiver that the preamble is over and that the real frame data is about to start. Different media require different SFD patterns.

- The destination MAC address.

- The source MAC address.

- The Ether Type field, which indicates either the length of the payload in octets or the protocol type that is encapsulated in the frame's payload.

- The payload data, of variable length (depending on the Ether Type field).

- A Frame Check Sequence (FCS), which provides a checksum across the entire frame, to support error detection.

The inter-packet gap is a period of dead space on the media, which helps transmitters and receivers manage the link and helps signify the end of the previous frame and the start of the next. It is not, specifically, a part of either frame, and it can be of variable length.

FIGURE 5.6 Data Link layer frame format
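To make the frame fields concrete, here is a hedged Python sketch that assembles and then parses an Ethernet-style frame. Several things are assumptions for illustration: the helper names `build_frame` and `parse_frame` are hypothetical; the preamble and SFD are left out because NIC hardware normally adds and strips them; the FCS is computed with `zlib.crc32` (the same CRC-32 polynomial Ethernet uses) and packed least significant byte first, which matches common practice but should be checked against your capture tools; and real Ethernet pads short payloads to a minimum length, which this sketch ignores.

```python
import struct
import zlib

def build_frame(dst: bytes, src: bytes, ether_type: int, payload: bytes) -> bytes:
    """Assemble destination MAC, source MAC, EtherType, payload, and FCS."""
    body = dst + src + struct.pack("!H", ether_type) + payload
    # FCS: CRC-32 over everything from the destination address through the payload.
    fcs = struct.pack("<I", zlib.crc32(body))
    return body + fcs

def parse_frame(frame: bytes) -> dict:
    """Split a frame into its fields and verify the FCS."""
    dst, src, type_or_len = struct.unpack("!6s6sH", frame[:14])
    payload, fcs = frame[14:-4], frame[-4:]
    return {
        "dst": dst.hex(":"),
        "src": src.hex(":"),
        "type_or_len": type_or_len,
        # Values of 1536 (0x0600) and above name the encapsulated protocol;
        # values of 1500 and below give the payload length in octets.
        "meaning": "protocol type" if type_or_len >= 0x0600 else "payload length",
        "payload": payload,
        "fcs_ok": struct.pack("<I", zlib.crc32(frame[:-4])) == fcs,
    }

frame = build_frame(
    bytes.fromhex("3a7cff290105"),   # destination MAC (example value)
    bytes.fromhex("3a7cff290106"),   # source MAC (example value)
    0x0800,                          # EtherType for IPv4
    b"example payload",
)
parsed = parse_frame(frame)
print(parsed["meaning"], parsed["fcs_ok"])

# Corrupt one payload byte in transit; the receiver's FCS check should now fail.
damaged = frame[:20] + bytes([frame[20] ^ 0xFF]) + frame[21:]
print(parse_frame(damaged)["fcs_ok"])
```

The second call shows why the FCS supports error detection only: the receiver can tell the frame was damaged, but nothing in the frame says how to repair it.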

Layer 2 devices include bridges, modems, NICs, and switches that don't use IP addresses (thus called Layer 2 switches). Firewalls make their first useful appearance at Layer 2, performing rule-based and behavior-based packet scanning and filtering. Datacenter designs can make effective use of Layer 2 firewalls.

Layer 3: The Network Layer

Layer 3, the Network layer, is defined in the OSI model as the place where variable-length sequences of fixed-length packets (that make up what the user or higher protocols want sent and received) are transmitted (or received). Routing and switching happen at Layer 3. Logical paths between two hosts are created; data packets are routed and forwarded to destinations; packet sequencing, congestion control, and error handling occur here. Layer 3 is where we see a lot of the Internet's “best efforts” design thinking at work, or perhaps, not at work; it is left to individual designers who build implementations of the protocols to decide how Layer 3–like functions in their architecture will handle errors at the Network layer and below.

ISO 7498/4 also defines a number of network management and administration functions that (conceptually) reside at Layer 3. These protocols provide greater support to routing, managing multicast groups, address assignment (at the Network layer), and other status information and error handling capabilities. Note that it is the nature of the payload (the datagrams being carried by the protocols) that makes these functions belong to the Network layer, not the protocol that carries or implements them.

The most common device we see at Layer 3 is the router; combination bridge-routers, or brouters, are also in use (bridging together two or more Wi-Fi LAN segments, for example). Layer 3 switches are those that can deal with IP addresses. Firewalls also are a part of the Layer 3 landscape.

At Layer 3, the datagram is known as a packet. Packets start with a packet header, which contains a number of fields of interest to us; see Figure 5.7. For now, let's focus on the IP version 4 format, which has been in use since the early 1980s and is still almost universally used:

- Both the source and destination address fields are 32-bit IPv4 addresses.

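- The Identification field, Flags, and Fragment Offset work together to support fragmentation and the reassembly of packet fragments. (Error detection for the header itself is handled by the separate Header Checksum field.)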

- The Time To Live (or TTL) field keeps a packet from floating around the Internet forever. Each router or gateway that processes the packet decrements the TTL field, and if its value hits zero, the packet is discarded rather than passed on. If that happens, the router or gateway is supposed to send an Internet Control Message Protocol (ICMP) packet to the originator with fields set to indicate which packet didn't live long enough to get where it was supposed to go. (The tracert function uses TTL in order to determine what path packets are taking as they go from sender to receiver.)

- The Protocol field indicates which protocol's data the packet is carrying, such as ICMP, TCP, UDP, Exterior Gateway Protocol (EGP), encapsulated IPv6, or Interior Gateway Routing Protocol (IGRP).

- Finally, the data (or payload) portion.

FIGURE 5.7 IPv4 packet format
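The header fields listed above can be read out of raw bytes with Python's `struct` module. The sketch below is illustrative only: `parse_ipv4_header` and `forward` are hypothetical names, the sample header is hand-built with documentation-range addresses and a zero placeholder in the checksum field, and only a handful of protocol numbers from the IANA registry are included in the lookup table.

```python
import ipaddress
import struct

# A few assigned protocol numbers (not a complete list).
PROTOCOLS = {1: "ICMP", 6: "TCP", 8: "EGP", 9: "IGP", 17: "UDP", 41: "IPv6"}

def parse_ipv4_header(packet: bytes) -> dict:
    """Decode the fixed 20-byte portion of an IPv4 header."""
    (ver_ihl, _tos, total_len, ident, flags_frag,
     ttl, proto, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    return {
        "version": ver_ihl >> 4,
        "header_bytes": (ver_ihl & 0x0F) * 4,
        "total_length": total_len,
        "identification": ident,
        "dont_fragment": bool(flags_frag & 0x4000),
        "more_fragments": bool(flags_frag & 0x2000),
        # Fragment Offset is counted in units of 8 bytes.
        "fragment_offset": (flags_frag & 0x1FFF) * 8,
        "ttl": ttl,
        "protocol": PROTOCOLS.get(proto, f"unknown ({proto})"),
        "src": str(ipaddress.IPv4Address(src)),
        "dst": str(ipaddress.IPv4Address(dst)),
    }

def forward(ttl: int):
    """What one router does to TTL: decrement it, and discard the packet at zero.

    Returns the new TTL, or None when the packet must be dropped (at which
    point a real router would send an ICMP message back to the originator).
    """
    ttl -= 1
    return ttl if ttl > 0 else None

# Hand-built sample header: version 4, 20-byte header, TTL 64, protocol 6 (TCP).
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 40, 0x1C46, 0x4000, 64, 6, 0,   # checksum left as 0 (placeholder)
    ipaddress.IPv4Address("192.0.2.1").packed,
    ipaddress.IPv4Address("198.51.100.7").packed,
)
fields = parse_ipv4_header(sample)
print(fields)
```

Calling `forward` repeatedly on a small starting TTL mimics how path-tracing tools work: they send packets with TTL 1, 2, 3, and so on, and note which router reports each expiry.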

You'll note that we went from MAC addresses at Layer 2, to IP addresses at Layer 3. This requires the use of Address Resolution Protocol (ARP), one of several protocols that span multiple layers. We'll look at those together after we examine Layer 7.

Layer 4: The Transport Layer

Now that we've climbed up to Layer 4, things start to get a bit more complicated. This layer is the home to many protocols that are used to transport data between systems; one such protocol, the Transmission Control Protocol, gave its name (TCP) to the entire protocol stack! Let's first look at what the layer does, and then focus on some of the more important transport protocols.

Layer 4, the Transport layer, is where variable-length data from higher-level protocols or from applications gets broken down into a series of fixed-length packets; it also provides quality of service, greater reliability through additional flow control, and other features. In TCP/IP, Layer 4 is where TCP and UDP work; the OSI reference model goes on to define five different connection-mode transport protocols (named TP0 through TP4), each supporting a variety of capabilities. It's also at Layer 4 that we start to see tunneling protocols come into play.

Transport layer protocols primarily work with ports. Ports are software-defined labels for the endpoints of a connection between two processes, usually ones that are running on two different computers. The source and destination port, plus the protocol identification and other protocol-related information, are contained in that protocol's header. Each protocol defines what fields are needed in its header and prescribes required and optional actions that receiving nodes should take based on header information, errors in transmission, or other conditions. Connections are typically bidirectional. A server usually listens on a standardized port number, while the client often uses a dynamically assigned (ephemeral) port for its end of the connection; some protocols use the same port number on both sender and receiver. Some protocols may use multiple port numbers simultaneously.
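As a small illustration of ports living in the protocol header, the sketch below packs and unpacks a UDP header, which consists of just four 16-bit fields: source port, destination port, length, and checksum. The function names are hypothetical, the port numbers are example values (49152 standing in for a client-side port, 53 for DNS), and the checksum is left at zero, which UDP over IPv4 permits as "no checksum computed."

```python
import struct

def build_udp_datagram(src_port: int, dst_port: int, data: bytes) -> bytes:
    """Prefix the data with an 8-byte UDP header."""
    length = 8 + len(data)          # header plus data, in bytes
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)
    return header + data

def read_ports(transport_pdu: bytes) -> tuple:
    """Read source and destination port from the first four header bytes.

    TCP and UDP both place these two fields first, so this works for either.
    """
    return struct.unpack("!HH", transport_pdu[:4])

datagram = build_udp_datagram(49152, 53, b"query")
src_port, dst_port = read_ports(datagram)
print(src_port, dst_port)
```

A receiving host uses the destination port to decide which local process gets the data, and the source port to know where to send any reply.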

Over time, the use of certain port numbers for certain protocols became standardized. Important ports that SSCPs should recognize when they see them are shown in Table 5.3, which also has a brief description of each protocol.

TABLE 5.3 Common TCP/IP ports and protocols

| Protocol | TCP/UDP | Port number | Description |
| --- | --- | --- | --- |
| File Transfer Protocol (FTP) | TCP | 20/21 | FTP control is handled on TCP port 21, and its data transfer can use TCP port 20 as well as dynamic ports, depending on the specific configuration. |
| Secure Shell (SSH) | TCP | 22 | Used to manage network devices securely at the command level; secure alternative to Telnet, which does not support secure connections. |
| Telnet | TCP | 23 | Teletype-like unsecure command line interface used to manage network devices. |
| Simple Mail Transfer Protocol (SMTP) | TCP | 25 | Transfers mail (email) between mail servers, and between end user (client) and mail server. |
| Domain Name System (DNS) | TCP/UDP | 53 | Resolves domain names into IP addresses for network routing. Hierarchical, using top-level domain servers (.com, .org, etc.) that support lower-tier servers for public name resolution. DNS servers can also be set up in private networks. |
| Dynamic Host Configuration Protocol (DHCP) | UDP | 67/68 | DHCP assigns IP addresses automatically on networks that do not use static IP address assignment (almost all of them). |
| Trivial File Transfer Protocol (TFTP) | UDP | 69 | TFTP offers a method of file transfer without the session establishment requirements that FTP has; because it uses UDP instead of TCP, the receiving device must verify complete and correct transfer. TFTP is typically used by devices to upgrade software and firmware. |
| Hypertext Transfer Protocol (HTTP) | TCP | 80 | HTTP is the main protocol used by Web browsers and is thus used by any client that uses files located on Web servers. |
| Post Office Protocol (POP) v3 | TCP | 110 | POP version 3 provides client–server email services, including transfer of complete inbox (or other folder) contents to the client. |
| Network Time Protocol (NTP) | UDP | 123 | One of the most overlooked protocols is NTP. NTP is used to synchronize the clocks of devices on the Internet. Most secure services simply will not support devices whose clocks are too far out of sync, for example. |
| NetBIOS | TCP/UDP | 137/138/139 | NetBIOS (more correctly, NetBIOS over TCP/IP, or NBT) has long been the central protocol used to interconnect Microsoft Windows machines. |
| Internet Message Access Protocol (IMAP) | TCP | 143 | IMAP (currently version 4) is the second of the main protocols used to retrieve mail from a server. While POP has wider support, IMAP supports a wider array of remote mailbox operations that can be helpful to users. |
| Simple Network Management Protocol (SNMP) | TCP/UDP | 161/162 | SNMP is used by network administrators as a method of network management. SNMP can monitor, configure, and control network devices. SNMP traps can be set to notify a central server when specific actions are occurring. |
| Border Gateway Protocol (BGP) | TCP | 179 | BGP is used on the public Internet and by ISPs to maintain very large routing tables and traffic processing, which involve millions of entries to search, manage, and maintain every moment of the day. |
| Lightweight Directory Access Protocol (LDAP) | TCP/UDP | 389 | LDAP provides a mechanism of accessing and maintaining distributed directory information. LDAP is based on the ITU-T X.500 standard but has been simplified and altered to work over TCP/IP networks. |
| Hypertext Transfer Protocol over SSL/TLS (HTTPS) | TCP | 443 | HTTPS is used in conjunction with HTTP to provide the same services but does it using a secure connection that is provided by either SSL or TLS. |
| Lightweight Directory Access Protocol over TLS/SSL (LDAPS) | TCP/UDP | 636 | LDAPS provides the same function as LDAP but over a secure connection that is provided by either SSL or TLS. |
| FTP over TLS/SSL (RFC 4217) | TCP | 989/990 | FTP over TLS/SSL uses the FTP protocol, which is then secured using either SSL or TLS. |
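When reading firewall logs or packet captures, it helps to turn a transport protocol and port number back into a service name. The sketch below hard-codes a subset of Table 5.3 as a lookup table; the names `WELL_KNOWN_PORTS` and `identify_service` are hypothetical, and a port number only suggests which service is in use, since any program can be configured to listen on any port.

```python
# A subset of Table 5.3, keyed by (transport protocol, port number).
WELL_KNOWN_PORTS = {
    ("tcp", 20): "FTP (data)",
    ("tcp", 21): "FTP (control)",
    ("tcp", 22): "SSH",
    ("tcp", 23): "Telnet",
    ("tcp", 25): "SMTP",
    ("tcp", 53): "DNS",
    ("udp", 53): "DNS",
    ("udp", 67): "DHCP",
    ("udp", 68): "DHCP",
    ("udp", 69): "TFTP",
    ("tcp", 80): "HTTP",
    ("tcp", 110): "POP3",
    ("udp", 123): "NTP",
    ("tcp", 143): "IMAP",
    ("tcp", 179): "BGP",
    ("tcp", 443): "HTTPS",
}

def identify_service(transport: str, port: int) -> str:
    """Return the conventional service for a port, or a note that it is not listed."""
    return WELL_KNOWN_PORTS.get((transport.lower(), port), "not in table")

for transport, port in [("TCP", 22), ("UDP", 123), ("TCP", 8080)]:
    print(transport, port, identify_service(transport, port))
```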

It's good to note at this point that as we move down the protocol stack, each successive layer adds additional addressing, routing, and control information to the data payload it received from the layer above it. This is done by encapsulating or wrapping its own header around what it's given by the layers of the protocol stack or the application-layer socket call that asks for its service. Thus, the datagram produced at the Transport layer contains the protocol-specific header and the payload data. This is passed to the Network layer, along with the required address information and other fields; the Network layer puts that information into its IPv4 (or IPv6) header, sets the Protocol field accordingly, appends the datagram it just received from the Transport layer, and passes that on to the Data Link layer. (And so on…)
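The wrapping described above can be traced byte by byte. In this simplified Python sketch, application data is wrapped first in a UDP header, then in an IPv4 header, then in an Ethernet header. All function names, addresses, and port numbers are illustrative assumptions; checksums are left at zero and the Ethernet FCS trailer is omitted to keep the focus on the nesting.

```python
import ipaddress
import struct

def udp_datagram(src_port: int, dst_port: int, data: bytes) -> bytes:
    """Transport layer: add an 8-byte UDP header (checksum left at 0)."""
    return struct.pack("!HHHH", src_port, dst_port, 8 + len(data), 0) + data

def ipv4_packet(src: str, dst: str, protocol: int, transport_pdu: bytes) -> bytes:
    """Network layer: add a 20-byte IPv4 header and set the Protocol field."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20 + len(transport_pdu),   # version/IHL, ToS, total length
        0, 0,                               # identification, flags/fragment offset
        64, protocol, 0,                    # TTL, Protocol, checksum (placeholder)
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )
    return header + transport_pdu

def ethernet_frame(dst_mac: bytes, src_mac: bytes, packet: bytes) -> bytes:
    """Data Link layer: add a 14-byte header with EtherType 0x0800 (IPv4)."""
    return dst_mac + src_mac + struct.pack("!H", 0x0800) + packet

data = b"hello"
datagram = udp_datagram(49152, 53, data)
packet = ipv4_packet("192.0.2.1", "198.51.100.7", 17, datagram)   # 17 = UDP
frame = ethernet_frame(bytes.fromhex("3a7cff290105"),
                       bytes.fromhex("3a7cff290106"), packet)

for name, pdu in [("data", data), ("datagram", datagram),
                  ("packet", packet), ("frame", frame)]:
    print(f"{name:9s} {len(pdu):3d} bytes")
```

With a 5-byte payload, the sizes grow to 13, 33, and 47 bytes as each layer adds its header, and the original data sits untouched at the end of every wrapper.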

Most of the protocols that use Layer 4 either use TCP as a stateful, connection-oriented way of transferring data or use UDP, which is stateless and not connection oriented. TCP bundles its data and headers into segments (not to be confused with network segments at Layer 1), whereas UDP and some other Transport layer protocols call their bundles datagrams:

- Stateful communications processes have sender and receiver go through a sequence of steps, and sender and receiver have to keep track of which step the other has initiated, successfully completed, or asked for a retry on. Each of those steps is often called the state of the process at the sender or receiver. Stateful processes require an unambiguous identification of sender and recipient, and some kind of protocols for error detection and requests for retransmission, which a connection provides.

- Stateless communication processes do not require sender and receiver to know where the other is in the process. This means that the sender does not need a connection, does not need to service retransmission requests, and may not even need to validate who the listeners are. Broadcast traffic is typically both stateless and connectionless.
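The contrast between the two styles can be sketched with a toy model. This is not real TCP: the class `StatefulSender`, its three states, and its one-message-per-sequence-number bookkeeping are simplifications invented for illustration, as is the `stateless_send` function.

```python
from enum import Enum, auto

class State(Enum):
    CLOSED = auto()
    SYN_SENT = auto()
    ESTABLISHED = auto()

class StatefulSender:
    """Tracks where the conversation stands and what still awaits acknowledgment."""

    def __init__(self):
        self.state = State.CLOSED
        self.next_seq = 0
        self.unacked = {}            # sequence number -> data awaiting an ACK

    def open(self):
        if self.state is not State.CLOSED:
            raise RuntimeError("connection already in progress")
        self.state = State.SYN_SENT  # connection request sent, waiting for reply

    def on_syn_ack(self):
        if self.state is not State.SYN_SENT:
            raise RuntimeError("unexpected reply")
        self.state = State.ESTABLISHED

    def send(self, data: bytes):
        if self.state is not State.ESTABLISHED:
            raise RuntimeError("no connection established")
        seq = self.next_seq
        self.unacked[seq] = data     # keep a copy in case a retry is requested
        self.next_seq += 1
        return seq, data

    def on_ack(self, seq: int):
        self.unacked.pop(seq, None)  # receiver confirmed delivery

    def pending_retransmissions(self):
        return sorted(self.unacked)

def stateless_send(data: bytes) -> bytes:
    """Fire and forget: no connection, no memory of what was sent."""
    return data

sender = StatefulSender()
sender.open()
sender.on_syn_ack()
sender.send(b"first")
sender.send(b"second")
sender.on_ack(0)
print(sender.pending_retransmissions())
```

The stateful sender refuses to transmit until the connection steps have completed and remembers every unacknowledged message; the stateless function keeps no record at all, which is why it cannot service a retransmission request.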

Layer 4 devices include gateways (which can bridge dissimilar network architectures together, and route traffic between them) and firewalls.