Chapter 10

Incident Response and Recovery

Anomalies happen. Tasks stop working right. Users can't connect reliably, or their connections don't stay up as they should. Servers get sluggish, as if they are handling an abnormally high demand for services. Hardware or software systems just stop working, either with “blue screens of death” or by using normal restart procedures but at unexpected times. On the factory floor, safety systems start malfunctioning; equipment and materials get damaged, people get hurt. Autonomous warehouse bots start dropping what they're carrying, or run into the shelf racks (or each other). Vehicles won't start—or stop. Networks go down, and take the VoIP systems with them. Now, your organization's computer emergency response team springs into action to characterize the incident, contain it, and get your systems back to operating normally.

What? Your organization doesn't have such a team? Let's jump right in, do some focused preparation, and improve your operational information security posture so that you can detect, identify, contain, eradicate, and restore after the next anomaly.

Defeating the Kill Chain One Skirmish at a Time

It's often been said that the attackers have to get lucky only once, whereas the defenders have to be lucky every moment of every day. When it comes to advanced persistent threats (APTs), which pose potentially the most damaging attacks to our information systems, another, more operationally useful rule applies. APTs must of necessity use a robust kill chain to discover, reconnoiter, characterize, infiltrate, gain control, and further identify resources to attack within the system; make their “target kill”; and copy, exfiltrate, or destroy the data and systems of their choice, cover their tracks, and then leave. Things get worse: most businesses, nongovernmental organizations (NGOs), and government departments and agencies are probably the object of interest of dozens of different, unrelated attackers, each following its own kill chain logic to achieve its own set of goals (which may or may not overlap with those of other attackers). Taken together, there may be thousands if not hundreds of thousands of APTs out there in the wild, each seeking its own dominance, power, and gain. The millions of information systems owned and operated by businesses and organizations worldwide are their hunting grounds. As a senior official in the British Intelligence services remarked in 2021, cybercrime attacks have become big business because they work; they're part of a highly successful business model that embraces the entire Dark Web ecosystem and everyone who is a part of it. (Some forecasters predict that by 2030, if cybercrime were a nation it would have the fifth largest economy on the planet.)

The good news, however, is that as you've seen in previous chapters, SSCPs have some field-proven information risk management and mitigation strategies that they can help their companies or organizations adopt. These frameworks, and the specific risk mitigation controls, are tailored to the information security needs of your specific organization. With them, you can first deter, prevent, and avoid attacks. Then you can detect the ones that get past that first set of barriers, and characterize them in terms of real-time risks to your systems. You then take steps to contain the damage they're capable of causing, and help the organization recover from the attack and get back up on its feet.

You probably will not do battle with an APT directly; you and your team won't have the luxury (if we can call it that!) of trying to design to defeat a particular APT and thwart its attempts to seek its objectives at your expense. Instead, you'll wage your defensive campaign one skirmish at a time. You'll deflect or defeat one scouting party as you strengthen one perimeter; you'll detect and block a probe from gaining entry into your systems. You'll find where an illicit user ID has made itself part of your system, and you'll contain it, quarantine it, and ultimately block its attempts to expand its presence inside your operations. As you continually work with your systems' designers and maintainers, you'll help them find ways to tighten down a barrier here or mitigate a vulnerability there. Step by step, you strengthen your information security posture.

By now, you and your organization should be prepared to respond when those alarms start ringing. Right?

Why should SSCPs put so much emphasis on APTs and their use of the kill chain? In virtually every major data breach in the past decade, the attack pattern was low and slow: sequences of small-scale efforts designed to not cause alarm, each of which gathered information or enabled the attacker to take control of a target system. More low and slow attacks launched from that first target against other target systems. More reconnaissance. Finally, with all command, control, and hacking capabilities in place, the attack began in earnest to exfiltrate sensitive, private, or otherwise valuable data out of the target's systems.

Note that if any of those low and slow attack steps had been thwarted, or if any of those early reconnaissance efforts, or attempts to install command and control tools, had been detected and stopped, then the attacker might have given up and moved on to another lucrative target.

Preparation and planning are the keys to survival. In previous chapters, you've learned how to translate risk mitigation into specific physical, technical, and administrative controls that you'd recommend to management to implement as part of the organization's information systems security posture. You've also learned how to build in the detection capabilities that should raise the alarms when things aren't looking right. More importantly, you've grasped the need to aggregate alarm data with systems status, state, and health information to generate indications and warnings of a possible information security incident in the making and the urgent and compelling need to promptly escalate such potential bad news to senior management and leadership.

Kill Chains: Reviewing the Basics

In Chapter 1, “The Business Case for Decision Assurance and Information Security,” we looked briefly at the value chain, which models how organizations create value in the products or services they provide to their customers. The value chain brings together the sequence of major activities, the infrastructures that support them, and the key resources that they need to transform each input into an output. The value chain focuses our attention on both the outputs and the outcomes that result from each activity. Critical to thinking about the value chain is that each major step provides the organization a chance to improve the end-to-end experience by reducing costs (by reducing waste, scrap, and rework) and improving the quality of each output and outcome along the way. We also saw that every step along the value chain is an opportunity for something to go wrong. A key input could be delayed or fail to meet the required specifications for quality or quantity. Skilled labor might not be available when we need it; critical information might be missing, incomplete, or inaccurate.

The name kill chain comes from military operational planning (which, after all, is the business of killing the opponent's forces and breaking their systems). Kill chains are outcomes-based planning concepts and are geared to achieving national strategic, operational, or tactical outcomes as part of larger battle plans. These kill chains tend to be planned from the desired outcome back toward the starting set of inputs: if you want to destroy the other side's naval fleet while at anchor at its home port, you have to figure out what kind of weapons you have or can get that can destroy such ships. Then you work out how to get those weapons to where they can damage the ships (by air drop, surface naval weapons fire, submarine, small boats, cargo trucks, or other stealthy means). And so on. You then look at each way the other side can deter, defeat, or prevent you from attacking. By this point, you probably realize that you need to know more about their naval base, its defenses, its normal patterns of activity, its supply chains, and its communications systems. With all of that information, you start to winnow down the pile of options into a few reasonably sensible ways to defeat their navy while it's at home port, or you realize that's beyond your capabilities and you look for some other target that might be easier to attack that can help achieve the same outcome you want to achieve by defeating their navy.

With that as a starting point, we can see that an information systems kill chain is the total set of actions, plans, tasks, and resources used by an advanced persistent threat to

- Identify potential target information systems that suit their objectives.

- Gain access to those targets, and establish command and control over portions of those targets' systems.

- Use that command and control to carry out further tasks in support of achieving their objectives.

How do APTs apply this kill chain in practice? In broad general terms, APT actors do the following:

- Survey the marketplaces for potential opportunities to achieve an outcome that supports their objectives

- Gather intelligence data about potential targets, building an initial profile on each target

- Use that intelligence to inform the way they conduct probes against selected targets, building up fingerprints of the target's systems and potentially exploitable vulnerabilities

- Conduct initial intrusions on selected targets and their systems, gathering more technical intelligence

- Establish some form of command and control presence on the target systems

- Elevate privilege so as to enable broader, deeper search for exploitable information assets in the target's systems and networks

- Conduct further reconnaissance to discover internetworked systems that may be worth reconnaissance or exploitation

- Begin the exploitation of the selected information assets: exfiltrate the data, disrupt or degrade the targeted information processes, and so on

- Complete the exploitation activities

- Obfuscate or destroy evidence of their activities in the target's system

- Disconnect from the target

The more complex, pernicious APTs will use multiple target systems as proxies in their kill chains, using one target's systems to become a platform from which they can run reconnaissance and exploitation against other targets.
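One way to make this list operational is to treat the stages as an ordered sequence and ask how far along it your observations suggest an attacker has progressed. The following is a minimal sketch under stated assumptions: the stage names are our own shorthand for the bullets above, and the `summarize` helper is hypothetical, not part of any standard tool.

```python
from enum import IntEnum


class KillChainStage(IntEnum):
    """Shorthand labels (our own) for the APT activities listed above, in order."""
    SURVEY_OPPORTUNITIES = 1
    GATHER_INTELLIGENCE = 2
    PROBE_TARGETS = 3
    INITIAL_INTRUSION = 4
    ESTABLISH_C2 = 5
    ELEVATE_PRIVILEGE = 6
    FURTHER_RECON = 7
    BEGIN_EXPLOITATION = 8
    COMPLETE_EXPLOITATION = 9
    COVER_TRACKS = 10
    DISCONNECT = 11


def summarize(observed):
    """Return the furthest stage observed and the stages still ahead of it.

    Every stage in the second list is a step the attacker still has to
    complete, and so is one more chance for the defender to break the chain.
    """
    furthest = max(observed)
    remaining = [stage for stage in KillChainStage if stage > furthest]
    return furthest, remaining


# Hypothetical observations: probes were seen, then a first intrusion.
furthest, remaining = summarize(
    [KillChainStage.PROBE_TARGETS, KillChainStage.INITIAL_INTRUSION]
)
```

Real attacks rarely march through the stages in strict order, so treat the ordering as a planning aid rather than a prediction.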

Events vs. Incidents

Let's suppose for a moment that your company and its information systems have caught the attention of an APT actor. How might their attentions show up as observable activities from your side of the interface? Most probably, your systems will experience a variety of anomalies, of many different types, which may seem completely unrelated. At some point, one of those anomalies catches your interest, or you think you see a pattern beginning to emerge from a sequence of events.

Back in Chapter 2, “Information Security Fundamentals,” we defined an event of interest as something that happens that might be an indicator of something that might impact the security of your information systems. We looked at how an event of interest may or may not be a warning of a computer security incident in the making, or even the first stages of such an incident.

But what is a computer security incident? Several definitions by NIST, ITIL, and the IETF* suggest that computer security incidents are events involving a target information system in ways that

- Are unplanned

- Are disruptive

- Are hostile, malicious, or harmful in intent

- Compromise the confidentiality, integrity, availability, authenticity, or other security characteristics of the affected information systems

- Willfully violate the system owners' policies for acceptable use, security, or access

Consider the unplanned shutdown of an email server within your systems. You'd need to do a quick investigation to rule out natural causes (such as a thunderstorm-induced power surge) and accidental causes (the maintenance technician who stumbled and pulled the power cord loose on his way to the floor). Yes, your vulnerability assessment might have discovered these and made recommendations as to how to reduce their potential for disruption. But if neither weather nor a hardware-level accident caused the shutdown, you still have a dilemma: was it a software design problem that caused the crash, or a vulnerability that was exploited by a person or persons unknown?

Or consider the challenges of differentiating phishing attacks from innocent requests for information. An individual caller to your main business phone number, seeking contact information for your IT team, might be making an honest and innocent inquiry (perhaps it's an SSCP looking for a job!). However, if a number of such innocent inquiries across many days have attempted to map out your entire organization's structure, complete with individual names, phone numbers, and email addresses, you're being scouted!
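A scouting pattern like this only becomes visible when someone keeps count. The sketch below shows one simple way to do that, assuming that your front desk or help desk logs each inquiry with a date and the department asked about. The function name, the 14-day window, and the threshold of five departments are all illustrative assumptions, not recommended values.

```python
from datetime import date, timedelta


def scouting_suspected(inquiries, window_days=14, threshold=5):
    """Flag possible reconnaissance from a log of contact-information inquiries.

    inquiries: list of (date, department_asked_about) tuples.
    Returns True when, within the most recent window, callers have asked
    about at least `threshold` distinct departments.
    """
    if not inquiries:
        return False
    latest = max(day for day, _ in inquiries)
    cutoff = latest - timedelta(days=window_days)
    recent_departments = {dept for day, dept in inquiries if day > cutoff}
    return len(recent_departments) >= threshold


# Hypothetical log: five departments asked about in five days.
calls = [
    (date(2021, 3, 1), "IT"),
    (date(2021, 3, 2), "HR"),
    (date(2021, 3, 3), "Finance"),
    (date(2021, 3, 4), "Legal"),
    (date(2021, 3, 5), "Facilities"),
]
suspicious = scouting_suspected(calls)
```

A count like this does not prove hostile intent; it only tells you which pattern of events of interest deserves a closer look.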

What this leads to is that your organization needs to clearly spell out a triage process by which the IT and information security teams can recognize an event, quickly characterize it, and decide the right process to apply to it. Figure 10.1 illustrates such a process.

FIGURE 10.1 Incident triage and response process
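To show what "recognize, characterize, and decide" might look like as a first-cut rule set, here is a minimal sketch. It does not reproduce the flow in Figure 10.1; the `Event` fields simply mirror the incident characteristics listed earlier in this section, and the routing labels and the order of the checks are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One observed anomaly, flagged against the incident characteristics above."""
    description: str
    unplanned: bool = False
    disruptive: bool = False
    hostile_intent_suspected: bool = False
    compromises_security: bool = False  # confidentiality, integrity, availability, ...
    violates_policy: bool = False
    benign_cause_confirmed: bool = False  # weather or accident, verified by investigation


def triage(event):
    """Return a hypothetical routing decision for the event."""
    if event.benign_cause_confirmed:
        return "operational"   # hand off to normal IT support
    if (event.hostile_intent_suspected or event.compromises_security
            or event.violates_policy):
        return "incident"      # escalate to the incident response team
    if event.unplanned or event.disruptive:
        return "investigate"   # event of interest: cause not yet known
    return "routine"


# The email server example: same symptom, different routing.
cord_pulled = Event("email server shutdown", unplanned=True, disruptive=True,
                    benign_cause_confirmed=True)
unexplained = Event("email server shutdown", unplanned=True, disruptive=True)
```

In this sketch the unexplained shutdown stays in the "investigate" state until someone can either confirm a benign cause or find signs of exploitation, which is exactly the dilemma described above.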

Note that our role as SSCPs requires us to view these incidents from the overall information risk management and mitigation perspective as well as from the information systems security perspective. It's quite likely that the computer security perspective is the more challenging one, demanding a greater degree of rapid-fire analysis and decision making, so we'll focus on it from here on out.

Harsh Realities of Real Incidents

Before we take a deep dive into incident detection and response, it's probably useful to take a look at a number of real attacks that have taken place and see them broken down across time. You've heard that, on average, an attacker is inside their target's system for nearly six months before their presence is detected. It's natural to ask, “just what are those attackers actually doing during all of that time,” as a way of guiding your own thinking about planning and preparing to detect and respond to attackers.

Frameworks and theoretical models like the cyber kill chain are useful for part of this; they help organize our meta-thinking (our thinking about how we think) as we use them to build our own conceptual models of events such as complex cyberattacks. This prepares us to get down into the details; and as we saw in our previous investigations of vulnerability assessment and management, some of the best tools to help in that process are the national databases and query systems that gather, collate, and present the details of vulnerabilities that have been discovered and the exploits that have been used against them. MITRE's CVE data, for example, is a fundamental tool for every information security specialist. As of July 30, 2021, this database had 157,777 records of publicly disclosed vulnerability data regarding almost every form of IT and OT hardware, systems software, and applications in the world. Correlating your IT and OT architecture's system baseline with the applicable CVE items informs your vulnerability assessment process.
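The correlation step mentioned above can be sketched in a few lines. This example assumes a simplified record layout: each baseline entry names a host, product, and version, and each vulnerability record lists the exact product versions it affects. The identifiers and product names are made-up placeholders, not real CVE entries, and real CVE feeds describe affected products with richer naming and version ranges than the exact match used here.

```python
def applicable_cves(baseline, cve_records):
    """Map each host in the baseline to the vulnerability IDs that affect it.

    baseline:    list of dicts with "host", "product", and "version" keys.
    cve_records: list of dicts with "id" and "affected", where "affected"
                 is a list of (product, version) tuples.
    """
    # Index the vulnerability records by the product versions they affect.
    index = {}
    for record in cve_records:
        for product, version in record["affected"]:
            index.setdefault((product, version), []).append(record["id"])

    # Look up every baseline item in that index.
    findings = {}
    for item in baseline:
        matches = index.get((item["product"], item["version"]), [])
        if matches:
            findings.setdefault(item["host"], []).extend(matches)
    return findings


# Placeholder data for illustration only.
baseline = [
    {"host": "mail01", "product": "examplemail", "version": "2.1"},
    {"host": "web01", "product": "exampleweb", "version": "5.0"},
]
cve_records = [
    {"id": "CVE-EXAMPLE-0001", "affected": [("examplemail", "2.1")]},
    {"id": "CVE-EXAMPLE-0002", "affected": [("exampleweb", "4.9")]},
]
findings = applicable_cves(baseline, cve_records)
```

The value of the exercise depends entirely on how current and complete your system baseline is; an asset that isn't in the baseline can't be matched to anything.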

Organized another way, that same vulnerability and exploit reporting data can inform your incident response preparedness efforts. Let's see how.

MITRE's ATT&CK Framework

More than a decade of research efforts across the information security community formed the foundation of this framework, which was intended to produce a “living lab” capability that supports and encourages security practitioners and researchers to find new ways to derive meaning from the experiences of real-world attacks. In the words of its creators, it “is just as much about the mindset and process of using it as much as it is about the knowledge base itself.” Two views of this knowledge base illustrate this:

- From exploit to attack plan: Seen in bottom-up fashion, each exploit or group of exploits is placed in the context of purpose or intent; this reveals or emphasizes the why of the exploit, while keeping the details of how it is done a mouse click away. Individual tactics can then be grouped together as operationally larger steps in an overall attack plan, by cataloging each exploit based on what it might be useful for from the attacker's perspective.

- From campaign plan objectives to exploits: Attackers have large-scale purposes, whether these be disruption of a manufacturing system, extortion, or theft of protected or sensitive data. These larger-scale objectives break down into groups of common phases of activity, such as target selection, attaining access and control, and so forth. Driven by analyses of real-world attacks, these attack stages or phases are flowed down to the exploits themselves.

ATT&CK provides three defined views of this knowledge base, where the exploits in question (and thus their tactical use and objective or purpose) are organized in ways that best reflect attacks on three classes of targets: enterprise systems, mobile systems, and industrial control systems (ICSs). Figure 10.2 shows a small portion of the enterprise framework, which illustrates how individual exploits are bottom-up grouped by purpose and then by stage in an overall attack plan. It shows how botnets, for example, can serve multiple tactical needs during the resource development phase of an attack.

FIGURE 10.2 ATT&CK enterprise framework (partial)

Source: This screenshot, and all other data from ATT&CK, is used under MITRE's grant of permission to anyone for its use; see https://attack.mitre.org/resources/terms-of-use/ for details, and then start using this tool in your own organization.

ATT&CK is live data; as each new CVE report comes in, it is characterized (either by those who report it or by ATT&CK-affiliated researchers) and tagged so that it can be placed into its rightful places (plural) in these attack frameworks. And when attackers demonstrate new tactics and techniques and organize the ebb and flow of their tactical steps in new ways, ATT&CK is adjusted by its researcher-operators to reflect those new, painfully learned lessons.
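The idea that one technique can have several "rightful places" is easy to see in a small data structure. The sketch below uses a tiny, hand-copied excerpt of technique and tactic names as an assumption for illustration; it is not the ATT&CK dataset, and you should check the live knowledge base for current content. The `by_tactic` helper is hypothetical.

```python
from collections import defaultdict

# Hand-copied, illustrative excerpt: technique name -> tactics it can serve.
TECHNIQUE_TACTICS = {
    "Valid Accounts": [
        "Initial Access",
        "Persistence",
        "Privilege Escalation",
        "Defense Evasion",
    ],
    "Acquire Infrastructure: Botnet": ["Resource Development"],
    "Compromise Infrastructure: Botnet": ["Resource Development"],
}


def by_tactic(mapping):
    """Invert the mapping: group techniques under each tactic they support."""
    grouped = defaultdict(list)
    for technique, tactics in mapping.items():
        for tactic in tactics:
            grouped[tactic].append(technique)
    return dict(grouped)


tactic_view = by_tactic(TECHNIQUE_TACTICS)
```

Reading the dictionary by technique gives the bottom-up view; reading `tactic_view` by tactic gives the top-down view. Both are the same data, organized for a different question.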

Learning from Others' Painful Experiences

MITRE's ATT&CK team has rounded up a number of resources to help you become familiar with the tool, its data, and its use. Whether you want to do this with videos, blogs, ebooks, or other materials, your journey can begin at https://attack.mitre.org/resources/getting-started/.

Set this book down, take a break, and go spend some time digging into some of what's out there on ATT&CK; then, armed with more of an attacker's-eye view, come back, and let's start organizing, preparing, training, and equipping your own incident responders.

Incident Response Framework

All organizations, regardless of size or mission, should have a framework or process that they use to manage their information security incident response efforts. It is a vital part of your organization's business logic. Due care and due diligence both require it. The sad truth is, however, many organizations don't get around to thinking through their incident response process needs until after the first really scary information security incident has taken place. As they sweep up the digital broken glass from the break-in, assess their losses due to stolen or compromised data, and start figuring out how to get back into operation, they say “Never again!” They promise themselves that they'll write down the lessons they've just painfully learned and be better prepared.

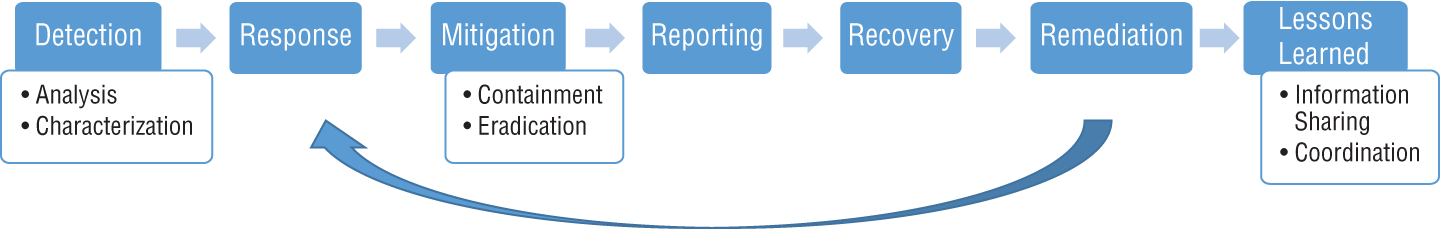

(ISC)2 and others define the incident response framework as a formal plan or process for managing the organization's response to a suspected information security incident. It consists of a series of steps that start with detection and run through response, mitigation, reporting, recovery, and remediation, ending with a lessons learned and onward preparation phase. Figure 10.3 illustrates this process. Please note that this is a conceptual flow of the steps involved; reality tells us that incidents unfold in strange and complex ways, and your incident response team needs to be prepared to cycle around these steps in different ways based on what they learn and what results they get from the actions they take.

FIGURE 10.3 Incident response process
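Because real incidents cycle around these steps rather than marching straight through them, it can help to think of the process as a set of allowed transitions instead of a fixed sequence. The following sketch assumes one possible rule: the team may advance to the next phase or drop back to any earlier one, but may not skip ahead. The phase names follow the steps listed above; the rule itself and the `IncidentTracker` class are hypothetical.

```python
PHASES = [
    "detection",
    "response",
    "mitigation",
    "reporting",
    "recovery",
    "remediation",
    "lessons learned",
]


def can_move(current, target):
    """Allow one step forward, or a return to any earlier phase."""
    i, j = PHASES.index(current), PHASES.index(target)
    return j == i + 1 or j < i


class IncidentTracker:
    """Record the path an incident actually takes through the phases."""

    def __init__(self):
        self.history = [PHASES[0]]

    def move_to(self, target):
        if not can_move(self.history[-1], target):
            raise ValueError(f"cannot go from {self.history[-1]} to {target}")
        self.history.append(target)


# A new indicator during mitigation sends the team back to detection.
tracker = IncidentTracker()
tracker.move_to("response")
tracker.move_to("mitigation")
tracker.move_to("detection")
```

The recorded history is useful later on, since it shows where the team had to double back and why.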

NIST, in its special publication 800-61r2, adds an initial preparation phase to this flow and further focuses attention on the detection process by emphasizing the role of prompt analysis to support incident identification and characterization. NIST also refines the mitigation efforts by breaking them down into containment and eradication steps and the lessons learned phase into information sharing and coordination activities. These are shown alongside the simplified response flow in Figure 10.4.

FIGURE 10.4 NIST 800-61 incident response flow

Other publications and authorities such as ITIL publish their own incident response frameworks, each slightly different in specifics. ISO/IEC 27035:2016 is another good source of information technology security techniques and approaches to incident management. As we saw with risk management frameworks in Chapters 3, “Integrated Information Risk Management,” and 4, “Operationalizing Risk Mitigation,” the key is to find what works for your organization. These same major tasks ought to show up in your company's incident response management processes, policies, and procedures. They may be called by different names, but the same set of functions should be readily apparent as you read through these documents. If you're missing a step—if a critical task in either of these flows seems to be overlooked—then it's time to investigate.

Incident Response Team: Roles and Structures

Unless you're in a very small organization, and as the SSCP you wear all of the hats of network and systems administration, security, and incident response, your organization will need to formally designate a team of people who have the “watch-standing” duty of a real-time incident response team. This team might be called a computer emergency response team (CERT). Such teams can also be known as computer incident response teams or cyber incident response teams (both using the CIRT acronym), or as computer security incident response teams (CSIRTs). For ease of reference, let's call ours a CSIRT for the remainder of this chapter. (Note that CERTs tend to have a broader charter, responding whether systems are put out of action by acts of nature, accidents, or hostile attackers. CERTs, too, tend to be more involved with broader disaster recovery efforts than a team focused primarily on security-related incidents.)

Your organization's risk appetite and its specific CIANA needs should determine whether this CSIRT provides around-the-clock, on-site support, or supports on a rapid-response, on-call basis after business hours. These needs will also help determine whether the incident response team is a separate and distinct group of people or is a part of preexisting groups in your IT, systems, or networks departments. In Chapter 5, “Communications and Network Security,” for example, we looked at segregating the day-to-day network operations jobs of the network operations center (NOC) from the time-critical security and incident response tasks of a security operations center (SOC).

Whether your organization calls them a CSIRT or an SOC, or they're just a subset of the IT department's staff, there are a number of key functions that this incident response team should perform. We'll look at them in more detail in subsequent sections, but by way of introduction, they are as follows:

Serve as a single point of contact for incident response. Having a single point of contact between the incident and the organization makes incident command, control, and communication much more effective. This should include the following:

- Focus reporting and rumor control with users and managers regarding suspicious events, systems anomalies, or other security concerns.

- Coordinate responses, and dispatch or call in additional resources as needed.

- Escalate computer security incident reports to senior managers and leadership.

- Coordinate with other security teams (such as physical security), and with local police, fire, and rescue departments as required.

Take control of the incident and the scene. Taking control of the incident, as an event that's taking place in real time, is vital. Without somebody taking immediate control of the incident, and where it's taking place, you risk bad decisions placing people, property, information, or the business at greater risk of harm or loss than they already are. Taking control of the incident scene protects information about the incident, where it happened, and how it happened. This preserves physical and digital evidence that may be critical to determining how the incident began, how it progressed, and what happened as it spread. This information is vital to both problem analysis and recovery efforts and legal investigations of fault, liability, or unlawful activity.

- Response procedures should specify the chain of command relationships, and designate who (by position, title, or name) is the “on-scene commander,” so to speak. Incident situations can be stressful, and often you're dealing with incomplete information. Even the simplest of decisions needs to be clearly made and communicated to those who need to carry it out; committees usually cannot do this very well in real time.

- The scene itself, and the systems, information, and even the rooms or buildings themselves, represent investments that the organization has made. Due care requires that the incident response team minimize further damage to the organization's property or the property of others that may be involved in the incident scene.

Investigate, analyze, and assess the incident. This is where all of your skills as a troubleshooter, as an investigator, or just as someone who is good at making informed guesses start to pay off. Gather data; ask questions; dig for information.

Escalate, report, and engage with leadership. Once they've determined that a security-related incident might in fact be happening, the team needs to promptly escalate this to senior leadership and management. This may involve a judgment call on the response team chief's part, as preplanned incident checklists and procedures cannot anticipate everything that might go wrong. Experience dictates that it's best to err on the side of caution, and report or escalate to higher management and leadership.

Keep a running incident response log. The incident response team should keep accurate logs of what happened, what decisions got made (and by whom), and what actions were taken. Logging should also build a time-ordered catalog of event artifacts—files, other outputs, or physical changes to systems, for example. This time history of the event, as it unfolds, is also vital to understanding the event, and mitigating or taking remedial action to prevent its reoccurrence. Logs and the catalogs of artifacts that go with them are an important part of establishing the chain of custody of evidence (digital or other) in support of any subsequent forensics investigation.
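A running log of this kind can be as simple as a list of timestamped entries, each naming who did what and, where an artifact was collected, a cryptographic hash of it so that later tampering can be detected. The sketch below is one minimal way to do that using Python's standard library; the class and field names are hypothetical, and a real log would also need protected, write-once storage, which is not shown here.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class LogEntry:
    timestamp: datetime
    actor: str                              # who made the decision or took the action
    action: str                             # what was decided or done
    artifact_sha256: Optional[str] = None   # hash of any artifact collected


class IncidentLog:
    """Time-ordered record of decisions, actions, and collected artifacts."""

    def __init__(self) -> None:
        self.entries: List[LogEntry] = []

    def record(self, actor: str, action: str,
               artifact: Optional[bytes] = None,
               when: Optional[datetime] = None) -> LogEntry:
        # Hash the artifact's bytes so its integrity can be checked later.
        digest = hashlib.sha256(artifact).hexdigest() if artifact is not None else None
        entry = LogEntry(when or datetime.now(timezone.utc), actor, action, digest)
        self.entries.append(entry)
        return entry

    def timeline(self) -> List[LogEntry]:
        """Return entries sorted by time, even if they were recorded late."""
        return sorted(self.entries, key=lambda entry: entry.timestamp)


log = IncidentLog()
log.record("response team chief", "declared incident and notified leadership")
log.record("analyst", "captured log file from mail server", artifact=b"example bytes")
```

Recording the hash at collection time is what lets a later investigator show that the artifact in hand is the same one the team gathered during the incident.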

Coordinate with external parties. External parties can include systems vendors and maintainers, service bureaus or cloud-hosting service providers, outside organizations that have shared access to information systems (such as extranets or federated access privileges), and others whose own information and information systems may be put at risk by this incident as it unfolds. By acting as the organization's focal point for coordination with external parties, the team can keep those partners properly informed, reduce risk to their systems and information, and make better use of technical, security, and other support those parties may be able to provide.

Contain the incident. Prevent it from infecting, disrupting, or gaining access to any other elements of your systems or networks, and prevent it from using your systems as launchpads to attack other external systems.

Eradicate the incident. Remove, quarantine, or otherwise eliminate all elements of the attack from your systems.

Recover from the incident. Restore systems to their pre-attack state by resetting and reloading network systems, routers, servers, and so forth as required. Finally, inform management that the systems should be back up and ready for operational use by end users.

Document what you've learned. Capture everything possible regarding systems deficiencies, vulnerabilities, or procedural errors that contributed to the incident taking place for subsequent mitigation or remediation. Review your incident response procedures for what worked and what didn't, and update accordingly.

Incident Response Priorities

No matter how your organization breaks up the incident response management process into a series of steps, or how they are assigned to different individuals or teams within the organization, the incident response team must keep three basic priorities firmly in mind.

The first one is easy: the safety of people comes first. Nothing you are going to try to accomplish is more important than protecting people from injury or death. It does not matter whether those people are your coworkers on the incident response team, other staff members at the site of the incident, or even people who might have been responsible for causing the incident: your first priority is preventing harm from coming to any of them, yourself included! Your organization should have standing policies and procedures that dictate how calls for assistance to local fire, police, or emergency medical services should be made; these should be part of your incident response procedures.

The next two priority choices, when taken together, present one of the most difficult decisions facing an organization, especially when it's in the midst of a computer security incident: should it prioritize getting back into normal business operations, or supporting a digital forensics investigation that may establish responsibility, guilt, or liability for the incident and resultant loss and damages? This is not a decision that the on-scene response team leader makes! Simply put, the longer it takes to secure the scene, and gather and protect evidence (such as memory dumps, systems images, disk images, log files, etc.), the longer it takes to restore systems to their normal business configurations and get users back to doing productive work. This is not a binary, either-or decision; it is something that the incident response team and senior leaders need to keep a constant watch over throughout all phases of incident response.

Increasingly, we see that government regulators, civic watchdog groups, shareholders, and the courts are becoming impatient with senior management teams that fail in their due diligence. This impatience is translating into legal and market action that can and will bring self-inflicted damage (negligence, in other words) home to roost where it belongs. The reasonable fear of that should lead organizations to task all members of the IT organization, including their information security specialists, with developing greater proficiency at protecting and preserving the digital evidence related to an incident while getting the systems and business processes promptly restored to normal operations.

The details of how to preserve an incident scene for a possible digital forensics investigation, and how such investigations are conducted, are beyond the scope of the SSCP exam and this book. They are, however, great avenues for you to journey along as you continue to grow in your chosen profession as a white hat!

Preparation

You may have noticed that this step isn't shown in either of the flows in Figures 10.3 or 10.4. That's not an oversight—this should have been done as soon as you started your information risk management planning process. There is nothing to gain by waiting—and potentially everything to lose. NIST SP800-61 Rev. 2 provides an excellent “shopping list” of key preparation and planning tasks to start with and the information they should make readily available to your response team. But where do you start?

Let's break this preparation task down into more manageable steps, using the Plan-Do-Check-Act (PDCA) model we used in earlier chapters, as part of risk management and mitigation. It may seem redundant to plan for a plan, but it's not—you have to start somewhere, after all. Note that the boundaries between planning, doing, checking, and acting are not hard and fast; you'll no doubt find that some steps can and should be taken almost immediately, while others need a more deliberative approach. Every step of the way, keep senior management and leadership engaged and involved. This is their emergency response capability you're planning and building, after all.

Preparation Planning

This first set of tasks focuses on gathering what the organization already knows about its information systems and IT infrastructures, its business processes and its people, which become the foundation on which you can build the procedures, resources, and training that your incident responders will need. As you build those procedures and training plans, you'll also need to build out the support relationships you'll need when that first incident (or the next incident) happens.

Build, maintain, and use a knowledge base of critical systems support information. You'll need this information to identify and properly scope the CSIRT's monitoring and detection job, as well as identify the internal systems support teams, critical users, and recovery and restoration processes that already exist. The CSIRT should have these information products available to them as a living library of reference and guidance materials. These include but are not limited to:

- Information architecture documentation, plans, and support information

- IT systems documentation, such as servers, endpoints, special-purpose systems, etc.

- IT security systems documentation, including connectivity, current settings, alarm indications, and system documentation

- Clean, trusted backup images of systems and critical files, including digitally signed copies or cryptographic hashes, from which trusted restoration can take place

- Networks and other communications systems design, installation, and support, including data plane, control plane, and management plane views

- Platform and service systems documentation

- Physical layout drawings, showing equipment location, points of presence, alarm systems, entrances, and exits

- Power supply information, including commercial and backup sources, switching, power conditioning, etc.

- Current status of known vulnerabilities on all systems, connections, and endpoints

- Current status of systems, applications, platforms and database backups, age of last backup, and physical location of backup images

- Contact information or directory of key staff members, managers, and support personnel, both in-house and for any service providers, systems vendors, or federated access partners

Whether you put this information into a separate knowledge base for your incident responders, or it is part of your overall software, systems, and IT knowledge base, is perhaps a question of scale and of survivability. During an incident itself, you need this knowledge base reliably available to your responders, without having to worry if it's been tainted by this incident or a prior but undetected one.

Use that list to identify the set of business process, systems architecture, and technology-focused critical knowledge that each CSIRT team member must be proficient in, and add this to your team training and requalification planning set.
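The knowledge base list above calls for trusted backup images accompanied by cryptographic hashes. As a minimal sketch of how a responder might check an image before restoring from it, the following assumes the expected SHA-256 digest was recorded when the backup was taken and is stored somewhere the incident could not have tainted. The function names and the file path are illustrative assumptions, not part of any particular backup product.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 digest of a file as hex, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(path: Path, expected_hex: str) -> bool:
    """True only if the image's current digest matches the recorded one."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


# Hypothetical usage: the path and the recorded digest are placeholders.
# if not verify_backup(Path("/backups/fileserver.img"), recorded_digest):
#     raise SystemExit("Backup image does not match its recorded hash")
```

A matching hash only shows that the image has not changed since the hash was recorded; it says nothing about whether the system was already compromised when the backup was taken.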

Assemble critical data collection, collation, and analysis tools. Characterizing an event in real time, and quickly determining its nature and the urgency of the response it demands, requires that your incident response team be able to analyze and assess what all of the information from your systems is trying to tell them. You do not help the team get this done by letting the team find the tools they need right when they're trying to deal with an ongoing incident. Instead, identify a broad set of systems and event information analysis tools, and bring them together in what we might call a responder's workbench. This workbench can provide your response team with a set of known, clean systems to use as they capture data, analyze it, and draw conclusions about the event in question. Some of the current generation of security information and event management systems may provide good starting points for growing your own workbench. Other tools may need to be developed in house, tailored to the nature of critical business processes or information flows, for example.

Establish minimum standards for event logging. Virtually all of your devices, be they servers, endpoints, or connectivity systems, have the capability to capture event information at the hardware, systems software, and applications levels. These logs can quickly narrow down your hunt for the broken or infected system, or the unauthorized subject(s) and the objects they've accessed. You'll also need to establish a comprehensive and uniform policy about log file retention if you're hoping to correlate logs from different systems and devices with each other in any meaningful way. Higher-priority, mission-critical systems should have higher levels of logging, capturing more events and at greater time granularity, to better empower your response capability regarding these systems.

Identify forensics requirements, capabilities, and relationships. Although many information security incidents may come and go without generating legal repercussions, you need to take steps now to prepare for those incidents that will. You'll need to put in place the minimum required capabilities to establish and maintain a chain of custody for evidence. This may surface the need for additional training for CSIRT team members and managers. Use this as the opportunity to understand the support relationships your team will need when (not if) such an incident occurs, and start thinking through how you'd select the certified forensics examiners you'd need when it does.

By the end of this preparation planning phase, you should have some concrete ideas about what you'll need for the CSIRT:

- SOC, or NOC? Does your organization need a security operations center, with its crew of watch-standers? Or can the CSIRT be an on-call team of responders drawn from the IT department's networks, systems, and applications support specialists? In either case, how many people will be needed for ongoing alert and monitoring during normal business hours, for round-the-clock watch-standing, and for emergency response?

- Physical work space, responder's workbenches, and communication needs must also be identified at this point. These will no doubt need to be budgeted for, and their acquisition, installation, and ongoing support need to fit into your overall incident response budget and schedule.

- Reporting, escalation, and incident management chain of command procedures should be put together in draft form at this point; coordinate with management and leadership to gain their endorsement and commitment to these.

Put the Preparation Plan in Motion

This is where the doing of our PDCA gets going in earnest. Some of the actions you'll take are strictly internal and technical; some relate to improvements in administrative controls:

Synchronize all system clocks. Many service handshakes can tolerate 5 minutes or more of clock misalignment across the elements participating in the service, but misalignment of that size can play havoc with attempts to correlate event logs.

Frequently profile your systems. System profiles help you understand the “normal” types, patterns, and amounts of traffic and load on the systems, as well as capturing key security and performance settings. Whether you use automated change-detection tools or manual inspection, comparing a current profile to a previous one may surface an indicator of an event of interest in progress or shed light on your search to find it, fix it, and remove it.
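Comparing a current profile to a previous one can be sketched very simply if each profile is captured as a flat dictionary of settings. The example below is an illustration under that assumption; the profile keys shown (listening ports, admin account counts, and so on) are hypothetical, and real change-detection tools capture far more than this.

```python
def diff_profiles(baseline: dict, current: dict) -> dict:
    """Compare two flat system profiles and report what differs."""
    added = {k: current[k] for k in current.keys() - baseline.keys()}
    removed = {k: baseline[k] for k in baseline.keys() - current.keys()}
    changed = {
        k: (baseline[k], current[k])
        for k in baseline.keys() & current.keys()
        if baseline[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical profiles for one host, taken a week apart.
last_week = {"listening_ports": (22, 443), "admin_accounts": 2}
today = {"listening_ports": (22, 443, 4444), "admin_accounts": 3}

for key, (before, after) in diff_profiles(last_week, today)["changed"].items():
    print(f"{key}: {before} -> {after}")
```

A difference is not proof of an incident; it is a prompt to ask whether an approved change explains it.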

Establish channels for outside parties to report information security incidents to you. Whether these are other organizations you do routine business with or complete strangers, you make it much easier on your shared community of information security professionals when you set up an email form or phone number for anyone to report such problems to you. And it should go without saying that somebody in your response team needs to be paying attention to that email inbox, or the phone messages, or the forms-generated trouble tickets that flow from such a “contact us” page!

Establish external incident response support relationships. Many of the organizations you work with routinely—your cloud-hosting providers, other third-party services, your systems and software vendors and maintainers, even and especially your ISP—can be valuable teammates when you're in the midst of an incident response. Gather them up into a community of practice before the lightning strikes. Get to know each other, and understand the normal limits of what you can call upon each other for in the way of support. Clearly identify what you have to warn them about as you're working through a real-time incident response yourself.

Develop and document CSIRT response procedures. These will, of course, be living documents; as your team learns with each incident they respond to, they'll need to update these procedures as they discover what they were well-prepared and equipped to deal with effectively, and what caught them by surprise. Checklist-oriented procedures can be very powerful, especially if they're suitable for deployment to CSIRT team members' smartphones or phablets. Don't forget the value of a paper backup copy, along with emergency lighting and flashlights with fresh batteries, for when the lights go out!

Initiate CSIRT personnel training and certification as required. Take the minimum proficiency sets of knowledge, skills, and abilities (often called KSAs in human resources management terms), review the personnel assigned to the CSIRT and your recall rosters, and identify the gaps. Focus on the training, whether informal on-the-job or formal coursework, that each person needs, and get that training organized, planned, scheduled, and accomplished. Keep CSIRT proficiency qualification files for each team member, note the completion of training activities, and be able to inform management regarding this aspect of your readiness for incident response. (Your organization's HR team may be able to help you with these tasks, and with organizing the training recordkeeping.)

Are You Prepared?

Maybe your preparation achieves a “ready to respond” state incrementally; maybe you're just not ready for an incident at all, until you've achieved a certain minimum set of verified, in-place knowledge, tools, people, and procedures. Your organization's mission, goals, objectives, and risk posture will shape whether you can get incrementally ready or have to achieve an identifiable readiness posture. Regardless, there are several things you and the CSIRT should do to determine whether they are ready or not:

Understand your “business normal” as seen by your IT systems. Establish a routine pattern or rhythm for your incident response team members to steep themselves in the day-to-day normal of the business and how people in the business use the IT infrastructure to create value in that normal way. Stay current with internal and external events that you'd reasonably expect would change that normal—the weather-related shutdown of a branch office, or a temporary addition of new federation partners into your extranets. The more each team member knows about how “normal” is reflected in fine-grained system activity, the greater the chance that those team members will sniff out trouble before it starts to cause problems. They'll also be better informed and thus more capable of restoring systems to a useful normal state as a result.

While you're at it, don't forget to translate that business normal into fine-tuning of your automated and semiautomated security tools, such as your security information and event management systems (SIEMs), intrusion detection systems (IDS), intrusion prevention systems (IPS), or other tools that drive your alerting and monitoring channels. Business normal may also be reflected in the control and filter settings for access control and identity management systems, as well as for firewall settings and their access control lists. This is especially important if your organization's business activities have seasonal variations.

Routinely demonstrate and test backup and restore capabilities. You do not want to be in the middle of an incident response only to find out that you've been taking backup images or files all wrong and that none of them can be reloaded or work right when they are loaded.

Exercise your alert/recall, notification, escalation, and reporting processes. At the cost of a few extra phone calls and a bit of time from key leaders and managers, you gain confidence in two critical aspects of your incident response management process. For starters, you demonstrate that the phone tree or the recall and alert processes work; this builds confidence that they'll work when you really need them to. A second, add-on bonus is that you get to “table-top” or exercise the protocols you'd want to use had this been an actual information systems security incident.

Document your incident response procedures, and use these documents as part of training and readiness. Do not trust human memory or the memory of a well-intended and otherwise effective committee or team! Take the time to write up each major procedure in your incident response management process. Make it an active, living part of the knowledge base your responders will need. Exercise these procedures. Train with them: use them as initial training for IT and incident response team members, for line and senior managers, and for your general user base as applicable.

Taken all at once, that looks like a lot of preparation! Yet much of what's needed by your incident response team, if they're going to be well prepared, comes right from the architectural assessments, your vulnerability assessments, and your risk mitigation implementation activities. Other key information comes from your overall approach to managing and maintaining configuration control over your information systems and your IT infrastructure. And you should already be carrying out good “IT hygiene” and safety and security measures, such as clock synchronization, event logging, testing, and so forth. The new effort is in creating the team, defining its tasks, writing them up in procedural form, and then using those procedures as an active part of your ongoing training, readiness, and operational evaluation of your overall information security posture.

Detection and Analysis

On a typical day, a typical medium-sized organization might see millions of IP packets knocking on its point of presence, most of them in response to legitimate traffic generated inside the organization, solicited by its Web presence, or generated by its external partners, customers, prospective customers, and vendors. Internally, the traffic volume on the company's internetworks and the event loads on servers that support end users at their endpoints could be of comparable volume. Detecting that something is not quite right, and that that something might be part of an attack, is as much art as it is science. Three different factors combine to make this art-and-science difficult and challenging:

- Multiple, different means of detection: Many different technologies are in use to flag circumstances that might be a security-related incident in the making. Quite often, different technologies measure, assess, characterize, and report their observations at different levels of granularity and accuracy. Sometimes, technologies cannot detect a potential incident, and a human end user or administrator is the first to suspect something's not quite right. Often, however, the first signs of an incident in progress go undetected.

- Incredibly high volumes of events that might be incidents: Inline intrusion detection systems might detect and report a million or more events per day as possible intrusion-related events. Filtering approaches, even with machine learning capabilities, can reduce this, while introducing both false positive and false negative alarms into the response team's workload.

- Deep, specialist knowledge, along with considerable experience, is required for a response team member to be able to make sense of the noise and find the signal (the real events worth investigating) in all of it.

So how does our response team sort through all of that noise and find the few important, urgent, and compelling signals to pay attention to?

Warning Signs

First, let's define some important terms related to incident detection. Earlier we talked about events of interest—that is, some kind of occurrence or activity that takes place that just might be worth paying closer attention to. Without getting too philosophical about it, events make something in our systems change state. The user, with hand on mouse, does not cause an event to take place until they do something with the mouse, and it signals the system it's attached to. That movement, click, or thumbwheel roll causes a series of changes in the system. Those changes are events. Whether they are interesting ones, or not, from a security perspective, is the question!

A precursor is a sign, signal, or observable characteristic of the occurrence of an event that in and of itself is not an attack but that might indicate that an attack could happen in the future. Let's look at a few common examples to illustrate this concept:

- Server or other logs that indicate a vulnerability scanner has been used against a system

- An announcement of a newly found vulnerability by a systems or applications vendor, information security service, or reputable vulnerabilities and exploits reporting service that might relate to your systems or platforms

- Media coverage of events that put your organization's reputation at risk (deservedly or not)

- Email, phone calls, or postal mail threatening attack on your organization, your systems, your staff, or those doing business with you

- Increasingly hostile or angry content in social media postings regarding customer service failures by your company

- Anonymous complaints in employee-facing suggestion boxes, ombudsman communications channels, or even graffiti in the restrooms or lounge areas

Genuine precursors—ones that give you actionable intelligence—are quite rare. They are often akin to the “travel security advisory codes” used by many national governments. They rarely provide enough insight that something specific is about to take place. The best you can do when you see such potential precursors is to pay closer attention to your indicators and warnings systems, perhaps by opening up the filters a bit more. You might also consider altering your security posture in ways that might increase protection for critical systems, perhaps at the cost of reduced throughput due to additional access control processing.

An indicator is a sign, signal, or observable characteristic of the occurrence of an event indicating that an information security incident may have occurred or may be occurring right now. Again, a few very common examples will illustrate:

- Network intrusion detectors generate an alert when input buffer overflows might indicate attempts to inject SQL or other script commands into a webpage or database server.

- Antivirus software detects that a device, such as an endpoint or removable media, has a suspected infection on it.

- Systems administrators, or automated search tools, notice filenames containing unusual or unprintable characters.

- Access control systems notice a device attempting to connect, which does not have required software or malware definition updates applied to it.

- A host or an endpoint device does an unplanned restart.

- A new or unmanaged host or endpoint attempts to join the network.

- A host or an endpoint device notices a change to a configuration-controlled element in its baseline configuration.

- An applications platform logs multiple failed login attempts, seemingly from an unfamiliar system or IP address.

- Email systems and administrators notice an increase in the number of bounced, refused, or quarantined emails with suspicious content or ones with unknown addressees.

- Unusual deviations in network traffic flows or systems loading are observed.
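One of the indicators listed above, multiple failed login attempts from an unfamiliar address, lends itself to a short illustration. The sketch below assumes login events have already been parsed into (timestamp, source address, succeeded) tuples; the threshold of five failures in ten minutes is an arbitrary assumption that would need tuning against your own business normal.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def flag_failed_login_bursts(events, threshold=5, window=timedelta(minutes=10)):
    """Return sources with at least `threshold` failed logins inside `window`.

    `events` is an iterable of (timestamp, source_address, succeeded) tuples.
    """
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    flagged = set()
    for when, source, succeeded in sorted(events, key=lambda e: e[0]):
        if succeeded:
            continue
        attempts = recent[source]
        attempts.append(when)
        # Drop failures that have aged out of the sliding window.
        while when - attempts[0] > window:
            attempts.popleft()
        if len(attempts) >= threshold:
            flagged.add(source)
    return flagged


# Hypothetical events: five failures in two minutes from one address.
start = datetime(2024, 1, 1, 9, 0)
events = [(start + timedelta(seconds=30 * i), "203.0.113.7", False) for i in range(5)]
print(flag_failed_login_bursts(events))
```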

One type of indicator worth special attention is called an indicator of compromise (IOC), which is an observable artifact that with high confidence signals that an information system has been compromised or is in the process of being compromised. Such artifacts might include recognizable malware signatures, attempts to access IP addresses or URLs known or suspected to be of hostile or compromising intent, or domain names associated with known or suspected botnet control servers. The information security community is working to standardize the format and structure of IOC information to aid in rapid dissemination and automated use by security systems.
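To make the idea of automated use of IOCs concrete, here is a minimal sketch that checks observed activity against two kinds of IOC: domain names and file hashes. The record layout (dictionaries with `host`, `domain`, and `sha256` keys) and the sample values are assumptions made for illustration; they do not follow any particular IOC exchange format.

```python
def normalize_domain(name: str) -> str:
    """Lowercase a domain name and drop any trailing dot."""
    return name.strip().rstrip(".").lower()


def match_iocs(observations, bad_domains, bad_hashes):
    """Return (host, kind, value) for every observation matching a known IOC."""
    bad_domains = {normalize_domain(d) for d in bad_domains}
    bad_hashes = {h.lower() for h in bad_hashes}
    hits = []
    for obs in observations:
        domain = obs.get("domain")
        file_hash = obs.get("sha256")
        if domain and normalize_domain(domain) in bad_domains:
            hits.append((obs["host"], "domain", normalize_domain(domain)))
        if file_hash and file_hash.lower() in bad_hashes:
            hits.append((obs["host"], "sha256", file_hash.lower()))
    return hits


# Hypothetical data using reserved example names.
observed = [
    {"host": "ws-014", "domain": "Updates.Example.NET."},
    {"host": "ws-022", "domain": "intranet.example.com"},
]
print(match_iocs(observed, bad_domains={"updates.example.net"}, bad_hashes=set()))
```

This matches exact values only. It would not catch a subdomain of a listed domain or a repacked variant of a listed file, which is one reason IOC matching supplements, rather than replaces, the broader indicators above.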

In one respect, the fact that detection is a war of numbers is both a blessing and a curse; in many cases, even the first few low and slow steps in an attack may create dozens or hundreds of indicators, each of which may, if you're lucky, contain information that correlates them all into a suspicious pattern. Of course, you're probably dealing with millions of events to correlate, assess, screen, filter, and dig through to find those few needles in that field of haystacks.

Initial Detection

Initial incident detection is the iterative process by which human members of the incident response team assemble, collate, and analyze any number of indicators (and precursors, if available and applicable), usually with a SIEM tool or data aggregator of some sort, and then come to the conclusion that there is most likely an information security event in progress or one that has recently occurred. This is a human-centric, analytical, thoughtful process; it requires team members to make educated guesses (that is, generate hypotheses), test those hypotheses against the indicators and other systems event information, and then reasonably conclude that the alarm ought to be sounded.

That alarm might be best phrased to say that a “probable information security incident” has been detected, along with reporting when it is believed to have first started to occur and whether it is still ongoing.

Ongoing analysis will gather more data, from more systems; run tests, possibly including internal profiling of systems suspected to have been affected or accessed by the attack (if attack it was); and continue to refine its characterization or classification of the incident. At some point, the response team should consult predefined priority lists that help them allocate people and systems resources to continuing this analysis.

Note the dilemma here: paying too much attention, too soon, to too many alarms may distract attention, divert resources, and even build in a “Chicken Little” kind of reaction within management and leadership circles. When a security incident actually does occur, everyone may be just too desensitized to care about it. And of course, if you've got your thresholds set too high, you ignore the alarms that your investments in intrusion detection and security systems are trying to bring to your attention. Many of the headline-grabbing data breach incidents in the past 10 years, such as the attack that struck Target stores in 2013, suffered from having this balance between the costs of dealing with too many false rejections (or Type 1 errors) and the risk of missing a few more dangerous false acceptances (or Type 2 errors) set wrong.
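The balance described above can be illustrated with a few lines of arithmetic. The sketch assumes each past event was given an alert score by some detection tool and was later judged, in review, to be a real incident or not; both the scores and the labels below are made-up illustrative data. Raising the threshold trades false alarms (Type 1 errors, in the terms used above) for missed incidents (Type 2 errors).

```python
def alarm_errors(scored_events, threshold):
    """Count false alarms and missed incidents for one alerting threshold.

    `scored_events` is a list of (score, was_real_incident) pairs.
    An alarm is raised whenever score >= threshold.
    """
    false_alarms = sum(1 for score, real in scored_events if score >= threshold and not real)
    missed = sum(1 for score, real in scored_events if score < threshold and real)
    return false_alarms, missed


# Made-up history of scored events and their after-the-fact classification.
history = [(0.2, False), (0.4, False), (0.55, True), (0.6, False), (0.8, True), (0.9, True)]

for threshold in (0.3, 0.5, 0.7):
    false_alarms, missed = alarm_errors(history, threshold)
    print(f"threshold {threshold}: {false_alarms} false alarms, {missed} missed incidents")
```

No threshold removes both kinds of error at once; the choice of where to set it is a risk decision, not a purely technical one.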

Timeline Analysis

This may seem obvious, but one of the most powerful analytical tools is often overlooked. Timeline analysis reconstructs the sequence of events in order to focus analysis, raise questions, generate insight, and aid in organizing information discovered during the response to the incident. Responders should start building their own reconstructed event timeline or sequence of events, starting from well before the last known good system state, through any precursor or indicator events, and up to and including each new event that occurs. The timeline is different than the response team's log—the log chronicles actions and decisions taken by the response team, directions they've received from management, and key coordination the team has had with external parties.

Timeline correlation and analysis depends upon having a common time reference for all of the data sources being used. Most architectures and infrastructures can do this by making use of a stable, reliable network time service provider, and ensuring that all systems, servers, endpoints, and communications equipment synchronize with the same time service. Note that in October 2020, the IETF published RFC 8915, Network Time Security for the Network Time Protocol, which significantly improves the security of time services to all devices on the Internet (and on isolated LAN segments with their own network time servers).

Some IDS, IPS, or SIEM product systems may contain timeline analysis tools that your teams can use. Digital forensic workbenches usually have excellent timeline analysis capabilities. Even a simple spreadsheet file can be used to record the sequence of events as it reveals itself to the responders, and as they deduce or infer other events that might have happened.
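For teams starting with something as simple as that spreadsheet, the core of timeline reconstruction is merging events from several sources onto one common clock. The sketch below assumes each source's events carry timezone-aware timestamps and that responders have estimated how far each source's clock runs ahead of the reference time; the source names, offsets, and events are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def build_timeline(sources):
    """Merge events from several sources into one UTC-ordered timeline.

    `sources` maps a source name to (clock_offset, events), where
    `clock_offset` is how far that source's clock runs ahead of the
    reference clock and `events` is a list of (timestamp, description).
    Timestamps must be timezone-aware.
    """
    timeline = []
    for name, (clock_offset, events) in sources.items():
        for stamp, description in events:
            corrected = (stamp - clock_offset).astimezone(timezone.utc)
            timeline.append((corrected, name, description))
    return sorted(timeline)


# Hypothetical sources: the firewall clock is known to run 3 minutes fast.
utc = timezone.utc
sources = {
    "firewall": (timedelta(minutes=3),
                 [(datetime(2024, 1, 1, 10, 5, tzinfo=utc), "outbound connection blocked")]),
    "server": (timedelta(0),
               [(datetime(2024, 1, 1, 10, 3, tzinfo=utc), "unplanned restart")]),
}
for when, source, what in build_timeline(sources):
    print(when.isoformat(), source, what)
```

Without the offset correction, the firewall event in this example would appear to follow the server restart rather than precede it, which is exactly the kind of ordering error that unsynchronized clocks introduce.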

This last is a powerful component of timeline analysis. Timeline analysis should focus you on asking, “How did event A cause event B?” Just asking the question may lead you to infer some other event that event A actually caused, with this heretofore undiscovered event being the actual or proximate cause of event B. Making these educated guesses, and making note of them in your timeline analysis, is a critical part of trying to figure out what happened.

And without figuring out what happened, your search for all of the elements that might have caused the incident to occur in the first place will be limited to lucky guesswork.

Notification

Now that the incident response team has determined that an incident has probably already occurred or is ongoing, the team must notify managers and leaders in the organization. Each organization should specify how this notification is to be done and who the team contacts to deliver the bad news. In some organizations, this may direct that some types of incidents need immediate notification to all users on the affected systems; other circumstances may dictate that only key departmental or functional managers be advised. In any event, these notification procedures should specify how and when to inform senior leadership and management. (It's a sign of inadequate planning and preparation if the incident responders have to ask, “Who should we call?” in the heat of battle.)

Notification also includes getting local authorities, such as fire or rescue services, or law enforcement agencies, involved in the real-time response to the incident. This should always be coordinated with senior leadership and management, even if the team phones them immediately after following the company's process for calling the fire department.

Senior leadership and management may also have notification and reporting responsibilities of their own, which may include very short time frames in which notification must be given to regulatory authorities, or even the public. The incident response team should not have to do this kind of reporting, but it does owe its own leadership and management the information they will need to meet these obligations.

As incident containment, eradication, and recovery continue, the CSIRT will have continuing notification responsibilities. Management may ask for their assistance or direct them to reach out directly via webpage updates, updated voice prompt menus on the IT Help Desk contact line, emails, or phone calls to various internal and external stakeholders. Separate voice contact lines may also need to be used to help coordinate activities and keep everyone informed.

Prioritization

There are several ways to prioritize the team's efforts in responding to an incident. These consider the potential for impact to the organization and its business objectives; whether confidentiality, integrity, or availability of information resources will be impacted; and just how possible it will be to recover from the incident should it continue. Let's take a closer look at these:

- Functional impact looks to the nature of the business processes, objectives, or outcomes that are put at risk by the incident. At one end of this spectrum are the mission-critical systems, the failure of which puts the very survival of the organization at risk. At the other end might be routine but necessary business processes, for which there are readily available alternatives or where the impact is otherwise tolerable. A hospital, for example, might consider systems that directly engage with real-time patient care—instrumentation control, laboratory and pharmacy, and surgical robots—as mission-critical (since losing a patient, terminally, because of an IT systems failure can severely jeopardize the hospital's ongoing existence!). On the other hand, the same hospital could consider post-release patient follow-up care management to be less urgent (no one will die today if this system fails to work today).

- Information impact considers whether the incident risks unauthorized disclosure, exfiltration, corruption, deletion, or other unauthorized changes to information assets, and the relative strategic, tactical, or operational value or sensitivity of that information asset to the organization. The annual holiday party plans, if compromised or deleted, probably have a very low impact to the organization; exfiltration of business proposals being developed with a strategic partner, on the other hand, could have significant impact to both organizations.

- Recoverability involves whether the impact of the incident is eliminated or significantly reduced if the incident is promptly and thoroughly contained. A data exfiltration attack that is detected and contained before copies of sensitive data have left the facility is a recoverable incident; after the copies of PII, customer credit card, or other sensitive data have left, it is not.

Taken together, these factors help the incident response team advise senior leadership and management on how to deal with the incident. It's worth stressing, again, that senior leadership and management need to make this prioritization decision; the SSCPs on the incident response team must advise their leaders by means of the best, most complete, and most current assessment of the incident and its impacts that they can develop. That advice also should address options for containment and eradication of the incident and its effects on the organization.
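One way a response team might organize that advice is to record the three factors for each open incident and sort on them before briefing leadership. The sketch below is purely illustrative: the three-level rating scale, the class and function names, and the ordering rule (highest impact first, then incidents where prompt containment can still reduce the impact) are all assumptions, and the output is input to a management decision rather than the decision itself.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class IncidentAssessment:
    name: str
    functional_impact: Rating
    information_impact: Rating
    still_recoverable: bool  # would prompt containment still reduce the impact?

    def urgency(self) -> tuple:
        """Sort key: larger tuples deserve earlier attention."""
        return (
            max(self.functional_impact, self.information_impact),
            self.still_recoverable,
            min(self.functional_impact, self.information_impact),
        )


def rank_for_briefing(assessments):
    """Order assessments from most to least urgent under the rule above."""
    return sorted(assessments, key=lambda a: a.urgency(), reverse=True)


# Hypothetical incidents echoing the examples in the text.
open_incidents = [
    IncidentAssessment("holiday party plans deleted", Rating.LOW, Rating.LOW, True),
    IncidentAssessment("patient monitoring outage", Rating.HIGH, Rating.LOW, True),
]
for item in rank_for_briefing(open_incidents):
    print(item.name)
```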

Containment and Eradication

These two goals are the next major task areas that the CSIRT needs to take on and accomplish. As you can imagine, the nature of the specific incident or attack in question all but defines the containment and eradication tactics, techniques, and procedures you'll need to bring to bear to keep the mess from spreading and to clean up the mess itself.

More formally, containment is the process of identifying the affected or infected systems elements, whether hardware, software, communications systems, or data, and isolating them from the rest of your systems to prevent the disruption-causing agent and the disruption it is causing from affecting the rest of your systems or other systems external to your own. Pay careful attention to the need to not only isolate the causal agent, be that malware or an unauthorized user ID with superuser privileges, but also keep the damage from spreading to other systems. As an example, consider a denial of service (DoS) attack that's started on your systems at one local branch office and its subnets and is using malware payloads to spread itself throughout your systems. You may be able to filter any outbound traffic from that system to keep the malware itself from spreading, but until you've thoroughly cleansed all hosts within that local set of subnets, each of them could be suborned into launching DoS attacks on other hosts inside your system or out on the Internet.
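The branch office example can be sketched in a few lines using Python's standard `ipaddress` module. The subnet addresses and the function names `in_quarantine` and `allow_flow` are hypothetical; a real deployment would express the same rule in firewall or router configuration rather than in application code.

```python
import ipaddress

# Hypothetical addresses for the affected branch office subnets.
AFFECTED_SUBNETS = [
    ipaddress.ip_network("10.20.1.0/24"),
    ipaddress.ip_network("10.20.2.0/24"),
]


def in_quarantine(address: str) -> bool:
    """True if the address belongs to any subnet under containment."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in AFFECTED_SUBNETS)


def allow_flow(src: str, dst: str) -> bool:
    """Block every flow that leaves the quarantined subnets.

    Traffic that stays inside the quarantine is still allowed here, which is
    why every host inside remains suspect until it has been cleansed.
    """
    return not (in_quarantine(src) and not in_quarantine(dst))


print(allow_flow("10.20.1.15", "203.0.113.7"))  # leaves the quarantine
print(allow_flow("10.20.1.15", "10.20.2.9"))    # stays inside the quarantine
```

Note what the filter does not do: hosts inside the two subnets can still reach each other, so the outbound block limits the spread without cleaning anything.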

Some typical containment tactics might include:

- Logically or physically disconnecting systems from the network or network segments from the rest of the infrastructure

- Disconnecting key servers (logically or physically), such as domain name system (DNS), dynamic host configuration protocol (DHCP), or access control systems

- Disconnecting your internal networks from your ISP at all points of presence

- Disabling Wi-Fi or other wireless and remote login and access

- Disabling outgoing and incoming connections to known services, applications, platforms, or sites

- Disabling outgoing and incoming connections to all external services, applications, platforms, or sites

- Disconnecting from any extranets or VPNs

- Disconnecting some or all external partners and user domains from any federated access to your systems

- Disabling internal users, processes, or applications, either in functional or logical groups or by physical or network locations

A familiar term should come to mind as you read this list: quarantine. In general, that's what containment is all about. Suspect elements of your system are quarantined off from the rest of the system, which certainly can prevent damage from spreading. It also can isolate a suspected causal agent, allowing you a somewhat safer environment in which to examine it, perhaps even identify it, and track down all of its pieces and parts. As a result, containment and eradication often blur into each other as interrelated tasks rather than remain as distinctly different phases of activity.

This gives us another term worthy of a definition: a causal agent is a software process, data object, hardware element, human-performed procedure, or any combination of those that performs the actions on the targeted systems that constitute the incident, attack, or disruption. Malware payloads, their control and parameter files, and their carriers are examples of causal agents, as are bogus user IDs, hardware sniffer devices, and systems on your network that have already been suborned by an attacker. As you might suspect, the more sophisticated APT kill chains may use multiple methods to get into your systems and in doing so leave multiple bits of stuff behind to help them achieve their objectives each time they come on in.

Eradication is the process of identifying every instance of the causal agent and its associated files, executables, etc., and removing them from all elements of your system. For example, a malware infection would require you to thoroughly scrub every CPU's memory, as well as all file storage systems (local and in the clouds), to ensure you'd found and removed all copies of the malware and any associated files, data, or code fragments. You'd also have to do this for all backup media for all of those systems in order to ensure you'd looked everywhere, removed the malware and its components, and clobbered or zeroized the space they were occupying in whatever storage media you found them on. Depending on the nature of the causal agent, the incident, and the storage technologies involved, you may need to do a full low-level reformat of the media and completely initialize its directory structures to ensure that eradication has been successfully completed.

Eradication should result in a formal declaration that the system, a segment or subsystem, or a particular host, server, or communications device has been inspected and verified to be free from any remnants of the causal agent. This declaration is the signal that recovery of that element or subsystem can begin.
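One small part of that verification, checking file storage for exact copies of known malicious files, might look like the sketch below. The function names are hypothetical, and matching on SHA-256 hashes is an assumed technique chosen for illustration: it finds only byte-for-byte copies, says nothing about memory, firmware, or modified variants, and so could support a declaration but never justify one by itself.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not exhaust memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_remnants(root: Path, known_bad: set[str]) -> list[Path]:
    """Return every regular file under root whose hash is a known indicator."""
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and sha256_of(p) in known_bad
    )


def verified_clean(root: Path, known_bad: set[str]) -> bool:
    """A tree passes this one check only when the scan finds nothing."""
    return not find_remnants(root, known_bad)
```

The same scan would need to be repeated against every backup set and storage location before the element could be declared ready for recovery.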

It's beyond the scope of the SSCP exam to get into the many different techniques your incident response team may need to use as part of containment and eradication; quite frankly, there are just far too many potential causal agents out there in the wild, and more are being created daily. It's important to have a working sense of how detection and identification provide you the starting point for your containment, and then your eradication, of the threat.

Evidence Gathering, Preservation, and Use

During all stages of an incident, responders need to be gathering information about the status, state, and health of all systems, particularly those affected by the attack. They need to be correlating event log files from many different elements of their IT infrastructure, while at the same time constructing their own timeline of the event. Incident response teams are expected to figure out what happened, take steps to keep the damage from spreading, remove the cause(s) of the incident, and restore systems to normal use as quickly as they can.
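Correlating logs into a single timeline can be sketched as a merge of several time-ordered streams. The log line format (an ISO 8601 timestamp with an explicit UTC offset, a space, then the message), the `Event` type, and the sample entries are assumptions made for illustration; real log sources vary widely and usually need per-source parsing and clock-skew correction first.

```python
import heapq
from datetime import datetime
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    when: datetime
    source: str
    message: str


def parse_log(source: str, lines: Iterable[str]) -> Iterator[Event]:
    """Parse lines shaped like '2024-05-01T09:14:03+00:00 message text'."""
    for line in lines:
        stamp, _, message = line.strip().partition(" ")
        yield Event(datetime.fromisoformat(stamp), source, message)


def build_timeline(logs: dict[str, list[str]]) -> list[Event]:
    """Merge per-source logs (each already in time order) into one timeline."""
    streams = [parse_log(name, lines) for name, lines in logs.items()]
    return list(heapq.merge(*streams, key=lambda e: e.when))


# Hypothetical sample entries from two infrastructure elements.
sample = {
    "firewall": [
        "2024-05-01T09:14:03+00:00 outbound spike from 10.20.1.15",
        "2024-05-01T09:20:00+00:00 outbound block rule applied",
    ],
    "dns": ["2024-05-01T09:13:58+00:00 lookup for unrecognized domain"],
}
for event in build_timeline(sample):
    print(event.when.isoformat(), event.source, event.message)
```

Because `heapq.merge` assumes each input is already sorted, a source whose clock was wrong or whose log was tampered with has to be corrected before it goes into the merge.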

There's a real danger that the incident response team can spread itself too thin if the same group of people is containing and eradicating the threat while at the same time trying to gather evidence, preserve it, and examine it for possible clues. Management and leadership need to be aware of this conflict. They are the ones who can allocate more resources, during preparation and planning, during incident response, or both, to provide a digital forensics capability.

As in all things, a balance needs to be struck, and response team leaders need to be sensitive to these different needs as they develop and maintain their team's battle rhythm in working through the incident.

Constant Monitoring

From the first moment that the responders believe that an incident has occurred or is ongoing, the team needs to sharpen their gaze at the various monitoring tools that are already in place, watching over the organization's IT infrastructure. The incident itself may be starting to cause disruptions to the normal state of the infrastructure and systems; containment and eradication responses will no doubt further disrupt operations. All of that aside, a new monitoring priority and question now needs to occupy center stage for the response team's attention: are their chosen containment, eradication, and (later on) restoration efforts working properly?

The team should be actively predicting the most likely outcomes of each step they are about to take before they take it. This look-ahead should also suggest additional alarm conditions or signs of trouble that might indicate that the chosen step is not working correctly or is in fact adding to the impact the incident is causing. Training and experience with each tool and tactic are vital, as they give the team the depth of specialist knowledge to draw on as they assess the situation, choose among possible actions to take, and then perform that action as part of their overall response.

The incident response team is, first and foremost, supposed to be managing their responses to the incident. Without well-informed predictions of the results of a selected action, the team is not managing the incident; they're not even experimenting, which is how we test such predictions as part of confirming our logic and reasoning. Without informed guesswork and thoughtful consideration of alternatives, the team is being out-thought by its adversaries; the attackers are still managing and directing the incident, and defense is trapped into reacting as they call the shots.
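The predict-then-verify habit described here can be made concrete with a very small record-keeping sketch. The `PlannedAction` class, the metric names, and the numeric ranges are all hypothetical; the point is only that the prediction is written down before the action and compared with what monitoring reports afterward.

```python
from dataclasses import dataclass


@dataclass
class PlannedAction:
    description: str
    # Metric name -> (lowest acceptable value, highest acceptable value),
    # recorded BEFORE the action is taken.
    expected: dict[str, tuple[float, float]]


def check_outcome(action: PlannedAction, observed: dict[str, float]) -> list[str]:
    """Return a warning for each metric that is missing or outside its range."""
    problems = []
    for metric, (low, high) in action.expected.items():
        value = observed.get(metric)
        if value is None:
            problems.append(f"{metric}: no reading")
        elif not low <= value <= high:
            problems.append(f"{metric}: {value} outside {low}..{high}")
    return problems


# Hypothetical action and readings.
block = PlannedAction(
    "block outbound traffic from the branch subnets",
    {"outbound_mbps": (0, 5), "dns_errors_per_min": (0, 20)},
)
readings = {"outbound_mbps": 2.0, "dns_errors_per_min": 140.0}
for warning in check_outcome(block, readings):
    print("unexpected:", warning)
```

An empty result means the step behaved as predicted; anything else is a cue to pause and reassess before taking the next step.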

Recovery: Getting Back to Business

Recovery is the process by which the organization's IT infrastructure, applications, data, and workflows are reestablished and declared operational. In an ideal world, recovery starts when the eradication phase is complete, and the hardware, networks, and other systems elements are declared safe to restore to their required normal state. The ideal recovery process brings all elements of the system back to the moment in time just before the incident started to inflict damage or disruption to your systems. When recovery is complete, end users should be able to log back in and start working again, just as if they'd last logged off at the end of a normal set of work-related tasks.

It's important to stress that every step of a recovery process must be validated as correctly performed and complete. This may need nothing more than using some simple tools to check status, state, and health information, or using preselected test suites of software and procedures to determine whether the system or element in question is behaving as it should be. It's also worth noting that the more complex a system is, the more it may need to have a specific order in which subsystems, elements, and servers are reinitialized as part of an overall recovery and restart process.
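The idea of a required restart order, with validation after every step, can be sketched with the standard library's `graphlib` module (Python 3.9 or later). The dependency map and the `validate` callback are hypothetical; each organization would derive its own from its architecture and test suites.

```python
from graphlib import TopologicalSorter

# Hypothetical dependencies: each key can be recovered only after every
# element in its set has been recovered and validated.
DEPENDS_ON = {
    "dns": set(),
    "dhcp": set(),
    "directory": {"dns"},
    "database": {"directory"},
    "app_server": {"database", "dns"},
    "endpoints": {"dhcp", "directory", "app_server"},
}


def recover_in_order(depends_on, validate):
    """Recover each element after its prerequisites; stop at the first failure."""
    recovered = []
    for element in TopologicalSorter(depends_on).static_order():
        if not validate(element):
            raise RuntimeError(f"validation failed for {element}; recovery halted")
        recovered.append(element)
    return recovered


# Stand-in validator that always passes; a real one would run health checks.
print(recover_in_order(DEPENDS_ON, lambda element: True))
```

Halting at the first failed validation keeps a faulty element from being built upon by everything that depends on it.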

With that in mind, let's look at this step by step, in general terms:

Eradication complete. Ideally, this is a formal declaration by the CSIRT that the systems elements in question have been verified to be free of any instances of the causal agent (malware, illicit user IDs, corrupted or falsified data, etc.).

Restore from bare metal to working OS. Servers, hosts, endpoints, and many network devices should be reset to a known good set of initial software, firmware, and control parameters. In many cases, the IT department has made standard image sets that they use to do a full initial load of new hardware of the same type. This should include setting up systems or device administrator identities, passwords, or other access control parameters. At the end of this task, the device meets your organization's security and operational policy requirements and can now have applications, data, and end users restored to it.

Ensure all OS updates and patches are installed correctly. This applies if any have been released for the versions of software installed by your distribution kits or pristine system image copies.

Restore applications as well as links to applications platforms and servers on your network. Many endpoint devices in your systems will need locally installed applications, such as email clients, productivity tools, or even multifactor access control tools, as part of normal operations. These will need to be reinstalled from pristine distribution kits if they were not in the standard image used to reload the OS. This set of steps also includes reloading the connections to servers, services, and applications platforms on your organization's networks (including extranets). This step should also verify that all updates and patches to applications have been installed correctly.

Restore access to resources via federated access controls and resources beyond your security perimeter out on the Internet. This step may require coordination with these external resource operators, particularly if your containment activities had to temporarily disable such access.

At this point, the systems and infrastructure are ready for normal operations. Aren't they?

Data Recovery

Remember that the IT systems and the information architecture exist because the organization's business logic needs to gather, create, make use of, and produce information to support decisions and action. Restoring the data plane of the total IT architecture is the next step that must be taken before declaring the system ready for business again.

In most cases, incident recovery will include restoring databases and storage systems content to the last known good configuration. This requires, of course, that the organization has a routine process in place for making backups of all of its operational data. Those backups might be

- Complete copies of every data item in every record in every database and file

- Incremental or partial copies, which copy only a subset of records or files on a regular basis (typically those changed since the previous backup of any kind)

- Differential, update, or change copies, which consist of records, fields, or files changed since a particular time (typically the last full backup)

- Transaction logs, which are chronologically ordered sets of input data

Restoring all databases and file systems to their “ready for business as usual” state may take the combined efforts of the incident response team, database administrators, application support programmers, and others in the IT department. Key end users may also need to be part of this process, particularly as they are probably best suited to verifying that the systems and the data are all back to normal.

For example, a small wholesale distributor might use a backup strategy that makes a full copy of its databases once per week, and then a differential backup at the end of every business day. Individual transactions (reflecting customer orders, payments to vendors, inventory changes, etc.) would be reflected in the transaction logs kept for specific applications or by end users. In the event that the firm's database has been corrupted by an attacker (or a serious systems malfunction), it would need to restore the last complete backup copy, then apply the daily differential backups made since that backup copy. (If each differential captures every change since the full backup, only the most recent one is strictly needed; if each daily copy holds only that day's changes, every one must be applied in order.) Finally, the firm would have to step through each transaction made since the last backup again, either using built-in applications functions that recover transactions from saved log files or by hand.
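The distributor's restore sequence can be sketched with plain dictionaries standing in for the database. The `restore` function and the inventory figures are hypothetical, and the model is deliberately simplified: it treats every record as a key and value pair and does not handle deletions.

```python
def restore(full, daily_backups, transactions):
    """Rebuild a key/value store from backups, then replay logged transactions.

    full          -- dict snapshot from the weekly full backup
    daily_backups -- list of dicts of changed records, oldest first
    transactions  -- (key, value) pairs logged after the newest backup,
                     oldest first
    """
    state = dict(full)  # start from the last known good full copy
    # Applying every daily copy in order is correct whether each copy holds
    # only that day's changes or every change since the full backup.
    for changes in daily_backups:
        state.update(changes)
    # Replay the transactions that arrived after the newest backup.
    for key, value in transactions:
        state[key] = value
    return state


# Hypothetical inventory counts.
weekly_full = {"widgets": 100, "gears": 40}
dailies = [{"widgets": 90}, {"widgets": 90, "gears": 35}]
logged = [("widgets", 85), ("bolts", 500)]
print(restore(weekly_full, dailies, logged))
```

Order matters at every stage: an older backup or transaction applied after a newer one would silently roll a record back to a stale value.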

Now, that distributor is ready to start working on new transactions, reflecting new business. Its CSIRT's response to the incident is over, and it moves on to the post-incident activities we'll look at in just a moment.

Post-Recovery: Notification and Monitoring

One of the last tasks that the incident response team has is to ensure that end users, functional managers, and senior leaders and managers in the organization know that the recovery operations are now complete. This notice serves several important purposes: