Side Glance: Graphs

And indeed, all limits to human action, once laid down as absolute laws—God’s law, natural law, moral law—are now falling before the onslaught of technology’s productive-destructive capabilities.

—Emanuele Severino, The Essence of Nihilism

Mundus vult decipi: the world wants to be deceived. The truth is too complex and frightening; the taste for the truth is an acquired taste that few acquire.

—Martin Buber, I and Thou

Although technology might be complex, the laws of IT—the business and process principles used in working with that technology—are surprisingly straightforward, or perhaps straightforwardly surprising if you haven’t tried overseeing an IT initiative yourself. Our intuitions are often wrong; the contractor-control model makes it hard for us to accept some of the basic principles that evidence shows guide IT. The old mental model encouraged us to—essentially—assign IT the blame (we usually called it “accountability”) for the uncertainty and inevitability that are inherent in business and technology. A few graphs may help.

Figure 1: Time versus Number of Workers¹

Figure 1 is a classic graph in IT theory. In his 1975 book, The Mythical Man-Month, Fred Brooks argued that you can’t speed up a project that’s behind schedule by adding more engineers to it. There is at first a diminishing return from adding incremental developers, and then the return becomes negative. The explanation is that the more engineers you add, the more complicated their interactions and communications become. This is why modern IT is done in small teams.
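One way to see why interactions grow so quickly is to count the possible pairwise communication channels in a team. The sketch below is only an illustration, assuming that every pair of engineers may need to coordinate; the function name `communication_paths` is made up for this example.

```python
def communication_paths(team_size: int) -> int:
    """Count the distinct pairs in a team of the given size: n * (n - 1) / 2.

    Assumption for illustration: every pair of engineers is a potential
    communication channel that someone has to maintain.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    for n in (2, 5, 10, 20, 50):
        print(f"{n:>3} engineers -> {communication_paths(n):>5} possible pairwise channels")
```

Under this assumption, doubling a team from 10 to 20 engineers takes the number of possible channels from 45 to 190, which is roughly a fourfold increase in coordination for a twofold increase in hands.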

Figure 2: Deploys per Day per Developer²

Figure 2 is a graph from the 2018 book Accelerate. It shows that when we use DevOps, the number of deployments per day per software developer (the best productivity measure we know of) actually goes up as you add more developers. Because DevOps streamlines interactions between engineers, productivity can rise as the team grows, which suggests that Brooks’s Law may no longer apply in that setting.

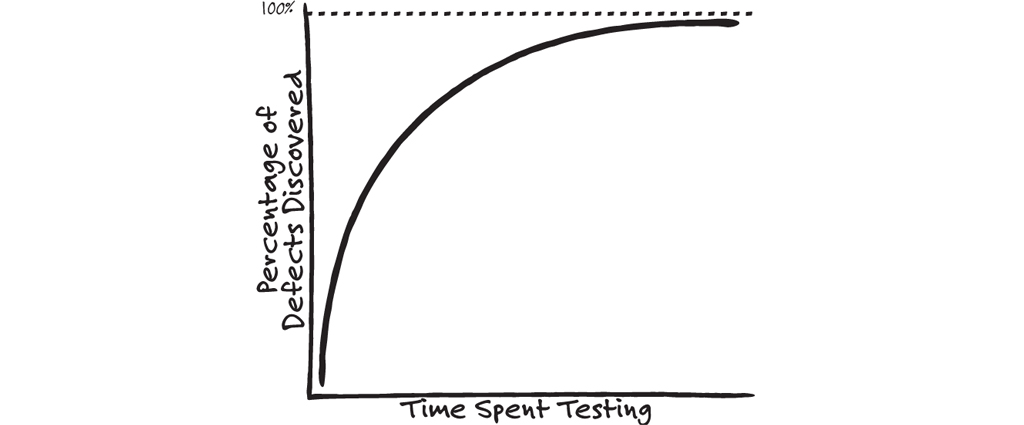

Figure 3: Diminishing Returns for Testing

Figure 3 illustrates the diminishing returns on manual testing effort. In a waterfall project, the more time you spend manually testing a system, the fewer incremental bugs you find. You must decide on the optimal point for releasing the product, knowing that you’ll still have some defects but can’t spend an infinite amount of time looking for them. DevOps changes the equation because tests are automated and run in minutes. Each release is tiny and incremental, and all tests, including old ones, are run every time a change is made.

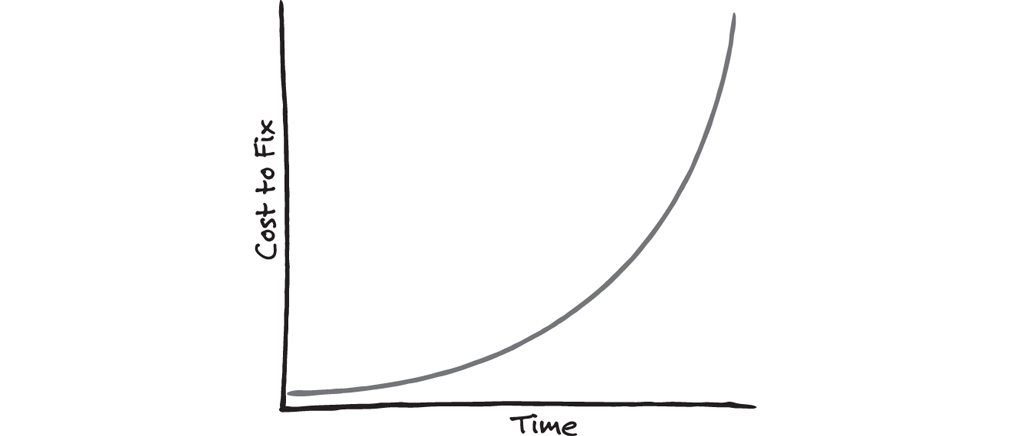

Figure 4: Cost to Fix a Defect versus Time to Discovery³

Figure 4 shows the cost of fixing a defect as a function of how long it took to discover it. In other words, the x-axis is how much time has elapsed since the defect was introduced, and the y-axis is the cost to fix it. There is a huge penalty for not finding and fixing a defect immediately. If the defect is not found until users discover it, the cost to fix it is orders of magnitude higher. DevOps provides very fast feedback: new code is immediately tested using automated scripts, code is merged with that of other developers to quickly discover conflicts, and feedback comes quickly from monitoring usage after code is released.

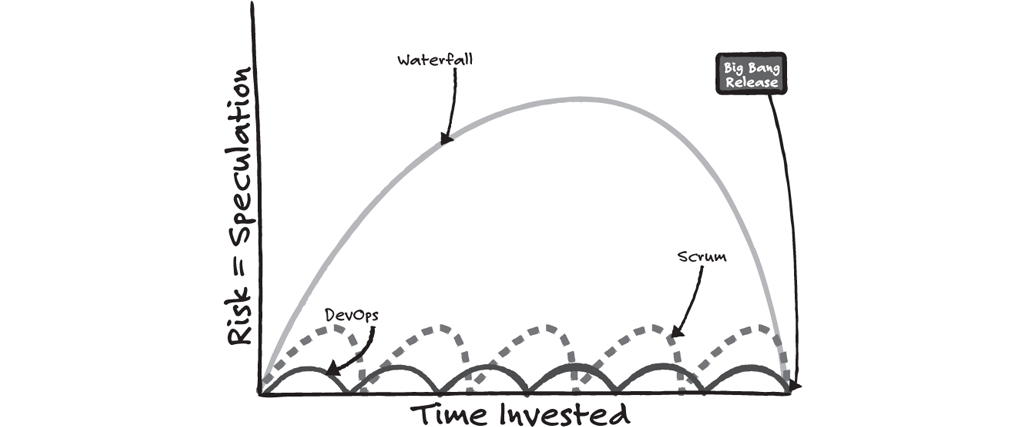

Figure 5: Risk Increases Based on Time Without a Release⁴

Figure 5 shows value delivered and risk levels over time for a waterfall project, an old-style Agile project, and a DevOps project. Any money spent on the project is at risk until code is released to users and the business can verify that it is adding value. For waterfall projects, the result is “speculation buildup”: money keeps flowing into the project on the speculation that it is adding value. The total amount of risk is the area under the risk curve, that is, its integral over time. Agile and DevOps initiatives maintain risk at a low level by constantly releasing software whose value can be ascertained, and they capture considerably more value, especially given the time value of money.
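The idea of risk as accumulated, unverified spending can be made concrete with a small calculation. This is a rough sketch with made-up numbers, not the model behind Figure 5: it assumes a constant monthly spend and that each release fully retires the risk on everything spent so far. The function name `risk_exposure` and its parameters are hypothetical.

```python
def risk_exposure(monthly_spend: float, months: int, release_every: int) -> float:
    """Approximate the area under the risk curve, in dollar-months.

    Simplifying assumptions (ours, for illustration only):
    - spending is constant each month;
    - a release verifies the value of everything spent so far,
      so the unreleased (at-risk) balance drops back to zero.
    """
    if release_every <= 0:
        raise ValueError("release_every must be a positive number of months")
    unreleased = 0.0  # money spent but not yet validated by users
    exposure = 0.0    # running total of at-risk money carried month by month
    for month in range(1, months + 1):
        unreleased += monthly_spend
        exposure += unreleased
        if month % release_every == 0:
            unreleased = 0.0
    return exposure


if __name__ == "__main__":
    for label, cadence in (("single release at month 12", 12),
                           ("quarterly releases", 3),
                           ("monthly releases", 1)):
        print(f"{label:<28} {risk_exposure(100.0, 12, cadence):>8.0f} dollar-months at risk")
```

With the same total spend of 1,200 over twelve months, the single-release case carries 7,800 dollar-months of exposure, the quarterly case 2,400, and the monthly case 1,200. The absolute figures mean little; the point is how quickly exposure shrinks as releases become more frequent.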

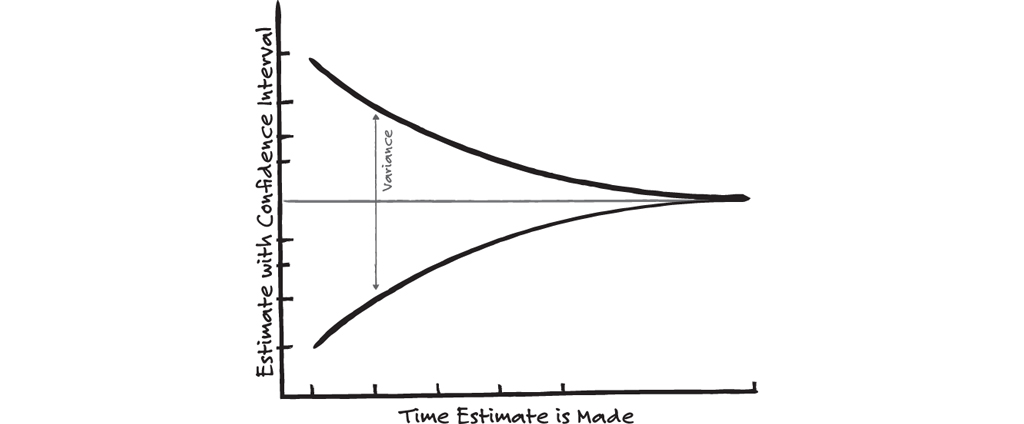

Figure 6: The Cone of Uncertainty

Figure 6 is another classic, called the Cone of Uncertainty. If you estimate a project before it starts, your estimate should have a very large confidence interval. The further into the project you get, the more information you have, so the confidence interval decreases. Early estimates should never be relied on—they are (legitimately) always wrong.

Figure 7: Vulnerable Downloads per Month⁵

Figure 7 illustrates something important to understand about security. Even though we know that certain pieces of software have security vulnerabilities, we’re still using them. This graph shows that businesses continued to download and use a piece of open-source software even after its vulnerability was apparent. One reason for this is that we’re afraid to patch our software because something might break. DevOps helps solve this by incorporating automated tests that quickly tell us whether that risk is real. To generalize the message of Figure 7: simple hygiene can considerably improve our security postures.

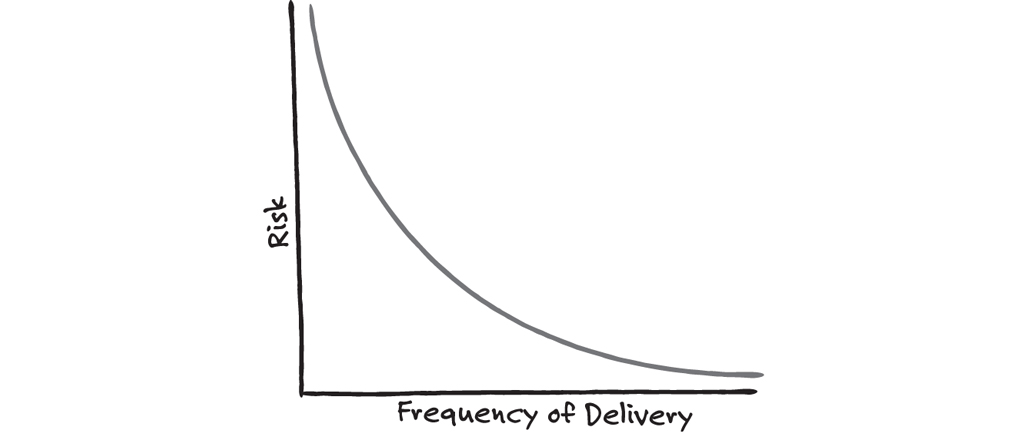

Figure 8: Risk versus Frequency of Delivery

Figure 8 shows delivery risk versus frequency of delivery. The more frequently you deploy, the better you become at it. And the smaller your deployments are, the less risk you have in each one.

Figure 9: Throughput versus Batch Size⁶

Figure 9 is a standard Lean graph showing that throughput deteriorates quickly as batch size increases. Think of an IT initiative as a batch of requirements: they sit in inventory, then they’re processed and completed. Large batches are one reason why large projects fail, and a good reason for limiting work in process, that is, working a small number of requirements to completion and then moving on to the next ones.
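A related way to reason about work in process is Little’s Law, a standard queueing result that the text does not cite but that fits the same argument: in a stable system, average lead time equals average work in process divided by average throughput. The sketch below uses invented numbers, and the function name `average_lead_time` is hypothetical. It also holds throughput constant, so if anything it understates the penalty shown in Figure 9, where throughput itself falls as batches grow.

```python
def average_lead_time(work_in_process: float, throughput_per_week: float) -> float:
    """Little's Law: average lead time = average WIP / average throughput.

    Assumes a stable system in which items enter and leave at the same
    long-run rate. The result is in weeks because throughput is per week.
    """
    if throughput_per_week <= 0:
        raise ValueError("throughput_per_week must be positive")
    if work_in_process < 0:
        raise ValueError("work_in_process must be non-negative")
    return work_in_process / throughput_per_week


if __name__ == "__main__":
    throughput = 5.0  # requirements completed per week (illustrative)
    for wip in (50, 20, 10, 5):
        weeks = average_lead_time(wip, throughput)
        print(f"{wip:>3} requirements in process -> {weeks:.1f} weeks average lead time")
```

With a team finishing five requirements a week, holding fifty in process means the average requirement waits ten weeks before it delivers anything, while holding ten means it waits two.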

Some takeaways: Work in small teams, create fast feedback cycles, reduce requirements-in-process, improve security hygiene, deliver small pieces of work frequently.