Chapter 2 reviewed the control and data planes. This chapter focuses largely on the continuously evolving OpenFlow proposal and protocols, viewed by many as the progenitor of the clean slate theory and the instigator of the SDN discussion. We will also discuss, in general terms, how SDN controllers can implement a network’s control plane and, in doing so, potentially reshape the landscape of an operator’s network.

OpenFlow was originally imagined and implemented as part of network research at Stanford University. Its original focus was to allow the creation of experimental protocols on campus networks that could be used for research and experimentation. Prior to that, universities had to create their own experimentation platforms from scratch. What evolved from this initial kernel of an idea was a view that OpenFlow could replace the functionality of layer 2 and layer 3 protocols completely in commercial switches and routers. This approach is commonly referred to as the clean slate proposition.

In 2011, a nonprofit consortium called the Open Networking Foundation (ONF) was formed by a group of service providers[35] to commercialize, standardize, and promote the use of OpenFlow in production networks. The ONF is a new type of Standards Development Organization in that it has a very active marketing department that is used to promote the OpenFlow protocol and other SDN-related efforts. The organization hosts an annual conference called the Open Networking Summit as part of these efforts.

In the larger picture, the ONF has to be credited with bringing attention to the phenomenon of software-defined networks.

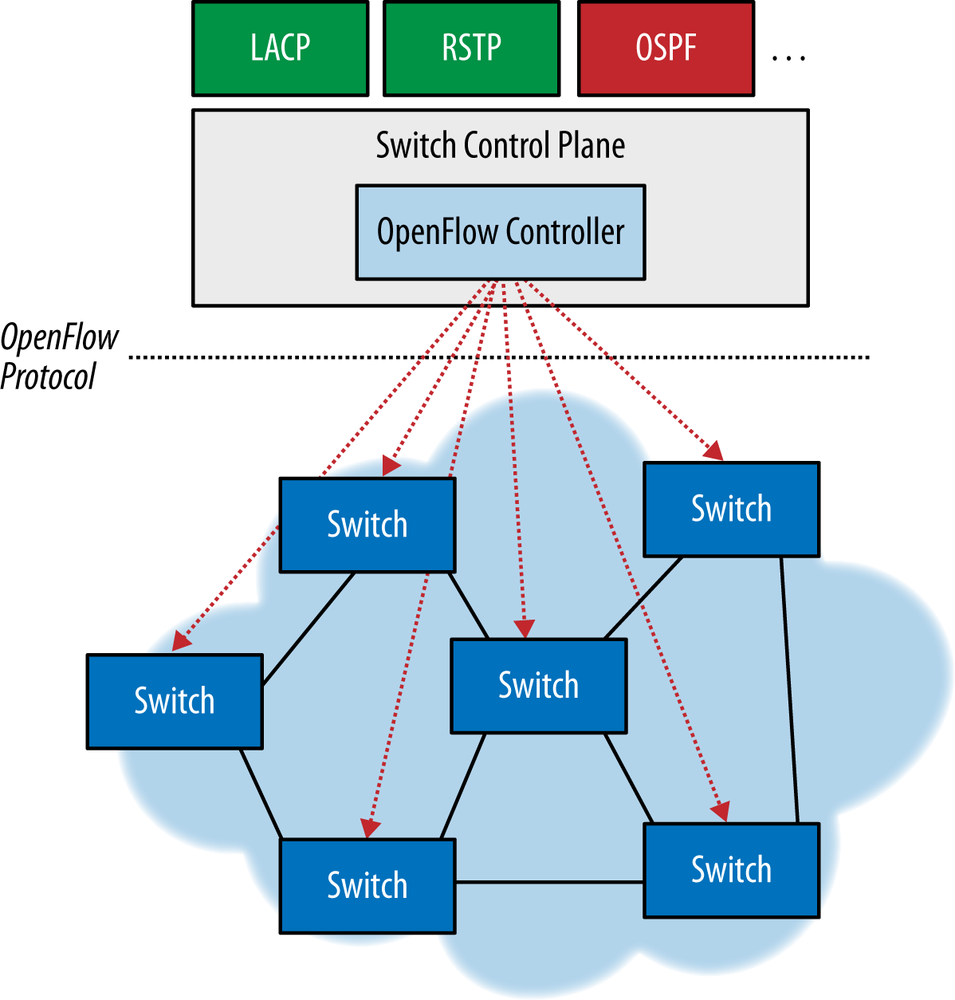

The key components of the OpenFlow model, as shown in Figure 3-1, have become at least part of the common definition of SDN, mainly:

Separation of the control and data planes (in the case of the ONF, the control plane is managed on a logically centralized controller system).

Using a standardized protocol between controller and an agent on the network element for instantiating state (in the case of OpenFlow, forwarding state).

Providing network programmability from a centralized view via a modern, extensible API.

OpenFlow is a set of protocols and an API, not a product per se or even a single feature of a product. Put another way, the controller does nothing without an application program (possibly more than one) giving instructions on which flows go on which elements (for their own reasons).

The OpenFlow protocols are currently divided in two parts:

A wire protocol (currently version 1.3.x) for establishing a control session, defining a message structure for exchanging flow modifications (flowmods) and collecting statistics, and defining the fundamental structure of a switch (ports and tables). Version 1.1 added the ability to support multiple tables, stored action execution, and metadata passing—ultimately creating logical pipeline processing within a switch for handling flows.

A configuration and management protocol, of-config (currently version 1.1) based on NETCONF (using Yang data models) to allocate physical switch ports to a particular controller, define high availability (active/standby) and behaviors on controller connection failure. Though OpenFlow can configure the basic operation of OpenFlow command/control it cannot (yet) boot or maintain (manage in an FCAPS context) an element.

In 2012, the ONF moved from “plugfests” to test interoperability and compliance, to a more formalized test (outsourced to Indiana University). This was driven by the complexity of the post-OpenFlow wire version 1.0 primitive set.

While the ONF has discussed establishing a reference implementation, as of this writing, this has not happened (there are many open source controller implementations).

OpenFlow protocols don’t directly provide the network slicing (an attractive feature that enables the ability to divide an element into separately controlled groups of ports or a network into separate administrative domains). However, tools like FlowVisor[36] (which acts as a transparent proxy between multiple controllers and elements) and specific vendor implementations (agents that enable the creation of multiple virtual switches with separate controller sessions) make this possible.

So, where does OpenFlow go that we haven’t been before?

First, it introduces the concept of substituting ephemeral state (flow entries are not stored in permanent storage on the network element) for the rigid and unstandardized semantics of various vendors’ protocol configuration.[37] Ephemeral state also bypasses the slower configuration commit models of past attempts at network automation.

For most network engineers, the ultimate result of such configuration is to create forwarding state (albeit distributed and learned in a distributed control environment). In fact, for many, the test of proper configuration is to verify forwarding state (looking at routing, forwarding, or bridging tables). Of course, this shifts some of the management burden to the controller(s)—at least the maintenance of this state (if we want to be proactive and always have certain forwarding rules in the forwarding table) versus the distributed management of configuration stanzas on the network elements.[38]

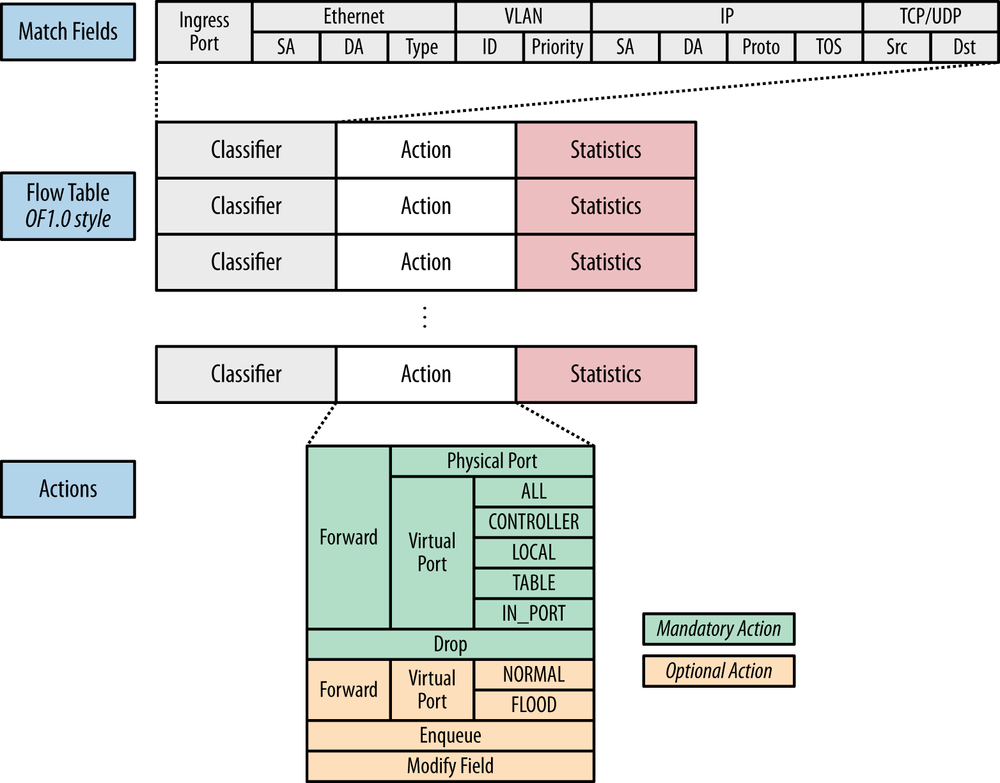

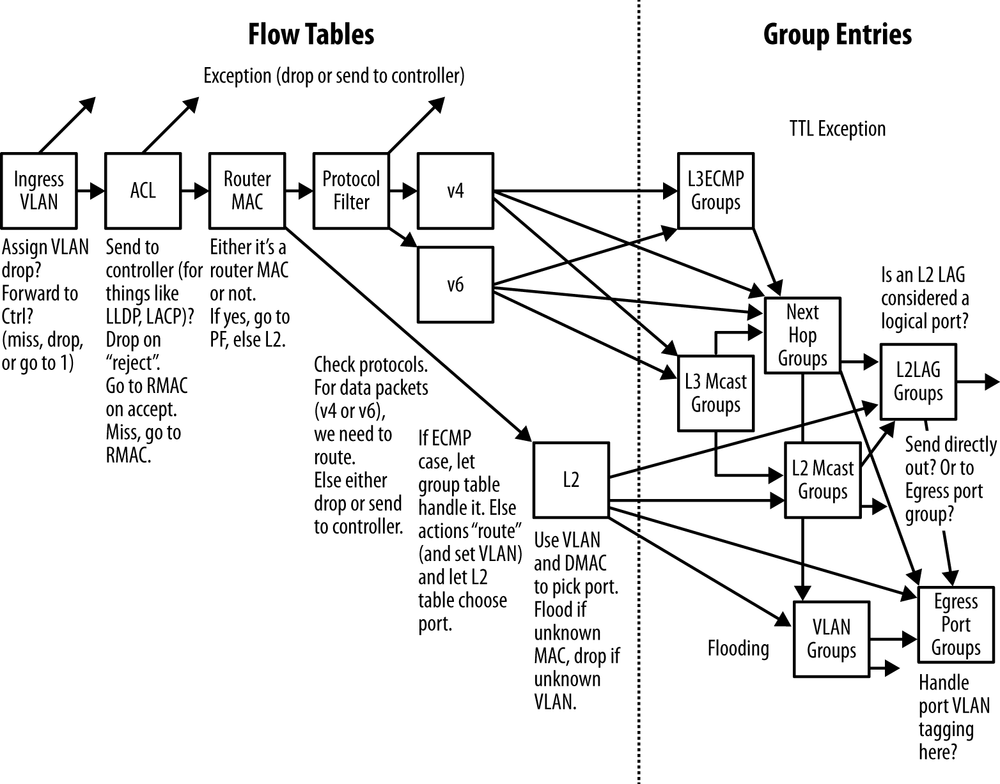

Second, in an OpenFlow flow entry, the entire packet header (at least the layer 2 and layer 3 fields) is available for match and modify actions, as shown in Figure 3-2. Many of the field matches can be masked[39]. These have evolved over the different releases of OpenFlow[40]. Figure 3-2 illustrates how complex implementing the L2+L3+ACL forwarding functionality (with next hop abstraction for fast convergence) can be. The combination of primitives supported from table to table leads to a very broad combination of contingencies to support.

This is a striking difference in breadth of operator control when compared with the distributed IP/MPLS model (OpenFlow has an 11-tuple match space). A short list of possibilities includes:

Because of the masking capability in the match instructions, the network could emulate IP destination forwarding behavior.

At both layer 2 and layer 3, the network can exhibit source/destination routing behavior.

There is no standardized equivalent (at present) to the packet matching strengths of OpenFlow, making it a very strong substitute for Policy Based Routing or other match/forward mechanisms in the distributed control environment.

Finally, there is the promise of the modify action. The original concept was that the switch (via an application running above the switch) could be made to behave like a service appliance, performing services like NAT or firewall. Whether or not this is realizable in hardware-based forwarding systems, this capability is highly dependent on vendor implementation (instructions supported, their ordering, and the budgeted number of operations to maintain line rate performance)[41]. However, with the label manipulation actions added to version 1.3 of the wire protocol, it is possible that an OpenFlow controlled element could easily emulate integrated platform behaviors like an MPLS LSR (or other traditional distributed platform functions).

The OpenFlow protocol is extensible through an EXPERIMENTER extension (which can be public or private) for control messages, flow match fields, meter operation, statistics, and vendor-specific extensions.

Table entries can be prioritized (in case of overlapping entries) and have a timed expiry (saving clean-up operation in some cases, and setting a drop dead efficacy for flows in one of the controller loss scenarios).
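The interplay of masked matches, entry priority, and timed expiry can be sketched in a few lines of Python. This is an illustrative simplification, not the OpenFlow data structures: `FlowEntry`, `FlowTable`, and the single IPv4 destination match are hypothetical names chosen for the example, and prefix notation stands in for a value/mask pair.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class FlowEntry:
    """Hypothetical, simplified flow entry with one masked IPv4 destination match."""
    match_dst: str             # prefix notation stands in for value/mask
    priority: int              # the higher value wins when entries overlap
    action: str                # e.g. "output:2"
    hard_timeout: float = 0.0  # seconds; 0 means the entry never expires
    installed_at: float = 0.0  # time at which the entry was placed

    def matches(self, dst: str) -> bool:
        return ip_address(dst) in ip_network(self.match_dst)

    def expired(self, now: float) -> bool:
        return self.hard_timeout > 0 and now >= self.installed_at + self.hard_timeout


class FlowTable:
    def __init__(self) -> None:
        self.entries: List[FlowEntry] = []

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)

    def lookup(self, dst: str, now: float) -> Optional[str]:
        # Timed expiry: stale entries are removed without controller involvement.
        self.entries = [e for e in self.entries if not e.expired(now)]
        candidates = [e for e in self.entries if e.matches(dst)]
        if not candidates:
            return None  # table miss
        # Overlapping entries are resolved by priority, not by arrival order.
        return max(candidates, key=lambda e: e.priority).action
```

If the control program sets each entry’s priority to its prefix length, the table behaves like longest-prefix-match IP destination forwarding, which is one way the masking capability could emulate that behavior.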

OpenFlow supports PHYSICAL, LOGICAL, and RESERVED port types. These ports are used as ingress, egress, or bidirectional structures.

The RESERVED ports IN_PORT and ANY are self-explanatory.

TABLE was required to create a multitable pipeline (OpenFlow supports up to 255 un-typed tables with arbitrary GoTo ordering).

The remaining RESERVED ports enable important (and interesting) behaviors[42]:

- LOCAL

An egress-only port, this logical port allows OpenFlow applications to access ports (and thus processes) of the element host OS.

- NORMAL

An egress-only port, this logical port allows the switch to function like a traditional Ethernet switch (with associated flooding/learning behaviors). According to the protocol functional specification, this port is only supported by a Hybrid switch.[43]

- FLOOD

An egress-only port, this logical port uses the replication engine of the network element to send the packet out all standard (nonreserved) ports. FLOOD differs from ALL (another reserved port) in that ALL includes the ingress port.

- CONTROLLER

Allows the flow rule to forward packets (over the control channel) from data path to the controller (and the reverse). This enables PACKET_IN and PACKET_OUT behavior.

The forwarding paradigm offers two modes: proactive (pre-provisioned) and reactive (data-plane driven). In the proactive mode, the control program places forwarding entries ahead of demand. In the reactive mode, if a flow does not match an existing entry, the operator has two (global) options: drop the flow, or use the PACKET_IN option to let the controller make a decision and create a flow entry that accommodates the packet (with either a positive/forward or a negative/drop disposition).
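A toy model may help separate the two modes. The sketch below assumes an exact-match switch keyed on a destination name; `Switch`, `Controller`, and the policy dictionary are hypothetical and stand in for real flow tables and controller applications.

```python
from typing import Dict, Optional

DROP = "drop"


class Switch:
    """Hypothetical exact-match switch with a global table-miss policy."""

    def __init__(self, miss_policy: str, controller: Optional["Controller"] = None) -> None:
        if miss_policy not in ("drop", "packet_in"):
            raise ValueError("miss_policy must be 'drop' or 'packet_in'")
        self.miss_policy = miss_policy
        self.controller = controller
        self.flows: Dict[str, str] = {}  # destination -> action

    def install(self, dst: str, action: str) -> None:
        # Used both proactively (ahead of demand) and reactively (after a miss).
        self.flows[dst] = action

    def receive(self, dst: str) -> str:
        if dst in self.flows:
            return self.flows[dst]
        if self.miss_policy == "drop" or self.controller is None:
            return DROP
        # Reactive mode: punt the packet to the controller (PACKET_IN).
        return self.controller.packet_in(self, dst)


class Controller:
    def __init__(self, policy: Dict[str, str]) -> None:
        self.policy = policy
        self.packet_ins = 0

    def packet_in(self, switch: Switch, dst: str) -> str:
        self.packet_ins += 1
        # Forward if policy allows; otherwise install a negative (drop) entry.
        action = self.policy.get(dst, DROP)
        switch.install(dst, action)
        return action
```

In this model only the first packet of an unknown flow reaches the controller; later packets hit the entry it installed, whether that entry forwards or drops.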

The control channel was originally specified as a symmetric TCP session (potentially secured by TLS). This channel is used to configure, manage (place flows, collect events, and statistics) and provide the path for packets from the switch to and from the controller/applications.
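Every message on this channel begins with the same fixed 8-byte header: version, message type, total length, and a transaction ID. The following sketch packs and parses that header with Python’s `struct` module; the helper names `pack_header` and `unpack_header` are hypothetical, while the field layout and the constants shown (wire version 0x04 for OpenFlow 1.3, type 0 for HELLO) follow the published specification.

```python
import struct
from typing import Dict

# version (1 byte), type (1 byte), length (2 bytes), xid (4 bytes), network byte order
OFP_HEADER = struct.Struct("!BBHI")

OFP_VERSION_1_3 = 0x04  # wire version number for OpenFlow 1.3
OFPT_HELLO = 0          # first message exchanged on a new connection


def pack_header(msg_type: int, xid: int, body: bytes = b"",
                version: int = OFP_VERSION_1_3) -> bytes:
    """Build a message: header followed by an optional body."""
    length = OFP_HEADER.size + len(body)  # length covers header plus body
    return OFP_HEADER.pack(version, msg_type, length, xid) + body


def unpack_header(data: bytes) -> Dict[str, int]:
    """Parse the first 8 bytes of a received message."""
    version, msg_type, length, xid = OFP_HEADER.unpack_from(data)
    return {"version": version, "type": msg_type, "length": length, "xid": xid}
```

A receiver reads 8 bytes, learns the total length from the header, and then reads the remainder of the message, which is how message boundaries are recovered from the TCP byte stream.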

Statistics support covers flow, aggregate, table, port, queue, and vendor-specific counters.

In version 1.3 of the protocol, multiple auxiliary connections are allowed (TCP, UDP, TLS, or DTLS) that are capable of handling any OpenFlow message type or subtype. There is no guarantee of ordering on the UDP and DTLS channels, and behavioral guidelines are set in the specification to make sure that packet-specific operations are symmetric (to avoid ordering problems at the controller).[44]

OpenFlow supports the BARRIER message to create a pacing mechanism (creating atomicity or flow control) for cases where there may be dependencies between subsequent messages (the given example is a PACKET_OUT operation that requires a flow to first be placed to match the packet that enables forwarding).
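The dependency problem can be illustrated with a small simulation. It assumes, purely for illustration, a switch that processes each batch of messages in reverse arrival order unless a barrier separates them; real switches do not reorder deterministically, and the `process` function and tuple message format are hypothetical.

```python
from typing import List, Set, Tuple

Message = Tuple[str, str]  # (message type, flow key)


def process(messages: List[Message]) -> List[str]:
    """Model a switch that may reorder messages, but never across a barrier."""
    flows: Set[str] = set()
    log: List[str] = []
    batch: List[Message] = []

    def flush() -> None:
        # Assumed worst case: everything since the last barrier runs in reverse order.
        for kind, key in reversed(batch):
            if kind == "FLOW_MOD":
                flows.add(key)
                log.append(f"installed {key}")
            elif kind == "PACKET_OUT":
                log.append(f"forwarded {key}" if key in flows else f"missed {key}")
        batch.clear()

    for msg in messages:
        if msg[0] == "BARRIER_REQUEST":
            flush()  # all earlier messages must complete before the reply
            log.append("barrier reply")
        else:
            batch.append(msg)
    flush()
    return log
```

Without the barrier, the PACKET_OUT in this model is handled before the flow exists and misses; with a barrier between the two messages, the flow is guaranteed to be in place first.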

OpenFlow provides several mechanisms for packet replication.

The ALL and FLOOD reserved virtual ports are used primarily for emulating/supporting the behaviors of existing protocols (e.g., LLDP, used to collect topology for the controller, often uses FLOOD as its output port).

Group tables allow the grouping of ports into an output port set to support multicasting, multipath, indirection, and fast-failover. Each group table is essentially a list of action buckets (where ostensibly one of the actions is output, and an egress port is indicated). There are four group table types, but only two are required:

- All

Used for multicast; all action buckets in the list have to be executed.[45]

- Indirect

Used to simulate the next hop convergence behavior in IP forwarding for faster convergence
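The two required group types can be modeled as lists of action buckets. In the sketch below, `Bucket`, `Group`, and `resolve` are hypothetical names; the only behavior taken from the specification is that an All group executes every bucket and an Indirect group holds exactly one.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Bucket:
    actions: List[str]  # e.g. ["output:3"]


@dataclass
class Group:
    group_type: str  # "all" or "indirect" (the two required types)
    buckets: List[Bucket]

    def __post_init__(self) -> None:
        if self.group_type not in ("all", "indirect"):
            raise ValueError("only the required group types are modeled here")
        if self.group_type == "indirect" and len(self.buckets) != 1:
            raise ValueError("an indirect group holds exactly one bucket")

    def apply(self) -> List[List[str]]:
        """Return one action list per packet copy the group produces."""
        return [list(b.actions) for b in self.buckets]


def resolve(flows: Dict[str, int], groups: Dict[int, Group], prefix: str) -> List[List[str]]:
    """Follow a flow entry to its group and return the resulting actions."""
    return groups[flows[prefix]].apply()
```

Because many flow entries can point at one Indirect group, replacing that group’s single bucket repoints all of them in one operation, which is the next hop convergence behavior the type is meant to simulate.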

Action lists in the Apply action (the Apply action was a singleton in OpenFlow version 1.0) allow successive replications by using a list of output/port actions.

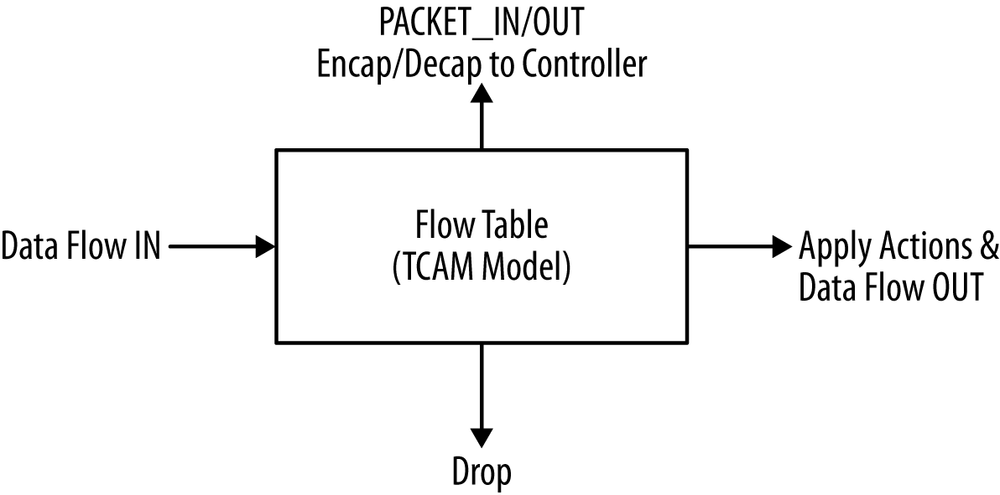

The model for an OpenFlow switch (Figure 3-3) works well on a software-based switch (eminently flexible in scale and packet manipulation characteristics) or a hardware-forwarding entity that conforms to some simplifying assumptions (e.g., large, wide, deep, and multi-entrant memories like a TCAM). But because not all devices are built this way, there’s a great deal of variation in the support of all the packet manipulations enabled by the set of OpenFlow primitives, multiple tables, and other aspects that give OpenFlow its full breadth and power.

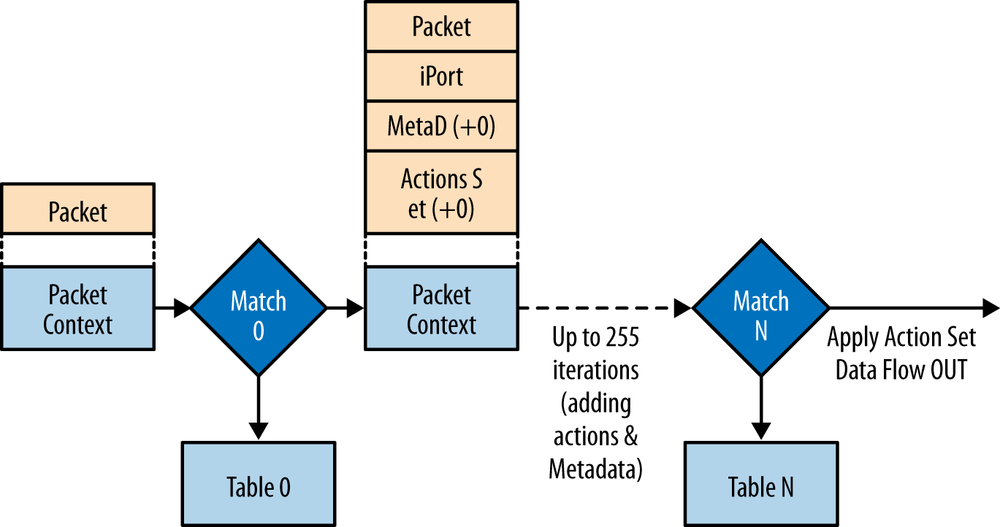

In general, the potential combinatorial complexity of OpenFlow version 1.1 (see Figure 3-4) and beyond does not work well on ASIC-based forwarders. For this reason, the level of abstraction chosen for OpenFlow has come into question, as has its applicability for all applications.

While this is a commonly held belief, an interview of Martin Casado (OpenFlow creator) is often cited in the more general argument about abstraction level.[46]

In the full context of the interview, Martin cites a role for OpenFlow in Traffic Engineering applications, makes comments on the current limitations of implementing OpenFlow on existing ASICs (to the general point), and then makes a specific comment on the applicability of OpenFlow for Network Virtualization: “I think OpenFlow is too low-level for this.”[47]

Figure 3-4. The post OpenFlow pipeline model in version 1.1 and beyond (very complex—combinations complexity O(n!* a(2^l)) paths where n = number of tables, a = number of actions and l = width of match fields)

The protocol had limited capability detection in earlier versions, which was refactored in version 1.3 to support some primordial table capability description (adding match type for each match field—e.g., exact match, wildcard, and LPM).

The following shortcomings were cited for the existing abstraction[48]:

- Information loss

- Information leakage

- Weak control plane to data plane abstraction

- Combinatorial state explosion

- Data-plane-driven control events

- Weak indirection infrastructure

- Time-sensitive periodic messaging

- Multiple control engines

- Weak extensibility

- Missing primitives

A separate workgroup, FAWG, is attempting a first-generation, negotiated switch model through table type patterns (TTPs).[49] FAWG has developed a process of building, identifying (uniquely), and sharing TTPs. The negotiation algorithm (built on a Yang model) and messaging to establish an agreed TTP between controller and switch is also being developed (a potential addition to of-config version 1.4).

A TTP model is a predefined switch behavior model (e.g., HVPLS_over_TE forwarder and L2+L3+ACL) represented by certain table profiles (match/mask and action) and table interconnections (a logical pipeline that embodies a personality). These profiles may differ based on the element’s role in the service flow (e.g., for the HVPLS forwarder, whether the element is head-end, mid-point, or egress).

Early model contributions suggest further extensions may be required to achieve TTP in OpenFlow version 1.3.x.

If FAWG is successful, it may be possible for applications above the controller to be aware of element capabilities, at least from a behavior profile perspective.

Here is a simple example of the need for TTP (or FPMOD).[50]

Hardware tables can be shared when they contain similar data and have low key diversity (e.g., a logical table with two views: MAC forwarding and MAC learning). This table could be implemented many different ways, including as a single hardware table. An OpenFlow controller implementing MAC learning/bridging will have to have a separate table for MAC learning and a different table for MAC bridging (a limitation in expression in OpenFlow). There is no way today to tie these two potentially differing views together. In the simple example shown in Figure 3-5, timing scenarios could arise in which flow mods from the two separate OpenFlow table entities must be synchronized (i.e., you can’t do forwarding before learning).
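The idea of one table with two views can be sketched as follows. `MacTable` and `handle_frame` are hypothetical names; the model shows only the native case, where a single store backs both the learning and forwarding lookups and so needs no synchronization.

```python
from typing import Dict, Optional


class MacTable:
    """One logical table with two views over the same entries."""

    def __init__(self) -> None:
        self._entries: Dict[str, int] = {}  # MAC address -> port

    def learn(self, src_mac: str, in_port: int) -> None:
        # Learning view: keyed on the source MAC of an arriving frame.
        self._entries[src_mac] = in_port

    def forward(self, dst_mac: str) -> Optional[int]:
        # Forwarding view: keyed on the destination MAC, same underlying data.
        return self._entries.get(dst_mac)


def handle_frame(table: MacTable, src_mac: str, dst_mac: str, in_port: int) -> str:
    table.learn(src_mac, in_port)  # learning happens before forwarding
    out_port = table.forward(dst_mac)
    return "flood" if out_port is None else f"output:{out_port}"
```

When the same behavior is expressed as two separate OpenFlow tables, each `learn` becomes a pair of flow mods, and the ordering that this single structure provides implicitly has to be enforced by the controller.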

Figure 3-5. Example of complexity behind TTP model for L2+L3+ACL/PBR TTP (source: D. Meyer and C. Beckmann of Brocade)

In Figure 3-5’s case, IPv4 and IPv6 tables point to group tables to emulate the use of the next hop abstraction in traditional FIBs (for faster convergence).

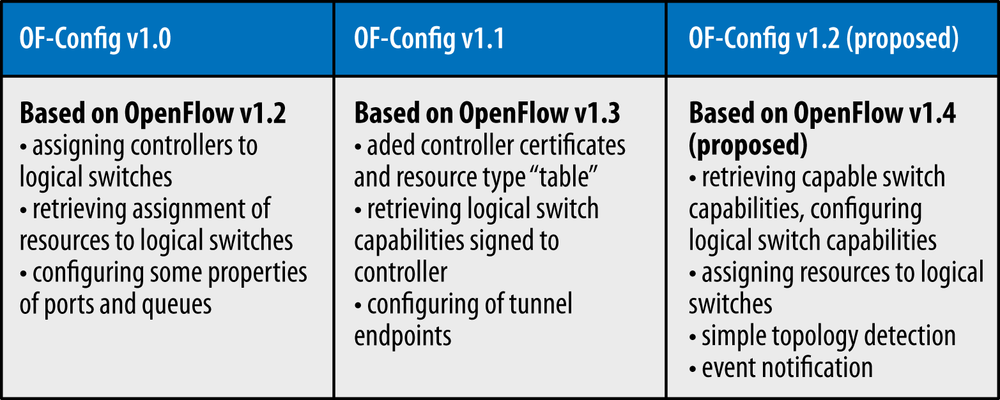

The of-config protocol was originally designed to set OpenFlow related information on the network element (of-config 1.0). The protocol is structured around XML schemas, Yang data models, and the NETCONF protocol for delivery.

Proposals to extend of-config can come from within the Config-Mgmt Working Group or from other groups (e.g., FAWG, Transport[51]).

As of version 1.1 of of-config, the standard decouples itself from any assumptions that an operator would run FlowVisor (or a similar, external slicing proxy) to achieve multiple virtual switch abstractions in a physical switch. This changes the working model to one in which the physical switch can have multiple internal logical switches, as illustrated in Figure 3-6.

Using of-config version 1.1, in addition to controllers, certificates, ports, queues, and switch capabilities, operators can configure some logical tunnel types (IP-in-GRE, NV-GRE, VX-LAN). This extension requires the support of the creation of logical ports on the switch.

Proposals exist to expand of-config further in the areas of bootstrapping and to expand the abilities of the of-config protocol in version 1.2 (see Figure 3-7) to support even more switch/native functionality (e.g., the ability to configure a local/native OAM protocol daemon has been proposed as an extension).

By extending of-config into native components, the ONF may have inadvertently broached the topic of hybrid operation and may also have created some standards-related confusion[52].

One of the slated items for the Architecture group to study is a potential merge of the wire and configuration protocols. The Architecture group is not chartered to produce any protocols or specifications as its output, so that would have to be done at a future time by a different group.

The use of NETCONF may also be expanded in call home scenarios (i.e., switch-initiated connections), but the redesignation of BEEP (specified for NETCONF connections of this type in of-config) as a historic protocol may require some changes in the specification or cooperative work with the IETF.

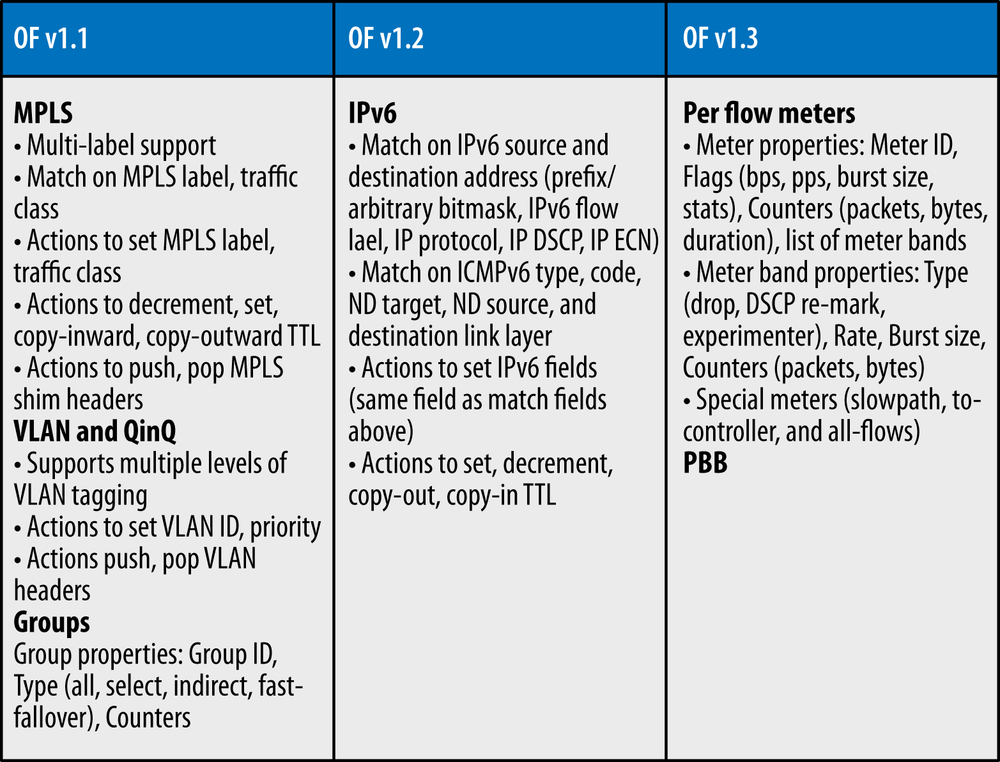

The Extensibility Working Group exists to vet proposed extensions to the wire protocol to add new functionality to OpenFlow (see Figure 3-8 for the general progression of the protocol).

In April 2012, when OpenFlow wire protocol version 1.3 was released, the ONF decided to slow down extensibility releases until there was a higher adoption rate of that version and to allow for interim bug-fix releases (e.g., allowing a 1.3.1 release to fix minor things in 1.3).[53]

The major extension candidates for the OpenFlow wire protocol version 1.4 come from a newly formed Transport Discussion Group,[54] whose focus is on an interface between OpenFlow and optical transport network management systems to create a standard, multivendor transport network control (i.e., provisioning) environment.[55]

Note

Look for full coverage of OpenFlow version 1.4 enhancements in a future edition of this book.

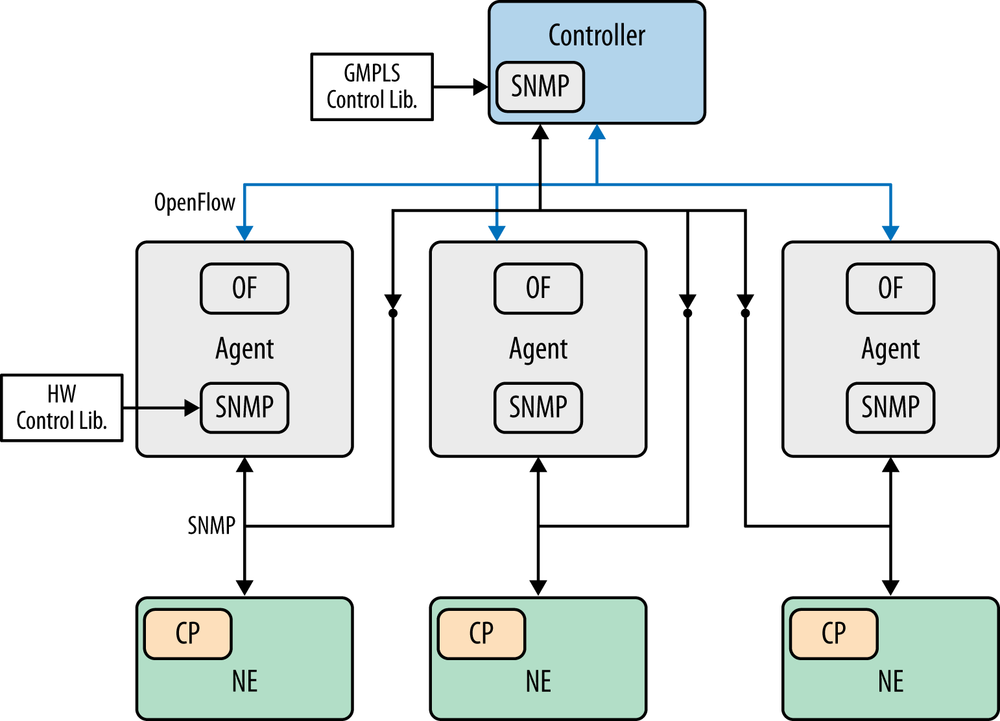

The first efforts at the integration of transport and OpenFlow demonstrated capability by abstracting the optical network into an understandable switch model for OpenFlow—an abstract view to create a virtual overlay.

The architecture of the currently proposed transport solution(s) coming out of the discussion group will combine the equipment level information models (i.e., OTN-NE, Ethernet NE, and MPLS-TP NE) and network level information models (MTOSI, MTNM) in combination with an OpenFlow driven control plane—a direct control alternative.[56]

Even in the direct control scenario, questions remain about various hybrid control plane scenarios, in particular whether there will be a combination of traditional EMS/NMS protocols and OpenFlow-driven control on the same transport network. This is illustrated in Figure 3-9.

While OpenFlow provides a standardized southbound (controller to element agent) protocol for instantiating flows, there is no standard for either the northbound (application facing) API or the east/west API.

The east/west state distribution on most available controllers is based on a database distribution model, which allows federation of a single vendor’s controllers but doesn’t allow an interoperable state exchange.

The Architecture Working Group is attempting to address this, at least indirectly, by defining a general SDN architecture. The ONF has a history of marrying the definition of SDN and OpenFlow. Without these standardized interfaces, the question arises whether the ONF definition of SDN implies openness.

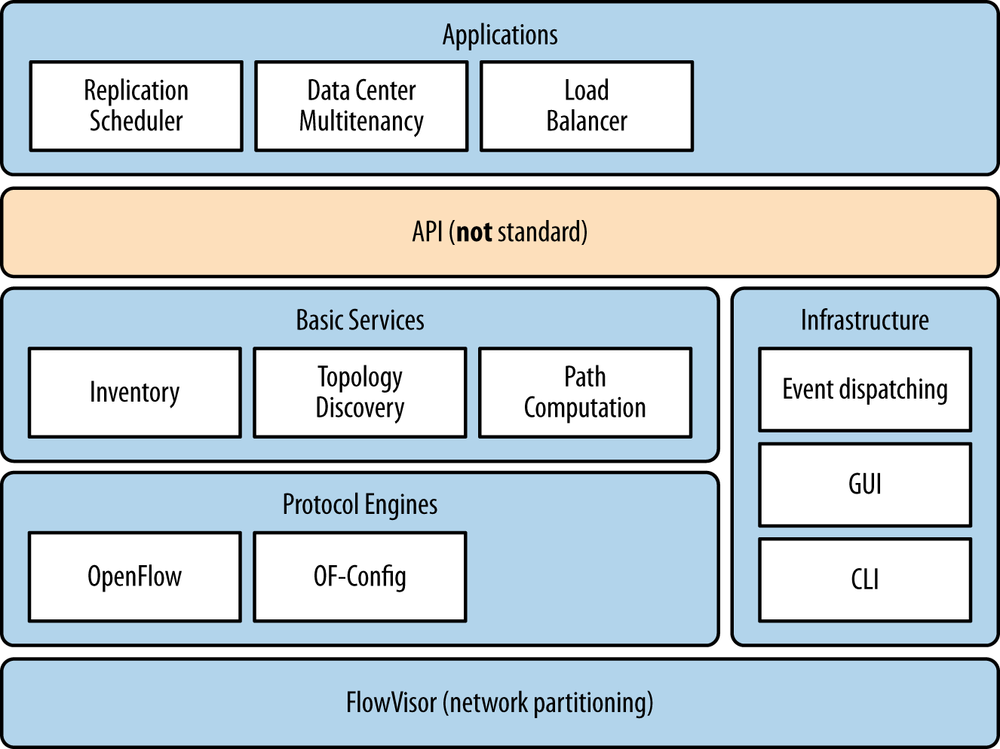

Most OpenFlow controllers (Figure 3-10) provide a basic set of application services: path computation, topology (determined through LLDP, which limits topology to layer 2), and provisioning. To support of-config, they need to support a NETCONF driver.

The ongoing questions about SDN architecture and OpenFlow are around whether the types of application services provided by an OpenFlow controller (and the network layer at which OpenFlow operates) are sufficient for all potential SDN applications.[57]

Research into macro topics around the OpenFlow model (e.g., troubleshooting, the expression of higher level policies with OpenFlow semantics, and the need for a verification layer between controllers and elements) is being conducted in many academic and research facilities, most notably at the Open Network Research Center (ONRC).

The ONF did spawn a Hybrid Working Group. The group proposed architectures for a Ships in the Night (SIN) model of operation and an Integrated Hybrid model. The board only accepted the recommendations of the SIN model.

The Integrated Hybrid model spawned a series of questions around security and the inadvertent creation of a hybrid network.

Assuming a controlled demarcation point is introduced in the network element (between the OpenFlow and native control planes), the security questions revolve around how the reserved ports (particularly CONTROLLER, NORMAL, FLOOD, and LOCAL) could be exploited to allow access to native daemons on the hybrid (applications on the controller or OpenFlow ports spoofing IGP peers and other protocol sessions to insert or derive state) or the native network.

The security perimeter expands in the case of an unintended connection that creates a hybrid network. This occurs when one end of an external/non-loopback network link is connected to an OpenFlow domain and the other end to a native domain.

Note

A newly forming Security Working Group could address hybrid security concerns, which at the time of this writing didn’t encompass enough material for a separate discussion. Look for more on this in future editions of this book.

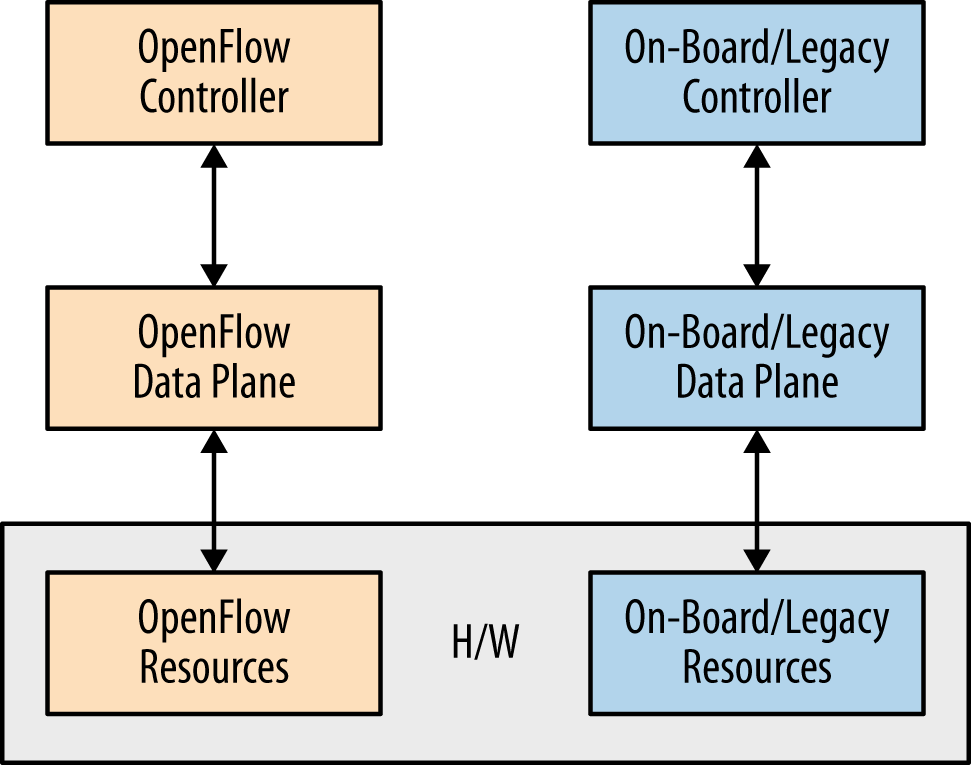

The Ships in the Night proposition assumes that a port (physical or logical) can only be used for OpenFlow or native, but not both (see Figure 3-11). The focus of SIN was on:

Bounding the allocated resources of the OpenFlow process such that they couldn’t impede the operation of the native side (and the reverse). Suggestions included the use of modern process level segregation in the native host OS (or by virtualization).

Avoiding the need to synchronize state or event notifications between the control planes.

Strict rules for the processing of flows that included the use of the LOCAL, NORMAL, and FLOOD reserved ports (with explicit caveats25).

SIN expands the preceding ONF definition of hybrid (as reflected in the definition of NORMAL).

The SIN model allowed port segregation by logical port or VLAN and recommended the use of MSTP for spanning tree in such an environment (a step that is actually necessary for certain types of integrated hybrids).

Lastly, SIN pointed out the ambiguities in the interactions of the reserved ports and the looseness of the port delegation model as potential areas of improvement for a SIN hybrid.

The recommendations of the Hybrid Working Group’s Integrated Architecture white paper were rejected by the ONF. The board later recommended the formation of a Migration Working Group to assist OpenFlow adopters in the deployment of OpenFlow network architecture without a transitory period through hybrid use. However, demand for integrated hybrids still remains, and the newly formed Migration Working Group may address hybrid devices and hybrid networks.[58]

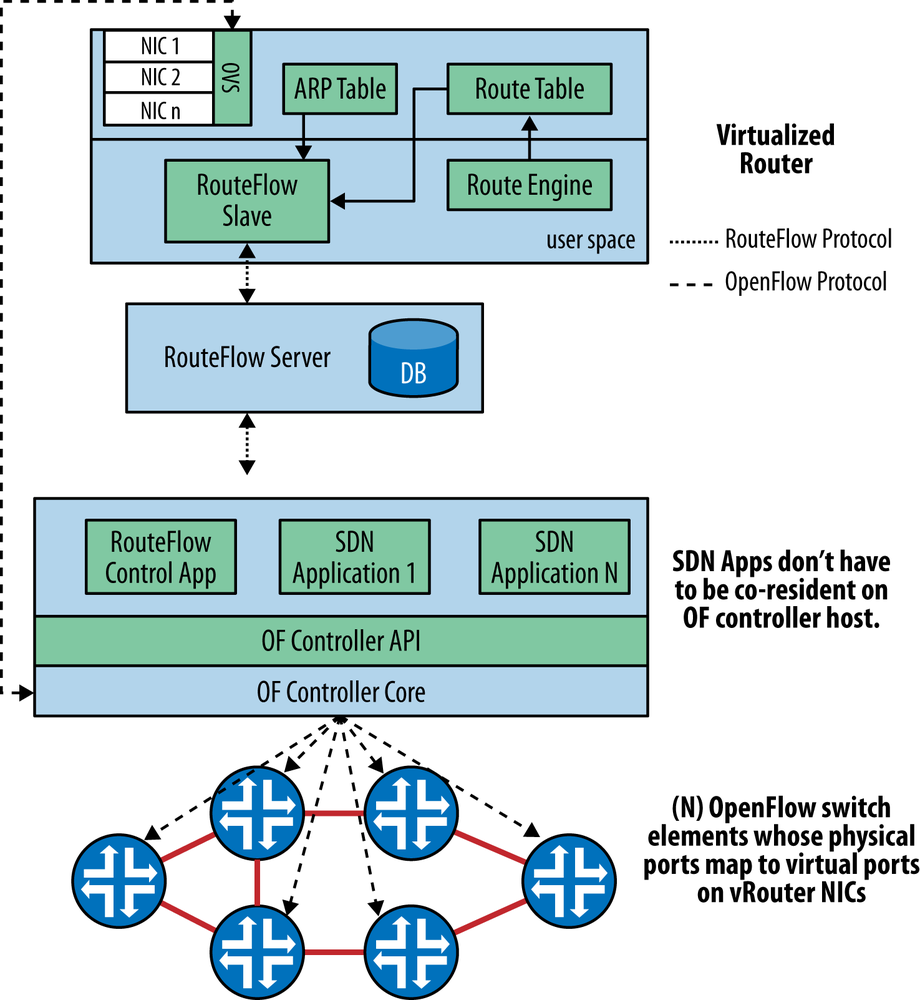

One of the existing/deployed models of integration is to integrate the OpenFlow domain with the native domain at the control level (e.g., RouteFlow). Unlike the integrated hybrid, this purposely builds a hybrid network (see Figure 3-12).

The general concept behind this approach is to run a routing stack on a virtual host and bind the virtual ports on the hypervisor vswitch in that host to physical ports on associated OpenFlow switches. Through these ports, the virtual router forms IGP and/or BGP adjacencies with the native network at appropriate physical boundary points by enabling the appropriate protocol flows in the flow tables of the boundary switches. The virtual router then advertises the prefixes assigned to the OpenFlow domain through appropriate boundary points (appearing to the native network as if they were learned through an adjacent peer). Additionally, (by using internal logic and policies) the virtual router creates flow rules in the OpenFlow domain that direct traffic toward destination prefixes learned from neighbors in this exchange using flow rules that ultimately point to appropriate ports on the boundary switch.
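The last step, turning routes learned by the virtual router into flow rules that point at boundary switch ports, can be sketched as a simple translation. This is not RouteFlow’s actual interface: `routes_to_flow_rules`, the rule dictionary layout, and the port-binding map are hypothetical, and using the prefix length as priority is one assumed way to preserve longest-prefix-match semantics.

```python
from ipaddress import ip_network
from typing import Dict, List, Tuple


def routes_to_flow_rules(
    routes: Dict[str, str],                    # prefix -> virtual router port
    port_binding: Dict[str, Tuple[str, int]],  # virtual port -> (switch, physical port)
) -> List[dict]:
    """Translate a virtual router's routes into flow rules for OpenFlow switches."""
    rules = []
    for prefix, vport in routes.items():
        switch, port = port_binding[vport]  # follow the virtual-to-physical binding
        rules.append({
            "switch": switch,
            "match": {"ipv4_dst": prefix},
            # Longer prefixes get higher priority so they win over shorter ones.
            "priority": ip_network(prefix).prefixlen,
            "action": f"output:{port}",
        })
    return sorted(rules, key=lambda r: r["priority"], reverse=True)
```

For example, a default route bound to port 1 of one boundary switch and a /24 bound to port 3 of another would yield two rules, with the /24 rule carrying the higher priority.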

One potential drawback of this hybrid design is that flow management and packet I/O take place serially on a common TCP session, which brings the design back around to the problems that needed to be addressed in the traditional distributed control plane: blocking, control packet I/O, latency, queue management, and hardware programming speed. Some of these problems may be ameliorated by the use of alternative control channels (proposed in OpenFlow 1.3), as these ideas progress and mature in the OpenFlow wire protocol.

The tools we have at hand to form an integrated hybrid connection (in the OpenFlow protocol and the native protocols on the same device) are tables and interfaces.

A table-based solution could be crafted that uses the GoToTable semantics of OpenFlow to do a secondary lookup in a native table. Today, OpenFlow has no knowledge of tables other than its own and no way to acquire this knowledge. A solution could be crafted that allows the discovery of native tables during session initialization. The problems with this solution are as follows:

The table namespace in OpenFlow is too narrow for VRF table names in native domains.

There can be a great deal of dynamic table creation on the native side, particularly on a provider edge or data center gateway device that would need to be updated to the controller (restarting the session could be onerous and dynamic discovery requires even more standardization effort).

The native domain could have more than 64 tables on certain devices.

Though a GoToTable solution would be elegant (incorporating all our assumptions for transparency above), it seems like a complicated and impactful route.

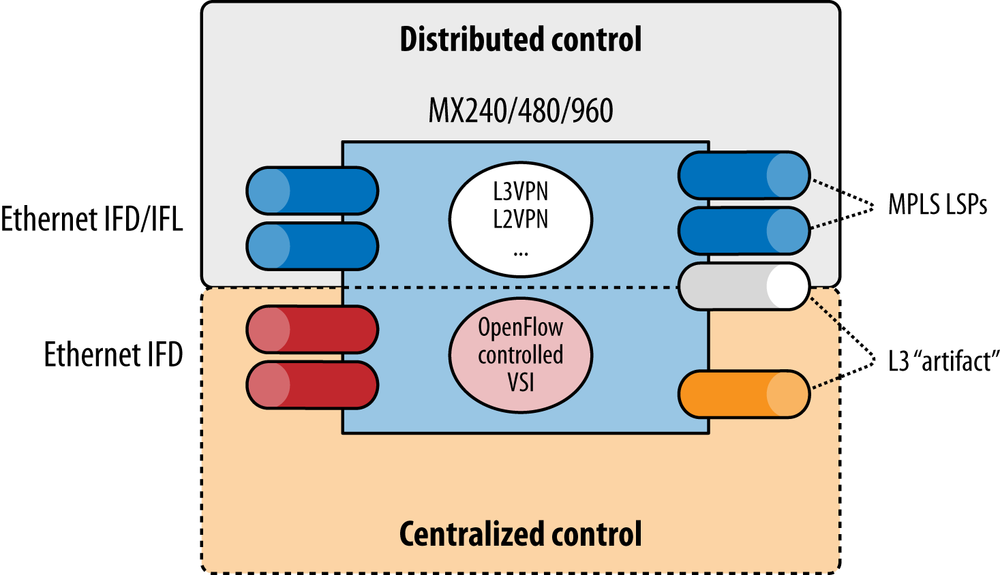

There currently are unofficial, interface-based solutions to achieve bidirectional flow between domains. The most common is to insert a layer 3 forwarding artifact in the OpenFlow switch domain. That artifact can then be leveraged through a combination of NORMAL behavior, DHCP, and ARP, such that end stations can discover a forwarding gateway device in an OpenFlow domain. While this works, it is far from robust. The NORMAL logical port is an egress-only port on the OpenFlow side, so flowmods to control the traffic in the reverse direction are not possible. Further, some administrators/operators do not like to use the NORMAL construct for security reasons.

It is possible to create rules directly cross-connecting a layer 3 artifact with OpenFlow controlled ports to allow ingress and egress rules, if we move forward with some extension to the interface definition that allows us to tag the interface as a layer 3 forwarder or native port (the semantics are our least concern). For example, in the Junos OS (Juniper Networks), there is a construct called a logical tunnel (see Figure 3-13). This construct can have one end in the OpenFlow domain and one in any routing domain on the native side. For an operator, this provides a scalable, transparent hybrid solution, but the only tag the operator can hang on the port (to discover its dual nature) is its name (which is unfortunately unique to Juniper Networks).

An interface-based solution would then require the following:

At minimum, an extension to port description to tag it as a native artifact (an access point between domains). Other additional attributes may indicate the nature of the domains (e.g., IP/MPLS) and the routing-instances that they host. These attributes can be exchanged with the vendor agent in a port-status message or in a Features-reply message, as part of port-info. (These are proposed extensions to the OpenFlow standard.)

The vendor agent should implement any MAC-related functionality required for bidirectional traffic flow (e.g., auto-associate the MAC of the artifact with any prefixes assigned to or pointing to the artifact in the native table).

The vendor agent should support OpenFlow-ARP-related functionality so that devices in the OpenFlow domain can discover the MAC of the artifact.

The native port can be implemented as an internal loopback port (preferable) or as an external loopback (i.e., a symmetric solution is preferred over an asymmetric solution).

It will be preferable if certain applications, such as topology discovery by LLDP, exclude the native artifacts/ports. (This is a prescribed operational behavior.)

The integrated hybrid should support virtual interfaces (e.g., sharing a link down to the level of a VLAN tag). External/native features of any shared link (such as a port supporting a VLAN trunk) should work across traffic from both domains (where the domains operate in parallel but do not cross-connect)[59]. Further, native interface features may be applied at the artifact (that connects the domains), but there is no assumption that they have to be supported. This behavior is vendor dependent, and support, consequences (unexpected behaviors), and ordering of these features need to be clearly defined by the vendor to their customers.

OpenFlow (and its accompanying standards organization, the ONF) is credited with starting the discussion of SDN and providing the first vestige of modern SDN control: a centralized point of control, a northbound API that exposes topology, path computation, and provisioning services to an application above the controller, as well as a standardized southbound protocol for instantiating forwarding state on a multivendor infrastructure.

Unfortunately, the OpenFlow architecture does not provide a standardized northbound API, nor does it provide a standardized east-west state distribution protocol that allows both application portability and controller vendor interoperability. Standardization may progress through the newly spawned Architecture Working Group, or even the new open source organization OpenDaylight Project.

OpenFlow provides a great deal of flow/traffic control for those platforms that can exploit the full set of OpenFlow primitives. The ONF has spawned a working group to address the description/discovery of the capabilities of vendor hardware implementations as they apply to the use of the primitive set to implement well-known network application models.

Even though there are questions about the level of abstraction implemented by OpenFlow and whether its eventual API represents a complete SDN API, there is interest in its application, and ongoing efforts around hybrid operation may make it easier to integrate its capability for matching/qualifying traffic in traditional/distributed networks or at the borders between OpenFlow domains and native domains.

[35] There are currently 90+ members of the ONF, including academic and government institutions, enterprises, service providers, software companies, and equipment manufacturers.

[36] FlowVisor will introduce some intermediary delay since it has to handle packets between the switch and controller.

[37] The ability to create ephemeral state in combination with programmatic control may only be a temporary advantage of OpenFlow, as there are proposals to add this functionality to existing programmatic methods (like NETCONF).

[38] This is not a unique proposition in that PCRF/PCEF/PCC systems (with associated Diameter interactions) have done this in the past in mobile networks on a per-subscriber basis. Standards organizations have been working on a clear definition and standardized processing of the interchanged messages and vendor interoperability between components of the overall system. There is no doubt that the mobile policy systems could evolve into SDN systems and have SDN characteristics. When this happens, the primary distinction between them and OpenFlow may be flexibility (simplicity, though subjective, may also be appropriate).

[39] The type of match supported (contiguous or offset based) is another platform-dependent capability.

[40] Unfortunately, backward compatibility was broken between version 1.2 and prior versions when a TLV structure was added to ofp_match (and match fields were reorganized). In fact, version 1.2 was considered non-implementable because of the number and types of changes (though there was an open source agent that finally did come out in 2012). There were changes to the HELLO handshake to do version discovery, and incompatible switch versions fail to form sessions with the controller.

[41] A later use case explores creating such an application above the controller or virtualizing it in a virtual service path.

[42] CONTROLLER is the only required reserved port in this particular set (the others in the set are optional). The reserved ports outside this set (ANY, IN_PORT, ALL, and TABLE) are all required. The combinations listed here are interesting for their potential interactions in a hybrid.

[43] The original definition of a “hybrid” was a switch that would behave both as an OpenFlow switch and a layer 2 switch (for the ports in the OpenFlow domain).

[44] There is a rather complete commentary regarding these changes to the protocol, particularly the change of the protocol from a session-based channel to UDP, in a JIRA ticket filed by David Ward.

[45] The specification claims the “all” group type is usable for multipath, but this is not multipath in the IP forwarding sense, as the packet IS replicated to both paths. This behavior is more aligned with live/live video feeds or other types of multipathing that require rectification at an end node.

[46] http://searchnetworking.techtarget.com/news/2240174517/Why-Nicira-abandoned-OpenFlow-hardware-control (Subscription required to read full article.)

[47] This expression of OpenFlow complexity courtesy of David Meyer.

[48] FPMODs and Table Typing Where To From Here? (David Meyer/Curt Beckmann) ONF TAG-CoC 07/17/12.

[49] A more complex solution was proposed (under the title Forwarding Plane Models - FPMOD) by the OpenFlow Future Discussion Group but was tabled for the simpler table type profile model being developed in FAWG (suggested by the TAG). This solution is less a set of models and more an extensible set of primitives that are mapped at the switch Hardware Abstraction Layer coding time instead of at the controller (of course, based on a negotiated model of behavior, but not necessarily a static predefined model limited to a pipeline description).

[50] This example also comes from the Meyer/Beckmann reference cited earlier in this chapter.

[51] Much of optical switch configuration is static and persistent, so some of the extensions required may be better suited to of-config.

[52] Because of-config uses NETCONF/Yang, the working group is establishing its own Yang data models for these entities (tunnels, OAM). From an SDO perspective, this may not be a good model going forward.

[53] Later, the ONF moved to require new extensibility and config-management suggestions to be implemented as prototypes using the extension parts of the protocol as a working proof of concept (somewhat like other SDOs’ requirements for working code to accompany a standard).

[54] A proposal to extend the wire protocol to support optical circuit switching (EXT-154). This extension deals with simple wavelength tuning and further definitions of a port.

[55] There is some merit to the claim that GMPLS was supposed to provide this standardization but that the definitions, interpretations, and thus the implementations of GMPLS are inconsistent enough to void a guarantee of multivendor interoperability.

[56] The solution will either allow direct control of the elements or leverage the proxy slicing functionality of a FlowVisor-like layer and introduce the concept of a client controller for each virtual slice of the optical/transport network (to fit the business applications common or projected in the transport environment).

[57] There has been an ongoing debate as to whether OpenFlow is SDN.

[58] For those that desire a hybrid network, a hybrid-network design proposal (the Panopticon hybrid) with a structure similar to a Data Center overlay model (using pseudowires for the overlay) was presented at ONS 2013.

[59] Customers have requested the ability to use QoS on the physical port in a way that prevents VLANs from one or the other domain (native or OF) from consuming an inordinate amount of bandwidth on a shared link.
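
As an illustration of the version discovery mentioned in footnote 40, the following is a minimal sketch of the HELLO negotiation rule as it is commonly described for the earlier OpenFlow specifications: each side advertises the highest wire version it supports, and the session uses the smaller of the two values if the local side actually implements it. The function and constant names here are hypothetical and chosen only for illustration; later specification revisions add a version bitmap to HELLO, which this sketch omits.

```python
# Wire-protocol version numbers carried in the OpenFlow header.
OFP_WIRE_VERSIONS = {0x01: "1.0", 0x02: "1.1", 0x03: "1.2", 0x04: "1.3"}


def negotiate_version(local_highest, peer_highest, local_supported):
    """Return the negotiated wire version, or None if the session must fail.

    local_highest   -- version this side sent in its HELLO
    peer_highest    -- version received in the peer's HELLO
    local_supported -- set of versions this side can actually speak
                       (hypothetical parameter for this sketch)
    """
    # The candidate is the smaller of the two advertised versions.
    candidate = min(local_highest, peer_highest)
    # If this side cannot speak the candidate, no session is formed
    # (a real implementation would send a HELLO_FAILED error and close).
    if candidate in local_supported:
        return candidate
    return None


if __name__ == "__main__":
    # A controller that only speaks 1.3 meets a 1.0-only switch.
    result = negotiate_version(0x04, 0x01, {0x04})
    print("negotiated:", OFP_WIRE_VERSIONS.get(result, "none (session fails)"))
```

Because the match structure changed incompatibly in version 1.2, a controller that implements only the newer encoding cannot simply fall back to the lower candidate version, which is why the mismatched pair in the usage example ends without a session.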