15

Platforms Are Infrastructures on Fire

Paul N. Edwards

Highways, electric power grids, the internet, telephones, pipelines, railroads: we call these things “infrastructures.” They’re the large-scale, capital-intensive essential systems that underlie modern societies and their economies. These infrastructures took decades to develop and build, and once established, they endure for decades, even centuries. Infrastructures may be slow, but they don’t burn easily.

Once you have a few infrastructures in place, you can build others “on top” of them by combining their capabilities. UPS, FedEx, and similar services join air, rail, and trucking to deliver packages overnight, using barcodes and computerized routing to manage the flow. National 911 services deploy telephone and radio to link police, fire, ambulance, and other emergency services. The internet is a gigantic network of computer networks, each one built separately. These second-order infrastructures seem to present a different temporality—a different sense and scale of time—in many cases due to the rise of networked software platforms. Today’s platforms can achieve enormous scales, spreading like wildfire across the globe. As Facebook and YouTube illustrate, in just a few years a new platform can grow to reach millions, even billions, of people. In cases such as Airbnb and Uber, platforms set old, established systems on fire—or, as their CEOs would say, “disrupt” them. Yet platforms themselves burn much more readily than traditional infrastructures; they can vanish into ashes in just a few years. Remember Friendster? It had 115 million users in 2008. What about Windows Phone, launched in 2010? Not on your radar? That’s my point. Platforms are fast, but they’re flammable.

In this chapter I argue that software platforms represent a new way of assembling and managing infrastructures, with a shorter cycle time than older, more capital-intensive counterparts. I then speculate about the future of platform temporalities, drawing on examples from apartheid South Africa and contemporary Kenya. These examples suggest that African infrastructures, often portrayed as backward or lagging, may instead represent global futures—leapfrogging over the slower, heavier processes of more typical infrastructure.

Temporalities of Infrastructure

“Infrastructure” typically refers to mature, deeply embedded sociotechnical systems that are widely accessible, shared, and enduring. Such systems are both socially shaped and society-shaping. Major infrastructures are not optional; basic social functions depend on them. Many are also not easily changed, both because it would be expensive and difficult to do so, and because they interact with other infrastructures in ways that require them to remain stable. Archetypal infrastructures fitting this definition include railroads, electric power grids, telephone networks, and air traffic control systems. A large subfield of infrastructure studies, comprising history, anthropology, sociology, and science and technology studies, has traced many aspects of these systems.1

Susan Leigh Star, a sociologist of information technology, famously asked, “When is an infrastructure?” Her question calls out the ways infrastructure “emerges for people in practice, connected to activities and structures.” Systems rarely function as infrastructure for everyone all of the time, and one person’s smoothly functioning infrastructure may be an insurmountable barrier to another, as sidewalks without curb cuts are to people in wheelchairs.2 Here I want to ask related but different questions: How fast is an infrastructure? What are the time frames of infrastructure, as both historical and social phenomena?

A temporal pattern is clearly visible in the case of “hard” physical infrastructures such as canals, highways, and oil pipelines. Following an initial development phase, new technical systems are rapidly built and adopted across an entire region, until the infrastructure stabilizes at “build-out.” The period from development to build-out typically lasts between thirty and one hundred years.3 This consistent temporal range is readily explained by the combination of high capital intensity; uncertainty of returns on investment in the innovation phase; legal and political issues, especially regarding rights-of-way; and government regulatory involvement.

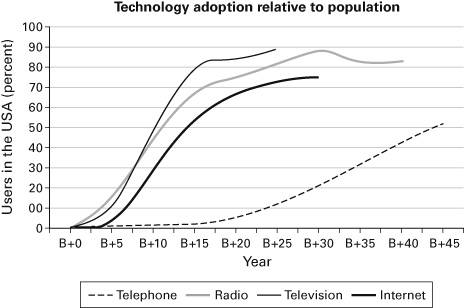

Figure 15.1 Technology adoption relative to population in the United States, starting from base year B defined as the year of commercial availability: telephone 1878, radio 1920, television 1945, and internet 1989. B+5 is 5 years after commercialization, and so on. (Source: Graph after Gisle Hannemyr, “The Internet as Hyperbole: A Critical Examination of Adoption Rates,” Information Society 19, no. 2 [2003], figure 2, extended with additional data from the Pew internet survey.)

Major communication infrastructures display a similar pattern. On one account, radio, television, and the internet all took about twenty years to reach 80 percent of the US population (figure 15.1),4 but these time lines might be closer to thirty to forty years if the innovation and early development phases were included. The telephone network took much longer, but it was the first personal telecommunication system, and unlike the other three, it required laying landlines to every home and business. The rapid spread of radio and television resulted in part from the lesser capital intensity of their original physical infrastructure, which reached thousands of receivers through a single broadcast antenna. Later, of course, cable television required large capital investments of the same order as landline telephony. The internet relied mainly on preexisting hardware, especially telephone and TV cables, to connect local and wide-area computer networks to each other.

As they mature, infrastructures enter another temporality, that of stability and endurance. Major infrastructures such as railroads, telephone networks, Linnaean taxonomy, interstate highways, and the internet last for decades, even centuries. They inhabit a temporal mesoscale, enduring longer than most people, corporations, institutions, and even governments.

Each new generation is thus born into a preexisting infrastructural landscape that presents itself as a quasi-natural background of lifeways and social organization.5 Infrastructure enrolls its inhabitants in its own maintenance, whether passively as consumers (whose payments support it) or actively as owners, maintainers, or regulators of infrastructural systems.6 Thus, infrastructures are “learned as part of membership” in communities, nations, and lifeworlds.7 The qualities of ubiquity, reliability, and especially durability create a nearly unbreakable social dependency—including the potential for social and economic trauma during episodes of breakdown, such as urban blackouts or major internet outages.

To answer the question “How fast is an infrastructure?”: historically, major infrastructures appear to have shared a thirty- to one-hundred-year growth trajectory. As they spread, they became deeply embedded in social systems and intertwined with other infrastructures, increasing human capabilities but simultaneously inducing dependency. Societies then found themselves locked into temporally indefinite commitments to maintain them. Yet infrastructures’ very invisibility and taken-for-grantedness have also meant that the financial and social costs of maintenance are borne grudgingly—and are frequently neglected until an advanced state of breakdown presents a stark choice between disruptive major repairs and even more disruptive total failure.8 Infrastructures are slow precisely because they represent major commitments of capital, training, maintenance, and other social resources.

Second-Order Large Technical Systems: Platforms as Fast Infrastructures

A third temporality appears when we consider what the sociologist Ingo Braun named “second-order large technical systems” (LTSs) built on top of existing infrastructures.9 Braun’s example was the European organ transplant network, which uses information technology to integrate emergency services, air transport, and patient registries to locate fresh donor organs, match them to compatible transplant patients, and deliver them rapidly across the continent. Other examples include the global supply chains of huge enterprises such as Walmart, Ikea, and Alibaba, emerging systems of trans-institutional electronic patient records, and large-scale “enterprise management” software.

Certain major infrastructures introduced since the 1970s are second-, third-, or nth-order LTSs. Software is the critical core element. For example, as the internet emerged and became publicly available from the mid-1980s through the 1990s, it relied mainly on existing equipment constructed for other purposes: computers, electric power, telephone lines, and TV cables. The critical elements that turned this hodgepodge of gear into “the internet” were software and standards: the TCP/IP protocols that govern how data is packetized and routed from one network to another, and the domain name system that governs internet addresses. Similarly, the World Wide Web rides “on top of” the internet. With rising demand for ever higher bandwidth, the internet has increasingly become a physical infrastructure project in its own right, requiring dedicated undersea cables, fiber-optic landlines, and server farms to handle exponentially increasing traffic—but telephone, TV cable, and cellular telephony, all originally installed as part of other infrastructures, remain the principal modes of last-mile delivery. The web, by contrast, is constructed entirely from standards, protocols (HTML, HTTP, etc.), and software such as web browsers, and it is filled with content from millions of sources, including individuals, firms, news agencies, and governments.

The currently popular vocabulary of “platforms” reflects the increasing importance of software-based second-order infrastructures. The origins of platform terminology, however, predate the role of software. In the early 1990s, management and organization studies researchers began to identify “platforms” as a generic product strategy applicable to almost any industry. For these scholars and practitioners, platforms are architectures comprising three key elements:

- Core components with low variability (the platform)

- Complementary components with high variability

- Interfaces that connect core and complementary components

The platform strategy lowers the cost of variation and innovation, because it avoids designing entirely new products to address related but different needs. A celebrated example is the Chrysler K-car platform (1981–1988), essentially a single chassis and drive train built to accommodate many different car and truck bodies. This approach dates to the early days of the American automobile industry, when Ford fitted its Model T chassis with bodies ranging from open touring cars to sedans to trucks. (There was even a snowmobile.) Successful platforms often attract ecosystems of smaller firms, with producers of complementary components and interfaces forming loose, “disaggregated clusters” around the producer of the core component.10 In the 1990s, management scholars promoted “platform thinking” as a generic corporate strategy.11

Also in the 1990s, the computer industry adopted the “platform” vocabulary, applying it agnostically to both hardware and software. Microsoft described its Windows operating system as a platform, while Netscape defined a “cross-platform” strategy (i.e., availability for all major computer operating systems) for its web browser. Some computer historians argue that the so-called IBM PC, introduced in 1981, should really be known as the “Microsoft-Intel PC”—or platform. Lacking control of its core components (Microsoft’s operating system and the Intel chips on which the OS ran), IBM rapidly lost dominance of the PC market to reverse-engineered clones of its design, manufactured by Compaq, Hewlett-Packard, Dell, and other firms. Arguably, IBM’s loss of control actually made the Microsoft-Intel PC platform even more dominant by driving down prices.12

Web developers soon extended the computer industry’s notion of platform to web-based applications, abandoning the previous model of purchased products in favor of subscriptions or rentals. As web guru Tim O’Reilly put it, in many cases today “none of the trappings of the old [packaged or purchased] software industry are present . . . No licensing or sale, just usage. No porting to different platforms so that customers can run the software on their own equipment.”13 For web-based “platforms,” the underlying hardware is essentially irrelevant. Web service providers own and operate servers, routers, and other devices, but the services themselves are built entirely in software: databases, web protocols, and the APIs (application program interfaces) that permit other pieces of software to interact with them.

APIs act like software plugs and sockets, allowing two or more pieces of unrelated software to interoperate. APIs are thus readily described as interfaces between core components (e.g., operating systems, browsers, Facebook) and complementary components (e.g., Android apps, browser and Facebook plug-ins). Using APIs, this modular architecture can be extended indefinitely, creating chains or networks of interoperating software. Since the early 2000s, the dramatic expansions of major web-based companies such as Google, Facebook, and Apple have demonstrated the power of the platform strategy.
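The plug-and-socket relationship can be made concrete with a toy example. The following is a minimal sketch, not a description of any real platform's API: the `Platform` class, its `register` and `run` methods, and the two example plug-ins are all hypothetical names chosen for illustration. The core component stays fixed, the complements vary, and the interface is simply the agreement that a plug-in accepts a string and returns a string.

```python
from typing import Callable, Dict


class Platform:
    """Hypothetical core component: stable, with low variability."""

    def __init__(self) -> None:
        # Registered complements, keyed by name.
        self._plugins: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        # The interface (the "socket"): any callable that takes a string
        # and returns a string can be plugged in.
        self._plugins[name] = handler

    def run(self, name: str, payload: str) -> str:
        # The core hands the payload to whichever complement was requested.
        return self._plugins[name](payload)


# Complementary components: high variability, written independently of the core.
platform = Platform()
platform.register("shout", lambda text: text.upper())
platform.register("reverse", lambda text: text[::-1])
```

Adding a third plug-in requires no change to `Platform` itself, which is the sense in which a platform strategy lowers the cost of variation.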

This detachment from hardware corresponds with a layering phenomenon commonly observed in computing: software depends on and operates within hardware, yet it can be described and programmed without any knowledge of or even any reference to that hardware. Higher-level applications are built on top of lower-level software such as networking, data transport, and operating systems. Each level of the stack requires the capabilities of those below it, yet each appears to its programmers as an independent, self-contained system.

The key ingredient for many platforms is user contribution: product and movie reviews, videos, posts, comments and replies, and so on. Platforms also invisibly capture data about users’ transactions, interests, and online behavior—data which can be used to improve targeted marketing and search results, or to serve more nefarious agendas. App developers also furnish content of their own, as well as functionality and alternative interfaces.

Digital culture scholar Tarleton Gillespie notes that social media companies such as YouTube and Facebook deploy the term “platform” strategically, using its connotations to position themselves as neutral facilitators and downplay their own agency. Recent public debates about the legal and regulatory status of Uber and Airbnb illustrate this strategy. Unlike taxi companies and hotels, these enterprises started with neither cars nor buildings, presenting themselves instead as platforms that “merely” connect car or property owners with potential customers. In this context, “platform” is both “specific enough to mean something, and vague enough to work across multiple venues for multiple audiences,” such as developers, users, advertisers, and (potentially) regulators.14

Thus, a key role of what we might call “platform discourse” is to render the platform itself as a stable, unremarkable, unnoticed object, a kind of empty stage, such that the activity of users—from social media posts to news, videos, reviews, connecting travelers with drivers and apartments for rent—obscures its role as the enabling background. As Sarah Roberts argues in this volume, platforms “operate on a user imagination of . . . unfettered self-expression, an extension of participatory democracy that provides users a mechanism to move seamlessly from self to platform to world.” Aspirationally, platforms are like infrastructures: widely accessible, reliable, shared, “learned as part of membership” in modern social worlds—and to this extent, no parent of teenagers possessed of (by?) smartphones could disagree.

Platforms and the “Modern Infrastructural Ideal”

City governments and planners of the mid-nineteenth century began to conceive cities as coherent units furnishing certain key services, such as roads, sewers, police, emergency services, and public transportation, as public goods, a vision the urban sociologists Stephen Graham and Simon Marvin call the “modern infrastructural ideal.”15 Some infrastructures originated as private enterprises; as the modern infrastructural ideal took hold, many became publicly regulated monopolies. Many national governments also provided and regulated railroads, highways, PTTs (post, telegraph, and telephone services), and other infrastructures, including the early internet.

The modern infrastructural ideal began to decline in the late 1970s, as neoliberal governments sought to shift public services to private enterprise. Rather than operate or oversee monopoly suppliers of public goods, these governments wanted to break up those monopolies so as to increase competition. As a corollary, they renounced the public-facing responsibilities implied by the modern infrastructural ideal.

The history of networked computing offers a striking view of this tectonic shift. In the 1960s, many analysts saw computer power as a significant resource that might be supplied by what they called a “computer utility.” Such a utility would own and operate huge computers; using the then-new technology of time-sharing, thousands of people could share these machines, just as electric utility customers share the output of huge electric power plants. Economies of scale, they argued, would reduce prices. The computer utility would provide, on demand, sufficient computer power for almost any task.16 At a time when a single computer could easily cost over $500,000 (in 2019 dollars), this argument made excellent sense. By the late 1960s, in fact, it became the business model for companies such as the very successful CompuServe. Industry observers traced out the logic of the modern infrastructural ideal: computer services would eventually become public, regulated monopoly utilities.17

Early internet history, from the late 1960s through the late 1980s, also traced the terms of the modern infrastructural ideal. Large government investments funded development and rollout, via the US Defense Department’s Advanced Research Projects Agency and later the US National Science Foundation (NSF). Justifications for this support were first military, later scientific, but always had a compelling public purpose in view. Exactly as predicted by the “computer utility” model, the NSF required universities it provisioned with supercomputers and networks to connect other, less-well-resourced institutions.18 The single most complete realization of the computer utility model was the French Minitel, introduced in 1980. Reaching 6.5 million French citizens by 1990, the government-owned and -operated Minitel used centralized servers communicating with dumb terminals over existing telephone lines. Minitel hosted (and profited from) numerous commercial services, but also offered free public services such as a national telephone directory. Minitel was explicitly developed as a public good, with terminals distributed at no cost to millions of French households and also available at post offices.19

Just as it did with other infrastructures, the United States mostly abandoned the public-good model of the internet in the late 1980s (although the federal government continues to regulate some aspects of internet services). The crucial steps in this splintering process were privatization of internet backbone lines (from 1987) and removal of restrictions on commercial use (from 1992). The history of networked computing can thus be seen as the transformation of a traditional monopoly infrastructure model into the deregulated, privatized, and splintered—we might say “platformized”—infrastructure model prevalent in many sectors today.20

To summarize, the rise of ubiquitous, networked computing and changing political sentiment have created an environment in which software-based platforms can achieve enormous scales, coexist with infrastructures, and in some cases compete with or even supplant them. With my colleagues Plantin, Lagoze, and Sandvig, I have argued elsewhere that two of today’s largest web firms, Google and Facebook, display characteristics of both platforms and infrastructures.21 Like platforms, they are second-order systems built “on top of” the internet and the web, and they provide little content of their own. Like infrastructures, they have become so important and so basic to daily life and work in large parts of the world that their collapse would represent a catastrophe. Further, these firms have invested substantially in physical systems, such as undersea cables surrounding the African continent.22

As Nathan Ensmenger shows in this volume, “the cloud” is really a factory: not just virtual but also physical and human. Platforms are no exception. Each one requires servers, routers, data storage, miles of cable, physical network connections, and buildings to house them all. Each involves a human organization that includes not only programmers but also accountants, maintainers, customer service people, janitors, and many others. And as Tom Mullaney observes in the introduction, like the assembly lines of the early twentieth century (and like most traditional infrastructures), physical fire—burning fossil fuels—powers much of their activity (though Google and Apple, in particular, have invested heavily in wind, solar, and geothermal power, aiming for 100 percent renewable balance sheets across their operations in the very near future).23

Yet the cloud also differs from factories in a key respect: because they are essentially made from code, platforms can be built, implemented, and modified fast. And as nth-order systems, their capital intensity is low relative to infrastructures such as highways, power grids, and landline cables. My argument is that this flexibility and low capital intensity give platforms a wildfire-like speed, as well as an unpredictability and ephemerality that stem from these same characteristics: competing systems can very quickly supplant even a highly successful platform, as Facebook did Myspace. Far from being stable and invisible, software platforms are mostly in a state of constant flux, including near-daily states of emergency (data breaches, denial-of-service attacks, spam). In major cases, such as Twitter and Facebook since 2016, their states of emergency are principal subjects of national and international politics, news, and anguished conversation. Platforms are infrastructures on fire.

Three Platforms in African Contexts

In this section, I trace the histories of three platforms significant to various African contexts. FidoNet, the “poor man’s internet,” became the first point of contact with electronic mail in many African countries; here I focus on its role in South Africa, where it helped anti-apartheid activists communicate with counterparts both inside and outside the country. M-Pesa, a mobile money system first rolled out in Kenya in 2007, is already used by the majority of Kenyans; it is fast emerging as a parallel financial infrastructure outside the traditional banking system. Finally, Facebook’s low-bandwidth “Free Basics” (a.k.a. internet.org) platform for mobile phones has already become a staple of daily life for some 95 million Africans.

FidoNet

FidoNet began in the 1980s as computer networking software supporting the Fido Bulletin Board System (BBS) for personal computers. Like other bulletin board services, it allowed users to send email, news, and other text-only documents to other FidoNet users. By the late 1980s, it could connect to the emerging internet by means of UUCP (Unix-to-Unix copy) gateways. Created in San Francisco in 1984 by the Fido BBS developer, Tom Jennings, FidoNet ran over telephone lines using modems. In that year, the AT&T monopoly had just been unbundled, but despite the emergence of competitors, long-distance telephone calls remained quite expensive in the United States. All providers charged differential rates: highest during the business day, lowest usually between eleven p.m. and seven a.m. The FidoNet approach took advantage of these differential rates to exchange messages in once-daily batches during a late-night “zonemail hour,” dependent on time zone and region. While many FidoNet nodes remained open at other times, zonemail hour ensured that all nodes could take advantage of the lowest-cost alternative.
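The economics of zonemail hour can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not FidoNet's actual mailer software or packet format: the `Node` class, the `exchange` function, and the choice of 3 a.m. as the exchange hour are hypothetical. It shows only the general idea that mail is queued locally at no cost and then forwarded in a single batch per destination during the cheap-rate window.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Assumption for illustration: the exchange hour falls at 3 a.m. local time,
# inside the low-rate window between eleven p.m. and seven a.m.
ZONEMAIL_HOUR = 3


@dataclass
class Node:
    name: str
    # Queued outbound mail as (destination node name, message text) pairs.
    outbox: List[Tuple[str, str]] = field(default_factory=list)
    inbox: List[str] = field(default_factory=list)

    def compose(self, destination: str, text: str) -> None:
        # Store: composing mail places no telephone call.
        self.outbox.append((destination, text))


def exchange(nodes: Dict[str, Node], hour: int) -> int:
    """Forward all queued mail if it is zonemail hour.

    Returns the number of calls placed (one per sender-destination pair).
    """
    if hour != ZONEMAIL_HOUR:
        return 0
    calls = 0
    for node in nodes.values():
        destinations = {dest for dest, _ in node.outbox}
        for dest in destinations:
            # One call carries the whole batch for this destination.
            batch = [text for d, text in node.outbox if d == dest]
            nodes[dest].inbox.extend(batch)
            calls += 1
        node.outbox.clear()
    return calls
```

In this model, two messages queued for the same destination during the day cost one late-night call rather than two daytime calls, which is the saving the batch approach was designed to capture.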

Dozens of other networking systems were also developed in the mid-1980s. A 1986 review article listed FidoNet alongside some twenty-six other “notable computer networks,” as well as six “metanetworks” such as the NSFNET.24 These included Usenet, BITNET (an academic network), and the fledgling internet itself. Each of these deployed its own unique addressing and communication techniques, leading to a cacophony of largely incompatible standards. Only in the 1990s did the internet’s TCP/IP protocols become the dominant norm.25

Usenet, BITNET, NSFNET, and the internet were initially populated mainly by university faculty and students working on time-shared mainframes and minicomputers, using dumb terminals rather than personal computers (PCs). As PCs spread in the 1980s, commercial “networks” such as Prodigy (founded 1984) and America Online (circa 1985 as an online services provider) also sprang up, aimed at private individuals and small businesses. In practice, these walled-garden online services operated like large-scale, centralized BBSs, rather than like networks in today’s sense of the term. Most of them deliberately prevented users from communicating with other computer networks, which they viewed as a threat to their revenue. FidoNet presented a low-budget, decentralized alternative to these commercial and academic networks and online services.

The FidoNet software was designed specifically for small local operators using only PCs as hosts (i.e., servers). Originally written for Microsoft’s MS-DOS PC operating system, it was also eventually ported to other personal computer types, as well as to Unix, MVS, and other minicomputer operating systems. However, DOS-based PCs always comprised the large majority of FidoNet nodes, especially outside the US and Europe.26 FidoNet had its own email protocols, netmail and echomail. Netmail was designed for private email, but “due to the hobbyist nature of the network, any privacy between sender and recipient was only the result of politeness from the owners of the FidoNet systems involved in the mail’s transfer.”27 Echomail performed essentially the same function as UUCP: it copied large groups of files from one node to another. This capability could be used both to send batches of many email messages destined for individuals and to broadcast news automatically to other FidoNet nodes.

FidoNet became the base for a PC-based, grassroots collective of local BBS operators who sought to develop long-distance email and shared news services. Unlike most of the commercial services and academic networks, FidoNet software was freely distributed and required no particular affiliation. Many operators charged fees to support their operations and recover the costs of the nightly long-distance calls, but in general these fees were low. Randy Bush, a major promoter of FidoNet, emphasized the “alternative infrastructure” aspect of this work: “From its earliest days, FidoNet [was] owned and operated primarily by end users and hobbyists more than by computer professionals. . . . Tom Jennings intended FidoNet to be a cooperative anarchism [sic] to provide minimal-cost public access to email.” A quasi-democratic governance system evolved in which node operators elected local, regional, and zone coordinators to administer the respective levels.

As for the technical underpinnings of this “anarchism,” Bush wrote:

Two very basic features of FidoNet encourage this. Every node is self-sufficient, needing no support from other nodes to operate. But more significant is that the nodelist contains the modem telephone number of all nodes, allowing any node to communicate with any other node without the aid or consent of technical or political groups at any level. This is in strong contrast to the uucp network, BITNET, and the internet.28

In addition to the all-volunteer, anarchical quality of the organization and its technical standards, developers’ commitment to keeping costs low soon gave FidoNet a reputation as “the poor man’s internet.” Starting in 1986, FidoNet administrators published a series of simple standards documents. These allowed distant participants with relatively minimal computer skills to set up FidoNet nodes.

The number of FidoNet nodes grew rapidly. Starting at twelve nodes in 1984, it hit 10,000 nodes in 1990, mushrooming to nearly 40,000 nodes in 1996. It then declined, nearly symmetrically with its growth, dropping below 10,000 nodes again in 2004 as a direct result of the spread of internet protocols. Although some 6,000 nodes were still listed in 2009, these saw little traffic. Today the network exists only as a nostalgia operation.29

In the mid-1980s, progressive nongovernmental organizations (NGOs) began to build their own dial-up bulletin board systems, such as GreenNet (UK), EcoNet/PeaceNet (California), and many others. These activist networks soon allied and consolidated, forming in 1986 the Institute for Global Communications based in the USA, and in 1990 the much larger Association for Progressive Communications (APC), whose founders included NGO networks in Brazil and Nicaragua as well as the Global North. The critical importance to activists of creating and running their own online networks—as well as the chaotic diversity of the online world emerging in the late 1980s—was captured by one of APC’s founders, writing in 1988:

Countless online commercial services [offer] data bases [sic] of business, academic, government and news information. DIALOG, World Reporter, Data Star, Reuters, Dow Jones. You can easily spend up to $130 (£70) an hour on these services (GreenNet costs £5.40 an hour, PeaceNet costs $10 an hour peak—half at night or weekends). Then there are the information supermarkets—The Source or Compuserve in the US, Telecom Gold in the UK—offering electronic mail, stock market information, and conferencing to the general public. Finally, there are the non-profit academic and special interest networks—Bitcom, Janet, Usenet, MetaNet. All of these data bases and networks—except the last group—are owned and operated by large corporations.

How are the APC networks different? They are a telecommunications service closely linked to citizen action. They are non-profit computer networks connecting over 3000 users and 300 organizations working for the future of our planet. They enable immediate, cost-effective exchange of information between people in 70 countries . . . working on peace, environmental, economic development, and social justice issues. Their present operation, history, and future development have no real parallels in the communications industry. . . . [APC] email facilities include . . . gateways for sending messages to users on more than twenty commercial and academic networks. . . .30

By the late 1980s, numerous NGOs in the Global South had joined this movement. Many used FidoNet to link with APC networks. In 1990, in London, GreenNet’s Karen Banks set up the GnFido (GreenNet Fido) gateway node, which translated between FidoNet protocols and the UUCP protocol widely used in the Global North; her work made it possible for African, South Asian, and Eastern European NGOs to use FidoNet to communicate directly with internet users.31 Banks maintained GnFido until 1997; in 2013, this work earned her a place in the Internet Hall of Fame alongside FidoNet promoter Randy Bush and South Africa NGONet (SANGONet) founder Anriette Esterhuysen.

FidoNet standards had many advantages for developing-world conditions:

The FidoNet protocol was a particularly robust software [sic], which made it very appropriate for use in situations where phone line quality was poor, electricity supply was unreliable, costs of communications were expensive, and where people had access to low specification hardware. . . . FidoNet provided very high data compression, which reduced file size and therefore reduced transmission costs . . . and it was a “store-and-forward” technology (meaning people could compose and read their email offline, also reducing costs).32
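The cost logic of store-and-forward combined with compression can be illustrated with a short sketch. It does not reproduce FidoNet's actual protocol or packet format: the `Outbox` class, the sample messages, the address, and the use of Python's `zlib` module are all assumptions, chosen only to show how composing mail offline and sending one compressed batch shortens the paid phone call.

```python
import zlib


class Outbox:
    """Hypothetical offline mail queue; not FidoNet's real packet format."""

    def __init__(self):
        self.pending = []

    def compose(self, recipient, body):
        # Messages are written offline: no call is open, so no cost accrues.
        self.pending.append(f"To: {recipient}\n\n{body}")

    def build_batch(self):
        # Bundle every queued message and compress the bundle once,
        # so that a single short call can carry all of them.
        raw = "\x00".join(self.pending).encode("utf-8")
        return raw, zlib.compress(raw, 9)


def unpack_batch(packed):
    # The receiving node reverses the process after the call has ended.
    return zlib.decompress(packed).decode("utf-8").split("\x00")


outbox = Outbox()
for i in range(20):
    # Invented sample text standing in for NGO correspondence.
    outbox.compose("partner@example.org", f"Report {i}: " + "water project update. " * 30)

raw, packed = outbox.build_batch()
print(len(raw), "bytes queued;", len(packed), "bytes actually transmitted")
```

Because per-minute telephone charges scale with transmission time, fewer bytes on the line and a single batched call translate fairly directly into lower costs, which is the advantage the passage above describes.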

These were not the first uses of FidoNet in Africa, however. In the late 1980s, South African computer scientist Mike Lawrie, working at Rhodes University in Grahamstown, met Randy Bush at a conference in the United States and learned about FidoNet. He convinced Bush to help him and his colleagues communicate with counterparts outside the country. At that time, due to international sanctions against the apartheid government, South Africa was prohibited from connecting directly to the internet. But FidoNet had gateways to the UUCP network, which could be used to transmit email to internet users. The necessary conversions and addressing schemes were not exactly simple; Lawrie details a twelve-step process for sending and receiving internet mail via FidoNet to UUCP. Bush allowed the Rhodes group to place calls to the FidoNet node at his home in Oregon. Through that node, they could then access the internet; for about a year, this was South Africa’s only email link to the USA.33

The attractions of the low-tech FidoNet platform and its anarchist political culture ultimately proved insufficient to maintain FidoNet as an alternative infrastructure. Instead, the desire to communicate across networks led to FidoNet’s demise. Like the Rhodes University computer scientists, the activist networks soon created “gateways” or hubs that could translate their UUCP traffic into the previously incompatible format used by FidoNet (and vice versa), enabling worldwide communication regardless of protocol.

FidoNet’s temporal profile thus looks very different from that of an infrastructure like a highway network. Its phase of explosive growth lasted just five years, from about 1991 to 1996. Its decline was nearly as rapid. By 2010 it was all but dead. As a second-order communication system based on PCs and long-distance telephony, capable of connecting to the internet but using its own “sort of” compatible addressing scheme, FidoNet was rapidly replaced as internet protocols and services spread throughout the world. Yet due to its simplicity and low cost, for over a decade FidoNet played a disproportionate role in connecting the African continent to the rest of the world via email. For many African users, FidoNet was the only alternative. This “poor man’s internet” served them, however briefly, as infrastructure.

M-Pesa

M-Pesa is a cellphone-based mobile money system, first rolled out in Kenya in 2007 by mobile phone operator Safaricom. (“Pesa” means “money” in Swahili.) When clients sign up for M-Pesa, they receive a new SIM card containing the M-Pesa software. Clients “load” money onto their phones by handing over cash to one of the 160,000+ authorized M-Pesa agents. Friends, relatives, or employers can also load money to the client’s phone. Clients can use the money to buy airtime or to pay bills at hundreds of enterprises.

To date, however, clients have used M-Pesa principally for “remittances,” the common practice of wage-earning workers sending money to family or friends elsewhere. M-Pesa offers a safe, secure method of storing money in an environment where many workers are migrants supporting family members in remote areas and robbery is common, especially when traveling. Businesses now use M-Pesa for mass payments such as payrolls—not only a major convenience but also a far more secure method than cash disbursements. The service charges small fees (a maximum of US$1–3) for sending money or collecting withdrawals from an agent. Safaricom earns more on large business transactions.

M-Pesa has enjoyed enormous success in Kenya. By 2011, just four years after it was launched, over thirteen million users—representing some 70 percent of all Kenyan households—had signed up.34 Other mobile phone companies quickly mounted their own mobile money systems, but none have gained nearly as much traction. According to Safaricom, in 2015 some 42 percent of the country’s $55 billion GDP passed through the system.35 By the end of 2018 M-Pesa subscribers in Kenya numbered 25.5 million—half the country’s total population.

While M-Pesa originally focused on domestic transfers among Kenyans, Safaricom and part-owner Vodacom (South Africa) have since expanded the business to seven other African countries. M-Pesa also operates in Romania and Afghanistan, as well as in India, one of the world’s largest markets for such services. Given that international remittances constitute a $300 billion global business, prospects seem very bright for a low-cost, user-friendly mobile money system that can operate on even the simplest cellphones.

The M-Pesa project began in 2003 with a handful of individuals at London-based Vodafone, as a response to the United Nations’ Millennium Development Goals. Supported in part by “challenge funds” from the UK government for development work, these developers formed a partnership with Sagentia, a small British technology consulting group (now known as Iceni Mobile), and Faulu Kenya, a microfinance firm that would furnish a business test bed. Just as with FidoNet, these designers sought simplicity and extreme low cost. Project leaders Nick Hughes and Susie Lonie, in their account of its early days, note that “the project had to quickly train, support, and accommodate the needs of customers who were unbanked, unconnected, often semi-literate, and who faced routine challenges to their physical and financial security.”36

Since M-Pesa would have to run on any cellphone, no matter how basic, the hardware platform chosen for M-Pesa was the lowly SIM card itself. The software platform was the SIM Application Toolkit, a GSM (Global System for Mobile Communications) standard set of commands built into all SIM cards. These commands permit the SIM to interact with an application running on a GSM network server, rather than on the SIM or the handset, neither of which has enough computing power or memory for complex tasks. PINs would protect cellphone owners, while agents would confirm transactions using special phones furnished by Safaricom. The simple, menu-driven system operates much like SMS.
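The division of labor described here, with a minimal client on the SIM and all real processing on a network server, can be sketched in a few lines. This is a toy model only: it does not use actual SIM Application Toolkit commands or Safaricom's software, and the class, function names, phone numbers, PINs, and response messages are hypothetical.

```python
class MobileMoneyServer:
    """Hypothetical stand-in for the application on the network server."""

    def __init__(self):
        # phone number -> {"pin": str, "balance": int}
        self.accounts = {}

    def register(self, phone, pin, balance=0):
        self.accounts[phone] = {"pin": pin, "balance": balance}

    def handle(self, phone, pin, choice, **args):
        # Every rule (PIN check, balance check, transfer) is enforced here.
        account = self.accounts.get(phone)
        if account is None or account["pin"] != pin:
            return "Wrong PIN"
        if choice == "balance":
            return f"Balance: {account['balance']}"
        if choice == "send":
            to, amount = args["to"], args["amount"]
            if to not in self.accounts:
                return "Unknown recipient"
            if amount <= 0:
                return "Invalid amount"
            if amount > account["balance"]:
                return "Insufficient funds"
            account["balance"] -= amount
            self.accounts[to]["balance"] += amount
            return f"Sent {amount} to {to}"
        return "Unknown menu option"


def sim_menu_request(server, phone, pin, choice, **args):
    # The SIM-side step only forwards the user's menu selection and PIN;
    # it stores no balances and performs no computation of its own.
    return server.handle(phone, pin, choice, **args)


server = MobileMoneyServer()
server.register("0700000001", pin="1234", balance=1000)
server.register("0700000002", pin="9999")
print(sim_menu_request(server, "0700000001", "1234", "send", to="0700000002", amount=300))
print(sim_menu_request(server, "0700000002", "9999", "balance"))
```

Keeping the handset side this thin is what allows such a system to run on even the most basic phone: the device needs only to display a menu and pass along the selection.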

Since many customers would be making cash transfers to remote locations, Safaricom established an extensive country-wide network of agents (mostly existing Safaricom cellphone dealers), as well as methods for ensuring that agents neither ran out of cash nor ended up with too large an amount of it on hand. Training these agents to handle the flow of cash and maintain PIN security proved challenging, especially in remote areas, but they eventually caught on, as did users.

Businesses soon began using the system as well, but smooth, standardized techniques for batch-processing large numbers of transactions remained elusive. Every change to Safaricom’s software caused breakdowns in this process and required businesses to rework their own methods, causing them to clamor for a genuine API. A 2012 assessment of M-Pesa noted that the eventual API performed poorly, but also that

a mini-industry of software developers and integrators has started to specialize in M-Pesa platform integration. . . . These bridge builders fall into two broad categories: 1) those that are strengthening M-Pesa’s connections with financial institutions for the delivery of financial products, and 2) those that are strengthening M-Pesa’s ability to interoperate with other mobile and online payment systems. The lack of a functional M-Pesa API is hindering bridge-building, but several companies have devised tools for new financial functions and online payments nonetheless.37

This trajectory reflects the typical platform pattern of development. Once established, the core component, namely the M-Pesa software, acquired a number of developers building complementary components. Other developers built better interfaces between the core and the complementary components, leading to M-Pesa-based financial services such as pension schemes, medical savings plans, and insurance offerings. This “mobile money ecosystem” is a kind of parallel universe to traditional banking.38 Indeed, today a Google search on M-Pesa categorizes it simply as a “bank.”

Is the short-cycle temporality of platforms and second-order systems the future of infrastructure? M-Pesa went from drawing board to multibillion-dollar business in less than ten years. More importantly for my argument, M-Pesa rapidly acquired the status of fundamental infrastructure for the majority of Kenyan adults, serving as a de facto national banking system. According to one specialist in mobile money, “Africa is the Silicon Valley of banking. The future of banking is being defined here. . . . It’s going to change the world.”39

Yet the jury is still out on its ultimate value for low-income Kenyans. A widely noted study concluded that between 2008 and 2014, the increase in “financial inclusion” afforded by mobile money lifted 194,000 households, or around 2 percent of Kenya’s population, out of poverty.40 However, this result has been strongly criticized as a “false narrative” that fails to account for such negative effects as increasing “over-indebtedness” among Kenyans, high costs for small transactions, and the incursion of social debts related to kinship structures which may outweigh any economic advantages.41

Facebook and WhatsApp

Facebook, which opened for business in 2004 and issued its IPO in 2012, is currently the globe’s sixth largest publicly traded company, with a market value of over $510 billion in 2019. With more than 2.4 billion monthly active users—nearly one-third of the world’s total population—it is currently the world’s largest (self-described) “virtual community.” At this writing in 2019, Facebook founder Mark Zuckerberg is all of thirty-five years old.

Facebook presents itself as the ultimate platform, filled largely with content provided by users. Early on, Facebook became an alternative to internet email for many users—especially younger people, whose principal online communications were with their friends and peer groups rather than the wider world. While users’ photos, videos, and reports of daily events make up much of Facebook’s content, a large and increasing amount of it is simply forwarded from online publishers and the open web.

Facebook is deliberately designed to keep its customers within the platform. Once web content is posted to Facebook, it can readily be viewed there, but additional steps are required to reach the original web source. Through its APIs, Facebook also brings third-party apps, games, news, and more recently paid advertising directly into its closed universe. Meanwhile, private Facebook posts cannot be crawled by third-party search engines, though the company offers its own, rather primitive search facilities. As many commentators have observed, Facebook appears to be creating a “second internet,” a parallel universe largely inaccessible to Google and other search engines, reachable only through Facebook. The sociologist of information Anne Helmond has called this the “platformization of the web,” while others have labeled Facebook’s goal “internet imperialism.”42

As mentioned above, in 2011 Facebook began offering a low-end version of its software that required little or no data service. The initial release was aimed at “feature phones” (an intermediate, image-capable level between basic phones, with voice and text only, and the more expensive smartphones). Over the next four years, this project underwent a series of transformations, all reflecting the complex tensions among (a) Facebook’s goal of attracting users and capturing their data, (b) the technical constraints of developing-world devices and mobile providers, and (c) users’ and governments’ interest in access to the full, open World Wide Web.

In 2013, Zuckerberg declared internet connectivity a “human right” and set a goal of making “basic internet services affordable so that everyone with a phone can join the knowledge economy.” To make this possible, he argued, “we need to make the internet 100 times more affordable.”43 The company launched the deceptively named internet.org, a shaky alliance with six other firms (Samsung, Ericsson, MediaTek, Opera, Nokia, and Qualcomm) seeking to create free internet access for the entire developing world. Internet.org launched four of its first five projects in African nations: Zambia, Tanzania, Kenya, and Ghana. The organization sought to define and promote standards for low-bandwidth web content; for example, Java and Flash cannot be used. Only websites that met these standards would be delivered by the platform envisaged for internet.org’s end users.

Zuckerberg made no secret of his ultimate goal. As a 2014 article in TechCrunch put it,

The idea, [Zuckerberg] said, is to develop a group of basic internet services that would be free of charge to use—“a 911 for the internet.” These could be a social networking service like Facebook, a messaging service, maybe search and other things like weather. Providing a bundle of these free of charge to users will work like a gateway drug of sorts—users who may be able to afford data services and phones these days just don’t see the point of why they would pay for those data services. This would give them some context for why they are important, and that will lead them to paying for more services like this—or so the hope goes.44

In 2014, internet.org held a “summit” in New Delhi, where Zuckerberg met with Indian Prime Minister Narendra Modi to promote the project. In May 2015, the coalition announced its intent to embrace an “open platform” approach that would not discriminate among third-party services or websites (provided they met the standards mentioned above).

However, under pressure from local operators suspicious of Facebook imperialism, India’s Telecom Regulatory Authority ultimately banned the program—now called “Free Basics”—in 2016, on net neutrality grounds. The regulator characterized the free service as a grab for customers using zero-rating (i.e., no-cost provision) as bait. The World Wide Web Foundation immediately applauded India’s decision, writing that “the message is clear: We can’t create a two-tier internet—one for the haves, and one for the have-nots. We must connect everyone to the full potential of the open Web.”45 Many others have since criticized the platform’s “curated, America Online-esque version of [the internet] where Facebook dictates what content and services users get to see.”46

The net neutrality controversy notwithstanding, the feature-phone version of Facebook’s software rapidly attracted a large user base. In 2016, 20 percent of all active Facebook users accessed the platform on feature phones.47 Some 95 million Africans were using this version of the platform, and in South Africa at least, this became a dominant mode. Although YouTube and other social media systems also have a presence, Facebook dominates the continent by far. Even in 2018, feature phones still accounted for well over half of African mobile phone sales, despite increasing uptake of smartphones.

To date, Facebook’s “internet” service in the developing world remains a vacillating and conflicted project. Much like the America Online of the 1990s, an undercurrent of its plans has been to channel the open web through its closed platform, seeking to retain users within its grasp. Reporting on research by the NGO Global Voices and citing public protests in Bangalore, the Guardian quoted advocacy director Ellery Biddle: “Facebook is not introducing people to open internet where you can learn, create and build things. It’s building this little web that turns the user into a mostly passive consumer of mostly western corporate content. That’s digital colonialism.”48

On the other hand, when confronted on this point, Zuckerberg has repeatedly changed course in favor of greater openness. He insists that full web access for all is the ultimate goal—albeit according to a set of standards that drastically reduce bandwidth demands, an entirely reasonable vision given current technical capacities and constraints. In the long run, Facebook certainly hopes to profit from “bottom of the pyramid” customers, but in the interim, the platform may (at least for a time) provide a crucial public good—in other words, an infrastructure.

WhatsApp, launched independently in 2009 but acquired by Facebook in 2014, offers extremely cheap text messaging and, more recently, image and voice service as well. Its low cost made WhatsApp very popular in developing-world contexts. At this writing in 2019, it has over 1.6 billion users, the largest number after Facebook and YouTube.49

WhatsApp’s extremely low cost was made possible by a gap in most mobile operators’ pricing structure, related to the legacy SMS service still in widespread use. Rather than deliver messages as costly SMS, WhatsApp sends them as data. It does the same with voice calls, also normally charged at a higher rate than data. It thus exploits a kind of hole in the fee structure of cellular providers. South African operators sell data packages for as little as R2 (2 rand, or about $0.10). Using WhatsApp, the 10MB that this buys is enough to make a ten-minute voice call or to send hundreds of text messages. By contrast, at typical voice rates of about R0.60 per minute, R2 would buy only three minutes of voice, or four to eight SMS (depending on the size of the bundle purchased). These huge cost savings largely account for WhatsApp’s immense popularity.
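The comparison above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text; the variable names are illustrative, and the per-SMS price range is not stated in the text but back-computed here from the "four to eight SMS" that R2 is said to buy.

```python
# Figures quoted in the text (South African prepaid prices, in rand).
BUNDLE_PRICE_RAND = 2.0        # cost of the smallest data bundle
BUNDLE_SIZE_MB = 10            # data that bundle provides
CALL_MINUTES_PER_BUNDLE = 10   # WhatsApp voice minutes the bundle supports
VOICE_RATE_RAND = 0.60         # typical per-minute rate for an ordinary call

# Cost of one minute of voice carried as data over WhatsApp.
whatsapp_cost_per_minute = BUNDLE_PRICE_RAND / CALL_MINUTES_PER_BUNDLE

# Ordinary voice minutes that the same R2 would buy.
voice_minutes_for_r2 = BUNDLE_PRICE_RAND / VOICE_RATE_RAND

# How many times more expensive an ordinary voice minute is.
voice_to_whatsapp_ratio = VOICE_RATE_RAND / whatsapp_cost_per_minute

# Assumption: implied per-SMS price if R2 buys between eight and four SMS.
implied_sms_price_range = (BUNDLE_PRICE_RAND / 8, BUNDLE_PRICE_RAND / 4)

print(f"WhatsApp voice: R{whatsapp_cost_per_minute:.2f} per minute")
print(f"Ordinary voice: {voice_minutes_for_r2:.1f} minutes for R2")
print(f"Ordinary voice costs {voice_to_whatsapp_ratio:.1f} times as much per minute")
print(f"Implied SMS price: R{implied_sms_price_range[0]:.2f} to R{implied_sms_price_range[1]:.2f} each")
```

On these figures a WhatsApp voice minute costs about R0.20, roughly a third of the ordinary voice rate, while each SMS costs as much as one to two and a half minutes of WhatsApp calling.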

Although they can also be used via computers and wired internet services, Facebook Free Basics and WhatsApp services mainly target cellphones and other mobile devices, the fastest-growing segment of internet delivery, and by far the most significant in the developing world. Recently, researchers have adopted the acronym OTT (Over the Top) to describe software that runs “on top of” mobile networks—exactly the second-order systems concept introduced earlier in this chapter. Some cellular operators have begun to agitate for regulation of these systems, arguing that Facebook, WhatsApp, and similar platforms should help pay for the hardware infrastructure of cell towers, servers, and so on. Others retort that users already pay cellular operators for the data service over which these apps run.

The extremely rapid rise of Facebook and WhatsApp—from a few tens of thousands to well over one billion users in just a few years—again exemplifies a temporality very different from that of older forms of infrastructure. Like Uber and Airbnb, OTT systems do not own or invest in the physical infrastructure on top of which they run; their principal product is software, and their capital investment is limited to servers and internet routers. Competing apps such as Tencent QQ and WeChat, emerging from the dynamic Chinese market, may eventually displace Facebook and WhatsApp as the largest virtual “communities.”

Conclusion

In many parts of the world today, the modern infrastructural ideal of universal service and infrastructure stability through government regulation and/or stewardship has already crumbled. In others, including many African nations, it either died long ago (if it ever existed) or is disappearing fast. Not only corporate behemoths such as Google and Facebook but smaller entities such as Kenya’s Safaricom are taking on roles once reserved for the state or for heavily regulated monopoly firms. The speed with which they have done so—five to ten years, sometimes even less—is staggering, far outstripping the thirty- to one-hundred-year time lines for the rollout of older infrastructures.

Are the rapid cycle times of software-based systems now the norm? Are platforms and second-order systems—imagined, created, and provided by private-sector firms—the future of infrastructure? Will the swift takeoff of quasi-infrastructures such as M-Pesa be matched by equally swift displacement, as happened to FidoNet when internet protocols swept away its raison d’être? To me, history suggests an affirmative, but qualified, answer to all of these questions. My qualification is that the astoundingly rich tech giants clearly understand the fragile, highly ephemeral character of nearly everything they currently offer. To inoculate themselves against sudden displacement, they continually buy up hundreds of smaller, newer platform companies, as Facebook did with WhatsApp. This diversification strategy provides multiple fallbacks. Software platforms rise and fall, in other words, but the corporate leviathans behind them will remain.

If Africa is indeed “the Silicon Valley of banking,” perhaps we should look for the future of infrastructure there, as well as in other parts of the Global South. Yet despite the glory of its innovations and the genuine uplift it has brought to the lives of many, this future looks disconcertingly like a large-scale, long-term strategy of the neoliberal economic order. By enabling microtransactions to be profitably monetized, while collecting the (also monetizable) data exhaust of previously untapped populations, these systems enroll the “bottom of the pyramid” in an algorithmically organized, device-driven, market-centered society.

Notes

1. Thomas P. Hughes, Networks of Power: Electrification in Western Society, 1880–1930 (Baltimore: The Johns Hopkins University Press, 1983); Wiebe Bijker, Thomas P. Hughes, and Trevor Pinch, The Social Construction of Technological Systems (Cambridge, MA: MIT Press, 1987); Geoffrey C. Bowker and Susan Leigh Star, Sorting Things Out: Classification and Its Consequences (Cambridge, MA: MIT Press, 1999); Paul N. Edwards et al., Understanding Infrastructure: Dynamics, Tensions, and Design (Ann Arbor: Deep Blue, 2007); Christian Sandvig, “The Internet as an Infrastructure,” The Oxford Handbook of Internet Studies (Oxford: Oxford University Press, 2013).

2. Susan Leigh Star and Karen Ruhleder, “Steps Toward an Ecology of Infrastructure: Design and Access for Large Information Spaces,” Information Systems Research 7, no. 1 (1996): 112.

3. Arnulf Grübler, “Time for a Change: On the Patterns of Diffusion of Innovation,” Daedalus 125, no. 3 (1996).

4. Sources for figure 15.1: Gisle Hannemyr, “The Internet as Hyperbole: A Critical Examination of Adoption Rates,” Information Society 19, no. 2 (2003); Pew Research Center, “Internet/Broadband Fact Sheet” (June 12, 2019), https://www.pewinternet.org/fact-sheet/internet-broadband/. Pew survey data (originally expressed as percentage of the adult population) were adjusted by the author to correspond with percentage of the total population. Graph is intended to capture general trends rather than precise numbers.

5. Paul N. Edwards, “Infrastructure and Modernity: Scales of Force, Time, and Social Organization in the History of Sociotechnical Systems,” in Modernity and Technology, ed. Thomas J. Misa, Philip Brey, and Andrew Feenberg (Cambridge, MA: MIT Press, 2002).

6. Andrew Russell and Lee Vinsel, “Hail the Maintainers: Capitalism Excels at Innovation but Is Failing at Maintenance, and for Most Lives It Is Maintenance That Matters More” (April 7, 2016), https://aeon.co/essays/innovation-is-overvalued-maintenance-often-matters-more.

7. Bowker and Star, Sorting Things Out.

8. American Society of Civil Engineers, “2013 Report Card for America’s Infrastructure” (2014), accessed February 1, 2019, http://2013.infrastructurereportcard.org/.

9. Ingo Braun, “Geflügelte Saurier: Zur Intersystemische Vernetzung Grosser Technische Netze,” in Technik Ohne Grenzen, ed. Ingo Braun and Bernward Joerges (Frankfurt am Main: Suhrkamp, 1994).

10. Carliss Y. Baldwin and C. Jason Woodard, “The Architecture of Platforms: A Unified View,” Harvard Business School Finance Working Paper 09-034 (2008): 8–9.

11. Mohanbir S. Sawhney, “Leveraged High-Variety Strategies: From Portfolio Thinking to Platform Thinking,” Journal of the Academy of Marketing Science 26, no. 1 (1998).

12. Martin Campbell-Kelly et al., Computer: A History of the Information Machine (New York: Basic Books, 2014).

13. Tim O’Reilly, “What Is Web 2.0? Design Patterns and Business Models for the Next Generation of Software” (September 30, 2005), http://www.oreilly.com/pub/a/web2/archive/what-is-web-20.html.

14. Tarleton Gillespie, “The Politics of ‘Platforms,’” New Media & Society 12, no. 3 (2010).

15. Stephen Graham and Simon Marvin, Splintering Urbanism: Networked Infrastructures, Technological Mobilities and the Urban Condition (New York: Routledge, 2001).

16. Martin Greenberger, “The Computers of Tomorrow,” Atlantic Monthly 213, no. 5 (1964).

17. Paul N. Edwards, “Some Say the Internet Should Never Have Happened,” in Media, Technology and Society: Theories of Media Evolution, ed. W. Russell Neuman (Ann Arbor: University of Michigan Press, 2010).

18. Janet Abbate, Inventing the Internet (Cambridge, MA: MIT Press, 1999).

19. William L. Cats-Baril and Tawfik Jelassi, “The French Videotex System Minitel: A Successful Implementation of a National Information Technology Infrastructure,” MIS Quarterly 18, no. 1 (1994).

20. Paul N. Edwards, “Y2K: Millennial Reflections on Computers as Infrastructure,” History and Technology 15 (1998); Brian Kahin and Janet Abbate, eds., Standards Policy for Information Infrastructure (Cambridge, MA: MIT Press, 1995).

21. J.-C. Plantin et al., “Infrastructure Studies Meet Platform Studies in the Age of Google and Facebook,” New Media & Society 10 (2016).

22. Yomi Kazeem, “Google and Facebook Are Circling Africa with Huge Undersea Cables to Get Millions Online,” Quartz Africa (July 1, 2019), https://qz.com/africa/1656262/google-facebook-building-undersea-internet-cable-for-africa/.

23. Gary Cook et al., Clicking Clean: Who Is Winning the Race to Build a Green Internet? (Washington, DC: Greenpeace Inc., 2017).

24. John S. Quarterman and Josiah C. Hoskins, “Notable Computer Networks,” Communications of the ACM 29, no. 10 (1986).

25. Edwards, “Some Say the Internet Should Never Have Happened.”

26. Randy Bush, “Fidonet: Technology, Tools, and History,” Communications of the ACM 36, no. 8 (1993): 31.

27. The BBS Corner, “The Fidonet BBS Network,” The BBS Corner (February 10, 2010), http://www.bbscorner.com/bbsnetworks/fidonet.htm.

28. Bush, “Fidonet.”

29. Data source: FidoNet nodes by year, Wikimedia Commons, https://commons.wikimedia.org/wiki/File:Fidonodes.PNG.

30. Mitra Ardron and Deborah Miller, “Why the Association for Progressive Communications Is Different,” International Communications Association (1988): 1.

31. Karen Higgs, ed., The APC Annual Report 2000: Looking Back on APC’s First Decade, 1990–2000 (Johannesburg, South Africa: Association for Progressive Communications, 2001), 13.

32. Karen Banks, “Fidonet: The ‘Critical Mass’ Technology for Networking with and in Developing Countries,” in The APC Annual Report 2000: Looking Back on APC’s First Decade, 1990–2000, ed. Karen Higgs (Johannesburg, South Africa: Association for Progressive Communications, 2001), 35.

33. Mike Lawrie, “The History of the Internet in South Africa: How It Began” (1997), http://archive.hmvh.net/txtfiles/interbbs/SAInternetHistory.pdf, 2–3.

34. Jake Kendall et al., “An Emerging Platform: From Money Transfer System to Mobile Money Ecosystem,” Innovations 6, no. 4 (2012): 51.

35. Eric Wainaina, “42% of Kenya GDP Transacted on M-Pesa and 9 Takeaways from Safaricom Results,” Techweez: Technology News & Reviews (May 7, 2015), http://www.techweez.com/2015/05/07/ten-takeaways-safaricom-2015-results/.

36. Nick Hughes and Susie Lonie, “M-Pesa: Mobile Money for the ‘Unbanked,’” Innovations 2 (2007); Sibel Kusimba, Gabriel Kunyu, and Elizabeth Gross, “Social Networks of Mobile Money in Kenya,” in Money at the Margins: Global Perspectives on Technology, Financial Inclusion, and Design, ed. Bill Maurer, Smoki Musaraj, and Ian V. Small (London: Berghahn Books, 2018).

37. Kendall et al., “An Emerging Platform,” 58.

38. Kendall et al., “An Emerging Platform.”

39. Killian Fox, “Africa’s Mobile Economic Revolution,” Guardian (July 24, 2011), https://www.theguardian.com/technology/2011/jul/24/mobile-phones-africa-microfinance-farming.

40. Tavneet Suri and William Jack, “The Long-Run Poverty and Gender Impacts of Mobile Money,” Science 354, no. 6317 (2016).

41. Milford Bateman, Maren Duvendack, and Nicholas Loubere, “Is Fin-Tech the New Panacea for Poverty Alleviation and Local Development? Contesting Suri and Jack’s M-Pesa Findings Published in Science,” Review of African Political Economy (2019); Kusimba, Kunyu, and Gross, “Social Networks of Mobile Money in Kenya.”

42. Anne Helmond, “The Platformization of the Web: Making Web Data Platform Ready,” Social Media + Society 1, no. 2 (2015).

43. Dara Kerr, “Zuckerberg: Let’s Make the Internet 100x More Affordable,” CNET (September 30, 2013), https://www.cnet.com/news/zuckerberg-lets-make-the-internet-100x-more-affordable/.

44. Ingrid Lunden, “WhatsApp Is Actually Worth More Than $19b, Says Facebook’s Zuckerberg, and It Was Internet.org That Sealed the Deal,” TechCrunch (February 24, 2014), http://techcrunch.com/2014/02/24/whatsapp-is-actually-worth-more-than-19b-says-facebooks-zuckerberg/. Emphasis added.

45. World Wide Web Foundation, “World’s Biggest Democracy Stands Up for Net Neutrality” (February 8, 2016), https://webfoundation.org/2016/02/worlds-biggest-democracy-bans-zero-rating/.

46. Karl Bode, “Facebook Is Not the Internet: Philippines Propaganda Highlights Perils of Company’s ‘Free Basics’ Walled Garden,” TechDirt (September 5, 2018), https://www.techdirt.com/articles/20180905/11372240582/facebook-is-not-internet-philippines-propaganda-highlights-perils-companys-free-basics-walled-garden.shtml.

47. Simon Kemp, We Are Social Singapore, and Hootsuite, “Digital in 2017: A Global Overview,” LinkedIn (January 24, 2017), https://www.linkedin.com/pulse/digital-2017-global-overview-simon-kemp.

48. Olivia Solon, “‘It’s Digital Colonialism’: How Facebook’s Free Internet Service Has Failed Its Users,” Guardian (July 27, 2017), https://www.theguardian.com/technology/2017/jul/27/facebook-free-basics-developing-markets.

49. Simon Kemp, “Q2 Digital Statshot 2019: Tiktok Peaks, Snapchat Grows, and We Can’t Stop Talking,” We Are Social (blog) (April 25, 2019), https://wearesocial.com/blog/2019/04/the-state-of-digital-in-april-2019-all-the-numbers-you-need-to-know.