16

Typing Is Dead

Thomas S. Mullaney

In 1985, economist Paul David published the groundbreaking essay “Clio and the Economics of QWERTY,” in which he coined the term “path dependency”—by now one of the most influential economic theories of the twentieth and twenty-first centuries. He posed the question: Given how remarkably inefficient the QWERTY keyboard is, how has it maintained its market dominance? Why has it never been replaced by other keyboard arrangements—arrangements that, he and others have argued, were superior? How does inefficiency win the day?1

David’s answer has become a mainstay of economic thought: that economies are shaped not only by rational choice—which on its own would tend toward efficiency—but also by the vagaries of history. Each subsequent step in economic history is shaped in part, or parameterized, by the actions of the past. Future paths are not charted in a vacuum—rather, they are “dependent” on the paths already taken to that point. This “dependency,” he argues, helps us account for the endurance of inferior options.

David’s choice of target—the QWERTY keyboard—must have struck many readers as iconoclastic and exhilarating at the time. He was, after all, taking aim at an interface that, by the mid-1980s, enjoyed over one century of ubiquity in the realms of typewriting, word processing, and computing. Although concerned primarily with economic history, David was also lifting the veil from his readers’ eyes and revealing, QWERTY is not the best of all possible worlds! Another keyboard is possible!

David was not alone in this iconoclasm. In the April 1997 issue of Discover magazine, Jared Diamond (of Guns, Germs, and Steel notoriety) penned a scathing piece about QWERTY, lambasting it as “unnecessarily tiring, slow, inaccurate, hard to learn, and hard to remember.” It “condemns us to awkward finger sequences.” “In a normal workday,” he continues, “a good typist’s fingers cover up to 20 miles on a QWERTY keyboard.” QWERTY is a “disaster.”2

When we scratch at the surface of this exhilarating anti-QWERTY iconoclasm, however, things begin to look less revolutionary, if not remarkably late in the game. Whereas a vocal minority of individuals in the Anglophone Latin-alphabetic world has been questioning the long-accepted sanctity of the QWERTY keyboard since the 1980s, those outside of the Latin-alphabetic world have been critiquing QWERTY for nearly one hundred years longer. It was not the 1980s but the 1880s when language reformers, technologists, state builders, and others across modern-day East Asia, South Asia, Southeast Asia, the Middle East, North Africa, and elsewhere began to ask: How can we overcome QWERTY?

Moreover, the stakes involved in these earlier, non-Western critiques were profoundly higher than those outlined in the writings of David and Diamond. If Anglophone critics lamented the prevalence of wrist strain, or the loss of a few words-per-minute owing to the “suboptimal” layout of QWERTY, critics in China, for example, had to contemplate much starker realities: that the growing dominance of keyboard-based QWERTY interfaces, along with countless other new forms of Latin-alphabet-dominated information technologies (telegraphy, Linotype, monotype, punched card memory, and more), might result in the exclusion of the Chinese language from the realm of global technolinguistic modernity altogether. Likewise, reformers in Japan, Egypt, India, and elsewhere had to worry about the fate of their writing cultures and the future of their countries. By comparison, David and Diamond merely wanted to juice a bit more efficiency from the keyboard.

The ostensible “solutions” to QWERTY raised by David, Diamond, and others also begin to look naïve when viewed from a global perspective. Their proposed solution to overcoming QWERTY was the adoption of an alternate keyboard layout—the favorite being one designed in 1932 by August Dvorak, a professor of education at the University of Washington who started working on his new layout around 1914. Like so many who have criticized QWERTY in the Western world, David and Diamond celebrated the relatively scientific disposition of letters on the Dvorak keyboard, citing it as a kind of emancipatory device that would free typists from the “conspiracy” and “culprit” of QWERTY, as later iconoclasts phrased it.3

Technologists and language reformers in the non-Western world knew better than this. They knew that to “overcome” QWERTY required confronting a global IT environment dominated by the Latin alphabet, and that this confrontation would require far deeper and more radical courses of action than the mere rearrangement of letters on an interface surface. Whether for Chinese, Japanese, Arabic, Burmese, Devanagari, or any number of other non-Latin scripts, one could not simply “rearrange” one’s way out of this technolinguistic trap. As we will see, the strategies adopted to overcome QWERTY were not restricted merely to the replacement of one keyboard layout for another but required the conquest of far deeper assumptions and structures of keyboard-based text technologies. Ultimately, these reformers would overcome typing itself, bringing us to a present moment in which, as my title contends, typing as we have long known it in the Latin-alphabetic world is dead.

Specifically, I will show how QWERTY and QWERTY-style keyboards, beginning in the age of mechanical typewriters and extending into the domains of computing and mobile devices, have excluded over one half of the global population in terms of language use. I will also show how the “excluded half” of humanity went on to transcend (and yet still use) the QWERTY and QWERTY-style keyboard in service of their writing systems through a variety of ingenious computational work-arounds. In doing so, I will demonstrate how the following pair of statements are able to coexist, even as they seemingly contradict one another:

- QWERTY and QWERTY-style keyboards and interfaces can be found everywhere on the planet, in use with practically every script in the world, including Chinese.

- QWERTY and QWERTY-style keyboards, as originally conceived, are incompatible with the writing systems used by more than half of the world’s population.

Explaining this paradox, and reflecting on its implications, are the goals of this chapter.

The False Universality of QWERTY

Before delving in, we must first ask: What exactly is the QWERTY keyboard? For many, its defining feature is “Q-W-E-R-T-Y” itself: that is, the specific way in which the letters of the Latin alphabet are arranged on the surface of the interface. To study the QWERTY keyboard is to try and internalize this layout so that it becomes encoded in muscle memory.

This way of defining QWERTY and QWERTY-style keyboards, however, obscures much more than it reveals. When we put aside this (literally) superficial definition of QWERTY and begin to examine how these machines behave mechanically—features of the machine that are taken for granted but which exert profound influence on the writing being produced—we discover a much larger set of qualities, most of which are so familiar to an English-speaking reader that they quickly become invisible. Most importantly, we learn that the supposed universalism of QWERTY—from the age of mechanical typewriting but continuing into the present day—is a falsehood. These features include:

Auto-Advancing

- At their advent, QWERTY-style keyboard typewriters were auto-advancing: when you depressed a key, the machine created an impression on the page and then automatically moved forward one space.

Monolinearity

- Letters fell on a single baseline (also known as monoline).

Isomorphism

- Letters did not change shape. (The shape and size of the letter “A” was always “A,” regardless of whether it followed the letter “C” or preceded the letter “X.”)

Isoposition

- Letters did not change position. (“A” was always located in the same position on the baseline, regardless of which other letters preceded or followed.)

Isolating

- Letters never connected. (The letter “a” and the letter “c,” while they might connect in handwritten cursive, never connected on a mechanical typewriter—each glyph occupied its own hermetically separate space within a line of text.)

Monospatial

- At the outset of typewriting, all letters occupied the same amount of horizontal space (whether typing the thin letter “I” or the wide letter “M”).

Unidirectional

- At the outset, all typewriters assumed that the script in use would run from left to right.

In addition to these many characteristics of the original QWERTY-style keyboard typewriters, there were also two deeper “logics” which are germane to our discussion:

Presence

- These machines abided by a logic of textual “presence,” meaning that users expected to find all of the components of the writing system in question—be it the letters of English, French, or otherwise—fully present in some form on the keys of the keyboard itself. When sitting down in front of an English-language machine, one expected the letters “A” through “Z” to be present on the keys, albeit not in dictionary order. By extension, when sitting down in front of a Hebrew typewriter or an Arabic typewriter, one expected the same of the letters alef (א) through tav (ת) and ‘alif (ا) through ya’ (ي). As seemingly neutral as this logic might appear to us at first, it is in fact a deeply political aspect of the machine, and one we will return to shortly.

Depression Equals Impression

- The second logic might be termed “what you type is what you get.” With the exception of the shift key, and a few others, the expectation is that, when one depresses a key, the symbol that adorns that key will be printed upon the page (or, in the age of computing, displayed on the screen). Depress “X” and “X” is impressed. Depress “2” and “2” appears. And so forth.4

Taken together, these features of QWERTY devices constitute the material starting point that engineers had to think through in order to retrofit the English-language, Latin-alphabetic machines to other orthographies.

Why are these features worthy of our attention, and even our concern? The answer comes when we begin to scrutinize these logics, particularly within a global comparative framework. In doing so, a basic fact becomes apparent that would, for the average English (or French, Russian, German, Italian, etc.) speaking user, be non-obvious: namely, that the QWERTY-style keyboard machine as it has been conceptualized excludes the majority of writing systems and language users on the planet.

Consider that:

- An estimated 467 million Arabic speakers in the world are excluded from this mesh of logics, insofar as Arabic letters connect as a rule and they change shape depending upon context. Considering the various mechanical logics of the keyboard noted above, at least five are violated: isomorphism, isoposition, isolation, monospatialism, and left-to-right unidirectionality.

- More than seventy million Korean speakers are excluded as well, insofar as Hangul letters change both their size and position based upon contextual factors, and they combine in both horizontal and vertical ways to form syllables. Hangul, then, violates at least four of the logics outlined above: isomorphism, isoposition, isolation, and monolinearity.

- An estimated 588 million Hindi and Urdu speakers, approximately 250 million Bengali speakers, and hundreds of millions of speakers of other Indic languages are excluded for many of the same reasons as Korean speakers. For although there is a relatively small number of consonants in Devanagari script, for example, they often take the form of conjuncts, whose shapes can vary dramatically from the original constituent parts.

- For the estimated 1.39 billion Chinese speakers (not to mention the approximately 120 million speakers of Japanese, whose writing system is also based in part on nonalphabetic, Chinese character-based script), the QWERTY keyboard as originally conceptualized is fundamentally incompatible.5

Altogether, when one tallies up the total population of those whose writing systems are excluded from the logics of the keyboard typewriter, one’s calculations quickly exceed 50 percent of the global population. In other words, to repeat the point above: the majority of the people on earth cannot use the QWERTY-style keyboard in the way it was originally designed to operate. How, then, did this keyboard come to be a ubiquitous feature of twentieth- and twenty-first-century text technologies globally?

Universal Inequality

If Arabic, Devanagari, and other scripts were “excluded” from the logics of the QWERTY-style mechanical typewriter, how then were engineers able to build mechanical, QWERTY-style typewriters for Arabic, Hindi, and dozens of other non-Latin scripts in the first place? How could QWERTY be at once limited and universal?

The answer is, when creating typewriters for those orthographies with which QWERTY was incompatible, engineers effectively performed invasive surgery on these orthographies—breaking bones, removing parts, and reordering pieces—to render these writing systems compatible with QWERTY. In other words, the universalism of QWERTY was premised upon inequality, one in which the many dozens of encounters between QWERTY and non-Western orthographies took place as profoundly asymmetric, and often culturally violent, engagements. In Thailand (then called Siam), for example, the designers of the first two generations of mechanical Siamese typewriters—both of whom were foreigners living in Siam—could not fit all the letters of the Siamese alphabet on a standard Western-style machine. What they opted for instead was simply to remove letters of the Siamese alphabet, legitimating their decision by citing the low “frequency” of such letters in the Siamese language.

It was not only foreigners who advocated such forms of orthographic surgery. Shaped by the profound power differential that separated nineteenth- and twentieth-century Euro-American colonial powers from many parts of the modern-day non-West, some of the most violent proposals were advocated by non-Western elites themselves, with the goal of rendering their scripts, and thus perhaps their cultures, “compatible” with Euro-American technological modernity. In Korea, for example, some reformers experimented with the linearization of Hangul, which required lopping off the bottom half of Korean glyphs (the subscript consonant finals, or batchim 받침) and simply sticking them to the right of the graph (since, again, these same technologies could also not handle anything other than the standard single baseline of the Latin alphabet).6 In the Ottoman Empire, meanwhile, as well as other parts of the Arabic-speaking world, proposals appeared such as those calling for the adoption of so-called “separated letters” (hurûf-ı munfasıla) as well as “simplified” Arabic script: that is, the cutting up of connected Arabic script into isolated glyphs that mimicked the way Latin alphabet letters operated on text technologies like the typewriter or the Linotype machine (since such technologies could not handle Arabic script and its connected “cursive” form).7 Further language crises beset, almost simultaneously, Japanese, Hebrew, Siamese, Vietnamese, Khmer, Bengali, and Sinhalese, among many others.8

When we examine each of these “writing reform” efforts in isolation, they can easily seem disconnected. When viewed in concert, however, a pattern emerges: each of these writing reforms corresponded directly with one or more of the “logics” we identified above with regard to the QWERTY typewriter. “Separated letters” was a means of fulfilling, or matching, the logic of isomorphism, isolation, and isoposition; linearization was a means of matching the logics of monolinearity and isomorphism; and so forth.9 In other words, every non-Latin script had now become a “deviant” of one sort or another, wherein the deviance of each was measured in relationship to the Latin-alphabetic, English-language starting point. Each non-Latin script was a “problem,” not because of its inherent properties but because of the ways certain properties proved to be mismatched with the QWERTY machine.

What is more, it also meant that all non-Latin writing systems on earth (and even non-English Latin-alphabetic ones) could be assigned a kind of “difficulty score” based on a measurement of how much effort had to be expended, and distance covered, in order for the English/Latin-alphabetic “self” to “perform” a given kind of otherness. The shorter the distance, the better the deviant (like French or even Cyrillic). The greater the effort, the more perverse and worthy of mockery the deviant is (like Chinese).

In this model, one could array all writing systems along a spectrum of simplicity and complexity. For engineers seeking to retrofit the English-language QWERTY machine to non-English languages:

- French equaled English with a few extra symbols (accents) and a slightly different layout. [Easy]

- Cyrillic equaled English with different letters. [Easy/intermediate]

- Hebrew equaled English with different letters, backward. [Intermediate complexity]

- Arabic equaled English with different letters, backward, and in cursive. [Very complex]

- Korean Hangul equaled English with different letters, in which letters are stacked. [Very complex]

- Siamese equaled English with many more letters, in which letters are stacked. [Highly complex]

- Chinese equaled English with tens of thousands of “letters.” [Impossible?]

For the engineers involved, these formulations were not mere metaphors or discursive tropes; they were literal. To the extent that English-language text technologies were able to perform French easily, this led many to the interpretation that French itself was “easy”—not in a relative sense but in an absolute sense. Likewise, insofar as it was “harder” for English-language text technologies to perform “Arabic-ness” or “Siamese-ness,” this implied that Arabic and Siamese themselves were “complex” scripts—rather than the objective truth of the matter, which was that these and indeed all measurements of “simplicity” and “complexity” were Anglocentric and inherently relational evaluations of the interplay between the embedded, culturally particular logics of the technolinguistic starting point (the QWERTY-style machine) and the scripts whose orthographic features were simply different than those of the Latin alphabet.

In short, the kinds of appeals one frequently hears about the “inherent complexity” or the “inherent inefficiency” of computing in Chinese, Arabic, Burmese, Japanese, Devanagari, and more—especially those couched in seemingly neutral technical descriptions—are neither neutral nor innocuous. They are dangerous. They rehabilitate, rejuvenate, and indeed fortify the kinds of Eurocentric and White supremacist discourses one encountered everywhere in eighteenth- and nineteenth-century Western writings—all while avoiding gauche, bloodstained references to Western cultural superiority or the “fitness” of Chinese script in a social Darwinist sense. Instead, technologists have recast the writing systems used by billions of people simply as “complex scripts.” They have opined about the technological superiority of the Latin alphabetic script over the likes of Arabic and Indic scripts. And in certain cases, they have heaped ridicule on those writing systems they see as computationally “beyond the pale”—so “complex” that they are almost absurd to imagine computationally (see, for example, the 1981 cartoon in fig. 16.1, in which a Chinese computer keyboard was imagined by the artist as an object whose size matched the Great Wall of China).10 Creating a special class of writing systems and labeling them “complex” omits from discussion the question of how and why these writing systems came to be “complex” in the first place.

Figure 16.1 A 1981 issue of Popular Computing imagined a Chinese computer keyboard the size of the Great Wall of China (complete with rickshaw and driver).

Escaping Alphabetic Order: The Age of “Input”

By the midpoint of the twentieth century, the global information order was in a state of contradiction, at least as far as the keyboard was concerned. The keyboard had become “universal,” in that QWERTY-style keyboards could be found in nearly all parts of the globe. And yet it was haunted by specters both from within and from without. From within, the supposed neutrality of the QWERTY-style keyboard did not accord with the actual history of its globalization: in South Asia, the Middle East, North Africa, Southeast Asia, and elsewhere, the “price of entry” for using the QWERTY-style machine was for local scripts to bend and break, succumbing to various kinds of orthographic disfigurement.

Meanwhile, the Sinophone world and its more than one billion people were simply excluded from this “universality” altogether. For this quarter of humanity, that is, the very prospect of using a “keyboard,” whether QWERTY-style or otherwise, was deemed a technological impossibility—and yet never did this gaping hole in the map of this “universality” call such universality into question. Instead, the provincial origins of the QWERTY machine were entirely forgotten, replaced with a sanitized and triumphant narrative of universality. If anything was to blame for this incompatibility between Chinese and QWERTY, it was the Chinese writing system itself. Chinese was to blame for its own technological poverty.

In this moment of contradiction, 1947 was a watershed year. In this year, a new Chinese typewriter was debuted: the MingKwai, or “Clear and Fast,” invented by the best-selling author and renowned cultural critic Lin Yutang. MingKwai was not the first Chinese typewriter.11 It was, however, the first such machine to feature a keyboard. What’s more, this keyboard was reminiscent of machines in the rest of the world. It looked just like the real thing.

Despite its uncanny resemblance to a Remington, however, there was something peculiar about this keyboard by contemporary standards: what you typed was not what you got. Depression did not equal impression.

Were an operator to depress one of the keys, they would hear gears moving inside—but nothing would appear on the page. Depressing a second key, more gears would be heard moving inside the chassis of the device, and yet still nothing would appear on the page. After the depression of this second key, however, something would happen: in a small glass window at the top of the chassis of the machine—which Lin Yutang referred to as his “Magic Eye”—up to eight Chinese characters would appear. With the third and final keystroke using a bank of keys on the bottom—numerals 1 through 8—the operator could then select which of the eight Chinese character candidates they had been offered.

Phrased differently, Lin Yutang had designed his machine as a kind of mechanical Chinese character retrieval system in which the user provided a description of their desired character to the machine, following which the machine then offered up to eight characters that matched said description, waiting for the user to confirm the final selection before imprinting it on the page. Criteria, candidacy, confirmation—over and over.
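The cycle of criteria, candidacy, and confirmation can be made concrete with a brief sketch in Python. It is a toy model only: the key labels and the groupings of characters are invented for illustration and do not reproduce Lin Yutang's actual keyboard or the internal arrangement of the MingKwai. What it shows is the shape of the interaction, in which two keystrokes describe a character, the machine offers at most eight candidates, and a numeral confirms one of them.

```python
# Toy model of retrieval-based input: two "criteria" keystrokes narrow the
# lexicon to a window of at most eight candidates; a third keystroke confirms.
# Key labels and character groupings are hypothetical, invented for this sketch.
from typing import Dict, List, Tuple

MAX_CANDIDATES = 8  # size of the viewing window described in the text

LEXICON: Dict[Tuple[str, str], List[str]] = {
    ("top-A", "bottom-A"): ["電", "雷", "雲"],
    ("top-A", "bottom-B"): ["雪", "霜"],
    ("top-B", "bottom-A"): ["明", "時", "晴"],
}


def candidates(first_key: str, second_key: str) -> List[str]:
    """Criteria -> candidacy: return the characters offered in the window."""
    return LEXICON.get((first_key, second_key), [])[:MAX_CANDIDATES]


def retrieve(first_key: str, second_key: str, selection: int) -> str:
    """Confirmation: pick one candidate with a numeral key, counted from 1."""
    window = candidates(first_key, second_key)
    if not 1 <= selection <= len(window):
        raise ValueError("no candidate at that position")
    return window[selection - 1]
```

In this sketch, `retrieve("top-A", "bottom-A", 1)` returns the first character in that window. Nothing is "typed" in the sense of a key producing its own symbol; the keys only supply a description that the lookup resolves.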

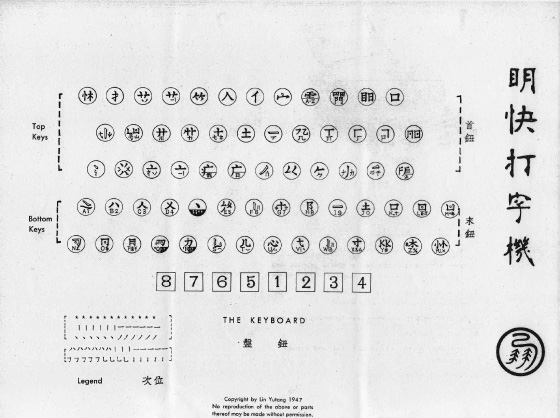

Figure 16.2 Keyboard layout of the MingKwai typewriter.

There was something else peculiar about this machine. For anyone familiar with Chinese, the symbols on the keyboard of the MingKwai would have struck them as both insufficient in number and peculiar in shape (fig. 16.2). There were nowhere near the tens of thousands of characters the machine claimed to be able to type, and the symbols that were included were, in many cases, neither Chinese characters nor any commonly accepted component of Chinese characters. Some of these symbols were peculiar, made-up shapes that Lin Yutang himself invented—“pseudo-Chinese” or “Chinese-ish” characters, perhaps, but certainly not Chinese in any accepted sense of the term in the 1940s or, indeed, at any other moment in recorded history.

There was a simple reason for all of this: although the MingKwai typewriter was an inscription technology, it was not premised upon the act of “typing.” The goal of depressing a key on the MingKwai typewriter was not to cause a particular symbol to appear on the page, as was the case for all other typewriters designed up to that point in history, but rather to provide criteria to the machine so as to describe which character the operator wanted to retrieve from the machine’s internal metal hard drive. The graphs on the keys did not, therefore, have to correspond in a one-to-one fashion with the characters one wished to type—they merely needed to help describe one’s desired characters to the device.

Lin Yutang invested a personal fortune in the project, but ultimately his timing did not prove fortuitous. Civil war was raging in China, and executives at Mergenthaler Linotype grew understandably worried about the fate of their patent rights if the Chinese Communists won—which of course they did. Later, Mergenthaler heard rumors that China’s new “Great Helmsman,” Chairman Mao Zedong, had called for the abolition of Chinese characters and their replacement by full-scale Romanization, which would have made MingKwai pointless. (This never happened.) Everything being too uncertain, the company decided to wait, placing Lin in a financially untenable position.

MingKwai may have failed as a potential commercial product, but as a proof of concept it opened up new vistas within the domain of text technology. It showed the possibility of a radically different relationship between a machine’s keyboard interface and its output. Specifically, Lin Yutang had created a typewriter on which what you typed was not what you got, changing the typewriter from a device focused primarily on inscription into one focused on finding or retrieving things from memory. Writing, in this framework, was no longer an act of typing out the spelling of a word, but rather using the keys of the keyboard in order to search for characters stored in memory. This departure from the long-standing logics of the keyboard opened up an entirely new terrain.

The first to venture into this new terrain was a professor of electrical engineering at MIT, Samuel Hawks Caldwell, just a few years after the MingKwai project stalled. Caldwell did not speak a word of Chinese, but as a student of Vannevar Bush he was an expert in logical circuit design. He was first exposed to the Chinese language thanks to informal dinnertime chats with his overseas Chinese students at MIT. As Caldwell and his students got to talking about Chinese characters, one seemingly rudimentary fact about the language caught him by surprise: “Chinese has a ‘spelling.’” “Every Chinese learns to write a character by using exactly the same strokes in exactly the same sequence.”12 Here Caldwell was referring to “stroke order” (bishun), as it is known in Chinese.

His curiosity piqued, he sought the help of a professor of Far Eastern languages at Harvard, Lien-Sheng Yang, relying upon Yang to analyze the structural makeup of Chinese characters and to determine the stroke-by-stroke “spelling” of approximately 2,000 common-usage graphs. Caldwell and Yang ultimately settled upon twenty-two “stroke-letter combinations” in all: an ideal number to place upon the keys of a standard QWERTY-style typewriter keyboard.13

Caldwell’s use of the word “spelling” is at once revealing and misleading. To “spell” the word pronounced /kəmˈpjutɚ/ using a typewriter was to depress a sequence of keys, C-O-M-P-U-T-E-R. The “spelling” of this word is said to be complete only when all eight letters are present, in the correct sequence. Such was not the way in which a user “spelled” with the Sinotype, as it was known. To depress keys on the Sinotype was not to see that same symbol on the page but, as Caldwell himself explained, “to furnish the input and output data required for the switching circuit, which converts a character’s spelling to the location coordinates of that character in the photographic storage matrix.” Like MingKwai before it, that is, Sinotype was not primarily an inscription device but rather a retrieval device. Inscription took place only after retrieval was accomplished.

While this distinction might at first seem minor, the implications were profound—as Caldwell quickly discovered. Not only did Chinese characters have a “spelling,” he discovered, but “the spelling of Chinese characters is highly redundant.” It was almost never necessary, that is, for Caldwell to enter every stroke within a character in order for the Sinotype to retrieve it unambiguously from memory. “Far fewer strokes are required to select a particular Chinese character than are required to write it,” Caldwell explained.14

In many cases, the difference between “spelling in full” and “minimum spelling” (Caldwell’s terms) was dramatic. For one character containing fifteen strokes, for example, it was only necessary for the operator to enter the first five or six strokes before the Sinotype arrived at a positive match. In other cases, a character with twenty strokes could be unambiguously matched with only four keystrokes.

Caldwell pushed these observations further. He plotted the total versus minimum spelling of some 2,121 Chinese characters, and in doing so determined that the median “minimum spelling” of Chinese characters fell between five and six strokes, whereas the median total spelling equaled ten strokes. At the far end of the spectrum, moreover, no Chinese character exhibited a minimum spelling of more than nineteen strokes, despite the fact that many Chinese characters require twenty or more strokes to compose.
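The difference between “spelling in full” and “minimum spelling” can be illustrated with a short Python sketch. It rests on an assumption that is mine rather than Caldwell's stated procedure: that each character's spelling is a string of stroke codes, and that its minimum spelling is the shortest prefix shared with no other character in the lexicon. The stroke letters and the three entries are invented placeholders, not the stroke-letter combinations Caldwell and Yang devised.

```python
# Sketch of "minimum spelling": the shortest prefix of a character's stroke
# sequence that no other character in the lexicon shares.
# Entry names and stroke codes are hypothetical placeholders.
from typing import Dict

SPELLINGS: Dict[str, str] = {
    "char_1": "hvhhv",    # full spelling: 5 strokes
    "char_2": "hvzzp",    # full spelling: 5 strokes
    "char_3": "pnhhvhh",  # full spelling: 7 strokes
}


def minimum_spelling(target: str, spellings: Dict[str, str]) -> int:
    """Return how many leading strokes suffice to single out `target`."""
    code = spellings[target]
    others = [s for name, s in spellings.items() if name != target]
    for n in range(1, len(code) + 1):
        prefix = code[:n]
        if not any(other.startswith(prefix) for other in others):
            return n
    # The full spelling is itself a prefix of another entry, so a prefix
    # alone never disambiguates; fall back to the full length.
    return len(code)
```

With this toy lexicon, `char_3` is identified by its first stroke, while `char_1` and `char_2` share two opening strokes and separate only at the third. Run over a real lexicon of a few thousand characters, the same comparison of prefix length against full length is the kind of measurement Caldwell's plot summarized.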

In short, in addition to creating the world’s first Chinese computer, Caldwell had also inadvertently stumbled upon what we now refer to as “autocompletion”: a technolinguistic strategy that would not become part of English-language text processing in a widespread way until the 1990s but was part of Chinese computing from the 1950s onward.15 The conceptual and technical framework that Lin Yutang and Samuel Caldwell laid down would remain foundational for Chinese computing into the present day. Whether one is using Microsoft Word, surfing the web, or texting, computer and new media users in the Sinophone world are constantly involved in this process of criteria, candidacy, and confirmation.16 In other words, when the hundreds of millions of computer users in the Sinophone world use their devices, not a single one of them “types” in the conventional sense of spelling or shaping out their desired characters. Every computer user in China is a “retrieval writer”—a “search writer.” In China, “typing” has been dead for decades.

The Keyboard Is Not the Interface

Beginning in the 1980s, the QWERTY-style keyboard extended and deepened its global dominance by becoming the text input peripheral of choice in a new arena of information technology: personal computing and word processing. Whether in Chinese computing—or Japanese, Korean, Arabic, Devanagari, or otherwise—computer keyboards the world over look exactly the same as they do in the United States (minus, perhaps, the symbols on the keys themselves). Indeed, from the 1980s to the present day, never has a non-QWERTY-style computer keyboard or input surface ever seriously competed with the QWERTY keyboard anywhere in the world.

As global computing has given way to a standardized technological monoculture of QWERTY-based interfaces (just as typewriting did before it) one might reasonably assume two things:

- In light of our discussion above, one might assume that writing systems such as Arabic, Chinese, Korean, Devanagari, and more should have continued to be excluded from the domain of computing, just as they were in typewriting, unable to render their “complex” scripts correctly on computers without fundamentally transfiguring the orthographies themselves to render them compatible.

- Given the stabilization of global computing around the QWERTY-style keyboard, one might also reasonably assume that such standardization would have resulted in standardized forms of human–computer interaction, even if these interactions were (as noted above) at a disadvantage when compared to the English-language Latin-alphabetic benchmark.

In a nutshell, the globalization and standardization of the QWERTY-style keyboard should have resulted in both a deepening of the inequalities examined above and an increased standardization of text input practices within each respective language market where QWERTY-style keyboards have become dominant.

The empirical reality of global computing defies both of these assumptions. First, while still at a profound disadvantage within modern IT platforms, Arabic has finally begun to appear on screen and the printed page in its correct, connected forms; Korean Hangul has finally begun to appear with batchim intact; Indic conjuncts have at last begun to appear in correct formats; and more. Collectively, moreover, Asia, Africa, and the Middle East are becoming home to some of the most vibrant and lucrative IT markets in the world, and ones into which Euro-American firms are clamoring to make inroads. How can an interface that we described above as “exclusionary” suddenly be able to help produce non-Latin scripts correctly? How did this exclusionary object suddenly become inclusionary?

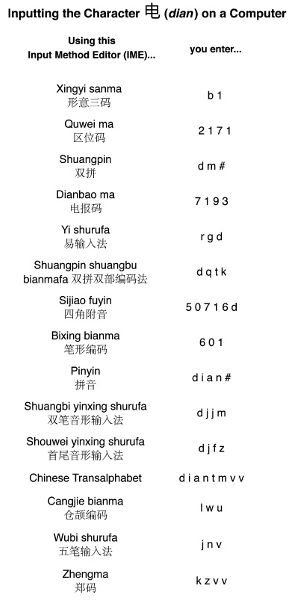

Second, with regard to stabilization, while all non-Latin computing markets have settled upon the QWERTY or QWERTY-style keyboard as their dominant interface, techniques of non-Latin computational text input have not undergone standardization or stabilization. To the contrary, in fact, the case of Chinese computing demonstrates how, despite the ubiquity of QWERTY keyboards, the number of Chinese input systems has proliferated during this period, with many hundreds of systems competing in what is sometimes referred to as the “Input Wars” of the 1980s and 1990s. (To give a sense of the sheer diversity and number of competing input systems during this period, fig. 16.3 lists just a small sample of the hundreds of IMEs invented since the 1980s, including the codes an operator would have used to input the Chinese character dian, meaning “electricity,” for each.) How is it possible that the 1980s and ’90s were simultaneously a time of interface stabilization (in the form of the QWERTY keyboard) for Chinese computing but also a time of interface destabilization (in the form of the “Input Wars”)?

In trying to resolve these two paradoxes (How does an exclusionary object become inclusionary? How does interface stabilization lead, counterintuitively, to interface destabilization?), current literature is of little help. Existing literature, after all, is shaped primarily by the framework of the Latin alphabet—and, above all, of “typing”—as exemplified in the essays by David and Diamond described earlier. For David and Diamond—and for all critics of QWERTY—there is no (and can be no) conceptual separation between “the keyboard” and “the interface,” and they are understood to be one and the same thing. If the arrangement of keys on the keyboard stays consistent, whether as QWERTY, AZERTY, QWERTZ, DVORAK, or otherwise, by definition the “interface” is understood to have stabilized—and vice versa.

Figure 16.3 Fifteen ways (among hundreds) to input dian (“electricity”).

But in the case of the MingKwai machine, the Sinotype machine, or other examples of “input”—where writing is an act of retrieval rather than of inscription, and where one does not “type” in the classic sense of the word—the stabilization of the physical device (the keyboard) has no inherent or inevitable stabilizing effect on the retrieval protocol, insofar as there are effectively an infinite number of meanings one could assign to the keys marked “Q,” “W,” “E,” “R,” “T,” and “Y” (even if those keys never changed location). When inscription becomes an act of retrieval, our notion of “stabilization”—that is, our very understanding of what stabilization means and when it can be assumed to take place—changes fundamentally.17
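A minimal sketch may help show why a fixed keyboard does not fix the retrieval protocol. The two tables below are assumptions made for illustration: the first uses pinyin spelling, and the second uses a shape-based code (`jnv`, which I take to be the Wubi-style code for the simplified character 电, offered here as an assumed example rather than a value drawn from fig. 16.3). The names `PROTOCOLS` and `retrieve` are hypothetical.

```python
# Each protocol assigns its own meaning to sequences of the same Latin keys.
PROTOCOLS = {
    # Sound-based: keys spell the pinyin reading; several characters share it.
    "phonetic": {"dian": ["电", "点", "店"]},
    # Shape-based: keys stand for components of the character (assumed code).
    "shape": {"jnv": ["电"]},
}


def retrieve(protocol: str, keys: str) -> list[str]:
    """Return the candidates that a given protocol offers for a key sequence."""
    return PROTOCOLS[protocol].get(keys, [])


# Same physical keys, same character retrieved, two unrelated key sequences.
print(retrieve("phonetic", "dian"))
print(retrieve("shape", "jnv"))
# A sequence that is meaningful under one protocol is empty under the other.
print(retrieve("shape", "dian"))
```

Adding a third or a hundredth protocol means adding another table; nothing about the keyboard itself has to change, which is why the hardware could stabilize while the protocols multiplied.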

To put these manifold changes in context, a helpful metaphor comes from the world of electronic music—and, in particular, the Musical Instrument Digital Interface (MIDI). With the advent of computer music in the 1960s, it became possible for musicians to play instruments that looked and felt like guitars, keyboards, flutes, and so forth but create the sounds we associate with drum kits, cellos, bagpipes, and more. What MIDI effected for the instrument form, input effected for the QWERTY keyboard. Just as one could use a piano-shaped MIDI controller to play the cello, or a woodwind-shaped MIDI controller to play a drum kit, an operator in China can use a QWERTY-shaped (or perhaps Latin-alphabetic-shaped) keyboard to “play Chinese.” For this reason, even when the instrument form remained consistent (in our case, the now-dominant QWERTY keyboard within Sinophone computing), the number of different instruments that said controller could control became effectively unlimited.

Chinese computing is not unique in this regard. Across the non-Western, non-Latin world, a wide array of auxiliary technologies like input method editors can be found, used to “rescue” the QWERTY-style keyboard from its own deep-seated limitations and to render it compatible with the basic orthographic requirements of non-Latin writing systems. Thus, it is not in fact the QWERTY keyboard that has “globalized” or “spread around the world” by virtue of its own supremacy and power. Rather, it is thanks to the development of a suite of “compensatory” technologies that the QWERTY keyboard has been able to expand beyond its fundamentally narrow and provincial origins. In the end, we could say, it was the non-Western world that conquered QWERTY, not the other way around.

Collectively, there are at least seven different kinds of computational work-arounds, achieved by means of three different kinds of computer programs, that are now essential in order to render QWERTY-style keyboard computing compatible with Burmese, Bengali, Thai, Devanagari, Arabic, Urdu, and more (notice that I did not write “to make Burmese, etc., compatible with QWERTY”). The three kinds of programs are layout engines, rendering engines, and input method editors, and the seven kinds of reconciliations are (see fig. 16.4):

1. Input, required for Chinese (as we have seen), Japanese, Korean, and many other non-Latin orthographies18

2. Contextual shaping, essential for scripts such as Arabic and all Arabic-derived forms, as well as Burmese

3. Dynamic ligatures, also required for Arabic as well as Tamil, among others

4. Diacritic placement, required for scripts such as Thai that have stacking diacritics

5. Reordering, in which the order of a letter or glyph on the line changes depending on context (essential for Indic scripts such as Bengali and Devanagari in which consonants and following vowels join to form clusters)

6. Splitting, also essential for Indic scripts, in which a single letter or glyph appears in more than one position on the line at the same time

7. Bidirectionality, for Semitic scripts like Hebrew and Arabic that are written from right to left but in which numerals are written left to right
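Several of the reconciliations listed above leave visible traces in the Unicode character database, which layout and rendering engines consult when they do this work. The sketch below, which assumes Python's standard `unicodedata` module and a handful of code points chosen for illustration, only inspects those properties; it does not perform shaping, reordering, or bidirectional layout itself.

```python
import unicodedata

# Contextual shaping: the isolated, final, initial, and medial presentation
# forms of Arabic beh all fold back to one underlying letter (U+0628).
beh_forms = ["\uFE8F", "\uFE90", "\uFE91", "\uFE92"]
folded = {unicodedata.normalize("NFKC", form) for form in beh_forms}

# Diacritic placement: a Thai tone mark (mai tho) carries a nonzero combining
# class, marking it as something positioned relative to a base letter.
thai_tone_class = unicodedata.combining("\u0E49")

# Reordering: in Devanagari the vowel sign I is stored after its consonant
# but is drawn to the consonant's left.
i_sign_name = unicodedata.name("\u093F")

# Splitting: the Bengali vowel sign O decomposes into two parts, which are
# drawn on either side of the consonant.
o_sign_parts = unicodedata.decomposition("\u09CB")

# Bidirectionality: Hebrew and Arabic letters are classed as right-to-left,
# while a European digit keeps its own left-to-right number class.
bidi = {
    "hebrew alef": unicodedata.bidirectional("\u05D0"),
    "arabic beh": unicodedata.bidirectional("\u0628"),
    "digit one": unicodedata.bidirectional("1"),
}

print(folded, thai_tone_class, i_sign_name, o_sign_parts, bidi)
```

None of this information is present on the keyboard; it lives in software tables that sit between the keystroke and the glyph, which is precisely the compensatory layer at issue here.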

As should be evident from this list, the specific “problems” that these work-arounds were developed to resolve are the very same ones we examined at the outset of this essay: namely, they target the specific characteristics and logics of the original QWERTY typewriter, including presence, what-you-type-is-what-you-get, monospacing, isomorphism, isoposition, etc. What we are witnessing in the list above, then, are legacies of mechanical typewriting that were inherited directly by word processing and personal computing beginning in the second half of the twentieth century, and which have remained “problems” ever since.

The question becomes: What happens when the keyboards requiring “auxiliary” or “compensatory” programs outnumber the keyboards that work the way they supposedly “should”? What happens when compensatory technologies are more widespread than the supposedly “normal” technologies they are compensating for? What are the costs involved—the economic costs, the cultural costs, the psychic costs—when effectively the entire non-Western, nonwhite world is required to perform never-ending compensatory digital labor, not merely to partake in Latin-alphabet-dominated industrial- and postindustrial-age text technologies but, beyond that, to enable the Latin and overwhelmingly white Western world to slumber undisturbed in its now-centuries-old dream of its own “universality” and “neutrality”?

Figure 16.4 Examples of contextual shaping, dynamic ligatures, reordering, and splitting.

Conclusion

Let us consider three ironies that pervade the history discussed thus far, ironies that urge us to rethink the global history of information technology in radically new ways.

First, QWERTY and QWERTY-style keyboards are not the obstacles—it is rather the logics that are baked into QWERTY and QWERTY-style machines that are the obstacles. In other words, typing is the obstacle. It is only the “typists” of the world—those operating under the assumptions of presence and “what-you-type-is-what-you-get”—who believe that the overthrow of QWERTY requires the abandonment of QWERTY. For those in the realm of input, however, the baseline assumption is completely different. Across East Asia, South Asia, the Middle East, North Africa, and elsewhere, what one types is never what one gets, and one’s writing system is never fully present on the keyboard surface. Instead, the QWERTY or QWERTY-style keyboard is always paired with computational methods of varying sorts—input method editors, shaping engines, rendering engines, and more—that harness computational and algorithmic power in order to reconcile the needs of one’s orthographies with a device that, by itself, fails to do so. In quite literally every part of the world outside of the Latin-alphabetic domain, that is, the QWERTY or QWERTY-style keyboard has been transformed into what in contemporary parlance might be referred to as a “smart” device—a once-noncomputational artifact (such as a refrigerator or a thermostat) retrofitted so as to harness computational capacities to augment and accelerate its functionality (and to create new functionalities that would have been prohibitively difficult or even impossible in noncomputational frameworks).

In doing so, the escape from QWERTY has not required throwing away the QWERTY keyboard or replacing it with another physical interface—only a capacity, and perhaps a necessity, to reimagine what the existing interface is and how it behaves. In a peculiar twist of fate, it has proven possible to exceed the QWERTY-style keyboard, even as one continues to use it, and even as the exclusionary logics outlined above continue to be embedded within it. As a result, despite the global, unrivaled ubiquity of the QWERTY-style keyboard, the escape from QWERTY has in fact already happened—or is, at the very least, well under way. This is an idea that is completely oxymoronic and unimaginable to David, Diamond, and other typing-bound thinkers who, unbeknownst to them, are conceptually confined to operating within the boundaries of the very object they believe themselves to be critiquing.19

The second irony is best captured in a question a student once posed to me at People’s University in Beijing at the close of a course I offered on the global history of information technology: “Does English have input? Is there an IME for English?”

The answer to this question is yes—or, at the very least: There is absolutely no reason why there couldn’t be. The QWERTY keyboard in the English-speaking world, and within Latin-alphabetic computing more broadly, is as much a computational device as it is within the context of computing for Chinese, Japanese, Korean, Arabic, or otherwise. Nevertheless, the overarching pattern of Anglophone computing is one in which engineers and interface designers have done everything in their considerable power to craft computational experiences that mimic the feeling of typing on, basically, a much faster version of a 1920s-era mechanical Remington. They have done everything in their power to preserve rather than interrogate the two core “logics” of mechanical typewriting: presence, and what-you-type-is-what-you-get.

To return to the MIDI analogy, the QWERTY keyboard in the Anglophone world is as much a MIDI piano as in the non-Latin world, the main difference being: Anglophone computer users have convinced themselves that the only instrument they can play with their MIDI piano is the piano. The idea that one could use this interface to “play English” in an alternate way is a distant idea for all but the most aggressive technological early adopters. As a result, whereas in the context of non-Latin computing, engineers and users have spent their time figuring out ways to harness the computational power of microcomputers to make their input experiences more intuitive or efficient, in the context of English-language Latin-alphabet computing, practically all of the algorithmic and computational power of the microcomputer is left on the table.

In a peculiar turn of history, then, the ones who are now truly captive to QWERTY are the ones who built (and celebrated) this prison in the first place. The rest of the world has fashioned radically new spaces within this open-air prison, achieving perhaps the greatest freedom available short of rewriting the history of Euro-American imperialism in the modern era.

The third and final irony is, in many ways, the most counterintuitive and insidious. Returning to David, Diamond, and the self-styled anti-QWERTY iconoclasts—let us now ask: What would happen if they actually got what they wanted? What would happen if “path dependence” could be overcome, and we really could replace the QWERTY keyboard with the Dvorak layout? What if all keyboards were magically jettisoned and replaced by speech-to-text algorithms (arguably the newest object of fetishization among anti-QWERTY agitators)? Might this constitute the long-desired moment of emancipation?

Absolutely not.

If either approach gained widespread usage, it would serve only to reconstitute “(Latin) alphabetic order” by other means. Instead of the QWERTY-based expectation of “presence” and “what you type is what you get,” we would simply have a Dvorak-based one. The “spell” of typing would remain unbroken. And, in the case of speech recognition, we would simply be replacing a keyboard-based logic with a speech-based homology: namely, what you say is what you get. Instead of text composition being an act of “spelling” words out in full on a keyboard-based interface, it would involve “sounding them out” in full through speech. However you cut it, it would be the same technolinguistic prison, only with different bars. (Indeed, either of these new prisons might be even more secure, insofar as we prisoners might be tempted to believe falsely that we are “free.”)

There is only one way to overcome our present condition, and that is to face facts: Typing is dead.

Notes

1. Paul A. David, “Clio and the Economics of QWERTY,” American Economic Review 75, no. 2 (1985): 332–337.

2. Jared Diamond, “The Curse of QWERTY: O Typewriter? Quit Your Torture!,” Discover (April 1997).

3. Eleanor Smith called QWERTY the “culprit” that prevents typists from exceeding the average speed of human speech (120 words per minute) with most of us “crawling” at 14 to 31 wpm. Robert Winder, meanwhile, called the QWERTY keyboard a “conspiracy.” Eleanor Smith, “Life after QWERTY,” Atlantic (November 2013); Robert Winder, “The Qwerty Conspiracy,” Independent (August 12, 1995).

4. There is, in fact, another baseline “logic” of the QWERTY keyboard that, while we do not have time to address in this venue, needs to be emphasized: namely, that there is a “keyboard” at all. Early in the history of typewriting, and throughout the history of Chinese typewriting, typewriters did not necessarily have keyboards or keys to be considered “typewriters.” See Thomas S. Mullaney, The Chinese Typewriter: A History (Cambridge, MA: MIT Press, 2017), chapter 1.

5. Rick Noack and Lazaro Gamio, “The World’s Languages, in 7 Maps and Charts,” Washington Post (April 23, 2015), accessed July 1, 2018, https://www.washingtonpost.com/news/worldviews/wp/2015/04/23/the-worlds-languages-in-7-maps-and-charts/?utm_term=.fba65e53a929.

6. See Mullaney, The Chinese Typewriter; Andre Schmid, Korea Between Empires, 1895–1919 (New York: Columbia University Press, 2002).

7. See Nergis Ertürk, “Phonocentrism and Literary Modernity in Turkey,” Boundary 2 37, no. 2 (2010): 155–185.

8. Ilker Ayturk, “Script Charisma in Hebrew and Turkish: A Comparative Framework for Explaining Success and Failure of Romanization,” Journal of World History 21, no. 1 (March 2010): 97–130; Fiona Ross, The Printed Bengali Character and Its Evolution (Richmond: Curzon, 1999); Christopher Seeley, A History of Writing in Japan (Leiden and New York: E.J. Brill, 1991); Nanette Gottlieb, “The Rōmaji Movement in Japan,” Journal of the Royal Asiatic Society 20, no. 1 (2010): 75–88; Zachary Scheuren, “Khmer Printing Types and the Introduction of Print in Cambodia: 1877–1977,” PhD dissertation (Department of Typography & Graphic Communication, University of Reading, 2010); W. K. Cheng, “Enlightenment and Unity: Language Reformism in Late Qing China,” Modern Asian Studies 35, no. 2 (May 2001): 469–493; Elisabeth Kaske, The Politics of Language Education in China 1895–1919 (Leiden and New York: Brill, 2008); Mullaney, The Chinese Typewriter; Sandagomi Coperahewa, “Purifying the Sinhala Language: The Hela Movement of Munidasa Cumaratunga (1930s–1940s),” Modern Asian Studies 46, no. 4 (July 2012): 857–891. The simultaneity of these language crises was matched by their intensity.

9. It is important to note that internalist critiques of writing systems were met as well by internalist defenses thereof. Alongside Chinese reformers who called for the abolition of Chinese characters, there were those who argued vociferously against such viewpoints. In China, for example, a telling passage from 1917, written by leading reformer Hu Shi, is a case in point: “They say that Chinese doesn’t fit the typewriter, and thus that it’s inconvenient. Typewriters, however, are created for the purpose of language. Chinese characters were not made for the purpose of typewriters. To say that we should throw away Chinese characters because they don’t fit the typewriter is like ‘cutting off one’s toes to fit the shoe,’ only infinitely more absurd.” Hu Shi [胡適], “The Chinese Typewriter—A Record of My Visit to Boston (Zhongwen daziji—Boshidun ji) [中文打字機—波士頓記],” in Hu Shi, Hu Shi xueshu wenji—yuyan wenzi yanjiu [胡适学术文集—语言文字研究] (Beijing: Zhonghua shuju, 1993).

10. Source for figure 16.1: “Computerizing Chinese Characters,” Popular Computing 1, no. 2 (December 1981): 13.

11. See Mullaney, The Chinese Typewriter.

12. Samuel H. Caldwell, “The Sinotype—A Machine for the Composition of Chinese from a Keyboard,” Journal of the Franklin Institute 267, no. 6 (June 1959): 471–502, at 474.

13. Now, importantly, Caldwell dispensed with Lin Yutang’s symbols and replaced them with different ones—but this is in many ways immaterial to our discussion. Just as “typing” is an act that is not limited to any one instantiation or style of typing (i.e., “typing” can take place in Russian just as well as in English or German), “input” too is a framework that is not tied to any one kind of input. The sine qua non of “input” is not the symbols on the keys, or even the mechanism by which the criteria-candidacy-confirmation process takes place—rather, input can be defined as any inscription process that uses the retrieval-composition framework first inaugurated on Lin’s MingKwai.

14. Samuel Caldwell, “Progress on the Chinese Studies,” in “Second Interim Report on Studies Leading to Specifications for Equipment for the Economical Composition of Chinese and Devanagari,” by the Graphic Arts Research Foundation, Inc., addressed to the Trustees and Officers of the Carnegie Corporation of New York. Pardee Lowe Papers, Hoover Institution Library and Archives, Stanford University, Palo Alto, CA, accession no. 98055-16, 370/376, box 276, p. 2.

15. Autocompletion is just one of the many new dimensions of text processing that emerged when engineers began to move away from the framework of “spelling” as conceptualized in Alphabetic Order to the framework of Input. For an examination of early Chinese contributions to predictive text, see Mullaney, The Chinese Typewriter.

16. Recently, moreover, this process has actually entered the cloud. So-called “cloud input” IMEs, released by companies like Sogou, Baidu, QQ, Tencent, Microsoft, Google, and others, have begun to harness enormous Chinese-language text corpora and ever more sophisticated natural-language-processing algorithms.

17. One additional factor that helps explain the sheer number of input systems at this time is that, whether knowingly or not, these inventors, linguists, developers, and hobbyists were in fact recycling methods that were first invented in China during the 1910s, ’20s, and ’30s—an era well before computing, of course, but one in which Chinese-language reform and educational reform circles were in the grip of what was then called the “character retrieval crisis” (jianzifa wenti), in which various parties debated over which among a wide variety of experimental new methods was the best way to recategorize and reorganize Chinese characters in such contexts as dictionaries, phone books, filing cabinets, and library card catalogs, among others. For example, Wubi shurufa (Five-Stroke input), one of the most popular input systems in the 1980s and ’90s, was in fact a computationally repurposed version of the Wubi jianzifa (Five-Stroke character retrieval method) invented by Chen Lifu. The nearly 100 experimental retrieval systems of the 1920s and ’30s, as well as the principles and strategies upon which they were based, thus formed a kind of archive (consciously or not) for those who, two and three generations later, were now engaged in the novel technolinguistic ecology of computing.

18. Sharon Correll, “Examples of Complex Rendering,” NRSI: Computers & Writing Systems, accessed January 2, 2019, https://scripts.sil.org/CmplxRndExamples; Pema Geyleg, “Rendering/Layout Engine for Complex Script,” presentation, n.d., accessed January 2, 2019, http://docplayer.net/13766699-Rendering-layout-engine-for-complex-script-pema-geyleg-pgeyleg-dit-gov-bt.html.

19. To suggest that the escape from QWERTY has happened, or that it is under way, is decidedly not the same as suggesting that the non-Western world has in some way become “liberated” from the profoundly unequal global information infrastructure we discussed at the outset. The argument I am making is more complicated and subtle than that, and suggests that contemporary IT has entered into a new phase in which Western Latin alphabet hegemony continues to exert material, and in many ways conceptual, control over the way we understand and practice information, but that within this condition new spaces have been fashioned. Even with this kind of cognitive firepower targeting these issues, however, problems remain today. Normal, connected Arabic text cannot be achieved on many Adobe programs, a remarkable fact when considering the kinds of advances made by this company, as well as the fact that more than one billion people use one form or another of Arabic/Arabic-derived writing system.