Chapter 51

The Neuroscience of Consciousness

Consciousness is one of the most enigmatic features of the universe. People (and presumably many other animals, too) not only act but feel: they see, hear, smell, recall, and plan for the future. These activities are associated with subjective, ineffable, immaterial feelings that are tied in some manner to the material brain. The exact nature of this relationship, the classical mind-body problem, remains elusive and the subject of heated debate. These firsthand, subjective experiences pose a daunting challenge to the scientific method that has, in many other areas, proven so immensely fruitful. Science can describe events microseconds after the Big Bang, offer an increasingly detailed account of matter and how to manipulate it, and uncover the biophysical and neurophysiological nuts and bolts of the brain and its pathologies. However, this same method has as yet failed to provide a satisfactory account of how firsthand, subjective experience fits into the objective, physical universe. The brute fact of consciousness comes as a total surprise; it does not appear to follow from any phenomena in physics or biology. Indeed, some modern philosophers argue that consciousness does not logically supervene on physics (Chalmers, 1996). That is, one can conceive, without logical contradiction, of a world physically identical to ours but devoid of any conscious experience. Nothing in the known laws of physics, or of biology, seems to rule this out. Yet we do find ourselves in a world where each one of us has conscious experiences, sees a picture of the world, and so on.

People willingly concede that when it comes to nuclear physics or molecular biology, specialist knowledge is essential; but many assume that there are few relevant facts about consciousness and therefore everybody is entitled to their own theory. Nothing could be further from the truth. There is an immense amount of relevant psychological, clinical, and neuroscientific data and observations that must be accounted for. Furthermore, the modern focus on the neuronal basis of consciousness in the brain—rather than on interminable philosophical debates—has given brain scientists tools to greatly increase our knowledge of the conscious mind.

Consciousness is a state-dependent property of certain types of complex, biological, adaptive, and highly interconnected systems. The clearest example is a healthy and attentive human brain, such as that of the reader of this chapter. Not all biological, adaptive systems appear to be conscious; examples include the enteric nervous system and the immune system. Brain scientists are exploiting a number of empirical approaches that shed light on the neural basis of consciousness. This chapter reviews these approaches and summarizes what has been learned.

What Phenomena Does Consciousness Encompass?

There are many definitions of consciousness (Searle, 2004). A common philosophical one is “Consciousness is what it is like to be something,” such as the experience of what it feels like to see red, to be me, or to be angry. This what-it-feels-like-from-within definition expresses the principal irreducible characteristic of the phenomenal aspect of consciousness: to experience something. “What it feels like to see red” also emphasizes the subjective or first-person perspective of consciousness: it is a subject, an I, who is having the experiences, and the experience is inevitably private.

A science of consciousness must explain the exact relationship between phenomenal mental states and brain states. This is the heart of the classical mind-body problem: What is the nature of the relationship between the immaterial, conscious mind and its physical basis in the electrochemical interactions in the body? This problem can be divided into several subproblems:

1. Why is there any experience at all? Or, put differently, why does any one brain state—that is, neurons over here firing, while neurons over there remain silent—feel like anything? In philosophy, this is referred to by some as the Hard Problem (note the capitalization), or as the explanatory gap between the material, objective world and the subjective, phenomenal world (Chalmers, 1996). Many scholars have argued that the exact nature of this relationship will remain a central puzzle of human existence, without an adequate reductionistic, scientific explanation. However, as similar sentiments have been expressed in the past for the problem of seeking to understand life or to determine what material the stars are made out of, it is best to put this question aside for the moment and not be taken in by defeatist arguments.

2. Why is the relationship among different experiences the way it is? For instance, red, yellow, green, cyan, blue, and magenta are all colors that can be mapped onto the topology of a circle. Why? Furthermore, as a group, these color percepts share certain commonalities that make them different from other percepts, such as seeing motion or smelling a rose.

3. Why are feelings private? As poets and novelists have long expressed, we cannot communicate an experience to somebody else except by way of example. Try explaining vision to somebody blind from birth, or color to an achromat, one of the rare individuals born without functioning cone photoreceptors.

4. How do feelings acquire meaning? Subjective states are not abstract; each carries a wealth of explicit and implicit associations. Think of the unmistakable smell of dogs coming in from the rain or the crunchy texture of potato chips.

5. Why are only some behaviors associated with conscious states? Much brain activity and associated behavior occur without any conscious sensation.

The Neurobiology of Free Will

A further aspect of the mind-body problem is the question of free will, a vast topic. Answering this question goes to the heart of the way people think of themselves. The spectrum of views ranges from the traditional and deeply embedded belief that we are free, autonomous, and conscious actors to the view that we are biological machines driven by needs and desires beyond conscious access and with limited insight or voluntary control.

Of great relevance are the classical findings by Libet, Gleason, Wright, and Pearl (1983) of brain events that precede the conscious initiation of a voluntary action. In this seminal experiment, subjects sat in front of an oscilloscope, watching a spot of light that completed one revolution around a circle every 2.56 seconds. Every now and then, at a moment of their own choosing (“spontaneously”), subjects carried out a specific voluntary action, here flexing their wrist. If this action is repeated sufficiently often while electrical activity around the vertex of the head is recorded and averaged, a readiness potential (Bereitschaftspotential), a sustained scalp negativity, can be seen to develop 1–2 sec before the muscle starts to move. Libet asked subjects to silently note the position of the spot of light when they first “felt the urge” to flex their wrist and to report this location afterward. This temporal marker for the awareness of willing an action occurs on average 200 msec before the onset of muscular action (with a standard error of about 20 msec), in accordance with commonsense notions of the causal action of free will. However, the readiness potential can be detected at least 350 msec before awareness of the action. In other words, the subject’s brain signals the action at least half a second before the subject feels that he or she has initiated it!
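The timing arithmetic above can be made explicit in a short Python sketch. Times are expressed in msec relative to the onset of muscle activity; the 200- and 350-msec figures and the 2.56-sec revolution come from the text, while the 60-division dial is an assumption added only to show how a reported clock position translates into time. The function and constant names are illustrative, not taken from the original study.

```python
# Timing arithmetic for the Libet et al. (1983) paradigm (illustrative sketch).
# All times are in msec, measured backward from the onset of muscle activity.

REVOLUTION_MS = 2560   # the spot of light completes one revolution every 2.56 sec
CLOCK_DIVISIONS = 60   # assumption: a dial marked like a clock face


def clock_step_ms(divisions: int = CLOCK_DIVISIONS) -> float:
    """Time the spot takes to move from one dial mark to the next."""
    return REVOLUTION_MS / divisions


def readiness_lead_ms(awareness_lead: int, rp_before_awareness: int) -> int:
    """How long before movement the readiness potential is detectable."""
    return awareness_lead + rp_before_awareness


W_LEAD = 200   # reported urge to move: ~200 msec before the movement
RP_LEAD = 350  # readiness potential: at least 350 msec before that report

print(f"one dial division = {clock_step_ms():.1f} msec")
print(f"readiness potential leads movement by >= {readiness_lead_ms(W_LEAD, RP_LEAD)} msec")
```

The sum, 550 msec, is the “at least half a second” stated in the text.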

The original experiment has been replicated (Haggard & Eimer, 1999) and extended using fMRI, in which activity in frontopolar and parietal cortices can predict, above chance, a simple decision up to ten seconds before it enters awareness. Yet, because of its counterintuitive implication that conscious will plays no causal role, the interpretation of these experiments continues to be vigorously debated (Haggard, 2008).

Psychological work in normal individuals and in patients reveals further dissociations between the conscious perception of a willed action and its actual execution: subjects may believe that they performed actions that they did not perform, while, under different circumstances, they may feel that they are not responsible for actions that are demonstrably their own (Haggard, 2008; Wegner, 2002).

Yet whether volition is illusory or is free in some libertarian sense does not answer the question of how subjective states relate to brain states. The perception of free will, what psychologists call the feeling of agency or authorship (e.g., “I decided to lift my finger”), is a subjective state with an associated quale no different in kind from the quale of a toothache or seeing marine blue. So even if free will is a complete chimera, the subjective feeling of willing an action must have some neuronal correlate.

The view that the sensation of agency is a genuine, brain-generated experience has been strengthened by direct electrical brain stimulation during neurosurgery and by fMRI experiments. These implicate posterior parietal, medial premotor, and anterior cingulate cortices in generating the subjective feeling of triggering an action (Desmurget et al., 2009; Haggard, 2008). In other words, the neural correlate for the feeling of apparent causation involves activity in these regions.

Consciousness in Other Species

Data about subjective states come not only from people who can talk about their subjective experiences but also from individuals who cannot report them verbally, such as newborn babies or patients with paralysis of nearly all voluntary muscles (locked-in syndrome), and, most importantly, from animals other than humans. There are three reasons to assume that many species, in particular those with complex behaviors such as mammals, share at least some aspects of consciousness with humans:

1. Similar neuronal architecture: Except for size, there are no large-scale genomic, architectonic, cellular, or neurophysiological differences between the cerebral cortex and thalamus of mice, monkeys, humans, and whales. They are not the same and obey slightly different anatomical scaling laws but are overall remarkably similar (Herculano-Houzel, 2009).

2. Similar behavior: Almost all human behaviors have precursors in the animal literature. Take the case of pain. The behaviors seen in humans when they experience pain and distress (facial contortions; moaning, yelping, or other forms of vocalization; motor activity such as writhing; avoidance behaviors at the prospect of a repetition of the painful stimulus) can be observed in all mammals and in many other species. Likewise for the physiological signals that attend pain: activation of the sympathetic branch of the autonomic nervous system, resulting in changes in blood pressure, dilated pupils, sweating, increased heart rate, release of stress hormones, and so on. The discovery of cortical pain responses in premature babies (Slater et al., 2006) and of empathy and observational pain in mice (Jeon et al., 2010) shows the fallacy of relying on language as the sole criterion for consciousness.

3. Evolutionary continuity: The first true mammals appeared at the end of the Triassic period, about 220 million years ago, with primates proliferating following the Cretaceous-Tertiary extinction event, about 60 million years ago, while humans and macaque monkeys did not diverge until 30 million years ago (Allman, 1999). Homo sapiens is part of an evolutionary continuum with its implied structural and behavioral continuity, rather than an independently developed organism.

While certain aspects of consciousness, in particular those relating to the self, introspection and to abstract, culturally transmitted knowledge, may not be widespread in nonhuman animals, there is little reason to doubt that other mammals share conscious feelings—sentience—with humans. To believe that humans are special, are singled out by the gift of consciousness above all other species, is a remnant of humanity’s atavistic, deeply held belief that Homo sapiens occupies a privileged place in the universe, a belief with no empirical basis.

The extent to which nonmammalian vertebrates (such as tuna, cichlids, and other fish; crows, ravens, magpies, parrots, and other birds) or even invertebrates (such as squids or bees) with complex, nonstereotyped behaviors, including delayed matching-to-sample, delayed non-matching-to-sample, and other forms of learning (Giurfa, Zhang, Jenett, Menzel, & Srinivasan, 2001), are conscious is difficult to determine at present. Without a sounder understanding of the neuronal architecture necessary to support consciousness, it is unclear where in the animal kingdom the Rubicon lies that separates species with at least some conscious percepts from those that never experience anything and are nothing but pure automata (Edelman, Baars, & Seth, 2005; Koch, 2012).

Arousal and States of Consciousness

There are two quite distinct meanings of being conscious, relating to the intransitive and to the transitive usage of the verb. The former revolves around arousal and states of consciousness, while the latter deals with the content of consciousness and conscious states.

To be conscious of anything, the brain must be in a relatively high state of arousal. This is as true of wakefulness as it is of REM sleep, which can be vividly and consciously experienced in dreams, though these are usually not remembered (see Chapter 40). The level of brain arousal, measured by electrical or metabolic brain activity, fluctuates in a circadian manner and is influenced in a predictable manner by lack of sleep, drugs and alcohol, physical exertion, and so on. High arousal states are always associated with some conscious state (a percept, thought, or memory) that has a specific content. We see and hear something, remember an incident, plan the future, or fantasize about sex. Indeed, it is not clear whether one can be awake without being conscious of something (with the possible exception of certain meditative states). Referring to such conscious states is conceptually quite distinct from referring to states of consciousness that fluctuate with different levels of arousal. Arousal can be measured behaviorally by the minimal signal amplitude that triggers some criterion reaction (for instance, the sound level necessary to evoke an eye movement or a head turn toward the sound source).
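As a sketch of how such a threshold might be estimated, the Python code below runs a simple 1-up/1-down staircase, a generic psychophysical procedure that is not described in this chapter: the sound level is lowered after each orienting response and raised after each miss, and the levels at which the response pattern reverses are averaged. The simulated listener, its 43-dB threshold, the starting level, and the step size are all hypothetical; on this reading, a lower estimated threshold would correspond to higher behavioral arousal.

```python
from statistics import mean
from typing import Callable


def staircase_threshold(responds: Callable[[float], bool],
                        start_db: float = 60.0,
                        step_db: float = 2.0,
                        reversals_needed: int = 8,
                        max_trials: int = 1000) -> float:
    """Estimate the lowest sound level that evokes an orienting response.

    1-up/1-down rule: step down after a response, step up after a miss,
    and average the levels at which the response pattern reverses."""
    level = start_db
    previous = None
    reversal_levels = []
    trials = 0
    while len(reversal_levels) < reversals_needed:
        if trials >= max_trials:
            raise RuntimeError("staircase did not converge")
        trials += 1
        response = responds(level)
        if previous is not None and response != previous:
            reversal_levels.append(level)
        previous = response
        level = level - step_db if response else level + step_db
    return mean(reversal_levels)


# Hypothetical listener who turns toward any sound of 43 dB or louder.
def simulated_listener(level_db: float) -> bool:
    return level_db >= 43.0


print(staircase_threshold(simulated_listener))  # 43.0
```

With a real, noisy observer, a 1-up/1-down rule settles on the level that yields a response on about half of the trials; the deterministic listener here simply makes the behavior easy to follow.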

The number of distinct conscious states is vast and encompasses every possible type of visual, auditory, visceral, olfactory, pain, vestibular, or other sensory experience; remembered or imagined experiences; events or thoughts; and emotional experiences (fear, surprise, happiness, dread, and so on).

Different levels or states of consciousness are associated with different kinds of conscious experiences. The normal, waking state is quite different from the dreaming state (for instance, the latter has little or no self-reflection) and from deep sleep. The basic physiology of the brain differs across these three states, and with it the space of possible conscious experiences. Physiology also differs in altered states of consciousness, for instance after taking psychedelic drugs, when events often have a stronger emotional connotation than in normal life.

In some manner that is obvious yet difficult to define rigorously, the richness of conscious experience increases as an individual transitions from deep sleep to drowsiness to full wakefulness. This richness of possible conscious experience could be quantified using notions from complexity theory that incorporate both the dimensionality and the granularity of conscious experience (e.g., Tononi, 2008). For example, inactivating all of the visual cortex in an otherwise normal individual would significantly reduce the dimensionality of conscious experience, since no color, shape, motion, texture, or depth could be perceived or imagined. As behavioral arousal increases, so do the range and complexity of behaviors of which an individual is capable. A singular exception to this progression is REM sleep, during which most motor activity is shut down by the atonia characteristic of this phase of sleep, and the subject is difficult to wake. Yet this low level of behavioral arousal goes, paradoxically, hand in hand with high metabolic and electrical brain activity and vivid conscious states.
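The chapter does not commit to a particular complexity measure. As one concrete, swapped-in example of the kind of notion meant, the Python sketch below computes the Lempel-Ziv (1976) complexity of a binary string, which counts how many new “phrases” are needed to describe it: a perfectly regular sequence needs very few, an irregular one many. Applying such a count to binarized brain signals, and normalizing by n / log2(n), are assumptions made for illustration; this is not presented as the measure used in the work cited in this chapter.

```python
import math
import random


def lz76_complexity(s: str) -> int:
    """Number of components in the Lempel-Ziv (1976) parsing of s.

    Each new component is the shortest substring starting at position i
    that does not already occur in the text preceding its final symbol."""
    n, i, c = len(s), 0, 0
    while i < n:
        length = 1
        while i + length <= n and s[i:i + length] in s[:i + length - 1]:
            length += 1
        c += 1          # one new component (the last one may be partial)
        i += length
    return c


def normalized_lz(s: str) -> float:
    """Scale by n / log2(n), the asymptotic count for a random binary string."""
    n = len(s)
    if n < 2:
        raise ValueError("need at least two symbols")
    return lz76_complexity(s) * math.log2(n) / n


rng = random.Random(0)
regular = "01" * 256                                        # stereotyped pattern
irregular = "".join(rng.choice("01") for _ in range(512))   # differentiated pattern
print(f"regular: {normalized_lz(regular):.3f}, irregular: {normalized_lz(irregular):.3f}")
```

The regular string collapses to three phrases however long it grows, whereas the irregular one keeps requiring new ones, which is the intuition behind using compressibility as a proxy for “richness.”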

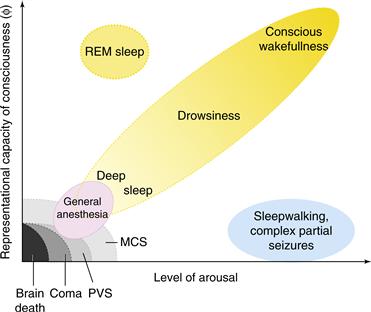

These observations suggest a two-dimensional graph (Fig. 51.1) in which the richness of conscious experience (its representational capacity) is plotted as a function of levels of behavioral arousal or responsiveness.

Figure 51.1 Normal and pathological brain states can be situated in a two-dimensional graph. Increasing levels of behaviorally determined arousal are plotted on the x-axis and the “richness” or “representational capacity of consciousness” is plotted on the y-axis. Increasing arousal can be measured by the threshold to obtain some specific behavior (for instance, spatial orientation to a sound). Healthy subjects cycle during a 24-hour period from deep sleep with low arousal and very little conscious experience to increasing levels of arousal and conscious sensation. In REM sleep, low levels of behavioral arousal go hand-in-hand with vivid consciousness. Conversely, various pathologies of clinical relevance are associated with little to no conscious content. Modified from Laureys (2005).

Global disorders of consciousness can likewise be mapped onto this plane. Clinicians speak of impaired states of consciousness such as “the comatose state,” “the vegetative state” (VS), and “the minimally conscious state” (MCS). Here, state refers to different levels of consciousness, from a total absence in the case of coma, VS, or general anesthesia, to a fluctuating and limited form of conscious sensation in MCS, sleepwalking, or during a complex partial epileptic seizure (Laureys, 2005; Schiff, 2010).

The repertoire of distinct conscious states or experiences that are accessible to a patient in MCS is presumably minimal (possibly including pain, discomfort, and sporadic sensory percepts), immeasurably smaller than the range of conscious states that can be experienced by a healthy brain. In the limit of brain death, the origin of this space is reached: there is no experience at all (Fig. 51.1). More relevant to clinical practice is the case of general anesthesia, during which the patient should not experience anything, so as to avoid traumatic memories and their undesirable sequelae.

Given the absence of any accepted theory of the minimal neuronal criteria necessary for consciousness, it can be difficult in a clinical setting to distinguish a VS patient from an MCS patient. The VS patient shows regular sleep-wake transitions and may move the eyes or limbs or smile in a reflexive manner, as in the widely publicized 2005 case of Terri Schiavo in Florida; the MCS patient can (on occasion) communicate in a meaningful manner (for instance, by differential eye movements) and shows some signs of consciousness. This drives the need for practical, bedside tests. Two empirico-computational assays under development rely on detecting the breakdown of cortico-cortical interconnectivity, that is, the loss of integration within the cortico-thalamic core that is associated with loss of consciousness (Boly et al., 2011; Massimini et al., 2005; Rosanova et al., 2012).

An alternative is blood-oxygen-level-dependent (BOLD) functional magnetic resonance imaging (fMRI). Brain imaging of patients with global disturbances of consciousness (including akinetic mutism) reveals that dysfunction in a widespread cortical network including medial and lateral prefrontal cortex and parietal associative areas is associated with a global loss of consciousness (Laureys, 2005). Monti et al. (2010) imaged brain activity in 54 patients with disorders of consciousness to determine whether two different mental imagery tasks that involve different brain systems (“imagine playing tennis” versus “imagine navigating through your home”) can be used by the patient to communicate yes or no answers to simple questions. Five of these patients with traumatic brain injuries could willfully and reliably modulate their brain activity, with two of these having no overt sign of behavioral control during clinical assessment. This shows the promise of fMRI for establishing a two-way radio link (albeit at a very low baud rate) with a subset of VS patients.
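The logic of the yes/no procedure can be sketched in a few lines of Python. The region fields, the 0.5% signal-change threshold, and the assignment of tennis imagery to “yes” are illustrative assumptions; an actual analysis would rely on statistical models of the whole fMRI time series rather than a single fixed cutoff.

```python
from dataclasses import dataclass


@dataclass
class AnswerPeriod:
    """Mean BOLD signal change (%) in two regions during one answer period."""
    motor_roi: float    # assumed region for "imagine playing tennis"
    spatial_roi: float  # assumed region for "imagine navigating through your home"


def decode_answer(period: AnswerPeriod, threshold: float = 0.5,
                  yes_task: str = "tennis") -> str:
    """Map which imagery network was engaged onto a yes/no answer."""
    motor = period.motor_roi >= threshold
    spatial = period.spatial_roi >= threshold
    if motor == spatial:  # neither or both regions active: no reliable answer
        return "undetermined"
    task = "tennis" if motor else "navigation"
    return "yes" if task == yes_task else "no"


# Hypothetical answer periods, not patient data:
for period in [AnswerPeriod(1.2, 0.1), AnswerPeriod(0.2, 0.9), AnswerPeriod(0.1, 0.2)]:
    print(decode_answer(period))
```

Returning “undetermined” when neither (or both) networks respond mirrors the point made in the text: only a subset of patients can modulate their brain activity reliably enough to be read out.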

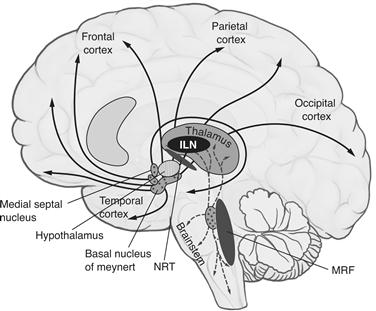

Destruction of circumscribed parts of the cerebral cortex can eliminate very specific aspects of consciousness, such as the ability to see moving stimuli or to recognize faces, without a concomitant loss of consciousness or even of conscious vision in general. Yet relatively discrete bilateral injuries to midline (paramedian) subcortical structures can cause a complete loss of consciousness. These structures are therefore among the enabling factors that control the level of brain arousal (as determined by metabolic or electrical activity) and that are needed for any conscious state to form. Consider the heterogeneous collection of more than 20 nuclei on each side of the upper brainstem (pons and midbrain) and posterior hypothalamus, often collectively referred to as the reticular activating system (Parvizi & Damasio, 2001). These nuclei, three-dimensional collections of neurons with their own cytoarchitecture and neurochemical identity, release distinct neuromodulators such as acetylcholine, noradrenaline (norepinephrine), serotonin, histamine, and orexin (hypocretin). They can widely influence the cortex through direct axonal projections (Fig. 51.2) or via intermediary relays in the thalamus (see below) and in the basal forebrain. These neuromodulators mediate the alternation between wakefulness and sleep, as well as the general level of both behavioral and brain arousal. Acute lesions in the reticular activating system can result in loss of consciousness and coma. However, the excitability of the thalamus and forebrain can eventually recover and consciousness can return (Villablanca, 2004).

Figure 51.2 Midline structures in the brainstem and thalamus necessary to regulate the level of brain arousal include the intralaminar nuclei of the thalamus (ILN), the thalamic reticular nucleus (NRT) encapsulating the dorsal thalamus, and the midbrain reticular formation (MRF) that includes the reticular activating system. Small, bilateral lesions in many of these nuclei cause a global loss of consciousness.

Another enabling factor for consciousness is the set of five or more intralaminar nuclei of the thalamus (ILN). These receive input from many brainstem nuclei and from frontal cortex and project strongly to the basal ganglia and, in a more distributed manner, into layer I of much of the neocortex. Comparatively small (1 cm³ or less) bilateral lesions in the ILN can completely eliminate awareness (Bogen, 1995). Thus, the ILN are necessary for the state of consciousness but do not appear to be responsible for mediating specific conscious percepts or memories.

If a single substance is critical for consciousness, then acetylcholine is the most likely candidate. Two major cholinergic pathways originate in the brainstem and in the basal forebrain (Fig. 51.2). Brainstem cells send an ascending projection to the thalamus, where release of acetylcholine facilitates thalamo-cortical relay cells and suppresses inhibitory interneurons. Cholinergic cells are therefore well positioned to influence all of the cortex by controlling the thalamus. In contrast, cholinergic basal forebrain neurons send their axons to a wide array of target structures. Collectively, brainstem and basal forebrain cholinergic cells innervate the thalamus, hippocampus, amygdala, and neocortex.

Cholinergic activity fluctuates with the sleep-wake cycle. In general, increasing levels of spiking activity in cholinergic neurons are associated with wakefulness or REM sleep, while decreasing levels occur during non-REM or slow-wave sleep. Lastly, many neurological pathologies whose symptoms include disturbances of consciousness, such as Parkinson’s disease, Alzheimer’s disease, and other forms of dementia, are associated with a selective loss of cholinergic neurons.

In summary, a plethora of nuclei with distinct chemical signatures in the thalamus, midbrain, and pons must function for the brain of an individual to be sufficiently aroused to experience anything at all. These nuclei belong to the enabling factors for consciousness. It is likely that the specific content of any one conscious sensation is mediated by neurons in cortex and their associated satellite structures, including the amygdala, thalamus, claustrum, and the basal ganglia.

The Neuronal Correlates of Consciousness

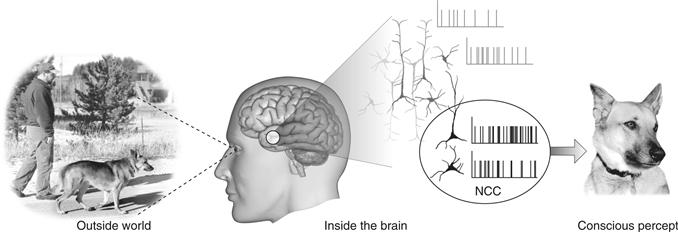

Progress in addressing the mind-body problem has come from focusing on empirically accessible questions rather than on eristic philosophical arguments with no clear resolution. One key objective has been to search for the neuronal correlates—and ultimately the causes—of consciousness. As defined by Crick and Koch (2003), the neuronal correlates of consciousness (NCC) are the minimal neuronal mechanisms jointly sufficient for any one specific conscious percept (Fig. 51.3).

Figure 51.3 The Neuronal Correlates of Consciousness (NCC) are the minimal set of neural events and structures—here synchronized action potentials in neocortical pyramidal neurons—sufficient for a specific conscious percept or memory. From Koch (2004).

This definition of the NCC stresses the word “minimal” because the question of interest is which subcomponents of the brain are actually needed. For example, of the 86 billion neurons in the human brain, 69 billion are in the cerebellum (Herculano-Houzel, 2009). That is, about four out of every five neurons in the brain are cerebellar, the vast majority of them small granule cells. Yet the main deficits of people born without a cerebellum (a rare occurrence) or who lose part of the cerebellum to stroke or other trauma are ataxia, slurred speech, and unsteady gait (Lemon & Edgley, 2010). Thus, the cerebellum is not part of the NCC. That is, trains of spikes in granule cells, or their absence, do not contribute in any substantive way to conscious states.
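The “four out of every five” figure follows directly from the two neuron counts quoted above; a minimal Python check:

```python
TOTAL_NEURONS = 86e9       # whole human brain (Herculano-Houzel, 2009)
CEREBELLAR_NEURONS = 69e9  # cerebellum

cerebellar_share = CEREBELLAR_NEURONS / TOTAL_NEURONS
other_neurons = TOTAL_NEURONS - CEREBELLAR_NEURONS  # cerebral cortex and the rest of the brain

print(f"cerebellar share: {cerebellar_share:.1%}")              # cerebellar share: 80.2%
print(f"all other neurons: {other_neurons / 1e9:.0f} billion")  # all other neurons: 17 billion
```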

This definition does not focus on the necessary conditions for consciousness because of the great redundancy and parallelism found in neurobiological networks. While activity in some population of neurons may underpin a percept in one case, a different population might mediate a related percept if the former population is lost or inactivated.

Every phenomenal, subjective state will have associated NCC: one for seeing a red patch, another one for seeing grandmother, yet a third one for hearing a siren, and so on. Perturbing or inactivating the NCC for any one specific conscious experience will affect the percept or cause it to disappear. If the NCC could be induced artificially—for instance, by optogenetics or by cortical microstimulation—the subject would experience the associated percept.

What characterizes the NCC? What are the commonalities between the NCC for seeing and for hearing? Will the NCC involve all pyramidal neurons in cortex at any given point in time? Or only a subset of long-range pyramidal cells in frontal lobes that project to the sensory cortices in the back? Only layer 5 cortical cells? Neurons that fire in a rhythmic manner? Neurons that fire in a synchronous manner? These are some of the proposals that have been advanced over the years (Chalmers, 2000).

The vast majority of experiments concerned with the NCC refer to either electrophysiological recordings in monkeys or functional brain imaging data in humans.

It is implicitly assumed by most neurobiologists that the relevant variables giving rise to consciousness are to be found at the neuronal level, in synaptic release or action potentials in one or more populations of cells, rather than at the molecular level. A few scholars have proposed that macroscopic quantum behaviors underlie consciousness. Of particular interest here is entanglement, the observation that the quantum states of multiple objects, such as two coupled electrons, may be highly correlated even though they are spatially separated, violating our intuition about locality (entanglement is also the key feature of quantum mechanics exploited in quantum computers). The role of quantum mechanics for the photons received by the eye and for the molecules of life is not controversial. But there is no evidence that any component of the nervous system, a warm, wet tissue at 37 °C that is strongly coupled to its environment, displays quantum entanglement (although there is evidence for quantum coherence in photosynthetic proteins at room temperature; Collini et al., 2010). And even if quantum entanglement were to occur inside individual cells, diffusion and the generation and propagation of action potentials, the principal mechanisms for getting information into and out of neurons, would destroy superposition. At the cellular level, the interaction of neurons is governed by classical physics (Koch & Hepp, 2010).

The Neuronal Basis of Perceptual Illusions

The possibility of precisely manipulating visual percepts in time and space has made vision a preferred modality for seeking the NCC. Psychologists have perfected a number of techniques—masking, binocular rivalry, continuous flash suppression, motion-induced blindness, change blindness, inattentional blindness—in which the seemingly simple and unambiguous relationship between a physical stimulus in the world and its associated percept in the privacy of the subject’s mind is disrupted (Kim & Blake, 2005). With such techniques, a stimulus can be perceptually suppressed for seconds or even minutes at a time: the image is projected into one of the observer’s eyes but it is invisible, not seen. In this manner the neural mechanisms that respond to the subjective percept rather than the retinal stimulus can be isolated, permitting the footprints of visual consciousness to be tracked in the brain.

In a perceptual illusion, the physical stimulus remains fixed while the percept fluctuates. One example is the Necker cube, which can be perceived in depth in one of two different ways. Another, which can be precisely controlled, is binocular rivalry (Blake & Logothetis, 2002). Here, a small image (for example, a horizontal grating) is presented to the left eye and another image (for example, a vertical grating) is shown at the corresponding location in the right eye. Despite the constant retinal stimulus, observers perceive the horizontal grating alternating every few seconds with the vertical one. The brain does not allow the two images to be perceived simultaneously.

Macaque monkeys can be trained to report whether they see the left or the right image. The distribution of the switching times and the way in which changing the contrast in one eye affects the reports leave little doubt that monkeys and humans experience the same basic phenomenon.

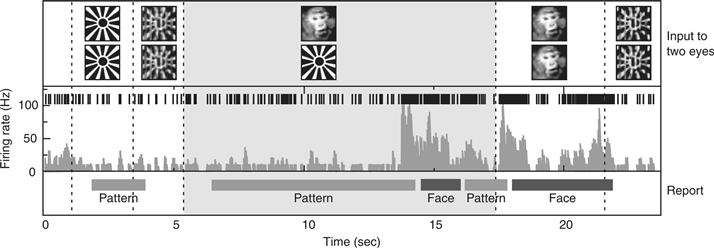

In a series of elegant experiments, Logothetis and colleagues (Logothetis, 1998) recorded from a variety of visual cortical areas in the awake macaque monkey while the animal performed a binocular rivalry task. In primary visual cortex (V1), only a small fraction of cells weakly modulated their response as a function of the monkey’s percept. The majority of cells responded to one or the other retinal stimulus with little regard to what the animal perceived at the time. In contrast, in a high-level cortical area such as the inferior temporal (IT) cortex along the ventral pathway, almost all neurons responded only to the perceptually dominant stimulus, that is, to the stimulus that was being reported. For example, when a face and a more abstract design were presented, one to each eye, a “face” cell fired only when the animal indicated by its performance that it saw the face and not the pattern presented to the other eye (Fig. 51.4). This result suggests that the NCC involve activity of neurons in inferior temporal cortex.

Figure 51.4 A fraction of a minute in the life of a typical IT cell while a monkey experiences binocular rivalry. The upper row indicates the visual input to the two eyes, with dotted vertical boundaries marking stimulus transitions. The second row shows the individual spikes, the third the smoothed firing rate, and the bottom row the monkey’s behavior. The animal was taught to press a lever when it saw either one or the other image, but not both. The cell responded only weakly to either the sunburst design or to its optical superposition with the image of a monkey’s face. During binocular rivalry (gray zone), the monkey’s perception vacillated back and forth between seeing the face (“Face”) and seeing the bursting sun (“Pattern”). Perception of the face was consistently accompanied (and preceded) by a strong increase in firing rate. From N. Logothetis (private communication) as modified by Koch (2004).

In a related perceptual phenomenon, flash suppression, the percept associated with an image projected into one eye is suppressed by flashing another image into the other eye (while the original image remains). Its methodological advantage over binocular rivalry is that the timing of the perceptual transition is determined by an external trigger rather than by an internal event. The majority of responsive cells in inferior temporal cortex and in the superior temporal sulcus follow the monkey’s behavior, and therefore its percept. That is, when the animal perceives a cell’s preferred stimulus, the neuron fires; when the stimulus is present on the retina but is perceptually suppressed, the cell falls silent, even though legions of V1 neurons fire vigorously to the same stimulus (Sheinberg & Logothetis, 1997). Single neuron recordings in the medial temporal lobe of epileptic patients during flash suppression likewise demonstrate abolition of their responses when their preferred stimulus is present on the retina but not consciously seen by the patient (Kreiman, Fried, & Koch, 2002).

A large number of fMRI experiments have exploited binocular rivalry and related illusions to identify the hemodynamic activity underlying visual consciousness in humans. They demonstrate quite conclusively that BOLD activity in the upper stages of the ventral pathway (e.g., the fusiform face area and the parahippocampal place area) follows the percept and not the retinal stimulus (Rees & Frith, 2007).

There is a lively debate about the extent to which neurons in primary visual cortex simply encode the visual stimulus or are directly responsible for expressing the subject’s conscious percept. That is, is V1 part of the NCC (Crick & Koch, 1995)? It is clear that retinal neurons are not part of the NCC for visual experiences. Although retinal activity is often correlated with visual experience, the spiking of retinal ganglion cells frequently fails to match what is seen: there are no photoreceptors at the blind spot, yet no hole in the field of view is apparent; in dreams, vivid imagery occurs despite closed eyes; and so on. A number of compelling observations link perception with fMRI BOLD activity in human V1 and even LGN (Lee, Blake, & Heeger, 2005; Tong, Nakayama, Vaughan, & Kanwisher, 1998). These data are at odds with single neuron recordings from the monkey. One plausible explanation is that V1 neurons receive feedback signals during conscious perception that are not, however, sufficient to drive the cells to spike (Maier et al., 2008).

Haynes and Rees (2005) exploited multivariate decoding techniques to read out perceptually suppressed information (the orientation of a masked stimulus) from V1 BOLD activity, even though the stimulus was so efficiently masked that subjects performed at chance when trying to guess its orientation. That is, although subjects gave no behavioral indication that they saw the orientation of the stimulus, its orientation could be predicted on a single-trial basis at better than chance from the V1 (but not from the V2 or V3) BOLD signal. This finding supports the hypothesis that not all information present in V1 is accessible to visual consciousness.
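The logic of such multivariate decoding can be sketched on synthetic data. The example below is a hypothetical illustration, not the analysis pipeline of Haynes and Rees: it assumes that each simulated voxel carries a weak, fixed orientation bias buried in noise, and it uses a nearest-centroid classifier with leave-one-out cross-validation as a simple stand-in for the linear pattern classifiers used in fMRI studies. All names and parameter values (trial count, voxel count, signal strength) are made up for illustration.

```python
import random
import statistics


def simulate_trials(n_trials, n_voxels, signal, noise_sd=1.0, seed=0):
    """Synthetic voxel patterns for two grating orientations (labels 0 and 1).

    Each hypothetical voxel has a fixed, weak orientation preference (+1 or -1);
    'signal' scales how strongly that preference appears in its response.
    """
    rng = random.Random(seed)
    prefs = [rng.choice((-1.0, 1.0)) for _ in range(n_voxels)]
    trials = []
    for t in range(n_trials):
        label = t % 2  # alternate the two orientations
        sign = 1.0 if label == 1 else -1.0
        pattern = [signal * sign * p + rng.gauss(0.0, noise_sd) for p in prefs]
        trials.append((pattern, label))
    return trials


def centroid(patterns):
    # Voxel-wise mean across the given trials.
    return [statistics.fmean(vals) for vals in zip(*patterns)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loo_accuracy(trials):
    """Leave-one-out accuracy of a nearest-centroid classifier."""
    correct = 0
    for i, (pattern, label) in enumerate(trials):
        train = [tr for j, tr in enumerate(trials) if j != i]
        c0 = centroid([p for p, lab in train if lab == 0])
        c1 = centroid([p for p, lab in train if lab == 1])
        guess = 0 if sq_dist(pattern, c0) <= sq_dist(pattern, c1) else 1
        correct += int(guess == label)
    return correct / len(trials)


if __name__ == "__main__":
    trials = simulate_trials(n_trials=80, n_voxels=100, signal=0.3)
    print(f"decoding accuracy: {loo_accuracy(trials):.2f} (chance = 0.50)")
```

Because every voxel contributes a little, the pooled pattern can be decoded well above chance even though each voxel on its own is only weakly informative. This is the sense in which a classifier can recover an orientation that, in the experiment, the observer could not report.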

What all of these experiments demonstrate is that the neuronal basis of consciously experienced perceptions can be identified and studied using standard neuroscience tools.

Perceptual Puzzles of Contemporary Interest

The attributes of even simple percepts vary along a continuum. For instance, a patch of color has a variable brightness and hue, just as a simple tone has an associated loudness and pitch. Is it possible, however, that each particular consciously experienced percept is all-or-none? Might a pure tone of a particular pitch and loudness be experienced as an atom of perception, either heard or not, rather than gradually emerging from the noisy background? The perception of the world around us would then be a superposition of many elementary, binary percepts (Sergent & Dehaene, 2004).

Is perception continuous, like a river, or does it consist of a series of discontinuous batches, rather like the discrete frames in a movie (Purves, Paydarfar, & Andrews, 1996; VanRullen & Koch, 2003a)? In cinematographic vision (Sacks, 2004), a rare form of visual migraine, the subject sees the movement of objects as fractured in time, as a succession of different configurations and positions without any movement in between. The hypothesis that visual perception is quantized in discrete batches of variable duration, most often related to EEG rhythms in various frequency ranges (from theta to beta), is an old one. This idea is being revisited in light of discrepancies in the timing of perceptual events within and across different sensory modalities. For instance, even though a change in the color of an object occurs simultaneously with a change in its direction of motion, the two changes may not be perceived as simultaneous (Bartels & Zeki, 2006; Stetson, Cui, Montague, & Eagleman, 2006).
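If perception did sample the world in discrete batches, it should be prone to the same temporal aliasing as film, which is why the wagon-wheel illusion is invoked in this debate (Purves, Paydarfar, & Andrews, 1996). The sketch below computes, under simplifying assumptions, how a spoked wheel would appear when sampled at a fixed frame rate: the spokes are assumed identical, and the observer is assumed to match successive frames by the smallest rotation consistent with them. The function name and parameter values are illustrative only.

```python
def apparent_step_deg(rev_per_s: float, n_spokes: int, frames_per_s: float) -> float:
    """Apparent rotation per frame (degrees) of a spoked wheel seen in discrete frames.

    Identical spokes make rotations that differ by one spoke spacing look the same,
    so the true per-frame rotation is wrapped into [-spacing/2, spacing/2).
    Positive = forward, negative = apparently backward, 0 = apparently still.
    """
    spacing = 360.0 / n_spokes
    true_step = 360.0 * rev_per_s / frames_per_s
    return (true_step + spacing / 2.0) % spacing - spacing / 2.0


if __name__ == "__main__":
    # Hypothetical 8-spoke wheel sampled at 24 frames per second.
    for speed in (1.0, 2.5, 3.0):
        step = apparent_step_deg(speed, n_spokes=8, frames_per_s=24.0)
        print(f"{speed} rev/s -> apparent {step:+.1f} deg/frame")
```

With these numbers, a wheel turning forward at 2.5 rev/s appears to creep backward by 7.5° per frame, and at 3 rev/s it appears to stand still, even though it rotates steadily in one direction.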

What is the relationship between endogenous, top-down attention and consciousness? Although the two are frequently coextensive (subjects are usually conscious of what they attend to), there is considerable evidence that they are distinct neurobiological processes (Koch & Tsuchiya, 2007). This question is receiving renewed “attention” due to the development of a powerful visual masking technique that can suppress stimuli for minutes on end (continuous flash suppression; Tsuchiya & Koch, 2005). Indeed, it has been shown that attention can be allocated to a perceptually invisible stimulus (Naccache, Blandin, & Dehaene, 2002; for a review of many of these experiments, see van Boxtel, Tsuchiya, & Koch, 2010a). That is, subjects can pay attention to something without seeing what they are paying attention to! In other experiments, visual attention and visual consciousness have opposing effects on afterimage duration (van Boxtel, Tsuchiya, & Koch, 2010b), and Watanabe et al. (2011) dissociated selective visual attention and consciousness, as assayed via stimulus visibility, in human primary visual cortex. Whether or not subjects see what they are looking at makes very little difference to the hemodynamic signal in V1, while attention strongly modulates the BOLD response. When exploring the neural basis of these processes, it is therefore critical not to confound consciousness with attention. Whether the converse also occurs (being conscious of something without attending to it) remains an open question.

Forward Versus Feedback Projections

Many actions in response to sensory inputs are fast, transient, limited in their behavioral repertoire, and unconscious (Milner & Goodale, 1995). Part of the habit system that controls automatic behavior (Shiffrin & Schneider, 1977), these behaviors can be thought of as cortical reflexes. Such rapid and stereotyped sensory-motor actions have been called zombie behaviors (Koch & Crick, 2001). Examples include eye and hand movements; limb adjustments; keyboard typing; riding a bicycle; executing well-rehearsed sequences in tennis, soccer, or any other athletic activity; and so on. Such automatic behaviors are complemented by a nonhabit, slower, all-purpose conscious mode that deals with more complex aspects of the sensory input (or a reflection of these, as in imagery) and takes time to decide on appropriate responses. A conscious mode is needed because otherwise a vast number of different zombie modes would be required to react to unusual events. The conscious system may interfere somewhat with the concurrent zombie systems (Logan & Crump, 2009): focusing consciousness on a complex, rapid, and highly trained sensory-motor task, such as dribbling a soccer ball or typing on a keyboard, can disrupt its smooth execution, something well known to athletes and their trainers. Having both a zombie mode that responds in a well-rehearsed and stereotyped manner and a slower system that allows time for thinking and planning more complex behavior is a great evolutionary discovery. This latter aspect, planning, may be one of the principal functions of consciousness.

It seems possible that visual zombie modes in the cortex mainly use the dorsal stream in the parietal region (Milner & Goodale, 1995). However, parietal activity can affect consciousness by producing attentional effects on the ventral stream, at least under some circumstances. The basis of this inference is clinical case studies and fMRI experiments in normal subjects (Corbetta & Shulman, 2002). The conscious mode for vision depends largely on the early visual areas (beyond V1) and especially on the ventral “what” stream.

Seemingly complex visual processing (such as detecting animals in natural, cluttered images) can be accomplished by cortex within 130–150 msec (Thorpe, Fize, & Marlot, 1996; VanRullen & Koch, 2003b), arguably too fast for conscious perception to be involved. It is plausible that such behaviors are mediated by a purely feed-forward wave of spiking activity that passes from the retina through V1, into V4, IT, and prefrontal cortex, until it affects motor neurons in the spinal cord that control the finger press (as in a typical laboratory experiment). The hypothesis that the basic processing of information is feed-forward is supported most directly by the short times required for a selective response to appear in IT cells (Perrett et al., 1992). Indeed, Hung, Kreiman, Poggio, and DiCarlo (2005) were able to decode the category, identity, and even position of a single image flashed onto the retina of the fixating animal from the spiking activity of a couple of hundred neurons in monkey IT, using intervals as short as 12.5 msec beginning about 100 msec after image onset. Coupled with a suitable motor output, such a feed-forward network implements a zombie behavior in the Koch and Crick (2001) sense, rapidly and efficiently subserving one task (here, distinguishing animal from nonanimal pictures) in the absence of any conscious experience.

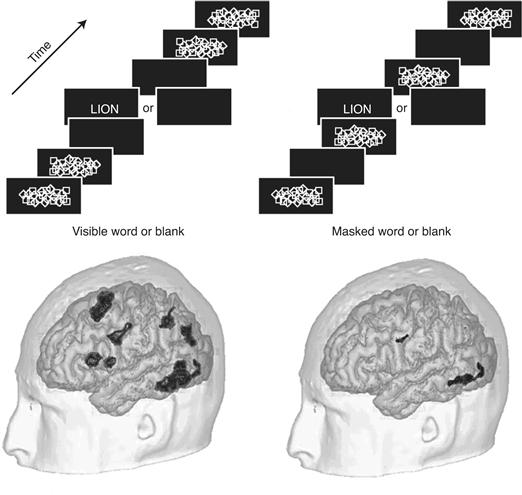

Conscious perception is thought to require more sustained, reverberatory neural activity, most likely via global feedback from frontal regions of neocortex back to sensory cortical areas at the back of the brain (Crick & Koch, 1995; Lamme & Roelfsema, 2000; Fahrenfort, Scholte, & Lamme, 2008). Such feedback loops would explain why, in backward masking, a second stimulus flashed 80–100 msec after the onset of a first image can still interfere with (mask) the percept of the first image. On this view, the reverberatory activity builds up over time until it exceeds a critical threshold. At this point, the sustained neural activity rapidly propagates to parietal, prefrontal, and anterior cingulate cortical regions, and to the thalamus, claustrum, and related structures that support short-term memory, multimodal integration, planning, speech, and other processes intimately related to consciousness. Dehaene et al. (2001) contrasted brain activity during presentation of flashed, masked words with the activity evoked when the words were not masked (Fig. 51.5). BOLD activity in the fusiform (visual) cortex and in a spatially extended network of midline prefrontal and inferior parietal cortices tracked conscious perception.
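The threshold-and-ignition account, and why a late mask can still abolish a percept, can be illustrated with a single self-exciting population in a toy firing-rate model. This is a hypothetical sketch, not a model from the studies cited: the steep sigmoid makes the loop bistable (an unstable point at an activity of 0.5 separates an extinguished state from a self-sustaining one), the mask is assumed simply to switch off the feed-forward drive, and all parameter values and time units are arbitrary.

```python
import math


def sigmoid(x, threshold=0.5, slope=0.05):
    """Steep sigmoidal rate function; its steepness makes the recurrent loop bistable."""
    return 1.0 / (1.0 + math.exp(-(x - threshold) / slope))


def simulate(soa_ms, drive=0.4, w=1.0, tau_ms=20.0, dt_ms=1.0, total_ms=400.0):
    """Toy reverberatory loop: one population excites itself with weight w.

    Feed-forward drive is on from t = 0 until the mask arrives at t = soa_ms;
    here the mask simply removes the drive (a hypothetical simplification).
    Returns the final activity (near 0 = extinguished, near 1 = self-sustaining).
    """
    r = 0.0
    for k in range(int(total_ms / dt_ms)):
        t = k * dt_ms
        inp = drive if t < soa_ms else 0.0
        # Euler step of tau * dr/dt = -r + f(w * r + input)
        r += dt_ms / tau_ms * (-r + sigmoid(w * r + inp))
    return r


if __name__ == "__main__":
    for soa in (5, 10, 20, 40, 80, 200):
        state = "sustained" if simulate(soa) > 0.5 else "extinguished"
        print(f"mask at {soa:>3} (model ms): {state}")
```

In this caricature, only stimuli left unmasked long enough for recurrent activity to cross the unstable point go on to sustain themselves after the mask; earlier masks leave activity to decay back to baseline.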

Figure 51.5 The Effect of Visual Masking. The brain’s response to seen and unseen words. Volunteers looked at a stream of briefly flashed images. No words were seen in the right stream, since each word was preceded and followed by a slide covered by random symbols that perceptually obscured the letters. This had a dramatic effect on the cortical fMRI response (the activity following the image sequences with words was compared to that following the sequences with blanks). Both seen and masked words activated regions in the left ventral pathway, but with very different amplitudes. Conscious perception triggered additional widespread activation in left parietal and prefrontal cortices. Modified from Dehaene et al. (2001).

This is the hypothesis at the heart of the global workspace model of consciousness (Baars, 1989; Dehaene & Changeux, 2011). Sensory information is processed in an array of feed-forward structures, such as primary sensory cortices. If the signal is strong enough, it enters a global neuronal workspace located in prefrontal cortical structures. From there it is widely broadcast by long-range cortico-cortical projections and placed into context, for instance by accessing memory and planning systems. This interpretation feeds back to the sensory representation in visual cortex. Competition inside the global neuronal workspace prevents more than one or a very small number of percepts from being simultaneously and actively represented.
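The competition step can be caricatured with MAXNET, a classic winner-take-all network from the artificial neural network literature in which every unit inhibits all the others by a fixed weight. It is used here only as an illustrative stand-in, not as the Dehaene and Changeux workspace model, and the input strengths are hypothetical. The standard condition for MAXNET to converge to a single winner among n units is an inhibition weight eps with 0 < eps < 1/n.

```python
def maxnet(strengths, eps=None, max_iter=1000):
    """Iterative winner-take-all (MAXNET).

    On each step, every unit is reduced by eps times the summed activity of the
    other units and clipped at zero. Returns (index of the surviving unit, or
    None if no unique winner emerged, e.g. after a tie; final activities).
    """
    n = len(strengths)
    if eps is None:
        eps = 0.9 / n  # MAXNET requires 0 < eps < 1/n
    x = [max(0.0, float(s)) for s in strengths]
    for _ in range(max_iter):
        active = [i for i, v in enumerate(x) if v > 0.0]
        if len(active) <= 1:
            return (active[0] if active else None), x
        total = sum(x)
        x = [max(0.0, v - eps * (total - v)) for v in x]
    return None, x


if __name__ == "__main__":
    # Hypothetical strengths of four candidate percepts competing for the workspace.
    winner, final = maxnet([0.9, 0.7, 0.4, 0.2])
    print(winner, [round(v, 3) for v in final])
```

Mutual inhibition drives every weaker candidate to zero within a few iterations, leaving one active representation, which is the qualitative behavior the workspace account attributes to competition.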

In summary, while rapid but transient neural activity in the thalamocortical system can mediate complex behavior without conscious sensation, it is surmised that consciousness requires sustained, well-organized neural activity that depends on integration across cortex.

One hitherto neglected structure in this regard is the claustrum. It is a thin (ca. 1 mm) sheet of neurons buried in white matter underneath the insular cortex and above the putamen, extending all the way into the temporal, parietal, and frontal lobes. It projects to, and receives input from, possibly all cortical regions. Crick and Koch (2005) surmised that the claustrum is critical to the integration of information underlying consciousness, akin to a conductor of the cortical orchestra.

An Information-Theoretical Theory of Consciousness

At present, it is not known to what extent animals whose nervous systems have an architecture considerably different from the mammalian neocortex are conscious. Furthermore, whether artificial systems that behave with considerable intelligence, such as computers, robots, or the World Wide Web as a whole, are or can become conscious (as widely assumed in science fiction; e.g., the paranoid computer HAL in the film 2001: A Space Odyssey) remains speculative (Koch & Tononi, 2011). What is needed is a theory of consciousness that explains in quantitative terms what type of systems, with what architecture, can possess conscious states.

Progress in the study of the NCC on the one hand, and of the neural correlates of nonconscious habit or zombie behaviors on the other, will hopefully lead to a better understanding of what distinguishes neural structures or processes that are associated with consciousness from those that are not. Yet such an opportunistic, data-driven approach will not by itself lead to an understanding of why certain structures and processes have a privileged relationship with subjective experience. For example, why is it that neurons in corticothalamic circuits are essential for conscious experience, whereas cerebellar neurons, despite their huge numbers, are not? And what is it about cortical zombie systems that makes them unsuitable to yield subjective experience? Or why does consciousness wane during slow-wave sleep early in the night, despite levels of neural firing in the thalamocortical system that are comparable to those in wakefulness?

Information theory may provide such a tool, one that establishes at a fundamental level what consciousness is, how it can be measured, and what requisites a physical system must satisfy in order to generate it (Chalmers, 1996; Tononi & Edelman, 1998).

One promising candidate for such a theoretical framework is the information integration theory of consciousness (Tononi, 2008). It posits that consciousness is extraordinarily informative: any one particular conscious state rules out a huge number of alternative experiences. Classically, the reduction of uncertainty among alternatives constitutes information. For example, when a subject consciously experiences reading this particular phrase, a huge number of other possible experiences are ruled out (consider all the phrases that could have been written in this space, in all possible fonts, ink colors, and sizes; think of the same phrases spoken aloud, or read and spoken, and so on). Thus, every experience represents one particular conscious state out of a huge repertoire of possible conscious states.
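The notion of information as reduction of uncertainty can be made concrete with Shannon entropy. The sketch below assumes equally likely alternatives purely for illustration and computes how many bits are gained when one state is singled out from a repertoire. In the spirit of the comparison Tononi (2008) draws between a photodiode and a human observer, a two-state device reporting "light" gains one bit, whereas a system with a hypothetical repertoire of 1,024 equiprobable states gains ten bits by entering any one of them.

```python
import math


def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p) in bits; zero-probability states are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gained(prior, posterior):
    """Reduction of uncertainty (bits) when the prior repertoire collapses to the posterior."""
    return entropy_bits(prior) - entropy_bits(posterior)


if __name__ == "__main__":
    photodiode = information_gained([0.5, 0.5], [1.0, 0.0])  # light vs. dark
    repertoire = 1024  # hypothetical number of equiprobable states
    rich = information_gained([1.0 / repertoire] * repertoire, [1.0])
    print(f"photodiode: {photodiode:.1f} bit, rich repertoire: {rich:.1f} bits")
```

For N equiprobable alternatives the gain is simply log2 N, so the information carried by a single state grows with the size of the repertoire it is drawn from.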

Furthermore, the information associated with the occurrence of a conscious state is also integrated. Any one experience is an indivisible whole; it cannot be subdivided into components that are experienced independently. Again, this particular phrase cannot be experienced as subdivided into, say, the conscious experience of its nouns independently of its verbs. Similarly, visual shapes cannot be experienced independently of their color, nor can the left half of the visual field be experienced independently of the right half.

Based on these two considerations, the theory claims that a physical system can generate consciousness to the extent that it integrates information. This requires that the system has a large repertoire of available states (information) that cannot be decomposed into a collection of causally independent subsystems (integration).

Importantly, the theory introduces a measure of a system’s capacity to integrate information. This measure, called Φ, is obtained by determining the minimum repertoire of different states that can be produced in one part of the system by perturbations of its other parts (Tononi, 2008). Φ can loosely be thought of as the representational capacity of the system (as in Fig. 51.1). Although Φ is not easy to calculate exactly for realistic systems (Balduzzi & Tononi, 2008), it can be estimated. Using simple computer simulations, it is possible to show that Φ is high for neural architectures that conjoin functional specialization with functional integration, like the mammalian thalamocortical system. Conversely, Φ is low for systems that are made up of small, quasi-independent modules, like the cerebellum, or for networks of randomly or uniformly connected units.
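Computing Φ as defined by Tononi (2008) involves perturbation-based effective information and normalized bipartitions, which is beyond a short example. The sketch below uses a deliberately simplified, hypothetical proxy named `integration_proxy`: with all 2^n current states of n binary units taken as equally likely, it measures how much each side of a bipartition's next state depends on the other side's current state, and takes the minimum over all bipartitions. It captures only one qualitative point from the text, namely that a system which splits into causally independent modules scores zero; it does not reproduce the theory's other predictions.

```python
import itertools
import math
from collections import Counter


def _entropy(samples):
    """Entropy (bits) of a list of equally weighted samples."""
    counts = Counter(samples)
    total = len(samples)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def _mutual_info(pairs):
    """I(U;V) = H(U) + H(V) - H(U,V) for equally weighted (u, v) samples."""
    return (_entropy([u for u, _ in pairs]) + _entropy([v for _, v in pairs])
            - _entropy(pairs))


def _project(state, idx):
    return tuple(state[i] for i in idx)


def integration_proxy(update, n):
    """Hypothetical toy proxy for integration (NOT Tononi's Phi).

    For each bipartition (A, B), sum the information A's current state carries
    about B's next state and vice versa; return the minimum over bipartitions.
    """
    transitions = [(x, update(x)) for x in itertools.product((0, 1), repeat=n)]
    best = math.inf
    for k in range(1, n):
        for a in itertools.combinations(range(n), k):
            if 0 not in a:
                continue  # count each bipartition only once
            b = tuple(i for i in range(n) if i not in a)
            a_to_b = _mutual_info([(_project(x, a), _project(y, b)) for x, y in transitions])
            b_to_a = _mutual_info([(_project(x, b), _project(y, a)) for x, y in transitions])
            best = min(best, a_to_b + b_to_a)
    return best


def two_modules(x):
    # Units 0<->1 and 2<->3 swap states; the two pairs never interact.
    return (x[1], x[0], x[3], x[2])


def ring(x):
    # Each unit copies its left neighbour: unit i takes the state of unit i-1.
    return (x[-1],) + x[:-1]


if __name__ == "__main__":
    print("two independent modules:", integration_proxy(two_modules, 4))
    print("ring:", integration_proxy(ring, 4))
```

For the two decoupled pairs the proxy is zero, because one bipartition separates them completely; for the ring, every cut severs at least one connection in each direction, and the proxy comes out at two bits.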

The notion that consciousness has to do with the brain’s ability to integrate information has been tested directly by transcranial magnetic stimulation (TMS). A coil is placed above the skull, and a brief and intense magnetic field generates a weak electrical current in the gray matter underlying the skull. Massimini et al. (2005) compared multichannel EEG of awake and conscious subjects in response to TMS pulses to the EEG when the same subjects were deeply asleep—a time during which consciousness is much reduced. During quiet wakefulness, an initial response at the stimulation site was followed by a sequence of waves that moved to connected cortical areas several centimeters away. During slow-wave sleep, by contrast, the initial response was stronger but was rapidly extinguished and did not propagate beyond the stimulation site. Thus, the fading of consciousness during certain stages of sleep, as well as during anesthesia (Alkire, Hudetz, & Tononi, 2008) and during loss of consciousness in patients (Rosanova et al., 2012), may be related, as predicted by the theory, to the breakdown of information integration among specialized thalamocortical modules.

Conclusion

Ever since the Greeks first considered the mind-body problem more than two millennia ago, it has been the domain of armchair speculations and esoteric debates with no apparent resolution. Yet many aspects of this ancient set of questions now fall squarely within the domain of science.

In order to advance the resolution of these and similar questions, it will be imperative to record from a large number of neurons simultaneously at many locations throughout the corticothalamic system and related satellites in people or behaving animals, such as monkeys or mice. Progress in understanding the circuitry of consciousness also demands a battery of behaviors (akin to, but different from, the well-known Turing test for intelligence) that the subject (a newborn infant, immobilized patient, monkey, or mouse) has to pass before he, she, or it can be considered to possess some measure of conscious perception. This is not an insurmountable step for mammals such as the monkey or the mouse, which share many behaviors and brain structures with humans. For example, one particular mouse model of contingency awareness (Han et al., 2003) is based on the differential requirement for awareness of trace versus delay associative eyeblink conditioning in humans (Clark & Squire, 1998).

The growing ability of neuroscientists to manipulate genetically identified populations of neurons in a reversible, transient, deliberate, and delicate manner using methods from molecular biology (Gradinaru, Mogri, Thompson, Henderson, & Deisseroth, 2009; Lin et al., 2011) opens the possibility of moving from correlation (observing that a particular conscious state is associated with some neural or hemodynamic activity) to causation. Exploiting these increasingly powerful tools depends on the simultaneous development of appropriate behavioral assays and model organisms amenable to large-scale genomic analysis and manipulation, particularly mice (Lein et al., 2007). For example, if the masking experiment of Dehaene and colleagues (Fig. 51.5) were repeated with appropriately adjusted parameters in the mouse’s visual system, all cortico-cortical feedback could be transiently inactivated to test the extent to which the massive, sustained activity that in humans is associated with conscious perception depends on top-down connections.

It is the combination of such fine-grained neuronal analysis in mice and monkeys, ever more sensitive psychophysical and brain imaging techniques in patients and healthy individuals, and the development of a robust theoretical framework that lends hope to the belief that human ingenuity can ultimately understand, in a rational manner, one of the central mysteries of life.

References

1. Allman JM. Evolving brains. New York: Scientific American Library; 1999.

2. Alkire MT, Hudetz AG, Tononi G. Consciousness and anesthesia. Science. 2008;322:876–880.

3. Baars BJ. A cognitive theory of consciousness. Cambridge, UK: Cambridge University Press; 1989.

4. Balduzzi D, Tononi G. Integrated information in discrete dynamical systems: motivation and theoretical framework. PLoS Computational Biology. 2008;4:e1000091.

5. Bartels A, Zeki S. The temporal order of binding visual attributes. Vision Research. 2006;46:2280–2286.

6. Blake R, Logothetis NK. Visual competition. Nature Reviews Neuroscience. 2002;3:13–21.

7. Bogen JE. On the neurophysiology of consciousness: I. An overview. Consciousness & Cognition. 1995;4:52–62.

8. Boly M, Garrido MI, Gosseries O, et al. Preserved feedforward but impaired top-down processes in the vegetative state. Science. 2011;332:858–862.

9. Chalmers DJ. The conscious mind: In search of a fundamental theory. New York: Oxford University Press; 1996.

10. Chalmers DJ. What is a neural correlate of consciousness? In: Metzinger T, ed. Neural correlates of consciousness: Empirical and conceptual questions. Cambridge: MIT Press; 2000;17–40.

11. Clark RE, Squire LR. Classical conditioning and brain systems: The role of awareness. Science. 1998;280:77–81.

12. Collini E, Wong CY, Wilk KE, Curmi PMG, Brumer P, Scholes GD. Coherently wired light-harvesting in photosynthetic marine algae at ambient temperature. Nature. 2010;463:644–648.

13. Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:201–215.

14. Crick FC, Koch C. Are we aware of neural activity in primary visual cortex? Nature. 1995;375:121–123.

15. Crick FC, Koch C. A framework for consciousness. Nature Neuroscience. 2003;6:119–127.

16. Crick FC, Koch C. What is the function of the claustrum? Philosophical Transactions of the Royal Society of London Series B. 2005;360:1271–1279.

17. Dehaene S, Changeux J-P. Experimental and theoretical approaches to conscious processing. Neuron. 2011;70:200–227.

18. Dehaene S, Naccache L, Cohen L, et al. Cerebral mechanisms of word masking and unconscious repetition priming. Nature Neuroscience. 2001;4:752–758.

19. Desmurget M, Reilly KT, Richard N, Szathmari A, Mottolese C, Sirigu A. Movement intention after parietal cortex stimulations in humans. Science. 2009;324:811–813.

20. Edelman DB, Baars BJ, Seth AK. Identifying hallmarks of consciousness in non-mammalian species. Consciousness & Cognition. 2005;14:169–187.

21. Fahrenfort JJ, Scholte HS, Lamme VAF. The spatiotemporal profile of cortical processing leading up to visual perception. Journal of Vision. 2008;8 12.1–12.

22. Giurfa M, Zhang S, Jenett A, Menzel R, Srinivasan MV. The concepts of “sameness” and “difference” in an insect. Nature. 2001;410:930–933.

23. Gradinaru V, Mogri M, Thompson KR, Henderson JM, Deisseroth K. Optical deconstruction of Parkinsonian neural circuitry. Science. 2009;324:354–359.

24. Haggard P, Eimer M. On the relation between brain potentials and conscious awareness. Experimental Brain Research. 1999;126:128–133.

25. Haggard P. Human volition: Towards a neuroscience of will. Nature Reviews Neuroscience. 2008;9:934–946.

26. Han CJ, O’Tuathaigh CM, van Trigt L, et al. Trace but not delay fear conditioning requires attention and the anterior cingulate cortex. Proceedings of the National Academy of Sciences of the United States of America. 2003;100:13087–13092.

27. Haynes J-D, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nature Neuroscience. 2005;8:686–691.

28. Herculano-Houzel S. The human brain in numbers: A linearly scaled-up primate brain. Frontiers in Human Neuroscience. 2009;3(31):1.

29. Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast readout of object identity from macaque inferior temporal cortex. Science. 2005;310:863–866.

30. Jeon D, Kim S, Chetana M, et al. Observational fear learning involves affective pain system and CaV 1.2 Ca2+ channels in ACC. Nature Neuroscience. 2010;13:482–488.

31. Kim C-Y, Blake R. Psychophysical magic: Rendering the visible “invisible.” Trends in Cognitive Sciences. 2005;9:381–388.

32. Koch C. The quest for consciousness: a neurobiological approach. Denver, CO: Roberts; 2004.

33. Koch C. Consciousness: Confessions of a romantic reductionist. Cambridge, MA: MIT Press; 2012.

34. Koch C, Crick FC. On the zombie within. Nature. 2001;411:893.

35. Koch C, Hepp K. The relation between quantum mechanics and higher brain functions: Lessons from quantum computation and neurobiology. In: Chiao RY, ed. Amazing light: New light on physics, cosmology and consciousness. Cambridge, UK: Cambridge University Press; 2010;584–600.

36. Koch C, Reid RC. Observatories of the mind. Nature. 2012;483:397–398.

37. Koch C, Tononi G. A Turing test for consciousness. Scientific American. 2011;304:44–47.

38. Koch C, Tsuchiya N. Attention and consciousness: Two distinct brain processes. Trends in Cognitive Sciences. 2007;11:16–22.

39. Kreiman G, Fried I, Koch C. Single-neuron correlates of subjective vision in the human medial temporal lobe. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:8378–8383.

40. Lamme VAF, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends in Neurosciences. 2000;23:571–579.

41. Laureys S. The neural correlate of (un)awareness: Lessons from the vegetative state. Trends in Cognitive Sciences. 2005;9:556–559.

42. Lee SH, Blake R, Heeger DJ. Traveling waves of activity in primary visual cortex. Nature Neuroscience. 2005;8:22–23.

43. Lein ES, Hawrylycz MJ, Ao N, et al. Genome-wide atlas of gene expression in the adult mouse brain. Nature. 2007;445:168–176.

44. Lemon RN, Edgley SA. Life without a cerebellum. Brain. 2010;133:649–654.

45. Libet B, Gleason CA, Wright EW, Pearl DK. Time of conscious intention to act in relation to onset of cerebral activity (readiness-potential): The unconscious initiation of a freely voluntary act. Brain. 1983;106:623–642.

46. Lin D, Boyle MP, Dollar P, et al. Functional identification of an aggression locus in the mouse hypothalamus. Nature. 2011;470:221–226.

47. Logan GD, Crump MJC. The left hand doesn’t know what the right hand is doing: The disruptive effects of attention to the hands in skilled typewriting. Psychological Science. 2009;20:1296–1300.

48. Logothetis N. Single units and conscious vision. Philosophical Transactions of the Royal Society of London Series B. 1998;353:1801–1818.

49. Maier A, Wilke M, Aura C, Zhu C, Ye FQ, Leopold DA. Divergence of fMRI and neural signals in V1 during perceptual suppression in the awake monkey. Nature Neuroscience. 2008;11:1193–1200.

50. Massimini M, Ferrarelli F, Huber R, Esser SK, Singh H, Tononi G. Breakdown of cortical effective connectivity during sleep. Science. 2005;309:2228–2232.

51. Milner AD, Goodale MA. The visual brain in action. Oxford: Oxford University Press; 1995.

52. Monti MM, Vanhaudenhuyse A, Coleman MR, et al. Willful modulation of brain activity in disorders of consciousness. New England Journal of Medicine. 2010;362:579–589.

53. Naccache L, Blandin E, Dehaene S. Unconscious masked priming depends on temporal attention. Psychological Science. 2002;13:416–424.

54. Parvizi J, Damasio AR. Consciousness and the brainstem. Cognition. 2001;79:135–160.

55. Purves D, Paydarfar JA, Andrews TJ. The wagon wheel illusion in movies and reality. Proceedings of the National Academy of Sciences of the United States of America. 1996;93:3693–3697.

56. Rees G, Frith C. Methodologies for identifying the neural correlates of consciousness. In: Velmans M, Schneider S, eds. The Blackwell companion to consciousness. Oxford: Blackwell; 2007;553–566.

57. Rosanova M, Gosseries O, Casarotto S, et al. Recovery of cortical effective connectivity and recovery of consciousness in vegetative patients. Brain. 2012;6:1–13.

58. Sacks O. In the river of consciousness. New York Review of Books. 2004;51:41–44.

59. Schiff ND. Recovery of consciousness after brain injury. In: Gazzaniga MS, ed. The cognitive neurosciences. 4th ed. Cambridge, MA: MIT Press; 2010;1123–1136.

60. Searle J. Mind: A brief introduction. New York, NY: Oxford University Press; 2004.

61. Sergent C, Dehaene S. Is consciousness a gradual phenomenon? Evidence for an all-or-none bifurcation during the attentional blink. Psychological Science. 2004;15:720–728.

62. Sheinberg DL, Logothetis NK. The role of temporal cortical areas in perceptual organization. Proceedings of the National Academy of Sciences of the United States of America. 1997;94:3408–3413.

63. Shiffrin R, Schneider W. Controlled and automatic human information processing: II. Perceptual learning, automatic attending and a general theory. Psychological Review. 1977;84(2):127–190.

64. Slater R, Cantarella A, Gallella S, et al. Cortical pain responses in human infants. Journal of Neuroscience. 2006;26:3662–3666.

65. Stetson C, Cui X, Montague PR, Eagleman DM. Motor-sensory recalibration leads to reversal of action and sensation. Neuron. 2006;51:651–659.

66. Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522.

67. Tong F, Nakayama K, Vaughan JT, Kanwisher N. Binocular rivalry and visual awareness in human extrastriate cortex. Neuron. 1998;21:753–759.

68. Tononi G. Consciousness as integrated information: A provisional manifesto. Biological Bulletin. 2008;215:216–242.

69. Tononi G, Edelman GM. Consciousness and complexity. Science. 1998;282:1846–1851.

70. Tsuchiya N, Koch C. Continuous flash suppression reduces negative afterimages. Nature Neuroscience. 2005;8:1096–1101.

71. VanRullen R, Koch C. Is perception discrete or continuous? Trends in Cognitive Sciences. 2003a;7:207–213.

72. VanRullen R, Koch C. Visual selective behavior can be triggered by a feed-forward process. Journal of Cognitive Neuroscience. 2003b;15:209–217.

73. van Boxtel JA, Tsuchiya N, Koch C. Consciousness and attention: On sufficiency and necessity. Frontiers in Consciousness Research. 2010a;1:1–13.

74. van Boxtel JA, Tsuchiya N, Koch C. Opposing effects of attention and consciousness on afterimages. Proceedings of the National Academy of Sciences of the United States of America. 2010b;107:8883–8888.

75. Villablanca JR. Counterpointing the functional role of the forebrain and of the brainstem in the control of the sleep-waking system. Journal of Sleep Research. 2004;13:179–208.

76. Watanabe M, Cheng K, Murayama Y, et al. Attention but not awareness modulates the BOLD signal in the human V1 during binocular suppression. Science. 2011;334:829–831.

77. Wegner DM. The illusion of conscious will. Cambridge, MA: MIT Press; 2003.