P. SCOTT MAKELA AND LAURIE HAYCOCK MAKELA

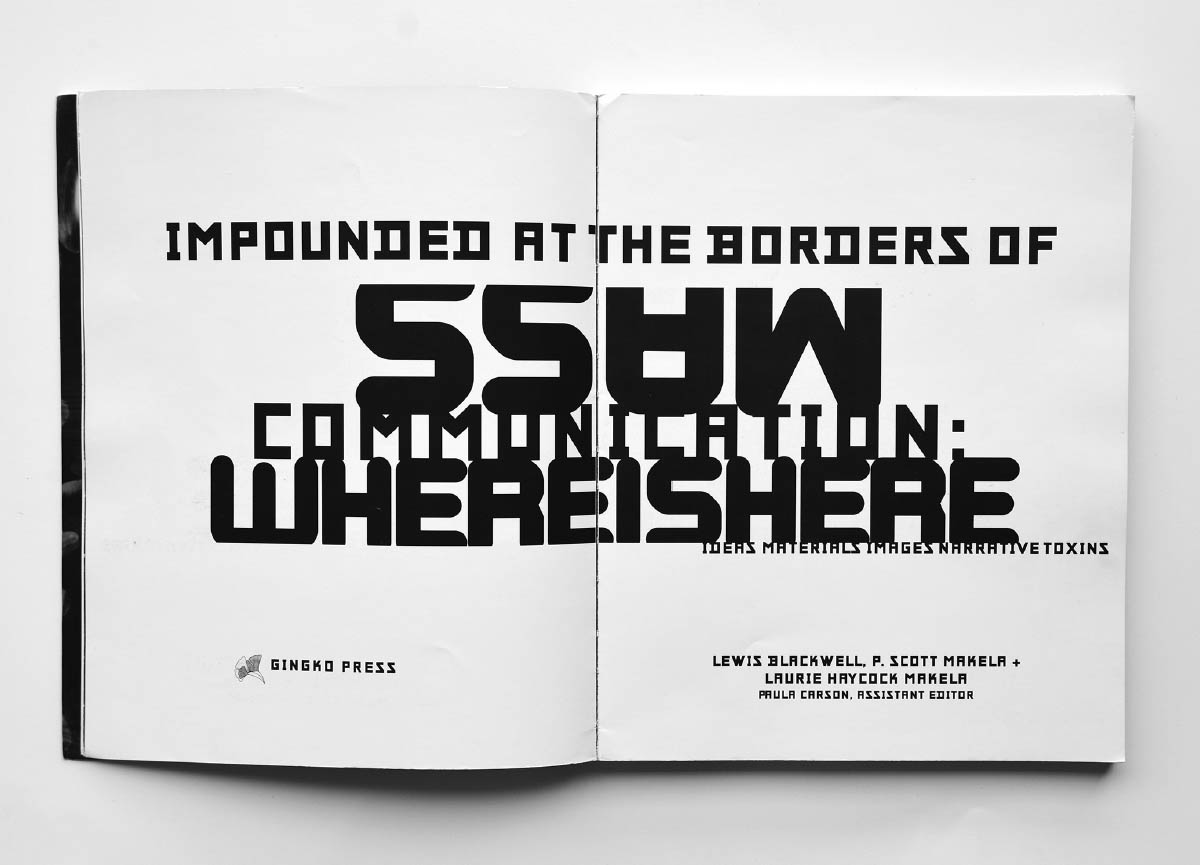

Spread from Whereishere, 1998. A collaboration with writer Lewis Blackwell, Whereishere expressed the multimedia frenzy spreading through the design world in the 1990s. At the time they were writing, the Makelas were resident co-chairs of 2-D design at Cranbrook Academy of Art.