1 Introduction to the Mind

Sixteen years ago, a patient I will call Sam suffered injuries that put him into a coma from which he never woke up. He showed no signs of awareness or ability to communicate. Anyone observing Sam as he lies in the care facility might reasonably conclude that “there’s nobody in there.” But is that true? Sam doesn’t move or respond, but does that mean he doesn’t have a mind? Is it possible that his eyes, which appear to be staring vacantly into space, are perceiving, and that these perceptions might be leading to thoughts?

These are the questions Lorina Naci and coworkers were asking when they placed Sam in a brain scanner that measured increases and decreases in activity throughout his brain and then showed him an eight-minute excerpt from an episode of Alfred Hitchcock Presents called “Bang! You’re Dead.”1 In this TV program, a five-year-old boy finds his uncle’s revolver, partially loads it with bullets, and begins playing with it in his room, pretending to fire it by saying “bang, bang” but not actually pulling the trigger.

The drama escalates when the boy enters a room where his parents are entertaining a number of people. He points the gun at people threateningly, saying “bang, bang,” to pretend he is shooting. Will he pull the trigger and actually shoot? Will someone be killed? These questions race through most viewers’ minds. (There was a reason Hitchcock was called “the master of suspense.”) At the end of the film, the gun goes off, the bullet smashes a mirror, the boy’s father grabs the gun, and the audience breathes a sigh of relief.

When this film was shown to healthy participants while they were in the scanner, changes in their brain activity were linked to what was happening in the movie. Activity was highest at suspenseful moments, such as when the child was pointing the gun at someone. So the viewers’ brains were not just responding to the pattern of light and dark on the screen or to the images themselves; their activity was being driven by the movie’s plot. And—here is the important point—to understand the plot, it was necessary to understand things that were not specifically presented in the movie, like why the gun is important (it is dangerous when loaded); what guns can do (they can kill people); and that the five-year-old boy was probably not aware of the danger that he could accidentally kill someone.

So how did Sam’s brain respond to the movie? Amazingly, his response was the same as that of the healthy participants: activity increased during periods of tension and decreased when danger wasn’t imminent. These findings indicate that Sam was not only seeing the images and hearing the soundtrack but reacting to the movie’s plot. His brain activity therefore indicated that Sam was consciously aware, so “someone was in there.”

This story about Sam, who seems to have a mental life despite outward signs to the contrary, carries an important message as we embark on the adventure of understanding the mind: the mind is hidden from view. Sam is an extreme case, because he can’t move or talk, but you will see that the “normal” mind also holds many secrets. Just as we can’t know exactly what Sam is experiencing, we do not know exactly what other people are experiencing, even though they can tell us about their thoughts and observations.

And although you may be aware of your own thoughts and observations, you are unaware of most of what is happening in your mind. Even as you understand what you are reading right now, hidden processes operating beneath your awareness are making this understanding possible.

As you read this book, you will see how research has revealed many of these secret aspects of the mind’s operation. This is no trivial thing, because your mind not only makes it possible for you to read this text and understand the plots of movies but also is responsible for who you are and what you do. It creates your thoughts, perceptions, desires, emotions, memories, language, and physical actions. It guides your decision-making and problem-solving. It has been compared to a computer, although your “brain computer” outperforms your smartphone, laptop, or even a powerful supercomputer on many tasks. And, of course, your mind does something else that computers can’t even dream of (if only they could dream!): it creates your consciousness of what is out there, what is going on with your body, and, simply, what it is like to be you.

In this book, we will be exploring what the mind is, what it does, and how it does it. The first step is to look at some of the mind’s achievements. As we do, we will see that the mind is not monolithic but multifaceted, involving multiple functions and mechanisms.

The Multifaceted Mind

One way to appreciate the multifaceted nature of the mind is to consider some of the ways “mind” can be used in a sentence. Here are a few possibilities:

1. “He was able to call to mind what he was doing on the day of the accident.” The mind as involved in memory.

2. “If you put your mind to it, I’m sure you can solve the math problem.” The mind as problem solver.

3. “I haven’t made up my mind yet” or “I’m of two minds about that.” The mind as decision maker.

4. “I know you well enough to read your mind” or “You read my mind.” The mind as involved in social interactions.

5. “He is of sound mind and body” or “He is out of his mind.” The mind as involved in mental health.

6. “A mind is a terrible thing to waste.” The mind as valuable.

7. “He has a beautiful mind.” Some people’s minds are especially creative or exemplary.2

These statements tell us some important things about what the mind does. Statements 1, 2, 3, and 4, which highlight the mind’s role in memory, problem-solving, decision-making, and interacting with other people, are related to the following definition of the mind:

The mind creates and controls functions such as perception, attention, memory, emotions, language, deciding, thinking, and reasoning, as well as taking physical actions to achieve our goals.

Statements 5, 6, and 7 emphasize the importance and amazing abilities of the mind. The mind is something related to our health, it is valuable, and we consider some people’s minds extraordinary. But one of the messages of this book is that the idea of the mind as amazing is not reserved for “extraordinary” minds, because even the most routine things—recognizing a person, having a conversation, deciding what food to buy at the supermarket—become amazing when we consider the properties of the mind that enable us to achieve these familiar activities.

What exactly are the properties of the mind? What are its characteristics? How does it operate? A book called The Mind has a lot to explain. Not only does the mind do a lot, but just about everything the mind is asked to achieve turns out to be more complicated than it first appears. Take, for example, opening your eyes and seeing a scene before you. You might think seeing the scene can’t be that complicated, because, after all, light reflected from the scene creates a picture of the scene on the retina that lines the back of your eye. But seeing turns out to be far more complicated than this simple account suggests.

One reason for the difficulty of perception is that the picture of the scene on the retina is ambiguous. When the three-dimensional scene “out there” is represented by a picture on the flat surface of the retina, objects that are at different depths in the scene can appear right next to each other in the picture. If this isn’t obvious from looking out at the scene around you, close one eye, hold up one of your fingers, and place it next to a faraway object in the scene. When this scene-with-superimposed-finger becomes the picture on the retina, the finger and object appear adjacent to each other, even though they are far apart in the scene. The mind solves this “adjacency problem,” plus many others, so that we don’t have to deal with them; we just open our eyes, and we see!

Another example of something we achieve easily in the face of great complexity is understanding language. The stimulus for language, like the stimulus for perception, can be ambiguous, with the same word having different meanings, depending on the contents and structure of the sentence it appears in. Consider the following two sentences:

1. Time flies like an arrow.

2. Fruit flies like a banana.

Many things are going on in these sentences, including different meanings for “flies” (1: flies = moves; 2: flies = a type of bug) and “like” (1: could replace “like” with “similar to”; 2: could replace “like” with “appreciate”). Even simple, apparently straightforward sentences are more complicated than they appear. When we read “a car flew off the bridge,” we are pretty certain that the car doesn’t have wings and isn’t a special kind of flying machine. In fact, we would probably be right to guess that the car was involved in an accident, that it may have been submerged in water or smashed on the ground, that the bridge possibly sustained some damage, and that the driver was in danger of being injured or worse. All these conclusions from a simple six-word sentence!

As we describe how the mind tames the complexities of perception, language, and many other abilities, we will begin to appreciate that the mind is not simply an “identification machine” that catalogs objects and meanings but a sophisticated problem solver that uses mechanisms we are largely unconscious of. We will also see that these hidden mechanisms often use knowledge that we have accumulated about the world. We know, from past experience, that when a car “flies off a bridge,” it is unlikely that it will actually fly.

As we begin exploring the complexity of the mind’s operation, we will be looking at a number of the functions listed in our definition above, rather than attempting to cover them all. We will narrow our focus further by basing our discussion on discoveries made using the scientific approach, in which hypotheses about the mind are tested by making controlled observations or running experiments. The scientific approach to the study of the mind has had an interesting history, which one could describe, with a nod to the Beatles, as “A Long and Winding Road.”

Approaches to the Mind

We begin our discussion of the scientific study of the mind by considering an experiment by Franciscus Donders, which highlights the fact that we can’t directly measure the mind.

Donders’s Pioneering Experiment

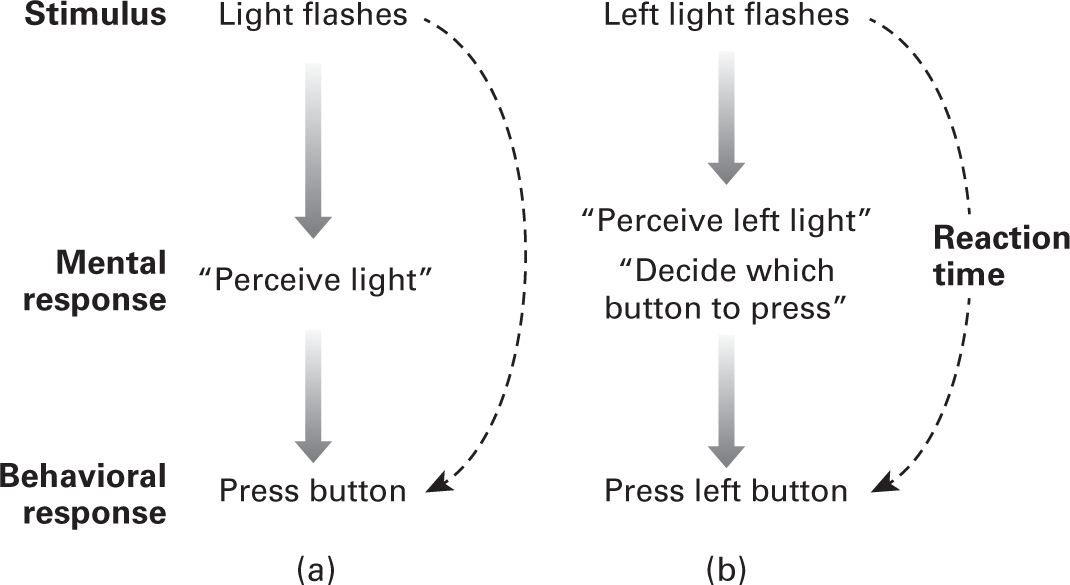

The first attempts to measure the mind scientifically began in the mid-nineteenth century in the laboratory of Franciscus Donders (1818–1889), professor of physiology at the University of Utrecht in the Netherlands.3 Donders’s goal was to determine how long it took for a person to make a decision. He answered this question by measuring reaction times under two conditions. In the first condition, measuring simple reaction time, the participant sees a light flash on a screen and pushes a button as quickly as possible. The reaction time is the time between when the light appears and when the button is pushed. In the second condition, measuring choice reaction time, there are now two lights. The participant’s task is to press the left button if the left light flashes and the right button if the right light flashes. This condition involves the decision: which button should I press?

Donders found that the choice reaction time was about one-tenth of a second longer than the simple reaction time, and so concluded that it takes one-tenth of a second to make a decision in this situation. But the real importance of this experiment is in Donders’s reasoning, which we can appreciate by considering the diagrams in figure 1.1.

Figure 1.1. Sequence of events between presentation of the stimulus and the behavioral response in Donders’s experiments: (a) simple reaction time task; and (b) choice reaction time task. The dashed lines indicate the reaction times Donders measured—the time between the light flash and pressing the button. Note that the mental responses were not measured. Inferences about the mental response were made based on the reaction time measurements.

In both the simple condition (fig. 1.1a) and the choice condition (fig. 1.1b), the time between the stimulus (lights flashing) and the behavioral response (pushing a button) is measured. The mental response (seeing the light; deciding which button to push) is not measured. The fact that it took participants one-tenth of a second longer to respond in the choice reaction time condition led Donders to infer that this was the extra “mental time” that it took to make a decision.

Think about Donders’s method. To determine how long it took to make a decision, he assumed that the additional mental activity in the choice task involved deciding which light had flashed and which button to push. He did not actually observe people making these decisions, so he inferred that these invisible decisions were what caused the slower response.
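Donders’s subtractive reasoning can be written as a short calculation. The sketch below is only an illustration: the reaction times are hypothetical values chosen to reproduce the roughly one-tenth-of-a-second difference described above, not Donders’s actual data, and the function name `inferred_decision_time` is our own.

```python
from statistics import mean

# Hypothetical reaction times in seconds (illustrative values, not Donders's data).
simple_trials = [0.19, 0.21, 0.20, 0.20]   # one light, one button
choice_trials = [0.29, 0.31, 0.30, 0.30]   # two lights, two buttons


def inferred_decision_time(simple_rts, choice_rts):
    """Infer the duration of an unobserved mental stage by subtraction.

    Assumes, as Donders did, that the choice task includes every stage of
    the simple task plus one extra stage: deciding which button to press.
    Only the reaction times are measured; the decision itself is inferred.
    """
    return mean(choice_rts) - mean(simple_rts)


decision = inferred_decision_time(simple_trials, choice_trials)
print(f"Inferred decision time: {decision:.2f} s")  # Inferred decision time: 0.10 s
```

The subtraction is only as trustworthy as its assumption: if adding a second light also changed the other stages (for example, how quickly the light is seen), the difference would no longer be a pure measure of decision time.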

Donders was joined in the late nineteenth century by other researchers of the mind. The German psychologist Hermann Ebbinghaus (1850–1909) studied memory by determining how accurately lists of nonsense syllables, like IUL, ZRT, or FXP, could be remembered after different delays.4 The data he collected enabled him to determine “forgetting curves,” which plotted the decrease in the number of syllables remembered as a function of the time after they had been presented. Ebbinghaus’s results were important because they were among the first demonstrations that characteristics of a mental function (in this case, remembering) could be plotted on a graph.

In the late nineteenth century, the study of the mind was off to a promising start, but just as momentum was building, events occurred that caused the study of the mind to come to a screeching halt. One of the events was Wilhelm Wundt’s (1832–1920) founding of the first laboratory of experimental psychology at the University of Leipzig in 1879. Wundt’s contributions, treating psychology as a science and establishing psychology as a separate field, were extremely important. But his preferred method, analytic introspection, contributed to the abandonment of the study of the mind in the early twentieth century.

Analytic introspection was a procedure in which a participant was asked to describe his or her experience. For example, in one experiment, Wundt asked participants to describe their experience of hearing a five-tone chord played on the piano. One of the questions Wundt hoped to answer was whether his participants were able to hear each of the individual notes that made up the chord.

Although “self-report” data of this kind were to reappear in psychology laboratories more than one hundred years later, the results from Wundt’s lab turned out to be highly variable from participant to participant. This variability bothered John B. Watson (1878–1958), who in 1900 was a graduate student in the psychology department at the University of Chicago. He also did not like that researchers had no way to check the accuracy of the participants’ description of their experience. Watson decided, therefore, that some changes were in order if psychology was going to be considered scientific, and he proceeded, with great enthusiasm, to banish the study of the mind from psychology.

The Study of the Mind Is Suspended by Behaviorism

Progress on studying the mind was put on hold in the early 1900s by Watson’s founding of behaviorism. The flavor of behaviorism is captured in the following quote from Watson’s 1913 paper “Psychology as the Behaviorist Views It”:

Psychology as the Behaviorist views it is a purely objective, experimental branch of natural science. Its theoretical goal is the prediction and control of behavior. Introspection forms no essential part of its methods, nor is the scientific value of its data dependent upon the readiness with which they lend themselves to interpretation in terms of consciousness. … What we need to do is start work upon psychology making behavior, not consciousness, the objective point of our attack.5

In this passage, Watson rejects introspection as a method and proclaims that observable behavior, not events occurring in the mind (which involves unobservable processes such as thinking, emotions, and reasoning), should be psychology’s main topic of study. To emphasize his rejection of the mind as the topic of study, he further stated that “psychology … need no longer delude itself into thinking that it is making mental states the object of observation” (163).

In other words, Watson restricted psychology to behavioral data and rejected the idea of going beyond those data to draw conclusions about unobservable mental events. Watson’s most famous research paper described his “Little Albert” experiment, in which Watson and Rosalie Rayner6 used the classical conditioning procedure pioneered by Ivan Pavlov.7 In experiments begun in the 1890s, Pavlov paired food and a bell to cause dogs to salivate when they later heard the bell. Watson paired a loud noise with a white rat, which Albert had previously liked, to cause Albert to become afraid of the rat. Conditioning, according to Watson, provided explanations for many human behaviors, without having to make inferences about what is going on in the mind.

As behaviorism became the dominant force in American psychology, psychologists’ attention shifted from asking “What does behavior tell us about the mind?” to asking “How do people and animals react to stimuli?”

Later, B. F. Skinner (1904–1990) developed a procedure for studying behavior called operant conditioning, in which animals such as rats and pigeons pressed a bar or pecked a key to receive food rewards. Using this procedure, Skinner was able to demonstrate relationships between “schedules of reinforcement” (how often and in what pattern rewards were dispensed when the animals responded) and the animals’ behavior. For example, if a rat is rewarded every time it presses the bar, its rate and pattern of bar pressing will be different from when it is rewarded every fifth time it presses the bar.8
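The difference between rewarding every press and rewarding every fifth press is a simple counting rule, usually called a fixed-ratio schedule. The sketch below uses a hypothetical helper, `rewarded_presses`, and models only which presses earn food; it says nothing about how the animal’s rate and pattern of pressing change, which is what Skinner actually measured.

```python
def rewarded_presses(total_presses, ratio):
    """Return the (1-based) press numbers that earn food on a fixed-ratio schedule.

    ratio=1 rewards every press (continuous reinforcement);
    ratio=5 rewards every fifth press.
    """
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return [press for press in range(1, total_presses + 1) if press % ratio == 0]


print(rewarded_presses(10, 1))  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
print(rewarded_presses(10, 5))  # [5, 10]
```

Other schedules (rewarding after varying numbers of presses, or after a period of time) would replace the `press % ratio == 0` test with a different rule.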

The beauty of Skinner’s system was that it was objective and therefore “scientific.” Operant conditioning also led to practical applications for humans such as “behavior therapy,” in which the therapist applies Skinner’s reward principles to change a patient’s unwanted behaviors. However, beginning in the mid-1950s, changes were occurring in both psychology departments and popular culture that began the resurrection of the study of the mind.

A Paradigm Shift toward the Study of the Mind

Even as behaviorism dominated psychology in the 1950s, there were stirrings indicating that a paradigm shift was about to occur. A paradigm is a system of concepts and experimental procedures that dominates a science at a particular time, and a paradigm shift is a change from one paradigm to another.9

An example of a paradigm shift in science is the shift from classical physics (associated with the work of Isaac Newton in the seventeenth century and of other researchers in the eighteenth and nineteenth centuries) to modern physics (associated with Einstein’s theory of relativity and the development, by others, of quantum theory) that occurred at the beginning of the twentieth century. The paradigm shift in psychology was the shift from behaviorism, which focused solely on observable behavior, to cognitive psychology, which took the giant step of using observable behavior to make inferences about the operation of the mind.

An event that played an important role in triggering the shift from behaviorism to cognitive psychology occurred in 1954, when IBM introduced a commercially available computer. These computers were still extremely large compared to the laptops of today, but they found their way into university research laboratories, where they were used both to analyze data and, most important for our purposes, to suggest a new way of thinking about the mind.

One of the characteristics of computers that captured the attention of psychologists was that computers processed information in stages, as illustrated in figure 1.2a. In this diagram, information is first received by an “input processor.” It is then stored in a “memory unit” before it is processed by an “arithmetic unit,” which then creates the computer’s output. Using this stage approach as their inspiration, some psychologists proposed the information-processing approach to studying the mind. According to the information-processing approach, the operation of the mind can be described as a sequence of mental operations.

Figure 1.2. (a) Simplified computer flow diagram; (b) Broadbent’s flow diagram of the mind.

The diagram in figure 1.2b is an example of an early flow diagram of the mind, created by the British psychologist Donald Broadbent in 1958.10 This diagram was inspired by experiments designed to test people’s ability to focus on one message when other messages are presented at the same time, as when you talk with a friend at a party while ignoring all the other conversations around you. This situation was studied in the laboratory by Colin Cherry,11 who presented participants with two auditory messages, one to the left ear and one to the right ear, and told them to focus their attention on one of the messages (the attended message) and to ignore the other one (the unattended message). For example, the participant might be told to attend to the left-ear message, which began with “Sam was looking forward to seeing his family over vacation …,” while simultaneously receiving, but not attending to, the right-ear message, “The mind is, according to some people, what makes us human …”

When people focused on the attended message, they could clearly hear it, and while they could tell that the unattended message was present, they were unaware of its contents. The flow diagram in figure 1.2b represents Broadbent’s idea of how this process might be occurring in the mind. The “input” represents many messages, as might be present at a party. The messages enter the “filter,” which lets through what your friend is saying while filtering out all the other conversations. Your friend’s message then enters the “detector,” and you hear what she is saying.
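Broadbent’s flow diagram can be read as a pipeline of processing stages. The sketch below is a toy rendering of only the three stages described here (input, filter, detector), under the simplifying assumption that the filter selects messages by the channel they arrive on, such as the left or right ear in Cherry’s experiment. The class and function names are hypothetical, and the sketch is not meant to capture every detail of Broadbent’s model.

```python
from dataclasses import dataclass


@dataclass
class Message:
    channel: str   # where the message arrives, e.g. "left ear" or "right ear"
    content: str   # what the message says


def filter_stage(messages, attended_channel):
    """Pass only messages on the attended channel; block the rest.

    The number of blocked messages is returned too, reflecting that listeners
    could tell an unattended message was present without knowing its contents.
    """
    passed = [m for m in messages if m.channel == attended_channel]
    return passed, len(messages) - len(passed)


def detector_stage(passed):
    """Process the meaning of whatever got through the filter."""
    return [m.content for m in passed]


def broadbent_model(messages, attended_channel):
    """Input -> filter -> detector, one stage after another."""
    passed, blocked = filter_stage(messages, attended_channel)
    return detector_stage(passed), blocked


inputs = [
    Message("left ear", "Sam was looking forward to seeing his family over vacation"),
    Message("right ear", "The mind is, according to some people, what makes us human"),
]
heard, ignored = broadbent_model(inputs, attended_channel="left ear")
print(heard)    # ['Sam was looking forward to seeing his family over vacation']
print(ignored)  # 1
```

Writing each stage as a separate function mirrors the stage-by-stage logic of the flow diagram: a proposed change to one stage (for example, a filter that lets some meaning through) can be tested without rewriting the others.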

Broadbent’s flow diagram provided a way to analyze the operation of the mind in terms of a sequence of processing stages and proposed a model that could be tested by further experiments. But Cherry and Broadbent were not the only researchers pursuing new ways of studying the mind. At about the same time, John McCarthy, a young professor of mathematics at Dartmouth College, had an idea. Would it be possible, McCarthy wondered, to program computers to mimic the operation of the human mind? To address this question, McCarthy organized a conference at Dartmouth in the summer of 1956 to provide a forum for researchers to discuss ways that computers could be programmed to carry out intelligent behavior. The title of the conference, “Summer Research Project on Artificial Intelligence,” was the first use of the term “artificial intelligence.” McCarthy defined the artificial intelligence approach as “making a machine behave in ways that would be called intelligent if a human were so behaving.”12

Researchers from a number of different disciplines (psychologists, mathematicians, computer scientists, linguists, and experts in information theory) attended the conference, which spanned ten weeks. Two participants, Herbert Simon and Allen Newell from the Carnegie Institute of Technology, demonstrated a computer program called the Logic Theorist at the end of the conference. What they demonstrated was revolutionary, because the Logic Theorist program was able to create proofs of mathematical theorems that involved principles of logic. This program, although primitive compared to modern artificial intelligence programs, created a real “thinking machine” because it did more than simply process numbers; it used humanlike reasoning processes to solve problems.

Shortly after the Dartmouth conference, in September of the same year, another pivotal conference was held, the “Massachusetts Institute of Technology Symposium on Information Theory.” This conference provided another opportunity for Newell and Simon to demonstrate their Logic Theorist program, and the attendees also heard George Miller, a Harvard psychologist, present a version of his paper “The Magical Number Seven, Plus or Minus Two,” which had just been published.13 In that paper, Miller presented the idea that our ability to process information has certain limits—that the information processing of the human mind is limited to about seven items (for example, the length of a telephone number not including area code).

The events I have described (Cherry’s experiment, Broadbent’s filter model, and the two conferences in 1956) represented the beginning of the paradigm shift in psychology that has been called the cognitive revolution. It is worth noting, however, that the shift from behaviorism to the cognitive approach, which was indeed revolutionary, occurred over a period of time. The scientists attending the conferences in 1956 had no idea that these conferences would, years later, be seen as historic events in the birth of a new way of thinking about the mind or that historians of science would someday call 1956 “the birthday of cognitive science.”14

Ironically, another development that opened the mind as a topic of study was the publication, in 1957, of a book by B. F. Skinner titled Verbal Behavior.15 In this book, Skinner argued that children learn language through operant conditioning. According to this idea, children imitate speech that they hear, and repeat correct speech because it is rewarded. But in 1959, Noam Chomsky, a linguist at MIT, published a scathing review of Skinner’s book, in which Chomsky pointed out that children say many sentences that have never been rewarded by parents (“I hate you, Mommy,” for example), and that during the normal course of language development, they go through a stage in which they use incorrect grammar, such as “the boy hitted the ball,” even though this incorrect grammar may never have been reinforced.16

Chomsky saw language development as being determined not by imitation or reinforcement but by an inborn biological program that holds across cultures. Chomsky’s idea that language is a product of the way the mind is constructed, rather than a result of reinforcement, led psychologists to reconsider the idea that language and other complex behaviors, such as problem-solving and reasoning, can be explained by operant conditioning. Instead they began to realize that to understand complex cognitive behaviors, it is necessary not only to measure observable behavior but also to consider what this behavior tells us about how the mind works.

As more psychologists became interested in studying the mind, Ulrich Neisser published the first textbook titled Cognitive Psychology in 1967,17 and psychologists studying the mind began calling themselves “cognitive psychologists.” More flow diagrams followed, describing processes ranging from memory to language to problem-solving in terms of information processing, and as researchers embraced the information-processing approach, with its commitment to discovering the internal mechanisms of the mind, behaviorism began fading into the background.18

Meanwhile in Popular Culture …

The cognitive revolution was, for our purposes, the major psychological event of the 1950s and 1960s, because it paved the way for the reentry of the study of the mind into psychology. But during that period, another revolution was taking place: the sixties counterculture revolution. On the one hand, this revolution, in which some of the participants were hippies getting high during the Summer of Love in San Francisco, would seem unrelated to research being carried out in psychology laboratories. However, as chronicled in Adam Smith’s book Powers of Mind, the sixties revolution and the cognitive revolution had an important thing in common: they were both concerned with the mind.19

To appreciate why the events of the 1960s were called a revolution, let’s consider what was happening in society in the previous decade. In 1953, Dwight D. Eisenhower was president of the United States, and families were pursuing the American Dream of home ownership in newly developing suburban communities, which were accompanied by the development of shopping centers and fast-food restaurants like McDonald’s, which began its nationwide expansion in the mid-1950s. Emblematic of the 1950s was the TV sitcom Leave It to Beaver, which premiered in 1957 and ran for six seasons. This sitcom featured the Cleaver family, the wholesome “ideal family” of 1950s and 1960s television, in which dad Ward Cleaver arrives home from work and is greeted by mom June Cleaver, who has spent her day cleaning, cooking, and tending to the needs of their two sons.

But the idealized Cleaver family was not to last as a symbol of American normalcy. The United States entered the Vietnam War in the early 1960s, leading to widespread protests. Timothy Leary, then a lecturer at Harvard, began experimenting with hallucinogenic drugs such as lysergic acid diethylamide (LSD) and later preached his credo “Turn on, tune in, drop out.” Meanwhile, marijuana use was becoming widespread among young people, both those who identified with the hippie counterculture and those who were simply members of the younger generation, four hundred thousand of whom showed up at the drug-drenched Woodstock music festival in the summer of 1969.

These events are relevant to the study of the mind because one of the themes of the 1960s counterculture was “mind expansion.” Drugs were one way of expanding the mind, and although many used drugs just for “fun,” others saw drugs as an entryway to discovering higher processes of the mind.20 At the same time, scientists were studying LSD to determine how it works and how it could be used therapeutically.21 This research halted when psychedelics were made illegal in the United States in the late 1960s. But recently, researchers have begun applying twenty-first-century techniques to determine connections between drug experiences and physiological events in the brain.22

Another popular movement associated with the mind was the “human potential movement,” headquartered at the Esalen Institute. Founded in 1962 on a campus in Big Sur, California, perched high above the Pacific Ocean 160 miles south of San Francisco, Esalen featured self-awareness seminars, lectures, and activities such as yoga and meditation. Esalen, which still exists today, spawned many similar institutions across the country.

Particularly important among the activities nurtured at Esalen was meditation. Although many Americans in the sixties considered meditation “exotic” or “far out,” today it is widely practiced, and its mechanisms and benefits have been studied in thousands of research studies.23

These stories about the sixties—the cognitive revolution, which conceptualized the mind as an information processor; the popularization of psychoactive drugs; and the human potential movement, which included meditation practice—each involved the mind in some way. They also have something else in common: they involve phenomena that are created by the brain. “But, of course,” you might say, “isn’t everything we experience created by the brain?” My answer to this question, as you might expect, is “yes.” But before we begin considering research on the brain, let’s consider the idea, proposed by some, that the answer is “no.”

Mind-Brain Skepticism

The philosophical standard-bearer for the idea of a separation between mind and brain is René Descartes (1596–1650). Descartes’s position on the relation between mind and brain is called Cartesian dualism. He held that the mind and the brain are made up of different “substances.” He identified the pineal gland, a small structure near the center of the brain, as the place where mind and brain interact, but he provided no details about how this interaction might occur. His reasoning that the mind and the brain are two distinct substances appears in his Discourse on the Method:24

- I can pretend that I have no body or that there is no world out there.

- But because I can think, I can’t pretend that I don’t exist.

From this second idea comes the famous pronouncement Cogito ergo sum, “I think, therefore I am.” Descartes goes on to say that he is “a substance whose essence or nature is simply to think, and which does not require any place, or depend on any material thing, in order to exist” (6:32–33). The key thought here is that thinking does not depend on a “material thing,” and because the brain is a material thing, Descartes concluded that the thinking mind does not depend on the brain.

God and spirituality played a central role in Descartes’s philosophy, so it should not surprise us that he points to God as the source of both mind and body. Other spiritually centered positions have also subscribed to the separateness of mind and brain. For example, Geshe Kelsang Gyatso,25 a contemporary Buddhist monk, states: “Our brain is not our mind. The brain is simply a part of our body that, for example, can be photographed, whereas our mind cannot.” Another spiritualist approach that denies the necessity of the brain is taken by Deepak Chopra, a follower of Vedantic Hindu philosophy and a widely read author.26 He does not deny some role for the brain but emphasizes that because biological research has left many questions about the mind unanswered, we need to look beyond the brain to understand the mind, to properties of the “conscious universe.” Chopra’s reasoning, which is not easy to follow, leads him to conclude that everything in the universe is conscious—not just organisms with brains. (In chapter 2, we will see that consciousness is classified as a product of the mind, so when Chopra talks about consciousness, he is essentially referring to the mind.)

Another way the mind and brain have been seen as separate derives from descriptions of out-of-body experiences (OBEs). An OBE occurs when a person seems to be awake and feels that his or her self, or center of experience, is located outside of his or her body. An OBE is sometimes accompanied by autoscopy, in which the person sees his or her own body from this outside vantage point.27 Here is an example of one person’s OBE:

I was in bed and about to fall asleep when I had the distinct impression that “I” was at the ceiling level looking down at my body in the bed. I was very startled and frightened; immediately afterwards I felt that I was consciously back in the bed again.28

OBEs have been associated with psychiatric conditions such as schizophrenia, depression, and personality disorder, as well as with neurological conditions such as epilepsy, but they are also reported by about 10 percent of the general public.29

There is no controversy regarding the existence of OBE, but there is controversy regarding its cause. There are two opposing points of view. One view states that OBE represents the projection of a person’s personality into space and is therefore an example of a separation of mind from body. This spiritual explanation of OBE is not, however, accepted by most psychologists, who explain OBE as the result of normal psychological and physiological processes.

Evidence connecting OBEs to brain processes includes the finding that drugs like marijuana and LSD increase the probability of experiencing an OBE, presumably through the drugs’ action on the brain. Dirk De Ridder and coworkers demonstrated the brain-OBE link in a patient who was undergoing treatment for tinnitus (ringing in the ears) that included electrical stimulation of a location in the temporal lobe.30 Unfortunately, this stimulation did not relieve the patient’s tinnitus symptoms. However, it did cause the patient to experience an OBE, and scanning the patient’s brain during the OBE revealed activation in temporal lobe areas involved in the somatosensory system (sensing the body surface) and in the vestibular system (regulating the sense of balance). Findings such as these have led to the hypothesis that OBEs are caused by distorted somatosensory and vestibular processing.31

Sometimes OBE occurs in conjunction with the phenomenon of near-death experience (NDE). This phenomenon has been reported by people, such as cardiac arrest patients, who were near death and then were revived. They typically report experiencing OBE and seeing a tunnel, visions of a brilliant white or gold light, meeting other beings, and seeing their life passing by. The spiritualist explanation for NDE is that the person has left his or her body and experienced a higher spiritual world. This is sometimes called the “afterlife hypothesis.”32

Eben Alexander, a neurosurgeon, had an NDE and wrote a book, Proof of Heaven, about his experience and why it occurred.33 His explanation hinges on his assertion that “during my coma, my brain wasn’t working improperly—it wasn’t working at all.” In other words, according to Alexander, his NDE occurred when his brain was dead. From this he concluded that NDEs show that experience does not depend on the brain, and that, at least in his case, his experience occurred because he was actually in heaven. Others, such as Pim van Lommel in his book Consciousness beyond Life, have used similar reasoning to argue that the brain is not necessary for experience.34 Ironically, the subtitle of Van Lommel’s book is The Science of the Near-Death Experience. And here’s the rub: although both Alexander and Van Lommel are physicians, the “science” they cite is simply inaccurate or speculative.35 A basic question that strikes at the central argument of both books is “Was the brain ‘dead’ when people were having NDEs?” After all, these people lived to tell their stories, which means their brains were alive as they were “coming back.” Serious scientific research on NDEs indicates that patients’ experiences most likely occur as they are slowly regaining consciousness, so the supposedly “spiritual” experiences are due to brain processes occurring as the brain becomes functional again.36

One way to think about NDEs and OBEs is that they are a type of hallucination created by the brain. The neurologist Oliver Sacks, in writing about Alexander’s book, rejects the idea that his vision was “nonphysiological.” In considering hallucinations in general, Sacks says:

Hallucinations, whether revelatory or banal, are not of supernatural origin; they are part of the normal range of human consciousness and experience. This is not to say that they cannot play a part in the spiritual life, or have great meaning for the individual. Yet while it is understandable that one might attribute value, ground beliefs, or construct narratives from them, hallucinations cannot provide evidence for the existence of any metaphysical beings or places. They provide evidence only of the brain’s power to create them.37

The idea that hallucinations are created by the brain is the centerpiece of research I mentioned earlier, which has begun to search for links between physiological changes in the brain caused by drugs like LSD and the drug-induced experiences, which are often described as “spiritual.”38

We have seen that, beginning with Descartes and continuing to the present, some people feel that experience does not depend solely on the brain. But most cognitive neuroscientists reject this idea in favor of the idea that all experience is created by the brain.39 The theme of this book is that although we still have much to learn about the mind, traditional scientific approaches offer the best pathway for figuring it out. It is relevant that in John Brockman’s 2013 book The Mind, which included articles by eighteen leading scientists, sixteen of the eighteen articles specifically mentioned the brain, and the other two described empirically based behavioral research.40 Brockman’s book reflects the preeminence of the scientific, empirical, and often biologically oriented approach to the study of the mind. It also reflects the approach of this book. We will focus on empirical research—with a little speculation thrown in—and will be looking at links between the brain and mind whenever possible.

Mind-Brain Connections

The human mind is a complex phenomenon built on the physical scaffolding of the brain.

—Danielle Bassett and Michael Gazzaniga41

How is our invisible mind created from the physical scaffolding of the brain? Answers to this question began appearing in the 1800s, when the predominant technique for determining mind-brain connections was analyzing the behavior of patients with brain damage. In 1861, Paul Broca (1824–1880) reported his study of a patient who had suffered damage to his frontal lobe and was called “Tan” because that was the only word he could say.

When Broca tested other patients with damage to their frontal lobe, in the area that came to be called Broca’s area,42 he found that their speech was slow and labored and often had jumbled sentence structure. Modern researchers have concluded that damage to Broca’s area causes problems creating meaning based on word order. Another area relevant to speech was identified by Carl Wernicke (1848–1905), who studied patients with damage to an area in their temporal lobe now called Wernicke’s area.43 Patients with damage in this area have difficulty understanding the meanings of words. The classic studies of Broca and Wernicke were precursors to modern research in neuropsychology: the study of the behavior of people with brain damage. Figure 1.3a shows the locations of Broca’s and Wernicke’s areas.

Figure 1.3. Results of some physiological research on the mind. (a) Location of Broca’s and Wernicke’s areas. (b) A neuron and some nerve fibers as visualized by Cajal. (c) Modern records of nerve impulses from a single neuron; the rate of nerve firing increases as stimulus intensity increases, from top to bottom. (d) An electroencephalogram (EEG) recorded with scalp electrodes. (e) fMRI record. Activity is determined for each voxel, a small volume of the cortex, indicated here by small squares. Colors, not shown here, indicate the amount of activity in each voxel.

The next major advance, looking farther “under the hood” of the brain, opened the way for work at the level of the neuron. The Spanish physiologist Santiago Ramón y Cajal (1852–1934), peering through his microscope at exquisitely stained slices of brain tissue, discovered that individual units called neurons were the basic building blocks of the brain (fig. 1.3b). Cajal also concluded that neurons communicate with one another to form neural circuits.44

Cajal’s idea of individual neurons that communicate with other neurons to form neural circuits laid the groundwork for later research on neural communication, which I discuss in more detail in chapter 6. These discoveries earned Cajal the Nobel Prize in 1906, and today he is recognized as “the person who made this cellular study of mental life possible.”45

Cajal succeeded in describing the structure of individual neurons and how they are related to other neurons, and he knew that these neurons transmitted electrical signals. However, although Cajal was able to see individual neurons, it wasn’t yet possible, early in the twentieth century, to measure the electrical signals that traveled down these neurons. Scientists faced two problems: (1) neurons are very small, and (2) so are the electrical signals that travel down the neurons.

In 1906, when Cajal received his Nobel Prize, the technology for measuring small responses in small neurons was not available, but by the 1920s techniques had been developed to isolate single neurons, and a device called the “three-stage amplifier” became available. With the aid of this new technology, the British physiologist Edgar Adrian (1889–1977) founded modern single-neuron electrophysiology—the recording of electrical signals from single neurons—an achievement for which he was awarded the Nobel Prize in 1932.46

Once Adrian had succeeded in recording electrical signals from neurons, the stage was set for research linking brain activity and experience. In an early experiment, Adrian recorded from a neuron receiving impulses from the skin of a frog. He found that applying pressure to the skin generated rapid electrical signals called nerve impulses, and that increasing the pressure increased the rate of nerve firing, that is, the number of nerve impulses traveling down the nerve fiber per second (fig. 1.3c). From this result, Adrian drew a connection between nerve firing and experience. He described this connection in his 1928 book The Basis of Sensation by stating that if nerve impulses “are crowded closely together the sensation is intense, if they are separated by long intervals the sensation is correspondingly feeble.”47 In other words, the rate of the electrical signals represents the intensity of the stimulus, so pressure that generates “crowded” signals feels stronger than pressure that generates signals separated by long intervals.

Determining that faster nerve firing signaled more pressure was the first step toward determining the relation between electrical signals in the brain and experience and led to tens of thousands of papers using the single-neuron recording technique.48

Most of the single-neuron research was done on animals. Hans Berger’s development of the electroencephalogram (EEG) in 1929 made it possible to record electrical signals from humans by placing disc electrodes on the scalp.49 These electrodes record the massed response of many neurons (fig. 1.3d), and the technique led to research relating brain activity in humans to various states of consciousness, which I discuss in chapter 2.

Another technological advance that has furthered our understanding of mind-brain connections is the development of techniques for brain imaging. One of the first brain imaging techniques, positron emission tomography (PET), was introduced in 1975 and has largely been replaced by functional magnetic resonance imaging (fMRI), which was introduced in 1990.50 Functional magnetic resonance imaging is based on the fact that blood flow increases in areas of the brain activated by a cognitive task. Without going into the details, these changes in blood flow, measured in a brain scanner, are converted into images that indicate which areas of the brain become more activated by a particular task, and which areas become less activated (fig. 1.3e).

The introduction of brain imaging brings us back to the idea of paradigm shifts. Thomas Kuhn, who introduced the concept in his 1962 book The Structure of Scientific Revolutions, argued that a scientific revolution involves a shift in the way people think about a subject.51 This was clearly the case in the shift from the behavioral to the cognitive paradigm. But in addition to a shift in thinking, another kind of shift can occur: a shift in how people do science.52 This kind of shift, which depends on new developments in technology, is what happened with the introduction of fMRI. NeuroImage, a journal devoted solely to reporting neuroimaging research, was founded in 1992, followed by Human Brain Mapping in 1993.53 Since the early 1990s, the number of fMRI papers published across all journals has risen steadily; an estimated forty thousand fMRI papers had been published as of 2015.54 As we will see in later chapters, fMRI has played a central role in studying the connection between brain function and experience.

Table 1.1 summarizes some of the physiological methods used to study mind-brain connections. As we will see in later chapters, other imaging techniques have been developed, which provide additional information about brain structure and function. In the next chapter, I focus on consciousness—the subjective inner life of the mind. The focus will be on experience and behavior, but we will return to the brain at the end of the chapter.

Table 1.1. Physiological Methods for Studying Mind and Brain

| Method | Early Work |
|---|---|
| (a) Neuropsychology: study of the effect of brain damage on human behavior | 1861: Broca; 1874: Wernicke. Specific language functions are located in specific brain areas. See fig. 1.3a. |
| (b) Neuroanatomy: determination of structures in the nervous system and their connections | 1894: Ramón y Cajal. Neurons form the transmission system of the brain and are often organized in neural circuits. See fig. 1.3b. |
| (c) Single-cell electrophysiology: recording electrical signals from single neurons with microelectrodes and determining how they fire in response to sensory stimuli (mainly in animals) | 1928: Edgar Adrian. Demonstrated a relation between rate of nerve firing and magnitude of sensory stimuli. See fig. 1.3c. |
| (d) Electroencephalography: recording electrical signals from the surface of the human scalp | 1929: Hans Berger. Brain wave patterns are related to states of consciousness, especially during sleep. See fig. 1.3d. |
| (e) Brain imaging: measuring changes in blood flow in the human brain caused by cognitive activity | 1975: Ter-Pogossian et al., positron emission tomography (PET). 1990: Ogawa et al., functional magnetic resonance imaging (fMRI). Specific areas of the brain are associated with specific functions; even simple functions are also associated with widespread brain activation. See fig. 1.3e. |

Notes

1. L. Naci, R. Cusack, M. Anello, and A. M. Owen, “A Common Neural Code for Similar Conscious Experiences in Different Individuals,” Proceedings of the National Academy of Sciences 111 (2014): 14277–14282.

2. This phrase is taken from the title of the biography A Beautiful Mind, by Sylvia Nasar (1998), about John Forbes Nash, who had schizophrenia but whose brilliance earned him a Nobel Prize in economics.

3. F. C. Donders, “On the Speed of Mental Processes,” in Attention and Performance II: Acta Psychologica, vol. 30, ed. W. G. Koster (1969), 412–431. (Original work published 1868.)

4. H. Ebbinghaus, Memory: A Contribution to Experimental Psychology, trans. H. A. Ruger and C. E. Bussenius (New York: Teachers College, Columbia University, 1913). (Original work, Über das Gedächtnis, published 1885.)

5. J. B. Watson, “Psychology as the Behaviorist Views It,” Psychological Review 20 (1913): 158, 176; emphasis added.

6. J. B. Watson and R. Rayner, “Conditioned Emotional Reactions,” Journal of Experimental Psychology 3 (1920): 1–14.

7. I. Pavlov, Conditioned Reflexes (London: Oxford University Press, 1927).

8. B. F. Skinner, The Behavior of Organisms (New York: Appleton-Century, 1938).

9. F. J. Dyson, “Is Science Mostly Driven by Ideas or by Tools?” Science 338 (2012): 1426–1427; T. Kuhn, The Structure of Scientific Revolutions (Chicago: University of Chicago Press, 1962).

10. D. E. Broadbent, Perception and Communication (London: Pergamon Press, 1958).

11. E. C. Cherry, “Some Experiments on the Recognition of Speech, with One and with Two Ears,” Journal of the Acoustical Society of America 25 (1953): 975–979.

12. J. McCarthy, M. L. Minsky, N. Rochester, and C. E. Shannon, “A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence,” August 31, 1955, http://www-formal.stanford.edu/jmc/history/dartmouth/dartmouth.html.

13. G. A. Miller, “The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information,” Psychological Review 63 (1956): 81–97.

14. W. Bechtel, A. Abrahamsen, and G. Graham, “The Life of Cognitive Science,” in A Companion to Cognitive Science, ed. W. Bechtel and G. Graham, 2–104 (Oxford: Blackwell, 1998); G. A. Miller, “The Cognitive Revolution: A Historical Perspective,” Trends in Cognitive Sciences 7 (2003): 141–144; U. Neisser, “New Vistas in the Study of Memory,” in Remembering Reconsidered: Ecological and Traditional Approaches to the Study of Memory, ed. U. Neisser and E. Winograd, 1–10 (Cambridge: Cambridge University Press, 1988).

15. B. F. Skinner, Verbal Behavior (New York: Appleton-Century-Crofts, 1957).

16. N. Chomsky, “A Review of Skinner’s Verbal Behavior,” Language 35 (1959): 26–58.

17. U. Neisser, Cognitive Psychology (New York: Appleton-Century-Crofts, 1967).

18. Although behaviorism ceased to be the dominant paradigm in psychology, Skinner’s accomplishment should not be diminished. His explanation of how behavior can be controlled by reinforcements is still important, as evidenced by the everyday example of addictive cell phone usage, which is an example of reinforcements influencing behavior. The cognitive revolution pointed out that reinforcement theory not only ignores cognitive processes but also provides only a partial explanation of behavior.

19. A. Smith, Powers of Mind (New York: Simon & Schuster, 1975).

20. C. Castaneda, Journey to Ixtlan: The Lessons of Don Juan (New York: Simon & Schuster, 1972); A. Huxley, The Doors of Perception (London: Chatto & Windus, 1954).

21. J. R. MacLean, D. C. Macdonald, F. Oden, and E. Wilby, “LSD-25 and Mescaline as Therapeutic Adjuvants,” in The Use of LSD in Psychotherapy and Alcoholism, ed. H. Abramson (New York: Bobbs-Merrill, 1967), 407–426.

22. M. Pollan, “Guided Explorations: My Adventures with the Researchers and Renegades Bringing Psychedelics into the Mental Health Mainstream,” New York Times Magazine, May 20, 2018, 32–38, 61–65; M. Pollan, How to Change Your Mind: What the New Science of Psychedelics Teaches Us about Consciousness, Dying, Addiction, Depression, and Transcendence (New York: Penguin, 2018).

23. J. D. Creswell, “Mindfulness Interventions,” Annual Review of Psychology 68 (2017): 491–516; J. P. Pozuelos, B. R. Mean, M. R. Rueda, and P. Malinowski, “Short-Term Mindful Breath Awareness Training Improves Inhibitory Control and Response Monitoring,” Progress in Brain Research 244 (2019): 137–163.

24. R. Descartes, Discourse on the Method (1637).

25. Geshe Kelsang Gyatso, Transform Your Life (Ulverston, UK: Tharpa, 2002).

26. D. Chopra, “A Final Destination: The Human Universe,” address to the Tucson Science of Consciousness Conference, April 27, 2016.

27. O. Blanke, T. Landis, L. Spinelli, and M. Seeck, “Out-of-Body Experience and Autoscopy of Neurological Origin,” Brain 127 (2004): 243–258; S. Bunning and O. Blanke, “The Out-of-Body Experience: Precipitating Factors and Neural Correlates,” in Progress in Brain Research, vol. 150, ed. S. Laureys (New York: Elsevier, 2005).

28. O. Blanke and S. Arzy, “The Out-Of-Body Experience: Disturbed Self-Processing at the Temporo-Parietal Junction,” Neuroscientist 11, no. 1 (2005): 16.

29. S. J. Blackmore, Beyond the Body: An Investigation of Out-of-Body Experiences (London: Heinemann, 1982); H. Irwin, Flight of Mind: A Psychological Study of the Out-of-Body Experience (Metuchen, NJ: Scarecrow Press, 1985).

30. D. De Ridder, K. Van Laere, P. Dupont, T. Menovsky, and P. Van de Heyning, “Visualizing Out-of-Body Experience in the Brain,” New England Journal of Medicine 357 (2007): 1829–1833.

31. Bunning and Blanke, “The Out-of-Body Experience”; De Ridder et al., “Visualizing Out-of-Body Experience”; F. Tong, “Out-of-Body Experiences: From Penfield to Present,” Trends in Cognitive Sciences 7 (2003): 104–106.

32. S. Blackmore, Dying to Live: Near-Death Experiences (New York: Prometheus Books, 1993); B. Greyson and I. Stevenson, “The Phenomenology of Near-Death Experiences,” American Journal of Psychiatry 137 (1980): 1193–1196; K. R. Ring, Life at Death: A Scientific Investigation of the Near-Death Experience (New York: Coward, McCann & Geoghegan, 1980).

33. E. Alexander, Proof of Heaven: A Neurosurgeon’s Journey into the Afterlife (New York: Simon & Schuster, 2013).

34. P. van Lommel, Consciousness beyond Life: The Science of the Near-Death Experience (New York: HarperCollins, 2010).

35. G. M. Woerlee, Mortal Minds: The Biology of Near-Death Experience (New York: Prometheus Books, 2005); G. M. Woerlee, “Review of P. M. Lommel, Consciousness beyond Life” (2019), http://neardth.com/consciousness-beyond-life.php.

36. S. Blackmore, Consciousness, 2nd ed. (New York: Routledge, 2010); L. Dittrich, “The Prophet,” Esquire, July 2, 2013; O. Sacks, “Seeing God in the Third Millennium,” Atlantic, December 12, 2012.

37. Sacks, “Seeing God in the Third Millennium.”

38. R. L. Carhart-Harris, S. Muthukumaraswamy, L. Roseman, et al., “Neural Correlates of the LSD Experience Revealed by Multimodal Neuroimaging,” Proceedings of the National Academy of Sciences 113 (2016): 4853–4858.

39. J. Searle, “Theory of Mind and Darwin’s Legacy,” Proceedings of the National Academy of Sciences 110 (2013): 10343–10348.

40. J. Brockman, ed., The Mind: Leading Scientists Explore the Brain, Memory, Personality, and Happiness (New York: Harper, 2013).

41. D. S. Bassett and M. S. Gazzaniga, “Understanding Complexity in the Human Brain,” Trends in Cognitive Sciences 15 (2011): 200–209.

42. P. Broca, “Sur le volume et la forme du cerveau suivant les individus et suivant les races,” Bulletins de la Société d’Anthropologie de Paris 2 (1861): 139–207, 301–321, 441–446.

43. C. Wernicke, Der aphasische Symptomenkomplex (Breslau: Cohn, 1874).

44. Santiago Ramón y Cajal, “The Structure and Connections of Neurons: Nobel Lecture, December 12, 1906,” in Nobel Lectures, Physiology or Medicine, 1901–1921 (New York: Elsevier Science, 1967), 221–253.

45. E. R. Kandel, In Search of Memory (New York: Norton, 2006), 61.

46. E. D. Adrian, The Basis of Sensation (New York: Norton, 1928), 7; Adrian, The Mechanism of Nervous Action (Philadelphia: University of Pennsylvania Press, 1932).

47. Adrian, The Basis of Sensation, 7; The Mechanism of Nervous Action.

48. D. H. Hubel, “Exploration of the Primary Visual Cortex, 1955–1978,” Nature 299 (1982): 515–524; D. H. Hubel and T. N. Wiesel, “Receptive Fields of Single Neurons in the Cat’s Striate Cortex,” Journal of Physiology 148 (1959): 574–591; D. H. Hubel and T. N. Wiesel, “Receptive Fields and Functional Architecture in Two Non-striate Visual Areas (18 and 19) of the Cat,” Journal of Neurophysiology 28 (1965): 229–289.

49. H. Berger, Psyche (Jena: Gustav Fischer, 1940).

50. S. Ogawa, T. M. Lee, A. R. Kay, and D. W. Tank, “Brain Magnetic Resonance Imaging with Contrast Dependent on Blood Oxygenation,” Proceedings of the National Academy of Sciences 87 (1990): 9868–9872; M. M. Ter-Pogossian, M. E. Phelps, E. J. Hoffman, and N. A. Mullani, “A Positron-Emission Tomograph for Nuclear Imaging (PET),” Radiology 114 (1975): 89–98.

51. T. Kuhn, The Structure of Scientific Revolutions (Chicago: University of Chicago Press, 1962).

52. F. J. Dyson, “Is Science Mostly Driven by Ideas or by Tools?” Science 338 (2012): 1426–1427; P. Galison, Image and Logic (Chicago: University of Chicago Press, 1997).

53. P. T. Fox, “Human Brain Mapping: A Convergence of Disciplines,” Human Brain Mapping 1 (1993): 1–2; A. Toga, “Editorial,” NeuroImage 1 (1992): 1.

54. A. Eklund, T. E. Nichols, and H. Knutsson, “Cluster Failure: Why fMRI Inferences for Spatial Extent Have Inflated False-Positive Rates,” Proceedings of the National Academy of Sciences 113 (2016): 7900–7905.