6

Model Fitting, Reduction, and Coupling

- Introduction

- 6.1 Parameter Estimation

- 6.2 Model Selection

- 6.3 Model Reduction

- 6.4 Coupled Systems and Emergent Behavior

- Exercises

- References

- Further Reading

Introduction

Cells and organisms are incredibly complex, and the only way to understand them is through simplified pictures. Simplicity comes from omitting irrelevant details. For instance, we may picture a pathway in isolation, pretending that its environment is given and constant, or we may neglect microscopic details within a system: Instead of considering all reaction events between single molecules, we average over them and see a smooth behavior of macroscopic concentrations.

Simplified models will be approximations, but approximations are exactly what we are aiming at: models that provide a good level of details and are easy to work with. Keeping in mind that cells are much more complex than our models (and that effective quantities like free energies summarize a lot of microscopic complexity in a single number), we can move between models of different scope whenever necessary. If models turn out to be too simple, we zoom in and consider previously neglected details, or zoom out and include parts of the environment into our model.

Early biochemical models were simple and mostly used for studying general principles, for example, the emergence of oscillations from feedback regulation. With increasing amounts of data, models of metabolism, cell cycle, or signaling pathways have become more complex, more accurate, and more predictive. As more data are becoming available, biochemical models are increasingly used to picture biological reality and specific experimental situations.

Building a useful model is usually a lengthy process. Based on literature studies and collected data, one starts by developing hypotheses about the biological system. Which objects and processes (e.g., substances, reactions, cell compartments) are relevant? Which mathematical framework is appropriate (continuous or discrete, kinetic or stochastic, spatial or nonspatial model)? Sometimes, model structures must meet specific criteria, for example, known robustness properties. The bacterial chemotaxis system, for instance, shows a precise adaptation to external stimuli, and a good model should account for this fact; models that implement perfect adaptation by their network structure will be even more plausible than models that require a fine-tuning of parameters (see Section 10.2).

Modeling often involves several cycles of model generation, fitting, testing, and selection [1,2]. To choose between different model variants, new experiments may be required. In practice, the choice of a model depends on various factors: Does a model reflect the basic biological facts about the system? Is it simple enough to be simulated and fitted? Does it explain known observations and can it predict previously unobserved behavior? Sometimes, a model's structure (e.g., stoichiometric matrix and rate laws) is already known, while the parameter values (e.g., rate constants or external concentrations) still have to be determined. They can be obtained by fitting the model outputs (e.g., concentration time series) to a set of experimental data. If the model is structurally identifiable (a property that will be explained below), if enough data are available, and if the data are free of errors, this procedure should allow us to determine the true parameter set: For all other parameter sets, data and model output would differ. In reality, however, these conditions are rarely met. Therefore, modelers put large efforts in parameter estimation and in developing efficient optimization algorithms.

Without comprehensive data, many parameters remain nonidentifiable and there will be a risk of overfitting. This calls for models that are simple enough to be fully validated by existing data, which limits the degree of details in realistic models. The process of model simplification can be systematized: Different hypotheses and simplifications may lead to different model variants, and statistical methods can help us select based on data. Using optimal experimental design [3], and based on preliminary models, one may devise experiments that are most likely to yield the information needed to select between possible models. This cycle of experiments and modeling enables us to overcome many limitations of one-step model selection. Model selection can be insightful: For instance, the fact that fast equilibrated processes cannot be resolved using data can tell us that also the cellular machinery may take these fast processes “for granted” when controlling processes on slower time scales.

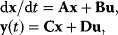

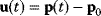

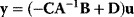

6.1 Parameter Estimation

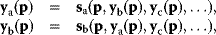

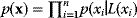

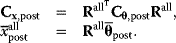

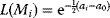

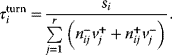

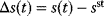

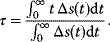

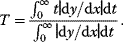

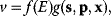

In mathematical models, for example, the kinetic models discussed before, each possible parameter set  is mapped to a set of predicted data values

is mapped to a set of predicted data values  via a function

via a function  . If measurement data are given, we can try to invert this procedure and reconstruct the unknown parameter set

. If measurement data are given, we can try to invert this procedure and reconstruct the unknown parameter set  from the data. There are three possible cases: (i) the parameters are uniquely determined; (ii) the parameters are overdetermined (i.e., no parameter set corresponds to the data) because data are noisy or the model is wrong; (iii) the parameters are underdetermined, that is, several or many parameter sets explain the data equally well.

from the data. There are three possible cases: (i) the parameters are uniquely determined; (ii) the parameters are overdetermined (i.e., no parameter set corresponds to the data) because data are noisy or the model is wrong; (iii) the parameters are underdetermined, that is, several or many parameter sets explain the data equally well.

Case (i) is mainly theoretical; in reality, when simple models are applied to complex phenomena, we do not expect perfect predictions. Cases (ii) and (iii) are what we normally encounter. Pragmatically, it is common to assume that a model is correct and that discrepancies between data and model predictions are caused by measurement errors. This resolves the problem of overdetermined parameters (case (ii)) and allows us to fit model parameters and to compare different models by their data fits. We can use data and statistical methods to obtain parameter estimates that approximate the true parameter values, as well as the uncertainty of the estimates [4]. Underdetermined parameters (case (iii)) can either be accepted as a fact or specific parameters can be chosen by additional regularization criteria. Common modeling tools such as COPASI [5] support parameter estimation.

6.1.1 Regression, Estimators, and Maximal Likelihood

6.1.1.1 Regression

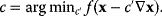

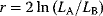

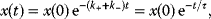

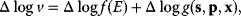

Linear regression is a typical example of parameter estimation. A linear regression problem is shown in Figure 6.1: A number of data points  have to be approximated by a straight line

have to be approximated by a straight line  . If the data points are indeed located exactly on a line, we can choose a parameter vector

. If the data points are indeed located exactly on a line, we can choose a parameter vector  such that

such that  holds for all data points

holds for all data points  . In practice, measured data will scatter, and we search for a regression line as close as possible to the data points. If the deviation between line and data is scored by the sum of squared residuals (SSR),

. In practice, measured data will scatter, and we search for a regression line as close as possible to the data points. If the deviation between line and data is scored by the sum of squared residuals (SSR),  , the regression problem can be seen as a minimization problem for the function

, the regression problem can be seen as a minimization problem for the function  .

.

Figure 6.1 Linear regression. (a) Artificial data  (gray) are generated by computing data points (black) from a model

(gray) are generated by computing data points (black) from a model  (straight line) and adding Gaussian noise. Each possible line is characterized by two parameters, slope

(straight line) and adding Gaussian noise. Each possible line is characterized by two parameters, slope  and offset

and offset  . The aim in linear regression is to reconstruct the unknown parameters from noisy data (in this case, artificial data

. The aim in linear regression is to reconstruct the unknown parameters from noisy data (in this case, artificial data  ). (b) The deviation between a possible line (four lines A, B, C, and D are shown) and data can be measured by the sum of squared residuals. Residuals are shown for line D (dashed lines). (c) Each of the lines A, B, C, and D corresponds to a point

). (b) The deviation between a possible line (four lines A, B, C, and D are shown) and data can be measured by the sum of squared residuals. Residuals are shown for line D (dashed lines). (c) Each of the lines A, B, C, and D corresponds to a point  in parameter space. The SSR as a function

in parameter space. The SSR as a function  in parameter space can be pictured as a landscape (shades of pink; dark pink indicates small SSR, that is, a good fit). Line D minimizes the SSR value. Small SSR values correspond to a large likelihood.

in parameter space can be pictured as a landscape (shades of pink; dark pink indicates small SSR, that is, a good fit). Line D minimizes the SSR value. Small SSR values correspond to a large likelihood.

6.1.1.2 Estimators and Maximal Likelihood

The use of the SSR as a distance measure is justified by statistical arguments, assuming that the data stem from some generative model with unknown parameters and additional random errors. As an example, we consider a curve  with an independent variable

with an independent variable  (e.g., time) and curve parameters

(e.g., time) and curve parameters  . For a number of time points

. For a number of time points  , the model yields the output values

, the model yields the output values  , which can be seen as a vector

, which can be seen as a vector  . By adding random errors

. By adding random errors  , we obtain the noisy data:

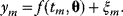

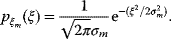

, we obtain the noisy data:

If the errors follow independent Gaussian distributions with mean 0 and variance  , each data point

, each data point  is a Gaussian-distributed random number with mean

is a Gaussian-distributed random number with mean  and variance

and variance  .

.

In parameter estimation, we revert this process: Starting with a model (characterized by a function  and noise variances

and noise variances  ) and a set of noisy data

) and a set of noisy data  (some realization of Eq. (6.1)), we try to infer the unknown parameter set

(some realization of Eq. (6.1)), we try to infer the unknown parameter set  . A function

. A function  mapping each data set

mapping each data set  to an estimated parameter vector

to an estimated parameter vector  is called an estimator.

is called an estimator.

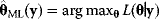

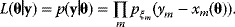

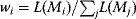

Practical and fairly simple estimators follow from the principle of maximum likelihood. The likelihood of a model is the conditional probability to observe the actually observed data  if the model is correct. For instance, if a model predicts “Tomorrow, the sun will shine with 80% probability,” and sunshine is indeed observed, the model has a likelihood of 0.8. Accordingly, if a model structure is given, each possible parameter set

if the model is correct. For instance, if a model predicts “Tomorrow, the sun will shine with 80% probability,” and sunshine is indeed observed, the model has a likelihood of 0.8. Accordingly, if a model structure is given, each possible parameter set  has a likelihood

has a likelihood  . To compute likelihood values for a biochemical model, we need to specify our assumptions about possible measurement errors. From a generative model like Eq. (6.1), the probability density

. To compute likelihood values for a biochemical model, we need to specify our assumptions about possible measurement errors. From a generative model like Eq. (6.1), the probability density  to observe the data

to observe the data  , given the parameters

, given the parameters  , can be computed. The maximum likelihood estimator

, can be computed. The maximum likelihood estimator

yields the parameter set that would maximize the likelihood given the observed data. If the maximum point is not unique, the model parameters cannot be identified by maximum likelihood estimation.

6.1.1.3 Method of Least Squares

To see how likelihood functions can be computed in practice, we return to the model Eq. (6.1) with additive Gaussian noise. If our model yields a true value  , the observable noisy value

, the observable noisy value  has a probability density

has a probability density  , where

, where  is the probability density of the error term. If the variables

is the probability density of the error term. If the variables  are independently Gaussian-distributed with mean 0 and variance

are independently Gaussian-distributed with mean 0 and variance  , their density reads

, their density reads

Given one data point  , we obtain the likelihood function:

, we obtain the likelihood function:

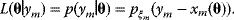

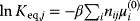

Given all data points and assuming that random errors are independent, the probability to observe the data set  is the product of all probabilities for individual data points. Hence, the likelihood reads

is the product of all probabilities for individual data points. Hence, the likelihood reads

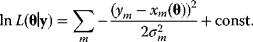

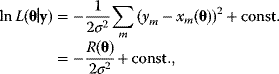

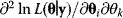

By inserting the Gaussian probability density (6.3) and taking the logarithm, we obtain

If all random errors  have the same variance

have the same variance  , the logarithmic likelihood reads

, the logarithmic likelihood reads

where  is the sum of squared residuals. Thus, maximum likelihood estimation with the error model (6.3) is equivalent to a minimization of weighted squared residuals. This justifies the method of least squares. The same argument holds for data on logarithmic scale. Additive Gaussian errors on logarithmic scale correspond to multiplicative log-normal errors for the original, nonlogarithmic data. In this case, a uniform variance

is the sum of squared residuals. Thus, maximum likelihood estimation with the error model (6.3) is equivalent to a minimization of weighted squared residuals. This justifies the method of least squares. The same argument holds for data on logarithmic scale. Additive Gaussian errors on logarithmic scale correspond to multiplicative log-normal errors for the original, nonlogarithmic data. In this case, a uniform variance  for all data points means that all nonlogarithmic data points have the same range of relative errors. According to the Gauss–Markov theorem, the least-squares estimator is a best linear unbiased estimator if the model is linear and if the errors

for all data points means that all nonlogarithmic data points have the same range of relative errors. According to the Gauss–Markov theorem, the least-squares estimator is a best linear unbiased estimator if the model is linear and if the errors  for different data points are linearly uncorrelated and have the same variance. The error distribution

for different data points are linearly uncorrelated and have the same variance. The error distribution  need not be Gaussian.

need not be Gaussian.

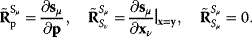

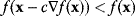

6.1.2 Parameter Identifiability

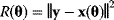

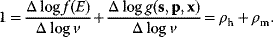

If a likelihood function is seen as a landscape in parameter space, the maximum likelihood estimate  is the maximum point in this landscape. According to Eq. (6.6), this point corresponds to a minimum of the weighted SSR (compare with Figure 6.1). In a single isolated maximum, the logarithmic likelihood function

is the maximum point in this landscape. According to Eq. (6.6), this point corresponds to a minimum of the weighted SSR (compare with Figure 6.1). In a single isolated maximum, the logarithmic likelihood function  has strictly negative curvatures and can be approximated using the local curvature matrix

has strictly negative curvatures and can be approximated using the local curvature matrix  . Directions with weak curvatures correspond to parameter variations that have little effect on the likelihood.

. Directions with weak curvatures correspond to parameter variations that have little effect on the likelihood.

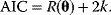

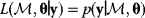

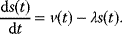

If several parameter sets fit the data equally well, the maximum likelihood criterion does not yield a unique solution, and the estimation problem is underdetermined (Figure 6.2). In particular, the likelihood function may be maximal on an entire curve or surface in parameter space. This can have different reasons: structural or practical nonidentifiability.

Figure 6.2 Identifiability. (a) In identifiable models, the sum of squared residuals (SSR, shown in pink) has a single minimum point (dot). The curvatures (i.e., second derivatives) of the SSR form a matrix. Its eigenvectors point toward directions of maximal (blue) and minimal curvatures (black). (b) In the nonidentifiable model shown, the SSR is minimized on a line in parameter space. A linear combination of parameters (blue arrow) is identifiable from the data, while another one (black arrow) is nonidentifiable, visible from the vanishing curvatures along this direction.

6.1.2.1 Structural Nonidentifiability

If two parameters  and

and  in a model appear only as a product

in a model appear only as a product  , they are underdetermined: For any choice of

, they are underdetermined: For any choice of  , the pair

, the pair  and

and  will yield the same result

will yield the same result  and lead to the same model predictions. Thus, maximum likelihood estimation (which compares model predictions with data) can determine the product

and lead to the same model predictions. Thus, maximum likelihood estimation (which compares model predictions with data) can determine the product  , but not the individual values

, but not the individual values  and

and  . Such models are called structurally nonidentifiable. To resolve this problem, we may replace the product

. Such models are called structurally nonidentifiable. To resolve this problem, we may replace the product  by a new identifiable parameter

by a new identifiable parameter  . Structural nonidentifiability can arise in various ways and may be difficult to detect and resolve.

. Structural nonidentifiability can arise in various ways and may be difficult to detect and resolve.

6.1.2.2 Practical Nonidentifiability

Even structurally identifiable models may be practically nonidentifiable with respect to some parameters if data are insufficient, that is, if too few or the wrong kind of data are given. In particular, if the number of parameters exceeds the number of data points, the parameters cannot be identified. Let us assume that each possible parameter set  would yield a prediction

would yield a prediction  and that the mapping

and that the mapping  is continuous. If the dimensionality of

is continuous. If the dimensionality of  is larger than the dimensionality of

is larger than the dimensionality of  , it is impossible to invert the function

, it is impossible to invert the function  and to reconstruct the parameters from the given data. Rules for the minimum numbers of experimental data needed to reconstruct differential equation models can be found in Ref. [6].

and to reconstruct the parameters from the given data. Rules for the minimum numbers of experimental data needed to reconstruct differential equation models can be found in Ref. [6].

If a model is nonidentifiable, numerical parameter optimization with different starting points will yield different estimates  , all located on the same manifold in parameter space. In the example above, the estimates for

, all located on the same manifold in parameter space. In the example above, the estimates for  and

and  would differ, but they would all satisfy the relation

would differ, but they would all satisfy the relation  with a fixed value for

with a fixed value for  . On logarithmic scale, the estimates would lie on a straight line (provided that all other model parameters are identifiable).

. On logarithmic scale, the estimates would lie on a straight line (provided that all other model parameters are identifiable).

Problems like parameter identification in which the procedure of model simulation is reverted are called inverse problems. If the solution of an inverse problem is not unique, the problem is ill-posed and additional assumptions are required for a unique solution. For instance, we may postulate that the sum of squares of all parameter values should be minimized. Such requirements can help us select a particular solution; the method is called regularization.

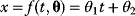

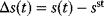

6.1.3 Bootstrapping

From a noisy data set  , we cannot determine the true model parameters

, we cannot determine the true model parameters  ; we only obtain an estimate

; we only obtain an estimate  . Different realizations of the random error

. Different realizations of the random error  lead to different possible sets of noisy data, and thus different estimates

lead to different possible sets of noisy data, and thus different estimates  . Ideally, the mean value

. Ideally, the mean value  of these estimates should yield the true parameter value (in this case, the estimator is called “unbiased”), and their variance should be small. In practice, only a single data set is available, and we obtain a single point estimate

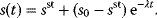

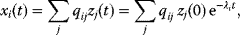

of these estimates should yield the true parameter value (in this case, the estimator is called “unbiased”), and their variance should be small. In practice, only a single data set is available, and we obtain a single point estimate  . Bootstrapping [7] is a way to assess, at least approximately, the statistical distributions of such estimates. First, hypothetical data sets of the same size as the original data set are generated from the original data by resampling with replacement (see Figure 6.3). Then, a parameter estimate

. Bootstrapping [7] is a way to assess, at least approximately, the statistical distributions of such estimates. First, hypothetical data sets of the same size as the original data set are generated from the original data by resampling with replacement (see Figure 6.3). Then, a parameter estimate  is calculated for each of these data sets. The empirical distribution of these estimates is taken as an approximation of the true distribution of

is calculated for each of these data sets. The empirical distribution of these estimates is taken as an approximation of the true distribution of  . The bootstrapping method is asymptotically consistent, that is, the approximation becomes exact as the size of the original data set goes to infinity.

. The bootstrapping method is asymptotically consistent, that is, the approximation becomes exact as the size of the original data set goes to infinity.

Figure 6.3 Bootstrapping with resampled data. (a) A hypothetical data set is generated by sampling values with replacement from the original data. Each resampled data set yields one parameter estimate  . (b) The distribution of parameter estimates obtained from bootstrap samples approximates the true distribution of the estimator

. (b) The distribution of parameter estimates obtained from bootstrap samples approximates the true distribution of the estimator  . For a good approximation, the original data set should be large.

. For a good approximation, the original data set should be large.

6.1.3.1 Cross-Validation

There is a fundamental difference between model fitting and prediction. If a model has been fitted to a given data, we enforce an agreement with exactly these training data, and the model is likely to fit them better than it will fit test data that have not been used during model fitting. The fitted model will fit the data even better than the true model itself – a phenomenon called overfitting. However, overfitted models will predict new data less reliably than the true model, and their parameter values may differ strongly from the true parameter values. Therefore, overfitting should be avoided.

We have seen an example of overfitting in Figure 6.1: The least-squares regression line yields a lower SSR than the true model – because a low SSR is what it is optimized for. The apparent improvement is achieved by fitting the noise, that is, by adjusting the line to the specific realization of random errors in the data. However, this precise fit does not lead to better predictions; here, the true model will be more reliable.

How can we test whether models are overfitted? In theory, we would need new data that have not been used during model fitting. In cross-validation (see Figure 6.4), to mimic this test, an existing data set is split into two parts: a training set for fitting and a test set for evaluating the model predictions. This procedure is repeated many times with different parts of the data used as test sets. From the prediction errors, we can judge how the model predicts new data on average, when fitted to  data points (size of the training set). The average prediction error is an important quality measure and allows us to reject models prone to overfitting. Cross-validation, just like bootstrapping, can be numerically demanding because many estimation runs must be performed.

data points (size of the training set). The average prediction error is an important quality measure and allows us to reject models prone to overfitting. Cross-validation, just like bootstrapping, can be numerically demanding because many estimation runs must be performed.

Figure 6.4 Cross-validation as a way to detect overfitting. (a) In linear regression, a straight line is fitted to data points (gray and red). The fitting error (dotted red line) is the distance between a data point (red) and the corresponding height of the regression line (blue). The regression line is chosen such that fitting errors are minimized. (b) In a leave-one-out cross-validation, we pretend that one point (red) is unknown and attempt to predict it from the model. The regression line is fitted to the remaining (gray) points, and the prediction error for the red data point will be larger than the fitting error shown in part (a). (c) Scheme of leave-one-out cross-validation. The model is fitted to all data points except for one (training set) and the remaining data point (test set) is predicted. This procedure is repeated for each data point to be predicted and yields an estimate of the average prediction error. (d) In  -fold cross-validation, the data are split into

-fold cross-validation, the data are split into  subsets. In every run,

subsets. In every run,  subsets serve as training data, while the remaining subset is used as test data.

subsets serve as training data, while the remaining subset is used as test data.

6.1.4 Bayesian Parameter Estimation

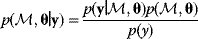

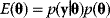

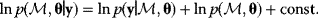

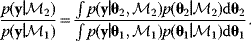

In maximum likelihood estimation, we search for a single parameter set representing the true parameters. Bayesian parameter estimation, an alternative approach, is based on a different premise: The parameter set  is formally treated as a random variable. In this context, randomness describes a subjective uncertainty due to lack of information. Once more data become available, the parameter distribution can be updated and uncertainty will be reduced. By choosing a prior parameter distribution, we state how plausible certain parameter sets appear in advance. Given a parameter set

is formally treated as a random variable. In this context, randomness describes a subjective uncertainty due to lack of information. Once more data become available, the parameter distribution can be updated and uncertainty will be reduced. By choosing a prior parameter distribution, we state how plausible certain parameter sets appear in advance. Given a parameter set  , we assume that a specific data set

, we assume that a specific data set  will be observed with a probability density (likelihood)

will be observed with a probability density (likelihood)  . Hence, parameters and data are described by a joint probability distribution with density

. Hence, parameters and data are described by a joint probability distribution with density  (see Figure 6.5).

(see Figure 6.5).

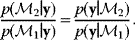

Figure 6.5 Bayesian parameter estimation. (a) In Bayesian estimation, parameters  and data

and data  are described by a joint probability distribution with density

are described by a joint probability distribution with density  . The marginal density

. The marginal density  of the parameters (blue) is called prior, while the conditional density

of the parameters (blue) is called prior, while the conditional density  given a certain data set (magenta) is called posterior. (b) The posterior is narrower than the prior (blue), reflecting the information gained by the data. (c) Prior and likelihood. A variable

given a certain data set (magenta) is called posterior. (b) The posterior is narrower than the prior (blue), reflecting the information gained by the data. (c) Prior and likelihood. A variable  is given by the output

is given by the output  of a generative model (black line) plus Gaussian measurement errors. A given observed value

of a generative model (black line) plus Gaussian measurement errors. A given observed value  gives rise to the likelihood function

gives rise to the likelihood function  in parameter space. (d) The posterior is the product of prior and likelihood function, normalized to a total probability of 1.

in parameter space. (d) The posterior is the product of prior and likelihood function, normalized to a total probability of 1.

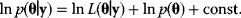

Given a data set  , we can determine the conditional probabilities of θ given

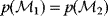

, we can determine the conditional probabilities of θ given  , called posterior probabilities. According to the Bayes formula,

, called posterior probabilities. According to the Bayes formula,

the posterior probability density  is proportional to likelihood and prior density. Since the data

is proportional to likelihood and prior density. Since the data  are given, the denominator

are given, the denominator  is a constant; it is used for normalization only. Bayesian estimation can also be applied recursively, that is, the posterior from one estimation can serve as a prior for another estimation with new data.

is a constant; it is used for normalization only. Bayesian estimation can also be applied recursively, that is, the posterior from one estimation can serve as a prior for another estimation with new data.

Maximum likelihood estimation and Bayesian parameter estimation differ in their practical use and in how probabilities are interpreted. In the former, we ask: Under which hypothesis about the parameters would the data appear most probable? In the latter, we directly ask: How probable does each parameter set appear given the data? Moreover, the aim in Bayesian statistics is not to determine parameters precisely, but to characterize their posterior  (e.g., to compute marginal distributions for individual parameters or probabilities for quantitative predictions). For complicated models, the posterior cannot be computed analytically; instead, it is common to sample parameter sets from the posterior, for instance, by using the Metropolis–Hastings algorithm described below.

(e.g., to compute marginal distributions for individual parameters or probabilities for quantitative predictions). For complicated models, the posterior cannot be computed analytically; instead, it is common to sample parameter sets from the posterior, for instance, by using the Metropolis–Hastings algorithm described below.

Bayesian priors are used to encode general beliefs or previous knowledge about the parameter values. In practice, they can serve as regularization terms that make models identifiable. By taking the logarithm of Eq. (6.8), we obtain the logarithmic posterior:

If the logarithmic likelihood has no unique maximum (as in Figure 6.2b), the model will not be identifiable by maximum likelihood estimation. By adding the logarithmic prior  in Eq. (6.9), we can obtain a unique maximum in the posterior density.

in Eq. (6.9), we can obtain a unique maximum in the posterior density.

6.1.4.1 Bayesian Networks

Bayesian statistics is commonly used in probabilistic reasoning [10,11] to study relationships between uncertain facts. Facts are described by random variables (binary or quantitative), and their (assumed) probabilistic dependencies are represented as a directed acyclic graph  , called Bayesian network [10,12,13]. Vertices

, called Bayesian network [10,12,13]. Vertices  correspond to random variables

correspond to random variables  and edges

and edges  encode conditional dependencies between these variables: A variable, given the variables represented by its parent vertices, is conditionally independent on all other variables. Bayesian networks can also define a joint probability distribution precisely. Each variable

encode conditional dependencies between these variables: A variable, given the variables represented by its parent vertices, is conditionally independent on all other variables. Bayesian networks can also define a joint probability distribution precisely. Each variable  is associated with a conditional probability

is associated with a conditional probability  , where

, where  denotes the parents of variable

denotes the parents of variable  , that is, the set of variables having a direct probabilistic influence on

, that is, the set of variables having a direct probabilistic influence on  . Together, these conditional probabilities define a joint probability distribution via the formula

. Together, these conditional probabilities define a joint probability distribution via the formula  . Notably, the shape of a Bayesian network is not uniquely defined by a dependence structure: Instead, the same probability distribution can be represented by different equivalent Bayesian networks, which cannot be distinguished by observation of the variables

. Notably, the shape of a Bayesian network is not uniquely defined by a dependence structure: Instead, the same probability distribution can be represented by different equivalent Bayesian networks, which cannot be distinguished by observation of the variables  [13].

[13].

Bayesian networks have, for instance, been used to infer gene regulation networks from gene expression data. Variables  belonging to the vertices

belonging to the vertices  are a measure of gene activity, for example, the expression level of a gene or the amount of active protein. The assumption is that statistical information (given the expression of gene

are a measure of gene activity, for example, the expression level of a gene or the amount of active protein. The assumption is that statistical information (given the expression of gene  , the expression of gene

, the expression of gene  is independent of other genes) reflects mechanistic causality (gene product

is independent of other genes) reflects mechanistic causality (gene product  is the only regulator of gene

is the only regulator of gene  ).

).

Generally, Bayesian conditioning can be applied in different ways: to search for networks or network equivalence classes that best explain measured data; to learn the parameters of a given network; or a network can be used to predict data, for example, possible gene expression profiles. In any case, to resolve causal interactions, time series data or data from intervention experiments are needed. In dynamic Bayesian networks, time behavior is modeled explicitly: In this case, the conditional probabilities describe the state of a gene in one moment given the states of genes in the moment before [14].

6.1.5 Probability Distributions for Rate Constants

The values of rate constants, an important requisite for kinetic modeling, can be obtained in various ways: from the literature, from databases like Brenda [15] or Sabio-RK [16], from fits to dynamic data, from optimization for other objectives, or from simple guesses. Based on data, we can obtain parameter distributions. Parameters that have been measured can be described by a log-normal distribution representing the measured value and error bar; if experimental conditions are not exactly comparable, the error bar may be artificially increased. If a parameter has not been measured, one may describe it by a broader distribution. The empirical distribution of Michaelis constants in the Brenda database, which is roughly log-normal, can be used to describe a specific, but unknown Michaelis constant. Such probability distributions can be helpful for both uncertainty analysis and parameter estimation. In maximum likelihood estimation, on can restrict the parameters to biologically plausible values and reduce the search space for parameter fitting. In Bayesian estimation, knowledge encoded in prior distributions is combined with information from the data.

6.1.5.1 Distributions of Enzymatic Rate Constants

If we neglect all possible interdependencies, we can assume separate distributions for all parameters in a kinetic model. According to Bayesian views, a probability distribution reflects what we can know about some quantity, based on data or prior beliefs. The shapes of such distributions may be chosen by the principle of minimal information (see Section 10.1) [17]: For instance, given a known mean value and variance (and no other known characteristics), we should assume a normal distribution. If we apply this principle to logarithmic parameters, the parameters themselves will follow log-normal distributions.

What could constitute meaningful mean values and variances, specifically for rate constants? This depends on what knowledge we intend to represent, namely, values for specific parameters (e.g., the  value of some enzyme, with measurement error) or types of parameters (

value of some enzyme, with measurement error) or types of parameters ( values in general, characterized by median value and spread). In the latter case, we may consult the distributions of measured rate constants, possibly broken down to specific enzyme classes (see Figure 6.6). Thermodynamic and enzyme kinetic parameters can be found in publications or databases like NIST [18], Brenda [15], or Sabio-RK [16]. With more information about model elements, for example, molecule structures, improved parameter estimates can be obtained from molecular modeling or machine learning [19].

values in general, characterized by median value and spread). In the latter case, we may consult the distributions of measured rate constants, possibly broken down to specific enzyme classes (see Figure 6.6). Thermodynamic and enzyme kinetic parameters can be found in publications or databases like NIST [18], Brenda [15], or Sabio-RK [16]. With more information about model elements, for example, molecule structures, improved parameter estimates can be obtained from molecular modeling or machine learning [19].

Figure 6.6 Distribution of enzymatic rate constants from the Brenda database [15]. (a)  values in the Brenda database. (b)

values in the Brenda database. (b)  ratios. (c)

ratios. (c)  values. The values of RuBisCo and some prominent enzymes in E. coli are indicated. From Ref. [20].

values. The values of RuBisCo and some prominent enzymes in E. coli are indicated. From Ref. [20].

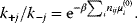

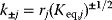

6.1.5.2 Thermodynamic Constraints on Rate Constants

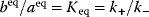

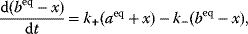

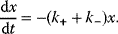

Whenever kinetic constants are fitted, optimized, or sampled, we should ensure that only valid parameter combinations are used. In metabolic networks with mass-action kinetics, for instance, the rate constants  and the equilibrium constant

and the equilibrium constant  in a reaction

in a reaction  are related by

are related by  . The equilibrium constants, in turn, depend on standard chemical potentials

. The equilibrium constants, in turn, depend on standard chemical potentials  via

via  , with

, with  , which yields the condition

, which yields the condition

Thus, a choice of rate constants  will only be feasible if there exists a set of standard chemical potentials

will only be feasible if there exists a set of standard chemical potentials  satisfying Eq. (6.12). If a metabolic network contains loops, it is unlikely that this test will be passed by randomly chosen rate constants.

satisfying Eq. (6.12). If a metabolic network contains loops, it is unlikely that this test will be passed by randomly chosen rate constants.

Instead of excluding parameter sets by hindsight, based on condition (6.12), one may directly construct parameter sets that satisfy it automatically [21,22]. The equation itself shows us how to proceed: The chemical potentials  are calculated or sampled from a Gaussian distribution and used to compute the equilibrium constants. Prefactors

are calculated or sampled from a Gaussian distribution and used to compute the equilibrium constants. Prefactors  are then sampled from a log-normal distribution, and the kinetic constants are set to

are then sampled from a log-normal distribution, and the kinetic constants are set to  . This procedure yields rate constants

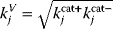

. This procedure yields rate constants  with dependent log-normal distributions, which are feasible by construction. For Michaelis–Menten-like rate laws (e.g., convenience kinetics [23] or the modular rate laws [24]), this works similarly: Velocity constants, defined as the geometric means

with dependent log-normal distributions, which are feasible by construction. For Michaelis–Menten-like rate laws (e.g., convenience kinetics [23] or the modular rate laws [24]), this works similarly: Velocity constants, defined as the geometric means  of

of  values, can be used as independent basic parameters; and feasible

values, can be used as independent basic parameters; and feasible  values, satisfying the Haldane relationships, can be computed from equilibrium constants, Michaelis constants, and velocity constants [24].

values, satisfying the Haldane relationships, can be computed from equilibrium constants, Michaelis constants, and velocity constants [24].

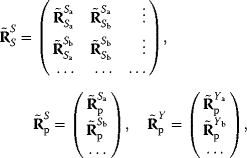

6.1.5.3 Dependence Scheme for Model Parameters

Dependencies between rate constants can be an obstacle in modeling, but they can also be helpful: On the one hand, measured values, when inserted into models, can lead to contradictions. On the other hand, dependencies reduce the number of free parameters, allowing us to infer unknown parameters from the other given parameters. How can we account for parameter dependencies in general, for example, when formulating a joint parameter distribution? Parameters that satisfy linear equality constraints can be expressed in terms of independent basic parameters, and their dependencies can be depicted in a scheme with separate layers for basic and derived parameters (see Figure 6.7). For instance, the vector of logarithmic rate constants in a model (vector  ) can be computed from a set of basic (logarithmic) parameters (in a vector

) can be computed from a set of basic (logarithmic) parameters (in a vector  ) by a linear equation:

) by a linear equation:

The dependence matrix  follows from the structure of the network [21,23,24,25]. In kinetic models with reversible standard rate laws (e.g., convenience kinetics [23] or thermodynamic–kinetic rate laws [22]), this parametrization can be used to guarantee feasible parameter sets. Dependence schemes cover not only rate constants but also other quantities, in particular metabolite concentrations and thermodynamic driving forces (see Figure 6.7).

follows from the structure of the network [21,23,24,25]. In kinetic models with reversible standard rate laws (e.g., convenience kinetics [23] or thermodynamic–kinetic rate laws [22]), this parametrization can be used to guarantee feasible parameter sets. Dependence schemes cover not only rate constants but also other quantities, in particular metabolite concentrations and thermodynamic driving forces (see Figure 6.7).

Figure 6.7 Dependence scheme for rate constants and metabolic state. (a) Dependence scheme for rate constants. To obtain consistent Michaelis–Menten constants and catalytic constants  for a kinetic model, one treats them as part of a larger dependence scheme. In the scheme, basic parameters (top) can be chosen at will and derived parameters (center and bottom) are computed from them by linear equations. Parameters appearing in the model are marked by red frames. (b) Dependence scheme for a kinetic model in a specific metabolic state. The scheme additionally includes metabolite concentrations, chemical potentials, and reaction Gibbs free energies, which predefine the flux directions.

for a kinetic model, one treats them as part of a larger dependence scheme. In the scheme, basic parameters (top) can be chosen at will and derived parameters (center and bottom) are computed from them by linear equations. Parameters appearing in the model are marked by red frames. (b) Dependence scheme for a kinetic model in a specific metabolic state. The scheme additionally includes metabolite concentrations, chemical potentials, and reaction Gibbs free energies, which predefine the flux directions.

A dependence scheme can help us define a joint probability distribution of all parameters. We can describe the basic parameters by independent normal distributions; the dependent parameters (which are linear combinations of them) will then be normally distributed as well. We obtain a multivariate Gaussian distribution for logarithmic parameters, and each parameter, on nonlogarithmic scale, will follow a log-normal distribution. The chosen distribution should match our knowledge about rate constants. But what can we do if standard chemical potentials (as basic parameters) are poorly determined, although equilibrium constants (as dependent parameters) are almost precisely known? To express such knowledge by a joint distribution, we need to assume correlated distributions for the basic parameters. Such distributions can be determined from collected kinetic data by parameter balancing.

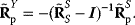

6.1.5.4 Parameter Balancing

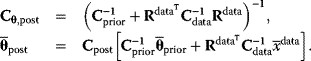

Parameter balancing [9,25] is a method for estimating consistent parameter sets in kinetic models based on kinetic, thermodynamic, and metabolic data as well as prior assumptions and parameter constraints. Based on a dependence scheme, all model parameters are described as linear functions of some basic parameters with known coefficients. Using this as a linear regression model, the basic parameters can be estimated from observed parameter values using Bayesian estimation (Figure 6.8). General expectations about parameter ranges can be formulated in two ways: as prior distributions (for basic parameters) or as pseudo-values (for derived parameters). Pseudo-values appear formally as data points, but represent prior assumptions [25], providing a simple way to define complex correlated priors, for example, priors for standard chemical potentials that entail a limited variation of equilibrium constants.

Figure 6.8 Parameter balancing. (a) Based on measured rate constants, and using a dependence scheme represented by a matrix  , the independent parameters are estimated by Bayesian multivariate regression. (b) From the posterior distribution of the basic parameters, a joint posterior of all model parameters is obtained.

, the independent parameters are estimated by Bayesian multivariate regression. (b) From the posterior distribution of the basic parameters, a joint posterior of all model parameters is obtained.

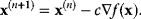

To set up the regression model, we use Eq. (6.13), but consider on the left only parameters for which data exist:  . Inserting this relation into Eq. (6.11) and assuming priors for the basic parameters, we obtain the posterior covariance and mean for the basic parameters:

. Inserting this relation into Eq. (6.11) and assuming priors for the basic parameters, we obtain the posterior covariance and mean for the basic parameters:

Using Eq. (6.13) again, we obtain the posterior distribution for all parameters, characterized by

Parameter balancing does not yield a point estimate, but a multivariate posterior distribution for all model parameters. The posterior describes typical parameter values, uncertainties, and correlations between parameters, based on what is known from data, constraints, and prior expectations. Priors and pseudo-values keep the parameter estimates in meaningful ranges even when few data are available. Parameter balancing can also account for bounds on single parameters and linear inequalities for parameter combinations. With such constraints, the posterior becomes restricted to a region in parameter space.

Model parameters for simulation can be obtained in two ways: by using the posterior mode (i.e., the most probable parameter set) or by sampling them from the posterior distribution. By generating an ensemble of random models and assessing their dynamic properties, we can learn about potential behavior of such models, given all information used during parameter balancing. Furthermore, the posterior can be used as a prior in subsequent Bayesian parameter estimation, for example, when fitting kinetic models to flux and concentration time series [9].

Being based on linear regression and Gaussian distributions, parameter balancing is applicable to large models with various types of parameters. Flux data cannot be directly included because rate laws, due to their mathematical form, do not fit into the dependence scheme. However, if a thermodynamically feasible flux distribution is given, one can impose its signs as constraints on the reaction affinities and obtain solutions in which rate laws and metabolic state match the given fluxes (see Section 6.4) [26].

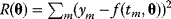

6.1.6 Optimization Methods

Parameter fitting typically entails optimization problems:

In the method of least squares, for instance,  denotes the parameter vector

denotes the parameter vector  and

and  is the sum of squared residuals. The allowed choices of

is the sum of squared residuals. The allowed choices of  may be restricted by constraints such as

may be restricted by constraints such as  . Global and local minima of

. Global and local minima of  are defined as follows. A parameter set

are defined as follows. A parameter set  is a global minimum point if no allowed parameter set

is a global minimum point if no allowed parameter set  has a smaller value for

has a smaller value for  . A parameter set

. A parameter set  is a local minimum point if no other allowed parameter set

is a local minimum point if no other allowed parameter set  in a neighborhood around

in a neighborhood around  has a smaller value. To find such optimal points numerically, algorithms evaluate the objective function

has a smaller value. To find such optimal points numerically, algorithms evaluate the objective function  (and possibly its derivatives) in a series of points

(and possibly its derivatives) in a series of points  , leading to increasingly better points until a chosen convergence criterion is met.

, leading to increasingly better points until a chosen convergence criterion is met.

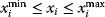

6.1.6.1 Local Optimization

Local optimizers are used to find a local optimum in the vicinity of some starting point. Gradient descent methods are based on the local gradient  , a vector that indicates the direction of the strongest increase of

, a vector that indicates the direction of the strongest increase of  . A sufficiently small step in the opposite direction will lead to lower function values:

. A sufficiently small step in the opposite direction will lead to lower function values:

for sufficiently small coefficients  . In gradient descent methods, we iteratively jump from the current point

. In gradient descent methods, we iteratively jump from the current point  to a new point by

to a new point by

The coefficient  can be adapted in each step, for example, by a numerical line search:

can be adapted in each step, for example, by a numerical line search:

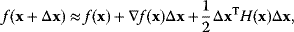

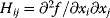

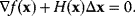

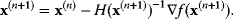

Newton's method is based on a local second-order approximation of the objective function:

with the curvature matrix  (also called Hessian matrix). If we neglect the approximation error in Eq. (6.20), a direct jump

(also called Hessian matrix). If we neglect the approximation error in Eq. (6.20), a direct jump  to an optimum would require that

to an optimum would require that

In the iterative Newton method, we approximate this and jump from the current point  to a new point:

to a new point:

The second term can be multiplied by a relaxation coefficient  : With smaller jumps, the iteration process will converge more stably.

: With smaller jumps, the iteration process will converge more stably.

6.1.6.2 Global Optimization

Theoretically, global optimum points of a function  can be found by scanning the space of

can be found by scanning the space of  values with a very fine grid. However, for a problem with

values with a very fine grid. However, for a problem with  parameters and

parameters and  grid values per parameter, this would require

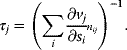

grid values per parameter, this would require  function evaluations, which soon renders the problem intractable. In practice, most global optimization algorithms scan the parameter space by random jumps (Figure 6.9). The aim is to find high-quality solutions (preferably, close to a global optimum) in a short computation time or with an affordable number of function evaluations. To surmount the basins of attraction of local solutions, algorithms should allow for jumps toward worse solutions during the search. Among the many global optimization algorithms [27,28], popular examples are simulated annealing and genetic algorithms.

function evaluations, which soon renders the problem intractable. In practice, most global optimization algorithms scan the parameter space by random jumps (Figure 6.9). The aim is to find high-quality solutions (preferably, close to a global optimum) in a short computation time or with an affordable number of function evaluations. To surmount the basins of attraction of local solutions, algorithms should allow for jumps toward worse solutions during the search. Among the many global optimization algorithms [27,28], popular examples are simulated annealing and genetic algorithms.

Figure 6.9 Global optimization. (a) A function may have different local minima with different function values. (b) The Metropolis–Hastings algorithm samples points  by an iterative jump process: Points are sampled with probabilities

by an iterative jump process: Points are sampled with probabilities  , reflecting their function values

, reflecting their function values  . Tentative jumps toward lower

. Tentative jumps toward lower  values (A → B) are always accepted, while upward jumps (A → C) are accepted only with probability

values (A → B) are always accepted, while upward jumps (A → C) are accepted only with probability  .

.

Aside from purely local and global methods, there are hybrid methods [29,30] that combine the robustness of global optimization algorithms with the efficiency of local methods. Starting from points generated in the global phase, they apply local searches that can accelerate the convergence to optimal solutions up to some orders of magnitude. Hybrid methods usually prefilter their candidate solutions to avoid local searches leading to known optima.

6.1.6.3 Sampling Methods

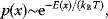

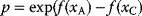

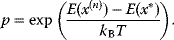

Simulated annealing, a popular optimization method based on sampling, is inspired by statistical thermodynamics. To use a physical analogy, we consider a particle with position  (scalar or vectorial) that moves by stochastic jumps in an energy landscape

(scalar or vectorial) that moves by stochastic jumps in an energy landscape  . In a thermodynamic equilibrium ensemble at temperature

. In a thermodynamic equilibrium ensemble at temperature  , the particle position

, the particle position  follows a Boltzmann distribution with density

follows a Boltzmann distribution with density

where  is Boltzmann's constant (see Section 16.6). In the Metropolis–Hastings algorithm [31,32], this Boltzmann distribution is realized by a jump process (Monte Carlo Markov chain):

is Boltzmann's constant (see Section 16.6). In the Metropolis–Hastings algorithm [31,32], this Boltzmann distribution is realized by a jump process (Monte Carlo Markov chain):

- Given the current position

with energy

with energy  , choose a new potential position

, choose a new potential position  at random.

at random. - If

has an equal or a lower energy

has an equal or a lower energy  , accept the jump and set

, accept the jump and set  .

. - If

has a higher energy

has a higher energy  , accept the jump with probability

, accept the jump with probability

To accept or reject a potential jump, we draw a uniform random number

between 0 and 1; if

between 0 and 1; if  , we accept the jump and set

, we accept the jump and set  ; otherwise, we set

; otherwise, we set  , keeping the particle at its old position.

, keeping the particle at its old position.

Programming the Metropolis–Hastings algorithm is easy. The random rule for jumps in step 1 can be chosen at will, with only one restriction: The transition probability for potential jumps from state  to state

to state  must be the same as for potential jumps from

must be the same as for potential jumps from  to

to  . If this condition does not hold, the unequal transition probabilities must be compensated by a modified acceptance function in step 3. Nevertheless, the rule in step 1 must be carefully chosen: Too large jumps will mostly be rejected; too small jumps will cause the particle to stay close to its current position; in both cases, the distribution will converge very slowly to the Boltzmann distribution. Convergence can be improved by adapting the jump rule to previous movements and, thus, to the energy landscape itself [8].

. If this condition does not hold, the unequal transition probabilities must be compensated by a modified acceptance function in step 3. Nevertheless, the rule in step 1 must be carefully chosen: Too large jumps will mostly be rejected; too small jumps will cause the particle to stay close to its current position; in both cases, the distribution will converge very slowly to the Boltzmann distribution. Convergence can be improved by adapting the jump rule to previous movements and, thus, to the energy landscape itself [8].

According to the Boltzmann distribution (6.23), a particle will spend more time and yield more samples in positions with low energies: The preference for low energies becomes more pronounced if the temperature is low. At temperature  , only jumps to lower or same energies will be accepted and the particle reaches, possibly after a long time, a global energy minimum. The Metropolis–Hastings algorithm has two important applications:

, only jumps to lower or same energies will be accepted and the particle reaches, possibly after a long time, a global energy minimum. The Metropolis–Hastings algorithm has two important applications:

- Sampling from given probability distributions In Bayesian statistics, a common method for sampling the posterior distribution is Metropolis–Hastings sampling with fixed temperature (6.8): We set

and choose

and choose  , ignoring the constant factor

, ignoring the constant factor  . From the resulting samples, we can compute, for instance, the posterior mean values and variances of individual parameters

. From the resulting samples, we can compute, for instance, the posterior mean values and variances of individual parameters  .

. - Global optimization by simulated annealing For global optimization by simulated annealing [33],

is replaced by some function

is replaced by some function  to be minimized,

to be minimized,  is set to 1, and the temperature is varied during the optimization process. Simulated annealing starts with a high temperature, which is then continuously lowered during the sampling process. If the temperature falls slowly enough, the system will reach a global optimum almost certainly (i.e., with probability 1). In practice, finite run times require a faster cooling, so convergence to a global optimum is not guaranteed.

is set to 1, and the temperature is varied during the optimization process. Simulated annealing starts with a high temperature, which is then continuously lowered during the sampling process. If the temperature falls slowly enough, the system will reach a global optimum almost certainly (i.e., with probability 1). In practice, finite run times require a faster cooling, so convergence to a global optimum is not guaranteed.

6.1.6.4 Genetic Algorithms

Genetic algorithms like differential evolution [34] are inspired by biological evolution. As we shall see in Section 11.1, genetic algorithms do not iteratively improve a single solution (as in simulated annealing), but simulate a whole population of possible solutions (termed “individuals”). In each step, the function value of each individual is evaluated. Individuals with high values (in relation to other individuals in the population) can have offspring, which form the following generation. In addition, mutations (i.e., small random changes) or crossover (i.e., random exchange of properties between individuals) allow the population to explore large regions of the parameter space in a short time. In problems with constraints, the stochastic ranking method [35] provides an efficient way to trade the objective function against the need to satisfy the constraints. Genetic algorithms are not proven to find global optima, but they are popular and have been successfully applied to various optimization problems.

6.2 Model Selection

A biochemical system can be described by multiple model variants, and choosing the right variant is a major challenge in modeling. Different model variants may cover different pathways, different substances or interactions within a pathway, different descriptions of the same process (e.g., different kinetic laws, fixed or variable concentrations), and different levels of details (e.g., subprocesses or time scales). Together, such choices can lead to a combinatorial explosion of model variants. To choose between models in a justified way, statistical methods for model selection are needed [36,37]. In fact, model fitting and model selection are very similar tasks: In both cases, we look for models that agree with biological knowledge and match experimental data; in one case, we choose between possible parameter sets, and in the other case between model structures. Moreover, model selection can involve parameter estimation for each of the candidate models.

A philosophical principle called Ockham's razor (Entia non sunt multiplicanda praeter necessitatem: Entities should not be multiplied without necessity) claims that theories should be free of unnecessary elements. Statistical model selection relies on a similar principle: Complexity in models – for example, additional substances or interactions in the network – should be avoided unless it is supported, and thus required, by data. If two models achieve equally good fits, the one with fewer free parameters should be chosen. With limited and inaccurate data, we may not be able to pinpoint a single model, but we can at least rule out models that contradict the data or for which there is no empirical evidence. With data values being uncertain, notions like “contradiction” and “evidence” can only be understood in a probabilistic sense, which calls for statistical methods.

6.2.1 What Is a Good Model?

Good models need not describe a biological system in all details. J.L. Borges writes in a story [38]: “In that empire, the art of cartography attained such perfection that the map of a single province occupied the entirety of a city, and the map of the empire, the entirety of a province. In time, those unconscionable maps no longer satisfied, and the cartographers guilds struck a map of the empire whose size was that of the empire, and which coincided point for point with it.” Similarly, systems biology models range from simple to complex “maps” of the cell, and like in real maps, details must be omitted. Otherwise, models would be as hard to understand as the biological systems described.

Models are approximations of reality or, as George Box put it, “Essentially, all models are wrong, but some are useful” [39]. In model selection, we have to make this specific and ask: useful for what? Depending on their purpose, models will have to meet various, sometimes contrary, requirements (see Figure 6.10):

- In data fitting, we start from data and describe them by some mathematical function. A reason to do this can be simple economy of description: Instead of specifying many data pairs

on a curve, we specify much fewer curve parameters (e.g., offset and slope for a straight line). If data points deviate from our curve, we may attribute the discrepancy to measurement errors. In the context of model fitting, a dynamical model is just one specific way to define predicted curves. Given a model structure, for example, a differential equation system, we can fit the model parameters, for instance, by minimizing the sum of squared residuals.

on a curve, we specify much fewer curve parameters (e.g., offset and slope for a straight line). If data points deviate from our curve, we may attribute the discrepancy to measurement errors. In the context of model fitting, a dynamical model is just one specific way to define predicted curves. Given a model structure, for example, a differential equation system, we can fit the model parameters, for instance, by minimizing the sum of squared residuals. - To serve for prediction, a model should not just fit existing data, but also remain valid for future observations. In the language of statistical learning, it should generalize well to new data.

- Realistic models are supposed to picture processes “as they happen in reality.” Since there is a limit to detailing, models have to focus on certain pathways and describe them to reasonable details. Simplifying assumptions and model reduction (see Section 6.3) can be used to simplify models to a tractable level.

- To highlight key principles of biological processes, models need to be simple. Simplicity makes models useful as didactic or prototypic examples. This holds not only for computational models but also for example cases in general, for instance, the lac operon as an example of microbial gene regulation.

Figure 6.10 Possible requirements for good models.

Only few of these requirements can be tested formally, as described in Section 5.4. Moreover, different criteria can either entail or compromise each other. A good data fit, for instance, may suggest that a model is mechanistically correct and sufficiently complete. However, it may also arise in models with a very implausible structure, as long as they are flexible enough to fit various data. As a rule of thumb, models with more free parameters can fit data more easily (“With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.” J. von Neumann, quoted in Ref. [40]). In this case, even though the fit becomes better, the average amount of experimental information per parameter decreases and the parameter estimates become poorly determined. This, in turn, compromises prediction. The overfitting problem notoriously occurs when many free parameters are fitted to few data points or when a large set of models is prescreened for good data fits (Freedman's paradox [41]).

6.2.2 The Problem of Model Selection

In modeling, one typically gets to a point at which a number of model variants have been proposed and the best model is chosen by comparing model predictions with experimental data. The procedure resembles parameter fitting, as described in the previous chapter; now it is the model structure that needs to be chosen – which possibly entails parameter estimation for each of the model variants.

6.2.2.1 Likelihood and Overfitting

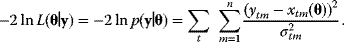

The quality of models can be assessed by their likelihood, that is, the probability that a model assigns to experimental observations. Let us assume that a model is correct in its structure and parameters; then, data values  (component

(component  , at time point

, at time point  ) will be Gaussian random variables with mean values

) will be Gaussian random variables with mean values  and variances

and variances  . According to Eq. (6.6), the logarithmic likelihood is related to the weighted sum of squared residuals (wSSR) as

. According to Eq. (6.6), the logarithmic likelihood is related to the weighted sum of squared residuals (wSSR) as

The wSSR itself, as a sum of standard Gaussian distributions, follows a  -distribution with

-distribution with  degrees of freedom. This fact can be used for a statistical test: If the weighted SSR for the given data falls in the upper 5% quantile of the

degrees of freedom. This fact can be used for a statistical test: If the weighted SSR for the given data falls in the upper 5% quantile of the  -distribution, we can reject the model on a 5% confidence level. If we do reject it, we conclude that the model is wrong. Importantly, a negative test – one in which the model is not rejected – does not prove that the model is correct; it only shows that there is not enough evidence to disprove the model. Also, note that this test works only for data that have not previously been used to fit the model.

-distribution, we can reject the model on a 5% confidence level. If we do reject it, we conclude that the model is wrong. Importantly, a negative test – one in which the model is not rejected – does not prove that the model is correct; it only shows that there is not enough evidence to disprove the model. Also, note that this test works only for data that have not previously been used to fit the model.

A high likelihood can serve as a criterion for model selection provided that the likelihood is evaluated with new data, that is, data that have not been used for fitting the model before. For instance, the statement “Tomorrow, the sun will shine with 80% probability” (obtained by some model A) can be compared with the statement “Tomorrow, the sun will shine with 50% probability” (obtained from another model B). If sunshine is observed, model A has a higher likelihood (Prob than model B (Prob

than model B (Prob and will be chosen by the likelihood criterion.

and will be chosen by the likelihood criterion.

However, there is one big problem: When posing the question “which model fits our data best,” we usually do not compare models with given parameters, but fit candidate models to data and then compare them by their maximized likelihood values. In doing so, we use the same data twice: first for fitting the model and then for assessing its quality. This would be a wrong usage of statistics and leads to overfitting. A direct comparison by likelihood values is justified only if model parameters have been fixed in advance and without using the test data.

6.2.2.2 Methods for Model Selection

This is the central problem in model selection: We try to infer model details based on data fits, but we know that good data fits can also arise from overfitting. To counter this risk, models with more free parameters must satisfy stricter requirements regarding the goodness of fit; or, following Ockham's principle, we should choose the most simple model variant unless others are clearly better supported by data. The problem of overfitting in model selection can be addressed in a number of ways:

- Cross-validation In cross-validation, models are tested with data that have not been used for fitting. Like a normal goodness of fit, the mean prediction error from cross-validation can be used as a criterion to select parameters or structures of models. Notably, the cross-validation error after this selection will again be biased. To prove that the selected model is free of overfitting, one would need to run a nested cross-validation: an inner cross-validation loop for selecting a model, and an outer cross-validation loop to check the selected model for overfitting in an unbiased way.

- Statistical tests In statistical tests, we compare a more complex model with a simpler background model. Our null hypothesis states that both models perform equally well. Only if the predictions of the more complex model are strikingly better, we reject this hypothesis. If we perform a test with confidence level

, and if the null hypothesis is indeed correct, there will be an

, and if the null hypothesis is indeed correct, there will be an  chance that we wrongly reject it.

chance that we wrongly reject it. - Selection criteria A given set of candidate models can be scored by selection criteria [42,43,44,45]. Selection criteria are mathematical score functions that trade agreement with experimental data against model complexity: To compensate the advantage of complex models in fitting, they penalize high numbers of free parameters. Selection criteria can be used to rank models, to choose between them, and to weight predictions from different models when computing weighted averages.

- Tests with artificial data It can be helpful to test model fitting and selection procedures with artificial data obtained from model simulations. Knowing the true model behind the data, we can judge more easily if a model selection method is able to recover the original model.

A second problem arises when we have several models to compare. If we test many models, we are likely to find some models that fit the data well just by chance. In such cases of multiple testing, stricter significance criteria must be applied: Predefining the false discovery rate is a good strategy for choosing sensible significance levels. But let us now describe likelihood ratio test and selection criteria in more detail.

6.2.3 Likelihood Ratio Test

The likelihood ratio test [46] compares two models A and B (with  and

and  free parameters) by their maximized likelihood values

free parameters) by their maximized likelihood values  and

and  . Like in Eq. (6.25), the models must be nested, that is, model B must be a special case of model A with some of the parameters fixed. As a null hypothesis, we assume that both models explain the data equally well. However, even if the null hypothesis is true, model A is likely to show a higher observed likelihood because its additional parameters make it easier to fit the noise. The statistical test accounts for this fact. As a test statistic, we consider the expression

. Like in Eq. (6.25), the models must be nested, that is, model B must be a special case of model A with some of the parameters fixed. As a null hypothesis, we assume that both models explain the data equally well. However, even if the null hypothesis is true, model A is likely to show a higher observed likelihood because its additional parameters make it easier to fit the noise. The statistical test accounts for this fact. As a test statistic, we consider the expression  . Assuming that the number of data points is large and that measurement errors are independent and Gaussian distributed, this statistic will asymptotically follow a

. Assuming that the number of data points is large and that measurement errors are independent and Gaussian distributed, this statistic will asymptotically follow a  -distribution with

-distribution with  degrees of freedom. In the test, we consider the empirical value of

degrees of freedom. In the test, we consider the empirical value of  ; if it is significantly high, we reject the null hypothesis and accept model A. Otherwise, we accept the simpler model B. The likelihood ratio test can be sequentially applied to more than two models, provided that they are subsequently nested. Likelihood ratio tests, like other statistical tests, can only tell us whether to a reject the null hypothesis model: If the test result is negative, we cannot reject the null model, that is, model A may still be the better one, but we cannot prove it.

; if it is significantly high, we reject the null hypothesis and accept model A. Otherwise, we accept the simpler model B. The likelihood ratio test can be sequentially applied to more than two models, provided that they are subsequently nested. Likelihood ratio tests, like other statistical tests, can only tell us whether to a reject the null hypothesis model: If the test result is negative, we cannot reject the null model, that is, model A may still be the better one, but we cannot prove it.

The likelihood values and, thus, the result of the likelihood ratio test depend on the data values and their standard errors. If standard errors are known to be small, the data require an accurate fit. Accordingly, if we simply shrink all error bars, the likelihood ratio becomes larger and complex models have better chances to be selected.

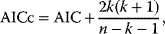

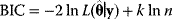

6.2.4 Selection Criteria

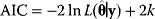

Nonnested models can be compared by using selection criteria. As we saw, if the likelihood value were not biased, it could directly be used as a criterion for model selection. However, the likelihood after fitting is biased due to overfitting. The average bias  is unknown, but estimates for it have been proposed. By adding these estimates to the log-likelihood, we obtain supposedly less biased score functions, the so-called selection criteria. By minimizing these functions instead of the log-likelihood, we can reduce the impact of overfitting. The Akaike information criterion [47]