capacity limits and consciousness Capacity limits refer to limits in how much information an individual can process at one time.

1. A brief history

2. Objective and subjective sources of evidence of capacity limits and consciousness

3. Capacity limits, type 1: information processing, attention, and consciousness

4. Capacity limits, type 2: working memory, primary memory, and consciousness

5. Reconciling limits in attention, primary memory, and consciousness

Early in the history of experimental psychology, it was suggested that capacity limits are related to the limits of conscious awareness. For example, James (1890) described limits in how much information can be attended at once, in a chapter on *attention; and he described limits in how much information can be held in mind at once, in a chapter on *memory. In the latter chapter, he distinguished between primary memory, the trailing edge of the conscious present comprising the small amount of information recently experienced and still held in mind; and secondary memory, the vast amount of information that one can recollect from previous experiences, most of which is not in conscious awareness at any one time. Experimental work supporting these concepts was already available to James from contemporary researchers, including Wilhelm Wundt, who founded the first experimental psychology laboratory. In modern terms, primary and secondary memory are similar to *working memory and long-term memory although, according to most investigators, working memory is a collection of abilities used to maintain information for ongoing tasks and only part of it is associated with consciousness.

In the late 1950s and early 1960s, the concepts of capacity limits began to receive further clarification with the birth of the discipline known as cognitive psychology. Broadbent (1958) in a seminal book described some work from investigators of the period indicating tight limits on attention. For example, individuals who received a different spoken message in each ear at the same time were unable to listen fully to more than one of these messages at a particular moment. Miller (1956) described work indicating a limit on how long a list can be before people can no longer repeat it back. In adults, repetition fails for lists longer than 5–9 items, with the manageable list length within that range depending on the materials and the individuals involved. One of the most important questions we must address is how attention and primary memory limits are related to one another. Are they different and, if so, which one indicates how much information is in conscious awareness? These questions are taken up below.

Philosophers worry about a distinction between objective sources of information used to study capacity limits, and subjective sources of information used to understand consciousness. For objective information, one gives directions to research participants and then collects and analyses their responses to particular types of stimuli, made according to those directions. The only kind of subjective information is one’s own experience of what it is like to be conscious (aware) of various things or ideas. People usually agree that it is not possible to be conscious of a large number of things at once, so it makes sense to hypothesize that the limits on consciousness and the limits on information processing have the same causes. However, logically speaking, this need not be the case.

Certain experimental methods serve as our bridge between subjective and objective sources of information. If an experimental participant claims to be conscious of something, we generally give credit for the individual being conscious of it. Often, we verify this by having the participant describe the information. For example, it is not considered good methodology to ask an individual, ‘Did you hear that tone?’ One could believe one is aware of a tone without really hearing the intended tone. It is considered better methodology to ask, ‘Do you think a tone was presented?’ On some trials, no tone is presented and one can compare the proportion of ‘yes’ responses on tone-present and tone-absent trials. Nevertheless, an individual could be conscious of some information but could still say ‘no’, depending on how incomplete information is interpreted.
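The comparison of 'yes' responses on tone-present and tone-absent trials can be made concrete with a short sketch. The sensitivity index d′ used here comes from signal detection theory and is not named in the text above; the function name, the trial counts, and the 0.5 correction applied to each cell are illustrative assumptions rather than part of any study cited here.

```python
from statistics import NormalDist


def sensitivity(hits, misses, false_alarms, correct_rejections):
    """Return (hit_rate, false_alarm_rate, d_prime) for a yes/no detection task.

    hits / misses come from tone-present trials; false_alarms /
    correct_rejections come from tone-absent trials.
    Assumption: 0.5 is added to each cell (a log-linear correction) so that
    rates of exactly 0 or 1 do not give infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return hit_rate, fa_rate, z(hit_rate) - z(fa_rate)


# Hypothetical counts, invented purely for illustration.
hit_rate, fa_rate, d_prime = sensitivity(40, 10, 10, 40)
print(f"hit rate {hit_rate:.2f}, false-alarm rate {fa_rate:.2f}, d' {d_prime:.2f}")
```

A participant who says 'yes' equally often on both kinds of trial obtains a d′ of zero under this measure, however often 'yes' is reported, which is why the tone-absent trials are needed.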

There seem to be solid demonstrations that individuals can process some information outside the focus of attention and, presumably, outside conscious awareness. One demonstration is found, for example, in early work on selective listening (Broadbent 1958). Only one message could be comprehended at once but a change in the speaker’s voice within the unattended message (say, from a male to a female speaker) automatically recruited attention away from the attended message and to the formerly unattended one. The evidence was obtained by requiring that the attended message be repeated. In that type of task, breaks in repetition typically are found to occur right after the voice changes in the unattended message, and participants in that situation often note the change or react to it and can remember it.

There has been less agreement about whether higher-level semantic information can be processed outside attention. Moray (1959) found that people sometimes noticed their own name when it was included in the unattended message, implying that the name had to have been identified before it was attended. However, one important question is whether the individuals who noticed actually were focusing their attention steadily on the message that they were supposed to repeat. When Conway et al. (2001) examined this for individuals in the highest and the lowest quartiles of ability on a working memory span task (termed high- and low-span, respectively), they found that only 20% of the high-span individuals noticed their names, whereas 65% of the low-span individuals noticed their names. This outcome suggests that the low-span individuals may have noticed their names only because their attention often wandered away from the assigned message, or was not as strongly focused on it as in the case of high-span individuals, making attention available for the supposedly unattended message. This tends to negate the idea that one’s name can be automatically processed without attention; if that idea were correct, high-span individuals would be expected to notice their names more often than low-span individuals.

There are some clear cases of processing without consciousness. In *blindsight, a particular effect of one kind of brain damage, an individual claims to be unable to see one portion of the visual field but still accurately points to the location of an object in that field, if required to do so (even though such patients often find the request illogical). Processing without consciousness of the processed object seems to occur.

In normal individuals, one can find *priming effects in which one stimulus influences the interpretation of another one, without awareness of the primed stimulus. This occurs, for example, if a priming word is presented with a very short interval before a masking pattern is presented, and is followed by a target word that the participant must identify, such as the word ‘dog’. This target word can be identified more quickly if the preceding priming word is semantically related (e.g. ‘cat’) than if it is unrelated (e.g. ‘brick’), even on trials in which the participant denies having seen the priming word at all and later shows no memory of it.

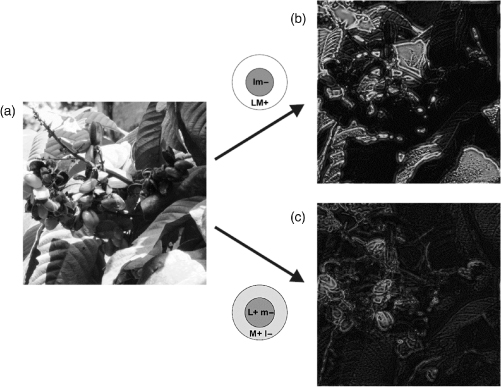

The question arises as to how much can be processed not only without conscious awareness, but also without attention. In the above cases, participants attended to the location of the stimulus in question, even when they remained unaware of the stimulus itself. As in the early work using selective listening procedures, work on vision by Ann Treisman and others has suggested that individuals can process simple physical features automatically, whereas attention is needed to process combinations of those features. This has been investigated by presenting arrays in which participants had to find a specific target item (e.g. a red square) among other, distracting items with a common feature distinguishing them from the target (e.g. all red circles, or all green squares) or among distracting items that shared multiple features with the target (e.g. some red circles and some green squares on the same trial). In the former case (a common distinguishing feature), searching for the target is rapid no matter how many distracting objects are included in the array. This suggests that participants can abstract physical features from many objects at once, and that an item with a unique feature automatically stands out (e.g. the only square or the only red item in the array). However, when the target can be distinguished from the distracting objects only by the particular conjunction of features (e.g. the only red square), searching for the target occurs slowly and depends on how many distracting objects are present. Thus, it takes focused attention, and presumably conscious awareness, to find an object with a particular conjunction of features. This attention must be applied relatively slowly, to just one object or a small number of objects at a time. 
Further research along these lines (Chong and Treisman 2005) suggests that the brain can compute statistical averages of features automatically, such as the mean size of the circles in an array of circles of various sizes.
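The contrast between the two kinds of search described above is often summarized as the slope of response time against the number of items in the array. The following is a minimal sketch of that calculation; the function name and all response times are hypothetical values invented for illustration, not data from the studies cited.

```python
def search_slope(set_sizes, mean_rts_ms):
    """Least-squares slope of mean response time (ms) against array size."""
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts_ms) / n
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(set_sizes, mean_rts_ms))
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    return sxy / sxx


# Hypothetical mean response times, invented for illustration only.
set_sizes = [4, 8, 16, 32]
feature_rts = [450, 452, 449, 451]       # target differs by one feature
conjunction_rts = [500, 600, 800, 1200]  # target defined by a conjunction

feature_slope = search_slope(set_sizes, feature_rts)
conjunction_slope = search_slope(set_sizes, conjunction_rts)
print(f"feature search: {feature_slope:.2f} ms per item")
print(f"conjunction search: {conjunction_slope:.2f} ms per item")
```

A slope near zero corresponds to the case in which search is rapid no matter how many distracting objects are present; a clearly positive slope corresponds to the case in which search time depends on the number of distracting objects.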

An especially interesting procedure that illustrates a limit on attention and awareness is *change blindness. If one views a scene and it cuts to another scene, something in the scene can change and, often, people will not notice the change. For example, in a scene of a table setting, square napkins might be replaced with triangular napkins without people noticing. This appears to occur because only a small number of objects can be attended at once and unattended objects are processed to a level that allows the entire scene to be perceived and comprehended in some holistic sense, but not to a level that allows individual details of most objects to be registered in memory.

The previous discussion implies that attention is closely related to conscious awareness (although for differences between the two see ATTENTION AND AWARENESS). Next, consider the other main faculty of the mind that may be linked to consciousness, namely primary memory. Here, the case may not be as straightforward as one would think. Miller (1956) showed that people can repeat lists of about seven items, but are they conscious of all seven at once? Not necessarily. Miller also showed that people can improve performance by grouping items together to form larger units called chunks. For example, it may be much more difficult to remember a list of nine random letters than it is to remember the nine letters IRS–FBI–CIA, because one may recognize acronyms for three prominent United States agencies in the latter case and therefore may have to keep in mind only three chunks. Once the grouping has occurred, however, it is not clear if one is simultaneously aware of all of the original elements in the set, in this example including I, R, S, F, B, C, and A. Miller did not specifically consider that the seven random items that a person might remember could be memorable only because new, multi-item chunks are formed on the spot. For example, if one remembers the telephone number 548-8634, one might have accomplished that by quickly memorizing the digit groups 548, 86, and 34. After that there might be simultaneous awareness of the three chunks of information, but not necessarily of the individual digits within each chunk.
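The two examples above can be written out as a short sketch showing how grouping reduces the number of units to be held in mind. The helper name `chunk` and the idea of passing the group sizes explicitly are illustrative assumptions; the letters and digits are those given in the text.

```python
def chunk(items, sizes):
    """Split a sequence into consecutive groups of the given sizes."""
    if sum(sizes) != len(items):
        raise ValueError("group sizes must cover the sequence exactly")
    groups, start = [], 0
    for size in sizes:
        groups.append(items[start:start + size])
        start += size
    return groups


letter_chunks = chunk("IRSFBICIA", [3, 3, 3])  # nine letters, three acronyms
digit_chunks = chunk("5488634", [3, 2, 2])     # seven digits, three groups

print(len("IRSFBICIA"), "letters ->", len(letter_chunks), "chunks:", letter_chunks)
print(len("5488634"), "digits ->", len(digit_chunks), "chunks:", digit_chunks)
```

In both cases the count of items to be retained falls to three, while the elements inside each chunk are recoverable from the chunk itself and need not be held individually.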

People have a large number of strategies and resources at their disposal to remember word lists and other stimuli, and these strategies and resources together make up working memory. For example, they may recite the words silently to themselves, and this covert rehearsal process may take attention only for its initiation (Baddeley 1986). Rehearsal might have to be prevented before one can fairly measure the conscious part of working memory capacity (i.e. the primary memory of William James). The chunking process also may have to be controlled so that one knows how many items or chunks are being held. A large number of studies appearing to meet those requirements seem to suggest that most adults can retain 3–5 items at once (Cowan 2005). This is the limit, for example, in a type of method in which an array of coloured squares is briefly presented, followed after a short delay by a second array identical to the first or differing in the colour of one square, to be compared to the first array (Luck and Vogel 1997). A similar limit of 3–5 items occurs for verbal lists when one prevents effective rehearsal and grouping by presenting items rapidly with an unpredictable ending point of the list, or when one requires that a single word or syllable be repeated over and over during presentation of the list in order to suppress rehearsal.
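Performance in the array-comparison procedure is often converted into an estimate of the number of items held, by correcting for guessing. The text does not give a formula, so the sketch below is an assumption drawn from the wider literature: one estimator commonly used when a single item is probed, K = N(H − F), and one commonly used when the whole array is shown again, K = N(H − F)/(1 − F), where N is the array size, H the hit rate, and F the false-alarm rate. The function name and the example rates are hypothetical.

```python
def capacity_estimate(set_size, hit_rate, false_alarm_rate, whole_display=True):
    """Guessing-corrected estimate of the number of items held in memory.

    Assumption: these are two estimators from the wider literature,
    not a method stated in the entry itself.
    whole_display=True  -> K = N * (H - F) / (1 - F)
    whole_display=False -> K = N * (H - F)
    """
    if not 0 <= false_alarm_rate < 1:
        raise ValueError("false_alarm_rate must be in [0, 1)")
    k = set_size * (hit_rate - false_alarm_rate)
    if whole_display:
        k /= (1 - false_alarm_rate)
    return k


# Hypothetical rates for an eight-square array, invented for illustration.
k_whole = capacity_estimate(8, hit_rate=0.6, false_alarm_rate=0.2)
k_single = capacity_estimate(8, hit_rate=0.6, false_alarm_rate=0.2,
                             whole_display=False)
print(f"whole-display estimate: {k_whole:.1f} items")
print(f"single-probe estimate: {k_single:.1f} items")
```

Both estimates rest on the simplifying assumption that a fixed number of items is stored on every trial and that participants guess when the changed item was not stored.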

What is essential in such procedures is that the research participant has insufficient time to group items together to form larger, multi-item chunks (Cowan 2001). Another successful technique is to test free recall of lists of multi-item chunks that have a known size because they were taught in a training session before the recall task. Chen and Cowan (2005) did that with learned pairs and singletons, and obtained similar results (3–5 chunks recalled).

A limit in primary memory of 3–5 items seems to be analogous to the limits in attention and conscious awareness. The latter are assumed to be general in that attention to, and conscious awareness of, stimuli in one domain detracts from attention and awareness in another domain. For example, listening intently to music would not be a good idea while one is working as an air traffic controller because attention would sometimes be withdrawn from details of the air traffic display to listen to parts of the music. Similarly, in the case of primary memory, Morey and Cowan (2004) found that reciting a random seven-digit list detracted from carrying out the two-array comparison procedure of Luck and Vogel that has just been described, whereas reciting a known seven-digit number (the participant’s telephone number) had little effect.

It is not clear where the 3–5-chunk working memory capacity limit comes from, or how it may help the human species to survive. Cowan (2001, 2005) summarized various authors’ speculations on these matters. The capacity limit may occur because each object or chunk in working memory is represented by the concurrent firing of neurons signalling various features of that object. Neural circuits for all objects represented in working memory must be activated in turn within a limited period and, if too many objects are included, there may be contamination between the different circuits representing two or more objects. Capacity limits may be beneficial in highlighting the most important information to guide actions in the right direction; representation of too much at once might result in actions that were based on confusions or were dangerously slow in emergency situations. Some mathematical analyses suggest that forming chunks of 3–5 items allows optimal searching for the items. To acquire complex tasks and skills, chunking can be applied in a reiterative fashion to encompass, in principle, any amount of information.

A major question that remains is how to reconcile the different capacity limits of attention vs primary memory. People generally can attend to only one message at a time, whereas they can keep several items at once in primary memory. Can these somehow represent compatible limits on conscious awareness? Perhaps so. There are several possible resolutions of the findings with attention vs primary memory. It might be that only a single message can be attended and understood because several ideas in the message must be held in primary memory at once, long enough for them to be integrated into a coherent message. Alternatively, the several (3–5) ideas that can be held in primary memory at once may have to be sufficiently uniform in type to be integrated into a coherent scene, in effect becoming like one message. According to this account, one would have difficulty remembering, say, one tone, one colour, one letter, and one shape at the same time because an integration of these events may not be easy to form. The more severe limit for paying attention, compared to the primary memory limit, might also occur because the items to be attended are fleeting, whereas items to be held in working memory theoretically might be entered into attention one at a time, or at least at a limited rate, and must be made available long enough for that to happen (Cowan 2005).

We at least know that individuals who can hold more items in primary memory seem to be many of the same individuals who are capable of carrying out difficult attention tasks. Two such tasks are (1) to go against what comes naturally by looking in the direction opposite to where an object has suddenly appeared, called anti-saccade eye movements (Kane et al. 2004); and (2) to filter out irrelevant objects efficiently so that only the relevant ones have to be retained in working memory (e.g. Conway et al. 2001). However, one study suggests that the capacity of primary memory and the ability to control attention are less than perfectly correlated across individuals (Cowan et al. 2006), and that both of these traits independently contribute to intelligence. It may be that the focus of attention and conscious awareness need to be flexible, expanding to apprehend a field of objects or contracting to focus intensively on a difficult task such as making an anti-saccade movement. If so, attention and primary memory tasks should interfere with one another to some extent, and this seems to be the case (Bunting et al. 2008). There may also be additional skills that help primary memory but not attention, or vice versa. The present field of study of memory and attention and their relation to conscious awareness is exciting, but there is much left to learn.

See also AUTOMATICITY; GLOBAL WORKSPACE THEORY

This chapter was prepared with funding from NIH grant number R01-HD 21338.

NELSON COWAN

Baddeley, A. D. (1986). Working memory.

Broadbent, D. E. (1958). Perception and communication.

Bunting, M. F., Cowan, N., and Colflesh, G. H. (2008). ‘The deployment of attention in short-term memory tasks: tradeoffs between immediate and delayed deployment’. Memory and Cognition, 36.

Chen, Z. and Cowan, N. (2005). ‘Chunk limits and length limits in immediate recall: a reconciliation’. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31.

Chong, S. C. and Treisman, A. (2005). ‘Statistical processing: computing the average size in perceptual groups’. Vision Research, 45.

Conway, A. R. A., Cowan, N., and Bunting, M. F. (2001). ‘The cocktail party phenomenon revisited: the importance of working memory capacity’. Psychonomic Bulletin and Review, 8.

Cowan, N. (2001). ‘The magical number 4 in short-term memory: a reconsideration of mental storage capacity’. Behavioral and Brain Sciences, 24.

—— (2005). Working Memory Capacity.

——, Fristoe, N. M., Elliott, E. M., Brunner, R. P., and Saults, J. S. (2006). ‘Scope of attention, control of attention, and intelligence in children and adults’. Memory and Cognition, 34.

James, W. (1890). The Principles of Psychology.

Kane, M. J., Hambrick, D. Z., Tuholski, S. W., Wilhelm, O., Payne, T. W., and Engle, R. W. (2004). ‘The generality of working-memory capacity: a latent variable approach to verbal and visuospatial memory span and reasoning’. Journal of Experimental Psychology: General, 133.

Luck, S. J. and Vogel, E. K. (1997). ‘The capacity of visual working memory for features and conjunctions’. Nature, 390.

Miller, G. A. (1956). ‘The magical number seven, plus or minus two: some limits on our capacity for processing information’. Psychological Review, 63.

Moray, N. (1959). ‘Attention in dichotic listening: affective cues and the influence of instructions’. Quarterly Journal of Experimental Psychology, 11.

Morey, C. C. and Cowan, N. (2004). ‘When visual and verbal memories compete: evidence of cross-domain limits in working memory’. Psychonomic Bulletin and Review, 11.

Cartesian dualism See DUALISM

cerebellum See BRAIN

change blindness Change blindness, a term coined by Ronald Rensink and colleagues (Rensink et al. 1997), refers to the striking difficulty people have in noticing large changes to scenes or objects. When a change is obscured by some disruption, observers tend not to detect it, even when the change is large and easily seen once the observer has found it. Many types of disruption can induce change blindness, including briefly flashed blank screens (e.g. Rensink et al. 1997), visual noise or ‘mudsplashes’ flashed across a scene (O’Regan et al. 1999, Rensink et al. 2000), eye movements (e.g. Grimes 1996), eye blinks (O’Regan et al. 2000), motion picture cuts or pans (e.g. Levin and Simons 1997), and real-world occlusion events (e.g. Simons and Levin 1998). It can also occur in the absence of a disruption, provided that the change occurs gradually enough that it does not attract attention (Simons et al. 2000).

Change blindness is interesting because the missed changes are surprisingly large: a failure to notice one of ten thousand people in a stadium losing his hat would be unsurprising, but the failure to notice that a stranger you were talking to was replaced by another is startling. People incorrectly assume that large changes automatically draw attention, whereas evidence for change blindness suggests that they do not. This mistaken intuition, known as change blindness blindness, is evidenced by the finding that people consistently overestimate their ability to detect change (Levin et al. 2000).

The phenomenon of change blindness has challenged traditional assumptions about the stability and robustness of internal representations of visual scenes. People can encode and retain huge numbers of scenes and recognize them much later (Shepard 1967), suggesting the possibility that people form extensive representations of the details of scenes. Change blindness appears to contradict this conclusion—we fail to notice large changes between two versions of a scene if the change signal is obscured or eliminated. The phenomenon has led some to question whether internal representations are even necessary to explain our conscious experience of our visual world (e.g. Noë 2005).

Change blindness has received increasing attention over the past decades, in part as a result of the advent of readily accessible image editing software. Limits on our ability to detect changes have been investigated using simple stimuli since the 1950s; most early studies documented the inability to detect changes to letters and words when the changes occurred during an eye movement. More recent change blindness research built on these paradigms, but extended them to more complex and naturalistic stimuli. In a striking demonstration, participants studied photographs of natural scenes and then performed a memory test (Grimes 1996). While they viewed the images, some details of the photographs were changed, and if the changes occurred during eye movements, people failed to notice them. Even large changes, such as two people exchanging their heads, went unseen. This demonstration, coupled with philosophical explorations of the tenuous link between visual representations and visual awareness, sparked a resurgence of interest in change detection failures as well as paradigms to study change blindness.

The flicker task is perhaps the best-known change blindness paradigm (Rensink et al. 1997). In this task, an original image and a modified image alternate rapidly with a blank screen between them until observers detect the change. The inserted blank screen makes this task difficult by hiding the change signal. Most changes are found eventually, but they are rarely detected during the first cycle of alternation, and some changes are not detected even after one minute of alternation. Unlike earlier studies of change blindness, the flicker task allows people to experience the extent of their change blindness. That is, people can experience a prolonged inability to detect changes, but once they find the change, the change seems trivial to detect. The method also allows a rigorous exploration of the factors that contribute to change detection and change blindness in both simple and complex displays.
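The structure of a flicker trial can be sketched as a repeating frame sequence that stops once the observer reports the change. The function names, the `detected` callback, and the cycle limit are hypothetical; frame durations are deliberately left out because the text does not give them.

```python
from itertools import cycle, islice


def flicker_frames(original, modified, blank="BLANK"):
    """Endless sequence: original, blank, modified, blank, and so on."""
    return cycle([original, blank, modified, blank])


def run_trial(original, modified, detected, max_cycles=60):
    """Show alternation cycles until detected(cycle_number) returns True.

    Returns the number of cycles shown, or None if the change was not
    reported within max_cycles (a hypothetical cut-off).
    """
    frames = flicker_frames(original, modified)
    for cycle_number in range(1, max_cycles + 1):
        _shown = list(islice(frames, 4))  # one full alternation cycle
        if detected(cycle_number):
            return cycle_number
    return None


first_cycle = list(islice(flicker_frames("A", "A'"), 4))
# Hypothetical observer who reports the change on the fifth cycle.
cycles_needed = run_trial("A", "A'", detected=lambda c: c >= 5)
print(first_cycle, cycles_needed)
```

The number of cycles shown before a report gives a simple dependent measure of how long the change went undetected.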

Change blindness also occurs for other tasks and with more complex displays, even including real-world interactions (see Rensink 2002 for a review). For example, in one experiment an experimenter approached a pedestrian (the participant) to ask for directions, and during the conversation, two other people interrupted them by carrying a door between them. Half of the participants failed to notice when the original experimenter was replaced by a different person during the interruption (Simons and Levin 1998). Change blindness has also been studied using simple arrays of objects, complex photographs, and motion pictures. It occurs when changes are introduced during an eye movement, a flashed blank screen, an eye blink, a motion picture cut, or a real-world interruption.

Despite the generalizability of change blindness from simple laboratory tasks to real-world contexts, it does not represent our normal visual experience. In fact, people generally do detect changes when they can see the change occur. Changes typically produce a detectable signal that draws attention. In demonstrations of change blindness, this signal is hidden or eliminated by some form of interruption or distraction. In essence, the interruption ‘breaks’ the system by eliminating the mechanism typically used to detect changes. In so doing, change blindness provides a tool to better understand how the visual system operates. Just as visual *illusions allow researchers to study the default assumptions of our visual system, change blindness allows us to better understand the contributions of attention and memory to conscious perception.

In the absence of a change signal, successful change detection requires observers to encode, retain, and then compare information about potential change targets across views. That is, observers need both a representation of the pre-change object and a successful comparison of it to the post-change object. If any step in the process fails (encoding, representation, recall, or comparison), change detection fails. Evidence for change blindness suggests that at least some steps in this process are not automatic: when the change signal is eliminated, people do not consistently detect changes. A central question in the literature is which part or parts of this process fail when change blindness occurs.

Change blindness has often been taken as evidence for the sparseness or absence of internal representations. If we lacked internal representations of previously viewed scenes, there would be no way to compare the current view to the pre-change scene, so changes would go unnoticed due to a representation failure. However, it is crucial to note that change blindness does not logically require the absence of representations. Change blindness could occur even if the pre-change scene were represented with photographic precision, provided that observers failed to compare the relevant aspects of the scene before and after the change. In fact, even when observers represent both the initial and changed version of a scene, they sometimes fail to detect changes (Mitroff et al. 2004).

The completeness of our visual representations in the face of evidence for change blindness remains an area of extensive investigation. Some researchers use evidence of change blindness to support the claim that our visual experience does not need to rely on complete or detailed representations. In essence, our visual representations can be sparse, provided that we have just-in-time access to the details we need to support conscious experience of our visual world. Others argue that our representations are, in fact, fairly detailed. Such detailed representations might underlie our long-term memory for scenes, but change blindness occurs either due to a disconnection between these representations and the mechanisms of change detection, or because of a failure to compare the relevant aspects of the pre- and post-change scenes. The differences in these explanations for change blindness have implications both for the mechanisms of visual perception and representation and for the link between representations and consciousness (see Simons 2000, Simons and Rensink 2005 for detailed discussion of these and other possible explanations for change blindness).

In addition to spurring research on visual representations and their links to awareness, change blindness has also yielded interesting insights into the relationship between *attention and awareness. In the presence of a visual disruption, the change signal no longer attracts attention and change blindness ensues. Even when observers know that a change is occurring, they cannot easily find it and have to shift attention from one scene region to another looking for change. Once they attend to the change, it becomes easy to detect. This finding, coupled with evidence that changes to attended objects (e.g. the ‘centre of interest’ of the scene) are more easily detected, led to the conclusion that attention is necessary for successful change detection. However, attention to an object does not always eliminate change blindness. Observers may fail to detect changes to the central object in a scene. Thus, attention to the changing object may not be sufficient for detection.

In the past several years, the phenomenon of change blindness also has attracted the attention of neuroscientists who have used *functional brain imaging techniques to investigate the neural underpinnings of change blindness and change detection. Most of these studies suggest a role for both *frontal and parietal (particularly right parietal) cortex in change detection (e.g. Beck et al. 2006). Other imaging studies have examined the role of focused attention in change detection as well as the correlation between conscious change perception and neural activation. Such neuroimaging measures hold promise as a way to explore the mechanisms of change detection by providing additional measures of change detection even in the absence of a behavioural response or a conscious report of change. In that way, they might help determine the extent to which visual representation and change detection occur in the absence of awareness.

In summary, change blindness has become increasingly central to the field of visual cognition, and through its study we can improve our understanding of visual representation, attention, scene perception, and the neural correlates of consciousness.

XIAOANG IRENE WAN, MICHAEL S. AMBINDER, AND DANIEL J. SIMONS

Beck, D. M., Muggleton, N., Walsh, V., and Lavie, N. (2006). ‘Right parietal cortex plays a critical role in change blindness’. Cerebral Cortex, 16.

Grimes, J. (1996). ‘On the failure to detect changes in scenes across saccades’. In Akins, K. (ed.) Vancouver Studies in Cognitive Science: Vol. 5. Perception.

Levin, D. T. and Simons, D. J. (1997). ‘Failure to detect changes to attended objects in motion pictures’. Psychonomic Bulletin and Review, 4.

——, Momen, N., Drivdahl, S. B., and Simons, D. J. (2000). ‘Change blindness blindness: the metacognitive error of overestimating change-detection ability’. Visual Cognition, 7.

Mitroff, S. R., Simons, D. J., and Levin, D. T. (2004). ‘Nothing compares 2 views: change blindness results from failures to compare retained information’. Perception and Psychophysics, 66.

Noë, A. (2005). ‘What does change blindness teach us about consciousness?’ Trends in Cognitive Sciences, 9.

O’Regan, J. K., Rensink, R. A., and Clark, J. J. (1999). ‘Change-blindness as a result of “mudsplashes”’. Nature, 398.

——, Deubel, H., Clark, J. J., and Rensink, R. A. (2000). ‘Picture changes during blinks: looking without seeing and seeing without looking’. Visual Cognition, 7.

Rensink, R. A. (2002). ‘Change detection’. Annual Review of Psychology, 53.

——, O’Regan, J. K., and Clark, J. J. (1997). ‘To see or not to see: the need for attention to perceive changes in scenes’. Psychological Science, 8.

——, ——, —— (2000). ‘On the failure to detect changes in scenes across brief interruptions’. Visual Cognition, 7.

Shepard, R. N. (1967). ‘Recognition memory for words, sentences and pictures’. Journal of Verbal Learning and Verbal Behavior, 6.

Simons, D. J. (2000). ‘Current approaches to change blindness’. Visual Cognition, 7.

—— and Levin, D. T. (1998). ‘Failure to detect changes to people during a real-world interaction’. Psychonomic Bulletin and Review, 5.

—— and Rensink, R. A. (2005). ‘Change blindness: past, present, and future’. Trends in Cognitive Sciences, 9.

——, Franconeri, S. L., and Reimer, R. L. (2000). ‘Change blindness in the absence of a visual disruption’. Perception, 29.

Charles Bonnet syndrome The natural scientist and philosopher Charles Bonnet (1720–93) wrote on topics as diverse as parthenogenesis, worm regeneration, metaphysics, and theology. Influencing Gall’s system of organology and the 19th century localizationist approach to cerebral function, Bonnet viewed the brain as an ‘assemblage of different organs’, specialized for different functions, with activation of a given organ, e.g. the organ of vision, responsible not only for visual perception, but also visual imagery and visual memory—a view strikingly resonant with contemporary cognitive neuroscience. His theory of the brain and its mental functions was first outlined in his ‘Analytical essay on the faculties of the soul’ (Bonnet 1760), in which passing mention was made of a case he had encountered that was so bizarre he feared no one would believe it. The case concerned an elderly man with failing eyesight who had experienced a bewildering array of silent visions without evidence of memory loss or mental illness. The visions were attributed by Bonnet to the irritation of fibres in the visual organ of the brain, resulting in *hallucinatory visual percepts indistinguishable from normal sight. Bonnet was to present the details of the case in full at a later date but never returned to it other than as a footnote in a later edition of the work identifying the elderly man as his grandfather, the magistrate Charles Lullin (1669–1761).

Bonnet’s description of Lullin, although brief, was taken up by several 18th and 19th century authors as the paradigm of hallucinations in the sane. However, it would likely have become little more than a historical curiosity, were it not for the chance finding of Lullin’s sworn, witnessed testimony among the papers of an ophthalmological collector and its publication in full at the beginning of the 20th century (Flournoy 1902). In 1756, three years after a successful operation to remove a cataract in his left eye, Lullin developed a gradual deterioration of vision in both eyes which continued despite an operation in 1757 to remove a right eye cataract (his visual loss was probably related to age-related macular disease). The hallucinations occurred from February to September of 1758 when he was aged 89. They ranged from the relatively simple and mundane (e.g. storms of whirling atomic particles, scaffolding, brickwork, clover patterns, a handkerchief with gold disks in the corners, and tapestries) to the complex and bizarre (e.g. framed landscape pictures adorning his walls, an 18th century spinning machine, crowds of passers-by in the fountain outside his window, playful children with ribbons in their hair, women in silk dresses with inverted tables on their heads, a carriage of monstrous proportions complete with horse and driver, and a man smoking—recognized as Lullin himself). Some 250 years on, it has become clear that experiences identical to Lullin’s are reported by c.10% of patients with moderate visual loss.

In 1936, Georges de Morsier, then a recently appointed lecturer in neurology at the University of Geneva, honoured Bonnet’s account of Lullin’s hallucinations by giving the name ‘Charles Bonnet syndrome’ to the clinical scenario of visual hallucinations in elderly individuals with eye disease (de Morsier 1936). However, de Morsier made it clear that eye disease was incidental, not causal, and in 1938 removed it entirely from the definition. In his view, Lullin’s hallucinations, and those of patients like him, were the result of an unidentified degenerative brain disease which did not cause dementia as it remained confined to the visual pathways. While the idea of honouring Bonnet was widely embraced by the medical community, de Morsier’s definition of the syndrome was not. Parallel uses of the term have emerged, some following de Morsier, others describing complex visual hallucinations with insight (ffytche 2005). Yet the use of the term that has found most favour describes an association of visual hallucinations with eye disease, reflecting mounting evidence for the pathophysiological role played by loss of visual inputs (see Burke 2002). While arguments continue over the use of the term, what is beyond dispute is that visual hallucinations are relatively common and occur in patients able to faithfully report their hallucinated experiences without the potential distortion of memory loss or mental illness. As foreseen by Bonnet, the hallucinations in such patients, and their associated brain states, provide important insights into the neural correlates of the contents of consciousness.

DOMINIC H. FFYTCHE

Bonnet, C. (1760). L’Essai analytique sur les facultés de l’âme.

Burke, W. (2002). ‘The neural basis of Charles Bonnet hallucinations: a hypothesis’. Journal of Neurology, Neurosurgery and Psychiatry (London), 73.

de Morsier, G. (1936). ‘Les automatismes visuels. (Hallucinations visuelles rétrochiasmatiques)’. Schweizerische Medizinische Wochenschrift, 66.

ffytche, D. H. (2005). ‘Visual hallucinations and the Charles Bonnet syndrome’. Current Psychiatry Reports, 7.

Flournoy, T. (1902). ‘Le cas de Charles Bonnet: hallucinations visuelles chez un vieillard opéré de la cataracte’. Archives de Psychologie (Geneva), 1.

Chinese room argument John Searle’s Chinese room argument goes against claims that computers can really think; against ‘strong *artificial intelligence’ as Searle (1980a) calls it. The argument relies on a thought experiment. Suppose you are an English speaker who does not speak a word of Chinese. You are in a room, hand-working a natural language understanding (NLU) computer program for Chinese. You work the program by following instructions in English, using data structures, such as look-up tables, to correlate Chinese symbols with other Chinese symbols. Using these structures and instructions, suppose you produce responses to written Chinese input that are indistinguishable from responses that might be given by a native Chinese speaker. By processing uninterpreted formal symbols (‘syntax’) according to rote instructions, like a computer, you pass for a Chinese speaker. You pass the *Turing test for Chinese. Still, it seems, you would not know what the symbols meant: you would not understand a word of the Chinese. The same, Searle concludes, goes for computers. And since ‘nothing depends on the details of the program’ or the specific psychological processes being ‘simulated’, the same objection applies against all would-be examples of artificial intelligence. That is all they ever will be, simulations; not the real thing.
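The rote procedure at the heart of the thought experiment can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions: the rulebook entries, the fallback reply, and the function name `work_program` are hypothetical, Searle specifies no particular rules, and any program able to pass for a native speaker would be vastly more elaborate. What the sketch shows is that the pairing of input with output depends solely on the shapes of the symbols.

```python
# Hypothetical rulebook pairing input symbol strings with output symbol strings.
# The entries are invented for illustration; Searle gives no specific rules.
RULEBOOK = {
    "你好吗": "我很好",
    "你会说中文吗": "会",
}

# Hypothetical reply used when no rule matches the input.
FALLBACK = "请再说一遍"


def work_program(written_input: str, rulebook: dict[str, str] = RULEBOOK) -> str:
    """Return the output string the rulebook pairs with the input string.

    The lookup compares character sequences only; nothing in this function
    represents what any of the symbols mean.
    """
    return rulebook.get(written_input, FALLBACK)


if __name__ == "__main__":
    print(work_program("你好吗"))
```

Nothing in the function consults a meaning for any symbol, which is the feature of the scenario on which Searle's conclusion trades. Whether a system built this way could nonetheless understand is exactly what the replies discussed in this entry dispute.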

In his seminal presentations, Searle speaks of ‘intentionality’ (1980a) or ‘semantics’ (1984), but to many it has seemed, from Searle’s reliance on ‘the first person point of view’ (1980b) in the thought experiment and in fending off objections, that the argument is really about consciousness. The experiment seems to show that the processor would not be conscious of the meaning of the symbols; not that the symbols or the processing would be meaningless … unless it is further assumed that meaning requires consciousness thereof. Searle sometimes bridles at this interpretation. Against Daniel Dennett, for example, Searle complains, ‘he misstates my position as being about consciousness rather than about semantics’ (1999:28). Yet, curiously, Searle himself (1980b, in reply to Wilensky 1980) admits he equally well ‘could have made the argument about pains, tickles, and anxiety’, when pains, tickles, and undirected anxiety are not intentional states. They have no semantics! The similarity of Searle’s scenario to ‘absent *qualia’ scenarios generally, and to Ned Block’s (1978) Chinese nation example in particular, further supports the thought that the Chinese room concerns consciousness, in the first place, and *intentionality only by implication (insofar as intentionality requires consciousness). What the experiment, then, would show is that hand-working an NLU program for Chinese would not give rise to any sensation or first-person impression of understanding; that no such computation could impart—not meaning itself—but phenomenal consciousness thereof.

Practical objections to Searle’s thought experiment concern whether human-level conversational ability can be computationally achieved at all, by look-up table or otherwise; and if it could be, whether such a program could really be hand-worked in real time, as envisaged. Such objections raise questions about computability and implementation that do not directly concern consciousness. More theoretical replies—granting the ‘what if’ of the thought experiment—however, either go directly to consciousness themselves; or else Searle’s responses immediately do.

The systems reply says the understander would not be the person in the room, but the whole system (person, instruction books, look-up tables, etc.). Even if meaning does require consciousness, according to this reply, from the fact that the person in the room would not be conscious of the meanings of the Chinese symbols, it does not follow that the system would not be; perhaps the system, consequently, understands. Many proponents of this reply, additionally, however, would not grant the supposition that meaning requires consciousness. According to conceptual role semantics, inscriptions in the envisaged scenario get their meanings from their inferential roles in the overall process. If they instance the right roles, they are meaningful; unconsciousness notwithstanding. The causal theory of reference (inspiring the robot reply), on the other hand, says that inscriptions acquire meaning from causal connections with the actual things they refer to. If computations in the room were caused by real-world inputs, as in perception; and if they caused real-world outputs, such as pursuit or avoidance (put the room in the head of a robot); then the inscriptions and computations would be about the things perceived, avoided, etc. This would give the inscriptions and computations semantics, unconsciousness notwithstanding.

Searle responds to the systems and robot replies, initially (1980a), by tweaking the thought experiment. Suppose you internalize the system (memorize the instructions and data structures) and take on all the requisite conceptual roles yourself. Put the room in a robot’s head to supply causal links. Still, Searle argues, this would not make the symbols meaningful to you, the processor, so as to give them ‘intrinsic intentionality’ (Searle 1980b) besides the ‘derived’ or ‘observer relative intentionality’ they have from the conventional Chinese meanings of the symbols. Derived intentionality, Searle explains, exists ‘only in the minds of beholders like the Chinese speakers outside the room’: this too is curious. Inferential roles and causal links are not observer-relative in this way. Inferential roles are system-internal by definition. On the other hand, if I avoid real dangers to myself (in the robot head) by heeding written warnings in Chinese, the understanding would effectively seem to be mine (or the robot’s) independently of any outside observers. Searle’s response in either case is to take the ‘in’ of ‘intrinsic’ to mean, not just objectively contained, but subjectively experienced; not just physically in but metaphysically inward or ‘ontologically subjective’: to take it in a way that seems to suppose meaning requires consciousness thereof. Searle’s later advocacy of ‘the Connection Principle’ (1990b) can be viewed as an attempt to discharge this supposition.

According to Searle’s Connection Principle, ‘ascription of an unconscious intentional phenomenon to a system implies the phenomenon is in principle accessible to consciousness’. As he goes on to explain it, unconscious psychological phenomena do not actually have meaning while unconscious ‘for the only occurrent reality’ of that meaning ‘is the shape of conscious thoughts’ (1992). Searle, however, remains vague about what these ‘shapes’ are and how their subjective and qualitative natures (on which he insists) could be meaning constitutive in ways that objective factors like inferential roles and causal links (as he argues) cannot be. Nor is this the only reason Searle’s Connection Principle has won few adherents. Psychological phenomena such as ‘unconscious *priming’ (e.g. subjects previously exposed to the word-pair ‘ocean–moon’ being subsequently more likely to respond ‘Tide’ when asked to name a laundry detergent) seem to show that unconscious mental states and processes do have intentionality while unconscious, since they are subject to semantic effects while unconscious. Furthermore, the supposition of unconscious intentionality has proved scientifically fruitful. ‘Cognitive’ theories such as Noam Chomsky’s theories of language and David Marr’s theories of vision suppose the existence of unconscious representations (e.g. language rules, and preliminary visual sketches) which are intentional (about the grammars they rule, or scenes preliminarily sketched) but not accessible to introspection as Searle’s Connection Principle requires. In contrast, the view of the mental that Searle endorses in accord with his Principle—according to which ‘the actual ontology of mental states is a first-person ontology’ and ‘the mind consists of qualia, so to speak, right down to the ground’ (1992)—seems, to many, scientifically regressive; seeming to ‘regress to the Cartesian vantage point’ (Dennett 1987) of dualism.

Of the Chinese room, Searle says, the ‘point of the story is to remind us of a conceptual truth that we knew all along’ (1988), that ‘syntax is not sufficient for semantics’ (1984). But the insufficiency of syntax in motion (playing inferential roles), and in situ (when causally connected) are hardly conceptual truths we knew all along; they are empirical claims the experiment has to support (Hauser 1997). The support offered would seem to be the intuition that such processing would not make the processor conscious of the meanings of the symbols. But, of course, it is supposed to be an understanding program, not a consciousness thereof (or introspective access) program: if the link between intentionality and consciousness his Connection Principle articulates were not already being presupposed, it seems Searle’s famous argument against ‘strong AI’ would go immediately wrong. However much Searle might like his Chinese room example to make a case against AI that stands independently of this ‘conceptual connection’ (Searle 1992), then, it seems extremely doubtful that it does.

See also, COGNITION, UNCONSCIOUS; CONSCIOUSNESS, CONCEPTS OF; DUALISM; INTENTIONALITY; QUALIA; SUBJECTIVITY

LARRY S. HAUSER

Block, N. (1978). ‘Troubles with functionalism’. In Savage, C. W. (ed.) Perception and Cognition: Issues in the Foundations of Psychology.

Dennett, D. (1987). The Intentional Stance.

Hauser, L. (1997). ‘Searle’s Chinese box: debunking the Chinese room argument’. Minds and Machines, 7.

Preston, J. and Bishop, M. (eds) (2001). Views into the Chinese Room: New Essays on Searle and Artificial Intelligence.

Searle, J. R. (1980a). ‘Minds, brains, and programs’. Behavioral and Brain Sciences, 3.

Searle, J. R. (1980b). ‘Intrinsic intentionality’. Behavioral and Brain Sciences, 3.

Searle, J. R. (1984). Minds, Brains and Science.

Searle, J. R. (1988). ‘Minds and brains without programs’. In Blakemore, C. (ed.) Mindwaves.

Searle, J. R. (1990a). ‘Is the brain’s mind a computer program?’ Scientific American, 262(1).

Searle, J. R. (1990b). ‘Consciousness, explanatory inversion, and cognitive science’. Behavioral and Brain Sciences, 13.

Searle, J. R. (1992). The Rediscovery of the Mind.

Searle, J. R. (1999). The Mystery of Consciousness.

Wilensky, R. (1980). ‘Computers, cognition and philosophy’. Behavioral and Brain Sciences, 3.

cocktail party effect See ATTENTION; CAPACITY LIMITS AND CONSCIOUSNESS

cognition, unconscious The unconscious mind was one of the most important ideas of the 20th century, influencing not just scientific and clinical psychology but also literature, art, and popular culture. Sigmund Freud famously characterized the ‘discovery’ of the unconscious as one of three unpleasant truths that humans had learned about themselves: the first, from Copernicus, that the Earth was not the centre of the universe; the second, from Darwin, that humans are just animals after all; and the third, ostensibly from Freud himself, that the conscious mind was but the tip of the iceberg (though Freud apparently never used this metaphor himself), and that the important determinants of experience, thought, and action were hidden from conscious awareness and conscious control.

In fact, we now understand that Freud was not the discoverer of the unconscious (Ellenberger 1970). The concept had earlier roots in the philosophical work of Leibniz and Kant, and especially that of Herbart, who in the early 19th century introduced the concept of a limen, or threshold, which a sensation had to cross in order to be represented in conscious awareness. A little later, Helmholtz argued that conscious perception was influenced by unconscious inferences made as the perceiver constructs a mental representation of a distal stimulus. In 1868, while Freud was still in short trousers, the Romantic movement in philosophy, literature, and the arts set the stage for Hartmann’s Philosophy of the Unconscious (1868), which argued that the physical universe, life, and individual minds were ruled by an intelligent, dynamic force of which we had no awareness and over which we had no control. And before Freud was out of medical school, Samuel Butler, author of Erewhon, drew on Darwin’s theory of evolution to argue that unconscious memory was a universal property of all organized matter.

Nevertheless, consciousness dominated the scientific psychology that emerged in the latter part of the 19th century. The psychophysicists, such as Weber and Fechner, focused on mapping the relations between conscious sensation and physical stimulation. The structuralists, such as Wundt and Titchener, sought to analyse complex conscious experiences into their constituent (but conscious) elements. James, in his Principles of Psychology, defined psychology as the science of mental life, by which he meant conscious mental life—as he made clear in the Briefer Course, where he defined psychology as ‘the description and explanation of states of consciousness as such’. Against this background, Breuer and Freud’s assertion, in the early 1890s, that hysteria is a product of repressed memories of trauma, and Freud’s 1900 topographical division of the mind into conscious, preconscious, and unconscious systems, began to insinuate themselves into the way we thought about the mind.

On the basis of his own observations of hysteria, fugue, and hypnosis, James understood, somewhat paradoxically, that there were streams of mental life that proceeded outside conscious awareness. Nevertheless, he warned (in a critique directed against Hartmann) that the distinction between conscious and unconscious mental life was ‘the sovereign means for believing what one likes in psychology, and of turning what might become a science into a tumbling-ground for whimsies’. This did not mean that the notion of unconscious mental life should be discarded; but it did mean that any such notion should be accompanied by solid scientific evidence. Unfortunately, as we now understand, Freud’s ‘evidence’ was of the very worst sort: uncorroborated inferences, based more on his own theoretical commitments than on anything his patients said or did, coupled with the assumption that the patient’s resistance to Freud’s inferences was all the more proof that they were correct—James’s ‘psychologist’s fallacy’ writ large. Ever since, the challenge for those who are interested in unconscious mental life has been to reduce the size of the tumbling-ground by tightening up the inference from behaviour to unconscious thought.

Unfortunately, the scientific investigation of unconscious mental life was sidetracked by the behaviourist revolution in psychology, initiated by Watson and consolidated by Skinner, which effectively banished consciousness from psychological discourse, and the unconscious along with it. The ‘cognitive revolution’ of the 1960s, which overthrew behaviourism, began with research on *attention, short-term memory, and imagery—all aspects of conscious awareness. The development of cognitive psychology led ultimately to a rediscovery of the unconscious as well, but in a form that looked nothing like Freud’s vision. As befits an event that took place in the context of the cognitive revolution, the rediscovery of the unconscious began with cognitive processes—the processes by which we acquire knowledge through perception and learning; store knowledge in memory; use, transform, and generate knowledge through reasoning, problem-solving, judgement, and decision-making; and share knowledge through language.

The first milestone in the rediscovery of the unconscious mind was a distinction between *automatic and controlled processes, as exemplified by various phenomena of perception and skilled reading. For example, the perceiver automatically takes distance into account in inferring the size of an object from the size of its retinal image (this is an example of Helmholtz’s ‘unconscious inferences’). And in the *Stroop effect, subjects automatically process the meaning of colour words, which makes it difficult for them to name the incongruent colour of the ink in which those words are printed. In contrast to controlled processes, automatic processes are inevitably evoked by the appearance of an effective stimulus; once evoked, they are incorrigibly executed, proceeding to completion in a ballistic fashion; they consume little or no attentional resources; and they can be performed in parallel with other cognitive activities. While controlled processes are performed consciously, automatic processes are unconscious in the strict sense that they are both unavailable to introspective access, known only through inference, and involuntary.

It is one thing to acknowledge that certain cognitive processes are performed unconsciously. As noted earlier, such a notion dates back to Helmholtz, and was revived by Chomsky, at the beginning of the cognitive revolution, to describe the unconscious operation of syntactic rules of language. But it is something else to believe that cognitive *contents—specific percepts, memories, and thoughts—could also be represented unconsciously. However, evidence for just such a proposition began to emerge with the discovery of spared *priming and source *amnesia in patients with the amnesic syndrome secondary to damage to the hippocampus and other subcortical structures. This research, in turn, led to a distinction between two expressions of episodic memory, or memory for discrete events: explicit memory entails conscious recollection, usually in the form of recall or recognition; by contrast, implicit memory refers to any effect of a past event on subsequent experience, thought, or action (Schacter 1987; see AUTONOETIC CONSCIOUSNESS).

Preserved priming in amnesic patients showed that explicit and implicit memory could be dissociated from each other: in this sense, implicit memory may be thought of as unconscious memory. Similar dissociations have now been observed in a wide variety of conditions, including the anterograde and retrograde amnesias produced by electroconvulsive therapy, general *anaesthesia, conscious sedation by benzodiazepines and similar drugs, *dementias such as Alzheimer’s disease, the forgetfulness associated with normal ageing, posthypnotic amnesia, and the ‘functional’ or ‘psychogenic’ amnesias associated with the psychiatric syndromes known as the dissociative disorders, such as ‘hysterical’ fugue and *dissociative identity disorder (also known as multiple personality disorder).

Implicit memory refers to the influence of a past event on the person’s subsequent experience, thought, or action in the absence of, or independent of, the person’s conscious recollection of that event. This definition can then serve as a model for extending the cognitive unconscious to cognitive domains other than memory. Thus, implicit perception refers to the influence of an event in the current stimulus environment, in the absence of the person’s conscious perception of that event (Kihlstrom et al. 1992). Implicit perception is exemplified by so-called subliminal perception (see PERCEPTION, UNCONSCIOUS), as well as the *blindsight of patients with lesions in striate cortex. It has also been observed in conversion disorders (such as ‘hysterical’ blindness); in the anaesthesias and negative *hallucinations produced by hypnotic suggestion; and in failures of conscious perception associated with certain attentional phenomena, such as unilateral neglect, dichotic listening, parafoveal vision, *inattentional blindness, repetition blindness, and the *attentional blink. In each case, subjects’ task performance is influenced by stimuli that they do not consciously see or hear—the essence of unconscious perception.

Source amnesia shades into the phenomenon of implicit *learning, in which subjects acquire knowledge, as displayed in subsequent task performance, but are not aware of what they have learned. Although debates over unconscious learning date back to the earliest days of psychology, the term implicit learning was more recently coined in the context of experiments on *artificial grammar learning (Reber 1993). In a typical experiment, subjects were asked to memorize a list of letter strings, each of which had been generated by a set of ‘grammatical rules’. Despite being unable to articulate the rules themselves, they were able to discriminate new grammatical strings from ungrammatical ones at better-than-chance levels. Later experiments extended this finding to concept learning, covariation detection, *sequence learning, learning the input–output relations in a dynamic system, and other paradigms. In source amnesia, as an aspect of implicit episodic memory, subjects have conscious access to newly acquired knowledge, even though they do not remember the learning experience itself. In implicit learning, newly acquired semantic or procedural knowledge is not consciously accessible, but nevertheless influences the subjects’ conscious experience, thought, and action.
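The kind of rule system used to generate such letter strings can be illustrated with a small finite-state grammar. The sketch below is a minimal example under stated assumptions: the states, letters, and transitions in `GRAMMAR` are hypothetical and are not the grammar used in the published experiments, and the function names are likewise invented for illustration. It shows how ‘grammatical’ strings can be generated by following permitted transitions, and how a new string can be classified as grammatical or ungrammatical.

```python
import random

# Hypothetical finite-state grammar, invented for illustration (not the
# grammar used in the original experiments). Each state lists the letters
# it may emit and the state each letter leads to; None marks the end.
GRAMMAR = {
    0: [("T", 1), ("P", 2)],
    1: [("S", 1), ("X", 3)],
    2: [("V", 2), ("X", 3)],
    3: [("S", None), ("V", None)],
}


def generate(rng, grammar=GRAMMAR):
    """Generate one grammatical string by a random walk from the start state."""
    state, letters = 0, []
    while state is not None:
        letter, state = rng.choice(grammar[state])
        letters.append(letter)
    return "".join(letters)


def is_grammatical(string, grammar=GRAMMAR):
    """Return True if the string traces a complete path through the grammar."""
    state = 0
    for letter in string:
        if state is None:  # letters remain after the grammar has ended
            return False
        next_states = [nxt for (emitted, nxt) in grammar[state] if emitted == letter]
        if not next_states:  # this letter is not permitted in this state
            return False
        state = next_states[0]
    return state is None  # the string must stop exactly at the end marker


if __name__ == "__main__":
    rng = random.Random(0)
    print([generate(rng) for _ in range(5)])
```

In the experimental paradigm, subjects see only strings of the kind `generate` produces, never the transition table itself; the finding is that they can later sort new strings much as `is_grammatical` does, without being able to state the rules.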

There is even some evidence for unconscious thought, defined as the influence on experience, thought, or action of a mental state that is neither a percept nor a memory, such as an idea or an image (Kihlstrom et al. 1996). For example, when subjects confront two problems, one soluble and the other not, they are often able to identify the soluble problem, even though they are not consciously aware of the solution itself. Other research has shown that the correct solution can generate priming effects, even when subjects are unaware of it. Because the solution has never been presented to the subjects, it is neither a percept nor a memory; because it has been internally generated, it is best considered as a thought. Phenomenologically, implicit thought is similar to the *feeling of knowing someone we cannot identify further, or the experience when words seem to be on the tip of the tongue; it may also be involved in intuition and other aspects of creative problem-solving.

With the exception of implicit thought, all the phenomena of the cognitive unconscious are well accepted, although there remains considerable disagreement about their underlying mechanisms. For example, it is not clear whether explicit and implicit memory are mediated by separate brain systems, or whether they reflect different aspects of processing within a single *memory system. The theoretical uncertainty is exacerbated by the fact that most demonstrations of implicit perception and memory involve repetition priming that can be based on an unconscious perceptual representation of the prime, and the extent of unconscious semantic priming, especially in the case of implicit perception, has yet to be resolved. One thing that is clear is that there are a number of different ways to render a percept or memory unconscious; the extent of unconscious influence probably depends on the particular means chosen.

Occasional claims to the contrary notwithstanding, the cognitive unconscious revealed by the experiments of modern psychology has nothing in common with the dynamic unconscious of classic psychoanalytic theory (Westen 1999). To begin with, its contents are ‘kinder and gentler’ than Freud’s primitive, infantile, irrational, sexual, and aggressive ‘monsters from the Id’; in addition, unconscious percepts and memories seem to reflect the basic architecture of the cognitive system, rather than being motivated by conflict, anxiety, and repression. Moreover, the processes by which emotions and motives are rendered unconscious seem to bear no resemblance to the constructs of psychoanalytic theory. This is not to say that emotional and motivational states and processes cannot be unconscious. If percepts, memories, and thoughts can be unconscious, so can feelings and desires. Of particular interest is the idea that stereotypes and prejudices can be unconscious, and affect the judgements and behaviours even of people who sincerely believe that they have overcome such attitudes (Greenwald et al. 2002).

Mounting evidence for the role of automatic processes in cognition, and for the influence of unconscious percepts, memories, knowledge, and thoughts, has led to a groundswell of interest in unconscious processes in learning and thinking. For example, many social psychologists have extended the concept of *automaticity to very complex cognitive processes as well as simple perceptual ones—a trend so prominent that automaticity has been dubbed ‘the new unconscious’ (Hassin et al. 2005). An interesting characteristic of this literature has been the claim that automatic processing pervades everyday life to the virtual exclusion of conscious processing—‘the automaticity of everyday life’ and ‘the unbearable automaticity of being’ (e.g. Bargh and Chartrand 1999). This is a far cry from the two-process theories that prevail in cognitive psychology, and earlier applications of automaticity in social psychology, which emphasized the dynamic interplay of conscious and unconscious processes. Along the same lines, Wilson has asserted the power of the ‘adaptive unconscious’ in learning, problem-solving, and decision-making (Wilson 2002)—a view popularized by Gladwell as ‘the power of thinking without thinking’ (Gladwell 2005). Similarly, Wegner has argued that conscious will is an illusion, and that the true determinants of conscious thoughts and actions are unconscious (Wegner 2002). For these theorists, automaticity replaces Freud’s ‘monsters from the Id’ as the third unpleasant truth about human nature. Where Descartes asserted that consciousness, including conscious will, separated humans from the other animals, these theorists conclude, regretfully, that we are automatons after all (and it is probably a good thing, too).

This stance, which verges on *epiphenomenalism, or at least conscious inessentialism, partly reflects the ‘conscious shyness’ of psychologists and other cognitive scientists, living as we still do in the shadow of functional behaviourism (Flanagan 1992), as well as a sentimental attachment to a crypto-Skinnerian situationism among many social psychologists (Kihlstrom 2008). But while it is clear that consciousness is not necessary for some aspects of perception, memory, learning, or even thinking, it is a stretch too far to conclude that the bulk of cognitive activity is unconscious, and that consciousness plays only a limited role in thought and action. ‘Subliminal’ perception appears to be analytically limited, and earlier claims for the power of *subliminal advertising were greatly exaggerated (Greenwald 1992). Assertions of the power of implicit learning are rarely accompanied by a methodologically adequate comparison of conscious and unconscious learning strategies or, for that matter, by a properly controlled assessment of subjects’ conscious access to what they have learned. Similarly, many experimental demonstrations of automaticity in social behaviour employ very loose definitions of automaticity, confusing the truly automatic with the merely incidental. Nor are there very many studies using techniques such as Jacoby’s process-dissociation procedure to actually compare the impact of automatic and controlled processes (Jacoby et al. 1997; see MEMORY, PROCESS-DISSOCIATION PROCEDURE).

So, despite the evidence for unconscious cognition, reports of the death of consciousness appear to be greatly exaggerated. At the very least, consciousness gives us something to talk about; and it seems to be a logical prerequisite to the various forms of social learning by precept, including sponsored teaching and the social institutions (like universities) that support it, which in turn make cultural evolution the powerful force that it is.

JOHN F. KIHLSTROM

Bargh, J. A. and Chartrand, T. L. (1999). ‘The unbearable automaticity of being’. American Psychologist, 54.

Ellenberger, H. F. (1970). The Discovery of the Unconscious: the History and Evolution of Dynamic Psychiatry.

Flanagan, O. (1992). Consciousness Reconsidered.

Gladwell, M. (2005). Blink: the Power of Thinking Without Thinking.

Greenwald, A. G. (1992). ‘New Look 3: Unconscious cognition reclaimed’. American Psychologist, 47.

——, Banaji, M. R., Rudman, L. A., Farnham, S. D., Nosek, B. A., and Mellott, D. S. (2002). ‘A unified theory of implicit attitudes, stereotypes, self-esteem, and self-concept’. Psychological Review, 109.

Hassin, R. R., Uleman, J. S., and Bargh, J. A. (eds) (2005). The New Unconscious.

Jacoby, L. L., Yonelinas, A. P., and Jennings, J. M. (1997). ‘The relation between conscious and unconscious (automatic) influences: a declaration of independence’. In Cohen, J. and Schooler, J. (eds) Scientific Approaches to Consciousness.

Kihlstrom, J. F. (2008). ‘The automaticity juggernaut’. In Baer, J. et al. (eds) Psychology and Free Will.

——, Barnhardt, T. M., and Tataryn, D. J. (1992). ‘Implicit perception’. In Bornstein, R. F. and Pittman, T. S. (eds) Perception Without Awareness: Cognitive, Clinical, and Social Perspectives.

——, Shames, V. A., and Dorfman, J. (1996). ‘Intimations of memory and thought’. In Reder, L. M. (ed.) Implicit Memory and Metacognition.

Reber, A. S. (1993). Implicit Learning and Tacit Knowledge: an Essay on the Cognitive Unconscious.

Schacter, D. L. (1987). ‘Implicit memory: history and current status’. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13.

Wegner, D. M. (2002). The Illusion of Conscious Will.

Westen, D. (1999). ‘The scientific status of unconscious processes: is Freud really dead?’ Journal of the American Psychoanalytic Association, 47.

Wilson, T. D. (2002). Strangers to Ourselves: Discovering the Adaptive Unconscious.

cognitive control and consciousness In a forced-choice reaction time task, responses are slower after an error. This is one example of dynamic adjustment of behaviour, i.e. control of cognitive processing, which according to Botvinick et al. (2001) refers to a set of functions serving to configure the cognitive system for the performance of tasks. We focus on the question of whether cognitive control requires conscious awareness. The question refers not to awareness of all aspects of the world surrounding the performing organism, but to conscious awareness of the control process itself, i.e. consciousness of maintaining the task requirements, supporting the processing of information relevant to the goals of the current task, and suppressing irrelevant information (van Veen and Carter 2006).

During the last quarter of the 20th century, the term control was contrasted with *automaticity (e.g. Schneider and Shiffrin 1977). Automatic processes were defined as being effortless, unconscious, and involuntary, and the terms unconscious and automatic were used interchangeably by some authors, leading to the conclusion that control should be viewed as constrained to conscious processing. However, it was shown that phenomena considered to be examples of automatic processing, such as the flanker effect and the *Stroop effect, showed a dynamic adjustment to external conditions, corresponding to the notion of control. In particular, some (e.g. Gratton et al. 1992) showed a reduction in the flanker effect after an incompatible trial, while others (e.g. Logan et al. 1984) showed sensitivity of the Stroop effect to the various trial types. Consequently, Tzelgov (1997) proposed to distinguish between monitoring, defined as the intentional setting of the goal of behaviour and the intentional evaluation of the outputs of a process, and control, defined as the sensitivity of a system to changes in inputs, which may reflect a feedback loop.

According to these definitions, monitoring can be considered to be the case of conscious control, that is, the conscious awareness of the representations controlled and the very process of their evaluation. The *global workspace (GW) framework proposed by Dehaene et al. (1998) may be seen as one possible instantiation of the notion of monitoring in neuronal terms. In this framework, unconscious processing reflects the activity of a set of interconnected modular systems. Conscious processing is possible due to a distributed neural system, which may be seen as a ‘workspace’ with long-distance connectivity that interconnects the various modules, i.e. the multiple, specialized brain areas. It allows the performance of operations that cannot be accomplished unconsciously and ‘are associated with planning a novel strategy, evaluating it, controlling its execution, and correcting possible errors’ (Dehaene et al. 1998:11). Within such a framework the anterior cingulate cortex (ACC) and the prefrontal cortex (PFC), two neural structures known to be involved in control, may be seen as parts of the GW, and their activity is consequently taken to indicate conscious processing.

In contrast, there are models of control that do not assume involvement of consciousness. For example, Bodner and Masson (2001) argue that the operations applied to the prime in order to identify and interpret it result in new memory *representations, which can later be used without awareness. Jacoby et al. (2003) referred to such passive control as ‘automatic’. A computational description of passive control is provided by the conflict-monitoring model of the Stroop task (Botvinick et al. 2001), which includes a conflict detection unit (presumed to correspond to the ACC) that triggers the control-application unit (presumed to correspond to the PFC). To be more specific, consider a presentation of an incongruent Stroop stimulus, for example, the word ‘BLUE’ printed in red ink, with instructions to respond with the colour of the ink. This results in a strong activation to respond with the word presented, and in parallel, the instructions cause activation of the colour the stimulus is written in. The resulting conflict in the response unit is detected by the ACC, which in turn augments the activation of the colour unit in the PFC, leading to the relevant response. Notice that no conscious decisions are involved in this process.
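
The feedback loop just described can be illustrated with a toy simulation. What follows is only a minimal sketch in the spirit of the conflict-monitoring idea, not the published model of Botvinick et al. (2001): the function names, the normalization of response activations, the leaky update rule, and every numerical parameter are assumptions chosen for illustration. Conflict is taken to be the product of the activations of the two competing responses, and detected conflict raises the level of control applied on the next trial. No step in the loop refers to awareness.

```python
# Toy conflict-monitoring loop. All names and numbers below are
# illustrative assumptions, not values from the published model.

WORD_STRENGTH = 1.0    # assumed: word reading is the stronger pathway
COLOUR_STRENGTH = 0.6  # assumed: colour naming is weaker without control
BASELINE = 1.0         # assumed: resting control level set by the instructions
GAIN = 4.0             # assumed: how strongly conflict recruits control
DECAY = 0.5            # assumed: carry-over of control from the previous trial


def response_conflict(congruent: bool, control: float) -> float:
    """Conflict-detection unit (ACC analogue).

    Returns the product of the normalized activations of the two
    competing responses; zero when word and ink colour agree.
    """
    if congruent:
        return 0.0  # both pathways drive the same response
    colour_drive = COLOUR_STRENGTH * (1.0 + control)  # control boosts colour
    word_drive = WORD_STRENGTH
    total = colour_drive + word_drive
    return (colour_drive / total) * (word_drive / total)


def update_control(control: float, conflict: float) -> float:
    """Control-application unit (PFC analogue): leaky, conflict-driven update."""
    return DECAY * control + (1.0 - DECAY) * (GAIN * conflict + BASELINE)


def simulate(trials: list[bool]) -> list[tuple[float, float]]:
    """Run a sequence of trials (True = congruent, False = incongruent).

    Returns, for each trial, the control level in force on that trial
    and the conflict that the trial produced.
    """
    control = BASELINE
    history = []
    for congruent in trials:
        conflict = response_conflict(congruent, control)
        history.append((control, conflict))
        control = update_control(control, conflict)
    return history


if __name__ == "__main__":
    for control, conflict in simulate([False, False, True, True]):
        print(f"control={control:.3f}  conflict={conflict:.3f}")
```

Under these assumptions, an incongruent trial raises control, so a second incongruent trial in a row produces less conflict than the first, and control relaxes back towards its baseline over congruent trials. The adjustment is entirely passive, which is the sense in which such control can be called automatic.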

Thus, the question of the relation between consciousness and cognitive control may be restated in terms of whether cognitive control requires conscious monitoring, as implied by the GW and similar frameworks, or whether it can be performed without the involvement of consciousness, as hypothesized by the conflict-monitoring model. Mayr (2004) reviewed an experimental framework for analysing the consciousness-based vs consciousness-free approaches to cognitive control by focusing on behavioural and neural (e.g. ACC activity and the error-related negativity, ERN) indications of control. He proposed to contrast these indications under a condition of conscious awareness vs absence of awareness of the stimuli presumed to trigger control by generating conflict. For example, in the study of Dehaene et al. (2003), the contrast is between an unmasked (high awareness) condition in which the participants can clearly see the prime, and a *masked (low awareness) condition. After reviewing a few studies that applied such a design, Mayr concluded that the emerging picture is still inconclusive. We agree that the proposed approach is very promising, yet some refinements are needed. First, in most cases discussed by Mayr, the manipulation of awareness was achieved by masking a prime stimulus. The critical assumption, that under masking conditions the participants are totally unaware of the masked stimulus and yet process it up to its semantic level, is still controversial (Holender 1986). Second, concerning the causal order: consider the case in which both behavioural and neuronal (i.e. ACC activity) markers of conflict are obtained only when the person is fully aware of the conflict-triggering stimulus. At face value, this seems to indicate that the causal link is from awareness to markers of conflict; however, it could equally be true that the causal link is from the markers of conflict to awareness. Mayr (2004:146) hints at this point by suggesting the ‘possibility that rather than consciousness being a necessary condition for conflict related ACC activity, conflict related ACC activity might be the necessary condition for awareness of conflict’. Third, concerning the assumed notion of conscious control: what is supposed to be manipulated in the awareness-control design is the awareness of the conflict. What is actually manipulated, however, is the awareness of the stimulus generating the conflict. Such awareness may be seen as a precondition for applying deliberate monitoring.

Thus, to make progress on the question of whether cognitive control requires consciousness, future research should distinguish between awareness of the stimulus that causes the conflict, awareness of the conflict per se, and awareness of the very process of control as implied by the notion of monitoring. Furthermore, such research should emphasize the distinction between consciousness as a condition for control processes and consciousness as a result of control processes.

JOSEPH TZELGOV AND GUY PINKU

Bodner, G. E. and Masson, M. E. J. (2001). ‘Prime validity affects masked repetition priming: evidence for an episodic resource account of priming’. Journal of Memory and Language, 45.

Botvinick, M., Braver, T., Barch, D., Carter, C., and Cohen, J. (2001). ‘Conflict monitoring and cognitive control’. Psychological Review, 108.

Dehaene, S., Kerszberg, M., and Changeux, J. P. (1998). ‘A neuronal model of a global workspace in effortful cognitive tasks’. Proceedings of the National Academy of Sciences of the USA, 95.

——, Artiges, E., Naccache, L. et al. (2003). ‘Conscious and subliminal conflicts in normal subjects and patients with schizophrenia: the role of the anterior cingulate’. Proceedings of the National Academy of Sciences of the USA, 100.

Gratton, G., Coles, M. G. H., and Donchin, E. (1992). ‘Optimizing the use of information: strategic control of activation and responses’. Journal of Experimental Psychology: General, 121.

Holender, D. (1986). ‘Semantic activation without conscious identification in dichotic listening, parafoveal vision, and visual masking: a survey and appraisal’. Behavioral and Brain Sciences, 9.

Jacoby, L. L., Lindsay, D. S., and Hessels, S. (2003). ‘Item-specific control of automatic processes: Stroop process dissociations’. Psychonomic Bulletin and Review, 10.

Logan, G., Zbrodoff, N., and Williamson, J. (1984). ‘Strategies in the color-word Stroop task’. Bulletin of the Psychonomic Society, 22.

Mayr, U. (2004). ‘Conflict, consciousness and control’. Trends in Cognitive Sciences, 8.

Schneider, W. and Shiffrin, R. M. (1977). ‘Controlled and automatic human information processing: I. Detection, search and attention’. Psychological Review, 84.

Tzelgov, J. (1997). ‘Specifying the relations between automaticity and consciousness: a theoretical note’. Consciousness and Cognition, 6.

van Veen, V. and Carter, C. S. (2006). ‘Conflict and cognitive control in the brain’. Current Directions in Psychological Science, 15.

cognitive feelings Cognitive feelings are a loose class of experiences with some commonality in their phenomenology, representational content, and function in the mental economy. Examples include *feelings of knowing, of familiarity, of preference, tip-of-the-tongue states, and the kinds of hunches that guide behavioural choice in situations ranging from implicit *learning paradigms to consumer choice. The concept overlaps with those of intuition, *metacognition, and *fringe consciousness, and probably has some degree of continuity with *emotional feeling.