task switching See WORKING MEMORY

temporality, philosophical perspectives Our experience of time over days, months, and years can involve complexities (as readers of Proust will be aware) but the principal psychological elements involved are not particularly mysterious. Our relationship with the medium-to-long term past depends heavily on memories and beliefs, whereas our relationship with the future is forged by expectations, anticipations, hopes, fears, intentions and the like. It is otherwise with the short term. Consider:

(1) Our immediate experience is confined to the present.

We can remember the past and anticipate the future, but our immediate experience is confined to the present. It seems obvious that we are only directly aware of what is happening now.

(2) The present is instantaneous.

This too looks very plausible. Although we often talk of the ‘present century’ or the ‘present day’, the contention that the present does have some duration quickly runs into difficulties: since some parts of such a duration will occur earlier than others, some parts must lie in the past with respect to others, so how can all parts be present? This familiar reasoning suggests that the present is the durationless boundary separating the past from the future. Now consider:

(3) We can directly experience change, succession, and persistence.

Some changes happen too quickly for us to perceive (e.g. a bullet in flight), others happen too slowly (e.g. the growth of an oak tree), but some changes can be perceived: we cannot see a tree growing, but we can certainly see its branches swaying in the wind—just as we see a car moving along the street, or hear the whirr of a drill, or feel the ebb and flow of a throbbing toothache. In such cases we seem to be aware of a continuous flow of sensory content.

Clearly, construed as a purely phenomenological claim, (3) seems very plausible. Unfortunately, it is difficult to reconcile with (1) and (2). Given that change and persistence both possess temporal extension, how can we directly experience them if our immediate experience is confined to a durationless instant?

This apparent paradox gave rise to the doctrine that the present as it features in our experience—the so-called ‘specious present’—is not instantaneous, but rather has some temporal depth. William James refers to it as ‘the short duration of which we are immediately and incessantly sensible’ (1890:631). If change and persistence feature in our immediate experience in the way they appear to, then we may well need to appeal to the specious present to explain how this is possible. But precisely how should the specious present be conceived? Much hangs on this question—not least for our understanding of the general structure of consciousness—but there is little agreement on how it should be answered. Opinions have tended to divide into two main camps: on the one hand, there are those who hold that our consciousness really does extend some short distance through time; on the other, there are those who hold that it merely seems to. In the absence of any widely agreed terminology, let us call the latter approach the retention theory (here following Kelly 2004) and the former the duration theory.

1. Retention theories

2. Duration theories

3. Contents and vehicles

4. Psychophysical findings

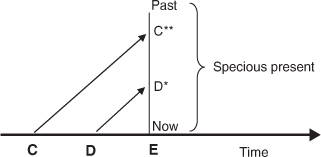

The retention approach is rooted in a widely accepted (perhaps ultimately Kantian) thesis that is then developed in a particular direction. In James’s words, the relevant thesis is that ‘A succession of feelings, in and of itself, is not a feeling of succession.’ (1890:628) It is easy to see what James means. Suppose you hear a succession of notes C, D, E, and that when you hear D you have no recollection whatsoever of having heard C, and similarly, by the time you hear E you have no recollection of having heard D (or C). You would experience the tones in sequence, but you would have no awareness of the sequence. Evidently, to experience a succession as a succession a sequence of experiences must be unified in some manner. It is here that retention theorists introduce a more contentious assumption: they hold that for a succession of contents to be unified in experience they must be apprehended together simultaneously, in a single momentary apprehension. Following Miller (1984), let us call this the principle of simultaneous awareness (PSA). Accordingly, when we hear the succession C–D–E as a succession we first hear C and then hear D accompanied by a representation (or in Husserl’s terminology, a retention) of C which places it in the very recent past, and then we hear E accompanied by a representation of D as ‘just past’ and C as ‘more past’ (Fig. T1). The simultaneous awareness of present and just-past phases of our *stream of consciousness underpins the experience of succession in such cases: when we hear the later note the earlier ones have not entirely vanished from our consciousness.
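The structure the retention theorist has in mind can be made concrete with a small sketch. It is only an illustration of the bookkeeping, not a model drawn from Husserl or Broad: the `Retention` record, the `pastness` index, and the `depth` limit are all assumptions introduced here. Each momentary apprehension holds the present note together with representations of earlier notes, each tagged with how far back it is placed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Retention:
    """Hypothetical record of a just-past content and how far back it is placed."""
    content: str
    pastness: int  # 1 = 'just past', 2 = 'more past', and so on


def momentary_apprehension(heard, depth=2):
    """Return (present content, retentions) once the notes in `heard` have sounded.

    Everything returned belongs to a single momentary act of awareness.
    `depth` is an assumed limit on how many earlier contents are retained.
    """
    present = heard[-1]
    earlier = heard[:-1][-depth:] if depth > 0 else []
    retentions = [
        Retention(content=note, pastness=len(earlier) - i)
        for i, note in enumerate(earlier)
    ]
    return present, retentions


notes = ["C", "D", "E"]
for k in range(1, len(notes) + 1):
    present, retentions = momentary_apprehension(notes[:k])
    print(present, [(r.content, r.pastness) for r in retentions])
```

On the final pass the single apprehension contains E as present, D with pastness 1, and C with pastness 2, all held at once as PSA requires.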

Fig. T1. Hearing the succession C–D–E.

C. D. Broad (1938) expounded an account along these lines in more detail, and a yet more sophisticated—though at times obscure—account (or succession of accounts) had already been developed by Husserl, in his various writings on the topic. The relevant Husserlian texts can be found in Husserl (1991); see Miller (1984) for a useful exposition.

The retention approach faces two particularly pressing problems. The first concerns the relationship between neighbouring specious presents. The vertical line in Fig. T1 depicts just one specious present; in reality (if the retention theory were correct) it would be surrounded on each side by other specious presents, each with a slightly different content. If each specious present consists of an entirely self-contained phase of experience, it is by no means obvious how they can combine to form a continuous stream of consciousness of the sort we typically enjoy. The second difficulty lies with the retentions themselves: how is it that a collection of simultaneously occurring momentary contents can appear to be spread through time? Returning to Fig. T1, why is it that E, D*, and C** are apprehended as a succession rather than as a chord?

Husserl was well aware of both these potential pitfalls. He appreciated that successive stream-phases had to be connected, and postulated a level of ‘absolute time-consciousness’ to accomplish this. He also saw that if retentions were akin to ordinary memory-impressions they would not appear spread through time. He thus stipulated that retentions function in a different way: they directly present the past as past. Opinion remains divided over whether Husserl solved these problems, or merely appreciated what a successful solution would look like (Dainton 2003, Gallagher 2003).

Despite these difficulties (and obscurities), the retention theory in its Husserlian guise has inspired work in cognitive science and neuroscience: see Van Gelder (1996), Varela (1999), Grush (2005).

Whereas the retention approach confines our immediate experience to momentary slices, duration theorists hold that it extends a short distance through time, and hence is well suited to embrace temporally extended events. When it comes to spelling out the precise manner in which our consciousness extends through time, opinions diverge.

In his earlier writings on time-perception in his Scientific Thought, Broad (1923) combined the thesis that our consciousness is temporally extended with PSA: he accomplished this by holding that we are at each moment aware of a short stretch of the recent past, in the manner depicted in Fig. T2. Only three momentary acts of awareness are shown here—A1, A2,

This theory certainly allows us to see how the specious present could be ‘the short duration of which we are immediately and incessantly sensible’, but in other respects it is problematic. Since the contents of neighbouring awarenesses (such as A1 and A2) overlap, will not the same occurrences be experienced several times over? There is a further, and more general worry. If Broad’s theory is correct, we are at each moment directly aware of what has been and gone. How plausible is this?

An alternative (and perhaps less problematic) form of the duration theory emerges if the commitment to PSA is dropped. Rather than supposing that the different phases of a temporal spread of content are unified by being presented simultaneously to a momentary awareness, we recognize that the relationship of ‘experienced togetherness’ (or co-consciousness) comes in synchronic and diachronic forms: co-consciousness can obtain at a time, but it can also obtain over (brief) intervals of time. When it does, the contents so unified have the form of what James termed a ‘duration-block’, i.e. a temporal spread that is apprehended as a temporally extended whole, a whole whose parts are connected by the relationship of diachronic co-consciousness. Since PSA has been abandoned, it is no longer being assumed that the successive phases of a duration-block are apprehended simultaneously at any one point in time: at the phenomenal level they exist only as a succession. Wholes of this kind are not fully experienced as such at each moment of their duration; rather, they are experienced as wholes over the full course of their duration. (There may be a sense in which James’s talk of a ‘block’ in this context is misleading: these units of experience are by no means static or frozen; they typically contain change and movement.)

Fig. T2. Momentary acts of awareness.

How do duration-blocks, thus conceived, combine to form a stream of consciousness? Since from a *phenomenological perspective our experience is typically continuous, not packaged into discrete pulses, it is not a (phenomenologically) viable option to hold that they are laid end to end, in the manner of a row of bricks. Continuity can be secured if neighbouring blocks overlap, by sharing common parts, in the manner shown in Fig. T3. Hence, and recalling our earlier example, if a subject hears a succession of tones C–D–E, this might take the form of two specious presents, one consisting of the succession C–D, the other D–E, where the experiencing of D in the latter is numerically identical with the experiencing of D in the former. If successive specious presents overlap in this manner, the repetitions which plagued Broad’s version of the duration theory are eliminated.
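The claim that overlapping blocks share numerically identical parts can be illustrated with a short sketch. The `Experiencing` class, the window `span`, and the function name are assumptions made for the illustration; nothing here is offered as the overlap theorist's own formalism. Object identity stands in for numerical identity of token experiences.

```python
class Experiencing:
    """Hypothetical token experience; identity (not equality) is what matters."""

    def __init__(self, content):
        self.content = content

    def __repr__(self):
        return f"Experiencing({self.content!r})"


def overlapping_specious_presents(stream, span=2):
    """Slide a window of `span` phases along the stream, one phase at a time."""
    return [stream[i:i + span] for i in range(len(stream) - span + 1)]


stream = [Experiencing(note) for note in ("C", "D", "E")]
first, second = overlapping_specious_presents(stream)

# The D in the second block is the very same token as the D in the first.
print(first, second, first[1] is second[0])

# Across both blocks there are only three experiencings, so nothing is repeated.
distinct = {id(e) for block in (first, second) for e in block}
print(len(distinct))
```

Because the two windows hold the same object for D, the stream contains three experiencings rather than four, which is the sense in which the repetition worry does not arise.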

This ‘overlap’ version of the duration approach circumvents some problems, but it also faces difficulties of its own. How is the apparent direction of experienced time to be explained on this view? Can phenomenal unity be spread through time in the way envisaged? For further discussion see Dainton (2000) and Kelly (2004).

A signpost bearing the message ‘I weigh a ton’ need not itself weigh a ton, and what goes for signposts goes for thoughts and utterances and other modes of representation. Quite generally we need to distinguish between the vehicle of a representation and its content: the latter is the state of affairs represented, the former is what carries the representation. Although it is possible for vehicles to possess the properties represented by their contents—some signposts saying ‘I weigh a ton’ do weigh a ton—there is no need for them to do so: it may well be the case that no conscious thoughts carrying this content weigh anything like a ton.

Experiences have temporal features (they can extend through time) but they also have contents which represent temporal features. How do the temporal features of experiences which are the vehicles of such contents relate to the contents themselves? Since experiential contents can be classified in different ways this is potentially a complex issue, but some basic observations can be made. It is obvious that in the case of conscious thoughts there can be a wide discrepancy between vehicle-properties and content-properties: it can take almost no time at all to think ‘This has been going on for hours’. In the case of perception, the time-lags created by the finite speed of signal transmission and neural processing mean we perceive events some time after they actually occur (millions of years in the case of distant stars). Discrepancies of this kind can be eliminated by construing ‘content’ in a narrow way, so as to refer only to the phenomenal features of our experiences, rather than their usually distant causes. Even when content is thus construed, on the retention model there remains a difference, of a systematic kind, between content- and vehicle-properties. The relevant vehicles are momentary (or very brief) experiences, but the phenomenal contents of these experiences are typically not momentary: the content of the momentary experience depicted in Fig. T1 is the sequence of phenomenal tones C–D–E. This divergence coexists with a correlation, in that there is no discrepancy between the order in which experiences occur and the order in which they are represented as occurring. Duration theorists agree that the latter correlation obtains but posit a still stronger one. The experience which carries the content C–D–E is itself extended over time. Indeed, if this content is construed narrowly (as denoting purely phenomenal items, as opposed to their environmental causes) the duration of the experiential vehicle and its content perfectly coincide.

Dennett moves in the opposite direction. He argues that (over quite brief time scales) there need be no correspondence between the order in which we represent events as occurring and the order in which these events are apprehended: ‘what matters is that the brain can proceed to control events “under the assumption that A happened before B” whether or not the information that A has happened enters the relevant system of the brain and gets recognized as such before or after the information that B has happened.’ (Dennett 1991:149) It is not obvious (to say the least) that this degree of dissociation is compatible with the phenomenology of temporal perception: when I directly perceive a succession C–D, it is hard to believe that my hearing of C occurs later than my hearing of D. But Dennett propounds this view in the context of his *multiple drafts model, according to which there is no fact of the matter as to the precise moment at which a subject becomes conscious of a given stimulus. For Dennett, the project of attempting to discover the temporal microstructure of phenomenal consciousness is misconceived, and our consciousness does not possess the continuity writers such as James attribute to it—and in line with this Dennett often prefers to speak of ‘informational vehicles’ rather than ‘experiences’. It goes without saying that Dennett’s operationalist conception of consciousness—‘There is no reality of conscious experience independent of the effects of the various vehicles of content on subsequent action’ (1991:132)—is itself contentious.

Fig. T3. Continuity secured by overlapping blocks.

If the specious present does exist (in one or other of the forms just outlined), what is its duration? Since there may well be intersubjective differences—and even in the case of a single subject, it may be different at different times—there may well be no one answer to this question. Nonetheless, we are all in a position to make our own rough estimations. If I clap my hands twice in a row, I am no longer experiencing the first clap when I hear the second, so an answer of ‘a few seconds at most’ has some plausibility, at least in the auditory case. James estimated that it typically extended to a dozen or so seconds, and sometimes more. Most commentators have been baffled by James’s opting for an estimation of this magnitude, and more recent experimental work (e.g. Rühnau 1995) suggests a far shorter duration.

Experimental evidence has given rise to further puzzles. One such derives from Libet’s work on how long it takes our brains to turn a perceptual stimulus into a conscious experience (Libet 2004). Libet concluded that the delay is typically of the order of a full half-second. If this is correct the implications are potentially significant: since we frequently react to events in less than half a second, it seems that our conscious decision-making is often nothing more than an epiphenomenal after-effect. For critical discussion of Libet see Dennett (1991:Chs 5–6) and Pockett (2000).

For further relevant psychophysical results and discussion see TEMPORALITY, SCIENTIFIC PERSPECTIVES.

BARRY DAINTON

Broad, C. D. (1938). An Examination of McTaggart’s Philosophy.

Dainton, B. (2000). Stream of Consciousness. London: Routledge.

Dainton, B. (2003). ‘Time in experience: reply to Gallagher’. Psyche, 9.

Dennett, D. (1991). Consciousness Explained.

Gallagher, S. (2003). ‘Syn-ing in the stream of experience: time-consciousness in Broad, Husserl, and Dainton’. Psyche, 9.

Grush, R. (2005). ‘Brain time and phenomenological time’. In Brook, A. & Atkins, K. (eds) Cognition and the Brain: The Philosophy and Neuroscience Movement.

Husserl, E. (1991). On the Phenomenology of the Consciousness of Internal Time (1893–1917), ed. and transl. J. B. Brough.

James, W. (1890/1950) The Principles of Psychology.

Kelly, S. (2004). ‘The Puzzle of Temporal Experience’. In Brook, A. and Atkins, K. (eds) Philosophy and Neuroscience, Cambridge: Cambridge University Press.

Libet, B. (2004). Mind Time.

Miller, I. (1984). Husserl, Perception, and Temporal Awareness.

Pockett, S. (2000). ‘On subjective back-referral and how long it takes to become conscious of a stimulus: A reinterpretation of Libet’s data’. Consciousness and Cognition, 11.

Rühnau, E. (1995). ‘Time gestalt and the observer’. In Metzinger, T. (ed.) Conscious Experience.

Van Gelder, T. (1996). ‘Wooden iron? Husserlian phenomenology meets cognitive science’. Electronic Journal of Philosophy, 4.

Varela, F. (1999). ‘Present-time consciousness’. Journal of Consciousness Studies, 6.

temporality, scientific perspectives Most of the actions that brains carry out on a daily basis—such as perceiving, speaking, and driving a car—require exact timing on the scale of tens to hundreds of milliseconds. Although this timing may seem effortless, brains in fact have a difficult problem to solve: signals from different modalities are processed at different speeds in distant neural regions. To be useful for a unified conscious impression of ‘what just happened’, signals must become aligned in time and correctly tagged to outside events. Understanding the timing of events—such as a motor act followed by a sensory consequence—is critical for moving, speaking, determining causality, and decoding the barrage of temporal patterns at our sensory receptors.

Scattered confederacies of investigators have been interested in time for decades, but only in the past decade has a concerted effort been applied to old problems. Now, experimental psychology is striving to understand how animals perceive and encode temporal intervals, while electrophysiology and neuroimaging unmask how neurons and brain regions underlie temporal computations. The questions being addressed include: How are signals entering various brain regions at varied times coordinated with one another for a unified conscious experience? What is the temporal precision with which perception represents the outside world? How are intervals, durations, and orders coded in the brain? What factors (causality, attention, adrenaline [epinephrine], eye movements) influence temporal judgements, and why? Does the brain constantly recalibrate its time perception?

Most of what we know about time in the brain comes from psychophysical experiments. One class of studies involves ways in which duration perception distorts—for example, observers can misperceive durations during rapid eye movements (saccades), or after adaptation to flickering or moving stimuli. More dramatically, during brief but dangerous events such as car accidents and robberies, many people report that events seem to have passed in slow motion, as though time slowed down. To test this sort of conscious experience, Stetson et al. (2007) dropped participants in free fall from a tower 150 feet (46 m) high (they were caught in a net below). During this 3 s psychophysical experiment, changes in the speed of visual processing were measured by a wristwatch-like display strapped to the participant’s wrist. The surprising result: although participants retrospectively estimated (with a stopwatch) the duration of their own fall to be 35% longer than others’ falls, they did not gain increased temporal resolution—in other words, they could not actually see the world in slow motion. This result illustrates that conscious ‘time’ is not a unified experience in the brain, but instead that aspects of it (e.g. durations) can change with no concomitant change in other aspects (e.g. flicker rate).

Safer experiments in the laboratory have confirmed this same conclusion. For example, when many stimuli are shown in succession, an unpredicted ‘oddball’ stimulus in the series appears to last subjectively longer than the repeated stimuli when they are presented for the same objective duration (Tse et al. 2004). This illusion was originally described as the ‘subjective distortion of time’, but we now know that it is only duration, not time in general, that is distorted: even during the perceptually expanded oddball, flicker rates do not change and auditory pitches do not lower.

It now seems likely that the story of time will emerge in the same manner as the story of vision. Although vision seems like an effortless, unified experience, it is underpinned by a motley crew of different neural mechanisms. The same applies in the temporal domain. Varied judgements—such as simultaneity, duration, flicker rate, order, and others—are underpinned by separate neural mechanisms that usually work in concert but are increasingly separable in the laboratory—demonstrating that their cooperation is typical but not necessary. The word ‘time’ is currently loaded with too much semantic weight; future experiments will be forced to be more specific about which aspect of time they are exploring, abandoning the naive assumption that time is a single, unified experience.

Another illustration that time perception is a construction of the brain comes from examples of its dynamic recalibration. Judging the order of action and sensation is essential for determining causality. Accordingly, the nervous system must be able to recalibrate its expectations about the normal temporal relationship between action and sensation in order to overcome changing neural latencies. A novel illusion in this domain shows not only that the perceived time of a sensation can change, but also that temporal order judgements of action and sensation can become reversed as a result of a normally adaptive recalibration process. When a fixed delay was consistently injected between the participant’s keypress and a subsequent flash, adaptation to this delay induced a reversal of action and sensation: flashes appearing at delays shorter than the injected delay were perceived as occurring before the keypress (Stetson et al. 2006). This illusion appears to reflect a recalibration of motor-sensory timing, which results from a neural prior expectation that sensory consequences should follow motor acts with little delay.
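The logic of the reversal can be sketched in a few lines. This is a deliberately simplified illustration, not the analysis used in the cited study: the function names, the `gain` parameter, and all millisecond values are assumptions chosen for the example. The idea is that adaptation shifts the point treated as 'no delay', so a flash arriving earlier than that point is assigned a negative perceived offset.

```python
def perceived_offset_ms(flash_delay_ms, adapted_delay_ms, gain=1.0):
    """Perceived time of the flash relative to the keypress, in milliseconds.

    Negative values mean the flash seems to precede the keypress.
    `gain` (assumed) is the fraction of the injected delay absorbed by the
    recalibration; 1.0 means the adapted delay is treated as 'zero delay'.
    """
    return flash_delay_ms - gain * adapted_delay_ms


def judged_order(flash_delay_ms, adapted_delay_ms, gain=1.0):
    """Temporal order judgement implied by the perceived offset."""
    offset = perceived_offset_ms(flash_delay_ms, adapted_delay_ms, gain)
    return "flash before keypress" if offset < 0 else "keypress before flash"


# Illustrative values only.
print(judged_order(35, adapted_delay_ms=0))     # no adaptation
print(judged_order(35, adapted_delay_ms=100))   # flash earlier than the adapted delay
print(judged_order(150, adapted_delay_ms=100))  # flash later than the adapted delay
```

With full gain, any flash delay shorter than the adapted delay comes out as preceding the keypress; a smaller assumed gain would narrow the range of delays over which the reversal appears.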

Another clue in our search for understanding time in the brain is the basic temporal limits on perceiving various aspects of the visual world. Specialized processors in our visual system allow us to perceive certain changes rapidly, on timescales of a few dozen milliseconds or less. But when a specialized detector is not available for a visual timing judgement, the brain shows very poor temporal resolution (e.g. six changes per second; Holcombe and Cavanagh 2001). There is no single speed at which the brain processes information, consistent with the emerging picture that a diverse group of neural mechanisms mediates temporal judgements.

We have so far highlighted psychophysical findings which demonstrate that time judgements can distort, recalibrate, reverse, or have a range of resolutions depending on the stimulus and on the state of the viewer. But the theoretical details of the neural mechanisms are in debate. For the experience of duration, at least at short time scales, some have proposed a simple ‘counter’ model, in which one part of the brain provides the ticking of a pacemaker, and another mechanism acts like a counter. In this framework, distortions of time perception are thought to be the result of a speeding or slowing pacemaker. If the brain’s assessment of duration is the output of such a counter, then a pacemaker ticking faster than usual would lead it to the wrong conclusion that more objective time had passed.
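A toy version of the counter model shows why a faster pacemaker inflates the duration estimate. The function names and the rates used are illustrative assumptions, not empirical values: the counter accumulates ticks at whatever rate the pacemaker is actually running, but the read-out divides by the rate the pacemaker is assumed to have.

```python
def count_ticks(objective_duration_s, pacemaker_rate_hz):
    """Ticks accumulated by the counter over the interval."""
    return round(objective_duration_s * pacemaker_rate_hz)


def estimated_duration_s(ticks, assumed_rate_hz):
    """Duration read off the counter, assuming the pacemaker ran at its usual rate."""
    return ticks / assumed_rate_hz


NOMINAL_RATE_HZ = 100.0  # illustrative value, not an empirical estimate

# Same objective interval (2 s), with a normal and a sped-up pacemaker.
normal = estimated_duration_s(count_ticks(2.0, NOMINAL_RATE_HZ), NOMINAL_RATE_HZ)
sped_up = estimated_duration_s(count_ticks(2.0, 1.3 * NOMINAL_RATE_HZ), NOMINAL_RATE_HZ)
print(normal, sped_up)
```

The sped-up case yields the larger estimate even though the objective interval is identical, which is the distortion the counter model attributes to pacemaker rate.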

The counter model has lost momentum, however, largely because no good evidence has emerged to support it. In contrast to a counter which integrates events, a ‘state-dependent’ network model proposes that the way network patterns evolve through time can code for time itself (Mauk and Buonomano 2004). In other words, as patterns of neural activity unfold through time, a snapshot of the pattern at every moment can encode how long it has been since the original event happened. This framework suggests that temporal processing is distributed throughout the brain rather than relying on a centralized timing area. Further experiments are needed to cleanly separate the domains of integrator models and state-dependent network models, and understanding the difference will be critical to our search for how brains tell time.
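The contrast with a counter can be made concrete with a toy stand-in for a state-dependent code. It is not the model of Mauk and Buonomano: the three decaying units, their time constants, and the nearest-match decoder are all assumptions made for the sketch. Nothing is integrated or counted; elapsed time is recovered from a single snapshot of the evolving pattern.

```python
import math

TIME_CONSTANTS_S = (0.05, 0.2, 0.8)  # assumed decay constants for three toy units


def network_state(elapsed_s):
    """Activity of each unit `elapsed_s` seconds after the triggering event."""
    return tuple(math.exp(-elapsed_s / tau) for tau in TIME_CONSTANTS_S)


def decode_elapsed(state, candidates):
    """Pick the candidate time whose predicted pattern is closest to `state`."""
    return min(candidates, key=lambda t: math.dist(state, network_state(t)))


candidates = [i / 100 for i in range(0, 101)]  # 0.00 s to 1.00 s in 10 ms steps
snapshot = network_state(0.37)
print(decode_elapsed(snapshot, candidates))
```

Because each unit decays at a different rate, the joint pattern is distinct at every moment in this range, so a snapshot suffices to say how long ago the event occurred.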

At the level of the behaving animal, experiments in monkeys have shown that neurons in the posterior parietal cortex can encode signals related to the passage of time. In humans, *functional brain imaging studies such as PET and fMRI are identifying brain regions (including the posterior parietal area) that are involved in various sorts of temporal judgements.

Over the last decade researchers have come to view certain disorders—e.g. aphasias and dyslexias—as potentially being disorders of timing rather than disorders of language. Other deficits in time perception are found in a variety of disorders such as Parkinson’s, attention deficit hyperactivity disorder (ADHD), and *schizophrenia. Ongoing studies of time in the brain are expected to uncover other contact points with clinical neuroscience.

DAVID M. EAGLEMAN

Buhusi, C. V. and Meck, W. H. (2005). ‘What makes us tick? Functional and neural mechanisms of interval timing’. Nature Reviews Neuroscience, 6.

Holcombe, A. O. and Cavanagh, P. (2001). ‘Early binding of feature pairs for visual perception’. Nature Neuroscience, 4.

Mauk, M. D. and Buonomano, D. V. (2004). ‘The neural basis of temporal processing’. Annual Review of Neuroscience, 27.

Stetson, C., Cui, X., Montague, P. R., and Eagleman, D. M. (2006). ‘Motor-sensory recalibration leads to an illusory reversal of action and sensation’. Neuron, 51.

——, Fiesta, M. P., and Eagleman, D. M. (2007). ‘Does time really slow down during a frightening event?’ PLoS ONE, 2.

Tse, P. U., Intriligator, J., Rivest, J., and Cavanagh, P. (2004). ‘Attention and the subjective expansion of time’. Perception and Psychophysics, 66.

theory of mind and consciousness The human theory of mind (ToM) encompasses the cognitive and conceptual tools with which people grasp the mental states of others.

1. What ToM is and is not

2. The importance of ToM in social functioning

3. Research topics

4. ToM and consciousness

The ‘theory of mind’ label is often used synonymously with terms such as naive theory of action, folk psychology, or mind-reading. Most fundamentally, ToM refers to the network of concepts and assumptions people make about what ‘minds’ are and how they relate to behaviour (Wellman 1990). Central elements in this network include the concepts of agency and intentionality, the distinction between observable behaviour and unobservable mental states, and distinctions among a number of specific mental states, such as belief, desire, intention, and various emotions (Malle 2005). Some scholars also include within the ToM label the cognitive mechanisms by which people come to represent others’ mental states. But theorists disagree on which mechanisms are most central, and there are in fact many such mechanisms, such as rule-based inferences, stereotypes, simulation (e.g. grasping the other’s mental states by running a ‘model’ composed of one’s own mental states), emotional contagion (e.g. the mere presence of a joyful person makes another person joyful as well), and so on.

In addition to clarifying what ToM is, it is also important to clarify what it is not. ToM is not a set of cultural beliefs about mind and behaviour. Rather, it comprises a conceptual framework, a set of fundamental distinctions, requisite for developing any cultural beliefs about how minds and behaviour work. ToM is also not a set of social norms or obligations that can be adhered to or disregarded. A social norm might be to punish unintentional behaviours less than intentional ones, but the intentional–unintentional distinction itself is not a cultural norm. Strictly, ToM should also be distinguished from the ability to understand other minds. ToM provides the conceptual assumptions and distinctions on which a variety of psychological processes (inference, simulation, empathy, etc.) rely, and concepts and processes together constitute the person’s ability to grasp mental states.

Ubiquitous in social interaction, ToM is often taken for granted by ordinary people and scientists alike—after all, fish are usually the last to notice the water. But the importance of ToM in successful social functioning cannot be overstated, as people who appear to lack ToM often have tremendous difficulties in everyday social situations. Consider a man leaving a tip at a restaurant. Without a ToM, one could give a mechanistic account of this behaviour: ‘The man left the money on the table because something forced his hand to grab his wallet, remove some money from it, and set the money on the table.’ How would people with a ToM account for this behaviour? They would refer to beliefs, desires, and the agent’s decision to act (Dennett 1987). For example, ‘He left a tip because he wanted the waiter to know he appreciated the service’; or ‘Because he thought that tipping is expected in this country’; or ‘Because he had decided to become a more generous person and thought this was a good opportunity to start.’ For organisms with a ToM, these explanations not only clarify what caused the behaviour but also show how one can make sense of it as an interplay between the person’s mental states and observable behaviour.

Recent research on the human ToM has focused on a variety of topics: ToM’s rapid development from infancy into the early school years; the possible absence of a genuine ToM even in our closest primate relatives (they appear to be excellent behaviour readers but apparently not mind-readers); the relationship between ToM and other faculties, such as executive functioning and language; the grounding of ToM in specific brain mechanisms; and the severe challenges for people who seem to lack ToM capacities—primarily *autistic individuals and perhaps some with *schizophrenia (Baron-Cohen et al. 2000).

An emergent topic is the question of whether ToM is employed primarily consciously or unconsciously. If ToM is the conceptual framework on which a number of different psychological tools rely, then the answer is twofold. As a conceptual framework, ToM is normally unconscious (though it can be made conscious by specifically asking people about their conceptual assumptions). Among the cognitive mechanisms that operate on those concepts, some are conscious, some are not. Among the conscious ones, we can list active simulation of the other’s mental states, search for prior knowledge about the behaviour, the agent, or the context, and specific attempts to detect subtle signs in the agent’s outward behaviour that might reveal inward states. The list of unconscious processes is longer: tracking gaze and body orientation, parsing the behaviour stream into intention-relevant units, empathy by emotional contagion, reading of facial and body expressions that transparently indicate the underlying mental state, and projection of one’s own beliefs and perceptions onto another person. For some of these unconscious processes, the perceiver may not literally represent a mental state, making them precursors or facilitators of ToM. Many of these processes have indeed been found operative at a very early age and some among other primates. Several researchers assume that a conscious grasp of mental states requires the prior operation, both ontogenetically and phylogenetically, of many of these unconscious processes.

Even unconscious ToM mechanisms can confer powerful adaptive advantages on the individual. Parsing others’ intentional actions, recognizing their goal-directedness, and sensing in one’s own affective system the affect of others both facilitate social coordination and open opportunities for influence and manipulation. In addition, processes like emotional contagion may select for prosocial behaviours because making others feel good has the attractive consequence that, due to the automatic empathy with others’ states, one feels good oneself. Indeed, there is experimental evidence that prosocial behaviour can produce positive mood in the helper.

If ToM has evolved from a more automatic and unconscious to a more deliberate and conscious variety, one might ask whether the evolution of ToM can tell us something about the evolution of consciousness. What might precipitate the emergence of conscious processes in social perception? Automatic processes are sufficient as long as the input stimuli fall within the sensitivity range of the response mechanism, such as prototypical facial expressions, coordinated gaze and body orientation, repeated behaviour patterns, and the like. However, when the input stimuli lie outside these ranges (e.g. because they are novel or ambiguous), the organism must respond with a more flexible system. Conscious processing appears to provide two distinct advantages: it slows down processing to gain time for ‘re-computing’ the input stimuli, and it allows, in service of this re-computation, an open search for and consideration of any potentially relevant information, be it in the immediate situation or stored in memory. Thus, the organism interrupts a normally fast and fixed stimulus–response linkage and takes time to build a creative new link, holding and experimenting with several pieces of information simultaneously. The early origins of such conscious processing can be seen in the infant’s longer looking times towards objects or scenes that violate the infant’s assumptions; longer looking presumably equates here to a slowing and re-computing of information.

The slowing and creative re-computation as one aspect of consciousness both points to consciousness’ important problem-solving function and makes intelligible why, as is assumed by many scholars, the evolution of ToM may have resulted from a ratcheting-up process in which humans had to become more sophisticated in order to make sense of ever more sophisticated conspecifics. Organisms with consciousness, who act upon novel stimuli with novel solutions, are less predictable than stimulus–response creatures. Such conscious organisms in turn pose new complex and surprising stimuli for their conspecifics, who have to consciously re-compute them and therefore show yet another level of novel responses, which figure as yet another layer of novel stimuli, and so on. Thus emerges an escalation between humans’ complexity in behaviour and their complexity in perception. Interestingly, the perception of others as complex, creative, and unpredictable in response to novel situations may have given rise to the central assumption of ToM that humans are intentional agents who can make free choices. Conversely, humans’ actual slowing and creative recomputation of stimuli may very well define the nature of free will.

Another aspect of the relationship between ToM and consciousness concerns conscious vs unconscious mental states as the objects of social perception. Perhaps the most remarkable mental states that organisms with a ToM represent are another’s conscious reasons to act. Reasons, typically beliefs and desires, are seen as motivating and rationalizing an intention, which in turn constitutes perceived free will. In our times, humans additionally make inferences about unconscious motives. It is not clear whether these inferences occur in all cultures, or existed even 500 years ago. The conscious/unconscious distinction may represent merely a culturally bound application of the ToM framework to a new domain. Indeed, the domain of the unconscious is understood with the same concepts that apply to the mental domain in general: it contains specific states (e.g. beliefs, desires) that are not under the person’s intentional control and are, in a sense, unobservable even to the person him- or herself.

Future ToM research will attempt to provide a coherent theory of how early infant cognition of behaviour develops into full-fledged adult inferences of mind; outline a similar progression at the evolutionary level; illuminate ToM deficits in some individuals and what might be done to ameliorate them; and document in more detail the social functions and adaptations afforded by a ToM. There will also be attempts to identify brain mechanisms that underlie ToM capacities, but because of the involvement of specific concepts and the breadth of the encompassed cognitive processes, the research will not provide a specific location but perhaps a better understanding of the interplay of all the elements that go into the complex phenomenon of a ToM.

See also AUTOMATICITY; COGNITION, UNCONSCIOUS; FUNCTIONS OF CONSCIOUSNESS;

BERTRAM F. MALLE AND JESS SCON HOLBROOK

Baron-Cohen, S., Tager-Flusberg, H., and Cohen, D. (eds) (2000). Understanding Other Minds: Perspectives From Developmental Cognitive Neuroscience.

Dennett, D. C. (1987). The Intentional Stance.

Malle, B. F. (2005). ‘Folk theory of mind: conceptual foundations of human social cognition’. In Hassin, R. et al. (eds) The New Unconscious.

Wellman, H. (1990). The Child’s Theory of Mind.

third person See FIRST PERSON/THIRD PERSON

threshold, objective vs subjective See DISSOCIATION METHODS

tickling Tickling is a pleasure that ‘cannot be reproduced in the absence of another’, as psychoanalyst Adam Phillips wrote (Phillips 1994). Why can’t you tickle yourself? Evidence suggests that the sensory consequences of some self-generated movements are perceived differently from identical sensory input when it is externally generated. An example of such differential perception is the phenomenon that people cannot tickle themselves (e.g. Weiskrantz et al. 1971). We carried out a series of experiments to investigate why this is the case.

In the first set of experiments, subjects were asked to rate the sensation of a tactile stimulus on the palm of their hand when the correspondence between self-generated movement and its sensory consequences was altered. Subjects moved a robotic arm with their left hand and this movement caused a second foam-tipped robotic arm to move across their right palm. By using this robotic interface so that the tactile stimulus could be delivered under remote control by the subject, delays of 100, 200, and 300 ms were introduced between the movement of the left hand and the tactile stimulus on the right palm. The result is that the sensory stimulus no longer corresponds to what is predicted, so as the delay is increased the sensory prediction becomes less accurate. The results showed that subjects rated self-produced tactile stimulation as being less tickly, intense, and pleasant than an identical stimulus produced by the robot (Blakemore et al. 1999). Furthermore, subjects reported a progressive increase in the tickly rating as the delay was increased. These results suggest that the perceptual attenuation of self-produced tactile stimulation is due to precise sensory predictions. When there is no delay, a forward model correctly predicts the sensory consequences of the movement, so no sensory discrepancy ensues between the predicted and actual sensory information, and the motor command to the left hand can be used to attenuate the sensation on the right palm. As the sensory feedback deviates from the prediction of the model (by increasing the delay) the sensory discrepancy between the predicted and actual sensory feedback increases, which leads to a decrease in the amount of sensory attenuation.
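The forward-model account just described can be illustrated with a toy simulation. The Python sketch below is not the model used in the experiments: the Gaussian fall-off, the parameters `tolerance_ms` and `max_attenuation`, and the unit rating scale are all illustrative assumptions. It is meant only to show the qualitative pattern, namely that as the delay grows, the discrepancy between predicted and actual feedback grows, attenuation shrinks, and the rating approaches that of an externally produced stimulus.

```python
import math


def attenuation(delay_ms: float,
                tolerance_ms: float = 150.0,
                max_attenuation: float = 0.5) -> float:
    """Fraction by which a self-produced sensation is damped.

    Hypothetical Gaussian fall-off: attenuation is maximal when the
    feedback arrives exactly when predicted (delay 0) and shrinks as
    the prediction error (here simply the delay) grows.
    """
    discrepancy = delay_ms / tolerance_ms
    return max_attenuation * math.exp(-0.5 * discrepancy ** 2)


def perceived_intensity(external_intensity: float, delay_ms: float) -> float:
    """Rating of a self-produced stimulus, relative to the rating the same
    stimulus would receive if it were externally produced."""
    return external_intensity * (1.0 - attenuation(delay_ms))


if __name__ == "__main__":
    # Delays used in the experiment described above, plus the no-delay case.
    for delay in (0, 100, 200, 300):
        print(delay, round(perceived_intensity(1.0, delay), 3))
```

Under these assumed parameters the rating rises monotonically with delay but stays below the externally produced baseline of 1.0, which is the pattern the text reports; the specific numbers carry no empirical weight.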

In the second series of experiments, we investigated the neural basis of this phenomenon. In an fMRI study, subjects experienced tactile stimulation on their palm that was produced either by the subject himself, or by the experimenter. The results showed an increase in activity of the secondary somatosensory cortex (SII) and the anterior cingulate cortex (ACC) when subjects experienced an externally produced tactile stimulus relative to a self-produced tactile stimulus. The reduction in activity in these areas in response to self-produced tactile stimulation might be the physiological correlate of the reduced perception associated with this type of stimulation. While the decrease in activity in SII and ACC might underlie the reduced perception of self-produced tactile stimuli, the pattern of brain activity in the cerebellum suggests that this area is the source of the SII and ACC modulation. In SII and ACC, activity was attenuated by all movement: these areas were equally activated by movement that did and that did not result in tactile stimulation. In contrast, the right anterior cerebellar cortex was selectively deactivated by self-produced movement which resulted in a tactile stimulus, but not by movement alone, and significantly activated by externally produced tactile stimulation. This pattern suggests that the cerebellum differentiates between movements depending on their specific sensory consequences. A further experiment supported this hypothesis. When delays were introduced between the movement and its tactile consequences, cerebellar activity increased (Blakemore et al. 2001). The longer the delay, the greater the activity in the cerebellum. We suggest that the cerebellum is involved in signalling the discrepancy between predicted and actual sensory consequences of movements.

SARAH-JAYNE BLAKEMORE

Blakemore, S.-J., Wolpert, D. M., and Frith, C. D. (1998). ‘Central cancellation of self-produced tickle sensation’. Nature Neuroscience, 1.

——, Frith, C. D., and Wolpert, D. M. (1999). ‘Spatiotemporal prediction modulates the perception of self-produced stimuli’. Journal of Cognitive Neuroscience, 11.

——, Frith, C. D., and Wolpert, D. M. (2001). ‘The cerebellum is involved in predicting the sensory consequences of action’. NeuroReport, 12.

Phillips, A. (1994). On Kissing, Tickling, and Being Bored. Psychoanalytic Essays on the Unexamined Life.

Weiskrantz, L., Elliot, J., and Darlington, C. (1971). ‘Preliminary observations of tickling oneself’. Nature, 230.

tip of the tongue See FEELING OF KNOWING

touch Touch is the most ill-defined sense modality. As Aristotle said ‘It is a problem whether touch is a single sense or a group of senses … we are unable clearly to detect in the case of touch what the single subject is which … corresponds to sound in the case of hearing.’ (Aristotle, De Anima 422b20–24).

1. What is touch?

2. Touch and reality

3. Touch and the body

If sensory modalities are to be individuated by their proper objects, there seems to be no single modality of touch, for there are too many proper objects: texture, temperature, solidity, humidity, contact, weight, pressure, force, material bodies. Other individuating criteria are equally problematic. There is no obvious proper organ for touch. The hands are too restrictive, but neither the skin nor the whole body is restrictive enough, for each contains other sensory organs. Looking for more specific organs, one faces the multiplicity of receptors involved in touch: nociceptors, thermoreceptors, and mechanoreceptors (which themselves divide into Meissner and Pacinian corpuscles, Ruffini organs, Merkel discs, hair receptors, and bare nerve endings). Neuroscience textbooks often define touch extensionally as the sense mediated by cutaneous mechanoreceptors (except mechanical nociceptors), but this definition seems to be ad hoc.

A third option is to individuate senses by their *introspectible character. Here again, it is not obvious what *qualia experiences of heat, weight, and humidity might have in common. One might argue that they all involve bodily feelings, but if so, pain, itches, tickles, experiences of taste, and proprioception should be considered as instances of tactile perception as well.

In response to these difficulties many theorists attempt to find a happy medium between splitting the sense of touch into multiple senses and reducing some proper objects to others. With respect to the first option, most people agree that touch differs from senses of the body (e.g. *proprioception, kinaesthesis, nociception, hunger, thirst). Likewise, the sense of temperature and the sense of force (or pressure) are widely held to be distinct (Weber 1846). More controversially, Katz (1925) distinguishes a specific vibration sense. With respect to the second option, one might reduce the felt properties of (a) hardness, solidity, weight, texture, and vibration to spatiotemporal patterns of pressures; (b) humidity, liquidity, and clamminess to complexes of felt pressures and temperatures; and, more controversially, (c) pressure to spatial properties of the body (Armstrong 1962, who has since abandoned this view).

There are, however, more radical responses to the problem of defining the sense of touch. The first holds that touch is not a sense, by denying the intentionality of tactile sensations. According to this view, experiences of touch are merely subjective feelings, contingently associated with physical contacts. The second strategy, attributed to Democritus by Aristotle, claims that all sensory modalities are forms of touch. Both strategies rely on a reduction of touch to mere physical contact.

If a definition can be given, one has still to distinguish between different types of touch. An influential distinction is between passive touch, which is static and merely cutaneous, and active touch (or haptics), which involves exploratory movements. However, this influential distinction suffers from several problems. First, not all movements are exploratory. The notion of active touch confuses the notions of kinaesthesis and motor control. When the subject’s hand is moved by the experimenter, only kinaesthesis comes into play. Moreover, even when the body does not move, one may still distinguish between static touch (the pressure of the cat on your knees) and cinematic touch (the motion of the beetle on your arm). Second, there are different types of exploratory movements. One can follow the contours of an object, or one can grasp, lift, wield, and manipulate it with muscular effort. The latter has been called effortful or dynamic touch (Turvey and Carello 1995, Gibson 1966).

Touch has often been claimed to play a special role in the origin and justification of our belief in the external world. In this respect, we might say that touch is the most objective of the senses because, unlike other sensory modalities, it is needed for common-sense realism. There are two main versions of this view.

(a) Kant suggested that the sense of touch is the most reliable sense because it puts us in direct contact with the world, leaving no room for distortion of the information coming from the object. Optical phenomena such as refraction or perspective distortions appear to have no tactual counterpart. Consequently, there is no need to postulate mental intermediaries, tactile sense-data, between us and the world (O’Shaughnessy 1989). Insofar as this view attempts to overcome the argument from illusion by denying the possibility of tactile illusions, it is probably false. Indeed, tactile illusions are possible because (1) one is not necessarily in direct contact with the touched object (e.g. prosthetic touch) and (2) even if one is in direct contact, the presence of other types of information like visual information may influence and distort tactile sensations. There is still room for misrepresentation after transduction, resulting in physiological and psychological illusions. There is no reason why those internal processes would be more reliable in the case of touch.

(b) The sense of touch is not necessarily more reliable than the other senses, but it is the only one to provide access to certain essential properties of physical bodies. This proposal has been developed in two rather different ways.

According to Berkeley, touch is the only sense that informs us about the third spatial dimension, in contrast with sight, which is only two-dimensional. However, one might respond that touch, on the contrary, is essentially a sequential and temporal sense that does not allow for direct perception of spatial properties (see Evans 1985). Another response is to question the assumption that vision is two-dimensional.

By contrast, Locke argued that touch is the only sense to provide us with perceptual access to the impenetrability of objects. This form of access involves both passive and active touch. Passive touch provides exclusive access to causal relationships of forces via cutaneous sensations of pressure. Active touch enables us to experience the resistance of objects to our will, which is often held to be at the origin of our belief in the external world (Katz 1925, Baldwin 1995).

The second distinctive feature of touch is that it seems to be closely tied to the body (Katz 1925, O’Shaughnessy 1989, Martin 1992). However, since all sensory modalities somehow depend on bodily information, the challenge is to explain the uniqueness of the dependency between touch and bodily awareness. Are bodily sensations just a subjective *epiphenomenon, as Reid claimed, or do they constitutively calibrate touch?

O’Shaughnessy (1989) and Martin (1992) suggest that the body functions as a template for tactile perception, i.e. tactile perception of spatial properties of the object relies on the experience of similar properties of one’s body. This is particularly salient for shape in active touch: we feel the circularity of a glass because we feel the circularity of the motion of our hand. Even in passive touch, the experience of the shape of objects might mirror the feeling of the concavities of the flesh. As to size, an object feels bigger if the touched body part feels temporarily elongated because of kinaesthetic illusions. This template function also holds for the experience of location: tactile properties are ascribed to a location within a spatial representation of the body. More precisely, the experimental literature has distinguished between two kinds of tactile localization depending on the context. Actions directed toward the location of tactile stimuli are based on a sensorimotor map. Judgements about their location are based on a visuospatial map. This distinction is illustrated by patients with numbsense who can point to the touched body part though they cannot identify it, while deafferented patients show the reverse dissociation.

We can highlight two kinds of difficulties for the template theory (Scott 2001). First, veridical tactile perception does not necessarily match proprioceptive sensations, as in extrasomatic touch. While scanning the outline of an object with a stick, the shape of the hand movement differs from the shape of the object. However, the template theory can reply that extrasomatic touch is prosthetic. Bodily awareness integrates tools as appendages of the body.

Second, tactile *illusions are not necessarily linked with proprioceptive illusions. A stick that is seen curved also feels curved, despite the fact that the exploratory movement is straight. There seems to be a predominance of vision on touch over proprioception. However, the template theory can reply that in this illusion vision also influences proprioception. Here are some more illusions. If one places an object between one’s fingers while they are crossed, one will feel two objects (Aristotle, Metaphysics). There is a mismatch between one’s proprioceptive awareness of the crossed fingers and the tactile processing which does not take the fact that one’s fingers are crossed into account. Similarly, if one rotates one’s tongue by 90°, one will not perceive the orientation of a tactile stimulus applied to one’s tongue as identical to the orientation of the tongue itself. Furthermore, if one crosses one’s hands, one will have difficulty judging the temporal order of tactile stimulations delivered on the crossed hands due to conflict between the body-centred and the external frames of reference. The template of touch is not only proprioceptive, but also visual. These illusions raise a more important worry for the template theory: although they do not show that touch is completely independent of bodily awareness, they do point toward some possible dissociations between them.

The template theory can account for the privileged relations between touch and the body, at the cost of forbidding the possibility of mismatch between touch and proprioception. It is far from obvious that it can provide a homogeneous account of the different ways proprioception calibrates touch. To cope with these difficulties, one might claim that the proper objects of touch are perceived relations between the body and the world (Armstrong 1962).

Many questions still remain open about the dependency of touch on proprioception. Is it reciprocal, or is there a priority of proprioception over touch (O’Shaughnessy 1989)? Does proprioceptive information merely play a causal role or does it play an epistemic role? Is the specific contribution of proprioception to touch part of the phenomenology of touch?

FRÉDÉRIQUE DE VIGNEMONT AND OLIVIER MASSIN

Armstrong, D. M. (1962). Bodily Sensations.

Baldwin, T. (1995). ‘Objectivity, causality, and agency’. In Bermúdez, J. L. et al. (eds) The Body and the Self.

Evans, G. (1985). ‘Molyneux’s question’. In Collected Papers.

Gibson, J. J. (1966). The Senses Considered as Perceptual Systems.

Katz, D. (1925/1989). The World of Touch, trans. L. E. Krueger.

Martin, M. G. F. (1992). ‘Sight and touch’. In Crane, T. (ed.) The Contents of Experience.

O’Shaughnessy, B. (1989). ‘The sense of touch’. Australasian Journal of Philosophy, 67.

Scott, M. (2001). ‘Tactual perception’. Australasian Journal of Philosophy, 79.

Turvey, M. T. and Carello, C. (1995). ‘Dynamic touch’. In Epstein, W. and Rogers, S. (eds), Handbook of Perception and Cognition: Vol. 5. Perception of Space and Motion.

Weber, E. H. (1846/1996). ‘Tastsinn und Gemeingefühl’. In Ross, H. E. and Murray D. J. (trans.) E. H. Weber on the Tactile Senses.

transcranial magnetic stimulation There are many ways of attempting to capture the scientific essence of consciousness. One can record brain activity that correlates with states of awareness (see CORRELATES OF CONSCIOUSNESS, SCIENTIFIC PERSPECTIVES; ELECTROENCEPHALOGRAPHY), study patients who have lost aspects of awareness (see BLINDSIGHT), or manipulate awareness by using psychological techniques (see CHANGE BLINDNESS). One can also directly interfere with states of awareness by stimulating neurologically intact brains. This is achieved by transcranial magnetic stimulation (TMS).

TMS operates by placing an electrical coil on the scalp of an experimental subject. A brief electrical current is passed through this coil and induces a magnetic field which passes through the scalp of the subject. The magnetic field in its turn induces an electrical field in the region of the brain underneath the coil and this electrical change stimulates neurons (see Walsh and Pascual-Leone 2003). The duration of a single magnetic pulse is less than 1 ms and it is therefore possible to interfere with brain processes at very fine levels of temporal resolution. Figure T4 shows a timeline of a sequence of events in a typical TMS application.

Because TMS can interfere with local brain processes with fine temporal resolution, it presents us with two powerful ways of making inferences about consciousness and other processes. It has been used most successfully in studies of visual awareness. Cowey and Walsh (2000) and Pascual-Leone and Walsh (2001) used TMS to explore the cortical connectivity and timing of interactions between brain areas necessary for visual awareness. By applying TMS to a region of the brain containing many movement-sensitive cells, Pascual-Leone and Walsh caused subjects to experience visual movement. By applying a second pulse of magnetic stimulation over the primary visual cortex (*V1) they were able to degrade and, depending on the timing of stimulation, abolish the sensation of visual movement. The effect of stimulating primary visual cortex was greatest when it occurred between 15 and 45 ms after the stimulation of the movement sensitive neurons. Figure T5 shows the sequence of events in this experiment. This experiment showed that our awareness of activity in parts of the brain that are responsive to visual stimulation is dependent on back-projections to V1.

Fig. T4. Cycle of events in the application of a magnetic stimulation pulse. An electrical current is generated by the TMS stimulating unit and discharged into a circular or figure-of-eight shaped coil. Note the short rise time

Fig. T5. An example of using the temporal resolution of magnetic stimulation, the differential effects of high and low intensity stimulation, and double coil stimulation to examine cortical connectivity. (a) Schematic of the experiment by Pascual-Leone and Walsh (2001). TMS was delivered over MT/V5 to induce the perception of movement and either preceding or following this pulse a single pulse of sub-threshold TMS was applied over V1. (b) The results show that the perception of movement was degraded or abolished when V1 stimulation post-dated V5 stimulation by approximately 15–40 ms. (after Pascual-Leone and Walsh 2001).

TMS can also be used to control the level of stimulation in brain areas. Silvanto et al. (2005) exploited this to explore whether the level of activity in V1 was important in determining whether activity in other regions of the visual cortex reached awareness. In this experiment a region of the visual cortex containing movement-sensitive cells received TMS at a level of intensity sufficient to stimulate the neurons but not sufficient to induce an experience of visual movement. Within a few milliseconds of this stimulation TMS was also delivered over V1 at one of two levels of intensity: a level sufficient to induce a visual percept (a phosphene) or a lower level sufficient to stimulate the underlying neurons but not to induce a visual experience. Remarkably, when the level of V1 stimulation was high enough to induce a visual percept, the percept acquired characteristics of the neurons in the subliminally stimulated region of cortex containing visual movement-sensitive neurons. These perceptual characteristics could only have been carried by back projections from movement-sensitive neurons to V1. Thus TMS has established the necessity of V1 in visual awareness (Cowey and Walsh 2000, Pascual-Leone and Walsh 2001) and the fact that the level of activity in V1 gates access to visual experience (Silvanto et al. 2005). These findings are taken to speak very strongly against the *microconsciousness view of visual awareness, which predicts that activity in the movement-sensitive neurons would be sufficient for visual experience irrespective of V1 activity. They are more in line with the *re-entrant view of awareness.

TMS can be used in a simpler way to directly test whether a brain region is necessary for awareness. Any theory which proposes that a particular brain region is a necessary part of the circuits supporting consciousness must meet what might be called the ‘lesion challenge’: i.e. if a region of the brain is said to be important, then interfering with the normal processes of that region should also interfere with awareness. This is a test that has so far not been passed by any other region of cortex as impressively as V1, but there are interesting suggestions that stimulating the right parietal cortex may affect awareness (see Walsh and Pascual-Leone 2003).

The phosphenes evoked by TMS in these and other studies are interesting in the context of consciousness and they have been evoked by electromagnetic stimulation for some time (see Fig. T6). When TMS is used to stimulate the regions of the brain responsible for hand movement, parts of the hand will twitch because TMS has introduced a disorganized pattern of firing into one of the motor areas of the brain. When TMS is applied to visual regions of the brain, the disorganized pattern of activity may be expressed as a flash of light or a sensation of shimmering light. These light percepts are called phosphenes and their value lies in their use as a means of activating the visual areas of the brain while bypassing the eye and the pathways between the eye and the cortex. Cowey and Walsh (2000) used phosphenes to good effect to resolve a dispute about the patient G. Y., who has blindsight. There had been a long debate concerning whether G. Y.’s ability to guess accurately about the presence and even location of stimuli of which he was not aware was due to stray light in the retina. Cowey and Walsh were able to excite parts of G. Y.’s visual cortex without stimulating the eye, thereby avoiding stray light.

Phosphenes are intriguing in their own right. They tend to be colourless, but colours can be induced if subjects have adapted before TMS is applied. They tend to be indistinct in form—‘blurry, jagged edges, uneven brightness’ are the kinds of comments subjects make, rather than reporting anything organized such as a face or an object. Much remains to be discovered about phosphenes and they promise to continue to provide an important method of parsing visual awareness. Figure T7 shows some examples of phosphenes drawn by subjects.

Fig. T6. Sylvanus P. Thompson (c.1910), one of the pioneers of brain stimulation.

We are of course not only aware of external events but are also aware of ourselves and TMS has been used to test whether brain activity related to self-awareness seen in *functional brain imaging (fMRI) studies is essential for this experience. Keenan and colleagues (2001) applied repetitive pulses of TMS over the right prefrontal cortex and observed that subjects were less aware of their own faces than without stimulation. This is consistent with several other studies implicating the right prefrontal cortex in self-recognition. Keenan’s finding is also important because it is indicative of different brain networks for different aspects of consciousness. Even severe damage to the prefrontal cortex or repetitive TMS over the left and right prefrontal cortex simultaneously does not interfere with visual awareness (Cowey and Walsh 2000).

The advantages of TMS, then, are in its temporal accuracy and the potential to study the necessity of a brain region’s contribution to awareness. Future advances may depend on the integration of TMS with other techniques and in particular with electroencephalography (EEG) and fMRI. If brain activations are postulated to be necessary for awareness it should be possible to stimulate these or other connected brain regions prior to recording brain activity with EEG or fMRI and determine whether the brain activity under examination is modulated by different levels of awareness.

Fig. T7. Some examples of phosphenes drawn by subjects when TMS was applied over a region of the brain containing movement-selective cells. The arrows indicate the direction of perceived movement.

VINCENT WALSH

Cowey, A. and Walsh, V. (2000). Magnetically induced phosphenes in sighted, blind and blindsighted observers. NeuroReport, 11.

Keenan, J. P., Wheeler, M. A., Gallup Jr, G. G., and Pascual-Leone, A. (2001). Self recognition and the right prefrontal cortex. Trends in Cognitive Sciences, 4.

Pascual-Leone, A. and Walsh, V. (2001). Fast backprojections from the motion area to the primary visual area necessary for visual awareness. Science, 292.

Silvanto, J., Cowey, A., Lavie, N., and Walsh, V. (2005). Striate cortex (V1) activity gates awareness of motion. Nature Neuroscience, 8.

Walsh, V. and Pascual-Leone, A. (2003). Transcranial Magnetic Stimulation: A Neurochronometrics of Mind.

transitive consciousness See CONSCIOUSNESS, CONCEPTS OF

transparency Yesterday, turning my head from the computer in my office to the window, I caught sight of the sulphur crest of a cockatoo sitting on a branch opposite; I had, as philosophers say, a visual experience as of the sulphur crest. Later (having nothing better to do) I indulged in some *introspection. I reflected on this experience, asking myself how it might have been different had circumstances been otherwise: what if I had been wearing dark glasses, for example, or if it were later in the evening? This kind of reflection is often characterized as being directed inward, as being focused on my psychological states and how they might have been different. Indeed, the word ‘introspection’ itself suggests this sort of orientation. But if you think about what actually happens in this sort of case, about what we do when we do what is called ‘introspection’, the opposite seems true. In the course of such reflection, my attention seems to be occupied, not with some psychological fact about myself, but completely with the sulphur crest. For if I had been wearing dark glasses, it is the sulphur crest itself that would have looked different. This fact or seeming fact (that my attention is directed outward to the sulphur crest, rather than inward to my experience) is the so-called transparency of experience: in introspection, one apparently looks through the experience to the world, just as if the experience itself were transparent. The transparency of experience is sometimes known as the ‘diaphanousness’ of experience, the latter being the word the English philosopher G. E. Moore used in ‘The refutation of idealism’, the paper to which the original insight is often credited (see Moore 1903). The word ‘diaphanousness’ is cumbersome, and this is no doubt one reason that ‘transparency’ is commonly used. But ‘transparency’ has the disadvantage that it already has a number of established uses in philosophy for phenomena that have little to do with the fact about introspection at issue here.
For example, in philosophy of language, a transparent linguistic context is one that permits substitution of coextensive terms salva veritate. This idea is quite unrelated to the transparency of experience, since here we are talking about experience, not bits of language. And ‘transparent’ is sometimes used in epistemology for the apparent privileged access of psychological states, the fact that at least some psychological states are such that if one is in them, one thereby knows or is in a position to know various facts about those states: that one is in them, for example, or what their essential features are. Privileged access is closer to transparency as intended here, but still different. When Moore and others talk about transparency, they are talking about what the focus of introspection is; they are not talking about any epistemological property of the experience.

The transparency of experience has played a significant role in philosophy of mind in the last hundred years or so. Moore himself drew attention to it in the course of arguing against *idealism, the view that reality is in some fundamental sense spiritual or mental (strange as it now seems, a very influential doctrine in philosophical circles at the end of the 19th century). Idealists, in Moore’s view, failed to distinguish between the act of having a sensation, on the one hand, and the object of the sensation, on the other: the sensing of something blue, and the something blue that one senses. Moore offered the transparency of experience as part of a psychological explanation of why idealists commit this mistake (assuming they do).

But Moore’s point was almost immediately recognized as having a bearing on philosophy of mind more specifically. Writing only a year after Moore, William James (1904), the American philosopher and psychologist, argued that Moore did not go far enough. In his original paper, Moore says that when ‘we try to introspect the sensation of blue, all we can see is the blue; the other element is as if it were diaphanous’. But he added: ‘Yet it can be distinguished, if we look attentively enough, and know that there is something to look for.’ For James, there is nothing ‘as if’ about it. Consciousness cannot be found, James thinks, for the simple reason that it does not exist, at any rate not if one means by ‘consciousness’ or ‘experience’ an object that might be the target of some perception-like process. What we see in James is an example of a certain style of philosophical reasoning often exhibited by discussions of transparency. It is alleged that ordinary thought about consciousness or experience implicitly supposes that there are objects called ‘experiences’ and that such objects can be seen by an act of inner perception. Transparency is then brought in to destroy this implicit supposition, often with the accompanying implication that, if one corrects for it, one would likewise be in a position to defuse the central metaphysical puzzles about the nature of experience, in particular, whether the existence of experience is compatible with a complete physical or scientific or objective theory of the world.

Later philosophers (at least in the analytic tradition) continued this tendency of discussing transparency in the context of the question of whether ordinary thought about experience embodies a mistake. C. D. Broad, a philosopher deeply influenced by Moore, is one who takes himself to be defending the ordinary picture. In The Mind and Its Place in Nature (1925), he argues against those who appeal to transparency by drawing a distinction between introspection and inspection: one inspects the penny but introspects one’s sensing the penny. And B. A. Farrell (1950), writing in the heyday of ordinary language philosophy, took himself to be attacking the idea of experience as an object, a position he summarized by saying that experience is featureless.

In contemporary philosophy, the idea that there is some conceptual mistake or confusion associated with various positions in philosophy of mind is less influential than it once was—in part because the underlying conception of philosophy as the removal of conceptual blockages to science is less influential. But the transparency of experience nevertheless continues to play an important role, particularly in the debate between *representationalists (or *intentionalists) and anti-representationalists (anti-intentionalists) in philosophy of mind. In this debate, the representationalist position says that the most basic fact about the mind is its ability to represent things as being thus and so. If that is so, then every difference in experience must at the end of the day be explained in terms of a representational difference. Transparency is often appealed to as a datum that supports this view. For example, transparency is sometimes said to support the premise that in introspection one has access only to the properties represented in one’s experience and not to properties of one’s experience. From this premise it is concluded that representationalism is true. Indeed representationalists often leave the strong impression that in their view the transparency point provides a very powerful, and perhaps decisive, argument in favour of representationalism (see Harman 1990).

How successful is the argument from transparency for representationalism? At the time of writing there is no consensus on this issue. But what a number of philosophers have argued is that the argument here is much less decisive and crisp than it might have seemed (see Stoljar 2004). For one thing, the idea that one only has access to properties represented in experience is, if taken quite literally, false. Take the property of having an experience as opposed to imagining having an experience. It seems clear enough that we can distinguish these in introspection, at least in clear cases. But then it cannot be that we only have access to represented properties, for an experience and an imagined experience might represent precisely the same properties. Moreover, it is quite unclear that the phenomenology of introspection supports the claim (ignoring for the moment whether it is true) that one only has access to represented properties. The phenomenology seems to be about where one’s attention is primarily occupied; it does not seem to entail a negative thesis about what one has access to.

What all of this seems to indicate is that the transparency point is of limited value when it comes to deciding large-scale issues in philosophy of mind. On reflection, however, this is unsurprising. For just below the surface here is a more general epistemological question about the extent to which abstract theories may be viewed as being confirmed by relatively unadulterated reports of experience. The problem is that the theories themselves seem to recast the reports in their own image. Whether this is true in general is a big issue, but it certainly seems true in this part of philosophy of mind: representationalists tend to construe transparency in such a way that it supports their view; mutatis mutandis the anti-representationalists.

DANIEL STOLJAR

Broad, C. D. (1925). The Mind and Its Place in Nature.

Farrell, B. A. (1950). ‘Experience’. Mind, 59.

Harman, G. (1990). ‘The intrinsic quality of experience’. Philosophy of Mind and Action Theory: Philosophical Perspectives, 4.

James, W. (1904). ‘Does “consciousness” exist?’ Journal of Philosophy, Psychology and Scientific Methods, 1.

Moore, G. E. (1903/1922). ‘The refutation of idealism’. In Moore, G. E. Philosophical Papers.

Peacocke, C. (1983). Sense and Content.

Stoljar, D. (2004). ‘The argument from diaphanousness’. In Ezcurdia, M. et al. (eds) New Essays in The Philosophy of Mind and Language (Canadian Journal of Philosophy Supplementary Volume 30).

Turing test The underlying idea of the Turing test is that whatever acts as if it is sufficiently intelligent is intelligent. ‘If it walks like a duck and quacks like a duck, it probably is a duck.’ So goes a pithy observation, undoubtedly as old as English itself, that provides a quick-and-dirty way to recognize ducks. This little maxim constitutes an operational means of duck identification that, for better or for worse, sidesteps all the thorny issues associated with actually explicitly defining a set of features allowing us to identify a duck (e.g. has feathers, can fly, weighs less than 5 kg, has webbed feet, swims well, has nucleated red blood cells, has a four-chambered heart, has a flat bill, etc.). What folk wisdom did for ducks, Alan Turing did for intelligence. He was the first to suggest an operational means of identifying intelligence that has come to be called the Turing test (Turing 1950). The underlying idea of his test is the same as our folk means of duck identification. Translated into the vernacular of modern electronic communication, the Turing test says that if, by means of an extended computer-mediated conversation alone, you cannot tell whether you are chatting with a machine or a person, then whomever or whatever you are chatting with is intelligent.

Since it first appeared nearly six decades ago, Turing’s article has become the single most cited article in artificial intelligence. In fact, few articles in any field have caused so much ink to flow. References to the Turing test still appear regularly in artificial intelligence journals, philosophy journals, technical treatises, novels, and the popular press. Type ‘Turing test’ into any Internet search engine and there will be, literally, thousands of hits.

1. How the Turing test works

2. Commentary on the Turing test: two lines of argument

3. The Turing test as a graded measure of human intelligence and consciousness

Turing’s original description of his ‘Imitation Game’ was somewhat more complicated than the simpler version that we describe below. However, there is essentially universal agreement that the additional complexity of the original version adds nothing of substance to its slightly simplified reformulation that we refer to today as the Turing test.

The Turing test starts by supposing that there are two rooms, one of which contains a person, the other a computer. Both rooms are linked by means of text-only communication to an Interrogator whose job it is, by means of questioning the entities in each room, to determine which room contains the computer and which the person. Any and all questions that can be transmitted via typed text are fair game. If after a lengthy session of no-holds-barred questions, the Interrogator cannot distinguish the computer from the person, then the computer is declared to be intelligent (i.e. to be thinking). It is important to note that failing the Turing test proves nothing. It is designed to be a sufficient, but not necessary, condition for intelligence.

There have been numerous approaches to discussing the Turing test (see French 2000, Saygin et al. 2000, Shieber 2004 for reviews). The first, and by far the most frequent, set of commentaries on the Turing test attempts to show that if a machine did indeed succeed in passing it, this alone would not necessarily imply that the machine was intelligent (e.g. Scriven 1953, Gunderson 1964, Purtill 1971 and more recently Searle 1980, Block 1981, Copeland 2000, who argue against the ‘behaviouristic’, i.e. input/output (I/O) only, nature of the Turing test). Numerous authors (e.g. Millar 1973, Moor 1976, Dennett 1985, Hofstadter and Dennett 1981) argued, on the contrary, that passing the test would indeed constitute a sufficient test for intelligence. Certain authors (e.g. Dennett 1985, French 1990, Harnad 1991) also emphasized the enormous, if not insurmountable, difficulties a machine would have in actually passing the test.