This chapter introduces the concept of recursion and recursive algorithms. First, the definition of recursion and the fundamental rules for recursive algorithms will be explored. In addition, methods of analyzing the efficiency of recursive functions will be covered in detail using mathematical notation. Finally, the chapter exercises will help solidify this information.

Introducing Recursion

Recursion illustrated

Rules of Recursion

When recursive functions are implemented incorrectly, they cause fatal issues because the program gets stuck and never terminates. Infinite recursive calls result in a stack overflow, which occurs when the number of calls on the program's call stack exceeds the limited amount of memory reserved for it.

For recursive functions to be implemented correctly, they must follow certain rules so that stack overflow is avoided. These rules are covered next.

Base Case

In recursion, there must be a base case (also referred to as the terminating case). Because recursive methods call themselves, they will never stop unless this base case is reached. Stack overflow from recursion is most likely the result of not having a proper base case. In the base case, there are no recursive function calls.
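The countdown function discussed next is not reproduced in this text. The following is a minimal Python sketch of one, assuming it should print each number from n down to 0. The name `count_down` and the `counted` list it returns (useful for checking the output) are illustrative additions, not the original code.

```python
def count_down(n: int, counted=None) -> list:
    """Print n, n-1, ..., 0 and return the printed numbers for inspection."""
    if counted is None:
        counted = []
    # Base case: n <= 0 makes no further recursive calls.
    if n <= 0:
        if n == 0:
            print(0)  # stop counting at 0
            counted.append(0)
        return counted  # a negative input prints nothing
    print(n)
    counted.append(n)
    # Divide and conquer: counting down from n is "print n" plus
    # counting down from n - 1, a strictly smaller problem.
    return count_down(n - 1, counted)
```

For example, `count_down(2)` prints 2, 1, and 0, while `count_down(-3)` hits the base case immediately and prints nothing.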

The base case for this function is when n is less than or equal to 0. This is because the desired outcome is to stop counting at 0. If a negative number is given as the input, the function will not print that number because of the base case. In addition to a base case, this recursive function also exhibits the divide-and-conquer method.

Divide-and-Conquer Method

In computer science, the divide-and-conquer method solves a problem by breaking it into smaller subproblems and solving each of them. With the countdown example, counting down from 2 can be solved by printing 2 and then counting down from 1. Here, counting down from 1 is the part solved by “dividing and conquering.” Each recursive call must make the problem smaller so that it eventually reaches the base case; if the recursive calls do not converge to a base case, a stack overflow occurs.

Let’s now examine a more complex recursive function: one that computes the Fibonacci sequence.

Classic Example: Fibonacci Sequence

1, 1, 2, 3, 5, 8, 13, 21 …

How might you program something to print the Nth term of the Fibonacci sequence?

Iterative Solution: Fibonacci Sequence

A for loop can be used to keep track of the last two elements of the Fibonacci sequence; their sum yields the next Fibonacci number.
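A minimal Python sketch of the loop described above, assuming the numbering of the sequence listed earlier (the 1st and 2nd terms are both 1, and the 0th term is taken to be 0). The function name is illustrative.

```python
def nth_fibonacci_iterative(n: int) -> int:
    """Return the nth Fibonacci number, with fib(1) = fib(2) = 1."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        return n
    last_last, last = 0, 1  # fib(0) and fib(1)
    for _ in range(n - 1):
        # Slide the window forward: the new last is the sum of the previous two.
        last_last, last = last, last_last + last
    return last
```

`nth_fibonacci_iterative(8)` returns 21, the eighth term of the sequence listed above. The loop runs n − 1 times and keeps only two values, so it uses O(n) time and O(1) space.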

Now, how might this be done recursively?

Recursive Solution: Fibonacci

Base case: The base case for the Fibonacci sequence is that the first element is 1.

Divide and conquer: By definition of the Fibonacci sequence, the nth Fibonacci number is the sum of the (n−1)th and (n−2)th Fibonacci numbers. However, this implementation has a time complexity of $O(2^n)$, which is discussed in detail later in this chapter. The next section explores a more efficient recursive algorithm for the Fibonacci sequence using tail recursion.
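A sketch of the naive recursive version in Python, using the same numbering (fib(0) = 0, fib(1) = 1) and assuming n ≥ 0; the function name is illustrative. Every call that is not a base case makes two recursive calls, which is where the exponential running time comes from.

```python
def nth_fibonacci_recursive(n: int) -> int:
    """Naive recursive Fibonacci; assumes n >= 0."""
    # Base case: fib(0) = 0 and fib(1) = 1, with no further recursive calls.
    if n <= 1:
        return n
    # Divide and conquer: two smaller subproblems per call.
    return nth_fibonacci_recursive(n - 1) + nth_fibonacci_recursive(n - 2)
```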

Fibonacci Sequence: Tail Recursion
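A possible tail-recursive version is sketched below in Python; the function name and the default arguments `last_last=0` and `last=1` are assumptions for illustration. The two accumulator arguments carry the previous two Fibonacci numbers forward, so each call makes exactly one recursive call, and that call is the last thing it does. Note that CPython does not perform tail-call optimization, so every call still occupies a stack frame.

```python
def nth_fibonacci_tail(n: int, last_last: int = 0, last: int = 1) -> int:
    """Tail-recursive Fibonacci; last_last and last hold fib(k) and fib(k + 1)."""
    # Base cases: the answer is already held in one of the accumulators.
    if n == 0:
        return last_last
    if n == 1:
        return last
    # Single recursive call in tail position: shift the window forward by one.
    return nth_fibonacci_tail(n - 1, last, last_last + last)
```

Calling `nth_fibonacci_tail(8)` walks n down from 8 to 1 in seven recursive calls and returns 21.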

Time Complexity: O(n)

At most, this function executes n times because n is decremented by 1 on each call and there is only a single recursive call.

Space Complexity: O(n)

The space complexity is also O(n) because of the call stack used by this function. This is explained further in the “Recursive Call Stack Memory” section later in this chapter.

To conclude the rules of recursion, let’s examine a more complex example.

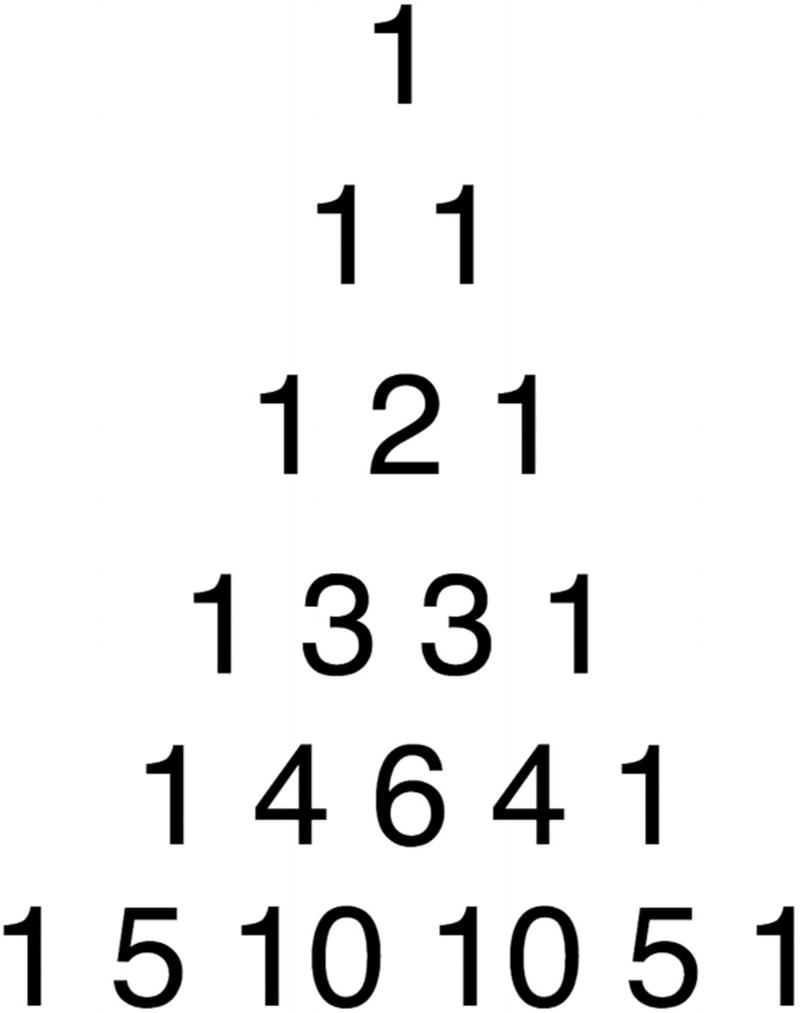

Pascal’s Triangle

Pascal’s triangle

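Base case: The base case for Pascal’s triangle is that the top element (row=1, col=1) is 1. Everything else is derived from this fact alone. Hence, when the column is 1, return 1, and when the row is 0, return 0.

Divide and conquer: Each element of Pascal’s triangle is the sum of the two elements directly above it, so the element at (row, col) is the sum of the elements at (row − 1, col − 1) and (row − 1, col).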

This is the beauty of recursion: the code for Pascal’s triangle is short and elegant.
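A minimal Python sketch of this recursion, using the 1-indexed positions described above (the top element is at row 1, column 1). The function name is illustrative. One design choice worth noting: the sketch checks `row == 0` before `col == 1`, so that positions above the top of the triangle always evaluate to 0 and entries beyond the end of a row come out as 0.

```python
def pascal_triangle(row: int, col: int) -> int:
    """Value at (row, col) of Pascal's triangle, 1-indexed so the top is (1, 1)."""
    # Base case: above the top row there is nothing, so the value is 0.
    if row == 0:
        return 0
    # Base case: the first column of every row is 1.
    if col == 1:
        return 1
    # Divide and conquer: the sum of the two elements directly above.
    return pascal_triangle(row - 1, col - 1) + pascal_triangle(row - 1, col)


print([pascal_triangle(5, col) for col in range(1, 6)])
```

Row 5 of the triangle is 1, 4, 6, 4, 1, which is what the last line prints.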

Big-O for Recursion

In Chapter 1, Big-O analysis of recursive algorithms was not covered because recursive algorithms are much harder to analyze. To perform Big-O analysis for a recursive algorithm, its recurrence relation must be analyzed.

Recurrence Relations

For algorithms implemented iteratively, Big-O analysis is much simpler because loops clearly define when to stop and how much to increment in each iteration. For analyzing recursive algorithms, recurrence relations are used. A recurrence relation has two parts: the Big-O for the base case and the Big-O for the recursive case. Consider the naive recursive Fibonacci function from earlier in this chapter:

Base case: $T(n) = O(1)$

Recursive case: $T(n) = T(n-1) + T(n-2) + O(1)$

Because $T(n) = T(n-1) + T(n-2) + O(1)$, replacing $n$ with $n-1$ gives $T(n-1) = T(n-2) + T(n-3) + O(1)$, and replacing $n-1$ with $n-2$ gives $T(n-2) = T(n-3) + T(n-4) + O(1)$. Each call therefore spawns two more calls, so the number of calls roughly doubles with every level of recursion. In other words, this algorithm has a time complexity of $O(2^n)$.
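One way to check this growth empirically is to count the calls the naive recursive Fibonacci function makes. The sketch below is illustrative: the `counter` list is a hypothetical addition, not part of the original function, and the base case is the same one used above (fib(0) = 0, fib(1) = 1).

```python
def naive_fib_with_count(n: int, counter: list) -> int:
    """Naive recursive Fibonacci that also tallies how many calls were made."""
    counter[0] += 1  # record this call
    if n <= 1:
        return n
    return naive_fib_with_count(n - 1, counter) + naive_fib_with_count(n - 2, counter)


def count_calls(n: int) -> int:
    """Return the total number of calls needed to compute fib(n) naively."""
    counter = [0]
    naive_fib_with_count(n, counter)
    return counter[0]


for n in (10, 15, 20, 25):
    print(n, count_calls(n))
```

The printed counts grow by a factor of roughly 1.6 each time n increases by one (about 11 for every increase of 5), consistent with the exponential behavior described above; $O(2^n)$ is an upper bound on this growth.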

Calculating Big-O this way is difficult and prone to error. Thankfully, there is a concept known as the master theorem to help. The master theorem lets programmers easily analyze recursive algorithms whose recurrence relations fit a specific divide-and-conquer form.

Master Theorem

Given a recurrence relation of the form $T(n) = aT(n/b) + O(n^c)$ where $a \ge 1$ and $b > 1$, there are three cases.

Here, $a$ is the coefficient multiplied by the recursive call (the number of subproblems). $b$ is the “logarithmic” term, the factor that divides $n$ in the recursive call. Finally, $c$ is the exponent of the polynomial in the nonrecursive component of the equation.

Case 1: If $c < \log_b(a)$, then $T(n) = O(n^{\log_b(a)})$.

For example, $T(n) = 8T(n/2) + 1000n^2$.

Identify a, b, c: $a = 8$, $b = 2$, $c = 2$

Evaluate: $\log_2(8) = 3$. $c < 3$ is satisfied.

Result: $T(n) = O(n^3)$

Case 2: If $c = \log_b(a)$, then $T(n) = O(n^c \log(n))$.

For example, $T(n) = 2T(n/2) + 10n$.

Identify a, b, c: $a = 2$, $b = 2$, $c = 1$

Evaluate: $\log_2(2) = 1$. $c = 1$ is satisfied.

Result: $T(n) = O(n^c \log(n)) = O(n^1 \log(n)) = O(n \log(n))$

Case 3: If $c > \log_b(a)$, then $T(n) = O(f(n))$, where $f(n) = n^c$ is the nonrecursive term.

For example, $T(n) = 2T(n/2) + n^2$.

Identify a, b, c: $a = 2$, $b = 2$, $c = 2$

Evaluate: $\log_2(2) = 1$. $c > 1$ is satisfied.

Result: $T(n) = O(f(n)) = O(n^2)$
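The three cases can be captured in a small helper that identifies which case a given $(a, b, c)$ falls into. This is a minimal sketch; the function name and its `(case_number, big_o_string)` return value are illustrative choices. It compares $c$ against $\log_b(a)$ with a floating-point tolerance. For polynomial nonrecursive terms like these, case 3's extra regularity condition always holds, so the sketch does not check it.

```python
import math


def master_theorem(a: float, b: float, c: float) -> tuple:
    """Classify T(n) = a*T(n/b) + O(n^c); returns (case_number, big_o_string)."""
    if a < 1 or b <= 1:
        raise ValueError("the master theorem requires a >= 1 and b > 1")
    critical = math.log(a, b)  # log_b(a), the exponent c is compared against
    if math.isclose(c, critical):
        # Case 2: c == log_b(a), the work is balanced across levels
        return 2, f"O(n^{c:g} log n)"
    if c < critical:
        # Case 1: the recursive calls dominate
        return 1, f"O(n^{critical:g})"
    # Case 3: the nonrecursive O(n^c) work dominates
    return 3, f"O(n^{c:g})"


for a, b, c in [(8, 2, 2), (2, 2, 1), (2, 2, 2)]:
    print(f"a={a}, b={b}, c={c}:", master_theorem(a, b, c))
```

Running it on the three examples above reproduces their results: case 1 with $O(n^3)$, case 2 with $O(n \log(n))$, and case 3 with $O(n^2)$.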

This section covered the time complexity analysis of recursive algorithms in depth. Space complexity analysis is just as important: the memory used by recursive function calls must also be accounted for.

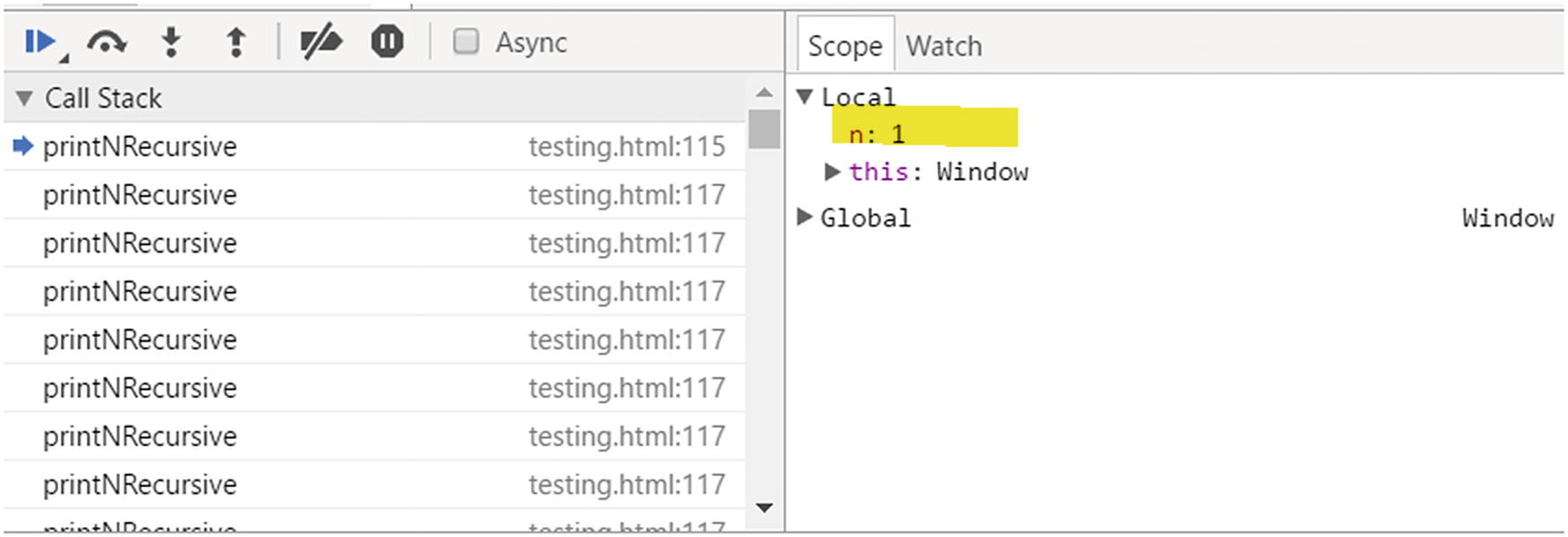

Recursive Call Stack Memory

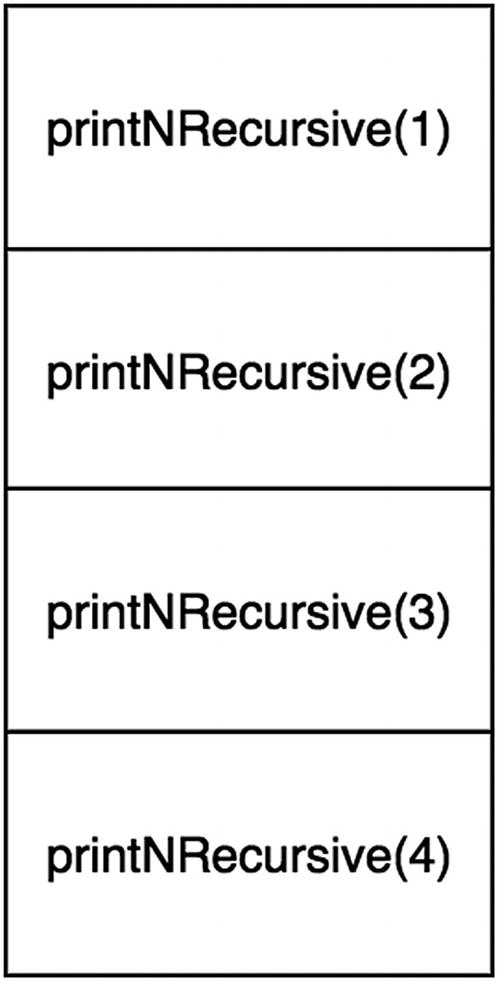

Each time a recursive function calls itself, the new call takes up memory, which is important to account for in Big-O space complexity analysis.

Call stack in Developer Tools

Call stack memory

Recursive functions have an additional space complexity cost: each recursive call must be stored as a frame on the program's call stack in memory, and these frames accumulate until the base case is reached. This is often why an iterative solution may be preferred over a recursive one. In the worst case, if the base case is implemented incorrectly, the recursive function will crash the program with a stack overflow error, which occurs when the call stack grows beyond its allowed size.
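A small Python sketch can make the call stack visible. The hypothetical `recursion_depth` function below recurses from n down to 1 and reports how many frames were stacked at the deepest point. CPython caps recursion depth at `sys.getrecursionlimit()` (typically 1000) and raises `RecursionError` instead of letting the process overflow its native stack, which the second part demonstrates.

```python
import sys


def recursion_depth(n: int, depth: int = 1) -> int:
    """Recurse from n down to 1 and return how many frames were stacked."""
    # Base case: stop recursing once n reaches 1.
    if n <= 1:
        return depth
    # Each call waits on the next one, so frames accumulate until the base case.
    return recursion_depth(n - 1, depth + 1)


print(recursion_depth(500))  # 500 frames at the deepest point

try:
    # Deliberately exceed the interpreter's recursion limit.
    recursion_depth(sys.getrecursionlimit() + 100)
except RecursionError as error:
    print("Call stack limit exceeded:", error)
```

The depth equals n, which is why a recursive function that accumulates n calls has O(n) space complexity even when it stores no data of its own.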

Summary

Recursion is a powerful tool to implement complex algorithms. Recall that all recursive functions consist of two parts: the base case and the divide-and-conquer method (solving subproblems).

Analyzing the Big-O of these recursive algorithms can be done empirically (not recommended) or by using the master theorem. Recall that the master theorem needs the recurrence relation in the following form: $T(n) = aT(n/b) + O(n^c)$. When using the master theorem, identify $a$, $b$, and $c$ to determine which of the three cases of the master theorem it belongs to.

Finally, when implementing and analyzing recursive algorithms, consider the additional memory used by the call stack of the recursive function calls. Each recursive call requires a place in the call stack at runtime; when the call stack accumulates n calls, the space complexity of the function is O(n).

Exercises

These exercises on recursion cover varying problems to help solidify the knowledge gained from this chapter. The focus should be to identify the correct base case first before solving the entire problem. You will find all the code for the exercises on GitHub.¹

CONVERT DECIMAL (BASE 10) TO BINARY NUMBER

To do this, keep dividing the number by 2, and each time calculate the quotient and the modulus (remainder).
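A minimal Python sketch of this approach; the function name is illustrative, and non-negative input is assumed. Recursing on the quotient `n // 2` first and then appending the remainder `n % 2` places the higher-order bits before the lower-order ones.

```python
def decimal_to_binary(n: int) -> str:
    """Return the binary digits of a non-negative integer as a string."""
    if n < 0:
        raise ValueError("n must be non-negative")
    # Base case: 0 and 1 are already single binary digits.
    if n < 2:
        return str(n)
    # Recursive case: binary of the quotient, followed by the remainder bit.
    return decimal_to_binary(n // 2) + str(n % 2)


print(decimal_to_binary(8))
```

`decimal_to_binary(8)` returns `"1000"`. Python's built-in `bin()` gives the same digits with a `0b` prefix, which makes it handy for checking the result.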

Time Complexity: O(log2(n))

Time complexity is logarithmic because each recursive call divides n by 2, which makes the algorithm fast. For example, n = 8 needs only three divisions by 2, and n = 1024 needs only 10.

Space Complexity: O(log2(n))

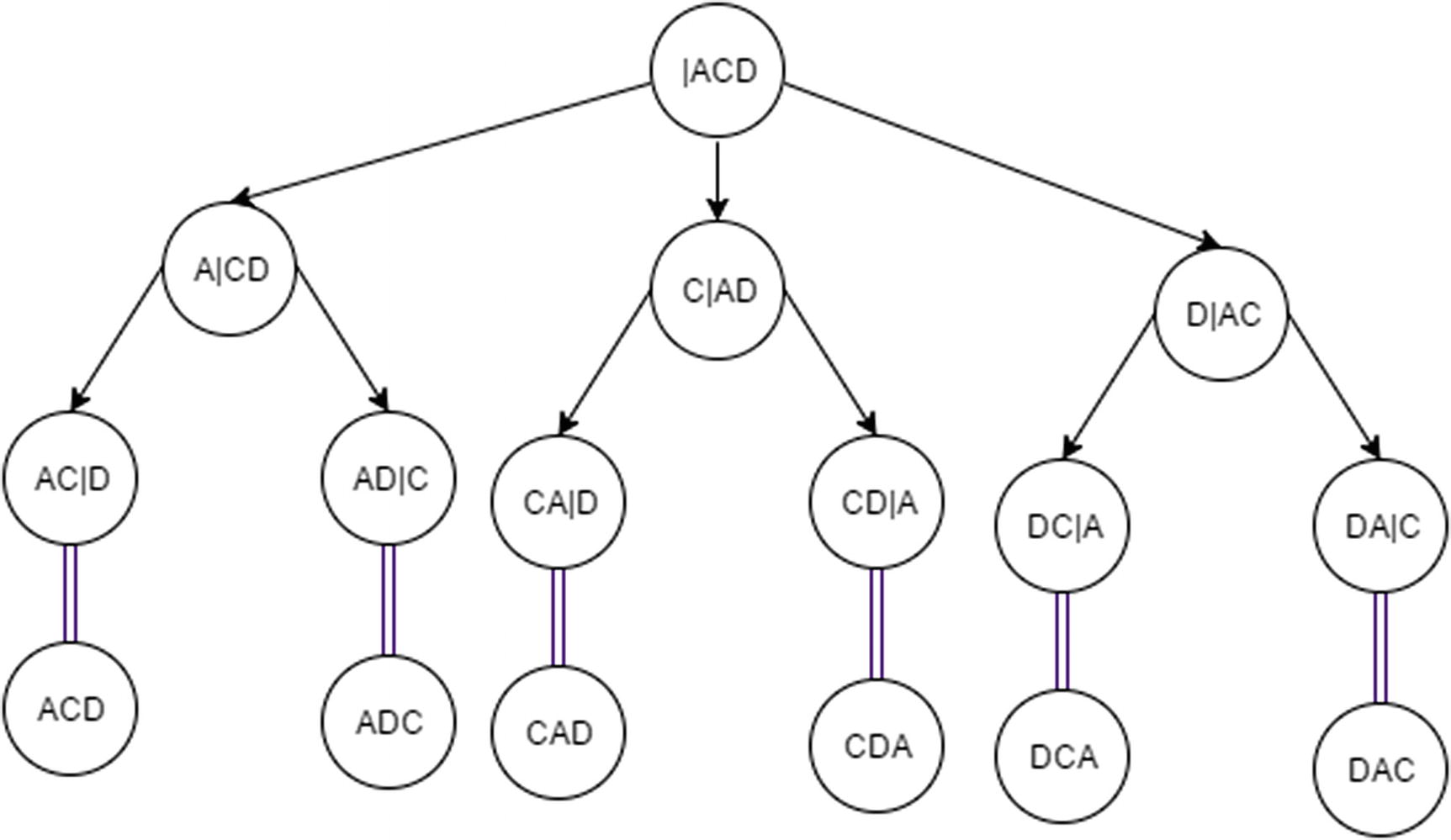

PRINT ALL PERMUTATIONS OF AN ARRAY

This is a classic recursion problem and a fairly hard one to solve. The idea is to swap elements of the array into every possible position.

Permutation of array recursion tree

Base case: beginIndex is equal to endIndex.

When this occurs, the function should print the current permutation.
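A minimal Python sketch of the swap-based approach. The parameters `begin_index` and `end_index` mirror `beginIndex` and `endIndex` from the text; the function name and the `results` list (added so the output can be checked) are illustrative assumptions.

```python
def permute(arr: list, begin_index: int = 0, end_index=None, results=None) -> list:
    """Print every permutation of arr (in place) and return copies of them."""
    if end_index is None:
        end_index = len(arr) - 1
    if results is None:
        results = []
    # Base case: every position has been fixed, so print this permutation.
    if begin_index == end_index:
        print(arr)
        results.append(list(arr))
        return results
    for i in range(begin_index, end_index + 1):
        # Place element i at begin_index, then permute the remaining suffix.
        arr[begin_index], arr[i] = arr[i], arr[begin_index]
        permute(arr, begin_index + 1, end_index, results)
        # Swap back so the next iteration starts from the original order.
        arr[begin_index], arr[i] = arr[i], arr[begin_index]
    return results


permute([1, 2, 3])
```

For `[1, 2, 3]` this prints all six orderings, and the second swap in each loop iteration restores the array, so the input is unchanged once the function returns.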

Time Complexity: O(n!)

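Space Complexity: O(n)

There are n! permutations, so the function makes on the order of n! calls in total. However, at most n of those calls are on the call stack at any one time, so the recursion itself uses O(n) space; storing every permutation instead of printing it would require O(n · n!) space.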

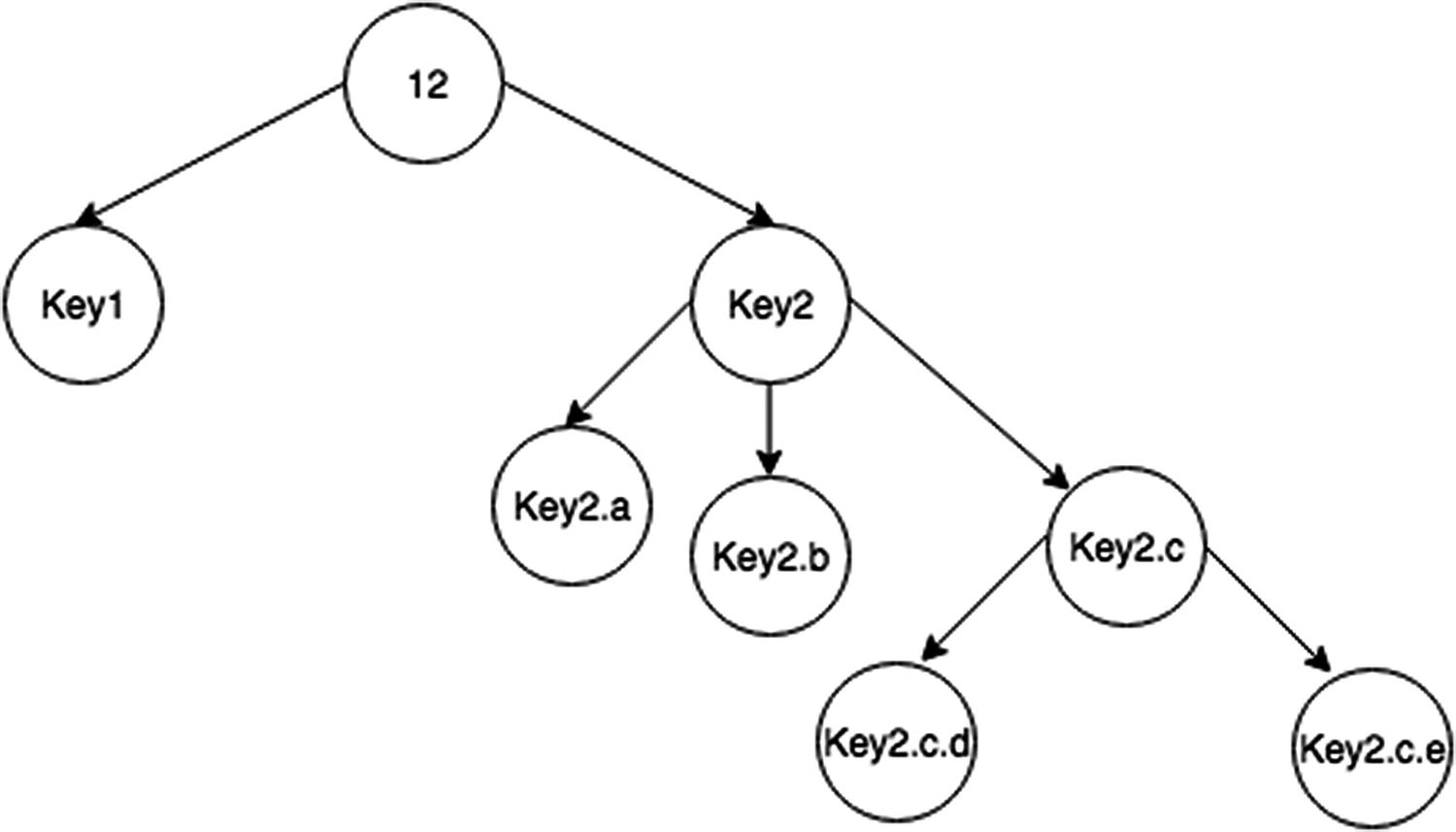

FLATTEN AN OBJECT

Flatten a dictionary recursion tree

To do this, iterate over each property; if the property is itself an object, recursively check it for child properties, passing in the concatenated property name.
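A minimal Python sketch using a `dict` in place of an object. The function name and the `"."` separator used to join nested key names are assumptions for illustration; the sample dictionary is also illustrative.

```python
def flatten_dictionary(dictionary: dict, separator: str = ".") -> dict:
    """Flatten nested dicts into one level, joining nested keys with separator."""
    flattened = {}

    def helper(value, prop_name: str) -> None:
        # Base case: a non-dict value is a leaf; store it under its full name.
        if not isinstance(value, dict):
            flattened[prop_name] = value
            return
        # Recursive case: visit every child, extending the concatenated name.
        for key, child in value.items():
            child_name = f"{prop_name}{separator}{key}" if prop_name else str(key)
            helper(child, child_name)

    helper(dictionary, "")
    return flattened


nested = {
    "Key1": "1",
    "Key2": {"a": "2", "b": "3", "c": {"d": "3", "e": "1"}},
}
print(flatten_dictionary(nested))
```

For the sample input, the nested keys come out as `Key2.a`, `Key2.b`, `Key2.c.d`, and `Key2.c.e`, alongside the top-level `Key1`.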

Time Complexity: O(n)

Space Complexity: O(n)

Each of the n properties is visited only once and stored only once.

WRITE A PROGRAM THAT RECURSIVELY DETERMINES IF A STRING IS A PALINDROME

The idea behind this one is to use two indexes, one at the front and one at the back, compare the characters they point to, and move both inward at each step until the front and back meet.
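A minimal Python sketch of the two-index approach; the function names and the `begin_pos`/`end_pos` parameters are illustrative assumptions. The base case is reached when the indexes meet or cross, and a mismatch ends the recursion early.

```python
def is_palindrome(word: str) -> bool:
    """Return True if word reads the same forward and backward."""
    return _is_palindrome_helper(word, 0, len(word) - 1)


def _is_palindrome_helper(word: str, begin_pos: int, end_pos: int) -> bool:
    # Base case: the indexes met or crossed, so every pair matched.
    if begin_pos >= end_pos:
        return True
    # A mismatched pair means the word cannot be a palindrome.
    if word[begin_pos] != word[end_pos]:
        return False
    # Move both indexes one step inward.
    return _is_palindrome_helper(word, begin_pos + 1, end_pos - 1)
```

For example, `is_palindrome("racecar")` returns `True` and `is_palindrome("hello")` returns `False`.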

Time Complexity: O(n)

Space Complexity: O(n)

Space complexity here is still O(n) because of the recursive call stack, which grows to about n/2 calls. Remember that the call stack takes up memory even when the function does not declare any variables or store anything in a data structure.