Chapter 4

Product and portfolio management

Key concepts covered in this chapter:

Introduction

Effective marketing comes from customer knowledge and an understanding of how a product fits customers’ needs. In this chapter, we’ll describe metrics used in product strategy and planning. These metrics address the following questions: What volumes can marketers expect from a new product? How will sales of existing products be affected by the launch of a new offering? Is brand equity increasing or decreasing? What do customers really want, and what are they willing to sacrifice to obtain it?

We’ll start with a section on trial and repeat rates, explaining how these metrics are determined and how they’re used to generate sales forecasts for new products. Because forecasts involve growth projections, we’ll then discuss the difference between year-on-year growth and compound annual growth rates (CAGR). Because growth of one product sometimes comes at the expense of an existing product line, it is important to understand cannibalisation metrics. These reflect the impact of new products on a portfolio of existing products.

Next, we’ll cover selected metrics associated with brand equity—a central focus of marketing. Indeed, many of the metrics throughout this book can be useful in evaluating brand equity. Certain metrics, however, have been developed specifically to measure the “health” of brands. This chapter will discuss them.

Although branding strategy is a major aspect of a product offering, there are others, and managers must be prepared to make trade-offs among them, informed by a sense of the “worth” of various features. Conjoint analysis helps identify customers’ valuation of specific product attributes. Increasingly, this technique is used to improve products and to help marketers evaluate and segment new or rapidly growing markets. In the final sections of this chapter, we’ll discuss conjoint analysis from multiple perspectives.

Trial, repeat, penetration and volume projections

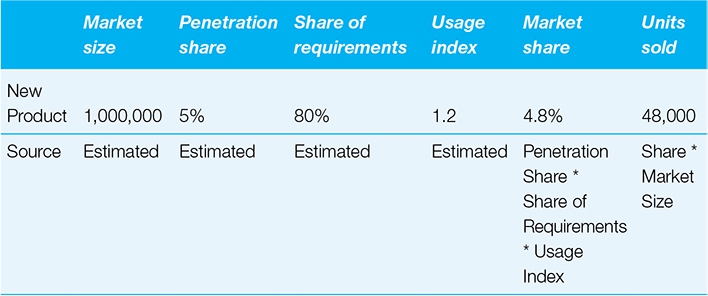

Test markets and volume projections enable marketers to forecast sales by sampling customer intentions through surveys and market studies. By estimating how many customers will try a new product, and how often they’ll make repeat purchases, marketers can establish the basis for such projections.

Projections from customer surveys are especially useful in the early stages of product development and in setting the timing for product launch. Through such projections, customer response can be estimated without the expense of a full product launch.

Purpose: to understand volume projections.

When projecting sales for relatively new products, marketers typically use a system of trial and repeat calculations to anticipate sales in future periods. This works on the principle that everyone buying the product will either be a new customer (a “trier”) or a repeat customer. By adding new and repeat customers in any period, we can establish the penetration of a product in the marketplace.

It is challenging, however, to project sales to a large population on the basis of simulated test markets, or even fully fledged regional rollouts. Marketers have developed various solutions to increase the speed and reduce the cost of test marketing, such as stocking a store with products (or mock-ups of new products) or giving customers money to buy the products of their choice. These simulate real shopping conditions but require specific models to estimate full-market volume on the basis of test results. To illustrate the conceptual underpinnings of this process, we offer a general model for making volume projections on the basis of test market results.

Construction

The penetration of a product in a future period can be estimated on the basis of population size, trial rates and repeat rates.

Trial rate (%): The percentage of a defined population that purchases or uses a product for the first time in a given period.

Example A cable TV company keeps careful records of the names and addresses of its customers. The firm’s vice president of marketing notes that 150 households made first-time use of his company’s services in March 2017. The company has access to 30,000 households. To calculate the trial rate for March, we can divide 150 by 30,000, yielding 0.5%.

First-time triers in period t (#): The number of customers who purchase or use a product or brand for the first time in a given period.

Example A cable TV company started selling a monthly sports package in January. The company typically has an 80% repeat rate and anticipates that this will continue for the new offering. The company sold 10,000 sports packages in January. In February, it expects to add 3,000 customers for the package. On this basis, we can calculate expected penetration for the sports package in February.

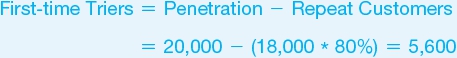

Later that year, in September, the company has 20,000 subscribers. Its repeat rate remains 80%. The company had 18,000 subscribers in August. Management wants to know how many new customers the firm added for its sports package in September:
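Both cable TV calculations can be sketched in a few lines of Python. This is a minimal illustration, assuming (as described above) that penetration in a period equals the prior period's customers multiplied by the repeat rate, plus first-time triers; the function names are our own.

```python
def penetration(prior_customers, repeat_rate, new_triers):
    """Customers in period t = repeaters from period t-1 + first-time triers in t."""
    return prior_customers * repeat_rate + new_triers


def first_time_triers(current_customers, prior_customers, repeat_rate):
    """Rearranged form: triers in t = customers in t - repeaters from t-1."""
    return current_customers - prior_customers * repeat_rate


# February: 10,000 January customers, 80% repeat rate, 3,000 expected new customers.
february = penetration(prior_customers=10_000, repeat_rate=0.80, new_triers=3_000)

# September: 20,000 subscribers now, 18,000 in August, 80% repeat rate.
september_new = first_time_triers(
    current_customers=20_000, prior_customers=18_000, repeat_rate=0.80
)

print(f"Expected February penetration: {february:,.0f}")          # 11,000
print(f"New customers added in September: {september_new:,.0f}")  # 5,600
```

On these figures, 8,000 of the January customers are expected to repeat in February, and 14,400 of the August subscribers carried over into September.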

From penetration, it is a short step to projections of sales.

Simulated test market results and volume projections

Trial volume

Trial rates are often estimated on the basis of surveys of potential customers. Typically, these surveys ask respondents whether they will “definitely” or “probably” buy a product. As these are the strongest of several possible responses to questions of purchase intentions, they are sometimes referred to as the “top two boxes.” The less favourable responses in a standard five-choice survey include “may or may not buy,” “probably won’t buy,” and “definitely won’t buy.” (Refer to Chapter 2 for more on intention to purchase.)

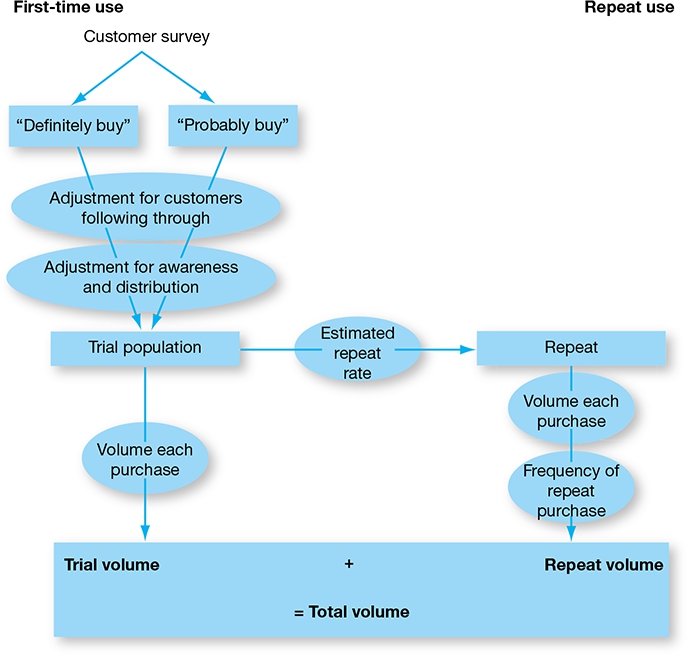

Because not all respondents follow through on their declared purchase intentions, firms often make adjustments to the percentages in the top two boxes in developing sales projections. For example, some marketers estimate that 80% of respondents who say they’ll “definitely buy” and 30% of those who say that they’ll “probably buy” will in fact purchase a product when given the opportunity.¹ (The adjustment for customers following through is used in the following model.) Although some respondents in the bottom three boxes might buy a product, their number is assumed to be insignificant. By reducing the score for the top two boxes, marketers derive a more realistic estimate of the number of potential customers who will try a product, given the right circumstances. Those circumstances are often shaped by product awareness and availability.

Awareness

Sales projection models include an adjustment for lack of awareness of a product within the target market (see Figure 4.1). Lack of awareness reduces the trial rate because it excludes some potential customers who might try the product but don’t know about it. By contrast, if awareness is 100%, then all potential customers know about the product, and no potential sales are lost due to lack of awareness.

Figure 4.1 Schematic of simulated test market volume projection

Distribution

Another adjustment to test market trial rates is usually applied to account for the estimated availability of the new product. Even survey respondents who say they’ll “definitely” try a product are unlikely to do so if they can’t find it easily. In making this adjustment, companies typically use an estimated distribution, a percentage of total stores that will stock the new product, such as ACV % distribution. (See Chapter 6 for further detail.)

After making these modifications, marketers can calculate the number of customers who are expected to try the product, simply by applying the adjusted trial rate to the target population.

Estimated in this way, trial population (#) is identical to penetration (#) in the trial period.

To forecast trial volume, multiply trial population by the projected average number of units of a product that will be bought in each trial purchase. This is often assumed to be one unit because most people will experiment with a single unit of a new product before buying larger quantities.

Combining all these calculations, the entire formula for trial volume is
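Written out from the definitions above, and consistent with the spreadsheet formulas in Table 4.2, the relationship can be expressed as follows. The bracketed term is the adjusted trial rate; the notation is our own shorthand rather than a standard form.

```latex
\text{Trial volume (\#)} = \text{Target population (\#)}
  \times \bigl[\text{Trial rate (\%)} \times \text{Awareness (\%)} \times \text{ACV (\%)}\bigr]
  \times \text{Units per trial purchase (\#)}
```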

Example The marketing team of an office supply manufacturer has a great idea for a new product: a safety stapler. To sell the idea internally, they want to project the volume of sales they can expect over the stapler’s first year. Their customer survey yields the following results (see Table 4.1).

Table 4.1 Customer survey responses

| | % of customers responding |
|---|---|
| Definitely Will Buy | 20% |
| Probably Will Buy | 50% |
| May/May Not Buy | 15% |
| Probably Won’t Buy | 10% |
| Definitely Won’t Buy | 5% |
| Total | 100% |

On this basis, the company estimates a trial rate for the new stapler by applying the industry-standard expectation that 80% of “definites” and 30% of “probables” will in fact buy the product if given the opportunity.

Thus, 31% of the population is expected to try the product if they are aware of it and if it is available in stores. The company has a strong advertising presence and a solid distribution network. Consequently, its marketers believe they can obtain an ACV of approximately 60% for the stapler and that they can generate awareness at a similar level. On this basis, they project an adjusted trial rate of 11.16% of the population:

The target population comprises 20 million people. The trial population can be calculated by multiplying this figure by the adjusted trial rate.

Assuming that each person buys one unit when trying the product, the trial volume will total 2.232 million units.

We can also calculate the trial volume by using the full formula:
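The stapler's trial calculations can be traced step by step in Python. This is a sketch that uses only the figures quoted in the example (survey shares, the 80%/30% follow-through rates, 60% awareness and ACV, and a 20 million target population); the variable names are our own.

```python
# Survey shares from Table 4.1 and the follow-through rates quoted in the text.
definitely_buy, probably_buy = 0.20, 0.50
follow_through_definitely, follow_through_probably = 0.80, 0.30

# Step 1: trial rate if everyone were aware of the product and could find it.
trial_rate = (definitely_buy * follow_through_definitely
              + probably_buy * follow_through_probably)

# Step 2: adjust for expected awareness and distribution (ACV).
awareness, acv = 0.60, 0.60
adjusted_trial_rate = trial_rate * awareness * acv

# Step 3: apply the adjusted rate to the target population.
target_population = 20_000_000
trial_population = target_population * adjusted_trial_rate

# Step 4: convert triers to units (one unit per trial purchase assumed).
units_per_trial = 1
trial_volume = trial_population * units_per_trial

print(f"Trial rate:          {trial_rate:.0%}")            # 31%
print(f"Adjusted trial rate: {adjusted_trial_rate:.2%}")   # 11.16%
print(f"Trial population:    {trial_population:,.0f}")     # 2,232,000
print(f"Trial volume:        {trial_volume:,.0f} units")   # 2,232,000 units
```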

Repeat volume

The second part of projected volume concerns the fraction of people who try a product and then repeat their purchase decision. The model for this dynamic uses a single estimated repeat rate to yield the number of customers who are expected to purchase again after their initial trial. In reality, initial repeat rates are often lower than subsequent repeat rates. For example, it is not uncommon for 50% of trial purchasers to make a first repeat purchase, but for 80% of those who purchase a second time to go on to purchase a third time.

To calculate the repeat volume, the repeat buyers figure can then be multiplied by an expected volume per purchase among repeat customers and by the number of times these customers are expected to repeat their purchases within the period under consideration.

This calculation yields the total volume that a new product is expected to generate among repeat customers over a specified introductory period. The full formula can be written as
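Following the description above, one way to write this relationship is shown below. The bracketed term is the number of repeat buyers; the notation is our own shorthand.

```latex
\text{Repeat volume (\#)} = \bigl[\text{Trial population (\#)} \times \text{Repeat rate (\%)}\bigr]
  \times \text{Units per repeat purchase (\#)}
  \times \text{Repeat purchases per period (\#)}
```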

Example Continuing the previous office supplies example, the safety stapler has a trial population of 2.232 million. Marketers expect the product to be of sufficient quality to generate a 10% repeat rate in its first year. This will yield 223,200 repeat buyers:

On average, the company expects each repeat buyer to purchase on four occasions during the first year. On average, each purchase is expected to comprise two units.

This can be represented in the full formula:

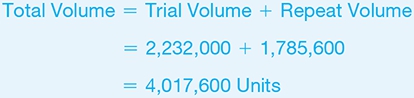
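The repeat volume arithmetic for the stapler can be checked with a few lines of Python. This is a sketch using only the figures quoted above; the variable names are our own.

```python
trial_population = 2_232_000      # triers, from the trial calculation
repeat_rate = 0.10                # share of triers who buy again
units_per_repeat_purchase = 2     # average units per repeat purchase
repeat_purchases_per_year = 4     # repeat occasions in the first year

repeat_buyers = trial_population * repeat_rate
repeat_volume = repeat_buyers * units_per_repeat_purchase * repeat_purchases_per_year

print(f"Repeat buyers: {repeat_buyers:,.0f}")        # 223,200
print(f"Repeat volume: {repeat_volume:,.0f} units")  # 1,785,600 units
```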

Total volume

Total volume is the sum of trial volume and repeat volume, as all volume must be sold to either new customers or returning customers.

To capture total volume in its fully detailed form, we need only combine the previous formulas.

Example Total volume in year one for the stapler is the sum of trial volume and repeat volume.

A full calculation of this figure and a template for a spreadsheet calculation are presented in Table 4.2.

Table 4.2 Volume projection spreadsheet

| Preliminary data | Source | |
|---|---|---|
| Definitely Will Buy | Customer Survey | 20% |
| Probably Will Buy | Customer Survey | 50% |
| Likely Buyers | | |
| Likely Buyers from Definites | = Definitely Buy * 80% | 16% |
| Likely Buyers from Probables | = Probably Buy * 30% | 15% |
| Trial Rate (%) | Total of Likely Buyers | 31% |
| Marketing Adjustments | | |
| Awareness | Estimated from Marketing Plan | 60% |
| ACV | Estimated from Marketing Plan | 60% |
| Adjusted Trial Rate (%) | = Trial Rate * Awareness * ACV | 11.2% |
| Target Population (#) (thousands) | Marketing Plan Data | 20,000 |
| Trial Population (#) (thousands) | = Target Population * Adjusted Trial Rate | 2,232 |
| Unit Volume Purchased per Trial (#) | Estimated from Marketing Plan | 1 |
| Trial Volume (#) (thousands) | = Trial Population * Volume per Trier | 2,232 |
| Repeat Rate (%) | Estimated from Marketing Plan | 10% |
| Repeat Buyers (#) | = Repeat Rate * Trial Population | 223,200 |
| Avg. Volume per Repeat Purchase (#) | Estimated from Marketing Plan | 2 |
| Repeat Purchase Frequency ** (#) | Estimated from Marketing Plan | 4 |
| Repeat Volume (#) (thousands) | = Repeat Buyers * Repeat Volume per Purchase * Repeat Purchase | 1,786 |
| Total Volume (#) (thousands) | | 4,018 |

**Note: The average frequency of repeat purchases per repeat purchaser should be adjusted to reflect the time available for first-time triers to repeat, the purchase cycle (frequency) for the category, and availability. For example, if trial rates are constant over the year, the number of repeat purchases would be about 50% of what it would have been if all had tried on day 1 of the period.

Data sources, complications and cautions

Sales projections based on test markets will always require the inclusion of key assumptions. In setting these assumptions, marketers face tempting opportunities to make the assumptions fit the desired outcome. Marketers must guard against that temptation and perform sensitivity analysis to establish a range of predictions.
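A simple sensitivity analysis can be sketched by recomputing total volume across a grid of assumptions. The example below takes the safety stapler figures as its base case; the low and high values for awareness, ACV and repeat rate are hypothetical, chosen only to illustrate the technique, and the function name is our own.

```python
from itertools import product


def total_volume(population, trial_rate, awareness, acv, repeat_rate,
                 units_per_trial=1, units_per_repeat=2, repeats_per_period=4):
    """Trial volume plus repeat volume for one introductory period."""
    trial_population = population * trial_rate * awareness * acv
    trial_units = trial_population * units_per_trial
    repeat_units = trial_population * repeat_rate * units_per_repeat * repeats_per_period
    return trial_units + repeat_units


# Base case: the safety stapler figures used earlier in the chapter.
base = total_volume(20_000_000, 0.31, 0.60, 0.60, 0.10)

# Hypothetical low / base / high values for the three least certain inputs.
awareness_levels = (0.50, 0.60, 0.70)
acv_levels = (0.50, 0.60, 0.70)
repeat_levels = (0.05, 0.10, 0.15)

scenarios = [
    total_volume(20_000_000, 0.31, awareness, acv, repeat_rate)
    for awareness, acv, repeat_rate in product(awareness_levels, acv_levels, repeat_levels)
]

print(f"Base case: {base:,.0f} units")                                         # 4,017,600
print(f"Range:     {min(scenarios):,.0f} to {max(scenarios):,.0f} units")      # 2,170,000 to 6,683,600
```

Even these modest changes in assumptions move the first-year projection from roughly half of the base case to well above it, which is why a range of predictions is more defensible than a single figure.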

Relatively simple metrics such as trial and repeat rates can be difficult to capture in practice. Although strides have been made in gaining customer data—through customer loyalty cards, for example—it will often be difficult to determine whether customers are new or repeat buyers.

Regarding awareness and distribution: Assumptions concerning the level of public awareness to be generated by launch advertising are fraught with uncertainty. Marketers are advised to ask: What sort of awareness does the product need? What complementary promotions can aid the launch?

Trial and repeat rates are both important. Some products generate strong results in the trial stage but fail to maintain ongoing sales. Consider the following example.

Example Let’s compare the safety stapler with a new product, such as an enhanced envelope sealer. The envelope sealer generates less marketing buzz than the stapler but enjoys a greater repeat rate. To predict results for the envelope sealer, we have adapted the data from the safety stapler by reducing the top two box responses by half (reflecting its lower initial enthusiasm) and raising the repeat rate from 10% to 33% (showing stronger product response after use).

At the six-month mark, sales results for the safety stapler are superior to those for the envelope sealer. After one year, sales results for the two products are equal. On a three-year time scale, however, the envelope sealer, with its loyal base of customers, emerges as the clear winner in sales volume (see Figure 4.2).

The data for the graph is derived as shown in Table 4.3.

Figure 4.2 Time horizon influences perceived results

Table 4.3 High initial interest or long-term loyalty—results over time

Repeating and trying

Some models assume that customers, after they stop repeating purchases, are lost and do not return. However, customers may be acquired, lost, reacquired and lost again. In general, the trial-repeat model is best suited to projecting sales over the first few periods. Other means of predicting volume include share of requirements and penetration metrics (refer to Chapter 2). Those approaches may be preferable for products that lack reliable repeat rates.

Related metrics and concepts

Ever-tried

This is slightly different from trial in that it measures the percentage of the target population that has “ever” (in any previous period) purchased or consumed the product under study. Ever-tried is a cumulative measure and can never add up to more than 100%. Trial, by contrast, is an incremental measure. It indicates the percentage of the population that tries the product for the first time in a given period. Even here, however, there is potential for confusion. If a customer stops buying a product but tries it again six months later, some marketers will categorise that individual as a returning purchaser, others as a new customer. By the latter definition, if individuals can “try” a product more than once, then the sum of all “triers” could equal more than the total population. To avoid confusion, when reviewing a set of data, it’s best to clarify the definitions behind it.

Variations on trial

Certain scenarios reduce the barriers to trial but entail a lower commitment by the customer than a standard purchase.

- Forced trial: No other similar product is available. For example, many people who prefer Pepsi-Cola have “tried” Coca-Cola in restaurants that only serve the latter, and vice versa.

- Discounted trial: Consumers buy a new product but at a substantially reduced price.

Forced and discounted trials are usually associated with lower repeat rates than trials made through volitional purchase.

Evoked set

The set of brands that consumers name in response to questions about which brands they consider (or might consider) when making a purchase in a specific category. Evoked sets for breakfast cereals, for example, are often quite large, while those for coffee may be smaller.

Number of new products

The number of products introduced for the first time in a specific time period.

Revenue from new products

Usually expressed as the percentage of sales generated by products introduced in the current period or, at times, in the most recent three to five periods.

Margin on new products

The dollar or percentage profit margin on new products. This can be measured separately but is calculated in the same way as any other margin.

Company profit from new products

The percentage of company profits that is derived from new products. In working with this figure, it is important to understand how “new product” is defined.

Target market fit

Of customers purchasing a product, target market fit represents the percentage who belong in the demographic, psychographic or other descriptor set for that item. Target market fit is useful in evaluating marketing strategies. If a large percentage of customers for a product belongs to groups that have not previously been targeted, marketers may reconsider their targets—and their allocation of marketing spending.

Growth: percentage and CAGR

There are two common measures of growth. Year-on-year percentage growth uses the prior year as a base for expressing percentage change from one year to the next. Over longer periods of time, compound annual growth rate (CAGR) is a generally accepted metric for average growth rates.

Same stores growth = Growth calculated only on the basis of stores that were fully established in both the prior and current periods.

Purpose: to measure growth.

Growth is the aim of virtually all businesses. Indeed, perceptions of the success or failure of many enterprises are based on assessments of their growth. Measures of year-on-year growth, however, are complicated by two factors:

- Changes over time in the base from which growth is measured. Such changes might include increases in the number of stores, markets, or salespeople generating sales. This issue is addressed by using “same store” measures (or corollary measures for markets, sales personnel, and so on).

- Compounding of growth over multiple periods. For example, if a company achieves 30% growth in one year, but its results remain unchanged over the two subsequent years, this would not be the same as 10% growth in each of three years. CAGR, the compound annual growth rate, is a metric that addresses this issue.

Construction

Percentage growth is the central plank of year-on-year analysis. It addresses the question: What has the company achieved this year, compared to last year? Dividing the results for the current period by the results for the prior period will yield a comparative figure. Subtracting one from the other will highlight the increase or decrease between periods. When evaluating comparatives, one might say that results in Year 2 were, for example, 110% of those in Year 1. To convert this figure to a growth rate, one need only subtract 100%.

The periods considered are often years, but any time frame can be chosen.

Example Ed’s is a small deli, which has had great success in its second year of operation. Revenues in Year 2 are $570,000, compared with $380,000 in Year 1. Ed calculates his second-year sales results to be 150% of first-year revenues, indicating a growth rate of 50%.
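Ed's calculation can be written as a short Python function. This is a minimal sketch of the percentage growth construction described above; the function name is our own.

```python
def percentage_growth(current, prior):
    """Growth rate = (current period / prior period) - 1."""
    return current / prior - 1


year_1_revenue = 380_000
year_2_revenue = 570_000

comparative = year_2_revenue / year_1_revenue                 # Year 2 as a share of Year 1
growth = percentage_growth(year_2_revenue, year_1_revenue)

print(f"Year 2 as a percentage of Year 1: {comparative:.0%}")  # 150%
print(f"Year-on-year growth: {growth:.0%}")                    # 50%
```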

Same stores growth

This metric is at the heart of retail analysis. It enables marketers to analyse results from stores that have been in operation for the entire period under consideration. The logic is to eliminate the stores that have not been open for the full period to ensure comparability. Thus, same stores growth sheds light on the effectiveness with which equivalent resources were used in the period under study versus the prior period. In retail, modest same stores growth and high general growth rates would indicate a rapidly expanding organisation, in which growth is driven by investment. When both same stores growth and general growth are strong, a company can be viewed as effectively using its existing base of stores.

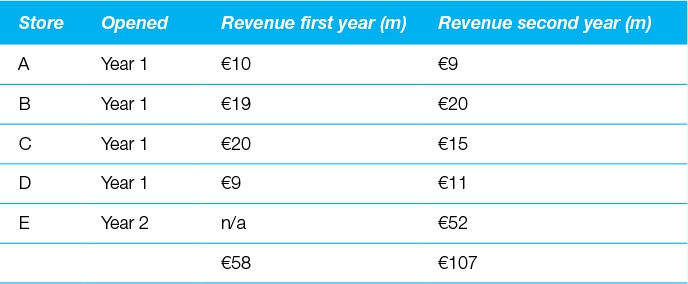

Example A small retail chain in Bavaria posts impressive percentage growth figures, moving from €58 million to €107 million in sales (84% growth) from one year to the next. Despite this dynamic growth, however, analysts cast doubt on the firm’s business model, warning that its same stores growth measure suggests that its concept is failing (see Table 4.4).

Table 4.4 Revenue of a Bavarian chain store

Same stores growth excludes stores that were not open at the beginning of the first year under consideration. For simplicity, we assume that stores in this example were opened on the first day of Years 1 and 2, as appropriate. On this basis, same stores revenue in Year 2 would be €55 million—that is, the €107 million total for the year, less the €52 million generated by the newly opened Store E. This adjusted figure can be entered into the same stores growth formula:
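The sketch below works only from the totals quoted in the text, since the store-by-store figures of Table 4.4 are not reproduced here. It assumes that all €58 million of Year 1 revenue came from stores that were also open throughout Year 2; the variable names are illustrative.

```python
# Revenue in € million, as quoted in the example.
total_year_1 = 58.0
total_year_2 = 107.0
new_store_year_2 = 52.0   # Store E, opened at the start of Year 2

# Assumption: every store trading in Year 1 also traded for all of Year 2,
# so the Year 1 same-stores base equals total Year 1 revenue.
same_stores_year_1 = total_year_1
same_stores_year_2 = total_year_2 - new_store_year_2

total_growth = total_year_2 / total_year_1 - 1
same_stores_growth = same_stores_year_2 / same_stores_year_1 - 1

print(f"Total growth:       {total_growth:.0%}")         # 84%
print(f"Same stores growth: {same_stores_growth:.1%}")   # -5.2%
```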

As demonstrated by its negative same stores growth figure, sales growth at this firm has been fuelled entirely by a major investment in a new store. This suggests serious doubts about its existing store concept. It also raises a question: Did the new store “cannibalise” existing store sales? (See the next section for cannibalisation metrics.)

Compounding growth, value at future period

By compounding, managers adjust growth figures to account for the iterative effect of improvement. For example, 10% growth in each of two successive years would not be the same as a total of 20% growth over the two-year period. The reason: Growth in the second year is built upon the elevated base achieved in the first. Thus, if sales run $100,000 in Year 0 and rise by 10% in Year 1, then Year 1 sales come to $110,000. If sales rise by a further 10% in Year 2, however, then Year 2 sales do not total $120,000. Rather, they total $110,000 + (10% * $110,000) = $121,000.

The compounding effect can be easily modelled in spreadsheet packages, which enable you to work through the compounding calculations one year at a time. To calculate a value in Year 1, multiply the corresponding Year 0 value by one plus the growth rate. Then use the value in Year 1 as a new base and multiply it by one plus the growth rate to determine the corresponding value for Year 2. Repeat this process through the required number of years.

Example Over a three-year period, $100, compounded at a 10% growth rate, yields $133.10.

There is a mathematical formula that generates this effect. It multiplies the value at the beginning—that is, in Year 0—by one plus the growth rate to the power of the number of years over which that growth rate applies.

Example

Using the formula, we can calculate the impact of 10% annual growth over a period of three years. The value in Year 0 is $100. The number of years is 3. The growth rate is 10%.

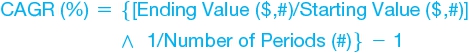
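Both approaches, the year-by-year spreadsheet method and the closed-form formula, can be sketched in Python and checked against each other. The function name is our own.

```python
def future_value(start_value, growth_rate, years):
    """Value at future period = start value * (1 + growth rate) ** number of years."""
    return start_value * (1 + growth_rate) ** years


# Year-by-year compounding, as one would do in a spreadsheet.
value = 100.0
for year in range(1, 4):
    value *= 1 + 0.10
    print(f"Year {year}: ${value:.2f}")   # $110.00, $121.00, $133.10

# Closed-form formula gives the same result.
print(f"Formula: ${future_value(100.0, 0.10, 3):.2f}")   # $133.10
```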

Compound annual growth rate (CAGR)

The CAGR is a constant year-on-year growth rate applied over a period of time. Given starting and ending values, and the length of the period involved, it can be calculated as follows:

Example Let’s assume we have the results of the compounding growth observed in the previous example, but we don’t know what the growth rate was. We know that the starting value was $100, the ending value was $133.10, and the number of years was 3. We can simply enter these numbers into the CAGR formula to derive the CAGR.

Thus, we determine that the growth rate was 10%.
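The CAGR calculation inverts the compounding formula: divide the ending value by the starting value, take the nth root, and subtract one. A minimal Python sketch follows (the function name is our own). The second call revisits the earlier case of 30% growth in one year followed by two flat years, using a hypothetical starting value of 100.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate = (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# The example above: $100 grows to $133.10 over three years.
print(f"CAGR: {cagr(100.0, 133.10, 3):.1%}")   # 10.0%

# 30% growth in one year, then no change for two years (100 -> 130 over 3 years).
print(f"CAGR: {cagr(100.0, 130.0, 3):.1%}")    # 9.1%
```

The second result shows why a single 30% jump is not equivalent to 10% growth in each of three years.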

Data sources, complications and cautions

Percentage growth is a useful measure as part of a package of metrics. It can be deceiving, however, if not adjusted for the addition of such factors as stores, salespeople, or products, or for expansion into new markets. “Same store” sales, and similar adjustments for other factors, tell us how effectively a company uses comparable resources. These adjustments, however, are limited by their deliberate omission of factors that weren’t in operation for the full period under study. Adjusted figures must be reviewed in tandem with measures of total growth.

Related metrics and concepts

Life cycle

Marketers view products as passing through four stages of development:

- Introductory: Small markets not yet growing fast.

- Growth: Larger markets with faster growth rates.

- Mature: Largest markets but little or no growth.

- Decline: Variable size markets with negative growth rates.

This is a rough classification. No generally accepted rules exist for making these classifications.

Cannibalisation rates and fair share draw

Cannibalisation is the reduction in sales (units or dollars) of a firm’s existing products due to the introduction of a new product. The cannibalisation rate is generally calculated as the percentage of a new product’s sales that represents a loss of sales (attributable to the introduction of the new entrant) of a specific existing product or products.

Cannibalisation rates represent an important factor in the assessment of new product strategies.

Fair share draw constitutes an assumption or expectation that a new product will capture sales (in unit or dollar terms) from existing products in proportion to the market shares of those existing products.

Cannibalisation is a familiar business dynamic. A company with a successful product that has strong market share is faced by two conflicting ideas. The first is that it wants to maximise profits on its existing product line, concentrating on the current strengths that promise success in the short term. The second idea is that this company (or its competitors) may identify opportunities for new products that better fit the needs of certain segments. If the company introduces a new product in this field, however, it may “cannibalise” the sales of its existing products. That is, it may weaken the sales of its proven, already successful product line. If the company declines to introduce the new product, however, it will leave itself vulnerable to competitors who will launch such a product, and may thereby capture sales and market share from the company. Often, when new segments are emerging and there are advantages to being early to market, the key factor becomes timing. If a company launches its new product too early, it may lose too much income on its existing line; if it launches too late, it may miss the new opportunity altogether.

Cannibalisation: A market phenomenon in which sales of one product are achieved at the expense of some of a firm’s other products.

The cannibalisation rate is the percentage of sales of a new product that come from a specific set of existing products.

Example A company has a single product that sold 10 units in the previous period. The company plans to introduce a new product that will sell 5 units with a cannibalisation rate of 40%. Thus 40% of the sales of the new product (40% * 5 units = 2 units) come at the expense of the old product. Therefore, after cannibalisation, the company can expect to sell 8 units of the old product and 5 of the new product, or 13 units in total.

Any company considering the introduction of a new product should confront the potential for cannibalisation. A firm would do well to ensure that the amount of cannibalisation is estimated beforehand to provide an idea of how the product line’s contribution as a whole will change. If performed properly, this analysis will tell a company whether overall profits can be expected to increase or decrease with the introduction of the new product line.

Example Lois sells umbrellas on a small beach where she is the only provider. Her financials for last month were as follows:

| | |
|---|---|
| Umbrella Sales Price: | $20 |
| Variable Cost per Umbrella: | $10 |
| Umbrella Contribution per Unit: | $10 |
| Total Unit Sales per Month: | 100 |
| Total Monthly Contribution: | $1,000 |

Next month, Lois plans to introduce a bigger, lighter-weight umbrella called the “Big Block.” Projected financials for the Big Block are as follows:

| | |
|---|---|
| Big Block Sales Price: | $30 |
| Variable Cost per Big Block: | $15 |
| Big Block Contribution per Unit: | $15 |
| Total Unit Sales per Month (Big Block): | 50 |
| Total Monthly Contribution (Big Block): | $750 |

If there is no cannibalisation, Lois thus expects her total monthly contribution will be $1,000 + $750, or $1,750. Upon reflection, however, Lois thinks that the unit cannibalisation rate for Big Block will be 60%. Her projected financials after accounting for cannibalisation are therefore as follows:

| | |
|---|---|
| Big Block Unit Sales: | 50 |
| Cannibalisation Rate: | 60% |
| Regular Umbrella Sales Lost: | 50 * 60% = 30 |
| New Regular Umbrella Sales: | 100 − 30 = 70 |
| New Total Contribution (Regular): | 70 Units * $10 Contribution per Unit = $700 |
| Big Block Total Contribution: | 50 Units * $15 Contribution per Unit = $750 |
| Lois’ Total Monthly Contribution: | $1,450 |

Under these projections, total umbrella sales will increase from 100 to 120, and total contribution will increase from $1,000 to $1,450. Lois will replace 30 regular sales with 30 Big Block sales and gain an extra $5 unit contribution on each. She will also sell 20 more umbrellas than she sold last month and gain $15 unit contribution on each.
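Lois's projection can be reproduced in a few lines of Python. This is a sketch using only the figures from the example; the variable names are our own.

```python
# Figures from the example above.
regular_units, regular_margin = 100, 10       # units per month, $ contribution per unit
big_block_units, big_block_margin = 50, 15
cannibalisation_rate = 0.60                   # share of Big Block sales taken from regular umbrellas

regular_units_lost = big_block_units * cannibalisation_rate
new_regular_units = regular_units - regular_units_lost

contribution_before = regular_units * regular_margin
contribution_after = (new_regular_units * regular_margin
                      + big_block_units * big_block_margin)

print(f"Regular umbrella sales lost: {regular_units_lost:.0f}")               # 30
print(f"Total units sold:            {new_regular_units + big_block_units:.0f}")  # 120
print(f"Contribution before launch:  ${contribution_before:,.0f}")            # $1,000
print(f"Contribution after launch:   ${contribution_after:,.0f}")             # $1,450
```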

In this scenario, Lois was in the enviable position of being able to cannibalise a lower-margin product with a higher-margin one. Sometimes, however, new products carry unit contributions lower than those of existing products. In these instances, cannibalisation reduces overall profits for the firm.

An alternative way to account for cannibalisation is to use a weighted contribution margin. In the previous example, the weighted contribution margin would be the unit margin Lois receives for Big Block after accounting for cannibalisation. Because each Big Block contributes $15 directly and cannibalises the $10 contribution generated by regular umbrellas at a 60% rate, Big Block’s weighted contribution margin is $15 − (0.6 * $10), or $9 per unit. Because Lois expects to sell 50 Big Blocks, her total contribution is projected to increase by 50 * $9, or $450. This is consistent with our previous calculations.

If the introduction of Big Block requires some fixed marketing expenditure, then the $9 weighted margin can be used to find the break-even number of Big Block sales required to justify that expenditure. For example, if the launch of Big Block requires $360 in one-time marketing costs, then Lois needs to sell $360/$9, or 40 Big Blocks to break even on that expenditure.

If a new product has a margin lower than that of the existing product that it cannibalises, and if its cannibalisation rate is high enough, then its weighted contribution margin might be negative. In that case, company earnings will decrease with each unit of the new product sold.
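The weighted contribution margin and the break-even calculation above can be sketched in a few lines of Python. This is a minimal illustration using Lois’ figures from the text; the function names are our own and are not part of any standard library.

```python
def weighted_contribution_margin(new_margin, old_margin, cannibalisation_rate):
    """Unit margin of the new product, net of contribution lost on cannibalised units."""
    return new_margin - cannibalisation_rate * old_margin


def break_even_units(fixed_cost, weighted_margin):
    """Units of the new product needed to recover a one-time fixed cost."""
    if weighted_margin <= 0:
        # A zero or negative weighted margin means each sale fails to add contribution.
        raise ValueError("weighted margin must be positive to break even")
    return fixed_cost / weighted_margin


# Figures from the umbrella example in the text
margin = weighted_contribution_margin(new_margin=15, old_margin=10,
                                      cannibalisation_rate=0.6)
contribution_gain = 50 * margin          # 50 Big Blocks sold
units_needed = break_even_units(360, margin)

print(f"Weighted margin per Big Block: ${margin:.2f}")
print(f"Projected increase in contribution: ${contribution_gain:.2f}")
print(f"Break-even Big Block sales: {units_needed:.0f}")
```

The guard in `break_even_units` reflects the last point above: when the weighted margin is zero or negative, no volume of sales can recover the launch expenditure.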

Cannibalisation refers to a dynamic in which one product of a firm takes share from one or more other products of the same firm. When a product takes sales from a competitor’s product, that is not cannibalisation, though managers sometimes incorrectly state that their new products are “cannibalising” sales of a competitor’s goods.

Though it is not cannibalisation, the impact of a new product on the sales of competing goods is an important consideration in a product launch. One simple assumption about how the introduction of a new product might affect the sales of existing products is called “fair share draw.”

Fair share draw: The assumption that a new product will capture sales (in unit or dollar terms) from existing products in direct proportion to the market shares held by those existing products.

Example: Three rivals compete in the youth fashion market in a small town. Their sales and market shares for last year appear in the following table.

| Firm | Sales | Share |
| --- | --- | --- |
| Threadbare | $500,000 | 50% |
| Too Cool for School | $300,000 | 30% |
| Tommy Hitchhiker | $200,000 | 20% |
| Total | $1,000,000 | 100% |

A new entrant is expected to enter the market in the coming year and to generate $300,000 in sales. Two-thirds of those sales are expected to come at the expense of the three established competitors. Under an assumption of fair share draw, how much will each firm sell next year?

If the new firm takes two-thirds of its sales from existing competitors, then this “capture” of sales will total (2/3) * $300,000, or $200,000. Under fair share draw, the breakdown of that $200,000 will be proportional to the shares of the current competitors. Thus 50% of the $200,000 will come from Threadbare, 30% from Too Cool, and 20% from Tommy. The following table shows the projected sales and market shares next year of the four competitors under the fair share draw assumption:

| Firm | Sales | Share |
| --- | --- | --- |
| Threadbare | $400,000 | 36.36% |
| Too Cool for School | $240,000 | 21.82% |
| Tommy Hitchhiker | $160,000 | 14.55% |
| New Entrant | $300,000 | 27.27% |
| Total | $1,100,000 | 100% |

Notice that the new entrant expands the market by $100,000, an amount equal to the sales of the new entrant that do not come at the expense of existing competitors. Notice also that under fair share draw, the relative shares of the existing competitors remain unchanged. For example, Threadbare’s share, relative to the total of the original three competitors, is 36.36/(36.36 + 21.82 + 14.55), or 50%, equal to its share before the entry of the new competitor.
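For readers who want to reproduce the fair share draw projection, the following Python sketch applies the assumption to the youth fashion example. The function name and its arguments are our own illustrative choices.

```python
def fair_share_draw(current_sales, entrant_sales, fraction_from_incumbents):
    """Project sales and shares when an entrant draws from incumbents
    in proportion to their current market shares."""
    captured = entrant_sales * fraction_from_incumbents
    market_total = sum(current_sales.values())
    projected = {
        firm: sales - captured * (sales / market_total)
        for firm, sales in current_sales.items()
    }
    projected["New Entrant"] = entrant_sales
    new_total = sum(projected.values())
    shares = {firm: sales / new_total for firm, sales in projected.items()}
    return projected, shares


last_year = {
    "Threadbare": 500_000,
    "Too Cool for School": 300_000,
    "Tommy Hitchhiker": 200_000,
}
projected, shares = fair_share_draw(last_year, 300_000, 2 / 3)

for firm in projected:
    print(f"{firm}: ${projected[firm]:,.0f} ({shares[firm]:.2%})")
```

Because each incumbent loses sales in proportion to its share, the ratios among the three original firms are the same before and after entry.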

The opposite of cannibalisation is incremental sales: the introduction of a new product may boost sales of a complementary product, one that naturally goes with the new product.

Data sources, complications and cautions

As noted previously, in cannibalisation, one of a firm’s products takes sales from one or more of that firm’s other products. Sales taken from the products of competitors are not “cannibalised” sales, though some managers label them as such.

Cannibalisation rates depend on how the features, pricing, promotion and distribution of the new product compare to those of a firm’s existing products. The greater the similarity of their respective marketing strategies, the higher the cannibalisation rate is likely to be.

Although cannibalisation is always an issue when a firm launches a new product that competes with its established line, this dynamic is particularly damaging to the firm’s profitability when a low-margin entrant captures sales from the firm’s higher-margin offerings. In such cases, the new product’s weighted contribution margin can be negative. Even when cannibalisation rates are significant, however, and even if the net effect on the bottom line is negative, it may be wise for a firm to proceed with a new product if management believes that the original line is losing its competitive strength. The following example is illustrative.

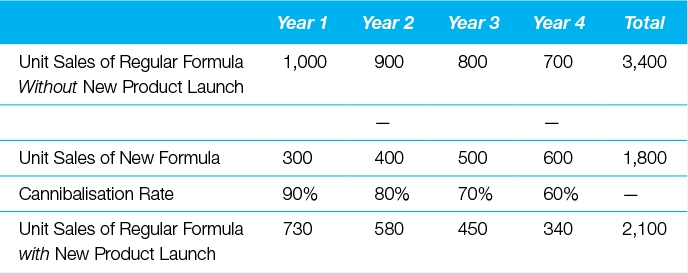

Example: A producer of powdered-milk formula has an opportunity to introduce a new, improved formula. The new formula has certain attributes not found in the firm’s existing products. Due to higher costs, however, it will carry a contribution margin of only $8, compared with the $10 margin of the established formula. Analysis suggests that the unit cannibalisation rate of the new formula will be 90% in its initial year. If the firm expects to sell 300 units of the new formula in its first year, should it proceed with the introduction?

Analysis shows that the new formula will generate $8 * 300, or $2,400 in direct contribution. Cannibalisation, however, will reduce contribution from the established line by $10 * 0.9 * 300, or $2,700. Thus, the company’s overall contribution will decline by $300 with the introduction of the new formula. (Note also that the weighted unit margin for the new product is −$1.) This simple analysis suggests that the new formula should not be introduced.

The following table, however, contains the results of a more detailed four-year analysis. Reflected in this table are management’s beliefs that without the new formula, sales of the regular formula will decline to 700 units in Year 4. In addition, unit sales of the new formula are expected to increase to 600 in Year 4, while cannibalisation rates decline to 60%.

Without the new formula, total four-year contribution is projected as $10 * 3,400, or $34,000. With the new formula, total contribution is projected as ($8 * 1,800) + ($10 * 2,100), or $35,400. Although forecast contribution is lower in Year 1 with the new formula than without it, total four-year contribution is projected to be higher with the new product due to increases in new-formula sales and decreases in the cannibalisation rate.
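The arithmetic of this example can be checked with a short Python sketch. It uses only the figures quoted in the text (the Year 1 volume and cannibalisation rate, and the four-year unit totals); the year-by-year breakdown is not reproduced here, and the function name is our own.

```python
NEW_MARGIN = 8    # contribution per unit of the new formula ($)
OLD_MARGIN = 10   # contribution per unit of the established formula ($)


def contribution_change(new_units, cannibalisation_rate):
    """Net change in one year's contribution from launching the new formula,
    assuming established-formula sales would otherwise be unchanged."""
    direct = NEW_MARGIN * new_units
    lost = OLD_MARGIN * cannibalisation_rate * new_units
    return direct - lost


# Year 1: 300 units of the new formula at a 90% unit cannibalisation rate
year1_change = contribution_change(300, 0.9)

# Four-year unit totals quoted in the text
without_new = OLD_MARGIN * 3_400
with_new = NEW_MARGIN * 1_800 + OLD_MARGIN * 2_100

print(f"Year 1 change in contribution: {year1_change:,.0f}")
print(f"Four-year contribution without the new formula: {without_new:,}")
print(f"Four-year contribution with the new formula: {with_new:,}")
```

The single-year view and the four-year view point in opposite directions, which is why the longer horizon matters when the established line is expected to decline.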

Brand equity metrics

Brand equity is strategically crucial, but famously difficult to quantify. Many experts have developed tools to analyse this asset, but there’s no universally accepted way to measure it. In this section, we’ll consider the following techniques to gain insight in this area:

- Brand Equity Ten (Aaker)
- BrandAsset® Valuator (Young & Rubicam)
- Brand Equity Index (Moran)
- Brand Finance
- BrandZ (Millward Brown)
- Brand Valuation Model (Interbrand)

Purpose: to measure the value of a brand.

A brand encompasses the name, logo, image, and perceptions that identify a product, service, or provider in the minds of customers. It takes shape in advertising, packaging and other marketing communications, and becomes a focus of the relationship with consumers. In time, a brand comes to embody a promise about the goods it identifies—a promise about quality, performance, or other dimensions of value, which can influence consumers’ choices among competing products. When consumers trust a brand and find it relevant, they may select the offerings associated with that brand over those of competitors, even at a premium price. When a brand’s promise extends beyond a particular product, its owner may leverage it to enter new markets. For all these reasons, a brand can hold tremendous value, known as brand equity.

Yet this value can be remarkably difficult to measure. At a corporate level, when one company buys another, marketers might analyse the goodwill component of the purchase price to shed light on the value of the brands acquired. Because goodwill represents the excess paid for a firm beyond the value of its tangible, measurable assets, and because a company’s brands constitute important intangible assets, the goodwill figure may provide a useful indicator of the value of a portfolio of brands. Of course, a company’s brands are rarely the only intangible assets acquired in such a transaction. Goodwill more frequently encompasses intellectual property and other intangibles in addition to brand. The value of intangibles, as estimated by firm valuations (sales or share prices), is also subject to economic cycles, investor “exuberance,” and other influences that are difficult to separate from the intrinsic value of the brand.

From a consumer’s perspective, the value of a brand might be the amount she would be willing to pay for merchandise that carries the brand’s name, over and above the price she’d pay for identical unbranded goods.² Marketers strive to estimate this premium in order to gain insight into brand equity. Here again, however, they encounter daunting complexities, as individuals vary not only in their awareness of different brands, but in the criteria by which they judge them, the evaluations they make, and the degree to which those opinions guide their purchase behaviour. In a similar vein, the value of the brand might be the increased propensity to buy the product because it carries the brand name.

Theoretically, a marketer might aggregate these preferences across an entire population to estimate the total premium its members would pay for goods of a certain brand. Even that, however, wouldn’t fully capture brand equity. What’s more, the value of a brand encompasses not only the premium a customer will pay for each unit of merchandise associated with that brand, but also the incremental volume it generates. A successful brand will shift outward the demand curve for its goods or services; that is, it not only will enable a provider to charge a higher price (P′ rather than P, as seen in Figure 4.3), but it will also sell an increased quantity (Q′ rather than Q). Thus, brand equity in this example can be viewed as the difference between the revenue with the brand (P′ × Q′) and the revenue without the brand (P × Q), depicted as the shaded area in Figure 4.3. (Of course, this example focuses on revenue when, in fact, it is profit or the present value of profits that matters more. But the increase in price associated with the brand comes with no incremental variable costs, so this lift represents profit as well.)

Figure 4.3 Brand equity: outward shift of demand curve

In practice, of course, it’s difficult to measure a demand curve, and few marketers do so. Because brands are crucial assets, however, both marketers and academic researchers have devised means to contemplate their value. David Aaker, for example, tracks 10 attributes of a brand to assess its strength. Bill Moran has formulated a brand equity index that can be calculated as the product of effective market share, relative price and customer retention. Kusum Ailawadi and her colleagues have refined this calculation, suggesting that a truer estimate of a brand’s value might be derived by multiplying the Moran index by the dollar volume of the market in which it competes. Young & Rubicam, a marketing communications agency, has developed a tool called the BrandAsset® Valuator, which measures a brand’s power on the basis of differentiation, relevance, esteem, and knowledge. An even more theoretical conceptualisation of brand equity is the difference of the firm value with and without the brand. If you find it difficult to imagine the firm without its brand, then you can appreciate how difficult it is to quantify brand equity. Interbrand, a brand strategy agency, draws upon its own model to separate tangible product value from intangible brand value and uses the latter to rank the top 100 global brands each year. Finally, conjoint analysis can shed light on a brand’s value because it enables marketers to measure the impact of that brand on customer preference, treating it as one among many attributes that consumers trade off in making purchase decisions (see later in this chapter).

Construction

Brand Equity Ten (Aaker)

David Aaker, a marketing professor and brand consultant, highlights 10 attributes of a brand that can be used to assess its strength. These include Differentiation, Satisfaction or Loyalty, Perceived Quality, Leadership or Popularity, Perceived Value, Brand Personality, Organisational Associations, Brand Awareness, Market Share, and Market Price and Distribution Coverage. Aaker doesn’t weight the attributes or combine them in an overall score, as he believes any weighting would be arbitrary and would vary among brands and categories. Rather, he recommends tracking each attribute separately.

Brand Equity Index (Moran)

Marketing executive Bill Moran has derived an index of brand equity as the product of three factors: Effective Market Share, Relative Price, and Durability.

Effective Market Share is a weighted average. (We suggest that it makes more sense to use market share in units rather than in revenue terms, to avoid effectively double counting the impact of price, which also influences the calculation through “Relative Price.”) It represents the sum of a brand’s market shares in all segments in which it competes, weighted by each segment’s proportion of that brand’s total sales. Thus, if a brand made 70% of its sales in Segment A, in which it had a 50% share of the market, and 30% of its sales in Segment B, in which it had a 20% share, its Effective Market Share would be (0.7 * 0.5) + (0.3 * 0.2) = 0.35 + 0.06 = 0.41, or 41%.

Relative Price is a ratio. It represents the price of goods sold under a given brand, divided by the average price of comparable goods in the market. For example, if goods associated with the brand under study sold for $2.50 per unit, while competing goods sold for an average of $2.00, that brand’s Relative Price would be 1.25, and it would be said to command a price premium. Conversely, if the brand’s goods sold for $1.50, versus $2.00 for the competition, its Relative Price would be 0.75, placing it at a discount to the market. Note that the benchmark here is the average price of comparable competing goods; this is not the same as dividing the brand price by the market average price. The measure used here has the advantage that, unlike a ratio to the market average price, its value is not affected by the market share of the firm or its competitors.

Durability is a measure of customer retention or loyalty. It represents the percentage of a brand’s customers who will continue to buy goods under that brand in the following year.

Example: ILLI is a tonic drink that focuses on two geographic markets: eastern and western U.S. metropolitan areas. In the western market, which accounts for 60% of ILLI’s sales, the drink has a 30% share of the market. In the East, where ILLI makes the remaining 40% of its sales, it has a 50% share of the market.

Effective Market Share is equal to the sum of ILLI’s shares of the segments, weighted by the percentage of total brand sales represented by each:

Effective Market Share = (0.6 * 0.3) + (0.4 * 0.5) = 0.18 + 0.20 = 0.38, or 38%

The average price for tonic drinks is $2.00, but ILLI enjoys a premium. It generally sells for $2.50, yielding a Relative Price of $2.50 / $2.00, or 1.25.

Half of the people who purchase ILLI this year are expected to repeat next year, generating a Durability figure of 0.5. (See earlier in this chapter for a definition of repeat rates.)

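With this information, ILLI’s Brand Equity Index can be calculated as follows:

Brand Equity Index = Effective Market Share * Relative Price * Durability = 0.38 * 1.25 * 0.5 = 0.2375, where 0.38 = (0.6 * 0.3) + (0.4 * 0.5) is ILLI’s Effective Market Share.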
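The ILLI calculation can also be expressed as a short Python sketch, which makes it easy to rerun the index under different assumptions (for example, before and after a proposed price change). The function names are our own, and the inputs are the figures given in the example.

```python
def effective_market_share(segments):
    """Sum of segment shares, weighted by each segment's fraction of brand sales.

    `segments` is a list of (fraction_of_brand_sales, share_in_segment) pairs.
    """
    total_weight = sum(weight for weight, _ in segments)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("fractions of brand sales must sum to 1")
    return sum(weight * share for weight, share in segments)


def brand_equity_index(effective_share, relative_price, durability):
    """Moran's index: the product of the three factors described in the text."""
    return effective_share * relative_price * durability


# ILLI: 60% of sales in the West (30% share), 40% in the East (50% share)
illi_share = effective_market_share([(0.6, 0.3), (0.4, 0.5)])
illi_relative_price = 2.50 / 2.00
illi_durability = 0.5

illi_index = brand_equity_index(illi_share, illi_relative_price, illi_durability)
print(f"Effective Market Share: {illi_share:.2f}")
print(f"Relative Price: {illi_relative_price:.2f}")
print(f"Brand Equity Index: {illi_index:.4f}")
```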

Clearly, marketers can expect to encounter interactions among the three factors behind a Brand Equity Index. If they raise the price of a brand’s goods, for example, they may increase its Relative Price but reduce its Effective Market Share and Durability. Would the overall effect be positive for the brand? By estimating the Brand Equity Index before and after the price increase under consideration, marketers may gain insight into that question.

Notice that two of the factors behind this index, Effective Market Share and Relative Price, draw upon the axes of a demand curve (quantity and price). In constructing his index, Moran has taken those two factors and combined them, through year-to-year retention, with the dimension of time.

Ailawadi and her colleagues suggested that the equity index of a brand can be enhanced by multiplying it by the dollar volume of the market in which the brand competes, generating a better estimate of its value. Ailawadi also contends that the equity of a brand is better captured by its overall revenue premium (relative to generic goods) rather than its price per unit alone, as the revenue figure incorporates both price and quantity and so reflects a jump from one demand curve to another rather than a movement along a single curve.

BrandAsset Valuator (Young & Rubicam)

Young & Rubicam, a marketing communications agency, has developed the BrandAsset Valuator, a tool to diagnose the power and value of a brand. In using it, the agency surveys consumers’ perspectives along four dimensions:

- Differentiation: The defining characteristics of the brand and its distinctiveness relative to competitors.
- Relevance: The appropriateness and connection of the brand to a given consumer.
- Esteem: Consumers’ respect for and attraction to the brand.
- Knowledge: Consumers’ awareness of the brand and understanding of what it represents.

Young & Rubicam maintains that these criteria reveal important factors behind brand strength and market dynamics. For example, although powerful brands score high on all four dimensions, growing brands may earn higher grades for Differentiation and Relevance, relative to Knowledge and Esteem. Fading brands often show the reverse pattern, as they’re widely known and respected but may be declining toward commoditisation or irrelevance (see Figure 4.4).

Figure 4.4 Young & Rubicam BrandAsset Valuator patterns of brand equity

The BrandAsset Valuator is a proprietary tool, but the concepts behind it have broad appeal. Many marketers apply these concepts by conducting independent research and exercising judgement about their own brands relative to the competition. Leon Ramsellar³ of Philips Consumer Electronics, for example, has reported using four key measures in evaluating brand equity and offered sample questions for assessing them.

- Uniqueness: Does this product offer something new to me?
- Relevance: Is this product relevant for me?
- Attractiveness: Do I want this product?
- Credibility: Do I believe in the product?

Clearly, Ramsellar’s list is not the same as Y&R’s BAV, but the similarity of the first two factors is hard to miss.

Brand Finance

Founded in 1996, Brand Finance is an independent intangible asset valuation consultancy based in the UK with offices in more than 20 countries. It focuses on the valuation of firms’ intangible assets.

Its methodology specifies three alternative brand valuation approaches: the Market, Cost and Income approaches.

Royalty Relief methodology

Brand Finance uses a Royalty Relief methodology when calculating the value of a brand. This approach is based on the assumption that if the company did not own the trademarks that it benefits from, it would need to license them from a third party brand owner and pay a licensing fee. Ownership of the trademarks relieves the company from paying this royalty fee.

Royalty Relief valuation formula

The Royalty Relief method involves estimating likely future sales, applying an appropriate royalty rate to them, and then discounting estimated future, post-tax royalties, to arrive at a Net Present Value. This is held to represent the brand value.
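The steps in this formula can be sketched in Python as follows. This is only an illustration of the logic described above, not Brand Finance’s actual model: the sales forecast, royalty rate, tax rate and discount rate are hypothetical inputs, and we assume royalties are received at the end of each year over a finite forecast horizon.

```python
def royalty_relief_value(forecast_sales, royalty_rate, tax_rate, discount_rate):
    """Net present value of post-tax royalties the owner is 'relieved' from paying.

    Assumes end-of-year cash flows and a finite forecast horizon (our assumptions).
    """
    value = 0.0
    for year, sales in enumerate(forecast_sales, start=1):
        post_tax_royalty = sales * royalty_rate * (1 - tax_rate)
        value += post_tax_royalty / (1 + discount_rate) ** year
    return value


# Hypothetical inputs, for illustration only
forecast_sales = [100.0, 110.0, 120.0]   # $ millions per year
brand_value = royalty_relief_value(
    forecast_sales,
    royalty_rate=0.05,    # hypothetical royalty rate
    tax_rate=0.25,        # hypothetical tax rate
    discount_rate=0.10,   # hypothetical discount rate
)
print(f"Illustrative brand value: ${brand_value:.2f} million")
```

In practice, the royalty rate is the most judgement-laden input, which is why the brand rating described next plays such a central role.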

Brand ratings

In determining the appropriate royalty rate, Brand Finance establishes a range of comparable royalty rates, and determines the point within the range that the brand under review falls by reference to a brand rating. The brand rating is calculated using Brand Finance’s BrandBeta analysis, which benchmarks the strength, risk and potential of a brand, relative to its competitors, on a scale ranging from AAA to D. It is conceptually similar to a credit rating.

The data used to calculate the ratings comes from various sources including Bloomberg, annual reports, client-commissioned research, and Brand Finance internal research.

BrandZ (Millward Brown)

BrandZ is Millward Brown’s brand equity database. It holds data from more than two million consumers and professionals across 31 countries, comparing more than 23,000 brands. The database is used to estimate brand valuations and, each year since 2006, has been used to generate a list of the top 100 global brands.

The objective of its methodology is to peel away all financial components of brand value to determine how much brand alone contributes to brand value. BrandZ conducts worldwide, ongoing, in-depth quantitative consumer research and builds up a global picture of brands on a category-by-category and country-by-country basis. BrandZ uses the following pillars to anchor its brand valuation: Meaningful, Different and Salient.

- Meaningful: In any category, these brands appeal more, generate greater “love,” and meet the individual’s expectations and needs.
- Different: These brands are unique in a positive way and “set the trends,” staying ahead of the curve for the benefit of the consumer.
- Salient: They come spontaneously to mind as the brand of choice for key needs.

Valuation process

Step 1: Calculating Financial Value

Part A

- Determine total corporate earnings from the corporation’s entire portfolio of brands

- Analyse financial information from annual reports and other sources, such as Kantar Worldpanel and Kantar Retail. This analysis yields a metric called the Attribution Rate.

- Multiply Corporate Earnings by the Attribution Rate to arrive at Branded Earnings, the amount of Corporate Earnings attributed to a particular brand. If the Attribution Rate of a brand is 50 percent, for example, then half the Corporate Earnings are identified as coming from that brand.

Part B

- Determine future earnings and attribute an earnings multiple to the company. Information supplied by Bloomberg data is used to calculate the Brand Multiple. BrandZ takes the Branded Earnings and multiplies that number by the Brand Multiple to arrive at the Financial Value.

Step 2: Calculating Brand Contribution

- We now have the value of the branded business as a proportion of the total value of the corporation. But this branded business value is still not quite the core that we are after. To arrive at Brand Value, we need to peel away a few more layers, such as other factors that influence the value of the branded business; for example: price, convenience, availability and distribution.

- Because a brand exists in the mind of the consumer, we have to assess the brand’s uniqueness and its ability to stand out from the crowd, generate desire, and cultivate loyalty. This unique role played by brand is called Brand Contribution.

Step 3: Calculating Brand Value

- Take the Financial Value and multiply it by Brand Contribution, which is expressed as a percentage of Financial Value. The result is Brand Value. Brand Value is the dollar amount a brand contributes to the overall value of a corporation. Isolating and measuring this intangible asset reveals an additional source of shareholder value that otherwise would not exist.
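The three steps above chain together as a series of multiplications, sketched here in Python. The 50 percent Attribution Rate comes from the text; the earnings figure, Brand Multiple and Brand Contribution are hypothetical values chosen only to show how the steps connect, and the function name is our own.

```python
def brandz_brand_value(corporate_earnings, attribution_rate,
                       brand_multiple, brand_contribution):
    """Follow the three steps described in the text and return each intermediate value."""
    # Step 1, Part A: earnings attributed to the brand
    branded_earnings = corporate_earnings * attribution_rate
    # Step 1, Part B: apply the earnings multiple
    financial_value = branded_earnings * brand_multiple
    # Steps 2 and 3: keep only the share of value driven by the brand itself
    brand_value = financial_value * brand_contribution
    return branded_earnings, financial_value, brand_value


branded_earnings, financial_value, brand_value = brandz_brand_value(
    corporate_earnings=1_000.0,   # hypothetical, $ millions
    attribution_rate=0.5,         # 50 percent, as in the text's example
    brand_multiple=12.0,          # hypothetical
    brand_contribution=0.3,       # hypothetical, 30 percent of Financial Value
)
print(f"Branded Earnings: ${branded_earnings:,.0f} million")
print(f"Financial Value: ${financial_value:,.0f} million")
print(f"Brand Value: ${brand_value:,.0f} million")
```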

Brand Valuation Model (Interbrand)

Interbrand, a division of Omnicom, is a brand consultancy headquartered in New York City.

Interbrand publishes the Best Global Brands report on an annual basis. The report identifies the world’s 100 most valuable brands. Interbrand’s methodology is also the first of its kind to be ISO certified. To develop the report, Interbrand examines three key aspects that contribute to a brand’s value:

- The financial performance of the branded products or service.

- The role the brand plays in influencing consumer choice.

- The strength the brand has to command a premium price, or secure earnings for the company.

Interbrand has refined its brand valuation into a five-step Economic Value Added methodology. Through a similar methodology, Interbrand releases an annual ranking of the best global brands called “Best Global Brands,” which evaluates each brand’s financial performance, role, and strength. To qualify, brands must have a presence on at least three major continents, and must have broad geographic coverage in growing and emerging markets. Thirty percent of revenues must come from outside the home country, and no more than fifty percent of revenues should come from any one continent. Economic profit must be expected to be positive over the longer term, delivering a return above the brand’s cost of capital. The brand must have a public profile and awareness across the major economies of the world.

Three key components to brand valuation methodology:

- Financial Analysis: This measures the overall financial return to an organisation’s investors, or its “economic profit.” Economic profit is the after-tax operating profit of the brand minus a charge for the capital used to generate the brand’s revenue and margins.
- Role of Brand: This measures the portion of the purchase decision attributable to the brand, as opposed to other factors (for example, purchase drivers like price, convenience, or product features). The Role of Brand Index (RBI) quantifies this as a percentage. RBI determinations for Best Global Brands derive, depending on the brand, from one of three methods: primary research, a review of historical roles of brands for companies in that industry, or expert panel assessment.
- Brand Strength: This measures the ability of the brand to create loyalty and, therefore, sustainable demand and profit into the future. Brand Strength analysis is based on an evaluation across several factors that Interbrand believes make a strong brand (factors are weighted and add up to 100). These factors include leadership (25), stability (15), market (10), geographic spread (25), trend (10), support (10) and protection (5). Performance on these factors is judged relative to other brands in the industry and relative to other world-class brands. The Brand Strength analysis delivers a snapshot of the strengths and weaknesses of the brand and is used to generate a road map of activity to enhance the strength and value of the brand in the future.
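Two of these components lend themselves to a simple numerical sketch. The Python below computes economic profit and a Brand Strength score using the factor weights listed above. It is an illustration rather than Interbrand’s proprietary model: we assume the capital charge equals capital employed times the cost of capital, and we assume each factor is rated as a fraction (0 to 1) of its maximum points. All input values are hypothetical.

```python
# Maximum points per factor, as listed in the text (they sum to 100)
BRAND_STRENGTH_WEIGHTS = {
    "leadership": 25,
    "stability": 15,
    "market": 10,
    "geographic spread": 25,
    "trend": 10,
    "support": 10,
    "protection": 5,
}


def economic_profit(after_tax_operating_profit, capital_employed, cost_of_capital):
    """After-tax operating profit minus a capital charge.

    The capital charge formula here is our assumption.
    """
    return after_tax_operating_profit - capital_employed * cost_of_capital


def brand_strength_score(ratings):
    """Score out of 100, given each factor's rating as a fraction of its maximum."""
    return sum(points * ratings[factor]
               for factor, points in BRAND_STRENGTH_WEIGHTS.items())


# Hypothetical brand: strong leadership, weaker geographic spread
ratings = {
    "leadership": 0.8,
    "stability": 0.6,
    "market": 0.5,
    "geographic spread": 0.4,
    "trend": 0.7,
    "support": 0.9,
    "protection": 1.0,
}
profit = economic_profit(after_tax_operating_profit=120.0,
                         capital_employed=500.0, cost_of_capital=0.10)
score = brand_strength_score(ratings)
print(f"Economic profit: {profit:.1f}")
print(f"Brand Strength score: {score:.1f} out of 100")
```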

Conjoint analysis

Marketers use conjoint analysis to measure consumers’ preference for various attributes of a product, service, or provider, such as features, design, price or location (see the next section). By including brand and price as two of the attributes under consideration, they can gain insight into consumers’ valuation of a brand—that is, their willingness to pay a premium for it.

Data sources, complications, and cautions

The methods described previously represent experts’ best attempts to place a value on a complex and intangible entity. Almost all of the metrics in this book are relevant to brand equity along one dimension or another.

Related metrics and concepts

Brand strategy is a broad field and includes several concepts that at first may appear to be measurable. Strictly speaking, however, brand strategy is not a metric.

Brand identity

This is the marketer’s vision of an ideal brand—the company’s goal for perception of that brand by its target market. All physical, emotional, visual, and verbal messages should be directed toward realisation of that goal, including name, logo, signature, and other marketing communications. Brand identity, however, is not stated in quantifiable terms.

Brand position and brand image

These refer to consumers’ actual perceptions of a brand, often relative to its competition. Brand position is frequently measured along product dimensions that can be mapped in multi-dimensional space. If measured consistently over time, these dimensions may be viewed as metrics: as coordinates on a perceptual map. (See Chapter 2 for a discussion of attitude, usage measures, and the hierarchy of effects.)

Product differentiation

This is one of the most frequently used terms in marketing, but it has no universally agreed-upon definition. More than mere “difference,” it generally refers to distinctive attributes of a product that generate increased customer preference or demand. These are often difficult to view quantitatively because they may be actual or perceived, as well as non-monotonic. In other words, although certain attributes such as price can be quantified and follow a linear preference model (that is, either more or less is always better), others can’t be analysed numerically or may fall into a sweet spot, outside of which neither more nor less would be preferred (the spiciness of a food, for example). For all these reasons, Product Differentiation is hard to analyse as a metric and has been criticised as a “meaningless term.”

Additional citation

Simon, Julian. (1969). “‘Product Differentiation’: A Meaningless Term and an Impossible Concept”, Ethics, Vol. 79, No. 2 (Jan.), pp. 131–138. Published by The University of Chicago Press.

Conjoint utilities and consumer preference

Conjoint utilities measure consumer preference for an attribute level and then—by combining the valuations of multiple attributes—measure preference for an overall choice. Measures are generally made on an individual basis, although this analysis can also be performed on a segment level. In the frozen pizza market, for example, conjoint utilities can be used to determine how much a customer values superior taste (one attribute) versus paying extra for premium cheese (a second attribute).

Conjoint utilities can also play a role in analysing compensatory and non-compensatory decisions. Weaknesses in compensatory factors can be made up in other attributes. A weakness in a non-compensatory factor cannot be overcome by other strengths.

Conjoint analysis can be useful in determining what customers really want and—when price is included as an attribute—what they’ll pay for it. In launching new products, marketers find such analyses useful for achieving a deeper understanding of the values that customers place on various product attributes. Throughout product management, conjoint utilities can help marketers focus their efforts on the attributes of greatest importance to customers.

Purpose: to understand what customers want.

Conjoint analysis is a method used to estimate customers’ preferences, based on how customers weight the attributes on which a choice is made. The premise of conjoint analysis is that a customer’s preference between product options can be broken into a set of attributes that are weighted to form an overall evaluation. Rather than asking people directly what they want and why, in conjoint analysis, marketers ask people about their overall preferences for a set of choices described on their attributes and then decompose those into the component dimensions and weights underlying them. A model can be developed to compare sets of attributes to determine which represents the most appealing bundle of attributes for customers.

Conjoint analysis is a technique commonly used to assess the attributes of a product or service that are important to targeted customers and to assist in the following:

- Product design

- Advertising copy

- Pricing

- Segmentation

- Forecasting

Construction

Conjoint analysis: A method of estimating customers’ preferences for individual attributes by assessing the overall preferences customers assign to alternative choices.

An individual’s preference can be expressed as the total of his or her baseline preferences for any choice, plus the partworths (relative values) for that choice expressed by the individual.

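In linear form, this can be represented by the following formula:

Preference for a choice = Baseline preference + Partworth of its level on Attribute 1 + Partworth of its level on Attribute 2 + … + Partworth of its level on Attribute n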

Example Two attributes of a cell phone, its price and its size, are ranked through conjoint analysis, yielding the results shown in Table 4.5.

This could be read as follows:

Table 4.5 Conjoint analysis: price and size of a cell phone

| Attribute | Level | Partworth |
| --- | --- | --- |
| Price | $100 | 0.9 |
| Price | $200 | 0.1 |
| Price | $300 | −1 |
| Size | Small | 0.7 |
| Size | Medium | −0.1 |
| Size | Large | −0.6 |

A small phone for $100 has a utility to customers of 1.6 (derived by adding its partworths, 0.9 + 0.7). This is the highest result observed in this exercise. A small but expensive ($300) phone is rated as −0.3 (that is, −1 + 0.7). The desirability of this small phone is offset by its price. A large, expensive phone is least desirable to customers, generating a utility of −1.6 (that is, −1 + −0.6).

On this basis, we determine that the customer whose views are analysed here would prefer a medium-size phone at $200 (utility = 0) to a small phone at $300 (utility = −0.3). Such information would be instrumental to decisions concerning the trade-offs between product design and price.

This analysis also demonstrates that, within the ranges examined, price is more important than size from the perspective of this consumer. Price generates a range of effects from 0.9 to −1 (that is, a total spread of 1.9), while the effects generated by the most and least desirable sizes span a range only from 0.7 to −0.6 (total spread = 1.3).
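The arithmetic in this example can be sketched in a few lines of Python. The partworths are those of Table 4.5; the function names are illustrative only, and expressing each attribute's spread as a share of the total spread is a common convention assumed here rather than something the text prescribes.

```python
# Partworths for one respondent, taken from Table 4.5.
partworths = {
    "price": {"$100": 0.9, "$200": 0.1, "$300": -1.0},
    "size": {"Small": 0.7, "Medium": -0.1, "Large": -0.6},
}


def utility(profile):
    """Additive utility: sum the partworth of the chosen level of each attribute."""
    return sum(partworths[attr][level] for attr, level in profile.items())


def spread(attr):
    """Gap between the most and least preferred levels of one attribute."""
    values = partworths[attr].values()
    return max(values) - min(values)


small_cheap = utility({"price": "$100", "size": "Small"})    # 0.9 + 0.7
medium_mid = utility({"price": "$200", "size": "Medium"})    # 0.1 + (-0.1)
small_costly = utility({"price": "$300", "size": "Small"})   # -1.0 + 0.7

# Assumed convention: importance = attribute spread / sum of all spreads.
spreads = {attr: spread(attr) for attr in partworths}
total_spread = sum(spreads.values())
importance = {attr: s / total_spread for attr, s in spreads.items()}

for attr in partworths:
    print(f"{attr}: spread {spreads[attr]:.1f}, importance {importance[attr]:.0%}")
```

Under these figures price accounts for the larger share of the total spread, which matches the conclusion that price matters more than size to this consumer within the ranges examined.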

Compensatory versus non-compensatory consumer decisions

A compensatory decision process is one in which a customer evaluates choices with the perspective that strengths along one or more dimensions can compensate for weaknesses along others.

In a non-compensatory decision process, by contrast, if certain attributes of a product are weak, no compensation is possible, even if the product possesses strengths along other dimensions. In the previous cell phone example, for instance, some customers may feel that if a phone were greater than a certain size, no price would make it attractive.

In another example, most people choose a grocery store on the basis of proximity. Any store within a certain radius of home or work may be considered. Beyond that distance, however, all stores will be excluded from consideration, and there is nothing a store can do to overcome this. Even if it posts extraordinarily low prices, offers a stunningly wide assortment, creates great displays and stocks the freshest foods, for example, a store will not entice consumers to travel 400 miles to buy their groceries.

Although this example is extreme to the point of absurdity, it illustrates an important point: When consumers make a choice on a non-compensatory basis, marketers need to define the dimensions along which certain attributes must be delivered, simply to qualify for consideration of their overall offering.

One form of non-compensatory decision-making is elimination-by-aspect. In this approach, consumers look at an entire set of choices and then eliminate those that do not meet their expectations in the order of the importance of the attributes. In the selection of a grocery store, for example, this process might run as follows:

- Which stores are within 5 miles of my home?

- Which ones are open after 8 p.m.?

- Which carry the spicy mustard that I like?

- Which carry fresh flowers?

The process continues until only one choice is left.
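The screening sequence above can be sketched as a series of filters applied in order of importance. Everything in the sketch is hypothetical: the five stores, their attribute values and the rule that an aspect is skipped when no remaining store satisfies it are assumptions made for illustration.

```python
# Hypothetical stores; names and attribute values are invented for illustration.
stores = [
    {"name": "A", "miles": 2.0, "closes_hour": 22, "spicy_mustard": True, "fresh_flowers": True},
    {"name": "B", "miles": 4.5, "closes_hour": 19, "spicy_mustard": True, "fresh_flowers": True},
    {"name": "C", "miles": 7.0, "closes_hour": 23, "spicy_mustard": True, "fresh_flowers": True},
    {"name": "D", "miles": 3.0, "closes_hour": 21, "spicy_mustard": False, "fresh_flowers": True},
    {"name": "E", "miles": 1.0, "closes_hour": 22, "spicy_mustard": True, "fresh_flowers": False},
]

# Aspects listed in order of importance; each is a pass/fail test.
aspects = [
    ("within 5 miles", lambda s: s["miles"] <= 5),
    ("open after 8 p.m.", lambda s: s["closes_hour"] > 20),
    ("carries spicy mustard", lambda s: s["spicy_mustard"]),
    ("carries fresh flowers", lambda s: s["fresh_flowers"]),
]


def eliminate_by_aspects(options, ordered_aspects):
    """Drop options that fail each aspect in turn until one choice is left."""
    remaining = list(options)
    for _label, passes in ordered_aspects:
        if len(remaining) <= 1:
            break
        survivors = [option for option in remaining if passes(option)]
        # Assumption: if nothing passes, the aspect is skipped rather than
        # leaving the shopper with no store at all.
        if survivors:
            remaining = survivors
    return remaining


choice = eliminate_by_aspects(stores, aspects)
print([store["name"] for store in choice])
```

Note that no attribute is ever traded against another: store C is dropped at the first step regardless of its late opening hours.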

In the ideal situation, in analysing customers’ decision processes, marketers would have access to information on an individual level, revealing:

- Whether the decision for each customer is compensatory or not

- The priority order of the attributes

- The “cut-off” levels for each attribute

- The relative importance weight of each attribute if the decision follows a compensatory process

More frequently, however, marketers have access only to past behaviour, helping them make inferences regarding these items.

In the absence of detailed, individual information for customers throughout a market, conjoint analysis provides a means to gain insight into the decision-making processes of a sampling of customers. In conjoint analysis, we generally assume a compensatory process. That is, we assume utilities are additive. Under this assumption, if a choice is weak along one dimension (for example, if a store does not carry spicy mustard), it can compensate for this with strength along another (for example, it does carry fresh-cut flowers) at least in part. Conjoint analyses can approximate a non-compensatory model by assigning non-linear weighting to an attribute across certain levels of its value. For example, the weightings for distance to a grocery store might run as follows:

| Distance to store | Weighting |
| --- | --- |
| Within 1 mile | 0.9 |
| 1–5 miles away | 0.8 |
| 5–10 miles away | −0.8 |
| More than 10 miles away | −0.9 |

In this example, stores outside a 5-mile radius cannot practically make up the loss of utility they incur as a result of distance. Distance becomes, in effect, a non-compensatory dimension.

By studying customers’ decision-making processes, marketers gain insight into the attributes needed to meet consumer expectations. They learn, for example, whether certain attributes are compensatory or non-compensatory. A strong understanding of customers’ valuation of different attributes also enables marketers to tailor products and allocate resources effectively.

Several potential complications arise in considering compensatory versus non-compensatory decisions. Customers often don’t know whether an attribute is compensatory or not, and they may not be readily able to explain their decisions. Therefore, it is often necessary either to infer a customer’s decision-making process or to determine that process through an evaluation of choices, rather than a description of the process.

It is possible, however, to uncover non-compensatory elements through conjoint analysis. Any attribute for which the valuation spread is so high that it cannot practically be made up by other features is, in effect, a non-compensatory attribute.
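One rough way to apply this test is to compare an attribute's spread with the combined spread of all other attributes. The sketch below uses the distance weightings from the grocery store example; the price and assortment partworths are hypothetical, and the rule "spread greater than the sum of all other spreads" is a simple heuristic assumed here, not a formal definition.

```python
partworths = {
    # Distance weightings from the grocery store example in the text.
    "distance": {
        "within 1 mile": 0.9,
        "1-5 miles": 0.8,
        "5-10 miles": -0.8,
        "over 10 miles": -0.9,
    },
    # Hypothetical partworths, invented for illustration.
    "price": {"low": 0.3, "high": -0.3},
    "assortment": {"wide": 0.2, "narrow": -0.2},
}


def spread(attribute):
    """Gap between the best and worst levels of an attribute."""
    values = partworths[attribute].values()
    return max(values) - min(values)


def effectively_non_compensatory(attribute):
    """Heuristic: can all other attributes together offset this one's spread?"""
    others = sum(spread(a) for a in partworths if a != attribute)
    return spread(attribute) > others


# Compare the weakest store inside 5 miles with the strongest store outside it.
worst_near_store = (
    partworths["distance"]["1-5 miles"]
    + min(partworths["price"].values())
    + min(partworths["assortment"].values())
)
best_far_store = (
    partworths["distance"]["5-10 miles"]
    + max(partworths["price"].values())
    + max(partworths["assortment"].values())
)

for attribute in partworths:
    print(attribute, effectively_non_compensatory(attribute))
print(worst_near_store > best_far_store)
```

With these assumed numbers, even the least attractive store within 5 miles outscores the most attractive store beyond that radius, so distance behaves as a cut-off although the model itself remains additive.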

Example Among grocery stores, Juan prefers the Acme market because it’s close to his home, despite the fact that Acme’s prices are generally higher than those at the local Shoprite store. A third store, Vernon’s, is located in Juan’s apartment complex. But Juan avoids it because Vernon’s doesn’t carry his favourite soda.

From this information, we know that Juan’s shopping choice is influenced by at least three factors: price, distance from his home, and whether a store carries his favourite soda. In Juan’s decision process, price and distance seem to be compensating factors. He trades price for distance. Whether the soda is stocked seems to be a non-compensatory factor. If a store doesn’t carry Juan’s favourite soda, it will not win his business, regardless of how well it scores on price and location.

Data sources, complications and cautions

Prior to conducting a conjoint study, it is necessary to identify the attributes of importance to a customer. Focus groups are commonly used for this purpose. After attributes and levels are determined, a typical approach to conjoint analysis is to use a fractional factorial orthogonal design, which is a partial sample of all possible combinations of attributes. This reduces the total number of choice evaluations required of the respondent. With an orthogonal design, the attributes remain independent of one another, and the test doesn’t weigh one attribute disproportionately to another.
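To make the idea of a fractional factorial orthogonal design concrete, the sketch below assumes a hypothetical study with four attributes at three levels each. The full factorial has 81 profiles; a standard nine-profile orthogonal array can be built with modular arithmetic. The attribute count, level coding and helper name are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations, product

LEVELS = (0, 1, 2)   # three levels per attribute, coded 0 to 2
N_ATTRIBUTES = 4     # hypothetical study with four attributes

# Every possible combination of levels: 3 ** 4 = 81 profiles.
full_factorial = list(product(LEVELS, repeat=N_ATTRIBUTES))

# Nine-profile fraction: two free columns, two derived from them modulo 3.
fraction = [
    (a, b, (a + b) % 3, (a + 2 * b) % 3)
    for a, b in product(LEVELS, repeat=2)
]


def is_orthogonal(design, n_levels=3):
    """True if every pair of columns shows each level pair equally often."""
    n_columns = len(design[0])
    for i, j in combinations(range(n_columns), 2):
        pair_counts = Counter((row[i], row[j]) for row in design)
        every_pair_present = len(pair_counts) == n_levels ** 2
        equally_often = len(set(pair_counts.values())) == 1
        if not (every_pair_present and equally_often):
            return False
    return True


print(len(full_factorial), len(fraction), is_orthogonal(fraction))
```

The respondent evaluates 9 profiles instead of 81, yet each level of one attribute appears equally often with each level of every other attribute, which is what keeps the attributes independent of one another in the analysis.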

There are multiple ways to gather data, but a straightforward approach would be to present respondents with choices and to ask them to rate those choices according to their preferences. These preferences then become the dependent variable in a regression, in which attribute levels serve as the independent variables, as in the previous equation. Conjoint utilities constitute the weights determined to best capture the preference ratings provided by the respondent.
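As a minimal sketch of this estimation step, assume one respondent rates all nine price and size combinations of the cell phone example. The ratings below are hypothetical, constructed to be consistent with Table 4.5. For a balanced full-factorial design with partworths constrained to sum to zero within each attribute, the least-squares estimates reduce to simple averages, so no regression library is needed here. Fractional designs and real, noisy ratings would normally call for a regression routine instead.

```python
from statistics import mean

# Hypothetical ratings by one respondent for every (price, size) profile.
ratings = {
    ("$100", "Small"): 6.6, ("$100", "Medium"): 5.8, ("$100", "Large"): 5.3,
    ("$200", "Small"): 5.8, ("$200", "Medium"): 5.0, ("$200", "Large"): 4.5,
    ("$300", "Small"): 4.7, ("$300", "Medium"): 3.9, ("$300", "Large"): 3.4,
}
attributes = ("price", "size")  # position of each attribute in a profile

# Baseline preference: the respondent's average rating across all profiles.
baseline = mean(ratings.values())


def estimate_partworths(ratings, attributes, baseline):
    """Partworth of a level = mean rating at that level minus the baseline.

    This equals the least-squares main effect only because the design is a
    balanced full factorial (every level pairs with every other level once).
    """
    estimates = {}
    for position, attribute in enumerate(attributes):
        levels = sorted({profile[position] for profile in ratings})
        estimates[attribute] = {
            level: mean(
                rating
                for profile, rating in ratings.items()
                if profile[position] == level
            ) - baseline
            for level in levels
        }
    return estimates


estimated = estimate_partworths(ratings, attributes, baseline)
for attribute, levels in estimated.items():
    print(attribute, {level: round(value, 2) for level, value in levels.items()})
```

The recovered values match the partworths in Table 4.5, and the baseline corresponds to the respondent's average rating.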

Often, certain attributes work in tandem to influence customer choice. For example, a fast and sleek sports car may provide greater value to a customer than would be suggested by the sum of the fast and sleek attributes. Such relationships between attributes are not captured by a simple conjoint model, unless one accounts for interactions.

Ideally, conjoint analysis is performed on an individual level because attributes can be weighted differently across individuals. Marketers can also create a more balanced view by performing the analysis across a sample of individuals. It is appropriate to perform the analysis within consumer segments that have similar weights. Conjoint analysis can be viewed as a snapshot in time of a customer’s desires. It will not necessarily translate indefinitely into the future.

It is vital to use the correct attributes in any conjoint study. People can only tell you their preferences within the parameters you set. If the correct attributes are not included in a study, it may still be possible to determine the relative importance of those attributes that are included, and it may technically be possible to form segments on the basis of the resulting data, but the analytic results may not be valid for forming useful segments. For example, in a conjoint analysis of consumer preferences regarding colours and styles of cars, one may correctly group customers as to their feelings about these attributes. But if consumers really care most about engine size, then those segmentations will be of little value.

Segmentation using conjoint utilities

Understanding customers’ desires is a vital goal of marketing. Segmenting, or clustering similar customers into groups, can help managers recognise useful patterns and identify attractive subsets within a larger market. With that understanding, managers can select target markets, develop appropriate offerings for each, determine the most effective ways to reach the targeted segments and allocate resources accordingly. Conjoint analysis can be highly useful in this exercise.

Purpose: to identify segments based on conjoint utilities.

As described in the previous section, conjoint analysis is used to determine customers’ preferences on the basis of the attribute weightings that they reveal in their decision-making processes. These weights, or utilities, are generally evaluated on an individual level.

Segmentation entails the grouping of customers who demonstrate similar patterns of preference and weighting with regard to certain product attributes, distinct from the patterns exhibited by other groups. Using segmentation, a company can decide which group(s) to target and can determine an approach to appeal to the segment’s members. After segments have been formed, a company can set strategy based on their attractiveness (size, growth, purchase rate, diversity) and on its own capability to serve these segments, relative to competitors.

Construction

To complete a segmentation based on conjoint utilities, one must first determine utility scores at an individual customer level. Next, one must cluster these customers into segments of like-minded individuals. This is generally done through a methodology known as cluster analysis.

Cluster analysis: A technique that calculates the distances between customers and forms groups by minimising the differences within each group and maximising the differences between groups.

Cluster analysis operates by calculating a “distance” (a sum of squares) between individuals and, in a hierarchical fashion, starts pairing those individuals together. The process of pairing minimises the “distance” within a group and creates a manageable number of segments within a larger population.
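The pairing process can be sketched with a small agglomerative routine. The six customers and their utilities are hypothetical, and the choice of average linkage (the mean squared distance between the members of two groups) is one of several possible rules, assumed here for simplicity.

```python
# Hypothetical individual-level results: (price spread, size spread) per customer.
customers = {
    "Ana": (1.9, 0.4),
    "Ben": (1.8, 0.5),
    "Cho": (0.3, 1.7),
    "Dev": (0.4, 1.9),
    "Eve": (1.7, 0.3),
    "Fay": (0.5, 1.8),
}


def squared_distance(u, v):
    """Sum of squared differences between two customers' utilities."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def linkage(group_a, group_b):
    """Average squared distance between the members of two groups."""
    total = sum(
        squared_distance(customers[a], customers[b])
        for a in group_a
        for b in group_b
    )
    return total / (len(group_a) * len(group_b))


def cluster(names, n_segments):
    """Start with one group per customer; merge the closest pair repeatedly."""
    groups = [[name] for name in names]
    while len(groups) > n_segments:
        i, j = min(
            ((i, j) for i in range(len(groups)) for j in range(i + 1, len(groups))),
            key=lambda pair: linkage(groups[pair[0]], groups[pair[1]]),
        )
        groups[i] = groups[i] + groups[j]
        del groups[j]  # j > i, so index i is unaffected
    return groups


segments = cluster(list(customers), n_segments=2)
print(segments)
```

With these assumed utilities the routine separates a price-sensitive group (Ana, Ben, Eve) from a size-sensitive group (Cho, Dev, Fay). In practice the number of segments is a judgement call rather than a given.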

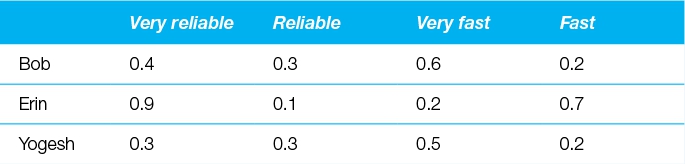

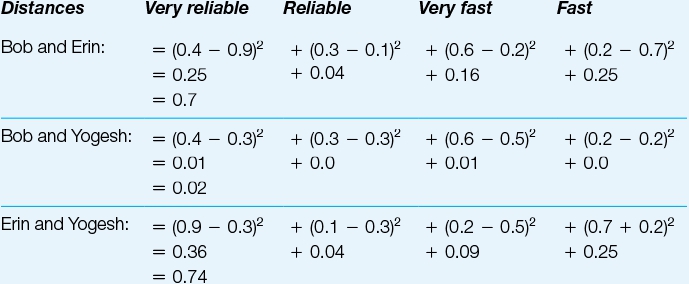

Example