Chapter 10. Red Rover

Alan Brown, Red Rover

The success of Internet-based distributed computing will certainly cause headaches for censors. Peer-to-peer technology can boast populations in the tens of millions, and the home user now has access to the world’s most advanced cryptography. It’s wonderful to see those who turned technology against free expression for so long now scrambling to catch up with those setting information free. But it’s far too early to celebrate: What makes many of these systems so attractive in countries where the Internet is not heavily regulated is precisely what makes them the wrong tool for much of the world.

Red Rover was invented in recognition of the irony that the very people who would seem to benefit the most from these systems are in fact the least likely to be able to use them. A partial list of the reasons this is so includes the following:

- The delivery of the client itself can be blocked

The perfect stealth device does no good if you can’t obtain it. Yet, in exactly those countries where user secrecy would be the most valuable, access to the client application is the most guarded. Once the state recognized the potential of the application, it would not hesitate to block web sites and FTP sites from which the application could be downloaded and, based on the application’s various compressed and encrypted sizes, filter email that might be carrying it in.

- Possession of the client is easily criminalized

If a country is serious enough about curbing outside influence to block web sites, it will have no hesitation about criminalizing possession of any application that could challenge this control. This would fall under the ubiquitous legal category “threat to state security.” It’s a wonderful advance for technology that some peer-to-peer applications can pass messages even the CIA can’t read. But in some countries, being caught with a clever peer-to-peer application may mean you never see your family again. This is no exaggeration: in Burma, the possession of a modem—even a broken one—could land you in court.

- Information trust requires knowing the origin of the information

Most peer-to-peer systems permit the dissemination of poisoned information as easily as reliable information. Some systems succeed in controlling disreputable transmissions. On most, though, there’s an information free-for-all. With the difference between freedom and jail hinging on the reliability of information you receive, would you really trust a Wrapster file that could have originated with any one of 20 million peer clients?

- Non-Web encryption is more suspicious

Encrypted information can be recognized because of its unnatural entropy values (that is, the frequencies with which characters appear are not what is normally expected in the user’s language). It is generally tolerated when it comes from web sites, probably because no country is eager to hinder online financial transactions. But especially when more and more states are charging ISPs with legal responsibility for their customers’ online activities, encrypted code from a non-Web source will attract suspicion. Encryption may keep someone from reading what’s passing through a server, but it never stops him from logging it and confronting the end user with its existence. In a country with relative Internet freedom, this isn’t much of a problem. In one without it, the cracking of your key is not the only thing to fear.

I emphasize these concerns because current peer-to-peer systems show marked signs of having been created in relatively free countries. They are not designed with particular sensitivity to users in countries where stealth activities are easily turned into charges of subverting the state. States where privacy is the most threatened are the very states where, for your own safety, you must not take on the government: if they want to block a web site, you need to let them do so.

Many extant peer-to-peer approaches offer other ways to get at a site’s information (web proxies, for example), but the information they provide tends to be untrustworthy and the method for obtaining it difficult or dangerous.

Red Rover offers the benefits of peer-to-peer technology while providing a clientless alternative to those taking the risk behind the firewall. The Red Rover anti-censorship strategy does not require the information seeker to download any software, place any incriminating programs on her hard drive, or create any two-way electronic trails with information providers. The beneficiary of Red Rover needs only to know how to count and how to operate a web browser to access a web-based email account.

Red Rover is technologically very “open” and will hopefully succeed at traversing censorship barriers not by electronic stealth but by simple brute force. The Red Rover distributed clients create a population of contraband providers which is far too large, changing, and growing for any nation’s web-blocking software to keep up with.

Architecture

Red Rover is designed to keep a channel of information open to those behind censorship walls by exploiting some now mundane features of the Internet, such as dynamic IP addresses and the unbalanced ratio of Red Rover clients to censors. Operating out in the open at a low-tech level helps keep Red Rover’s beneficiaries from appearing suspicious. In fact, Red Rover makes use of aspects of the current Internet that other projects consider liabilities, such as the impermanent connections of ordinary Internet users and the widespread use of free, web-based email services. The beneficiaries, those behind the censorship barrier (hereafter, “subscribers”), never even need to see a Red Rover client application: users of the client are in other countries.

The following description of the Red Rover strategy will be functional (i.e., top-down) because that is the best way to see the rationale behind decisions that make Red Rover unique among peer-to-peer projects. It will be clear that the Red Rover strategy openly and necessarily embraces human protocols, rather than performing all of its functions at the algorithmic level. The description is simplified in the interest of saving space.

The Red Rover application is not a proxy server, not a site mirror, and not a gate allowing someone to surf the Web through the client. The key elements of the system are hosts on ordinary dial-up connections run by Internet users who volunteer to download data that the Red Rover administrator wants to provide. Lists of these hosts and the content they offer, changing rapidly as the hosts come and go over the course of a day, are distributed by the Red Rover hub to the subscribers. Distribution is done in a way that minimizes the risk of attracting attention.

It should be clear, too, that Red Rover is a strategy, not just the software application that bears the name. Again, those who benefit the most from Red Rover will never see the program. The strategy is tripartite and can be summarized as follows. (The following sentence is deliberately awkward, for reasons explained in the next section.)

3 simple layers: the hub, the client, & sub scriber.

The hub

The hub is the server from which all information originates. It publishes two types of information.

First, the hub creates packages of HTML files containing the information the hub administrator wants to pass through the censorship barrier. These packages will go to the clients at a particular time. Second, the hub creates a plain-text email notification that explains what material is available at a particular time and which clients (listing their IP addresses) have the material. The information may be encoded in a nontraditional way that avoids attracting attention from software sniffers, as described later in this chapter.

The accuracy of these text messages is time-limited, because clients go on- and offline. A typical message will list perhaps 10 IP addresses of active clients, selected randomly from the hub’s list of active clients for a particular time.

The hub distributes the HTML packages to the clients, which can be done in a straightforward manner. The next step is to get the text messages to the subscribers, which is much trickier because it has to be done in such a way as to avoid drawing the attention of authorities that might be checking all traffic.

The hub would never send a message directly to any subscriber, because the hub’s IP address and domain name are presumed to be known to authorities engaged in censorship. Instead, the hub sends text messages to clients and asks them to forward them to the subscribers. Furthermore, the client that forwards this email would never be listed in its own outgoing email as a source for an HTML package. Instead, each client sends mail listing the IP addresses of other clients. The reason for this is that if a client sent out its own IP address and the subscriber were then to visit it, the authorities could detect evidence of two-way communication. It would be much safer if the notification letter and the subscriber’s decision to surf took different routes.
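The selection rule described above (a random handful of active clients, never including the client that forwards the message) can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `pick_notification_ips` and its parameters are hypothetical, and the addresses come from a range reserved for documentation.

```python
import random


def pick_notification_ips(active_ips, forwarder_ip, count=10, rng=random):
    """Pick up to `count` active client IPs at random for one notification.

    The forwarding client's own address is always excluded, so the email
    and the subscriber's later web visit take different routes.
    """
    candidates = [ip for ip in active_ips if ip != forwarder_ip]
    # Never ask for more addresses than are available.
    return rng.sample(candidates, min(count, len(candidates)))


# Hypothetical pool of 30 active clients (documentation-only addresses).
active = [f"192.0.2.{i}" for i in range(1, 31)]
listed = pick_notification_ips(active, forwarder_ip="192.0.2.7")
```

Here `listed` holds 10 distinct addresses, none of which is the forwarder's.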

The IP addresses on these lists are “encrypted” at the hub in some nonstandard manner that doesn’t use hashing algorithms, so that they don’t set off either entropy or pattern detectors. For example, that ungrammatical “3 simple layers” sentence at the end of the last section would reveal the IP address 166.33.36.137 to anyone who knew the convention for decoding it. The convention is that each digit in an IP address is represented by the number of letters in a word, and octets are separated by punctuation marks. Thus, since there is 1 letter in “3,” 6 in “simple,” and 6 in “layers,” the phrase “3 simple layers” yields the octet 166 to someone who understands the convention.
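As a minimal sketch of the decoding convention just described, the function below counts the characters in each word and treats punctuation as the octet separator. The function name `decode_sentence` and the exact set of separator marks are assumptions made for illustration. As in the example sentence, a lone digit or symbol such as “3” or “&” counts as a one-character word. The chapter does not say how a 0 digit would be written, so the sketch does not handle that case.

```python
import re

# Assumption: these marks separate octets; anything else is part of a word.
SEPARATORS = ".,:;!?"


def decode_sentence(sentence):
    """Turn a sentence into a dotted IP address using word lengths as digits."""
    octets = []
    for chunk in re.split(f"[{re.escape(SEPARATORS)}]", sentence):
        words = chunk.split()
        if not words:
            continue  # skip the empty piece after the final punctuation mark
        # Each word contributes one digit: its character count.
        octets.append("".join(str(len(word)) for word in words))
    return ".".join(octets)


print(decode_sentence("3 simple layers: the hub, the client, & sub scriber."))
# prints 166.33.36.137
```

The odd spacing in “sub scriber” is what produces the digits 3 and 7 in the last octet.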

Sending a list of 10 unencoded IP addresses to someone could easily be detected by a script. But by current standards, high-speed extraction of any email containing a sentence with bad grammar would result in an overwhelming flood of false positives. The “encryption” method, then, is invisible in its overtness. Practical detection would require a great expenditure of human effort, and for this reason, this method should succeed by its pure brute force. The IP addresses will get through.

The hub also keeps track of the following information about the subscriber:

- Her web-based email address, allowing her the option of proxy access to email and frequent address changes without overhead to the hub.

- The dates and times that she wishes to receive information (which she could revise during each Red Rover client visit, perhaps via SSL, in order to avoid identifiable patterns of online behavior).

- Her secret key, in case she prefers to take her chances with encrypted list notifications (an option Red Rover would offer).

The clients

The clients are free software applications that are run on computers around the world by ordinary, dial-up Internet users who volunteer to devote a bit of their system usage to Red Rover. Clients run in the background and act as both personal web servers and email notification relays. When the user on the client system logs on, the client sends its IP address to the hub, which registers it as active. For most dial-up accounts, this means that, statistically, the IP will differ from the one the client had for its last session. This simple fact plays an important role in client longevity, as discussed below.

Once the client is registered, the hub sends it two things. The first is an HTML package, which the client automatically posts for anyone accessing the IP address through a browser. (URL encryption would be a nice feature to offer here, but not an essential one.)

The second message from the hub is an email containing the IP list, plus some filler to make sure the size of the message is random. This email will be forwarded automatically from the receiving Red Rover client to a subscriber’s web-based email account. These emails will be generated in random sizes as an added frustration to automated censors which hunt for packet sizes.
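One way to picture the random-size filler is shown below. This is a sketch under assumptions, not Red Rover’s actual method: the word list, the upper bound on filler length, and the name `pad_notification` are all hypothetical. Ordinary words are used for the filler rather than random bytes, on the assumption that filler should not stand out to entropy detectors any more than the encoded list does.

```python
import random

# Hypothetical pool of innocuous filler words.
FILLER_WORDS = ["weather", "family", "dinner", "school",
                "garden", "holiday", "music", "friend"]


def pad_notification(body, max_filler_words=200, rng=random):
    """Append a random amount of plain-word filler so message sizes vary."""
    word_count = rng.randint(0, max_filler_words)
    filler = " ".join(rng.choice(FILLER_WORDS) for _ in range(word_count))
    return body + "\n\n" + filler if filler else body


message = pad_notification("3 simple layers: the hub, the client, & sub scriber.")
```

Each call produces a message that starts with the same notification text but has an unpredictable total length.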

The email list, with its unhashed encryption of the IP addresses, is itself fully encrypted at the hub and decrypted by the client, using a client-specific key, just before the client mails it to the subscriber. This way, the client user doesn’t know anything about who she’s sending mail to. The client will also forward the email with a spoofed originating IP address so that if the email is undelivered, it will not be returned to the sender. If it did return, it would be possible for a malicious user of the client (censors and police, for example) to determine the subscriber’s email address simply by reading it off of the route-tracing information revealed by any of a variety of publicly available products. Together with the use of web-based accounts for subscriber email, rather than ISP accounts, these precautions help protect subscriber privacy.

The subscribers

The subscriber’s role requires a good deal of caution, and anyone taking it on must understand how to make the safest use of Red Rover as well as the legal consequences of getting caught. The subscriber’s actions should be assumed, after all, to be entirely logged by the state or its agents from start to finish.

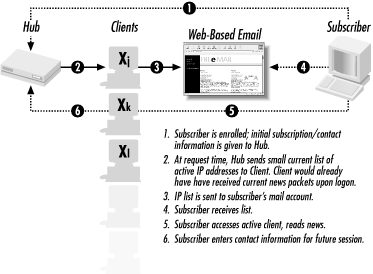

The first task of the subscriber is to use a side channel (a friend visiting outside the country, for instance, or a phone call or postal letter) to give the hub the information needed to maintain contact. She also needs to open a free web-based email account in a country outside the area being censored. Then, after she puts in place any other optional precautions she feels will help keep her under the authorities’ digital radar (and perhaps real-life radar), she can receive messages and download controversial material. Figure 10.1 shows how information travels between the hub, clients, and subscribers.

In particular, it is wise for subscribers to change their notification times frequently. This decreases the possibility of the authorities sending false information or attempting to entrap a subscriber by sending a forged IP notification email (containing only police IPs) at a time they suspect the subscriber expects notification. If the subscriber is diligent and creates new email addresses frequently, it is far less likely that a trap will succeed. The subscriber is also advised to ignore any notification whose send time differs by even one second from her requested subscription time. Safe subscription and subscription-changing protocols involve many interesting options, but these will not be detailed here.

When the client is closed or the computer disconnected, the change is registered by the hub, and that IP address is no longer included on outgoing notifications. Those subscribers who had already received an email with that IP address on it would find it did not serve Red Rover information, if indeed it worked at all from the browser. The subscribers would then try the other IP addresses on the list. The information posted by the hub is identical on all clients, and the odds that the subscriber would find one that worked before all the clients on the list disconnect are quite high.
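The bookkeeping described here can be sketched as a small registry on the hub side plus a fallback loop on the subscriber side. The class and function names (`ActiveClientRegistry`, `first_working`) are hypothetical, and the `is_serving` callback stands in for the subscriber trying an address in her browser.

```python
class ActiveClientRegistry:
    """Hub-side record of which client IPs are currently online."""

    def __init__(self):
        self._active = set()

    def register(self, ip):
        # Called when a client logs on and reports its current IP.
        self._active.add(ip)

    def unregister(self, ip):
        # Called when a client closes or disconnects.
        self._active.discard(ip)

    def active_ips(self):
        return sorted(self._active)


def first_working(listed_ips, is_serving):
    """Return the first listed IP that still serves the package, else None."""
    for ip in listed_ips:
        if is_serving(ip):
            return ip
    return None


hub = ActiveClientRegistry()
for ip in ("192.0.2.10", "192.0.2.11", "192.0.2.12"):
    hub.register(ip)
listed = hub.active_ips()       # snapshot that went out in a notification
hub.unregister("192.0.2.10")    # that client has since gone offline
usable = first_working(listed, lambda ip: ip in hub.active_ips())
```

The stale first entry is skipped, and the subscriber ends up at the next address on her list, which serves the same content.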

Client life cycle

Every peer-to-peer system has to deal with the possibility that clients will disappear unexpectedly, but senescence is actually assumed for Red Rover clients. Use it long enough and, just as with tax cheating, they’ll probably catch up with you. In other words, the client’s available IPs will eventually all be blocked by the authorities.

The predominant way nations block web sites is by IP address. This generally means all four octets are blocked, since C-class blocking (blocking any of the possibilities in the fourth octet of the IP address) could punish unrelated web sites. Detection has so far tended to result not in prosecution of the web visitor, but only in the blocking of the site. In China, for example, it will generally take several days, and often two weeks, for a “subversive” site to be blocked.

The nice thing about a personal web server is that when a user logs on to a dial-up account, the user will most likely be assigned a fourth octet different from the one she had in previous sessions. With most ISPs, odds are good of getting a different third octet as well. This means that a client can sustain a great number of blocks before becoming useless, and, depending on the government’s methods (and human workload), many events are likely to evade any notice whatsoever. But whenever the adversary is successful in completely blocking a Red Rover client’s accessible IP addresses, that’s the end of that client’s usefulness—at least until the user switches ISPs. (Hopefully she’ll choose a new ISP that hasn’t been blocked due to detection of another Red Rover client.) Some users can make their clients more mobile, and therefore harder to detect, by subscribing to a variety of free dial-up services.
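The difference between blocking a single address and blocking every value of the fourth octet can be illustrated with Python’s standard `ipaddress` module. The blocklists and the helper name `is_blocked` are hypothetical, and the addresses are documentation-only ranges rather than real client or ISP addresses.

```python
import ipaddress


def is_blocked(ip, blocklist):
    """Return True if `ip` falls inside any network on the blocklist."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in blocklist)


# All four octets blocked: only one exact address is affected.
single_address = [ipaddress.ip_network("192.0.2.17/32")]
# Fourth octet wildcarded: all 256 addresses in the range are affected.
whole_range = [ipaddress.ip_network("192.0.2.0/24")]

# A client that redials and receives a new fourth octet:
new_session_ip = "192.0.2.200"
print(is_blocked(new_session_ip, single_address))  # prints False
print(is_blocked(new_session_ip, whole_range))     # prints True
```

Under single-address blocking, a new dial-up session escapes the block. Under range blocking it does not, but the censor also cuts off every unrelated host in that range.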

A fact in our favor is that it is considered extremely unlikely that countries will ever massively block the largest ISPs. A great deal of damage to both commerce and communication would result from a country blocking a huge provider like, for example, America Online, which controls nearly a quarter of the American dial-up market. This means that even after many years of blocking Red Rovers, there will still always be virgin IPs for them. Or so we hope.

The Red Rover strategy depends upon a dynamic population. On one level, each user can stay active if she has access to abundant, constantly changing IP addresses. And at another level, Red Rover clients, after they become useless or discontinued, are refreshed by new users, compounding the frustration of would-be blockers.

The client will be distributed freely at software archives and partner web sites after its release, and will operate without user maintenance. A web site (see Section 10.4) is already live to provide updates and news about the strategy, as well as a downloadable client.

Putting low-tech “weaknesses” into perspective

Red Rover creates a high-tech relationship between the hub and the client (using SSL and strong encryption) and a low-tech relationship between the client and the subscriber. Accordingly, this latter relationship is inherently vulnerable to security-related difficulties. Since we receive many questions challenging the viability of Red Rover, we present below in dialogue form our responses to some of these questions in the hope of putting these security “weaknesses” into perspective.

- Skeptic:

I understand that the subscriber could change subscription times and addresses during a Red Rover visit. But how would anyone initially subscribe? If subscription is done online or to an email site, nothing would prevent those sites from being blocked. The prospective subscriber may even be at risk for trying to subscribe.

- Red Rover:

True, the low-tech relationship between Red Rover and the client means that Red Rover must leave many of the steps of the strategy to the subscriber. As we’ve said above, another channel such as a letter or phone call (not web or email communication) will eventually be necessary to initiate contact since the Red Rover site and sites which mirror it will inevitably be victims of blocking. But this requirement is no different than other modern security systems. SSL depends on the user downloading a browser from a trusted location; digital signatures require out-of-band techniques for a certificate authority to verify the person requesting the digital signature.

This is not a weakness; it is a strength. By permitting a diversity of solutions on the part of the subscribers, we make it much harder for a government to stop subscription traffic. It also lets the user determine the solution ingredients she believes are safest for her, whether public key cryptography (legal, for now, in many blocking countries), intercession by friends who are living in or visiting countries where subscribing would not be risky, proxy-served requests to forward email to organizations likely to cooperate, etc.

We are confident that word of mouth and other means will spread the news of the availability of Red Rover. It is up to the subscriber, though, to first offer her invitation to crash the censorship barrier. For many, subscribing may not be worth the risk. But for every subscriber who gets information from Red Rover, word of mouth can also help hundreds to learn of the content.

If this response is not as systematic as desired, remember that prospective subscribers face vastly different risks based on their country, profession, technical background, criminal history, dependents, and other factors. Where a problem is not recursively enumerable, the best solution to it will rarely be an algorithm. A variety of subscription opportunities, combined with non-patterned choices by each subscriber, leads to the same kind of protection that encryption offers in computing: Both benefit from increased entropy.

- Skeptic:

What is to stop a government from cracking the client and cloning their own application to entrap subscribers or send altered information?

- Red Rover:

Red Rover has to address this problem at both the high-tech and low-tech levels. I can’t cover all strategies available to combat counterfeiting, but I can lay out what we’ve accomplished in our design.

At the high-tech level, we have to make sure the hub can’t be spoofed, that the client knows if some other source is sending data and pretending to be the hub. This is a problem any secure distributed system must address, and a number of successful peer-to-peer systems have already led the way in solving this problem. Red Rover can adopt one of these solutions for the relationship between the hub and clients. This aspect of Red Rover does not need to be novel.

Addressing this question for the low-tech relationship is far more interesting. An alert subscriber will know, to the second, what time she is to receive email notifications. This information is sent and recorded using an SSL-like solution, so if that time (and perhaps other clues) isn’t present on the email, the subscriber will know to ignore any IP addresses encoded in it.

- Skeptic:

Ah, but what stops the government from intercepting the IP list, altering it to reflect different IP addresses, and then forwarding it to the subscriber? After all, you don’t use standard encryption and digest techniques to secure the list.

- Red Rover:

First, we have taken many precautions to make it hard for surveillance personnel to actually notice or suspect the email containing the IP list. Second, remember that we told the subscribers to choose web-based email accounts outside the boundaries of the censoring country. If the email is waiting at a web-based site in the United States, the censoring government would have to intercept a message during the subscriber’s download, determine that it contained a Red Rover IP address (which we’ve encoded in a low-tech manner to make it hard to recognize), substitute their own encoded IP address, and finish delivering the message to the subscriber. All this would have to be done in the amount of time it takes for mail to download, so as not to make the subscriber suspicious. It would be statistically incredible to expect such an event to occur.

- Skeptic:

But the government could hack the web-based mail site and change the email content without the subscriber knowing. So there wouldn’t be any delay.

- Red Rover:

Even if this happened, the government wouldn’t know when to expect the email to arrive, since this information was passed from the subscriber to the client via SSL. And if the government examined and counterfeited every unread email waiting for the subscriber, the subscriber would know from our instructions that any email which is not received “immediately” (in some sense based on experience) should be distrusted. It is in the subscriber’s interest to be prompt in retrieving the web pages from the clients anyway, since the longer the delay, the greater the chance that the client’s IP address will become inactive. Still, stagnant IP lists are far more likely to be useless than dangerous.

- Skeptic:

A social engineering question, then. Why would anyone want to run this client? They don’t get free music, and it doesn’t phone E.T. Aren’t you counting a little too much on people’s good will to assume they’ll sacrifice their valuable RAM for advancing human rights?

- Red Rover:

This has been under some debate. Options always include adding file server functions or IRC capability to entice users into spending a lot of time at the sponsor’s site. Another thought was letting users add their own, client-specific customized page to the HTML offering, one which would appear last so as not to interfere with the often slow downloading of the primary content by subscribers in countries with stiff Internet and phone rates and slow modems. This customized page could be pictures of their dog, editorials, or, sadly but perhaps crucially, advertising. Companies could even pay Red Rover users to post their ads, an obvious incentive. But many team members are rightfully concerned that if Red Rover becomes viewed as a mercantile tool, it would repel both subscribers and client users. These discussions continue.

- Skeptic:

Why the name “Red Rover”?

- Red Rover:

Red Rover is a playground game analogous to the strategy we adopted for our anti-censorship system. Children form two equal lines, facing each other. One side invites an attacker from the other, yelling to the opposing line: “Red Rover, Red Rover, send Lena right over.” Lena then runs at full speed at the line of children who issued the challenge, and her goal is to break through the barrier of joined arms and cut the line. If Lena breaks through, she takes a child back with her to her line; if she fails, she joins that line. The two sides alternate challenges until one of the lines is completely absorbed by the other.

It is a game, ultimately, with no losers. Except, of course, the kid who stayed too rigid when Lena rammed him and ended up with a dislocated shoulder.

Acknowledgments

The author is grateful to the following individuals for discussions and feedback on Red Rover: Erich Moechel, Gus Hosein, Richard Long, Sergei Smirnov, Andrey Kuvshinov, Lance Cottrell, Otmar Lendl, Roger Dingledine, David Molnar, and two anonymous reviewers. All errors are the author’s.

Red Rover was unveiled in April 2000 at Outlook on Freedom, Moscow, sponsored by the Human Rights Organization (Russia) and the National Press Institute, in a talk entitled “A Functional Strategy for an Online Anti-Blocking Remedy,” delivered by the author. Red Rover’s current partners include Anonymizer, Free Haven, Quintessenz, and VIP Reference.