Brainworks

My job was to alert the driver to the wood-framed carts pulled by one or two bullocks, usually one. None of the carts had tail lights, or for that matter, any lights! It was raining—monsoon time. We had been driving for about ten hours to New Delhi from the foothills of the Indian Himalaya, where we had walked for a month from one monastery to another, photographing Tibetan wall paintings. The wipers were oscillating, but the windshield was greasy. On the short section of “high-speed highway,” dusk was falling and the carts were becoming invisible. Sandwiched between the Tata trucks belching black exhaust fumes and intercity buses hurtling past with horns blaring were examples of Indian ingenuity—moving installations that could have been at home either in the Whitney Biennial for contemporary art or in a junkyard. The strangest was a huge tricycle. A massive steel I-beam, probably scavenged from a bridge, connected a giant front wheel to two small rear wheels. At the front, the driver straddled the beam about eight feet above the road. Behind him a metal box, attached to the beam, slanted downward, crammed with men, women, and children. The engine hanging below the steel looked as though it might have once been connected to a village water pump. The operating design principle was simple: use anything you can find to make it work.

The design principles that guided the evolution of the human brain are similar. Ernst Mayr, who shaped twentieth-century evolutionary and genetic research, repeatedly pointed out the “proximate logic of evolution.” Existing structures and systems are modified, sometimes elegantly, sometimes weirdly, to carry out new tasks. Our capacity for innovation, which distinguishes human behavior from that of any other species, living or extinct, is a product of this minimal-cost design logic.

The path of human evolution diverged from chimpanzees, our closest living relatives, five to seven million years ago. The brains of our distant ancestors had started to enlarge a million years ago, but big brains alone don’t account for why we act and think in a manner that differs so radically from chimpanzees. We are far from a definitive answer, but converging evidence from recent genetic, anatomical, and archaeological studies shows that neural structures, which have an evolutionary history dating back to when dinosaurs roamed the planet, were modified ever so slightly to create the cognitive flexibility that makes us human. Apart from our ability to acquire a vast store of knowledge, we have a brain that is supremely capable of adapting to change and inducing change. We continually craft patterns of behavior, concepts, and cultures that no one could have predicted.

The archaeological record and genetic evidence suggest that people who had the same cognitive capabilities as you or me probably lived as far back as 250,000 years ago. However, we don’t live the way our distant ancestors did 50,000 years ago. Nor do we live as our ancestors did in the eighteenth century, or five decades ago. Nor, for that matter, does everyone throughout the world today act in the same manner or share the same values. Unlike ants, frogs, sheep, dogs, monkeys or apes—pick any species other than Homo sapiens—our actions and thoughts are unpredictable. We are the unpredictable species.

The opposite view, popularized by proponents of what has come to be known as “evolutionary psychology,” such as Noam Chomsky, Richard Dawkins, Sam Harris, Marc Hauser, and Steven Pinker, is that we are governed by genes that evolved in prehistoric times and never changed thereafter. The evidence presented to support their theories often involves colorful stories about life 50,000 or 100,000 years ago, sometimes supplemented by colorful blobs that are the end-products of expensive functional magnetic resonance imaging (fMRI) machines that can monitor brain activity. The red or yellow blobs are supposed to reveal “faculties”—specialized “centers” of the brain that confer language, altruism, religious convictions, morality, fear, pornography, art, and virtually everything else. I shall show that no one is religious because a gene is directing her or his beliefs and thoughts. Moral conduct doesn’t entail having a morality gene. Language doesn’t entail having a language gene.

The current popular model of the brain is a digital computer. It used to be a telephone exchange; at one time, it was a clock or steam engine. The most complex machine of the day usually serves to illustrate the complexity of the human brain. No one can tell you how the brain of even a fly, frog, or a mouse works, but the research that I will review shows that the software, computational architecture, and operations of a digital computer have no bearing on how real brains work. Your brain doesn’t have a discrete “finger-moving” module that conceptually is similar to the electronics and switches that control your computer’s keyboard. Nor do computer programs bear any resemblance to the motor control “instructions” coded in your brain that ultimately move your finger.

As Charles Darwin pointed out, in the course of evolution structures that originally had one purpose took on new roles and were modified to serve both old and new tasks. It has become evident that different neural structures, linked together in “circuits,” constitute the brain bases for virtually every motor act and every thought process that we perform. We have adapted neural circuits that don’t differ to any great extent from those found in monkeys and apes to perform feats such as talking, dancing, and changing the way that we act to one another, or to the world about us. The whole story isn’t in. However, the studies that I will review have identified some of the genes and processes that tweaked these circuits, to yield the cognitive flexibility that is the key to innovation and human unpredictability.

The Functional Architecture of the Brain

Your car’s shop manual provides a better guide to understanding how your brain works than books such as Steven Pinker’s 1997 How the Mind Works. If your car doesn’t start, the shop manual won’t tell you to replace its “starting organ” or “starting module”—a part or set of parts dedicated solely to starting the car. The shop manual instead will tell you to check a set of linked parts: the battery, starter relay, starter motor, starter switch, perhaps the transmission lock. Working together, the “local” operations performed in each part start the car. Your car doesn’t have a “center” of starting. Nor will you find a localized electric power “module.” The battery might seem to be the ticket, but the alternator, powered by the engine, controlled by a voltage regulator, charges the battery. And if you’re driving a gas-electric hybrid, the brakes also charge the battery when you slow down or stop. The brakes, in turn, depend on the electrical system because they’re controlled by a computer that monitors road conditions, and the computer needs electric power. The engine is controlled by an electrically powered computer that senses the outside temperature and engine temperature to control fuel injectors and the timing of the electrical ignition of the fuel injected into each cylinder.

Each component—the battery, voltage regulator, fuel pump, ignition system—performs a “local” operation. The linked local operations form a “circuit” that regulates an observable aspect of your car’s “behavior”—whether it starts and how it accelerates, brakes, and steers. And the local operation performed in a given part can also play a critical role in different circuits. The battery obviously powers the headlights and the sound system, as well as the fuel pump, ignition system, starting circuit, and the computer that controls the engine. The individual components often carry out multiple “local” operations. The engine can propel or brake. The car manual advises downshifting and using the engine to brake when descending long, steep roads. Some circuits include components that play a critical role in other circuits. In short, your car wasn’t designed following current “modular” theories that supposedly account for how brains work and how we think.

In contrast, according to practitioners of evolutionary psychology, various aspects of human behavior, such as language, mathematical capability, musical capability, and social skills, are each regulated by “domain-specific” modules. Domain-specificity boils down to the claim that each particular part of the brain does its own thing, independent of other neural structures that each carry out a different task or thought-process. Steven Pinker, who adopted the framework proposed in earlier books by Jerry Fodor (1983) and Noam Chomsky (1957, 1972, 1975, 1980a, 1980b, 1986, 1995), claims that language, especially syntax, derives from brain mechanisms that are independent from those involved in other aspects of cognition and, most certainly, motor control. Further refinements of modular theory partition complex behaviors such as language into a series of independent modules: a phonology module that produces and perceives speech, a syntax module that in English orders words or interprets word order, and a semantics module that takes into account the meaning of each word, allowing us to comprehend the meaning of a sentence. These modules can be subdivided into submodules. W.J.M. Levelt (1989), for example, subdivided the process by which we understand the meaning of a sentence into a set of modules. Each hypothetical module did its work and sent the product on to another module. Levelt started with a “phonetic” module that converted the acoustic signal that reaches our ears to segments that are roughly equivalent to the letters of the alphabet. The stream of alphabetic letters then was grouped into words by a second module that has no access to the sounds going into the initial phonetic module. The words were then fed into a syntax module, and so on.

We don’t have to read academic exercises to understand modular architecture. Henry Ford’s first assembly line is a perfect example of modular organization. One workstation put the hood on, another the doors, another the wheels and tires, and so on. Each workstation was “domain-specific.” The workers and equipment that put doors on the car frame put on doors, nothing else. Another crew and equipment put hoods onto the car frame. The assembly line as a whole was a module devoted to building a specific type of car. In Henry Ford’s first factory, the Model T was available in any color, so long as it was black. As the Ford Motor Company prospered, independent “domain-specific” assembly lines were opened that each built a particular type of car or truck. But the roots of modular theory date back long before, to what neuroscientists generally think of as quack science, “phrenology.”

Phrenology

Phrenology, when it’s mentioned at all in psychology or neuroscience texts, usually is placed in the same category as believing in little green people on Mars. But in the early decades of the nineteenth century, phrenology was at the cutting edge of science. Phrenology attempted to explain why some people are more capable than others when it comes to mathematics. Why are some people pious? Why are some people greedy? Why do some people act morally? The answer was that some particular part of our brain is the “faculty” of mathematics, morality—whatever—and is responsible for what we can do or how we behave.

Whereas present-day pop science links these neural structures to genes that ostensibly result in specialized neural “faculties” located in different modules in discrete parts of your brain, phrenologists had a simpler answer—your abilities and disposition could be determined by measuring bumps on your skull. Phrenologists thought that they could demonstrate that a particular bump was the “seat” of the faculty of mathematics, which conferred mathematical ability; a different bump was the seat of the language faculty; another bump was the seat of the moral faculty; and so on. The size of each bump determined the power of the faculty. A larger bump would correlate with increased capability in language if it was the seat of the faculty of language. A larger bump for the seat of the faculty of piety would presumably be found on the skulls of clerics known for piety. A larger seat of mathematics would characterize learned mathematicians, and so on. The proposed faculties were all domain-specific—independent of each other. The bump signaling piety, for example, had no relevance to language. This theory may sound familiar when transmuted into current modular theory, which, pushed to its limits, claims that you can be a mathematical genius but be unable to find your way home from work.

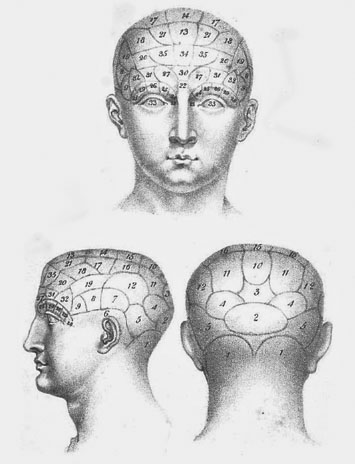

The labeled “map” in figure 1.1, from Johann Spurzheim’s (1815) phrenological treatise, shows the seats of various abilities and personality traits. In the 1970s, I found skulls that had been carefully engraved with phrenological maps in the storage areas of the Musée de l’Homme in Paris. Phrenology collapsed because it was open to test. The skulls of clerics, leading mathematicians, musicians, homicidal maniacs, and so on, were measured. There was no correlation between the size of the bumps on a person’s skull and what she or he could do or how she or he behaved. Some homicidal maniacs had bigger moral bumps than worthy clerics. And so phrenology was declared dead.

Figure 1.1. Phrenological maps of the skull showing the “faculties” of behavior and cognitive capacities. Early nineteenth-century phrenological maps showed the “seats” (areas and bumps on a person’s skull) of faculties that supposedly determined various attributes such as morality, violence, piety, and so on, as well as language, mathematical ability, artistic ability, and other skills. The area of each seat indicated the degree to which an individual had a particular trait. One of the principles of phrenology was that the neural operations in each seat were specific to each faculty, an assumption that still marks many current mind/brain/language theories. From Spurzheim, J. G., The Physiognomical System of Drs. Gall and Spurzheim, 1815.

Pop Neuroscience

But phrenology lives on today in studies that purport to identify the brain’s center of religious belief, pornography, and everything in between. The research paradigm is similar to that used by phrenologists 200 years ago, except for the high-tech veneer. Typically, the brains of a group of subjects are imaged using complex neuroimaging systems (described later) that monitor activity in a person’s brain while he or she reads written material, answers questions, or looks at pictures. The response seen in some particular part of the brain is then taken to be the neural basis of the activity in question.

In one such study published in 2009, “The Neural Correlates of Religious and Nonreligious Belief,” Sam Harris and his colleagues monitored the brain activity of 15 committed Christians and 15 nonbelievers who were asked to answer “true” or “false” to “religious” statements, such as “Jesus Christ really performed the miracles attributed to him in the Bible,” and “nonreligious” statements such as “Alexander the Great was a very famous military leader.” Activity in an area in the front of the brain, ventromedial prefrontal cortex, was greater for everyone when the answer was “true.” This finding is hardly surprising since, as we will see, ventromedial prefrontal cortex is active in many cognitive tasks, including pulling information out of memory (Hazy et al., 2006; Postle, 2006). Deciding that something is true also takes more effort when the task is pushing a button when you think that a word is a real English word, for example, “bad,” than not pushing the button when you hear “vad” (Rissman et al., 2003). In fact, ventromedial prefrontal cortex is active in practically every task that entails thinking about anything (Duncan and Owen, 2000).

But the neural structures that Sam Harris and his colleagues claimed were responsible for religious beliefs included the anterior insula, a region of the brain that the authors themselves noted is also associated with pain perception and disgust; the basal ganglia, which I’ll discuss in some detail because it is a key component of neural circuits involved in motor control, thinking, and emotion; and a brain structure that all mammals possess—the anterior cingulate cortex (ACC). The ACC supposedly was the key to religious belief because it was more active when the committed Christians pushed the “true” button about their religious beliefs. The ACC is arguably the oldest part of the brain that differentiates mammals from reptiles, and dates back 258 million years ago to the age of dinosaurs. As we’ll see, its initial role probably was mother-infant care and communication (MacLean, 1986; MacLean and Newman, 1988). Sam Harris’s agenda is scientific atheism—religious belief supposedly derives from the way that our brains are wired. All mammals have an ACC. If Harris and colleagues are right, mice may be religious!

Increased ACC activity often signifies increased attention. The ACC activity observed by Harris et al. (2009) in the committed Christians may have reflected the subjects’ paying more attention to the emotionally loaded questions probing their faith or lack thereof. An obvious control condition would have monitored the subjects’ responses to questions about their tax returns.

Another recent exercise in pop neuroscience “explains” why young men are more interested in pornography than women. In a study published in a first-line journal, Nature Neuroscience, Hamann et al. (2004) showed erotic photographs to 28 Emory University male and female undergraduates. The neuroimaging apparatus, which costs several million dollars, showed that the men had more activity in two closely connected brain structures, the amygdala and hypothalamus. The authors, unsurprisingly, conclude that male undergraduates may be more interested in erotic photographs than women. A glance at a magazine stand near Emory would have reached the same conclusion, saving several thousand dollars spent on the experiment. The main finding of the study is that increased amygdala activity, in itself, accounts for “why men respond more to erotica.” The conclusion is weird; dozens of independent studies show that activity in the amygdala increases in fearful situations. Amygdala activity increases, for example, when people look at angry faces. Were the men afraid of sex? However, other experiments show that the amygdala also is activated by stimuli associated with reward (Blair, 2008), so the pictures may have reflected wishful thinking. What would the amygdala responses have been if the men had been imaged while they looked at expensive sports cars or alternatively at fearful faces? What would have happened if the erotic pictures had been shown to the “committed Christians” studied by Sam Harris and his colleagues?

We’ll return to pop-neuroscience “proofs” for morality and other qualities in chapter 6.

A Pocket Guide to the Human Brain

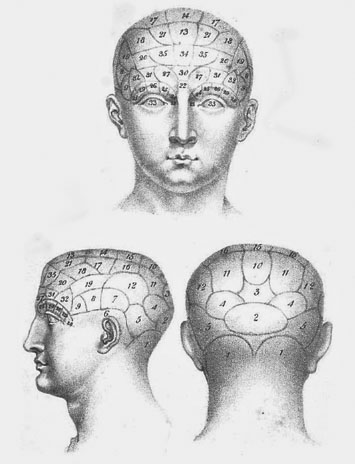

Most of the human brain is divided into two almost equal halves, or cerebral hemispheres. Figure 1.2 shows a side view of the outside of the left hemisphere of the human brain. The neocortex is the outermost layer of the primate brain. In this sketch of the left surface of the neocortex, orbitofrontal prefrontal cortex is at the extreme front. Dorsolateral prefrontal cortex is sited behind it in the upper part of the cortex. Ventrolateral prefrontal cortex is below it. (The Latinate terminology simply refers to the location of each cortical area.) Figure 1.3, shown later, will also be useful in locating these “areas” of the cortex. The brains of mammals, other than aquatic dolphins and whales, are marked by “fissures,” deep folds separating “gyri.” Some of the external landmarks of the brain that can be seen using current techniques that monitor brain activity are pictured. The Sylvian fissure, the deep channel that is the boundary between temporal lobe and frontal lobe of the cortex, for example, shows up clearly. The human neocortex and most other parts of our brain are about three times larger than a chimpanzee’s.

Figure 1.2. The left side of the human cortex. The “anterior,” prefrontal regions of the cortex are to the left. Orbitofrontal prefrontal cortex is located at the marker OF, dorsolateral prefrontal cortex at the marker DL, and ventrolateral prefrontal cortex at the marker VL. These prefrontal regions are involved in language and a range of cognitive tasks; they vary in size and position from one person to another. The Sylvian fissure, SF, marks the boundary between frontal and posterior cortex and is visible in neuroimaging studies. The traditional language “organs” of the brain also are mapped. Although Broca’s and Wernicke’s areas can be involved in language tasks, they are not the brain bases of language. They can be destroyed without permanent language loss; other areas of the brain perform critical roles, making both human language and cognition possible.

Neuroimaging

Neuroimaging techniques that visualize the brains of living persons and track brain activity while people think have led to a revolution in human brain research. But the limitations of current neuroimaging techniques have to be understood to sort out the claims of pop neuroscience from real science.

Computed tomography (CT) scans, computer-derived three-dimensional x-ray images of the brain, first provided images of the brains of living patients. Magnetic resonance imaging (MRI) then came into wide use in the mid-1980s. MRI, which reveals the soft tissue structure of the brain, is the keystone of all neuroimaging techniques, because if you don’t know where brain activity is occurring, you won’t have a clue to what’s happening, other than that the subject is alive.

The heart of the MRI apparatus is a powerful electromagnet—that’s why there are cautions when entering the MRI room about metal fragments in your eyes or having objects on your person that contain iron or steel. The magnet can tear metal fragments loose and pull metal objects into the apparatus. A strong magnetic field first displaces the electrons of the atoms that form the soft tissue of the brain from their rest position while the subject is in the MRI apparatus. The magnetic field produced by the electromagnet then is abruptly turned off, causing the electrons to snap back to their rest position. This produces an electrical signal at frequencies that complex computer programs use to determine the composition of the atoms.

If the magnet is the MRI’s heart, its brain is its computer. The computer programs essentially produce a series of images of thin virtual “slices” of a person’s brain. The person in the MRI apparatus (“magnet” is MRI jargon) hears the noise produced by the magnetic elements in the magnet’s iron core being switched on and off. The downside of any technique based on MRI is the noise—the series of sharp clicks that occur as the MRI apparatus produces a series of slices that are assembled to form a three-dimensional image of the brain. In the early days of MRI, the patient/subject was positioned lying in a narrow tube. An acquaintance who had served in the US Navy during World War II described the experience as being in a submarine’s torpedo tube while hearing a machine gun firing. MRI and all techniques based on MRI inherently cannot track rapid events.

While MRI reveals the structure of the brain, including damage, functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) track brain activity. If you wanted to track the amount of energy consumed by your car at any instant of time, you could monitor either the flow of fuel or the oxygen used to burn the fuel. The brain is a sophisticated engine. The fuel is sugar—glucose. PET tracks glucose fuel consumption in different parts of the brain to infer the pattern of local neural activity. PET works by monitoring the distribution of radioactive glucose. It’s necessary to first place a vial of glucose in a cyclotron to make a radioactive form of glucose, an “isotope” that will rapidly lose its radioactivity. The radioactive isotope has a short “half-life”—the time interval in which the radioactive level will diminish by half. The radioactive glucose is then injected into a volunteer’s bloodstream. The PET apparatus then tracks the injected glucose in different parts of the brain by monitoring the emission of subatomic particles. PET is useful because, unlike fMRI, no magnet noise occurs, but it has its drawbacks. It does not yield very detailed images, and there is a short working period between placing the glucose sample in a cyclotron (which isn’t a standard laboratory instrument) and studying the subject’s responses in an experiment.
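The “half-life” idea explains why the working period is so short, and the arithmetic can be sketched in a few lines. This is only an illustration: the function name and the 100-minute half-life are assumptions chosen to keep the numbers easy, not values taken from any particular PET study.

```python
def remaining_fraction(elapsed_minutes: float, half_life_minutes: float) -> float:
    """Fraction of the original radioactivity left after elapsed_minutes."""
    # Each full half-life that passes cuts the remaining level in half.
    return 0.5 ** (elapsed_minutes / half_life_minutes)


# Hypothetical half-life, picked only to make the arithmetic easy to follow.
HALF_LIFE_MINUTES = 100.0

for elapsed in (0, 100, 200, 300):
    fraction = remaining_fraction(elapsed, HALF_LIFE_MINUTES)
    print(f"after {elapsed} minutes: {fraction:.3f} of the radioactivity remains")
```

After three half-lives only one-eighth of the signal is left, which is why the experiment has to follow closely on the trip to the cyclotron.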

fMRI cleverly solved some of these problems by monitoring the by-product of combustion—oxygen depletion. As parts of the brain become more active, they burn more glucose, using up more oxygen. fMRI tracks the relative level of local brain activity by tracking the local level of oxygen left after burning brain fuel. As glucose is burned, the oxygen level falls, reflecting brain activity. The computer system is adjusted to look at the electronic signature of the local oxygen level. The depleted blood oxygen level—the “BOLD” response—thus is the fMRI signature of local brain activity. Direct comparison with neural activity monitored with micro-electrodes inserted in the brains of animals confirms that the BOLD signal is a valid measure of neural activity (Logothetis et al., 2001). Direct recording of neural activity using inserted electrodes, with the exception of some highly limited circumstances, cannot be performed in human subjects.

One of the major problems in interpreting the signals coming out of a PET scanner or fMRI system’s computer has to do with the primary reason for using these techniques—the person who’s being studied is alive. Therefore, there is activity constantly going on in many parts of the subject’s brain. One technique that has been used to find out whether the recorded neural activity has anything to do with the behavior that the experiment is supposed to be studying is to subtract the signals recorded in a “baseline” condition from those recorded in the “experimental” condition. In an experiment that’s aimed at discerning the brain mechanisms involved in speech perception, the subjects might be asked to listen to “pure” musical-like tones as the baseline. The presumed neural mechanisms involved in speech perception would then be determined by subtracting the activity recorded when the subjects listened to musical tones from the activity recorded when they listened to words. The problem that arises is that some neural mechanisms may be involved in listening both to musical tones and to speech, so when the subtraction is performed you don’t see that they also are active when listening to words. That problem has plagued PET and fMRI studies since they first were used. In fact, recent work shows that many of the neural mechanisms used in speech are also used to perceive music (Patel, 2009). Another problem arises from using “region of interest” (ROI) computer processing that examines only neural activity in a predetermined region of the brain. If you don’t look for brain activity in some part of the brain, you won’t find it.
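Both pitfalls can be seen in a toy calculation. The sketch below is not how imaging software works; the region names, the activity numbers, and the threshold are all made up for illustration. It shows only the logic of subtraction and of restricting the analysis to a region of interest.

```python
# Hypothetical activity levels (arbitrary units) in three made-up regions.
tones = {"shared_auditory": 5.0, "speech_specific": 1.0, "unrelated": 3.0}
words = {"shared_auditory": 5.0, "speech_specific": 4.0, "unrelated": 3.0}


def subtract(experimental, baseline):
    """Subtract baseline activity from experimental activity, region by region."""
    return {region: experimental[region] - baseline[region] for region in experimental}


def restrict_to_roi(activity, roi):
    """Keep only the regions that the experimenter decided to look at."""
    return {region: level for region, level in activity.items() if region in roi}


difference = subtract(words, tones)

# Assumed cutoff: a region "lights up" only if the difference exceeds it.
THRESHOLD = 1.0
visible = [region for region, change in difference.items() if change > THRESHOLD]

print(difference)  # shared_auditory drops to 0.0 although it was active for words
print(visible)     # only speech_specific survives the subtraction
print(restrict_to_roi(words, {"speech_specific"}))  # other regions are never examined
```

The region that works equally hard for tones and for words vanishes from the result, and a region left out of the ROI is never reported at all, however active it was.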

One way of addressing some of these problems is to use a task that can be performed at increasing levels of difficulty. If activities in particular neural structures increase with task difficulty, they most likely are relevant to the task. Information on pathways—the neural circuits that link different parts of the brain—also should be taken into account when brain imaging data are evaluated. We’ll discuss the findings of studies that use these techniques in the chapters that follow.
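The logic of the graded-difficulty approach can be sketched as follows. The region names and activity values are hypothetical, and a real study would use a statistical test rather than the strict step-by-step comparison assumed here.

```python
# Hypothetical activity (arbitrary units) at four rising levels of task difficulty.
activity_by_region = {
    "region_a": [1.0, 2.0, 3.5, 5.0],  # climbs as the task gets harder
    "region_b": [3.0, 3.0, 3.0, 3.0],  # busy, but indifferent to difficulty
    "region_c": [2.0, 4.0, 1.0, 3.0],  # erratic
}


def rises_with_difficulty(levels):
    """True if activity is strictly higher at each harder level."""
    return all(harder > easier for easier, harder in zip(levels, levels[1:]))


task_relevant = [
    name for name, levels in activity_by_region.items() if rises_with_difficulty(levels)
]
print(task_relevant)
```

Only the region whose activity climbs in step with difficulty is flagged; a region that is merely active, as any part of a living brain may be, is not.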

Diffusion tensor imaging (DTI), another variation on MRI, can trace the neural circuits that connect the structures of the brain in living humans (Lehericy et al., 2004). The principle behind DTI is amazingly simple. Suppose that you dip a stalk of celery into a bowl of water and then invert it. Water will flow down along the stalk. DTI tracks the water flow pattern throughout the brain’s circuits because the flow of water follows the bundles of “white matter” linking the “gray matter” of the neural structures that make up the circuits.

Event-related potentials (ERPs) provide an indirect measure of rapid changes in neural activity within the brain. Electric currents, including the signals that transmit information in the brain, always generate magnetic fields. The magnetic field can be monitored using an array of small antenna-like coils placed on a person’s skull. Although localizing the source of the radiated magnetic field to very specific parts of the brain is not possible, rapid changes can be discerned that correspond to cognitive acts. Angela Friederici, Director of Neuropsychology at the Max Planck Institute in Leipzig, Germany, in her 2002 review article, shows that the subcortical basal ganglia play a part in rapidly integrating the meanings of individual words with syntactic information as a subject listens to a spoken sentence.

Where Is What

There is an inherent problem that affects all neuroimaging studies. The “same” locations noted in different studies may not be the same. Devlin and Poldrack, in their 2007 paper “In Praise of Tedious Anatomy,” show that it’s not necessarily the case that the hot-spots indicating increased activity in fMRI scans in a given study occur in the same parts of the brains of the many subjects who must be imaged to obtain usable data. The published fMRI brain maps using red, blue, and green to indicate relative levels of activity in different parts of the brain are derived by complex computer-implemented processing. fMRI signals are weak, and it’s necessary to monitor many subjects performing the same task and then average the responses to factor out irrelevant electronic noise. But “averaging” these responses is not simple. People’s brains differ from one to another as much as their noses, hands, hair, arms, legs, faces, and so on. Because the shape and size of the brain varies from person to person, the MRI brain map of each subject must be stretched and squeezed in different directions until it conforms to a “standard” brain, often the Talairach and Tournoux (1988) coordinates, which attempt to reshape all brains to that of a single 60-year-old woman. The problem is especially acute for frontal and prefrontal cortical areas because they don’t have sharp boundaries that show up in the structural MRI that the fMRI activity is mapped onto.

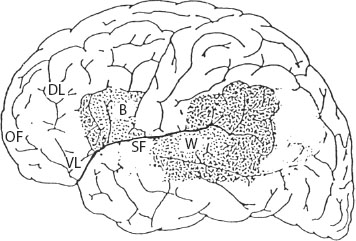

The net result is that it is often impossible to be certain that the hot-spots actually are in the same area of the cortex. Devlin and Poldrack proposed using different brain mapping techniques that may provide more realistic brain maps, but some uncertainty always remains, impeding precise localization of brain activity. The level of uncertainty paradoxically increases in studies that attempt to be extremely precise. The brain maps proposed by Korbinian Brodmann between the years 1908 and 1912 have been used to identify the sites of brain damage or neural activity in thousands of studies. The basic “atomic” computational elements of the brain and nervous system are “neurons.” Neurons come in different sizes and shapes, and the neocortex has six layers of neurons arranged in columns. Brodmann microscopically examined human brains and found differences in the “cytoarchitecture”—the distribution of neurons in the columns that made up different parts of the neocortex, which he thought reflected functional differences. He also examined the brains of other animals and was able to show that homologies—similarities in cytoarchitecture—existed between the brains of closely related species.

Figure 1.3 shows Brodmann’s 1909 maps of the left cortical hemispheres of a rhesus monkey and a human. Some of the Brodmann areas (BAs) are easy to pick out on an MRI. Area 17, at the extreme tail end of the brain, is one. In contrast, precisely locating the BAs that constitute frontal and prefrontal cortex on an MRI is unreliable. One area merges seamlessly into another in the MRI. As Devlin and Poldrack point out, if you really want to locate a frontal cortical Brodmann area, it’s necessary to carry out a microscopic examination of the subject’s cortex. This would entail sacrificing the subjects. In an exercise aimed at seeing whether it is possible to precisely identify Broca’s area, which consists of BAs 45 and 44, the traditional “language organ” of the human brain (chapter 2 will show that Broca’s area is not the brain’s language organ), Amunts et al. (1999) examined the brains of 10 persons from autopsies performed within 8 to 24 hours after death. Thousands of profiles of cell structure were obtained using an automated observer-independent procedure to construct three-dimensional cytoarchitectural reconstructions of the individual brains. Both cytoarchitecture and the features of the brain that can be seen on an MRI varied from one individual brain to another to such a degree that it is impossible to discern the location of either BA 44 or BA 45 from an MRI. Amunts and her colleagues also found 10-to-1 differences in the size of area BA 44 in their small 10-brain sample, which is close to the minimum number of subjects typically imaged in an fMRI study.

Figure 1.3. Brodmann cytoarchitectonic maps, showing Brodmann areas (BAs) of the left cortical surfaces of a rhesus macaque and a human. Cortical areas having similar cytoarchitectonic structures mark all primates. However, the size and location of a given BA varies from individual to individual and generally cannot be identified with certainty in a living subject. BA 45 and BA 44 (the BA area to the right of BA 45) are the traditional sites of Broca’s area. Ventrolateral prefrontal cortex includes BAs 47 and 12. Dorsolateral prefrontal cortex includes BAs 46 and 9.

Cortical Malleability

Apart from uncertainty in identifying BAs from MRIs, the differing cytoarchitecture of Brodmann areas is not an infallible guide to their function. For example, the parts of the cortex that usually are involved in vision take on different roles in blind people. The visual cortex in persons who were blind early in life is active when they read, tactilely discerning shapes by touching Braille text, and when they hear speech sounds (Burton, 2003). Experience shapes cortical function, which accounts for blind people using “visual” cortex to process tactile and auditory information. Research using animals strikingly shows the plasticity of the cortex. A series of experiments over 10 years involved rewiring the brains of ferrets. A research team at MIT directed by Mriganka Sur rerouted the pathways that normally lead from the ferrets’ eyes and ears to their usual targets in visual and auditory cortex, shortly after birth (Melchner et al., 2000). When the ferrets reached maturity, direct electrophysiologic recording, which involved recording electrical activity from electrodes inserted into the animals’ brains, demonstrated that they saw objects using cortical areas that normally would be part of the auditory system. Cortical malleability may explain why stroke patients who initially have language problems recover if the brain damage is limited to the cortex, as noted by Stuss and Benson in their 1986 book directed at neurologists.

Neurons and Learning about the World

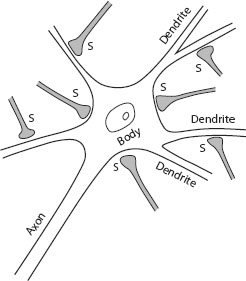

Neurons are the basic elements that make up the nervous systems of all animals. Figure 1.4 shows the cell body of a neuron, its output axon, and a profoundly reduced set of “dendrites” and synapses. I won’t go into the details of how neurons signal to each other, but the discussion here should suffice to understand the significance of new insights from current, ongoing genetic research that will be discussed in chapter 4. It is becoming evident that at least one newly discovered gene makes our neurons more interconnected and more malleable, allowing us to free our behavior from the constraints that hobble apes.

Figure 1.4. Neurons and synapses. The dendrites (the term derives from the Greek word for tree) branching out from each cell body connect neurons to each other. A cortical neuron typically receives inputs on these dendrites from several thousand other neurons. Each neuron has an output axon, which typically transmits information to thousands of other neurons. Incoming axons from other neurons transmit information to synapses on the dendrites as well as on the cell body.

Synapses are malleable structures that transfer information to a greater or lesser degree to neurons. They also are memory “devices.” As a loose analogy, think of a synapse as the neural equivalent of the volume control of an audio amplifier. The degree to which the signal from the tuner, CD, MP3 player, or whatever gadget you’ve connected will be passed on by the amplifier depends on the setting of the volume control. If you set the volume control to a particular level, turn the system off, and turn it on the next day, the volume control’s setting will constitute a memory “trace” of the sound level that you set on the previous day. Each synapse’s “weight” (its volume control setting) thus constitutes a memory trace of the extent to which the incoming signals will be transmitted to the neuron. The neuron’s output axon will “fire,” producing an “action potential,” an electrical signal that is transmitted to other neurons, when the signals arriving at its synapses, added together, reach a threshold. Some synapses are inhibitory: signals arriving through them make it less likely that the neuron will produce an outgoing action potential.
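For readers who like to see the volume-control analogy as arithmetic, here is a minimal sketch of a threshold unit. It is a textbook simplification, not a model of a real neuron: the function name, the weights, and the threshold value are all invented for illustration, and a negative weight stands in for a synapse that makes firing less likely.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True if the weighted sum of incoming signals reaches the threshold.

    Each weight plays the role of one synapse's "volume control" setting.
    """
    total = sum(signal * weight for signal, weight in zip(inputs, weights))
    return total >= threshold


# Illustrative numbers only: two excitatory synapses and one inhibitory synapse.
weights = [0.6, 0.5, -0.4]
threshold = 1.0

# The first two inputs are active and the inhibitory input is silent.
fires_without_inhibition = neuron_fires([1, 1, 0], weights, threshold)

# All three inputs are active, so the inhibitory synapse pulls the total down.
fires_with_inhibition = neuron_fires([1, 1, 1], weights, threshold)

print(fires_without_inhibition, fires_with_inhibition)
```

With these made-up values the unit fires only when the inhibitory input is silent. Changing any one weight changes what the unit does, which is the sense in which a synaptic weight is a stored memory trace.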

The process by which synapses are modified is generally thought to be the basis for associative learning: the way that we and other animals learn about the world, and how we learn about our place in the world. Hebb in 1949 proposed that synapses are modified by the signals they transmit. Think of a water channel cut out of sandy soil. When water from two sources simultaneously flows through the channel, it will become eroded to a greater extent than if water were flowing from only one source. Hence, the water channel subsequently will offer less resistance to the flow of water. The degree to which the channel becomes wider in a sense marks the degree to which the two sources are associated and thus constitutes a “memory trace” of the correlated events. If the channel is deeper and wider, more water can flow through it. Hebb suggested that the activity of neurons becomes correlated as they continually respond to stimuli that share common properties. Experiments that make use of arrays of microelectrodes that can record electrical activity in or near neurons confirm Hebb’s theory. Enhanced conduction across synapses occurs when animals are exposed to paired stimuli (for example, Bear, Cooper, and Ebner, 1987), similar to the bells and meat used in Pavlov’s classic experiments.
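Hebb’s proposal is often summarized by a simple update rule: a synapse’s weight grows by an amount proportional to the product of the activity on its two sides. The sketch below uses that common textbook form, which is a simplification rather than Hebb’s own formulation; the learning rate, the number of trials, and the 0-or-1 activity values are assumptions chosen only to mirror the water-channel image.

```python
def hebbian_update(weight, pre, post, rate=0.1):
    """Strengthen the synapse in proportion to correlated activity."""
    return weight + rate * pre * post


def train(trials, weight=0.0, rate=0.1):
    """Apply the update once per trial; each trial is a (pre, post) activity pair."""
    for pre, post in trials:
        weight = hebbian_update(weight, pre, post, rate)
    return weight


# Ten trials in which both sides are active together (the "paired" case).
paired_trials = [(1, 1)] * 10

# Ten trials in which only one side is active at a time (the "unpaired" case).
unpaired_trials = [(1, 0), (0, 1)] * 5

w_paired = train(paired_trials)
w_unpaired = train(unpaired_trials)

print("paired weight:", w_paired)
print("unpaired weight:", w_unpaired)
```

Only the paired trials deepen the “channel.” The same amount of activity, delivered out of step, leaves the weight where it started.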

A Mollusk Academy

The classic example of associative learning taught in Psychology 101 involves dogs. Pavlov taught dogs to associate a bell’s ringing with a meat treat. The bell was rung before the dogs were fed. Repeated “paired” exposure to the sound of a bell followed by meat resulted in a “conditioned reflex”: the dogs salivated at the sound of the bell alone. It’s curious to note that some people also might salivate or automatically say “yum yum” or “that looks great” as they read a menu, but we wouldn’t characterize their responses as “conditioned reflexes.” When animals learn something, the overt behavior that we use to monitor their response is a conditioned reflex. When humans respond, they’re thinking.

Pavlov thought that associative learning occurred in the cortex, but associative learning deriving from synaptic modification isn’t limited to the cortex. A series of experiments in the early 1980s (Carew, Walters, and Kandel, 1981) showed that synaptic modification is the key to associative learning in mollusks. The curriculum of their mollusk academy wouldn’t be approved by any school board. The mollusk species, Aplysia californica, apparently normally likes the taste/odor of extract of shrimp, stretching the meaning of the word like to animals that don’t have a central nervous system. Twenty mollusks were trained using Pavlov’s procedure. Essence of shrimp was presented to the mollusks for 90 seconds. Six seconds after the start of the presentation, an electrical shock was directed to the mollusks’ heads. Six to nine pairings of shrimp essence and electric shock were administered. Twenty other mollusks that served as a control group received random electric shocks. Eighteen hours later, shrimp extract was applied to all the mollusks, together with a weak electric shock to their tails. The “trained” mollusks ran away more rapidly, emitted more ink (their escape ink-screen), and did not feed as well as the untrained (unpaired) mollusks. Critically, changes in the synapses in the motor-control neurons in their tails were observed. Synaptic modification was the neural mechanism by which the mollusks “learned” that essence of shrimp “means” that “I will be shocked.”

Neural Circuits—Linking Cortex and the Rest of the Brain

However, it is not a mollusk that is tapping at the keyboard, spelling out the words that you are reading. It has become apparent that neural circuits, akin to the linkages between the parts of your car, link local operations throughout our brains, enabling us to walk, run, talk, think, and carry out the acts and dispositions that define our species. We can be acrobats or clods, saints or monsters—these possibilities derive from the neural circuits of our brains.

Diffusion tensor imaging studies are identifying hundreds of human neural circuits. We will discuss the role of some of these circuits in motor control, vision, memory, and other aspects of cognition. Our focus will be on a class of neural circuits involving cortex and the subcortical basal ganglia, which are a set of closely connected neural structures deep within your brain. Contrary to the modular approach of modern-day phrenology, the cortical and subcortical elements that make up these circuits are not domain-specific: they make it possible for you to walk, focus on a task, talk, form or understand a sentence, or decide what you’ll have for dinner or do with the rest of your life. In short, they are involved in motor control, language, thinking, and emotion. Hundreds of independent studies over the course of more than thirty years have established the local operations performed in these circuits, some of the aspects of behavior that they regulate, and why they differ from similar circuits in our close primate relatives. It has become apparent that they have a singular role in enhancing associative learning and cognitive flexibility. As you will see, the absence of modularity (that is, of neural structures committed to a single aspect of human behavior) reflects their evolutionary history.

The basal ganglia evolved eons ago in anurans, the order of animals that includes present-day frogs (Marin, Smeets, and Gonzalez, 1998). They control motor acts in frogs and continue to do so in human beings, but they also play a central role in human cognition. Earlier theories on how the human brain works assumed that subcortical structures, such as the basal ganglia, were involved solely with motor control or emotion. You can still read or hear reports on CNN or NPR that claim that the brain’s “pleasure center” is the basal ganglia. The cortex was supposed to control rational conduct, overriding subcortical emotional responses. Paul MacLean’s 1973 Triune brain theory, for example, suggested that disorders such as Tourette syndrome, which causes a person to uncontrollably grunt or utter profanities, reflected the cortex losing control over the “emotional” subcortical brain.

That’s not the case. Neural circuits that connect cortical areas with the basal ganglia and other subcortical structures do regulate emotion. However, other cortical-basal ganglia circuits make it possible to pay attention to a task. The ACC activity that was Sam Harris’s brain marker for religious belief is part of this circuit. Still other circuits link prefrontal medial ventrolateral cortex and other prefrontal cortical structures with the basal ganglia and posterior regions of the cortex. These circuits are involved in comprehending the meaning of a sentence, and the range of cognitive acts that fall under the term “executive control” (for example, Badre and Wagner, 2006; Heyder et al., 2004; Lehericy et al., 2004; Miller and Wallis, 2009; Monchi et al., 2001, 2006b, 2007; Postle, 2006; Simard et al., 2011). Executive control is, to me, an odd choice of words for designating cognitive acts such as changing the direction of a thought process, or keeping a stream of words in short-term memory to derive the meaning of a sentence, subtracting numbers, and performing other cognitive tasks. What may come to mind is a domineering boss telling everyone what they must do or be fired, but we’re stuck with the term.

Coming Attractions

It will become clear in the chapters that follow that apart from the size of the human brain, neural circuits involving cortex, basal ganglia, hippocampus, and other neural structures form the engine that drives human cognitive capabilities, including language. Genetic events that occurred in the last 200,000 to 500,000 years increased the efficiency of basal ganglia circuits that confer cognitive flexibility—the key to innovation and creativity. These mutations also enhanced human motor control capabilities, allowing us to talk.

Only humans can talk. Dogs can learn to comprehend the simple meanings of words; apes can learn to use manually signaled words to communicate and can understand simple sentences. Parrots can imitate some words and form simple requests, but no other animal can talk. Speech plays a central role in language, allowing us to transmit information to one another at a rapid rate, freeing our hands and gaze. We don’t have to look at each other while we communicate. In the course of human evolution, the demands of speech shaped the human tongue, neck, and head, and have compromised a basic aspect of survival. We can more easily choke to death when we eat, because our tongues have been shaped to enhance the clarity of human speech. This permits us to estimate when humans had brains that could take advantage of tongues optimized for talking at the cost of eating. These same brain mechanisms allow us to sing, dance, and carry out the odd acts that distinguish human behavior from that of other species, including chimpanzees, who are almost genetically identical to us.

But the genes dreamed up over coffee and cookies—or other substances—by evolutionary psychologists don’t exist. The historical record that shows how people really act, as well as the evidence that reaches our ears and eyes every day, has been ignored. When we, for example, compare the behavior of present-day Norwegians, Danes, and Icelanders with their Viking ancestors, it is evident that no moral gene exists or ever existed. Ethical, moral behavior is a product of cultural evolution. This stew of invented genes diverts our attention from real progress in understanding the interplay of culture and biology in shaping human behavior.

In brief, we will explore the neural and cultural bases of the human capacities that have transformed the world. We will examine the central claim of evolutionary psychology, that we are locked into predictable patterns of behavior that were fixed by genes shaped by the conditions of life hundreds of thousands of years ago. The human brain evolved in a way that enhances both cognitive flexibility and imitation, the qualities that shaped our capacity for innovation, other aspects of cognition, art, speech, language, and free will.