Brain Design by Rube Goldberg

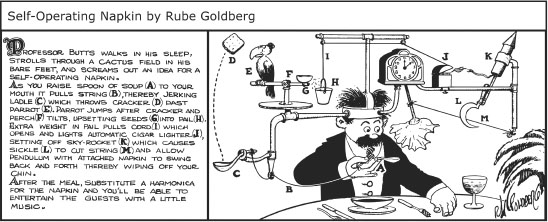

In my youth, the cartoonist Rube Goldberg “designed” machines in the spirit of the giant motorized tricycle glimpsed on the road to New Delhi—odd assemblages that achieved an action by a set of ill-sorted steps that no one possessed of design logic would elect to build (figure 2.1).

The functional organization of the human brain doesn’t include a parrot, but it is just as improbable. Neural structures that date back to the age of dinosaurs have been adapted in a manner as weird as any of Rube Goldberg’s machines. As I pointed out in chapter 1, our brains don’t have the simple, neat organization early nineteenth-century phrenologists proposed, or the modular structure that evolutionary psychologists push. The functional architecture of the human brain instead reflects the opportunistic logic of evolution.

Neophrenology

You may be puzzled because earlier I stated that phrenology, which claimed that our brains contained language organs, morality organs, and math organs, was declared dead about 1850. But most explanations for why we have the ability to talk, understand words, and compose and understand sentences all come from what amounts to a phrenological model; we supposedly have two parts of the cortex, Broca’s and Wernicke’s areas, that constitute the brain’s “language organ.”

Figure 2.1. As you raise spoon of soup (A) to your mouth, it pulls string (B), thereby jerking ladle (C), which throws cracker (D) past parrot (E). Parrot jumps after cracker, and perch (F) tilts, upsetting seeds (G) into pail (H). Extra weight in pail pulls cord (I), which opens and lights automatic cigar lighter (J), setting off skyrocket (K), which causes sickle (L) to cut string (M) and allow pendulum with attached napkin to swing back and forth, thereby wiping off your chin. Artwork Copyright © Rube Goldberg Inc. All Rights Reserved. RUBE GOLDBERG ® is a registered trademark of Rube Goldberg Inc. All materials used with permission.

My only previous reference to Broca’s area was that it is not the brain’s language organ. I didn’t even mention Wernicke’s area. So why do you keep reading or being told that Broca’s and Wernicke’s areas are the brain bases of human language? Broca’s area is often the touchstone for determining which of our distant fossilized hominin ancestors (the term “hominin” signifies a primate in or close to the line of human descent) talked. Televised documentaries on human evolution may feature someone in a white coat holding up a fossil skull and pointing to where they believe they can detect traces of Broca’s area, supposedly showing that the fossil could have talked. So how can they all be wrong?

As the previous chapter pointed out, the basic premise of phrenology is that a discrete part of the brain, in itself, is the neural basis for an observable aspect of human behavior such as mathematical ability, morality, or language. Early nineteenth-century technology precluded examining a living person’s brain to determine the boundaries of the cortical area that was the “seat” of language, or art, or piety, so phrenologists instead measured the area of a discrete part of the skull, which reflected the size of the area of the brain beneath it. The seats were domain-specific. The part of the skull conferring mathematical ability had nothing to do with whether someone was linguistically gifted or not. Phrenology collapsed precisely because it was a testable—hence, scientific—theory.

Phrenology Resuscitated—The Broca-Wernicke Language Theory

But phrenology was resuscitated in 1861 when Paul Broca published one of the first studies of a neural “experiment-in-nature.” If you take as a given the phrenological model (which presupposes that brains are machines) in which a given part is the “seat” of some observable aspect of behavior such as talking, then taking out that part should preclude talking. This clearly was a “forbidden” experiment. Broca instead initially studied two patients who had extreme difficulties talking after brain damage inflicted by strokes. Similar experiments-in-nature became the primary source of information on how brains might work until the development 100 years later of neuroimaging techniques, like fMRI and PET and EEG (electroencephalography, recording electrical activity on the scalp that reflects neural activity).

Broca’s study of patient “Tan” is the better-known experiment-in-nature. Tan, whose real name was Leborgne, was a 51-year-old man who had a series of neurological problems. Leborgne could control the intonation, the “melody” of speech. (A primer on how speech is produced follows shortly.) However, Leborgne could not produce any recognizable words other than the syllable “tan,” and thus was described as patient “Tan.” According to phrenology, the “seat” of language, the part of the brain that controlled language, was between the eyes. Broca didn’t question the underlying premise of phrenology—that a specific part of the brain was the seat of language, but he thought that the seat of language was located elsewhere.

The patient died soon after Broca saw him. An autopsy showed damage to the surface of the left frontal lobe of the patient’s brain. However, Broca limited his observations to the surface of Leborgne’s brain instead of sectioning it and systematically determining the nature and extent of damage. A few months later, Broca examined a second patient who could speak only five words after suffering a stroke. An autopsy showed brain damage at approximately the same part of the surface of the brain as for Leborgne. Broca, following in the footsteps of phrenology, localized language to the part of the brain that has since been termed Broca’s area.

However, the actual pattern of damage to both patients’ brains does not support Broca’s phrenological theory. The brains of both patients had been carefully preserved in alcohol, and 140 years afterward, high-resolution MRIs were performed (Dronkers et al., 2007). The MRIs showed that damage was not limited to the part of the brain identified by Broca as the seat of language. In both patients, massive damage had occurred to the neural circuits that link cortex with other parts of the human brain. The pattern of damage involved the structures that make up the basal ganglia, other subcortical structures, and the pathways that connect cortical and subcortical neural structures. The MRIs also showed that the brain structure commonly labeled Broca’s area (the left inferior frontal gyrus, Brodmann’s areas 44 and 45; see figures 1.2 and 1.3) wasn’t the cortical area that was damaged in Paul Broca’s first two patients. Parts of the brain in front of (anterior to) it were damaged instead.

In 1874, Karl Wernicke studied a stroke patient who had difficulty comprehending speech and had damage to the posterior temporal region of the cortex. Wernicke, in the spirit of phrenology, decided that this area was the brain’s speech comprehension organ. Since spoken language entails both comprehending and producing speech, Lichtheim in 1885 proposed a cortical pathway linking Broca’s and Wernicke’s areas. Thus Broca’s and Wernicke’s cortical areas became the neural bases of human language, supposedly devoted to language and language alone. Doubts were expressed soon afterward about the localization of language to a single part of the brain. Marie (1926), for example, basing his conclusions on autopsies of stroke victims, proposed that Broca’s syndrome involved damage to the basal ganglia. However, new life was given to the Broca-Wernicke theory by Norman Geschwind in a 1970 paper published in the prestigious journal Science.

Why the Broca-Wernicke Theory Is Wrong

In the hundred years before Geschwind published his paper, postmortem autopsies were necessary to establish the pattern of brain damage that was responsible for “aphasia,” permanently losing some aspect of language. But just about the time that Geschwind’s paper appeared in print, CT scans—three-dimensional x-ray images of a living person’s brain—were coming into general clinical practice. Whereas autopsies of the brain are not common, thousands of CT scans of patients’ brains became available. It soon became clear that aphasia did not result from damage limited to these cortical “language areas.” The traditional Broca-Wernicke theory is wrong. The syndromes, the patterns of possible deficits, are real, but they do not derive from brain damage localized to these cortical areas. CT scans showed that patients recovered or had only minor problems when Broca’s and Wernicke’s areas were completely destroyed, so long as the subcortical structures of the brain were intact. Geschwind, who participated in these studies, revised his views on the viability of the Broca-Wernicke theory shortly before his untimely death.

Moreover, CT scans subsequently showed that the language deficits of Broca’s syndrome didn’t necessarily involve damage to Broca’s area. Damage to subcortical structures that supposedly had nothing to do with language, leaving Broca’s and Wernicke’s cortical areas intact, caused the classic signs and symptoms of aphasia—speech production deficits as well as difficulty comprehending the meaning of a sentence. Margaret Naeser and her colleagues in a 1982 research paper described the language problems of patients who had suffered strokes that spared cortex altogether, but selectively damaged the basal ganglia and pathways to it. Aphasia, permanent loss of language, occurs when the neural circuits linking cortical areas through the basal ganglia, thalamus, and other subcortical structures are damaged. The current position expressed by Stuss and Benson in their 1986 book directed at neurologists, The Frontal Lobes, is that aphasia never occurs absent subcortical damage.

The problem with the Broca-Wernicke theory and all variants of phrenology is that they fail to recognize the fact that brains are the product of the opportunistic illogic of evolution. As Dronkers and her colleagues note, the neural operations underlying speech and language “involve large networks of brain regions and connecting fibres.” Locating the site of brain damage is easy compared to determining whether it’s really the sole cause of a problem. For example, if you cut the wires that supply a high-voltage impulse to the spark plugs, your car won’t start. But no mechanic, probably no one having even a vague notion of how cars work, would think that the wires constituted the “seat” of starting. The wires are just one element of the complex circuits linking many parts. Each part performs a “local” operation that is necessary to have a functioning car. And as the troubleshooting section of your car’s service manual will point out, a defective part can cause many different, seemingly unrelated, problems. Why then does the Broca-Wernicke theory survive? Its survival probably hinges on its being a simple theory. TVs differ from radios in that they have extra parts. No radio has a visual display. Humans can do things that no chimpanzee can achieve. We, therefore, must have some different part in our brain. In that frame of reference, Broca’s area accounts for our being able to talk. And since the extra part is biologically specified, there must be a human language gene (perhaps a set of genes) that shaped Broca’s area. Similar arguments would point to our possessing moral genes, cheater-detector genes, and selfish genes.

Neural Circuits

My wife and I spent part of the summers of 1993 and 1994 in a remote region of Nepal close to its Tibetan border. We slept in a tent on the roof of the home of one of the rich men of the principal village of Mustang, Lo Manthang. Mustang is a culturally Tibetan part of Nepal that had been annexed by the Gorkha monarchy that created Nepal in the eighteenth century. Lo Manthang has two large fifteenth-century Tibetan Buddhist “gompas”—monastic temples whose inner walls were covered with intricate wall paintings. The paintings are among the few original surviving examples of that period. The paintings of other temples were defaced in Chinese-occupied Tibet, and we had been awarded a grant from the Getty Foundation to document the paintings. My photographic equipment, which included staging to reach some of the paintings that were 20 feet above the temple floor, had been loaded onto the backs of a string of ponies because there was no road to Lo Manthang. The ponies, descendants of the ponies that carried the Mongol warriors of Genghis Khan, were docilely led by our horseman, Nima Wangdi.

The photographs and text that resulted from our work in Mustang are on the Brown University website (http://dl.lib.brown.edu/BuddhistTempleArt) and on a DVD commissioned by the Getty Foundation. What we didn’t anticipate was that five years later, we would be waiting in a pediatric neurologist’s office at Rhode Island Hospital in Providence to hear his assessment of whether Nima Wangdi’s daughter would ever talk. Through a series of the improbable events that mark one’s life, we had brought his daughter, who had epileptic seizures, to Providence for medical treatment that was not available in Nepal. Lhakpa Dolma, a bright-eyed, alert five-year-old girl, also was having difficulty talking. The neurologist had examined the MRI of Lhakpa Dolma’s brain. He assured us that she would be able to talk because her “language organs,” the left cortical areas that were the sites of Broca’s and Wernicke’s areas, were intact.

Would that that had been the case! At age eighteen, Lhakpa Dolma still cannot talk; her cognitive ability has also deteriorated. So the short answer to whether Broca’s and Wernicke’s cortical areas constitute our brain’s language organ unfortunately is no. But the full answer, which the previous chapter touched on, is that neural circuits linking activity in many different parts of the brain control speech production, comprehend speech, understand or compose a meaningful sentence, and carry out the other acts and thoughts that in their totality constitute our “language faculty.” What was apparent in Dolma’s MRI was damage to the pathways connecting cortex and the basal ganglia.

Cortical-Basal Ganglia-Cortical Circuits

Diffusion tensor imaging (DTI), which can map out neural circuits in a noninvasive manner, has revealed hundreds of circuits in the human brain, including circuits that connect different parts of the cortex. The functional roles of some circuits have been teased out, but our knowledge is imperfect. That’s also the case for many of the local operations performed in the cortex. Decades of research have shown that various bits and pieces of visual processing are performed in different cortical areas. Some parts of the cortex seem to be sensitive to lines, others to angles, others to color, but what we see when we look at a cat is a cat, not a jumble of lines, angles, and colors. No one can tell you how the “local” operations, the bits and pieces of visual processing, are put together to form the image that you see. It’s as though we had a giant road map that didn’t show where any road went.

But over the past thirty years, the destinations of a few circuits have become evident. The discovery process began with experiments-in-nature that focused on aphasia—Paul Broca’s original experiment-in-nature. Over the course of the twentieth century, it gradually became apparent that patients who had suffered brain damage that resulted in aphasia, permanent loss of some aspect of linguistic ability, had cognitive deficits. Kurt Goldstein, in his 1948 book, based on observations of patients over the course of decades, also characterized aphasia as a loss of the “abstract capacity.” Aphasic patients lost cognitive flexibility, pointing to neural circuits that regulated both language and cognition. Other experiments-in-nature, studying the effects of Parkinson disease and instances of brain damage localized to the basal ganglia, revealed a class of circuits that link cortical areas and the basal ganglia. These cortical-basal ganglia-cortical neural circuits play a central role in regulating motor control (including speech), comprehending the meaning of words and sentences, thinking, as well as emotional control.

Imagine a Martian scientist faced with the problem of determining how your car worked. He could systematically destroy individual parts and keep track of what happened. But most people would object to this practice, so the Martian could fall back to studying experiments-in-nature, cars that had broken down. Other experiments-in-nature, studying the effects of Parkinson disease and other instances of brain damage, filled in more blank spots. Neuroimaging studies of both neurologically intact “normal” subjects and patients are filling in some of the questions about precisely what particular neural structures do, as well as posing new questions.

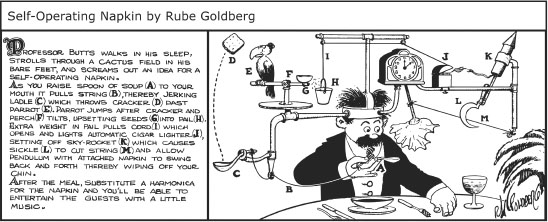

Neural circuits linking cortical areas through the basal ganglia provide the basis for many of the qualities that differentiate us from animals. The basal ganglia are buried deep within the human brain (figure 2.2). The Latin terminology, which attempted to describe the shape of the basal ganglia, was coined centuries ago. The caudate nucleus and putamen, two contiguous structures, are the principal inputs to the basal ganglia. The putamen receives a stream of sensory information from other parts of the brain. It also monitors the completion of motor and cognitive acts. The caudate nucleus is active in a range of cognitive tasks. The globus pallidus, which is contiguous to the putamen, is the output structure for information. The information stream then is channeled to the thalamus and other subcortical structures.

Figure 2.2. Basal ganglia. The basal ganglia are located deep within the skull. The putamen and pallidum (another name for the globus pallidus) are contiguous and form the lentiform nucleus. The caudate nucleus and putamen form the striatum. Cortical-to-basal ganglia circuits are often termed cortical-striatal circuits. These circuits transfer information from the basal ganglia to the thalamus. Other subcortical structures closely connected to the basal ganglia are omitted in this sketch.

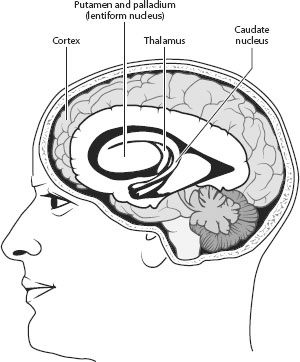

Figure 2.3 shows three circuits, omitting most of the details, including links to other subcortical structures and posterior cortical areas. It is based on Cummings’s 1993 review of clinical studies. Subsequent studies have confirmed its general conclusions and explored the neural bases of the behavioral deficits that Cummings reported. Cummings took into account the behavioral deficits of patients who had suffered strokes, comas, trauma, and Parkinson disease; the neural structures that were damaged or dysfunctional; and neural circuits mapped out by tracer studies of monkeys and other animals (for example, Alexander, DeLong, and Strick, 1986).

Conventional tracer techniques used on animals first mapped out circuits that connect various parts of the brain. These highly invasive techniques involve first injecting chemicals or viruses that form a “tag” into a specific neural structure. The tag essentially attaches itself to the electrochemical process by which neurons communicate with each other. Different tracer tags can propagate up or down a circuit’s pathways. If the objective is to trace the neural circuits that control tongue movements, a “back-propagating” tracer tag could be injected into the tongue to trace the circuit backward. A “forward-propagating” tracer tag injected into a neural structure could determine whether it was part of a circuit that ultimately controlled tongue movements. After an animal was allowed to live for a week or so to move the tracer through the neural circuit, it was “sacrificed,” that is, killed. The excised brain tissue then was stained with color couplers, similar to those used in conventional color film, that became attached to the tracer tag that had been transported through the neural circuit. The stained brain tissue, which showed the circuit in color, was visible after it was sliced in an apparatus that produced very thin sections and then viewed microscopically. Another invasive technique directly monitors brain activity by driving “microelectrodes”—exceedingly minute electrodes—into an animal’s brain to pick up the electrical signals that transmit information between neurons. Studies using multiple microelectrodes generally confirm the pathways mapped out by tracer studies (for example, Miller and Wilson, 2008). These techniques clearly cannot be used to map out neural circuits in human beings. Fortunately, noninvasive DTI confirms that humans have similar neural cortical-basal ganglia-cortical circuits (Lehericy et al., 2004).

Figure 2.3. Three cortical-basal ganglia-cortical circuits that regulate attention, motor control, cognition, and emotion in human beings (omitting many details, including links to other subcortical structures and posterior cortical areas). The diagrams are based on Cummings’s 1993 review of clinical studies. Subsequent studies confirm its general conclusions. Cummings took into account the behavioral deficits of patients who had suffered strokes, comas, trauma, and Parkinson disease; the neural structures that were damaged or dysfunctional; and the neural circuits mapped out by tracer studies of monkeys and other animals. The circuit from the anterior cingulate cortex involves the nucleus accumbens, which is also affected by alcohol and other substances.

The arrows connecting the labeled boxes in figure 2.3 track the flow of information from dorsolateral prefrontal cortex, lateral orbitofrontal cortex, and the anterior cingulate cortex (ACC). The anterior cingulate circuit, as chapter 1 noted, regulates attention as well as laryngeal phonation (the melody of speech). Studies of the effects of brain damage from strokes and trauma showed that damage anywhere along the ACC circuit could result in a patient’s becoming apathetic and/or mute. Virtually every neuroimaging (PET or fMRI) study that has ever been published shows ACC activity while subjects are paying attention to the task. The dorsolateral circuit is involved in cognitive tasks. Damage or dysfunction anywhere along this circuit (cortical or subcortical) and other circuits involving other prefrontal cortical areas, particularly ventrolateral prefrontal cortex, results in cognitive deficits ranging from being unable to understand the meaning of a sentence, to insisting on continuing to climb upward to the summit of Mount Everest when a blizzard is clearly approaching. The orbitofrontal circuit is involved in emotion, inhibition, and other aspects of behavior that define a person’s personality.

The neuroanatomical terminology shouldn’t put you off. For the most part, it simply specifies the location of each cortical area. Dorsolateral prefrontal cortex refers to the upper part of the side of the cortex. Ventrolateral prefrontal cortex is located below dorsolateral prefrontal cortex. Orbitofrontal refers to the prefrontal area immediately behind the eyes. Note that the same neural structure, the caudate, receives information that is channeled into the globus pallidus before connecting to the thalamus and then back to prefrontal cortex. Information is transferred from one group of neurons to another in these neural structures in anatomically independent subcircuits, though these subcircuits appear to be interconnected by a web of dendrites in the globus pallidus.

Local Operations of the Basal Ganglia

The basal ganglia, in themselves, are not the key to human language. They must work in concert with parts of the cortex that are involved with motor control, cognition, and emotional regulation. The local operations of the basal ganglia first became evident through the study and treatment of Parkinson disease. The immediate cause of Parkinson disease is degeneration of the substantia nigra, a subcortical structure that besides forming part of the basal ganglia circuitry also produces the neurotransmitter dopamine. Neurotransmitters are hormones produced in the brain that are necessary for particular neural structures to function. Dopamine is one of the neurotransmitters involved in running the basal ganglia, and dopamine depletion is generally the primary cause of the motor, cognitive, and emotional problems associated with Parkinson disease (Jellinger, 1990). Levodopa, a medication that augments the level of dopamine in a patient’s brain, and dopamine “agonists,” medications that intensify the effect of remaining dopamine, can ameliorate some of the deficits of Parkinson disease.

Parkinson disease was first identified by Dr. James Parkinson in An Essay on the Shaking Palsy in 1817, about 150 years before levodopa treatment came into general use. The disease’s motor problems can include tremor, muscular rigidity, slow movements, and an inability to carry out actions that require executing a series of internally guided sequential acts—walking, tying shoelaces, writing using a keyboard—most any learned activity. Patients generally do much better when they have an “external” model that they can copy. For example, patients who have a difficult time walking often are much better at imitating someone walking. These motor control problems stem from the failure of the basal ganglia’s “normal” operation as a “sequencing engine” that calls out cortically stored submovements and executes them. The term “pattern generator” often is used to describe the set of motor commands that constitute an element of a motor action, such as walking, picking up a cup, or talking.

A Primer on Speech Production

A brief explanation of how human speech is produced is in order at this point because some of the most striking effects of basal ganglia damage are apparent when speech is analyzed. Computer-implemented systems can provide detailed analyses of movement patterns that yield insights on basal ganglia function.

Talking is arguably the most complex aspect of motor control that everyone has to master, which explains why even subtle speech production deficits can serve as markers for basal ganglia dysfunction. Playing the violin or performing as a tightrope walker or tennis star undoubtedly requires the same or more precise degree of control, but everyone talks. It takes at least ten years, however, for normal children to learn to talk at adult rates (Smith, 1978). In part, children sound like children because their speech production is slow and full of errors.

A useful starting point in understanding what’s involved in talking is to think about the way that tinted sunglasses color the world. The tint of the sunglasses results from a “source” of light (electromagnetic energy) being “filtered” by the sunglasses’ colored lenses. The dye in the sunglasses’ lenses acts as a filter, allowing maximum light energy to pass through at specific frequencies that result in your seeing the world tinted yellow, green, or whatever lens color you selected. The sunglasses’ lenses don’t provide any light energy; the “source” of light energy is sunlight, which has equal energy across the range of electromagnetic frequencies that our visual system sees as white. If the sunglasses’ lenses remove light energy at high electromagnetic frequencies, the result is a reddish tint. If energy is removed at low frequencies, the result is a bluish tint.

Pipe organs work in much the same manner. The note that you hear when the organist presses a key is the product of the organ pipe acoustically filtering the source of sound energy produced by the air flowing through a constriction at the end of the organ pipe. The high airflow through the constriction produces acoustic energy across a wide range of frequencies, yielding “white” noise analogous to white light. The organ pipe reduces the amount of sound energy that can pass through it at most frequencies. The frequency at which maximum acoustic energy passes through the organ pipe is perceived as the musical note.
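The source-and-filter idea shared by the sunglasses and the organ pipe can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the frequencies and gain values are made-up numbers, and the names `apply_filter` and `peak_frequency` are hypothetical helpers rather than part of any acoustics library.

```python
def apply_filter(source_energy, filter_gain):
    """Scale the source energy at each frequency by the filter's gain there.

    Both arguments map frequency (Hz) to a number. Frequencies missing
    from the filter are treated as fully blocked (gain 0).
    """
    return {freq: energy * filter_gain.get(freq, 0.0)
            for freq, energy in source_energy.items()}


def peak_frequency(spectrum):
    """Return the frequency at which the most energy gets through."""
    return max(spectrum, key=spectrum.get)


# "White" source: equal energy at every frequency considered (illustrative values).
white_source = {110: 1.0, 220: 1.0, 440: 1.0, 880: 1.0}

# Hypothetical pipe: passes 440 Hz best and attenuates the rest (made-up gains).
pipe_gain = {110: 0.1, 220: 0.2, 440: 1.0, 880: 0.2}

output = apply_filter(white_source, pipe_gain)
print(peak_frequency(output))  # 440
```

The filter adds no energy of its own. It only decides how much of the source's energy survives at each frequency, which is the point of both analogies.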

Human speech is a bit more complex. The human larynx (often called the “voice-box”) generates a periodic source of energy for vowels and consonants, like [m]. The larynx is a complex bit of anatomy that sits on top of the trachea, the “windpipe” that leads upward from your lungs. It acts as a valve that can interrupt the flow of air from or into your lungs. The “vocal cords,” sometimes called “vocal folds,” act as a valve that opens the air passage when you breathe. When you talk, the vocal cords are moved closer together and are tensioned by the muscles of the larynx. The airflow out from the lungs then sets them into motion and they rapidly close and open, producing puffs of air that are the source of acoustic energy for “phonated” vowels and consonants, such as [m] and [v] of the words “ma” and “vat.” The rate, that is, the “fundamental frequency” (F0), at which the vocal cords open and close depends on the pressure of the air in your lungs, the tension placed on the vocal cords, and the mass of the vocal cords.

The average F0 determines the “pitch” of a speaker’s voice. The fundamental frequency of phonation for adult males, who have larger larynxes than most adult women, can vary from about 60 Hz to 200 Hz. Adult women and children can have fundamental frequencies ranging up to 500 Hz, sometimes higher. The unit “hertz” (Hz) simply refers to frequency, the number of events per second. The periodic, or almost periodic, phonation also has acoustic energy at the “harmonics,” integral multiples of the fundamental frequency of phonation. For example, if the F0 is 100 Hz, there will be acoustic energy at 200 Hz, 300 Hz, 400 Hz, 500 Hz, and so on. Depending on how abruptly the vocal cords open and close, the acoustic energy produced by phonation falls off at higher harmonics to a greater or lesser degree.
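The harmonic arithmetic can be written out as a short Python sketch. The first function simply lists integral multiples of F0. The second is an assumption added for illustration: it models the falloff at higher harmonics as a fixed number of decibels per octave, and the parameter name `tilt_db_per_octave` and the value 12 are hypothetical, not measurements from the text.

```python
import math


def harmonic_frequencies(f0_hz, count):
    """Return F0 and its integral multiples: F0, 2*F0, 3*F0, ..."""
    return [f0_hz * k for k in range(1, count + 1)]


def harmonic_levels_db(count, tilt_db_per_octave):
    """Level of each harmonic relative to the fundamental, in dB.

    Assumes (for illustration only) a constant falloff per octave;
    a larger tilt stands in for vocal cords that open and close
    less abruptly.
    """
    return [-tilt_db_per_octave * math.log2(k) for k in range(1, count + 1)]


freqs = harmonic_frequencies(100, 5)
levels = harmonic_levels_db(5, 12.0)
for freq, level in zip(freqs, levels):
    print(f"{freq} Hz: {level:.1f} dB")
```

With an F0 of 100 Hz, the listed frequencies are 100, 200, 300, 400, and 500 Hz, matching the example in the text.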

While the perceived pitch of a person’s voice is closely related to F0, vowels and consonants are largely determined by “formant frequencies”—the frequencies at which the airway above the larynx can let maximum acoustic energy through it by acting as an acoustic filter, similar to an organ pipe. The difference between an organ and human speech-producing anatomy is that we have one “pipe”—the airway above the larynx, usually termed the supralaryngeal vocal tract (SVT)—that continually changes its shape as we talk by moving our lips, tongue, jaw, soft palate (a flap that can close off our nose from the rest of the airway), and the vertical position of our larynx. The continually changing shape of the SVT changes its filtering characteristics, producing a continual change in the formant frequency pattern. We’ll return to this critical aspect of human speech, which yields its signal advantage over other vocal signals—a data transmission rate that is about ten times faster.

Speech Deficits of Broca’s Syndrome and Parkinson Disease

The computer-implemented speech analyses that Sheila Blumstein and her colleagues published in 1980 showed that the primary speech production deficit of Broca’s aphasia was errors in sequencing lip or tongue movements with phonation in words that began with the “stop” consonants [b], [p], [d], [t], [g], and [k]. Patients suffering from Broca’s aphasia would produce the word “bat” when they intended to say “pat.” I was aware of Sheila’s research because we had jointly written a textbook on speech physiology and speech analysis. We and our graduate students found similar speech motor control sequencing problems in Parkinson disease (Lieberman et al., 1992; Pickett, 1998; Hochstadt et al., 2006).

Eliza Doolittle’s Speech Lesson

Eliza Doolittle, who appears in George Bernard Shaw’s play Pygmalion and the movie My Fair Lady, is based on a real person. Henry Sweet, one of the leading British phoneticians of the nineteenth century, taught a Cockney girl to speak “proper” English. It is said that Sweet never received the honor of a knighthood because he presented the tutored, real-life, Eliza to Queen Victoria at a social event.

In the movie, Eliza is shown enunciating proper English phrases before a candle flame. The candle’s wavering flame served as a “burst detector” to mark the burst—the puff of air that happened when she opened her lips at the onset of a stop consonant. A strong burst would deflect the candle flame to a greater degree than a weaker burst. The burst for [b] should be less than [p] when the “voice-onset-times” that differentiate these consonants are correct, and so Eliza practiced speaking before a lit candle. She perhaps might have been rehearsing producing a mellifluous short-lag [d] and [b] for her future life when she requested her maidservant to “Please draw the bath.”

Stop consonants, as their name implies, are formed by closing off the SVT (stopping it up) and then abruptly releasing the closure. The stop consonants [b] and [p] of the words “bat” and “pat” are formed by the speaker’s lips closing off the SVT; the distinction between them rests on the time that elapses between the burst of sound that occurs when the lips open and the start of phonation. The interval between the burst and phonation for the short-lag [b] is less than 25 msec (1 msec = 1/1000 of a second). The interval for the long-lag [p] exceeds 25 msec. The larger [p] burst is a consequence of the long lag between the burst and phonation (cf. Lieberman and Blumstein, 1988). Leigh Lisker and Arthur Abramson in 1964 analyzed the stop consonants of languages around the world. They found that every language that they analyzed (sound spectrographs, not candles, were used) differentiated stop consonants by means of this time-lag, which they termed “voice onset time” (VOT). In English, VOT differences differentiate short-lag [b], [d], and [g] from long-lag [p], [t], and [k]. The stop consonants [t], [d], [g], and [k] (of the words “to,” “do,” “go,” and “come”) are formed by obstructing the SVT with the tongue. A third VOT category, “prevoicing,” which isn’t used in English, involves starting phonation before the stop consonant’s release-burst. Spanish, for example, uses prevoicing for the stop consonant of words beginning with the letters “b” and “d.”
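The three VOT categories can be summarized in a few lines of code. This is a minimal sketch, not the analysis procedure used in any of the studies cited: the function name and the example timings are hypothetical, and treating an interval of exactly 25 msec as long-lag is an assumption, since the text only says "less than" and "exceeds."

```python
SHORT_LAG_LIMIT_MS = 25.0  # boundary given in the text for English [b] versus [p]


def classify_vot(burst_ms, phonation_onset_ms):
    """Label a stop consonant by its voice onset time (VOT).

    VOT = time phonation starts minus time of the release burst (msec).
    A negative VOT means phonation began before the burst (prevoicing).
    Exactly 25 msec is treated as long-lag here, which is an assumption.
    """
    vot_ms = phonation_onset_ms - burst_ms
    if vot_ms < 0:
        return "prevoiced"
    if vot_ms < SHORT_LAG_LIMIT_MS:
        return "short-lag"
    return "long-lag"


if __name__ == "__main__":
    # Hypothetical timings, in msec from the start of a recording.
    print(classify_vot(100.0, 110.0))  # a [b]-like stop, 10 msec lag
    print(classify_vot(100.0, 160.0))  # a [p]-like stop, 60 msec lag
    print(classify_vot(100.0, 30.0))   # phonation precedes the burst
```

A speaker who intends “pat” but starts phonation too soon after the burst would land in the short-lag category, and a listener would hear “bat.”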

The Woman Who Suddenly Spoke Irish

As I listened to the messages recorded on my telephone one day in 1997, I learned that a case of “foreign accent syndrome” had been identified in Warwick, a suburb of Providence. A woman in her 40s, patient CM, had suddenly started to speak with a strong Irish accent when she came out of a coma. She wasn’t Irish, nor had she ever visited Ireland. An MRI of CM’s brain showed extensive bilateral damage to the putamen and caudate nucleus, the structures of the basal ganglia “striatum,” which receives inputs from both motor cortex and prefrontal cortex. The query was whether my lab was interested in analyzing CM’s speech, given our ongoing studies of Parkinson disease patients. We studied CM’s speech, as well as her linguistic and cognitive capacities. Whereas the dopamine decrements in Parkinson disease can have some effects on cortical function, the lesions in CM’s brain isolated the effects of basal ganglia dysfunction.

Our computer analysis showed absolutely no trace of an Irish accent. Instead, she had extreme problems coordinating and sequencing the motor acts involved in talking. She couldn’t regulate the air pressure in the lungs in synchrony with gestures that moved her tongue, lips, jaw, velum (which closes off the nose), and the muscles that control laryngeal phonation. VOT was extremely disrupted. Sudden changes in the amplitude of her speech randomly occurred; her voice would abruptly fall to a whisper and then become suddenly loud. Her vocal cords were phonating where they shouldn’t. The speech therapists probably were at their wits’ end and decided that she was speaking with an Irish accent because her speech was unlike any that they had previously heard.

Despite her ostensible foreign accent, CM could be understood. Her major problem was cognitive; her daily life was constrained by the fact that she couldn’t plan ahead or adjust to unanticipated events, however minor. She needed overt cues; her refrigerator door had a daily schedule that listed the routine tasks that she had to do throughout the week. Similar problems beset Parkinson disease patients, who have difficulty with routine tasks that require executing a sequence of internally guided motor acts, or planning ahead. External cues make the task easier. The most severe cognitive problem, which CM shared with Parkinson disease patients, was cognitive perseveration—the inability to change the direction of a thought process.

Cognitive Flexibility—Perseveration

In the 1980s, it became apparent that Parkinson disease has negative cognitive consequences. Cognitive inflexibility, characterized as “subcortical dementia,” occurred. Subcortical dementia is different in kind from Alzheimer’s, which primarily affects memory. It shows up as perseveration—continuing to act or think along one line, though circumstances dictate a change. The Odd-Man-Out (OMO) test was devised by Flowers and Robinson (1985) to assess cognitive flexibility in Parkinson disease.

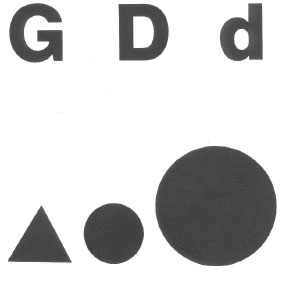

The test is straightforward. Healthy “control” subjects usually are puzzled why anyone would bother administering a test that is so simple. The person being tested is presented with a booklet that has two sets of 10 cards. Each card has three images printed on it. The first card might have a large triangle, a small triangle, and a large circle. The subject is asked to identify the “odd” image. There are two ways, “criteria,” that the subject can use to reach that decision—the subject can use either shape or size to decide which image is “odd.” If the criterion selected by the subject is size, the small triangle will be selected. The second card also has three images printed on it, an uppercase E, a lowercase e, and an uppercase A. The subject is asked to select the odd image using the same criterion, and is told whether his/her decision is correct or not after each trial. The subject starts with the first 10-card set; after s/he comes to card 10, the subject is asked “to do the sort another way” for the next 10-card set. And after 10 trials, the subject is again asked to re-sort the same packet of cards “the other way.” This entails returning to the criterion that s/he used to sort the first 10-card set, five minutes or so earlier. The procedure is repeated six or eight times. Figure 2.4 shows two Odd-Man-Out cards.
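The two sorting criteria can be illustrated with a short sketch. The card representation and the function name are hypothetical and are not part of the Flowers and Robinson test materials; the example uses the first card as described above, on which sorting by size picks out the small triangle and sorting by shape picks out the large circle.

```python
from collections import Counter


def odd_one_out(card, criterion):
    """Return the image on a three-image card that differs from the other
    two on the given criterion ("shape" or "size")."""
    values = [image[criterion] for image in card]
    counts = Counter(values)
    odd_values = [value for value, n in counts.items() if n == 1]
    if len(card) != 3 or len(odd_values) != 1:
        raise ValueError(f"card has no unique odd image by {criterion}")
    return next(image for image in card if image[criterion] == odd_values[0])


# The first card described in the text (representation is an assumption).
FIRST_CARD = [
    {"shape": "triangle", "size": "large"},
    {"shape": "triangle", "size": "small"},
    {"shape": "circle", "size": "large"},
]

if __name__ == "__main__":
    print(odd_one_out(FIRST_CARD, "size"))   # the small triangle
    print(odd_one_out(FIRST_CARD, "shape"))  # the large circle
```

Because each card gives a different answer under each criterion, a subject who keeps applying the old criterion after being asked to switch will reliably pick the wrong image.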

Figure 2.4. Two cards from the Odd-Man-Out test.

Control subjects generally have a few errors on the first 10-card sort and none thereafter. In contrast, Parkinson patients often make few or no errors on the first set of 10 cards, but they encounter problems on each shift of the sorting criterion—shifting from size to shape, or from shape to size. They even have high error rates when they return to the first 10-card set on which they made few or no errors. Error rates of 30 percent are typical on the OMO test for subjects who have basal ganglia damage. The problem rests in shifting the sorting criterion; Parkinson disease patients perseverate—they keep to the criterion that they have previously been using.

When CM, the woman who spoke Irish, was tested by Emily Pickett and her colleagues, she was at a complete loss whenever she was asked to change the sorting criterion. She stared helplessly at the test cards, incapable of changing the sorting criterion (Pickett et al., 1998). CM was not demented; she understood what she was supposed to do and knew that she was not able to perform this simple task. She repeatedly asked for explicit guidance. CM also had problems on a range of cognitive tasks that involve “executive control,” such as remembering images that she had viewed a few minutes earlier, and suppressing a response on the Stroop Test, in which colored inks are used to spell out color terms. When asked to identify the color of red or green ink that spelled out the word “blue,” she had difficulties. She also had high error rates on a sentence comprehension test on which children older than age eight years have no problems. Her error rates increased dramatically for sentences in which the syntax departed from “canonical” sentences that had no clauses, for example, “The girl kissed the boy.” CM had difficulty deciding who kissed whom when she heard the similar sentence in the passive voice, “The boy was kissed by the girl.” Problems comprehending distinctions in meaning conveyed by syntax also beset Parkinson disease patients (Lieberman et al., 1990, 1992; Grossman et al., 1992, 1993, 2001; Hochstadt et al., 2006).

Motor Control and Cognitive Flexibility

The speech motor sequencing problems apparent in Parkinson disease and instances of damage confined to the basal ganglia in experiments-in-nature such as the study of CM have a common cause, a degraded basal ganglia local operation. In 1994, the British neurosurgeons David Marsden and Jose Obeso, in their comprehensive review of the effects of neurosurgery aimed at ameliorating the motor control problems of Parkinson disease, identified two aspects of basal ganglia motor control. The first basal ganglia motor control function explains why Parkinson disease patients and Broca’s aphasics can have difficulty sequencing the exceedingly precise motor commands that are necessary to produce stop consonants:

First, their [the basal ganglia] normal routine activity may promote automatic execution of routine movement by facilitating the desired cortically driven movements and suppressing unwanted muscular activity. Secondly, they may be called into play to interrupt or alter such ongoing action in novel circumstances (Marsden and Obeso, 1994, p. 889).

Marsden and Obeso were aware of the cognitive deficits that can occur in Parkinson disease, and the dual roles of the basal ganglia in motor control led them to conclude that:

The basal ganglia are an elaborate machine, within the overall frontal lobe distributed system, that allow routine thought and action, but which respond to new circumstances to allow a change in direction of ideas and movement (Marsden and Obeso, 1994, p. 893).

As we shall see, this insight, which explained the cognitive inflexibility that occurs in Parkinson disease, is a key to understanding the neural basis and probable evolution of human cognitive flexibility and creativity.

Our Laboratory at Mount Everest

For almost 10 years, Mount Everest served as the site for an experiment-in-nature that further explored the role of cortical-to-basal ganglia circuits in motor control, language, and cognitive flexibility, as well as keeping one’s impulses under control (Lieberman et al., 1995, 2005; Lieberman, 2006). The basal ganglia are metabolically active and need a copious supply of oxygen to function properly. Basal ganglia function thus is degraded by the low oxygen content of air at the extreme altitudes reached on Himalayan peaks. The effects generally do not show up at the lower altitudes of the Alps and most other mountain ranges. Climbers ascending Everest and other 8,000-meter-high Himalayan peaks have exhibited Parkinson-like motor deficits and subcortical dementia. In some instances, they have collapsed and died. Autopsies and MRIs in these instances reveal bilateral lesions in the putamen and caudate nucleus (Chie et al., 2004; Swaminath et al., 2006). Cognitive deficits, similar to those seen in Parkinson disease, occur when climbers ascending Mount Everest are seemingly doing fine, but extreme motor and cognitive deficits resulting from basal ganglia dysfunction contributed to the death of one climber (Lieberman et al., 2005; Lieberman, 2006).

Only a handful of climbers have ever reached Everest’s summit in one long, sustained climb. The ascent almost always involves climbing up to a high “camp” and then descending to base camp, resting, climbing up to a higher camp and descending. After repeating this sequence and reaching successively higher camps over a period of weeks, climbers ultimately reach the highest camp at 8,000 meters before attempting to make a final push to the 8,848-meter-high summit. This allowed my research team to run a series of motor and cognitive tests on the same individual as he or she reached higher and higher altitudes and breathed “thinner air” that had lower and lower oxygen levels. We remained, nerd-like, at base camp and used radio links to administer linguistic and cognitive tests and record the climbers’ speech.

Climbing Everest also differs radically in another way from the manner in which mountains are usually climbed. Without the aid of the Sherpas, a Tibetan people who moved to the Everest region of Nepal 300 years ago, almost no one else would be able to reach Everest’s summit. Before any other climbers leave base camp, Sherpas string miles of “fixed” rope. A rope line is anchored to the slopes between camp 2 at 6,500 meters and camp 4 at 8,000 meters. Climbers then ascend and descend with the aid of the fixed rope line using clamping devices attached to their safety harnesses. As they push onward and upward on the rope line, the brightly colored down-filled garments of the long line of climbers on summit days (the few days on which the wind dies down so that they can reach the summit) from a distance look like a long red and yellow caterpillar moving upward.

We found correlated speech production deficits, deficits in comprehending the meaning of sentences that eight-year-old children can understand, and perseverative errors on the Odd-Man-Out test and Wisconsin Card Sorting Test (described later), which provides another measure of cognitive flexibility. The errors on these tests and degraded speech effects were similar to those evident in Parkinson disease and subjects like speaker CM. The climbers’ speech became slower, and error rates on these tests increased. We could predict cognitive and sentence-comprehension deficits by means of computer-implemented acoustic analysis of the climbers’ speech.

The effects were subtle in most instances. However, profound cognitive and motor dysfunction had a tragic outcome in one instance. At Everest base camp, the 5,300-meter altitude starting point, one 23-year-old male climber was error-free on the Odd-Man-Out test. His speech was normal when analyzed using a computer system that reveals subtle deficits that are not apparent to the unaided ear. Two days later, when he was tested by two-way radio shortly after he reached camp 2 at 6,500 meters, his performance had degenerated to a pathologic level. His OMO error rate exceeded 40 percent. The computer analysis revealed profound speech motor sequencing deficits similar to those of CM, the brain-damaged woman studied in Pickett et al. (1998). The climber was forcefully advised to descend. Tragically, his inability to change the direction of a thought process was not limited to the OMO test. His cognitive perseveration was general, and he kept to his original climbing plan, which called for him to ascend to camp 3 at 7,200 meters. Once there, he attempted to return to base camp. Motor sequencing problems then contributed to his death. Two “carabiners,” snap links tethered to each climber’s safety harness, link each climber to the fixed ropes. The carabiners’ function is to prevent the climber from sliding down the icy slopes. Two carabiners are necessary because the fixed rope is anchored into the slope every 5 meters or so by snow-stakes or ice screws. It’s necessary to first unsnap the downhill carabiner, move it past the anchor point, and refasten it to the rope. The uphill carabiner must remain in place above the anchor while the downhill carabiner is unlocked and moved. The uphill carabiner then can be moved past the anchor point after the downhill carabiner is refastened to the fixed rope. At some point during his descent, the oxygen-starved 23-year-old climber failed to carry out the correct carabiner sequence and fell to his death.

Cognitive inflexibility induced by low oxygen levels accounted for the disaster on Everest described in Jon Krakauer’s book, Into Thin Air. The expedition leaders, who had years of Himalayan experience, “perseverated,” keeping to their original climbing plan toward Everest’s summit though a major storm was approaching. They should instead have immediately descended. Similar cognitive inflexibility is thought to be the immediate cause of aviation disasters and near-disasters over the past century. Engines run out of fuel and planes crash because pilots fail to switch to full auxiliary fuel tanks. The 2008 PBS documentary Redtail Reborn about the World War II African-American Tuskegee fighter group re-created a near-fatal incident. The oxygen-mask of one pilot malfunctioned. He lost control and his plane went into a steep dive while he hallucinated, talking to a stranger who somehow was sitting astride the plane’s nose. He survived because the cockpit canopy was open and the oxygen-rich outside air at a lower altitude revived him before the plane hit the ground. Climbers on Mount Everest suffering from hypoxia also have reported conversing with hallucinatory companions.

Gage Is No Longer Gage—Disinhibition

Another effect of oxygen deficits became evident during the nine years that we tracked motor and cognitive performance in climbers on Mount Everest. Some climbers became disinhibited, losing control over impulsive behavior. This tended to occur in climbers who had spent lots of time at altitudes above 6,500 meters, or had attempted the climb without ever using supplementary oxygen. Disinhibition approaching clinical levels, resulting from hypoxic disruption of basal ganglia activity on Everest, wasn’t surprising. The story of Phineas Gage is a staple of neuroscience texts. Gage was a nineteenth-century railway worker who survived after a three-foot-long iron rod, propelled by a blasting charge, passed through his brain. Gage’s miraculous survival was marred by a personality change—disinhibition. The iron rod destroyed Gage’s orbitofrontal prefrontal cortex. Dr. Harlow, the physician who attended Gage, noted the change:

He is fitful, irreverent, indulging at times in the grossest profanity (which was not previously his custom), manifesting but little deference for his fellows, impatient of restraint or advice when it conflicts with his desires, at times pertinaciously obstinate. . . . Previous to his injury, although untrained in the schools, he possessed a well-balanced mind, and was looked upon by those who knew him as a shrewd, smart businessman, very energetic and persistent in executing all his plans of operation. In this regard his mind was radically changed, so decidedly that his friends and acquaintances said he was “no longer Gage” (Harlow, 1868, p. 342).

The disinhibited Everest climbers we observed acted impulsively and talked in a manner that approached the clinical cases reviewed by Cummings (1993) for disruption to circuits linking the basal ganglia and orbitofrontal prefrontal cortex.

Executive Control

The mechanisms involved in treating Parkinson disease using levodopa are not fully understood. After a Parkinson disease patient is granted a temporary levodopa “holiday,” the drug often becomes ineffective. When it works, many Parkinson disease patients respond well over the course of an entire day. In other “fluctuating” patients, the effects of medication rapidly wear off. In some circumstances, patients are taken off levodopa to assess the state of Parkinson disease, unmasked by medication. This sensitivity to medication creates an opportunity for exploring the effects of basal ganglia dysfunction, since cortical function, for the most part, depends on different neurotransmitters. The same person, “on” or “off” medication, can function as his or her own “control” in experiments that systematically test motor and cognitive abilities. Trevor Robbins’s research group at Cambridge University in England, in a series of elegant experiments (for example, Lange et al., 1992), showed that medicated Parkinson disease patients can perform at levels close to unimpaired normal controls on a range of cognitive “executive control” tasks. As chapter 1 noted, executive control encompasses tasks such as problem solving, decision-making, visual tracking, and tasks involving temporary, short-term, working memory as well as cognitive flexibility.

Working memory refers to the ability to hold and act on information that is not directly present in the environment. In experiments that date back to the 1920s, monkeys were first trained to push a button positioned at a specific location on a control board to obtain food. They could look at the button during the training period. When the control board was obscured, the monkeys then were for a short period still able to obtain their food reward by poking at the same position on a blank panel. Baddeley and Hitch (1974) pointed out that many of the tasks that constitute human linguistic and cognitive capabilities involve working memory. Mentally adding or subtracting numbers, for example, involves keeping at least one number in working memory. Sorting out images according to a desired shape or color involves retaining the desired “target” shape or color in memory. Comprehending the meaning of a spoken sentence involves keeping the words in working memory because the sentence’s meaning is not clear unless you take account of all the words. Baddeley and Hitch posited an “executive” mental capacity that directs the cognitive acts involved in performing these tasks. Hence the term “executive control” came into being to refer to these tasks.

It was first thought that the short-term “working memory” that enabled the monkeys to perform this task involved temporarily storing information in prefrontal cortex, because neurons there were active during the time interval in which a monkey could perform the task. Current studies suggest that working memory does not actually entail storing information in prefrontal cortex. The details of how this selection process works and what cortical structures are involved are still a work in progress, but data from many neuroimaging studies (for example, Postle, 2006; Badre and Wagner, 2006; Miller and Wallis, 2009) indicate that ventrolateral, dorsolateral, and other prefrontal cortical areas in neural circuits involving the basal ganglia direct attention to information stored in the brain systems that directly learn, store, and execute concepts and actions. The information then is used to carry out tasks involving executive control, such as comprehending the meaning of a sentence (Kotz et al., 2003), mental arithmetic (Wang et al., 2006), and selecting words according to their meaning or sound structure (Simard et al., 2011). My laboratory, working with Dr. Joseph Friedman, a Parkinson disease specialist, showed that Parkinson disease patients had difficulty comprehending sentences where distinctions in meaning conveyed by syntax involve holding words in working memory (Lieberman et al., 1990, 1992; Hochstadt et al., 2006). The findings have been replicated in other laboratories (for example, Grossman et al., 2001, 2002; Natsopoulos et al., 1993).

Neuroimaging

Although there is sometimes a tendency toward reductionist thinking in fMRI and PET neuroimaging experiments (locating the “center” of morality or syntax, and so on), the vast majority are advancing our understanding of how human brains work. Oury Monchi’s research group in Montreal, which is one of the world’s centers of research on the brain, has separated out some of the critical functions performed by the structures that form the basal ganglia. The Monchi research group in a sequence of fMRI imaging experiments has teased apart some of the local operations that yield human cognitive flexibility using the Wisconsin Card Sorting Test (WCST), which is the gold standard for assessing cognitive flexibility. The conventional WCST uses a set of 18 cards and 4 “reference” cards. Each test card has pictures of one of four shapes—circles, stars, triangles, and plus signs (+)—printed in one of four colors. There can be one, two, three, or four circles, stars, triangles, or plus signs on a card. The subjects have to match each test card to one of the four reference cards, for example, a card with one red triangle, a card with two green stars, a card with three yellow plus signs, a card with four green circles. A typical test card might have four yellow stars printed on it. The subject isn’t told how she or he should sort the test cards. The subject starts out by making a sort and then is informed whether the sort was “correct” or not.

For example, starting with number and matching the test card to the reference card that has four green circles on it would be incorrect if the person running the session wanted to start with color. The subject in this instance would be told that the sort was “wrong” and have to continue to make sorts until s/he matched to the color on one of the four reference cards, when s/he would be told that the sort was “correct.” The subject then would continue to match by color, receiving a “correct” response until the sorting criterion was again changed by the test administrator. After achieving a correct response to the new criterion, for example, number, the subject would then receive “positive feedback”—being informed that the sort was correct—and would continue until the sorting criterion changed again. The WCST has been in use since the 1960s to study the cognitive role of frontal cortical activity in humans and shows whether cognitive flexibility is degraded when subjects have difficulty changing the sorting criterion, or learning a new sorting criterion, as well as maintaining a cognitive criterion. The WCST also can be used to determine the specific roles of basal ganglia structures in these tasks.
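The feedback loop just described can be sketched in code. The reference cards and the test card are the examples given in the description of the test; the `Card` type, the function names, and the simplified definition of a perseverative error (a sort that still follows the old criterion and is wrong under the new one) are assumptions made for illustration and are not the clinical scoring rules.

```python
from typing import NamedTuple


class Card(NamedTuple):
    number: int
    color: str
    shape: str


# The four reference cards given as examples in the text.
REFERENCE_CARDS = [
    Card(1, "red", "triangle"),
    Card(2, "green", "star"),
    Card(3, "yellow", "plus"),
    Card(4, "green", "circle"),
]


def feedback(test_card, chosen_reference, rule):
    """Return "correct" if the chosen reference card matches the test card
    on the administrator's current rule ("number", "color", or "shape")."""
    same = getattr(test_card, rule) == getattr(chosen_reference, rule)
    return "correct" if same else "wrong"


def count_perseverative_errors(trials, old_rule, new_rule):
    """Count sorts made after a rule change that still follow the old rule
    and are wrong under the new one (a simplified, assumed definition)."""
    errors = 0
    for test_card, chosen in trials:
        follows_old = getattr(test_card, old_rule) == getattr(chosen, old_rule)
        if follows_old and feedback(test_card, chosen, new_rule) == "wrong":
            errors += 1
    return errors


if __name__ == "__main__":
    test_card = Card(4, "yellow", "star")
    four_green_circles = REFERENCE_CARDS[3]
    three_yellow_plus = REFERENCE_CARDS[2]
    # Matching by number while the administrator wants color is "wrong".
    print(feedback(test_card, four_green_circles, "color"))
    print(feedback(test_card, three_yellow_plus, "color"))
    # After a shift from color to number, a sort by color is perseverative.
    trials = [(test_card, three_yellow_plus), (test_card, four_green_circles)]
    print(count_perseverative_errors(trials, "color", "number"))
```

The subject never sees the rule itself, only the “correct” or “wrong” response, which is why the test exposes difficulty in abandoning a criterion that has stopped working.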

The Monchi et al. (2001) fMRI study reported the involvement of a cortical-basal ganglia loop involving the ventrolateral prefrontal cortex, the caudate nucleus, and the thalamus when a subject received “negative” feedback, indicating the need to plan a cognitive set-shift by looking for an alternative rule-sorting criterion. Another cortical-striatal loop, including the posterior prefrontal cortex and the putamen, was active during the execution of a set-shift—that is, when applying the new sorting criterion for the first time. Dorsolateral prefrontal cortex was involved whenever subjects received any feedback, positive or negative, as they performed card sorts, to apparently monitor whether their responses were consistent with the chosen criterion. In another fMRI study, Monchi et al. (2006a) showed that ventrolateral prefrontal cortex is specifically active in planning ahead when a subject has to compare the card that is to be selected and the criterion. This study confirmed that the caudate nucleus uses this information when any novel action needs to be planned.

The role of the caudate nucleus, putamen, and the subthalamic nucleus, and other subcortical structures involved in these circuits (not shown in the simplified 1993 Cummings diagram; cf. Alexander et al. for a fuller picture) became clearer in this study and others by the same group (Monchi et al., 2006a, 2006b; François-Brosseau et al., 2009; Provost et al., 2011). The results of these studies point to the caudate nucleus being involved in the selection and planning, and the putamen in the execution of a self-generated action among competitive alternatives. Neither was active when the information for shifting to an alternative was implicitly given in the task. In contrast, the subthalamic nucleus (another basal ganglia structure) was involved only when a new motor action was required, whether planned or not.

Other neuroimaging studies confirm the role of dopamine and the basal ganglia in cognitive flexibility. When early-stage Parkinson patients who have low dopamine levels were compared with healthy control subjects in another study, a pattern of reduced activity in both dorsolateral prefrontal cortex and left ventrolateral prefrontal cortex was apparent in “executive control” tasks (Monchi et al., 2007). Parts of parietal and temporal cortex, posterior parts of the brain that these cortical-basal ganglia circuits connect to prefrontal cortex, also showed reduced activity compared to healthy subjects when the putamen or caudate nucleus of the basal ganglia were required for the task.

Cortical-Basal Ganglia Circuits Are Not Domain-Specific

As noted earlier, the local operations performed in a particular neural structure aren’t necessarily domain-specific—devoted to one task and one task alone. That is clearly the case for the basal ganglia. Independent neuroimaging studies have reached similar conclusions concerning the role of specific areas of the prefrontal cortex and basal ganglia in a range of cognitive tasks. Cools et al. (2008), using PET imaging, showed that working memory tasks involving recalling digits, words, images, reading, and so on all correlated with basal ganglia dopamine levels. Hazy et al. (2006) showed that cortical-basal ganglia circuits integrate activity in prefrontal cortex, basal ganglia, and the subcortical hippocampus in these tasks, which accounts for the higher basal ganglia dopamine levels that occurred in these tasks. Wang et al. (2005) found increased activity in left ventrolateral prefrontal cortex and the basal ganglia as task difficulty increased during a mental arithmetic task. Kotz et al. (2003), using event-related fMRI, found bilateral ventrolateral prefrontal cortical and basal ganglia activity in tasks that involved either comprehending the meaning of a spoken sentence or the emotion conveyed by the speaker’s voice. These studies confirm the conclusions of Duncan and Owen, who reviewed the findings of neuroimaging papers published before 2000. They concluded that mid-ventrolateral and dorsolateral prefrontal cortex, as well as the anterior cingulate cortex, are active in virtually every cognitive task involving executive control. There may be some neural structures devoted strictly to language, but that is becoming less and less likely.

It also is becoming evident that the cortical to basal ganglia circuits that we have been discussing are not specific to cognition, insofar as they are involved in regulating emotion. But are there specific circuits, constituting “modules,” that are domain-specific? fMRI studies using variations on the Wisconsin Card Sorting Test suggest that the observed neural activity in the cortical-basal ganglia circuits that carry out this task is not domain-specific—limited to sorting out visual symbols. France Simard and her colleagues in their 2011 study had subjects match words according to their meaning (semantics), their phonetic similarity (roughly their spelling), and whether they rhymed (the syllable’s onset sounds). The same activation patterns occurred (involving ventrolateral prefrontal cortex, dorsolateral prefrontal cortex, caudate nucleus, and putamen) as were observed when sorting images. Additional cortical areas involved with sound perception also were activated. The critical point was an identical pattern of prefrontal cortex to basal ganglia circuit activity while shifting cognitive criteria in sorting tasks involving either visual or linguistic criteria.

The local operations performed in the thalamus, which has many distinct areas, are not clear, but it has been apparent for decades that lesions in thalamus, particularly in areas that form part of the circuits to the basal ganglia, can result in speech motor, word-finding, and comprehension deficits (Mateer and Ojemann, 1983). The surgical procedures used to treat Parkinson disease before levodopa was available entailed producing deliberate unilateral or bilateral lesions in the basal ganglia circuitry that projects to the thalamus. Similar surgical procedures directed by MRIs and electronic stimulators implanted in basal ganglia, thalamus, and other nearby subcortical structures are again being used to treat Parkinson disease. In some instances, especially with bilateral lesions of the globus pallidus (Scott et al., 2002), cognitive deficits occur. In other instances, no cognitive deficits are apparent. I saw a film of a young boy who had been unable to stand upright or walk, running after surgery that caused bilateral lesions in globus pallidus; no cognitive deficits were apparent. As Marsden and Obeso (1994) pointed out, our knowledge of neural circuitry and local neural operations remains imperfect.

So You Want to Be a Samurai, Play the Piano, or Understand German

The basal ganglia have yet another role that isn’t domain-specific: associative learning and “automatization.” Many of the tasks that we carry out throughout life involve the formation of “matrisomes,” groups of neurons in motor cortex that control the muscles that carry out a learned task rapidly, without conscious thought. The data of an fMRI study aimed at showing microcortical areas in individual subjects specific to understanding sentences and words (the experimental protocol didn’t achieve that end) suggest that a similar process forms “cognitive” and “linguistic” matrisomes in prefrontal cortex (Fedorenko et al., 2011).

One of the movies that spoke to my wife and me in the late 1970s was the Japanese film Yojimbo. We were in the midst of a legal battle against the overwhelming resources of the University of Connecticut. Marcia was on the front line of the feminist struggle for equality. She had been denied tenure because she had pushed for equal access to UConn’s athletic facilities for women. In Yojimbo, Toshiro Mifune, in his role of a wandering Samurai, defeated a villainous band. We needed a Yojimbo. We viewed The Hidden Fortress, The Throne of Blood, and other Japanese slice-’em-ups. The precise, blazingly fast Samurai sword cuts were incredible. How did the Samurai do it?

The path to mastering the Samurai sword was laid out in 1645 by Miyamoto Musashi in The Book of the Five Rings. Musashi’s lesson plan applies with equal force to talking, playing the piano, touch-typing, and the complex protocols that mark human culture, including learning the linguistic “rules” of syntax and word-formation. If you’ve successfully taught someone how to drive a car with a stick shift, you’ve probably followed Musashi’s formula. To master the Samurai sword, or gearshift and clutch, you must slowly perform the action, repeating it again and again. At first, you’ll have to consciously think about each step. But at some point, you’ll suddenly realize that you are not consciously aware of the steps involved and can go on to thinking about other things. Research started in the 1970s shows that many neural structures besides motor cortex are active while you are engaged in learning the task. However, when the task becomes automatic, most of the neural structures that apparently were thrown into action to learn the task are no longer active. They constitute a sort of “temp-help” that facilitates learning the task; they’re no longer needed (cf. Sanes et al., 1999). And at the point where an action becomes automatic, “matrisomes,” populations of neurons, are formed in motor cortex that code the entire action. They code the submovements, the motor acts necessary to carry out the task automatically. Marsden and Obeso (1994) correctly pointed out that the basal ganglia sequentially call out the submovements stored in motor cortex when we walk, talk, or perform any motor act.

Learning Anything

Studies of motor learning have revealed another local basal ganglia operation, facilitating a reward-based associative learning process, which makes it even clearer that the local operations of the basal ganglia are not domain-specific. Mirenowicz and Schultz (1996) monitored dopamine-activated neurons in the basal ganglia while monkeys learned how to obtain a juice reward by pushing a button. The neurons were activated when the monkeys were rewarded. When MPTP, a neurotoxin that degrades the dopaminergic basal ganglia activity, was administered, the monkeys were unable to obtain their juice reward. When the monkeys were given dopamine “agonists,” used to treat Parkinson disease by restoring basal ganglia activity, the monkeys again pressed the buttons to get the juice. Using somewhat different procedures, Graybiel (1995) replicated these findings. Her studies show that the basal ganglia play an essential role in learning both motor and cognitive acts.

The neural network that makes up the basal ganglia involves two integrated subsystems. A “fast” system that involves the neurotransmitters glutamate and GABA connects the cortex with the basal ganglia’s input (the striatum, which includes the putamen and caudate nucleus) and the basal ganglia’s output structures. A second subsystem arising from the midbrain makes use of the neurotransmitters dopamine and acetylcholine in “interneurons” that connect neurons within the basal ganglia (Bar-Gad and Bergman, 2001). The neurons of these subsystems are among the elements that are the brain’s memory traces of the relationships formed through associative learning. In an experiment that used classic Pavlovian techniques, Mati Joshua and colleagues (2008) studied neuronal basal ganglia activity in monkeys after they learned to perform tasks that resulted in either a “reward” (fruit juice) or an “aversive stimulus” (a puff of air directed on their face). The experimental technique involved placing microelectrodes into the monkeys’ brains. Increased activity in dopaminergic basal ganglia neurons coded the probability that a reward would be achieved. This explains the results of degraded dopamine activity noted by Mirenowicz and Schultz as well as clinical observations of Parkinson disease patients, who show less facility in learning tasks that would result in a pleasing outcome. In contrast, increased activity occurs in the monkey’s interneurons when an aversive outcome is anticipated (Joshua et al., 2008). Wael Asaad and Emad Eskandar (2011) monitored neurons in monkeys as they performed trial-and-error learning. They found neurons that coded the monkey’s expectations of potential reward (juice) or aversive puffs of air in both the caudate nucleus of the basal ganglia and the prefrontal cortical areas that are linked to the caudate.
These coding processes allow monkeys to learn to perform long sequences of actions that have “selectional constraints.” At each step of the sequence, the monkey must learn to perform a particular act and choose not to perform other acts if he is to achieve his goal (a food reward). The monkey thereby learns to perform a series of acts that, if we were to use linguistic terminology, has an action syntax.
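
The idea that neural activity can come to code the probability of a reward can be illustrated with a toy model. The sketch below uses the Rescorla-Wagner delta rule, a standard textbook model of associative learning; it is not the analysis used in any of the studies cited above, and the function name, learning rate, and trial counts are illustrative assumptions. An expectation is nudged toward each outcome by a fraction of the prediction error, so that over many trials it drifts toward the underlying probability of reward.

```python
import random


def learn_reward_expectation(reward_probability, trials=5000,
                             learning_rate=0.02, seed=0):
    """Estimate how often a cue is followed by reward (hypothetical toy model).

    Uses a delta-rule update: the expectation moves toward each observed
    outcome by learning_rate times the prediction error.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    expectation = 0.0          # start with no expectation of reward
    for _ in range(trials):
        # Outcome of this trial: 1.0 for juice, 0.0 for no juice.
        reward = 1.0 if rng.random() < reward_probability else 0.0
        # Prediction error: what happened minus what was expected.
        prediction_error = reward - expectation
        expectation += learning_rate * prediction_error
    return expectation


if __name__ == "__main__":
    for p in (0.25, 0.5, 0.75):
        estimate = learn_reward_expectation(p)
        print(f"true probability {p:.2f} -> learned expectation {estimate:.2f}")
```

In this sketch the learned value plays the part that the text assigns to dopaminergic activity: it rises for cues that are usually rewarded and stays low for cues that rarely are. Setting the learning rate close to zero gives a loose analogy to degraded dopamine activity, since the expectation then barely changes however many trials are run.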

In short, these neurons code the expectations that guide associative learning, allowing animals to learn to perform complex linked sequences. In humans, similar processes would account for our learning complex grammatical “rules,” as well as the equally complex rules that guide our interactions with other people, other species, the conditions of daily life, and responses to novel situations.

Evie Fedorenko and her colleagues at MIT in their 2011 fMRI study attempted to identify locations in Broca’s area that they believe are innate and specific to comprehending sentences and words. However, these micro-areas probably are learned matrisomes that code the suite of neural operations that are necessary to comprehend a class of sentences. Automatized motor acts are controlled by matrisomes formed in motor cortex. Ongoing projects developing brain-controlled prostheses and speech-producing synthesizers for individuals who have lost limbs or have suffered profound muscle degeneration are using electrodes to tap into these matrisomes. There is no reason to believe that other cortical areas, including prefrontal cortex, engage any neural processes that differ from those carried out in motor cortex.

The studies noted earlier (a small subset of current studies) show that the outcomes of tasks learned by means of associative learning are coded by neurons in the basal ganglia and prefrontal cortical areas linked to basal ganglia. The pervasive presence of cortical malleability suggests that cognitive matrisomes that code the “rules” by which we comprehend sentences and the operations involved in mathematics, formal logic, and so on are also formed by means of associative learning, imitation, and other “general” cognitive processes. One of my students may hold the current world record for being the subject of fMRI studies. She is totally blind and “reads” using text-to-speech software on her computer. Her “visual” cortex responds to speech and other vocal signals; she has been the focus of fMRI studies directed at mapping out the cortical activation that enables her to recognize spoken words.

It is probable that we form cortical cognitive matrisomes, populations of neurons that code the semantic referents of words and the syntax of a language, as we learn it. The cognitive matrisomes would be formed by the process of automatization that we know takes place in motor cortex as we learn to tie our shoes, shift gears, or, for the students of Toshiro Mifune, master the Samurai sword.

But, as Miyamoto Musashi observed, automatization takes time. Learning to tie your shoes was a slow process. Learning to talk takes years; normal children don’t achieve the same speaking rate as adults until age 10 (Smith, 1978). The same time frame is necessary to learn syntax, contrary to the claim of linguists who have adopted the theoretical framework proposed by Noam Chomsky. We will return to the question of the “faculty of language” in the chapters that follow.