IN CONTEXT

Computer science

1906 US electrical engineer Lee De Forest invents the triode valve, the mainstay of early electronic computers.

1928 German mathematician David Hilbert formulates the “decision problem”, asking whether an algorithm could decide, for any logical statement given as input, whether that statement is provable.

1943 Valve-based Colossus computers, using some of Turing’s code-breaking ideas, begin work at Bletchley Park.

1945 US-based mathematician John von Neumann describes the basic logical structure, or architecture, of the modern stored-program computer.

1946 The first general-purpose electronic programmable computer, ENIAC, based partly on Turing’s concepts, is unveiled.

Imagine sorting 1,000 random numbers, for example 520, 74, 2395, 4, 999…, into ascending order. Some kind of automatic procedure could help. For instance:

A Compare the first pair of numbers.

B If the second number is lower, swap the numbers and go back to A. If it is the same or higher, go to C.

C Make the second number of the pair just compared the first of a new pair. If there is a next number, make it the second number of the pair and go to B. If there is no next number, finish.
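The A, B, C procedure translates almost line for line into code. The Python sketch below is one possible transcription; the function name `abc_sort` is hypothetical. Because step B returns to A after every swap, the procedure restarts from the first pair each time, so it is correct but slow.

```python
def abc_sort(numbers):
    """Return a new list sorted in ascending order by the A/B/C procedure."""
    items = list(numbers)  # work on a copy; the caller's list is untouched
    while True:
        swapped = False
        i = 0  # Step A: start with the first pair, items[0] and items[1]
        while i + 1 < len(items):
            # Step B: if the second number is lower, swap and go back to A.
            if items[i + 1] < items[i]:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
                break
            # Step C: the second number of this pair becomes the first of
            # the next pair, and the following number (if any) its second.
            i += 1
        if not swapped:
            # Every pair was in order and there is no next number: finish.
            return items


print(abc_sort([520, 74, 2395, 4, 999]))  # [4, 74, 520, 999, 2395]
```

In the worst case, such as a list in descending order, the number of comparisons grows roughly with the cube of the list's length, which is why practical programs use faster sorting algorithms.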

This set of instructions is a sequence known as an algorithm. It begins with a starting condition, or state; receives data, or input; carries out a finite number of steps; and yields a finished result, or output. The idea is familiar to any computer programmer today. It was first formalized in 1936, when British mathematician and logician Alan Turing conceived of the machines now known as Turing machines to carry out such procedures. His work was initially theoretical: an exercise in logic. He was interested in reducing a numerical task to its simplest, most basic, automatic form.

The a-machine

To help envisage the situation, Turing conceived a hypothetical machine. The “a-machine” (“a” for automatic) consisted of a long paper tape divided into squares, each holding one number, letter, or symbol, and a read/print head. The machine's instructions took the form of a table of rules. At each step, the head reads the symbol in the square it is scanning and, following the rule for that symbol and the machine's current state, either erases it and prints another or leaves it alone. It then moves one square to the left or right, enters its next state, and repeats the procedure. After each step the machine is in a new overall configuration: its state, the position of its head, and the sequence of symbols on the tape.
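A minimal Python sketch of such a machine, under stated assumptions: the tape is a dictionary from square positions to symbols, so it can grow in either direction (standing in for Turing's unbounded tape); a space marks a blank square; and the rule table maps (current state, symbol read) to (symbol to write, move, next state). The names `run_turing_machine` and `INCREMENT_RULES`, the step limit, and the example machine, which adds 1 to a binary number, are hypothetical and not taken from Turing's paper.

```python
BLANK = " "  # assumed blank symbol for squares that hold nothing

# Hypothetical rule table for adding 1 to a binary number.
# (state, symbol read) -> (symbol to write, move: -1 = left, +1 = right, next state)
INCREMENT_RULES = {
    ("right", "0"): ("0", +1, "right"),      # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),     # carry past the leftmost digit
}


def run_turing_machine(rules, tape_input, start_state, halt_state="halt", max_steps=10_000):
    """Run a rule table on a tape and return the non-blank part of the tape."""
    tape = dict(enumerate(tape_input))  # sparse tape: missing squares are blank
    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        symbol = tape.get(head, BLANK)               # read the scanned square
        write, move, state = rules[(state, symbol)]  # look up the rule
        tape[head] = write                           # print (possibly the same symbol)
        head += move                                 # move one square left or right
        steps += 1
    written = [i for i, s in tape.items() if s != BLANK]
    if not written:
        return ""
    return "".join(tape.get(i, BLANK) for i in range(min(written), max(written) + 1))


print(run_turing_machine(INCREMENT_RULES, "1011", "right"))  # 1100 (11 + 1 = 12)
```

If the table has no rule for the current state and symbol, the lookup raises a `KeyError`. The step limit is there because there is no general way to decide in advance whether an arbitrary machine will ever halt (the halting problem).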

The whole process can be compared to the number-sorting algorithm above. This algorithm is constructed for one particular task. Similarly, Turing envisaged a range of machines, each with a set of instructions or rules for a particular undertaking. He added, “We have only to regard the rules as being capable of being taken out and exchanged for others and we have something very akin to a universal computing machine.”

Now known as the Universal Turing Machine (UTM), this device had an infinite store (memory) containing both instructions and data. Because the rule table of any other Turing machine could be written on its tape as coded data, the UTM could simulate any Turing machine. What Turing called changing the rules would now be called programming. In this way, Turing first introduced the concept of the programmable computer, adaptable for many tasks, with input, processing of information, and output.
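As a loose analogy (not Turing's construction, in which the universal machine is itself a Turing machine reading a coded description from its own tape), the Python sketch below stores each machine's rules as plain text, that is, as data, and runs them with one fixed interpreter. The text format, the names `decode` and `universal_run`, the `start`/`halt` state names, and `_` as the blank symbol are all assumptions for illustration.

```python
# Hypothetical text format for a machine description, one rule per line:
#   state,symbol read,symbol to write,move (L or R),next state
# "_" stands for a blank square. This is NOT Turing's own encoding.
INVERT_BITS = """
start,0,1,R,start
start,1,0,R,start
start,_,_,L,halt
"""

UNARY_SUCCESSOR = """
start,1,1,R,start
start,_,1,L,halt
"""


def decode(description):
    """Turn a text description (data) into a rule table."""
    rules = {}
    for line in description.split("\n"):
        line = line.strip()
        if not line:
            continue  # skip empty lines
        state, read, write, move, next_state = line.split(",")
        rules[(state, read)] = (write, {"L": -1, "R": 1}[move], next_state)
    return rules


def universal_run(description, tape_input, max_steps=10_000):
    """One fixed interpreter that runs whichever machine description it is given."""
    rules = decode(description)
    tape = dict(enumerate(tape_input))  # sparse tape: missing squares are blank
    head, state, steps = 0, "start", 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        write, move, state = rules[(state, tape.get(head, "_"))]
        tape[head] = write
        head += move
        steps += 1
    if not tape:
        return ""
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, "_") for i in cells).strip("_")


# Same interpreter, different "exchanged" rules:
print(universal_run(INVERT_BITS, "1011"))     # 0100
print(universal_run(UNARY_SUCCESSOR, "111"))  # 1111
```

Swapping one description string for another changes what the interpreter does without changing the interpreter itself, which is the sense in which changing the rules becomes programming.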

"A computer would deserve to be called intelligent if it could deceive a human into believing that it was human."

Alan Turing

A Turing machine is a mathematical model of a computer. The head reads a symbol on the infinitely long tape, writes a new symbol on it, and moves left or right according to rules contained in the action table. The state register records the machine's current state and feeds it back into the action table, which combines it with the next symbol read to choose the next action.

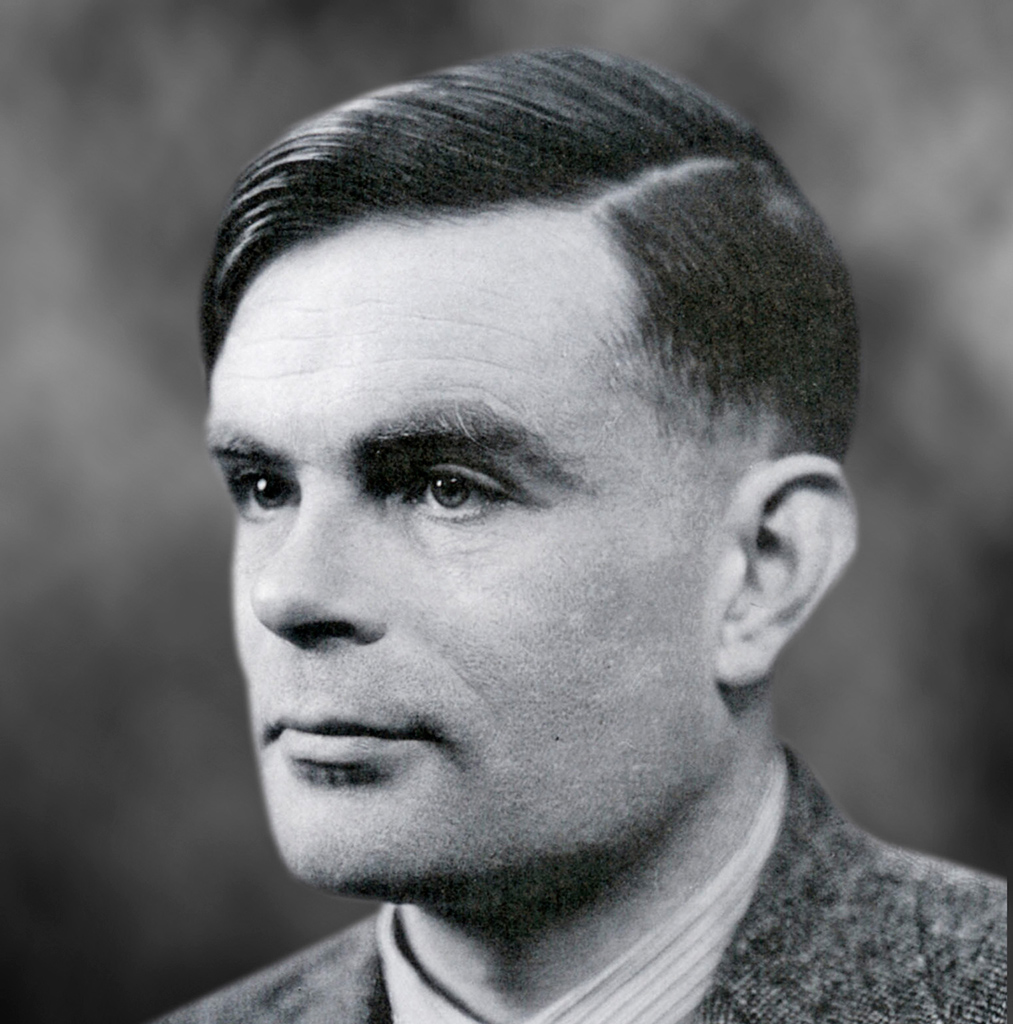

ALAN TURING

Born in London in 1912, Turing showed a prodigious talent for mathematics at school. He gained a first-class degree in mathematics from King's College, Cambridge, in 1934, and worked on probability theory. In 1936 he published his theories about a generalized computing machine, and from 1936 to 1938 he studied at Princeton University, USA.

During World War II, Turing designed and helped build an electromechanical code-breaking machine known as the “Bombe” to crack German messages encrypted with the Enigma machine. Turing was also interested in quantum theory, and in shapes and patterns in biology. In 1945, he moved to the National Physical Laboratory in London, then to Manchester University to work on computer projects. In 1952, he was convicted of homosexual acts (then illegal), and two years later he died from cyanide poisoning; it seems likely this was suicide rather than an accident. In 2013, Turing was granted a posthumous pardon.

Key work

1939 Report on the Applications of Probability to Cryptography

See also: Donald Michie • Yuri Manin