In the last several years, there has been a significant transition from networks dominated by dedicated leased lines to virtual links based on encrypting and isolating traffic at the packet level. This approach is the basis of a virtual private network (VPN). VPNs can be used for many network designs because the nature of a virtual network provides great flexibility. The two most common designs are linking branch offices together and providing portals for remote clients to create virtual office environments. These two design goals can be achieved by a private solution where the endpoints are owned and managed by the organization, or a company might choose to outsource their VPN services and have an ISP provide them.

The most basic goal when providing VPN services is to allow clients the ability to work from any location as if they were in the office. Ideally the VPN will use preexisting networks or low-cost network links to keep the connectivity cost down. VPN vendors, and others deploying VPNs, are faced with a challenge: how can they provide the flexibility required by clients for today’s computing needs while still ensuring the corporate network and clients are protected?

In many cases, balancing functionality and protection means choosing among technologies. If you are designing a solution for a client that only needs limited access to a web-based application, you don’t really need to provide complete VPN-based connectivity. It is important for the architect of the remote solution to define the scope of the services and provide solutions that have well-documented limits and capabilities. In most areas in the computer field, it is a good idea to limit the variety of solutions for your services, but in the remote access field, it is good to have a number of different options for the seemingly endless types of requests that come in from different clients. However, it is equally important to have a handle on the scope and goals of each of these options, and to have a complete understanding of the security ramifications of each. An architect of remote solutions can have a very busy job!

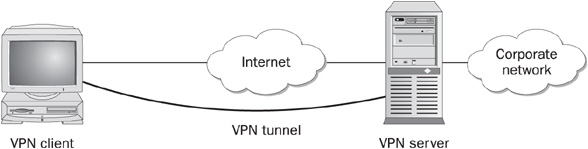

FIGURE 12-1 To the client, the VPN tunnel looks like a simple connection directly between itself and the server, avoiding the complex routing paths of the Internet.

The goal of a VPN is to provide a private tunnel through a public infrastructure. To do this, the traffic is encapsulated with a header that provides routing information, allowing the traffic to get to the destination. The traffic is also encrypted, which provides integrity, confidentiality, and authenticity. Captured traffic can, in theory, be decrypted, but the effort and time it takes is prohibitive.

It is referred to as a tunnel because the client does not know or care about the actual path between the two endpoints. For example, suppose a branch office is linked to the corporate network by a VPN. There might be 15 hops over the public Internet between the corporate VPN concentrator and the branch office’s endpoint, but once the VPN is established, any clients using this connection will only see the single hop between the VPN endpoints. Figure 12-1 illustrates this logic. In the figure, the Internet cloud represents all of the potential connections and transit points that might actually be taken by packets traveling from the client to the server. The loop from client to server represents the logical tunnel—to the client the connection looks like an end-run around the Internet.
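To make the encapsulation step concrete, the following toy sketch wraps an inner packet in an outer header addressed between the two tunnel endpoints and appends a keyed integrity tag. The 10-byte header layout, the function names, and the choice of HMAC-SHA256 are assumptions made for illustration; this is not the IPSec wire format. Encryption of the inner packet is deliberately left out because the Python standard library has no cipher, so only the integrity and authenticity part is shown.

```python
import hashlib
import hmac
import ipaddress
import struct

HEADER_FORMAT = "!4s4sH"   # hypothetical outer header: source gateway, destination gateway, payload length
HEADER_LEN = struct.calcsize(HEADER_FORMAT)


def encapsulate(inner_packet: bytes, src_gw: str, dst_gw: str, key: bytes) -> bytes:
    """Wrap an inner packet in an outer header and append an HMAC tag."""
    header = struct.pack(
        HEADER_FORMAT,
        ipaddress.IPv4Address(src_gw).packed,
        ipaddress.IPv4Address(dst_gw).packed,
        len(inner_packet),
    )
    tag = hmac.new(key, header + inner_packet, hashlib.sha256).digest()
    return header + inner_packet + tag


def decapsulate(outer_packet: bytes, key: bytes) -> bytes:
    """Verify the tag and return the inner packet, or raise ValueError."""
    header = outer_packet[:HEADER_LEN]
    _src, _dst, length = struct.unpack(HEADER_FORMAT, header)
    inner = outer_packet[HEADER_LEN:HEADER_LEN + length]
    tag = outer_packet[HEADER_LEN + length:]
    expected = hmac.new(key, header + inner, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity check failed")
    return inner
```

Routers along the path only need the outer header to forward the packet, which is why the client sees a single logical hop. Any change to the header or payload in transit causes `decapsulate` to reject the packet.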

Most VPN tunnels allow for the encapsulation of all common types of network traffic over the VPN link. This means that everything from SNA to IPX to AppleTalk can be encapsulated and transported over a TCP/IP-based network. The ultimate goal of VPN service is to allow clients to have the same capabilities through the tunnel that they would have if they were locally connected to their corporate network. Considering the variety of services in today’s networked environment, this can pose quite a challenge for the remote access architect.

Several computer companies started developing VPN technologies in the mid-1990s. Each of the solutions tended to be vendor-specific, and it was some time before the important potential of VPNs was realized. Toward the late 1990s, VPNs started taking the roles they have today, but they were based on a number of vendor-specific protocols. Today, nearly all VPN vendors use IPSec as the basic protocol in their products.

IPSec, as it stands today, is an Internet Engineering Task Force (IETF) effort to standardize the various vendor-specific solutions in order to provide cross-vendor connectivity. The protocol specifies how VPNs should work, but it does not specify the details of how the complete authentication model builds the tunnel, nor does it cover how the IPSec driver should interact with the IPv4 stack. As a result, there is still limited vendor interoperability. It is very important for the remote access architect to research this in detail as part of the vendor evaluation.

In many cases, it is possible to reduce the sophistication of the authentication process and create custom rules for the IPSec connection, allowing for effective interoperability. This is constantly being improved by VPN vendors, and vendor interoperability with more complex authentication and security association models continues to improve.

There are several choices a vendor can make when designing a VPN product based on IPSec. The two most common types of IPSec VPN products use tunnels based on IPSec tunnel mode and L2TP over IPSec.

Nearly all operating systems or VPN-related products that have IPSec support have the ability to create IPSec tunnel-mode tunnels. Because most products can create this type of tunnel, its most significant advantage is interoperability. The tunnel is also very streamlined and tunable, largely because it was designed without consideration for legacy support issues. However, due to different interpretations of authentication and/or routing, creating the IPSec tunnel relationship can be a very manual process. Although there are exceptions, IPSec tunnel-mode tunnels generally do not support anything other than TCP/IP being encapsulated within the tunnel. For many organizations this is not a significant issue, but it must be considered when designing the remote access solution.

IPSec tunnel mode is typically used for gateway-to-gateway connections such as business partner links and branch office connections. This is because of its flexibility, optimization, and vendor compatibility, and also because the parameters of the connection do not often change, unlike other sorts of client connections. Often the connection parameters for building the tunnel between the devices must be manually created, and although this works well when linking sites together, it would be impractical to support this for clients whose connections change often. Vendors that have chosen this tunneling protocol solution have gotten around the need for manual configuration in a variety of ways, but they typically require the client to load connection software.

L2TP over IPSec is the result of combining the best parts of Point-to-Point Tunneling Protocol (PPTP) from Microsoft and Layer Two Forwarding Protocol (L2F) from Cisco, dropping both of the encryption solutions, and using IPSec as the encryption solution. L2TP over IPSec uses IPSec transport mode and has the advantage of being a PPP-based tunnel, which allows two things: protocols other than TCP/IP can easily be supported in the tunnel, and the operating systems can create a known connection object that can be used to address the tunnel (this is particularly important in Microsoft operating systems). These options are significant if the operating system design allows the use of multiple protocols.

Although L2TP over IPSec gives both the client and the server more flexibility, it creates overhead in the tunnel environment that could be argued is unnecessary, particularly if the environment only uses TCP/IP. However, many companies that deploy VPNs consider the native Windows 2000, XP, and 2003 support of L2TP over IPSec to be a significant advantage. Typically, L2TP over IPSec is used for client-to-server connectivity because the connection parameters can handle dynamically changing clients. If your network environment requires the use of protocols other than TCP/IP, L2TP can also be used for gateway-to-gateway connections. Since both IPSec and L2TP are defined within the IETF standard, there are more vendors that support this solution, although there are still many more supporting IPSec tunnel mode.

As mentioned earlier, there are a number of other protocols that have been developed over the years and are still in many products today. The most common of these protocols is Microsoft’s Point-to-Point Tunneling Protocol (PPTP). PPTP is still used quite a bit in the industry because it is easy to deploy, flexible, and supported by most operating systems today. PPTP was initially deployed in 1998 as part of Windows NT 4.0 and was immediately pounced on by the press because of its horrible initial security model. This has largely been corrected in Windows 2000 and 2003, but PPTP’s reputation will likely be forever marred by the initial mistakes.

Recently there has been a lot of attention paid to the capabilities of SSL VPNs. Most vendors’ products are not exactly VPNs as defined by the tunnel standards, but instead are SSL-based links to corporate networks for specific applications. This approach has three major advantages:

• Nearly every client has the needed software loaded by default—the Internet browser. No additional software is needed.

• Most firewalls support SSL, and no additional protocols or ports need be opened to support this type of connection.

• In many cases, remote users simply need to perform predictable tasks, such as checking their e-mail or running a specific application. The more flexibility the remote user needs, the more likely they are to need a full tunnel session (using a solution like IPSec).

The SSL approach has been getting more popular recently because IPSec-based tunnels have a lot of overhead, they can be expensive depending on the implementation, and many vendors have problems with their proprietary extensions.

Full VPN connections do have some advantages, though. A VPN provides full support for the client’s network stack instead of just for a web-based application. This is a significant advantage for any environment that needs complete network access over the VPN, supporting all kinds of protocols and ports. This is of particular importance to clients needing to run legacy applications.

Some vendors have started blurring the lines between IPSec VPNs and SSL connections by creating true tunnels that are actually IPSec sessions encapsulated in SSL. In many ways, this is the best of both worlds, but it does introduce the new problem of controlling access through corporate firewalls to prevent just anyone from using a full SSL-based VPN connection. Many environments simply would not have the ability to monitor what information was leaving the network or prevent it. The field of secure networking is changing very quickly, and it will be interesting to watch the developments.

When allowing remote sites and clients to connect to the corporate network over public networks, security is an obvious concern. Many organizations have specific gatehouse guidelines that require bastion hosts (computing devices which serve as links between the organization’s trusted network and external, untrusted networks such as the Internet) to be placed in or around the demilitarized zone (DMZ), and yet remote VPN clients would fall into the same category as the bastion hosts, since they can access the internal network from outside the gateways. Obviously these clients must be treated differently from the bastion hosts, while still ensuring that they do not create holes into the corporate network.

To address this issue, both endpoints of the VPN tunnel must be considered, and both must be secured. Specific techniques for securing the tunnel endpoints, the remote client and the remote access server, rely on the software that is implemented. However, there are a number of common concerns for each.

A commonly overlooked security concern for corporate networks is the dial-in servers. These are often holdovers from the pre-VPN days, but they can also be completely justified for international connectivity or other customer uses. These servers often support all types of logons with no encryption or modern authentication processes required for access. It is critical that the remote access architect not overlook such servers when defining the remote access security model.

Considering the cost of maintaining private modem banks, it is obvious that the ultimate goal should be to try and phase out this type of service as much as possible. However, in many cases, and particularly for international uses, this might not be possible. In that case, it is important to start merging the requirements of VPN and dial-in as much as possible. If the corporate standard is a certificate-based, two-factor authentication model, don’t just apply this to the VPN—make it the standard for all remote access solutions. Yes, the process of doing so might be difficult, complicated, unpopular, expensive, stressful and generally painful, but it is better to make exceptions for specific situations that cannot support the standard than to leave a whole family of access methods open to your network.

The remote client (whether VPN based or dial-in based) represents the biggest challenge for remote access security. Many remote access architects consider the authentication process to be the most critical aspect of securing client connections. Even though this is obviously important, it leaves out some other factors:

• Unless the company provides all remote systems and mandates that only these can be used, it is impossible to predict the history or settings on the clients. The most common scenario is the couple sharing their home computer, with both using the same system to access their different corporate networks. What happens when one company mandates the use of one personal firewall, the other mandates the use of another, and they are not compatible?

• Many remote access solutions do not allow for seamless awareness of Microsoft domains, or it is difficult to mandate that the remote clients must be members of the domain. This means that you will not be able to simply set group policies or logon scripts to ensure that clients comply with corporate security policies.

• Most remote access solutions need to provide connectivity solutions for all kinds of clients, which makes specifying any scripts or security settings much more difficult. Defining the corporate standard for software such as personal firewalls and antivirus programs is more complicated because certain programs might be supported on one platform before others.

• Most deployments of Microsoft Windows NT, 2000, XP (and others) and of Linux, Mac, and Unix have the clients logging in as local administrators. This means that regardless of the controls and settings, the local administrator can override them.

• It is impossible to guarantee what happens to the client computer when the system is not connected. This, combined with the variety of local administrators and personally owned computers, can be a huge liability. The typical example of this is when a whole family is using a single computer for browsing to all types of locations that could hide Trojans or install viruses.

• Since the organization generally has limited control of the client, it is difficult to guarantee that all service packs and security patches are installed.

• The organization also has limited control over the network design where the client is located. Most organizations must allow for great flexibility in the types of networks, covering most of the common, consumer networking designs. This might not sound complicated, but considering international ISPs and everything ranging from cellular modems to bidirectional satellites to T-lines, it can be very challenging.

This list provides just an overview of some of the basic problems a remote access architect faces. In addition to considering these issues, you will need to decide when and how to stop a user that has a valid account and yet is trying to get around corporate standards and policies. The following sections will discuss the specifics and consider what can be done about these issues.

There have been many discussions about what constitutes effective authentication for remote clients. Most authentication processes for remote clients have been based on a username and a password. This system has a number of disadvantages, but the most significant is that logon attempts can be scripted. (I am not listing weak passwords as a disadvantage, because I am assuming that anyone using this type of authentication will have an account policy that mandates strong passwords.) Usernames and passwords are still being used today because they are so easy to deploy and use. Also, this type of authentication has been around for so long that it is very well supported in nearly all implementations of client operating systems.

Most enterprise remote access programs are moving toward a two-factor authentication process. There are many ways to do this, but the basic criterion is that users must have something and know something, which provides the two factors. For Windows-based environments, this typically involves a certificate-based smart card because of the native support in Windows 2000, XP, and 2003. There are other solutions that range from one-time-password (OTP) systems such as RSA’s SecurID to biometric solutions. For many organizations, the decision of which solution to use will depend on the balance between functionality and the cost of buying the necessary equipment for all users. PKI-based authentication solutions are not widely deployed yet because of the complexity of deploying them. These will be more common in the future, but until then many vendors are adding native support for RSA’s SecurID products for authentication tasks.
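As a rough illustration of the "have something and know something" idea, the sketch below combines a password check with a one-time password. Commercial tokens such as RSA's use their own algorithms, which are not reproduced here; this example substitutes the open HOTP algorithm from RFC 4226. The function `verify_two_factor`, its parameters, and the PBKDF2 settings are assumptions chosen for the example, not part of any vendor's product.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """One-time password per RFC 4226 (HMAC-SHA1 with dynamic truncation)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a password hash; the iteration count is an illustrative choice."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def verify_two_factor(stored_hash: bytes, salt: bytes, password: str,
                      token_secret: bytes, counter: int, submitted_code: str) -> bool:
    """Hypothetical check: the user must know the password AND hold the token."""
    knows = hmac.compare_digest(hash_password(password, salt), stored_hash)
    has = hmac.compare_digest(hotp(token_secret, counter).encode(),
                              submitted_code.encode())
    return knows and has
```

A real deployment also has to keep the server and token counters synchronized and reject reused codes, which this sketch does not attempt.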

For VPN clients that are using the native support for L2TP over IPSec in Microsoft Windows (regardless of the back-end server), the default behavior is to require a certificate to initiate the security association between the client and the server. This is usually either an IPSec-specific certificate (normally for non-domain users) or a machine certificate (normally for computers that are domain members). Windows XP and 2003 also support using a shared secret to build the security association. It is possible to do this with Windows 2000, but it is not supported and it is not possible to use the Connection Manager’s built-in shared-secret support. The complexity of the mandated machine certificate is one of the main reasons more organizations did not initially deploy the L2TP/IPSec support in Windows 2000. It is much more common now that more organizations have Public Key Infrastructures (PKIs) in place to handle machine certificates, but as with authentication, many organizations are still deploying PKIs. This implementation has lagged behind because of the complexity of certificate infrastructures.

If your organization is using add-on third-party software for VPN connectivity, the authentication will be based on the options the particular vendor has made available. It is typical to see these clients using an embedded shared secret for the IPSec Security Association, with links either to the Microsoft Graphical Identification and Authentication (GINA) DLL or a stand-alone authentication engine just for the connections. Each has advantages and disadvantages, so it will be a task for the remote access architect to decide which is best for the environment.

When you cut through the complexity of the authentication, it really boils down to two issues:

• Identifying the machine: The machine certificate (or the shared secret, to a lesser extent) identifies the system as a valid system for establishing the IPSec security association. (This step does not happen with PPTP and some other VPN solutions.)

• Identifying the user: The user proves who they are based on username, OTP, certificate, or some other mechanism, but the basic function is determining whether the user has permission to establish a connection.

This is a very limited way of deciding whether a remote client should have full corporate access, yet this is currently what most organizations are basing their security model on. Most remote access vendors are introducing some ways to authenticate the user and then move on to checking the client configuration before giving full corporate access. The following sections describe why and how this should be done.

In nearly all security audits and attacks it is the tunnel endpoints that are the victims. While it is possible to attack traffic en route, it is very time consuming, requires a high level of sophistication, and requires that traffic be captured at specific network locations. Most attacks do not bother with the traffic, but instead are directed at the tunnel endpoints. It is much more fruitful to launch simple attacks at servers or clients in an effort to compromise both the traffic and the corporate network itself. Therefore, the condition of the tunnel endpoints is critical in any remote access plan.

Traditionally, security has only been concerned with whether the user has the rights to connect to the remote access service, but more recently there has been a move to require certain additional settings and client systems. This is a necessary move because of the number of security patches and updates that must be deployed on the client system to ensure a secure connection.

When deploying remote access solutions, many organizations take the logical course of trying to purchase one VPN solution for all types of clients. If the organization has limited types of clients, this is relatively easy, but it can be a real challenge for organizations that have a broad base of clients. Though ideally a unified solution can be found for all clients, it is often the case that only some clients can be supported, or some are supported better than others. Again, the remote access architect will need to evaluate these issues and how they will affect the organization, and then decide on the best course of action.

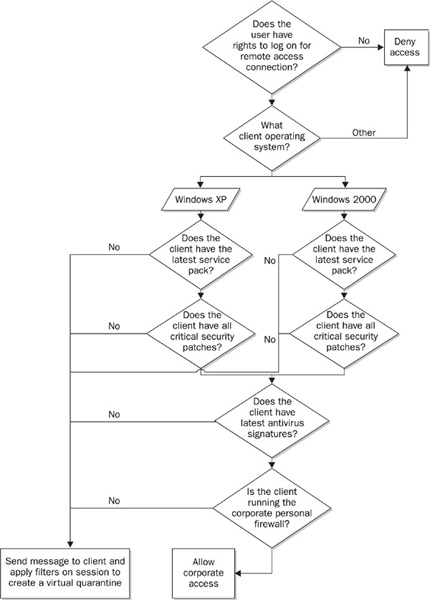

Typically an organization will want to require three things of a Windows-based remote client: security patches and service packs must be kept up to date, a personal firewall must be used, and antivirus software must be used. In the Windows Server 2003 Resource Kit, Microsoft has provided a way to build an environment that checks for this by putting all connecting clients in a virtual quarantine, based either on session-specific filters or on connection timers. Then the client is required to run a script that will check whatever settings the remote access program requires. If the client checks out, it will then send a message to the remote access server that indicates all is okay, and the quarantine will be lifted. (See Figure 12-2.)

FIGURE 12-2 Sample quarantine logic for the authentication of Windows 2000 and XP clients
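The quarantine flow can be sketched as a small state machine. This is a minimal illustration under stated assumptions: the state names, the `Session` class, the setting keys, and the 300-second timeout are all hypothetical and do not reflect the interface of any Microsoft tool or vendor product.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    QUARANTINED = "quarantined"
    FULL_ACCESS = "full_access"
    DISCONNECTED = "disconnected"


QUARANTINE_TIMEOUT_SECONDS = 300  # hypothetical connection timer


@dataclass
class Session:
    user: str
    state: State = State.QUARANTINED   # every new connection starts in quarantine
    seconds_in_quarantine: int = 0


def client_side_check(settings: dict) -> bool:
    """Stand-in for the script the client runs; the keys are illustrative."""
    required = ("patches_current", "firewall_running", "antivirus_running")
    return all(settings.get(name, False) for name in required)


def on_client_report(session: Session, check_passed: bool) -> None:
    """Server side: lift the quarantine when the client reports success."""
    if session.state is State.QUARANTINED and check_passed:
        session.state = State.FULL_ACCESS


def on_timer_tick(session: Session, elapsed_seconds: int) -> None:
    """Server side: drop clients that stay in quarantine past the timer."""
    if session.state is State.QUARANTINED:
        session.seconds_in_quarantine += elapsed_seconds
        if session.seconds_in_quarantine >= QUARANTINE_TIMEOUT_SECONDS:
            session.state = State.DISCONNECTED
```

Note that the server trusts whatever result the client reports. The sketch therefore has the same weakness as the real mechanism: a client with local administrator rights could send a passing report without running the check.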

There are two obvious concerns when increasing the logic of the logon process like this. The first is that your clients are all local administrators on the systems that are running the analysis, and it would be possible for a script to be created that would simulate the check and send the okay message to the server. This can be complicated to reverse-engineer, and it will be up to the remote access architect to decide what efforts will be made to protect this process from authenticated users.

The other concern is that requiring more logic to be performed by the clients makes it more difficult to support different client operating systems and devices on the same network access server. It is possible to put together kits for different clients, and this is what many vendors and large implementations are scrambling to do. Whether this will be done, and how, is a decision that will need to be made based on the needs of the organization.

I have used the Windows 2003 remote access server as an example of the quarantine network, but this is an approach found with many vendors. Each of the products has its own set of capabilities, but the basic goal is to establish the state and safety of the client configuration. Although there have been some attempts to standardize how these checking processes are performed in the logon process, it has not happened yet. Unfortunately, the client-checking features of vendors’ remote access products are not generally interoperable at this time. This is another situation where the remote access architect needs to make design decisions based on the remote access goals.

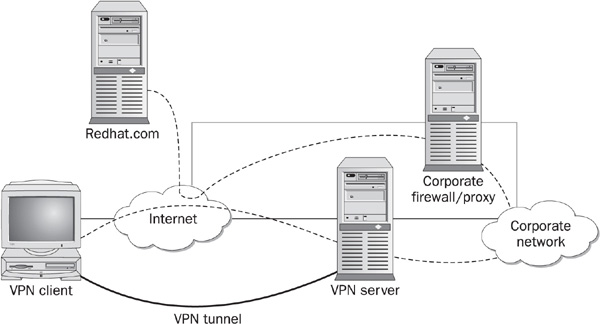

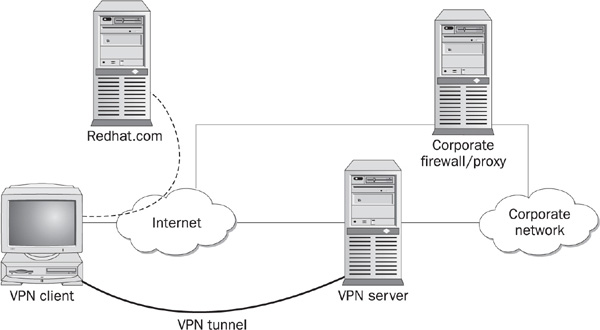

Another problematic topic in remote access setups is how the client handles the network connection for the virtual tunnel. If the client can be connected to more than one remote network at a time, this is commonly referred to as split-tunnel routing. In its most common implementation, a remote client can access both the Internet and the organization’s network at the same time. Figures 12-3 and 12-4 represent examples where split tunneling is not allowed and where it is, respectively. In the figures the dotted lines represent the path to the remote web site. In Figure 12-3, the path is from the client to the corporate network and on to the web site. There is no split tunnel here. In Figure 12-4, the client goes directly to the web site and is connected to corporate headquarters via a VPN. This is a split tunnel.

FIGURE 12-3 Client connecting without split-tunnel routing

FIGURE 12-4 Client connecting with split-tunnel routing
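The difference between the two figures comes down to the client's routing table. The sketch below uses longest-prefix matching over two hypothetical tables; the interface names, the `10.0.0.0/8` corporate range, and the `next_hop` helper are assumptions for illustration. It is also simplified: a real full-tunnel client still keeps a host route to the VPN server over its local link.

```python
import ipaddress

# Hypothetical tables: (destination network, outgoing interface)
FULL_TUNNEL = [
    (ipaddress.ip_network("0.0.0.0/0"), "vpn"),        # everything goes through the tunnel
]
SPLIT_TUNNEL = [
    (ipaddress.ip_network("0.0.0.0/0"), "local_isp"),  # Internet traffic leaves directly
    (ipaddress.ip_network("10.0.0.0/8"), "vpn"),       # corporate range uses the tunnel
]


def next_hop(routes, destination: str) -> str:
    """Return the interface of the most specific route that matches."""
    dest = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in routes if dest in net]
    if not matches:
        raise LookupError(f"no route to {destination}")
    _net, iface = max(matches, key=lambda match: match[0].prefixlen)
    return iface


# A public web site (documentation address) and an internal server
print(next_hop(FULL_TUNNEL, "203.0.113.10"))   # vpn
print(next_hop(SPLIT_TUNNEL, "203.0.113.10"))  # local_isp
print(next_hop(SPLIT_TUNNEL, "10.1.2.3"))      # vpn
```

With the split-tunnel table, the client holds live paths to both networks at once.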

There are two main reasons that split-tunnel routing causes concern. The first is that when a client’s routing knows how to talk directly to both the corporate network and the public Internet, it increases the chance of unauthorized traffic going through the client to the corporate network. The second is that if a Trojan were installed on the client, an attacker could take control of the client and then use it to access the corporate network.

Essentially, with the split-tunnel configuration, the client is truly a bastion host. Each of the clients is on the edge of the corporate network and is responsible for the protection of the network. This is where we might need to redefine how we categorize different types of bastion hosts.

Regardless of add-on software that can set routing parameters, the path of the traffic is a client decision because the client is responsible for handling the behavior of the TCP/IP stack. Many vendors require add-on software on the client for the tunnel session that can monitor unauthorized routing table changes and, in most cases, will drop the connection if such changes are made. A fundamental problem with this approach is that the users of the client tunnel endpoint are usually local administrators. As administrators, they are “authorized” to change the routing table, so the vendor can’t guarantee that the monitoring agent won’t be hijacked or simulated.
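The monitoring idea itself is simple: take a snapshot of the routing table when the tunnel comes up and compare later snapshots against it. The sketch below shows only that comparison. The route representation (destination and gateway strings) and the function names are hypothetical, and reading the live table from the operating system is left out.

```python
def routing_changes(baseline: set, current: set) -> dict:
    """Compare two snapshots of (destination, gateway) routes."""
    return {
        "added": current - baseline,
        "removed": baseline - current,
    }


def should_drop_connection(baseline: set, current: set) -> bool:
    """Policy used in this sketch: any change at all ends the session."""
    changes = routing_changes(baseline, current)
    return bool(changes["added"] or changes["removed"])


baseline = {("0.0.0.0/0", "vpn-gateway")}
modified = baseline | {("203.0.113.0/24", "home-router")}
print(should_drop_connection(baseline, baseline))  # False
print(should_drop_connection(baseline, modified))  # True
```

Because this check runs on the client, it has the limitation described above: a local administrator can stop the agent or feed it a false snapshot.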

If the client is relying on the native support for L2TP/IPSec in Windows 2000, XP, and 2003, there will not be anything monitoring the routing table by default. Software can be added to do this monitoring, but it will have the same limitations as other monitoring software because of the local rights issues.

There are really only a few advantages of having a split-tunnel environment, but they seem to be important ones, because it is very common to find VPN clients modifying their routing tables (regardless of whether they are supposed to or not).

• When the routing table allows for a direct connection to a destination instead of having the connection flow through the corporate firewalls and proxy servers, it allows the remote clients to potentially have more capabilities. For example, the organization’s firewall rules might not allow terminal server traffic, but since the client traffic would not be passing through the firewall, they could connect to the destination with terminal service traffic directly. This may be a client need, depending on the business, although the remote access architect should research whether the needed protocols should be enabled on the corporate firewall.

• Direct connections can provide a speed increase when going to external Internet web sites. This is more common today, since more and more clients are connecting with high-speed cable and DSL connections, and the speed difference will really depend on the design of the network and the conduit to the VPN services. Many designers use caching servers to help with this issue; in many cases these can not only match the speed of the direct connection but also leverage the organization’s large Internet links to improve on it. This tends to be a client want instead of a need.

• Some VPN software disables the ability to print or access local resources on any subnets, so if you have a small office LAN behind a connection-sharing device, you might not be able to transfer files or print when the tunnel is connected. This creates many problems for clients and tends to be a client need instead of a want.

• Finally there is the big-brother perception. This typically comes from home users who are using their own systems and do not want the company to be tracking where they might go. This is understandable, but the obvious answer is that they should disconnect from the corporate resources when they want to work on personal material. This tends to be a client want instead of a need.

It is easy to sum up the client wants and simply decide that it is best for the corporate network not to allow these capabilities. This approach can, however, cause clients to go off and find ways around this prohibition by scripting, writing simulation applets, or creating network designs that are specifically set up for more routing capabilities. It is very difficult to detect users doing this from the VPN server.

There are really only two effective options. One is to use today’s technologies to check the clients’ status and monitor whether the clients are modifying their routing information or not. This can be done more and more effectively as time goes on, but it will always be a battle to stay ahead of users who might be developing ways around it. It is also possible to add specific applets to search for and disable any routing changes as part of the login process. As with everything, though, it becomes very complicated to do this when trying to support multiple types of clients. The user experience can be made acceptable by providing adequate bandwidth to the VPN servers, providing caching servers to optimize web traffic, and more. Another option is to mandate certain client configurations and allow for varying levels of capabilities. The remote access team will need to monitor the infrastructure at the core gateways to ensure that performance remains acceptable.

As the remote access architect, you will need to establish what you consider to be a well protected client. For example, let’s consider a typical Windows client and start building on the criteria for the logon process. Suppose the remote access policy dictates the following authentication requirements:

• Must establish IPSec security association with a valid certificate from a trusted root

• Must provide certificate-based smart card logon via the Extensible Authentication Protocol (EAP)

Once this is done, the client is placed in a virtual quarantine network, and we can force the client to continue with the following checks:

• Must be running Windows 2000 or higher

• Must have the latest service pack installed

• Must have all critical security patches installed

• Must be running the standard corporate virus scanner with the latest signature file

• Must be running the corporate (centrally managed) personal firewall

If the client satisfies the first three items but is not running the corporate virus scanner and personal firewall, we can give the client a message explaining the results of the scan, start an applet to monitor the routing environment, and then take the client out of quarantine. Once the client does load the last two items, it can start using the more flexible routing environment. This also allows some flexibility for shared computers. The only client configuration items we are mandating in order to take the system out of quarantine are the operating system version, the service packs, and the patches.
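The decision logic in this example policy can be sketched as follows. This is only an illustration of the rules just described; the class and field names are hypothetical, and how each check result is gathered from the client is left out.

```python
from dataclasses import dataclass
from enum import Enum


class Access(Enum):
    QUARANTINE = "remain in quarantine"
    MONITORED = "leave quarantine with routing monitored"
    FLEXIBLE = "leave quarantine with flexible routing"


@dataclass
class ClientPosture:
    os_supported: bool                 # Windows 2000 or higher
    latest_service_pack: bool
    critical_patches: bool
    corporate_antivirus_current: bool  # corporate scanner, latest signatures
    corporate_firewall_running: bool   # centrally managed personal firewall


def decide_access(p: ClientPosture) -> Access:
    # The first three items are mandatory for leaving quarantine.
    if not (p.os_supported and p.latest_service_pack and p.critical_patches):
        return Access.QUARANTINE
    # The last two items unlock the more flexible routing environment.
    if p.corporate_antivirus_current and p.corporate_firewall_running:
        return Access.FLEXIBLE
    return Access.MONITORED
```

A shared home computer that is fully patched but lacks the corporate scanner and firewall would be classified as MONITORED under this sketch.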

There are several ways the client can be quarantined from the rest of the corporate network:

• Drop the connection: Some vendors have chosen to simply send the client a message that explains the problem with the client and then drop the connection. This will prevent potential infection, but it might also make the task of fixing the client more complicated, since the client is left without any access to the corporate network.

• Set a time limit on the connection: In this situation, the user is sent a message that explains the problem and is then given a certain amount of time to fix the issue before the session is disconnected. This introduces a potential problem when there are large patches and slow connections, and it tends to be more difficult to support. This solution has the advantage of being easier to configure on the back end.

• Create access control lists (ACLs) on the session: In this case the client will receive a message explaining the problem, and then the connection session will have filters applied that can restrict the traffic to certain ports or internal destinations. This is ideal, since it gives the client the opportunity to fix the problem using internal resources without posing a huge risk to the rest of the corporation. This can get rather complex to configure and maintain, so the remote access team must be very clear about the minimum client requirements, both initially and as changes and upgrades are necessary.
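The ACL approach can be pictured as a short allow list applied to the quarantined session. The sketch below is an assumption-laden illustration, not a vendor configuration: the addresses, ports, and server roles are hypothetical, and a real VPN gateway would express these filters in its own rule syntax.

```python
import ipaddress

# Hypothetical remediation resources a quarantined client may reach.
QUARANTINE_ACL = [
    ("10.0.5.10/32", 443),  # patch distribution server
    ("10.0.5.11/32", 443),  # antivirus signature server
    ("10.0.5.53/32", 53),   # internal DNS
]


def is_permitted(dest_ip, dest_port, acl=QUARANTINE_ACL):
    """Allow traffic only if it matches an (allowed network, port) entry."""
    addr = ipaddress.ip_address(dest_ip)
    return any(
        addr in ipaddress.ip_network(network) and dest_port == port
        for network, port in acl
    )
```

Everything not listed is dropped, which keeps the quarantined client away from the rest of the corporate network while still letting it fetch the fixes it needs.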

Typically, checking for service packs and security patches is a one-time check at the time of client logon, but you might need to monitor the state of personal firewalls and antivirus software throughout the session. Most vendors are creating solutions within their own software that offer this level of checking. As this technology matures, it is likely we will see products that will bring all of the client validation together in a single, manageable solution.

The key with this sort of scanning is being able to control the safety of the clients. They must be patched and running the standard corporate security applications. And as was mentioned previously, this cannot be guaranteed with group policies or logon scripts. With the monitoring capability, it is possible to be more granular in the authentication process and to more completely guarantee the safety of network resources.
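The difference between a one-time logon check and ongoing monitoring can be shown with a minimal sketch. It assumes a monitoring agent reports periodic status samples for the session; the sample format and timestamps are hypothetical.

```python
def first_violation(samples):
    """Return the timestamp of the first sample in which the antivirus
    software or the personal firewall was not running, or None if every
    sample was compliant.

    samples: iterable of (timestamp, antivirus_running, firewall_running)
    """
    for timestamp, antivirus_running, firewall_running in samples:
        if not (antivirus_running and firewall_running):
            return timestamp
    return None


# Hypothetical samples: the firewall is switched off mid-session.
session = [
    ("09:00", True, True),
    ("09:05", True, True),
    ("09:10", True, False),
    ("09:15", True, True),
]
violation_at = first_violation(session)
```

A gateway could respond to a violation by returning the session to quarantine; that response is a policy choice and is not part of the sketch.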

For always-on home connections (such as DSL and cable), it should be mandated that all clients have a connection-sharing device such as a router or router/firewall combination, particularly since many of them cost less than $50. This fixes several problems, such as these:

• Many ISP DHCP issues (like short lease durations, in which ISP-provided client IP addresses are frequently changed) are solved by the connection-sharing device. The device can deal with the renegotiation instead of the client having to do this while also maintaining the tunnel.

• Even if the connection-sharing device has only a limited stateless firewall, it moves the front line of the network from the client maintaining the VPN session to the connection-sharing device.

• It provides the ability to have multiple systems access the same connection for the Internet and permits multiple VPN sessions.

• Many ISPs require software to be loaded on the clients (this is particularly true with DSL), and the connection-sharing device will handle this requirement with no software on the client. This is of particular interest for VPN connections, since many DSL connections can interfere with VPN connections.

A connection-sharing device will not fix the fact that many ISPs are charging extra for VPN-related traffic. This is a disturbing trend, in which ISPs require customers to pay a business rate that might be twice the residential rate. This extra charge may not be fair, but it is a fact, and the remote access architect will need to include it in the cost analysis.

A connection-sharing device offers a cheap way of isolating clients from the network, but it is not a guarantee of network security. It should not replace client protection at all, but it is a very nice way of helping isolate the client from the public network.

As was mentioned previously, most companies do not insist that only company-provided systems be used to connect to the remote access server. They allow client systems not provided by the organization to log on to the remote access server. Since the organization won’t know the state of these other client systems, it is critical that the condition of the client be analyzed at the time of connection. It is common for the child of an employee to use the home system and unknowingly allow it to get infected with all sorts of Trojans and viruses. When the parent sits down at the same computer and connects to the corporate network, the client immediately starts spreading the infection. This has little or no bearing on the type of VPN, on whether the client is using split-tunneling, or even on the type of operating system (although some operating systems are obviously more prone to viruses than others).

By using the more detailed logon process, this problem can be reduced immensely. If you mandate that at the time of connection all service packs and security patches must be installed, that the virus scanner must be up to date and operating, and that the personal firewall must be installed and configured, this will very probably clean and protect the client and the network.

The remote access architect will need to make sure that any process or script that checks the client settings will not be overly intrusive, and it must be as verbose as possible. Remember this process will potentially be running on a privately owned computer that is used by different users. It will make the person using the VPN connection much more comfortable to know exactly what is happening and why. It is possible to use web pages as the interface to the client, making it possible to define what is being looked for, why, and how to fix any potential problems that might be found.

For many organizations, there is nothing that prevents supporting shared computers and those not owned by the company. It is up to the remote access architect and the security team to define what should be mandated and what options should or should not be permitted when allowing more flexible connections.

Some organizations choose to mandate that all computers be company owned and controlled. This does help with mandating the configuration of the client. It also allows the organization to control the state of the client without worrying about many legal or privacy issues.

Other organizations choose to provide each of the clients with a router or other device that allows the tunnel endpoint to be maintained on it instead of on the client. For all practical purposes, this makes each of the remote client sites the same as a branch office. In most cases, this is the best way to ensure the security and capabilities of the clients. The only downsides are the extra maintenance required on the devices, and the expense of purchasing dedicated hardware for all remote clients.

The popularity of the Internet and the availability of VPNs have caused an explosion of activity, with companies replacing leased lines or other connections between sites and partners. This is because VPN connections tend to be a fraction of the cost of leased lines, and many sites find that the speed dramatically increases once the change from physical lines occurs.

Many of the problems outlined in this chapter about using remote access clients as endpoints are not common in site-to-site connections. This is mainly because the corporation typically owns and controls both ends of the tunnel. Also, most branch offices are connected with routers instead of various client operating systems. (However, this is not a guarantee, since all operating systems, including those on routers, can and do require patches.) Users do not log on to routers and browse the Internet, install unknown applications, or double-click on e-mail attachments. All of these reasons tend to make site-to-site connections more secure, but also much more expensive.

There are also site-to-site links where the corporation only owns one side of the connection. This is typically found in links with business partners. There is no quarantine-type solution that will check this type of connection yet, so it is up to the remote access architect to define the minimum requirements for the partners’ tunnel endpoint. It is also critical to monitor the link traffic and, if possible, restrict it to only the necessary internal destinations.

Site-to-site connections often allow multiple users to use the same connection, which means the remote access architect can afford to spend more money on the ends of the connection. Many organizations actually put in stateful firewalls at the branch offices with the corporate rules loaded on them. Having distributed firewall rules provides a very good security model because it guarantees the same rules are used regardless of the client location. It would be ideal to have stateful distributed firewalls at all remote locations—both at corporate endpoints and at home users’ endpoints—but this is typically too expensive. The remote access architect will need to evaluate the cost and security of this approach for the particular environment.
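One way to confirm that every branch firewall really carries the corporate rules is to compare a fingerprint of each site's rule set against the corporate copy. The sketch below assumes the rules can be exported as lines of text; the rule syntax and site names are hypothetical.

```python
import hashlib


def ruleset_fingerprint(rules):
    """Hash the rules in order, ignoring blank lines and edge whitespace.
    Order is preserved because firewall rules are order sensitive."""
    normalized = "\n".join(line.strip() for line in rules if line.strip())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def sites_out_of_sync(corporate_rules, site_rules):
    """Return the names of sites whose rules differ from the corporate set."""
    expected = ruleset_fingerprint(corporate_rules)
    return sorted(
        site
        for site, rules in site_rules.items()
        if ruleset_fingerprint(rules) != expected
    )


# Hypothetical rule text and site names.
corporate = ["allow tcp any 10.0.0.0/8 443", "deny ip any any"]
sites = {
    "branch-a": ["  allow tcp any 10.0.0.0/8 443", "", "deny ip any any  "],
    "branch-b": ["deny ip any any"],
}
stale = sites_out_of_sync(corporate, sites)
```

Running a comparison like this on a schedule would flag a branch whose rules have drifted before the difference becomes a security gap.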

It is very important to ensure that branch offices are not simply using a Network Address Translation (NAT) device also as a VPN endpoint. This is sometimes done in small offices and companies, but setting up a NAT device with no firewall features gives the users and administrators a false sense of security, probably because the network is within a private network.

Another difficulty with distributed networks is continuing to update the devices that maintain the links. In today’s security-conscious world, it is critical to ensure that the routers and firewalls are always updated with security patches and operating system updates. This can be a stressful task. If a security patch or upgrade causes a system to go down, its location and that of the other tunnel endpoint might be anywhere in the world. When an endpoint goes down, critical systems cannot communicate and expert help may not be available locally. However, this is a very important task. The last thing your VPN-based network needs is infected or vulnerable endpoints.

Ensuring that all clients at the branch sites are up to date is very important. This is relatively simple, because the clients and the infrastructure will not have any VPN-related differences loaded in their configuration—this is all handled by the VPN endpoints. However, client updates should not be overlooked when designing the network. Many organizations spend a lot of time optimizing the process of keeping clients up to date at remote sites. Often a significant part of this planning is related to optimizing the bandwidth for patch downloads. This can be incorporated into the details of the network design, which might include Quality of Service (QoS) to modify the priority of the patch replications. (QoS can provide a technical solution to giving critical data priority in bandwidth usage.)
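The effect of prioritizing traffic can be illustrated with a toy strict-priority scheduler. In practice QoS is configured on the routers and VPN endpoints rather than written in application code, and the traffic classes and their ordering below are assumptions made only for this illustration (patch replication is placed behind critical data).

```python
import heapq
import itertools

# Assumed traffic classes; a lower number is sent first.
PRIORITY = {"critical": 0, "default": 1, "patch": 2}


class PriorityScheduler:
    def __init__(self):
        self._heap = []
        self._sequence = itertools.count()  # keeps arrival order within a class

    def enqueue(self, traffic_class, packet):
        entry = (PRIORITY[traffic_class], next(self._sequence), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        return heapq.heappop(self._heap)[2]


scheduler = PriorityScheduler()
scheduler.enqueue("patch", "patch-1")
scheduler.enqueue("critical", "erp-1")
scheduler.enqueue("default", "web-1")
scheduler.enqueue("patch", "patch-2")
order = [scheduler.dequeue() for _ in range(4)]
```

With this ordering, patch downloads use whatever bandwidth is left after critical traffic has been served, so they do not starve business applications on a slow branch link.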

Creating and supporting a secure remote access environment can be complicated, and it requires us to rethink client risks and security strategies. With the complicated protection required for clients, we must grow the authentication process to include more complete analysis of the client’s condition and to make decisions appropriate to the current client configuration. Remote access programs require planning, testing, and continued attention to keep up with the ever-changing environment.