In this chapter, we discuss concepts related to operating system security models, namely:

• The security reference monitor and how it manages all the security of the elements related to it

• Access control—the heart of information security

• International standards for operating system security—while not tied directly to operating systems, they provide a level of assurance and integrity to organizations.

Quite simply, an operating system security model is the foundation of the operating system’s security functionality. All security functionality is architected, specified, and detailed in advance, before a single line of code is written. Everything built on top of the security model must be mapped back to it, and any action that violates the security model is denied and logged.

The operating system security model (also known as the trusted computing base, or TCB) is simply the set of rules, or protocol, for security functionality. The security commences at the network protocol level and maps all the way up to the operations of the operating system.

An effective security model protects the entire host and all of the software and hardware that operate off it. Older systems used a monolithic design, which proved to be less than effective. Current operating systems are optimized for security and use a compartmentalized approach.

These older monolithic operating system kernels were built for a single architecture, so the operating system code had to be rewritten for every new architecture to which it was ported. This lack of portability caused development costs to skyrocket.

While the key feature of a monolithic kernel is that it can be optimized for a particular hardware architecture, its lack of portability is a major negative.

The trend in operating systems since the early 1990s has been to go to a microkernel architecture. In contrast to the monolithic kernel, microkernels are platform independent. While they lack the performance of monolithic systems, they are catching up in speed and optimization.

A microkernel approach is built around a small kernel that uses a common hardware level. In Windows NT/2000/XP, this is known as the hardware abstraction layer (HAL). The HAL offers a standard set of services with a standard API to all levels above it. The rest of the operating system is written to run exclusively on the HAL. The key advantage of a microkernel is that the kernel is very small and very easy to port to other systems.

From a security perspective, by using a microkernel or compartmentalized approach, the security model ensures that any capacity to inflict damage to the system is contained. This is akin to the watertight sections of a submarine; if one section is flooded, it can be sealed and the submarine can still operate. But this is only when the security model is well defined and tested. If not, then it would be more analogous to the Titanic, where they thought that the ship was unsinkable, but in the end, it was downed by a large piece of ice.

Extending the submarine analogy, the protocol of security has a direct connection to the communication protocol. In 2004, the protocol we are referring to is TCP/IP, the language of the Internet and clearly the most popular and most utilized protocol today. If the operating system is an island, then TCP/IP is the sea. Given that fact, any operating system used today must compensate for the shortcomings of TCP/IP.

As you know, even the best operating system security model can’t operate in a vacuum or as an island. If the underlying protocols are insecure, then the operating system is at risk. What’s frightening about this is that the language of the Internet is TCP/IP, but effective security functionality was not added to TCP/IP until version 6 in the late 1990s. Given that roughly 95 percent of the Internet is still running an insecure version of TCP/IP, version 4 (version 5 was never put into production; see www.cisco.com/univercd/cc/td/doc/cisintwk/itg_v1/tr1907.htm), the entire Internet and corporate computing infrastructure is built on and running on an insecure infrastructure and foundation.

The lack of security for TCP/IP has long been known. In short, the main problems of the protocol are these:

• Spoofing Spoofing is the term for establishing a connection with a forged sender address; this normally involves exploiting trust relations between the source address and the destination address. The ability to spoof the source IP address assists those carrying out DoS attacks, and a predictable ISN (Initial Sequence Number) makes spoofing attacks easier still.

• Session hijacking An attacker can take control of a connection.

• Sequence guessing The sequence number used in TCP connections is a 32-bit number, so it would seem that the odds of guessing the correct ISN are exceedingly low. However, if the ISN for a connection is assigned in a predictable way, it becomes relatively easy to guess. The truth is that the ISN problem is not a protocol problem but rather an implementation problem. The protocol actually specifies pseudorandom sequence numbers, but many implementations have ignored this recommendation.

• No authentication or encryption The lack of authentication and encryption with TCP/IP is a major weakness.

• Vulnerable to SYN flooding This involves the three-way handshake used to establish a connection. When Host B receives a SYN request from A, it must keep track of the partially opened connection in a listen queue. This enables successful connections even with long network delays. The problem is that many implementations can keep track of only a limited number of connections. A malicious host can exploit the small size of the listen queue by sending multiple SYN requests to a host but never replying to the SYN and ACK the other host sends back. By doing so, it quickly fills up the other host’s listen queue, and that host will stop accepting new connections until a partially opened connection in the queue is completed or times out.
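The SYN flooding problem just described can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real TCP stack: the `ListenQueue` class, its `backlog` and `timeout` parameters, and the addresses are all assumptions made for illustration, and time is counted in abstract ticks.

```python
from collections import OrderedDict


class ListenQueue:
    """Toy model of a listen queue that tracks half-open connections."""

    def __init__(self, backlog, timeout):
        self.backlog = backlog          # max half-open connections tracked
        self.timeout = timeout          # ticks before a half-open entry expires
        self.half_open = OrderedDict()  # peer -> tick at which its SYN arrived

    def expire(self, now):
        """Drop half-open entries that have waited at least `timeout` ticks."""
        for peer, arrived in list(self.half_open.items()):
            if now - arrived >= self.timeout:
                del self.half_open[peer]

    def on_syn(self, peer, now):
        """Handle a SYN; return True if a SYN/ACK would be sent back."""
        self.expire(now)
        if len(self.half_open) >= self.backlog:
            return False                # queue full: new connection refused
        self.half_open[peer] = now
        return True

    def on_ack(self, peer):
        """Handle the final ACK; the entry leaves the queue as established."""
        return self.half_open.pop(peer, None) is not None


queue = ListenQueue(backlog=5, timeout=30)

# The attacker sends SYNs from forged peers and never answers the SYN/ACK.
flood = [queue.on_syn(("10.0.0.%d" % i, 4000 + i), now=0) for i in range(8)]

# A legitimate client arriving during the flood is turned away.
refused = queue.on_syn(("192.0.2.7", 5555), now=1)

# Once the stale half-open entries time out, the same client gets through.
accepted_later = queue.on_syn(("192.0.2.7", 5555), now=31)
```

In this sketch only the first five forged SYNs are queued, the legitimate client is refused while the queue is full, and it is accepted again only after the forged entries expire.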

If you want a more detailed look at the myriad security issues with TCP/IP version 4, the classic resource for this issue is Steve Bellovin’s seminal paper “Security Problems in the TCP/IP Protocol Suite” (www.research.att.com/~smb/papers/ipext.pdf).
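To make the sequence-guessing problem listed above concrete, the following sketch contrasts a predictable ISN generator with one drawn from a cryptographic random source. The `PredictableISN` class and its fixed step are hypothetical and stand in for a flawed implementation; they do not reproduce any particular operating system's behavior, and real stacks use more elaborate schemes than the plain random draw shown here.

```python
import secrets

MODULUS = 2 ** 32  # TCP sequence numbers are 32-bit values


class PredictableISN:
    """Hypothetical flawed generator: adds a fixed step per connection."""

    def __init__(self, start=1000, step=64000):
        self.value = start
        self.step = step

    def next_isn(self):
        self.value = (self.value + self.step) % MODULUS
        return self.value


def random_isn():
    """ISN drawn from the operating system's cryptographic random source."""
    return secrets.randbelow(MODULUS)


def guess_next(observed):
    """Attacker's guess: assume the last observed increment repeats."""
    step = (observed[-1] - observed[-2]) % MODULUS
    return (observed[-1] + step) % MODULUS


gen = PredictableISN()
observed = [gen.next_isn(), gen.next_isn()]  # attacker opens two probe connections
guess = guess_next(observed)
actual = gen.next_isn()                      # ISN given to the victim's connection
predictable_hit = (guess == actual)          # True for the flawed generator
```

Against the flawed generator, two observations are enough to predict the next value. The same guessing strategy has no such foothold against `random_isn()`, which is why the implementation, not the protocol, is the weak point.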

Some of the security benefits that TCP/IP version 6 offers are:

• IPSec security

• Authentication and encryption

• Resilience against spoofing

• Data integrity safeguards

• Confidentiality and privacy

An effective security model recognizes and is built around the fact that since security is such an important design goal for the operating system, every resource that the operating system interfaces with (memory, files, hardware, device drivers, etc.) must be interacted with from a security perspective. By giving each of these objects an access control list (ACL), the operating system can detail what that object can and can’t do, by limiting its privileges.

Much of the security functionality afforded by an operating system is via the ACL. Access control comes in many forms. But in whatever form it is implemented, it is the foundation of any security functionality.

Access control enables one to protect a server or parts of the server (directories, files, file types, etc.). When the server receives a request, it determines access by consulting a hierarchy of rules in the ACL.

The site SearchSecurity.com (http://searchsecurity.techtarget.com/sDefinition/0,,sid14_gci213757,00.html) defines an access control list as a table that tells a computer operating system which access rights each user has to a particular system object, such as a file directory or an individual file. Each object has a security attribute that identifies its access control list. The list has an entry for each system user with access privileges. The most common privileges include the ability to read a file (or all the files in a directory), to write to the file or files, and to execute the file (if it is an executable file, or program). Microsoft Windows NT/2000, Novell’s NetWare, Digital’s OpenVMS, and Linux and other Unix-based systems are among the operating systems that use access control lists. The list is implemented differently by each operating system.
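The table described in this definition can be sketched in a few lines of Python. This is an illustration only: the object paths, user names, and the `is_allowed` helper are assumptions, and, as noted above, each operating system implements its list differently.

```python
# Hypothetical ACL table: each object maps to {user: set of rights}.
ACL = {
    "/srv/reports": {"alice": {"read", "write"}, "bob": {"read"}},
    "/srv/reports/q1.txt": {"alice": {"read", "write"}},
    "/usr/bin/backup": {"alice": {"read", "execute"}, "bob": {"execute"}},
}


def is_allowed(user, obj, right):
    """Return True only if the object's ACL has an entry granting the right."""
    entries = ACL.get(obj, {})          # an object with no ACL grants nothing
    return right in entries.get(user, set())
```

For example, `is_allowed("bob", "/srv/reports", "read")` is true, while `is_allowed("bob", "/srv/reports/q1.txt", "read")` is false because bob has no entry in that file's list.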

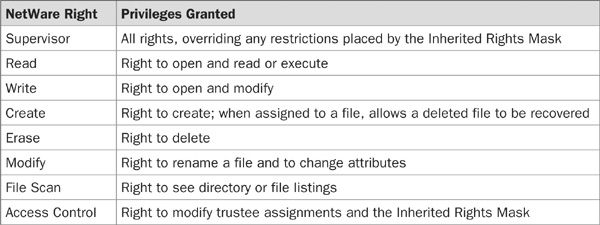

As an example, Table 17-1 provides a listing of access control rights as used by Novell NetWare (www.novell.com/documentation/lg/nfs24/docui/index.html#../nfs24enu/data/hk8jrw5x.html).

TABLE 17-1 Access Control Rights as Used by Novell NetWare

In Windows NT/2000/Server 2003, an ACL is associated with each system object. Each ACL has one or more access control entries (ACEs), each consisting of the name of a user or a group of users. The user can also be a role name, such as programmer or tester. For each of these users, groups, or roles, the access privileges are stated in a string of bits called an access mask. Generally, the system administrator or the object owner creates the access control list for an object.

Each ACE identifies a security principal and specifies a set of access rights allowed, denied, or audited for that security principal. An object’s security descriptor can contain two ACLs:

• A discretionary access control list (DACL) that identifies the users and groups who are allowed or denied access

• A system access control list (SACL) that controls how access is audited
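The way ACEs and access masks combine in a DACL can be sketched as follows. This is a simplified illustration, not the Windows implementation: the bit values for `READ`, `WRITE`, and `EXECUTE` are made up and are not the real Windows constants, and the `ACE` class and `check_access` function are hypothetical names. The sketch assumes entries are examined in order, that an applicable deny entry stops the walk, and that anything not explicitly allowed is denied.

```python
from dataclasses import dataclass

# Illustrative bit values only; these are not the real Windows constants.
READ, WRITE, EXECUTE = 0x1, 0x2, 0x4


@dataclass(frozen=True)
class ACE:
    principal: str   # user, group, or role name
    allow: bool      # True for an allow entry, False for a deny entry
    mask: int        # access mask: one bit per right


def check_access(dacl, principals, requested):
    """Walk the DACL in order and decide whether all requested bits are granted."""
    remaining = requested                # rights still waiting to be granted
    for ace in dacl:
        if ace.principal not in principals:
            continue                     # entry does not apply to this caller
        if ace.allow:
            remaining &= ~ace.mask       # this entry grants some rights
            if remaining == 0:
                return True              # every requested right is allowed
        elif ace.mask & remaining:
            return False                 # an applicable deny entry wins
    return False                         # rights never granted are denied


dacl = [
    ACE("contractors", allow=False, mask=WRITE),
    ACE("staff", allow=True, mask=READ | WRITE),
]

alice = {"alice", "staff"}
bob = {"bob", "staff", "contractors"}

alice_rw = check_access(dacl, alice, READ | WRITE)  # granted through "staff"
bob_write = check_access(dacl, bob, WRITE)          # blocked by the deny entry
bob_read = check_access(dacl, bob, READ)            # the deny covers WRITE only
```

Placing the deny entry ahead of the allow entry is what lets it take effect for bob; the outcome in this sketch depends on entry order.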

Access control lists can be further refined into both required and optional settings. This is more precisely carried out with discretionary access control and is implemented by discretionary access control lists (DACLs). The difference between discretionary access control (DAC) and its counterpart, mandatory access control (MAC), is that DAC provides an entity or object with access privileges it can pass to other entities. Depending on the context in which they are used, MAC is also called RBAC (rule-based access control) and DAC is also called IBAC (identity-based access control).

Mandatory access control requires that access control policy decisions be beyond the control of the individual owners of an object. MAC is generally used in systems that require a very high level of security. With MAC, it is only the administrator and not the owner of the resource that may make decisions that bear on or derive from the security policy. Only an administrator may change the category of a resource, and no one may grant a right of access that is explicitly forbidden in the access control policy.

MAC is always prohibitive (i.e., all that is not expressly permitted is forbidden), and not permissive. Only within that context do discretionary controls operate, prohibiting still more access with the same exclusionary principle.
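The layering of the two kinds of control can be sketched as a pair of checks that must both succeed. The names here (`Resource`, `MAC_POLICY`, `grant`, `may_access`) and the category scheme are assumptions for illustration; real mandatory policies are considerably richer.

```python
class Resource:
    def __init__(self, owner, category):
        self.owner = owner
        self.category = category        # assigned by the administrator (MAC)
        self.dac_grants = {owner}       # users the owner has let in (DAC)


# Hypothetical MAC policy, maintained only by the administrator:
# the categories each user is expressly permitted to access.
MAC_POLICY = {"alice": {"payroll", "public"}, "bob": {"public"}}


def grant(resource, acting_user, target_user):
    """DAC: only the owner may pass access on to another user."""
    if acting_user != resource.owner:
        raise PermissionError("only the owner may grant discretionary access")
    resource.dac_grants.add(target_user)


def may_access(user, resource):
    """Allow only if MAC expressly permits it and DAC also grants it."""
    mac_ok = resource.category in MAC_POLICY.get(user, set())
    dac_ok = user in resource.dac_grants
    return mac_ok and dac_ok


salaries = Resource(owner="alice", category="payroll")
memo = Resource(owner="alice", category="public")

grant(salaries, "alice", "bob")   # the owner shares, but MAC still forbids bob
grant(memo, "alice", "bob")       # MAC permits "public", so this grant works
```

Here alice's discretionary grant on `salaries` has no effect for bob, because the mandatory policy does not expressly permit him the payroll category. The grant on `memo` succeeds because both layers agree.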

All of the major operating systems (Solaris, Windows, NetWare, etc.) use DAC. MAC is implemented in more secure, trusted operating systems such as TrustedBSD and Trusted Solaris.

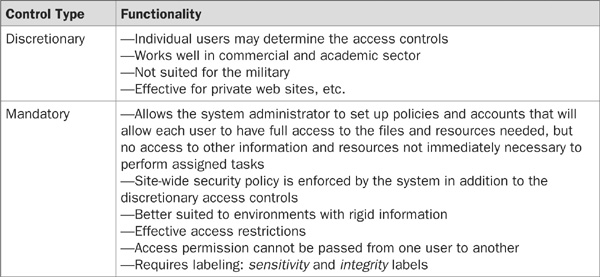

Table 17-2 details the difference in functionality between discretionary and mandatory access control.

TABLE 17-2 The Difference in Functionality Between Discretionary and Mandatory Access Control

Anyone who has studied for the CISSP exam or done postgraduate computer security knows that three of the most famous security models are Bell-LaPadula, Biba, and Clark-Wilson. These three models are heavily discussed and form the foundation of most current operating system models. But practically speaking, most of them are little used in the real world, functioning only as security references.

Those designing operating systems security models have the liberty of picking and choosing from the best of what the famous models have, without being encumbered by their myriad details.

While the Bell-LaPadula model was revolutionary when it was published in 1976 (http://seclab.cs.ucdavis.edu/projects/history/CD/ande72a.pdf), descriptions of its functionality in 2004 are almost anticlimactic. It was one of the first attempts to formalize an information security model, and it was designed to prevent users and processes from reading above their security level. It is used within a data classification system, so that a user or process at a given classification cannot read data associated with a higher classification, because the model focuses on the sensitivity of data according to classification levels.

In addition, this model prevents objects and processes with any given classification from writing data associated with a lower classification. This aspect of the model caused a lot of consternation in the security space. Most operating systems assumed that the need to write below one’s classification level is a necessary function. But the military influence on which Bell-LaPadula was created mandated that this be taken into consideration.
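The two Bell-LaPadula rules described above, no reading above one's level and no writing below it, reduce to two comparisons. The sketch below is a minimal illustration that assumes a simple four-step hierarchy and ignores categories; the `Level` names are chosen for the example.

```python
from enum import IntEnum


class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(subject_level, object_level):
    """No read up: a subject may read only at or below its own level."""
    return subject_level >= object_level


def can_write(subject_level, object_level):
    """No write down: a subject may write only at or above its own level."""
    return subject_level <= object_level
```

A process at `Level.SECRET` can therefore read a `CONFIDENTIAL` file but cannot write to it, which is exactly the restriction that caused the consternation mentioned above.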

In fact, the connection of Bell-LaPadula to the military is so tight that much of the TCSEC (aka the Orange Book) was designed around Bell-LaPadula.

Biba is often known as a reversed version of Bell-LaPadula, as it focuses on integrity labels, rather than sensitivity and data classification. (Bell-LaPadula was designed to keep secrets, not to protect data integrity.)

Biba covers integrity levels, which are analogous to sensitivity levels in Bell-LaPadula, and the integrity levels cover inappropriate modification of data. Biba attempts to preserve the first goal of integrity, namely to prevent unauthorized users from making modifications.
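Biba's reversal of Bell-LaPadula shows up directly when the rules are written as comparisons. The sketch below assumes the strict integrity form of the model and three hypothetical integrity levels; the function names are illustrative.

```python
from enum import IntEnum


class Integrity(IntEnum):
    UNTRUSTED = 0
    USER = 1
    SYSTEM = 2


def can_modify(subject_level, object_level):
    """No write up: a subject may modify only data at or below its integrity."""
    return subject_level >= object_level


def can_observe(subject_level, object_level):
    """No read down: a subject may rely only on data at or above its integrity."""
    return subject_level <= object_level
```

Under these rules a subject at `Integrity.USER` cannot modify `SYSTEM` data, which is the protection against inappropriate modification that the integrity levels are meant to provide.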

Clark-Wilson attempts to define a security model based on accepted business practices for transaction processing. Much more real-world-oriented than the other models described, it articulates the concept of well-formed transactions, which:

• Perform steps in order

• Perform exactly the steps listed

• Authenticate the individuals who perform the steps
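The three properties of a well-formed transaction listed above can be sketched as a small class that refuses anything out of order, unlisted, or unauthenticated. The `WellFormedTransaction` class, the step names, and the boolean `authenticated` flag are assumptions for illustration; a real system would verify identity rather than accept a flag.

```python
class WellFormedTransaction:
    def __init__(self, steps, authorized):
        self.steps = list(steps)        # the exact steps, in required order
        self.authorized = authorized    # step -> set of users allowed to do it
        self.done = []                  # audit trail of (step, user)

    def perform(self, step, user, authenticated):
        if not authenticated:
            raise PermissionError("user is not authenticated")
        if self.complete:
            raise ValueError("transaction already complete")
        expected = self.steps[len(self.done)]
        if step != expected:            # rejects reordered and unlisted steps
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        if user not in self.authorized.get(step, set()):
            raise PermissionError(f"{user} may not perform {step!r}")
        self.done.append((step, user))

    @property
    def complete(self):
        return len(self.done) == len(self.steps)


payment = WellFormedTransaction(
    steps=["create_order", "approve_order", "issue_payment"],
    authorized={
        "create_order": {"clerk"},
        "approve_order": {"manager"},
        "issue_payment": {"cashier"},
    },
)
payment.perform("create_order", "clerk", authenticated=True)
payment.perform("approve_order", "manager", authenticated=True)
payment.perform("issue_payment", "cashier", authenticated=True)
```

Giving each step a different authorized user also illustrates the separation of duties that transaction-oriented business practice typically relies on.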

In 1983, the United States Department of Defense first published the Trusted Computer System Evaluation Criteria (TCSEC) (www.radium.ncsc.mil/tpep/library/rainbow/5200.28-STD.html), part of a series of documents used to classify the security of operating systems. The TCSEC was heavily influenced by Bell-LaPadula and classified systems at levels A through D.

TCSEC was developed to meet three objectives:

• To give users a yardstick for assessing how much they can trust computer systems for the secure processing of classified or other sensitive information

• To guide manufacturers in what to build into their new, widely available trusted commercial products to satisfy trust requirements for sensitive applications

• To provide a basis for specifying security requirements for software and hardware acquisitions

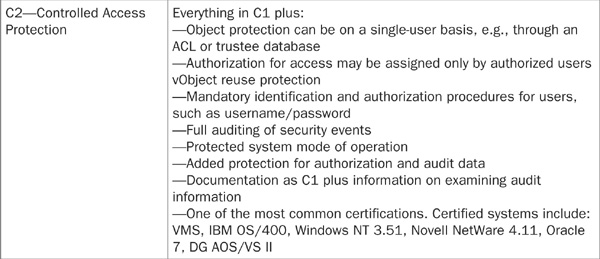

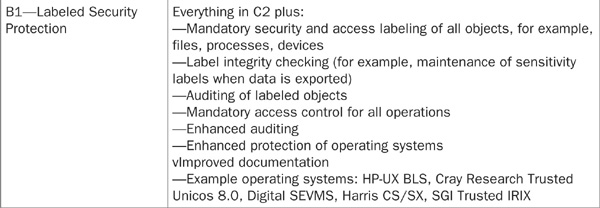

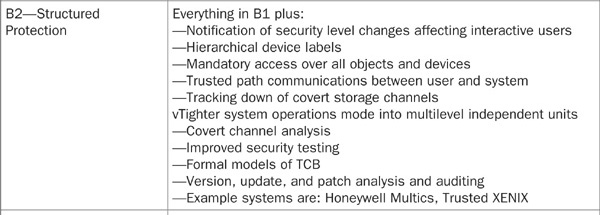

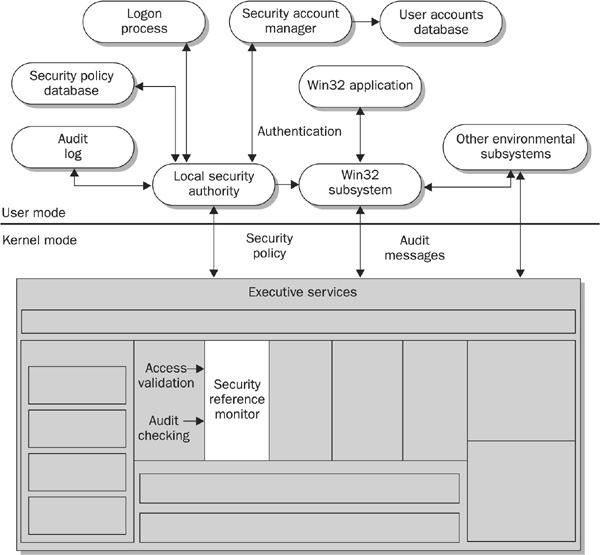

Table 17-3 provides a brief overview of the different classification levels.

TABLE 17-3 Classifications of the Security of Operating Systems

While TCSEC offered a lot of functionality, it was by and large not suitable for the era of client/server computing. Although its objectives were admirable, the client/server computing world was embryonic when the TCSEC was created. Neither Microsoft nor Intel was really on the scene, and no one thought that one day a computer would be on every desktop. In 2004, C2 is a dated, military-based specification that does not work well in the corporate computing environment (see “The Case Against C2,” www.winntmag.com/Articles/Index.cfm?ArticleID=460&pg=1&show=600). Basically, it doesn’t address critical developments in high-level computer security, and it is cumbersome to implement in networked systems.

One of the big secrets of C2 certification was that it was strictly for stand-alone hosts. Those who wanted to extend Orange Book functionality to their networked systems had to apply the requirements of the Trusted Network Interpretation of the TCSEC (TNI), also known as the Red Book (www.radium.ncsc.mil/tpep/library/rainbow/NCSC-TG-005.pdf).

By way of example, those who attempted to run C2config.exe (the C2 Configuration Manager, a utility from the Microsoft NT Resource Kit that configured the host for C2 compliance) were shocked to find out that the utility removed all network connectivity.

One of the main problems with the C2 rating within the TCSEC is that, although it is a good starting point, it was never intended to be the one-and-only guarantee that security measures are up to snuff.

Finally, the coupling of assurance and functionality is really what brought down the TCSEC. Most corporate environments do not have enough staff to support the assurance levels that TCSEC required. The lack of consideration of networks and connectivity also played a huge role, as client/server computing is what brought information technology into the mainstream.

TCSEC makes heavy use of the concept of labels. Labels are simply security-related information that has been associated with objects such as files, processes, or devices. The ability to associate security labels with system objects is also under security control.

Sensitivity labels, used to define the level of data classification, are composed of a sensitivity level and possibly some number of sensitivity categories. The number of sensitivity levels available is dependent on the specific operating system.

In a commercial environment, the label attribute could be used to classify, for example, levels of a management hierarchy. Each file or program has one hierarchical sensitivity level. A user may be allowed to use several different levels, but only one level may be used at any given time.
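A sensitivity label made of one hierarchical level plus a set of categories, and the rule that a user works at only one level at a time, can be sketched as follows. The `SensitivityLabel` and `Session` classes, the integer levels, and the category names are assumptions for illustration and do not correspond to any specific operating system's label format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensitivityLabel:
    level: int                           # hierarchical; higher is more sensitive
    categories: frozenset = frozenset()  # non-hierarchical compartments

    def dominates(self, other):
        """True if this label is at least as high and covers every category."""
        return self.level >= other.level and self.categories >= other.categories


class Session:
    """A user cleared for several levels who works at one level at a time."""

    def __init__(self, allowed_levels, categories):
        self.allowed_levels = set(allowed_levels)
        self.categories = frozenset(categories)
        self.current = None              # no level chosen until login_at()

    def login_at(self, level):
        if level not in self.allowed_levels:
            raise PermissionError("level not permitted for this user")
        self.current = SensitivityLabel(level, self.categories)

    def can_view(self, file_label):
        return self.current is not None and self.current.dominates(file_label)


manager = Session(allowed_levels={1, 2}, categories={"finance"})
manager.login_at(1)
budget = SensitivityLabel(2, frozenset({"finance"}))
visible_at_level_1 = manager.can_view(budget)   # working level is too low
manager.login_at(2)
visible_at_level_2 = manager.can_view(budget)   # same user, higher working level
```

The same user sees different files depending on the level chosen for the session, which matches the management-hierarchy example above.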

While the sensitivity labels identify whether a user is cleared to view certain information, integrity labels identify whether data is reliable enough for a specific user to see. An integrity label is composed of an integrity grade and some number of integrity divisions.

The number of hierarchical grades to classify the reliability of information is dependent on the operating system.

TCSEC is not alone in requiring the use of labels; other regulations and standards, such as the Common Criteria (Common Criteria for IT Security Evaluation, ISO Standard 15408), also require security labels.

There are many other models around, including the Chinese Wall (seeks to prevent information flow that can cause a conflict of interest), Take-Grant (a model that helps in determining the protection rights, for example, read or write, in a computer system), and more. But in practice, none of these models has found favor in contemporary operating systems (Linux, Unix, Windows, etc.)—they are overly restrictive and reflect the fact that they were designed before the era of client/server computing.

Current architects of operating systems are able to use these references as models, pick and choose the best they have to offer, and design their systems accordingly.

In this section, we will discuss the reference monitor concept and how it fits into today’s security environment.

The Computer Security Technology Planning Study Panel called together by the United States Air Force developed the reference monitor concept in 1972. They were brought together to combat growing security problems in a shared computer environment.

In 1972, they were unable to come up with a fail-safe solution; however, they were responsible for reshaping the direction of information security today. A copy of the Computer Security Technology Planning Study can be downloaded from http://seclab.cs.ucdavis.edu/projects/history/CD/ande72a.pdf.

The National Institute of Standards and Technology (www.nist.gov) describes the Reference Monitor Concept as an object that maintains the access control policy. It does not actually change the access control information; it only provides information about the policy.

The security reference monitor is a separable module that enforces access control decisions and security processes for the operating system. All security operations are routed through the reference monitor, which decides if the specific operation should be permitted or denied.

Perhaps the main benefit of a reference model is that it can provide an abstract model of the required properties that the security system and its access control capabilities must enforce.

The main elements of an effective reference monitor are that it is

• Always on Security must be implemented consistently and at all times for the entire system, for every file and object.

• Not subject to preemption Nothing should be able to preempt the reference monitor. If this were not the case, then it would be possible for an entity to bypass the mechanism and violate the policy that must be enforced.

• Tamperproof It must be impossible for an attacker to attack the access mediation mechanism such that the required access checks are not performed and authorizations not enforced.

• Lightweight It must be small enough to be subject to analysis and tests, to prove its effectiveness.
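The idea of routing every operation through one small decision point can be sketched in Python. This is a conceptual illustration only: the `ReferenceMonitor` and `GuardedStore` classes and the policy format are assumptions, and Python itself cannot make the monitor tamperproof or non-bypassable the way a kernel-level mechanism can.

```python
class ReferenceMonitor:
    """Small, separable module that decides every access request."""

    def __init__(self, policy):
        self._policy = policy      # {(subject, object): set of operations}
        self.audit_log = []        # every decision is recorded

    def authorize(self, subject, obj, operation):
        allowed = operation in self._policy.get((subject, obj), set())
        verdict = "PERMIT" if allowed else "DENY"
        self.audit_log.append((subject, obj, operation, verdict))
        return allowed


class GuardedStore:
    """Objects that are reachable only by going through the monitor."""

    def __init__(self, monitor, data):
        self._monitor = monitor
        self._data = data

    def read(self, subject, name):
        if not self._monitor.authorize(subject, name, "read"):
            raise PermissionError(f"{subject} may not read {name}")
        return self._data[name]

    def write(self, subject, name, value):
        if not self._monitor.authorize(subject, name, "write"):
            raise PermissionError(f"{subject} may not write {name}")
        self._data[name] = value


monitor = ReferenceMonitor({("alice", "payroll.db"): {"read", "write"},
                            ("bob", "payroll.db"): {"read"}})
store = GuardedStore(monitor, {"payroll.db": "v1"})

store.write("alice", "payroll.db", "v2")
value_seen_by_bob = store.read("bob", "payroll.db")
```

Keeping the decision logic in one short method is what makes it practical to analyze and test, which is the point of the lightweight requirement above.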

While few reference models have been used in their native state, as Cynthia Irvine of the Naval Postgraduate School writes in “The Reference Monitor Concept as a Unifying Principle in Computer Security Education” (www.cs.nps.navy.mil/people/faculty/irvine/publications/1999/wise99_RMCUnifySecEd.pdf), for over twenty-five years, the reference monitor concept has proved itself to be a useful tool for computer security practitioners. It can also be used as a conceptual tool in computer security education.

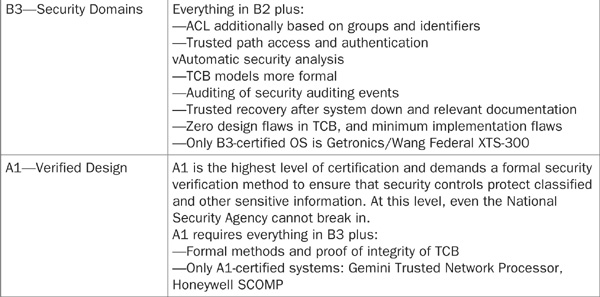

Once again, we are looking at Windows NT/2000/XP, because it is currently one of the most deployed and supported operating systems in production.

The Windows Security Reference Monitor (SRM) is responsible for validating Windows process access permissions against the security descriptor for a given object. The Object Manager then in turn uses the services of the SRM while validating the process’s request to access any object.

Windows NT/2000/XP is clearly not a bulletproof operating system, as is evident from the number of security advisories in 2003 alone. In fact, it is full of security holes. But the fact that it is the most popular operating system in use in corporate settings, and that Microsoft has been for the most part open with its security functionality, makes it a good case study for a real-world example of how an operating system security model should operate.

Figure 17-1 illustrates the Windows NT Security Monitor (www.microsoft.com/technet/treeview/default.asp?url=/technet/prodtechnol/ntwrkstn/reskit/security.asp).

FIGURE 17-1 The Windows NT Security Monitor

Versions of Windows before NT had absolutely no security. Any so-called security was ineffective against even the most benign threat. That all changed with the introduction of Windows NT.

Way back in the early 1990s, Microsoft saw that security was crucial to any new operating system. Windows NT and its derivatives Windows 2000, Windows Server 2003, and Windows XP employ a modular architecture, which means that each component provided within it has the responsibility for the functions that it supports.

While slightly dated, a detailed look at the foundations of the Windows NT Security architecture can be found in David A. Solomon, Inside Windows NT 2/e (Redmond, Washington: Microsoft Press, 1998). A more current look can be found in Ben Smith, et al., Microsoft Windows Security Resource Kit (Redmond, Washington: Microsoft Press, 2003) and Ed Bott and Carl Siechert, Microsoft Windows Security Inside Out for Windows XP and Windows 2000 (Redmond, Washington: Microsoft Press, 2002).

Before going into detail about the security monitor, you need a little background on Windows NT.

The base functionality in Windows NT is facilitated via the Windows NT Executive. This subsystem is what provides system services to software applications and programs. It is somewhat similar to the Linux kernel, but in Windows, it includes significantly more functionality than other operating system kernels.

The Windows NT Security Reference Monitor (SRM) is a service of the Windows NT Executive. It is the SRM that checks the DACL for any request and then either grants permission to the request or denies the request.

It is crucial to know that since the SRM is at the kernel level, it controls access to any objects above it. This ensures that objects can’t bypass the security functionality.

Windows’ use of the DACL ensures that security can be implemented at a very granular level, all the way down to specific users. In fact, some people are put off by how granular Windows NT is, with all of its groups and operator settings.

For many years, people would never use Microsoft and security in the same sentence. But all of that started to change in early 2002 with Microsoft’s Trustworthy Computing initiative. On January 15, 2002, Bill Gates sent a memo to all employees of Microsoft stating that security was the highest priority for all the work Microsoft was doing (http://zdnet.com.com/2100-1104-817343.html).

Gates wants Microsoft’s customers to be able to rely on Microsoft systems to secure their information, and he wants those systems to be as readily available as electricity, water services, and telephony.

Gates notes that “today, in the developed world, we do not worry about electricity and water services being available. With telephony, we rely both on its availability and its security for conducting highly confidential business transactions without worrying that information about who we call or what we say will be compromised. Computing falls well short of this, ranging from the individual user who isn’t willing to add a new application because it might destabilize their system, to a corporation that moves slowly to embrace e-business because today’s platforms don’t make the grade.”

When Gates sent the memo to all 50,000 employees of Microsoft, declaring that effective immediately security would take priority over features, many took it as a PR stunt. This e-mail came out during the development of Windows XP, and shortly after that, all development at Microsoft was temporarily halted to allow the developers to go through security training. While it will take years for Trustworthy Computing to come to fruition, security at last was going prime time.

The four goals to the Trustworthy Computing initiative are:

• Security As a customer, you can expect systems to withstand attack. In addition, data is protected to prevent availability problems and corruption.

• Privacy You have the ability to control information about yourself and maintain privacy of data sent across the network.

• Reliability When you need your system or data, they are available.

• Business integrity The vendor of a product acts in a timely and responsible manner, releasing security updates when a vulnerability is found.

In order to track and assure its progress in complying with the Trustworthy Computing initiative, Microsoft created a framework to explain its objectives: that its products be secure by design, secure by default, and secure in deployment, and that it provide communications (SD3+C).

Secure by design simply means that all vulnerabilities are resolved prior to shipping the product. Secure by design requires three steps.

• Build a secure architecture This is imperative. From the beginning, software needs to be designed with security in mind first and then features.

• Add security features Feature sets need to be added to deal with new security vulnerabilities.

• Reduce the number of vulnerabilities in new and existing code The internal process at Microsoft is being revamped to make developers more conscious of security issues while designing and developing software.

Secure in deployment means ongoing protection, detection, defense, recovery, and maintenance through good tools and guidance.

Communications is the key to the whole project. How quickly can Microsoft get the word out that there is a vulnerability and help you to understand how to operate your system with enhanced security? You can find more about this Microsoft platform at www.microsoft.com/security/whitepapers/secure_platform.asp.

Trustworthy Computing cannot be accomplished by software design alone. As an administrator of whatever type of network, you have a duty to ensure that your systems are properly patched and configured against known problems. Nimda and Code Red are two prime examples of vulnerabilities for which adequate forewarning and known preventive action (patching and configuration) were communicated but not taken seriously. Nimda and Code Red taught many system administrators that their first order of daily business was to check Microsoft security bulletins and other security sites and to perform vulnerability assessments before checking their favorite sports site.

So how effective is Trustworthy Computing? Noted security expert Bruce Schneier writes in his CryptoGram newsletter (www.counterpane.com./crypto-gram-0202.html#1), “Bill Gates is correct in stating that the entire industry needs to focus on achieving trustworthy computing. He’s right when he says that it is a difficult and long-term challenge, and I hope he’s right when he says that Microsoft is committed to that challenge. I don’t know for sure, though. I can’t tell if the Gates memo represents a real change in Microsoft, or just another marketing tactic. Microsoft has made so many empty claims about their security processes—and the security of their processes—that when I hear another one I can’t help believing it’s more of the same flim-flam…. But let’s hope that the Gates memo is more than a headline grab, and represents a sea change within Microsoft. If that’s the case, I applaud the company’s decision.”

Anyone who thinks that security can be implemented in a matter of months is incorrect. A company like Microsoft that has billions of lines of software code to maintain also can’t expect to secure such legacy code, even in a matter of years. Security is a long and arduous and often thankless process. But at least Microsoft is moving in the right direction.

Until the early 1990s, information security was something that, for the most part, only the military and some financial services organizations took seriously. But in the post–September 11 era, all of that has radically changed.

While the post–9/11 era has not turned into the information security boon that was predicted, it nonetheless raised the level of interest in information security by a few notches. Security is a very slow process, and it will be years (perhaps decades) until corporate America and end users are at the level of security that they should be.

The only area that really saw a huge jump post-9/11 was that of physical security. But from an information security and privacy perspective, the jump was simply incremental.

While Microsoft’s Trustworthy Computing initiative of 2002 was a major story, a lot of the momentum for information security started years earlier. And one of the prime forces has been the Common Criteria.

The need for a common information security standard is obvious. Security means many different things to different people and organizations. But a subjective notion of security cannot be evaluated objectively. So common criteria were needed to evaluate the security of an information technology product.

The need for common agreement is clear. When you buy a DVD, put gas in your car, or make an online purchase from an e-commerce site, all of these function because they operate in accordance with a common set of standards and guidelines.

And that is precisely what the Common Criteria are meant to be, a global security standard. This ensures that there is a common mechanism for evaluating the security of technology products and systems. By providing a common set of requirements for comparing the security functions of software and hardware products, the Common Criteria enable users to have an objective yardstick by which to evaluate the security of a product.

With that, Common Criteria certification is slowly but increasingly being used as a touchstone for many Requests for Proposals, primarily in the government sector. By offering a consistent, rigorous, and independently verifiable set of evaluation requirements for hardware and software, Common Criteria certification is intended to be the Good Housekeeping seal of approval for the information security sector.

But what is especially historic about the Common Criteria is that this is the first time governments around the world have united in support of an information security evaluation program.

In the U.S., the Common Criteria have their roots in the Trusted Computer System Evaluation Criteria (TCSEC), also known as the Orange Book. But by the early 1990s, it was clear that TCSEC was not viable for the new world of client/server computing. The main problem with TCSEC was that it was not accommodating to new computing paradigms.

And with that, TCSEC as it was known is dead (see NSTISSAM COMPUSEC/1-99: “Transition from the Trusted Computer System Evaluation Criteria to the International Common Criteria for Information Technology Security Evaluation,” available at www.nstissc.gov/Assets/pdf/nstissam_compusec_1-99.pdf). The very last C2 and B1 evaluations performed by NSA under the Orange Book itself were completed and publicly announced at the NISSC conference in October 2000 (http://csrc.nist.gov/nissc/program/features.htm). However, the C2 and B1 classes have been converted to protection profiles under the Common Criteria, and C2 and B1 evaluations are still being performed by commercial laboratories under the Common Criteria. According to the TPEP web site, NSA is still willing to perform Orange Book evaluations at B2 and above, but most vendors prefer to evaluate against newer standards cast as Common Criteria protection profiles.

Another subtle point is that the Orange Book and the Common Criteria are not exactly the same types of documents. Whereas the Orange Book is a set of requirements that reflect the practice and policies of a specific community (i.e., the U.S. Department of Defense and later the national security community), the Common Criteria are policy-independent and can be used by many organizations (including those in the DoD and the NSC) to articulate their security requirements.

In Europe, the Information Technology Security Evaluation Criteria (ITSEC), already in development in the early 1990s, were published in 1991 by the European Commission. This was a joint effort with representatives from France, Germany, the Netherlands, and the United Kingdom contributing.

Simultaneously, the Canadian government created the Canadian Trusted Computer Product Evaluation Criteria as an amalgamation of the ITSEC and TCSEC approaches. In the United States, the draft of the Federal Criteria for Information Technology Security was published in 1993, in an attempt to combine the various methods for evaluation criteria.

With so many different approaches going on at once, there was consensus to create a common approach. At that point, the International Organization for Standardization (ISO) began to develop a new set of standard evaluation criteria for general use that could be used internationally. The new methodology is what later became the Common Criteria.

The goal was to unite the various international and diverse standards into new criteria for the evaluation of information technology products. This effort ultimately led to the development of the Common Criteria, now an international standard in ISO 15408:1999. (The official name of the standard is the International Common Criteria for Information Technology Security Evaluation.)

Common Criteria version 2.1 (as of September 2003) is the current version of the Common Criteria. Version 2.1 consists of three distinct but related parts, each an individual document. These are the three parts of the Common Criteria:

• Part 1 (61 pages) is the introduction to the Common Criteria. It defines the general concepts and principles of information technology security evaluation and presents a general model of evaluation. Part 1 also presents the constructs for expressing information technology security objectives, for selecting and defining information technology security requirements, and for writing high-level specifications for products and systems. In addition, the usefulness of each part of the Common Criteria is described in terms of each of the target audiences.

• Part 2 (362 pages) details the specific security functional requirements and defines a standard way of expressing them for Targets of Evaluation (TOEs).

• Part 3 (216 pages) details the security assurance requirements and defines a set of assurance components as a standard way of expressing the assurance requirements for a TOE. Part 3 lists the set of assurance components, families, and classes and defines evaluation criteria for protection profiles (PPs) and security targets (STs). A protection profile is a set of security requirements for a category of TOE (see www.commoncriteria.org/protection_profiles); a security target is the set of security requirements and specifications to be used as the basis for evaluation of an identified TOE. Part 3 also presents the evaluation assurance levels (EALs), the predefined Common Criteria scale for rating assurance for a TOE.

Protection profiles (PPs) and security targets (STs) are two building blocks of the Common Criteria.

A protection profile defines a standard set of security requirements for a specific type of product (for example, operating systems, databases, or firewalls). These profiles form the basis for the Common Criteria evaluation. By listing required security features for product families, the Common Criteria allow products to state conformity to a relevant protection profile. During Common Criteria evaluation, the product is tested against a specific PP, providing reliable verification of the security capabilities of the product.

The overall purpose of Common Criteria product certification is to provide end users with a significant level of trust. Before a product can be submitted for certification, the vendor must first specify an ST. The ST description includes an overview of the product, potential security threats, detailed information on the implementation of all security features included in the product, and any claims of conformity against a PP at a specified EAL.

The vendor must submit the ST to an accredited testing laboratory for evaluation. The laboratory then tests the product to verify the described security features and evaluate the product against the claimed PP. The end result of a successful evaluation includes official certification of the product against a specific protection profile at a specified evaluation assurance level.
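The relationship between a protection profile, a security target, and an evaluation assurance level can be illustrated with a small data model. The following Python sketch is only an illustration, not part of the Common Criteria themselves: the class names, the idea of attaching a minimum EAL to the profile, and the sample requirement identifiers are assumptions made for the example. It shows the kind of consistency check a vendor might run on its own claims before submitting an ST to a laboratory; it does not represent the laboratory's actual evaluation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionProfile:
    """Security requirements for a category of product (hypothetical model)."""
    name: str
    requirements: frozenset  # requirement identifiers, illustrative only
    eal: int                 # assurance level the profile calls for (assumed)


@dataclass(frozen=True)
class SecurityTarget:
    """A vendor's claims for one identified product (hypothetical model)."""
    product: str
    requirements: frozenset
    claimed_pp: ProtectionProfile
    claimed_eal: int


def missing_requirements(st):
    """Return the PP requirements that the security target does not address."""
    return st.claimed_pp.requirements - st.requirements


def claim_is_consistent(st):
    """True if the ST covers every PP requirement at a sufficient EAL."""
    if not 1 <= st.claimed_eal <= 7:  # EALs run from EAL1 to EAL7
        raise ValueError("EAL must be between 1 and 7")
    return not missing_requirements(st) and st.claimed_eal >= st.claimed_pp.eal


# Illustrative data; the products and the profile are invented for this sketch.
os_profile = ProtectionProfile(
    name="Example operating system profile",
    requirements=frozenset({"FAU_GEN.1", "FIA_UAU.2", "FDP_ACC.1"}),
    eal=3,
)
st_complete = SecurityTarget(
    product="ExampleOS 1.0",
    requirements=frozenset({"FAU_GEN.1", "FIA_UAU.2", "FDP_ACC.1", "FIA_UID.2"}),
    claimed_pp=os_profile,
    claimed_eal=4,
)
st_incomplete = SecurityTarget(
    product="ExampleOS Lite",
    requirements=frozenset({"FAU_GEN.1"}),
    claimed_pp=os_profile,
    claimed_eal=3,
)
```

Calling claim_is_consistent(st_complete) succeeds because the target addresses every requirement in the profile at an equal or higher assurance level, whereas missing_requirements(st_incomplete) lists the gaps that would have to be closed before a conformity claim could be made.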

Examples of various protection profiles can be found at:

• NSA PP for firewalls and a peripheral sharing switch www.radium.ncsc.mil/tpep/library/protection_profiles/index.html

• IATF PP for firewalls, VPNs, peripheral sharing switches, remote access, multiple domain solutions, mobile code, operating systems, tokens, secured messaging, PKI and KMI, and IDS www.nsff.org/protection_profiles/profiles.cfm

• NIST PP for smart cards, an operating system, role-based access control, and firewalls http://niap.nist.gov/cc-scheme/PPRegistry.html

While there are huge benefits to the Common Criteria, there are also problems with this approach. The point of this section is not to detail those problems, but in a nutshell, some of the main issues are:

• Administrative overhead: The overhead involved with gaining certification takes a huge amount of time and resources.

• Expense: Gaining certification is extremely expensive.

• Labor-intensive certification: The certification process takes many, many weeks and months.

• Need for skilled and experienced analysts: Availability of information security professionals with the required experience is still lacking.

• Room for various interpretations: The Common Criteria leave room for various interpretations of what the standard is attempting to achieve.

• Paucity of Common Criteria testing laboratories: There are only seven laboratories in the U.S.A.

• Length of time to become a Common Criteria testing laboratory: Even for those organizations that are interested in becoming certified, that process in and of itself takes quite a while.

Another global standard is ISO 17799:2000, “Code of Practice for Information Security Management.” The goal of this standard is similar to that of the Common Criteria. It is meant to provide a framework within which organizations can assess the relevance and suitability of information security controls to their organization.

ISO 17799 differs from the Common Criteria in that the goal of the Common Criteria is to provide a basis for evaluating IT security products, while ISO 17799 is intended to help organizations improve their information security compliance posture. Another subtle difference is that Common Criteria documentation is available at no charge (at www.commoncriteria.org/index_documentation.htm), whereas ISO 17799:2000 costs about $150.00 (from http://bsonline.techindex.co.uk). With ISO 17799:2000, organizations are able to benchmark their security arrangements against the standard to ensure that the management and controls that they have in place to protect and manage information are effective and in line with accepted best practice. Managing security to an international standard gives an organization’s staff, clients, customers, and trading partners confidence that their information is held and managed by the organization in a secure manner.

ISO 17799 is quite comprehensive. A sample checklist for 17799 compliance is included in its Appendix.

ISO 17799 started out as British Standard BS7799. ISO 17799:2000 (Part 1), which is also Part 1 of BS7799, details ten security domains containing 36 security control objectives and 127 security controls that are either essential requirements or considered to be fundamental building blocks for information security. The security domains are:

1. Security policy

2. Organizational security

3. Asset classification and control

4. Personnel security

5. Physical and environmental security

6. Communications and operations management

7. Access control

8. Systems development and maintenance

9. Business continuity management

10. Compliance

ISO 17799 stresses the importance of risk management and makes it clear that organizations have to implement only those guidelines (security controls) that are relevant to them. Since ISO 17799 is so comprehensive, organizations should not make the mistake of thinking that they have to implement everything.
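The idea of implementing only the relevant controls can be made concrete with a short sketch. The Python example below is an illustration under stated assumptions: the ten domain names come from the list above, but the candidate controls, the risk labels, and the select_controls function are hypothetical and are not taken from the standard. It simply keeps the controls that address a risk identified in the organization's own risk assessment and groups them by domain.

```python
from collections import defaultdict

# The ten ISO 17799:2000 security domains listed in this chapter.
ISO_17799_DOMAINS = (
    "Security policy",
    "Organizational security",
    "Asset classification and control",
    "Personnel security",
    "Physical and environmental security",
    "Communications and operations management",
    "Access control",
    "Systems development and maintenance",
    "Business continuity management",
    "Compliance",
)

# Hypothetical controls: (domain, control description, risk addressed).
CANDIDATE_CONTROLS = [
    ("Access control", "Review user access rights periodically",
     "unauthorized access"),
    ("Physical and environmental security", "Restrict entry to the server room",
     "equipment theft"),
    ("Business continuity management", "Test the recovery plan annually",
     "prolonged outage"),
    ("Systems development and maintenance", "Validate input data in applications",
     "in-house software defects"),
]


def select_controls(controls, assessed_risks):
    """Group by domain the controls that address a risk the organization has."""
    selected = defaultdict(list)
    for domain, control, risk in controls:
        if domain not in ISO_17799_DOMAINS:
            raise ValueError(f"unknown domain: {domain}")
        if risk in assessed_risks:
            selected[domain].append(control)
    return dict(selected)


# Risks an imaginary organization identified in its risk assessment.
assessed_risks = {"unauthorized access", "prolonged outage"}
plan = select_controls(CANDIDATE_CONTROLS, assessed_risks)
```

In this example the organization develops no software in-house and rents space in a managed facility, so the resulting plan contains controls from only two of the ten domains. A real selection would rest on a documented risk assessment rather than a simple label match.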

One last standard worth a look is COBIT (www.isaca.org/cobit) from the Information Systems Audit and Control Association (ISACA).

COBIT, similar to ISO 17799, was developed to be used as a framework for a generally applicable and accepted standard for effective information technology security and control practices. COBIT is meant to provide a reference framework for management and for audit and security staff.

The COBIT Management Guidelines component contains a framework responding to management’s need for control and measurability of IT by providing tools to assess and measure the enterprise’s IT capability for the 34 COBIT IT processes.

As we have seen, the security reference monitor is a critical aspect of the underlying operating system’s security functionality. Since all security functionality is architected, specified, and detailed in the operating system, it is the foundation of all security above it.

Understanding how this functionality works, and how it is tied specifically to the operating system in use within your organization, is crucial to ensuring that information security is maximized.

Here are some additional security references available on the Web:

Windows 2000 Security Services Features www.microsoft.com/windows2000/server/evaluation/features/security.asp

Windows 2000 Default Access Control Settings www.microsoft.com/windows2000/techinfo/planning/security/secdefs.asp

Computer Security Technology Planning Guide http://seclab.cs.ucdavis.edu/projects/history/CD/ande72a.pdf

Windows 2000 Deployment Guide—Access Control www.microsoft.com/technet/treeview/default.asp?url=/technet/prodtechnol/windows2000serv/reskit/distsys/part2/dsgch12.asp

Trusted Computer System Evaluation Criteria (TCSEC) http://csrc.ncsl.nist.gov/publications/history/dod85.pdf

Administering Trustworthy Computing www.microsoft.com/technet/treeview/default.asp?url=/technet/prodtechnol/windowsserver2003/proddocs/datacenter/comexp/adsecuritybestpractices_0spz.asp

NIAP Common Criteria Scheme home page http://niap.nist.gov/cc-scheme

International Common Criteria information portal www.commoncriteria.org

Common Criteria Overview www.commoncriteria.org/introductory_overviews/CCIntroduction.pdf