Those of us who look in the mirror each morning see something that few other animals ever recognize: ourselves. Some of us smile at our image and blow ourselves a kiss; others rush to cover the disaster with makeup or to shave, lest we appear unkempt. Either way, as animals go, the human reaction is an odd one. We have it because somewhere along the path of evolution we humans became self-aware. Even more important, we began to have a clear understanding that the face we see in reflections will in time grow wrinkles, sprout hair in embarrassing places, and, worst of all, cease to exist. That is, we had our first intimations of mortality.

Our brain is our mental hardware, and it was for purposes of survival that we developed one with the capacity to think symbolically and to question and reason. But hardware, once you have it, can be put to many uses, and as our Homo sapiens sapiens imagination leapt forward, the realization that we will all die helped turn our brains toward existential questions such as “Who is in charge of the cosmos?” This is not a scientific question per se, yet the road to questions like “What is an atom?” began with such queries, as well as more personal ones, like “Who am I?” and “Can I alter my environment to suit me?” It is when we humans rose above our animal origins and began to make these inquiries that we took our next step forward as a species whose trademark is to think and question.

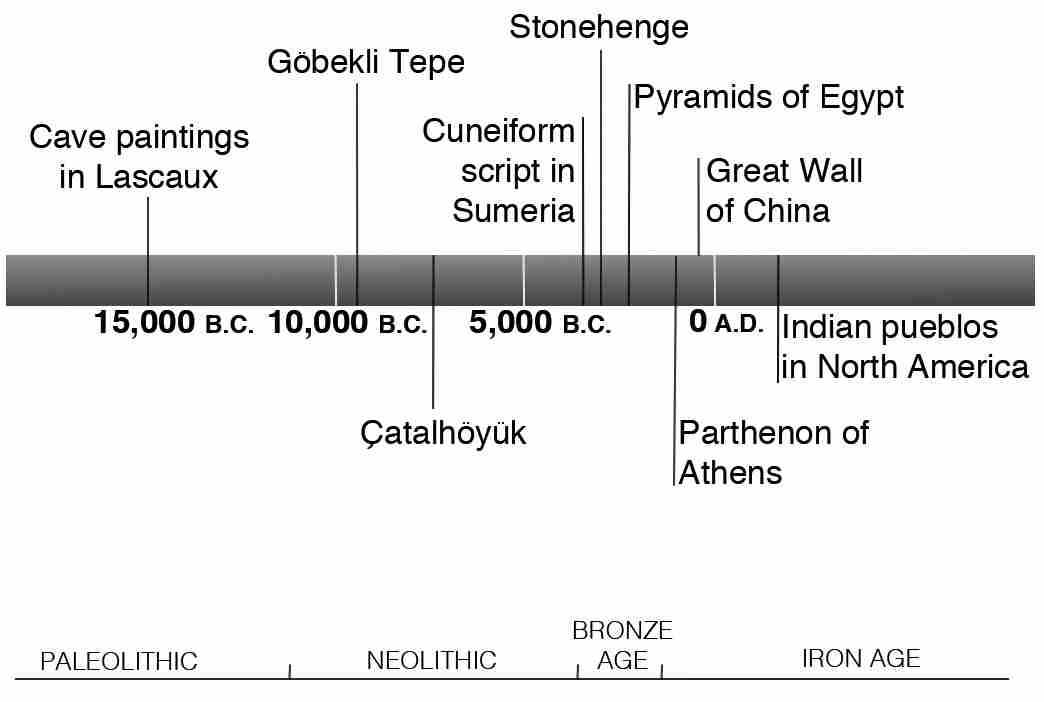

The change in human thought processes that led us to consider these issues probably simmered for tens of thousands of years, beginning around the time—probably forty thousand years ago or thereabouts—when our subspecies began to manifest what we think of as modern behaviors. But it didn’t boil over until about twelve thousand years ago, around the end of the last ice age. Scientists call the two million years leading up to that period the Paleolithic era, and the following seven or eight thousand years the Neolithic era. The names come from the Greek words palaio, meaning “old,” neo, meaning “new,” and lithos, meaning “stone”—in other words, the Old Stone Age (Paleolithic) and the New Stone Age (Neolithic), both of which were characterized by the use of stone tools. Though we call the sweeping change that took us from the Old to the New Stone Age the “Neolithic revolution,” it wasn’t about stone tools. It was about the way we think, the questions we ask, and the issues of existence that we consider important.

Paleolithic humans migrated often, and, like my teenagers, they followed the food. The women gathered plants, seeds, and eggs, while the men generally hunted and scavenged. These nomads moved seasonally—or even daily—keeping few possessions, chasing the flow of nature’s bounty, enduring her hardships, and living always at her mercy. Even so, the abundance of the land was sufficient to support only about one person per square mile, so for most of the Paleolithic era, people lived in small wandering groups, usually numbering fewer than a hundred individuals. The term “Neolithic revolution” was coined in the 1920s to describe the transition from that lifestyle to a new existence in which humans began to settle into small villages consisting of one or two dozen dwellings, and to shift from gathering food to producing it.

With that shift came a movement toward actively shaping the environment rather than merely reacting to it. Instead of simply living off the bounty nature laid before them, the people living in these small settlements now collected materials with no intrinsic worth in their raw form and remade them into items of value. For example, they built homes from wood, mud brick, and stone; forged tools from naturally occurring metallic copper; wove twigs into baskets; twisted fibers gleaned from flax and other plants and animals into threads and then wove those threads into clothing that was lighter, more porous, and more easily cleaned than the animal hides people had formerly worn; and formed and fired clay into pots and pitchers that could be used for cooking or storing surplus food products.

At face value, the invention of objects like clay pitchers seems to represent nothing deeper than the realization that it is hard to carry water around in your pocket. And indeed, until recently many archaeologists thought that the Neolithic revolution was merely an adaptation aimed at making life easier. Climate change at the end of the last ice age, ten to twelve thousand years ago, resulted in the extinction of many large animals and altered the migration patterns of others. This, it was assumed, had put pressure on the human food supply. Some also speculated that the number of humans had finally grown to the point that hunting and gathering could no longer support the population. Settled life and the development of complex tools and other implements were, in this view, a reaction to these circumstances.

But there are problems with this theory. For one, malnutrition and disease leave their signature on bones and teeth. During the 1980s, however, research on skeletal remains from the period preceding the Neolithic revolution revealed no such damage, which suggests that people of that era were not suffering nutritional deprivation. In fact, paleontological evidence suggests that the early farmers had more spinal issues, worse teeth, and more anemia and vitamin deficiencies—and died younger—than the populations of human foragers who preceded them. What’s more, the adoption of agriculture seems to have been gradual, not the result of any widespread climatic catastrophe. Besides, many of the first settlements showed no sign of having domesticated plants or animals.

We tend to think of humanity’s original foraging lifestyle as a harsh struggle for survival, like a reality show in which starving contestants live in the jungle and are driven to eat winged insects and bat guano. Wouldn’t life be better if the foragers could get tools and seeds from the Home Depot and plant rutabagas? Not necessarily, for, based on studies of the few remaining hunters and gatherers who lived in untouched, unspoiled parts of Australia and Africa as late as the 1960s, it seems that the nomadic societies of thousands of years ago may have had a “material plenty.”

Typically, nomadic life consists of settling temporarily and remaining in place until food resources within a convenient range of camp are exhausted. When that happens, the foragers move on. Since all possessions must be carried, nomadic peoples value small goods over larger items, are content with few material goods, and in general have little sense of property or ownership. These aspects of nomadic culture made them appear poor and wanting to the Western anthropologists who first began to study them in the nineteenth century. But nomads do not, as a rule, face a daunting struggle for food or, more generally, to survive. In fact, studies of the San people (also called Bushmen) in Africa revealed that their food-gathering activities were more efficient than those of farmers in pre–World War II Europe, and broader research on hunter-gatherer groups ranging from the nineteenth to the mid-twentieth centuries shows that the average nomad worked just two to four hours each day. Even in the scorched Dobe area of Africa, with a yearly rainfall of just six to ten inches, food resources were found to be “both varied and abundant.” Primitive farming, by contrast, requires long hours of backbreaking work—farmers must move stones and rock, clear brush, and break up hard land using only the most rudimentary of tools.

Such considerations suggest that the old theories of the reason for human settlement don’t tell the whole story. Instead, many now believe that the Neolithic revolution was not, first and foremost, a revolution inspired by practical considerations, but rather a mental and cultural revolution, fueled by the growth of human spirituality. That viewpoint is supported by perhaps the most startling archaeological discovery of modern times, a remarkable piece of evidence suggesting that the new human approach to nature didn’t follow the development of a settled lifestyle but rather preceded it. That discovery is a grand monument called Göbekli Tepe, Turkish for what it looked like before it was excavated: “hill with a potbelly.”

Göbekli Tepe is located on the summit of a hill in what is now the Urfa Province in southeastern Turkey. It is a magnificent structure, built 11,500 years ago—7,000 years before the Great Pyramid—through the herculean efforts not of Neolithic settlers but of hunter-gatherers who hadn’t yet abandoned the nomadic lifestyle. The most astounding thing about it, though, is the use for which it was constructed. Predating the Hebrew Bible by about 10,000 years, Göbekli Tepe seems to have been a religious sanctuary.

The pillars at Göbekli Tepe were arranged in circles as large as sixty-five feet across. Each circle had two additional, T-shaped pillars in its center, apparently humanoid figures with oblong heads and long, narrow bodies. The tallest of them stand eighteen feet high. Building the monument required transporting massive stones, some weighing as much as sixteen tons. Yet it was accomplished prior to the invention of metal tools, prior to the invention of the wheel, and before people learned to domesticate animals as beasts of burden. What’s more, unlike the religious edifices of later times, Göbekli Tepe was built before people lived in cities that could have provided a large and centrally organized source of labor. As National Geographic put it, “discovering that hunter-gatherers had constructed Göbekli Tepe was like finding that someone had built a 747 in a basement with an X-Acto knife.”

The first scientists to stumble across the monument were anthropologists from the University of Chicago and Istanbul University conducting a survey of the region in the 1960s. They spotted some broken slabs of limestone peeking up through the dirt but dismissed them as remnants of an abandoned Byzantine cemetery. From the anthropology community came a huge yawn. Three decades passed. Then, in 1994, a local farmer ran his plow into the top of what would prove to be an enormous buried pillar. Klaus Schmidt, an archaeologist working in the area who had read the University of Chicago report, decided to have a look. “Within a minute of first seeing it, I knew I had two choices,” he said. “Go away and tell nobody, or spend the rest of my life working here.” He did the latter, working at the site until his death in 2014.

Since Göbekli Tepe predated the invention of writing, there are no sacred texts scattered about whose decoding could shed light on the rituals practiced at the site. Instead, the conclusion that Göbekli Tepe was a place of worship is based on comparisons with later religious sites and practices. For example, carved on the pillars at Göbekli Tepe are various animals, but unlike the cave paintings of the Paleolithic era, they are not likenesses of the game on which Göbekli Tepe’s builders subsisted, nor do they represent any icons related to hunting or to the actions of daily life. Instead the carvings depict menacing creatures such as lions, snakes, wild boars, scorpions, and a jackal-like beast with an exposed rib cage. They are thought to be symbolic or mythical characters, the types of animals later associated with worship.

Those ancients who visited Göbekli Tepe did so out of great commitment, for it was built in the middle of nowhere. In fact, no one has uncovered evidence that anyone ever lived in the area—no water sources, houses, or hearths. What archaeologists found instead were the bones of thousands of gazelles and aurochs that seem to have been brought in as food from faraway hunts. To come to Göbekli Tepe was to make a pilgrimage, and the evidence indicates that it attracted nomadic hunter-gatherers from as far as sixty miles away.

The ruins of Göbekli Tepe

Göbekli Tepe “shows sociocultural changes come first, agriculture comes later,” says Stanford University archaeologist Ian Hodder. The emergence of group-based religious ritual, in other words, appears to have been an important reason humans started to settle: religious centers drew nomads into tight orbits, and eventually villages were established, based on shared beliefs and systems of meaning. Göbekli Tepe was constructed in an age in which saber-toothed tigers* still prowled the Asian landscape, and our last non–Homo sapiens human relative, the three-foot-tall hobbitlike hunter and toolmaker Homo floresiensis, had only centuries earlier become extinct. And yet its ancient builders, it seems, had graduated from asking practical questions about life to asking spiritual ones. “You can make a good case,” says Hodder, that Göbekli Tepe “is the real origin of complex Neolithic societies.”

Other animals solve simple problems to get food; other animals use simple tools. But one activity that has never been observed, even in a rudimentary form, in any animal other than the human is the quest to understand its own existence. So when late Paleolithic and early Neolithic peoples turned their focus away from mere survival and toward “nonessential” truths about themselves and their surroundings, it was one of the most meaningful steps in the history of the human intellect. If Göbekli Tepe is humanity’s first church—or at least the first we know of—it deserves a hallowed place in the history of religion, but it deserves one in the history of science, too, for it reflected a leap in our existential consciousness, an era in which people began to expend great effort to answer grand questions about the cosmos.

Nature had required millions of years to evolve a human mind capable of asking existential questions, but once that happened, it took an infinitesimal fraction of that time for our species to evolve cultures that would remake the way we live and think. Neolithic peoples began to settle into small villages and then, as their backbreaking work eventually increased food production, into larger ones, with population density ballooning from a mere one person per square mile to a hundred people.

The most impressive of the new mammoth Neolithic villages was Çatalhöyük, built around 7500 B.C. on the plains of central Turkey, just a few hundred miles west of Göbekli Tepe. Analysis of animal and plant remains there suggests that the inhabitants hunted wild cattle, pigs, and horses and gathered wild tubers, grasses, acorns, and pistachios but engaged in little domestic agriculture. Even more surprising, the tools and implements found in the dwellings indicate that the inhabitants built and maintained their own houses and made their own art. There seems to have been no division of labor at all. That wouldn’t sound unusual for a small settlement of nomads, but Çatalhöyük was home to up to eight thousand people—roughly two thousand families—all, in one archaeologist’s words, going about and “doing their own thing.”

For that reason, archaeologists don’t consider Çatalhöyük and similar Neolithic villages cities, or even towns. The first of those would not come for several millennia. The difference between a village and a city is not just a matter of size. It hinges on the social relationships within the population, as those relationships bear on means of production and distribution. In cities, there is a division of labor, meaning that individuals and families can rely on others for certain goods and services. By centralizing the distribution of various goods and services that everyone requires, the city frees individuals and families from having to do everything for themselves, which in turn allows some of them to engage in specialized activities. For example, if the city becomes a center where agricultural surpluses harvested by farmers who live in the surrounding countryside can be distributed to the inhabitants, people who would otherwise have been focused on gathering (or farming) food will be free to practice professions; they can become craftsmen, or priests. But in Çatalhöyük, even though the inhabitants lived in neighboring houses, the artifacts indicate that individual families engaged in the practical activities of life more or less independently of one another.

If each extended family had to be self-sufficient—if you couldn’t get your meat from a butcher, your pipes repaired by a plumber, and your water-damaged phone replaced by bringing it to the nearest Apple Store and pretending you hadn’t dropped it in the toilet—then why bother to settle side by side and form a village? What bonded and united the people of settlements like Çatalhöyük seems to have been the same glue that drew Neolithic humans to Göbekli Tepe: the beginnings of a common culture and shared spiritual beliefs.

The contemplation of human mortality came to be a feature of these emerging cultures. In Çatalhöyük, for example, we see evidence of a new culture of death and dying, one that differed drastically from that of the nomads. Nomads, on their long journeys over hills and across raging rivers, cannot afford to carry the sick or infirm. And so it is common for nomadic tribes on the move to leave behind their aged who are too weak to follow. The settlers of Çatalhöyük and other forgotten villages of the Near East had a practice that was quite the opposite. Their extended family units often remained physically close, not only in life but in death: in Çatalhöyük, they buried the dead under the floors of their homes. Infants were sometimes interred beneath the threshold at the entrance to a room. Beneath one large building alone, an excavation team discovered seventy bodies. In some cases, a year after burial, the inhabitants would open a grave and use a knife to cut off the head of the deceased, to be used for ceremonial purposes.

If the settlers at Çatalhöyük worried about mortality, they also had a new feeling of human superiority. In most hunter-gatherer societies, animals are treated with great respect, as if hunter and prey are partners. Hunters don’t seek to control their prey but instead form a kind of friendship with the animals that will be yielding their lives to the hunter. At Çatalhöyük, however, wall murals depict people teasing and baiting bulls, wild boars, and bears. People are no longer viewed as partnering with animals but instead are seen as dominating them, using them in much the same way they came to use twigs to make their baskets.

The new attitude would eventually lead to the domestication of animals. Over the next two thousand years, sheep and goats were tamed, then cattle and pigs. At first, there was selective hunting—wild herds were culled to achieve an age and gender balance, and people sought to protect them from natural predators. Over time, though, humans took responsibility for all aspects of the animals’ lives. As domesticated animals no longer had to fend for themselves, they responded by evolving new physical attributes as well as tamer behavior, smaller brains, and lower intelligence. Plants, too, were brought under human control—wheat, barley, lentils, and peas, among others—and became the concern not of gatherers but of gardeners.

The invention of agriculture and the domestication of animals catalyzed new intellectual leaps related to maximizing the efficiency of those enterprises. Humans were now motivated to learn about and exploit the rules and regularities of nature. It became useful to know how animals bred, and what helped plants grow. This was the beginning of what would become science, but in the absence of the scientific method or any appreciation of the advantages of logical reasoning, magic and religious ideas blended with and often superseded empirical observations and theories, with a goal that was more practical than that of pure science today: to help humans exert their power over the workings of nature.

As humans began to ask new questions about nature, the great expansion of Neolithic settlements provided a new way to answer them. For the quest to know was no longer necessarily an enterprise of individuals or small groups; it could now draw upon contributions from a great many minds. And so, although these humans had largely given up the practice of hunting and gathering food, they now joined forces in the hunting and gathering of ideas and knowledge.

When I was a graduate student, the problem I chose for my Ph.D. dissertation was the challenge of developing a new method for finding approximate solutions to the unsolvable quantum equations that describe the behavior of hydrogen atoms in the strong magnetic field outside neutron stars—the densest and tiniest stars known to exist in the universe. I have no idea why I chose that problem, and apparently neither did my thesis adviser, who quickly lost interest in it. I then spent an entire year developing various new approximation techniques that, one after another, proved no better at solving my problem than did existing methods, and hence not worthy of earning me a degree. Then one day I was talking to a postdoctoral researcher across the hall from my office. He was working on a novel approach to understanding the behavior of elementary particles called quarks, which come in three “colors.” (The term, when applied to quarks, has nothing to do with the everyday definition of “color.”) The idea was to imagine (mathematically) a world in which there are an infinite number of colors, rather than three. As we talked about the quarks, which had no relation at all to the work I was doing, a new idea was born: What if I solved my problem by pretending that we lived not in a three-dimensional world, but in a world of infinite dimensions?

If that sounds like a strange, off-the-wall idea, it was. But as we churned through the math, we found that, oddly, though I could not solve my problem as it arose in the real world, I could if I rephrased it in infinite dimensions. Once I had the solution, “all” I had to do to graduate was figure out how the answer should be modified to account for the fact that we actually live in three-dimensional space.

This method proved powerful—I could now do calculations on the back of an envelope and achieve results that were more accurate than those from the complex computer calculations others were using. After a year of fruitless effort, I ended up doing the bulk of what would become my Ph.D. dissertation on the “large N expansion” in just a few weeks, and over the following year that postdoc and I turned out a series of papers applying the idea to other situations and atoms. Eventually a Nobel Prize–winning chemist named Dudley Herschbach read about our method in a journal with the exciting name Physics Today. He renamed the technique “dimensional scaling” and started to apply it in his own field. Within a decade there was even an academic conference entirely devoted to it. I tell this story not because it shows that one can choose a lousy problem, waste a year on dead ends, and still come away with an interesting discovery, but rather to illustrate that the human struggle to know and to innovate is not a series of isolated personal struggles but a cooperative venture, a social activity that requires for its success that humans live in settlements that offer minds a plentitude of other minds with which to interact.

Those other minds can be found in both the present and the past. Myths abound about isolated geniuses revolutionizing our understanding of the world or performing miraculous feats of invention in the realm of technology, but they are invariably fiction. For example, James Watt, who developed the concept of horsepower and for whom the unit of power, the watt, is named, is said to have hatched the idea for the steam engine from a sudden inspiration he had while watching steam spewing from a teapot. In reality, Watt concocted the idea for his device while repairing an earlier version of the invention that had already been in use for some fifty years by the time he got his hands on it. Similarly, Isaac Newton did not invent physics after sitting alone in a field, watching an apple fall; he spent years gathering information, compiled by others, regarding the orbits of planets. And if he hadn’t been inspired by a chance visit from astronomer Edmond Halley (of comet fame), who inquired about a mathematical issue that had intrigued him, Newton would never have written the Principia, which contains his famous laws of motion and is the reason he is revered today. Einstein, too, could not have completed his theory of relativity had he not been able to hunt down old mathematical theories describing the nature of curved space, aided by his mathematician friend Marcel Grossmann. None of these great thinkers could have achieved their grand accomplishments in a vacuum; they relied on other humans and on prior human knowledge, and they were shaped and fed by the cultures in which they were immersed. And it’s not just science and technology that build on the work of prior practitioners: the arts do, too. T. S. Eliot even went so far as to say, “Immature poets imitate; mature poets steal … and good poets make it into something better, or at least something different.”

“Culture” is defined as behavior, knowledge, ideas, and values that you acquire from those who live around you, and it is different in different places. We modern humans act according to the culture in which we are raised, and we also acquire much of our knowledge through culture, which is true for us far more than it is for other species. In fact, recent research suggests that humans are even evolutionarily adapted to teach other humans.

It’s not that other species don’t exhibit culture. They do. For example, researchers studying distinct groups of chimps found that, just as people around the world have a pretty good record of identifying as American a person who travels abroad and then seeks restaurants that serve milkshakes and cheeseburgers, so too could they observe a group of chimps and identify, from their repertoire of behaviors alone, their place of origin. All told, the scientists identified thirty-eight traditions that vary among those chimp communities. Chimps at Kibale, in Uganda, and at Gombe and Mahale, in Tanzania, prance about in heavy rain, dragging branches and slapping the ground. Chimps in the Tai forest of Côte d’Ivoire and in Bossou, Guinea, crack open Coula nuts by smashing them with a flat stone on a piece of wood. Other groups of chimpanzees have been reported to have culturally transmitted usage of medicinal plants. In all these cases, the cultural activity is not instinctive or rediscovered in each generation, but rather something that juveniles learn by mimicking their mothers.

The best-documented example of the discovery and cultural transmission of knowledge among animals comes from the small island of Kojima, in the Japanese archipelago. In the early 1950s, the animal keepers there would feed macaque monkeys each day by throwing sweet potatoes onto the beach. The monkeys would do their best to shake off the sand before eating the potato. Then one day in 1953, an eighteen-month-old female named Imo got the idea to carry her sweet potato into the water and wash it off. This not only removed the gritty sand but also made the food saltier and tastier. Soon Imo’s playmates picked up her trick. Their mothers slowly caught on, and then the males, except for a couple of the older ones—the monkeys weren’t teaching one another, but they were watching and mimicking. Within a few years, virtually the entire community had developed the habit of washing their food. What’s more, until that time, the macaques had avoided the water, but now they began to play in it. The behavior was passed down through the generations and continued for decades. Like beach communities of humans, these macaques had developed their own distinct culture. Over the years scientists have found evidence of culture in many species—animals as different as killer whales, crows, and, of course, other primates.

What sets us apart is that we humans seem to be the only animals able to build on the knowledge and innovations of the past. One day a human noted that round things roll and invented the wheel. Eventually we had carts, waterwheels, pulleys, and, of course, roulette. Imo, on the other hand, didn’t build on prior macaque knowledge, nor did other macaques build on hers. We humans talk among ourselves, we teach each other, we seek to improve on old ideas and trade insights and inspiration. Chimps and other animals don’t. Says archaeologist Christopher Henshilwood, “Chimps can show other chimps how to hunt termites, but they don’t improve on it, they don’t say, ‘let’s do it with a different kind of probe’—they just do the same thing over and over.”

Anthropologists call the process by which culture builds upon prior culture (with relatively little loss) “cultural ratcheting.” The cultural ratchet represents an essential difference between the cultures of humans and of other animals, and it is a tool that arose in the new settled societies, where the desire to be among like thinkers and to ponder with them the same issues became the nutrient upon which advanced knowledge would grow.

Archaeologists sometimes compare cultural innovations to viruses. Like viruses, ideas and knowledge require certain conditions—in this case, social conditions—to thrive. When those conditions are present, as in large, highly connected populations, the individuals of a society can infect one another, and culture can spread and evolve. Ideas that are useful, or perhaps simply provide comfort, survive and spawn a next generation of ideas.

Modern companies that depend on innovation for their success are well aware of this. Google, in fact, made a science of it, placing long, narrow tables in its cafeteria so that people would have to sit together and designing each food line to be three to four minutes long—not so long that its employees get annoyed and decide to dine on a cup of instant noodles, but long enough that they tend to bump into one another and talk. Or consider Bell Labs, which between the 1930s and the 1970s was the most innovative organization in the world, responsible for many of the key innovations that made the modern digital age possible—including the transistor and the laser. At Bell Labs, collaborative research was so highly valued that the buildings were designed to maximize the probability of chance encounters, and one employee’s job description involved traveling to Europe each summer to act as an intermediary between scientific ideas there and in the United States. What Bell Labs was recognizing was that those who travel in wider intellectual groups have greater chances of dreaming up new innovations. As evolutionary geneticist Mark Thomas put it, when it comes to generating new ideas, “It’s not how smart you are. It’s how well-connected you are.” Interconnectedness is a key mechanism in the cultural ratchet, and it is one of the gifts of the Neolithic revolution.

One evening, shortly after my father’s seventy-sixth birthday, we took an after-dinner walk. The next day he was going to the hospital for surgery. He had been sick for several years, suffering from borderline diabetes, a stroke, a heart attack, and, worst of all—from his point of view—chronic heartburn and a diet that excluded virtually everything he cared to eat. As we slowly strolled that night, he leaned on his cane, raised his eyes from the street to the sky, and remarked that it was hard for him to grasp that this might be the last time he ever saw the stars. And then he began to unfold for me the thoughts that were on his mind as he faced the possibility that his death might be near.

Here on earth, he told me, we live in a troubled, chaotic universe. It had rewarded him at a young age with the cataclysms of the Holocaust and in his old age with an aorta that, against all design specs, was bulging dangerously. The heavens, he said, had always seemed to him like a universe that followed completely different laws, a realm of planets and suns that moved serenely through their age-old orbits and appeared perfect and indestructible. This was something we had talked about often over the years. It tended to come up whenever I was describing my latest adventures in physics, when he would ask me if I really believed that the atoms that make up human beings are subject to the same laws as the atoms of the rest of the universe—the inanimate and the dead. No matter how many times I said that, yes, I did really believe that, he remained unconvinced.

With the prospect of his own death in mind, I assumed that he would be less than ever inclined to believe in the impersonal laws of nature but would be turning, as people so often do at such times, to thoughts of a loving God. My father rarely spoke of God, because although he had grown up believing in the traditional God, and wanted to still, the horrors he had witnessed made that a difficult proposition. But, as he contemplated the stars that night, I thought that he might well be looking to God for solace. Instead he told me something that surprised me. He hoped I was right about the laws of physics, he said, because he now took comfort in the possibility that, despite the messiness of the human condition, he was made of the same stuff as the perfect and romantic stars.

We humans have been thinking about such issues since at least the time of the Neolithic revolution, and we still don’t have the answers, but once we had awakened to such existential questions, the next milestone on the human path toward knowledge would be the development of tools—mental tools—to help us answer them.

The first tools don’t sound grand. They’re nothing like calculus, or the scientific method. They are the fundamental tools of the thinking trade, ones that have been with us for so long that we tend to forget that they weren’t always part of our mental makeup. But for progress to occur, we had to wait for the introduction of professions that dealt with the pursuit of ideas rather than the procurement of food; for the invention of writing so that knowledge could be preserved and exchanged; for the creation of mathematics, which would become the language of science; and finally, for the invention of the concept of laws. Just as epic and transformative in their way as the so-called scientific revolution of the seventeenth century, these developments came about not so much as the product of heroic individuals thinking great thoughts but as the gradual by-product of life in the first true cities.

*The technical term is saber-toothed cat.