1

Introduction to Machine Learning

Machine learning (ML) continues to grow in importance for many organizations across nearly all domains. Some example applications of machine learning in practice include:

• Predicting the likelihood of a patient returning to the hospital (readmission) within 30 days of discharge.

• Segmenting customers based on common attributes or purchasing behavior for targeted marketing.

• Predicting coupon redemption rates for a given marketing campaign.

• Predicting customer churn so an organization can perform preventative intervention.

• And many more!

In essence, these tasks all seek to learn from data. To address each scenario, we can use a given set of features to train an algorithm and extract insights. These algorithms, or learners, can be classified according to the amount and type of supervision needed during training. The two main groups this book focuses on are: supervised learners which construct predictive models, and unsupervised learners which build descriptive models. Which type you will need to use depends on the learning task you hope to accomplish.

1.1 Supervised learning

A predictive model is used for tasks that involve the prediction of a given output (or target) using other variables (or features) in the data set. Or, as stated by Kuhn and Johnson (2013, p. 2), predictive modeling is “…the process of developing a mathematical tool or model that generates an accurate prediction.” The learning algorithm in a predictive model attempts to discover and model the relationships among the target variable (the variable being predicted) and the other features (aka predictor variables). Examples of predictive modeling include:

• using customer attributes to predict the probability of the customer churning in the next 6 weeks;

• using home attributes to predict the sales price;

• using employee attributes to predict the likelihood of attrition;

• using patient attributes and symptoms to predict the risk of readmission;

• using production attributes to predict time to market.

Each of these examples has a defined learning task; they each intend to use attributes (X) to predict an outcome measurement (Y).

Throughout this text we’ll use various terms interchangeably for

– X: “predictor variable”, “independent variable”, “attribute”, “feature”, “predictor”

– Y: “target variable”, “dependent variable”, “response”, “outcome measurement”

The predictive modeling examples above describe what is known as supervised learning. The supervision refers to the fact that the target values provide a supervisory role, which indicates to the learner the task it needs to learn. Specifically, given a set of data, the learning algorithm attempts to optimize a function (the algorithmic steps) to find the combination of feature values that results in a predicted value that is as close to the actual target output as possible.

In supervised learning, the training data you feed the algorithm includes the target values. Consequently, the solutions can be used to help supervise the training process to find the optimal algorithm parameters.

Most supervised learning problems can be bucketed into one of two categories, regression or classification, which we discuss next.

1.1.1 Regression problems

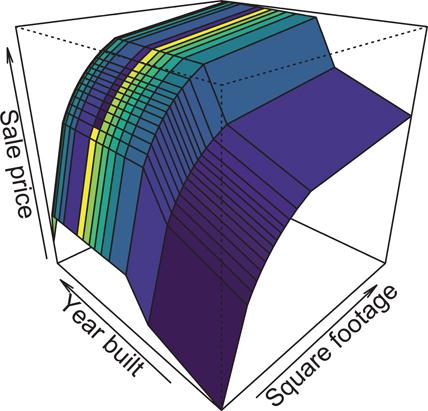

When the objective of our supervised learning is to predict a numeric outcome, we refer to this as a regression problem (not to be confused with linear regression modeling). Regression problems revolve around predicting output that falls on a continuum. In the examples above, predicting home sales prices and time to market reflect a regression problem because the output is numeric and continuous. This means, given the combination of predictor values, the response value could fall anywhere along some continuous spectrum (e.g., the predicted sales price of a particular home could be between $80,000 and $755,000). Figure 1.1 illustrates average home sales prices as a function of two home features: year built and total square footage. Depending on the combination of these two features, the expected home sales price could fall anywhere along a plane.

1.1.2 Classification problems

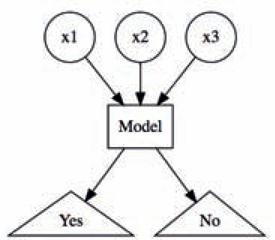

When the objective of our supervised learning is to predict a categorical outcome, we refer to this as a classification problem. Classification problems most commonly revolve around predicting a binary or multinomial response measure such as:

• Did a customer redeem a coupon (coded as yes/no or 1/0)?

• Did a customer churn (coded as yes/no or 1/0)?

• Did a customer click on our online ad (coded as yes/no or 1/0)?

• Classifying customer reviews:

– Binary: positive vs. negative.

– Multinomial: extremely negative to extremely positive on a 0–5 Likert scale.

However, when we apply machine learning models for classification problems, rather than predict a particular class (i.e., “yes” or “no”), we often want to predict the probability of a particular class (i.e., yes: 0.65, no: 0.35). By default, the class with the highest predicted probability becomes the predicted class. Consequently, even though we are performing a classification problem, we are still predicting a numeric output (probability). However, the essence of the problem still makes it a classification problem.

Although there are machine learning algorithms that can be applied to regression problems but not classification and vice versa, most of the supervised learning algorithms we cover in this book can be applied to both. These algorithms have become the most popular machine learning applications in recent years.

1.2 Unsupervised learning

Unsupervised learning, in contrast to supervised learning, includes a set of statistical tools to better understand and describe your data, but performs the analysis without a target variable. In essence, unsupervised learning is concerned with identifying groups in a data set. The groups may be defined by the rows (i.e., clustering) or the columns (i.e., dimension reduction); however, the motive in each case is quite different.

The goal of clustering is to segment observations into similar groups based on the observed variables; for example, to divide consumers into different homogeneous groups, a process known as market segmentation. In dimension reduction, we are often concerned with reducing the number of variables in a data set. For example, classical linear regression models break down in the presence of highly correlated features. Some dimension reduction techniques can be used to reduce the feature set to a potentially smaller set of uncorrelated variables. Such a reduced feature set is often used as input to downstream supervised learning models (e.g., principal component regression).

Unsupervised learning is often performed as part of an exploratory data analysis (EDA). However, the exercise tends to be more subjective, and there is no simple goal for the analysis, such as prediction of a response. Furthermore, it can be hard to assess the quality of results obtained from unsupervised learning methods. The reason for this is simple. If we fit a predictive model using a supervised learning technique (i.e., linear regression), then it is possible to check our work by seeing how well our model predicts the response Y on observations not used in fitting the model. However, in unsupervised learning, there is no way to check our work because we don’t know the true answer—the problem is unsupervised!

Despite its subjectivity, the importance of unsupervised learning should not be overlooked and such techniques are often used in organizations to:

• Divide consumers into different homogeneous groups so that tailored marketing strategies can be developed and deployed for each segment.

• Identify groups of online shoppers with similar browsing and purchase histories, as well as items that are of particular interest to the shoppers within each group. Then an individual shopper can be preferentially shown the items in which he or she is particularly likely to be interested, based on the purchase histories of similar shoppers.

• Identify products that have similar purchasing behavior so that managers can manage them as product groups.

These questions, and many more, can be addressed with unsupervised learning. Moreover, the outputs of unsupervised learning models can be used as inputs to downstream supervised learning models.

1.3 Roadmap

The goal of this book is to provide effective tools for uncovering relevant and useful patterns in your data by using R’s ML stack. We begin by providing an overview of the ML modeling process and discussing fundamental concepts that will carry through the rest of the book. These include feature engineering, data splitting, model validation and tuning, and performance measurement. These concepts will be discussed in Chapters 2,3.

Chapters 4,5,6,7,8,9,10,11,12,13,14 focus on common supervised learners ranging from simpler linear regression models to the more complicated gradient boosting machines and deep neural networks. Here we will illustrate the fundamental concepts of each base learning algorithm and how to tune its hyperparameters to maximize predictive performance.

Chapters 15,16 delve into more advanced approaches to maximize effectiveness, efficiency, and interpretation of your ML models. We discuss how to combine multiple models to create a stacked model (aka super learner), which allows you to combine the strengths from each base learner and further maximize predictive accuracy. We then illustrate how to make the training and validation process more efficient with automated ML (aka AutoML). Finally, we illustrate many ways to extract insight from your “black box” models with various ML interpretation techniques.

The latter part of the book focuses on unsupervised techniques aimed at reducing the dimensions of your data for more effective data representation (Chapters 17,18,19) and identifying common groups among your observations with clustering techniques (Chapters 20,21,22).

1.4 The data sets

The data sets chosen for this book allow us to illustrate the different features of the presented machine learning algorithms. Since the goal of this book is to demonstrate how to implement R’s ML stack, we make the assumption that you have already spent significant time cleaning and getting to know your data via EDA. This would allow you to perform many necessary tasks prior to the ML tasks outlined in this book such as:

• Feature selection (i.e., removing unnecessary variables and retaining only those variables you wish to include in your modeling process).

• Recoding variable names and values so that they are meaningful and more interpretable.

• Recoding, removing, or some other approach to handling missing values.

Consequently, the exemplar data sets we use throughout this book have, for the most part, gone through the necessary cleaning processes. In some cases we illustrate concepts with stereotypical data sets (i.e. mtcars, iris, geyser); however, we tend to focus most of our discussion around the following data sets:

• Property sales information as described in De Cock (2011).

– problem type: supervised regression

– response variable: Sale_Price (i.e., $195,000, $215,000)

– features: 80

– observations: 2,930

– objective: use property attributes to predict the sale price of a home

– access: provided by the AmesHousing package (Kuhn, 2017a)

– more details: See ?AmesHousing::ames_raw

# Access data

ames <- AmesHousing::make_ames()

# Print dimensions

dim(ames)

## [1] 2930 81

# Peek at response variable

head(ames$Sale_Price)

## [1] 215000 105000 172000 244000 189900 195500

You can see the entire data cleaning process to transform the raw Ames housing data (AmesHousing::ames_raw) to the final clean data (AmesHousing::make_ames) that we will use in machine learning algorithms throughout this book by typing AmesHousing::make_ames into the R console.

• Employee attrition information originally provided by IBM Watson Analytics Lab1.

– problem type: supervised binomial classification

– response variable: Attrition (i.e., “Yes”, “No”)

– features: 30

– observations: 1,470

– objective: use employee attributes to predict if they will attrit (leave the company)

– access: provided by the rsample package (Kuhn and Wickham, 2019)

– more details: See ?rsample::attrition

# Access data

attrition <- rsample::attrition

# Print dimensions

dim(attrition)

## [1] 1470 31

# Peek at response variable

head(attrition$Attrition)

## [1] Yes No Yes No No No

## Levels: No Yes

• Image information for handwritten numbers originally presented to AT&T Bell Lab’s to help build automatic mail-sorting machines for the USPS. Has been used since early 1990s to compare machine learning performance on pattern recognition (i.e., LeCun et al. (1990); LeCun et al. (1998); Cireşan et al. (2012)).

– Problem type: supervised multinomial classification

– response variable: V785 (i.e., numbers to predict: 0, 1, …, 9)

– features: 784

– observations: 60,000 (train) / 10,000 (test)

– objective: use attributes about the “darkness” of each of the 784 pixels in images of handwritten numbers to predict if the number is 0, 1, …, or 9.

– access: provided by the dslabs package (Irizarry, 2018)

– more details: See ?dslabs::read_mnist() and online MNIST documentation2

# Access data

mnist <- dslabs::read_mnist()

names(mnist)

## [1] ”train” ”test”

# Print feature dimensions

dim(mnist$train$images)

## [1] 60000 784

# Peek at response variable

head(mnist$train$labels)

## [1] 5 0 4 1 9 2

• Grocery items and quantities purchased. Each observation represents a single basket of goods that were purchased together.

– Problem type: unsupervised basket analysis

– response variable: NA

– features: 42

– observations: 2,000

– objective: use attributes of each basket to identify common groupings of items purchased together.

– access: available on the companion website for this book

# URL to download/read in the data

url <- ”https://koalaverse.github.io/homlr/data/my_basket.csv”

# Access data

my_basket <- readr::read_csv(url)

# Print dimensions

dim(my_basket)

## [1] 2000 42

# Peek at response variable

my_basket

## # A tibble: 2,000 x 42

## ‘7up‘ lasagna pepsi yop red.wine cheese bbq

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0

## 4 0 0 0 2 1 0 0

## 5 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0

## 7 1 1 0 0 0 0 1

## 8 0 0 0 0 0 0 0

## 9 0 1 0 0 0 0 0

## 10 0 0 0 0 0 0 0

## # … with 1,990 more rows, and 35 more variables:

## # bulmers <dbl>, mayonnaise <dbl>, horlics <dbl>,

## # chicken.tikka <dbl>, milk <dbl>, mars <dbl>,

## # coke <dbl>, lottery <dbl>, bread <dbl>,

## # pizza <dbl>, sunny.delight <dbl>, ham <dbl>,

## # lettuce <dbl>, kronenbourg <dbl>, leeks <dbl>,

## # fanta <dbl>, tea <dbl>, whiskey <dbl>, peas <dbl>,

## # newspaper <dbl>, muesli <dbl>, white.wine <dbl>,

## # carrots <dbl>, spinach <dbl>, pate <dbl>,

## # instant.coffee <dbl>, twix <dbl>, potatoes <dbl>,

## # fosters <dbl>, soup <dbl>, toad.in.hole <dbl>,

## # coco.pops <dbl>, kitkat <dbl>, broccoli <dbl>,

## # cigarettes <dbl>

1https://www.ibm.com/communities/analytics/watson-analytics-blog/hr-employee-attrition/