The Asynchronous Completion Token design pattern allows an application to demultiplex and process efficiently the responses of asynchronous operations it invokes on services.

Active Demultiplexing [PRS+99], ‘Magic Cookie’

Consider a large-scale distributed e-commerce system consisting of clusters of Web servers. These servers store and retrieve various types of content in response to requests from Web browsers. The performance and reliability of such e-commerce systems have become increasingly crucial to many businesses.

For example, in a Web-based stock trading system, it is important that the current stock quotes, as well as subsequent buy and sell orders, are transmitted efficiently and reliably. The Web servers in the e-commerce system must therefore be monitored carefully to ensure they are providing the necessary quality of service to users. Autonomous management agents can address this need by propagating management events from e-commerce system Web servers back to management applications.

System administrators can use these management agents, applications, and events to monitor, visualize, and control the status and performance of Web servers in the e-commerce system [PSK+97].

Typically, a management application uses the Publisher-Subscriber pattern [POSA1] to subscribe with one or more management agents to receive various types of events, such as events that report when Web browsers establish new connections with Web servers. When a management agent detects activities of interest to a management application, it sends completion events to the management application, which then processes these events.

In large-scale e-commerce systems, however, management applications may invoke subscription operations on many management agents, requesting notification of the occurrence of many different types of events. Moreover, each type of event may be processed differently in a management application using specialized completion handlers. These handlers determine the application’s response to events, such as updating a display, logging events to a database, or automatically detecting performance bottlenecks and system failures.

One way in which a management application could match its subscription operations to their subsequent completion events would be to spawn a separate thread for each event subscription operation it invoked on a management agent. Each thread would then block synchronously, waiting for completion event(s) from its agent to arrive in response to its original subscription operation. In this synchronous design, a completion handler that processed management agent event responses could be stored implicitly in each thread’s run-time stack.

Unfortunately this synchronous multi-threaded design incurs the same context-switching, synchronization, and data-movement overhead described in the Example section of the Reactor pattern (179). Management applications may therefore opt instead to initiate subscription operations asynchronously. In this case, management applications must be designed to demultiplex management agent completion events to their associated completion handlers efficiently and scalably, thereby allowing management applications to react promptly when notified by their agents.

An event-driven system in which applications invoke operations asynchronously on services and subsequently process the associated service completion event responses.

When a client application invokes an operation request on one or more services asynchronously, each service returns its response to the application via a completion event. The application must then demultiplex this event to the appropriate handler, such as a function or object, that it uses to process the asynchronous operation response contained in the completion event. To address this problem effectively, we must resolve three forces, each illustrated below in terms of our e-commerce system example:

Management agent services in our e-commerce system example do not, and should not, know how a management application will demultiplex and process the various completion events it receives from the agents in response to its asynchronous subscription operations.


In our e-commerce system a management application and an agent service should have a minimal number of interactions, such as one to invoke the asynchronous subscription operation and one for each completion event response. Moreover, the data transferred to help demultiplex completion events to their handlers should add minimal extra bytes beyond an operation’s input parameters and return values.


A large-scale e-commerce application may have hundreds of Web servers and management agents, millions of simultaneous Web browser connections and a correspondingly large number of asynchronous subscription operations and completion events. Searching a large table to associate a completion event response with its original asynchronous operation request could thus degrade the performance of management applications significantly.


Together with each asynchronous operation that a client initiator invokes on a service, transmit information that identifies how the initiator should process the service’s response. Return this information to the initiator when the operation finishes, so that it can be used to demultiplex the response efficiently, allowing the initiator to process it accordingly.

In detail: for every asynchronous operation that a client initiator invokes on a service, create an asynchronous completion token (ACT). An ACT contains information that uniquely identifies the completion handler, which is the function or object responsible for processing the operation’s response. Pass this ACT to the service together with the operation. The service holds the ACT but does not modify it. When the service replies to the initiator, its response includes the ACT that was sent originally. The initiator can then use the ACT to identify the completion handler that will process the response from the original asynchronous operation.
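The protocol just described can be sketched in a few lines of C++. This is a minimal, single-threaded illustration under stated assumptions, not a reference implementation of the pattern. Echo_Service, Handler, Completion, and dispatch() are hypothetical names, and the ACT is modeled as a plain void pointer (a pointer ACT). Asynchrony is simulated: the service queues each request and completes it later, when complete_one() is called.

```cpp
#include <cassert>
#include <deque>
#include <string>

// Pointer ACT: an opaque token the service stores and returns untouched.
using ACT = void *;

// What the service sends back: its response plus the original ACT.
struct Completion {
  std::string response;
  ACT act;
};

// Hypothetical service. async_request() returns immediately; the
// response is produced later, when complete_one() is called.
class Echo_Service {
public:
  void async_request(const std::string &request, ACT act) {
    pending_.push_back(Completion{"echo:" + request, act});
  }
  bool complete_one(Completion &out) {
    if (pending_.empty()) return false;
    out = pending_.front();  // the ACT is copied back unchanged
    pending_.pop_front();
    return true;
  }
private:
  std::deque<Completion> pending_;
};

// Hypothetical completion handler owned by the initiator.
struct Handler {
  std::string name;
  std::string last;
  void handle(const std::string &response) { last = name + " got " + response; }
};

// Initiator side: the ACT identifies the handler, so no lookup is needed.
inline void dispatch(const Completion &c) {
  auto *handler = static_cast<Handler *>(c.act);
  assert(handler != nullptr);
  handler->handle(c.response);
}
```

The service never dereferences the ACT. Only dispatch(), on the initiator side, knows that the token is really a Handler pointer.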

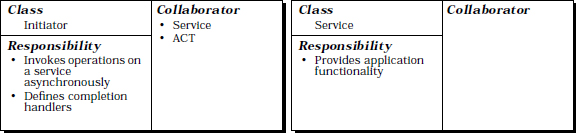

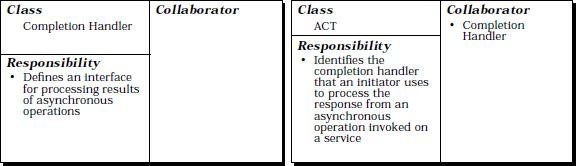

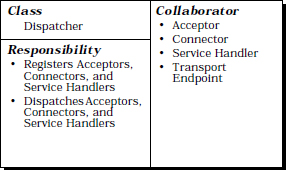

Four participants form the structure of the Asynchronous Completion Token pattern:

A service provides some type of functionality that can be accessed asynchronously.

Management agents provide a distributed management and monitoring service to our e-commerce system.


A client initiator invokes operations on a service asynchronously. It also demultiplexes the response returned by these operations to a designated completion handler, which is a function or object within an application that is responsible for processing service responses.

In our e-commerce system management applications invoke asynchronous operations on management agents to subscribe to various types of events. The management agents then send completion event responses to the management applications asynchronously when events for which they have registered occur. Completion handlers in management applications process these completion events to update their GUI display and perform other actions.


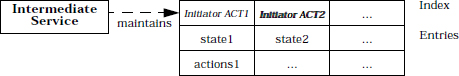

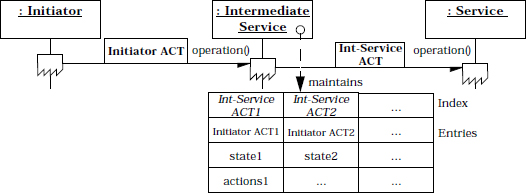

An asynchronous completion token (ACT) contains information that identifies a particular initiator’s completion handler. The initiator passes the ACT to the service when it invokes an operation; the service returns the ACT to the initiator unchanged when the asynchronous operation completes. The initiator then uses this ACT to efficiently demultiplex to the completion handler that processes the response from the original asynchronous operation. Services can hold a collection of ACTs to handle multiple asynchronous operations invoked by initiators simultaneously.

In our e-commerce system a management application initiator can create ACTs that are indices into a table of completion handlers, or are simply direct pointers to completion handlers. To a management agent service, however, the ACT is simply an opaque value that it returns unchanged to the management application initiator.
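The sketch below shows that the agent sees only an opaque value, by encoding both kinds of ACT as a std::uintptr_t. Completion_Handler, Handler_Table, and the conversion helpers are hypothetical names chosen for illustration. The same integer-sized token can hold either a handler’s address or an index into a table that the initiator owns.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// As far as the agent is concerned, an ACT is just an integer it echoes back.
using Opaque_ACT = std::uintptr_t;

// Hypothetical initiator-side completion handler.
struct Completion_Handler {
  std::string name;
  int events = 0;
  void handle_event() { ++events; }
};

// Pointer ACT: the token is the handler's address.
inline Opaque_ACT to_pointer_act(Completion_Handler *h) {
  return reinterpret_cast<Opaque_ACT>(h);
}
inline Completion_Handler *from_pointer_act(Opaque_ACT act) {
  assert(act != 0);
  return reinterpret_cast<Completion_Handler *>(act);
}

// Index ACT: the token is a position in a table the initiator owns.
class Handler_Table {
public:
  Opaque_ACT add(Completion_Handler *h) {
    table_.push_back(h);
    return static_cast<Opaque_ACT>(table_.size() - 1);
  }
  // An out-of-range index yields nullptr rather than undefined behavior.
  Completion_Handler *lookup(Opaque_ACT act) const {
    return act < table_.size() ? table_[act] : nullptr;
  }
private:
  std::vector<Completion_Handler *> table_;
};
```

Pointer ACTs need no table, but they trust the service completely. Index ACTs cost one array access and can be range-checked before use.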


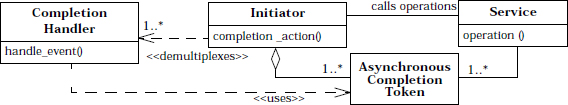

The following class diagram illustrates the participants of the Asynchronous Completion Token pattern and the relationships between these participants:

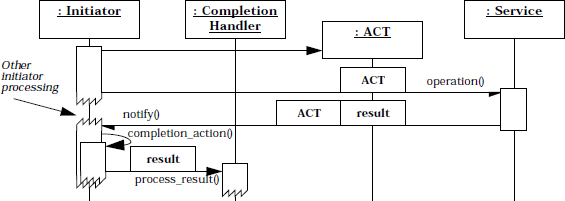

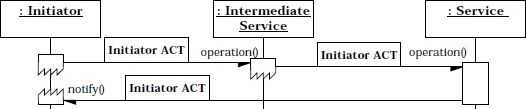

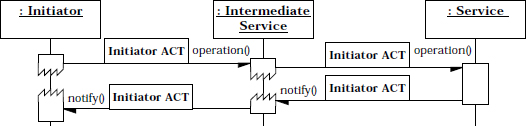

The following interactions occur in the Asynchronous Completion Token pattern:

There are six activities involved in implementing the Asynchronous Completion Token pattern. These activities are largely orthogonal to the implementations of initiators, services, and completion handlers, which are covered by other patterns, such as Proxy [GoF95] [POSA1], Proactor (215), or Reactor (179). This section therefore focuses mainly on implementing ACTs, the protocol for exchanging ACTs between initiators and services, and the steps used to demultiplex ACTs efficiently to their associated completion handlers.

Another aspect to consider when defining an ACT representation is the support it offers the initiator for demultiplexing to the appropriate completion handlers. By using common patterns such as Command or Adapter [GoF95], ACTs can provide a uniform interface to initiators. Concrete ACT implementations then map this interface to the specific interface of a particular completion handler, as described in implementation activity 6 (271).

The following abstract class defines a C++ interface that can be used as the base for a wide range of pointer ACTs. It defines a pure virtual handle_event() method, which an initiator can use to dispatch a specific completion handler that processes the response from an asynchronous operation:


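```cpp
class Completion_Event;  // Defined by the application.

class Completion_Handler_ACT {
public:
  virtual ~Completion_Handler_ACT () = default;

  // Hook method that dispatches a specific completion handler.
  virtual void handle_event
    (const Completion_Event &event) = 0;
};
```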

Application developers can define new types of completion handlers by subclassing from Completion_Handler_ACT and overriding its handle_event() hook method to call a specific completion handler. The e-commerce system presented in the Example Resolved section illustrates this implementation strategy.

In addition, if the initiator cannot trust the service to return the original ACT unchanged, the explicit data structure can store additional information to authenticate whether the returned ACT really exists within the initiator. This authentication check, however, can increase the overhead of locating the ACT and demultiplexing it to the completion handler within the initiator.

Regardless of which strategy is used, initiators are responsible for freeing any resources associated with ACTs after they are no longer needed. The Object Lifetime Manager pattern [LGS99] is a useful way to manage the deletion of ACT resources robustly.
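One way to combine an explicit ACT table with the authentication and cleanup responsibilities just described is a slot table whose ACTs carry a generation counter. This is a common technique chosen here for illustration, not necessarily the strategy the pattern’s authors had in mind. ACT_Repository and Handler are hypothetical names. A returned ACT is accepted only if its slot is occupied and its generation matches, so a corrupted or stale ACT yields nullptr instead of a dangling pointer. release() frees the handler once it is no longer needed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Explicit index ACT: slot number in the low 32 bits, generation in the high 32.
using ACT = std::uint64_t;

// Hypothetical completion handler.
struct Handler {
  int events = 0;
  void handle_event() { ++events; }
};

class ACT_Repository {
public:
  // Take ownership of a handler and mint an ACT for it.
  ACT acquire(std::unique_ptr<Handler> h) {
    assert(h != nullptr);
    std::uint32_t slot;
    if (!free_.empty()) {
      slot = free_.back();
      free_.pop_back();
    } else {
      slot = static_cast<std::uint32_t>(slots_.size());
      slots_.emplace_back();
    }
    slots_[slot].handler = std::move(h);
    return (static_cast<ACT>(slots_[slot].generation) << 32) | slot;
  }

  // Authenticate a returned ACT: the slot must exist, be in use, and carry
  // the generation that was issued. Otherwise return nullptr.
  Handler *find(ACT act) const {
    const auto slot = static_cast<std::uint32_t>(act);
    const auto gen = static_cast<std::uint32_t>(act >> 32);
    if (slot >= slots_.size()) return nullptr;
    const Slot &s = slots_[slot];
    return (s.handler && s.generation == gen) ? s.handler.get() : nullptr;
  }

  // Free the handler and bump the generation so stale copies of the ACT fail.
  void release(ACT act) {
    if (find(act) == nullptr) return;
    const auto slot = static_cast<std::uint32_t>(act);
    slots_[slot].handler.reset();
    ++slots_[slot].generation;
    free_.push_back(slot);
  }

  std::size_t live() const { return slots_.size() - free_.size(); }

private:
  struct Slot {
    std::unique_ptr<Handler> handler;
    std::uint32_t generation = 0;
  };
  std::vector<Slot> slots_;
  std::vector<std::uint32_t> free_;
};
```

The extra check costs a comparison on every lookup, which is the overhead mentioned above. A 32-bit generation also eventually wraps around, so a long-running system would have to decide whether that risk is acceptable.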

In our e-commerce system example the management application initiator can subscribe the same ACT with multiple agent services and use it to demultiplex and dispatch these management agents’ responses, such as ‘connection established’ completion events. Moreover, each agent can return this ACT multiple times, for example whenever it detects the establishment of a new connection.
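The sketch below shows one ACT that is shared by two agents and returned with every matching event. Agent, Connection_Event, Connection_Log, and on_connection() are hypothetical names, and the agents are simulated in-process. The callback and its void pointer ACT follow the familiar C-style "callback plus user data" convention.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

struct Connection_Event {
  std::string agent;
  std::string peer;
};

// Initiator-supplied callback; it receives each event together with the ACT.
using Callback = void (*)(const Connection_Event &event, void *act);

// Hypothetical agent: the subscription outlives the first event, so the same
// ACT comes back with every new connection the agent detects.
class Agent {
public:
  explicit Agent(std::string name) : name_(std::move(name)) {}
  void subscribe(Callback cb, void *act) { subs_.emplace_back(cb, act); }
  void connection_established(const std::string &peer) {
    for (const auto &s : subs_) s.first(Connection_Event{name_, peer}, s.second);
  }
private:
  std::string name_;
  std::vector<std::pair<Callback, void *>> subs_;
};

// Initiator-side state that the shared ACT points to.
struct Connection_Log {
  std::vector<std::string> entries;
};

// One callback serves every agent: it recovers the log from the ACT.
inline void on_connection(const Connection_Event &event, void *act) {
  auto *connections = static_cast<Connection_Log *>(act);
  assert(connections != nullptr);
  connections->entries.push_back(event.agent + ":" + event.peer);
}
```

Because the ACT is reused rather than consumed, the initiator must keep the referenced state alive for as long as any subscription is active.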


Our e-commerce system example illustrates how the synchronous callback object strategy can be implemented using pointer ACTs. We define a generic callback handler class that uses the Completion_Handler_ACT defined in implementation activity 1 (267).


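```cpp
class Callback_Handler {
public:
  // Callback method: forward the completion event to the
  // handle_event() hook of the handler that the ACT points to.
  virtual void completion_event
    (const Completion_Event &event,
     Completion_Handler_ACT *act) {
    act->handle_event (event);
  }
};
```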

Note how both demultiplexing strategies described above allow initiators to process many different types of completion events efficiently. In particular the ACT demultiplexing step requires constant O(1) time, regardless of the number of completion handlers represented by subclasses of Completion_Handler_ACT.
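Only the callback strategy is shown in code above. For comparison, here is a sketch of a queue-based alternative. It assumes that services fill a queue of completion events which the initiator drains, in the spirit of the Win32 completion ports described later in this pattern. Handler_ACT is a hypothetical stand-in for Completion_Handler_ACT, and Completion_Queue and Counting_Handler are likewise illustrative. As with Callback_Handler, each dispatch is a single virtual call.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <utility>

struct Completion_Event {
  std::string data;
};

// Hypothetical stand-in for Completion_Handler_ACT.
class Handler_ACT {
public:
  virtual ~Handler_ACT() = default;
  virtual void handle_event(const Completion_Event &event) = 0;
};

// What a service posts when an operation finishes: the result and the ACT.
struct Queued_Completion {
  Completion_Event event;
  Handler_ACT *act;
};

// Hypothetical initiator-owned completion queue.
class Completion_Queue {
public:
  void post(Queued_Completion c) { q_.push(std::move(c)); }

  // Dispatch everything queued so far. Each dispatch costs one virtual call,
  // however many kinds of handler exist. Returns the number dispatched.
  int drain() {
    int dispatched = 0;
    while (!q_.empty()) {
      Queued_Completion c = std::move(q_.front());
      q_.pop();
      assert(c.act != nullptr);
      c.act->handle_event(c.event);
      ++dispatched;
    }
    return dispatched;
  }

private:
  std::queue<Queued_Completion> q_;
};

// Example handler used to observe dispatching.
struct Counting_Handler : Handler_ACT {
  int count = 0;
  void handle_event(const Completion_Event &) override { ++count; }
};
```

With a queue, the initiator decides when completions are processed. With callbacks, the service's delivery thread decides.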

In our example scenario, system administrators employ the management application in conjunction with management agents to display and log all connections established between Web browsers and Web servers. In addition, the management application displays and logs each file transfer, because the HTTP/1.1 protocol can carry multiple GET requests over a single persistent connection [Mog95].

We first define a management agent proxy that management applications can use to subscribe asynchronously for completion events. We next illustrate how to define a concrete ACT that is tailored to the types of completion events that occur in our e-commerce system. Finally we implement the main() function that combines all these components to create the management application.

The management agent proxy [GoF95]. This class defines the types of events that management applications can subscribe to, as well as a method that allows management applications to subscribe a callback with a management agent asynchronously:

```cpp
class Management_Agent_Proxy {
public:
  enum Event_Type { NEW_CONNECTIONS, FILE_TRANSFERS };

  void subscribe (Callback_Handler *handler,
                  Event_Type type,
                  Completion_Handler_ACT *act);
  // …
};
```

This proxy class is implemented using the Half-Object plus Protocol pattern [PLoPD1], which defines an object interface that encapsulates the protocol between the proxy and a management agent. When an event of a particular Event_Type occurs, the management agent returns the corresponding completion event to the Management_Agent_Proxy. This proxy then invokes the completion_event() method on the Callback_Handler, passing it the pointer to the Completion_Handler_ACT that the management application passed to subscribe() originally, as shown in implementation activity 6 (271).

The concrete ACT. Management applications playing the role of initiators and management agent services in our e-commerce system exchange pointers to Completion_Handler_ACT subclass objects, such as the following Management_Completion_Handler:

```cpp
class Management_Completion_Handler
  : public Completion_Handler_ACT {
private:
  Window *window_;  // Used to display and
  Logger *logger_;  // to log completion events.

public:
  Management_Completion_Handler (Window *w, Logger *l)
    : window_ (w), logger_ (l) { }

  virtual void handle_event
    (const Completion_Event &event) {
    window_->update (event);
    logger_->update (event);
  }
};
```

The parameters passed to the Management_Completion_Handler constructor identify the concrete completion handler state used by the management application to process completion events. These two parameters are cached in data members of the class. They point to the database logger and the GUI window that will be updated when completion events arrive from management agents via the handle_event() hook method. This hook method is dispatched by the Callback_Handler’s completion_event() method, as shown in implementation activity 6 (271).

The main() function. The following main() function shows how a management application invokes asynchronous subscription operations on a management agent proxy and then processes the subsequent connection and file transfer completion event responses. To simplify and optimize the demultiplexing and processing of completion handlers, the management application passes a pointer ACT to a Management_Completion_Handler when it subscribes to a management agent proxy. Generalizing this example to work with multiple management agents and other types of completion events is straightforward.

```cpp
int main () {
```

The application starts by creating a single instance of the Callback_Handler class defined in implementation activity 6 (271):

```cpp
  Callback_Handler callback_handler;
```

This Callback_Handler is shared by all asynchronous subscription operations and is used to demultiplex all types of incoming completion events.

The application next creates an instance of the Management_Agent_Proxy described above:

```cpp
  Management_Agent_Proxy agent_proxy = // …
```

This agent will call back to the callback_handler when connection and file transfer completion events occur.

The application then creates several objects to handle logging and display completion processing:

```cpp
  Logger database_logger (DATABASE);
  Logger console_logger (CONSOLE);
  Window main_window (200, 200);
  Window topology_window (100, 20);
```

Some completion events will be logged to a database, whereas others will be written to a console window. Depending on the event type, different graphical displays may need to be updated. For example, a topology window might show an iconic view of the system.

The main() function creates two Management_Completion_Handler objects that uniquely identify the concrete completion handlers that process connection establishment and file transfer completion events, respectively:

```cpp
  Management_Completion_Handler connection_act
    (&topology_window, &database_logger);
  Management_Completion_Handler file_transfer_act
    (&main_window, &console_logger);
```

The Management_Completion_Handler objects are initialized with pointers to the appropriate Window and Logger objects. Pointer ACTs to these two Management_Completion_Handler objects are passed explicitly when the management application asynchronously subscribes the callback_handler with Management_Agent_Proxy for each type of event:

```cpp
  agent_proxy.subscribe (&callback_handler,
                         Management_Agent_Proxy::NEW_CONNECTIONS,
                         &connection_act);
  agent_proxy.subscribe (&callback_handler,
                         Management_Agent_Proxy::FILE_TRANSFERS,
                         &file_transfer_act);
```

Note that the Management_Completion_Handlers are held ‘implicitly’ in the address space of the initiator, as described in implementation activity 2 (269).

Once these subscriptions are complete the application enters its event loop, in which all subsequent processing is driven by callbacks from completion events.

```cpp
  run_event_loop ();
}
```

Whenever a management agent detects a new connection or file transfer, it sends the associated completion event to the Management_Agent_Proxy. This proxy then extracts the Management_Completion_Handler ACT from the completion event and uses the Callback_Handler’s completion_event() method to dispatch the Management_Completion_Handler’s handle_event() hook method, which processes each completion event. For example, file transfer events can be displayed on a GUI window and logged to the console, whereas new connection establishment events can be displayed on a system topology window and logged to a database.
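To see the pieces of the example working together, the sketch below wires them up in a single process. Apart from the class and method names taken from the text, everything here is an assumption. Completion_Event carries only a description string. Window and Logger are default-constructed recorders rather than the text’s Window (200, 200) and Logger (DATABASE). Management_Agent_Proxy::simulate_event() is a hypothetical substitute for events arriving from a real management agent.

```cpp
#include <cassert>
#include <string>
#include <vector>

struct Completion_Event {
  std::string description;
};

class Completion_Handler_ACT {
public:
  virtual ~Completion_Handler_ACT() = default;
  virtual void handle_event(const Completion_Event &event) = 0;
};

class Callback_Handler {
public:
  virtual ~Callback_Handler() = default;
  // Dispatch the hook method of whatever handler the ACT points to.
  virtual void completion_event(const Completion_Event &event,
                                Completion_Handler_ACT *act) {
    assert(act != nullptr);
    act->handle_event(event);
  }
};

// Hypothetical stand-ins that only record what they were asked to show or log.
struct Window {
  std::vector<std::string> shown;
  void update(const Completion_Event &e) { shown.push_back(e.description); }
};
struct Logger {
  std::vector<std::string> logged;
  void update(const Completion_Event &e) { logged.push_back(e.description); }
};

class Management_Completion_Handler : public Completion_Handler_ACT {
public:
  Management_Completion_Handler(Window *w, Logger *l) : window_(w), logger_(l) {}
  void handle_event(const Completion_Event &event) override {
    window_->update(event);
    logger_->update(event);
  }
private:
  Window *window_;
  Logger *logger_;
};

// In-process stub: simulate_event() stands in for a completion event arriving
// from a remote management agent.
class Management_Agent_Proxy {
public:
  enum Event_Type { NEW_CONNECTIONS, FILE_TRANSFERS };
  void subscribe(Callback_Handler *handler, Event_Type type,
                 Completion_Handler_ACT *act) {
    subscriptions_.push_back(Subscription{type, handler, act});
  }
  void simulate_event(Event_Type type, const std::string &what) {
    for (const Subscription &s : subscriptions_)
      if (s.type == type)  // return the ACT exactly as it was subscribed
        s.handler->completion_event(Completion_Event{what}, s.act);
  }
private:
  struct Subscription {
    Event_Type type;
    Callback_Handler *handler;
    Completion_Handler_ACT *act;
  };
  std::vector<Subscription> subscriptions_;
};
```

A test driver can reuse the main() wiring from the text almost unchanged. It calls simulate_event() at the point where the real application would enter run_event_loop().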

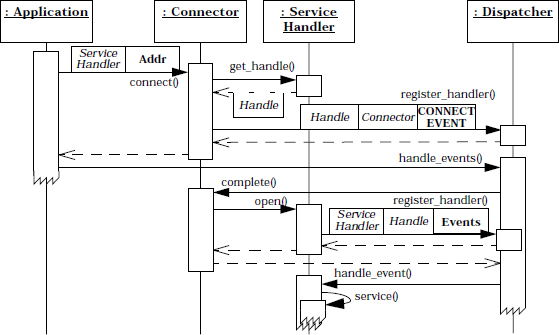

The following sequence diagram illustrates the key collaborations between components in the management application. For simplicity we omit the creation of the agent, callback handler, window, and logging handlers, and focus on using only one window and one logging handler.

Chain of Service ACTs. A chain of services can occur when intermediate services also play the role of initiators that invoke asynchronous operations on other services to process the original initiator’s operation.

For example, consider a management application that invokes operation requests on an agent, which in turn invokes other requests on a timer mechanism. In this scenario the management application initiator uses a chain of services. All intermediate services in the chain—except the two ends—are both initiators and services, because they both receive and initiate asynchronous operations.
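An intermediate service in such a chain can keep the upstream initiator’s ACT, untouched, inside the ACT it creates for the next service downstream. The sketch below uses a hypothetical Agent that calls a hypothetical Timer_Service; neither name comes from the text. The agent allocates a Context holding the application’s reply callback and ACT, passes the Context’s address to the timer as its own ACT, and unwraps it when the timer fires.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical end of the chain: a timer service that echoes an opaque ACT.
class Timer_Service {
public:
  using Callback = void (*)(void *act);
  void schedule(Callback cb, void *act) { pending_.push_back(Entry{cb, act}); }
  // Fire every pending timer, handing each callback back its ACT.
  void fire_all() {
    std::vector<Entry> due;
    due.swap(pending_);
    for (const Entry &e : due) e.cb(e.act);
  }
private:
  struct Entry { Callback cb; void *act; };
  std::vector<Entry> pending_;
};

// Middle of the chain: a service to the application, an initiator to the timer.
class Agent {
public:
  using Reply = void (*)(const std::string &result, void *act);
  explicit Agent(Timer_Service &timer) : timer_(timer) {}

  // Wrap the application's ACT inside the agent's own ACT for the timer.
  void request(Reply reply, void *upstream_act) {
    timer_.schedule(&Agent::on_timeout, new Context{reply, upstream_act});
  }

private:
  struct Context { Reply reply; void *upstream_act; };

  static void on_timeout(void *act) {
    assert(act != nullptr);
    // Reclaim the agent's ACT; it is freed when this function returns.
    std::unique_ptr<Context> ctx(static_cast<Context *>(act));
    // Answer the original initiator with its ACT, still unchanged.
    ctx->reply("timeout", ctx->upstream_act);
  }

  Timer_Service &timer_;
};
```

If the timer never fires, the Context leaks. This is the memory-leak liability discussed later in this pattern, and a real agent would need to track its outstanding contexts.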


A chain of services must decide which service ultimately responds to the initiator. Moreover, if each service in a chain uses the Asynchronous Completion Token pattern, four issues related to passing, storing, and returning ACTs must be considered:

Non-opaque ACTs. In some implementations of the Asynchronous Completion Token pattern, services do not treat the ACT as purely opaque. For example, Win32 OVERLAPPED structures are non-opaque ACTs, because certain fields can be modified by the operating system kernel. One solution to this problem is to pass subclasses of the OVERLAPPED structure that contain additional ACT state, as shown in implementation activity 1.1 in the Proactor pattern (215).

Synchronous ACTs. ACTs can also be used for operations that result in synchronous callbacks. In this case the ACT is not really an asynchronous completion token but rather a synchronous one. Using ACTs for synchronous callback operations provides a well-structured means of passing state related to an operation through to a service. In addition this approach decouples concurrency policies, so that the code receiving an ACT can be used for either synchronous or asynchronous operations.
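A synchronous ACT can be as simple as the "user data" pointer that many C and C++ APIs accept alongside a callback. In this sketch, for_each_connection(), Prefix_Tally, and count_prefix() are hypothetical names. The service calls back before it returns, yet the caller’s state still travels as an opaque ACT, so count_prefix() could equally be registered with an asynchronous operation.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Caller-supplied callback; the ACT carries the caller's state.
using Visit = void (*)(const std::string &connection, void *act);

// Hypothetical service operation that calls back synchronously, before it
// returns, but still passes the caller's state through as an opaque ACT.
inline void for_each_connection(const std::vector<std::string> &connections,
                                Visit visit, void *act) {
  for (const std::string &c : connections) visit(c, act);
}

// Caller-side state reached through the ACT.
struct Prefix_Tally {
  std::string prefix;
  std::size_t matches = 0;
};

// The same callback could be handed to an asynchronous operation unchanged.
inline void count_prefix(const std::string &connection, void *act) {
  auto *tally = static_cast<Prefix_Tally *>(act);
  assert(tally != nullptr);
  if (connection.compare(0, tally->prefix.size(), tally->prefix) == 0)
    ++tally->matches;
}
```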

HTTP-Cookies. Web servers can use the Asynchronous Completion Token pattern if they expect responses from Web browsers. For example, a Web server may expect a user to transmit data entered into a form that was downloaded from the server to the browser in response to a previous HTTP GET request. Due to the ‘sessionless’ design of the HTTP protocol, and because users need not complete forms immediately, the Web server, acting in the role of an ‘initiator’, transmits a cookie (the ACT) to the Web browser along with the form. This cookie allows the server to associate the user’s response with his or her original request for the form. Web browsers need not interpret the cookie, but simply return it unchanged to the Web server along with the completed form.

Operating system asynchronous I/O mechanisms. The Asynchronous Completion Token pattern is used by operating systems that support asynchronous I/O. For instance, the following techniques are used by Windows NT and POSIX:

On Windows NT, for example, when initiators start asynchronous reads and writes via ReadFile() or WriteFile(), they can specify OVERLAPPED struct ACTs that will be queued at a completion port when the operations finish. Initiators can then use the GetQueuedCompletionStatus() function to dequeue completion events that return the original OVERLAPPED struct as an ACT. Implementation activity 5.5 in the Proactor pattern (215) illustrates this design in more detail.

CORBA demultiplexing. The TAO CORBA Object Request Broker [SC99] uses the Asynchronous Completion Token pattern to demultiplex various types of GIOP requests and responses efficiently, scalably, and predictably in both the client initiator and server.

In a multi-threaded client initiator, for example, TAO uses ACTs to associate GIOP responses from a server with the appropriate client thread that initiated the request over a single multiplexed TCP/IP connection to the server process. Each TAO client request carries a unique opaque sequence number, the ACT, represented as a 32-bit integer. When an operation is invoked the client-side TAO ORB assigns its sequence number to be an index into an internal connection table managed using the Leader/Followers pattern (447).

Each table entry keeps track of a client thread that is waiting for a response from its server over the multiplexed connection. When the server replies, it returns the sequence number ACT sent by the client. TAO’s client-side ORB uses the ACT to index into its connection table to determine which client thread to awaken and pass the reply to.
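The client-side bookkeeping described above can be approximated by the following single-threaded sketch. Pending_Requests is a hypothetical class, not TAO’s API. It omits the threads and the Leader/Followers coordination that TAO uses, and shows only one idea: a request id that doubles as a table index lets replies be matched in constant time, even when they arrive out of order.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Hypothetical client side of one multiplexed connection. Each outstanding
// request gets a 32-bit request id (the ACT) that is also a slot index.
class Pending_Requests {
public:
  explicit Pending_Requests(std::uint32_t capacity) : slots_(capacity) {
    assert(capacity > 0);
    for (std::uint32_t i = capacity; i > 0; --i) free_.push_back(i - 1);
  }

  // Reserve a slot for an outgoing request; false if none is free.
  bool send_request(std::uint32_t &id) {
    if (free_.empty()) return false;
    id = free_.back();
    free_.pop_back();
    slots_[id].waiting = true;
    return true;
  }

  // A reply arrives carrying the id sent with the request. Matching it is one
  // bounds check and one array access, whatever order replies arrive in.
  bool on_reply(std::uint32_t id, const std::string &body) {
    if (id >= slots_.size() || !slots_[id].waiting) return false;
    slots_[id].waiting = false;
    slots_[id].replied = true;
    slots_[id].body = body;
    return true;
  }

  // The waiting caller collects its reply, which frees the slot for reuse.
  bool take(std::uint32_t id, std::string &body) {
    if (id >= slots_.size() || !slots_[id].replied) return false;
    body = std::move(slots_[id].body);
    slots_[id] = Slot{};
    free_.push_back(id);
    return true;
  }

private:
  struct Slot {
    bool waiting = false;
    bool replied = false;
    std::string body;
  };
  std::vector<Slot> slots_;
  std::vector<std::uint32_t> free_;
};
```

Because slots are reused, a production version would also need to reject stale ids, for example by adding a generation count to each id.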

In the server, TAO uses the Asynchronous Completion Token pattern to provide low-overhead demultiplexing throughout the various layers of features in an Object Adapter [SC99]. For example, when a server creates an object reference, TAO’s Object Adapter stores special object ID and POA ID values in its object key, which is ultimately passed to clients as an ACT contained in an object reference.

When the client passes back the object key with its request, TAO’s Object Adapter extracts the special values from the ACT and uses them to index directly into tables it manages. This so-called ‘active demultiplexing’ scheme [PRS+99] uses an ACT to ensure constant-time O(1) lookup regardless of the number of objects in a POA or the number of nested POAs in an Object Adapter.
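The sketch below illustrates active demultiplexing with a hypothetical 32-bit object key. The key’s high 16 bits index a POA table and its low 16 bits index the objects in that POA. This layout is an assumption for illustration and is not meant to reproduce TAO’s actual object key format. It does not model nested POAs, and it does not guard against more than 65,536 POAs or objects per POA.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical key layout: [ POA index : 16 | object index : 16 ].
using Object_Key = std::uint32_t;

struct Servant {
  std::string name;
};

class Object_Adapter {
public:
  // Create a POA; its position in poas_ is the POA index.
  std::uint16_t create_poa() {
    poas_.emplace_back();
    return static_cast<std::uint16_t>(poas_.size() - 1);
  }
  // Register a servant and hand out a key that encodes both indices.
  Object_Key activate(std::uint16_t poa, Servant *servant) {
    assert(poa < poas_.size());
    std::vector<Servant *> &objects = poas_[poa];
    objects.push_back(servant);
    const auto object = static_cast<std::uint16_t>(objects.size() - 1);
    return (static_cast<Object_Key>(poa) << 16) | object;
  }
  // Demultiplex a request: two array lookups, no searching or hashing.
  Servant *find(Object_Key key) const {
    const std::uint32_t poa = key >> 16;
    const std::uint32_t object = key & 0xFFFFu;
    if (poa >= poas_.size() || object >= poas_[poa].size()) return nullptr;
    return poas_[poa][object];
  }
private:
  std::vector<std::vector<Servant *>> poas_;
};
```

The lookup cost does not grow with the number of objects, which is the property the constant-time claim above relies on.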

Electronic medical imaging system management. The management example described in this pattern is derived from a distributed electronic medical imaging system developed at Washington University for Project Spectrum [BBC94]. In this system, management applications monitor the performance and status of multiple distributed components in the medical imaging system, including image servers, modalities, hierarchical storage management systems, and radiologist diagnostic workstations. Management agents provide an asynchronous service that notifies management applications of events, such as connection establishment events and image transfer events. This system uses the Asynchronous Completion Token pattern so that management applications can efficiently associate state with events that arrive from management agents in response to asynchronous subscription operations invoked earlier.

Jini. The handback object in the Jini [Sun99a] distributed event specification [Sun99b] is a Java-based example of the Asynchronous Completion Token pattern. When a consumer registers with an event source to receive notifications, it can pass a handback object to this event source. This object is a java.rmi.MarshalledObject, so it is not unmarshaled at the event source but is simply ‘handed back’ to the consumer as part of the event notification.

The consumer can then use the getRegistrationObject() method of the event notification to retrieve the handback object that was passed to the event source when the consumer registered with it. Consumers can thus rapidly recover the context in which to process the event notification. This design is particularly useful when a third party registered the consumer to receive event notifications.

FedEx inventory tracking. An intriguing real-life example of the Asynchronous Completion Token pattern is implemented by the inventory tracking mechanism of Federal Express, the US package-delivery service. A FedEx Airbill contains a section labeled: ‘Your Internal Billing Reference Information (Optional: First 24 characters will appear on invoice).’

The sender of a package uses this field as an ACT. FedEx (the service) returns this ACT to the sender (the initiator) with the invoice that notifies the sender that the transaction has completed. FedEx deliberately defines this field very loosely: only its first 24 characters are returned, and they are otherwise ‘untyped’. Senders can therefore use the field in a variety of ways. For example, a sender can populate this field with the index of a record in an internal database, or with the name of a file containing a ‘to-do list’ to be performed after acknowledgment of the package delivery has been received.

There are several benefits to using the Asynchronous Completion Token pattern:

Simplified initiator data structures. Initiators need not maintain complex data structures to associate service responses with completion handlers. The ACT returned by the service can be downcast or reinterpreted to convey all the information the initiator needs to demultiplex to its appropriate completion action.

Efficient state acquisition. ACTs are time efficient because they do not require complex parsing of data returned with the service response. All relevant information necessary to associate the response with the original request can be stored either in the ACT or in an object referenced by the ACT. Alternatively, ACTs can be used as indices or pointers to operation state for highly efficient access, thereby eliminating costly table searches.

Space efficiency. ACTs can consume minimal data space yet can still provide applications with sufficient information to associate large amounts of state to process asynchronous operation completion actions. For example, in C and C++, void pointer ACTs can reference arbitrarily large objects held in the initiator application.

Flexibility. User-defined ACTs are not forced to inherit from an interface to use the service’s ACTs. This allows applications to pass objects as ACTs even when changing their type is undesirable or impossible. The generic nature of ACTs can be used to associate an object of any type with an asynchronous operation. For example, when ACTs are implemented as CORBA object references they can be narrowed to the appropriate concrete interface.

Non-dictatorial concurrency policies. Long duration operations can be executed asynchronously because operation state can be recovered from an ACT efficiently. Initiators can therefore be single-threaded or multi-threaded depending on application requirements. In contrast, a service that does not provide ACTs may force delay-sensitive initiators to perform operations synchronously within threads to handle operation completions properly.

There are several liabilities to avoid when using the Asynchronous Completion Token pattern.

Memory leaks. Memory leaks can result if initiators use ACTs as pointers to dynamically allocated memory and services fail to return the ACTs, for example if the service crashes. As described in implementation activity 2 (269), initiators wary of this possibility should maintain separate ACT repositories or tables. These can be used for explicit garbage collection if services fail or if they corrupt the ACT.

Authentication. When an ACT is returned to an initiator on completion of an asynchronous event, the initiator may need to authenticate the ACT before using it. This is necessary if the server cannot be trusted to have treated the ACT opaquely and may have changed its value. Implementation activity 2 (269) describes a strategy for addressing this liability.

Application re-mapping. If ACTs are used as direct pointers to memory, errors can occur if part of the application is re-mapped in virtual memory. This situation can occur in persistent applications that are restarted after crashes, as well as for objects allocated from a memory-mapped address space. To protect against these errors, indices to a repository can be used as ACTs, as described in implementation activities 1 (267) and 2 (269). The extra level of indirection provided by these ‘index ACTs’ protects against re-mappings, because indices can remain valid across re-mappings, whereas pointers to direct memory may not.
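The following sketch combines the authentication and re-mapping remedies under stated assumptions: the ACT is a 64-bit value holding a repository index in its low 32 bits and a generation counter in its high 32 bits. `Indexed_Act_Table` is a hypothetical name, and generation counters are one common way to detect stale or altered tokens, not necessarily the strategy described in implementation activity 2.

```cpp
#include <cstdint>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical 'index ACT': slot index (low 32 bits) plus generation
// (high 32 bits). It stays meaningful if the table is re-mapped or reloaded.
using Act = std::uint64_t;

class Indexed_Act_Table {
public:
    Act allocate(std::string state) {
        std::uint32_t index;
        if (!free_.empty()) {               // reuse a released slot
            index = free_.back();
            free_.pop_back();
        } else {
            index = static_cast<std::uint32_t>(slots_.size());
            slots_.push_back(Slot{});
        }
        Slot &slot = slots_[index];
        slot.in_use = true;
        slot.state = std::move(state);
        return (static_cast<Act>(slot.generation) << 32) | index;
    }
    // Authenticate before use: the index must be in range and the generation
    // must match a slot that is still in use.
    std::optional<std::string> release(Act act) {
        auto index = static_cast<std::uint32_t>(act & 0xFFFFFFFFu);
        auto generation = static_cast<std::uint32_t>(act >> 32);
        if (index >= slots_.size()) return std::nullopt;
        Slot &slot = slots_[index];
        if (!slot.in_use || slot.generation != generation) return std::nullopt;
        slot.in_use = false;
        ++slot.generation;                  // invalidate any copies of this ACT
        free_.push_back(index);
        return std::move(slot.state);
    }
private:
    struct Slot {
        std::uint32_t generation = 0;
        bool in_use = false;
        std::string state;
    };
    std::vector<Slot> slots_;
    std::vector<std::uint32_t> free_;
};
```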

The Asynchronous Completion Token and Memento patterns [GoF95] are similar with respect to their participants. In the Memento pattern, originators give mementos to caretakers who treat the mementos as ‘opaque’ objects. In the Asynchronous Completion Token pattern, initiators give ACTs to services that treat the ACTs as ‘opaque’ objects.

These patterns differ in motivation and applicability, however. The Memento pattern takes ‘snapshots’ of object states, whereas the Asynchronous Completion Token pattern associates state with the completion of asynchronous operations. Another difference is in their dynamics. In the Asynchronous Completion Token pattern, the initiator, which corresponds to the originator in Memento, creates the ACT proactively and passes it to the service. In Memento, by contrast, the caretaker takes the initiative and requests the creation of a memento from an originator, which therefore creates it reactively.

Irfan Pyarali and Timothy Harrison were co-authors on the original version of the Asynchronous Completion Token pattern. Thanks to Paul McKenney and Richard Toren for their insightful comments and contributions, and to Michael Ogg for supplying the Jini known use.

The Acceptor-Connector design pattern decouples the connection and initialization of cooperating peer services in a networked system from the processing performed by the peer services after they are connected and initialized.

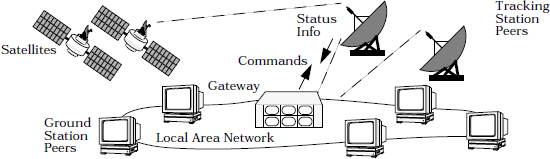

Consider a large-scale distributed system management application that monitors and controls a satellite constellation [Sch96]. Such a management application typically consists of a multi-service, application-level gateway that routes data between transport endpoints connecting remote peer hosts.

Each service in the peer hosts uses the gateway to send and receive several types of data, including status information, bulk data, and commands that control the satellites. The peer hosts can be distributed throughout local-area and wide-area networks.

The gateway transmits data between its peer hosts using the connection-oriented TCP/IP protocol [Ste93]. Each service in the system is bound to a particular transport address, which is designated by a tuple consisting of an IP host address and a TCP port number. Different port numbers uniquely identify different types of service.

Unlike the binding of services to specific TCP/IP host/port tuples, which can be selected early in the distributed system’s lifecycle, it may be premature to designate the connection establishment and service initialization roles a priori. Instead, the services in the gateway and peer hosts should be able to change their connection roles flexibly to support the following run-time behavior:

In general, the inherent flexibility required to support such a run-time behavior demands communication software that allows the connection establishment, initialization, and processing of peer services to evolve gracefully and to vary independently.

A networked system or application in which connection-oriented protocols are used to communicate between peer services connected via transport endpoints.

Applications in connection-oriented networked systems often contain a significant amount of configuration code that establishes connections and initializes services. This configuration code is largely independent of the processing that services perform on data exchanged between their connected transport endpoints. Tightly coupling the configuration code with the service processing code is therefore undesirable, because it fails to resolve four forces:

The gateway from our example may require integration with a directory service that runs over the TP4 or SPX transport protocols rather than TCP. Ideally, this integration should have little or no effect on the implementation of the gateway services themselves.

FTP, TELNET, HTTP and CORBA IIOP services all use different application-level communication protocols. However, they can all be configured using the same connection and initialization mechanisms.

Applications with a large number of peers may need to establish many connections asynchronously and concurrently. Efficient and scalable connection establishment is particularly important for applications, such as our gateway example, that communicate over long-latency wide area networks.

Decouple the connection and initialization of peer services in a networked application from the processing these peer services perform after they are connected and initialized.

In detail: encapsulate application services within peer service handlers. Each service handler implements one half of an end-to-end service in a networked application. Connect and initialize peer service handlers using two factories: acceptor and connector. Both factories cooperate to create a full association [Ste93] between two peer service handlers and their two connected transport endpoints, each encapsulated by a transport handle.

The acceptor factory establishes connections passively on behalf of an associated peer service handler upon the arrival of connection request events issued by remote peer service handlers. Likewise, the connector factory establishes connections actively to designated remote peer service handlers on behalf of peer service handlers.

After a connection is established, the acceptor and connector factories initialize their associated peer service handlers and pass them their respective transport handles. The peer service handlers then perform application-specific processing, using their transport handles to exchange data via their connected transport endpoints. In general, service handlers do not interact with the acceptor and connector factories after they are connected and initialized.

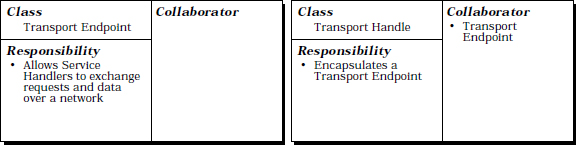

There are six key participants in the Acceptor-Connector pattern:

A passive-mode transport endpoint is a factory that listens for connection requests to arrive, accepts those connection requests, and creates transport handles that encapsulate the newly connected transport endpoints. Data can be exchanged via connected transport endpoints by reading and writing to their associated transport handles.

In the gateway example, we use socket handles to encapsulate transport endpoints. In this case, a passive-mode transport endpoint is a passive-mode socket handle [Ste98] that is bound to a TCP port number and IP address. It creates connected transport endpoints that are encapsulated by data-mode socket handles. Standard Socket API operations, such as recv() and send(), can use these connected data-mode socket handles to read and write data.
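For readers less familiar with the Sockets API, the following POSIX sketch shows the two kinds of socket handle on the loopback interface. It calls the C API directly rather than the wrapper facades used in the gateway, and `loopback_exchange` is a hypothetical helper. The listener plays the passive-mode transport endpoint, and `accept()` produces the data-mode handle that is then used with `recv()`.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cstring>
#include <string>

// Returns the string received by the accepting side, or "" on any error.
std::string loopback_exchange(const std::string &message) {
    int listener = socket(AF_INET, SOCK_STREAM, 0);   // passive-mode endpoint
    if (listener < 0) return "";
    sockaddr_in addr;
    std::memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;                                 // kernel picks a port
    socklen_t len = sizeof addr;
    if (bind(listener, reinterpret_cast<sockaddr *>(&addr), sizeof addr) < 0 ||
        listen(listener, 5) < 0 ||
        getsockname(listener, reinterpret_cast<sockaddr *>(&addr), &len) < 0) {
        close(listener);
        return "";
    }
    // Active side: a blocking connect to loopback completes once the kernel
    // queues the connection, so this works in a single thread.
    int client = socket(AF_INET, SOCK_STREAM, 0);
    if (client < 0 ||
        connect(client, reinterpret_cast<sockaddr *>(&addr), sizeof addr) < 0) {
        if (client >= 0) close(client);
        close(listener);
        return "";
    }
    // accept() creates a new data-mode handle; the listener keeps listening.
    int server = accept(listener, nullptr, nullptr);
    std::string received;
    if (server >= 0) {
        send(client, message.data(), message.size(), 0);
        char buf[256];
        ssize_t n = recv(server, buf, sizeof buf, 0);
        if (n > 0) received.assign(buf, static_cast<std::size_t>(n));
        close(server);
    }
    close(client);
    close(listener);
    return received;
}
```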

A service handler defines one half of an end-to-end service in a networked system. A concrete service handler often plays either the client role or server role in this end-to-end service. In peer-to-peer use cases it can even play both roles simultaneously. A service handler provides an activation hook method that is used to initialize it after it is connected to its peer service handler. In addition, the service handler contains a transport handle, such as a data-mode socket handle, that encapsulates a transport endpoint. Once connected, this transport handle can be used by a service handler to exchange data with its peer service handler via their connected transport endpoints.

In our example the service handlers are both cooperating components within the gateway and peer hosts that communicate over TCP/IP via their connected socket handles. Service handlers are responsible for processing status information, bulk data, and commands that monitor and control a satellite constellation.

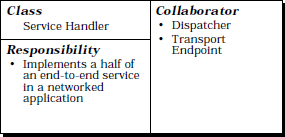

An acceptor is a factory that implements a strategy for passively establishing a connected transport endpoint, and creating and initializing its associated transport handle and service handler. An acceptor provides two methods, connection initialization and connection completion, that perform these steps with the help of a passive-mode transport endpoint.

When its initialization method is called, an acceptor binds its passive-mode transport endpoint to a particular transport address, such as a TCP port number and IP host address, on which the endpoint listens passively for the arrival of connection requests.

When a connection request arrives, the acceptor’s connection completion method performs three steps:

A connector is a factory that implements the strategy for actively establishing a connected transport endpoint and initializing its associated transport handle and service handler. It provides two methods, connection initiation and connection completion, that perform these steps.

The connection initiation method is passed an existing service handler and establishes a connected transport endpoint for it with an acceptor. This acceptor must be listening for connection requests to arrive on a particular transport address, as described above.

Separating the connector’s connection initiation method from its completion method allows a connector to support both synchronous and asynchronous connection establishment transparently:

Regardless of whether a transport endpoint is connected synchronously or asynchronously, both acceptors and connectors initialize a service handler by calling its activation hook method after a transport endpoint is connected. From this point on, service handlers generally do not interact with their acceptor and connector factories.

A dispatcher is responsible for demultiplexing indication events that represent various types of service requests, such as connection requests and data requests.

Note that a dispatcher is not necessary for synchronous connection establishment, because the thread that initiates the connection blocks awaiting the connection completion event. As a result, this thread can activate the service handler directly.

Networked applications and services can be built by subclassing and instantiating the generic participants of the Acceptor-Connector pattern described above to create the following concrete components.

Concrete service handlers define the application-specific portions of end-to-end services. They are activated by concrete acceptors or concrete connectors. Concrete acceptors instantiate generic acceptors with concrete service handlers, transport endpoints, and transport handles used by these service handlers. Similarly, concrete connectors instantiate generic connectors.

Concrete service handlers, acceptors, and connectors are also instantiated with a specific type of interprocess communication (IPC) mechanism, such as Sockets [Ste98] or TLI [Rago93]. These IPC mechanisms are used to create the transport endpoints and transport handles that connected peer service handlers use to exchange data.

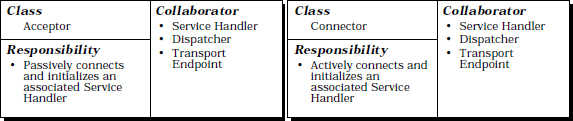

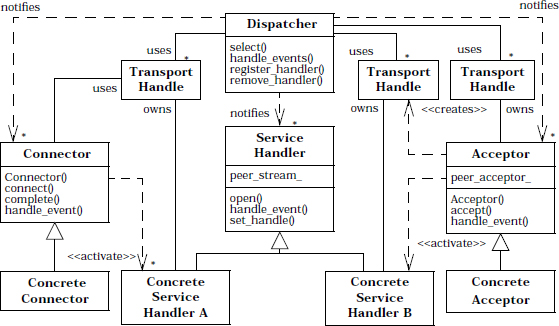

The class diagram of the Acceptor-Connector pattern is shown in the following figure:

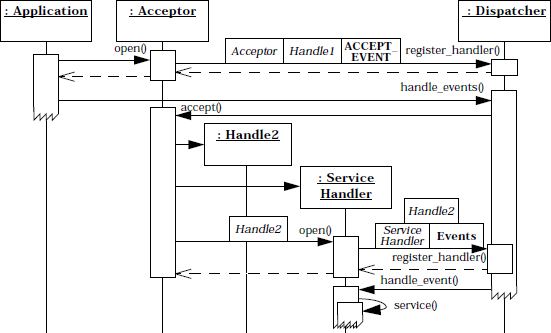

To illustrate the collaborations performed by participants in the Acceptor-Connector pattern, we examine three canonical scenarios:

Scenario I: This scenario illustrates the collaboration between acceptor and service handler participants and is divided into three phases:

Next, the acceptor’s initialization method registers itself with a dispatcher, which will notify the acceptor when connection indication events arrive from peer connectors. After the acceptor’s initialization method returns, the application initiates the dispatcher’s event loop. This loop waits for connection requests and other types of indication events to arrive from peer connectors.

This hook method performs service handler-specific initialization, for example allocating locks, spawning threads, or establishing a session with a logging service. A service handler may elect to register itself with a dispatcher, which will notify the handler automatically when indication events containing data requests arrive for it.

A connector can initialize its service handler using two general strategies: synchronous and asynchronous. Synchronous service initialization is useful:

Asynchronous service initialization is useful in different situations, such as establishing connections over high latency links, using single-threaded applications, or initializing a large number of peers that can be connected in an arbitrary order.

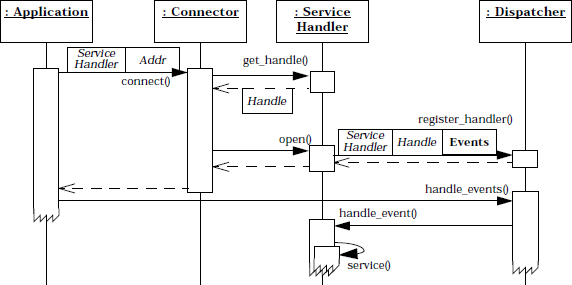

Scenario II: The collaborations among participants in the synchronous connector scenario can be divided into three phases:

In the synchronous scenario, the connector combines the connection initiation and service initialization phases into a single blocking operation. Only one connection is established per thread for every invocation of a connector’s connection initiation method.

Scenario III: The collaborations among participants in the asynchronous connector scenario are also divided into three phases:

Unlike Scenario II, however, the connection initiation request executes asynchronously. The application thread therefore does not block while waiting for the connection to complete. To receive a notification when a connection completes, the connector registers itself and the service handler’s transport handle with the dispatcher and returns control back to the application.
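At the Sockets level, asynchronous connection initiation usually means a non-blocking `connect()` that fails with `EINPROGRESS`, followed later by a writability notification and an `SO_ERROR` check. The POSIX sketch below separates those two steps the way the connector separates initiation from completion. The function names are hypothetical, and a single `poll()` call stands in for the dispatcher that would normally wait on many handles at once.

```cpp
#include <arpa/inet.h>
#include <cerrno>
#include <cstring>
#include <fcntl.h>
#include <netinet/in.h>
#include <poll.h>
#include <sys/socket.h>
#include <unistd.h>

// Initiation: start a non-blocking connect. Returns the handle (or -1) and
// sets <pending> when the connection is still in progress.
int initiate_connect(const sockaddr_in &peer, bool &pending) {
    pending = false;
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0) {
        close(fd);
        return -1;
    }
    if (connect(fd, reinterpret_cast<const sockaddr *>(&peer), sizeof peer) == 0)
        return fd;                          // completed immediately
    if (errno == EINPROGRESS) {
        pending = true;                     // a dispatcher would watch <fd>
        return fd;
    }
    close(fd);
    return -1;
}

// Completion: wait until the handle is writable, then ask the kernel whether
// the connection actually succeeded.
bool complete_connect(int fd, int timeout_ms) {
    pollfd pfd;
    pfd.fd = fd;
    pfd.events = POLLOUT;
    pfd.revents = 0;
    if (poll(&pfd, 1, timeout_ms) != 1) return false;
    int error = 0;
    socklen_t len = sizeof error;
    if (getsockopt(fd, SOL_SOCKET, SO_ERROR, &error, &len) < 0) return false;
    return error == 0;
}

// Helper for trying the sketch: a listener on an ephemeral loopback port.
int open_loopback_listener(sockaddr_in &addr) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    std::memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    socklen_t len = sizeof addr;
    if (bind(fd, reinterpret_cast<sockaddr *>(&addr), sizeof addr) < 0 ||
        listen(fd, 5) < 0 ||
        getsockname(fd, reinterpret_cast<sockaddr *>(&addr), &len) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Whether the kernel completes the connect at once or reports it as pending, the completion step works the same way, which is what lets a connector treat both cases uniformly.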

Note in the following figure how the connection initiation phase in Scenario III is separated temporally from the service handler initialization phase. This decoupling enables multiple connection initiations and completions to proceed concurrently, thereby maximizing the parallelism inherent in networks and hosts.

The participants in the Acceptor-Connector pattern can be decomposed into three layers:

Our coverage of the Acceptor-Connector implementation starts with the demultiplexing/dispatching component layer and progresses upwards through the connection management and application component layers.

A passive-mode transport endpoint is a factory that listens on an advertised transport address for connection requests to arrive. When such a request arrives, a passive-mode transport endpoint creates a connected transport endpoint and encapsulates this new endpoint with a transport handle. An application can use this transport handle to exchange data with its peer-connected transport endpoint. The transport mechanism components are often provided by the underlying operating system platform and may be accessed via wrapper facades (47), as shown in implementation activity 3 (311).

For our gateway example we implement the transport mechanism components using the Sockets API [Ste98]. The passive-mode transport endpoints and connected transport endpoints are implemented by passive-mode and data-mode sockets, respectively. Transport handles are implemented by socket handles. Transport addresses are implemented using IP host addresses and TCP port numbers.

To implement the dispatching mechanisms, follow the guidelines described in the Reactor (179) or Proactor (215) event demultiplexing patterns. These patterns handle synchronous and asynchronous event demultiplexing, respectively. A dispatcher can also be implemented as a separate thread or process using the Active Object pattern (369) or Leader/Followers (447) thread pools.

For our gateway example, we implement the dispatcher and event handler using components from the Reactor pattern (179). This enables efficient synchronous demultiplexing of multiple types of events from multiple sources within a single thread of control. The dispatcher, which we call ‘reactor’ in accordance with Reactor pattern terminology, uses a reactive model to demultiplex and dispatch concrete event handlers.

We use a reactor Singleton [GoF95] because only one instance of it is needed in the entire application process. The event handler class, which we call Event_Handler in our example, implements methods needed by the reactor to notify its service handlers, connectors, and acceptors when events they have registered for occur. To collaborate with the reactor, therefore, these components must subclass from class Event_Handler, as shown in implementation activity 2 (299).
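The sketch below shows one way such a dispatcher could look on a POSIX system, assuming `HANDLE` is a file descriptor and only read events matter. `Reactor::instance()` uses a function-local static as the Singleton, and `register_handler()` performs the double dispatch by asking the handler for its handle via `get_handle()`. It is a simplified stand-in, not the Reactor implementation from the Reactor pattern.

```cpp
#include <poll.h>
#include <unistd.h>
#include <map>
#include <vector>

using HANDLE = int;                 // assumption: POSIX file descriptors
enum Event_Type { READ_EVENT = 1 }; // assumption: read events only

class Event_Handler {
public:
    virtual ~Event_Handler() = default;
    virtual HANDLE get_handle() const = 0;          // used for double dispatch
    virtual void handle_event(HANDLE, Event_Type) = 0;
};

class Reactor {
public:
    // Singleton accessor: one reactor per process, created on first use.
    static Reactor *instance() {
        static Reactor reactor;
        return &reactor;
    }
    // Double dispatch: the reactor asks the handler which handle to watch.
    void register_handler(Event_Handler *eh) { handlers_[eh->get_handle()] = eh; }
    void remove_handler(Event_Handler *eh) { handlers_.erase(eh->get_handle()); }
    // Wait up to <timeout_ms>, then dispatch each readable handler once.
    int handle_events(int timeout_ms) {
        std::vector<pollfd> fds;
        for (const auto &entry : handlers_)
            fds.push_back(pollfd{entry.first, POLLIN, 0});
        int ready = poll(fds.data(), fds.size(), timeout_ms);
        if (ready <= 0) return ready;
        for (const pollfd &p : fds) {
            auto it = handlers_.find(p.fd);
            if ((p.revents & POLLIN) && it != handlers_.end())
                it->second->handle_event(p.fd, READ_EVENT);
        }
        return ready;
    }
private:
    Reactor() = default;
    std::map<HANDLE, Event_Handler *> handlers_;
};
```

Any class that derives from `Event_Handler` and returns a descriptor from `get_handle()`, for example a hypothetical handler reading from a pipe, can be registered; `handle_events()` then calls its `handle_event()` once the descriptor is readable.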

Applications must also configure a service handler component with a concrete IPC mechanism that encapsulates a transport handle and its corresponding transport endpoint. These IPC mechanisms are often implemented as wrapper facades (47). Concrete service handlers can use these IPC mechanisms to communicate with their remote peer service handlers.

In our gateway example we define a Service_Handler abstract base class that inherits from the Event_Handler class defined in implementation activity 1 of the Reactor pattern (179):

```cpp
template <class IPC_STREAM>
// <IPC_STREAM> is the type of concrete IPC data
// transfer mechanism.
class Service_Handler : public Event_Handler {
public:
  typedef typename IPC_STREAM::PEER_ADDR Addr;

  // Pure virtual method (defined by a subclass).
  virtual void open () = 0;

  // Access method used by <Acceptor> and <Connector>.
  IPC_STREAM &peer () { return ipc_stream_; }

  // Return the address we are connected to.
  Addr &remote_addr () {
    return ipc_stream_.remote_addr ();
  }

  // Set the <handle> used by this <Service_Handler>.
  void set_handle (HANDLE handle) {
    ipc_stream_.set_handle (handle);
  }

private:
  // Template ‘placeholder’ for a concrete IPC
  // mechanism wrapper facade, which encapsulates a
  // data-mode transport endpoint and transport handle.
  IPC_STREAM ipc_stream_;
};
```

This design allows a Reactor to dispatch the service handler’s event handling method it inherits from class Event_Handler. In addition, the Service_Handler class defines methods to access its IPC mechanism, which is configured into the class using parameterized types. Finally, this class includes an activation hook that acceptors and connectors can use to initialize a Service_Handler object once a connection is established. This pure virtual method, which we call open(), must be overridden by a concrete service handler to perform service-specific initialization.
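To show how the activation hook is meant to be used, here is a self-contained variant of `Service_Handler` instantiated with a mock IPC stream. `Mock_Stream` and `Status_Handler` are hypothetical, and `Event_Handler` is reduced to a single default hook so the example compiles on its own; in the gateway, `IPC_STREAM` would be a socket wrapper facade.

```cpp
#include <string>

// Stand-ins assumed for this sketch.
using HANDLE = int;
enum Event_Type { READ_EVENT = 1 };

class Event_Handler {
public:
    virtual ~Event_Handler() = default;
    virtual void handle_event(HANDLE, Event_Type) {}
};

// Mock IPC stream that records what is "sent" instead of using a socket.
struct Mock_Stream {
    using PEER_ADDR = std::string;
    HANDLE handle = -1;
    PEER_ADDR peer = "peer-host:10002";
    std::string sent;
    void set_handle(HANDLE h) { handle = h; }
    PEER_ADDR &remote_addr() { return peer; }
    void send(const std::string &data) { sent += data; }
};

template <class IPC_STREAM>
class Service_Handler : public Event_Handler {
public:
    typedef typename IPC_STREAM::PEER_ADDR Addr;
    virtual void open() = 0;                        // activation hook
    IPC_STREAM &peer() { return ipc_stream_; }
    Addr &remote_addr() { return ipc_stream_.remote_addr(); }
    void set_handle(HANDLE handle) { ipc_stream_.set_handle(handle); }
private:
    IPC_STREAM ipc_stream_;
};

// Hypothetical concrete handler: on activation it greets its peer.
class Status_Handler : public Service_Handler<Mock_Stream> {
public:
    bool activated = false;
    void open() override {
        activated = true;
        peer().send("HELLO " + remote_addr());
    }
};
```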

A generic acceptor is customized by the components in the application layer, as described in implementation activity 3 (311), to establish connections passively on behalf of a particular service handler using a designated IPC mechanism. To support this customization, an acceptor implementation can use two general strategies, polymorphism or parameterized types:

The behavior of the individual steps in this process is delegated to hook methods [Pree95] that are also declared in the acceptor’s interface. These hook methods can be overridden by concrete acceptors to perform application-specific strategies, for example to use a particular concrete IPC mechanism to establish connections passively. The concrete service handler created by a concrete acceptor can be manufactured via a factory method [GoF95] called as one of the steps in the acceptor’s accept() template method.

One advantage of using parameterized types is that they allow the IPC connection mechanisms and service handlers associated with an acceptor to be changed easily and efficiently. This flexibility simplifies porting an acceptor’s connection establishment code to platforms with different IPC mechanisms. It also allows the same connection establishment and service initialization code to be reused for different types of concrete service handlers.

Inheritance and parameterized types have the following trade-offs:

Different applications and application services have different needs that are best served by one strategy or the other.
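The difference between the two strategies can be sketched as follows. Both acceptors are deliberately stripped down to the creation step, and every class name is hypothetical: `Poly_Acceptor` selects its handler type through a virtual factory method overridden in a subclass, while `Template_Acceptor` receives it as a template argument.

```cpp
#include <memory>
#include <string>

// Hypothetical service handlers used by both variants.
struct Handler {
    virtual ~Handler() = default;
    virtual std::string name() const = 0;
};
struct Status_Handler : Handler {
    std::string name() const override { return "status"; }
};
struct Bulk_Handler : Handler {
    std::string name() const override { return "bulk"; }
};

// Polymorphism: the creation step is a virtual factory method that a
// concrete acceptor subclass overrides; the choice is bound at run time.
class Poly_Acceptor {
public:
    virtual ~Poly_Acceptor() = default;
    std::unique_ptr<Handler> accept() { return make_service_handler(); }
protected:
    virtual std::unique_ptr<Handler> make_service_handler() = 0;
};
class Status_Acceptor : public Poly_Acceptor {
protected:
    std::unique_ptr<Handler> make_service_handler() override {
        return std::make_unique<Status_Handler>();
    }
};

// Parameterized types: the handler type is a template argument, so the
// choice is bound at compile time and the call can be inlined.
template <class SERVICE_HANDLER>
class Template_Acceptor {
public:
    std::unique_ptr<SERVICE_HANDLER> accept() {
        return std::make_unique<SERVICE_HANDLER>();
    }
};
```

The polymorphic version can vary handlers at run time at the cost of a virtual call; the parameterized version avoids that call but fixes the choice when the code is compiled.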

In our gateway example we use parameterized types to configure the acceptor with its designated service handler and a concrete IPC mechanism that establishes connections passively. The acceptor inherits from Event_Handler to receive events from a reactor singleton, which plays the role of the dispatcher in this example:

```cpp
template <class SERVICE_HANDLER, class IPC_ACCEPTOR>
// The <SERVICE_HANDLER> is the type of concrete
// service handler created/accepted/activated when a
// connection request arrives.
// The <IPC_ACCEPTOR> provides the concrete IPC
// passive connection mechanism.
class Acceptor : public Event_Handler {
public:
  typedef typename IPC_ACCEPTOR::PEER_ADDR Addr;

  // Constructor initializes <local_addr> transport
  // endpoint and registers with the <Reactor>.
  Acceptor (const Addr &local_addr, Reactor *r);

  // Template method that creates, connects,
  // and activates <SERVICE_HANDLER>s.
  virtual void accept ();

protected:
  // Factory method hook for creation strategy.
  virtual SERVICE_HANDLER *make_service_handler ();

  // Hook method for connection strategy.
  virtual void accept_service_handler
    (SERVICE_HANDLER *);

  // Hook method for activation strategy.
  virtual void activate_service_handler
    (SERVICE_HANDLER *);

  // Hook method that returns the I/O <HANDLE>.
  virtual HANDLE get_handle () const;

  // Hook method invoked by <Reactor> when a
  // connection request arrives.
  virtual void handle_event (HANDLE, Event_Type);

private:
  // Template ‘placeholder’ for a concrete IPC
  // mechanism that establishes connections passively.
  IPC_ACCEPTOR peer_acceptor_;
};
```

The Acceptor template is parameterized by concrete types of IPC_ACCEPTOR and SERVICE_HANDLER. The IPC_ACCEPTOR is a placeholder for the concrete IPC mechanism used by an acceptor to passively establish connections initiated by peer connectors. The SERVICE_HANDLER is a placeholder for the concrete service handler that processes data exchanged with its peer connected service handler. Both of these concrete types are provided by the components in the application layer.

The behavior of the acceptor’s passive-mode transport endpoint is determined by the type of concrete IPC mechanism used to customize the generic acceptor. This IPC mechanism is often accessed via a wrapper facade (47), such as the ACE wrapper facades [Sch92] for Sockets [Ste98], TLI [Rago93], STREAM pipes [PR90], or Win32 Named Pipes [Ric97].

The acceptor’s initialization method also registers the acceptor with a dispatcher. This dispatcher then performs a ‘double dispatch’ [GoF95] back to the acceptor to obtain a handle to the passive-mode transport endpoint of its underlying concrete IPC mechanism. This handle allows the dispatcher to notify the acceptor when connection requests arrive from peer connectors.

The Acceptor from our example implements its constructor initialization method as follows:

```cpp
template <class SERVICE_HANDLER, class IPC_ACCEPTOR>
Acceptor<SERVICE_HANDLER, IPC_ACCEPTOR>::Acceptor
  (const Addr &local_addr, Reactor *reactor) {
  // Initialize the IPC_ACCEPTOR.
  peer_acceptor_.open (local_addr);

  // Register with <reactor>, which uses <get_handle>
  // to get handle via ‘double-dispatching.’
  reactor->register_handler (this, ACCEPT_MASK);
}
```

When a connection request arrives from a remote peer, the dispatcher automatically calls back to the acceptor’s accept() template method [GoF95]. This template method implements the acceptor’s strategies for creating a new concrete service handler, accepting a connection into it, and activating the handler. The details of the acceptor’s implementation are delegated to hook methods. These hook methods represent the set of operations available to perform customized service handler connection and initialization strategies.

If polymorphism is used to specify concrete acceptors, the hook methods are dispatched to their corresponding implementations within the concrete acceptor subclass. When using parameterized types, the hook methods invoke corresponding methods on the template parameters used to instantiate the generic acceptor. In both cases, concrete acceptors can modify the generic acceptor’s strategies transparently without changing its accept() method’s interface. This flexibility makes it possible to design concrete service handlers whose behavior can be decoupled from their passive connection and initialization.

The Acceptor class in our gateway example implements the following accept() method:

```cpp
template <class SERVICE_HANDLER, class IPC_ACCEPTOR>
void Acceptor<SERVICE_HANDLER, IPC_ACCEPTOR>::accept () {
  // The following methods comprise the core
  // strategies of the <accept> template method.

  // Factory method creates a new <SERVICE_HANDLER>.
  SERVICE_HANDLER *service_handler =
    make_service_handler ();

  // Hook method that accepts a connection passively.
  accept_service_handler (service_handler);

  // Hook method that activates the <SERVICE_HANDLER>
  // by invoking its <open> activation hook method.
  activate_service_handler (service_handler);
}
```

The make_service_handler() factory method [GoF95] is a hook used by the generic acceptor template method to create new concrete service handlers. Its connection acceptance strategy is defined by the accept_service_handler() hook method. By default this method delegates connection establishment to the accept() method of the IPC_ACCEPTOR, which defines a concrete passive IPC connection mechanism. The acceptor’s service handler activation strategy is defined by the activate_service_handler() method, which invokes the service handler’s open() activation hook. A concrete service handler can use this hook to initialize itself and to select its concurrency strategy.
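A self-contained sketch of the accept() template method and its three hook methods, instantiated with mock types so it runs without sockets or a reactor. `Mock_Acceptor`, `Mock_Stream`, and `Logging_Handler` are hypothetical. Two assumptions differ from the gateway code: `accept()` returns the new handler so the result can be inspected, and the acceptor keeps ownership of the handlers it creates.

```cpp
#include <memory>
#include <vector>

using HANDLE = int;  // assumption for this sketch

struct Mock_Stream {
    HANDLE handle = -1;
    void set_handle(HANDLE h) { handle = h; }
};

// Mock passive connection mechanism: "accepts" queued handles, not sockets.
struct Mock_Acceptor {
    std::vector<HANDLE> incoming;           // simulated connection requests
    void accept(Mock_Stream &stream) {      // precondition: incoming not empty
        stream.set_handle(incoming.front());
        incoming.erase(incoming.begin());
    }
};

struct Logging_Handler {
    Mock_Stream stream;
    bool opened = false;
    Mock_Stream &peer() { return stream; }
    void open() { opened = true; }          // activation hook
};

template <class SERVICE_HANDLER, class IPC_ACCEPTOR>
class Acceptor {
public:
    virtual ~Acceptor() = default;
    IPC_ACCEPTOR &peer_acceptor() { return peer_acceptor_; }
    // Template method: create, connect, then activate a service handler.
    SERVICE_HANDLER *accept() {
        SERVICE_HANDLER *sh = make_service_handler();
        accept_service_handler(sh);
        activate_service_handler(sh);
        return sh;
    }
protected:
    // Factory method hook; here the acceptor owns what it creates.
    virtual SERVICE_HANDLER *make_service_handler() {
        handlers_.push_back(std::make_unique<SERVICE_HANDLER>());
        return handlers_.back().get();
    }
    // Connection hook: delegate to the concrete IPC mechanism.
    virtual void accept_service_handler(SERVICE_HANDLER *sh) {
        peer_acceptor_.accept(sh->peer());
    }
    // Activation hook: invoke the handler's <open> method.
    virtual void activate_service_handler(SERVICE_HANDLER *sh) { sh->open(); }
private:
    IPC_ACCEPTOR peer_acceptor_;
    std::vector<std::unique_ptr<SERVICE_HANDLER>> handlers_;
};
```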

In our gateway example the dispatcher is a reactor that notifies the acceptor’s accept() method indirectly via the handle_event() method that the acceptor inherits from class Event_Handler. The handle_event() method is an adapter [GoF95] that transforms the general-purpose event handling interface of the reactor to notify the acceptor’s accept() method.

When an acceptor terminates, due to errors or due to its application process shutting down, the dispatcher notifies the acceptor to release any resources it acquired dynamically.

In our gateway, the reactor calls the acceptor’s handle_close() hook method, which closes its passive-mode socket.

A connector contains a map of concrete service handlers that manage the completion of pending asynchronous connections. Service handlers whose connections are initiated asynchronously are inserted into this map. This allows their dispatcher and connector to activate the handlers after the connections complete.

As with the generic acceptors described in implementation activity 2.2 (300), components in the application layer described in implementation activity 3 (311) customize generic connectors with particular concrete service handlers and IPC mechanisms. We must therefore select the strategy (polymorphism or parameterized types) for customizing concrete connectors. Implementation activity 2.2 (300) discusses both strategies and their trade-offs.

As with the acceptor’s accept() method, a connector’s connection initiation and completion methods are template methods [GoF95], which we call connect() and complete(). These methods implement the generic strategy for establishing connections actively and initializing service handlers. Specific steps in these strategies are delegated to hook methods [Pree95].

We define the following interface for the connector used to implement our gateway example. It uses C++ templates to configure the connector with a concrete service handler and the concrete IPC connection mechanism. It inherits from class Event_Handler to receive asynchronous completion event notifications from the reactor dispatcher:

```cpp
template <class SERVICE_HANDLER, class IPC_CONNECTOR>
// The <SERVICE_HANDLER> is the type of concrete
// service handler activated when a connection
// request completes. The <IPC_CONNECTOR> provides
// the concrete IPC active connection mechanism.
class Connector : public Event_Handler {
public:
  enum Connection_Mode {
    SYNC,  // Initiate connection synchronously.
    ASYNC  // Initiate connection asynchronously.
  };

  typedef typename IPC_CONNECTOR::PEER_ADDR Addr;

  // Initialization method that caches a <Reactor> to
  // use for asynchronous notification.
  Connector (Reactor *reactor): reactor_ (reactor) { }

  // Template method that actively connects a service to
  // a <remote_addr>.
  void connect (SERVICE_HANDLER *sh,
                const Addr &remote_addr,
                Connection_Mode mode);

protected:
  // Hook method for the active connection strategy.
  virtual void connect_service_handler
    (SERVICE_HANDLER *sh, const Addr &addr, Connection_Mode mode);

  // Register the <SERVICE_HANDLER> so that it can be
  // activated when the connection completes.
  int register_handler (SERVICE_HANDLER *sh,
                        Connection_Mode mode);

  // Hook method for the activation strategy.
  virtual void activate_service_handler
    (SERVICE_HANDLER *sh);

  // Template method that activates a <SERVICE_HANDLER>
  // whose non-blocking connection completed. This
  // method is called by <connect> in the synchronous
  // case or by <handle_event> in the asynchronous case.
  virtual void complete (HANDLE handle);

private:
  // Template ‘placeholder’ for a concrete IPC
  // mechanism that establishes connections actively.
  IPC_CONNECTOR connector_;

  // C++ standard library map that associates <HANDLE>s
  // with <SERVICE_HANDLER> *s for pending connections.
  typedef std::map<HANDLE, SERVICE_HANDLER*> Connection_Map;
  Connection_Map connection_map_;

  // <Reactor> used for asynchronous connection
  // completion event notifications.
  Reactor *reactor_;

  // Inherited from <Event_Handler> to allow the
  // <Reactor> to notify the <Connector> when events
  // complete asynchronously.
  virtual void handle_event (HANDLE, Event_Type);
};
```

The Connector template is parameterized by a concrete IPC_CONNECTOR and SERVICE_HANDLER. The IPC_CONNECTOR is a concrete IPC mechanism used by a connector to synchronously or asynchronously establish connections actively to remote acceptors. The SERVICE_HANDLER template argument defines one-half of a service that processes data exchanged with its connected peer service handler. Both concrete types are provided by components in the application layer.

When connect() establishes a connection asynchronously on behalf of a service handler, the connector inserts that handler into an internal container—in our example a C++ standard template library map [Aus98]—that keeps track of pending connections. When an asynchronously-initiated connection completes, its dispatcher notifies the connector. The connector uses the pending connection map to finish activating the service handler associated with the connection.
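The bookkeeping for pending connections amounts to inserting a handler under its handle when a connection is initiated asynchronously, then finding, erasing, and activating it when the dispatcher reports completion. The sketch below isolates that logic; `Handle`, `Pending_Handler`, and `Pending_Connections` are hypothetical stand-ins for the gateway's HANDLE, concrete service handler, and Connector.

```cpp
#include <cstddef>
#include <map>
#include <stdexcept>

// Hypothetical handle type and service handler.
using Handle = int;

struct Pending_Handler {
  Handle handle = -1;
  bool active = false;
  void open () { active = true; }   // Activation hook.
};

class Pending_Connections {
public:
  // Called when a non-blocking connect is still in progress.
  void add (Handle h, Pending_Handler *sh) { map_[h] = sh; }

  // Called when the dispatcher reports that <h> completed.
  Pending_Handler *complete (Handle h) {
    auto i = map_.find (h);
    if (i == map_.end ())
      throw std::runtime_error ("unknown handle");
    Pending_Handler *sh = i->second;
    map_.erase (i);      // The connection is no longer pending.
    sh->handle = h;      // Transfer the I/O handle to the handler.
    sh->open ();         // Activate the handler.
    return sh;
  }

  std::size_t size () const { return map_.size (); }

private:
  std::map<Handle, Pending_Handler *> map_;
};
```

The handle serves as the key that ties a completion notification back to the handler that initiated the connection, so completion handling needs only one map lookup per event. A `std::unordered_map` would work equally well if ordering is irrelevant.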

If connect() establishes a connection synchronously, the connector can call the concrete service handler’s activation hook directly, without calling complete(). This short-cut reduces unnecessary dynamic resource management and processing for synchronous connection establishment and service handler initialization.

The code fragment below shows the connect() method of our Connector. If a SYNC value of the Connection_Mode parameter is passed to this method, the concrete service handler will be activated after the connection completes synchronously. Conversely, connections can be initiated asynchronously by passing the Connection_Mode value ASYNC to the connect() method:

```cpp
template <class SERVICE_HANDLER, class IPC_CONNECTOR>
void Connector<SERVICE_HANDLER, IPC_CONNECTOR>::connect
  (SERVICE_HANDLER *service_handler,
   const Addr &addr, Connection_Mode mode) {
  // Hook method delegates connection initiation.
  connect_service_handler (service_handler, addr, mode);
}
```

The template method connect() delegates the initiation of a connection to its connect_service_handler() hook method. This method defines a default implementation of the connector’s connection strategy. This strategy uses the concrete IPC mechanism provided by the IPC_CONNECTOR template parameter to establish connections, either synchronously or asynchronously.

```cpp
template <class SERVICE_HANDLER, class IPC_CONNECTOR>
void Connector<SERVICE_HANDLER,
               IPC_CONNECTOR>::connect_service_handler
  (SERVICE_HANDLER *svc_handler,
   const Addr &addr,
   Connection_Mode mode) {
  try {
    // Concrete IPC_CONNECTOR establishes connection.
    connector_.connect (*svc_handler, addr, mode);

    // Activate if we connect synchronously.
    activate_service_handler (svc_handler);
  } catch (System_Ex &ex) {
    if (ex.status () == EWOULDBLOCK && mode == ASYNC) {
      // Connection did not complete immediately,
      // so register with <reactor_>, which
      // notifies <Connector> when the connection
      // completes.
      reactor_->register_handler (this, WRITE_MASK);

      // Store <SERVICE_HANDLER *> in map.
      connection_map_[connector_.get_handle ()] = svc_handler;
    }
    else
      throw; // Propagate any other connection failure.
  }
}
```

Note how connect() will activate the concrete service handler directly if the connection happens to complete synchronously. This may occur, for example, if the peer acceptor is co-located in the same host or process.
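On POSIX systems a non-blocking TCP `connect()` that cannot finish immediately fails with `EINPROGRESS`; completion is signalled by the socket becoming writable, after which `getsockopt(SO_ERROR)` reports the outcome. A wrapper facade playing the IPC_CONNECTOR role would therefore have to translate this condition into whatever status the Connector tests for. The sketch below uses raw POSIX sockets rather than the `SOCK_Connector` wrapper, and its helpers `connect_nonblocking()` and `make_listener()` are hypothetical. It connects to a listener on the loopback interface, where the connection may complete either at once or shortly afterwards.

```cpp
#include <arpa/inet.h>
#include <cerrno>
#include <fcntl.h>
#include <netinet/in.h>
#include <poll.h>
#include <sys/socket.h>
#include <unistd.h>

// Hypothetical helper: returns 0 once <fd> is connected (synchronously
// or asynchronously), otherwise an errno value describing the failure.
int connect_nonblocking (int fd, const sockaddr_in &addr, bool *completed_sync) {
  fcntl (fd, F_SETFL, fcntl (fd, F_GETFL, 0) | O_NONBLOCK);
  if (::connect (fd, reinterpret_cast<const sockaddr *> (&addr), sizeof addr) == 0) {
    *completed_sync = true;   // E.g., a co-located peer accepted at once.
    return 0;
  }
  if (errno != EINPROGRESS)
    return errno;             // Genuine failure.
  *completed_sync = false;
  // Wait for writability: this is the event a Reactor would dispatch.
  pollfd p = { fd, POLLOUT, 0 };
  if (poll (&p, 1, 5000) != 1)
    return ETIMEDOUT;
  int err = 0;
  socklen_t len = sizeof err;
  getsockopt (fd, SOL_SOCKET, SO_ERROR, &err, &len);
  return err;                 // 0 means the connection completed.
}

// Hypothetical helper: opens a listener on 127.0.0.1 with an
// ephemeral port and stores the bound address in <addr>.
int make_listener (sockaddr_in *addr) {
  int fd = socket (AF_INET, SOCK_STREAM, 0);
  *addr = sockaddr_in {};
  addr->sin_family = AF_INET;
  addr->sin_addr.s_addr = htonl (INADDR_LOOPBACK);
  addr->sin_port = 0;         // Let the kernel choose a port.
  bind (fd, reinterpret_cast<sockaddr *> (addr), sizeof *addr);
  listen (fd, 1);
  socklen_t len = sizeof *addr;
  getsockname (fd, reinterpret_cast<sockaddr *> (addr), &len);
  return fd;
}
```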

The complete() method activates the concrete service handler after its initiated connection completes. For connections initiated synchronously, complete() need not be called if the connect() method activates the service handler directly. For connections initiated asynchronously, however, complete() is called by the dispatcher when the connection completes. The complete() method examines the map of concrete service handlers to find the service handler whose connection just completed. It removes this service handler from the map and invokes its activation hook method. In addition, complete() unregisters the connector from the dispatcher to prevent it from trying to notify the connector accidentally.

Note that the service handler’s open() activation hook method is called regardless of whether connections are established synchronously or asynchronously, or even if they are connected actively or passively. This uniformity makes it possible to define concrete service handlers whose processing can be completely decoupled from the time and manner in which they are connected and initialized.

When a connection is initiated synchronously in our gateway, the concrete service handler associated with it is activated by the Connector’s connect_service_handler() method, rather than by its complete() method, as described above. For asynchronous connections, conversely, the reactor notifies the handle_event() method inherited by the Connector from the Event_Handler class.

We implement this method as an adapter [GoF95] that converts the reactor’s event handling interface to forward the call to the connector’s complete() method. The complete() method then invokes the activation hook of the concrete service handler whose asynchronously initiated connection completed successfully most recently.
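The adapter itself takes only a few lines. In the hypothetical sketch below, `Tiny_Reactor` is a drastically simplified dispatch table (there is no event demultiplexing; events are injected by calling `dispatch()` directly), and `Tiny_Connector::handle_event()` converts the dispatcher's callback into a call to a private `complete()`, ignoring any event other than writability.

```cpp
#include <map>
#include <vector>

using Handle = int;
enum Event_Type { READ_MASK = 1, WRITE_MASK = 2 };  // Hypothetical values.

// Hypothetical dispatcher-facing interface.
class Event_Handler {
public:
  virtual ~Event_Handler () = default;
  virtual void handle_event (Handle h, Event_Type et) = 0;
};

// Hypothetical connector: its completion logic lives in complete(),
// which knows nothing about the dispatcher's callback signature.
class Tiny_Connector : public Event_Handler {
public:
  std::vector<Handle> completed;  // Handles whose connections finished.

  // Adapter: translate the dispatcher's upcall into complete().
  void handle_event (Handle h, Event_Type et) override {
    if (et == WRITE_MASK)         // Writability signals connect completion.
      complete (h);
  }

private:
  void complete (Handle h) { completed.push_back (h); }
};

// Minimal stand-in for a reactor's table of registered handlers.
class Tiny_Reactor {
public:
  void register_handler (Handle h, Event_Handler *eh) { table_[h] = eh; }
  void remove_handler (Handle h) { table_.erase (h); }

  // Simulates the OS reporting event <et> on handle <h>.
  void dispatch (Handle h, Event_Type et) {
    auto i = table_.find (h);
    if (i != table_.end ())
      i->second->handle_event (h, et);
  }

private:
  std::map<Handle, Event_Handler *> table_;
};
```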

The complete() method shown below finds and removes the connected service handler from its internal map of pending connections and transfers the socket handle to the service handler.

```cpp
template <class SERVICE_HANDLER, class IPC_CONNECTOR>
void Connector<SERVICE_HANDLER,
               IPC_CONNECTOR>::complete (HANDLE handle) {
  // Find <SERVICE_HANDLER> associated with <handle> in
  // the map of pending connections.
  typename Connection_Map::iterator i =
    connection_map_.find (handle);

  if (i == connection_map_.end ())
    throw /* …some type of error… */;

  // We just want the value part of the <key, value>
  // pair in the map.
  SERVICE_HANDLER *svc_handler = i->second;

  // Transfer I/O handle to <SERVICE_HANDLER>.
  svc_handler->set_handle (handle);

  // Remove handle from <Reactor> and
  // from the pending connection map.
  reactor_->remove_handler (handle, WRITE_MASK);
  connection_map_.erase (i);

  // Connection is complete, so activate handler.
  activate_service_handler (svc_handler);
}
```

Note how the complete() method initializes the service handler by invoking the connector's activate_service_handler() hook method. This hook in turn delegates to the initialization strategy defined by the concrete service handler's open() activation hook method.

Concrete service handlers define the application’s services. When implementing an end-to-end service in a networked system that consists of multiple peer service handlers, the Half Object plus Protocol pattern [Mes95] can help structure the implementation of these service handlers. In particular, Half Object plus Protocol helps decompose the responsibilities of an end-to-end service into service handler interfaces and the protocol used to collaborate between them.

Concrete service handlers can also define a service’s concurrency strategy. For example, a service handler may inherit from the event handler and employ the Reactor pattern (179) to process data from peers in a single thread of control. Conversely, a service handler may use the Active Object (369) or Monitor Object (399) patterns to process incoming data in a different thread of control than the one used by the acceptor that connects it.
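Because the concurrency strategy lives inside the activation hook, the code that calls open() need not change when the strategy does. The hypothetical classes below contrast a reactive handler, whose open() processes data in the caller's thread, with a simplified thread-per-handler variant that hands the work to a `std::thread`. The latter is far simpler than a full Active Object or Monitor Object and is meant only to show where the decision is made.

```cpp
#include <atomic>
#include <thread>

// Hypothetical base: open() is the activation hook; svc() does the work.
class Handler_Base {
public:
  virtual ~Handler_Base () = default;
  virtual void open () = 0;
  std::atomic<int> processed {0};

protected:
  void svc () { ++processed; }  // Stand-in for processing peer data.
};

// Reactive strategy: work runs in the caller's (dispatcher's) thread.
class Reactive_Handler : public Handler_Base {
public:
  void open () override { svc (); }
};

// Thread-per-handler strategy: open() returns at once and the
// work continues in a separate thread.
class Threaded_Handler : public Handler_Base {
public:
  void open () override { worker_ = std::thread ([this] { svc (); }); }
  void wait () { if (worker_.joinable ()) worker_.join (); }
  ~Threaded_Handler () override { wait (); }

private:
  std::thread worker_;
};
```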

In the Example Resolved section, we illustrate how several different concurrency strategies can be configured flexibly into concrete service handlers for our gateway example without affecting the structure or behavior of the Acceptor-Connector pattern.

Concrete connectors and concrete acceptors are factories that create concrete service handlers. They generally derive from their corresponding generic classes and implement these in an application-specific manner, potentially overriding the various hook methods called by the accept() and connect() template methods [GoF95].

Another way to specify a concrete acceptor is to parameterize the generic acceptor with a concrete service handler and concrete IPC passive connection mechanism, as discussed in previous implementation activities and as outlined in the Example Resolved section. Similarly, we can specify concrete connectors by parameterizing the generic connector with a concrete service handler and concrete IPC active connection mechanism.

Components in the application layer can also provide custom IPC mechanisms for configuring concrete service handlers, concrete connectors, and concrete acceptors. IPC mechanisms can be encapsulated in separate classes according to the Wrapper Facade pattern (47). These wrapper facades create and use the transport endpoints and transport handles that exchange data with connected peer service handlers transparently.

The use of the Wrapper Facade pattern simplifies programming, enhances reuse, and enables wholesale replacement of concrete IPC mechanisms via generic programming techniques. For example, the SOCK_Connector, SOCK_Acceptor, and SOCK_Stream classes used in the Example Resolved section are provided by the ACE C++ Socket wrapper facade library [Sch92].
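A minimal wrapper facade over a connected stream socket might look like the following. `Stream_Facade` is hypothetical and is not the ACE `SOCK_Stream` API; it only illustrates encapsulating a raw descriptor behind a type-safe interface that closes the descriptor automatically and hides partial-write handling.

```cpp
#include <cerrno>
#include <cstddef>
#include <string>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

// Hypothetical wrapper facade: owns a connected descriptor and
// closes it on destruction. Copying is disabled to avoid double close.
class Stream_Facade {
public:
  explicit Stream_Facade (int fd = -1) : fd_ (fd) {}
  ~Stream_Facade () { if (fd_ >= 0) ::close (fd_); }
  Stream_Facade (const Stream_Facade &) = delete;
  Stream_Facade &operator= (const Stream_Facade &) = delete;

  int get_handle () const { return fd_; }
  void set_handle (int fd) { if (fd_ >= 0) ::close (fd_); fd_ = fd; }

  // Sends the whole buffer, retrying after partial writes.
  bool send_n (const std::string &data) {
    std::size_t sent = 0;
    while (sent < data.size ()) {
      ssize_t n = ::send (fd_, data.data () + sent, data.size () - sent, 0);
      if (n < 0 && errno == EINTR) continue;  // Interrupted: retry.
      if (n <= 0) return false;
      sent += static_cast<std::size_t> (n);
    }
    return true;
  }

  // Receives up to <max> bytes; returns an empty string on EOF or error.
  std::string recv (std::size_t max = 1024) {
    std::string buf (max, '\0');
    ssize_t n = ::recv (fd_, &buf[0], max, 0);
    buf.resize (n > 0 ? static_cast<std::size_t> (n) : 0);
    return buf;
  }

private:
  int fd_;
};
```

Because connectors, acceptors, and service handlers are parameterized by such a class, a different transport could be substituted by supplying another class with the same member functions.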

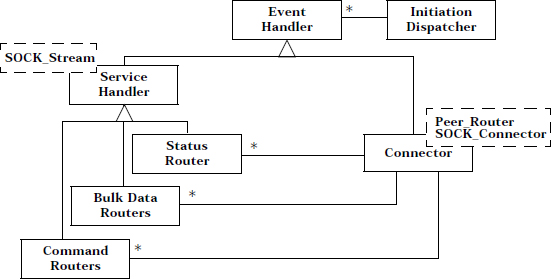

Peer host and gateway components in our satellite constellation management example use the Acceptor-Connector pattern to simplify their connection establishment and service initialization tasks:

By using the Acceptor-Connector pattern, we can also reverse or combine these roles with minimal impact on the service handlers that implement the peer hosts and gateway services.

Implement the peer host application. Each peer host contains Status_Handler, Bulk_Data_Handler, and Command_Handler components, which are concrete service handlers that process routing messages exchanged with a gateway.

Each of these concrete service handlers inherits from the Service_Handler class defined in implementation activity 2.4 (306), enabling them to be initialized passively by an acceptor. For each type of concrete service handler, there is a corresponding concrete acceptor that creates, connects, and initializes instances of the concrete service handler.

To demonstrate the flexibility of the Acceptor-Connector pattern, each concrete service handler’s open() hook method in our example implements a different concurrency strategy. For example, when a Status_Handler is activated it runs in a separate thread, a Bulk_Data_Handler runs as a separate process, and a Command_Handler runs in the same thread as the Reactor that demultiplexes connection requests to concrete acceptors. Note that changing these concurrency strategies does not affect the implementation of the Acceptor class.
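The process-per-handler strategy used for the Bulk_Data_Handler can be sketched with POSIX `fork()`. `Process_Handler` and `wait_for()` are hypothetical, and the child simply returns a fixed exit status in place of real bulk data processing.

```cpp
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical process-per-handler activation: open() forks, the
// child runs svc() and exits, and the parent (which runs the
// Reactor) returns immediately.
class Process_Handler {
public:
  virtual ~Process_Handler () = default;

  // Returns the child's pid in the parent, or -1 if fork() failed.
  pid_t open () {
    pid_t pid = fork ();
    if (pid == 0)
      _exit (svc ());   // Child: do the work, then exit.
    return pid;         // Parent: keep dispatching events.
  }

protected:
  // Stand-in for bulk data processing; its return value becomes
  // the child's exit status.
  virtual int svc () { return 7; }
};

// Reaps child <pid> and returns its exit status, or -1 on error.
int wait_for (pid_t pid) {
  int status = 0;
  if (waitpid (pid, &status, 0) != pid || !WIFEXITED (status))
    return -1;
  return WEXITSTATUS (status);
}
```

The child calls `_exit()` rather than `exit()` so that it does not run the parent's atexit handlers or flush stdio buffers it inherited.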

We start by defining a type definition called Peer_Handler:

```cpp
typedef Service_Handler <SOCK_Stream> Peer_Handler;
```

This type definition instantiates the Service_Handler generic template class with a SOCK_Stream wrapper facade (47). This wrapper facade defines a concrete IPC mechanism for transmitting data between connected transport endpoints using TCP. The Peer_Handler type definition is the basis for all the subsequent concrete service handlers used in our example. For example, the following Status_Handler class inherits from Peer_Handler and processes status data, such as telemetry streams, exchanged with a gateway:

    class Status_Handler : public Peer_Handler {
    public:
      // Performs handler activation.
      virtual void open () {
        // Make this handler run in separate thread (note
        // that <Thread::spawn> requires a pointer to
        // a static method as the thread entry point).
        Thread_Manager::instance ()->spawn
          (&Status_Handler::svc_run, this);
      }

      // Static entry point into thread. This method can