Chapter 2. The Glass Ecosystem: What It Is and How It Is Different

When Glass was first unveiled in 2012, the developer community was both put on notice and challenged: Google had produced a radically different kind of application client. But Glass is more than a set of hardware specifications or the apps that ship with it by default; it is a new way of interacting with your computer and with the real world.

Our main goal isn’t just to give you the information you need to produce Glassware; we want you to produce GREAT Glassware. Your success is our success. So let’s hit the ground running and set you off on your journey to thoroughly understanding the Glass ecosystem and becoming a Glassware-producing superstar.

What You See and What You Get

As Figure 2-1 shows, the Glass design has matured considerably in about two years. The headset looks like a minimalist pair of glasses bulked out with some additional hardware. On the one hand, the additions are sleek and almost futuristic in style. On the other hand, they are clearly noticeable and perhaps a bit bulky. Some are made even more conspicuous by their color.

But contained in this device is a small modular bundle of technology:

- A battery module

- A micro-USB port that serves as power source, headphone jack, and data port

- A bone conduction transducer speaker

- A trackpad that detects forward, backward, and downward swipes, as well as one-, two-, and three-finger taps and long presses

- Assorted sensors that can detect head tilts, head turns, and eyelid movement

- A WiFi radio and a Bluetooth module for communicating either through a phone or directly with the Internet

- A fixed-focus camera that has roughly the same field of vision as your eyes

- A microphone tuned to pick up the wearer’s voice

- And, of course, the characteristic display that gives Glass its name

We detail the full technical specs for Glass later in this chapter.

Glass Is a Platform, Not a Product

In learning how to Think for Glass, it’s important to realize that the product known to the world as “Google Glass” isn’t just a device you wear on your head. It’s a full ecosystem: a synergy of hardware, software, applications, APIs, and a backend environment. It’s also an opportunity to create new peripherals and accessories, such as custom frames, sticker designs, display sleeves and covers, and other modular add-ons.

Like most of Google’s product line, Glass is a platform, not a product (Figure 2-2). The hardware that makes up Google Glass itself is an achievement of industrial design. The product has conquered the engineering challenges of size, weight, and sturdiness. It also impressively addresses obstacles like power consumption, networking requirements, and heat dissipation. On a functional level, it aims to deliver a single type of experience: microinteractions that let the wearer use technology without being taken out of the moment.

The hardware, the system software, and the application environments balance user needs, aesthetics, and design constraints, and they are extremely elegant in their approach. Glass embodies a huge shift for Google from a design standpoint. The company became world-renowned for the intentionally sparse, minimal user interfaces of its web products, built to capitalize on speed. For Glass, it has invested heavily in assembling a team of designers, materials experts, human–computer interaction savants, and scholars of personal computing. Their goal was a design concept that’s pleasing to look at, comfortable to wear, inherently self-promotional (“Hey, check it out! That guy’s got Google Glass!”), and functional. The slender shape of Glass, wrapped functionally around your head, is sleek and modern, not clunky and clichéd.

That’s a fairly tall order for any device, let alone one that’s worn on your face and weighs less than a pound.

The components are packaged into a thin, cosmetically appealing form factor that still handles the connectivity and communications demands of an Internet-aware device. Glass was built with modularity in mind: the arm containing the electrical components can be removed from the frame for aftermarket modifications like fitting it to custom frames.

The Glass team was beyond obsessed with getting the form factor right. Isabelle Olsson, lead industrial designer for Google Glass, said at Google I/O 2013, “If it is not light, you’re not going to want to wear it for more than ten minutes,” adding, “We care about every gram.”

The headset’s space-age design, with its extremely pliable titanium-trimmed frame, matte finish, and prism display, keeps Glass comfortably balanced on both axes. The headset doesn’t slide too far forward on your face, because the battery seated behind your ear counterbalances it. Nor does it sag awkwardly to one side, despite the impression that all the gadgetry is lopsided on one arm.

On the software side, the Android-based firmware that runs Glass features an extremely responsive UI built on the timeline concept. The UI is easy to master thanks to a slick multi-input control system, is highly performant, and handles multimedia as well as other mobile platforms do. It uses a simple card-based metaphor (increasingly used across many of Google’s products, and one that we’ll go into in great detail later). You control it with simple head gestures like nods and with voice commands. This lets most common tasks run with minimal CPU usage, and thus minimal drain on the battery.

The Glass Application Model

Glassware, the programs running on Glass, comes in two distinct flavors: those built using the Google Mirror API and those written with the Glass Development Kit. Each has its own approach to delivering a consistent experience. Both frameworks are thoroughly examined in the third part of the book, Develop, but let’s take a brief look at them here.

Mirror API

The Mirror API is a RESTful interface for server-side programs. All computation is done in the cloud, and the results are then sent to your device, inserted into your timeline, and rendered as cards. All you get is the finished product, thoroughly cooked and ready to eat. These services resemble traditional client/server web apps.

Because Mirror API programs run purely in the cloud, the processor on Glass is left free to work on other things and not worry about calculations, string manipulation, on-the-fly interpolation, working with binary data locally, or client-side presentation mechanics. The payload is pure HTML and CSS style rules (but no JavaScript, at least not at the moment), both lightweight and simple to handle. Multimedia can also be included with minimal additional overhead, and video is streamed on-demand, not downloaded.
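To make the “thoroughly cooked” payload concrete, here is a minimal sketch of the JSON a server would POST to the Mirror API’s timeline collection to put an HTML card on the wearer’s timeline. It assumes the `https://www.googleapis.com/mirror/v1/timeline` endpoint and the `html`, `notification`, and `menuItems` fields as documented for the Explorer Edition. It also assumes you already hold an OAuth 2.0 access token; the token and card text are placeholders. The sketch only builds the request and never sends it.

```python
import json
import urllib.request

# Mirror API timeline collection, as documented for the Explorer Edition.
TIMELINE_URL = "https://www.googleapis.com/mirror/v1/timeline"


def build_timeline_insert(access_token: str, html: str) -> urllib.request.Request:
    """Build (but do not send) a request that inserts one HTML card."""
    body = {
        "html": html,                          # rendered on Glass as a card
        "notification": {"level": "DEFAULT"},  # chime so the wearer notices it
        "menuItems": [{"action": "DELETE"}],   # a built-in menu action
    }
    return urllib.request.Request(
        TIMELINE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder token and card content, for illustration only.
card_html = "<article><section><p>Build finished: all tests passed</p></section></article>"
req = build_timeline_insert("HYPOTHETICAL_TOKEN", card_html)
print(req.get_method(), req.full_url)
```

Note that the card is plain HTML with no script. All the work of deciding what to say happened on the server before the request was built.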

The Mirror API framework is based on a publish/subscribe model that enables push notifications and avoids repetitive polling between clients and servers. Compared with the traditional model of installed programs, this keeps downstream payloads to a minimum. To receive updates and interact with the Glassware, users authorize it through their Google accounts, without needing to install executables on the device. This eliminates a lot of unnecessary network round-trips, which, again, reduces radio activity (via WiFi or Bluetooth tethering to a mobile device), bandwidth, and battery use.
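The publish/subscribe flow can be sketched in two halves. The first is the subscription your server registers once. The second is the handler that runs each time the Mirror API pushes a notification to your callback URL. The sketch assumes the Explorer-era field names (`collection`, `operation`, `userToken`, `verifyToken`, `callbackUrl`, `userActions`). The token values and the example push are made up, and the handler’s filtering rules are our own choice.

```python
import json

# The subscription body below would be POSTed to:
# https://www.googleapis.com/mirror/v1/subscriptions


def subscription_body(user_token: str, verify_token: str, callback_url: str) -> dict:
    """Ask Mirror to push timeline changes to our server instead of us polling."""
    return {
        "collection": "timeline",
        "operation": ["INSERT", "UPDATE"],  # which changes we care about
        "userToken": user_token,            # tells us which user a push refers to
        "verifyToken": verify_token,        # echoed back so we can check the sender
        "callbackUrl": callback_url,        # a public HTTPS endpoint on our server
    }


def handle_notification(raw_body: str, expected_verify_token: str) -> list:
    """Return the user action types in a pushed notification, or [] if rejected."""
    note = json.loads(raw_body)
    if note.get("verifyToken") != expected_verify_token:
        return []  # not from a subscription we created
    if note.get("collection") != "timeline":
        return []
    return [action["type"] for action in note.get("userActions", [])]


# A made-up push, shaped like one the Mirror API would send.
example_push = json.dumps({
    "collection": "timeline",
    "itemId": "item-123",
    "operation": "UPDATE",
    "userToken": "user-42",
    "verifyToken": "s3cret",
    "userActions": [{"type": "SHARE"}],
})
print(handle_notification(example_push, "s3cret"))
```

The server does nothing until something happens on the wearer’s side. That is exactly what keeps radio activity, and therefore battery use, low.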

This matters a great deal for the performance of the system. There’s no need to manage a large internal store for application components like embedded databases, configuration settings, and data caches. It also makes development of Mirror API projects incredibly rapid: you can get a complex project up and running in a couple of hours. This speaks directly to the flexibility of the Glass ecosystem.

Mirror services do have two big requirements. First, being cloud-based, they obviously need connectivity. Second, services need to be registered through an OAuth provider, which typically means doing so in a browser. There’s no executable file you can just send to someone.
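The browser step is simply the user opening an authorization URL and approving the Glassware. Here is a minimal sketch of building that URL. It assumes Google’s OAuth 2.0 authorization endpoint of the Explorer era (`https://accounts.google.com/o/oauth2/auth`) and the `glass.timeline` scope. The client ID and redirect URI are hypothetical. Exchanging the returned code for tokens is not shown.

```python
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/auth"
GLASS_TIMELINE_SCOPE = "https://www.googleapis.com/auth/glass.timeline"


def authorization_url(client_id: str, redirect_uri: str) -> str:
    """URL the user opens in a browser to grant the Glassware access."""
    params = {
        "response_type": "code",       # authorization-code flow
        "client_id": client_id,
        "redirect_uri": redirect_uri,  # where Google sends the code afterwards
        "scope": GLASS_TIMELINE_SCOPE,
        "access_type": "offline",      # ask for a refresh token for later pushes
    }
    return AUTH_ENDPOINT + "?" + urlencode(params)


# Hypothetical client ID and redirect URI, for illustration only.
url = authorization_url("HYPOTHETICAL_CLIENT_ID", "https://example.com/oauth2callback")
print(url)
```

Requesting offline access matters for Mirror services. The server keeps inserting cards long after the user has closed the browser tab, so it needs a refresh token rather than a short-lived access token alone.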

You can write Glassware using the GDK instead if any of the following apply:

- Network access should be optional
- You need access to the Glass sensors
- The default UI of Glass needs to be extended
- You’d like a more flexible means of distributing your programs

Glass Development Kit (GDK)

For those who want more granular control of their application and the environment in which it runs, there is the Glass Development Kit, an extension to the Android SDK for Glass. GDK Glassware consists of programs written in Java and installed on your device. The GDK extends the standard Android libraries with Glass-specific features. The result is an app that can be distributed easily and installed directly on the device.

GDK Glassware goes further than the Mirror API functionally: it can access the hardware directly and run offline. Applications built with the GDK enforce the same lightweight microinteraction model via the timeline and cards UX, but that model can be expanded upon or even abandoned completely. You can program immersive native experiences on Glass, creating UIs that stay resident in the prism and are meant to be used over longer sessions.

Examples of immersions are games and applications using the camera.

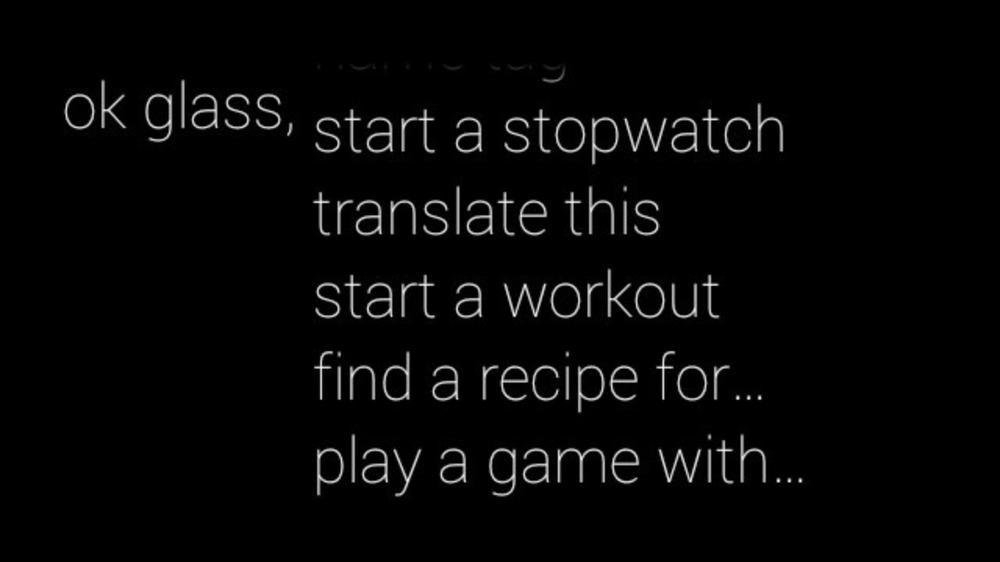

Actions, Not Apps

This approach to the application model leads to a different way of thinking about apps on Glass. As we will see, the most natural way to use Glass is to think about what you want to do, not which app you want to run to do it. In fact, we would be so bold as to say that if your mindset is about apps and not about what you want to do with Glass, YOU’RE THINKING ABOUT IT WRONG. Glass doesn’t have a launcher the way a phone or tablet does; instead, the home screen invites you to issue instructions to it. The results of Glass commands are displayed as part of the timeline, mixed in with other notifications, updates, and command results. Although each of these cards is generated by a program, we don’t normally need to think about which program generated it.

Similarly, Glass changes the way that we as users interact with wearable computing devices through the various input mechanisms it supports—swiping/tapping on the trackpad, using voice commands, using head movement gestures, and even winking to instruct Glass to take a picture. All of these manipulate what is shown to us, but none of them appear to “launch” an application directly. Instead, Glass represents them as verbs—actions that are doing something, rather than objects that do it.

In the future, we may even see other physical actions associated with activities on Glass. There has been a lot of work on extending the ways to trigger actions on the system. Google was granted patents covering hand motions made out in space. The company hinted that these might ultimately let users endorse a post on social networks (like, +1, heart, star, thumbs-up, mood, etc.) by making the popular heart hand gesture. The company Remotte, meanwhile, aimed to make interacting with Glass even more seamless and launched a Kickstarter campaign to sell a remote control that communicates with Glass over Bluetooth. With it, users can keep their hands in their pockets while out for a walk and control the head-mounted display (HMD) with simple button clicks, never needing to reach up and fiddle with the trackpad.

A good point of reference for the “actions, not apps” idea is the set of voice commands available to launch Glassware. These aren’t arbitrary trigger phrases picked because they sound neat, roll off the tongue nicely, or are terse enough to work in a crowded room. They use the active voice with strong verbs and truly capture the essence of interacting with the wearable software. They state simply and unambiguously the single action you need to take to get an app to carry out its main purpose. And that’s what you need to target.
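Mirror API Glassware plugs into this verb-first model by registering a contact that accepts a built-in voice command, rather than by installing a launchable app. Here is a minimal sketch. It assumes the Explorer-era contact fields `id`, `displayName`, and `acceptCommands`, and the two documented command types `TAKE_A_NOTE` and `POST_AN_UPDATE`. The contact ID and name are hypothetical, and other fields such as `imageUrls` are omitted.

```python
import json

# Built-in voice commands a Mirror contact could accept (Explorer Edition).
SUPPORTED_COMMANDS = {"TAKE_A_NOTE", "POST_AN_UPDATE"}


def voice_command_contact(contact_id: str, name: str, command: str) -> dict:
    """A contact body that lists the Glassware under a built-in voice command."""
    if command not in SUPPORTED_COMMANDS:
        raise ValueError(f"unsupported command: {command}")
    return {
        "id": contact_id,
        "displayName": name,            # what the wearer picks after the command
        "acceptCommands": [{"type": command}],
    }


# Hypothetical Glassware, for illustration only.
contact = voice_command_contact("hypothetical-notes", "Hypothetical Notes", "TAKE_A_NOTE")
print(json.dumps(contact))
```

With such a contact in place, the wearer says the verb (“take a note”) and only then chooses where the note goes. This is the “actions, not apps” ordering in miniature.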

The Science Behind the Projection

The initial Project Glass: One day… concept video and the follow-up video, How it Feels (through Google Glass), made their way around the Internet and into the public consciousness. As they did, the device’s display became one of the biggest curiosities pundits sought to resolve. The fervor intensified as shots of the early prototypes from various angles went viral. People wanted insight into the resolution, focal length, clarity, and HD capacity of that little sliver of clear glass. They also wanted to know whether what the wearer was seeing could be shared on other displays via mirroring. It wasn’t long before the projection system was detailed, revealing some very impressive engineering that delivers remarkable quality in an incredibly small space. A technical teardown of the hardware by Catwig.com, published in June 2013, notes that “the pixels are one-eighth the physical width of those on the iPhone 5’s retinal display.”

People pondered the possibilities of the system and what radical new technology might be at play. Would text, images, graphics, and video in Glass float as an overlay on some sort of flat monitor, with content sitting out in space for the user to gaze at? Or would data be projected directly onto the wearer’s eyeball? For a while, this was one of the most hotly contested topics in Glass forums, in media reports, in social posts, and around water coolers.

The answer is: it’s both!

Glass displays information by using its signature prism display in concert with a sophisticated projector system. The projection unit is mounted at the front of the arm just behind the embedded camera. It casts its image into the display brick, which houses a second piece of glass set at a 45-degree angle, creating a prism. What you may not have immediately noticed is that the Glass logo, which is also its social avatar, features a slanted “A” in the product name. The slant is a clever mnemonic reminding the wearer how Glass achieves its overall experience (admittedly, it took both of us months to realize this). Pretty sneaky, huh?

The projection unit actually shares space with other key hardware in the area of the device officially known as the optics pod. This module houses three very powerful sensors on Glass: the accelerometer, the gyroscope, and the magnetometer.

The outside of the brick (the side perpendicular to your face) is covered with two-way reflective material so as not to let ambient light in or out. Projected content bounces off the angled piece and passes down through the flat side of the prism facing the user’s eye onto the wearer’s retina. The result gives the impression that the information is projected out in space in front of the wearer. It is not unlike the heads-up displays (HUDs) used in some automobiles.

Because the HMD is strapped to your head, the display follows whatever direction you’re facing, consistently and without lag. It’s always there.

The timeline UI and the cards within it appear as semitransparent images, letting the user see the content set against the real world as a background stage. The projector can also be adjusted manually: the section containing the unit sits on a hinge and can be tilted for better visibility. Glass presents content to you at a perceived scale that’s neither too big nor too small. To achieve a natural and comfortable sense of depth, Glass tweaks the appearance of projected material out in space by way of a clever optical illusion (see the sidebar Calling upon an Old Hollywood Trick). It does all this while sitting mere centimeters away at the periphery of your vision. Many first-generation Glass users have said that looking at content in Glass is akin to holding a smartphone screen at arm’s length.

Pro tip: to really appreciate the difference between the display as you perceive it and its actual size, wake up Glass so that some content is showing, and then look at yourself in a mirror. While the display appears relatively large and legible to you, its actual dimensions are minuscule.

That’s the effect Glass achieves, and the magic behind how it gets it done.

The prism also does a couple of helpful things for its wearer automatically, depending on the amount of available light. It is made of a photochromic material that tints in response to an increase in ultraviolet light. As a result, direct sunlight doesn’t wash out information or become magnified and blind you while you wear it. On the opposite end of the spectrum (pun certainly intended), you may have trouble reading projected content indoors in artificially lit rooms. If so, try pointing Glass for a moment toward a lamp, a TV, or some other brightly lit object. One of the device’s onboard sensors (which we cover in Chapter 13) measures ambient light and adjusts the display’s brightness accordingly.

Reinventing Human–Computer Interaction

Another subject that got a lot of attention before Glass rolled out was exactly how much independence it would have as a communications device. People constantly debated the autonomy of the wearable computer. One camp assumed it would merely be a peripheral or accessory, linked to a phone in order to communicate and effectively dead in the water without it; others surmised it would be a first-class computer, complete with its own cellular data connection.

Thankfully, Glass is a hybrid of sorts: a self-contained device with its own WiFi radio that doesn’t strictly require an accompanying smartphone. Tethering it to one, however, enhances it with network connectivity and with telecommunications services like text messaging and voice calls. This mercifully relieves you of the need for multiple data plans with a carrier, reducing your total cost of ownership. Administering your Glass profile requires only the occasional peek at the helpful MyGlass mobile/web app to manage Glassware subscriptions and contacts.

The main theme, and a prime design objective, is to require as little user input as possible to navigate the system. It’s a hands-free, ears-free, and wires-free means of staying connected and interacting with others online.

Glass can also read text content aloud to you. When tethered to a smartphone, it additionally becomes a telephony device, supporting text messaging and voice calls, as well as chat sessions and multiuser calls through Hangouts.

As long as Glass has a network connection, you’re plugged in (metaphorically). You’re ready to use core services like search, Hangouts, and directions, including real-time turn-by-turn navigation, as well as the growing number of third-party Glassware applications the community is building. You could get by with just WiFi and the occasional visit to a desktop web browser to manage your profile.

And you may go offline, whether because you’re out of cellular range, your phone’s battery dies, or your WiFi time at the library expires. Even then, Glass is still perfectly capable of taking pictures, shooting video, and storing files locally, which can be synced to the cloud when you regain connectivity.

In case you were wondering about the dangers of radio waves emitted by a computer that sits right next to your brain for potentially extended periods: Glass communicates over short-range radio (Bluetooth tethering or WiFi). According to Google, the radiation it gives off is therefore “significantly less than a cell phone.” Additionally, Glass is designed to direct heat generated by the processor away from the user. Glass may heat up during extended use or while running processor-intensive applications. When it does, you can feel the heat on the outside of the touchpad, away from your head, but you won’t feel it against your skull.

What Glass brings to the table is a rapid-response mechanism that lets you stay in the moment. That means not requiring you to look down, negotiate input controls, type frantically, or navigate menus and complex user interfaces to do what you want. Its hands-free, ears-free, wires-free design frees you to interact with objects, places, and people as you normally would. You don’t have to fidget with a device or manipulate a screen to edit photos, see where people are in relation to you, join a Hangout, or send a message.

Glass doesn’t accomplish anything you can’t already do with existing technology; it just does it with far less effort. What’s critically important to realize is that Glass was designed for people to interact with it very differently than with other types of computing devices. “But,” you’re probably saying, “isn’t this what I’m already doing with my smartphone?” Absolutely. The product doesn’t intend to take any of the shine away from its more tenured cousins, the smartphones, laptops, and tablets you’ve been using for years. Rather, it wants to be a valuable, contributing member of the family and take that interaction to the next level.

This isn’t just wearable technology; it’s personal technology. Really let that statement sink in for a few seconds, because it’s key. Understand that this isn’t “personal” in the sense of “personal computer.” In some ways it is, but it’s also so much more. This is a new dimension of connectedness with data, and a new form of intimacy, both between you and your social connections and between you and your device.

How Glass Gets Audio into Your Ear

Bone conduction transducers aren’t exactly a new concept, but their use in Glass is going to be most people’s maiden voyage with the technology at the consumer level. Devices built on the technology have been available as hearing aids for the hearing impaired or the elderly.

Ears-free audio in Glass is achieved by converting audio signals into vibrations. A speaker inside the arm of the frame vibrates against the user’s skull just behind the ear, carrying the sound to the inner ear rather than broadcasting sound waves into the ear canal toward the eardrum. The audio quality and clarity are remarkably good, comparable to a good pair of headphones.

But for the more traditional user, Google makes a set of micro-USB earbuds designed specially for Glass. You get the best of both worlds!

Battery Life

Glass isn’t meant to be an always-on device. Some services drain the battery far more dramatically than letting the device go to sleep after a few seconds of inactivity:

- Camera-centric services, such as recording video for long periods
- Applications that keep the Glass projector on and tax the processor, like games and turn-by-turn navigation

This shouldn’t come as a surprise: most mobile devices that make heavy use of a camera, display, or data communication don’t offer tremendous usage time. (The Amazon Kindle is one of the few exceptions.) Glass isn’t any different in that regard.

Building apps that don’t kill the user’s battery is a major principle of program design for any mobile developer. It’s especially true for Glass given the low-intrusion goal, which we’ll cover thoroughly in Chapter 13 when we talk about the GDK. For now, just know that it is possible, and highly encouraged, to design your native apps so that Glass can gracefully go to sleep on its own while your program continues to run in the background.

Using the System

Information in Glass is presented through a simple concept but controlled in a variety of ways. Everything in Glass revolves around the timeline. Your home screen, the simple UI element with the current time and “OK Glass” underneath it, serves as your timeline’s anchor and center point, as indicated in Figure 2-3. Windows are the main visual elements for controlling a graphical operating system. In much the same way, a user’s timeline is the interface through which she receives information, gets notified of new content, interacts with subscribed services, sends the system user input, and changes her customization settings. It’s a snapshot of your activity and notifications.

A timeline consists of cards: units of information that support text and multimedia. They span everything from system settings and status messages to games, tweets, chat messages, and email messages, and they can optionally be organized into groups known as bundles. Additionally, cards may be pinned so that they sit close to the home screen and are available for quick reference, much like bookmarks.
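In Mirror API terms, bundles and pinning map onto fields and menu actions of a timeline item. The sketch below builds the JSON for a bundle of three cards under one cover card and gives the cover a menu item that lets the wearer pin it. It assumes the Explorer-era item fields `bundleId`, `isBundleCover`, `html`, `text`, and `menuItems`, and the built-in `TOGGLE_PINNED` menu action. The bundle ID and card contents are hypothetical, and nothing is sent.

```python
import json


def bundle(bundle_id: str, cover_html: str, pages: list) -> list:
    """Timeline items that Glass groups into one bundle, with a cover card on top."""
    cover = {
        "bundleId": bundle_id,
        "isBundleCover": True,                       # shown on the timeline itself
        "html": cover_html,
        "menuItems": [{"action": "TOGGLE_PINNED"}],  # lets the wearer pin the bundle
    }
    items = [cover]
    for text in pages:
        # Each page shares the bundle ID, so Glass tucks it under the cover.
        items.append({"bundleId": bundle_id, "text": text})
    return items


# Hypothetical bundle of sports scores, for illustration only.
items = bundle(
    "hypothetical-scores-bundle",
    "<article><section><p>3 final scores</p></section></article>",
    ["Home 3, Away 1", "Reds 5, Blues 2", "North 0, South 0"],
)
for item in items:
    print(json.dumps(item))
```

Each item would be inserted separately. The shared `bundleId` is what ties them together on the device.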

Each user’s timeline has two parts: what’s coming up and what’s already happened, navigable by swiping back or forward with a single finger on the touchpad. Swiping back on the touchpad toward your ear (to the left of the home screen) reaches system settings and Google Now cards. The Google Now cards cover upcoming events like calendar entries, to-do items, sports scores, weather forecasts, and stock prices. Also found to the left of the home screen are any pinned cards, as well as running programs for native apps. Both types of cards give the feeling of more dynamic content and play into the idea of things happening at the current moment.

Swiping forward toward your eye (to the right of the home screen) moves through older items, a running log of your activities. A card may have additional functionality, such as a menu of commands for a picture. In that case, tapping the trackpad brings up the available commands, which you swipe through and tap again to select, analogous to a mouse click on a desktop computer. Swiping down goes up a level: if you’re in a menu, for example, it takes you back to the card that owns the menu. If you’re at the top level, it turns the display off.
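To make the left/right geometry and the “swipe down goes up a level” rule concrete, here is a toy model. It is entirely hypothetical: the class and method names are ours and not part of any Glass API. It also simplifies real behavior by treating every timeline card as the top level, so swiping down from a card without an open menu turns the display off.

```python
from dataclasses import dataclass


@dataclass
class Timeline:
    """Toy model of timeline navigation; not the real Glass implementation."""
    left: list               # settings, pinned, upcoming; nearest the home screen first
    right: list              # history, newest first
    position: int = 0        # 0 is home; negative is left of home, positive is right
    menu_open: bool = False
    display_on: bool = True

    def current(self) -> str:
        if self.position == 0:
            return "home"
        if self.position < 0:
            return self.left[-self.position - 1]
        return self.right[self.position - 1]

    def swipe_back(self) -> None:
        # Toward the ear: move left, stopping at the last card.
        if -self.position < len(self.left):
            self.position -= 1

    def swipe_forward(self) -> None:
        # Toward the eye: move right into older items, stopping at the last card.
        if self.position < len(self.right):
            self.position += 1

    def tap(self) -> None:
        self.menu_open = True        # open the current card's menu

    def swipe_down(self) -> None:
        if self.menu_open:
            self.menu_open = False   # leave the menu, back to the card
        else:
            self.display_on = False  # already at the top level: display off


t = Timeline(left=["Settings", "Weather"], right=["Photo", "Message"])
t.swipe_forward()
print(t.current())
t.tap()
t.swipe_down()
t.swipe_down()
print(t.menu_open, t.display_on)
```

Here the first print shows the most recent history card, “Photo”. The two downward swipes first close the menu and then turn the display off.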

Glass turns its display off after a few seconds of inactivity. To turn it back on, you can either tap the trackpad or enable a setting that lets you wake Glass by tilting your head upward. Glass also supports optional features, such as automatically waking from sleep when you put it on, or enforcing a screen lock made up of user-defined gestures. Powerful voice controls like those in recent versions of Android are available for two purposes. First, they interpret specific commands from the home screen for things like messaging (the canonical “OK Glass” menu). Second, they handle spoken input when you reply to a message.

The Camera: Photos, Videos, and More!

Glass is pretty much a point-and-shoot device. A shutter button on top of the camera manually initiates photo taking or video recording. Framing can be a bit awkward because the lens is shifted to the right, but the widescreen nature of the pictures generally captures everything that is in your field of vision. The Viewfinder application acts as a preview monitor, and some Glassware projects are looking to expand the camera’s capabilities to be on par with many commercial DSLRs so you can have a better handle on getting the shot you want.

If you’ve enabled Google+, media files are uploaded to a private album via Auto Backup. This also means that Google+ can assemble pictures shot in rapid succession, along with videos, into Auto Awesome movies. (Glassware like Perfect provides a similar capability, compiling and editing your raw footage into prepared presentations such as time-lapsed mini-movies.)

Once you’ve taken a picture, you can immediately share it by saying, “OK Glass, share with…” and choosing a contact or a piece of Glassware (this is a big concept, and we’ll get to it in Chapter 11). You can also choose the Send menu item to send the image to a contact, starting a Hangout chat, or to an ongoing group conversation.
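On the Mirror API side, a piece of Glassware shows up in that “share with…” list by registering a contact that declares which media types it accepts. The sketch below assumes the Explorer-era contact field `acceptTypes`, which holds MIME type patterns such as `image/*`. The `share_targets` function is our own approximation of how a device might filter targets by media type; the actual filtering is done by Glass. Contact IDs and names are hypothetical.

```python
from fnmatch import fnmatchcase


def share_contact(contact_id: str, name: str, accept_types: list) -> dict:
    """A contact body that offers the Glassware as a 'share with...' target."""
    return {"id": contact_id, "displayName": name, "acceptTypes": accept_types}


def share_targets(contacts: list, mime_type: str) -> list:
    """Names of contacts whose acceptTypes match the item's MIME type (approximation)."""
    return [
        c["displayName"]
        for c in contacts
        if any(fnmatchcase(mime_type, pattern) for pattern in c.get("acceptTypes", []))
    ]


# Hypothetical Glassware, for illustration only.
contacts = [
    share_contact("hypothetical-photo-filter", "Photo Filter", ["image/*"]),
    share_contact("hypothetical-video-clipper", "Video Clipper", ["video/*"]),
]
print(share_targets(contacts, "image/jpeg"))
```

Declaring types up front means a photo-only service never clutters the menu when the wearer shares a video.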

By default, Glass records 10 seconds of video. To record a longer clip, tap the trackpad and choose Extend video, or just press the top button again. To stop, tap and select Stop recording, or press the top button once more. You can also upload recorded video to the YouTube channel associated with your Google account. Just enable the YouTube Glassware and you’ll see the options to share the video as Public, Private, or Unlisted.

Glass can take impressive five-megapixel pictures that look especially good in natural light. Even so, don’t expect to throw out your existing gear or never buy another camera again. The embedded camera is meant to work quickly and capture things in the moment, so it doesn’t have autofocus or zoom. It also lacks the controls found on the cameras of other Android devices, and its focus is fixed. In this regard, the camera is a compromise between several needs. It handles the situations in which you’ll want to quickly capture life happening, at the expense of being a full-featured photography rig.

This shouldn’t be seen as a handicap of the system, but the key driver of it. This is what it means to Think for Glass.

Some of the device’s natural limitations are evident early on:

- Framing a shot (with the lens right of center on your head)
- Holding the camera still (meaning not moving your head)
- Shooting subjects from an appropriate distance to capture them legibly (which varies by situation)

For these reasons, an early criticism was that the Glass camera generally isn’t suitable for QR code processing, but some app developers have found clever ways around this.

Glass Is a Great Listener

You’ll probably use voice actions a ton to interact with the system. Glass wakes up when you tap the touchpad or use a head gesture (if enabled in the Settings bundle). Once it’s active, saying the hotword phrase “OK Glass…” displays the full list of voice actions available to you. The list is made up of the default system commands plus the trigger phrases for any apps you’ve installed or services you’ve subscribed to. This is essentially the same hotword-driven approach used in Android search and in Microsoft’s Xbox One. You can speak a command directly, or tilt your head up and down to scroll through the choices and then say the command to select it.

Voice actions (as of the Explorer Edition):

“OK Glass, Google + <QUERY>”

- Open-ended searches return a list of cards as results, which may be matching images and/or web pages. You can also force a filtered search of Google’s index for images by saying “images of…” and for video clips by saying “videos of…”.

- To browse the sources of search results, Glass provides an embedded browser so you can view pages on the Web, along with a set of slick tools for navigating them.

Search on Glass taps Google’s Knowledge Graph and uses the same natural language syntax as Google Now, and works best with simple informational queries based on factual information like the following:

- “Who wrote The Canterbury Tales?”

- “How tall was Wilt Chamberlain?”

- “Who were Mel Brooks’ wives?”

- “Stock price of General Electric”

- “When was Bon Jovi’s Slippery When Wet released?”

- “Convert 3 US Dollars to Yen”

- “What will the weather be like in Columbus, Ohio on Thursday?”

- “How old was Bruce Lee when he died?”

- “What states make up The Four Corners?”

- “What’s the population of Winter Garden, Florida?”

- “How long does it take to get a PhD?”

- “How far to Hershey, Pennsylvania?”

- “How many home runs did Roger Maris hit in 1961?”

- “What is breaking the fourth wall?”

- “Cast of Dude, Where’s My Car”

- “What is the seating capacity of Michigan Stadium?”

- “Definition of subcutaneous”

- “What is Gene Simmons’ real name?”

- Vaguer or more abstract questions, such as “Why did Francis Ford Coppola feel the need to make The Godfather: Part III?” or “Will I ever find love?”, won’t return results that are as accurate. Glass isn’t that smart…yet.

“OK Glass, take a picture” / “OK Glass, record a video”

- You can also press and release the shutter button to take a picture, or hold the shutter button down to initiate recording.

- If you’ve enabled and set up the Wink feature in the Settings bundle, you can take pictures by winking, even when the device is idle and the screen is locked.

“OK Glass, get directions to + <LOCATION or ADDRESS or GENERIC PLACE>”

- Returns a list of known places. Selecting a place launches turn-by-turn navigation.

- Tapping on a directions card also lets you swipe through a series of travel choices, giving you directions for driving, walking, biking, and transit.

- Location searches work best when you use the formal name of a place like “McDonald’s,” a generic type of location like “gas stations” (which gives you a list of matches), the full address of a location such as “1600 Pennsylvania Avenue,” or user-defined names like “School,” “Work,” and “Party Spot.”

“OK Glass, send a message to + <CONTACT’S NAME or CIRCLE or HANGOUT GROUP>”

- Create a message for the specified recipient via voice dictation. Once you stop speaking, Glass waits for one second before delivering the message; during that time you can swipe down to erase it and start again.

- Glass uses a specific hierarchy of services for messaging, with Hangouts sitting atop the food chain. If you have the Hangouts Glassware enabled, that service is the default delivery mechanism for all contacts. If you’ve set up Google Voice on your phone, are tethered via Bluetooth, and have paired Glass with the MyGlass mobile app, the message arrives from your Google Voice number. Otherwise, Glass defaults to your phone’s regular SMS number with your carrier. If your recipient has only an email address in his or her contact information, or if you’re not running MyGlass, voice action messages are delivered by email.

- Glass also uses the phone number associated with each contact as that contact’s calling information; this is the number dialed when you select them for a voice call.

- You can still configure a default messaging service on a per-contact basis: just click or tap their card in MyGlass and assign email, SMS, or Hangouts as their preferred delivery method. From then on, “Send a message to…” uses that service for that contact.

- Group conversations are also supported through Hangouts. If you’re part of a group chat, that conversation is available for you to send messages and photos to. Group messaging on Glass typically requires you to add multiple users via the desktop, browser, or mobile versions of Hangouts, after which the group is available on Glass. This makes the process more akin to email: not everyone in the group will have Glass or be designated as a sharing contact, so you need to add them to the chat manually on another device (just like addressing an email message). It’s an extra step, but it really does pay off in the end.

- MyGlass also manages SMS threads intuitively, forwarding text messages from a connected smartphone to Glass and displaying full threaded conversations as card bundles.
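The delivery rules above amount to an ordered fallback chain. The Python sketch below is one reading of that chain, assuming that a per-contact preference set in MyGlass overrides the Hangouts default. The `Contact`, `DeviceState`, `Delivery`, and `choose_delivery` names are hypothetical and are not part of any Glass or MyGlass API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Delivery(Enum):
    HANGOUTS = "Hangouts"
    GOOGLE_VOICE = "Google Voice"
    CARRIER_SMS = "carrier SMS"
    EMAIL = "email"


@dataclass
class Contact:
    name: str
    phone: Optional[str] = None
    email: Optional[str] = None
    # Preferred service assigned on the contact's card in MyGlass, if any.
    preferred: Optional[Delivery] = None


@dataclass
class DeviceState:
    hangouts_enabled: bool = False
    myglass_paired: bool = False
    google_voice_on_phone: bool = False
    bluetooth_tethered: bool = False


def choose_delivery(contact: Contact, state: DeviceState) -> Delivery:
    """Pick a delivery service for "Send a message to..." (hypothetical model)."""
    # 1. Assumption: a per-contact preference set in MyGlass wins outright.
    if contact.preferred is not None:
        return contact.preferred
    # 2. Hangouts sits atop the hierarchy when its Glassware is enabled.
    if state.hangouts_enabled:
        return Delivery.HANGOUTS
    # 3. Email when the contact has no phone number or MyGlass isn't running.
    if contact.phone is None or not state.myglass_paired:
        return Delivery.EMAIL
    # 4. Google Voice when it's set up on the phone and Glass is tethered.
    if state.google_voice_on_phone and state.bluetooth_tethered:
        return Delivery.GOOGLE_VOICE
    # 5. Otherwise, fall back to the phone's regular carrier SMS number.
    return Delivery.CARRIER_SMS
```

Writing the rules as early returns keeps the priority order readable top to bottom, which makes it easy to check the sketch against the prose.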

“OK Glass, make a call to + <CONTACT’S NAME or CHOOSE FROM LIST>”

“OK Glass, post an update to,” “OK Glass, take a note with,” “OK Glass…<invoke native Glass app>”

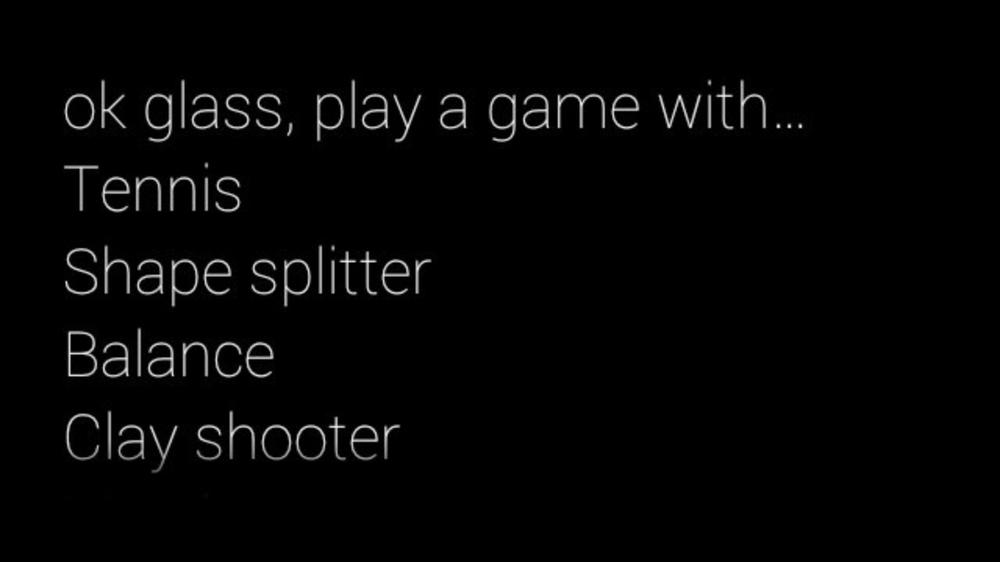

- While the GDK gives you a little more leeway in which voice prompts can launch an installed application, the Mirror API allows two custom voice commands for invoking your service: “Post an update to” and “Take a note with.” These can serve as gateways into your Glassware for sharing images, videos, or voice data transcribed to text with your contacts. The voice commands menu also doubles as a launcher for your installed GDK applications. Native programs that you’ve enabled through MyGlass or sideloaded can be launched hands-free. Each has a key hotword phrase, such as “Play a game,” “Translate this,” or “Start a timer,” and these phrases appear in order of most-recent use alongside other trigger phrases and the system commands mentioned previously.

- It’s inevitable that two or more applications will want to use the same trigger phrase. To resolve these naming conflicts when someone utters a shared command, Glass displays a secondary menu to disambiguate between apps. For example, the Mini Games Glassware is actually a collection of five titles that all share the “Play a game” hotword phrase, and so does the social spelling game Spellista, a completely separate Glassware package. Saying the phrase displays a second-level menu that lets you choose between the games, as Figures 2-4 and 2-5 demonstrate. So Glass resolves what could otherwise be confusing name collisions for you, right out of the box.

- In case you’re wondering how trigger phrases get chosen, here’s the lowdown: all voice commands that stem from “OK Glass” are subject to approval by Google. GDK developers may be able to use their own spoken phrases to launch their apps during testing, but for release they have to choose from a finite set of approved voice commands, just as Mirror API Glassware does, as mentioned earlier. This helps cut down on abuse and provides categorization.
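Taken together, the rules above describe a small registry: only approved phrases can be claimed, several apps may claim the same phrase, and the menu lists phrases by most-recent use. The Python sketch below models that behavior under stated assumptions. `VoiceMenu` and its methods are hypothetical names rather than the GDK’s API, system commands are left out, and never-used phrases are assumed to appear alphabetically after the recently used ones.

```python
from collections import defaultdict


class VoiceMenu:
    """Hypothetical model of the "OK Glass" menu; not the GDK's actual API."""

    def __init__(self, approved_phrases: list[str]) -> None:
        # Google-approved trigger phrases; anything else is rejected.
        self.approved = set(approved_phrases)
        # Maps each trigger phrase to the Glassware registered for it.
        self.registrations: defaultdict[str, list[str]] = defaultdict(list)
        # Phrases ordered from most to least recently used.
        self.recent: list[str] = []

    def register(self, app: str, phrase: str) -> None:
        """Claim an approved trigger phrase for an app."""
        if phrase not in self.approved:
            raise ValueError(f"{phrase!r} is not an approved voice command")
        self.registrations[phrase].append(app)

    def menu(self) -> list[str]:
        """Registered phrases: recently used first, then the rest (assumed A-Z)."""
        used = [p for p in self.recent if p in self.registrations]
        unused = sorted(p for p in self.registrations if p not in self.recent)
        return used + unused

    def speak(self, phrase: str) -> list[str]:
        """Return the apps matching a spoken phrase.

        One app means launch it directly; several mean a second-level
        menu is needed so the wearer can disambiguate.
        """
        apps = list(self.registrations.get(phrase, []))
        if apps:
            # Move the phrase to the front of the recency list.
            if phrase in self.recent:
                self.recent.remove(phrase)
            self.recent.insert(0, phrase)
        return apps


if __name__ == "__main__":
    voice = VoiceMenu(["play a game", "start a timer", "translate this"])
    voice.register("Mini Games", "play a game")
    voice.register("Spellista", "play a game")
    voice.register("Timer", "start a timer")  # hypothetical app name
    print(voice.speak("play a game"))  # two apps, so show a second-level menu
    print(voice.menu())
```

Returning a list from `speak` lets the caller treat the single-app and multiple-app cases uniformly: launch when the list has one entry, show a disambiguation menu when it has more.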

Expect future system updates to add more voice actions.

Content Creation in a POV World

The future of content generation is going to take some very interesting turns with Glass and other platforms, like GoPro and Action Cam rigs, that emphasize the first-person perspective. Whether you’re telling a story, documenting history, or accidentally stumbling across a significant moment in time, first-person video will change the premium that we as content creators put on body language and gesticulation when standing in front of the camera. The emphasis is now shifting to POV cinematography and ad-lib narration.

Even the most seasoned, media-savvy presenters may find this transition a little odd, since they lose the physical cues they normally use to stress points mid-dialogue and must rely on shot selection instead. Jason, who’s got a ton of experience as a TV presenter under his belt, spanning everything from anchoring newscasts to calling live sports to doing storm coverage to moderating political debates to hosting beauty pageants, is really excited about some of the new opportunities this creates. In this light, we’re becoming one-man bands: wholly independent camera crews. And the prospect of someday broadcasting video live from the field via Hangouts On Air on Glass would mean no satellite trucks or other cost-prohibitive infrastructure. Some very interesting and creative opportunities will come from this very quickly.

And first-person video isn’t a new thing. The passionate uptake of first-person-view videography by the remote control and unmanned aerial vehicle communities will simply leave you speechless. Using off-the-shelf and hobby shop components, with cameras that feature amazing stabilization control mid-flight, it’s possible to remotely control quadcopter drones and capture and monitor high-definition video of uncanny quality. In years past, this required the kind of gear and expertise that made it available largely only to professional film crews commissioned for special projects. Now, practically anyone can get involved.

If you’d like to learn more about this exploding space, check out former Wired editor-in-chief Chris Anderson’s company, 3D Robotics.

User-driven media is already changing in neat and very creative ways, so have fun being part of the new generation of filmmakers!

Which Hue Is for You?

How many points has your blood pressure risen when trying to pick out the perfect paint job for a new car? Color expresses your personality and makes a statement about your style. Glass is available in five colors: white (Cotton), powder blue (Sky), primered black (Charcoal), beige (Shale), and orange (Tangerine). Don’t sell color selection short. Products like the iMac, iPod Mini, Toyota Prius, and Nintendo 3DS have made billions of dollars offering a varied palette of colors, endearing themselves to their owners’ personalities based solely on aesthetics. We won’t even attempt to quantify the volume of social network posts lamenting that someone’s favorite shade of green or purple or red or yellow or pink, or any of the millions of other RGB combinations, got no love.

For proof of how devoted people are to their chosen frame color, peruse the Google+ Communities groups focused on Glass and you’ll notice the friendly rivalry between the ardent supporters of each color: Team Sky, Team Tangerine, and so on. People really get passionate and align themselves with this stuff.

We’ll discuss some of the fashion concerns about Glass in Chapter 3, and opportunities for expansion kits, accessories, and even alternate frame designs in Chapter 15.

Welcome to Wearable Computing!

As a new Glassware developer, you join a select community: you’re among the first wave of people adopting (and, we hope, passionately embracing) wearable computing in your digital lifestyle. Most first-time users admit that Glass feels awkward at first, and then really cool. It really changes how you multitask. Mastering the control system isn’t difficult, and adjusting system settings to make Glass your own gives you a true sense of ownership. The overall feeling is one of liberation, putting you in charge of your digital existence.

Some people say they feel right at home using Glass; others feel pressured to prove to themselves they can make it work. The best way to get comfortable with Glass is simply to use it in your daily life: take it around and give it a spin in your normal routine. Put it on, go run some errands, hang out at the mall, take it into the office, fill out a report, take it to the basketball court for a shootaround, or go get your taxes and/or hair and/or nails done. It’s always there when you need it. Do what you’d normally do as a connected individual, but use Glass instead of the tools you’ve always used. Enjoy the freedom of not having to constantly consult your smartphone for news updates, location-specific check-ins, and social posts in all forms. And watch data literally appear as it happens. Take some pictures, shoot some video, share some neat stuff.

So…off you go. Tell Glass what you need while you take a walk and notice how you can be more of an active part of the world around you, no longer looking down at a screen. And then take note of how Glass quietly slips out of the way, not bothering you until you need it the next time. That’s the essence of the Google Glass experience and your first step toward being able to Think for Glass. Chapter 5 gives developers a proper outline of how to design services to best fit this method of delivery.

Welcome to the revolution!