16

Basic Cloud Programming

THE CLOUD, CLOUD COMPUTING, AND THE CLOUD OPTIMIZED STACK

It is only a matter of time before you begin creating applications that run completely or partially in the cloud. It’s no longer a question of “if” but “when.” Deciding which components of your program will run in the cloud, the cloud type, and the cloud service model requires some investigation, understanding, and planning. For starters, you need to be clear on what the cloud is. The cloud is simply a large amount of commoditized computer hardware running inside a datacenter that can run programs and store large amounts of data. The differentiator is elasticity: the ability to scale up (for example, increase CPU and memory) and/or out (for example, increase the number of virtual server instances) dynamically, and then scale back down with little or no effort. This is an enormous difference from the traditional IT operational landscape, where dedicated computer resources often go partially or completely unused in one area of the company while other areas face a serious shortage. The cloud resolves this issue by providing access to computer resources as you need them; when you don’t need them, those resources are given to someone else. For individual developers, the cloud is a place to deploy a program and expose it to the world. If the program becomes popular, you can scale to meet its resource needs. If the program is a flop, you have not lost much money or time setting up dedicated computer hardware and infrastructure.
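To make elasticity more concrete, the following C# sketch shows the kind of rule an autoscaler can apply when deciding how far to scale out or back in. The target-tracking formula (desired instances = current instances × observed CPU ÷ target CPU, rounded up) is borrowed from the one documented for the Kubernetes Horizontal Pod Autoscaler. The class name ScaleOutRule and its minimum/maximum defaults are hypothetical, and the sketch does not show how any particular Azure service is configured.

```csharp
using System;

// Illustrative target-tracking rule for elastic scale-out and scale-in.
// Formula borrowed from the Kubernetes Horizontal Pod Autoscaler documentation:
//   desired = ceil(current * observedMetric / targetMetric)
// ScaleOutRule and its default limits are hypothetical, not an Azure API.
public static class ScaleOutRule
{
    public static int DesiredInstances(int currentInstances, double averageCpuPercent,
                                       double targetCpuPercent, int minInstances = 1, int maxInstances = 20)
    {
        if (currentInstances < 1) throw new ArgumentOutOfRangeException(nameof(currentInstances));
        if (targetCpuPercent <= 0) throw new ArgumentOutOfRangeException(nameof(targetCpuPercent));

        // Scale proportionally: twice the target load needs roughly twice the instances.
        int desired = (int)Math.Ceiling(currentInstances * averageCpuPercent / targetCpuPercent);

        // Clamp so the rule never scales in below the floor or out beyond the budget.
        return Math.Max(minInstances, Math.Min(maxInstances, desired));
    }
}
```

For example, four instances averaging 90% CPU against a 60% target call for six instances. When load later drops to 20%, the same rule scales those six instances back in to two.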

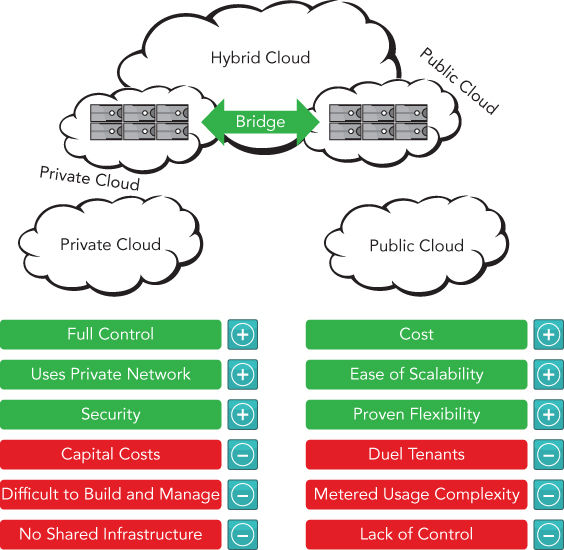

Let’s explore cloud types and cloud service models in more detail. The common cloud types are public, private, and hybrid. They are described in the following bullet points and illustrated in Figure 16-1.

- Public cloud is shared computer hardware and infrastructure owned and operated by a cloud provider like Microsoft Azure, Amazon AWS, Rackspace, or IBM Cloud. This cloud type is ideal for small and medium businesses that need to manage fluctuations in customer and user demands.

- Private cloud is dedicated computer hardware and infrastructure that exists onsite or in an outsourced data center. This cloud type is ideal for larger companies or those that must deliver a higher level of data security or government compliance.

- Hybrid cloud is a combination of both public and private cloud types, whereby you choose which segments of your IT solution run on the private cloud and which run on the public cloud. A common approach is to run your business-critical programs that require a greater level of security in the private cloud and run non-sensitive, possibly spiking tasks in the public cloud.

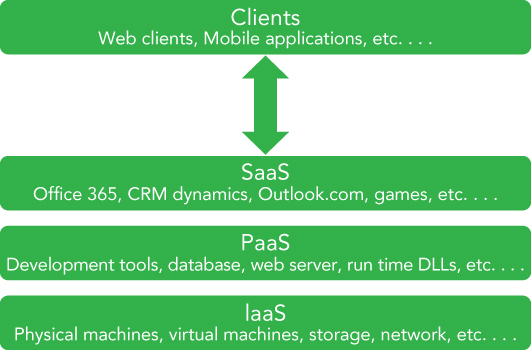

The number of cloud service models continues to increase, but the most common cloud service models are described in the following bullet points and illustrated in Figure 16-2.

- Infrastructure as a Service (IaaS): You are responsible for everything from the operating system upward. You are not responsible for the hardware or network infrastructure. However, you are responsible for operating system patches and third-party dependent libraries.

- Platform as a Service (PaaS): You are responsible only for your program, running on the chosen operating system, and its dependencies. You are not responsible for operating system maintenance, hardware, or network infrastructure.

- Software as a Service (SaaS): A software program or service that is accessed from a device over the Internet. Examples include Office 365 (O365), Salesforce, OneDrive, and Box. All of these are accessible from anywhere with an Internet connection and do not require software to be installed on the client to function. You are responsible only for how you use and configure the software, including your data. The provider takes care of everything else.

In summary, the cloud is an elastic structure of commoditized computer hardware for running programs. These programs run on the IaaS, PaaS, or SaaS service model in a public, private, or hybrid cloud.

Cloud programming is the development of code logic that runs on any of the cloud service models. The cloud program should incorporate portability, scalability, and resiliency patterns that improve the performance and stability of the program. Programs that do not implement these portability, scalability, and resiliency patterns would likely run in the cloud, but some circumstances such as a hardware failure or network latency issue may cause the program to execute an unexpected code path and terminate.

Elasticity is one of the cloud's most favorable benefits, so it is important that not only the platform but also the cloud program itself can scale. For example, does the code rely on backend resources or databases, read or open files, or parse large data objects? Operations like these can reduce a cloud program's ability to scale and therefore limit its throughput. Identify the code paths that execute long-running methods, and consider moving them into an offline (background) processing mechanism.
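One way to move long-running work off the request path is a producer/consumer queue. The sketch below is a minimal, in-memory illustration built on the BlockingCollection class. The class name BackgroundWorkQueue is hypothetical. In a real cloud deployment, the in-memory queue would be replaced by a durable queue service so that queued work survives the loss of a VM.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical sketch: the request path only enqueues work; a background task does the slow part.
// A real deployment would use a durable queue service instead of this in-memory collection.
public sealed class BackgroundWorkQueue : IDisposable
{
    private readonly BlockingCollection<Func<string>> _jobs = new BlockingCollection<Func<string>>();
    private readonly ConcurrentQueue<string> _results = new ConcurrentQueue<string>();
    private readonly Task _worker;

    public BackgroundWorkQueue()
    {
        // One long-running consumer processes jobs in the order they were queued.
        _worker = Task.Factory.StartNew(() =>
        {
            foreach (Func<string> job in _jobs.GetConsumingEnumerable())
            {
                try
                {
                    _results.Enqueue(job());
                }
                catch (Exception ex)
                {
                    // Record the failure and keep the worker alive for the next job.
                    _results.Enqueue("failed: " + ex.Message);
                }
            }
        }, TaskCreationOptions.LongRunning);
    }

    // Called on the request path: adding to the queue is quick and does not run the job.
    public void Enqueue(Func<string> job) => _jobs.Add(job);

    // Snapshot of the results produced so far.
    public string[] Results => _results.ToArray();

    // Stop accepting new work and wait until everything already queued has been processed.
    public void Dispose()
    {
        _jobs.CompleteAdding();
        _worker.Wait();
        _jobs.Dispose();
    }
}
```

A request handler calls Enqueue() and returns immediately. The background task then does the slow work at its own pace, so request latency no longer depends on the length of the job.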

The cloud optimized stack is a concept used to refer to code that can handle high throughput, has a small footprint, can run side-by-side with other applications on the same server, and is cross-platform enabled. A small footprint means packaging into your cloud program only the components it actually depends on, which makes the deployment size as small as possible. Consider whether the cloud program requires the entire .NET Framework to function. If not, then instead of packaging the entire .NET Framework, include only the libraries required to run your cloud program, and then compile your cloud program into a self-contained application to support side-by-side execution. Such a cloud program can run alongside any other cloud program because it carries its dependencies within its own binaries package. Finally, by using open source platforms such as Mono, .NET Core, or ASP.NET Core, the cloud program can be packaged, compiled, and deployed onto operating systems other than Microsoft Windows, for example Mac OS X, iOS, or Linux.

CLOUD PATTERNS AND BEST PRACTICES

In the cloud, very brief moments of increased latency or downtime are expected. Your code must be prepared for them and include logic to recover successfully from these platform exceptions. This is a significant mind shift if you have historically written programs that run onsite (on-premises). You need to unlearn a lot of what you know about managing exceptions, learn to embrace failure, and write your code so that it recovers from such failures.

In the previous section, words like portability, scalability, and resiliency were touched upon in the context of integrating those concepts into a program slated to run in the cloud. But what does portability specifically mean here? A program is portable if it can be moved to or executed on multiple platforms, for example Windows, Linux, and Mac OS X. ASP.NET Core, for example, sits on a new stack of open source technologies that lets developers compile code into binaries capable of running on any of those platforms. Traditionally, a developer who wrote a program using ASP.NET, with C# in the background, would run it on a Windows server using Internet Information Services (IIS). From a cloud-centric perspective, however, the most applicable form of portability is the ability of your program and all its dependencies to move from one virtual machine to another without manual or programmatic intervention. Remember that failures in the cloud are expected. The virtual machine (VM) on which your program is running can be wiped out at any time and rebuilt fresh on another VM. Therefore, your program needs to be portable and able to recover from such an event.
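At the code level, a small but common portability trap is a hard-coded Windows path separator, such as the @"Cards\" + f.Name expression used later in this chapter. The following sketch builds paths with Path.Combine and reports the current platform with RuntimeInformation, which is available on .NET Core. The class and method names are illustrative only.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Portability sketch (hypothetical helper): no hard-coded "\" separators, no Windows-only assumptions.
public static class PortablePaths
{
    // Path.Combine inserts the directory separator of whatever OS the code is running on.
    public static string CardImagePath(string baseDirectory, string fileName)
        => Path.Combine(baseDirectory, "Cards", fileName);

    // Lets the program log (or branch on) the platform it landed on after being moved.
    public static string CurrentPlatform()
    {
        if (RuntimeInformation.IsOSPlatform(OSPlatform.Windows)) return "Windows";
        if (RuntimeInformation.IsOSPlatform(OSPlatform.Linux)) return "Linux";
        if (RuntimeInformation.IsOSPlatform(OSPlatform.OSX)) return "macOS";
        return "Other";
    }
}
```

The same binary then produces Cards\0-1.PNG on Windows and Cards/0-1.PNG on Linux, so moving the program to a different VM image does not break file access.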

Scalability means that your code responds well when many customers use it at the same time. For example, 1,500 requests per minute is roughly 25 requests per second. If each request is completed and responded to in 1 second, about 25 requests are being processed concurrently at any moment. At 15,000 requests per minute, that becomes about 250 concurrent requests. Will the cloud program respond in the same manner with 25 or 250 concurrent requests? How about 2,550? The following are a few cloud programming patterns that are useful for managing scalability.
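The arithmetic in the preceding paragraph is an application of Little's law: the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. A small C# helper makes the relationship explicit. The names are illustrative.

```csharp
using System;

// Little's law: average requests in flight = arrival rate (requests/second)
//                                            * average response time (seconds).
public static class LoadEstimate
{
    public static double RequestsPerSecond(double requestsPerMinute) => requestsPerMinute / 60.0;

    public static double ConcurrentRequests(double requestsPerMinute, double responseTimeSeconds)
    {
        if (responseTimeSeconds < 0) throw new ArgumentOutOfRangeException(nameof(responseTimeSeconds));
        return RequestsPerSecond(requestsPerMinute) * responseTimeSeconds;
    }
}
```

ConcurrentRequests(15000, 1.0) gives 250, while ConcurrentRequests(15000, 0.2) gives about 50. This shows that shortening response time lowers concurrency just as effectively as reducing traffic.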

- Command and Query Responsibility Segregation (CQRS) pattern: This pattern separates the operations that read data from the operations that modify or update the data.

- Materialized View pattern: This stores data in a structure that reflects the query pattern. For example, precomputing views for specific, heavily used queries makes those queries more efficient.

- Sharding pattern: This divides a data store into multiple horizontal partitions (shards), each holding a distinct subset of the data. You scale out across data stores instead of scaling up (vertically) by adding hardware capacity to a single store.

- Valet Key pattern: This gives clients direct access to the data store for streaming or uploading large files. Instead of having the web application act as gatekeeper and relay every byte, the application gives the client a valet key: a token granting restricted, time-limited access to a specific resource in the data store. Azure shared access signatures (SAS) are an example.
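As an illustration of the Sharding pattern, the following sketch routes each record to one of several shards by hashing its shard key. The shard names, the ShardRouter class, and the choice of a 32-bit FNV-1a hash are assumptions made for this example. The important property is that the hash is stable, so the same key always lands on the same shard. string.GetHashCode() is not suitable because .NET Core randomizes it per process.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Sharding sketch: route each record to one of N shards by a stable hash of its shard key.
// ShardRouter and the FNV-1a hash are illustrative choices, not part of any Azure API.
public sealed class ShardRouter
{
    private readonly IReadOnlyList<string> _shards;

    public ShardRouter(IReadOnlyList<string> shards)
    {
        if (shards == null || shards.Count == 0)
            throw new ArgumentException("At least one shard is required.", nameof(shards));
        _shards = shards;
    }

    // Deterministic 32-bit FNV-1a hash of the key's UTF-8 bytes (same result in every process).
    public static uint StableHash(string key)
    {
        const uint offsetBasis = 2166136261;
        const uint prime = 16777619;
        uint hash = offsetBasis;
        foreach (byte b in Encoding.UTF8.GetBytes(key))
        {
            hash ^= b;
            hash = unchecked(hash * prime);
        }
        return hash;
    }

    // The same key always maps to the same shard; different keys spread across the shards.
    public string ShardFor(string key) => _shards[(int)(StableHash(key) % (uint)_shards.Count)];
}
```

Simple modulo routing like this reassigns most keys when the number of shards changes. For that reason, production systems often use a lookup table or consistent hashing instead.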

Resiliency refers to how well your program responds to and recovers from service faults and exceptions. Historically, IT infrastructures have focused on failure prevention, where tolerance for downtime was minimal and 99.99% or 99.999% SLAs (service-level agreements) were the expectation. Running a program in the cloud, however, requires a reliability mind shift: one that embraces failure and is oriented toward recovery rather than prevention. Having multiple dependencies such as databases, storage, network, and third-party services, some of which have no SLA, requires this shift in perspective. Reacting in a user-friendly way to outages and other abnormal situations is what makes your cloud program resilient. Here are a few cloud programming patterns that are useful for embedding resiliency into your cloud program:

- Circuit Breaker pattern: This is a code design that keeps track of failed calls to a remote service. After a threshold number of failures, the circuit "opens," and further calls fail immediately without contacting the service until a timeout has passed and a trial call succeeds. This avoids wasting CPU cycles and connections on requests when previous failures have already shown that the remote service is unavailable.

- Health Endpoint Monitoring pattern: This verifies that cloud-based applications are functionally available by implementing health-check endpoints that external tools can query at regular intervals.

- Retry pattern: This retries a request that failed because of a transient exception or fault, such as a brief network outage. The request is retried a limited number of times, often with an increasing delay between attempts, and retrying stops when the retry attempt threshold is reached.

- Throttling pattern: This controls the consumption of resources by a cloud program, or by its clients, so that SLAs can be met and the program remains functional under high load.
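The Retry and Circuit Breaker patterns are often used together. The following is a minimal, single-threaded sketch of both. The class names, the thresholds, and the exponential backoff policy (doubling the wait after each failed attempt) are illustrative choices, not the API of a specific library. For production code, a library such as Polly provides hardened implementations.

```csharp
using System;
using System.Threading;

// Minimal circuit breaker (not thread-safe): after failureThreshold consecutive failures the
// circuit opens and calls fail fast until openDuration has passed.
public sealed class CircuitBreaker
{
    private readonly int _failureThreshold;
    private readonly TimeSpan _openDuration;
    private int _consecutiveFailures;
    private DateTime _openedAtUtc;
    private bool _tripped;

    public CircuitBreaker(int failureThreshold, TimeSpan openDuration)
    {
        if (failureThreshold < 1) throw new ArgumentOutOfRangeException(nameof(failureThreshold));
        _failureThreshold = failureThreshold;
        _openDuration = openDuration;
    }

    // Open = tripped and still inside the cool-down window. After the window, the next call is
    // let through as a trial ("half-open"); its outcome closes or re-opens the circuit.
    public bool IsOpen => _tripped && DateTime.UtcNow - _openedAtUtc < _openDuration;

    public T Execute<T>(Func<T> operation)
    {
        if (IsOpen)
            throw new InvalidOperationException("Circuit is open; not calling the remote service.");
        try
        {
            T result = operation();
            _consecutiveFailures = 0;      // a success closes the circuit
            _tripped = false;
            return result;
        }
        catch
        {
            _consecutiveFailures++;
            if (_consecutiveFailures >= _failureThreshold)
            {
                _tripped = true;           // trip (or re-trip after a failed trial call)
                _openedAtUtc = DateTime.UtcNow;
            }
            throw;
        }
    }
}

// Retry with exponential backoff for transient faults; gives up after maxAttempts.
public static class Retry
{
    public static T Execute<T>(Func<T> operation, int maxAttempts, TimeSpan initialDelay,
                               Func<Exception, bool> isTransient)
    {
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));
        TimeSpan delay = initialDelay;
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return operation();
            }
            catch (Exception ex) when (attempt < maxAttempts && isTransient(ex))
            {
                // Transient fault and attempts remain: wait, then retry with a doubled delay.
                Thread.Sleep(delay);
                delay = TimeSpan.FromTicks(delay.Ticks * 2);
            }
        }
    }
}
```

A typical composition is Retry.Execute(() => breaker.Execute(CallRemoteService), 3, TimeSpan.FromMilliseconds(200), ex => ex is TimeoutException), where CallRemoteService is a hypothetical method. The breaker signals an open circuit with InvalidOperationException, which the isTransient filter does not treat as transient. As a result, a call rejected by an open circuit fails immediately instead of being retried.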

Using one or more of the patterns described in this section will help make your cloud migration more successful. These patterns improve the scalability and resiliency of your program, which in turn makes for a more pleasant user or customer experience.

USING MICROSOFT AZURE C# LIBRARIES TO CREATE A STORAGE CONTAINER

Although there are numerous cloud providers, the cloud provider used for the examples in this and the next chapter is Microsoft. The cloud platform provided by Microsoft is called Azure. Azure has many different kinds of features. For example, the IaaS offering is called Azure VM, and the PaaS offering is called Azure Cloud Services. Additionally, Microsoft has SQL Azure for database, Azure Active Directory for user authentication, and Azure Storage for storing blobs, for example.

The following two exercises walk through the creation of an Azure storage account and an Azure storage container. Once the container is created, images of 52 playing cards are stored in it using the Microsoft Azure Storage SDK for .NET. Then, in the next section, you will create an ASP.NET 4.7 Web Site that accesses the images stored in the Azure storage container. Following the card game theme of the book, the ASP.NET 4.7 Web Site will deal a hand of playing cards. The card images are the blobs stored in the Azure storage container.

TRY IT OUT Create an Azure Storage Container Using the Microsoft Azure Storage Client Library

You will create a console application using Visual Studio 2017 and the Microsoft Azure Storage Client libraries to create an Azure storage container and upload the 52 cards into it.

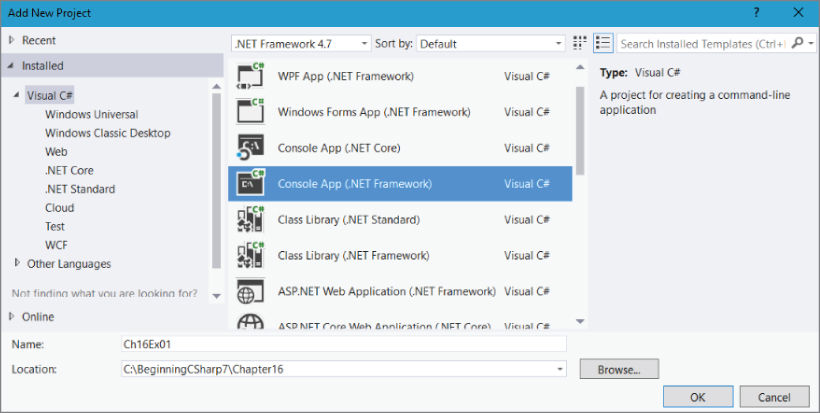

- Create a new console application project by selecting File

New

New  Project within Visual Studio. In the Add New Project dialog box (see Figure 16‐5

), select the category Visual C# and then select the

Console App (.NET Framework) template. Name the project Ch16Ex01 and save it in the directory

Project within Visual Studio. In the Add New Project dialog box (see Figure 16‐5

), select the category Visual C# and then select the

Console App (.NET Framework) template. Name the project Ch16Ex01 and save it in the directory C:\BeginningCSharp7\Chapter16. - Add a directory named

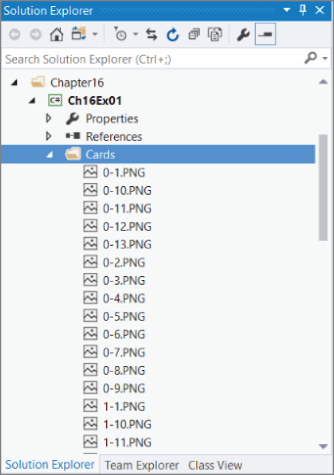

Cardsto the project by right‐clicking on Ch16Ex01 Add…

Add…  New Folder. Add the 52 card images to the directory, like that shown in Figure 16‐6

. The images are available from the source code download site and are named from

New Folder. Add the 52 card images to the directory, like that shown in Figure 16‐6

. The images are available from the source code download site and are named from 0‐1.PNGto3‐13.PNG. -

3. Additionally, copy the Cards directory into C:\BeginningCSharp7\Chapter16\Ch16Ex01\bin\Debug so that the compiled executable can find the images when it runs.

4. Right-click again on the Ch16Ex01 project and select Manage NuGet Packages… from the pop-up menu.

5. In the search text box, as shown in Figure 16-7, enter Microsoft Azure Storage and install the WindowsAzure.Storage client library. You can find more information about the Windows Azure Storage library here: https://docs.microsoft.com/en-us/dotnet/api/overview/azure/storage?view=azure-dotnet.

6. Accept the user agreements. Once the NuGet package and its dependencies are installed, you should see a ============== Finished================= message in the Output window of Visual Studio. Additionally, the References folder within Ch16Ex01 is expanded, and you can view the newly added binaries.

7. Open the App.config file and add the following appSettings element into the configuration section. Replace NAME with the name of your Azure storage account. In this example, that is the account created in the previous Try It Out exercise, deckofcards. Refer to step 8 for instructions on how to get the account key that replaces KEY.

```xml
<appSettings>
  <add key="StorageConnectionString"
       value="DefaultEndpointsProtocol=https;AccountName=NAME;AccountKey=KEY" />
</appSettings>
```

8. To get the Azure storage account key and connection string, open the Microsoft Azure management portal (https://portal.azure.com) and navigate to your Azure storage account. As shown in Figure 16-8, the SETTINGS section contains an item named Access keys. Select it, copy the connection string for key1, and paste it as the value in the App.config file.

9. Now add the code that creates the container, uploads the images, lists them, and, if desired, deletes them. First add the using directives and the try{}…catch{} framework to the Main() method, as shown here:

```csharp
using System;
using System.IO;
using System.Configuration;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Auth;
using Microsoft.WindowsAzure.Storage.Blob;
using static System.Console;

namespace Ch16Ex01
{
    class Program
    {
        static void Main(string[] args)
        {
            try
            {
            }
            catch (StorageException ex)
            {
                // Errors raised by the Azure Storage client library
                WriteLine($"StorageException: {ex.Message}");
            }
            catch (Exception ex)
            {
                // Anything else that was not anticipated
                WriteLine($"Exception: {ex.Message}");
            }
            WriteLine("Press enter to exit.");
            ReadLine();
        }
    }
}
```

10. Next, add the code that creates the container within the try{} code block, as shown here. Look at the parameter passed to blobClient.GetContainerReference("carddeck"): carddeck is the name used for the Azure storage container. Content within this container can then be accessed via https://deckofcards.blob.core.windows.net/carddeck/0-1.PNG, for example. You can use any name that meets the naming requirements. For example, a container name must be 3 to 63 characters long, must begin with a letter or number, and can contain only lowercase letters, numbers, and hyphens. If you provide a container name that does not meet the naming requirements, an HTTP 400 (Bad Request) error is returned. Also add a reference to the System.Configuration.dll assembly: right-click the References folder, select Add Reference… ➪ Assemblies ➪ Framework ➪ System.Configuration, and then press the OK button.

```csharp
CloudStorageAccount storageAccount = CloudStorageAccount.Parse(
    ConfigurationManager.AppSettings["StorageConnectionString"]);
CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
CloudBlobContainer container = blobClient.GetContainerReference("carddeck");

if (container.CreateIfNotExists())
{
    WriteLine($"Created container '{container.Name}' " +
              $"in storage account '{storageAccount.Credentials.AccountName}'.");
}
else
{
    WriteLine($"Container '{container.Name}' already exists " +
              $"for storage account '{storageAccount.Credentials.AccountName}'.");
}

// Allow anonymous read access to individual blobs (but not anonymous listing of the container).
container.SetPermissions(new BlobContainerPermissions
    { PublicAccess = BlobContainerPublicAccessType.Blob });
WriteLine($"Permission for container '{container.Name}' is public.");
```

11. After the code from step 10 that creates the container, add this code, which uploads the card images stored in the Cards folder:

```csharp
int numberOfCards = 0;
DirectoryInfo dir = new DirectoryInfo(@"Cards");
foreach (FileInfo f in dir.GetFiles("*.*"))
{
    // The blob name is the file name, for example 0-1.PNG
    CloudBlockBlob blockBlob = container.GetBlockBlobReference(f.Name);
    using (var fileStream = System.IO.File.OpenRead(@"Cards\" + f.Name))
    {
        blockBlob.UploadFromStream(fileStream);
        WriteLine($"Uploading: '{f.Name}' which " +
                  $"is {fileStream.Length} bytes.");
    }
    numberOfCards++;
}
WriteLine($"Uploaded {numberOfCards.ToString()} cards.");
WriteLine();
```

12. Now that the images are uploaded, check that all went well. After the code from step 11, add this code to list the blobs stored in the newly created Azure storage container, named carddeck:

```csharp
numberOfCards = 0;
foreach (IListBlobItem item in container.ListBlobs(null, false))
{
    if (item.GetType() == typeof(CloudBlockBlob))
    {
        CloudBlockBlob blob = (CloudBlockBlob)item;
        WriteLine($"Card image url '{blob.Uri}' with length " +
                  $"of {blob.Properties.Length}");
    }
    numberOfCards++;
}
WriteLine($"Listed {numberOfCards.ToString()} cards.");
```

Now, if desired, you can delete the images that were just uploaded. This is really to show an example of how you can delete the blob files from your container programmatically.

WriteLine("Enter Y to delete listed cards, press enter to skip deletion:");if (ReadLine() == "Y"){numberOfCards = 0;foreach (IListBlobItem item in container.ListBlobs(null, false)){CloudBlockBlob blob = (CloudBlockBlob)item;CloudBlockBlob blockBlobToDelete = container.GetBlockBlobReference(blob.Name);blockBlobToDelete.Delete();WriteLine($"Deleted: '{blob.Name}' which was {blob.Name.Length} bytes.");numberOfCards++;}WriteLine($"Deleted {numberOfCards.ToString()} cards.");} - Run the console application and review the output. You should see something like what is shown in Figure 16‐9

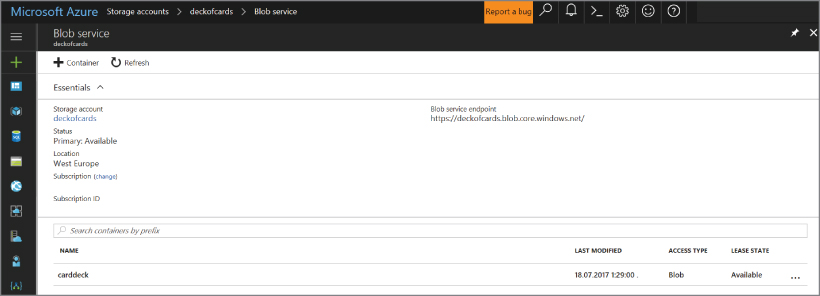

. Then access the Microsoft Azure management console and look on the container page for the newly created container named, for example,

carddeckas shown in Figure 16‐10 . Click on the container to view its contents.

How It Works

It is possible to create a Microsoft Azure storage account programmatically, but the security aspects of doing so are relatively complex. That step is therefore performed directly in the Microsoft Azure management portal instead. Once an Azure storage account is created, you can create multiple containers within it. In this example, you created a container called carddeck. There is a limit on the number of storage accounts per Microsoft Azure subscription, but no limit on the number of containers within a storage account. You can create as many containers as you want, but keep in mind that the storage you consume comes with a cost.

The code is split into four sections: create the container, upload the images to the container, list the blobs in the container, and optionally delete the contents of the container. The first action taken was to set up the try{}…catch{} framework for the console application. This is a good practice because uncaught or unhandled exceptions terminate the process (EXE), which should always be avoided. The first catch() expression handles StorageException, which captures exceptions thrown specifically from methods within the Microsoft.WindowsAzure.Storage namespace.

```csharp
catch (StorageException ex)
```

Then there is a catch-all expression that handles all other unexpected exceptions and writes their messages to the console.

```csharp
catch (Exception ex)
```

The first line within the try{} code block creates a CloudStorageAccount object from the connection string added to the App.config file. This does not create the storage account itself, which already exists.

```csharp
CloudStorageAccount storageAccount = CloudStorageAccount.Parse(
    ConfigurationManager.AppSettings["StorageConnectionString"]);
```
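CloudStorageAccount.Parse() does the work of interpreting the connection string. To show what that string contains, here is a simplified, hypothetical parser that handles only the three settings used in App.config. It also derives the default public blob endpoint from the account name. It is not the SDK's implementation, so use CloudStorageAccount.Parse() in real code.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: handles just DefaultEndpointsProtocol, AccountName, and AccountKey.
public static class StorageConnectionStringSketch
{
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (string part in connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            // Split on the FIRST '=' only: Base64 account keys usually end in '=' padding.
            int eq = part.IndexOf('=');
            if (eq <= 0) throw new FormatException($"Invalid setting: '{part}'");
            settings[part.Substring(0, eq).Trim()] = part.Substring(eq + 1).Trim();
        }
        return settings;
    }

    // Default public-Azure blob endpoint, for example https://deckofcards.blob.core.windows.net
    public static Uri BlobEndpoint(IDictionary<string, string> settings)
    {
        string protocol = settings["DefaultEndpointsProtocol"];
        string account = settings["AccountName"];
        return new Uri(protocol + "://" + account + ".blob.core.windows.net");
    }
}
```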

The App.config file contains the storage account name and the secret storage account key, which is needed to perform administrative actions on the Azure storage account. Next, you create a client for the storage account's Blob service. The code then gets a reference to the specific container named carddeck.

```csharp
CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
CloudBlobContainer container = blobClient.GetContainerReference("carddeck");
```
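The container naming rules mentioned in step 10 can be checked before any request is sent. This turns the HTTP 400 error into an immediate, descriptive failure. The sketch below encodes the documented rules as a regular expression: 3 to 63 characters; lowercase letters, numbers, and hyphens only; starting and ending with a letter or number; and no consecutive hyphens. It also builds the public URL of a blob. The ContainerNames class is hypothetical and not part of the Azure Storage SDK.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical client-side check of the Azure blob container naming rules.
public static class ContainerNames
{
    // First char alphanumeric, then 2-62 more chars; each hyphen must be followed by a letter or digit,
    // so names cannot end with "-" or contain "--". \z anchors at the true end of the string.
    private static readonly Regex Rule = new Regex(@"^[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}\z");

    public static bool IsValid(string name) => name != null && Rule.IsMatch(name);

    // Public blobs are addressed as https://<account>.blob.core.windows.net/<container>/<blob>
    public static Uri BlobUri(string accountName, string container, string blobName)
    {
        if (!IsValid(container)) throw new ArgumentException($"'{container}' is not a valid container name.");
        return new Uri($"https://{accountName}.blob.core.windows.net/{container}/{Uri.EscapeDataString(blobName)}");
    }
}
```

With this check, a name such as Cards (uppercase) or card--deck is rejected locally, before GetContainerReference() and CreateIfNotExists() ever contact the service.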

Next, the container.CreateIfNotExists() method is called. If the container is created, meaning it did not already exist, the method returns true and that information is written to the console. Otherwise, if the container already exists, the method returns false.

```csharp
if (container.CreateIfNotExists())
{
    …
}
…
```

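Containers can be private or public. For this example, the container is public, which means that no access key is required to read the blobs it contains. The Blob access level used here allows anonymous reads of individual blobs, but not anonymous listing of the container. The container is made public by executing this code: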

```csharp
container.SetPermissions(new BlobContainerPermissions
    { PublicAccess = BlobContainerPublicAccessType.Blob });
```

At this point the container is created and publicly accessible, but it is empty. Using the System.IO classes DirectoryInfo and FileInfo, you created a foreach loop that adds each of the card images to the carddeck storage container. The GetBlockBlobReference() method returns a reference to a blob in the container with the specific image name. Then, using the filename and path, the System.IO.File.OpenRead() method opens the actual file as a FileStream, and its contents are uploaded to the container via the UploadFromStream() method.

```csharp
CloudBlockBlob blockBlob = container.GetBlockBlobReference(f.Name);
using (var fileStream = System.IO.File.OpenRead(@"Cards\" + f.Name))
{
    blockBlob.UploadFromStream(fileStream);
}
```

All of the files in the Cards directory are looped through and uploaded to the container. Next, calling the ListBlobs() method on the same container object returns the existing blobs as a sequence of IListBlobItem objects. You then loop through the list and write each item to the console.

```csharp
foreach (IListBlobItem item in container.ListBlobs(null, false))
{
    if (item.GetType() == typeof(CloudBlockBlob))
    {
        CloudBlockBlob blob = (CloudBlockBlob)item;
        WriteLine($"Card image url '{blob.Uri}' with length " +
                  $"of {blob.Properties.Length}");
    }
    numberOfCards++;
}
```

A storage account can hold blobs, tables, queues, and files. Even a blob container listing can return several kinds of items: block blobs, page blobs, append blobs, and, because ListBlobs(null, false) does not flatten the listing, virtual directories. Therefore, before casting an item to CloudBlockBlob, it is important to confirm that the item is indeed a CloudBlockBlob. Other types to check for are CloudPageBlob, CloudAppendBlob, and CloudBlobDirectory.

To delete the blobs in the container, the list of blobs is first retrieved in the same manner as when writing them to the console. The difference is that GetBlockBlobReference(blob.Name) is called to get a reference to each specific blob, and then the Delete() method is called on that blob.

```csharp
CloudBlockBlob blockBlobToDelete = container.GetBlockBlobReference(blob.Name);
blockBlobToDelete.Delete();
```

Now that the Microsoft Azure storage account and container are created and loaded with the images of a 52‐card deck, you can create an ASP.NET Web Site to reference the Microsoft Azure storage container.

CREATING AN ASP.NET 4.7 WEB SITE THAT USES THE STORAGE CONTAINER

Up to now there has not been any in-depth examination of what a web application is, nor any discussion of the fundamental aspects of ASP.NET. This section provides some insight into these topics.

A web application causes a web server to send HTML code to a client. That code is displayed in a web browser such as Microsoft Edge or Google Chrome. When a user enters a URL in the browser, an HTTP request is sent to the web server. The HTTP request contains the name of the requested file along with additional information, such as a string identifying the client application (the browser), the languages that the client supports, and additional data belonging to the request. The web server returns an HTTP response that contains HTML code, which the web browser interprets to display text boxes, buttons, and lists to the user.
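To see what actually travels between browser and server, the following teaching sketch performs one HTTP request/response round trip over a raw TCP connection on the local machine. The request line names the requested file. Headers such as User-Agent and Accept-Language carry the additional information described above, and the response carries the HTML. The HttpRoundTrip class and the SketchBrowser user-agent string are hypothetical. Real web servers such as IIS do much more, including persistent connections, chunked transfer, error handling, and security.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Teaching sketch of a single HTTP/1.1 request/response over a local TCP connection.
public static class HttpRoundTrip
{
    public static string Run()
    {
        var listener = new TcpListener(IPAddress.Loopback, 0); // port 0: let the OS pick a free port
        listener.Start();
        int port = ((IPEndPoint)listener.LocalEndpoint).Port;

        // The "web server": read the request, answer with a small HTML page.
        Task server = Task.Run(() =>
        {
            using (TcpClient connection = listener.AcceptTcpClient())
            using (NetworkStream stream = connection.GetStream())
            {
                var reader = new StreamReader(stream, Encoding.ASCII);
                string requestLine = reader.ReadLine();              // "GET /default.cshtml HTTP/1.1"
                while (!string.IsNullOrEmpty(reader.ReadLine())) { } // skip headers up to the blank line
                string path = requestLine.Split(' ')[1];
                string html = "<html><body>You asked for " + path + "</body></html>";
                string response = "HTTP/1.1 200 OK\r\n" +
                                  "Content-Type: text/html; charset=utf-8\r\n" +
                                  "Content-Length: " + Encoding.UTF8.GetByteCount(html) + "\r\n" +
                                  "Connection: close\r\n\r\n" + html;
                byte[] bytes = Encoding.UTF8.GetBytes(response);
                stream.Write(bytes, 0, bytes.Length);
            }
        });

        try
        {
            // The "browser": the request names the file and identifies the client and its languages.
            using (var client = new TcpClient("127.0.0.1", port))
            using (NetworkStream stream = client.GetStream())
            {
                string request = "GET /default.cshtml HTTP/1.1\r\n" +
                                 "Host: localhost\r\n" +
                                 "User-Agent: SketchBrowser/1.0\r\n" +
                                 "Accept-Language: en-US\r\n\r\n";
                byte[] bytes = Encoding.ASCII.GetBytes(request);
                stream.Write(bytes, 0, bytes.Length);
                client.Client.Shutdown(SocketShutdown.Send);        // signal that the request is complete
                string response = new StreamReader(stream, Encoding.UTF8).ReadToEnd(); // server closes when done
                server.Wait();
                return response;
            }
        }
        finally
        {
            listener.Stop();
        }
    }
}
```

The returned string begins with the status line HTTP/1.1 200 OK, followed by the response headers, a blank line, and the HTML body that a browser would render.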

ASP.NET is a technology for dynamically creating web pages with server-side code. Developing these web pages is in many ways similar to developing client-side Windows programs. Instead of dealing directly with the HTTP request and response and manually creating HTML code to send to the client, you can use controls such as TextBox, Label, ComboBox, and Calendar, which create the HTML code for you.

On the client system, an ASP.NET web application requires only a web browser. You can use Internet Explorer, Edge, Chrome, Firefox, or any other web browser that supports HTML. The client system doesn’t require .NET to be installed.

On the server system, the ASP.NET runtime is needed. If Internet Information Services (IIS) is installed on the system, the ASP.NET runtime is configured with the server when the .NET Framework is installed. During development, there’s no need to work with a full IIS installation, because Visual Studio includes its own development web server (IIS Express in Visual Studio 2017) that you can use for testing and debugging the application.

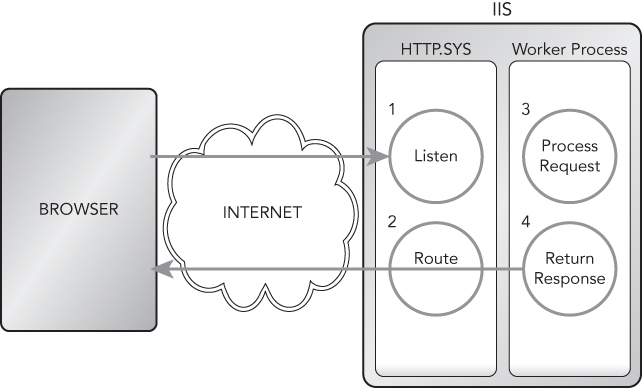

To understand how the ASP.NET runtime goes into action, consider a typical web request from a browser (see Figure 16-11). The client requests a file, such as default.aspx or default.cshtml, from the server. ASP.NET Web Forms pages usually have the file extension .aspx, .cshtml is used for Razor-based Web Sites, and requests to ASP.NET MVC applications have no specific file extension. Because these file extensions are registered with IIS or known by the development web server, the ASP.NET runtime and the ASP.NET worker process enter the picture. The IIS worker process is named w3wp.exe and hosts your application on the web server. With the first request to the default.cshtml file, the ASP.NET parser starts, and the compiler compiles the file, together with the C# code associated with it, into an assembly. The JIT compiler of the .NET runtime then compiles the assembly's code to native code, and an instance of the page class handles the request and returns HTML to the client. Then the page object is destroyed. The assembly is kept for subsequent requests, though, so it is not necessary to compile it again.

Now that you have a basic understanding of what web applications and ASP.NET are, perform the steps in the following Try It Out.

TRY IT OUT Create an ASP.NET 4.7 Web Site That Deals Two Hands of Cards

Again, you will use Visual Studio 2017, but this time you create an ASP.NET Web Site that requests the names of two players, and then when the page is submitted, two hands of cards are dealt. Those cards are downloaded from the Microsoft Azure storage container created earlier, and the cards are displayed on the web page.

1. Create a new Web Site project by selecting File ➪ New ➪ Web Site… within Visual Studio. In the New Web Site dialog box (see Figure 16-12), select the category Visual C# and then select the ASP.NET Empty Web Site template. Name the Web Site Ch16Ex02.

2. Add an ASP.NET folder named App_Code by right-clicking on the Ch16Ex02 Web Site and then selecting Add ➪ Add ASP.NET Folder ➪ App_Code.

3. Download the sample code for this exercise from the download site and place the following class files into the App_Code folder you just created. Once they are downloaded, right-click on the App_Code folder, select Add ➪ Existing Item…, and select the seven classes from the downloaded example:

   - Card.cs
   - Cards.cs
   - Deck.cs
   - Game.cs
   - Player.cs
   - Rank.cs
   - Suit.cs

NOTE

The classes in step 3 are very similar to those used in previous examples. Only a few modifications were made, such as removing the WriteLine() and ReadLine() calls and some unused methods. Look in the Card.cs class, and you will see a new constructor that contains the link to the card image.


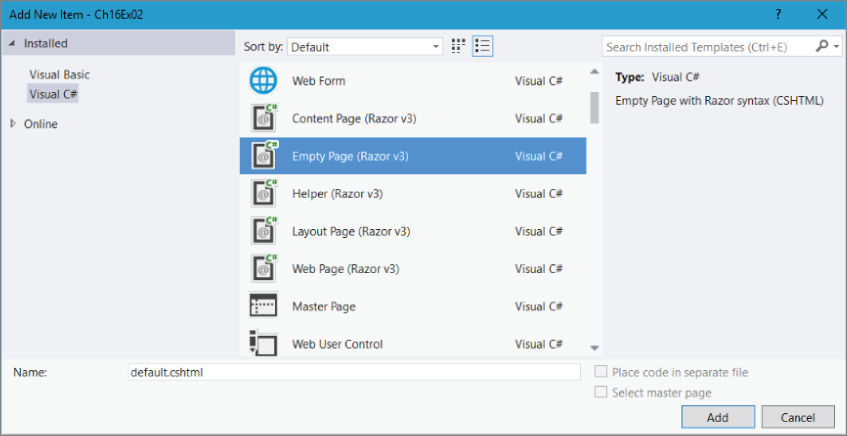

Add a

default.cshtmlRazor v3 file to the project by right‐clicking on the CH16Ex02 solutions, and then select Add New Item… Visual C#

Visual C#  Empty Page (Razor v3) as shown in Figure 16‐13

.

Empty Page (Razor v3) as shown in Figure 16‐13

. - Open the

default.cshtmlfile and place the following code at the top of the page.@{Player[] players = new Player[2];var player1 = Request["PlayerName1"];var player2 = Request["PlayerName2"];if(IsPost){players[0] = new Player(player1);players[1] = new Player(player2);Game newGame = new Game();newGame.SetPlayers(players);newGame.DealHands();}} - Next, add this syntax under the code you added in step 5. Pay close attention to the

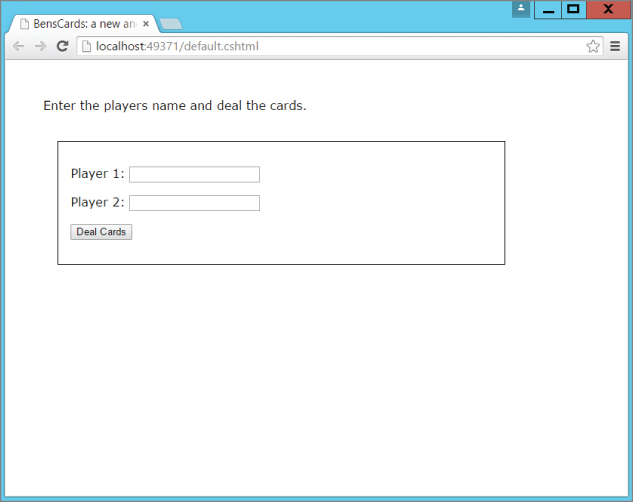

@card.imageLink, which is the newly added parameter to theCardclass.<html lang="en"><head><meta charset="utf-8"/><style>body {font-family:Verdana; margin-left:50px;margin-top:50px;}div {border: 1px solid black; width:40%;margin:1.2em;padding:1em;}</style><title>BensCards: a new and exciting card game.</title></head><body>@if(IsPost){<label id="labelGoal">Which player has the best hand.</label><br/><div><p><label id="labelPlayer1">Player1: @player1</label> </p>@foreach(Card card in players[0].PlayHand){<img width="75px" height="100px" alt="cardImage"src="https://deckofcards.blob.core.windows.net/carddeck/@card.imageLink"/>}</div><div><p><label id="labelPlayer1">Player2: @player2</label> </p>@foreach(Card card in players[1].PlayHand){<img width="75px" height="100px" alt="cardImage"src="https://deckofcards.blob.core.windows.net/carddeck/@card.imageLink"/>}</div>}else{<label id="labelGoal">Enter the players name and deal the cards.</label><br/><br/><form method="post"><div><p>Player 1: @Html.TextBox("PlayerName1")</p><p>Player 2: @Html.TextBox("PlayerName2")</p><p><input type="submit" value="Deal Cards"class="submit"></p></div></form>}</body></html> - Now, run the Web Site by pressing F5 or the Run button within Visual Studio. A browser will start up and you should see a page rendered similar to the one illustrated in Figure 16‐14 . First you are prompted to enter in the Player names. Enter any two names.

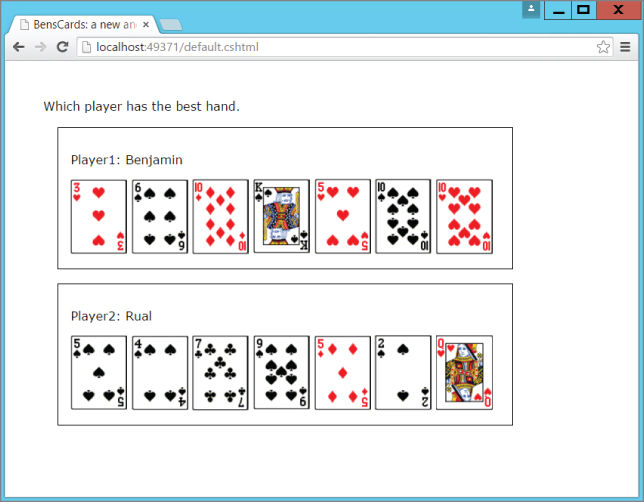

- Press the Deal Cards button, and a hand of cards is dealt to each player. You should see something similar to what is shown in Figure 16‐15.

You have now created a simple ASP.NET Web Site using Razor v3. The pages it renders reference the Azure storage account and container, so the browser loads the images of the playing cards directly from Azure storage.

How It Works

You certainly noticed a new technology named Razor in the previous exercise. Razor is a view engine that was introduced with ASP.NET MVC 3; Razor v3, which this exercise uses, ships with Visual Studio 2013. As you have seen, Razor uses C# (Visual Basic is also supported) placed within a @{ … } code block, which is compiled and executed when a browser requests the page. Take a look at this code:

```cshtml
@{
    Player[] players = new Player[2];
    var player1 = Request["PlayerName1"];
    var player2 = Request["PlayerName2"];
    if (IsPost)
    {
        players[0] = new Player(player1);
        players[1] = new Player(player2);
        Game newGame = new Game();
        newGame.SetPlayers(players);
        newGame.DealHands();
    }
}
```

The code is encapsulated within a @{ … } code block and is compiled and executed by the Razor engine when the page is accessed. At that point an array of type Player[] is created, and the values sent with the request are read into the two variables player1 and player2. Request["PlayerName1"] looks for a matching name in the query string, then in the posted form fields, then in cookies and server variables, so it picks up the text box values that the form submits. If the page is not a postback, which means the page was simply requested (GET) rather than submitted by a button click (POST), the code within the if(IsPost){} code block does not execute. If the request to the page is a POST, which happens when you click the Deal Cards button, the two Player objects are instantiated, a new game is started, and the hands of cards are dealt.
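To make these two checks concrete outside ASP.NET, here is a minimal C# sketch. SimulatedRequest is a hypothetical stand‐in, not an ASP.NET type: its indexer mimics the search order of Request[key] described above, IsPost is true when the HTTP method is POST, and FromFormPost builds a request from the application/x-www-form-urlencoded body that a browser sends by default when a form with method="post" is submitted.

```csharp
using System;
using System.Collections.Generic;
using System.Net;

// Hypothetical stand-in for the ASP.NET request object; for illustration only.
public class SimulatedRequest
{
    public string HttpMethod { get; set; } = "GET";

    // ASP.NET request collections are case-insensitive, so these are too.
    public Dictionary<string, string> QueryString { get; } = NewCollection();
    public Dictionary<string, string> Form { get; } = NewCollection();
    public Dictionary<string, string> Cookies { get; } = NewCollection();
    public Dictionary<string, string> ServerVariables { get; } = NewCollection();

    private static Dictionary<string, string> NewCollection() =>
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    // Same idea as IsPost in the page: the request uses the POST method.
    public bool IsPost => string.Equals(HttpMethod, "POST", StringComparison.OrdinalIgnoreCase);

    // Mimics the search order of Request[key]:
    // query string, then form fields, then cookies, then server variables.
    public string this[string key]
    {
        get
        {
            foreach (var collection in new[] { QueryString, Form, Cookies, ServerVariables })
            {
                string value;
                if (collection.TryGetValue(key, out value))
                    return value;
            }
            return null; // Not found, as on the first GET of default.cshtml.
        }
    }

    // Builds a POST request from a body such as "PlayerName1=Ann&PlayerName2=Bob".
    // A repeated field name simply overwrites the earlier value in this sketch.
    public static SimulatedRequest FromFormPost(string body)
    {
        var request = new SimulatedRequest { HttpMethod = "POST" };
        foreach (var pair in body.Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries))
        {
            int equals = pair.IndexOf('=');
            string name = equals < 0 ? pair : pair.Substring(0, equals);
            string value = equals < 0 ? "" : pair.Substring(equals + 1);
            // UrlDecode turns '+' into a space and decodes %XX escapes.
            request.Form[WebUtility.UrlDecode(name)] = WebUtility.UrlDecode(value);
        }
        return request;
    }
}
```

On the first visit the page sees a GET with no player names, so new SimulatedRequest()["PlayerName1"] is null and IsPost is false. After Deal Cards, SimulatedRequest.FromFormPost("PlayerName1=Ann&PlayerName2=Bob+Smith") reports IsPost as true and returns "Bob Smith" for PlayerName2.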

The initial request to the default.cshtml file executes this code path because it is not a POST:

```cshtml
else
{
    <label id="labelGoal">Enter the player names and deal the cards.</label><br /><br />
    <form method="post">
        <div>
            <p>Player 1: @Html.TextBox("PlayerName1")</p>
            <p>Player 2: @Html.TextBox("PlayerName2")</p>
            <p><input type="submit" value="Deal Cards" class="submit" /></p>
        </div>
    </form>
}
```

The code renders a form containing two HTML text boxes, created with Html.TextBox, that request the player names, plus a Deal Cards button. Once the names are entered, pressing the Deal Cards button sends a POST back to default.cshtml, and the following code path is executed. It loops through the cards dealt to each player of the game.

```cshtml
@if (IsPost)
{
    <label id="labelGoal">Which player has the best hand?</label><br />
    <div>
        <p><label id="labelPlayer1">Player1: @player1</label></p>
        @foreach (Card card in players[0].PlayHand)
        {
            <img width="75" height="100" alt="cardImage"
                 src="https://deckofcards.blob.core.windows.net/carddeck/@card.imageLink" />
        }
    </div>
    <div>
        <p><label id="labelPlayer2">Player2: @player2</label></p>
        @foreach (Card card in players[1].PlayHand)
        {
            <img width="75" height="100" alt="cardImage"
                 src="https://deckofcards.blob.core.windows.net/carddeck/@card.imageLink" />
        }
    </div>
}
```

Notice that both foreach loops reference the Azure storage account URL and the container created in the previous exercise. Each image's src attribute combines that base URL with the card's imageLink value.

NOTE

The Azure storage account URL and container are examples only. Replace deckofcards with the name of your Azure storage account and carddeck with the name of your Azure storage container.
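Each image URL follows the Azure Blob storage pattern https://{account}.blob.core.windows.net/{container}/{blob}. A small helper keeps the account and container names in one place instead of repeating them in both foreach loops. The BlobUrl class below is a hypothetical sketch, not part of the exercise code or the Azure SDK.

```csharp
using System;
using System.Linq;

// Hypothetical helper; not part of the exercise code or the Azure SDK.
public static class BlobUrl
{
    // Returns https://{account}.blob.core.windows.net/{container}/{blobName},
    // the public endpoint format for a blob in an Azure storage account.
    public static string Build(string account, string container, string blobName)
    {
        if (string.IsNullOrWhiteSpace(account))
            throw new ArgumentException("A storage account name is required.", nameof(account));
        if (string.IsNullOrWhiteSpace(container))
            throw new ArgumentException("A container name is required.", nameof(container));
        if (string.IsNullOrWhiteSpace(blobName))
            throw new ArgumentException("A blob name is required.", nameof(blobName));

        // Escape each segment of the blob name but keep the '/' separators,
        // which Azure treats as virtual folders inside the container.
        string escapedBlob = string.Join("/",
            blobName.Split('/').Select(segment => Uri.EscapeDataString(segment)));

        return $"https://{account}.blob.core.windows.net/{container}/{escapedBlob}";
    }
}
```

For example, BlobUrl.Build("deckofcards", "carddeck", "2H.png"), where 2H.png is an invented file name, returns https://deckofcards.blob.core.windows.net/carddeck/2H.png. In a Web Site project, a class like this could be placed in the App_Code folder so that the Razor page can call it inside the foreach loops.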

EXERCISES

- 16.1 What information would you need to pass between the browser and the server to play the card game?

- 16.2 As web applications are stateless, describe some ways to store this information so it can be included with a web request.

Answers to the exercises can be found in the Appendix.

WHAT YOU LEARNED IN THIS CHAPTER

| TOPIC | KEY CONCEPTS |
| --- | --- |
| Defining the cloud | The cloud is an elastic structure of commoditized computer hardware for running programs. These programs run on the IaaS, PaaS, or SaaS service model in a public, private, or hybrid cloud. |
| Defining the cloud optimized stack | The cloud optimized stack is a concept used to refer to code that can handle high throughput, has a small footprint, can run side‐by‐side with other applications on the same server, and is cross‐platform enabled. |
| Creating a storage account | A storage account can contain an unlimited number of containers. The storage account is the mechanism for controlling access to the containers created within it. |
| Creating a storage container with C# | A storage container exists within a storage account and contains the blobs, files, or data, which can be accessed from anywhere with an Internet connection, subject to the container's access settings. |
| Referencing the storage container from ASP.NET Razor | It is possible to reference a storage container from C# code in a Razor page. You use the storage account name, the container name, and the name of the blob, file, or data you need to access. |