16

Fractals and Statistical Growth Models

16.1 Fractional Dimension (Math)

Benoit Mandelbrot, who first studied fractional-dimension figures with supercomputers at IBM Research, gave them the name fractals (Mandelbrot, 1982). Some geometric objects, such as Koch curves, are exact fractals with the same dimension for all their parts. Other objects, such as bifurcation curves, are statistical fractals in which elements of chaos occur and the dimension can be defined only for each part of the object, or on the average.

Consider an abstract object such as the density of charge within an atom. There are an infinite number of ways to measure the “size” of this object. For example, each moment 〈rn〉 is a measure of the size, and there is an infinite number of such moments. Likewise, when we deal with complicated objects, there are different definitions of dimension and each may give a somewhat different value.

Our first definition of the dimension df, the Hausdorff–Besicovitch dimension, is based on our knowledge that a line has dimension 1, a triangle has dimension 2, and a cube has dimension 3. It seems perfectly reasonable to ask if there is some mathematical formula that agrees with our experience with regular objects, yet can also be used for determining fractional dimensions. For simplicity, let us consider objects that have the same length L on each side, as do equilateral triangles and squares, and that have uniform density. We postulate that the dimension of an object is determined by the dependence of its total mass upon its length:

where the power df is the fractal dimension. As you may verify, this rule works with the 1D, 2D, and 3D regular figures in our experience, so it is a reasonable to try it elsewhere. When we apply (16.1) to fractal objects, we end up with fractional values for df. Actually, we will find it easier to determine the fractal dimension not from an object’s mass, which is extensive (depends on size), but rather from its density, which is intensive. The density is defined as mass/length for a linear object, as mass/area for a planar object, and as mass/volume for a solid object. That being the case, for a planar object we hypothesize that

16.2 The Sierpiński Gasket (Problem 1)

To generate our first fractal (Figure 16.1), we play a game of chance in which we place dots at points picked randomly within a triangle (Bunde and Havlin, 1991). Here are the rules (which you should try out in the margins now).

- Draw an equilateral triangle with vertices and coordinates:

(16.3)

- Place a dot at an arbitrary point P = (x0, y0) within this triangle.

- Find the next point by selecting randomly the integer 1, 2, or 3:

- a) If 1, place a dot halfway between P and vertex 1.

- b) If 2, place a dot halfway between P and vertex 2.

- c) If 3, place a dot halfway between P and vertex 3.

- Repeat the process using the last dot as the new P.

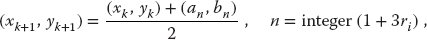

Mathematically, the coordinates of successive points are given by the formulas

where ri is a random number between 0 and 1 and where the integer function outputs the closest integer smaller than or equal to the argument. After 15 000 points, you should obtain a collection of dots like those in Figure 16.1a.

16.2.1 Sierpiński Implementation

Write a program to produce a Sierpiński gasket. Determine empirically the fractal dimension of your figure. Assume that each dot has mass 1 and that p = CLα. (You can have the computer do the counting by defining an array box of all 0 values and then change a 0 to a 1 when a dot is placed there.)

Figure 16.1 (a) A statistical fractal Sierpiński gasket containing 10 000 points. Note the self-similarity at different scales. (b) A geometric Sierpiński gasket constructed by successively connecting the midpoints of the sides of each equilateral triangle. The first three steps in the process are labeled as A, B, C.

16.2.2 Assessing Fractal Dimension

The topology in Figure 16.1 was first analyzed by the Polish mathematician Sierpiński. Observe that there is the same structure in a small region as there is in the entire figure. In other words, if the figure had infinite resolution, any part of the figure could be scaled up in size and would be similar to the whole. This property is called self-similarity.

We construct a nonstatistical form of the Sierpiński gasket by removing an inverted equilateral triangle from the center of all filled equilateral triangles to create the next figure (Figure 16.1b). We then repeat the process ad infinitum, scaling up the triangles so each one has side r = 1 after each step. To see what is unusual about this type of object, we look at how its density (mass/area) changes with size, and then apply (16.2) to determine its fractal dimension. Assume that each triangle has mass m and assign unit density to the single triangle:

Next, for the equilateral triangle with side L = 2, the density is

We see that the extra white space in Figure 16.1b leads to a density that is 3/4 that of the previous stage. For the structure in Figure 16.1c, we obtain

We see that as we continue the construction process, the density of each new structure is 3/4 that of the previous one. Interesting. Yet in (16.2) we derived that

Equation 16.8 implies that a plot of the logarithm of the density ρ vs. the logarithm of the length L for successive structures yields a straight line of slope

As applied to our problem,

As is evident in Figure 16.1, as the gasket grows larger (and consequently more massive), it contains more open space. So despite the fact that its mass approaches infinity as L → ∞, its density approaches zero! And because a 2D figure like a solid triangle has a constant density as its length increases, a 2D figure has a slope equal to 0. Because the Sierpiński gasket has a slope df − 2  − 0.415 04, it fills space to a lesser extent than a 2D object but more so than a ID object; it is a fractal with dimension 1.6.

− 0.415 04, it fills space to a lesser extent than a 2D object but more so than a ID object; it is a fractal with dimension 1.6.

16.3 Growing Plants (Problem 2)

It seems paradoxical that natural processes subject to chance can produce objects of high regularity and symmetry. For example, it is hard to believe that something as beautiful and graceful as a fern (Figure 16.2a) has random elements in it. Nonetheless, there is a clue here in that much of the fern’s beauty arises from the similarity of each part to the whole (self-similarity), with different ferns similar but not identical to each other. These are characteristics of fractals. Your problem is to discover if a simple algorithm including some randomness can draw regular ferns. If the algorithm produces objects that resemble ferns, then presumably you have uncovered mathematics similar to that responsible for the shapes of ferns.

16.3.1 Self-Affine Connection (Theory)

In (16.4), which defines mathematically how a Sierpiński gasket is constructed, a scalingfactor of 1/2 is part of the relation of one point to the next. A more general transformation of a point P = (x, y) into another point P′ = (x′, y′) via scaling is

Figure 16.2 (a) A fractal fern generated by 30 000 iterations of the algorithm (16.14). Enlarging this fern shows that each frond with a similar structure. (b) A fractal tree created with the simple algorithm (16.17).

If the scale factor s > 0, an amplification occurs, whereas if s < 0, a reduction occurs. In our definition (16.4) of the Sierpiński gasket, we also added in a constant an. This is a translation operation, which has the general form

Another operation, not used in the Sierpiński gasket, is a rotation by angle θ:

The entire set of transformations, scalings, rotations, and translations defines an affine transformation (affine denotes a close relation between successive points). The transformation is still considered affine even if it is a more general linear transformation with the coefficients not all related by a single θ (in that case, we can have contractions and reflections). What is important is that the object created with these rules turns out to be self-similar; each step leads to new parts of the object that bear the same relation to the ancestor parts as the ancestors did to theirs. This is what makes the object look similar at all scales.

16.3.2 Barnsley’s Fern Implementation

We obtain a Barnsley’s fern (Barnsley and Hurd, 1992) by extending the dots game to one in which new points are selected using an affine connection with some elements of chance mixed in:

To select a transformation with probability P, we select a uniform random number 0 ≤ r ≤ 1 and perform the transformation if r is in a range proportional to P:

The rules (16.14) and (16.15) can be combined into one:

Although (16.14) makes the basic idea clearer, (16.16) is easier to program, which we do in Listing 16.1.

The starting point in Barnsley’s fern (Figure 16.2) is (x1, y1) = (0.5,0.0), and the points are generated by repeated iterations. An important property of this fern is that it is not completely self-similar, as you can see by noting how different the stems and the fronds are. Nevertheless, the stem can be viewed as a compressed copy of a frond, and the fractal obtained with (16.14) is still self-affine, yet with a dimension that varies from part to part in the fern.

Listing 16.1 Fern3D.pysimulates the growth of ferns in 3D.

16.3.3 Self-Affinity in Trees Implementation

Now that you know how to grow ferns, look around and notice the regularity in trees (such as in Figure 16.2b). Can it be that this also arises from a self-affine structure? Write a program, similar to the one for the fern, starting at (x1, y1) = (0.5, 0.0) and iterating the following self-affine transformation:

16.4 Ballistic Deposition (Problem 3)

There are a number of physical and manufacturing processes in which particles are deposited on a surface and form a film. Because the particles are evaporated from a hot filament, there is randomness in the emission process yet the produced films turn out to have well-defined, regular structures. Again we suspect fractals. Your problem is to develop a model that simulates this growth process and compare your produced structures to those observed.

16.4.1 Random Deposition Algorithm

The idea of simulating random depositions was first reported by Vold (1959), which includes tables of random numbers used to simulate the sedimentation of moist spheres in hydrocarbons. We shall examine a method of simulation that results in the deposition shown in Figure 16.3 (Family and Vicsek, 1985).

Consider particles falling onto and sticking to a horizontal line of length L composed of 200 deposition sites. All particles start from the same height, but to simulate their different velocities, we assume they start at random distances from the left side of the line. The simulation consists of generating uniform random sites between 0 and L, and having a particle stick to the site on which it lands. Because a realistic situation may have columns of aggregates of different heights, the particle may be stopped before it makes it to the line, or it may bounce around until it falls into a hole. We therefore assume that if the column height at which the particle lands is greater than that of both its neighbors, it will add to that height. If the particle lands in a hole, or if there is an adjacent hole, it will fill up the hole. We speedup the simulation by setting the height of the hole equal to the maximum of its neighbors:

Figure 16.3 A simulation of the ballistic deposition of 20 000 particles onto a substrate of length 200. The vertical height increases in proportion to the length of deposition time, with the top being the final surface.

- Choose a random site r.

- Let the array hr be the height of the column at site r.

- Make the decision:

(16.18)

Our simulation is Fractals/Film.py with the essential loop:

The results of this type of simulation show several empty regions scattered throughout the line (Figure 16.3), which is an indication of the statistical nature of the process while the film is growing. Simulations by Fereydoon reproduced the experimental observation that the average height increases linearly with time and produced fractal surfaces. (You will be asked to determine the fractal dimension of a similar surface as an exercise.)

Exercise Extend the simulation of random deposition to two dimensions, so rather than making a line of particles we now deposit an entire surface.

16.5 Length of British Coastline (Problem 4)

In 1967, Mandelbrot (Mandelbrot, 1967) asked a classic question, “How long is the coast of Britain?” If Britain had the shape of Colorado or Wyoming, both of which have straight-line boundaries, its perimeter would be a curve of dimension 1 with finite length. However, coastlines are geographic not geometric curves, with each portion of the coast sometimes appearing self-similar to the entire coast. If the perimeter of the coast is in fact a fractal, then its length is either infinite or meaningless. Mandelbrot deduced the dimension of the west coast of Britain to be df = 1.25, which implies infinite length. In your problem we ask you to determine the dimension of the perimeter of one of our fractal simulations.

16.5.1 Coastlines as Fractals (Model)

The length of the coastline of an island is the perimeter of that island. While the concept of perimeter is clear for regular geometric figures, some thought is required to give it meaning for an object that may be infinitely self-similar. Let us assume that a map maker has a ruler of length r. If she walks along the coastline and counts the number of times N that she must place the ruler down in order to cover the coastline, she will obtain a value for the length L of the coast as Nr. Imagine now that the map maker keeps repeating her walk with smaller and smaller rulers. If the coast was a geometric figure or a rectifiable curve, at some point the length L would become essentially independent of r and would approach a constant. Nonetheless, as discovered empirically by Richardson (1961) for natural coastlines such as those of South Africa and Britain, the perimeter appears to be an unusual function of r:

where M and df are empirical constants. For a geometric figure or for Colorado, df = 1 and the length approaches a constant as r → 0. Yet for a fractal with df > 1, the perimeter L → ∞ as r → 0. This means that as a consequence of self-similarity, fractals may be of finite size but have infinite perimeters. Physically, at some point there may be no more details to discern as r → 0 (say, at the quantum or Compton size limit), and so the limit may not be meaningful.

16.5.2 Box Counting Algorithm

Consider a line of length L broken up into segments of length r (Figure 16.4a). The number of segments or “boxes” needed to cover the line is related to the size r of the box by

where C is a constant. A proposed definition of fractional dimension is the power of r in this expression as r → 0. In our example, it tells us that the line has dimension df = 1. If we now ask how many little circles of radius r it would take to cover or fill a circle of area A (Figure 16.4, middle), we will find that

as expected. Likewise, counting the number of little spheres or cubes that can be packed within a large sphere tells us that a sphere has dimension df = 3. In general, if it takes N little spheres or cubes of side r → 0 to cover some object, then the fractal dimension df can be deduced as

Figure 16.4 Examples of the use of “box” counting to determine fractal dimension. (a) In the top “boxes” are circles and the perimeter is being covered. In the bottom an entire figure is being covered. In (b) a “coastline” is being covered by boxes of two different sizes (scales). The fractal dimension can be deduced by recording the number of box of different scale needed to cover the figures.

Here s ∝ 1/r is called the scale in geography, so r → 0 corresponds to an infinite scale. To illustrate, you may be familiar with the low scale on a map being 10 000 m to a centimeter, while the high scale is 100 m to a centimeter. If we want the map to show small details (sizes), we need a map of high scale.

We will use box counting to determine the dimension of a perimeter, not of an entire figure. Once we have a value for df, we can determine a value for the length of the perimeter via (16.19). (If you cannot wait to see box counting in action, in the auxiliary online files you will find an applet Jfracdim that goes through all the steps of box counting before your eyes and even plots the results.)

16.5.3 Coastline Implementation and Exercise

Rather than ruin our eyes using a geographic map, we use a mathematical one, specifically, the top portion of Figure 16.3 that may look like a natural coastline. Determine df by covering this figure, or one you have generated, with a semitransparent piece of graph paper, 1) and counting the number of boxes containing any part of the coastline (Figures 16.4 and 16.5).

Figure 16.5 Fractal dimensions of a line, box, and coastline determined by box counting. The slope at vanishingly small scale determines the dimension.

- Print your coastline graph with the same physical scale (aspect ratio) for the vertical and horizontal axes. This is required because the graph paper you will use for box counting has square boxes and so you want your graph to also have the same vertical and horizontal scales. Place a piece of graph paper over your printout and look though the graph paper at your coastline. If you do not have a piece of graph paper available, or if you are unable to obtain a printout with the same aspect ratio for the horizontal and vertical axes, add a series of closely spaced horizontal and vertical lines to your coastline printout and use these lines as your graph paper. (Box counting should still be accurate if both your coastline and your graph paper have the same aspect ratios.)

- The vertical height in our printout was 17 cm, and the largest division on our graph paper was 1 cm. This sets the scale of the graph as 1 : 17, or s = 17 for the largest divisions (lowest scale). Measure the vertical height of your fractal, compare it to the size of the biggest boxes on your “piece” of graph paper, and thus determine your lowest scale.

- With our largest boxes of 1 cm × 1 cm, we found that the coastline passed through N = 24 large boxes, that is, that 24 large boxes covered the coastline at s = 17. Determine how many of the largest boxes (lowest scale) are needed to cover your coastline.

- With our next smaller boxes of 0.5 cm × 0.5 cm, we found that 51 boxes covered the coastline at a scale of s = 34. Determine how many of the midsize boxes (midrange scale) are needed to cover your coastline.

- With our smallest boxes of 1 mm × 1 mm, we found that 406 boxes covered the coastline at a scale of s = 170. Determine how many of the smallest boxes (highest scale) are needed to cover your coastline.

- Equation 16.24 tells us that as the box sizes get progressively smaller, we have

Clearly, only the relative scales matter because the proportionality constants cancel out in the ratio. A plot of log N vs. log s should yield a straight line. In our example we found a slope of df = 1.23. Determine the slope and thus the fractal dimension for your coastline. Although only two points are needed to determine the slope, use your lowest scale point as an important check. (Because the fractal dimension is defined as a limit for infinitesimal box sizes, the highest scale points are more significant.)

- As given by (16.19), the perimeter of the coastline

(16.25)

If we keep making the boxes smaller and smaller so that we are looking at the coastline at higher and higher scale and if the coastline is self-similar at all levels, then the scale s will keep getting larger and larger with no limits (or at least until we get down to some quantum limits). This means

(16.26)

Does your fractal implies an infinite coastline? Does it make sense that a small island like Britain, which you can walk around, has an infinite perimeter?

16.6 Correlated Growth, Forests, Films (Problem 5)

It is an empirical fact that in nature there is increased likelihood that a plant will grow if there is another one nearby (Figure 16.6a). This correlation is also valid for the simulation of surface films, as in the previous algorithm. Your problem is to include correlations in the surface simulation.

16.6.1 Correlated Ballistic Deposition Algorithm

A variation of the ballistic deposition algorithm, known as the correlated ballistic deposition, simulates mineral deposition onto substrates on which dendrites form (Tait et al., 1990). In Listing 16.2 we extend the previous algorithm to include the likelihood that a freshly deposited particle will attract another particle. We assume that the probability of sticking P depends on the distance d that the added particle is from the last one (Figure 16.6b):

Here η is a parameter and c is a constant that sets the probability scale.2) For our implementation we choose η = −2, which means that there is an inverse square attraction between the particles (decreased probability as they get farther apart).

Figure 16.6 (a) A view that might be seen in the undergrowth of a forest or after a correlated ballistic deposition. (b) The probability of particle i + 1 sticking in one column depends upon the distance d from the previously deposited particle i.

As in our study of uncorrelated deposition, a uniform random number in the interval [0, L] determines the column in which the particle will be deposited. We use the same rules about the heights as before, but now a second random number is used in conjunction with (16.27) to decide if the particle will stick. For instance, if the computed probability is 0.6 and if r < 0.6, the particle will be accepted (sticks), if r > 0.6, the particle will be rejected.

16.7 Globular Cluster (Problem 6)

Consider a bunch of grapes on an overhead vine. Your problem is to determine how its tantalizing shape arises. In a flash of divine insight, you realize that these shapes, as well as others such as those of dendrites, colloids, and thin-film structure, appear to arise from an aggregation process that is limited by diffusion.

16.7.1 Diffusion-Limited Aggregation Algorithm

A model of diffusion-limited aggregation (DLA) has successfully explained the relation between a cluster’s perimeter and mass (Witten and Sander, 1983). We start with a 2D lattice containing a seed particle in the middle, draw a circle around the particle, and place another particle on the circumference of the circle at some random angle. We then release the second particle and have it execute a random walk, much like the one we studied in Chapter 4, but restricted to vertical or horizontal jumps between lattice sites. This is a simulation of a type of Brownian motion related to diffusion. To make the model more realistic, we let the length of each step vary according to a random Gaussian distribution. If at some point during its random walk, the particle encounters another particle within one lattice spacing, they stick together and the walk terminates. If the particle passes outside the circle from which it was released, it is lost forever. The process is repeated as often as desired and results in clusters (Figure 16.7 and applet dla).

Figure 16.7 (a) A globular cluster of particles of the type that might occur in a colloid, (b) The applet Dla2en.html lets you watch these clusters grow. Here the cluster is at the center of the circle, and random walkers are started at random points around the circle.

Listing 16.2 Column.py simulates correlated ballistic deposition of minerals onto substrates on which dendrites form.

- Write a subroutine that generates random numbers with a Gaussian distribution. 3)

- Define a 2D lattice of points represented by the array

grid[400,400]with all elements initially zero. - Place the seed at the center of the lattice; that is, set

grid[199,199]=1. - Imagine a circle of radius 180 lattice spacings centered at

grid[199,199]. This is the circle from which we release particles. - Determine the angular position of the new particle on the circle’s circumference by generating a uniform random angle between 0 and 2π.

- Compute the x and y positions of the new particle on the circle.

- Determine whether the particle moves horizontally or vertically by generating a uniform random number 0 < rxy < 1 and applying the rule

(16.28)

- Generate a Gaussian-weighted random number in the interval [−∞, ∞]. This is the size of the step, with the sign indicating direction.

- We now know the total distance and direction the particle will move. It jumps one lattice spacing at a time until this total distance is covered.

- Before a jump, check whether a nearest-neighbor site is occupied:

- a) If occupied, the particle stays at its present position and the walk is over.

- b) If unoccupied, the particle jumps one lattice spacing.

- c) Continue the checking and jumping until the total distance is covered, until the particle sticks, or until it leaves the circle.

- Once one random walk is over, another particle can be released and the process repeated. This is how the cluster grows.

Because many particles are lost, you may need to generate hundreds of thousands of particles to form a cluster of several hundred particles.

16.7.2 Fractal Analysis of DLA or a Pollock

A cluster generated with the DLA technique is shown in Figure 16.7. We wish to analyze it to see if the structure is a fractal and, if so, to determine its dimension. (As an alternative, you may analyze the fractal nature of the Pollock painting in Figure 16.8, a technique used to determine the authenticity of this sort of art.) As a control, simultaneously analyze a geometric figure, such as a square or circle, whose dimension is known. The analysis is a variation of the one used to determine the length of the coastline of Britain.

- If you have not already done so, use the box counting method to determine the fractal dimension of a simple square.

- Draw a square of length L, small relative to the size of the cluster, around the seed particle. (Small might be seven lattice spacings to a side.)

- Count the number of particles within the square.

- Compute the density ρ by dividing the number of particles by the number of sites available in the box (49 in our example).

- Repeat the procedure using larger and larger squares.

- Stop when the cluster is covered.

- The (box counting) fractal dimension df is estimated from a log–log plot of the density ρ vs. L. If the cluster is a fractal, then (16.2) tells us that

and the graph should be a straight line of slope df − 2.

and the graph should be a straight line of slope df − 2.

The graph we generated had a slope of −0.36, which corresponds to a fractal dimension of 1.66. Because random numbers are involved, the graph you generate will be different, but the fractal dimension should be similar. (Actually, the structure is multifractal, and so the dimension also varies with position.)

Figure 16.8 Number 8 by the American painter Jackson Pollock. (Used with permission, Neuberger Museum, State University of New York.) Some researchers claim that Pollock’s paintings exhibit a characteristic fractal structure, while some other researchers question this (Kennedy, 2006). See if you can determine the fractal dimensions within this painting.

16.8 Fractals in Bifurcation Plot (Problem 7)

Recollect the project involving the logistics map where we plotted the values of the stable population numbers vs. the growth parameter μ. Take one of the bifurcation graphs you produced and determine the fractal dimension of different parts of the graph by using the same technique that was applied to the coastline of Britain.

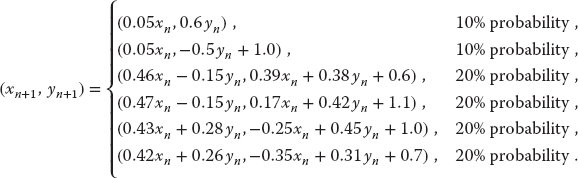

16.9 Fractals from Cellular Automata

Cellular automata were developed by von Neumann and Ulam in the early 1940s (von Neumann was also working on the theory behind modern computers then). Though very simple, cellular automata have found applications in many branches of science (Peitgen et al., 1994; Sipper, 1996). Their classic definition is (Barnsley and Hurd, 1992):

A cellular automaton is a discrete dynamical system in which space, time, and the states of the system are discrete. Each point in a regular spatial lattice, called a cell, can have any one of a finite number of states, and the states of the cells in the lattice are updated according to a local rule. That is, the state of a cell at a given time depends only on its own state one time step previously, and the states of its nearby neighbors at the previous time step. All cells on the lattice are updated synchronously, and so the state of the entice lattice advances in discrete time steps.

A cellular automaton in two dimensions consists of a number of square cells that grow upon each other. A famous one is Conway’s Game of Life, which we implement in Listing 16.3. In this, cells with value 1 are alive, while cells with value 0 are dead. Cells grow according to the following rules:

- If a cell is alive and if two or three of its eight neighbors are alive, then the cell remains alive.

- If a cell is alive and if more than three of its eight neighbors are alive, then the cell dies because of overcrowding.

- If a cell is alive and only one of its eight neighbors is alive, then the cell dies of loneliness.

- If a cell is dead and more than three of its neighbors are alive, then the cell revives.

A variation on the Game of Life is to include a “rule one out of eight” that a cell will be alive if exactly one of its neighbors is alive, otherwise the cell will remain unchanged.

Listing 16.3 Gameoflife.py is an extension of Conway’s Game of Life in which cells always revive if one out of eight neighbors is alive.

Early studies of the statistical mechanics of cellular automata were made by (Wolfram, 1983), who indicated how one can be used to generate a Sierpiński gasket. Because we have already seen that a Sierpiński gasket exhibits fractal geometry (Section 16.2), this represents a microscopic model of how fractals may occur in nature. This model uses eight rules, given graphically at the top of Figure 16.9, to generate new cells from old. We see all possible configurations for three cells in the top row, and the begetted next generation in the row below. At the bottom of Figure 16.9 is a Sierpiński gasket of the type created by the applet JCellAut. This plays the game and lets you watch and control the growth of the gasket.

Figure 16.9 The rules for two versions of the Game of Life. The rules, given graphically on the top row, create the gaskets below (output obtained from the applet JCellAut in the auxiliary files).

16.10 Perlin Noise Adds Realism

We have already seen in this chapter how statistical fractals are able to generate objects with a striking resemblance to those in nature. This appearance of realism may be further enhanced by including a type of coherent randomness known as Perlin noise. The technique we are about to discuss was developed by Ken Perlin of New York University, who won an Academy Award (an Oscar) in 1997 for it and has continued to improve it (Perlin, 1985). This type of coherent noise has found use in important physics simulations of stochastic media (Tickner, 2004), as well as in video games and motion pictures like Tron.

Figure 16.10 The coordinates used in adding Perlin noise. (a) The rectangular grid used to locate a square in space and a corresponding point within the square. As shown with the arrows, unit vectors gi with random orientation are assigned at each grid point. (b) A point within each square is located by drawing the four pi. The gi vectors are the same as on the left.

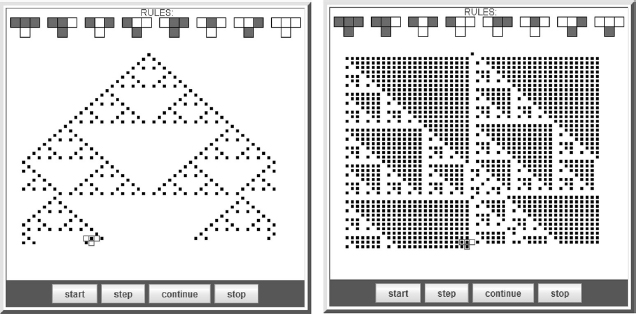

The inclusion of Perlin noise in a simulation adds both randomness and a type of coherence among points in space that tends to make dense regions denser and sparse regions sparser. This is similar to our correlated ballistic deposition simulations (Section 16.6.1) and related to chaos in its long-range randomness and short-range correlations. We start with some known function of x and y and add noise to it. For this purpose, Perlin used the mapping or ease function (Figure 16.11b)

As a consequence of its S shape, this mapping makes regions close to 0 even closer to 0, while making regions close to 1 even closer (in other words, it increases the tendency to clump, which shows up as higher contrast). We then break space up into a uniform rectangular grid of points (Figure 16.10a), and consider a point (x, y) within a square with vertices (x0, y0), (x1, y0), (x0, y1), and (x1, y1). We next assign unit gradients vectors g0 to g3 with random orientation at each grid point. A point within each square is located by drawing the four pi vectors (Figurel6.10b):

Figure 16.11 The mapping used in adding Perlin noise. (a) The numbers s, t, u, and v are represented by perpendiculars to the four vertices, with lengths proportional to their values. (b) The function 3p2 − 2p3 is used as a map of the noise at a point like (x, y) to others close by.

Next the scalar products of the p′s and the g′s are formed:

As shown in Figure 16.11a, the numbers s, t, u, and v are assigned to the four vertices of the square and represented there by lines perpendicular to the square with lengths proportional to the values of s, t, u, and v (which can be positive or negative).

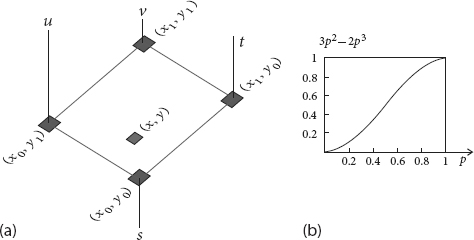

The actual mapping proceeds via a number of steps (Figure 16.12):

- Transform the point (x, y) to (sx, sy),

- Assign the lengths s, t, u, and v to the vertices in the mapped square.

- Obtain the height a (Figure 16.12) via linear interpolation between s and t.

- Obtain the height b via linear interpolation between u and v.

- Obtain sy as a linear interpolation between a and b.

- The vector c so obtained is now the two components of the noise at (x, y).

16.10.1 Ray Tracing Algorithms

Ray tracing is a technique that renders an image of a scene by simulating the way rays of light travel (Pov-Ray, 2013). To avoid tracing rays that do not contribute to the final image, ray-tracing programs start at the viewer, trace rays backward onto the scene, and then back again onto the light sources. You can vary the location of the viewer and light sources and the properties of the objects being viewed, as well as atmospheric conditions such as fog, haze, and fire.

As an example of what can be carried out, in Figure 16.14a we show the output from the ray-tracing program Islands.pov in Listing 16.4, using as input the coherent random noise as shown in Figure 16.13b. The program options we used are given in Listing 16.4 and are seen to include commands to color the islands, to include waves, and to give textures to the sky and the sea. Pov-Ray also allows the possibility of using Perlin noise to give textures to the objects to be created. For example, the stone cup on the right in Figure 16.14 has a marblelike texture produced by Perlin noise.

Figure 16.12 Perlin noise mapping, (a) The point (x,y) is mapped to point (sx,xy). (b) Using (16.33). Then three linear interpolations are performed to find c, the noise at (x, y).

Figure 16.13 After the addition of Perlin noise, the random scatterplot in (a) becomes the clusters on (b).

Listing 16.4 Islands.pov in the Codes/Animations/Fractals directory gives the Pov-Ray ray-tracing commands needed to convert the coherent noise random plot of Figure 16.13 into the mountain like image as shown in Figure 16.14a.

Figure 16.14 (a) The output from the Pov-Ray ray-tracing program that took as input the 2D coherent random noise plot in Figure 16.13 and added height and fog. (b) An image of a surface of revolution produced by Pov-Ray in which the marble-like texture is created by Perlin noise.

16.11 Exercises

- Figure 16.9 gives the rules (at top) and the results (below) for two versions of the Game of Life. These results were produced by the applet

JCellAut. Write a Python program that runs the same games. Check that you obtain the same results for the same initial conditions. - Recall how box counting is used to determine the fractal dimension of an object. Imagine that the result of some experiment or simulation is an interesting geometric figure.

- a) What might be the physical/theoretical importance of determining that this object is a fractal?

- b) What might be the importance of determining its fractal dimension?

- c) Why is it important to use more than two sizes of boxes?