Canonical Forms

10.1 Introduction

Let T be a linear operator on a vector space of finite dimension. As seen in Chapter 6, T may not have a diagonal matrix representation. However, it is still possible to “simplify” the matrix representation of T in a number of ways. This is the main topic of this chapter. In particular, we obtain the primary decomposition theorem, and the triangular, Jordan, and rational canonical forms.

We comment that the triangular and Jordan canonical forms exist for T if and only if the characteristic polynomial Δ(t) of T has all its roots in the base field K. This is always true if K is the complex field C but may not be true if K is the real field R.

We also introduce the idea of a quotient space. This is a very powerful tool, and it will be used in the proof of the existence of the triangular and rational canonical forms.

10.2 Triangular Form

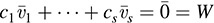

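Let T be a linear operator on an n-dimensional vector space V. Suppose T can be represented by the triangular matrix

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ 0 & a_{22} & \cdots & a_{2n} \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & a_{nn} \end{bmatrix}$$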

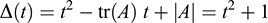

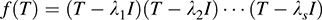

Then the characteristic polynomial Δ(t) of T is a product of linear factors; that is,

$$\Delta(t) = \det(tI - A) = (t - a_{11})(t - a_{22}) \cdots (t - a_{nn})$$

The converse is also true and is an important theorem (proved in Problem 10.28).

THEOREM 10.1: Let T:V → V be a linear operator whose characteristic polynomial factors into linear polynomials. Then there exists a basis of V in which T is represented by a triangular matrix.

THEOREM 10.1: (Alternative Form) Let A be a square matrix whose characteristic polynomial factors into linear polynomials. Then A is similar to a triangular matrix—that is, there exists an invertible matrix P such that P−1AP is triangular.

We say that an operator T can be brought into triangular form if it can be represented by a triangular matrix. Note that in this case, the eigenvalues of T are precisely those entries appearing on the main diagonal. We give an application of this remark.
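As a quick check of this remark, the following Python sketch (standard library only, exact rational arithmetic via `fractions`) evaluates Δ(t) = det(tI − A) for one sample upper triangular matrix at several points and compares the result with the product of the factors t − aii. The matrix and the helper names `det` and `char_poly_at` are illustrative choices, not taken from the text. Both sides are polynomials of degree 3, so agreement at more than three points already forces them to be equal.

```python
from fractions import Fraction

def det(m):
    """Determinant by Gaussian elimination with exact rational arithmetic."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)          # no pivot in this column: singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result            # a row swap flips the sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def char_poly_at(a, t):
    """Evaluate Delta(t) = det(tI - A) at one scalar t."""
    n = len(a)
    return det([[(t if i == j else 0) - a[i][j] for j in range(n)] for i in range(n)])

# An upper triangular matrix (entries chosen arbitrarily for illustration).
A = [[2, 5, -1],
     [0, 3, 4],
     [0, 0, 2]]

for t in range(-3, 4):
    product = 1
    for i in range(len(A)):
        product *= t - A[i][i]          # (t - a11)(t - a22)(t - a33)
    assert char_poly_at(A, t) == product

print(char_poly_at(A, 2), char_poly_at(A, 3))  # 0 0
```

The roots of Δ(t), and hence the eigenvalues, are exactly the diagonal entries 2, 3, 2.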

EXAMPLE 10.1 Let A be a square matrix over the complex field C. Suppose λ is an eigenvalue of A². Show that √λ or −√λ is an eigenvalue of A.

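By Theorem 10.1, there is an invertible matrix P such that B = P⁻¹AP is triangular, say with diagonal entries μ1, ..., μn. Then P⁻¹A²P = (P⁻¹AP)² = B², and the square of a triangular matrix is again triangular, with diagonal entries μ1², ..., μn². Thus, A and A² are similar, respectively, to triangular matrices of the form

$$B = \begin{bmatrix} \mu_1 & * & \cdots & * \\ 0 & \mu_2 & \cdots & * \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & \mu_n \end{bmatrix} \qquad\text{and}\qquad B^2 = \begin{bmatrix} \mu_1^2 & * & \cdots & * \\ 0 & \mu_2^2 & \cdots & * \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & \mu_n^2 \end{bmatrix}$$

Because similar matrices have the same eigenvalues, λ = μi² for some i. Hence, μi = √λ or μi = −√λ is an eigenvalue of A.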
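The key step above is that squaring a triangular matrix squares its diagonal. A tiny Python check of that fact for one arbitrarily chosen complex upper triangular B (an illustrative assumption, not a matrix from the text):

```python
def mat_mul(x, y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

# Upper triangular, with diagonal entries mu_1, mu_2, mu_3 = 1 + 2i, -i, 2
B = [[1 + 2j, 4, -3],
     [0, -1j, 7],
     [0, 0, 2]]
B2 = mat_mul(B, B)

n = len(B)
# B^2 is again upper triangular ...
assert all(B2[i][j] == 0 for i in range(n) for j in range(i))
# ... and its diagonal entries are mu_1^2, mu_2^2, mu_3^2
assert [B2[i][i] for i in range(n)] == [B[i][i] * B[i][i] for i in range(n)]
print([B2[i][i] for i in range(n)])  # [(-3+4j), (-1+0j), 4]
```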

10.3 Invariance

Let T:V → V be linear. A subspace W of V is said to be invariant under T or T-invariant if T maps W into itself, that is, if υ ∈ W implies T(υ) ∈ W. In this case, T restricted to W defines a linear operator on W; that is, T induces a linear operator T̂:W → W defined by T̂(w) = T(w) for every w ∈ W.

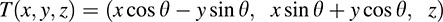

EXAMPLE 10.2

(a) Let T:R³ → R³ be the following linear operator, which rotates each vector υ about the z-axis by an angle θ (shown in Fig. 10-1):

$$T(x, y, z) = (x\cos\theta - y\sin\theta,\; x\sin\theta + y\cos\theta,\; z)$$

Observe that each vector w = (a, b, 0) in the xy-plane W remains in W under the mapping T; hence, W is T-invariant. Observe also that the z-axis U is invariant under T. Furthermore, the restriction of T to W rotates each vector about the origin O, and the restriction of T to U is the identity mapping of U.

(b) Nonzero eigenvectors of a linear operator T:V → V may be characterized as generators of T-invariant one-dimensional subspaces. Suppose T(υ) = λυ, υ ≠ 0. Then W = {kυ : k ∈ K}, the one-dimensional subspace generated by υ, is invariant under T because

$$T(k\upsilon) = kT(\upsilon) = k(\lambda\upsilon) = (k\lambda)\upsilon \in W$$

Conversely, suppose dim U = 1 and u ≠ 0 spans U, and U is invariant under T. Then T(u) ∈ U and so T(u) is a multiple of u—that is, T(u) = μu. Hence, u is an eigenvector of T.
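A minimal Python sketch of the invariance test in part (a), under the simplifying assumption θ = 90°, so that cos θ = 0, sin θ = 1, and all arithmetic is exact. Because T is linear, W = span(S) is T-invariant exactly when appending T(s) to S never increases the rank, for each s in S. The helpers `rank`, `apply`, and `is_invariant` are illustrative names, not from the text.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors, by exact Gaussian elimination over Q."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def apply(m, v):
    """Matrix-vector product m v."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def is_invariant(m, spanning):
    """span(spanning) is invariant iff no image m(s) raises the rank."""
    base = rank(spanning)
    return all(rank(spanning + [apply(m, s)]) == base for s in spanning)

# Rotation about the z-axis by theta = 90 degrees: cos(theta) = 0, sin(theta) = 1.
T = [[0, -1, 0],
     [1, 0, 0],
     [0, 0, 1]]

xy_plane = [[1, 0, 0], [0, 1, 0]]
z_axis = [[0, 0, 1]]          # spanned by an eigenvector (eigenvalue 1), as in part (b)
xz_plane = [[1, 0, 0], [0, 0, 1]]

print(is_invariant(T, xy_plane), is_invariant(T, z_axis), is_invariant(T, xz_plane))
# True True False
```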

The next theorem (proved in Problem 10.3) gives us an important class of invariant subspaces.

THEOREM 10.2: Let T:V → V be any linear operator, and let f(t) be any polynomial. Then the kernel of f(T) is invariant under T.
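Theorem 10.2 can be checked on a small case. The sketch below (exact arithmetic via `fractions`; the matrix A and the polynomial f(t) = (t − 2)² are arbitrary illustrative choices) evaluates f(A) by Horner's rule, computes a basis of Ker f(A) from the reduced row echelon form, and confirms that A maps each basis vector w back into the kernel, that is, f(A)(Aw) = 0.

```python
from fractions import Fraction

def mat_mul(x, y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def apply(m, v):
    """Matrix-vector product m v."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def poly_at_matrix(coeffs, a):
    """f(A) by Horner's rule; coeffs = [c0, c1, ..., cn] means c0 + c1 t + ... + cn t^n."""
    n = len(a)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in reversed(coeffs):
        result = mat_mul(result, a)          # result <- result * A + c I
        for i in range(n):
            result[i][i] += c
    return result

def null_space(m):
    """Basis of {x : m x = 0}, read off from the reduced row echelon form over Q."""
    rows = [[Fraction(x) for x in row] for row in m]
    n = len(rows[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        piv = rows[r][c]
        rows[r] = [x / piv for x in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
    basis = []
    for free in [c for c in range(n) if c not in pivots]:
        x = [Fraction(0)] * n
        x[free] = Fraction(1)
        for i, pc in enumerate(pivots):
            x[pc] = -rows[i][free]           # solve each pivot variable for this free choice
        basis.append(x)
    return basis

A = [[2, 1, 0],
     [0, 2, 0],
     [0, 0, 3]]
f = [4, -4, 1]                  # f(t) = t^2 - 4t + 4 = (t - 2)^2
fA = poly_at_matrix(f, A)
kernel = null_space(fA)

for w in kernel:
    assert apply(fA, apply(A, w)) == [0, 0, 0]   # T(w) stays in Ker f(T)
print(len(kernel))  # 2
```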

The notion of invariance is related to matrix representations (Problem 10.5) as follows.

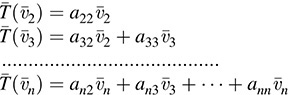

THEOREM 10.3: Suppose W is an invariant subspace of T:V → V. Then T has a block matrix representation

$$\begin{bmatrix} A & B \\ 0 & C \end{bmatrix}$$

where A is a matrix representation of the restriction T̂ of T to W.
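For a concrete instance of Theorem 10.3, the following sketch takes a 2 × 2 matrix with a one-dimensional invariant subspace W (spanned by an eigenvector), extends a basis of W to a basis of R², and forms P⁻¹AP, where the columns of P are the new basis vectors. The lower-left block comes out zero, and the 1 × 1 upper-left block represents the restriction to W. The matrices A and P are illustrative assumptions.

```python
from fractions import Fraction

def mat_mul(x, y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def inverse(m):
    """Gauss-Jordan inversion over Q; assumes m is invertible."""
    n = len(m)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for c in range(n):
        p = next(i for i in range(c, n) if aug[i][c] != 0)
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for i in range(n):
            if i != c and aug[i][c] != 0:
                f = aug[i][c]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[c])]
    return [row[n:] for row in aug]

A = [[4, 1],
     [2, 3]]
# W = span{w1} with w1 = (1, 1) is A-invariant, since A w1 = (5, 5) = 5 w1.
# Extend {w1} to a basis {w1, v1} of R^2 with v1 = (0, 1); these are the columns of P.
P = [[1, 0],
     [1, 1]]
M = mat_mul(inverse(P), mat_mul(A, P))
print(M == [[5, 1], [0, 2]])  # True: the form [[A_W, B], [0, C]] with A_W = [5]
```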

10.4 Invariant Direct-Sum Decompositions

A vector space V is termed the direct sum of subspaces W1, ..., Wr, written

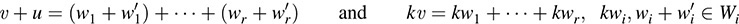

if every vector υ ∈ V can be written uniquely in the form υ = w1 + w2 + ⋯ + wr, where wi ∈ Wi.

The following theorem (proved in Problem 10.7) holds.

THEOREM 10.4: Suppose W1, W2, ..., Wr are subspaces of V, and suppose

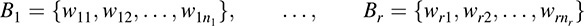

are bases of W1, W2, ..., Wr, respectively. Then V is the direct sum of the Wi if and only if the union B = B1 ∪ ... ∪ Br is a basis of V.

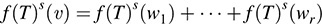

Now suppose T:V → V is linear and V is the direct sum of (nonzero) T-invariant subspaces W1, W2, ..., Wr; that is,

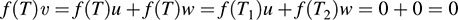

Let Ti denote the restriction of T to Wi. Then T is said to be decomposable into the operators Ti or T is said to be the direct sum of the Ti, written T = T1 ⊕ ... ⊕ Tr. Also, the subspaces W1, ..., Wr are said to reduce T or to form a T-invariant direct-sum decomposition of V.

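Consider the special case where two subspaces U and W reduce an operator T:V → V; say dim U = 2 and dim W = 3, and suppose {u1, u2} and {w1, w2, w3} are bases of U and W, respectively. If T1 and T2 denote the restrictions of T to U and W, respectively, then

$$\begin{aligned} T_1(u_1) &= a_{11}u_1 + a_{12}u_2 \\ T_1(u_2) &= a_{21}u_1 + a_{22}u_2 \end{aligned} \qquad\qquad \begin{aligned} T_2(w_1) &= b_{11}w_1 + b_{12}w_2 + b_{13}w_3 \\ T_2(w_2) &= b_{21}w_1 + b_{22}w_2 + b_{23}w_3 \\ T_2(w_3) &= b_{31}w_1 + b_{32}w_2 + b_{33}w_3 \end{aligned}$$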

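Accordingly, the following matrices A, B, M are the matrix representations of T1, T2, T, respectively:

$$A = \begin{bmatrix} a_{11} & a_{21} \\ a_{12} & a_{22} \end{bmatrix}, \qquad B = \begin{bmatrix} b_{11} & b_{21} & b_{31} \\ b_{12} & b_{22} & b_{32} \\ b_{13} & b_{23} & b_{33} \end{bmatrix}, \qquad M = \begin{bmatrix} A & 0 \\ 0 & B \end{bmatrix}$$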

The block diagonal matrix M results from the fact that {u1, u2, w1, w2, w3} is a basis of V (Theorem 10.4), and that T(ui) = T1(ui) and T(wj) = T2(wj).

A generalization of the above argument gives us the following theorem.

THEOREM 10.5: Suppose T:V → V is linear and suppose V is the direct sum of T-invariant subspaces, say, W1, ..., Wr. If Ai is a matrix representation of the restriction of T to Wi, then T can be represented by the block diagonal matrix:

$$M = \operatorname{diag}(A_1, A_2, \ldots, A_r)$$

10.5 Primary Decomposition

The following theorem shows that any operator T:V → V is decomposable into operators whose minimum polynomials are powers of irreducible polynomials. This is the first step in obtaining a canonical form for T.

THEOREM 10.6: (Primary Decomposition Theorem) Let T:V → V be a linear operator with minimal polynomial

$$m(t) = f_1(t)^{n_1} f_2(t)^{n_2} \cdots f_r(t)^{n_r}$$

where the fi(t) are distinct monic irreducible polynomials. Then V is the direct sum of T-invariant subspaces W1, ..., Wr, where Wi is the kernel of fi(T)ⁿⁱ. Moreover, fi(t)ⁿⁱ is the minimal polynomial of the restriction of T to Wi.

The above polynomials fi(t)ⁿⁱ are relatively prime. Therefore, the above fundamental theorem follows (Problem 10.11) from the next two theorems (proved in Problems 10.9 and 10.10, respectively).

THEOREM 10.7: Suppose T:V → V is linear, and suppose f(t) = g(t)h(t), where g(t) and h(t) are relatively prime polynomials and f(T) = 0. Then V is the direct sum of the T-invariant subspaces U and W, where U = Ker g(T) and W = Ker h(T).

THEOREM 10.8: In Theorem 10.7, if f(t) is the minimal polynomial of T [and g(t) and h(t) are monic], then g(t) and h(t) are the minimal polynomials of the restrictions of T to U and W, respectively.

We will also use the primary decomposition theorem to prove the following useful characterization of diagonalizable operators (see Problem 10.12 for the proof).

THEOREM 10.9: A linear operator T:V → V is diagonalizable if and only if its minimal polynomial m(t) is a product of distinct linear polynomials.

THEOREM 10.9: (Alternative Form) A matrix A is similar to a diagonal matrix if and only if its minimal polynomial is a product of distinct linear polynomials.

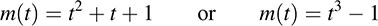

EXAMPLE 10.3 Suppose A ≠ I is a square matrix for which A³ = I. Determine whether or not A is similar to a diagonal matrix if A is a matrix over: (i) the real field R, (ii) the complex field C.

Because A³ = I, A is a zero of the polynomial f(t) = t³ − 1 = (t − 1)(t² + t + 1), so the minimal polynomial m(t) of A divides f(t). Moreover, m(t) cannot be t − 1, because A ≠ I. Over R, the factor t² + t + 1 is irreducible (it has no real roots), so in case (i),

$$m(t) = t^2 + t + 1 \qquad\text{or}\qquad m(t) = t^3 - 1$$

Because neither polynomial is a product of linear polynomials over R, A is not diagonalizable over R. On the other hand, over C we have t³ − 1 = (t − 1)(t − ω)(t − ω²), where ω = cos(2π/3) + i sin(2π/3), so every divisor of f(t), and in particular m(t), is a product of distinct linear polynomials over C. Hence, A is diagonalizable over C.
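To see the dichotomy concretely, here is a minimal Python sketch (exact rational arithmetic, standard library only) that finds the minimal polynomial of a matrix as the first linear dependence among I, A, A², .... Two illustrative matrices with A³ = I and A ≠ I are tried: a 3 × 3 cyclic permutation matrix, whose minimal polynomial is t³ − 1, and a 2 × 2 matrix whose minimal polynomial is t² + t + 1. The helper names are hypothetical, and the code does not factor polynomials; the factorization over R or C is read off by hand as above.

```python
from fractions import Fraction

def mat_mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def solve(columns, target):
    """Exact x with sum_j x[j]*columns[j] == target, or None if no such x exists.
    Assumes the given columns are linearly independent (true for the use below)."""
    k, n = len(columns), len(target)
    aug = [[Fraction(columns[j][i]) for j in range(k)] + [Fraction(target[i])] for i in range(n)]
    pivots, r = [], 0
    for c in range(k):
        p = next((i for i in range(r, n) if aug[i][c] != 0), None)
        if p is None:
            continue
        aug[r], aug[p] = aug[p], aug[r]
        piv = aug[r][c]
        aug[r] = [x / piv for x in aug[r]]
        for i in range(n):
            if i != r and aug[i][c] != 0:
                f = aug[i][c]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[r])]
        pivots.append(c)
        r += 1
    if any(aug[i][k] != 0 for i in range(r, n)):
        return None                     # inconsistent: target is not in the span
    x = [Fraction(0)] * k
    for i, c in enumerate(pivots):
        x[c] = aug[i][k]
    return x

def minimal_polynomial(a):
    """Coefficients [c0, ..., c_{k-1}, 1] of the monic m(t) of least degree with m(A) = 0."""
    n = len(a)
    power = [[int(i == j) for j in range(n)] for i in range(n)]   # A^0 = I
    previous = []                                                 # flattened I, A, ..., A^(k-1)
    while True:
        flat = [x for row in power for x in row]
        coeffs = solve(previous, flat)
        if coeffs is not None:          # A^k = sum_j coeffs[j] A^j
            return [-c for c in coeffs] + [Fraction(1)]
        previous.append(flat)
        power = mat_mul(power, a)

P = [[0, 0, 1],
     [1, 0, 0],
     [0, 1, 0]]          # cyclic permutation: P^3 = I, P != I
C = [[0, -1],
     [1, -1]]            # C^3 = I, C != I

print([int(c) for c in minimal_polynomial(P)])  # [-1, 0, 0, 1], i.e. t^3 - 1
print([int(c) for c in minimal_polynomial(C)])  # [1, 1, 1], i.e. t^2 + t + 1
```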

10.6 Nilpotent Operators

A linear operator T:V → V is termed nilpotent if Tⁿ = 0 for some positive integer n; we call k the index of nilpotency of T if Tᵏ = 0 but Tᵏ⁻¹ ≠ 0. Analogously, a square matrix A is termed nilpotent if Aⁿ = 0 for some positive integer n, and of index k if Aᵏ = 0 but Aᵏ⁻¹ ≠ 0. Clearly the minimal polynomial of a nilpotent operator (matrix) of index k is m(t) = tᵏ; hence, 0 is its only eigenvalue.

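EXAMPLE 10.4 The following two r-square matrices will be used throughout the chapter:

$$N = N(r) = \begin{bmatrix} 0 & 1 & 0 & \cdots & 0 & 0 \\ 0 & 0 & 1 & \cdots & 0 & 0 \\ \vdots & & & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & 0 & 1 \\ 0 & 0 & 0 & \cdots & 0 & 0 \end{bmatrix}, \qquad J(\lambda) = \begin{bmatrix} \lambda & 1 & 0 & \cdots & 0 & 0 \\ 0 & \lambda & 1 & \cdots & 0 & 0 \\ \vdots & & & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & \lambda & 1 \\ 0 & 0 & 0 & \cdots & 0 & \lambda \end{bmatrix}$$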

The first matrix N, called a Jordan nilpotent block, consists of 1’s above the diagonal (called the superdiagonal) and 0’s elsewhere. It is a nilpotent matrix of index r. (The matrix N of order 1 is just the 1 × 1 zero matrix [0].)

The second matrix J(λ), called a Jordan block belonging to the eigenvalue λ, consists of λ’s on the diagonal, 1’s on the superdiagonal, and 0’s elsewhere. Observe that

$$J(\lambda) = \lambda I + N$$

In fact, we will prove that any linear operator T can be decomposed into operators, each of which is the sum of a scalar operator and a nilpotent operator.
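The two matrices of Example 10.4 are easy to generate. This short sketch builds N of order r and J(λ) = λI + N as lists of rows and checks, for a few small r, that N is nilpotent of index exactly r. The function names are hypothetical.

```python
def jordan_nilpotent_block(r):
    """N of order r: 1's on the superdiagonal, 0's elsewhere."""
    return [[1 if j == i + 1 else 0 for j in range(r)] for i in range(r)]

def jordan_block(lam, r):
    """J(lambda) of order r, built as lambda*I + N."""
    n = jordan_nilpotent_block(r)
    return [[(lam if i == j else 0) + n[i][j] for j in range(r)] for i in range(r)]

def mat_mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def nilpotency_index(a):
    """Least k >= 1 with A^k = 0, or None when A^n != 0 (A is then not nilpotent)."""
    power = a
    for k in range(1, len(a) + 1):
        if all(x == 0 for row in power for x in row):
            return k
        power = mat_mul(power, a)
    return None

for r in range(1, 6):
    assert nilpotency_index(jordan_nilpotent_block(r)) == r

print(jordan_block(7, 3))  # [[7, 1, 0], [0, 7, 1], [0, 0, 7]]
```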

The following (proved in Problem 10.16) is a fundamental result on nilpotent operators.

THEOREM 10.10: Let T:V → V be a nilpotent operator of index k. Then T has a block diagonal matrix representation in which each diagonal entry is a Jordan nilpotent block N. There is at least one N of order k, and all other N are of orders ≤k. The number of N of each possible order is uniquely determined by T. The total number of N of all orders is equal to the nullity of T.

The proof of Theorem 10.10 shows that the number of N of order i is equal to 2mᵢ − mᵢ₊₁ − mᵢ₋₁, where mᵢ is the nullity of Tⁱ (with m₀ = 0).
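The counting formula translates directly into code: compute mᵢ = nullity(Aⁱ) = n − rank(Aⁱ) until it reaches n, then read off 2mᵢ − mᵢ₊₁ − mᵢ₋₁. The sketch below assumes the input is nilpotent (it raises an error otherwise) and tests it on block diagonal matrices assembled from known Jordan nilpotent blocks; since ranks are unchanged by similarity, any matrix similar to these would give the same counts. All helper names are illustrative.

```python
from fractions import Fraction

def mat_mul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def rank(m):
    """Number of pivots after exact Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in m]
    r = 0
    for c in range(len(rows[0])):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def nilpotent_block_counts(a):
    """Map order i -> number of Jordan nilpotent blocks of order i, via 2m_i - m_{i+1} - m_{i-1}."""
    n = len(a)
    m = [0]                                  # m_0 = nullity(T^0) = 0
    power = [[int(i == j) for j in range(n)] for i in range(n)]
    while m[-1] < n:
        if len(m) > n:
            raise ValueError("matrix is not nilpotent")
        power = mat_mul(power, a)
        m.append(n - rank(power))            # m_i = nullity(T^i)
    m.append(n)                              # m_{k+1} = n, where k is the index
    counts = {}
    for i in range(1, len(m) - 1):
        c = 2 * m[i] - m[i + 1] - m[i - 1]
        if c > 0:
            counts[i] = c
    return counts

def N(r):
    return [[1 if j == i + 1 else 0 for j in range(r)] for i in range(r)]

def block_diag(*blocks):
    size = sum(len(b) for b in blocks)
    out = [[0] * size for _ in range(size)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, x in enumerate(row):
                out[offset + i][offset + j] = x
        offset += len(b)
    return out

print(nilpotent_block_counts(block_diag(N(3), N(1), N(1))))  # {1: 2, 3: 1}
print(nilpotent_block_counts(block_diag(N(3), N(2))))        # {2: 1, 3: 1}
```

In each case the total number of blocks equals m₁, the nullity, as Theorem 10.10 states.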

10.7 Jordan Canonical Form

An operator T can be put into Jordan canonical form if its characteristic and minimal polynomials factor into linear polynomials. This is always true if K is the complex field C. In any case, we can always extend the base field K to a field in which the characteristic and minimal polynomials do factor into linear factors; thus, in a broad sense, every operator has a Jordan canonical form. Analogously, every matrix is similar to a matrix in Jordan canonical form.

The following theorem (proved in Problem 10.18) describes the Jordan canonical form J of a linear operator T.

THEOREM 10.11: Let T:V → V be a linear operator whose characteristic and minimal polynomials are, respectively,

$$\Delta(t) = (t - \lambda_1)^{n_1} \cdots (t - \lambda_r)^{n_r} \qquad\text{and}\qquad m(t) = (t - \lambda_1)^{m_1} \cdots (t - \lambda_r)^{m_r}$$

where the λi are distinct scalars. Then T has a block diagonal matrix representation J in which each diagonal entry is a Jordan block Jij = J(λi). For each λi, the corresponding Jij have the following properties:

(i) There is at least one Jij of order mi; all other Jij are of order ≤mi.

(ii) The sum of the orders of the Jij is ni.

(iii) The number of Jij equals the geometric multiplicity of λi.

(iv) The number of Jij of each possible order is uniquely determined by T.

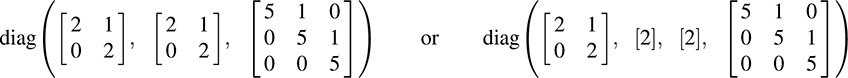

EXAMPLE 10.5 Suppose the characteristic and minimal polynomials of an operator T are, respectively,

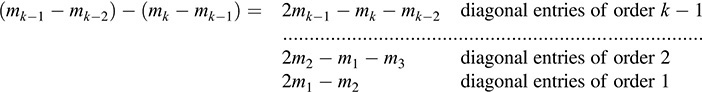

Then the Jordan canonical form of T is one of the following block diagonal matrices:

The first matrix occurs if T has two independent eigenvectors belonging to the eigenvalue 2; and the second matrix occurs if T has three independent eigenvectors belonging to the eigenvalue 2.

10.8 Cyclic Subspaces

Let T be a linear operator on a vector space V of finite dimension over K. Suppose υ ∈ V and υ ≠ 0. The set of all vectors of the form f(T)(υ), where f(t) ranges over all polynomials over K, is a T-invariant subspace of V called the T-cyclic subspace of V generated by υ; we denote it by Z(υ, T) and denote the restriction of T to Z(υ, T) by Tυ. By Problem 10.56, we could equivalently define Z(υ, T) as the intersection of all T-invariant subspaces of V containing υ.

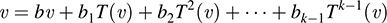

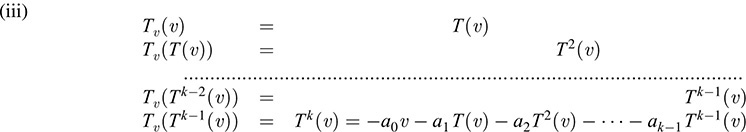

Now consider the sequence

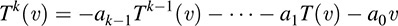

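of powers of T acting on υ. Let k be the least integer such that Tᵏ(υ) is a linear combination of those vectors that precede it in the sequence, say,

$$T^k(\upsilon) = -a_{k-1}T^{k-1}(\upsilon) - \cdots - a_1T(\upsilon) - a_0\upsilon$$

Then

$$m_\upsilon(t) = t^k + a_{k-1}t^{k-1} + \cdots + a_1t + a_0$$

is the unique monic polynomial of lowest degree for which mυ(T)(υ) = 0. We call mυ(t) the T-annihilator of υ and Z(υ, T).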

The following theorem (proved in Problem 10.29) holds.

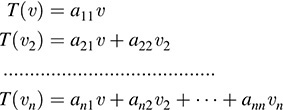

THEOREM 10.12: Let Z(υ, T), Tυ, mυ(t) be defined as above. Then

(i) The set {υ, T(υ), ..., Tᵏ⁻¹(υ)} is a basis of Z(υ, T); hence, dim Z(υ, T) = k.

(ii) The minimal polynomial of Tυ is mυ(t).

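(iii) The matrix representation of Tυ in the above basis is just the companion matrix C(mυ) of mυ(t); that is,

$$C(m_\upsilon) = \begin{bmatrix} 0 & 0 & \cdots & 0 & -a_0 \\ 1 & 0 & \cdots & 0 & -a_1 \\ 0 & 1 & \cdots & 0 & -a_2 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & -a_{k-1} \end{bmatrix}$$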
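The construction of the T-annihilator is an algorithm: run the sequence υ, T(υ), T²(υ), ... until the first linear dependence. The sketch below does that with exact arithmetic, then checks Theorem 10.12(iii) in the matrix form AK = K·C(mυ), where K has columns υ, Aυ, ..., Aᵏ⁻¹υ. The companion layout (1's just below the diagonal, −a0, ..., −a_{k−1} in the last column) is assumed to follow the column convention of this chapter; the matrix A, the vector υ, and all helper names are illustrative.

```python
from fractions import Fraction

def apply(a, v):
    return [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]

def solve(columns, target):
    """Exact x with sum_j x[j]*columns[j] == target, or None (columns assumed independent)."""
    k, n = len(columns), len(target)
    aug = [[Fraction(columns[j][i]) for j in range(k)] + [Fraction(target[i])] for i in range(n)]
    pivots, r = [], 0
    for c in range(k):
        p = next((i for i in range(r, n) if aug[i][c] != 0), None)
        if p is None:
            continue
        aug[r], aug[p] = aug[p], aug[r]
        piv = aug[r][c]
        aug[r] = [x / piv for x in aug[r]]
        for i in range(n):
            if i != r and aug[i][c] != 0:
                f = aug[i][c]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[r])]
        pivots.append(c)
        r += 1
    if any(aug[i][k] != 0 for i in range(r, n)):
        return None
    x = [Fraction(0)] * k
    for i, c in enumerate(pivots):
        x[c] = aug[i][k]
    return x

def annihilator(a, v):
    """Return (m_v, krylov): m_v = [a0, ..., a_{k-1}, 1] and krylov = [v, Tv, ..., T^(k-1) v]."""
    krylov = [[Fraction(x) for x in v]]
    while True:
        nxt = apply(a, krylov[-1])
        coeffs = solve(krylov, nxt)          # is T^k v a combination of the earlier vectors?
        if coeffs is not None:
            # T^k v = c0 v + ... + c_{k-1} T^(k-1) v, so a_i = -c_i
            return [-c for c in coeffs] + [Fraction(1)], krylov
        krylov.append(nxt)

def companion(m):
    k = len(m) - 1
    c = [[Fraction(0)] * k for _ in range(k)]
    for i in range(1, k):
        c[i][i - 1] = Fraction(1)            # T maps each basis vector to the next one
    for i in range(k):
        c[i][k - 1] = -m[i]                  # T(T^(k-1) v) = -a0 v - ... - a_{k-1} T^(k-1) v
    return c

A = [[2, 1, 0],
     [0, 2, 0],
     [0, 0, 3]]
m_v, basis = annihilator(A, [0, 1, 1])
print([int(c) for c in m_v])   # [-12, 16, -7, 1], i.e. t^3 - 7t^2 + 16t - 12

k, n = len(basis), len(A)
K = [[basis[j][i] for j in range(k)] for i in range(n)]   # columns v, Av, A^2 v
C = companion(m_v)
AK = [[sum(A[i][l] * K[l][j] for l in range(n)) for j in range(k)] for i in range(n)]
KC = [[sum(K[i][l] * C[l][j] for l in range(k)) for j in range(k)] for i in range(n)]
assert AK == KC
```

Here υ happens to generate all of R³, so mυ(t) coincides with the minimal polynomial (t − 2)²(t − 3) of A.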

10.9 Rational Canonical Form

In this section, we present the rational canonical form for a linear operator T:V → V. We emphasize that this form exists even when the minimal polynomial cannot be factored into linear polynomials. (Recall that this is not the case for the Jordan canonical form.)

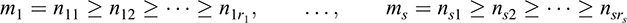

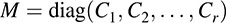

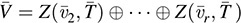

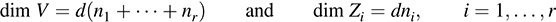

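LEMMA 10.13: Let T:V → V be a linear operator whose minimal polynomial is f(t)ⁿ, where f(t) is a monic irreducible polynomial. Then V is the direct sum

$$V = Z(\upsilon_1, T) \oplus \cdots \oplus Z(\upsilon_r, T)$$

of T-cyclic subspaces Z(υi, T) with corresponding T-annihilators

$$f(t)^{n_1}, f(t)^{n_2}, \ldots, f(t)^{n_r}, \qquad n = n_1 \ge n_2 \ge \cdots \ge n_r$$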

Any other decomposition of V into T-cyclic subspaces has the same number of components and the same set of T-annihilators.

We emphasize that the above lemma (proved in Problem 10.31) does not say that the vectors υi or the T-cyclic subspaces Z(υi, T) are uniquely determined by T, but it does say that the set of T-annihilators is uniquely determined by T. Thus, T has a unique block diagonal matrix representation:

$$M = \operatorname{diag}(C_1, C_2, \ldots, C_r)$$

where the Ci are companion matrices. In fact, the Ci are the companion matrices of the polynomials f(t)ⁿⁱ.

Using the Primary Decomposition Theorem and Lemma 10.13, we obtain the following result.

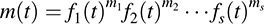

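THEOREM 10.14: Let T:V → V be a linear operator with minimal polynomial

$$m(t) = f_1(t)^{m_1} f_2(t)^{m_2} \cdots f_s(t)^{m_s}$$

where the fi(t) are distinct monic irreducible polynomials. Then T has a unique block diagonal matrix representation:

$$M = \operatorname{diag}(C_{11}, C_{12}, \ldots, C_{1r_1}, \ldots, C_{s1}, C_{s2}, \ldots, C_{sr_s})$$

where the Cij are companion matrices. In particular, the Cij are the companion matrices of the polynomials fi(t)ⁿⁱʲ, where

$$m_1 = n_{11} \ge n_{12} \ge \cdots \ge n_{1r_1}, \quad \ldots, \quad m_s = n_{s1} \ge n_{s2} \ge \cdots \ge n_{sr_s}$$

The above matrix representation of T is called its rational canonical form. The polynomials fi(t)ⁿⁱʲ are called the elementary divisors of T.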

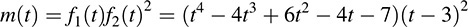

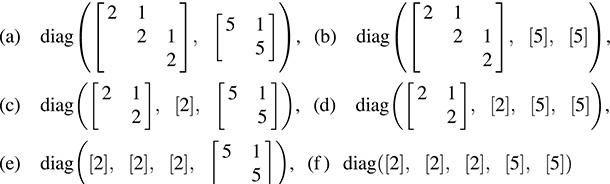

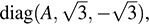

EXAMPLE 10.6 Let V be a vector space of dimension 8 over the rational field Q, and let T be a linear operator on V whose minimal polynomial is

$$m(t) = f_1(t) f_2(t)^2 = (t^4 - 4t^3 + 6t^2 - 4t - 7)(t - 3)^2$$

Thus, because Δ(t) is divisible by m(t), has the same irreducible factors as m(t), and has degree dim V = 8, the characteristic polynomial is Δ(t) = f1(t) f2(t)⁴. Also, the rational canonical form M of T must have one block equal to the companion matrix of f1(t) and one block equal to the companion matrix of f2(t)². There are two possibilities:

(a) diag[C(t4 − 4t3 + 6t2 − 4t − 7), C((t − 3)2), C((t − 3)2)]

(b) diag[C(t4 − 4t3 + 6t2 − 4t − 7), C((t − 3)2), C(t − 3), C(t − 3)]

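That is,

$$\text{(a)}\quad M = \operatorname{diag}\left( \begin{bmatrix} 0&0&0&7 \\ 1&0&0&4 \\ 0&1&0&-6 \\ 0&0&1&4 \end{bmatrix}, \begin{bmatrix} 0&-9 \\ 1&6 \end{bmatrix}, \begin{bmatrix} 0&-9 \\ 1&6 \end{bmatrix} \right)$$

$$\text{(b)}\quad M = \operatorname{diag}\left( \begin{bmatrix} 0&0&0&7 \\ 1&0&0&4 \\ 0&1&0&-6 \\ 0&0&1&4 \end{bmatrix}, \begin{bmatrix} 0&-9 \\ 1&6 \end{bmatrix}, [3], [3] \right)$$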
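The two candidate forms in Example 10.6 can be checked mechanically. This sketch assumes the companion-matrix layout of Theorem 10.12 (1's just below the diagonal and −a0, ..., −a_{k−1} in the last column), assembles possibilities (a) and (b) as 8 × 8 block diagonal matrices, and verifies with exact integer arithmetic that m(t) = f1(t)f2(t)² annihilates each of them while the proper divisor f1(t)f2(t) does not. Helper names are illustrative.

```python
def pmul(p, q):
    """Product of coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def companion(p):
    """Companion matrix of monic p = [a0, ..., a_{k-1}, 1]: 1's below the diagonal, last column -a_i."""
    k = len(p) - 1
    c = [[0] * k for _ in range(k)]
    for i in range(1, k):
        c[i][i - 1] = 1
    for i in range(k):
        c[i][k - 1] = -p[i]
    return c

def block_diag(*blocks):
    n = sum(len(b) for b in blocks)
    out = [[0] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, x in enumerate(row):
                out[offset + i][offset + j] = x
        offset += len(b)
    return out

def poly_at_matrix(p, a):
    """p(A) by Horner's rule, in exact integer arithmetic."""
    n = len(a)
    result = [[0] * n for _ in range(n)]
    for c in reversed(p):
        result = [[sum(result[i][l] * a[l][j] for l in range(n)) + (c if i == j else 0)
                   for j in range(n)] for i in range(n)]
    return result

def is_zero(m):
    return all(x == 0 for row in m for x in row)

f1 = [-7, -4, 6, -4, 1]     # t^4 - 4t^3 + 6t^2 - 4t - 7
f2 = [-3, 1]                # t - 3
f2_sq = pmul(f2, f2)        # t^2 - 6t + 9

M_a = block_diag(companion(f1), companion(f2_sq), companion(f2_sq))
M_b = block_diag(companion(f1), companion(f2_sq), companion(f2), companion(f2))

m = pmul(f1, f2_sq)         # the minimal polynomial f1(t) f2(t)^2
for M in (M_a, M_b):
    assert len(M) == 8
    assert is_zero(poly_at_matrix(m, M))                 # m(M) = 0
    assert not is_zero(poly_at_matrix(pmul(f1, f2), M))  # the proper divisor f1 f2 fails
print(companion(f1))  # [[0, 0, 0, 7], [1, 0, 0, 4], [0, 1, 0, -6], [0, 0, 1, 4]]
```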

10.10 Quotient Spaces

Let V be a vector space over a field K and let W be a subspace of V. If υ is any vector in V, we write υ + W for the set of sums υ + w with w ∈ W; that is,

$$\upsilon + W = \{\upsilon + w : w \in W\}$$

These sets are called the cosets of W in V. We show (Problem 10.22) that these cosets partition V into mutually disjoint subsets.

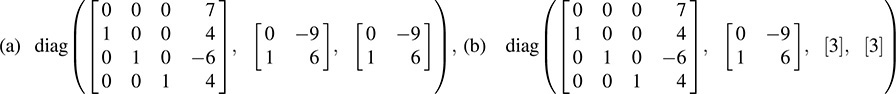

EXAMPLE 10.7 Let W be the subspace of R² defined by

$$W = \{(a, b) : a = b\}$$

that is, W is the line given by the equation x − y = 0. We can view υ + W as a translation of the line obtained by adding the vector υ to each point in W. As shown in Fig. 10-2, the coset υ + W is also a line, and it is parallel to W. Thus, the cosets of W in R2 are precisely all the lines parallel to W.

In the following theorem, we use the cosets of a subspace W of a vector space V to define a new vector space; it is called the quotient space of V by W and is denoted by V/W.

THEOREM 10.15: Let W be a subspace of a vector space V over a field K. Then the cosets of W in V form a vector space over K with the following operations of addition and scalar multiplication:

(i) (u + W) + (υ + W) = (u + υ) + W, (ii) k(u + W) = ku + W, where k ∈ K

We note that, in the proof of Theorem 10.15 (Problem 10.24), it is first necessary to show that the operations are well defined; that is, whenever u + W = u′ + W and υ + W = υ′ + W, then

(i) (u + υ) + W = (u′ + υ′) + W and (ii) ku + W = ku′ + W for any k ∈ K
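For the line W of Example 10.7, the coset containing (x, y) is the line x − y = c, so the number c = x − y labels it. The sketch below (helper names are illustrative) checks on sample vectors that the coset operations do not depend on the chosen representatives, using the criterion u + W = u′ + W exactly when u − u′ ∈ W.

```python
from fractions import Fraction

def in_W(v):
    """W = {(a, b) : a = b}, the line x - y = 0 in R^2."""
    return v[0] == v[1]

def same_coset(u, v):
    """u + W = v + W exactly when u - v lies in W."""
    return in_W((u[0] - v[0], u[1] - v[1]))

def label(v):
    """The coset v + W is the line x - y = c with c = label(v)."""
    return v[0] - v[1]

def add(u, v):
    return (u[0] + v[0], u[1] + v[1])

def scale(k, v):
    return (k * v[0], k * v[1])

u, u2 = (3, 1), (5, 3)       # u - u2 = (-2, -2) is in W, so u + W = u2 + W
v, v2 = (0, 4), (-1, 3)      # v - v2 = (1, 1) is in W, so v + W = v2 + W
assert same_coset(u, u2) and same_coset(v, v2)

# Addition and scalar multiplication of cosets do not depend on the representatives.
assert same_coset(add(u, v), add(u2, v2))
k = Fraction(7, 2)
assert same_coset(scale(k, u), scale(k, u2))

print(label(add(u, v)), label(u) + label(v))  # -2 -2
```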

In the case of an invariant subspace, we have the following useful result (proved in Problem 10.27).

THEOREM 10.16: Suppose W is a subspace invariant under a linear operator T:V → V. Then T induces a linear operator T̄ on V/W defined by T̄(υ + W) = T(υ) + W. Moreover, if T is a zero of any polynomial, then so is T̄. Thus, the minimal polynomial of T̄ divides the minimal polynomial of T.

SOLVED PROBLEMS

Invariant Subspaces

10.1. Suppose T:V → V is linear. Show that each of the following is invariant under T:

(a) {0}, (b) V, (c) kernel of T, (d) image of T.

(a) We have T(0) = 0 ∈ {0}; hence, {0} is invariant under T.

(b) For every υ ∈ V, T(υ) ∈ V; hence, V is invariant under T.

(c) Let u ∈ Ker T. Then T(u) = 0 ∈ Ker T because the kernel of T is a subspace of V. Thus, Ker T is invariant under T.

(d) Because T(υ) ∈ Im T for every υ ∈ V, it is certainly true when υ ∈ Im T. Hence, the image of T is invariant under T.

10.2. Suppose {Wi} is a collection of T-invariant subspaces of a vector space V. Show that the intersection W = ∩i Wi is also T-invariant.

Suppose υ ∈ W; then υ ∈ Wi for every i. Because Wi is T-invariant, T(υ) ∈ Wi for every i. Thus, T(υ) ∈ W and so W is T-invariant.

10.3. Prove Theorem 10.2: Let T:V → V be linear. For any polynomial f(t), the kernel of f(T) is invariant under T.

Suppose υ ∈ Ker f(t)—that is, f(T)(υ) = 0. We need to show that T(υ) also belongs to the kernel of f(T)—that is, f(T)(T(υ)) = (f(T) ∘ T)(υ) = 0. Because f(t)t = tf(t), we have f(T) ∘ T = T ∘ f(t). Thus, as required,

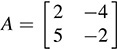

10.4. Find all invariant subspaces of A viewed as an operator on R².

By Problem 10.1, R² and {0} are invariant under A. Now if A has any other invariant subspace, it must be one-dimensional. However, the characteristic polynomial of A is

Hence, A has no eigenvalues (in R) and so A has no eigenvectors. But the one-dimensional invariant subspaces correspond to the eigenvectors; thus, R² and {0} are the only subspaces invariant under A.

10.5. Prove Theorem 10.3: Suppose W is T-invariant. Then T has a triangular block representation

$$\begin{bmatrix} A & B \\ 0 & C \end{bmatrix}$$

where A is the matrix representation of the restriction T̂ of T to W.

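We choose a basis {w1, ..., wr} of W and extend it to a basis {w1, ..., wr, υ1, ..., υs} of V. Because W is T-invariant, each T(wi) is a linear combination of the wj alone. We have

$$\begin{aligned}
\hat{T}(w_1) &= T(w_1) = a_{11}w_1 + \cdots + a_{1r}w_r \\
&\;\;\vdots \\
\hat{T}(w_r) &= T(w_r) = a_{r1}w_1 + \cdots + a_{rr}w_r \\
T(\upsilon_1) &= b_{11}w_1 + \cdots + b_{1r}w_r + c_{11}\upsilon_1 + \cdots + c_{1s}\upsilon_s \\
&\;\;\vdots \\
T(\upsilon_s) &= b_{s1}w_1 + \cdots + b_{sr}w_r + c_{s1}\upsilon_1 + \cdots + c_{ss}\upsilon_s
\end{aligned}$$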

But the matrix of T in this basis is the transpose of the matrix of coefficients in the above system of equations (Section 6.2). Therefore, it has the form

$$\begin{bmatrix} A & B \\ 0 & C \end{bmatrix}$$

where A is the transpose of the matrix of coefficients for the obvious subsystem. By the same argument, A is the matrix of T̂ relative to the basis {wi} of W.

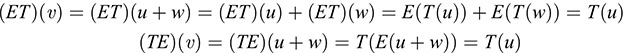

10.6. Let T̂ denote the restriction of an operator T to an invariant subspace W. Prove

(a) For any polynomial f(t), f(T̂)(w) = f(T)(w).

(b) The minimal polynomial of T̂ divides the minimal polynomial of T.

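(a) If f(t) = 0 or if f(t) is a constant (i.e., of degree 0), then the result clearly holds. Assume deg f = n ≥ 1 and that the result holds for polynomials of degree less than n. Suppose that

$$f(t) = a_nt^n + a_{n-1}t^{n-1} + \cdots + a_1t + a_0$$

Then

$$\begin{aligned}
f(\hat{T})(w) &= (a_n\hat{T}^{n-1})(\hat{T}(w)) + (a_{n-1}\hat{T}^{n-1} + \cdots + a_0I)(w) \\
&= (a_nT^{n-1})(T(w)) + (a_{n-1}T^{n-1} + \cdots + a_0I)(w) \\
&= f(T)(w)
\end{aligned}$$

where the second equality uses T̂(w) = T(w) ∈ W and the inductive hypothesis applied to the polynomials aₙtⁿ⁻¹ and aₙ₋₁tⁿ⁻¹ + ⋯ + a₀, both of degree less than n.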

(b) Let m(t) denote the minimal polynomial of T. Then by (a), m(T̂)(w) = m(T)(w) = 0(w) = 0 for every w ∈ W; that is, T̂ is a zero of the polynomial m(t). Hence, the minimal polynomial of T̂ divides m(t).

Invariant Direct-Sum Decompositions

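10.7. Prove Theorem 10.4: Suppose W1, W2, ..., Wr are subspaces of V with respective bases

$$B_1 = \{w_{11}, w_{12}, \ldots, w_{1n_1}\}, \quad \ldots, \quad B_r = \{w_{r1}, w_{r2}, \ldots, w_{rn_r}\}$$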

Then V is the direct sum of the Wi if and only if the union B = ∪i Bi is a basis of V.

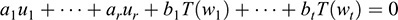

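Suppose B is a basis of V. Then, for any υ ∈ V,

$$\upsilon = a_{11}w_{11} + \cdots + a_{1n_1}w_{1n_1} + \cdots + a_{r1}w_{r1} + \cdots + a_{rn_r}w_{rn_r} = w_1 + w_2 + \cdots + w_r$$

where $w_i = a_{i1}w_{i1} + \cdots + a_{in_i}w_{in_i} \in W_i$. We next show that such a sum is unique. Suppose

$$\upsilon = w_1' + w_2' + \cdots + w_r', \qquad w_i' \in W_i$$

Because {wi1, ..., wini} is a basis of Wi, $w_i' = b_{i1}w_{i1} + \cdots + b_{in_i}w_{in_i}$, and so

$$\upsilon = b_{11}w_{11} + \cdots + b_{1n_1}w_{1n_1} + \cdots + b_{r1}w_{r1} + \cdots + b_{rn_r}w_{rn_r}$$

Because B is a basis of V, aij = bij for each i and each j. Hence, w′i = wi, and so the sum for υ is unique. Accordingly, V is the direct sum of the Wi.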

Conversely, suppose V is the direct sum of the Wi. Then for any υ ∈ V, υ = w1 + ⋯ + wr, where wi ∈ Wi. Because {wi1, ..., wini} is a basis of Wi, each wi is a linear combination of the wij, and so υ is a linear combination of the elements of B. Thus, B spans V. We now show that B is linearly independent. Suppose

$$a_{11}w_{11} + \cdots + a_{1n_1}w_{1n_1} + \cdots + a_{r1}w_{r1} + \cdots + a_{rn_r}w_{rn_r} = 0$$

Note that $a_{i1}w_{i1} + \cdots + a_{in_i}w_{in_i} \in W_i$. We also have that 0 = 0 + 0 + ⋯ + 0, with each summand 0 ∈ Wi. Because such a sum for 0 is unique,

$$a_{i1}w_{i1} + \cdots + a_{in_i}w_{in_i} = 0 \qquad\text{for } i = 1, \ldots, r$$

The independence of the bases {wi1, ..., wini} implies that all the a’s are 0. Thus, B is linearly independent and is a basis of V.

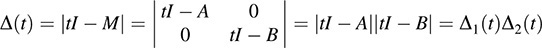

10.8. Suppose T:V → V is linear and suppose T = T1 ⊕ T2 with respect to a T-invariant direct-sum decomposition V = U ⊕ W. Show that

(a) m(t) is the least common multiple of m1(t) and m2(t), where m(t), m1(t), m2(t) are the minimum polynomials of T, T1, T2, respectively.

(b) Δ(t) = Δ1(t)Δ2(t), where Δ(t), Δ1(t), Δ2(t) are the characteristic polynomials of T, T1, T2, respectively.

(a) By Problem 10.6, each of m1(t) and m2(t) divides m(t). Now suppose f(t) is a multiple of both m1(t) and m2(t); then f(T1)(U) = 0 and f(T2)(W) = 0. Let υ ∈ V; then υ = u + w with u ∈ U and w ∈ W. Now

$$f(T)\upsilon = f(T)u + f(T)w = f(T_1)u + f(T_2)w = 0 + 0 = 0$$

That is, T is a zero of f(t). Hence, m(t) divides f(t), and so m(t) is the least common multiple of m1(t) and m2(t).

(b) By Theorem 10.5, T has a matrix representation

$$M = \begin{bmatrix} A & 0 \\ 0 & B \end{bmatrix}$$

where A and B are matrix representations of T1 and T2, respectively. Then, as required,

$$\Delta(t) = |tI - M| = \begin{vmatrix} tI - A & 0 \\ 0 & tI - B \end{vmatrix} = |tI - A|\,|tI - B| = \Delta_1(t)\Delta_2(t)$$

10.9. Prove Theorem 10.7: Suppose T:V → V is linear, and suppose f(t) = g(t)h(t), where g(t) and h(t) are relatively prime polynomials and f(T) = 0. Then V is the direct sum of the T-invariant subspaces U and W, where U = Ker g(T) and W = Ker h(T).

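Note first that U and W are T-invariant by Theorem 10.2. Now, because g(t) and h(t) are relatively prime, there exist polynomials r(t) and s(t) such that

$$r(t)g(t) + s(t)h(t) = 1$$

Hence, for the operator T,

$$r(T)g(T) + s(T)h(T) = I \qquad (*)$$

Let υ ∈ V; then, by (*),

$$\upsilon = r(T)g(T)\upsilon + s(T)h(T)\upsilon$$

But the first term in this sum belongs to W = Ker h(T), because

$$h(T)r(T)g(T)\upsilon = r(T)g(T)h(T)\upsilon = r(T)f(T)\upsilon = r(T)0\upsilon = 0$$

Similarly, the second term belongs to U. Hence, V is the sum of U and W.

To prove that V = U ⊕ W, we must show that a sum υ = u + w with u ∈ U, w ∈ W, is uniquely determined by υ. Applying the operator r(T)g(T) to υ = u + w and using g(T)u = 0, we obtain

$$r(T)g(T)\upsilon = r(T)g(T)u + r(T)g(T)w = r(T)g(T)w$$

Also, applying (*) to w alone and using h(T)w = 0, we obtain

$$w = r(T)g(T)w + s(T)h(T)w = r(T)g(T)w$$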

Both of the above formulas give us w = r(T)g(T)υ, and so w is uniquely determined by υ. Similarly u is uniquely determined by υ. Hence, V = U ⊕ W, as required.
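The proof is constructive, so it can be traced in code. The sketch below (exact rational arithmetic; all function names, the matrix, and the vector are illustrative assumptions) finds r(t) and s(t) with r(t)g(t) + s(t)h(t) = 1 by the extended Euclidean algorithm for polynomials, then splits a vector as υ = u + w with w = r(T)g(T)υ ∈ Ker h(T) and u = s(T)h(T)υ ∈ Ker g(T). Here T is given by a matrix whose minimal polynomial is f(t) = (t − 1)²(t − 2), with g(t) = (t − 1)² and h(t) = t − 2.

```python
from fractions import Fraction

# Polynomials are coefficient lists, lowest degree first: [c0, c1, ..., cn].

def trim(p):
    """Convert to Fractions and drop trailing zero coefficients."""
    p = [Fraction(c) for c in p]
    while p and p[-1] == 0:
        p.pop()
    return p

def padd(p, q):
    n = max(len(p), len(q))
    return trim([(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)])

def pmul(p, q):
    out = [Fraction(0)] * max(len(p) + len(q) - 1, 0)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return trim(out)

def pdivmod(p, q):
    """Long division over Q: p = quot * q + rem with deg rem < deg q (q nonzero)."""
    q, rem = trim(q), trim(p)
    quot = [Fraction(0)] * max(len(rem) - len(q) + 1, 1)
    while rem and len(rem) >= len(q):
        shift = len(rem) - len(q)
        coef = rem[-1] / q[-1]
        quot[shift] = coef
        rem = padd(rem, pmul([0] * shift + [-coef], q))   # cancel the leading term
    return trim(quot), rem

def ext_gcd(g, h):
    """Return (d, r, s) with r*g + s*h = d, where d = gcd(g, h) is monic."""
    a, r0, s0 = trim(g), [Fraction(1)], []
    b, r1, s1 = trim(h), [], [Fraction(1)]
    while b:                       # invariant: a = r0*g + s0*h and b = r1*g + s1*h
        quot, rem = pdivmod(a, b)
        neg_q = [-c for c in quot]
        a, b = b, rem
        r0, r1 = r1, padd(r0, pmul(neg_q, r1))
        s0, s1 = s1, padd(s0, pmul(neg_q, s1))
    lead = a[-1]
    return [c / lead for c in a], [c / lead for c in r0], [c / lead for c in s0]

def poly_apply(p, a, v):
    """p(A) v by Horner's rule: result <- A result + c v."""
    result = [Fraction(0)] * len(v)
    for c in reversed(p):
        result = [sum(a[i][j] * result[j] for j in range(len(v))) + c * v[i]
                  for i in range(len(v))]
    return result

A = [[1, 1, 0],
     [0, 1, 0],
     [0, 0, 2]]        # minimal polynomial f(t) = (t - 1)^2 (t - 2)
g = [1, -2, 1]         # g(t) = (t - 1)^2
h = [-2, 1]            # h(t) = t - 2

d, r, s = ext_gcd(g, h)
assert d == [1]        # g and h are relatively prime

v = [3, 5, 7]
w = poly_apply(pmul(r, g), A, v)   # r(T)g(T)v, in W = Ker h(T)
u = poly_apply(pmul(s, h), A, v)   # s(T)h(T)v, in U = Ker g(T)
assert [x + y for x, y in zip(u, w)] == v
assert poly_apply(g, A, u) == [0, 0, 0] and poly_apply(h, A, w) == [0, 0, 0]
print([int(x) for x in u], [int(x) for x in w])  # [3, 5, 0] [0, 0, 7]
```

For these inputs the algorithm returns r(t) = 1 and s(t) = −t, and indeed (t − 1)² − t(t − 2) = 1.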

10.10. Prove Theorem 10.8: In Theorem 10.7 (Problem 10.9), if f(t) is the minimal polynomial of T (and g(t) and h(t) are monic), then g(t) is the minimal polynomial of the restriction T1 of T to U and h(t) is the minimal polynomial of the restriction T2 of T to W.

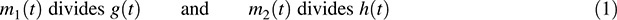

Let m1(t) and m2(t) be the minimal polynomials of T1 and T2, respectively. Note that g(T1) = 0 and h(T2) = 0 because U = Ker g(T) and W = Ker h(T). Thus,

$$m_1(t) \text{ divides } g(t) \qquad\text{and}\qquad m_2(t) \text{ divides } h(t) \qquad (1)$$

By Problem 10.8, f(t) is the least common multiple of m1(t) and m2(t). But m1(t) and m2(t) are relatively prime because g(t) and h(t) are relatively prime. Accordingly, f(t) = m1(t)m2(t). We also have that f(t) = g(t)h(t). These two equations together with (1) and the fact that all the polynomials are monic imply that g(t) = m1(t) and h(t) = m2(t), as required.

10.11. Prove the Primary Decomposition Theorem 10.6: Let T:V → V be a linear operator with minimal polynomial

$$m(t) = f_1(t)^{n_1} f_2(t)^{n_2} \cdots f_r(t)^{n_r}$$

where the fi(t) are distinct monic irreducible polynomials. Then V is the direct sum of T-invariant subspaces W1, ..., Wr, where Wi is the kernel of fi(T)ⁿⁱ. Moreover, fi(t)ⁿⁱ is the minimal polynomial of the restriction of T to Wi.

The proof is by induction on r. The case r = 1 is trivial. Suppose that the theorem has been proved for r − 1. Because f1(t)ⁿ¹ and f2(t)ⁿ² ⋯ fr(t)ⁿʳ are relatively prime, Theorem 10.7 lets us write V as the direct sum of T-invariant subspaces W1 and V1, where W1 is the kernel of f1(T)ⁿ¹ and V1 is the kernel of f2(T)ⁿ² ⋯ fr(T)ⁿʳ. By Theorem 10.8, the minimal polynomials of the restrictions of T to W1 and V1 are f1(t)ⁿ¹ and f2(t)ⁿ² ⋯ fr(t)ⁿʳ, respectively.

Denote the restriction of T to V1 by T̂1. By the inductive hypothesis, V1 is the direct sum of subspaces W2, ..., Wr such that Wi is the kernel of fi(T̂1)ⁿⁱ and such that fi(t)ⁿⁱ is the minimal polynomial for the restriction of T̂1 to Wi. But the kernel of fi(T)ⁿⁱ, for i = 2, ..., r, is necessarily contained in V1, because fi(t)ⁿⁱ divides f2(t)ⁿ² ⋯ fr(t)ⁿʳ. Thus, the kernel of fi(T)ⁿⁱ is the same as the kernel of fi(T̂1)ⁿⁱ, which is Wi. Also, the restriction of T to Wi is the same as the restriction of T̂1 to Wi (for i = 2, ..., r); hence, fi(t)ⁿⁱ is also the minimal polynomial for the restriction of T to Wi. Thus, V = W1 ⊕ W2 ⊕ ⋯ ⊕ Wr is the desired decomposition of T.

10.12. Prove Theorem 10.9: A linear operator T:V → V has a diagonal matrix representation if and only if its minimal polynomial m(t) is a product of distinct linear polynomials.

Suppose m(t) is a product of distinct linear polynomials, say,

$$m(t) = (t - \lambda_1)(t - \lambda_2) \cdots (t - \lambda_r)$$

where the λi are distinct scalars. By the Primary Decomposition Theorem, V is the direct sum of subspaces W1, ..., Wr, where Wi = Ker(T − λiI). Thus, if υ ∈ Wi, then (T − λiI)(υ) = 0 or T(υ) = λiυ. In other words, every nonzero vector in Wi is an eigenvector belonging to the eigenvalue λi. By Theorem 10.4, the union of bases for W1, ..., Wr is a basis of V. This basis consists of eigenvectors, and so T is diagonalizable.

Conversely, suppose T is diagonalizable (i.e., V has a basis consisting of eigenvectors of T). Let λ1, ..., λs be the distinct eigenvalues of T. Then the operator

$$f(T) = (T - \lambda_1I)(T - \lambda_2I) \cdots (T - \lambda_sI)$$

maps each basis vector into 0. Thus, f(T) = 0, and hence, the minimal polynomial m(t) of T divides the polynomial

$$f(t) = (t - \lambda_1)(t - \lambda_2) \cdots (t - \lambda_s)$$

Accordingly, m(t) is a product of distinct linear polynomials.

Nilpotent Operators, Jordan Canonical Form

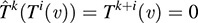

10.13. Let T:V → V be linear. Suppose, for υ ∈ V, Tᵏ(υ) = 0 but Tᵏ⁻¹(υ) ≠ 0. Prove

(a) The set S = {υ, T(υ), ..., Tᵏ⁻¹(υ)} is linearly independent.

(b) The subspace W generated by S is T-invariant.

(c) The restriction T̂ of T to W is nilpotent of index k.

(d) Relative to the basis {Tᵏ⁻¹(υ), ..., T(υ), υ} of W, the matrix of T̂ is the k-square Jordan nilpotent block Nk of index k (see Example 10.4).

(a) Suppose

$$a\upsilon + a_1T(\upsilon) + a_2T^2(\upsilon) + \cdots + a_{k-1}T^{k-1}(\upsilon) = 0 \qquad (*)$$

Applying Tᵏ⁻¹ to (*) and using Tᵏ(υ) = 0, we obtain aTᵏ⁻¹(υ) = 0; because Tᵏ⁻¹(υ) ≠ 0, a = 0. Now applying Tᵏ⁻² to (*) and using Tᵏ(υ) = 0 and a = 0, we find a1Tᵏ⁻¹(υ) = 0; hence, a1 = 0. Next applying Tᵏ⁻³ to (*) and using Tᵏ(υ) = 0 and a = a1 = 0, we obtain a2Tᵏ⁻¹(υ) = 0; hence, a2 = 0. Continuing this process, we find that all the a’s are 0; hence, S is independent.

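(b) Let w ∈ W. Then

$$w = b\upsilon + b_1T(\upsilon) + b_2T^2(\upsilon) + \cdots + b_{k-1}T^{k-1}(\upsilon)$$

Using Tᵏ(υ) = 0, we have

$$T(w) = bT(\upsilon) + b_1T^2(\upsilon) + \cdots + b_{k-2}T^{k-1}(\upsilon) \in W$$

Thus, W is T-invariant.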

(c) By hypothesis, Tᵏ(υ) = 0. Hence, for i = 0, ..., k − 1,

$$\hat{T}^k(T^i(\upsilon)) = T^{k+i}(\upsilon) = 0$$

That is, applying T̂ᵏ to each generator of W, we obtain 0; hence, T̂ᵏ = 0 and so T̂ is nilpotent of index at most k. On the other hand, T̂ᵏ⁻¹(υ) = Tᵏ⁻¹(υ) ≠ 0; hence, T̂ is nilpotent of index exactly k.

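(d) For the basis {Tᵏ⁻¹(υ), Tᵏ⁻²(υ), ..., T(υ), υ} of W,

$$\begin{aligned}
\hat{T}(T^{k-1}(\upsilon)) &= T^k(\upsilon) = 0 \\
\hat{T}(T^{k-2}(\upsilon)) &= T^{k-1}(\upsilon) \\
&\;\;\vdots \\
\hat{T}(T(\upsilon)) &= T^2(\upsilon) \\
\hat{T}(\upsilon) &= T(\upsilon)
\end{aligned}$$

Hence, as required, the matrix of T̂ in this basis is the k-square Jordan nilpotent block Nk.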

10.14. Let T:V → V be linear. Let U = Ker Tⁱ and W = Ker Tⁱ⁺¹. Show that

(a) U ⊆ W, (b) T(W) ⊆ U.

(a) Suppose u ∈ U = Ker Tⁱ. Then Tⁱ(u) = 0 and so Tⁱ⁺¹(u) = T(Tⁱ(u)) = T(0) = 0. Thus, u ∈ Ker Tⁱ⁺¹ = W. But this is true for every u ∈ U; hence, U ⊆ W.

(b) Similarly, if w ∈ W = Ker Tⁱ⁺¹, then Tⁱ⁺¹(w) = 0. Thus, Tⁱ(T(w)) = Tⁱ⁺¹(w) = 0, so T(w) ∈ Ker Tⁱ = U. Hence, T(W) ⊆ U.

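10.15. Let T:V → V be linear. Let X = Ker Tⁱ⁻², Y = Ker Tⁱ⁻¹, Z = Ker Tⁱ. Therefore (Problem 10.14), X ⊆ Y ⊆ Z. Suppose

$$\{u_1, \ldots, u_r\}, \qquad \{u_1, \ldots, u_r, \upsilon_1, \ldots, \upsilon_s\}, \qquad \{u_1, \ldots, u_r, \upsilon_1, \ldots, \upsilon_s, w_1, \ldots, w_t\}$$

are bases of X, Y, Z, respectively. Show that

$$S = \{u_1, \ldots, u_r, T(w_1), \ldots, T(w_t)\}$$

is contained in Y and is linearly independent.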

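By Problem 10.14, T(Z) ⊆ Y, and hence S ⊆ Y. Now suppose S is linearly dependent. Then there exists a relation

$$a_1u_1 + \cdots + a_ru_r + b_1T(w_1) + \cdots + b_tT(w_t) = 0$$

where at least one coefficient is not zero. Furthermore, because {ui} is independent, at least one of the bk must be nonzero. Transposing, we find

$$b_1T(w_1) + \cdots + b_tT(w_t) = -a_1u_1 - \cdots - a_ru_r \in X = \operatorname{Ker} T^{i-2}$$

Hence,

$$T^{i-2}\big(b_1T(w_1) + \cdots + b_tT(w_t)\big) = 0$$

Thus,

$$T^{i-1}(b_1w_1 + \cdots + b_tw_t) = 0, \qquad\text{and so}\qquad b_1w_1 + \cdots + b_tw_t \in Y = \operatorname{Ker} T^{i-1}$$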

Because {ui, υj} generates Y, we obtain a relation among the ui, υj, wk where one of the coefficients (i.e., one of the bk) is not zero. This contradicts the fact that {ui, υj, wk} is independent. Hence, S must also be independent.

10.16. Prove Theorem 10.10: Let T:V → V be a nilpotent operator of index k. Then T has a unique block diagonal matrix representation consisting of Jordan nilpotent blocks N. There is at least one N of order k, and all other N are of orders ≤k. The total number of N of all orders is equal to the nullity of T.

Suppose dim V = n. Let W1 = Ker T, W2 = Ker T², ..., Wk = Ker Tᵏ. Let us set mi = dim Wi, for i = 1, ..., k. Because T is of index k, Wk = V and $W_{k-1} \ne V$, and so $m_{k-1} < m_k = n$. By Problem 10.14,

$$W_1 \subseteq W_2 \subseteq \cdots \subseteq W_k = V$$

Thus, by induction, we can choose a basis {u1, ..., un} of V such that {u1, ..., umi} is a basis of Wi.

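We now choose a new basis for V with respect to which T has the desired form. It will be convenient to label the members of this new basis by pairs of indices. We begin by setting

$$\upsilon(1, k) = u_{m_{k-1}+1}, \quad \upsilon(2, k) = u_{m_{k-1}+2}, \quad \ldots, \quad \upsilon(m_k - m_{k-1}, k) = u_{m_k}$$

and setting

$$\upsilon(1, k-1) = T\upsilon(1, k), \quad \upsilon(2, k-1) = T\upsilon(2, k), \quad \ldots, \quad \upsilon(m_k - m_{k-1}, k-1) = T\upsilon(m_k - m_{k-1}, k)$$

By the preceding problem,

$$S_1 = \{u_1, \ldots, u_{m_{k-2}},\; \upsilon(1, k-1), \ldots, \upsilon(m_k - m_{k-1}, k-1)\}$$

is a linearly independent subset of $W_{k-1}$. We extend S1 to a basis of $W_{k-1}$ by adjoining new elements (if necessary), which we denote by

$$\upsilon(m_k - m_{k-1} + 1, k-1), \quad \upsilon(m_k - m_{k-1} + 2, k-1), \quad \ldots, \quad \upsilon(m_{k-1} - m_{k-2}, k-1)$$

Next we set

$$\upsilon(1, k-2) = T\upsilon(1, k-1), \quad \upsilon(2, k-2) = T\upsilon(2, k-1), \quad \ldots, \quad \upsilon(m_{k-1} - m_{k-2}, k-2) = T\upsilon(m_{k-1} - m_{k-2}, k-1)$$

Again by the preceding problem,

$$S_2 = \{u_1, \ldots, u_{m_{k-3}},\; \upsilon(1, k-2), \ldots, \upsilon(m_{k-1} - m_{k-2}, k-2)\}$$

is a linearly independent subset of $W_{k-2}$, which we can extend to a basis of $W_{k-2}$ by adjoining elements

$$\upsilon(m_{k-1} - m_{k-2} + 1, k-2), \quad \upsilon(m_{k-1} - m_{k-2} + 2, k-2), \quad \ldots, \quad \upsilon(m_{k-2} - m_{k-3}, k-2)$$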

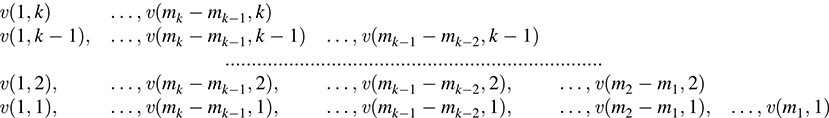

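Continuing in this manner, we get a new basis for V, which for convenient reference we arrange as follows:

$$\begin{array}{llll}
\upsilon(1, k), \ldots, \upsilon(m_k - m_{k-1}, k) & & & \\
\upsilon(1, k-1), \ldots, \upsilon(m_k - m_{k-1}, k-1), & \ldots, \upsilon(m_{k-1} - m_{k-2}, k-1) & & \\
\cdots & & & \\
\upsilon(1, 2), \ldots, \upsilon(m_k - m_{k-1}, 2), & \ldots, \upsilon(m_{k-1} - m_{k-2}, 2), & \ldots, \upsilon(m_2 - m_1, 2) & \\
\upsilon(1, 1), \ldots, \upsilon(m_k - m_{k-1}, 1), & \ldots, \upsilon(m_{k-1} - m_{k-2}, 1), & \ldots, \upsilon(m_2 - m_1, 1), & \ldots, \upsilon(m_1, 1)
\end{array}$$

The bottom row forms a basis of W1, the bottom two rows form a basis of W2, and so forth. But what is important for us is that T maps each vector into the vector immediately below it in the table or into 0 if the vector is in the bottom row. That is,

$$T\upsilon(i, j) = \begin{cases} \upsilon(i, j-1) & \text{for } j > 1 \\ 0 & \text{for } j = 1 \end{cases}$$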

Now it is clear [see Problem 10.13(d)] that T will have the desired form if the υ(i, j) are ordered lexicographically: beginning with υ(1, 1) and moving up the first column to υ(1, k), then jumping to υ(2, 1) and moving up the second column as far as possible.

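Moreover, there will be exactly $m_k - m_{k-1}$ diagonal entries of order k. Also, there will be

$$\begin{aligned}
(m_{k-1} - m_{k-2}) - (m_k - m_{k-1}) &= 2m_{k-1} - m_k - m_{k-2} &&\text{diagonal entries of order } k - 1 \\
&\;\;\vdots \\
2m_2 - m_1 - m_3 & &&\text{diagonal entries of order } 2 \\
2m_1 - m_2 & &&\text{diagonal entries of order } 1
\end{aligned}$$

as can be read off directly from the table. In particular, because the numbers m1, …, mk are uniquely determined by T, the number of diagonal entries of each order is uniquely determined by T. Finally, the identity

$$m_1 = (m_k - m_{k-1}) + (2m_{k-1} - m_k - m_{k-2}) + \cdots + (2m_2 - m_1 - m_3) + (2m_1 - m_2)$$

shows that the nullity m1 of T is the total number of diagonal entries of T.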

10.17. Let  . The reader can verify that A and B are both nilpotent of index 3; that is, $A^3 = 0$ but $A^2 \neq 0$, and $B^3 = 0$ but $B^2 \neq 0$. Find the nilpotent matrices $M_A$ and $M_B$ in canonical form that are similar to A and B, respectively.

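Because A and B are nilpotent of index 3, $M_A$ and $M_B$ must each contain a Jordan nilpotent block of order 3, and none greater than 3. Note that rank(A) = 2 and rank(B) = 3, so nullity(A) = 5 − 2 = 3 and nullity(B) = 5 − 3 = 2. Thus, $M_A$ must contain three diagonal blocks, which must be one of order 3 and two of order 1; and $M_B$ must contain two diagonal blocks, which must be one of order 3 and one of order 2. Namely,

$$M_A = \begin{bmatrix} 0&1&0&0&0 \\ 0&0&1&0&0 \\ 0&0&0&0&0 \\ 0&0&0&0&0 \\ 0&0&0&0&0 \end{bmatrix}, \qquad M_B = \begin{bmatrix} 0&1&0&0&0 \\ 0&0&1&0&0 \\ 0&0&0&0&0 \\ 0&0&0&0&1 \\ 0&0&0&0&0 \end{bmatrix}$$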
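The reasoning above amounts to choosing a partition of 5 whose largest part is the index (3) and whose number of parts is the nullity. A minimal sketch, with hypothetical helper names, that enumerates those partitions and assembles the block diagonal matrix of Jordan nilpotent blocks (1's just above the diagonal):

```python
def partitions(n, max_part):
    """Yield the partitions of n into parts <= max_part, largest part first."""
    if n == 0:
        yield []
        return
    for first in range(min(n, max_part), 0, -1):
        for rest in partitions(n - first, first):
            yield [first] + rest


def nilpotent_forms(n, index, nullity):
    """Block orders allowed by Theorem 10.10: one block of order `index`,
    none larger, and `nullity` blocks in total."""
    return [p for p in partitions(n, index) if p[0] == index and len(p) == nullity]


def jordan_nilpotent(orders):
    """Block diagonal matrix of Jordan nilpotent blocks of the given orders."""
    n = sum(orders)
    m = [[0] * n for _ in range(n)]
    start = 0
    for size in orders:
        for i in range(start, start + size - 1):
            m[i][i + 1] = 1     # 1 just above the diagonal inside the block
        start += size
    return m


print(nilpotent_forms(5, 3, 3))   # [[3, 1, 1]]  (M_A)
print(nilpotent_forms(5, 3, 2))   # [[3, 2]]     (M_B)
```

Each call returns a single partition, which is why $M_A$ and $M_B$ are forced.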

10.18. Prove Theorem 10.11 on the Jordan canonical form for an operator T.

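By the primary decomposition theorem, T is decomposable into operators $T_1, \dots, T_r$; that is, $T = T_1 \oplus \cdots \oplus T_r$, where $(t - \lambda_i)^{m_i}$ is the minimal polynomial of $T_i$. Thus, in particular,

$$(T_1 - \lambda_1 I)^{m_1} = 0, \quad \dots, \quad (T_r - \lambda_r I)^{m_r} = 0$$

Set $N_i = T_i - \lambda_i I$. Then, for $i = 1, \dots, r$,

$$T_i = N_i + \lambda_i I, \qquad \text{where } N_i^{m_i} = 0$$

That is, $T_i$ is the sum of the scalar operator $\lambda_i I$ and a nilpotent operator $N_i$, which is of index $m_i$ because $(t - \lambda_i)^{m_i}$ is the minimal polynomial of $T_i$.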

Now, by Theorem 10.10 on nilpotent operators, we can choose a basis so that Ni is in canonical form. In this basis, Ti = Ni + λiI is represented by a block diagonal matrix Mi whose diagonal entries are the matrices Jij. The direct sum J of the matrices Mi is in Jordan canonical form and, by Theorem 10.5, is a matrix representation of T.

Last, we must show that the blocks Jij satisfy the required properties. Property (i) follows from the fact that Ni is of index mi. Property (ii) is true because T and J have the same characteristic polynomial. Property (iii) is true because the nullity of Ni = Ti − λiI is equal to the geometric multiplicity of the eigenvalue λi. Property (iv) follows from the fact that the Ti and hence the Ni are uniquely determined by T.

10.19. Determine all possible Jordan canonical forms J for a linear operator T:V → V whose characteristic polynomial $\Delta(t) = (t - 2)^5$ and whose minimal polynomial $m(t) = (t - 2)^2$.

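J must be a 5 × 5 matrix, because Δ(t) has degree 5, and all diagonal elements must be 2, because 2 is the only eigenvalue. Moreover, because the exponent of t − 2 in m(t) is 2, J must have at least one Jordan block of order 2, and the others must be of order 2 or 1. Thus, writing $M_k$ for the Jordan block of order k with 2's on the diagonal, there are only two possibilities:

$$J = \operatorname{diag}(M_2, M_2, M_1) \qquad \text{or} \qquad J = \operatorname{diag}(M_2, M_1, M_1, M_1)$$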

10.20. Determine all possible Jordan canonical forms for a linear operator T:V → V whose characteristic polynomial $\Delta(t) = (t - 2)^3(t - 5)^2$. In each case, find the minimal polynomial m(t).

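Because t − 2 has exponent 3 in Δ(t), 2 must appear three times on the diagonal. Similarly, 5 must appear twice. Writing $J_k(\lambda)$ for the Jordan block of order k with λ on the diagonal, there are six possibilities:

(a) $\operatorname{diag}(J_3(2), J_2(5))$, (b) $\operatorname{diag}(J_3(2), J_1(5), J_1(5))$, (c) $\operatorname{diag}(J_2(2), J_1(2), J_2(5))$,

(d) $\operatorname{diag}(J_2(2), J_1(2), J_1(5), J_1(5))$, (e) $\operatorname{diag}(J_1(2), J_1(2), J_1(2), J_2(5))$, (f) $\operatorname{diag}(J_1(2), J_1(2), J_1(2), J_1(5), J_1(5))$

For each eigenvalue λ, the exponent of t − λ in the minimal polynomial m(t) is equal to the order of the largest Jordan block belonging to λ. Thus,

(a) $m(t) = (t - 2)^3(t - 5)^2$, (b) $m(t) = (t - 2)^3(t - 5)$, (c) $m(t) = (t - 2)^2(t - 5)^2$,

(d) $m(t) = (t - 2)^2(t - 5)$, (e) $m(t) = (t - 2)(t - 5)^2$, (f) $m(t) = (t - 2)(t - 5)$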
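Problems 10.19 and 10.20 both reduce to choosing, for each eigenvalue λ, a partition of its exponent in Δ(t), with the largest part fixed when m(t) is given. The sketch below (hypothetical helper names; eigenvalues assumed to be supplied with their exponents in Δ(t) and, optionally, in m(t)) enumerates these choices and reads off the minimal-polynomial exponents:

```python
from itertools import product


def partitions(n, max_part):
    """Yield the partitions of n into parts <= max_part, largest part first."""
    if n == 0:
        yield []
        return
    for first in range(min(n, max_part), 0, -1):
        for rest in partitions(n - first, first):
            yield [first] + rest


def jordan_forms(char_exps, min_exps=None):
    """List the possible Jordan structures as {eigenvalue: block orders}.

    char_exps[lam] is the exponent of (t - lam) in the characteristic polynomial;
    if given, min_exps[lam] is its exponent in m(t), which must equal the order
    of the largest block belonging to lam.
    """
    eigs = sorted(char_exps)
    choices = []
    for lam in eigs:
        opts = list(partitions(char_exps[lam], char_exps[lam]))
        if min_exps is not None:
            opts = [p for p in opts if p[0] == min_exps[lam]]
        choices.append(opts)
    return [dict(zip(eigs, combo)) for combo in product(*choices)]


def minimal_exponents(form):
    """Exponent of (t - lam) in m(t): the largest block order for each lam."""
    return {lam: max(blocks) for lam, blocks in form.items()}


for form in jordan_forms({2: 3, 5: 2}):          # Problem 10.20
    print(form, minimal_exponents(form))
```

For Problem 10.20 the loop prints six structures whose minimal-polynomial exponents appear in the same order as (a) to (f) above; `jordan_forms({2: 5}, {2: 2})` returns the two structures of Problem 10.19.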

Quotient Space and Triangular Form

10.21. Let W be a subspace of a vector space V. Show that the following are equivalent:

(i) u ∈ υ + W, (ii) u − υ ∈ W, (iii) υ ∈ u + W.

Suppose u ∈ υ + W. Then there exists w0 ∈ W such that u = υ + w0. Hence, u − υ = w0 ∈ W. Conversely, suppose u − υ ∈ W. Then u − υ = w0 where w0 ∈ W. Hence, u = υ + w0 ∈ υ + W. Thus, (i) and (ii) are equivalent.

We also have u − υ ∈ W iff −(u − υ) = υ − u ∈ W iff υ ∈ u + W. Thus, (ii) and (iii) are also equivalent.

10.22. Prove the following: The cosets of W in V partition V into mutually disjoint sets. That is,

(a) Any two cosets u + W and υ + W are either identical or disjoint.

(b) Each υ ∈ V belongs to a coset; in fact, υ ∈ υ + W.

Furthermore, u + W = υ + W if and only if u − υ ∈ W, and so (υ + w) + W = υ + W for any w ∈ W.

Let υ ∈ V. Because 0 ∈ W, we have υ = υ + 0 ∈ υ + W, which proves (b).

Now suppose the cosets u + W and υ + W are not disjoint; say, the vector x belongs to both u + W and υ + W. Then u − x ∈ W and x − υ ∈ W. The proof of (a) is complete if we show that u + W = υ + W. Let u + w0 be any element in the coset u + W. Because u − x, x − υ, and w0 belong to W,

$$(u + w_0) - \upsilon = (u - x) + (x - \upsilon) + w_0 \in W$$

Thus, u + w0 ∈ υ + W, and hence the coset u + W is contained in the coset υ + W. Similarly, υ + W is contained in u + W, and so u + W = υ + W.

The last statement follows from the fact that u + W = υ + W if and only if u ∈ υ + W, and, by Problem 10.21, this is equivalent to u − υ ∈ W.

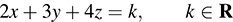

10.23. Let W be the solution space of the homogeneous equation 2x + 3y + 4z = 0. Describe the cosets of W in R3.

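W is a plane through the origin O = (0, 0, 0), and the cosets of W are the planes parallel to W. Equivalently, the cosets of W are the solution sets of the family of equations

$$2x + 3y + 4z = k, \qquad k \in \mathbf{R}$$

In fact, the coset υ + W, where υ = (a, b, c), is the solution set of the linear equation

$$2x + 3y + 4z = 2a + 3b + 4c \qquad \text{or} \qquad 2(x - a) + 3(y - b) + 4(z - c) = 0$$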
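A quick numerical illustration of Problems 10.21 and 10.23, assuming vectors are integer tuples; the representative υ = (1, 1, 1), the vector w, and the helper names are hypothetical choices made only for this sketch:

```python
def coset_rhs(v, normal):
    """Constant k such that the coset v + W is the plane normal . x = k."""
    return sum(a * b for a, b in zip(normal, v))


def in_coset(u, v, normal):
    """u lies in v + W exactly when u - v lies in W = {x : normal . x = 0} (Problem 10.21)."""
    return sum(a * (x - y) for a, x, y in zip(normal, u, v)) == 0


normal = (2, 3, 4)            # W: 2x + 3y + 4z = 0
v = (1, 1, 1)                 # hypothetical coset representative
w = (3, -2, 0)                # a vector of W, since 6 - 6 + 0 = 0
u = tuple(a + b for a, b in zip(v, w))
print(coset_rhs(v, normal), coset_rhs(u, normal))   # 9 9
```

Both υ and υ + w satisfy 2x + 3y + 4z = 9, so they lie in the same parallel plane, that is, in the same coset.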

10.24. Suppose W is a subspace of a vector space V. Show that the operations in Theorem 10.15 are well defined; namely, show that if u + W = u′ + W and υ + W = υ′ + W, then

(a) (u + υ) + W = (u′ + υ′) + W and (b) ku + W = ku′ + W, for any k ∈ K

(a) Because u + W = u′ + W and υ + W = υ′ + W, both u − u′ and υ − υ′ belong to W. But then (u + υ) − (u′ + υ′) = (u − u′) + (υ − υ′) ∈ W. Hence, (u + υ) + W = (u′ + υ′) + W.

(b) Also, because u − u′ ∈ W and W is a subspace, ku − ku′ = k(u − u′) ∈ W; accordingly, ku + W = ku′ + W.

10.25. Let V be a vector space and W a subspace of V. Show that the natural map η: V → V/W, defined by η(υ) = υ + W, is linear.

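For any u, υ ∈ V and any k ∈ K, we have

$$\eta(u + \upsilon) = u + \upsilon + W = (u + W) + (\upsilon + W) = \eta(u) + \eta(\upsilon)$$

and

$$\eta(k\upsilon) = k\upsilon + W = k(\upsilon + W) = k\eta(\upsilon)$$

Accordingly, η is linear.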

10.26. Let W be a subspace of a vector space V. Suppose $\{w_1, \dots, w_r\}$ is a basis of W and the set of cosets $\{\bar{\upsilon}_1, \dots, \bar{\upsilon}_s\}$, where $\bar{\upsilon}_j = \upsilon_j + W$, is a basis of the quotient space. Show that the set of vectors B = {υ1, ..., υs, w1, ..., wr} is a basis of V. Thus, dim V = dim W + dim(V/W).

Suppose u ∈ V. Because $\{\bar{\upsilon}_j\}$ is a basis of V/W,

$$\bar{u} = u + W = a_1\bar{\upsilon}_1 + a_2\bar{\upsilon}_2 + \cdots + a_s\bar{\upsilon}_s$$

Hence, u = a1υ1 + ⋯ + asυs + w, where w ∈ W. Since {wi} is a basis of W,

$$u = a_1\upsilon_1 + \cdots + a_s\upsilon_s + b_1w_1 + \cdots + b_rw_r$$

Accordingly, B spans V.

We now show that B is linearly independent. Suppose

$$c_1\upsilon_1 + \cdots + c_s\upsilon_s + d_1w_1 + \cdots + d_rw_r = 0 \qquad (1)$$

Then

$$c_1\bar{\upsilon}_1 + \cdots + c_s\bar{\upsilon}_s = \bar{0} = W$$

Because $\{\bar{\upsilon}_j\}$ is independent, the c's are all 0. Substituting into (1), we find d1w1 + ⋯ + drwr = 0. Because {wi} is independent, the d's are all 0. Thus, B is linearly independent and therefore a basis of V.

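10.27. Prove Theorem 10.16: Suppose W is a subspace invariant under a linear operator T:V → V. Then T induces a linear operator $\bar{T}$ on V/W defined by $\bar{T}(\upsilon + W) = T(\upsilon) + W$. Moreover, if T is a zero of any polynomial, then so is $\bar{T}$. Thus, the minimal polynomial of $\bar{T}$ divides the minimal polynomial of T.

We first show that $\bar{T}$ is well defined; that is, if u + W = υ + W, then $\bar{T}(u + W) = \bar{T}(\upsilon + W)$. If u + W = υ + W, then u − υ ∈ W, and, as W is T-invariant, T(u − υ) = T(u) − T(υ) ∈ W. Accordingly,

$$\bar{T}(u + W) = T(u) + W = T(\upsilon) + W = \bar{T}(\upsilon + W)$$

as required.

We next show that $\bar{T}$ is linear. We have

$$\bar{T}((u + W) + (\upsilon + W)) = \bar{T}(u + \upsilon + W) = T(u + \upsilon) + W = T(u) + T(\upsilon) + W = (T(u) + W) + (T(\upsilon) + W) = \bar{T}(u + W) + \bar{T}(\upsilon + W)$$

Furthermore,

$$\bar{T}(k(u + W)) = \bar{T}(ku + W) = T(ku) + W = kT(u) + W = k(T(u) + W) = k\bar{T}(u + W)$$

Thus, $\bar{T}$ is linear.

Now, for any coset u + W in V/W (here $\overline{T^2}$ denotes the operator on V/W induced by $T^2$, under which W is also invariant),

$$\overline{T^2}(u + W) = T^2(u) + W = T(T(u)) + W = \bar{T}(T(u) + W) = \bar{T}(\bar{T}(u + W)) = \bar{T}^2(u + W)$$

Hence, $\overline{T^2} = \bar{T}^2$. Similarly, $\overline{T^n} = \bar{T}^n$ for any n. Thus, for any polynomial

$$f(t) = a_nt^n + \cdots + a_0 = \sum a_it^i$$

$$\overline{f(T)}(u + W) = f(T)(u) + W = \sum a_iT^i(u) + W = \sum a_i(T^i(u) + W) = \sum a_i\overline{T^i}(u + W) = \sum a_i\bar{T}^i(u + W) = f(\bar{T})(u + W)$$

and so $\overline{f(T)} = f(\bar{T})$. Accordingly, if T is a root of f(t), then $f(\bar{T}) = \overline{f(T)} = \bar{0}$, the zero operator on V/W (which sends every coset to W); that is, $\bar{T}$ is also a root of f(t). The theorem is proved.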
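To see Theorem 10.16 on a concrete case, take a hypothetical upper triangular T on R^3 with W = span(e1), which is T-invariant because the first column of T is (2, 0, 0). The cosets e2 + W, e3 + W form a basis of V/W, and (with column j holding the coordinates of T(e_j)) the matrix of $\bar{T}$ is the lower-right 2 × 2 block of T. The sketch checks that f(t) = (t − 2)^2(t − 3), which annihilates T, also annihilates $\bar{T}$, and that here $\bar{T}$ is already annihilated by the proper divisor (t − 2)(t − 3). The matrix and helper names are assumptions for illustration only.

```python
def matmul(a, b):
    """Product of two n x n matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def eval_factored(roots, a):
    """f(a) for f(t) = (t - r_1)(t - r_2)...(t - r_m), a a square matrix."""
    n = len(a)
    result = [[int(i == j) for j in range(n)] for i in range(n)]   # identity
    for r in roots:
        shifted = [[a[i][j] - (r if i == j else 0) for j in range(n)] for i in range(n)]
        result = matmul(result, shifted)
    return result


def is_zero(m):
    return all(x == 0 for row in m for x in row)


T = [[2, 1, 0],
     [0, 3, 1],
     [0, 0, 2]]
# T-bar(e2 + W) = (1, 3, 0) + W = 3(e2 + W); T-bar(e3 + W) = (0, 1, 2) + W = (e2 + W) + 2(e3 + W).
T_bar = [row[1:] for row in T[1:]]

print(is_zero(eval_factored([2, 2, 3], T)), is_zero(eval_factored([2, 2, 3], T_bar)))  # True True
print(is_zero(eval_factored([2, 3], T)), is_zero(eval_factored([2, 3], T_bar)))        # False True
```

So the minimal polynomial of $\bar{T}$, here (t − 2)(t − 3), divides the minimal polynomial (t − 2)^2(t − 3) of T without being equal to it.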

10.28. Prove Theorem 10.1: Let T:V → V be a linear operator whose characteristic polynomial factors into linear polynomials. Then V has a basis in which T is represented by a triangular matrix.

The proof is by induction on the dimension of V. If dim V = 1, then every matrix representation of T is a 1 × 1 matrix, which is triangular.

Now suppose dim V = n > 1 and that the theorem holds for spaces of dimension less than n. Because the characteristic polynomial of T factors into linear polynomials, T has at least one eigenvalue and so at least one nonzero eigenvector υ, say T(υ) = a11υ. Let W be the one-dimensional subspace spanned by υ. Set $\bar{V} = V/W$. Then (Problem 10.26) $\dim \bar{V} = \dim V - \dim W = n - 1$. Note also that W is invariant under T. By Theorem 10.16, T induces a linear operator $\bar{T}$ on $\bar{V}$ whose minimal polynomial divides the minimal polynomial of T. Because the characteristic polynomial of T is a product of linear polynomials, so is its minimal polynomial, and hence, so are the minimal and characteristic polynomials of $\bar{T}$. Thus, $\bar{V}$ and $\bar{T}$ satisfy the hypothesis of the theorem. Hence, by induction, there exists a basis $\{\bar{\upsilon}_2, \dots, \bar{\upsilon}_n\}$ of $\bar{V}$ such that

$$\begin{aligned}
\bar{T}(\bar{\upsilon}_2) &= a_{22}\bar{\upsilon}_2 \\
\bar{T}(\bar{\upsilon}_3) &= a_{32}\bar{\upsilon}_2 + a_{33}\bar{\upsilon}_3 \\
&\;\;\vdots \\
\bar{T}(\bar{\upsilon}_n) &= a_{n2}\bar{\upsilon}_2 + a_{n3}\bar{\upsilon}_3 + \cdots + a_{nn}\bar{\upsilon}_n
\end{aligned}$$

Now let υ2, ..., υn be elements of V that belong to the cosets $\bar{\upsilon}_2, \dots, \bar{\upsilon}_n$, respectively. Then {υ, υ2, ..., υn} is a basis of V (Problem 10.26). Because $\bar{T}(\bar{\upsilon}_2) = a_{22}\bar{\upsilon}_2$, we have

$$\bar{T}(\bar{\upsilon}_2) - a_{22}\bar{\upsilon}_2 = \bar{0}, \qquad \text{and so} \qquad T(\upsilon_2) - a_{22}\upsilon_2 \in W$$

But W is spanned by υ; hence, T(υ2) − a22υ2 is a multiple of υ, say,

$$T(\upsilon_2) - a_{22}\upsilon_2 = a_{21}\upsilon, \qquad \text{and so} \qquad T(\upsilon_2) = a_{21}\upsilon + a_{22}\upsilon_2$$

Similarly, for i = 3, ..., n,

$$T(\upsilon_i) - a_{i2}\upsilon_2 - a_{i3}\upsilon_3 - \cdots - a_{ii}\upsilon_i \in W, \qquad \text{and so} \qquad T(\upsilon_i) = a_{i1}\upsilon + a_{i2}\upsilon_2 + \cdots + a_{ii}\upsilon_i$$

Thus,

$$\begin{aligned}
T(\upsilon) &= a_{11}\upsilon \\
T(\upsilon_2) &= a_{21}\upsilon + a_{22}\upsilon_2 \\
&\;\;\vdots \\
T(\upsilon_n) &= a_{n1}\upsilon + a_{n2}\upsilon_2 + \cdots + a_{nn}\upsilon_n
\end{aligned}$$

and hence the matrix of T in this basis is triangular.

Cyclic Subspaces, Rational Canonical Form

10.29. Prove Theorem 10.12: Let Z(υ, T) be a T-cyclic subspace, $T_\upsilon$ the restriction of T to Z(υ, T), and $m_\upsilon(t) = t^k + a_{k-1}t^{k-1} + \cdots + a_0$ the T-annihilator of υ. Then,

(i) The set $\{\upsilon, T(\upsilon), \dots, T^{k-1}(\upsilon)\}$ is a basis of Z(υ, T); hence, dim Z(υ, T) = k.

(ii) The minimal polynomial of $T_\upsilon$ is $m_\upsilon(t)$.

(iii) The matrix of $T_\upsilon$ in the above basis is the companion matrix $C = C(m_\upsilon)$ of $m_\upsilon(t)$ [which has 1's below the diagonal, the negatives of the coefficients $a_0, a_1, \dots, a_{k-1}$ of $m_\upsilon(t)$ in the last column, and 0's elsewhere].

(i) By definition of $m_\upsilon(t)$, $T^k(\upsilon)$ is the first vector in the sequence υ, T(υ), $T^2(\upsilon)$, ... that is a linear combination of those vectors that precede it in the sequence; hence, the set $B = \{\upsilon, T(\upsilon), \dots, T^{k-1}(\upsilon)\}$ is linearly independent. We now only have to show that Z(υ, T) = L(B), the linear span of B. By the above, $T^k(\upsilon) \in L(B)$. We prove by induction that $T^n(\upsilon) \in L(B)$ for every n. Suppose n > k and $T^{n-1}(\upsilon) \in L(B)$; that is, $T^{n-1}(\upsilon)$ is a linear combination of υ, ..., $T^{k-1}(\upsilon)$. Then $T^n(\upsilon) = T(T^{n-1}(\upsilon))$ is a linear combination of T(υ), ..., $T^k(\upsilon)$. But $T^k(\upsilon) \in L(B)$; hence, $T^n(\upsilon) \in L(B)$ for every n. Consequently, $f(T)(\upsilon) \in L(B)$ for any polynomial f(t). Thus, Z(υ, T) = L(B), and so B is a basis, as claimed.

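(ii) Suppose $m(t) = t^s + b_{s-1}t^{s-1} + \cdots + b_0$ is the minimal polynomial of $T_\upsilon$. Then, because υ ∈ Z(υ, T),

$$0 = m(T_\upsilon)(\upsilon) = m(T)(\upsilon) = T^s(\upsilon) + b_{s-1}T^{s-1}(\upsilon) + \cdots + b_0\upsilon$$

Thus, $T^s(\upsilon)$ is a linear combination of υ, T(υ), ..., $T^{s-1}(\upsilon)$, and therefore k ≤ s. However, $m_\upsilon(T)(\upsilon) = 0$, and because $m_\upsilon(T)$ commutes with every polynomial in T, $m_\upsilon(T)(f(T)(\upsilon)) = f(T)(m_\upsilon(T)(\upsilon)) = 0$ for every f(t); that is, $m_\upsilon(T_\upsilon) = 0$. Then m(t) divides $m_\upsilon(t)$, and so s ≤ k. Accordingly, k = s and hence $m_\upsilon(t) = m(t)$.

(iii) We have

$$\begin{aligned}
T_\upsilon(\upsilon) &= T(\upsilon) \\
T_\upsilon(T(\upsilon)) &= T^2(\upsilon) \\
&\;\;\vdots \\
T_\upsilon(T^{k-2}(\upsilon)) &= T^{k-1}(\upsilon) \\
T_\upsilon(T^{k-1}(\upsilon)) &= T^k(\upsilon) = -a_0\upsilon - a_1T(\upsilon) - a_2T^2(\upsilon) - \cdots - a_{k-1}T^{k-1}(\upsilon)
\end{aligned}$$

By definition, the matrix of $T_\upsilon$ in this basis is the transpose of the matrix of coefficients of the above system of equations; hence, it is C, as required.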
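The companion-matrix convention of part (iii) can be sketched in Python as follows (helper names hypothetical; coefficients listed lowest degree first). The example uses $(t - 3)^3 = t^3 - 9t^2 + 27t - 27$: by part (iii) its companion matrix sends $e_1 \mapsto e_2 \mapsto e_3$, and by part (ii) its minimal polynomial is the whole cubic, so f(C) = 0 while $(t - 3)^2$ does not annihilate C.

```python
def companion(coeffs):
    """Companion matrix C(f) of f(t) = t^k + a_{k-1} t^{k-1} + ... + a_1 t + a_0.

    coeffs = [a_0, a_1, ..., a_{k-1}]. As in Theorem 10.12: 1's just below the
    diagonal, -a_0, ..., -a_{k-1} down the last column, 0's elsewhere.
    """
    k = len(coeffs)
    c = [[0] * k for _ in range(k)]
    for i in range(1, k):
        c[i][i - 1] = 1
    for i, a in enumerate(coeffs):
        c[i][k - 1] = -a
    return c


def matmul(a, b):
    """Product of two n x n matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def poly_at(coeffs, m):
    """f(M) for the monic f whose lower coefficients are coeffs (lowest degree first)."""
    n = len(m)
    power = [[int(i == j) for j in range(n)] for i in range(n)]   # M^0 = I
    total = [[0] * n for _ in range(n)]
    for a in list(coeffs) + [1]:                                  # leading coefficient 1
        total = [[t + a * p for t, p in zip(tr, pr)] for tr, pr in zip(total, power)]
        power = matmul(power, m)
    return total


C = companion([-27, 27, -9])    # f(t) = t^3 - 9t^2 + 27t - 27
print([row[-1] for row in C])   # [27, -27, 9]
```

Column j of C holds the coordinates of the image of the j-th basis vector, which matches the transpose-of-coefficients description in part (iii).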

10.30. Let T:V → V be linear. Let W be a T-invariant subspace of V and $\bar{T}$ the induced operator on V/W. Prove

(a) The T-annihilator of υ ∈ V divides the minimal polynomial of T.

(b) The $\bar{T}$-annihilator of $\bar{\upsilon} \in V/W$ divides the minimal polynomial of T.

(a) The T-annihilator of υ ∈ V is the minimal polynomial of the restriction of T to Z(υ, T); therefore, by Problem 10.6, it divides the minimal polynomial of T.

(b) The $\bar{T}$-annihilator of $\bar{\upsilon} \in V/W$ divides the minimal polynomial of $\bar{T}$, which divides the minimal polynomial of T by Theorem 10.16.

Remark: In the case where the minimal polynomial of T is $f(t)^n$, where f(t) is a monic irreducible polynomial, the T-annihilator of υ ∈ V and the $\bar{T}$-annihilator of $\bar{\upsilon} \in V/W$ are of the form $f(t)^m$, where m ≤ n.

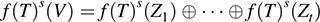

10.31. Prove Lemma 10.13: Let T:V → V be a linear operator whose minimal polynomial is $f(t)^n$, where f(t) is a monic irreducible polynomial. Then V is the direct sum of T-cyclic subspaces $Z_i = Z(\upsilon_i, T)$, i = 1, ..., r, with corresponding T-annihilators

$$f(t)^{n_1}, f(t)^{n_2}, \dots, f(t)^{n_r}, \qquad n = n_1 \geq n_2 \geq \cdots \geq n_r$$

Any other decomposition of V into the direct sum of T-cyclic subspaces has the same number of components and the same set of T-annihilators.

The proof is by induction on the dimension of V. If dim V = 1, then V is T-cyclic and the lemma holds. Now suppose dim V > 1 and that the lemma holds for those vector spaces of dimension less than that of V.

Because the minimal polynomial of T is $f(t)^n$, there exists $\upsilon_1 \in V$ such that $f(T)^{n-1}(\upsilon_1) \neq 0$; hence, the T-annihilator of υ1 is $f(t)^n$. Let $Z_1 = Z(\upsilon_1, T)$ and recall that $Z_1$ is T-invariant. Let $\bar{V} = V/Z_1$ (so that $\dim \bar{V} < \dim V$, because $Z_1 \neq \{0\}$) and let $\bar{T}$ be the linear operator on $\bar{V}$ induced by T. By Theorem 10.16, the minimal polynomial of $\bar{T}$ divides $f(t)^n$; hence, the hypothesis holds for $\bar{V}$ and $\bar{T}$. Consequently, by induction, $\bar{V}$ is the direct sum of $\bar{T}$-cyclic subspaces; say,

$$\bar{V} = Z(\bar{\upsilon}_2, \bar{T}) \oplus \cdots \oplus Z(\bar{\upsilon}_r, \bar{T})$$

where the corresponding $\bar{T}$-annihilators are $f(t)^{n_2}, \dots, f(t)^{n_r}$, $n \geq n_2 \geq \cdots \geq n_r$.

We claim that there is a vector υ2 in the coset $\bar{\upsilon}_2$ whose T-annihilator is $f(t)^{n_2}$, the $\bar{T}$-annihilator of $\bar{\upsilon}_2$. Let w be any vector in $\bar{\upsilon}_2$. Then $f(T)^{n_2}(w) \in Z_1$. Hence, there exists a polynomial g(t) for which

$$f(T)^{n_2}(w) = g(T)(\upsilon_1) \qquad (1)$$

Because $f(t)^n$ is the minimal polynomial of T, we have, by (1),

$$0 = f(T)^n(w) = f(T)^{n-n_2}g(T)(\upsilon_1)$$

But $f(t)^n$ is the T-annihilator of υ1; hence, $f(t)^n$ divides $f(t)^{n-n_2}g(t)$, and so $g(t) = f(t)^{n_2}h(t)$ for some polynomial h(t). We set

$$\upsilon_2 = w - h(T)(\upsilon_1)$$

Because $w - \upsilon_2 = h(T)(\upsilon_1) \in Z_1$, υ2 also belongs to the coset $\bar{\upsilon}_2$. Thus, the T-annihilator of υ2 is a multiple of the $\bar{T}$-annihilator of $\bar{\upsilon}_2$. On the other hand, by (1),

$$f(T)^{n_2}(\upsilon_2) = f(T)^{n_2}(w - h(T)(\upsilon_1)) = f(T)^{n_2}(w) - g(T)(\upsilon_1) = 0$$

Consequently, the T-annihilator of υ2 is $f(t)^{n_2}$, as claimed.

Similarly, there exist vectors $\upsilon_3, \dots, \upsilon_r \in V$ such that $\upsilon_i \in \bar{\upsilon}_i$ and that the T-annihilator of υi is $f(t)^{n_i}$, the $\bar{T}$-annihilator of $\bar{\upsilon}_i$. We set

$$Z_2 = Z(\upsilon_2, T), \quad \dots, \quad Z_r = Z(\upsilon_r, T)$$

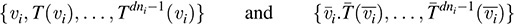

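Let d denote the degree of f(t), so that $f(t)^{n_i}$ has degree $dn_i$. Then, because $f(t)^{n_i}$ is both the T-annihilator of υi and the $\bar{T}$-annihilator of $\bar{\upsilon}_i$, we know that

$$\{\upsilon_i, T(\upsilon_i), \dots, T^{dn_i-1}(\upsilon_i)\} \qquad \text{and} \qquad \{\bar{\upsilon}_i, \bar{T}(\bar{\upsilon}_i), \dots, \bar{T}^{dn_i-1}(\bar{\upsilon}_i)\}$$

are bases for Z(υi, T) and $Z(\bar{\upsilon}_i, \bar{T})$, respectively, for i = 2, ..., r. But $\bar{V} = Z(\bar{\upsilon}_2, \bar{T}) \oplus \cdots \oplus Z(\bar{\upsilon}_r, \bar{T})$; hence,

$$\{\bar{\upsilon}_2, \dots, \bar{T}^{dn_2-1}(\bar{\upsilon}_2), \dots, \bar{\upsilon}_r, \dots, \bar{T}^{dn_r-1}(\bar{\upsilon}_r)\}$$

is a basis for $\bar{V}$. Therefore, by Problem 10.26 and the relation $\bar{T}^i(\bar{\upsilon}) = \overline{T^i(\upsilon)}$ (see Problem 10.27),

$$\{\upsilon_1, \dots, T^{dn_1-1}(\upsilon_1), \upsilon_2, \dots, T^{dn_2-1}(\upsilon_2), \dots, \upsilon_r, \dots, T^{dn_r-1}(\upsilon_r)\}$$

is a basis for V. Thus, by Theorem 10.4, V = Z(υ1, T) ⊕ ⋯ ⊕ Z(υr, T), as required.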

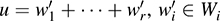

It remains to show that the exponents $n_1, \dots, n_r$ are uniquely determined by T. Because d = degree of f(t),

$$\dim V = d(n_1 + \cdots + n_r) \qquad \text{and} \qquad \dim Z_i = dn_i, \quad i = 1, \dots, r$$

Also, if s is any positive integer, then (Problem 10.59) $f(T)^s(Z_i)$ is a cyclic subspace generated by $f(T)^s(\upsilon_i)$, and it has dimension $d(n_i - s)$ if $n_i > s$ and dimension 0 if $n_i \leq s$.

Now any vector υ ∈ V can be written uniquely in the form υ = w1 + ⋯ + wr, where wi ∈ Zi. Hence, any vector in $f(T)^s(V)$ can be written uniquely in the form

$$f(T)^s(\upsilon) = f(T)^s(w_1) + \cdots + f(T)^s(w_r)$$

where $f(T)^s(w_i) \in f(T)^s(Z_i)$. Let t be the integer, dependent on s, for which

$$n_1 > s, \quad \dots, \quad n_t > s, \qquad n_{t+1} \leq s$$

Then

$$f(T)^s(V) = f(T)^s(Z_1) \oplus \cdots \oplus f(T)^s(Z_t)$$

and so

$$\dim[f(T)^s(V)] = d[(n_1 - s) + \cdots + (n_t - s)] \qquad (2)$$

The numbers on the left of (2) are uniquely determined by T. Set s = n − 1, and (2) determines the number of $n_i$ equal to n. Next set s = n − 2, and (2) determines the number of $n_i$ (if any) equal to n − 1. We repeat the process until we set s = 0 and determine the number of $n_i$ equal to 1. Thus, the $n_i$ are uniquely determined by T and V, and the lemma is proved.
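Formula (2) can be inverted mechanically. The sketch below assumes the dimensions $\dim f(T)^s(V)$ for s = 0, 1, 2, ... are already known (for instance from rank computations) and are listed until they reach 0; it recovers the exponents $n_i$ by the same top-down process, using second differences. The function names and the sample data are hypothetical.

```python
def dims_from_exponents(d, exps):
    """dims[s] = dim f(T)^s(V) = d * sum(max(n_i - s, 0)), s = 0, ..., max n_i, by (2)."""
    return [d * sum(max(n - s, 0) for n in exps) for s in range(max(exps) + 1)]


def exponents_from_dims(d, dims):
    """Recover the exponents n_i (largest first) from dims[s] = dim f(T)^s(V).

    By (2), the number of n_i greater than s is (dims[s] - dims[s+1]) / d, so the
    number of n_i equal to j is (dims[j-1] - 2*dims[j] + dims[j+1]) / d.
    """
    dims = list(dims) + [0, 0]          # f(T)^s(V) = 0 once s >= n
    exps = []
    for j in range(len(dims) - 2, 0, -1):
        diff = dims[j - 1] - 2 * dims[j] + dims[j + 1]
        if diff < 0 or diff % d:
            raise ValueError("dimensions are not consistent with (2)")
        exps += [j] * (diff // d)
    return exps


print(dims_from_exponents(2, [3, 2, 2]))      # [14, 8, 2, 0]
print(exponents_from_dims(2, [14, 8, 2, 0]))  # [3, 2, 2]
```

With d = 1 and f(t) = t − λ, the same computation applied to $\dim (T - \lambda I)^s(V)$ recovers the Jordan block orders for λ.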

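10.32. Let V be a seven-dimensional vector space over R, and let T:V → V be a linear operator with minimal polynomial $m(t) = (t^2 - 2t + 5)(t - 3)^3$. Find all possible rational canonical forms M of T.

Because dim V = 7, there are only two possible characteristic polynomials, $\Delta_1(t) = (t^2 - 2t + 5)^2(t - 3)^3$ or $\Delta_2(t) = (t^2 - 2t + 5)(t - 3)^5$. Moreover, the orders of the companion matrices must add up to 7. Also, one companion matrix must be $C(t^2 - 2t + 5)$ and one must be $C((t - 3)^3) = C(t^3 - 9t^2 + 27t - 27)$. Thus, M must be one of the following block diagonal matrices:

(a) $\operatorname{diag}\big(C(t^2 - 2t + 5),\ C(t^2 - 2t + 5),\ C((t - 3)^3)\big)$

(b) $\operatorname{diag}\big(C(t^2 - 2t + 5),\ C((t - 3)^3),\ C((t - 3)^2)\big)$

(c) $\operatorname{diag}\big(C(t^2 - 2t + 5),\ C((t - 3)^3),\ [3],\ [3]\big)$

where

$$C(t^2 - 2t + 5) = \begin{bmatrix} 0 & -5 \\ 1 & 2 \end{bmatrix}, \quad C((t - 3)^3) = \begin{bmatrix} 0 & 0 & 27 \\ 1 & 0 & -27 \\ 0 & 1 & 9 \end{bmatrix}, \quad C((t - 3)^2) = \begin{bmatrix} 0 & -9 \\ 1 & 6 \end{bmatrix}$$

Form (a) has characteristic polynomial $\Delta_1(t)$; forms (b) and (c) have $\Delta_2(t)$.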
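The count of three forms can be reproduced by brute force. In the sketch below, which is an illustration under stated assumptions, each monic irreducible factor of m(t) is described by a label, its degree, and its exponent in m(t); the code lists every multiset of elementary divisors whose largest exponent per factor matches m(t) and whose degrees total dim V. It also expands $(t - 3)^3$ as a check on the coefficients used in $C((t - 3)^3)$. All names are hypothetical.

```python
from itertools import product


def poly_mul(p, q):
    """Product of polynomials given as coefficient lists, lowest degree first."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def partitions(n, max_part):
    """Yield the partitions of n into parts <= max_part, largest part first."""
    if n == 0:
        yield []
        return
    for first in range(min(n, max_part), 0, -1):
        for rest in partitions(n - first, first):
            yield [first] + rest


def rational_forms(dim, min_poly):
    """Possible elementary divisors, as {label: exponents}, for an operator on a
    space of dimension dim whose minimal polynomial is the product of f**e over
    min_poly = [(label, degree of f, e), ...], each f monic irreducible."""
    labels = [label for label, _, _ in min_poly]
    options = []
    for _, d, e in min_poly:
        # The exponents attached to f must have largest member e (its exponent in m(t)).
        options.append([(d, p) for total in range(e, dim // d + 1)
                        for p in partitions(total, e) if p[0] == e])
    return [{label: p for label, (_, p) in zip(labels, combo)}
            for combo in product(*options)
            if sum(d * sum(p) for d, p in combo) == dim]


cube = poly_mul(poly_mul([-3, 1], [-3, 1]), [-3, 1])
print(cube)   # [-27, 27, -9, 1], i.e. t^3 - 9t^2 + 27t - 27
for form in rational_forms(7, [("t^2 - 2t + 5", 2, 1), ("t - 3", 1, 3)]):
    print(form)
```

The three dictionaries printed correspond to forms (b), (c), and (a) of Problem 10.32; the same function can be applied to Problem 10.60.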

Projections

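10.33. Suppose V = W1 ⊕ ⋯ ⊕ Wr. The projection of V into its subspace Wk is the mapping E: V → V defined by E(υ) = wk, where υ = w1 + ⋯ + wr, wi ∈ Wi. Show that (a) E is linear, (b) $E^2 = E$.

(a) Because the sum υ = w1 + ⋯ + wr, wi ∈ Wi, is uniquely determined by υ, the mapping E is well defined. Suppose, for u ∈ V, $u = w_1' + \cdots + w_r'$, $w_i' \in W_i$. Then

$$\upsilon + u = (w_1 + w_1') + \cdots + (w_r + w_r') \qquad \text{and} \qquad k\upsilon = kw_1 + \cdots + kw_r, \qquad kw_i,\ w_i + w_i' \in W_i$$

are the unique sums corresponding to υ + u and kυ. Hence,

$$E(\upsilon + u) = w_k + w_k' = E(\upsilon) + E(u) \qquad \text{and} \qquad E(k\upsilon) = kw_k = kE(\upsilon)$$

and therefore E is linear.

(b) We have that

$$w_k = 0 + \cdots + 0 + w_k + 0 + \cdots + 0$$

is the unique sum corresponding to wk ∈ Wk; hence, E(wk) = wk. Then, for any υ ∈ V,

$$E^2(\upsilon) = E(E(\upsilon)) = E(w_k) = w_k = E(\upsilon)$$

Thus, $E^2 = E$, as required.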

10.34. Suppose E:V → V is linear and $E^2 = E$. Show that (a) E(u) = u for any u ∈ Im E (i.e., the restriction of E to its image is the identity mapping); (b) V is the direct sum of the image and kernel of E, that is, V = Im E ⊕ Ker E; (c) E is the projection of V into Im E, its image. Thus, by the preceding problem, a linear mapping T:V → V is a projection if and only if $T^2 = T$; this characterization of a projection is frequently used as its definition.

(a) If u ∈ Im E, then there exists υ ∈ V for which E(υ) = u; hence, as required,

$$E(u) = E(E(\upsilon)) = E^2(\upsilon) = E(\upsilon) = u$$

(b) Let υ ∈ V. We can write υ in the form υ = E(υ) + υ − E(υ). Now E(υ) ∈ Im E and, because

$$E(\upsilon - E(\upsilon)) = E(\upsilon) - E^2(\upsilon) = E(\upsilon) - E(\upsilon) = 0$$

υ − E(υ) ∈ Ker E. Accordingly, V = Im E + Ker E.

Now suppose w ∈ Im E ∩ Ker E. By (a), E(w) = w because w ∈ Im E. On the other hand, E(w) = 0 because w ∈ Ker E. Thus, w = 0, and so Im E ∩ Ker E = {0}. These two conditions imply that V is the direct sum of the image and kernel of E.

(c) Let υ ∈ V and suppose υ = u + w, where u ∈ Im E and w ∈ Ker E. Note that E(u) = u by (a), and E(w) = 0 because w ∈ Ker E. Hence,

$$E(\upsilon) = E(u + w) = E(u) + E(w) = u + 0 = u$$

That is, E is the projection of V into its image.

10.35. Suppose V = U ⊕ W and suppose T:V → V is linear. Show that U and W are both T-invariant if and only if TE = ET, where E is the projection of V into U.

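Observe that E(υ) ∈ U for every υ ∈ V, and that (i) E(υ) = υ iff υ ∈ U, (ii) E(υ) = 0 iff υ ∈ W. Suppose ET = TE. Let u ∈ U. Because E(u) = u,

$$T(u) = T(E(u)) = (TE)(u) = (ET)(u) = E(T(u)) \in U$$

Hence, U is T-invariant. Now let w ∈ W. Because E(w) = 0,

$$E(T(w)) = (ET)(w) = (TE)(w) = T(E(w)) = T(0) = 0, \qquad \text{and so} \qquad T(w) \in W$$

Hence, W is also T-invariant.

Conversely, suppose U and W are both T-invariant. Let υ ∈ V and suppose υ = u + w, where u ∈ U and w ∈ W. Then T(u) ∈ U and T(w) ∈ W; hence, E(T(u)) = T(u) and E(T(w)) = 0. Thus,

$$(ET)(\upsilon) = (ET)(u + w) = (ET)(u) + (ET)(w) = E(T(u)) + E(T(w)) = T(u)$$

and

$$(TE)(\upsilon) = (TE)(u + w) = T(E(u + w)) = T(u)$$

That is, (ET)(υ) = (TE)(υ) for every υ ∈ V; therefore, ET = TE, as required.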
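Problems 10.33 to 10.35 can be checked on a small hypothetical example in R^2 with U = span((1, 0)) and W = span((1, 1)). The projection into U is $P \operatorname{diag}(1, 0) P^{-1}$, where the columns of P are the chosen basis vectors of U and W (the block form of Problem 10.68). The sketch verifies $E^2 = E$ and that an operator leaving U and W invariant commutes with E, while one that does not leave U invariant fails to commute. Exact arithmetic via `fractions.Fraction` is a design choice to avoid rounding; the matrices and helper names are illustrative assumptions.

```python
from fractions import Fraction


def matmul(a, b):
    """Product of two n x n matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def inverse_2x2(p):
    """Exact inverse of an invertible 2 x 2 matrix."""
    (a, b), (c, d) = p
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


P = [[1, 1],
     [0, 1]]                      # columns: basis vector of U, then of W
P_inv = inverse_2x2(P)
# Projection of V into U: identity on U, zero on W, i.e. diag(1, 0) in the basis of P.
E = matmul(matmul(P, [[1, 0], [0, 0]]), P_inv)

# T1 multiplies U by 2 and W by 3, so both subspaces are T1-invariant.
T1 = matmul(matmul(P, [[2, 0], [0, 3]]), P_inv)
# T2 swaps coordinates; T2(1, 0) = (0, 1) is not in U, so U is not T2-invariant.
T2 = [[0, 1], [1, 0]]

print(E == matmul(E, E))                 # True  (E^2 = E)
print(matmul(T1, E) == matmul(E, T1))    # True
print(matmul(T2, E) == matmul(E, T2))    # False
```

Here E works out to the matrix with rows (1, −1) and (0, 0), so E(x, y) = (x − y, 0), which is the U-component of (x, y) = (x − y)(1, 0) + y(1, 1).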

SUPPLEMENTARY PROBLEMS

Invariant Subspaces

10.36. Suppose W is invariant under T:V → V. Show that W is invariant under f(T) for any polynomial f(t).

10.37. Show that every subspace of V is invariant under I and 0, the identity and zero operators.

10.38. Let W be invariant under T1: V → V and T2: V → V. Prove W is also invariant under T1 + T2 and T1T2.

10.39. Let T:V → V be linear. Prove that any eigenspace $E_\lambda$ is T-invariant.

10.40. Let V be a vector space of odd dimension (greater than 1) over the real field R. Show that any linear operator on V has an invariant subspace other than V or {0}.

10.41. Determine the invariant subspaces of  viewed as a linear operator on (a) R2, (b) C2.

10.42. Suppose dim V = n. Show that T:V → V has a triangular matrix representation if and only if there exist T-invariant subspaces W1 ⊂ W2 ⊂ ⋯ ⊂ Wn = V for which dim Wk = k, k = 1, ..., n.

Invariant Direct Sums

10.43. The subspaces W1, ..., Wr are said to be independent if w1 + ⋯ + wr = 0, wi ∈ Wi, implies that each wi = 0. Show that span(Wi) = W1 ⊕ ⋯ ⊕ Wr if and only if the Wi are independent. [Here span(Wi) denotes the linear span of the Wi.]

10.44. Show that V = W1 ⊕ ⋯ ⊕ Wr if and only if (i) V = span(Wi) and (ii) for k = 1, 2, ..., r, Wk ∩ span(W1, ..., Wk − 1, Wk + 1, ..., Wr) = {0}.

10.45. Show that span(Wi) = W1 ⊕ ⋯ ⊕ Wr if and only if dim[span(Wi)] = dim W1 + ⋯ + dim Wr.

10.46. Suppose the characteristic polynomial of T:V → V is $\Delta(t) = f_1(t)^{n_1}f_2(t)^{n_2}\cdots f_r(t)^{n_r}$, where the $f_i(t)$ are distinct monic irreducible polynomials. Let V = W1 ⊕ ⋯ ⊕ Wr be the primary decomposition of V into T-invariant subspaces. Show that $f_i(t)^{n_i}$ is the characteristic polynomial of the restriction of T to Wi.

Nilpotent Operators

10.47. Suppose T1 and T2 are nilpotent operators that commute (i.e., T1T2 = T2T1). Show that T1 + T2 and T1T2 are also nilpotent.

10.48. Suppose A is a supertriangular matrix (i.e., all entries on and below the main diagonal are 0). Show that A is nilpotent.

10.49. Let V be the vector space of polynomials of degree ≤n. Show that the derivative operator on V is nilpotent of index n + 1.

10.50. Show that any Jordan nilpotent block matrix N is similar to its transpose NT (the matrix with 1’s below the diagonal and 0’s elsewhere).

10.51. Show that two nilpotent matrices of order 3 are similar if and only if they have the same index of nilpotency. Show by example that the statement is not true for nilpotent matrices of order 4.

Jordan Canonical Form

10.52. Find all possible Jordan canonical forms for those matrices whose characteristic polynomial Δ(t) and minimal polynomial m(t) are as follows:

(a) $\Delta(t) = (t - 2)^4(t - 3)^2$, $m(t) = (t - 2)^2(t - 3)^2$,

(b) $\Delta(t) = (t - 7)^5$, $m(t) = (t - 7)^2$,

(c) $\Delta(t) = (t - 2)^7$, $m(t) = (t - 2)^3$

10.53. Show that every complex matrix is similar to its transpose. (Hint: Use its Jordan canonical form.)

10.54. Show that all n × n complex matrices A for which $A^n = I$ but $A^k \neq I$ for k < n are similar.

10.55. Suppose A is a complex matrix with only real eigenvalues. Show that A is similar to a matrix with only real entries.

Cyclic Subspaces

10.56. Suppose T:V → V is linear. Prove that Z(υ, T) is the intersection of all T-invariant subspaces containing υ.

10.57. Let f(t) and g(t) be the T-annihilators of u and υ, respectively. Show that if f(t) and g(t) are relatively prime, then f(t)g(t) is the T-annihilator of u + υ.

10.58. Prove that Z(u, T) = Z(υ, T) if and only if g(T)(u) = υ where g(t) is relatively prime to the T-annihilator of u.

10.59. Let W = Z(υ, T), and suppose the T-annihilator of υ is $f(t)^n$, where f(t) is a monic irreducible polynomial of degree d. Show that $f(T)^s(W)$ is a cyclic subspace generated by $f(T)^s(\upsilon)$ and that it has dimension d(n − s) if n > s and dimension 0 if n ≤ s.

Rational Canonical Form

10.60. Find all possible rational forms for a 6 × 6 matrix over R with minimal polynomial:

(a) $m(t) = (t^2 - 2t + 3)(t + 1)^2$, (b) $m(t) = (t - 2)^3$.

10.61. Let A be a 4 × 4 matrix with minimal polynomial $m(t) = (t^2 + 1)(t^2 - 3)$. Find the rational canonical form for A if A is a matrix over (a) the rational field Q, (b) the real field R, (c) the complex field C.

10.62. Find the rational canonical form for the four-square Jordan block with λ’s on the diagonal.

10.63. Prove that the characteristic polynomial of an operator T:V → V is a product of its elementary divisors.

10.64. Prove that two 3 × 3 matrices with the same minimal and characteristic polynomials are similar.

10.65. Let C( f(t)) denote the companion matrix to an arbitrary polynomial f(t). Show that f(t) is the characteristic polynomial of C( f(t)).

Projections

10.66. Suppose V = W1 ⊕ ⋯ ⊕ Wr. Let Ei denote the projection of V into Wi. Prove (i) EiEj = 0, i ≠ j; (ii) I = E1 + ⋯ + Er.

10.67. Let E1, ..., Er be linear operators on V such that

(i) $E_i^2 = E_i$ (i.e., the Ei are projections); (ii) EiEj = 0, i ≠ j; (iii) I = E1 + ⋯ + Er

Prove that V = Im E1 ⊕ ⋯ ⊕ Im Er.

10.68. Suppose E: V → V is a projection (i.e., $E^2 = E$). Prove that E has a matrix representation of the form $\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$, where r is the rank of E and $I_r$ is the r-square identity matrix.

10.69. Prove that any two projections of the same rank are similar. (Hint: Use the result of Problem 10.68.)

10.70. Suppose E: V → V is a projection. Prove

(i) I − E is a projection and V = Im E ⊕ Im (I − E), (ii) I + E is invertible (if 1 + 1 ≠ 0).

Quotient Spaces

10.71. Let W be a subspace of V. Suppose the set of cosets {υ1 + W, υ2 + W, ..., υn + W} in V/W is linearly independent. Show that the set of vectors {υ1, υ2, ..., υn} in V is also linearly independent.

10.72. Let W be a subspace of V. Suppose the set of vectors {u1, u2, ..., un} in V is linearly independent, and that L(ui) ∩ W = {0}. Show that the set of cosets {u1 + W, ..., un + W} in V/W is also linearly independent.

10.73. Suppose V = U ⊕ W and that {u1, ..., un} is a basis of U. Show that {u1 + W, ..., un + W} is a basis of the quotient space V/W. (Observe that no condition is placed on the dimensionality of V or W.)

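10.74. Let W be the solution space of the linear equation

$$a_1x_1 + a_2x_2 + \cdots + a_nx_n = 0, \qquad a_i \in K$$

and let υ = (b1, b2, ..., bn) ∈ K^n. Prove that the coset υ + W of W in K^n is the solution set of the linear equation

$$a_1x_1 + a_2x_2 + \cdots + a_nx_n = b, \qquad \text{where } b = a_1b_1 + \cdots + a_nb_n$$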

10.75. Let V be the vector space of polynomials over R and let W be the subspace of polynomials divisible by $t^4$ (i.e., of the form $a_0t^4 + a_1t^5 + \cdots + a_{n-4}t^n$). Show that the quotient space V/W has dimension 4.

10.76. Let U and W be subspaces of V such that W ⊂ U ⊂ V. Note that any coset u + W of W in U may also be viewed as a coset of W in V, because u∈ U implies u ∈ V; hence, U/W is a subset of V/W. Prove that (i) U/W is a subspace of V/W, (ii) dim(V/W) − dim(U/W) = dim(V/U).

10.77. Let U and W be subspaces of V. Show that the cosets of U ∩ W in V can be obtained by intersecting each of the cosets of U in V with each of the cosets of W in V:

10.78. Let T:V → V′ be linear with kernel W and image U. Show that the quotient space V/W is isomorphic to U under the mapping θ:V/W → U defined by θ(υ + W) = T(υ). Furthermore, show that T = i ∘ θ ∘ η, where η:V → V/W is the natural mapping of V into V/W (i.e., η(υ) = υ + W), and $i: U \to V'$ is the inclusion mapping (i.e., i(u) = u). (See diagram.)

ANSWERS TO SUPPLEMENTARY PROBLEMS

10.41. (a) R2 and {0}, (b) C2, {0}, W1 = span(2, 1 − 2i), W2 = span(2, 1 + 2i)

(c) Let Mk denote a Jordan block with λ = 2 and order k. Then diag(M3, M3, M1), diag(M3, M2, M2), diag(M3, M2, M1, M1), diag(M3, M1, M1, M1, M1)

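10.60. Let $A = \begin{bmatrix} 0 & -3 \\ 1 & 2 \end{bmatrix}$, $B = \begin{bmatrix} 0 & -1 \\ 1 & -2 \end{bmatrix}$, $C = \begin{bmatrix} 0 & 0 & 8 \\ 1 & 0 & -12 \\ 0 & 1 & 6 \end{bmatrix}$, $D = \begin{bmatrix} 0 & -4 \\ 1 & 4 \end{bmatrix}$; that is, A, B, C, D are the companion matrices of $t^2 - 2t + 3$, $(t + 1)^2$, $(t - 2)^3$, $(t - 2)^2$, respectively.

(a) diag(A, A, B), diag(A, B, B), diag(A, B, −1, −1); (b) diag(C, C), diag(C, D, 2), diag(C, 2, 2, 2)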

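10.61. Let $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} = C(t^2 + 1)$ and $B = \begin{bmatrix} 0 & 3 \\ 1 & 0 \end{bmatrix} = C(t^2 - 3)$.

(a) diag(A, B), (b) diag(A, √3, −√3), (c) diag(i, −i, √3, −√3)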

10.62. Companion matrix with the last column $[-\lambda^4, 4\lambda^3, -6\lambda^2, 4\lambda]^T$