IN THIS CHAPTER, I WILL SHOW YOU how to build two digital camera systems capable of composing gigapixel photographs. I will not be building digital cameras with billion-plus pixel resolution because only a few of those exist today, and they are prohibitively expensive. Instead, I will use moderately priced digital cameras, each with a resolution of several million pixels, take multiple photographs of a subject, and then use post-processing techniques to form a final gigapixel image. This multiple-image approach is really the only practical way to capture extremely high-resolution photographs using consumer-grade digital cameras.

The first system will use a small, relatively inexpensive point-and-shoot digital camera mounted on an inexpensive pan-and-tilt servomechanism. The second system will use a more expensive digital single-lens reflex camera (DSLR) mounted on a “prosumer”-grade pan-and-tilt mechanism. I took this two-system approach to accommodate readers who would like to experiment with this technology but do not have the resources to invest in the expensive photographic equipment that would be needed to build the second system.

No cameras or tripod components are listed. Please refer to the text regarding suitable cameras/tripods to use for these projects.

I will start the chapter by discussing two post-processing techniques, both of which allow a gigapixel image to be composed.

Both stacking and stitching are required to post-process the individual photographs into a gigapixel image. I will discuss stacking first, followed by stitching.

The stacking in the section title refers to a technique more formally called focus stacking, also known as image stacking. To understand what focus stacking encompasses, it is necessary to review some photography fundamentals. Figure 10-1 is a diagram of a simple camera lens and sensor that illustrates the key concept of how a camera focuses.

Figure 10-1 Diagram of camera focus system.

In this figure, the lens has been set, either manually or automatically, to converge the majority of incoming light rays reflecting off the subject onto the plane of the sensor. However, light rays reflecting off objects nearer to or farther from the camera than the subject converge after or before the sensor plane, and those objects are said to be out of focus. This loss of focus, also referred to as loss of sharpness, is a basic geometric property of image formation: a lens can bring only one subject distance into exact focus on the sensor at a time, so the problem cannot be overcome simply by using a better lens. However, focus stacking can mitigate it.

The distance between the optical center of the lens and the sensor plane, when the lens is focused at infinity, is known as the focal length. Focal length does not directly affect focus; instead, it determines image magnification and the field of view. An iris, shown in the figure just in front of the lens, controls the amount of light passing through the lens. The opening in the iris is referred to as the aperture, and it also plays an important role in determining image focus. Figure 10-2 shows an iris set to various aperture settings, known as f-stops. The f-stop (or f-number) is defined as the ratio of the lens focal length to the diameter of the aperture opening.
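To make the f-stop definition concrete, the short Python sketch below computes the aperture diameter implied by a focal length and f-number (N = f/D), and the relative amount of light admitted at two f-stops. Because light gathered is proportional to aperture area, the ratio scales as the square of the f-numbers. The function names are illustrative only.

```python
def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """Aperture diameter D from the definition N = f / D."""
    return focal_length_mm / f_number


def relative_light(f_number_a: float, f_number_b: float) -> float:
    """Light admitted at f_number_b relative to f_number_a (same lens).

    Light is proportional to aperture area, i.e. to (f / N)^2,
    so the ratio is (N_a / N_b)^2.
    """
    return (f_number_a / f_number_b) ** 2


# A 50-mm lens at f/2 has a 25-mm opening; stopping down to f/2.8
# roughly halves the light reaching the sensor (one full stop).
print(aperture_diameter_mm(50, 2))        # 25.0
print(round(relative_light(2, 2.8), 2))   # 0.51
```

Each full f-stop in the standard sequence (f/2, f/2.8, f/4, f/5.6, ...) differs from its neighbor by about √2 in f-number, which is why each step halves or doubles the light.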

Figure 10-2 Aperture settings.

This figure should convey the concept that a smaller aperture (a larger f-number) produces a greater depth of field, so objects at a wider range of distances remain acceptably in focus on the sensor plane. The underlying optical physics is complex and need not be discussed here; simply accept that smaller apertures mean greater depth of field. Of course, small apertures have a distinct disadvantage: less light strikes the image sensor, so there is a good chance that the image will be underexposed, depending on the total intensity of light reflecting off the subject. Photographers mainly overcome this issue by increasing the exposure time, that is, the time during which the shutter remains open and light from the lens falls on the sensor. A longer exposure, in turn, makes the image susceptible to blur from camera shake, especially if the camera is handheld. A tripod normally mitigates this problem quite well, which is why you see professional photographers using tripods for night shots.
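For readers who want numbers behind Figure 10-2, the sketch below applies the standard thin-lens depth-of-field approximations through the hyperfocal distance H = f²/(N·c) + f. The 0.03-mm circle of confusion (the largest blur spot still judged sharp) is an assumed value commonly used for full-frame sensors; smaller sensors use smaller values. This is a textbook approximation, not a model of any particular lens.

```python
import math
from typing import Tuple


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03) -> Tuple[float, float]:
    """Near and far limits of acceptable sharpness (thin-lens approximation).

    coc_mm is the assumed circle of confusion in millimeters.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything out to infinity is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# 50-mm lens focused at 3 m: compare f/8 with f/16.
for n in (8, 16):
    near, far = depth_of_field_mm(50, n, 3000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

With these assumed values, stopping down from f/8 to f/16 widens the zone of acceptable sharpness from roughly 2.3 to 4.2 m to roughly 1.9 to 6.9 m, at the cost of a quarter of the light.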

Figure 10-3 is an excellent representation of how focus, aperture, and depth of field are related. This image is courtesy of Dave Shaker and is available as part of his “The Camera Lens” blog at www.thatfish.com.

Figure 10-3 Camera focus, aperture, and depth-of-field relationships. (www.thatfish.com)

I also wanted to mention that depth of field becomes very problematic when you attempt to take extreme close-up photographs. This type of photography is known as macrophotography, and it has greatly benefited from the focus-stacking technique.

The type of lens being used also plays a role in this whole interrelationship of focus, aperture, and depth of field. You are likely to have a small zoom lens if you are using a modern point-and-shoot camera such as a Canon SX160 IS, as shown in Figure 10-4.

Figure 10-4 Canon SX160 IS point-and-shoot camera.

This is the camera I used for the point-and-shoot part of this chapter’s project. It has a permanently attached 5.0- to 80-mm zoom lens, as shown in the figure. This lens is well suited for both wide-angle and long-range shots because it provides a 16× optical zoom (80 mm ÷ 5 mm). Some point-and-shoot cameras lack an optical telephoto capability and instead rely on electronic processing, known as digital zoom, to achieve telephoto range. Digital zoom usually produces lower image quality than a good optical zoom lens, but this is a tradeoff that most point-and-shoot users readily accept for the convenience of a compact camera at a good price point. In the second portion of this chapter’s project, I use a DSLR, which accepts a wide range of lenses, including wide-angle and telephoto lenses.

So far, I have presented a brief background on how a camera focuses on a subject object and the related issue of why out-of-focus objects are also typically seen in the frame of view. It is now time to discuss how focus stacking overcomes this problem.

Probably the best way to explain how focus stacking works is to show you an example. This example is pretty simple, consisting of a photograph of a tie set against a dark background. I took three photographs of the tie, with the focus points being set at the front, middle, and back, as shown in Figures 10-5, 10-6, and 10-7, respectively. Note that these three figures were cropped and the colors adjusted for publication in this book. The unaltered original images were used as inputs to the focus-stacking application.

Figure 10-5 Near focus.

Figure 10-6 Middle focus.

Figure 10-7 Far focus.

You should be able to see that only one area of the tie is in focus in each image. I next used these three images as inputs to ZereneStacker, the focus-stacking software from Zerene Systems. Figure 10-8 is a screenshot of the ZereneStacker application running on my MacBook Pro.

Figure 10-8 Zerene focus-stacking application.

You can generate an output image using either the PMax or the DMap method. PMax uses a pyramid method, which is very good at finding and retaining details in an image. It handles images containing matrix-like structures, such as hair mats and crisscrossed bristles, especially well. It also avoids creating halos, which can mask details and are common in other focus-stacking applications.

DMap uses a depth-map method , which tends to retain more of the original image’s smoothness and colors. It does not retain as much detail as the PMax method.
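Zerene does not publish its algorithms, but the general idea behind a depth-map method can be sketched. The pure-Python example below is a minimal, generic illustration, not Zerene’s DMap: it assumes the frames are already aligned, grayscale, and the same size, measures local sharpness with a Laplacian filter, and for each pixel copies the value from whichever frame is sharpest within a small window. The resulting per-pixel frame index is the “depth map.” Real tools also align the frames, smooth the depth map, and blend across transitions to avoid visible seams.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def laplacian_energy(img: Image) -> Image:
    """Per-pixel sharpness: magnitude of a 4-neighbor Laplacian (edges replicated)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            up = img[max(y - 1, 0)][x]
            down = img[min(y + 1, h - 1)][x]
            left = img[y][max(x - 1, 0)]
            right = img[y][min(x + 1, w - 1)]
            out[y][x] = abs(up + down + left + right - 4.0 * img[y][x])
    return out


def window_sum(energy: Image, y: int, x: int, radius: int) -> float:
    """Sum sharpness over a (2*radius+1)^2 window, ignoring out-of-bounds pixels."""
    h, w = len(energy), len(energy[0])
    total = 0.0
    for yy in range(max(y - radius, 0), min(y + radius + 1, h)):
        for xx in range(max(x - radius, 0), min(x + radius + 1, w)):
            total += energy[yy][xx]
    return total


def depth_map_stack(stack: List[Image], radius: int = 1) -> Tuple[Image, List[List[int]]]:
    """Fuse pre-aligned frames by picking, per pixel, the locally sharpest frame.

    Returns the fused image and the depth map (index of the chosen frame).
    """
    energies = [laplacian_energy(img) for img in stack]
    h, w = len(stack[0]), len(stack[0][0])
    fused = [[0.0] * w for _ in range(h)]
    depth = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [window_sum(e, y, x, radius) for e in energies]
            best = scores.index(max(scores))  # the first frame wins ties
            depth[y][x] = best
            fused[y][x] = stack[best][y][x]
    return fused, depth
```

A pyramid method such as PMax instead decomposes each frame into detail layers at several scales and selects the strongest detail at each scale, which is why it preserves fine, crisscrossed structures better than a per-pixel choice.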

Zerene Systems recommends processing stacked images using both methods and selecting the best result. Often, selecting the best output image and then retouching it will yield the best results. Figure 10-9 shows the final tie output image, which I cropped and retouched for optimal intensity level, contrast, and color.

Figure 10-9 Focus-stacked tie image.

Of course, the actual algorithms that Zerene uses to focus stack images are proprietary and not public information, as is probably true of most similar commercial applications. It really doesn’t matter how the program functions internally, as long as you know how to use it effectively. Some readers may know that Adobe Photoshop can also focus stack images using its Auto-Blend Layers function. I chose not to use Photoshop because it requires many more steps than ZereneStacker to process the input images. In addition, a dedicated focus-stacking application is optimized for that one process, whereas Photoshop must perform a multitude of different and often unrelated image-processing functions.

Stitching is the next part of this project’s image postprocessing, which I discuss in the following section.

Stitching is the imaging process of seamlessly joining adjacent photographs such that they form one panoramic view. Panoramic photographs are sometimes simply referred to as panos, which is the term I will use from now on for brevity’s sake. Specialized pano image-processing applications are available, and Photoshop also contains functions, such as Photomerge, that can perform image stitching. I chose an easy-to-use specialized pano program named Panoweaver v9.1 Standard Edition to create my project panos, for the same reason I chose specialized stacking software over Photoshop.

For best results, I took a series of images with a digital camera mounted on a tripod. The camera was leveled on the tripod, and I overlapped each image by 40 to 50 percent with the preceding one. Also, I set the camera to take moderate-resolution images to minimize the post-processing time. Figures 10-10, 10-11, and 10-12 are three photographs of a local converted textile mill building that I used as inputs to the stitching application.
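The overlap guideline translates directly into a pan step. The Python sketch below estimates a lens’s horizontal field of view from sensor width and focal length, then derives the pan step and the number of shots needed to cover a given sweep. The 6.17-mm sensor width is an assumed typical value for a 1/2.3-inch sensor, not a published SX160 IS specification.

```python
import math
from typing import Tuple


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def pan_plan(sensor_width_mm: float, focal_length_mm: float,
             overlap: float, sweep_deg: float) -> Tuple[float, float, int]:
    """Return (field of view, pan step, number of shots) for a horizontal sweep.

    overlap is the fraction (0..1) that each frame shares with the previous one.
    """
    fov = horizontal_fov_deg(sensor_width_mm, focal_length_mm)
    step = fov * (1 - overlap)
    if sweep_deg <= fov:
        return fov, step, 1
    shots = math.ceil((sweep_deg - fov) / step) + 1
    return fov, step, shots


# Assumed 6.17-mm-wide sensor at the 5.0-mm wide-angle end, 50 percent overlap:
fov, step, shots = pan_plan(6.17, 5.0, 0.5, 180)
print(f"FOV {fov:.1f} deg, step {step:.1f} deg, {shots} shots")
```

Under these assumptions the field of view is roughly 63°, so 50 percent overlap implies a pan step of roughly 32°, in the same range as the 30° increments used by the automated program later in this chapter.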

Figure 10-10 Left.

Figure 10-11 Center.

Figure 10-12 Right.

These three images were next input to the image-stitching software. Figure 10-13 is a screenshot of the Panoweaver application running on my MacBook Pro.

Figure 10-13 Panoweaver image-stitching application.

You generate an output image by first importing the source images. The panoramic stitch function will not even appear on the ribbon menu bar without the source images being selected. You will next need to select the type of pano desired from one of the radio-button selections appearing on the right-hand side of the application. I list these selections next with a few brief comments about each one.

Spherical.

This is the default selection and was the one I used.

Cubic.

There is very little perceived difference between this and spherical selection.

Cylindrical.

The mill roofline was convex with a “bowed out” appearance.

Littleplanet.

Extremely concave; not recommended except for a special effect.

The generated pano has an uneven border because of the image manipulations necessary to create the pano. I cropped and slightly retouched the pano image using Photoshop to achieve a pleasing image, which is shown in Figure 10-14.

Figure 10-14 Mill building pano.

Unlike the stacking algorithms, the stitching algorithms are well documented in the public literature. However, they are quite complex, and not much would be gained in a book of this type by going through them.
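One core step is nonetheless easy to show. Before aligning frames taken by panning a camera about a fixed point, stitchers commonly warp each frame onto a cylinder (or sphere), so that a pure pan becomes a simple horizontal shift. The Python sketch below shows the standard cylindrical warp for a pixel offset (x, y) from the image center, assuming the focal length is expressed in pixels. It is a generic illustration, not Panoweaver’s implementation.

```python
import math
from typing import Tuple


def cylindrical_project(x: float, y: float, focal_px: float) -> Tuple[float, float]:
    """Map a pixel offset (x, y) from the image center onto a cylinder of radius focal_px.

    All values are in pixels; the result is (arc length, height) on the
    unrolled cylinder.
    """
    theta = math.atan2(x, focal_px)                 # horizontal viewing angle of the ray
    height = focal_px * y / math.hypot(x, focal_px)
    return focal_px * theta, height


# Two rays 10 degrees apart in pan land exactly focal_px * radians(10)
# apart horizontally on the cylinder, wherever they fall in the frame.
f = 1000.0
a = cylindrical_project(f * math.tan(math.radians(5)), 0.0, f)[0]
b = cylindrical_project(f * math.tan(math.radians(15)), 0.0, f)[0]
print(round(b - a, 3), round(f * math.radians(10), 3))
```

Because panning becomes translation after this warp, adjacent frames can be aligned by searching only for a horizontal (and small vertical) offset, which is one reason a level tripod and consistent overlap make stitching so much more reliable.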

At this point, I have covered all the image post-processing needed to achieve the goals of a gigapixel camera system. It is time to discuss in some detail the point-and-shoot camera, which is the critical part of the first gigapixel camera system.

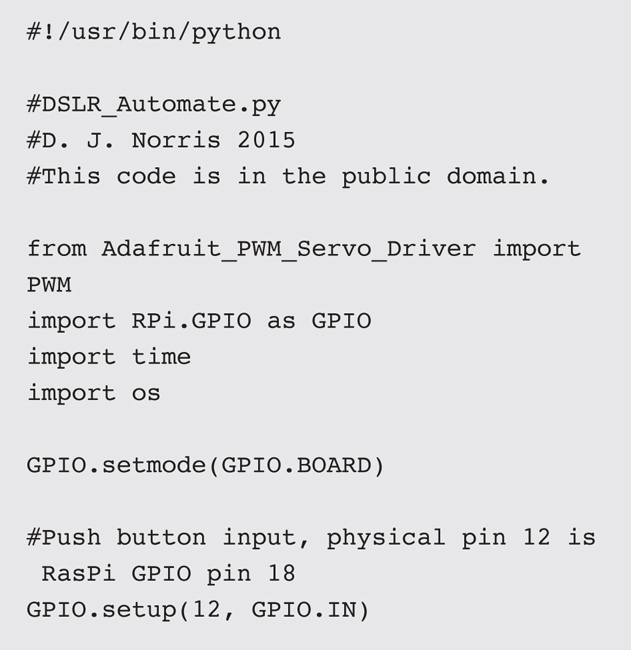

I used a Canon SX160 IS, as mentioned earlier, for the point-and-shoot camera. This is a nice little camera with a decent 16-megapixel sensor and a good-quality 5.0- to 80-mm zoom lens. The camera is not very expensive, and I saved quite a bit by purchasing a refurbished unit. There are many other Canon cameras similar to the one I used, but if you substitute one, you must ensure that it supports the Canon Hack Development Kit (CHDK) firmware, which is discussed later. CHDK is needed so that the camera will accept RasPi commands for shutter activation. No other modifications to the camera are required. Note that installing CHDK will not impair normal camera operation because CHDK is easily bypassed to return the camera to its normal operating condition.

You should be aware that the CHDK cannot control the camera focus operation. It thus will be impossible to implement automated focus stacking using the CHDK firmware. In fact, most modern point-and-shoot cameras do not have any provision for manual lens focus. However, automatic image capture for the stitching operation will be possible because remote shutter activation is possible with this Canon camera.

The Canon camera also has a ¼-20 tripod mount on the bottom of its case, which is used to attach the camera to the pan-and-tilt servomechanism that I discuss next.

Figure 10-15 shows the inexpensive pan-and-tilt servomechanism I used, which is controlled by the RasPi in conjunction with the 16-channel PWM/Servo HAT board I introduced in Chapter 9.

Figure 10-15 Pan-and-tilt servomechanism.

This mechanism has two degrees of freedom (DOFs) and will allow the camera to be tilted in excess of 120°. The same is true of the panning servo. In practice, I expected to make very few tilt adjustments but to rely heavily on panning to create the pano. This is why I made the tilt operation manual but automated the panning.

You also should note that this pan-and-tilt mechanism handles the lightweight point-and-shoot camera just fine but will not be able to move the much heavier DSLR camera, which is the reason I separated the project into two parts.

You will need to remove the C-bracket from the top of the tilt servo and drill out the center hole to provide clearance for the ¼-20 screw that secures the camera to the C-bracket. Figure 10-16 shows this bracket with the center hole enlarged using a ¼-inch drill bit.

Figure 10-16 C-bracket with mounting hole drilled out.

I do wish to point out that these inexpensive pan-and-tilt mechanisms are a commodity product built in China by various suppliers. Other units may not have the same mounting hole as this one, so you will have to be flexible and adapt the mounting arrangement to suit what you have on hand. However, I strongly recommend that you attach the camera to the C-bracket while the bracket is detached from the servo, because it will be impossible to install the mounting screw once the C-bracket is reattached to the servo. Figure 10-17 shows the complete assembly of the camera attached to the pan-and-tilt mechanism.

Figure 10-17 Pan-and-tilt servomechanism with camera mounted.

Note that I also attached the mechanism to a temporary Lexan stand for testing purposes. You don’t have to do this as long as you secure the bottom servo so that the whole assembly does not fall over. I eventually removed the stand and attached the bottom servo to a tripod mount adapter, which I describe later in this chapter.

You also must ensure that the servo cables are free and clear of any pinch points and obviously not in the way of the lens. This will be easy to do once the RasPi has been mounted on the tripod adapter along with the battery supply. Again, all this will be discussed a bit later.

Now it is time to discuss the CHDK firmware, which must be installed on the camera to automate the pano process.

The Canon Hack Development Kit (CHDK) is a unique software application that runs on microprocessors contained in Canon point-and-shoot cameras. CHDK software makes no permanent changes to the camera, which means that the original Canon firmware can be restored, if desired.

CHDK allows control over many camera features, some of which are detailed in Table 10-1.

I do wish to make one item very clear: the CHDK firmware uses only digital pulses to control the camera. It does not use data sent over the USB data lines for camera control. Camera commands are therefore encoded in both the number of pulses and their durations. Of course, the RasPi program will generate all the appropriate pulses and durations for you.
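The pulse idea can be sketched in a hardware-independent way. The Python function below drives an output line high for each requested pulse width, with a low gap between pulses; the line-setting function and the sleep function are passed in, so the logic can be tested without a RasPi. The pulse widths and gap are placeholders: the pulses CHDK actually interprets depend on its remote-control settings and script, which this sketch does not model.

```python
import time
from typing import Callable, Sequence


def send_pulses(set_level: Callable[[bool], None],
                widths_s: Sequence[float],
                gap_s: float = 0.1,
                sleep: Callable[[float], None] = time.sleep) -> None:
    """Drive a line high for each width in widths_s, low for gap_s between pulses."""
    for i, width in enumerate(widths_s):
        set_level(True)   # line high: the camera sees voltage on its USB power pin
        sleep(width)
        set_level(False)  # line low again
        if i < len(widths_s) - 1:
            sleep(gap_s)  # low interval separating consecutive pulses
```

On the RasPi, set_level could wrap RPi.GPIO, for example GPIO.setmode(GPIO.BCM), GPIO.setup(4, GPIO.OUT), and then send_pulses(lambda level: GPIO.output(4, level), [0.5]), assuming the red USB wire is connected to GPIO 4 as in the wiring steps that follow.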

A key component for controlling the camera is to modify a USB cable such that it can transfer digital pulses from the RasPi to the camera. This is the topic of the next section.

You will need to modify an existing USB cable whose plug matches the camera’s mini-USB socket, shown in Figure 10-18.

Figure 10-18 Mini-B USB socket.

The mini-B socket matches the point-and-shoot camera I used, but if you substitute a different Canon camera, just ensure that the cable matches the camera’s USB socket. Next, cut off the standard USB connector at the other end of the cable, and strip back the cable insulation about 1.5 inches to expose the enclosed wires. The good news is that only two wires are used for the connections, and these are shown in Figure 10-19.

Figure 10-19 Two USB control wires.

Typically, the two wires are colored red and black: the red wire connects to pin 1 (+5 V), and the black wire connects to ground (pin 4 on a standard USB-A plug, pin 5 on a mini-USB plug). Not all USB cable manufacturers follow this color-coding convention, so you might have to use a volt-ohm meter (VOM) to confirm the wire-to-pin connections. You also should tin the wire ends, that is, apply a little solder to them, to strengthen them so that they can be easily soldered onto the HAT board. Just be careful because the wires are very small gauge and easily damaged by overheating.

I next set up for an initial test using the same RasPi that was used to control the pan-and-tilt mechanism. You cannot use a Pi Cobbler for the USB cable connections because the servo HAT covers all 40 GPIO pins. Instead, you will need to solder the black USB wire to an open ground point and the red wire to the pin labeled pin 4 on the HAT board. Figure 10-20 shows these wires soldered to the HAT board.

Figure 10-20 USB wires attached to HAT board.

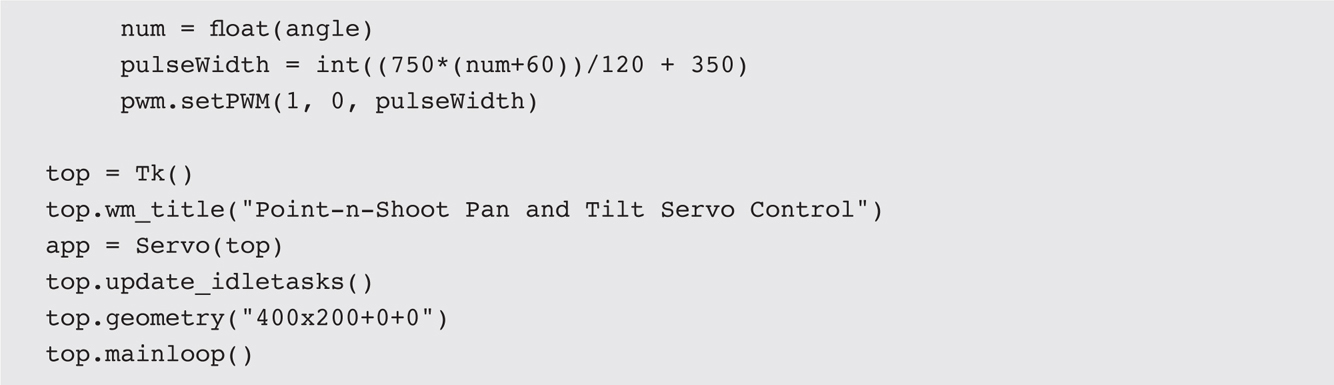

I wrote a simple Python test program that checked out the USB cable connection and ensured that the camera could be controlled by the RasPi. This program is a modified version of the 3-DOF robotic arm program I used in Chapter 9. There are only two sliders in this GUI, one for the pan and the other for the tilt. I also included a GUI button that may be clicked to take a picture. The program is named PnS_Camera1.py and is available on this book’s companion website. It is listed next with comments.

You must be in X Windows to run this program, as was the case for the robotic arm programs. You also must be in the directory specified in Chapter 9 that contains all the robotic arm programs, because this program uses the same Adafruit PWM/Servo library as the robotic arm programs.

The PWM/Servo HAT board must be attached to the RasPi with a connected 5-V servo power supply, as it was set up with the robotic arm tests. In this case, the pan servo is connected to channel 0, and the tilt servo is connected to channel 1.
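The Adafruit library drives the HAT’s PCA9685 chip, which divides each PWM period into 4,096 steps, so a slider angle must ultimately become a step count. The sketch below shows that arithmetic. The 1.0- to 2.0-ms pulse range over 180° and the 60-Hz PWM frequency are illustrative assumptions; the actual limits of your servos may differ, so check them before commanding extreme positions.

```python
def pulse_us_to_ticks(pulse_us: float, freq_hz: float = 60.0, steps: int = 4096) -> int:
    """Convert a pulse width in microseconds to a 12-bit PCA9685 'off' count."""
    period_us = 1_000_000.0 / freq_hz
    return round(pulse_us / period_us * steps)


def angle_to_ticks(angle_deg: float, min_us: float = 1000.0, max_us: float = 2000.0,
                   span_deg: float = 180.0, freq_hz: float = 60.0) -> int:
    """Linearly map a servo angle onto a pulse width, then onto PCA9685 counts."""
    angle_deg = max(0.0, min(span_deg, angle_deg))  # clamp to the servo's range
    pulse_us = min_us + (max_us - min_us) * angle_deg / span_deg
    return pulse_us_to_ticks(pulse_us, freq_hz)


print(angle_to_ticks(0), angle_to_ticks(90), angle_to_ticks(180))  # 246 369 492
```

Assuming the older Adafruit_PWM_Servo_Driver PWM class, the result would be used as the off count after setting the frequency, for example pwm.setPWMFreq(60) followed by pwm.setPWM(0, 0, angle_to_ticks(90)) for the pan servo on channel 0.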

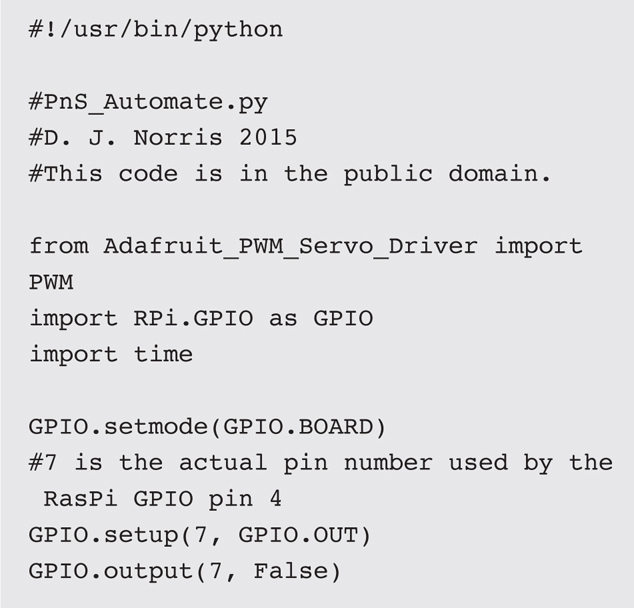

Enter the following commands to run the camera program:

Figure 10-21 is a screenshot of this program running on a RasPi.

Figure 10-21 PnS_Camera1 program GUI.

You should be able to easily tilt and pan the camera using the sliders. Just be careful: the camera is heavy enough to tip the stand over if it is tilted too far forward or backward.

The next step in completing the point-and-shoot camera system is to build a tripod adapter to hold all the components, including a hefty battery supply for the RasPi and the servomechanism.

I will start this section by stating that my design is not the only way to build a frame that will safely hold all the components and yet allow you to mount them on a sturdy tripod for the picture taking. After some experimenting, I settled on an O-frame structure made out of ⅛- × 1.5-inch aluminum flat stock, which allowed me to mount all the items without too much difficulty in machining yet was strong enough and light enough to be mounted on a normal camera tripod. I used a typical tripod adapter, called a shoe plate, that matched my Vanguard tripod. These adapters are inexpensive and are really the only proper way to mount devices on tripods. Just be sure that you purchase an adapter that fits your tripod because they come in several sizes.

The shoe plate then was mounted to the bottom of the O-frame using a ¼-20 machine screw, thumbscrew, and washer to spread the load out onto the frame. Figure 10-22 shows the O-frame with the shoe plate attached.

Figure 10-22 O-frame with shoe plate attached.

I next had to fashion a small L-bracket, which I attached between the existing bottom servo C-frame and the top portion of the aluminum O-frame. This bracket also serves as the connecting plate between the ends of the O-frame. I found this to be the most convenient way to join the O-frame ends unless you happen to have an aluminum welding rig. Figure 10-23 shows this small L-bracket, which I made from a strip of aluminum flat stock.

Figure 10-23 Aluminum L-frame holds the servos to the top of the steel C-frame.

The RasPi-HAT combination is attached to the vertical portion of the C-frame using an inexpensive plastic case designed for a Model B+. I first attached the case using 4-40 machine screws/nuts and then inserted the RasPi into the case. Just be sure that there is sufficient slack in the servo wires connecting to the HAT board. Figure 10-24 shows the completed assembly mounted on a tripod.

Figure 10-24 Complete point-and-shoot tripod adapter.

Notice that I simply placed two battery packs on the bottom portion of the O-frame. One pack consists of four AA batteries, which power the servos. The other is a commercial cell phone battery extender, which powers the RasPi. Both packs should provide sufficient capacity for at least one photographic expedition. This arrangement allows you to carry along some fresh AA batteries as well as an extra cell phone battery extender. Having the battery packs resting freely on the tripod adapter makes swapping them a simple task.

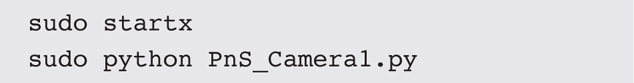

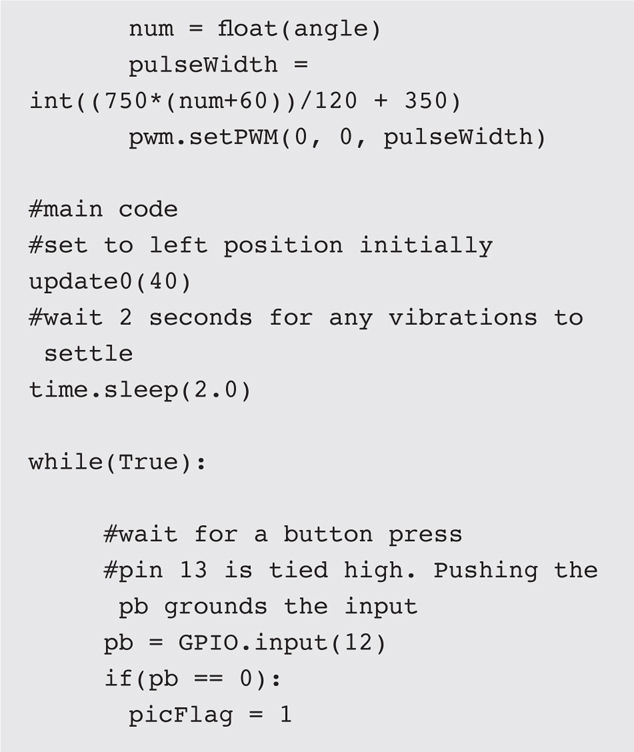

The only task left is to create a modified version of the program that automates the panning and picture-taking functions. It will be started by a physical push button because you do not want to have to use a monitor, keyboard, and mouse to access the RasPi.

The changes required to automate the existing point-and-shoot program are extensive. I first removed all the GUI components because they are not needed in this self-contained program. I next added a forever loop that, when a user presses a push button, causes the camera to pan from left to right in 30° increments, pausing 5 seconds after each move. The shutter is activated after a 2-second delay to allow any vibrations caused by the camera motion to subside. Figure 10-25 shows the schematic for the push-button connection, which I recommend wiring directly to the HAT board. Any normally open push button can be used. Just don’t forget to add the 10-kΩ resistor; otherwise, you will be shorting the 3.3-V supply to ground, which would not be a good thing!
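The control flow just described can be sketched independently of the hardware. The example below is not the author’s PnS_Automate.py; it is a minimal sketch in which the button reader, pan function, shutter function, and sleep function are all passed in as parameters. It assumes 0° is the far-left starting position and five stops (0° to 120°), and it applies a 2-second settling delay before each exposure and a dwell afterward; adjust the timing to match your own camera.

```python
import time
from typing import Callable, List


def wait_for_press(read_button: Callable[[], bool],
                   poll_s: float = 0.05,
                   debounce_reads: int = 3,
                   sleep: Callable[[float], None] = time.sleep) -> None:
    """Block until the button reads pressed on several consecutive polls (simple debounce)."""
    consecutive = 0
    while consecutive < debounce_reads:
        consecutive = consecutive + 1 if read_button() else 0
        if consecutive < debounce_reads:
            sleep(poll_s)


def pano_sequence(move_pan: Callable[[float], None],
                  shutter: Callable[[], None],
                  start_deg: float = 0.0,
                  step_deg: float = 30.0,
                  shots: int = 5,
                  settle_s: float = 2.0,
                  dwell_s: float = 5.0,
                  sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Pan left to right, firing the shutter at each stop, then return to the start."""
    angles = [start_deg + i * step_deg for i in range(shots)]
    for angle in angles:
        move_pan(angle)
        sleep(settle_s)   # let vibration from the move die down
        shutter()
        sleep(dwell_s)    # give the camera time to save the image
    move_pan(start_deg)   # park at the far-left start, ready for the next run
    return angles
```

The forever loop then reduces to repeatedly calling wait_for_press followed by pano_sequence, with hypothetical helpers such as pan_to(angle) (for example, built on the HAT’s setPWM call) and fire_shutter() (for example, a single pulse on the CHDK cable), and with the button read on whichever GPIO pin you wired in Figure 10-25.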

Figure 10-25 Push-button connection.

I mounted the push button on an L-shaped piece of Lexan for easy access on the tripod adapter. Figure 10-26 is a dimensioned sketch of this Lexan piece. Note that I used a hot-air gun to soften the Lexan, which allowed me to bend it into an L shape.

Figure 10-26 Push-button mounting bracket sketch.

Figure 10-27 shows the push button mounted on the Lexan and connected to the RasPi.

Figure 10-27 Mounted push button connected to the RasPi.

Tilt control was not enabled because you want the camera to stay in a steady horizontal plane as it is panned. It is easy to adjust the tilt angle gently by hand because no power is applied to the tilt servo. Simply leave the tilt angle alone once you have adjusted the camera to the desired plane.

The program also was configured to autostart after the power was applied to the RasPi. I explain how to configure the RasPi for an autostart configuration after the program listing.

This program also ensures that the camera always starts the picture-taking process facing to the left. I named the program PnS_Automate.py, and it is listed next. As always, it is also available on this book’s companion website.

The program is stopped by disconnecting the power to the RasPi. I realize that this is not the preferred way to end a program, but it should not cause any data disruptions because no configuration data are being saved, and it is much easier than adding a dedicated on/off button. However, I would definitely have added an on/off button if this were a commercial product.

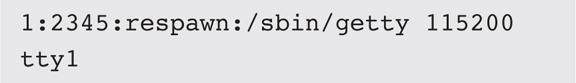

Be sure that you have installed the push button and resistor before doing this configuration. Otherwise, you will not know if the program is actually running. You will next need to make the following changes to the inittab file:

sudo nano /etc/inittab

Comment out the following line:

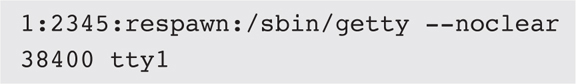

Note that the line may appear like this in more recent versions of the Wheezy distro:

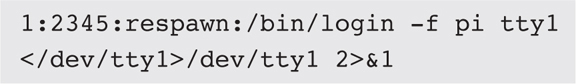

Add this line after the line just commented out:

Save the file, and exit the nano editor.

Next, edit the profile file:

sudo nano /etc/profile

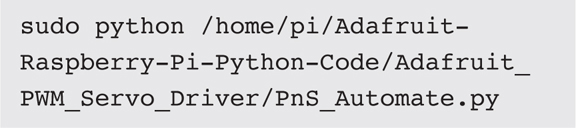

Add the following line to the file (do not add any line returns):

Save the file, and exit the nano editor.

You should be ready to test the autostart configuration. Just turn on the camera, and connect the servo power supply to the HAT board and the 5-V power supply to the RasPi. You then will need to wait about 45 seconds for the initial boot sequence to finish. Then press the push button, and the camera should move to the left, if not already there, and take a picture. The camera will move again after several seconds and take another picture and continue this sequence three more times. Once at the far right, it will take the final picture and then reposition itself to the far left, ready for another picture-taking sequence. Remember that only one image-capture sequence is needed because there is no way to change the focus on this camera, and no focus stacking can be accomplished.

Creating the actual pano image was discussed in a previous section. All you need to do is to put the camera’s SD card into the computer hosting the Panoweaver program and drag and drop the images into the application. It is very easy, and the results are well worth the effort. The only disadvantage to this whole process is that you will need a printer capable of handling large formats if you wish to print the final pano, and that is an expensive purchase.

This last test completes the point-and-shoot portion of the gigapixel camera project. At this point, I will go on to discuss the DSLR pano project.

I used a Canon 70D DSLR as the camera for this portion of the chapter’s project. It is an excellent camera that, when used with an appropriate lens, is fully capable of taking high-quality pictures. I paired it with a Tamron 24- to 70-mm f/2.8 zoom lens because it has a good focal-length range for panos and its optical quality is outstanding. Another advantage of this lens is that it is relatively light, which minimizes any overloading of the pan-and-tilt servomechanism. Figure 10-28 shows the 70D with the Tamron lens attached.

Figure 10-28 Canon 70D camera with a Tamron 24- to 70-mm lens.

The DSLR camera and lens assembly is far too heavy to be used with the same pan-and-tilt servo assembly employed in the point-and-shoot camera project. Instead, I used a heavy-duty unit purchased from ServoCity.com, which is shown in Figure 10-29.

Figure 10-29 Heavy-duty DSLR pan-and-tilt servo mechanism.

The servos used in this mechanism are Hitec HS-785HBs, which are 3.5-turn winch-style servos. They rotate 3.5 full turns, for a total range of motion of 1260°, compared with the typical servo range of 120° or 180°. Winch servos are so named because they are used in R/C sailboats to wind and unwind the lines that trim the model’s sails. In this pan-and-tilt configuration, the servo turns a small gear, which, in turn, drives a much larger gear, as you can readily see in the figure. This gear reduction scales the servo’s extended range of motion down to a range appropriate for the pan-and-tilt operation. In addition, the gear ratio provides a significant increase in available torque to easily handle the heavy DSLR-lens combination.
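The gear-reduction trade-off is simple arithmetic, sketched below. The 20- and 70-tooth gear counts are hypothetical values chosen for illustration, not the actual ServoCity gear sizes, and the torque figure ignores friction unless you supply an efficiency factor.

```python
def reduction_ratio(small_gear_teeth: int, large_gear_teeth: int) -> float:
    """Gear reduction when a small (servo) gear drives a large (output) gear."""
    return large_gear_teeth / small_gear_teeth


def output_angle_deg(servo_angle_deg: float, ratio: float) -> float:
    """Output rotation: the servo's rotation divided by the reduction ratio."""
    return servo_angle_deg / ratio


def output_torque(servo_torque: float, ratio: float, efficiency: float = 1.0) -> float:
    """Output torque: servo torque multiplied by the ratio, less gear losses."""
    return servo_torque * ratio * efficiency


# Hypothetical 20-tooth servo gear driving a 70-tooth platform gear (3.5:1):
ratio = reduction_ratio(20, 70)
print(ratio, output_angle_deg(1260, ratio))  # 3.5 360.0
```

With such a ratio, the servo’s full 1260° of travel would map to one full turn of the platform, and the torque available at the platform would be multiplied by the same factor of 3.5, before losses.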

The DSLR is easily mounted to the integral platform on the pan-and-tilt mechanism using a standard ¼-20 tripod mounting screw, which is widely available at camera shops, or you can use a shallow head ¼-20 machine screw, if available. I also added a tripod shoe plate to the bottom of the mechanism, which allowed me to use my Vanguard tripod, just as I did with the point-and-shoot arrangement. Figure 10-30 shows the DSLR mounted on the pan-and-tilt mechanism.

Figure 10-30 DSLR on the pan-and-tilt mechanism mounting plate.

The next part of this chapter introduces the gphoto2 software package that I used to control image capture for the DSLR.

Unlike the point-and-shoot camera, the DSLR used for this part of the pano project is controlled using digital command sequences sent through the USB cable from the RasPi. A software package named gphoto2 accomplishes this in a very efficient manner and is able to control a substantial number of the DSLR functions using programmed command sequences. Of course, I will still be using a Python program to control the pan-and-tilt servos because that part of the project is not accommodated by the gphoto2 software. In fact, as you will shortly see, the actual gphoto2 command sequences, which are needed to create the pano, are embedded into the Python program. But first I need to show you how to install and configure the gphoto2 software package.

The RasPi must be connected to the Internet for this procedure to work. Follow these steps to install and configure the gphoto2 software:

1. sudo apt-get update

2. sudo wget raw.github.com/gonzalo/gphoto2-updater/master/gphoto2-updater.sh

3. sudo chmod 755 gphoto2-updater.sh

4. sudo ./gphoto2-updater.sh

(Be patient; this step takes about 35 minutes on a RasPi 2 and 1 hour and 7 minutes on a RasPi B+.)

You will next need to perform the following steps to ensure that the camera mounts properly:

1. sudo rm /usr/share/dbus-1/services/org.gtk.Private.GPhoto2VolumeMonitor.service

2. sudo rm /usr/share/gvfs/mounts/gphoto2.mount

3. sudo rm /usr/share/gvfs/remote-volume-monitors/gphoto2.monitor

4. sudo rm /usr/lib/gvfs/gvfs-gphoto2-volume-monitor

Reboot after completing the preceding steps:

sudo reboot

You will need to connect the DSLR to the RasPi using an appropriate USB cable. The Canon 70D uses the same style of mini-USB socket as the one shown in Figure 10-18. Enter the following command once the camera and RasPi are connected and both are turned on:

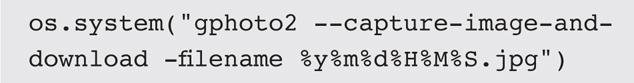

gphoto2 --capture-image-and-download

The camera should take a picture and then download it to the RasPi, saving it in the directory from which the preceding command was issued. Assuming the camera's default setting is to take JPEG images, the downloaded file will be named capt0000.jpg.

Successfully taking a picture and downloading it to the RasPi verifies that gphoto2 is working properly and is ready for the next steps in this project: creating and running a program that manually controls the pan-and-tilt servos and then takes and downloads a picture.

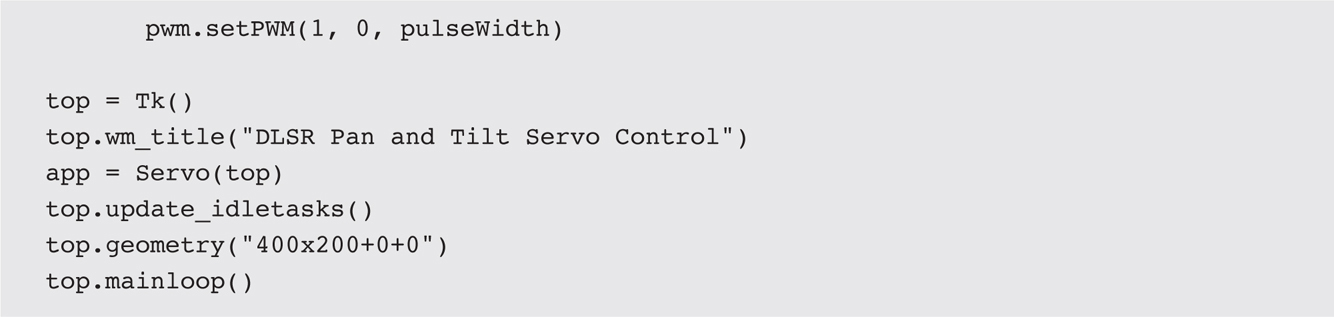

This manual control program is a modified version of the PnS_Camera1.py program I used with the point-and-shoot camera. However, that program required the CHDK firmware to be installed in the camera, which is not needed for the DSLR. The gphoto2 software takes care of all the low-level interactions between the camera and the RasPi control program, which makes the camera interface quite easy to program. I named the program DSLR_Camera.py and list it next; it is also available on this book's companion website. Note that I added RasPi pin 18 as an input in this code, but it is not used until the next version, which automates the process.

As with all the other similar programs, you must be in X Windows to run this program. Also, be sure that you are in the same directory that contains the Adafruit PWM/Servo library.

I used the same PWM/Servo HAT board that was used with the point-and-shoot system and connected the pan servo to channel 0 and the tilt servo to channel 1. You must also ensure that the 5-V supply connected to the HAT board is capable of providing a minimum of 2 A (peak) because these servos use more current than the point-and-shoot servos.
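The servo slider positions ultimately become pulse widths generated by the PWM/Servo HAT, whose PCA9685 controller divides each PWM period into 4,096 steps. The sketch below converts an angle into that step count. The 60-Hz frequency and the 1,000 to 2,000 µs pulse range are assumptions (typical hobby-servo values, not figures from the text), so calibrate them for the heavier servos used here. With the legacy Adafruit library, the result would be passed as the "off" count, for example pwm.setPWM(PAN_CHANNEL, 0, ticks), but check the API of the library version you have.

```python
PAN_CHANNEL = 0       # pan servo on HAT channel 0 (from the text)
TILT_CHANNEL = 1      # tilt servo on HAT channel 1 (from the text)
PCA9685_STEPS = 4096  # the PCA9685 has 12-bit PWM resolution


def angle_to_ticks(angle: float, freq_hz: float = 60.0,
                   min_pulse_us: float = 1000.0, max_pulse_us: float = 2000.0,
                   max_angle: float = 180.0) -> int:
    """Convert a servo angle to a PCA9685 'off' count.

    Assumes a linear servo: 0 degrees -> min_pulse_us and
    max_angle -> max_pulse_us. The defaults are typical hobby-servo
    values, not measured ones; calibrate them for your servos.
    """
    if not 0.0 <= angle <= max_angle:
        raise ValueError(f"angle must be between 0 and {max_angle} degrees")
    pulse_us = min_pulse_us + (max_pulse_us - min_pulse_us) * angle / max_angle
    period_us = 1_000_000.0 / freq_hz
    # fraction of the period spent high, scaled to the 12-bit counter
    return round(pulse_us / period_us * PCA9685_STEPS)
```

At 60 Hz, for instance, a 1,500 µs pulse (90 degrees with these defaults) occupies 9 percent of the 16.7-ms period, or about 369 of the 4,096 steps.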

Be sure that you are in the proper directory, and then enter the following to run this program:

sudo python DSLR_Camera.py

Figure 10-31 shows the resulting GUI display after starting this program.

Figure 10-31 DSLR_Camera GUI.

I clicked the button and repositioned the servo slider controls to confirm that the camera and servos responded as they should.

All image captures are stored on the RasPi SD card instead of the camera, which I believe will simplify the postprocessing workflow. You can easily change this by editing the capture command in the program: replace the gphoto2 option --capture-image-and-download with --capture-image.

All the images will then be stored on the camera instead. Also notice that the images are all automatically named with the date and time, to the second, at which each picture was taken. This ensures that every image file name is unique and makes it easy to determine the order in which the pictures were taken.
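The text does not show how the program builds its date-and-time file names, so the following is only a sketch of one way to do it: Python formats the capture time itself and passes the result to gphoto2's --filename option. The function name capture_command and the YYYYmmdd-HHMMSS.jpg pattern are hypothetical, and JPEG output is assumed. Setting store_on_camera=True produces the --capture-image variant, which leaves the picture on the camera's card.

```python
from datetime import datetime
from typing import List


def capture_command(when: datetime, store_on_camera: bool = False) -> List[str]:
    """Build a gphoto2 capture command as an argument list.

    Hypothetical naming scheme: YYYYmmdd-HHMMSS.jpg, JPEG assumed.
    With store_on_camera=True nothing is downloaded to the RasPi.
    """
    if store_on_camera:
        return ["gphoto2", "--capture-image"]
    name = when.strftime("%Y%m%d-%H%M%S") + ".jpg"
    return ["gphoto2", "--capture-image-and-download", "--filename", name]
```

A zero-padded year-month-day-hour-minute-second pattern also sorts alphabetically in capture order, so a plain directory listing shows the shooting sequence. The list can be handed directly to subprocess.run.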

The DSLR focus cannot be programmed using gphoto2 for image capture. gphoto2 can change the focus while the camera is in live view mode, in which the mirror is locked up and the viewfinder consequently cannot be used. Unfortunately, the mirror must be unlocked and the camera out of live view mode in order to take a picture. This means that you will need to take several series of pictures at different focus points to obtain the input images for both focus stacking and stitching.

I suggest starting the whole process by manually focusing on a nearby point and running an automated panning sequence. Next, readjust the focus to a middle distance between nearby and distant objects and repeat the automated panning sequence. Finally, focus at infinity and run one more automated panning sequence. You will then need to review all the images, focus stack the images taken at each pan position, and stitch the stacked results together. Assuming four pan positions, a total of 12 images will be processed: four stacks of three images each, followed by one stitch of the four stacked images. This is not too much work considering how easy the stack and stitch applications are to use.
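Keeping the 12 images straight is mostly bookkeeping. Assuming the three focus sweeps were shot one after another, each visiting the four pan positions in the same order, and that the file names sort chronologically (as the date-and-time names described earlier do), image k belongs to pan position k mod 4. The sketch below, with a hypothetical function name, groups the files into one three-image stack per pan position.

```python
from typing import Dict, List, Sequence


def group_for_stacking(files: Sequence[str], pan_positions: int = 4,
                       focus_sweeps: int = 3) -> Dict[int, List[str]]:
    """Group chronologically named images into one focus stack per pan position.

    Image k (in sorted order) was taken in sweep k // pan_positions at pan
    position k % pan_positions, so each position collects one image per sweep.
    """
    expected = pan_positions * focus_sweeps
    if len(files) != expected:
        raise ValueError(f"expected {expected} images, got {len(files)}")
    stacks: Dict[int, List[str]] = {p: [] for p in range(pan_positions)}
    for k, name in enumerate(sorted(files)):
        stacks[k % pan_positions].append(name)
    return stacks
```

Each list, ordered near, middle, and infinity focus, goes into the focus stacking application; the four stacked results then go into the stitching application.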

I will next discuss how I mounted the RasPi along with the activation push button to the heavy-duty pan-and-tilt mechanism.

I mounted the RasPi and the activation push button on a piece of clear Lexan, which is, in turn, mounted on the pan-and-tilt mechanism. Figure 10-32 is a sketch of this mounting plate. You can use material other than Lexan if that is what you have available. Just be sure that it is sufficiently sturdy to support the RasPi and any battery packs hanging from it.

Figure 10-32 RasPi mounting plate.

The push button is also mounted on an L-shaped piece of Lexan, as was the case for the point-and-shoot system. Figure 10-26 is a sketch of the push-button L-shaped mounting bracket. Fortunately, the rotating base leg is approximately 2.5 inches above the mounting base, which allows plenty of room for the RasPi and push button to be positioned without interfering with leg motion.

The RasPi is attached to the mounting plate with a simple L-shaped bracket, as shown in Figure 10-33 . The clear plastic case I used has a slot in the long wall opposite the wall with the HDMI cutout, which is a convenient feature to hold a small 4-40 machine screw.

Figure 10-33 RasPi mounting bracket.

Figure 10-34 shows the complete assembly with camera, RasPi, and supporting battery packs mounted on my Vanguard tripod.

Figure 10-34 Complete DSLR assembly mounted on tripod.

This whole assembly is heavy, and I would caution anyone against trying to carry all of it as a single package. The assembly also seems to have a stable center of gravity (CG), meaning that it should not tip over once set up on a firm tripod, which is, in turn, set on level ground.

The camera, when secured to the pan-and-tilt mounting plate, will swing down unless the tilt servo is powered on. However, I did not want to continually power this servo because the camera and lens combination puts a considerable bending load on the servo. I found that it started to get warm if I left it powered on with the camera in place. Because there is no need for an automated tilt operation, I configured a simple stop plate, which I made out of a piece of Lexan. Figure 10-35 is a sketch of this stop plate.

Figure 10-35 Lexan stop plate.

The plate was held in place by a ¼-20 machine screw secured with a wing nut. The machine screw went through one of the camera mounting plate slots, as you can see in Figure 10-36.

Figure 10-36 Stop plate attached to the camera mounting plate.

This arrangement may not be elegant, but it is effective, and it literally saves the tilt servo from overheating and perhaps failing.

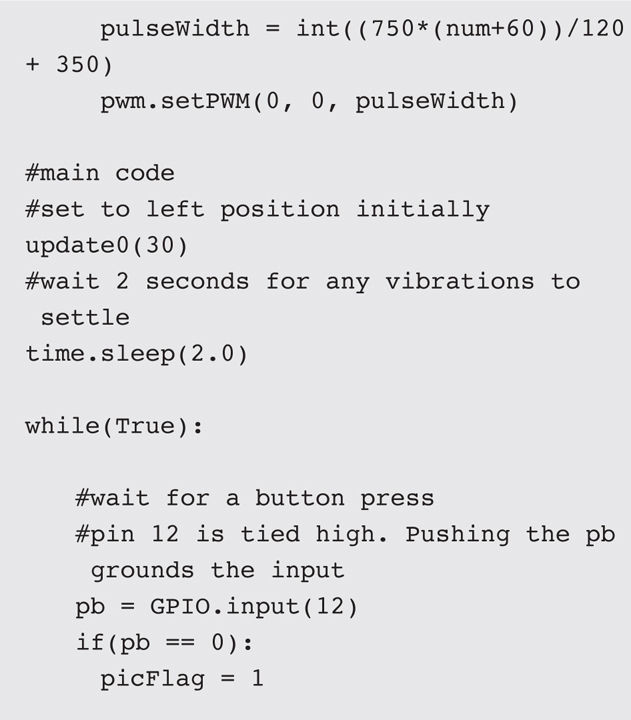

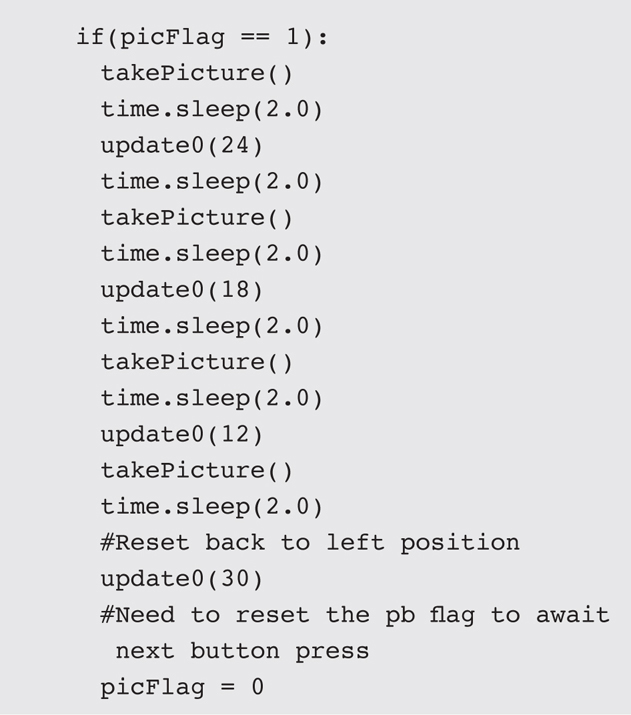

At this point, I will show you the automation program, which functions almost identically to the point-and-shoot version. The only difference is that the gphoto2 application is used to capture an image instead of a digital pulse from one of the RasPi GPIO pins. There are no GUI features in this program, but there is a check for the user pressing the activation button, which is identical in function to the one used for the point-and-shoot system. The program is named DSLR_Automate.py and is available on the companion website.
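The automation program's flow as described here (wait for the activation button, then pan and capture at each position) can be sketched as a simple loop. This is not DSLR_Automate.py itself: the function and parameter names are hypothetical, and the hardware actions are passed in as functions so the sequence can be checked without servos or a camera. On the real system, wait_for_button might poll RasPi pin 18, move_pan might drive HAT channel 0, and capture might run gphoto2 --capture-image-and-download.

```python
import time
from typing import Callable, Sequence


def run_pan_sequence(
    pan_angles: Sequence[float],
    wait_for_button: Callable[[], None],
    move_pan: Callable[[float], None],
    capture: Callable[[], None],
    settle_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Wait for the activation button, then pan and capture at each angle.

    Hypothetical structure with injected hardware actions.
    Returns the number of pictures taken.
    """
    wait_for_button()       # block until the activation button is pressed
    for angle in pan_angles:
        move_pan(angle)     # drive the pan servo to the next position
        sleep(settle_s)     # assumed settling delay so the camera stops swaying
        capture()           # take (and download) one picture
    return len(pan_angles)
```

The pan angles themselves still have to be tuned to the servo, as described next.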

When I first ran the program, the RasPi did not connect to or detect the DSLR. This is easily remedied by turning the camera off and then on again; my research suggests that it is a known issue with the gphoto2 USB implementation. Other than that problem, everything went smoothly with the first test of this program. I also kept the RasPi connected in a workstation configuration because I knew that I would have to adjust the servo angle parameters to match the pan requirements for that servo. The numbers in the program listing reflect the correct angles for the initial and subsequent pan positions. Figure 10-37 is a screenshot of the RasPi terminal display for a series of pictures.
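When the camera is not detected, gphoto2 --auto-detect is a quick way to check what the RasPi sees before and after power-cycling the DSLR. The parser below is a sketch that assumes the usual output layout: a header line, a dashed separator line, then one line per camera ending in its port (such as usb:001,004). The function name is hypothetical, and you should compare the assumed layout with your gphoto2 version's actual output.

```python
from typing import List, Tuple


def parse_auto_detect(output: str) -> List[Tuple[str, str]]:
    """Parse `gphoto2 --auto-detect` text into (model, port) pairs.

    Assumed layout: header line, dashed separator, then one camera per
    line with the port as the last whitespace-separated field.
    """
    cameras = []
    seen_separator = False
    for line in output.splitlines():
        if not seen_separator:
            # skip everything up to and including the dashed separator
            seen_separator = line.strip().startswith("---")
            continue
        fields = line.strip().rsplit(None, 1)
        if len(fields) == 2:
            cameras.append((fields[0].strip(), fields[1]))
    return cameras
```

An empty list is the cue to turn the camera off and on again before starting the pan sequence.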

Figure 10-37 Terminal display after several pan operations completed.

If you examine the date/time stamps on the image files, you should notice that it takes about 16 seconds to pan, take a picture, download it to the RasPi, and finally delete the image from the camera itself. With four pan positions, plan on about a minute for a complete panning sequence.
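The timing estimate is simple arithmetic: with four pan positions at roughly 16 seconds each, one sweep takes about 4 × 16 = 64 seconds, so the three focus sweeps suggested earlier need a little over 3 minutes of shooting. If the images carry timestamp names, the intervals can be measured directly. This sketch assumes the hypothetical YYYYmmdd-HHMMSS.jpg pattern, so adjust fmt to whatever your program actually produces.

```python
from datetime import datetime
from typing import List, Sequence


def capture_intervals(filenames: Sequence[str],
                      fmt: str = "%Y%m%d-%H%M%S") -> List[float]:
    """Return the seconds between consecutive captures.

    Assumes timestamp names such as 20150704-142530.jpg (hypothetical
    pattern); the part before the extension is parsed with strptime.
    """
    times = sorted(datetime.strptime(name.rsplit(".", 1)[0], fmt)
                   for name in filenames)
    return [(b - a).total_seconds() for a, b in zip(times, times[1:])]
```

Summing the returned intervals gives the length of one sweep, which makes it easy to see whether the 16-second figure holds for your camera and memory card.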

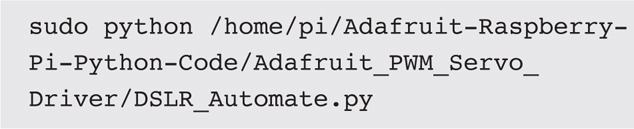

Once you have confirmed that the pan operation works properly and the camera takes the pictures as commanded, it is time to configure the autostart.

Follow the same procedure I described in the point-and-shoot section, except that the following line should be put in the profile file:

You should be all ready for the field once you have completed the changes and rebooted the RasPi.

Finally, Figure 10-38 is a pano image showing the impressive capabilities of this system after all the individual images have been stacked and stitched. The book's monochrome presentation really doesn't do it justice, which is why I have asked that a full-color version be posted on this book's companion website. Please take a look at it to appreciate what this gigapixel camera system can do.

Figure 10-38 Sample stacked and stitched pano image.

This chapter’s project demonstrated how to build two variations of a camera system that is capable of creating a panoramic (pano) image composed of multiple images. One variation was designed for a point-and-shoot type of camera and the other for a digital single-lens reflex (DSLR) camera. The beginning portions of this chapter concerned the stack and stitch postprocessing software that is used to create the ultimate pano. I used specialty software in lieu of a do-all application such as Photoshop because I found that the special-purpose applications were both easier to use and more efficient than the general-purpose application.

The next sections concerned how to assemble and program the point-and-shoot system. I noted that my design required the use of a Canon camera because CHDK firmware needed to be installed in the camera to enable remote shutter activation. The RasPi was used to control the pan and image-capture operations to generate the required number of images for the pano generation. I demonstrated both manual control and fully automated programs for this system.

The concluding sections of the chapter basically repeated the point-and-shoot discussions, adapted to handle the DSLR camera and its requirements. The two biggest changes were the use of a very heavy-duty pan-and-tilt mechanism capable of moving the DSLR and the use of the gphoto2 application to control image capture. I demonstrated both manual and fully automated control programs for this DSLR system, as I had done for the point-and-shoot system.