By this point, you might be ready to concede that the business of amassing and deploying knowledge of words and language structure is more involved than you initially thought. But once basic language skills are in place and words can be dependably retrieved for language production or comprehension, and once the machinery for assembling well-formed sentences and computing their meanings is running smoothly, we’re home free, right?

Not exactly. Try reading the following collection of impeccably formed English sentences:

Frank became convinced that his brother, a handsome and witty doctor, was having an affair with his wife. The doctor warned her that it was only a matter of months until probable death. Her only hope was to undergo a disfiguring surgery. But she was afraid to do so. She lingered for some time, but eventually, Frank had to confront the fact that she was gone from his life. Then he learned the truth. Racked with sorrow, he killed himself. It was a brutal stab in the back. She thought that she should eventually tell Frank. Frank’s wife was secretly being treated for a dangerous illness. He was consumed with rage over it.

For added fun, now look away from the text and try to paraphrase what you’ve just read. I’ll admit the passage is hard to make sense of. But there’s nothing wrong with the sentences themselves. In fact, they seem to pose no problem at all when arranged in a somewhat different order, like this:

Frank became convinced that his brother, a handsome and witty doctor, was sleeping with his wife. It was a brutal stab in the back. He was consumed with rage over it. Then he learned the truth. His wife was secretly being treated for a dangerous illness. The doctor warned her that it was only a matter of months until probable death. Her only hope was to undergo a disfiguring surgery. She thought that she should eventually tell Frank. But she was afraid to do so. In the end, she lingered for some time, but eventually, Frank had to confront the fact that she was gone from his life. Racked with sorrow, he killed himself.

Why is the second version so much easier to read than the first? It’s not just that this version is “orderly” and the first one is “disorganized.” The order of the sentences matters because our understanding of the passage is supplied only partially by the language itself; the rest of its meaning is filled in by the connections that we draw between sentences and the extra details that we throw in.

Normally, when people talk about “reading between the lines,” they have in mind some especially skilled or attentive scrutiny of the message; the phrase usually refers to hunting for some underlying meaning that’s been slipped in or hidden, invisible to anyone who’s not carefully looking for it. But in reality, whether as hearers or readers, we read between the lines of language all the time and without even thinking about it. Further, as producers of language, we rely on our audience to be able to do it. Take the seemingly complete sentence The doctor warned her that it was only a matter of months until probable death. There are many pieces of information that this sentence leaves out. We know that some doctor (but we don’t know exactly which one) warned someone female (but who?) that someone (but who?) would likely die (but from what?) in a matter of months (but how many?). Because this sentence is nestled among others in the two preceding passages, much of this information gets filled in, though the result is somewhat different in the two contexts:

Frank became convinced that his brother, a handsome and witty doctor, was having an affair with his wife. The doctor warned her that it was only a matter of months until probable death.

His wife was secretly being treated for a dangerous illness. The doctor warned her that it was only a matter of months until probable death.

Because a specific doctor and a specific female have already been mentioned in each version, we can easily figure out who is referred to by the doctor and by her. But only the second context leads to a clear and sensible inference about whose death is under discussion. In the first context, we’re left wondering exactly who will die. The wife? Her lover, the doctor? Will they be murdered by the husband? The story only gets more mysterious with the sentence Her only hope was to undergo a disfiguring surgery. If you look back at the first passage, you’ll see that much of its jarring effect comes from the fact that you can’t help but try to make connections among the pieces of the text, sometimes with bizarre effects.

Hearers and readers can be counted on to bring this connection-making mindset to the task of language comprehension, which in turn has a powerful effect on the choices that a speaker makes about how much meaning gets packed into the language itself. If all meaning had to be encoded explicitly through language, we would end up with stories that sound like this:

Frank became convinced that Frank’s brother, a handsome and witty doctor, was sleeping with Frank’s wife. According to Frank’s belief, the fact that Frank’s brother was sleeping with Frank’s wife was a horrible betrayal by Frank’s brother and Frank’s wife, much like the experience of Frank being brutally stabbed in the back by Frank’s brother and Frank’s wife. Frank was consumed with rage over Frank’s belief that Frank’s brother and Frank’s wife were sleeping together. Then Frank learned the truth about the situation between Frank’s brother and Frank’s wife.

This passage is hard to read (not to mention highly annoying), even though it is meant to take the guesswork out of comprehension.

Any account of how human minds engage with language has to grapple with the fact that the meaning that’s conveyed by the actual linguistic code has to be dovetailed with knowledge that comes from other sources. These “other sources” don’t just represent icing on the cake of linguistic meaning. They interact with linguistic form and meaning in complex ways, and without them it would be impossible for us to use language to communicate efficiently.

The goal of this chapter is to give you a sense of the wide-ranging ways in which we all “read between the lines” of language, using the linguistic content of sentences as a starting point—and not the end point—for the construction of an enriched meaning representation. You’ll see how we fill in certain details that are not provided by the language itself; we do this by mentally re-creating the real-world situations that gave rise to the sentences in question. This allows us, among other things, to infer cause–effect relationships between sentences even when they’re not explicitly stated; to have a clear sense of how things and events that are described in a text are related in real time and space; to add vivid perceptual detail to our understanding of a narrative; to understand metaphors; and to draw very precise meanings from linguistic expressions that are inherently vague, such as words like she or his.

11.1 From Linguistic Form to Mental Models of the World

The whole purpose of talking (or writing) to others is to implant certain thoughts in their minds (often with the goal that these thoughts will lead to specific actions). At its heart, then, language comprehension involves transforming information about linguistic form into thought structures. The linguistic code constrains these thought structures, but on its own is not enough to determine them. Let’s start by taking a look at what the linguistic code does and does not contribute to meaning.

What do sentence meanings look like?

Consider a sentence like Juanita kissed Samuel. Your knowledge of English keeps you from transforming this sentence into a thought representation in which Samuel receives a violent wallop from Juanita or where Samuel is the one doing the kissing—the sentence itself simply doesn’t map onto these meanings. And it requires you to build a thought representation in which Juanita kisses Samuel. This event represents the core meaning of the sentence, derived entirely from the meanings of the words in the sentence and their combinations. (Note: with extra assumptions or background knowledge, you might also imagine other events that either led to Juanita kissing Samuel, or are the consequence of Juanita kissing Samuel. But any such additional events hinge on the thought representation of the core Juanita-kissing-Samuel event.)

Language researchers call this core meaning the proposition that corresponds to a sentence. You can think of propositions as the interface between sentences and their corresponding representations of reality. In print, it’s common to see propositions written down as logical formulas that follow specific notational conventions, so you might see the proposition that’s expressed by a sentence like Juanita kissed Samuel as:

kiss (j, s)

This is simply shorthand for a thought structure that looks something like this: In the world we’re talking about, there was a kissing event in which the person referred to as Juanita kissed the person referred to as Samuel.

Propositions represent the bare bones of a sentence, capturing those things about a situation that have to be true in the world in order for the sentence to be considered true. But this leaves a fair bit of detail unspecified. The sentence Juanita kissed Samuel is true regardless of whether Juanita gave Samuel a brief peck on the cheek or whether she kissed him on the mouth for an entire minute without drawing a breath; whether Juanita is Samuel’s mother or his lover; whether Samuel enjoyed it or was repulsed by the kiss; and so on. Presumably, some details along these lines were present in the situation that caused the speaker to utter this sentence in the first place, but none of this is contained within the sentence’s propositional content.

The propositional content is the end result of unpacking the words and syntactic structure of a sentence, so propositions are determined by the structural relationships of elements within the sentence (notice that you get a different proposition for the sentence Samuel kissed Juanita). However, in Chapter 10 you learned that speakers can choose from a variety of sentence structures to express the same meaning. So, several different linguistic forms can give rise to the same proposition: Samuel was kissed by Juanita; It was Juanita who kissed Samuel; It was Samuel who was kissed by Juanita, etc. All of these have the same core meaningful content. What this means is that all of these sentences are either true or false under the same set of circumstances. If you imagine any situation in the real world in which the sentence Juanita kissed Samuel is true, then all of the above paraphrases are true as well. Conversely, any situation in which Juanita kissed Samuel is false also renders the other paraphrases false.
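The idea that several surface forms share one proposition, and therefore one set of truth conditions, can be sketched in a few lines of Python. This is only a toy illustration, not a parser: the `SURFACE_FORMS` table is written by hand, and the names `Proposition`, `proposition_for`, and `is_true` are invented for this example.

```python
from typing import NamedTuple


class Proposition(NamedTuple):
    """A bare-bones proposition such as kiss(j, s)."""
    predicate: str
    agent: str
    patient: str


# Hand-coded mapping from sentences to propositions (an assumption made for
# illustration; a real system would derive this from syntactic structure).
SURFACE_FORMS = {
    "Juanita kissed Samuel": Proposition("kiss", "j", "s"),
    "Samuel was kissed by Juanita": Proposition("kiss", "j", "s"),
    "It was Juanita who kissed Samuel": Proposition("kiss", "j", "s"),
    "It was Samuel who was kissed by Juanita": Proposition("kiss", "j", "s"),
    "Samuel kissed Juanita": Proposition("kiss", "s", "j"),
}


def proposition_for(sentence: str) -> Proposition:
    """Look up the proposition expressed by a known sentence."""
    return SURFACE_FORMS[sentence]


def is_true(sentence: str, world: set) -> bool:
    """A sentence is true in a world if its proposition holds there."""
    return proposition_for(sentence) in world


# A world in which Juanita kissed Samuel, and nothing else happened.
world = {Proposition("kiss", "j", "s")}
paraphrases = [s for s in SURFACE_FORMS if s != "Samuel kissed Juanita"]

print(all(is_true(s, world) for s in paraphrases))  # True
print(is_true("Samuel kissed Juanita", world))      # False
```

Because truth is checked against the proposition rather than the wording, the four paraphrases are true or false together, while Samuel kissed Juanita can come apart from them.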

What information do mental models contain?

When linguists talk about the meanings of sentences, they often have in mind their propositions. But we do much more during language comprehension than just extract the abstract propositional content of a sentence. To some extent, we also mentally encode the specific event or situation that might have triggered the utterance of the sentence. That is, we tend to build a fairly detailed conceptual representation of the real-world situation that a sentence evokes. Such representations are often called mental models or situation models. They aren’t nearly as detailed as the real triggering events, but they’re a lot richer than just the sentence’s propositional content.

It seems self-evident that understanding language must involve some form of enriched mental encoding. Admittedly, if all we did with language was to recover the propositional content of sentences, language would still be useful—for all you know, one of your distant ancestors may have survived long enough to reproduce solely because of the very useful propositional content of a statement like, “There’s a saber-tooth tiger behind you!” But there are some things that propositional content alone can’t do. It’s not likely to move you to tears when embedded within a novel, or to create enough suspense to cause you to stay up all night turning the pages of a well-written thriller. It’s often been suggested that fiction has such a hold on us precisely because our mental representations of the events described in the text are almost as detailed as if we were actually participating in those events.

But figuring out exactly what information is contained in that mental model is no trivial matter for psycholinguists. Trying to probe for its contents could well change the type of information that people encode, making it hard to infer what they represent spontaneously when curled up with a book on the couch. (Think about it: How much detail do you think you represent in the normal course of reading sentences? As soon as you try to analyze your mental representations in response to a sentence, the very act of scrutiny probably changes them.) Even less trivial is explaining precisely how the information in the mental model got there and what cognitive mechanisms were involved.

There’s a surprising amount we still don’t know about the thought structures that language implants in us. But we do have some sense of what these mental models look like from an intriguing variety of experimental scenarios and results. The first step in investigating mental models is to establish whether thought representations for sentences do in fact look more like real-world situations than like abstract propositions. So, what do real-world situations look like?

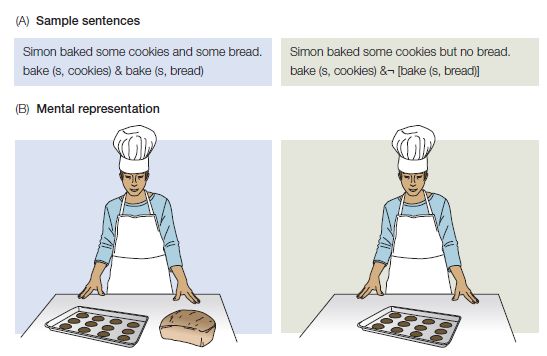

At the most basic level, when a sentence describes a situation, certain things and people are involved. But not all things that are mentioned are actually present in the situation that’s being described. For example, consider the following sentences:

Simon baked some cookies and some bread.

Simon baked some cookies but no bread.

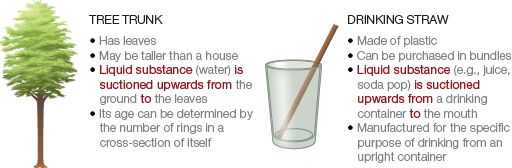

Both of these sentences specifically mention bread, and the propositional content for each sentence also includes bread. (The proposition for a sentence like Simon baked no bread can be paraphrased as something like: it’s false that there was an event of baking in which the person referred to as Simon baked bread; Figure 11.1A.) But things are a bit different if we look at the actual situations in the world that correspond to these sentences (Figure 11.1B). The first sentence evokes a situation in which there are cookies and bread; no bread exists in the situation evoked by the second sentence. The question is, do our mental representations of the sentences somehow reflect this difference between the situations, as shown in Figure 11.1B? Or do they, like the propositions in Figure 11.1A, include the concept of bread for both example sentences?

Figure 11.1 Propositions versus situations for sentences with and without negation. (A) Propositions and corresponding target sentences. Note that the symbol ¬ indicates the logical concept of negation, which is understood as stating that the proposition under negation is false. (B) Drawings showing the real-world situations that are consistent with the meanings of each of the target sentences.

To find out, Maryellen MacDonald and Marcel Just (1989) probed readers’ mental models using a memory task. Subjects read stimulus sentences like Simon baked some cookies but no bread, followed immediately by a probe word (bread or cookies). They had to respond to the probe word by pressing a “Yes” or “No” button to indicate whether that word had appeared somewhere in the stimulus sentence (see Researchers at Work 11.1). People were faster to respond “Yes” correctly when the probe word was not negated—that is, when it referred to an object that actually existed in the situation described by the sentence.

A reasonable way of interpreting these results is that even though the word bread appeared in all the critical sentences, the concept of bread was more strongly activated when the sentence required its existence in the real-world situation it described. This suggests that readers’ representations of sentences are more like encodings of real situations than like abstract propositions.
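The contrast between what a proposition mentions and what a situation contains can be made concrete with a small Python sketch. The clause format and the function names below are assumptions made for illustration; they are not the notation or the analysis used by MacDonald and Just.

```python
# Toy encoding of the two example sentences. Each clause is
# (predicate, agent, object, negated); this format is an assumption
# made for illustration only.
SENTENCES = {
    "Simon baked some cookies and some bread.": [
        ("bake", "simon", "cookies", False),
        ("bake", "simon", "bread", False),
    ],
    "Simon baked some cookies but no bread.": [
        ("bake", "simon", "cookies", False),
        ("bake", "simon", "bread", True),
    ],
}


def mentioned_in_proposition(clauses):
    """Objects that appear anywhere in the propositional content."""
    return {obj for _, _, obj, _ in clauses}


def present_in_situation(clauses):
    """Objects that exist in the described situation (non-negated clauses)."""
    return {obj for _, _, obj, negated in clauses if not negated}


for sentence, clauses in SENTENCES.items():
    print(sentence)
    print("  proposition mentions:", sorted(mentioned_in_proposition(clauses)))
    print("  situation contains:  ", sorted(present_in_situation(clauses)))
```

In this sketch, bread shows up in the propositional content of both sentences but in the situation for only one of them, which is the difference the probe task was designed to detect.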

Similar probe tasks have been used to study specific aspects of mental models. A number of studies show that these mental representations aren’t fixed, static recordings; rather, the degree to which entities are active in memory waxes and wanes, much as a camera might zoom in to capture something in more detail, then zoom out again, only to focus on something else. The shifts in focus can reveal interesting things about how people structure their mental representations as they interpret language.

For example, Art Glenberg and colleagues (1987) had their subjects read stories that contained a particular object of interest (here, a sweatshirt). In half the stories, the object was physically connected to the main character of the story, like this:

John was preparing for a marathon in August. After doing a few warm-up exercises, he put on his sweatshirt and went jogging. He jogged halfway along the lake without too much difficulty. Further along his route, however, John’s muscles began to ache.

The other half of the stories were very similar, with one slight but important change: in the second sentence, the critical object becomes separated from the protagonist, as shown in this alternate version of the story:

John was preparing for a marathon in August. After doing a few warm-up exercises, he took off his sweatshirt and went jogging. He jogged halfway along the lake without too much difficulty. Further along his route, however, John’s muscles began to ache.

The researchers varied whether the memory probe appeared immediately following the critical second sentence or after either one or two additional sentences. They found that immediately after the key sentence, subjects were quite fast to respond to the probe (sweatshirt) in both types of stories, suggesting that this object was highly active in memory. But for the second story, in which the sweatshirt was peeled away from the main character, the sweatshirt quickly faded in memory, and responses to the probe were considerably slower if just one sentence intervened between the mention of sweatshirt in the text and the memory probe. In contrast, for the first story, in which the critical object stayed attached to the main character, responses to the sweatshirt probe were faster even after an intervening sentence, suggesting that the sweatshirt concept stayed highly activated in memory. Despite the fact that there’s no further mention of the sweatshirt in either story, subjects must have constructed some mental representation of what the protagonist was wearing as he jogged around the lake, causing them to respond more quickly to the probe when the sweatshirt was attached to his body. But by the fourth sentence of the story (two sentences after the mention of the sweatshirt), the activation of the sweatshirt concept waned to the point that responses were equally slow for both story types.

The memory-probe technique is interesting because it reveals something about how attention to various entities shifts over time and in response to the nature of the situation and the relationships between entities. In the study by Glenberg and colleagues, it’s apparent that the spatial relationship between entities can affect such shifts of attention. Other work with memory probes has shown that temporal information is also coded in the mental model.

In one such study, Rolf Zwaan (1996) had people read stories that described a series of events. At some point in the story, a new event was introduced with one of the following phrases: A moment later/an hour later/a day later. For example, embedded within a story describing an aspiring novelist settling down to work, readers might encounter the following pair of adjacent sentences: Jamie turned on his PC and started typing. An hour later, the telephone rang. After reading the second of these sentences, subjects had to respond to a memory probe that tested for content that had appeared in the first sentence before the temporal phrase (e.g., typing). They took longer to respond “Yes” to the probe when the temporal phrase expressed a longer interval of time (an hour later/a day later) than when it expressed a very short interval, suggesting that material in a mental model becomes less accessible if it has to be retrieved from beyond the imagined barrier of a long time interval.

Zwaan’s study also revealed that people took longer to read sentences that introduced a long temporal shift (an hour or a day, rather than a moment). He took this to mean that when a temporal phrase introduces a long break between events, it becomes harder to integrate these events in a mental model. This was supported by evidence that the connection between events separated by a longer time interval was more tenuous in long-term memory. In a variation on the memory-probe task, Zwaan tested for memory of the stories’ content after all of the stories had been read, rather than while people were reading them. Specifically, he presented subjects with sentences describing events that either had or had not occurred in the little stories, and probed to see how quickly people would respond “Yes” to the test item the telephone rang immediately after responding to the test item Jamie started typing. The idea here is that if the first test item speeds up responses to the second, this must be because the two events are tightly linked in memory. Subjects’ responses to the second event were quite fast for those stories in which the two events were separated by just a very brief interval (a moment later); by comparison, responses to the second test item were significantly slower when a longer time interval intervened between the two events.

A large number of studies have confirmed that information about time tends to be a stable fixture of mental models as people read text. Other information is also encoded in mental models—for instance, the representation of a character’s goals, as is illustrated by these two contrasting stories:

(a) Betty wanted to give her mother a present. She went to the department store. She found out that everything was too expensive. Betty decided to knit a sweater.

(b) Betty wanted to give her mother a present. She went to the department store. She bought her mother a purse. Betty decided to knit a sweater.

In (a), Betty’s decision to knit a sweater is best interpreted as serving the goal of giving her mother a present. In (b), Betty has already satisfied this goal while at the department store, and the decision to knit a sweater seems unrelated. Tom Trabasso and Soyoung Suh (1993) found that content related to a character’s goals became less accessible if the goal had been satisfied. But if the goal remained unfulfilled, depriving the reader of a sense of closure, the same content stayed highly active in memory.

Trabasso and Suh’s is not the only study to show that a lack of closure leads to stronger memory for the unresolved elements; another example can be found in a 2009 paper by Richard Gerrig and colleagues. Such results may make you wonder whether cliffhanger endings in TV episodes actually help you remember their content better. Lab studies have looked at memory over fairly short intervals of time within a single lab session, but it wouldn’t be hard to design an experiment that investigates whether cliffhangers help people remember key events over the period of a week or so.

Various studies have explored dimensions such as time, space, cause–effect relations, or information about a character’s goals, thoughts, or characteristics, and all of these seem to play a part in building mental models that are triggered by linguistic content. There’s still a bit of work to do, though, to establish whether some of these dimensions are more important than others (and if so, why), and how they might interact with each other.

There’s also still a fair bit that we don’t know about the amount of perceptual detail that goes into mental models. For example, we usually take it for granted that when people read novels, they conjure up a lot of perceptual detail through their own imaginations (though there may be some significant individual differences; see Box 11.1). When a novel gets adapted into a movie, many people have strong opinions about whether the actors in the film version look “right,” suggesting that they have mentally encoded these details while reading. But how much detail, exactly, and of what kind?

One intriguing study used a neat twist on the common memory-probe task to test whether readers actually bring to mind sounds that are described in a text. Tad Brunyé and colleagues (2010) showed their participating readers sentences that contained auditory descriptions (for example, The engine clattered as the truck driver warmed up his rig). Subjects then had to classify certain sounds as either real sounds that could occur in the world, or computer-generated artificial sounds. This test included sounds that had been described in the previous sentences, as well as sounds that had not. People were faster to classify the sounds that had been described in the earlier sentences that they’d read, suggesting that they had to some extent mentally activated these sounds, rather than representing them as mere abstractions. As a result, these sounds felt familiar by the time subjects took the sound categorization test. This is consistent with a large body of brain-imaging work showing that reading perceptually rich sentences activates the areas of the brain that are responsible for perception in those domains (e.g., Speer et al., 2009).

At the same time, not all perceptual details of an event are represented by readers of texts—or even by their writers, as sometimes becomes apparent when a novel is adapted for the screen. In a New Yorker magazine piece about the screen adaptation of David Mitchell’s novel Cloud Atlas, Aleksandar Hemon (2012) describes some of the challenges that arose unexpectedly in creating real objects out of the novel’s material:

The scene in the control room, for example, features an “orison,” a kind of super-smart egg-shaped phone capable of producing 3-D projections, which Mitchell had dreamed up for the futuristic chapters. The Wachowskis [the film’s directors], however, had to avoid the cumbersome reality of having characters running around with egg-shaped objects in their pockets; it had never crossed Mitchell’s mind that that could be a problem. “Detail in the novel is dead wood. Excessive detail is your enemy,” Mitchell told me, squeezing the imaginary enemy between his thumb and index finger. “In film, if you want to show something, it has to be designed.” The Wachowskis’ solution: the orison is as flat as a wallet and acquires a third dimension only when spun. Mitchell, who had been kept in the loop throughout the process (and has a cameo in the film), was boyishly excited by the filmmakers’ “groping toward exactitude.”

Clearly, David Mitchell, the novel’s author, had never envisioned the “orison” in enough detail to imagine it bulging in his characters’ pockets, and it’s doubtful that his readers had either—nor is it likely that even the most committed readers designed it in their minds to the point of giving the device the aesthetically pleasing feature of shifting from two dimensions to three.

This is not surprising, because it probably takes quite a bit of time and effort to instantiate detailed visual representations (by one estimate, it can take up to 3 seconds for people to generate a detailed image of an object; see Marschark & Cornoldi, 1991). When it comes to language processing speeds, 3 seconds is a thoroughly glacial pace—the average word can be read as much as 10 times faster than that. Presumably, slower reading would allow for more visual detail to be elaborated by the reader (so, if you want to experience a novel more vividly, stop skimming!), but much is still unknown about which features are most likely to be spontaneously brought to mind during ordinary recreational reading.

What information “sticks” in memory?

Let’s step back for a moment and think about the implications of mental models (see Method 11.1). So far, I’ve been suggesting that linguistic representations are not the end result of comprehension processes, but simply the means to an end. If the ultimate goal of language comprehension is the mental model, we might expect that it would be cognitively privileged over abstract linguistic representations. And that seems to be the case, at least in terms of accessibility in long-term memory. In a now-famous study, John Bransford and his colleagues (1972) had people listen to a list of sentences and later take a memory test in which they had to state whether they’d heard each test sentence earlier, in exactly the same form. Bransford and colleagues made various subtle changes to the original sentences from the list, so that they appeared in slightly altered form on the memory test. For example, subjects might first hear:

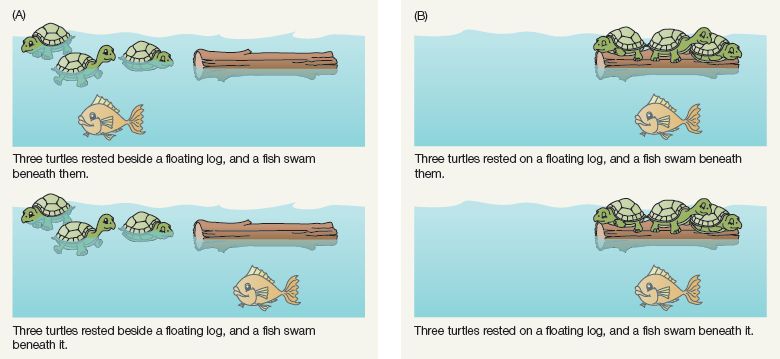

Three turtles rested beside a floating log, and a fish swam beneath them.

and later, might have to respond to the following:

Three turtles rested beside a floating log, and a fish swam beneath it.

Though the difference in wording is very slight, people had little trouble recognizing that the second sentence was different from the first. But they showed a lot more confusion if they first heard this:

Three turtles rested on a floating log, and a fish swam beneath them.

and later had to respond to this:

Three turtles rested on a floating log, and a fish swam beneath it.

In terms of surface linguistic structure, the difference between the second pair of sentences was no greater than the difference between the first pair. Yet people’s responses suggested the difference in the first pair was more memorable. The important fact seems to be that the first two sentences yield different mental models—in one sentence, the fish swims beneath the turtles, while in the second, the fish swims beneath the log and not the turtles. But the sentences in the second pair result in nearly identical mental models (see Figure 11.3). This suggests that what people remember is the mental model rather than the linguistic information used to build the model. The language itself is merely the delivery device for the really valuable information.

Figure 11.3 (A) Two sentences for which a small difference in wording leads to a large difference in their corresponding mental models. (B) Two sentences with a small wording difference but identical mental models. Study results indicate the difference between the two sentences in (A) is remembered much more accurately than the difference between the sentences in (B)—that is, people remember differences between mental models more readily than differences between sentences. (Adapted from Bransford et al., 1972, Cogn. Psych. 3, 193.)
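One way to see why the on sentences collapse into a single mental model while the beside sentences do not is to treat each sentence as a small set of spatial facts and fill in what follows from them. The Python sketch below does this with two hand-written inference rules; the rules, the fact format, and the function name `build_model` are assumptions made for illustration, not something taken from Bransford and colleagues.

```python
def build_model(facts):
    """Close a set of (relation, x, y) facts under two toy spatial rules.

    Rule 1: if x is on y and z is beneath y, then z is beneath x.
    Rule 2: if x is on y and z is beneath x, then z is beneath y.
    """
    model = set(facts)
    changed = True
    while changed:
        changed = False
        for rel, x, y in list(model):
            if rel != "on":
                continue
            for rel2, z, w in list(model):
                if rel2 != "beneath":
                    continue
                new = None
                if w == y:
                    new = ("beneath", z, x)
                elif w == x:
                    new = ("beneath", z, y)
                if new is not None and new not in model:
                    model.add(new)
                    changed = True
    return model


# "Three turtles rested ON a floating log, and a fish swam beneath them / it."
on_them = build_model({("on", "turtles", "log"), ("beneath", "fish", "turtles")})
on_it = build_model({("on", "turtles", "log"), ("beneath", "fish", "log")})

# "Three turtles rested BESIDE a floating log, and a fish swam beneath them / it."
beside_them = build_model({("beside", "turtles", "log"), ("beneath", "fish", "turtles")})
beside_it = build_model({("beside", "turtles", "log"), ("beneath", "fish", "log")})

print(on_them == on_it)          # True: same model, so the wording change is easy to miss
print(beside_them == beside_it)  # False: different models, so the change is noticed
```

Under these assumed rules, the two on sentences end up with the same set of facts, so only the wording distinguishes them, whereas the two beside sentences describe genuinely different arrangements.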

I should add a qualifying remark to the conclusions that we can draw from this famous study: the study reveals that we tend not to consciously remember the exact linguistic form of what we’ve recently heard. But that’s not to say that the details of linguistic form are entirely absent from long-term memory. In numerous chapters throughout this book, you’ve seen many examples where people do retain memory for details of linguistic form, and then make efficient use of this information. Here are just a few examples of phenomena that rely on preserving information about linguistic form in long-term memory: tracking the transitional probabilities of syllables in order to segment words; learning the most probable ways of completing a temporary syntactic ambiguity; and being primed by a previous bit of syntactic structure so that you’re more likely to later reuse that same structure.

What do you remember? In this activity, you’ll take a memory test to get a sense of the difference between what a text says and what you retain from it.

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-11-web-activity-3

The importance of background knowledge

As you’ve seen, the information conveyed by each sentence is integrated into a mental model that contains information from earlier sentences. But other information, such as background knowledge, also contributes to the mental model. If certain background information is missing, it can sometimes make a text extremely hard to understand. Consider the following passage from Bransford and Johnson (1972):

The procedure is actually quite simple. First, you arrange things into two different groups. Of course, one pile may be sufficient depending on how much there is to do. If you have to go somewhere else due to lack of facilities, that is the next step; otherwise you are pretty well set. It is important not to overdo things. That is, it is better to do fewer things at once than too many. In the short run this might not seem important, but complications can easily arise. A mistake can be expensive as well. At first the whole procedure will seem complicated. Soon, however, it will become just another facet of life. It is difficult to foresee an end to the necessity for this task in the immediate future, but then one can never tell. After the procedure is completed, one arranges the material into different groups again. Then they can be put into their appropriate places. Eventually they will be used once more, and the whole cycle will have to be repeated. However, that is part of life.

Raise your hand if you have a very clear image in your head of what’s being described in this passage. Not likely—the passage contains a heap of extraordinarily vague words and phrases: you arrange things (what things?); one pile (of what?) may be sufficient; lack of facilities (what kind of facilities?); It is important not to overdo things, and so on and so on. Chances are, your mental model of this whole “procedure” is not very rich.

Activating background knowledge

In this activity, you’ll conduct a small language comprehension experiment, using the clothes-washing passage above from Bransford and Johnson (1972). Using the documents on the website, download two versions of the story, one with a title, and one without a title. Find a handful of willing subjects, and give half of them the titled story, and the other half the untitled story. Ask them to recall as many main ideas from the passage as they can. Compare the number and detail of the ideas that are provided by your two groups of subjects.

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-11-web-activity-4

But let’s activate some background knowledge, simply by slapping a title onto this passage: say, Instructions for washing clothes. Now go back and reread the paragraph. Notice how your mental model suddenly sprouts many details that you had no way of supplying before. This little exercise demonstrates how skimpy the linguistic content can get and still be perfectly comprehensible, provided we have the means to enrich our mental models either through background knowledge or by connecting the dots within a text. It also has important pedagogical implications: the understanding of a text can depend heavily on specific knowledge a reader is presumed to have. Even when the ability to decode the linguistic content is there, comprehension can really suffer without an adequate knowledge base (see Language at Large 11.1). For example, if you’ve led a highly sheltered life when it comes to laundry and you really don’t know what’s involved in washing clothes, the title may not have helped you that much.

What does it mean to be literate?

Here’s an excerpt from a 2012 National Geographic story by Russ Rymer about vanishing languages:

In an increasingly globalized, connected, homogenized age, languages spoken in remote places are no longer protected by national borders or natural boundaries from the languages that dominate world communication and commerce. The reach of Mandarin and English and Russian and Hindi and Spanish and Arabic extends seemingly to every hamlet, where they compete with Tuvan and Yanomami and Altaic in a house-to-house battle. Parents and tribal villages often encourage their children to move away from the insular languages of their forbears and toward languages that will permit greater education and success.

Who can blame them? The arrival of television, with its glamorized global materialism, its luxury-consumption proselytizing, is even more irresistible. Prosperity, it seems, speaks English.

Someone who has truly understood this passage should be able to answer questions like these:

■ How did national borders and natural boundaries previously offer protection to remote languages? What has changed, and why do these protections no longer apply?

■ Describe what a “house-to-house battle” between Russian and Tuvan might look like.

■ Explain how the availability of television in remote areas promotes a language like English at the expense of more local languages.

But notice that the author himself provides very little in the way of information that would answer these questions—instead, he alludes to existing knowledge that he assumes the reader will have, and leverages this knowledge to reach certain conclusions. As a reader, you have to already know what it means to live in a globalized, connected age, and that geographic boundaries like rivers or mountains might have previously hindered different linguistic communities from interacting with one another, but no longer have the same impact. You have to know what television is, and what kinds of programs it might be broadcasting in the English language. Without such knowledge, you would find this excerpt as mystifying as the clothes-washing passage from Bransford and Johnson (1972) minus the clarifying title. This example demonstrates that texts that rely heavily on background knowledge are readily found “in the wild,” and not just in carefully constructed, deliberately vague experimental stimuli.

This raises an interesting quandary, though: When we test children for their reading comprehension in school, what exactly are we testing for? It’s been shown that children often perform badly on reading comprehension tests if they have very little background knowledge about the topic of the reading passage. It’s also been noted that American children—especially those from low-income families—show a dip in scores of reading ability between the third and fourth grades, and that over time the gap between wealthier and poorer students widens. Many researchers and educators (e.g., Hirsch, 2003; Johnston, 1984) believe that these two sets of findings are connected: In the early grades, reading tests tend to focus on basic decoding and word recognition abilities, whereas later on there’s an emphasis on overall comprehension of more complex texts. Since background knowledge can affect the richness of the mental model that is constructed from a text, one possibility is that the income gap in scores reflects not just a gap in reading ability, but a lag in general knowledge.

Reading tests, then, seem to be biased against students with a smaller knowledge base. One could argue that this sort of bias should be removed from reading tests, as it leads to an unfair picture of the reading abilities of some students. On the other hand, the reality is that actual texts, even those intended for very general audiences, do rely heavily on readers’ being able to fill in many blanks by drawing on a substantial body of knowledge. One could just as well argue, then, that since we do want all students to eventually be able to read publications like National Geographic, de-biasing tests to remove the effects of prior background knowledge would fail to reflect the students’ readiness to understand a broad variety of texts. And, in recognition of the important connection between prior knowledge and deep reading comprehension, many educators are arguing that teaching in the early grades should focus not just on general reading skills, but also on bulking up domain knowledge.

Still, it would be valuable to be able to distinguish whether a student is having trouble understanding his science textbook because he can’t fluently decode or recognize words, because he lacks a general ability to make important connections and inferences, or because he has an impoverished knowledge base—this might tell us whether he’d most benefit from reading lots of novels or from spending his time watching science programs on TV or going to the science museum. A good set of reading assessment tools should be able to make these important distinctions.

11.1 Questions to Contemplate

1. What are some examples of information that may not be included in the propositional representation of a sentence but is likely to be present in the mental model constructed for that sentence?

2. What methods can be used to determine what information is in the mental model, and what logical assumptions do these methods rely on?

3. What effects might age and experience have on the representation of mental models?

11.2 Pronoun Problems

One of the key points to take away from the previous section is that hearers and readers are very good at mentally filling in an abundance of meaning even when the language itself isn’t precise. This means that communication doesn’t depend entirely on information that’s made explicit in the linguistic code, a fact that has far-reaching implications for how human languages are structured.

If readers are able to flesh out detailed meanings when confronted with imprecise language, this makes a speaker’s job much easier. In many contexts, speakers can get away with using vague, common, and easy-to-produce words like thing or stuff rather than digging deeper into the lexicon for a less accessible word, and they can avoid spelling out more detail than is necessary—in short, a great deal of information can be left unstated. Nothing demonstrates this as neatly as the existence of pronouns like she or they. Much like the words thing or stuff, pronouns contain very little semantic information. This becomes evident if you meet one in an out-of-the-blue sentence like She promised to come for lunch. Who’s she? All we know from the pronoun itself is that it refers to someone female. Yet when pronouns are used in text or conversation, we usually have no trouble figuring out the specific identity of the person in question.

Titles in literary texts

Many literary texts leave a lot to be filled in by the reader’s imagination. One goal, though, is to make sure that the reader has enough guidance to generate some of the important information that the text leaves implicit. Often, a useful title helps set the context. In this activity, you’ll see some literary examples that achieve this, and you’ll be asked to find your own examples.

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-11-web-activity-5

As far as I know, all languages contain pronouns (though, as you’ll see in a moment, there can be some variety across languages in the specific information that pronouns carry inside themselves). It’s easy to miss just how stripped bare of meaning pronouns can be if you only consider your own familiar language. Their semantic starkness is often more visible from the outside. A revealing example can be found in a discussion of pronouns by the journalist Christie Blatchford (2011), who covered the murder trial of an Afghan-born Canadian, Mohammed Shafia. Together with his wife, Tooba Yahya, and their son, Hamed, Shafia was charged with murdering his three daughters and his first wife. Writing in the National Post, the journalist noted that there were some linguistic difficulties that arose in the testimony of a relative of the slain woman (Ms. Amir) because the witness spoke in Dari, a dialectal variant of the Farsi language:

[The witness] also said in the last months of her life, Ms. Amir was unhappy, often calling to complain about her life, and that she told her she’d overheard a conversation among the parents and Hamed, during which Mr. Shafia threatened to kill Zainab, who in April of 2009 had run away to a women’s shelter, and “the other one,” which Ms. Amir took to mean her.

But because the Dari/Farsi languages have no separate male and female pronouns—essentially, everyone is referred to as male, it apparently being the only worthy sex—she can’t be sure if it was Ms. Yahya who asked about “the other one” or Hamed.

| | Singular | Plural |
|---|---|---|
| First person | | |
| Male | I/me | we/us |
| Female | I/me | we/us |
| Neuter | I/me | we/us |
| Second person | | |
| Male | you/you | you/you |
| Female | you/you | you/you |
| Neuter | you/you | you/you |
| Third person | | |
| Male | he/him | they/them |
| Female | she/her | they/them |
| Neuter | it/it | they/them |

Blatchford went on to remark that ongoing interpretation difficulties arose at the trial in part because Dari and Farsi are “imprecise languages.” But she’s wrong to attribute imprecision (not to mention sexism) to an entire language based on the potential ambiguity of its pronouns. Pronouns are by their very nature imprecise, as Ms. Blatchford might have concluded had she taken a moment to survey the pronominal system of English. English, as it turns out, doesn’t bother to provide information about the gender of any of its pronouns except the third-person singular; it entirely forgoes marking number on the second person; and it blurs the subject/object distinction for several pronouns (see Table 11.1). In short, using the English pronoun they to refer to a group of women (or to a group of men) leaves an English speaker in exactly the same boat as a speaker of Dari—nothing about the linguistic form of the pronoun gives the hearer a clue about gender. Box 11.2 describes some of the different pronominal systems found in languages other than English.
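To make the point concrete, here is a minimal Python sketch (not part of the original discussion) that encodes the English forms from Table 11.1 and asks which combinations of person, number, and gender each form is compatible with. The names `PRONOUNS`, `compatible_features`, and `genders_for` are made up for illustration; the only data used are the subject/object forms listed in the table.

```python
from collections import defaultdict

# English pronoun forms (subject/object) as listed in Table 11.1,
# keyed by (person, number, gender). The key layout is an assumption
# made for this sketch, not a standard linguistic encoding.
PRONOUNS = {}
for gender in ("male", "female", "neuter"):
    PRONOUNS[(1, "singular", gender)] = "I/me"
    PRONOUNS[(1, "plural", gender)] = "we/us"
    PRONOUNS[(2, "singular", gender)] = "you/you"
    PRONOUNS[(2, "plural", gender)] = "you/you"
    PRONOUNS[(3, "plural", gender)] = "they/them"
PRONOUNS[(3, "singular", "male")] = "he/him"
PRONOUNS[(3, "singular", "female")] = "she/her"
PRONOUNS[(3, "singular", "neuter")] = "it/it"


def compatible_features(table):
    """Map each pronoun form to the set of feature bundles it can express."""
    by_form = defaultdict(set)
    for features, form in table.items():
        by_form[form].add(features)
    return dict(by_form)


def genders_for(form, table=PRONOUNS):
    """Return the set of genders that a given pronoun form is compatible with."""
    return {gender for (_, _, gender) in compatible_features(table)[form]}


if __name__ == "__main__":
    # List how many feature combinations each form covers.
    for form, bundles in sorted(compatible_features(PRONOUNS).items()):
        print(form, "fits", len(bundles), "feature combination(s)")
```

Calling `genders_for("they/them")` returns all three genders, whereas `genders_for("she/her")` returns only the female one: a small illustration of how little most English pronoun forms reveal about their referents.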

Even when gender is marked on pronouns, the potential for ambiguity is rife, and yet, highly skilled users of language persist in wielding them. Following are a few passages pulled from acclaimed literary works. As you’ll see, pronouns are used despite the fact that there’s more than one linguistic match in the discourse that precedes them. In these examples, the same color font is used for pronouns (underlined) and all their linguistically compatible matches (that is, all the nouns that agree in number and gender with the pronouns):

In the boxes, the men heard the water rise in the trench and looked out for cottonmouths. They squatted in muddy water, slept above it, peed in it.

from Beloved by Toni Morrison (1987)

Now the drum took on a steady arterial pulse and the sword was returned to the man. He held it high above his head and glowered at the crowd. Someone from the crowd brought him the biscuit tin. He peered inside and shook his great head.

from In Between the Sheets by Ian McEwan (1978)

In 1880 Benjamin Button was twenty years old, and he signalized his birthday by going to work for his father in Roger Button & Co., Wholesale Hardware. It was in that same year that he began “going out socially”—that is, his father insisted on taking him to several fashionable dances. Roger Button was now fifty, and he and his son were more and more companionable—in fact, since Benjamin had ceased to dye his hair (which was still grayish) they appeared about the same age and could have passed for brothers.

from The Curious Case of Benjamin Button by F. Scott Fitzgerald (1922)

Every now and then, pronouns do result in confusion, as evident in the Shafia trial testimony. Most of the time, however, they’re interpreted without fuss exactly as the speaker or writer intended. How is this done?

Pronoun systems across languages

Pronouns in many languages tend to have certain grammatical clues that help hearers link them to their antecedents. Usually, number and gender are marked, though not always in the same ways; for example, Standard Arabic marks dual number (specifically two referents), not just singular and plural (Table A), while German has neuter gender as well as masculine and feminine (Table B). Some languages, like Persian (Farsi) and Finnish (Table C), fail to mark gender at all. The tables below illustrate subject pronouns only. You’ll notice that, regardless of how many dimensions a language might encode, certain pronoun forms are often recycled across dimensions, so they become inherently ambiguous. You’ll notice also that more linguistic information tends to get preserved in the third-person pronouns than in the first- and second-person pronouns, presumably because the context usually makes it clear who we’re referring to when we use pronouns such as I or you.

A complicating factor is that, in many languages, pronouns are often dropped entirely and are used only for special emphasis or stylistic purposes. Usually (but not always), this is allowed in languages where verbs are conjugated in such a way that they preserve at least some of the linguistic information that would appear on the missing pronoun. For example, in Spanish (Table D), verb marking preserves information about person and number when pronouns are dropped, but it doesn’t preserve gender. Thus we get:

Yo veo una montaña. → Veo una montaña. (“I see a mountain.”)

Él ve una montaña. → Ve una montaña. (“He sees a mountain.”)

Ella ve una montaña. → Ve una montaña. (“She sees a mountain.”)

Ellos ven una montaña. → Ven una montaña. (“They see a mountain.”)

Pronoun-dropping is yet another way in which languages show a subtle interplay between the inherent ambiguity of linguistic form and the information that can be recovered from context.

TABLE A Standard Arabic

| Number | Person | Masculine | Feminine |
|---|---|---|---|
| Singular | 1st | anaa | anaa |
| | 2nd | anta | anti |
| | 3rd | huwa | hiya |
| Dual (two persons) | 1st | naHnu | naHnu |
| | 2nd | antumaa | antumaa |
| | 3rd | humaa | humaa |
| Plural | 1st | naHnu | naHnu |
| | 2nd | antum | antunna |
| | 3rd | hum | hunna |

TABLE B German

| Number | Person | Masculine | Feminine | Neuter |
|---|---|---|---|---|
| Singular | 1st | ich | ich | ich |
| | 2nd (informal) | du | du | du |
| | 2nd (formal) | Sie | Sie | Sie |
| | 3rd | er | sie | es |
| Plural | 1st | wir | wir | wir |
| | 2nd (informal) | ihr | ihr | ihr |
| | 2nd (formal) | Sie | Sie | Sie |
| | 3rd | sie | sie | sie |

TABLE C Finnish

| Number | Person | |
|---|---|---|
| Singular | 1st | minä |
| | 2nd (informal) | sinä |
| | 2nd (formal) | Te |
| | 3rd | hän |
| Plural | 1st | me |
| | 2nd (informal) | te |
| | 2nd (formal) | Te |
| | 3rd | he |

TABLE D Spanish

| Number | Person | Masculine | Feminine |
|---|---|---|---|
| Singular | 1st | yo | yo |
| | 2nd (informal) | tú | tú |
| | 2nd (formal) | usted | usted |
| | 3rd | él | ella |
| Plural | 1st | nosotros | nosotros |
| | 2nd (informal) | vosotros | vosotros |
| | 2nd (formal) | ustedes | ustedes |
| | 3rd | ellos | ellas |

How do we resolve the meanings of pronouns?

In many cases, we can use real-world knowledge to line up pronouns with their correct referential matches, or antecedents. In the Toni Morrison quote, while both the nouns boxes and cottonmouths match the linguistic features on the pronoun (they’re both plural), practical knowledge about boxes and cottonmouths (venomous snakes) allows us to rule them out as antecedents for the pronoun in the phrase they squatted; only the men remains as a plausible antecedent for they.

antecedent A pronoun’s referent or referential match; that is, the expression (usually a proper name or a descriptive noun or noun phrase) that refers to the same person or entity as the pronoun.

But when real-world plausibility is not enough, we may get some help from information we’ve already entered into the mental model. In the Ian McEwan passage, by the time we get the pronoun it in the second sentence (He held it high above his head), we’ve seen three possible linguistic matches for the pronoun in the first sentence: the drum, a steady arterial pulse, and the sword. The pulse can be ruled out because of basic knowledge about how the world works—you can’t hold a pulse—but something more is needed to decide between the drum and the sword. Here, the mental model derived from the first sentence is critical: only the sword is in the hands of the man (who is the sole possible antecedent for he in He held it high above his head), and therefore is the most likely candidate. So, just as mental models are useful for filling in all sorts of implicit material, they can also help fix the reference of ambiguous pronouns.

But even more than a model is required. In the above quote by F. Scott Fitzgerald, the first sentence introduced Benjamin Button and his father Roger. How should we interpret the pronoun in the second sentence: It was in that same year that he began “going out socially”? Either Benjamin or his father is a viable antecedent, given the situation model at that point, and in fact, the text goes on to elaborate that both of these characters go out together. Yet most readers will automatically assume that he refers to Benjamin, and not his father. Why is that? (Go ahead and try to answer; the question’s not purely rhetorical.)

If you did attempt an answer, you might have said something to the effect that Benjamin is the person that the passage is about, or the person who’s being focused on in the text. If so, you were exactly on the right track. In Section 11.1, I described some results by Art Glenberg and colleagues (1987) showing that when entities are entered into a mental model, they wax and wane in terms of their accessibility, depending on what’s going on in the text—typically, this accessibility was measured by memory probes. Let’s revisit the following two stories:

John was preparing for a marathon in August. After doing a few warm-up exercises, he put on his sweatshirt and went jogging. He jogged halfway along the lake without too much difficulty. Further along his route, however, John’s muscles began to ache.

John was preparing for a marathon in August. After doing a few warm-up exercises, he took off his sweatshirt and went jogging. He jogged halfway along the lake without too much difficulty. Further along his route, however, John’s muscles began to ache.

We saw from the Glenberg study that the sweatshirt entity was more accessible in a situation like the first one, where it was spatially connected with the main character, than in the second case, when it was cast aside at some point in the story. It turns out that the degree of accessibility, as measured by a memory probe, also predicted how easy it was for subjects to read sentences containing pronouns. Consider this story:

Warren spent the afternoon shopping at the store. He set down his bag and went to look at some scarves. He had been shopping all day. He thought it was getting too heavy to carry.

Did you trip over the pronoun it in the last sentence, hunting around for what was being referred to? If you did, try this version:

Warren spent the afternoon shopping at the store. He picked up his bag and went to look at some scarves. He had been shopping all day. He thought it was getting too heavy to carry.

If the second version felt smoother, then your intuitions align with the results from this study; participants spent longer reading the last sentence in the first passage than in the second passage. Notice that the sentence itself is identical in both cases, so the difficulty must have come from trying to integrate this sentence with the preceding discourse, presumably because people had some trouble tracking down the antecedent of the pronoun. Based on the results from the memory task, a likely explanation for the difficulty is that the antecedent had already faded somewhat in memory.

Pronouns, then, seem to signal a referential connection to some entity that is highly salient and very easily located in memory; the fact that the entity is so readily accessible is probably exactly what allows pronouns to be as sparse as they are when it comes to their own semantic content. You might view this as one example of a much broader language phenomenon: that the easier it is for hearers to recover or infer certain information, the less the speaker relies on linguistic content to communicate that information. This generalization fits well with the idea that the amount of information that appears in the linguistic code reflects a balance between the need for clear communication and ease of production.

What makes some discourse referents more salient than others?

There are quite a few factors that seem to affect the salience or accessibility of possible antecedents. As noted earlier, the relationship of various entities within the mental model can play a role; the spotlight tends to be on the protagonist of a story and other entities associated with or even just spatially close to that character. But a number of other generalizations can be made. Often, the syntactic choices that a speaker has made reflect the accessibility of some referents over others. For example, in Section 10.3, I pointed out that when a concept is highly salient to speakers, they tend to mention this concept first, often slotting it into the subject position of a sentence. This creates a sense that whatever is in the subject position is what the sentence “is about” or is the focus of attention, and has an effect on how ambiguous pronouns get interpreted. Consider these examples:

Bradley beat Donald at tennis after a grueling match. He …

Donald was beaten by Bradley after a grueling match. He …

There’s a general preference for the subject over the object as the antecedent of a pronoun (Bradley in the first sentence, Donald in the second).

Let’s look more closely at the excerpt from F. Scott Fitzgerald quoted earlier. In that passage, the cues guiding the reader through the various interpretations of the third-person pronoun come largely from the syntax. In the first sentence, Benjamin Button is established as the subject and, with two pronouns referring back to him, is the more heavily “lit” character; his father is mentioned more peripherally as an indirect object:

In 1880 Benjamin Button was twenty years old, and he signalized his birthday by going to work for his father in Roger Button & Co., Wholesale Hardware.

Hence, it’s easy to see that the pronoun in the next sentence refers back to Benjamin:

It was in that same year that he began “going out socially”—that is, his father insisted on taking him to several fashionable dances.

But notice what happens in the next sentence:

Roger Button was now fifty, and he and his son were more and more companionable—in fact, since Benjamin had ceased to dye his hair (which was still grayish) they appeared about the same age and could have passed for brothers.

Here, focus has shifted to the father, Roger Button, who now appears in subject position—and as a result, the next appearance of the pronoun he now refers back to Roger, not Benjamin. In fact, the next time that the author refers to Benjamin in the text, he uses his name, not a pronoun.

This last fact turns out to be quite revealing, and suggests that the Benjamin character has been demoted from his original position of prominence in the mental model. Throughout the narrative, the spotlight has moved from one character to the other, as made apparent by the occupant of the subject position of the various sentences and by the preferred interpretation of the pronouns.

The repeated-name penalty

Psycholinguists have found that if an entity is highly salient, readers seem to expect that a subsequent reference to it will involve a pronoun rather than a name, and actually find it harder when the text uses a repeated name instead, even though this name should be perfectly unambiguous (e.g., Gordon et al., 1993). This set of expectations can be inferred from reading times. For example:

Bruno was the bully of the neighborhood. He chased Tommy all the way home from school one day.

Bruno was the bully of the neighborhood. Bruno chased Tommy all the way home from school one day.

Readers seem to find the repeated name in the second example somewhat jarring, as shown by longer reading times for this sentence than the corresponding one in the first passage. This has been called the repeated-name penalty. But if the antecedent is somewhat less salient, no such penalty arises. Consider this sentence:

repeated-name penalty The finding that under some circumstances, it takes longer to read a sentence in which a highly salient referent is referred to by a full noun phrase (NP) rather than by a pronoun.

Susan gave Fred a pet hamster.

Presumably, Susan is more accessible as a referent than Fred. Hence, a repeated-name penalty should be found if Susan is later referred to by name rather than tagged by a pronoun; but no such penalty should be found if Fred is referred to by name in a later sentence.

This is precisely what Gordon and his colleagues found. That is, sequence (a) below took longer to read than sequence (b):

(a) Susan gave Fred a pet hamster. In his opinion, Susan shouldn’t have done that.

(b) Susan gave Fred a pet hamster. In his opinion, she shouldn’t have done that.

But there was no difference between sequences (c) and (d):

(c) Susan gave Fred a pet hamster. In Fred’s opinion, she shouldn’t have done that.

(d) Susan gave Fred a pet hamster. In his opinion, she shouldn’t have done that.

While expressing a referent as a subject has the effect of boosting its salience, certain special syntactic structures—often called focus constructions—are a bit like putting a referent up on a pedestal. Observe:

focus constructions Syntactic structures that have the effect of putting special emphasis or focus on certain elements within the sentence.

It was the bird that ate the fruit. It was already half-rotten.

This sounds odd, because the pronoun in the second sentence can only plausibly refer to the fruit. However, because the bird has been elevated to such a salient status (using a construction called an it-cleft sentence), the inclination to interpret it as referring to the bird is strong, leading to a plausibility clash later in the sentence. There’s no such clash, though, when the first sentence puts focus on the fruit instead, as in the following (using a construction called a wh-cleft sentence):

it-cleft sentence A type of focus construction in which a single clause has been split into two, typically with the form “It is/was X that/who Y.” The element corresponding to X in this frame is focused. For example, in the sentence It was Sam who left Fred, the focus is on Sam.

wh-cleft sentence A type of focus construction in which one clause has been divided into two, with the first clause introduced by a wh- element, as in the sentences What Ravi sold was his old car or Where Joan went was to Finland. In this case, the focused element appears in the second clause (his old car, to Finland).

What the bird ate was the fruit. It was already half-rotten.

Amit Almor (1999) found that, not surprisingly, when a repeated name was used to refer back to the heavily focused antecedent in constructions like these, readers showed the repeated-name penalty. That is, readers took longer to read the repeated name (the bird or the fruit) in the second sentence of passages like these (antecedents that are in focus are in boldface):

(a) It was the bird that ate the fruit. The bird seemed very satisfied.

(b) What the bird ate was the fruit. The fruit was already half-rotten.

rather than these:

(c) It was the bird that ate the fruit. The fruit was already half-rotten.

(d) What the bird ate was the fruit. The bird seemed very satisfied.

Repeated names seem to do more than just cause momentary speed bumps in reading—they can actually interfere with the process of forming an accurate long-term memory representation of the text, as found by a subsequent study by Almor and Eimas (2008). When subjects were later asked to recall critical content from the sentences they’d read (for example, “Who ate the fruit?” or “What did the bird eat?”), they were less accurate if they’d read passages (a) and (b) than if they’d read passages (c) and (d).

We’ve seen that there are several factors that heighten the accessibility of a referent, making it a magnet for later pronominal reference: the degree to which entities are spatially linked to central characters in a text, and syntactic structure, including subject status and the use of focus constructions. In addition, the salience of a referent can be boosted by a number of other factors such as being the first entity to be mentioned in a sentence (either as the subject or not), having been recently mentioned, or having been mentioned repeatedly. Variables like these are famous for affecting the ease with which just about any stimuli can be retrieved from memory (for instance, if you’re trying to remember the contents of your grocery list, it’s easiest to remember items that appeared at the top of the list, or last on the list, or those you happened to write down more than once). It’s interesting to see that the same variables also have an impact on the process of resolving pronouns.

Where’s this going?

Although accessibility is an important factor in pronoun interpretation, it can be overridden. Consider the following examples:

John spotted Bill. He …

John passed the comic to Bill. He …

Chances are, you understood the pronoun in the first sentence to refer to John. And in the second sentence? If you were like the participants in a study by Rosemary Stevenson and her colleagues (1994), you took the pronoun to refer to Bill, even though the name Bill is in a less prominent position in the sentence than John. This fact may have less to do with what’s prominent in memory and more to do with where readers think the discourse is going. In the same study, some participants saw only the first sentence and were asked to provide a plausible second sentence to follow it; in these cases, no pronoun at all was supplied. When building on sentences like John passed the comic to Bill, most people provided a continuation that focused on the goal or endpoint of the event—that is, they more often referred to Bill than to John. Similar results were found by Jennifer Arnold (2001) in an analysis of speech from Canadian parliamentary proceedings: when speakers described an event that had a goal or an end point, they were subsequently more likely to refer back to the goal or end point of the event.

Where the discourse goes depends on the nature of the event, as well as the relations between events that are explicitly coded in the language. Try continuing these sentences:

Sally apologized to Miranda because she …

Sally admired Miranda because she …

The word because throws into relief a causal connection between the first event and whichever event is coming next; but the specific events of admiring or apologizing place different emphases when it comes to their typical causes. Normally, you apologize to someone because of something you did, but you admire someone because of something about the other person. Hence, in the first sentence, the focus is on the subject (Sally), whereas in the second it’s on the object (Miranda). A number of researchers have noted that different verbs seem to evoke different expectations of implicit causality; this was first noticed by Garvey and Caramazza (1974).

implicit causality Expectations about the probable cause/effect structure of events denoted by particular verbs.

Some researchers (e.g., Kehler & Rohde, 2013) have suggested that these facts about pronouns reflect something deeper about how people interpret the relationships between sentences in a discourse. They point to examples like the following, which don’t line up neatly with an accessibility explanation:

(a) Mitt narrowly defeated Rick, and the press promptly followed him to the next primary state. (him = Mitt)

(b) Mitt narrowly defeated Rick, and Newt absolutely trounced him. (him = Rick)

(c) Mitt narrowly defeated Rick, and he quickly demanded a recount. (he = Rick)

While the first clause is identical in all of these examples, the relationship between the two clauses is not. In sentence (a), the second clause describes an event that happened after Rick’s defeat by Mitt; in sentence (b), the second clause describes an event that is highly similar to the event in the first clause (and which may have happened before, after, or at the same time as the first); in example (c), the second clause describes a consequence of the event described in the first.

Finding antecedents for ambiguous pronouns In this exercise, you’ll see a number of examples in which a pronoun is linguistically compatible with more than one antecedent. These examples will give you a sense of whether the potential ambiguity of the pronoun translates into a real interpretive ambiguity. What are some of the factors that make it easier to feel confident about the reference of ambiguous pronouns?

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-11-web-activity-6

11.2 Questions to Contemplate

1. What advantages are there for speakers to using pronouns, and how does this lead to certain predictable patterns of pronoun use?

2. Why do hearers (or readers) sometimes have less trouble interpreting pronouns than full, unambiguous noun phrases?

3. What do pronouns reveal about our understanding of the connections between sentences?

To fix the reference of these pronouns, readers need to be able to discern the relationship between the clauses. But unlike the connection between accessible referents and pronouns, this discernment is not specific to pronoun interpretation—it’s something we need to do all the time in order to understand a string of sentences as a connected, coherent discourse, an issue we’ll take up in Section 11.4. In some cases, linguistic cues—including the meanings of verbs, or connectives like because, so, although, and so on—may allow readers or hearers to anticipate a specific relation, and to generate strong expectations about which entities are likely to be mentioned. In such cases, the use of coherence relations to resolve ambiguous pronoun reference is a happy side effect.

11.3 Pronouns in Real Time

The preceding section helps to explain why pronouns are usually perfectly interpretable, despite their blatant grammatical ambiguity. It also adds to the pile of evidence from earlier chapters showing that ambiguity is an inherent feature of language. We’ve seen that lexical and syntactic ambiguities are almost always resolved without too much trauma. But they’re not cost-free, either. They often exert a processing cost that can be detected through experimental techniques, whether or not that cost is consciously registered by a hearer or reader. And there’s growing evidence that some language users deal with ambiguities less smoothly than others.

In this section, we’ll explore how hearers or readers cope with pronouns under time pressure, coordinating different types of information. And we’ll take a look at what it takes to interpret pronouns smoothly by considering what children need to learn in order to accomplish the task in an adult-like way.

Coordinating multiple sources of information

At the very least, pronoun resolution involves four general sources of information: (1) the grammatical marking of number and gender, among other factors, on the pronouns themselves, where this is available; (2) the prominence of antecedents in a mental model; (3) real-world knowledge that might constrain the matching process; and (4) coherence relations that allow us to understand the connections between sentences. How are these sources of information coordinated by hearers? One possibility is that grammatical marking acts as a filter on prospective antecedents so that only those that are linguistically compatible with the pronoun are ever considered as candidates; information about discourse prominence or real-world knowledge might then kick in to help the reader/listener choose among the viable candidates. On the other hand, the most accessible antecedent may automatically rise to the top and become linked to any pronoun that later turns up; grammatical marking and other information sources might then apply retroactively to verify that the match was an appropriate one.
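To make the contrast between these two possibilities concrete, here is a minimal sketch in Python. It is a toy illustration only, not a model proposed by any of the researchers discussed here: the `Entity` class, the numeric `prominence` scores, and the function names are all hypothetical, and real-world knowledge and coherence relations are left out entirely. What the sketch shows is that the two strategies can agree on the final antecedent while differing in which entities are ever considered along the way.

```python
from dataclasses import dataclass

@dataclass
class Entity:
    name: str
    gender: str        # "m" or "f"
    prominence: float  # hypothetical salience score; higher means more accessible

# Toy lexicon: the gender each pronoun is grammatically marked for.
PRONOUN_GENDER = {"he": "m", "she": "f"}

def filter_first(pronoun, entities):
    """Grammatical marking filters the candidates; prominence then picks among them.

    Returns (names considered, name chosen)."""
    gender = PRONOUN_GENDER[pronoun]
    candidates = [e for e in entities if e.gender == gender]
    if not candidates:
        return [], None
    chosen = max(candidates, key=lambda e: e.prominence)
    return [e.name for e in candidates], chosen.name

def prominence_first(pronoun, entities):
    """The most accessible entity is linked first; gender is checked afterward.

    Returns (names considered, name chosen)."""
    gender = PRONOUN_GENDER[pronoun]
    considered = []
    for e in sorted(entities, key=lambda e: e.prominence, reverse=True):
        considered.append(e.name)
        if e.gender == gender:  # retroactive check of the grammatical match
            return considered, e.name
    return considered, None

if __name__ == "__main__":
    biggs = Entity("Mr. Biggs", "m", 0.8)  # first-mentioned subject (assumed score)
    tom = Entity("Tom", "m", 0.3)
    print(filter_first("he", [biggs, tom]))
    print(prominence_first("he", [biggs, tom]))
```

With two male characters, the filter-first strategy briefly entertains both of them before prominence settles the matter, whereas the prominence-first strategy never looks past the most accessible character. These different "considered" sets are exactly the kind of difference that a time-sensitive experimental method might be able to detect.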

A number of serviceable techniques can be used to shed light on the time course of pronoun resolution, but probably the most direct and temporally sensitive method is to track people’s eye movements to a scene as they hear and interpret the pronoun. As you’ve seen in Chapters 8 and 9, when people establish a referential link between a word and an image, they tend to look at the object in the visual display that’s linked with that word. The same is true in the case of pronouns. Researchers can use eye movement data to figure out how long it takes hearers to identify the correct antecedent for the pronoun, as well as whether any other entities were considered as possible referents.

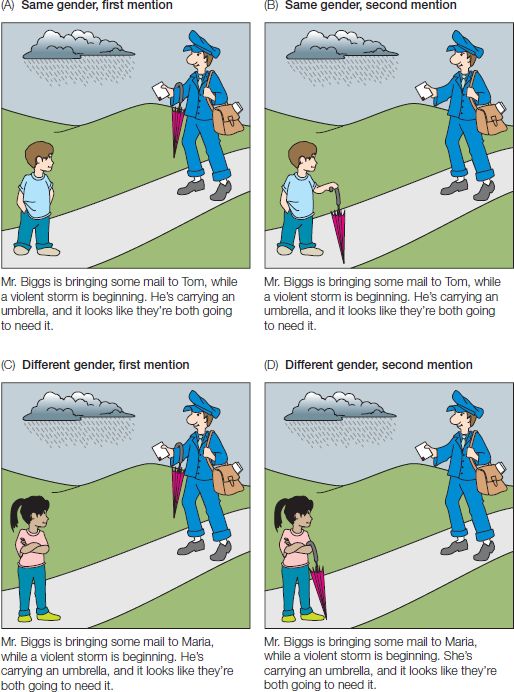

In a 2000 study, Jennifer Arnold and her colleagues had their subjects listen to miniature stories, and tracked their subjects’ eye movements to pictures that depicted the various characters and objects involved in these narratives. The story introduced two characters of either the same gender or different genders. Each story contained a key sentence with a pronoun. Depending on which characters had been introduced, the pronoun was grammatically compatible either with both of the characters, or with just one of them:

Mr. Biggs is bringing some mail to Tom, while a violent storm is beginning. He’s carrying an umbrella, and it looks like they’re both going to need it.

Mr. Biggs is bringing some mail to Maria, while a violent storm is beginning. She’s carrying an umbrella, and it looks like they’re both going to need it.

These two stories and their accompanying illustrations are shown in Figure 11.4. For participants looking at the depictions in Figures 11.4A and 11.4C, it would be obvious that Mr. Biggs is the correct referent for the pronoun he. He also happens to be the character that is mentioned first and occupies the subject position in the first sentence.

Now, if grammatical marking serves as a filter on antecedents so that only matching antecedents are considered, we’d expect that when there’s only one male character, people would be very quick to locate the antecedent of the pronoun and that they wouldn’t consider Maria as a possible referent for the pronoun he. That is, their eye movements should quickly settle on Mr. Biggs and not be lured by the Maria character. But in the stories with two male characters, they should briefly consider both Mr. Biggs and Tom as possibilities, and this should be reflected in their eye movements. The discourse prominence of Mr. Biggs might kick in slightly later to help disambiguate between the two possible referents.

On the other hand, if pronoun resolution is driven mainly by the accessibility of the antecedent, then grammatical marking has a more secondary role to play when it comes to processing efficiency. For the stories above, only Mr. Biggs should be considered as the possible antecedent, regardless of whether the pronoun is grammatically ambiguous or not. So eye movements should favor Mr. Biggs over either Tom or Maria as soon as the pronoun he is pronounced. But now let’s suppose that the picture shows the less prominent discourse entity (that is, either Tom or Maria) as the umbrella holder, and hence as the correct referent of the pronoun (he or she) in the second sentence. Now finding the referent should be slower and more fraught with error. This should be true regardless of whether the pronoun is grammatically ambiguous (Figure 11.4B) or unambiguous (Figure 11.4D).

Figure 11.4 Visual displays and critical stimuli from the eye-tracking study (Experiment 2) by Arnold et al. The character carrying the umbrella was always the referent of the critical pronoun. (Note: the pictures shown here are modified from the well-known cartoon characters that were used in the original study.) (Adapted from Arnold et al., 2000, Cognition 76, B13.)

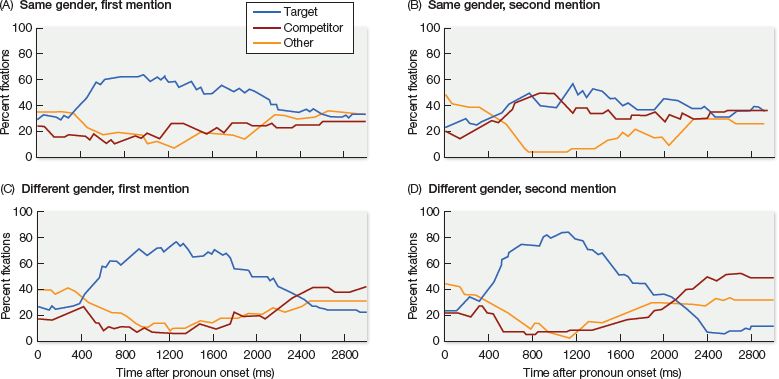

When Arnold and her colleagues analyzed the eye movement data from their study, they found that hearers were able to use gender marking right away to disambiguate between referents, even when the antecedent was the less prominent of the discourse entities (see Figure 11.5). That is, as soon as participants heard the pronoun he, they rejected Maria as a possible antecedent. This was evident from the fact that very shortly after hearing the pronoun, their eye movements for Figures 11.4C and D settled on the only male referent. So, grammatical marking of gender seems to be used right away to disambiguate among referents. But discourse prominence had an equally privileged role in the speed of participants’ pronoun resolution. That is, when the pronoun referred to the more prominent entity, hearers quickly converged on the correct antecedent, regardless of whether the pronoun was grammatically ambiguous. The only time that hearers showed any difficulty or delay in settling on the correct referent was when the pronoun was both grammatically ambiguous and referred to a less prominent discourse entity (see Figures 11.4B and 11.5B).

Figure 11.5 Results of Arnold et al.’s Experiment 2. The patterns of eye movements plotted against the three objects in the visual displays shown in Figure 11.4. The graph tracks the mean percentage of looks (within a 33-ms timeframe) to each of the three objects in the display. Target = correct character (with umbrella); competitor = competing character (no umbrella); other = elsewhere in the display (e.g., clouds). (Adapted from Arnold et al., 2000, Cognition 76, B13.)
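If you're curious how curves like those in Figure 11.5 might be built from raw eye-tracking data, the following Python sketch shows one simple way to do it. This is an assumption-laden illustration, not the analysis pipeline used by Arnold and her colleagues: the input format (gaze samples pooled across trials, each tagged with a time since pronoun onset and the region being looked at) and the function name `percent_looks_by_bin` are hypothetical. Only the 33-ms bin width and the three region labels come from the figure caption.

```python
from collections import Counter, defaultdict

BIN_MS = 33  # bin width, taken from the figure caption

def percent_looks_by_bin(samples, bin_ms=BIN_MS):
    """Compute the percentage of looks to each region within each time bin.

    samples: iterable of (time_ms, region) pairs pooled across trials, where
             time_ms is measured from pronoun onset (hypothetical format) and
             region is "target", "competitor", or "other".
    Returns a dict mapping bin start time (ms) to {region: percent of looks}.
    """
    counts = defaultdict(Counter)
    for time_ms, region in samples:
        bin_start = int(time_ms // bin_ms) * bin_ms
        counts[bin_start][region] += 1

    result = {}
    for bin_start in sorted(counts):
        total = sum(counts[bin_start].values())
        result[bin_start] = {
            region: 100.0 * n / total for region, n in counts[bin_start].items()
        }
    return result

if __name__ == "__main__":
    # Made-up samples, purely for illustration.
    demo = [(0, "target"), (10, "competitor"), (20, "other"),
            (30, "target"), (40, "target"), (50, "competitor")]
    for bin_start, percents in percent_looks_by_bin(demo).items():
        print(bin_start, percents)
```

Plotting each region's percentage against the bin start times would give one line per region, which is the general shape of the graphs in the figure.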