The film Thirteen Days portrays the efforts of President John F. Kennedy and his staff to deal with the 1962 Cuban Missile Crisis. With the Cold War at its chilliest, the Americans have learned that Soviet missiles have been installed in Cuba, within easy striking range of many U.S. cities. Kennedy needs to apply pressure on the Soviets to force them to withdraw their missiles. But he’s trying not to respond in an overly aggressive way that might be interpreted by the Soviets as an intent to go to war, thereby triggering a full-scale nuclear confrontation. Kennedy’s first move is to declare a blockade of all Soviet ships headed to Cuba. In a later scene of the film, several Soviet ships have crossed the blockade lines, and Secretary of Defense Robert McNamara learns that one of the admirals under Kennedy’s command has taken it upon himself to fire blank warning shots above one of the offending ships. McNamara yells at the admiral that he is not to fire unless directly ordered by the president. The admiral replies that he’s been running blockades for decades and knows how it’s done. McNamara explodes: “You don’t understand a thing, do you admiral? This is not a blockade. This is language. A new vocabulary, the likes of which the world has never seen. This is President Kennedy communicating with Secretary Khrushchev!”

At first glance, it might seem that the mutual messages Kennedy and Khrushchev are sending here are very unlike what happens when people

use conventional language to communicate. Most of the time, people don’t communicate by relying on subtle signals encoded in the movement of ships and the presence or non-presence of gunfire. The whole point of having language would seem to be to make such mind-reading feats unnecessary. After all, isn’t meaning precisely and conveniently packaged up in the language? Linguistic communication, one might argue, doesn’t require mind reading, just straightforward information processing to decipher a code that maps forms fairly directly to meanings.

For the most part, this book has focused on how humans learn and process the linguistic code. We’ve discussed the mechanisms that allow people to transmit linguistic information, or decode it, or discover its patterns and generalizations, all under the assumption that language form and meaning are intrinsically connected. From this angle, the problem of recovering meaning from language is very similar to the problems of, say, interpreting a visual scene or deciphering musical patterns. In all of these cases, information that hits our senses has to be processed and structured into sensible representations before we can recognize specific objects, make sense of a chord progression, or figure out what a sentence means.

Except it’s a bit misleading to talk about “what a sentence means.” When you really think about it, words and sentences don’t convey meanings—people convey meanings by using certain words and combining them into sentences. This might seem like a distinction without a difference until you consider just how often people bend language to their will and manage to convey messages that are very remote from the usual meanings of the words or sentences they’re using. A waitress might communicate a customer’s complaint to the kitchen staff by “The steak sandwich says his meat was overcooked,” using the phrase the steak sandwich to refer not to the food itself, but to the person who ordered it. Or, a person might ask the object of his affections, “Do you feel like having dinner sometime?” This is usually understood as an invitation to go out on a date, and not as a question about whether the other person is likely to ever be inclined to eat food in the evening. Or, a boss might describe her employee metaphorically, saying “That guy’s my best worker. He’s a bulldozer.” And, if you’ve ever resorted to sarcasm (“I’m really looking forward to my exam this afternoon”), you probably figured that your hearer could work out that what you really meant was pretty much the exact opposite of the usual meaning of the language you used.

In all of these cases, communicating through language seems to come a lot closer to the problem that Kennedy was trying to solve. The question is not “What does this bit of language mean?” but instead, “What is the speaker trying to communicate by using this particular bit of language in this situation?” In other words, the hearer has to hoist herself into the speaker’s mind and guess his intended message, using the language he’s uttered as a set of clues.

It might seem that all this sophisticated social cognition is restricted to a smattering of instances of language use—those unusual cases where a speaker’s intended meaning has somehow become disconnected from the inherent meanings of the language he’s recruited. But the bigger point here is that there are no inherent meanings in language, even when we’re talking about its more “fixed” aspects. The only reason there’s a connection between specific sounds and specific meanings is that this connection has been socially sanctioned. In this way, language is deeply different from many other kinds of information processing that we do. When it comes to visual perception, for instance, the array of lines, shadows, and colors that we have to interpret is intrinsically related to the real-world objects that we’re perceiving. But there’s no intrinsic connection between the sounds in the word dog and the thing in the world it refers to; its meaning comes entirely from the fact that a bunch of people within a common linguistic community have tacitly agreed to use that word for that thing. That same group of people can also agree to invent new words for new meanings, like Internet, or mitochondria—and subgroups can agree to appropriate existing words for new meanings, so words like wicked, sick, lame, and cool can mean completely different things, depending on who’s saying them and in what context.

For language, unlike for many kinds of perception, our interpretation of the stimuli doesn’t derive from the laws of physics and biology. Instead, it’s mediated by social conventions, or agreements to use certain words and structures for certain meanings. It’s hard to imagine that someone could possibly be a competent user of language without having a deep grasp of these social underpinnings of language. In fact, as you’ll soon see, it’s common for conversational partners to spend a fair bit of time in everyday conversation negotiating the appropriate language to use.

None of the above is especially controversial among language scientists. Where the disagreements bubble up is over the question of how much active social cognition takes place under the time pressures of normal conversation or reading. In other words, how much of language use is straightforward decoding and how much of it looks like mind reading, Thirteen Days style? Once we’ve learned the conventional, socially sanctioned links between language and meaning, it seems that we should be able to use them as efficient shortcuts to the speaker’s intended meaning, allowing us to bypass the whole business of having to put ourselves inside his mind. For instance, when someone uses the word dog, we should be able to activate the mental representation of a four-legged domestic canine without asking ourselves, “Now, what is the speaker trying to convey by using this word?” At the same time, there’s no guarantee that the speaker’s intended meaning lines up neatly with the conventional meaning of the language he’s chosen to use, so some monitoring of his communicative intentions would certainly be useful. And from the speaker’s perspective, having a sense of the hearer’s knowledge or assumptions could be useful in deciding exactly how to express an idea.

For some researchers, mind-reading capabilities are absolutely central to how language works and are part of the core skills of all language users in all situations. To others, these capabilities are more like icing on the cake—extremely useful to have in many situations, handy in avoiding or clarifying miscommunications, but not always critical for bread-and-butter language use. This chapter explores some ideas about the extent to which we track one another’s mental states in the course of normal language learning, production, and comprehension.

12.1 Tiny Mind Readers or Young Egocentrics?

Anyone who’s locked eyes with a 5-month-old baby on a bus or in a grocery checkout line has experienced an infant’s eagerness for social contact. From a very young age, babies demonstrate a potent urge to make eye contact with other humans, to imitate their facial expressions and actions, and to pay attention to objects that hold the attention of others. Long before they know any words, babies engage in “conversations” with adults, cooing and babbling in response to their caregivers’ utterances. This remarkable social attunement suggests that, right from the start, babies’ learning of the linguistic code may be woven together with an appreciation of the social underpinnings of language and of speakers’ goals and intentions.

In previous chapters, we’ve discussed the close connection between social understanding and language: in Chapter 2, we explored the possibility that complex social cognition is a prerequisite for the development of language in a species, and in Chapter 5, we saw that children draw heavily on inferences about a speaker’s referential intent in learning the meanings of words. However, there are also hints that children take a while to develop a sophisticated social competence, and in some ways, social cognition seems to lag behind language development. At an age when children can speak in complex sentences and show mastery over many aspects of grammatical structure, they can still have trouble producing statements that are relevant or useful to others. And when the language code collides with others’ communicative intent, children tend to stick close to the language code for recovering meaning—sarcasm or innuendo are often completely lost on kids younger than 7 or 8. These findings suggest that language learning may proceed somewhat independently of mind reading and that it takes some time for the two to become well integrated.

In this section, we’ll look closely at the relationship between children’s emerging social cognition and language learning and use. What assumptions do young children make about speakers or hearers, and how do these assumptions shape children’s linguistic behavior? To what extent can children consider evidence about their conversational partner’s mental state?

Social interaction enhances language learning

Language exists for social purposes and arises in social contexts. But it also exists physically as a series of auditory or visual signals. In the previous chapters, I laid out abundant evidence that these signals have a great deal of internal structure. In principle, it should be possible to learn something about how linguistic elements pattern without needing to project yourself into the mind of the speaker, or, for that matter, engage in social interaction at all. And in fact, there’s evidence that babies can learn a great deal about language simply by being exposed to structured repetitions of a natural or artificial language, disembodied from any social communication. In Chapter 4, you saw that infants are able to learn to segment words and form phonemic categories based on the statistical regularities in prerecorded speech. In Chapter 6, I presented evidence that young children are able to use statistical information about co-occurring words to infer the grammatical categories of novel words. None of these studies involved communicative interaction, but children were able to pick up on the statistical patterns present in input that was piped in through loudspeakers.

You also saw that other species of animals can pick up on regularities inherent in human speech. This too suggests that certain aspects of language learning may not require grasping that language is a socially mediated phenomenon. At some level, it’s possible to treat the sounds of human language as just that—sounds whose structure can be learned with sufficient exposure.

Still, there’s intriguing evidence that even for these very basic and physically bound aspects of language learning, social interaction matters. Patricia Kuhl and her colleagues (2003) tested whether social interaction affected the ability of 9-month-olds to learn phonemic contrasts that don’t occur in their native language. As you learned in Chapter 4, over the second half of their first year, babies adapt to the sound structure of their own language and “lose” the ability to easily detect contrasts between two sounds that are not phonemic in their own language (for example, English-reared babies become less responsive to the distinction between aspirated consonants like [ph] and unaspirated consonants like [p]). Kuhl and her colleagues targeted monolingual American-raised infants at an age when they had already lost the distinction between certain contrasts that occur in Mandarin Chinese. They wanted to know: Could the American babies learn to pick up on the Mandarin sound contrasts? And would they learn better from a live speaker than from a video or audio recording?

In their study, a number of the babies interacted with native Mandarin speakers who read books to them and played with toys while speaking Mandarin in an unscripted, natural way (see Researchers at Work 12.1). By the end of this period, they clearly had learned the Mandarin sound contrasts—in fact, their performance was indistinguishable from babies of the same age who had been raised in Taiwan and learned Mandarin as their native language.

This robust learning was all the more startling when compared with the abject failure of another experimental group of the same age to learn the Mandarin sounds. Just like the successful learners, this group was exposed to the same amount of Mandarin language, with the same books and toys, over the same period of time, uttered by the same native speakers of Mandarin. This time, however, the infants were either exposed to the language through video, or merely heard the same recording on audio. There was no evidence of learning in either of these cases. Mere exposure to the language was not enough. Learning only happened in the context of live interactions with a real speaker. This phenomenon, in which learning is enhanced through social interaction, is known as social gating.

Why was live social interaction so important for this task, when many other studies have shown that babies can learn certain sound patterns merely by listening to snippets of prerecorded speech? The researchers suggested that language learning in a child’s natural habitat tends to be much more complex and variable than your typical artificial language experiment, which usually presents a sample of speech carefully designed to provide information about key statistical patterns. Hence, real language learning needs some additional support from social interaction. But what’s the nature of this support? There are several possibilities. One is that interacting with a live talker simply caused the babies to pay more attention to the talker and to be more motivated to learn from the speech input. In the experiments involving the Mandarin phonemes, there was evidence that babies were paying more attention to the talker in the live interactions than in the videos, and they also became quite excited whenever that person entered the room, more so than when the TV screen was switched on.

Another possibility is that the babies were able to pull more information out of the speech stream when interacting with a live person. In the live interaction, the babies had a richer set of cues about the speaker’s referential intentions, which may have given them an advantage in identifying specific words. This would have helped them structure the speech stream in a more detailed way than they could without the strong referential cues, and in turn, this enriched structure would have helped them to figure out which sounds are used to make meaningful distinctions in Mandarin. Under this second interpretation, inferences about a speaker’s intended meaning can indirectly shape even the most basic aspects of language learning, by providing additional information about how the speech stream should be carved up.

A third possibility is that the live interaction allowed the babies to adopt a special, highly receptive mind-set because they recognized that the adult speaker was trying to teach them something. Researchers György Gergely and Gergely Csibra (2005) call this mind-set the pedagogical stance. They argue that while other species of animals are capable of learning through social imitation, and even show evidence of social gating (see Box 12.1), only humans have evolved a special capacity to transmit information by means of focused teaching. When we slide into teaching mode, we instinctively adopt certain communication techniques that signal that we’re trying to impart some knowledge that’s new and relevant to our hearers. And according to Gergely and Csibra, human infants are able to instinctively recognize when someone is trying to teach them. Once they see that the speaker is in teaching mode, they automatically assume that the information will be new and relevant, and focus their efforts on learning it quickly and efficiently.

Not all social interactions involve teaching. So, what kinds of cues signal a pedagogical act? Think of the difference between chatting with a friend while you’re preparing a chocolate soufflé and teaching your friend how to make the soufflé. In both cases, your friend could learn to make the dish by imitating you, but he’d be more likely to succeed if you were deliberately trying to teach him. How would your actions and communication be different? In the teaching scenario, you’d be more inclined to make eye contact to check that your friend was paying attention at critical moments. You’d make a special attempt to monitor his level of understanding. Your actions might be exaggerated and repetitive, and you’d be more likely to provide feedback in response to your friend’s actions, questions, or looks of puzzlement. Notice that many of these behaviors rely on having the learner physically present.

So, one way to make sense of the results of the Mandarin phoneme learning study, which demonstrated huge benefits of live social interaction, is that the babies were being exposed to a very particular kind of social interaction. The infants probably got many cues that signaled a teaching interaction, and as a result they adopted a focused pedagogical stance to the language input that they were hearing.

Csibra and Gergely argue that the pedagogical stance doesn’t require babies to make any sophisticated inferences about the speaker’s state of mind or communicative intentions. Rather, they see it as an instinctive response to behaviors that human adults display when they’re purposefully trying to impart their knowledge. Csibra and Gergely suggest that these behaviors automatically trigger the infant’s default assumption that the adult is in possession of relevant knowledge that they are trying to share. Eventually, children may have to learn that not everyone who exhibits these behaviors actually is knowledgeable, or is demonstrating knowledge that is relevant or new.

Children are selective learners

The next stop on our tour of young language learners is the child who’s trying to learn the conventional meanings of words. As you saw in Section 5.3, children are often reluctant to map a word onto a meaning without some good evidence that the speaker meant to use that word for a particular purpose. For clues, they pay attention to the speaker’s eye gaze or other behavior to identify the object of the speaker’s referential intent.

But as a youngster absorbed in the task of word learning, you might be well advised to do more than just look for signs of referential intent. A speaker might be utterly and obviously purposeful in using the word dax to refer to a dog, but that doesn’t mean that the word she uses is going to match up with what other speakers in your linguistic community agree to call that furry creature. Maybe she speaks another language. Maybe she doesn’t know the right word. Maybe she’s pretending, or even lying. Or maybe she’s insane. Perhaps you should approach such interactions with a grain of skepticism, in light of the fact that the speaker may not always be a reliable source of linguistic information. Naturally, knowing something about the speaker’s underlying knowledge or motivations would be helpful in deciding whether to adopt this new word.

But what evidence would you look for to figure out whether the speaker can be trusted as a teacher of words in the language you’re trying to acquire? Assessing the speaker’s reliability seems to involve a level of social reasoning that’s a notch or two more sophisticated than simply looking for clues about referential intent. Nevertheless, an impressive array of studies shows that preschoolers, and perhaps even toddlers, do carry out some sort of evaluation in deciding whether or not to file away in memory a word that’s just been used by an adult in a clearly referential act.

Mark Sabbagh and Dare Baldwin (2001) created an elaborate scenario in which they established one speaker as clearly knowledgeable about the objects she was referring to, while a second speaker showed evidence of uncertainty. In this scenario, a 3- or 4-year-old child heard a message recorded on an answering machine from a character named Birdie, who asked the experimenter to send her one of her toys, which she called a “blicket.” Birdie’s collection of “toys” consisted of a set of weird, unfamiliar objects. Half of the children interacted with an experimenter who claimed to be very familiar with these objects. This speaker told the child, “I’ve seen Birdie play with these a lot. I’ll show you what this does. I’ve seen all these toys before.” This “expert” then proceeded to demonstrate how to play with Birdie’s toys. In response to Birdie’s request for the blicket, this experimenter confidently announced, “You know, I’d really like to help my friend Birdie, and I know just which one’s her blicket. It’s this one.” The experimenter asked the child to put the blicket into a “mailbox,” saying, “Good, now Birdie will get her blicket.”

The other half of the kids interacted with an experimenter who acted much less knowledgeable. Upon hearing Birdie’s request, this experimenter said, “You know, I’d really like to help my friend Birdie, but I don’t know what a blicket is. Hmm.” While playing with Birdie’s toys, she said, “I’ve never seen Birdie play with these. I wonder what this does. I’ve never seen these toys before.” She played with the toys tentatively, pretending to “discover” their function while manipulating them. Finally, she told the child, touching the same object that the “expert” speaker had, “Maybe it’s this one. Maybe this one’s her blicket. Could you put it in the mailbox to send to Birdie?” When the child complied, the experimenter said, “Good, now maybe Birdie will get her blicket.”

In both of these experimental conditions, the children heard the experimenter use blicket to refer to the same object exactly the same number of times. The question was whether the preschoolers would be more willing to map the word to this object when the speaker claimed to know what she was talking about than when she was unsure. In the test phase, both the older and the younger children were more likely to produce the word blicket to refer to the target object if they’d heard the word from the “expert” speaker, in addition to being more likely to identify the correct object from among the others when asked to pick out the blicket.

Since this initial study, a number of experiments have looked at other cues that kids might use when sizing up the speaker’s credibility. For instance, children seem to figure that a good predictor of future behavior is past behavior: they take into account whether the speaker has previously produced the right labels for familiar objects. Melissa Koenig and Amanda Woodward (2010) showed that if a speaker has falsely called a duck a “shoe,” children are less eager to embrace her subsequent labels of unfamiliar objects (this work was summarized in Researchers at Work 5.1).

It’s hard to know, though, whether children’s evaluations of a speaker’s credibility are truly rational, based on assessing what speakers know, and making predictions on that basis. Sure, it’s logical to conclude that someone who expresses uncertainty about their knowledge, or someone who’s behaved unreliably in the past, is more likely to be an unreliable communicator. But we want to be careful not to attribute more knowledge and sophistication to children’s behavior than the data absolutely indicate. Are kids really reasoning about what speakers know (not necessarily consciously), or does their distrust of certain speakers reflect more knee-jerk responses to superficial cues? For example, preschoolers are also more reticent in accepting information from people who are simply unfamiliar, or who have a different accent or even different hair color than themselves. There are still a lot of questions about how children weight these various cues at different ages, and just how flexibly they adapt their evaluations, as one might expect of a fully rational being, in the face of new, predictive information about a speaker.

Still, there’s quite strong evidence that in many communicative situations, even very young kids make accurate inferences about the mental states of others. In a 2007 study by Henrike Moll and Michael Tomasello (2007), 14- and 18-month-old children interacted with an adult, with adult–child pairs playing together with two objects. The adult then left the room while the toddler played with a third, novel object. When the adult returned, she pointed in the general direction of all of the objects and exclaimed with excitement, “Oh, look! Look there! Look at that there!” She then held out her hand and requested, “Give it to me, please!” It seems that even 14-month-olds know that excitement like this is normally reserved for something new or unexpected and used this information to guess which object the adult was referring to. All three toys were familiar to the children; nevertheless, they handed over the new-to-the-adult item more often than either of the two objects that the adult had played with earlier.

Limits to children’s mind-reading abilities

Studies like those by Moll and Tomasello point to very early mind-reading abilities in children and, as discussed in Chapter 2, a number of researchers have argued that humans are innately predisposed to project themselves into each other’s minds in ways that our closest primate cousins can’t. But these stirring demonstrations of young tots’ empathic abilities seem to contradict the claims of many researchers who have emphasized that children are strikingly egocentric in their interactions with others, often quite oblivious of others’ mental states.

Indeed, anyone who’s ever interacted with very young children can be struck by their lack of ability to appreciate another person’s perspective or emotional state. It seems they constantly have to be reminded to consider another person’s feelings. When they choose gifts for others, they often seem to pick them based on their own wish lists, oblivious to the preferences and needs of others. And they do sweetly clueless things like play hide-and-seek by covering their faces, assuming that if they can’t see you, you can’t see them, or they nod their heads to mean yes while talking on the phone, seemingly unaware that the listener can’t see them.

The famous child psychologist Jean Piaget argued that it’s not until at least about the age of 7 years that children really appreciate the difference between their own perspective and that of others. This observation was based partly on evidence from what’s now known as the “three mountains study,” which involved placing children in front of a model of a mountain range, while the experimenter sat himself on the opposite side of it and asked the young subjects to choose one photo from among a set that best depicted his own viewpoint rather than the child’s (Piaget & Inhelder, 1956). Before age 7, most kids simply guess.

It seems that Piaget overestimated children’s egocentrism, partly because his experiments required kids to be able to do some fancy visual transformations in their heads rather than simply show awareness that another person’s viewpoint could differ from theirs. It’s now established that well before age 7—in fact at around age 4—most kids show a clear ability to reason about the mental states of others, as evident by their ability to handle a tricky setup known as a false-belief test. There are several variants of this basic task, but a common one goes like this: A child is shown a familiar type of box that normally contains a kind of candy called “Smarties.” She’s asked to guess what’s inside, and not surprisingly, kids usually recognize the box and say “Smarties.” The experimenter then opens the box to show the child that—alas!—the box contains pencils, not candy. Then the experimenter asks the child what another person, who had not witnessed this interaction, would think was inside the box. At age 3, children tend to say “pencils,” seemingly unable to shift themselves back into the perspective of someone who saw only the outside of the box. But by 4 or 5, they tend to say that the other person will be fooled into thinking it contains Smarties. To use the terminology of developmental psychologists, success on the false-belief task is taken as solid evidence that children have acquired a theory of mind (ToM)—that is, an understanding that people have mental states that can be different from one’s own.

Some researchers, however, have argued that these false-belief tests underestimate younger children’s mind-reading skills. Even toddlers seem to show awareness that people hold false beliefs if the task uses a toddler-friendly method that doesn’t require him to introspect and report on his own thoughts. In a study by David Buttelmann and his colleagues (2009), 18-month-olds watched an experimenter put a toy in one of two boxes and leave the room. An assistant then snuck in, moved the toy to the other box, and latched both boxes, all in view of the child. When the experimenter returned, he tried to open the empty box, into which he had put the toy. Ever eager to help, the young participants typically went to the other box—the one that now held the toy—to help the experimenter open it. This makes sense if the children thought that the experimenter was trying to retrieve the toy, believing it to be in the original box. In contrast, if the experimenter stayed in the room and watched the toy transfer, and then struggled to open the empty box, the children typically tried to help him open that box—assuming, perhaps, that the experimenter was not trying to get the toy, since he already knew where it was, but wanted to open the empty box for some other reason.

In another cleverly adapted false-belief task by Rose Scott (2017), 20-month-olds watched short videos in which an adult placed three marbles each into a red and green container and shook each of them, which produced a rattling sound. A second clip immediately followed, in which a different person took the marbles from the red container and demonstrated that the red container rattled, but now the green one did not. A third crucial clip showed the original first person back in front of the two containers into which she’d put marbles. She picked up the red container and shook it—but now, no rattle. It’s at this point that the manipulation of interest occurred: some of the babies watched a clip in which the woman showed surprise when the red container was silent, and then picked up the green object, shook it, and smiled and nodded in satisfaction. This reaction is consistent with her believing that both of the containers contained marbles. Some babies watched a clip that was inconsistent with this belief: the woman picked up the red container, shook it, found it produced no sound, and expressed satisfaction; she then picked up the green container, shook it, and expressed surprise upon finding that it rattled. Babies spent more time looking at the inconsistent trial, suggesting that they had some awareness of the woman’s beliefs and expectations, and an understanding that people are surprised when their expectations turn out to be wrong. Importantly, this effect did not occur in a version of the experiment when the woman came back in the third clip and saw that three marbles were sitting on a tray between the two containers; in this clip, a reasonable inference would be that she was aware that three marbles had been removed from one or both of the containers but did not know which. In this case, she shouldn’t have firm expectations that both objects would rattle when shaken.

Right alongside results like these that demonstrate impressive social cognition in tiny children, there are many studies showing that much older kids are surprisingly unhelpful when it comes to avoiding potential communication problems that might arise for their conversational partners. This can be seen by using a common type of experimental setup known as a referential communication task (see Method 12.1). In this paradigm, the subject usually plays a communication “game” with an experimenter (or, in some cases, a secret experimental confederate who the subject believes is a second participant, but who is really following a specific script). The game involves a set of objects that are visible to the speaker and hearer, and the speaker has to refer to or describe certain target objects in a way that will be understood by the hearer. The array of objects can be manipulated to create certain communication challenges.

It turns out that children can be quite bad at communicating effectively with their partners in certain referential contexts. In one study by Werner Deutsch and Thomas Pechmann (1982), children played the speaker role, and had to tell an adult partner which toy from among a set of eight they liked best as a present for an imaginary child. The toys varied in color and size, and the set included similar items, so that to unambiguously refer to just one of these items, the child had to refer to at least two of its properties. For example, a large red ball might be included in a set that also contained a small red ball and a large blue ball, so simply saying “the ball,” or “the red ball,” or “the big ball” would not be enough for the partner to unambiguously pick out one of the objects. To successfully refer, the kids had to recognize that a hearer’s attention wasn’t necessarily aimed at the same object that they were thinking of, and they had to be alert to the potential ambiguity for their partner.

But preschoolers were abysmal at using descriptions that were informative enough for their hearers: at age 3, 87 percent of their descriptions were inadequate, failing to provide enough information to identify a unique referent. Things improved steadily with age, but even by age 9, kids were still producing many more ambiguous expressions (at 22 percent) than adults in the same situation (6 percent). Their difficulty didn’t seem to be in controlling the language needed to refer unambiguously, because when their partner pointed out the ambiguity by asking “Which ball?” they almost always produced a perfectly informative response on the second try. It’s just that they often failed to spontaneously take into account the comprehension needs of the hearer.

We can get an even clearer idea of the extent to which kids are able to take their partner’s perspective by looking at referential communication experiments that directly manipulate the visual perspective of each partner, as discussed in Method 12.1. In these studies, the child and adult interact on opposite sides of a display case that’s usually set up so that the child can see more objects than the adult can. The nature of the communication task shifts depending on which objects are in common ground (visible to both partners) versus privileged ground (visible only to the child). For instance, if there are two balls in common ground, it’s not enough for the speaker to just say “the ball”; but it is if one of the balls is in the speaker’s privileged ground because from the hearer’s perspective, this phrase is perfectly unambiguous. Conversely, suppose the hearer sees two balls, but one of these is in privileged ground so that the speaker only sees one; in this case, if the speaker says “Point to the ball,” this should be perfectly unambiguous to the hearer, assuming she’s taking her partner’s perspective into account. However, the instruction would be completely ambiguous if both balls were in common ground.

Studies along these lines have shown that as early as 3 or 4 years of age, kids show some definite sensitivity to their partner’s perspective, both in the expressions they produce and in their comprehension as hearers (Nadig & Sedivy, 2002; Nilsen & Graham, 2009). But they’re not as good at it as adults are, and there are hints that when it comes to linguistic interactions like these, kids may be more egocentric than adults as late as adolescence (Dumontheil et al., 2010). So, the general conclusion seems to be that while many of the ingredients for mind reading are present at a very young age, it takes a long while for kids to fully hone this ability—longer, even, than it takes them to acquire some of the most complex aspects of syntax.

This raises the question of what it is that has to develop in order for mind-reading abilities to reach full bloom. Some researchers have argued that the social cognition demonstrated by young children is different in kind from older children’s reasoning about mental states. For example, Cecilia Heyes and Chris Frith (2014) suggest that infants have a (possibly innate) system of implicit mind reading that allows for efficient and automatic tracking of others’ attentional states. This may allow very young children to make good predictions about people’s behavior (for example, expecting that someone will search for an object in the last place they saw it) but it falls short of true awareness of mental states. This system can be thought of as a “start-up kit” that serves as the foundation for a more complex system of explicit mind reading, which allows us to consciously reason and talk about mental states. Heyes and Frith propose that the explicit system is dependent upon the implicit system, but separate from it—much like reading text is dependent upon spoken language, but involves mastering a set of cognitive skills that are not required for speech. And, like print reading, they suggest, explicit mind-reading skills are culturally transmitted through a deliberate process of teaching. Parents spend a great deal of time explaining others’ mental states and prompting their offspring to do the same: “She’s crying because you took her toy and now she’s sad”; “Why do you think the witch wants her to eat the apple?” And there’s evidence that talking about mental states does boost children’s (or even adults’) awareness of them (see Box 12.2).

Not all researchers buy into the idea of a sharp split between implicit and explicit mind reading. The ongoing debate is sending scientists off looking for supporting evidence such as: Do explicit mind-reading tasks recruit different brain networks than implicit ones? Does explicit mind reading depend heavily on a culture in which people discuss or read about mental states? In contrast, we might expect variability in implicit mind reading to have more of a genetic cause than a cultural one. Is there neuropsychological evidence for a double dissociation between explicit and implicit mind reading—that is, can one be preserved but the other impaired, and vice versa? And do implicit and explicit mind reading interact differently with other cognitive processes and abilities? If explicit mind reading is a slower, more effortful process than implicit mind reading, we might expect it to be affected more by individual differences in working memory or cognitive control.

Regardless of whether there are two separate systems (or perhaps even more!) for mind reading, there’s growing evidence that children’s failures in complex referential tasks are due at least somewhat to their difficulties in juggling complex information in real time. Think about what’s needed to give your partner just enough information to identify a single object from many others that are similar: you need to notice and keep track of the objects’ similarities and differences all while going through the various stages of language production you read about in Chapter 10. As you saw from the eye-tracking studies described on pages 414–418, adults often start speaking before they’ve visually registered the need for disambiguating information, and if they don’t notice the relevant information quickly enough, they may need to interrupt themselves to launch a repair. This suggests that they’re constantly monitoring their speech in order to quickly fix any speaking glitches that do occur. We know surprisingly little about children’s language production—how far ahead can they plan? Do they have the bandwidth to simultaneously speak and monitor their own speech? But given how complex speaking is, there are many opportunities for breakdown among small people with small working memories.

Things are even more difficult if the child’s own perspective clashes with that of a partner, in which case the child has to suppress their own knowledge, which may be competing with their representation of what their partner knows or can see. And as we’ve seen already in Section 9.6, children are especially bad at inhibiting a competing representation or response, whether this happens to be a lexical item that stirs up competitive activation, or the lure of a misleading syntactic interpretation for an ambiguous sentence fragment. All of these examples rely on highly developed cognitive control (also referred to as “executive function”)—that is, the ability to flexibly manage cognitive processes and behavior in the service of a particular goal. Unfortunately, cognitive control follows a long and slow developmental path, and it’s now well known that the regions of the brain that are responsible for its operation are also among the last to mature. So, many of children’s egocentric tendencies may not be due to the fact that they’re neglecting to consider their partner’s perspective; rather, the problem may be that they have trouble resolving the clash between their partner’s perspective and their own.

A number of studies have explored the consequences of these processing limitations for referential communication. Elizabeth Nilsen and her colleagues (2015) recruited 9- to 12-year-olds for a complex referential communication task and assessed their working memory (using a digit span test) and their cognitive control (using a test in which the kids were taught to press a button if a specific shape appeared on the screen, but avoid pressing it if that shape was preceded by a beep). Kids with better working memory and cognitive control scores produced fewer ambiguous descriptions in the task. The effects of poor cognitive control are even subtly evident in adult’s performance on perspective-taking tasks (Brown-Schmidt, 2009b). And, if a referential communication task is made simple enough, thereby reducing these processing demands, even kids as young as two-and-a-half show attempts to provide disambiguating information for a partner when it’s needed, though they don’t achieve complete success (Abbot-Smith et al., 2016).

In this section, we’ve explored the tension between the very early intertwining of language and social cognition, and its long developmental trajectory. In the next section, we’ll see that social cognition is deeply embedded in the ways in which we elaborate meanings beyond what’s offered by the linguistic code. We’ll also look at how fragile or difficult these social inferences might be.

12.2 Conversational Inferences: Deciphering What the Speaker Meant

As I’ve already suggested, one of the benefits of language is that it should eliminate the need for constant mind reading, since the linguistic code contains meanings that the speaker and hearer presumably agree upon. But in Chapter 11, I used up a lot of ink describing situations in which the linguistic code by itself was not enough to deliver all of the meaning that readers and hearers routinely extract from text or speech. Hearers have a knack for filling in the cracks of meanings left by speakers, pulling out much more precise or elaborate interpretations than are made available by the linguistic expressions themselves—which, as we’ve seen, can sometimes be pretty sparse or vague.

“Soft” and “hard” meanings

It turns out that a good bit of this meaning enrichment involves puzzling out what the speaker intended to mean, based on her particular choice of words. Suppose you ask me how old I am, and I produce the spectacularly vague reply “I’m probably older than you.” My vagueness might be due to the fact that I have trouble keeping track of my precise age, but it seems rather unlikely that I wouldn’t have access to this information. A more plausible way to interpret my response is that I’m letting you know that my age is not open for discussion (and maybe you should apologize for asking). But this message strays quite far from the conventional meaning of my reply, and clearly it does require you to speculate about my knowledge and motivations. So, in this case, even though you can easily access the conventional meaning of the sentence that I’ve produced, you still need to do some mind reading to get at my intended meaning.

The well-known philosopher H. Paul Grice argued that reasoning about a speaker’s intended meaning is something that is so completely woven into daily communication that it’s easy to mistake our inferences about speakers’ meanings for the linguistic meanings themselves. For example, what does the word some mean, as in Some liberals approve of the death penalty? It’s tricky to pin down, and if you took a poll of your classmates’ responses, you’d probably get several different answers. But if your definition included the notion that some means “not many” or “not all” of something, Grice would argue that you’re confusing the conventional meaning of the word some with an inferred conclusion about the most likely meaning that the speaker intended. In other words, the conventional meaning of some doesn’t include the “not all” component. Rather, the speaker has used the word some to communicate—or imply—“not all” in this specific instance.

To see the distinction, it helps to notice that there are contexts where some is used without suggesting “not all.” The sentence I gave you about some liberals was unfairly designed to make you jump to the conclusion that some means “not all,” because that’s the most likely speaker meaning of that sentence. But imagine instead a detective investigating the cause of a suspicious accident. She arrives at the scene of the accident, and tells one of the police officers who’s already there: “I’m going to want to talk to some of the witnesses.” If the police officer lines up every single witness, it’s doubtful that the detective will chastise him by saying, “I said some of the witnesses—don’t bring me all of them.” Here, the detective seems to have used some to mean “at least a few, and all of them if possible.” By looking closely at how the interpretation of some interacts with the context of a sentence, Grice concluded that the linguistic code provides a fairly vague meaning, roughly “more than none.”

You can think of the linguistic code as providing the “hard” part of meaning in language, the part that’s stable across contexts and is impossible to undo without seeming completely contradictory. For example, it sounds nonsensical to say “Some of my best friends like banjo music—in fact, none of them do.” But you can more easily undo the “not all” aspect of the interpretation of some: “Some of my best friends like banjo music—in fact, they all do.” The “not all” component seems to be part of the “soft” meaning of some, coming not directly from the linguistic code, but from inferences about what the speaker probably meant to convey. Grice used the term conversational implicature to refer to the extra “soft” part of meaning that reflects the speaker’s intended meaning over and above what the linguistic code contributes.

Grice suggested that making inferences about speaker meaning isn’t limited to exotic or exceptional situations. Even in everyday, plain-vanilla conversation, hearers are constantly guessing at the speaker’s intentions as well as computing the conventional linguistic meaning of his utterances. The whole enterprise hinges on everyone sharing the core assumptions that communication is a purposeful and cooperative activity in which (1) the speaker is trying to get the hearer to understand a particular message, rather than simply verbalizing whatever thoughts happen to flit through his brain without caring whether he’s understood, and (2) the hearer is trying to interpret the speaker’s utterances, guided by the belief that they’re cooperative and purposeful.

Sometimes it’s easier to see the force of these unspoken assumptions by looking at situations where they come crashing down. Imagine getting the following letter from your grown son:

I am writing on paper. The pen I am using is from a factory called “Perry & Co.” This factory is in England. I assume this. Behind the name Perry & Co. the city of London is inscribed; but not the city. The city of London is in England. I know this from my schooldays. Then, I always liked geography. My last teacher in that subject was Professor August A. He was a man with black eyes. There are also blue eyes and gray eyes and other sorts too. I have heard it said that snakes have green eyes. All people have eyes …

Something’s gone awry in this letter. The text appears to be missing the whole point of why it is that people write letters in the first place. Instead of being organized around a purposeful message, it comes across as a brain dump of irrelevant associations. As it happens, the writer of this letter is an adult patient suffering from schizophrenia, which presumably affects his ability to communicate coherently (McKenna & Tomasina, 2008; the letter itself comes from the case studies of Swiss psychiatrist Egon Bleuler).

Once you know this fact, the letter seems a bit less puzzling. It’s possible to suspend the usual assumptions about how speakers behave because a pathology of some sort is clearly involved. But suppose you got a letter like this from someone who (as far as you knew) was a typically functioning person. Your assumptions about the purposeful nature of communication would kick in, and you might start trying to read between the lines to figure out what oblique message the letter writer was intending to send. Unless we have clear evidence to the contrary, it seems we can’t help but interpret a message through the lens of expectations about how normal, rational communication works.

How do rational speakers behave?

Exactly what do hearers expect of speakers? Grice proposed a set of four maxims of cooperative conversation, which amount to key assumptions about how cooperative, rational speakers behave in communicative situations (see Table 12.1). I’ll discuss each of these four maxims in detail.

MAXIM 1: QUALITY Hearers normally assume that a speaker will avoid making statements that are known to be false, or for which the speaker has no evidence at all. Obviously, speakers can and do lie, but if you think back to the last conversation you had, chances are that the truthful statements far outnumbered the false ones. In most situations, the assumption of truthfulness is a reasonable starting point. Without it, human communication would be much less useful than it is—for example, would you really want to spend your time taking a course in which you regarded each of your instructor’s statements with deep suspicion? In fact, when a speaker says something that’s blatantly and obviously false, hearers often assume the speaker’s intent was to communicate something other than the literal meaning of that obviously false statement; perhaps the speaker was being sarcastic, or metaphorical.

MAXIM 2: RELATION Hearers assume that a speaker’s utterances are organized around some specific communicative purpose, and that speakers make each utterance relevant in the context of their other utterances. This assumption drives many of the inferences we explored in Chapter 11. Watch how meaning gets filled in for these two sentences: Cathy felt very dizzy and fainted at her work. She was carried away unconscious to a hospital. It’s normal to draw the inferences that Cathy was carried away from her workplace, that her fainting spell triggered a call to emergency medical services, that her unconscious state was the result of her fainting episode rather than being hit over the head, and so on. But all of these rest on the assumption that the second sentence is meaningfully connected to the first, and that the two sentences don’t represent completely disjointed ideas.

|

TABLE 12.1 Grice’s “maxims of cooperative conversation”a |

|

1. Quality If a speaker makes an assertion, he has some evidence that it’s true. Patently false statements are typically understood to be intended as metaphorical or sarcastic. 2. Relation Speakers’ utterances are relevant in the context of a specific communicative goal, or in relation to other utterances the speaker has made. 3. Quantity Speakers aim to use language that provides enough information to satisfy a communicative goal, but avoid providing too much unnecessary information. 4. Manner Speakers try to express themselves in ways that reflect some orderly thought, and that avoid ambiguity or obscurity. If a speaker uses a convoluted way to describe a simple situation, he’s probably trying to communicate that the situation was unusual in some way. |

aThat is, mutually shared assumptions between hearers and speakers about about how rational speakers behave.

Adjacent sentences often steer interpretation, but inferences can also be guided by the hearer’s understanding of what the speaker is trying to accomplish. For example, in the very first episode of the TV series Mad Men, adman Don Draper has an epiphany about how to advertise Lucky Strike cigarettes. Given that health research has just shown cigarette smoking to be dangerous (the show is set in the early 1960s), the tobacco company has been forced to abandon its previous ad campaign, which claimed its cigarettes were healthier than other brands. Don Draper suggests the following slogan: “It’s toasted.” The client objects, “But everybody’s tobacco is toasted.” Don’s reply? “No. Everybody else’s tobacco is poisonous. Lucky Strike’s is toasted.”

What Don Draper understands is that the audience can be counted on to imbue the slogan with a deeper meaning. Consumers will assume that if the quality of being toasted has been enshrined in the company’s slogan, then it must be something unique to that brand. What’s more, it must be something desirable, perhaps improving taste, or making the tobacco less dangerous. These inferences are based on the audience’s understanding that the whole point of an ad is to convince the buyer why this brand of cigarettes is better than other brands. Focusing on something that all brands have in common and that doesn’t make the product better would be totally irrelevant. Hence, the strong underlying assumption of relevance leads the audience to embellish the meaning of the slogan in a way that’s wildly advantageous for the company.

If you think advertising techniques like this only turn up in TV fiction, consider what advertisers are really claiming (and hoping you’ll “buy”) when they tell you that their brand of soap floats, or that their laundry detergent has special blue crystals, or that their food product contains no additives. Inferences based on assumptions of quality and relevance likely spring from the hearer’s curiosity about the speaker’s underlying purpose. They allow the hearer to make sense of the question, “Why are you telling me this?” The next two conversational maxims that Grice described allow the hearer to answer the question, “Why are you telling me this in this particular way?”

MAXIM 3: QUANTITY Hearers assume that speakers usually try to supply as much information as is needed to fulfill the intended purpose without delving into extra, unnecessary details. Obviously, speakers are sometimes painfully redundant or withhold important information, but their tendency to strive for optimal informativeness leads to certain predictable patterns of behavior. For example, in referential communication tasks, speakers try to provide as much information as the hearer needs to unambiguously identify a referent, so an adjective such as big or blue is tacked onto the noun ball more often if the hearer can see more than one ball. This is also evident in expressions like male nurse, where the word male presumably adds some informational value—when is the last time you heard a woman described as a “female nurse”? This asymmetry reflects the fact that the speaker probably assumes that nurses are female more often than not, and so it doesn’t add much extra information to specify female gender.

From the hearer’s perspective, certain inferences result from the working assumption that the speaker is aiming for optimal informativeness. Grice argued that this is why people typically assume that some means “not all,” or “not most,” or even “not many.” The argument goes as follows: The conventional meaning of some is compatible with “all,” as well as “most” and “many.” (The test: you can say without contradicting yourself “Some of my friends are blonde—in fact, many/most/all of them are.”) Because some is technically true in a wide range of situations, it’s very vague (just as the vague word thing can be applied to a great many, well, things). If I were to ask you “How many of your friends are blonde?” and you answered “Some of them are,” I could make sense of your use of the word some in one of several ways, including the following:

1. You used the vague expression some because that was all that was called for in this communicative situation, and you assumed that all I cared about was whether you had at least one blonde friend, so there was no need to make finer distinctions (in some contexts, this might be the case, but normally, the hearer would be interested in more fine-grained distinctions).

2. You used such a vague expression because, in fact, you didn’t know how many of them were blonde (this seems unlikely).

3. You used the vague word some because you couldn’t truthfully use the more precise words many, most, or all. Hence, I could conclude that only a few of your friends, and not many, or most, or all, were blonde. This line of reasoning can be applied any time a vague expression sits in a scalar relation to other, more precise words, yielding what language researchers call a scalar implicature (see Box 12.3).

MAXIM 4: MANNER Hearers typically assume that speakers use reasonably straightforward, unambiguous, and orderly ways to communicate. Speakers normally describe events in the order in which they happened, so the speaker is probably conveying different sequences of events by saying “Sam started hacking into his boss’s email. He got fired,” versus “Sam got fired. He started hacking into his boss’s email.” Speakers also generally get to the point and avoid using obscure or roundabout language. It would be strange for someone to say “I bought some frozen dairy product with small brown flecks” when referring to chocolate chip ice cream—unless, for example, she was saying this to another adult in front of small children and meant to get across that she didn’t want the kids to understand what she was saying. In fact, any time a speaker uses an unexpected or odd way of describing an object or a situation, this can be seen as an invitation to read extra meaning into the utterance. To see this in action, next time you’re having a casual conversation with someone, try replacing simple expressions like eat or go to work with more unusual ones like insert food into my oral cavity or relocate my physical self to my place of employment, and observe the effect on your listener.

Grice argued that conversational implicatures arise whenever hearers draw on these four maxims to infer more than what the linguistic code provides. An important claim was that such inferences aren’t triggered directly by the language—instead, they have to be reasoned out, or “calculated,” by the hearer who assumes that the speaker is being cooperative and rational. The speaker, for his part, anticipates that the hearer is going to be able to work out his intended meaning based on the assumptions built into the four conversational maxims.

At what age do children derive conversational inferences?

Grice made a convincing case that conventional linguistic meaning has to be supplemented by socially based reasoning about speakers’ intentions and expected behaviors. As speakers, we tend to take it for granted that our listeners can competently do this, even when we’re talking to young children. For example, a parent confronted with a small child’s request for a cookie might say “We’re having lunch in a few minutes,” or “You didn’t eat all of your dinner.” To get a sensible answer out of this, the child has to assume that the parent is intending to be relevant, and then figure out how this reply is meant to be relevant in the context of the question. Some fairly complex inferencing has to go on. Should the parent be so confident that the message will get across? (And if the child persists in making the request, maybe the parent shouldn’t impatiently burst out, “I already told you—the answer is no!” After all, the parent hasn’t actually said that, merely implied it.)

Some researchers have presented evidence that kids have slid well into middle childhood or beyond before they compute conversational implicatures as readily as adults do. A number of studies have shown that children have trouble understanding indirect answers to questions like the one above until they’re about 6 years old (e.g., Bucciarelli et al., 2003). Even by age 10, kids appear not to have fully mastered the art of implicature. In one such study, Ira Noveck (2001) asked adults and children between the ages of 7 and 11 to judge whether it was true that “some giraffes have long necks.” Adults tended to say the statement was false, reflecting the common inference that some conveys “not all.” But even at the upper end of that age range, most kids accepted the sentence as true. In other words, they seemed to be responding to the conventional meaning of some, rather than to its probable intended meaning. These results raise the possibility that reaching beyond the linguistic code to infer speakers’ meanings is something that develops after kids have acquired a solid mastery over the linguistic code. And they resonate with the notion that some mind-reading tasks are difficult and develop slowly over time, possibly under the tutelage of parents and teachers.

But other researchers have claimed that these experiments drastically underestimate kids’ communicative skills, arguing that many of the studies that show late mastery of conversational inferences require kids to reflect on meanings in a conscious way. This makes it hard to tell whether their trouble is in getting the right inferences or in thinking and talking about these inferences. As with the false-belief tests described in Section 12.1, studies that use more natural interactive tasks, probing for inferences implicitly rather than explicitly, tend to reveal more precocious abilities. Cornelia Schulze and her colleagues (2013) had 3- and 4-year-old children play a game in which the young subjects were to decide which one of two things (cereal or a breakfast roll) to give to a puppet character. When the puppet was asked “Do you want cereal or a roll?” she gave an indirect reply such as “The milk is all gone” from which the child was supposed to infer that she wanted the roll. The researchers analyzed how often the kids handed the puppet the correct object. They found that the responses of even the 3-year-olds were not random: more often than not, the kids understood what the puppet wanted. Another study by Cornelia Schulze and Michael Tomasello (2015) showed that by 18 months, toddlers are able to grasp the intent of a referential gesture, even when its meaning is indirect. They had children play a game with the experimenter in which they retrieved puzzle pieces from a locked container and assembled the puzzle. The experimenter surreptitiously took one of the pieces and put it back into the container. When the child had assembled all the other pieces, the experimenter said, “Oh, look, a piece is missing!” and held up the key to the container. The children took the key and tried to open the container, showing that they had interpreted the experimenter’s action as a suggestion to look in the container for the missing piece. But they didn’t respond in this way if the experimenter held up the key and gazed at it without communicating with the child in any way, or if the experimenter “accidentally” slid the key in the child’s direction. It was the clear intent to communicate that triggered the children’s inferences.

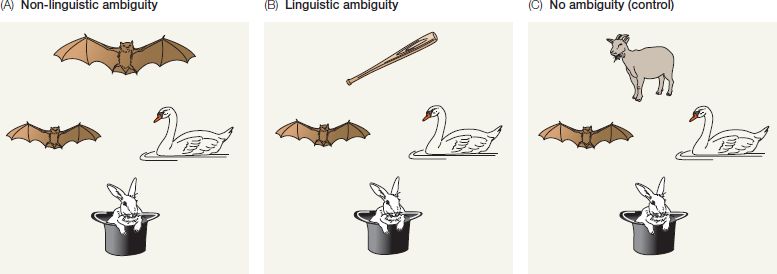

Other researchers have emphasized that specific inferences (such as scalar implicature associated with the word some) may be hard not because young kids lack mind-reading abilities but because they lack experience with language and don’t yet have clear expectations of what people would normally say in certain situations. To understand that a statement like Some giraffes have long necks is weird, you have to know that people would typically say All giraffes have long necks, and this knowledge has to be robust enough to be quickly used in interpreting the speaker’s probable meaning. When this knowledge requirement is removed from a task, children far younger than 9 years can reliably generate scalar inferences. In one such task (Stiller et al., 2015), kids played a game in which they had to guess which referent a stuffed animal had in mind when he “said” things like “My friend has glasses” in reference to a picture like the one shown in Figure 12.5. Two of the “friends” have glasses, so the speaker could be truthfully referring to either of these. But the most sensible way to describe the rightmost character is by saying “My friend has glasses and a hat.” Therefore, the speaker probably meant to refer to the character in the middle. The line of reasoning here is identical to the scalar implicature in which some = not all, but it doesn’t require the child to notice the contrast between various expressions of quantity; instead, the contrast is built right into the experiment. The researchers found that from 2.5 to 3.5 years of age, the kids tended to pick one of the characters with glasses, but their choices were randomly split between the two. At 3.5 years of age, they started to more consistently choose the glasses-only character, and this tendency became stronger for 4- and 4.5-year-olds.

Figure 12.5 A sample picture accompanying the statement My friend has glasses. The child’s task is to identify the “friend.” Although two of the characters have glasses, a reasonable inference is that the statement refers to the one in the center, because the speaker would probably have said My friend has glasses and a hat in referring to the rightmost character. (From Stiller et al., 2015, Lang. Learn. Dev. 11, 176.)

There’s not much evidence, then, that conversational inferences emerge only fairly late in childhood. The machinery to generate them seems to be in place from a very young age. When kids do have trouble with conversational inferences, their difficulties can’t easily be traced to mind-reading limitations or a poor grasp of how cooperative conversation works. Still, their limited experience with language and social interactions can make it hard for them to have the sharp expectations that are sometimes needed to drive conversational implicatures. And it’s quite possible that at least some implicatures elude children because their computational demands exceed the abilities of young minds.

Conversational inferences in real time

Speaking of computational demands, just how much of it do hearers need in order to be able to decipher the speaker’s intended meaning? Some studies suggest that it takes some non-trivial amount of processing effort and time to get from the linguistic code to the intended meaning when there’s a significant gap between the two.

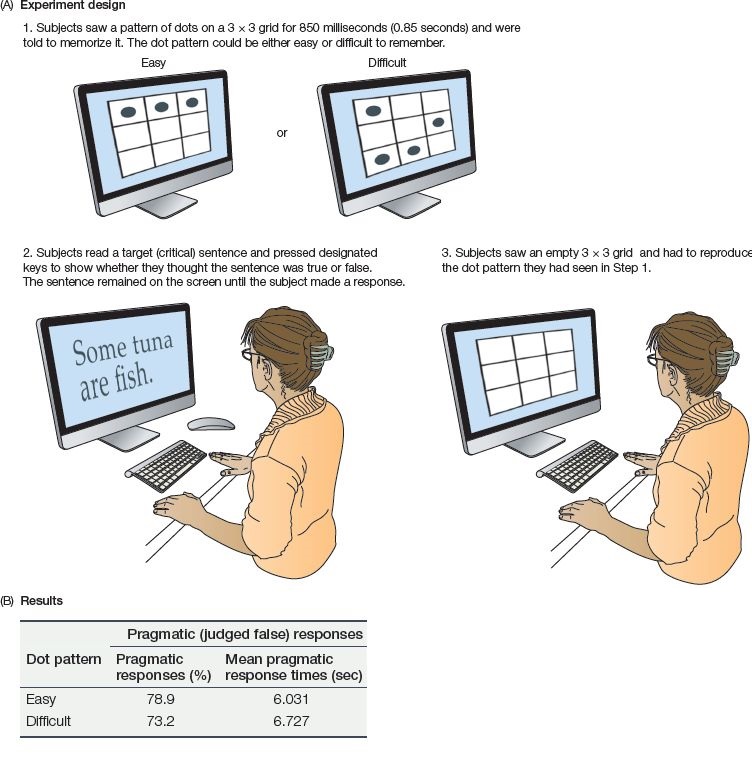

Let’s go back to the scalar implicatures that come up in sentences like Some tuna are fish. To adults, this sentence seems odd, and is usually judged as false because it’s taken to imply that not all tuna are fish. But getting to this implicature takes some work. Lew Bott and Ira Noveck (2004) devised a study in which they explained to their subjects the difference between the conventional linguistic meaning of some (the conventional meaning is often referred to as its semantic meaning) and the commonly intended meaning associated with some, which involves the scalar implicature (or its pragmatic meaning). They then instructed their subjects to push buttons to indicate whether they thought the sentence was true or false. Half of their subjects were told to respond to the semantic interpretation of the sentence (which would make it true), and the other half were told to respond to the pragmatic reading (which would make it false). Even though the pragmatic meaning seems to be the preferred one when people are left to their own devices, the researchers saw evidence that people have to work harder to get it: the subjects who were told to judge the truth or falsity of the pragmatic interpretation took more time to respond than the others, and made more errors when they had to respond under time pressure.

In a follow-up study, Wim De Neys and Walter Schaeken (2007) carried out a similar experiment with a twist: before subjects saw each target sentence, they were shown a visual arrangement of several dots in a grid and had to memorize them. They then pressed a button to indicate whether the target sentence was true or false (they were given no specific instructions about how to respond), all while holding the dot pattern in memory. After their response, they saw an empty grid and had to reproduce the dot pattern. The whole sequence was repeated until the subjects had seen all the experimental trials (see Figure 12.6). The results showed that when the dot pattern was hard to remember (thereby putting a strain on processing resources), subjects were more likely to push the “True” button for sentences like Some tuna are fish, compared with trials where the dot pattern was easy to remember. This suggests that even though the reading with the scalar inference (leading subjects to say the sentence was false) is the most natural one for hearers in normal circumstances, such a reading consumes processing resources, and is vulnerable to failure when there’s a limit on resources and/or time.

Figure 12.6 Summary of experimental design and results from De Neys and Schaeken (2007). (A) Experiment design. Steps 1–3 were repeated until the subject had been exposed to all of the critical target sentences and all of the filler sentences. Example filler sentences for this particular critical sentence might be Some birds are eagles (true) and Some pigeons are insects (false). (B) Tabularized results, showing the percentage of “pragmatic” responses (that is, the statement was judged false based on scalar inference) for the critical target sentence; and response times for the pragmatic responses, separated by whether they occurred in the easy or difficult dot pattern condition. (Adapted from De Neys & Schaeken, 2007, Exp. Psychol. 54, 128.)

One way to interpret results like these is to say that people first compute the semantic meaning of a sentence, and then they evaluate whether extra elaboration is needed, based on the standards for rational communication. This view segregates the processing of pragmatic meanings from that of semantic meanings. And it’s consistent with the idea that the two processes are different in kind, with very different characteristics and developmental paths. (This perspective might remind you of early theories of metaphor comprehension sketched out in Section 11.5, in which people were believed to consider metaphorical meanings only as a last resort, after the literal meaning of a sentence turned out to be problematic.)

But some researchers have taken a very different view, arguing that conversational inferences are deeply interwoven with other aspects of language processing at all stages of comprehension. They’ve pointed to evidence that in some cases, people seem to compute scalar inferences as quickly as they interpret the linguistic code itself (e.g., Breheny et al., 2013; Degen & Tanenhaus, 2015). And scalar inferences can even be used to resolve ambiguities or vagueness in the linguistic code (see Box 12.4).

Noah Goodman and Michael Frank (2016) invite us to think of the problem like this: Let’s take seriously the idea that the goal of language understanding is to grasp what the speaker is trying to tell us about some aspect of reality. This is at odds with the notion that pragmatic inferencing is something “extra” that’s added, like icing, once the core meaning of a sentence emerges from the mental oven. Instead, at every stage, the hearer is trying to figure out which reality the speaker is likely to have wanted to communicate, based on what he actually said (and what he chose not to say). In other words, it’s very much like the scenario depicted in the film Thirteen Days, in which Krushchev and Kennedy had to decipher each other’s probable intentions based on their choice of military maneuvers. The main difference is that in a typical conversation, we have far more information than Kennedy and Krushchev had—including what the speaker said, which turns out to be enormously helpful in constraining the possibilities. But linguistic information is not privileged in this model; it’s simply one of several sources of information that’s used to infer the speaker’s probable meaning. The hearer’s awareness of the menu of linguistic options available to the speaker, her sense of the speaker’s knowledge or linguistic competence, her own knowledge of the world, the apparent purpose of the conversational exchange, and perhaps even the social rules that govern what counts as acceptably polite speech (see Language at Large 12.2)—all of these are combined with what the speaker actually said and culminate in the hearer’s best guess at the speaker’s underlying intent.

If this depiction of how people derive inferences is right, then we’d expect some inferences to be very easy and some to be very hard. They should be easy when all of the sources of information converge upon a highly probable message. But when some of the cues conflict or don’t offer much guidance, the hearer might thrash about for a while, trying to settle on the most likely scenario the speaker wants to convey.

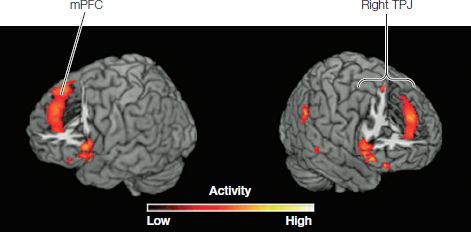

Brain networks for thinking about thoughts

If you look back to Figure 3.13, which shows the brain regions thought to be involved in language, you’ll notice that there are no areas in that diagram representing the mind-reading processes that I’ve just argued are so important for language use. Why is that?

The absence of mind-reading areas from the brain’s language networks is actually perfectly consistent with Grice’s view of how human communication works. Grice argued that the mental calculations involved in generating or interpreting implicatures aren’t specifically tied to language—rather, they’re part of a general cognitive system that allows people to reason about the intentions and mental states of others, whether or not language is involved. So, despite the fact that social reasoning is so commonly enlisted to understand what people mean when they use language, it shouldn’t surprise us to find that it’s part of a separate network.

Over the past 15 years or so, neuroscientists have made tremendous progress in finding evidence for a brain network that becomes active when people think about the thoughts of others. A variety of such tasks—both linguistic and non-linguistic—have consistently shown increased blood flow to several regions of the brain, especially the medial prefrontal cortex (mPFC) and temporoparietal junction (TPJ) (see Figure 12.8).

Figure 12.8 Brain regions for “theory of mind” (ToM). The medial prefrontal cortex and the temporoparietal junction have been found to be especially active in tasks that require subjects to think about the mental states of others.

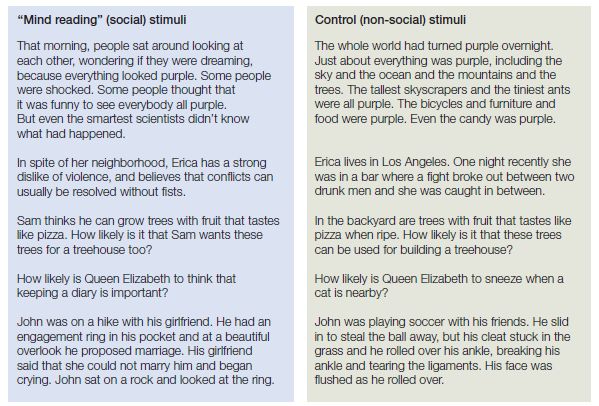

Some of the earliest evidence for brain regions devoted to mind reading came from experiments using false-belief tasks like the “Smarties” test discussed in Section 12.1. Following the terminology used by child development researchers, neuroscientists often refer to these areas as the “theory of mind (ToM) regions.” Similar patterns of brain activity have been found during many other tasks that encourage subjects to consider the mental states of others. To isolate the ToM regions, researchers try to compare two tasks that are equally complex and involve similar stimuli, but are designed so that thinking about mental states is emphasized in one set of stimuli, but not in the other. For example, Rebecca Saxe and Nancy Kanwisher (2003) had their subjects read stories like this:

A boy is making a papier-mâché project for his art class. He spends hours ripping newspaper into even strips. Then he goes out to buy flour. His mother comes home and throws all the newspaper strips away.

This story encourages readers to infer how the boy will feel about his newspaper strips being thrown out. This next story also invites an inference, but one of a physical nature rather than one involving mind reading:

A pot of water was left on low heat yesterday in case anybody wanted tea. The pot stayed on the heat all night. Nobody did drink tea, but this morning, the water was gone.