If you’re young enough to think it’s no big deal that you can speak into your smartphone and have it produce suggestions for a nearby sushi place or tell you the name of the inventor of the toilet, you may not appreciate just how hard it is to build a program that understands speech rather than typed commands. Creating machines that can understand human speech has been a long time coming, and the pursuit of this dream has required vast amounts of research money, time and ingenuity, not to mention raw computing power.

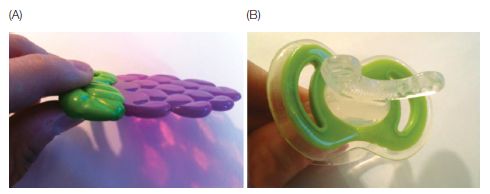

Before computers, only the simplest of “speech recognition” tricks were possible. For example, 1922 saw the release of a children’s toy called Radio Rex, a small bulldog that leaped out of his doghouse upon “hearing” his name. At least, that was the desired illusion. The toy was actually released by a spring that was activated by a 500-Hz burst of acoustic energy—which roughly corresponds to an acoustic component of the vowel in Rex’s name. Well, it does if you’re an adult male, which the toy’s inventors presumably were. This choice of default settings meant that Rex might “obey” an adult male who uttered any one of a number of words that contained the targeted vowel (e.g., red, mess, bled) while ignoring the pleas of an 8-year-old girl faithfully calling his name (though a scientifically minded child might discover that the dog would respond if she pronounced its name with a slightly different vowel so as to sound like “reeks,” to hit the 500-Hz sweet spot).

Unlike Radio Rex’s fixation on a single acoustic property, truly understanding speech depends on being able to detect and combine a number of different acoustic dimensions, which wasn’t possible until the advent of computers. In 1952, a room-sized computer named “Audrey” was able to accomplish the tremendous feat of recognizing spoken numbers from zero to nine—provided there were pauses in between and that the words were uttered by one particular speaker. The development of automatic speech recognition crawled along surprisingly slowly after that, and those of us who have been alive for more than a couple of decades can remember the deeply incompetent “assistants” that were first foisted on seething customers who tried to perform simple banking or travel-related tasks over the telephone. To me, the recent progression from systems that could understand (poorly) a handful of specific words and phrases to the compact wizardry of smartphone apps is truly remarkable, even if the apps are still a poor substitute for a cooperative, knowledgeable human being.

As money poured into companies that were building speech recognition systems, I remember having frequent conversations with speech scientists who would shake their heads pessimistically at the glowing promises being made by some of these companies. My colleagues were painfully aware of the complexities involved in even the most basic step of human speech understanding—that of chunking a stream of acoustic information into the units of language. As you’ve seen in earlier chapters, languages owe their sprawling vocabularies in part to the fact that they can combine and recombine a fairly small set of sound units in a multitude of ways to form words. Vocabularies would probably be severely restricted if each word had to be encoded and remembered as a holistic unit. But these units of sound are not physical objects; they don’t exist as things in the real world as do beads strung on a necklace. Instead, the sound units that we combine are ideas of sounds, or abstract representations that are related to certain relevant acoustic properties of speech, but in messy and complicated ways.

Automated speech recognition has turned out to be an incredibly difficult problem precisely because the relationship between these abstractions and their physical manifestations is slippery and complex. (These abstract representations—or phonemes—were discussed in detail in Chapter 4.) The problem of recognizing language’s basic units is eliminated if you’re interacting with your computing device by typing, because each symbol on the keyboard already corresponds directly to an abstract unit. The computer doesn’t have to figure out whether something is an “A” or a “T”—you’ve told it. A closer approximation of natural speech recognition would be a computer program that could decode the handwriting of any user—including handwriting that was produced while moving in a car over bumpy terrain, possibly with other people scribbling over the markings of the primary user.

This chapter explores what we know about how humans perceive speech. The core problem is how to translate speech—whose properties are as elusive and ever-changing as the coursing of a mountain stream—into a stable representation of something like sequences of individual sounds, the basic units of combination. The main thread running through the scientific literature on speech perception is the constant tension between these two levels of linguistic reality. In order to work as well as it does, speech perception needs to be stable, but flexible. Hearers need to do much more than simply hear all the information that’s “out there.” They have to be able to structure this information, at times ignoring acoustic information that’s irrelevant for identifying sound categories, but at others learning to attend to it if it becomes relevant. And they have to be able to adapt to speech as it’s spoken by different individuals with different accents under different environmental conditions.

7.1 Coping with the Variability of Sounds

Spoken language is constrained by the shapes, gestures, and movements of the tongue and mouth. As a result, when sounds are combined in speech, the result is something like this, according to linguist Charles Hockett (1955, p. 210):

Imagine a row of Easter eggs carried along a moving belt; the eggs are of various sizes, and variously colored, but not boiled. At a certain point the belt carries the row of eggs between the two rollers of a wringer, which quite effectively smash them and rub them more or less into each other. The flow of eggs before the wringer represents the series of impulses from the phoneme source; the mess that emerges from the wringer represents the output of the speech transmitter.

Hence, the problem for the hearer who is trying to identify the component sounds is a bit like this:

We have an inspector whose task it is to examine the passing mess and decide, on the basis of the broken and unbroken yolks, the variously spread out albumen, and the variously colored bits of shell, the nature of the flow of eggs which previously arrived at the wringer.

The problem of perceptual invariance

Unlike letters, which occupy their own spaces in an orderly way, sounds smear their properties all over their neighbors (though the result is perhaps not quite as messy as Hockett’s description suggests). Notice what happens, for example, when you say the words track, team, and twin. The “t” sounds are different, formed with quite different mouth shapes. In track, it sounds almost like the first sound in church; your lips spread slightly when “t” is pronounced in team, in anticipation of the following vowel; and in twin, it might be produced with rounded lips. In the same way, other sounds in these words influence their neighbors. For example, the vowels in team and twin have a nasalized twang, under the spell of the nasal consonants that follow them; it’s impossible to tell exactly where one sound begins and another one ends. This happens because of the mechanics involved in the act of speaking.

As an analogy, imagine a sort of signed language in which each “sound unit” corresponds to a gesture performed at some location on the body. For instance, “t” might be a tap on the head, “i” a closed fist bumping the left shoulder, and “n” a tap on the right hip. Most of the time spent gesturing these phonemic units would go to the transitions between them, with no clear boundaries between units. For example, as soon as the hand left the head and aimed for the left shoulder, you’d be able to distinguish that version of “t” from one that preceded, say, a tap on the chin. And you certainly wouldn’t be able to cut up and splice a videotape, substituting a tap on the head preceding a tap on the left shoulder for a tap on the head preceding a tap on the chest. The end result would be a Frankenstein-like mash. (You’ve encountered this problem before, in Chapter 4 and in Web Activity 4.4.)

The variability that comes from such coarticulation effects is hardly the only challenge for identifying specific sounds from a stream of speech. Add to this the fact that different talkers have different sizes and shapes to their mouths and vocal tracts, which leads to quite different ways of uttering the same phonemes. And add to that the fact that different talkers might have subtly different accents. When it comes down to it, it’s extremely hard to identify any particular acoustic properties that map definitively onto specific sounds. So if we assume that part of recognizing words involves recovering the individual sounds that make up those words, we’re left with the problem of explaining the phenomenon known as perceptual invariance: how is it that such variable acoustic input can be consistently mapped onto stable units of representation?

Sounds as categories

When we perceive speech, we’re doing more than just responding to actual physical sounds out in the world. Our minds impose a lot of structure on speech sounds—structure that is the result of learning. If you’ve read Chapter 4 in this book, you’re already familiar with the idea that we mentally represent sounds in terms of abstract categories. If you haven’t, you might like to refer back to Section 4.3 of this book before continuing. In any event, a brief recap may be helpful:

We mentally group clusters of similar sounds that perform the same function into categories called phonemes, much like we might group a variety of roughly similar objects into categories like chair or bottle. Each phonemic category can in turn be broken down into variants—allophones—that are understood to be part of the same abstract category. If you think of chair as analogous to a phonemic category, for example, its allophones might include armchairs and rocking chairs.

Linguists have found it useful to define the categories relevant for speech in terms of how sounds are physically articulated. Box 7.1 summarizes the articulatory features involved in producing the consonant phonemes of English. Each phoneme can be thought of as a cluster of features—for example, /p/ can be characterized as a voiceless labial stop, whereas /z/ is a voiced alveolar fricative (the slash notation here clarifies that we’re talking about the phonemic category).

There’s plenty of evidence that these mental categories play an important cognitive role (for example, Chapter 4 discussed their role in how babies learn language). In the next section, we address an important debate: Do these mental categories shape how we actually perceive the sounds of speech?

Do categories warp perception?

How is it that we accurately sort variable sounds into the correct categories? One possibility is that the mental structure we impose on speech amplifies some acoustic differences between sounds while minimizing others. The hypothesis is that we no longer interpret distinctions among sounds as gradual and continuous—in other words, our mental categories actually warp our perception of speech sounds. This could actually be a good thing, because it could allow us to ignore many sound differences that aren’t meaningful.

To get a sense of how perceptual warping might be useful in real life, it’s worth thinking about some of the many examples in which we don’t carve the world up into clear-cut categories. Consider, for example, the objects in Figure 7.2. Which of these objects are cups, and which are bowls? It’s not easy to tell, and you may find yourself disagreeing with some of your classmates about where to draw the line between the two (in fact, the line might shift depending on whether these objects are filled with coffee or soup).

Figure 7.2 Is it a cup or a bowl? The category boundary isn’t clear, as evident in these images inspired by an experiment of linguist Bill Labov (1973). In contrast, the boundary between different phonemic categories is quite clear for many consonants, as measured by some phoneme-sorting tasks. (Photograph by David McIntyre.)

Many researchers have argued that people rarely have such disagreements over consonants that hug the dividing line between two phonemic categories. For example, let’s consider the feature of voicing, which distinguishes voiced stop consonants like /b/ and /d/ from their voiceless counterparts /p/ and /t/. When we produce stop consonants like these, the airflow is completely stopped somewhere in the mouth when two articulators come together—whether two lips, or a part of the tongue and the roof of the mouth. Remember from Chapter 4 that voicing refers to when the vocal folds begin to vibrate relative to this closure and release. When vibration happens just about simultaneously with the release of the articulators (say, within about 20 milliseconds) as it does for /b/ in the word ban, we say the oral stop is a voiced one. When the vibration begins only after a longer lag (say, more than 20 milliseconds), we say that the sound is unvoiced or voiceless. This labeling is just a way of assigning discrete categories to what amounts to a continuous dimension of voice onset time (VOT), because in principle, there can be any degree of voicing lag time after the release of the articulators.

A typical English voiced sound (as in the syllable “ba”) might occur at a VOT of 0 ms, and a typical unvoiced [pʰa] sound might be at 60 ms. But your articulatory system is simply not precise enough to always pronounce sounds at the same VOT (even when you are completely sober); in any given conversation, you may well utter a voiced sound at 15 ms VOT, or an unvoiced sound at 40 ms.
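To make the labeling step concrete, here is a minimal Python sketch that sorts VOT values into the two voicing categories. It assumes a single fixed boundary of 20 ms, taken from the approximate figure in the text; the function name `label_voicing` and the constant `VOICING_BOUNDARY_MS` are hypothetical, and real boundaries shift with place of articulation and talker.

```python
# Hypothetical illustration: label a stop consonant as voiced or voiceless
# from its voice onset time (VOT), using the rough 20-ms English boundary
# mentioned in the text. Real boundaries vary with place of articulation.

VOICING_BOUNDARY_MS = 20.0  # assumption: approximate English boundary

def label_voicing(vot_ms: float, boundary_ms: float = VOICING_BOUNDARY_MS) -> str:
    """Return 'voiced' for VOTs at or below the boundary, else 'voiceless'."""
    return "voiced" if vot_ms <= boundary_ms else "voiceless"

# Typical and "sloppy" productions from the paragraph above
for vot in [0, 15, 40, 60]:
    print(f"{vot:>3} ms -> {label_voicing(vot)}")
```

Note that the sloppy 15-ms and 40-ms tokens still land in the intended categories; the hard question the rest of this section takes up is whether listeners actually perceive the continuum this sharply.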

The idea of categorical perception is that mental categories impose sharp boundaries, so that you perceive all sounds that fall within a single phoneme category as the same, even if they differ in various ways, whereas sounds that straddle phoneme category boundaries clearly sound different. This means that you’re rarely in a state of uncertainty about whether someone has said “bear” or “pear,” even if the sound that’s produced falls quite near the voiced/unvoiced boundary. Mapped onto our visual examples of cups and bowls, it would be as if, instead of gradually shading from cup to bowl, the difference between the third and fourth objects jumped out at you as much greater than the differences between the other objects. (It’s interesting to ask what might happen in signed languages, which rely on visual perception, but also create perceptual challenges due to variability; see Box 7.2.)

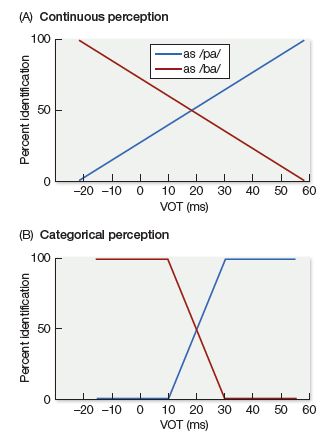

Figure 7.4 Idealized graphs representing two distinct hypothetical results from a phoneme forced-choice identification task. (A) Hypothetical data for a perfectly continuous type of perception, in which judgments about the identity of a syllable gradually slide from /ba/ to /pa/ as VOT values increase incrementally. (B) Hypothetical data for a sharply categorical type of perception, in which judgments about the syllable’s identity remain absolute until the phoneme boundary, where they abruptly shift. Many consonants that represent distinct phonemes yield results that look more like (B) than (A); however, there is variability depending on the specific task and specific sounds.

A slew of studies dating back to 1957 (in work by Alvin Liberman and his colleagues) seems to support the claim of categorical perception. One common way to test for categorical perception is to use a forced-choice identification task. The strategy is to have people listen to many examples of speech sounds and indicate which one of two categories each sound represents (for example, /pa/ versus /ba/). The speech sounds are created in a way that varies the VOT in small increments—for example, participants might hear examples of each of the two sounds at 10-ms increments, all the way from –20 ms to 60 ms. (A negative VOT value means that vocal fold vibration begins even before the release of the articulators.)

If people were paying attention to each incremental adjustment in VOT, you’d find that at the extreme ends (i.e., at –20 ms and at 60 ms), there would be tremendous agreement about whether a sound represents a /ba/ or a /pa/, as seen in Figure 7.4A. In this hypothetical figure, just about everyone agrees that the sound with the VOT at –20 ms is a /ba/, and the sound with the VOT at 60 ms is a /pa/. But, as also shown in Figure 7.4A, for each step away from –20 ms and closer to 60 ms, you see a few more people calling the sound a /pa/.

But when researchers have looked at people’s responses in forced-choice identification tasks, they’ve found a very different picture, one that looks more like the graph in Figure 7.4B. People agree pretty much unanimously that the sound is /ba/ until they get to the 20-ms VOT boundary, at which point the judgments flip abruptly. There doesn’t seem to be much mental argument going on about whether to call a sound /ba/ or /pa/. (The precise VOT boundary that separates voiced from unvoiced sounds can vary slightly, depending on the place of articulation of the sounds.) In fact, chinchillas show a remarkably similar pattern (see Box 7.3), suggesting that this way of sorting sounds is not dependent on human capacity for speech or experience with it.
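The two idealized patterns in Figure 7.4 can be sketched as identification functions over the –20 ms to 60 ms continuum described above. This is a toy model under stated assumptions: a straight-line ramp stands in for continuous perception, and a steep logistic curve centered on an assumed 20-ms boundary stands in for categorical perception. The function names and the `slope` parameter are illustrative, not taken from any published study.

```python
import math

# Hypothetical stimulus continuum: -20 ms to 60 ms in 10-ms steps (9 stimuli)
CONTINUUM_MS = list(range(-20, 61, 10))

def continuous_identification(vot_ms, lo=-20.0, hi=60.0):
    """Idealized Figure 7.4A: proportion of /pa/ responses rises linearly
    from 0 at the /ba/ end to 1 at the /pa/ end."""
    p = (vot_ms - lo) / (hi - lo)
    return min(1.0, max(0.0, p))

def categorical_identification(vot_ms, boundary_ms=20.0, slope=1.0):
    """Idealized Figure 7.4B: a steep logistic centered on an assumed
    boundary. Larger slope values make the flip more abrupt."""
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))

for vot in CONTINUUM_MS:
    print(f"{vot:>4} ms  continuous={continuous_identification(vot):.2f}  "
          f"categorical={categorical_identification(vot):.2f}")
```

Both functions agree at the extremes and give 0.5 at 20 ms; they differ in how responses change between neighboring steps, which is exactly what the forced-choice task measures.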

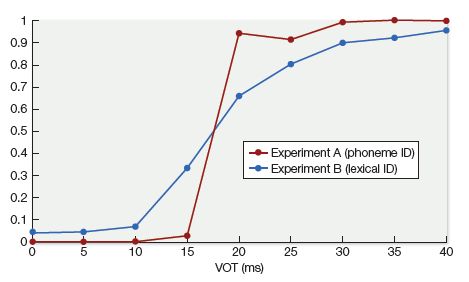

But do studies like these truly tap into the perception of speech sounds—or something else, like decisions that people make about speech sounds, which could be quite different from what they’re doing when they’re perceiving speech in real-time conversation? More recently, researchers have questioned the categorical nature of perception. One such study, led by Bob McMurray (2008), showed that people often do experience quite a bit of uncertainty about whether they’ve heard a voiced or a voiceless sound—much as you might have felt uncertain about the sorting of objects into cups and bowls in Figure 7.2—and that the degree of uncertainty depends on details of the experimental task. Figure 7.6 shows the results of two versions of their experiment. One version, Experiment A, involved a classic forced-choice identification task: participants heard nine examples of the syllables /ba/ and /pa/ evenly spaced along the VOT continuum, and pressed buttons labeled “b” or “p” to show which sound they thought they’d heard. The results (which reflect the percentage of trials on which they pressed the “p” button) show a typical categorical perception curve, with a very steep slope right around the VOT boundary. But the results look very different for Experiment B. In this version, the slope around the VOT boundary is much more graded, looking much more like the graph depicting continuous perception in Figure 7.4A, except at the very far ends of the VOT continuum. This task differed from Experiment A in an important way. Rather than categorizing the meaningless syllables “ba” or “pa,” participants heard actual words, such as “beach” or “peach,” while looking at a computer screen that showed images of both possibilities, and then had to click on the image of the word they thought they’d heard. Like the syllables in Experiment A, these words were manipulated so that the voiced/voiceless sounds at their beginnings had VOTs that were evenly spaced along the VOT continuum.

Figure 7.6 Proportion of trials identified as /p/ as a function of VOT for Experiment A, which involved a forced-choice identification task where subjects had to indicate whether they heard /ba/ or /pa/, and Experiment B, a word recognition task in which subjects had to click on the image that corresponded to the word they heard (e.g., beach or peach). (After McMurray et al., 2008, J. Exp. Psych.: Hum. Percep. Perform. 34, 1609.)
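One simple way to quantify the difference between a steep curve like Experiment A’s and a graded one like Experiment B’s is to estimate where each curve crosses 50% (the category boundary) and how sharply it rises. The sketch below does this by linear interpolation on hypothetical curves generated from a logistic function. The data are invented for illustration and are not McMurray and colleagues’ results; published analyses generally fit a smooth function to the data rather than using this shortcut. The function names are hypothetical.

```python
import math

def logistic(vot_ms, boundary_ms, slope):
    """Hypothetical identification curve: proportion of /p/ responses."""
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))

def boundary_and_steepness(vots, props):
    """Estimate the 50% crossover (category boundary) by linear
    interpolation, and the steepest rise in proportion per ms between
    adjacent continuum steps."""
    boundary = None
    steepest = 0.0
    for i in range(len(vots) - 1):
        v0, v1 = vots[i], vots[i + 1]
        p0, p1 = props[i], props[i + 1]
        steepest = max(steepest, (p1 - p0) / (v1 - v0))
        if boundary is None and p0 < 0.5 <= p1:
            boundary = v0 + (0.5 - p0) * (v1 - v0) / (p1 - p0)
    return boundary, steepest

vots = list(range(-20, 61, 10))
# Invented curves with the same boundary but different slopes
sharp = [logistic(v, 20, 1.0) for v in vots]
graded = [logistic(v, 20, 0.08) for v in vots]
print(boundary_and_steepness(vots, sharp))
print(boundary_and_steepness(vots, graded))
```

Both curves yield the same boundary, so the boundary alone cannot tell categorical from graded responding; the steepness measure is what separates them.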

McMurray and his colleagues argued that matching real words with their referents is a more realistic test of actual speech perception than making a decision about the identity of meaningless syllables—and therefore that true perception is fairly continuous, rather than warped to minimize differences within categories. But does this mean that we should dismiss the results of syllable-identification tasks as the products of fatally flawed experiments, with nothing to say about how the mind interprets speech sounds? Not necessarily. The syllable-identification task may indeed be tapping into something psychologically important that’s happening at an abstract level of mental representation, with real consequences for language function (for example, for making generalizations about how sounds pattern in your native tongue) but without necessarily warping perception itself.

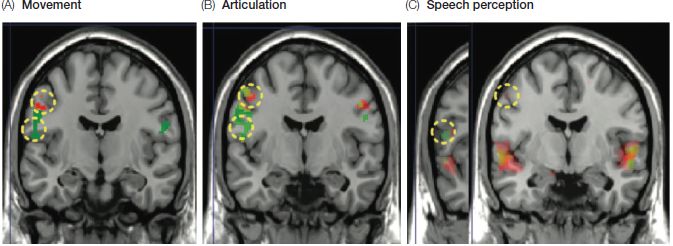

This is one of those occasions when brain-imaging experiments can be helpful in untangling the debate. As you saw in Chapter 3, language function is distributed across many different regions of the brain and organized into a number of networks that perform a variety of language-related tasks. The duties of one brain network might rely on preserving acoustic details, whereas those of another might privilege the distinctions between abstract categories while downplaying graded differences within categories.

An fMRI study led by Emily Myers (2009) suggests that this line of thinking is on the right track. The design of the study leveraged a phenomenon known as repetition suppression, which is essentially the neural equivalent of boredom caused by repetition. When the same sound (e.g., “ta”) is played over and over again, brain activity in the neural regions responsible for processing speech sounds starts to drop off; activity then picks up again if a different sound (e.g., “da”) is played. But what happens if a repeated “ta” sound is followed by another “ta” sound that has a different VOT value than the first, but is still a member of the same phonemic category? Will neural activity pick up again, just as it does with the clearly different sound “da”? If the answer is yes, this suggests that the brain region in question is sensitive to detailed within-category differences in speech sounds. If the answer is no, it suggests that this particular brain region is suppressing within-category differences and is sensitive mainly to differences across phonemic boundaries.

According to Myers and her colleagues, the answer is: both. Some areas of the brain showed an increase in activation upon hearing a different variant of the same category (compared with hearing yet another identical example of the original stimulus). In other words, these regions were sensitive to changes in VOT even when the two sounds fell within the same phonemic category. But other regions were mainly sensitive to VOT differences across phonemic boundaries, and less so to differences within categories. The locations of these regions provide a hint about how speech processing is organized. Sensitivity to detailed VOT within-category differences was mostly evident within the left superior temporal gyrus (STG), an area that is involved in the acoustic processing of speech sounds. In contrast, insensitivity to within-category differences was evident in the left inferior frontal sulcus, an area that is linked to coordinating information from multiple information sources and executing actions that meet specific goals. This suggests that, even though our perception is finely tuned to detailed differences in speech sounds, we can also hold in our minds more categorical representations and manipulate them to achieve certain language-related goals. Much of speech perception, then, seems to involve toggling back and forth between these different representations of speech sounds, constantly fine-tuning the relationship between them. This helps to explain why the evidence for categorical perception has been so variable, as discussed further in Method 7.1.
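The logic of this adaptation design can be illustrated with a toy model. The sketch below is hypothetical and is not the analysis Myers and colleagues ran: it simply contrasts an “acoustic” region (loosely STG-like) whose response recovers with any VOT change and a “category” region (loosely like the left inferior frontal sulcus) whose response recovers only when the probe crosses an assumed 20-ms boundary. Recovery values are arbitrary units, and `scale_ms` is an invented parameter.

```python
# Toy model of repetition suppression (hypothetical, arbitrary units).
# After repeated exposure to an adapter, a region's response to a probe
# "recovers" in proportion to how different the probe looks to that region.

BOUNDARY_MS = 20.0  # assumed /d/-/t/ boundary

def category(vot_ms):
    return "/d/" if vot_ms <= BOUNDARY_MS else "/t/"

def acoustic_region_recovery(adapter_ms, probe_ms, scale_ms=40.0):
    """Recovery grows with any VOT difference, within or across categories."""
    return min(1.0, abs(probe_ms - adapter_ms) / scale_ms)

def category_region_recovery(adapter_ms, probe_ms):
    """Recovery occurs only when the probe falls in a different category."""
    return 1.0 if category(probe_ms) != category(adapter_ms) else 0.0

adapter = 60  # a clear "ta"
for probe, kind in [(60, "identical"), (40, "same category"), (0, "different category")]:
    print(f"{kind:<20} acoustic={acoustic_region_recovery(adapter, probe):.2f} "
          f"category={category_region_recovery(adapter, probe):.2f}")
```

The diagnostic case is the within-category probe at 40 ms: only the acoustic-style region responds to it, which is the pattern that distinguishes the two kinds of regions in the design described above.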

7.1 Questions to Contemplate

1. Why might an automatic speech recognition (ASR) system succeed in recognizing a sound in one word, but fail to recognize the same sound in a different word; and why might it do better at recognizing speech produced by a single talker rather than many different talkers?

2. Do people typically hear the slight differences between variants of a sound (e.g., the differences between the “t” sounds in tan and Stan), or have they learned to tune them out?

3. Why do some studies suggest that perception is warped by mental categories of sounds, whereas others do not?

7.2 Integrating Multiple Cues

In the previous section, I may have given you the impression that the difference between voiced and voiceless sounds can be captured by a single acoustic cue, that of VOT. But that’s a big oversimplification. Most of the studies of categorical perception have focused on VOT because it’s easy to manipulate in the lab, but out in the real, noisy, messy world, the problem of categorizing sounds is far more complex and multidimensional. According to Leigh Lisker (1986), the distinction between the words rapid and rabid, which differ only in the voicing of the middle consonant, may be signaled by about sixteen different acoustic cues (and possibly more). In addition to VOT, these include: the duration of the vowel that precedes the consonant (e.g., the vowel in the first syllable is slightly longer for rabid than rapid); how long the lips stay closed during the “p” or “b” sound; the pitch contour before and after the lip closure; and a number of other acoustic dimensions that we won’t have time to delve into in this book. None of these cues is absolutely reliable on its own. On any given occasion, they won’t all point in the same direction—some cues may suggest a voiced consonant, whereas others might signal a voiceless one. The problem for the listener, then, is how to prioritize and integrate the various cues.

A multitude of acoustic cues

Listeners don’t appear to treat all potentially useful cues equally; instead, they go through a process of cue weighting, learning to pay attention to some cues more than others (e.g., Holt & Lotto, 2006). This is no simple process. It’s complicated by the fact that some cues can serve a number of different functions. For example, pitch can help listeners to distinguish between certain phonemes, but also to identify the age and sex of the talker, to read the emotional state of the talker, to get a sense of the intent behind an utterance, and more. And sometimes, it’s just noise in the signal. This means that listeners might need to adjust their focus on certain cues depending on what their goals are. Moreover, the connection between acoustic cues and phonemic categories will vary from one language to another, and within any given language, from one dialect to another, and even from one talker to another. Even within a single talker, it can vary depending on how quickly or informally that person is speaking.

cue weighting The process of prioritizing the acoustic cues that signal a sound distinction, such that some cues will have greater weight than others.
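Cue weighting can be pictured as a weighted sum of evidence. In this minimal sketch, each cue votes for /p/ or /b/ according to which side of an assumed boundary it falls on, and the weights decide how much each vote counts. The cue boundaries, scales, and weights are invented for illustration; only the directions (a longer preceding vowel favoring rabid, a longer lip closure favoring rapid) follow the kinds of cues described above. `CUES` and `p_voiceless` are hypothetical names.

```python
import math

# Hypothetical cue settings for a rapid/rabid-style decision. Each entry is
# (boundary value, scale, direction): direction = +1 if larger values favor
# voiceless /p/, -1 if they favor voiced /b/. All numbers are made up.
CUES = {
    "vot_ms":             (20.0, 10.0, +1),
    "preceding_vowel_ms": (150.0, 20.0, -1),  # longer vowel favors /b/
    "closure_ms":         (90.0, 15.0, +1),   # longer lip closure favors /p/
}

def p_voiceless(measurements, weights):
    """Combine cues as a weighted sum of evidence, squashed to a probability."""
    evidence = 0.0
    for name, value in measurements.items():
        boundary, scale, direction = CUES[name]
        evidence += weights[name] * direction * (value - boundary) / scale
    return 1.0 / (1.0 + math.exp(-evidence))

# A conflicting token: VOT points to /p/, the long preceding vowel to /b/
token = {"vot_ms": 30.0, "preceding_vowel_ms": 190.0, "closure_ms": 90.0}
vot_heavy = {"vot_ms": 2.0, "preceding_vowel_ms": 0.5, "closure_ms": 1.0}
vowel_heavy = {"vot_ms": 0.5, "preceding_vowel_ms": 2.0, "closure_ms": 1.0}
print(p_voiceless(token, vot_heavy))    # leans toward rapid
print(p_voiceless(token, vowel_heavy))  # leans toward rabid
```

The same acoustic token is heard differently depending only on the weights, which is why how listeners settle on those weights matters so much.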

We don’t yet have a complete picture of how listeners weight perceptual cues across the many different contexts in which they hear speech, but a number of factors likely play a role. First, some cues are inherently more informative than others and signal a category difference more reliably than other cues. This itself might depend upon the language spoken by the talker—VOT is a more reliable cue to voicing for English talkers than it is for Korean talkers, who also rely heavily on other cues, such as pitch at vowel onset and the length of closure of the lips (Schertz et al., 2015). It’s obviously to a listener’s advantage to put more weight on the reliable cues. Second, the variability of a cue matters: if the acoustic difference that signals one category over another is very small, it might not be worth paying much attention to that cue. On the other hand, if the cue is too variable, with wildly diverging values between members of the same category, then listeners may learn to disregard it. Third, the auditory system may impose certain constraints, so that some cues are simply easier to hear than others. Finally, some cues are more likely than others to be degraded by background noise, which makes them less useful in certain environments, and some cues may be absent altogether in fast or casual speech.
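The first two factors (how big the category difference on a cue is, and how variable the cue is) can be combined in one common idealization: if each category’s values on a cue are normally distributed with equal spread, the ideal linear weight for that cue is the difference between the category means divided by the variance. The sketch below assumes exactly that, with invented samples of equal size, so it is a simplification rather than a model of English, Korean, or any other language. `cue_weight` is a hypothetical name.

```python
import statistics

def cue_weight(voiced_samples, voiceless_samples):
    """Ideal linear weight for one cue under a Gaussian, equal-variance
    assumption: (difference in category means) / (pooled variance).
    Assumes both sample lists are the same size, so pooling is an average."""
    mean_diff = statistics.mean(voiceless_samples) - statistics.mean(voiced_samples)
    pooled_var = (statistics.variance(voiced_samples)
                  + statistics.variance(voiceless_samples)) / 2
    return mean_diff / pooled_var

# Invented values for three hypothetical cues
reliable = cue_weight([-5, 0, 5], [55, 60, 65])          # big gap, tight spread
small_gap = cue_weight([-5, 0, 5], [-3, 2, 7])           # tight spread, tiny gap
too_variable = cue_weight([-40, 0, 40], [20, 60, 100])   # big gap, huge spread
print(reliable, small_gap, too_variable)
```

Both a tiny category difference and a wildly variable cue end up with low weights, matching the two ways a cue can become "not worth paying attention to" in the paragraph above.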

The problem of cue integration has prompted a number of researchers to build sophisticated models to try to simulate how humans navigate this complex perceptual space (for example, see Kleinschmidt, 2018; Toscano & McMurray, 2010). Aside from figuring out the impact of the factors I’ve just mentioned, these researchers are also grappling with questions such as: How much experience is needed to settle on the optimal cue weighting? How flexible should the assignment of cue weights be in order to mirror the extent to which humans can (or fail to) reconfigure cue weights when faced with a new talker or someone who has an accent? How do higher-level predictions about which words are likely to be uttered interact with lower-level acoustic cues? To what extent do listeners adapt cue weights to take into account a talker’s speech rate?
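As one illustration of the flexibility question, a listener’s expectation about a new talker’s category can be treated as a belief that shifts with each token heard. The sketch below uses a textbook Bayesian update for the mean of a normal distribution with known variance. It is a hypothetical stand-in chosen for simplicity, not the specific model of any study cited above, and all numbers and the name `update_category_mean` are invented.

```python
def update_category_mean(prior_mean, prior_var, observations, obs_var):
    """Conjugate normal update: combine a prior belief about a category's
    mean (e.g., /p/ VOT) with tokens from a new talker, assuming the
    token-to-token variance obs_var is known. Returns (mean, variance)."""
    n = len(observations)
    precision = 1.0 / prior_var + n / obs_var
    post_mean = (prior_mean / prior_var + sum(observations) / obs_var) / precision
    return post_mean, 1.0 / precision

# Listener expects /p/ around 60 ms; a new talker produces shorter VOTs
mean, var = update_category_mean(prior_mean=60.0, prior_var=100.0,
                                 observations=[40.0, 42.0, 38.0], obs_var=100.0)
print(mean, var)
```

A smaller `prior_var` (a more entrenched expectation) makes the estimate move less per token, which is one way to represent how readily, or how reluctantly, a listener reconfigures for a new talker.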

These models have traveled a long way from the simpler picture presented at the beginning of this chapter—specifically, the idea that stable speech perception is achieved by warping perceptual space to align with category boundaries along a single, critical acoustic dimension. The idea of categorical perception was based on the idea that it could be useful to ignore certain sound differences; but when we step out of the speech lab and into the noisy and changeable world in which we perceive speech on a daily basis, it becomes clear how advantageous it is to have a perceptual system that does exactly the opposite—one that takes into account many detailed sound differences along many different dimensions, provided it can organize all this information in a way that privileges the most useful information. Given the complexity of the whole enterprise, it would not be surprising to find that there are some notable differences in how individual listeners have mentally organized speech-related categories. Section 7.3 addresses how different experiences with speech might result in different perceptual organization, and Box 7.4 explores the possible impact of experience with music.

Does music training enhance speech perception?

There may be some significant overlap between brain functions for music and language, as explored in the Digging Deeper section of Chapter 3. If that’s the case, could musical practice fine-tune certain skills that are useful for language? Speech perception is a great area in which to explore this question, given that musicians need to be sensitive to a variety of rhythmic and pitch-based cues, and they have to be able to zoom in on some cues and disregard others.

A number of studies comparing musicians to non-musicians do suggest that musicians have heightened abilities when it comes to speech perception. Musicians have been found to have an advantage in accurately perceiving speech embedded in noise (e.g., Parbery-Clark et al., 2009; Swaminathan et al., 2015)—with percussionists showing a stronger advantage than vocalists (Slater & Kraus, 2016). Musical training has also been connected with an improved ability to detect differences between sounds from a foreign language (Martínez-Montes et al., 2013) and with the detection of syllable structure and rhythmic properties of speech (Marie et al., 2011).

These results are intriguing, but on their own, they don’t justify the conclusion that musical practice causes improvements in speech perception—it’s entirely possible that people choose to take up music precisely because they have good auditory abilities to start with, abilities that may help them in both musical and linguistic domains. Researchers are on stronger footing if they can show that people’s perception of speech is better after they’ve spent some time in musical training than before they started, as found by a study in which high-schoolers who chose a music program as an option developed faster neural responses to speech after two years in their programs—in contrast with students enrolled in a fitness program, who showed no improvement (Tierney et al., 2013). But this still doesn’t completely control for aptitude or interest, and we don’t know whether putting athletes into music lessons they would otherwise avoid would result in the same effects.

The most scientifically rigorous method (though often the least practical) is to randomly assign subjects to music training or a control group. A few such studies are beginning to emerge. Jessica Slater and her colleagues (2015) studied two groups of elementary school children who were on a waiting list for a music program. Half of them were randomly assigned to the program, while the other half were given a spot the following year. After 2 years (but not after just 1 year), the first group performed better in a speech-in-noise test than they had before they started the program, and they also performed better than the second group, who at that point had participated in the program for just 1 year.

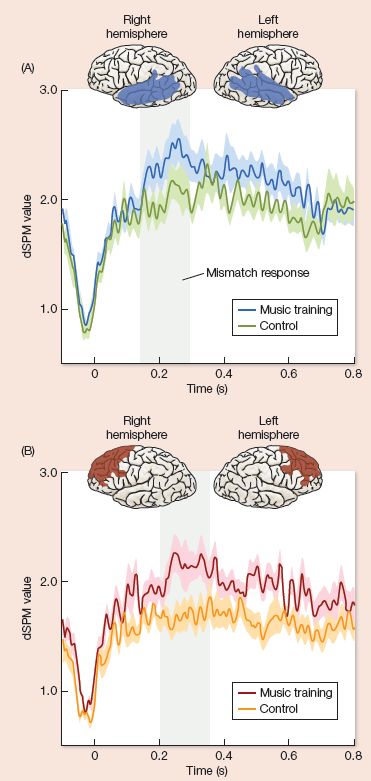

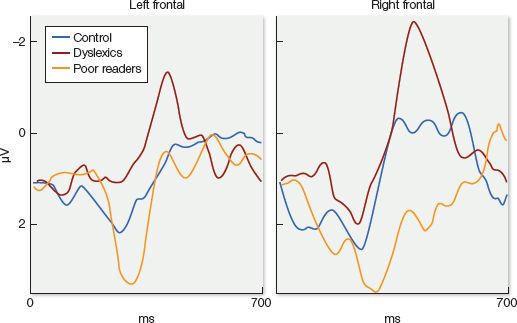

Similar logic was applied by Christina Zhao and Pat Kuhl (2016), who chose to study the effects of music training on 9-month-olds because babies of this age show a remarkable capacity for perceptual tuning. Half of the babies were randomly assigned to a 4-week, 12-session music program in which they were exposed to waltz-based musical rhythms (chosen because the ONE-two-three rhythm of the waltz is more difficult than rhythms that involve two or four equal beats) while their caregivers played clapping and tapping games with them. The other group was assigned to a program that focused on general motor skills through block-stacking and similar games. At the end of the 4 weeks, the researchers measured the infants’ brain activity using MEG techniques (which are very similar to the EEG methods you read about in Chapter 3). Babies heard sequences of speech sounds that contrasted the syllable bibi with bibbi, a subtle difference in consonant length that is phonemic in some languages but not in English. The stimuli were structured so that bibi occurred 85 percent of the time and bibbi only 15 percent of the time. This structure normally elicits a mismatch response: a spike in brain activity whenever the less frequent (and therefore less expected) sound is encountered. The babies who had been randomly assigned to the music program showed stronger mismatch responses than the control group (see Figure 7.7).
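The logic of this frequent/rare stimulus structure, and of the mismatch measure plotted in Figure 7.7, can be made concrete with a small sketch. The Python code below is a toy illustration under simplifying assumptions, not the analysis pipeline Zhao and Kuhl used. It builds a shuffled sequence in which one syllable is the frequent “standard” (85 percent of trials) and the other is the rare “deviant” (15 percent), then computes a mismatch score as the mean response amplitude on deviant trials minus the mean on standard trials. The function names, the placeholder labels, and the simulated amplitudes are all hypothetical.

```python
import random
from statistics import mean

STANDARD, DEVIANT = "standard", "deviant"  # placeholder labels for the two syllables


def oddball_sequence(n_trials, p_deviant=0.15, seed=0):
    """Return a shuffled list of trial labels with round(n_trials * p_deviant) deviants.

    Simplification: real oddball designs usually also constrain how close
    together deviants may occur; this sketch just shuffles.
    """
    rng = random.Random(seed)
    n_dev = round(n_trials * p_deviant)
    seq = [DEVIANT] * n_dev + [STANDARD] * (n_trials - n_dev)
    rng.shuffle(seq)
    return seq


def mismatch_response(amplitudes, labels):
    """Mean amplitude on deviant trials minus mean amplitude on standard trials."""
    dev = [a for a, lab in zip(amplitudes, labels) if lab == DEVIANT]
    std = [a for a, lab in zip(amplitudes, labels) if lab == STANDARD]
    return mean(dev) - mean(std)


if __name__ == "__main__":
    seq = oddball_sequence(200)
    noise = random.Random(1)
    # Simulated (made-up) amplitudes: deviants evoke a larger response on average.
    amps = [(1.0 if lab == DEVIANT else 0.2) + noise.gauss(0, 0.1) for lab in seq]
    print(seq.count(DEVIANT), "deviants out of", len(seq))
    print("mismatch score:", round(mismatch_response(amps, seq), 2))
```

A larger positive score means the rare syllable stood out more strongly against the frequent one; this is the sense in which one group can show a “stronger” mismatch response than another.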

Results like these further bolster the idea that musical skills are intertwined with speech perception. This may be welcome news for people who push back against the current trend of cutting educational costs by eliminating musical programs from school curricula. But advocates for music programs shouldn’t get too carried away with waving these results around to promote their cause. Although suggestive, the studies described here don’t tell us much about how meaningful the effects of musical training might be over the course of people’s lives. Do enhanced brain responses translate into practical advantages in processing linguistic information? And are these effects fairly permanent, or do they quickly evaporate if a child decides to drop music lessons and take up tae kwon do instead? Possibly, the best argument for music education is still that it allows students to participate in making music.

Figure 7.7 Plots of brain wave activity in the temporal (top panel) and prefrontal (bottom panel) regions for the music training and control groups. Values on the y-axis reflect the difference between average wave amplitudes for bibi when it occurred as the unexpected syllable (15 percent of the time) and average wave amplitude for bibi when it occurred as the most frequent, expected syllable (85 percent of the time). (After Zhao & Kuhl, 2016, PNAS 113, 5212.)

Context effects in speech perception

Another way to achieve stable sound representations in the face of variable acoustic cues might be to use contextual cues to infer sound categories. In doing this, you work backward, applying your knowledge of how sounds “shape-shift” in the presence of their neighbors to figure out which sounds you’re actually hearing. It turns out that a similar story is needed to account for perceptual problems other than speech. For example, you can recognize bananas as being yellow under dramatically different lighting conditions, even though more orange or green might actually reach your eyes depending on whether you’re seeing the fruit outdoors on a misty day or inside by candlelight (see Figure 7.8). Based on your previous experiences with color under different lighting conditions, you perceive bananas as having a constant color rather than changing, chameleon-like, in response to the variable lighting. Without your ability to do this, color would be pretty useless as a cue in navigating your physical environment. In the same way, knowing about how neighboring sounds influence each other might impact your perception of what you’re hearing. The sound that you end up “hearing” is the end result of combining information from the acoustic signal with information about the sound’s surrounding context.

An even more powerful way in which context might help you to identify individual sounds is knowing which word those sounds are part of. Since your knowledge of words includes knowledge of their sounds, having a sense of which word someone is trying to say should help you to infer the specific sounds you’re hearing. William Ganong (1980) first demonstrated this phenomenon, lending his name to what is now commonly known as the Ganong effect. In his experiment, subjects listened to a list of words and non-words and wrote down whether they’d heard a /d/ or a /t/ sound at the beginning of each item. The experimental items of interest contained examples of sounds that were acoustically ambiguous, between a /d/ and /t/ sound, and that appeared in word frames set up so that a sound formed a word under either the /d/ or /t/ interpretation, but not both. So, for example, the subjects might hear an ambiguous /d-t/ sound at the beginning of __ask, which makes a real word if the sound is heard as a /t/ but not as a /d/; conversely, the same /d-t/ sound would then also appear in a frame like __ash, which makes a real word with /d/ but not with /t/. What Ganong found was that people interpreted the ambiguous sound with a clear bias in favor of the real word, even though they knew the list contained many instances of non-words. That is, they reported hearing the same sound as a /d/ in __ash, but a /t/ in __ask.

Ganong effect An effect in which listeners perceive the same ambiguous sound differently depending on which word it is embedded within; for example, a sound that is ambiguous between /t/ and /d/ will be perceived as /t/ when it appears in the context of __ask but as /d/ in the context of __ash.

Figure 7.8 Color constancy under different lighting. We subjectively perceive the color of these bananas to be the same under different lighting conditions, discounting the effects of illumination. Similar mechanisms are needed to achieve a stable perception of variable speech sounds. (Photographs by David McIntyre.)

This experiment helps to explain why we’re rarely bothered by the sloppiness of pronunciation when listening to real, meaningful speech: we do a lot of perceptual “cleaning up” based on our understanding of the words being uttered. But the Ganong study also revealed limits to the influence of word-level expectations: the word frame only had an effect on sounds that straddled the category boundary between /t/ and /d/. If the sounds were good, clear examples of one acoustic category or the other, subjects correctly perceived the sound on the basis of its acoustic properties and did not report mishearing dask as task. This shows that context cues are balanced against the acoustic signal, so that when the acoustic cues are very strong, expectations from the word level aren’t strong enough to cause us to “hallucinate” a different sound. However, when the acoustic evidence is murky, word-level expectations can lead to pretty flagrant auditory illusions. One such illusion is known as the phoneme restoration effect, first discovered by Richard Warren (1970). In these cases, a speech sound is spliced out (for example, the /s/ in legislature is removed) and a non-speech sound, such as a cough, is pasted in its place. The resulting illusion causes people to “hear” the /s/ sound as if it had never been taken out, along with the coughing sound.
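One way to picture this balance between acoustic and word-level evidence is to treat each source as odds and multiply them, as in Bayesian cue combination. The text does not commit to this particular model, so the Python sketch below should be read as an illustrative assumption. Acoustic evidence for /t/ is modeled as a logistic function of voice onset time (VOT), a major acoustic cue distinguishing /t/ from /d/ in English, and the word frame supplies a prior (favoring /t/ in __ask and /d/ in __ash). The boundary, slope, and prior values are hypothetical.

```python
import math


def acoustic_p_t(vot_ms, boundary_ms=35.0, slope=0.3):
    """Probability of /t/ from acoustics alone, as a logistic function of VOT.

    boundary_ms and slope are hypothetical values chosen for illustration.
    """
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))


def perceived_p_t(vot_ms, lexical_prior_t):
    """Combine acoustic evidence with a word-level prior for /t/.

    Posterior odds = acoustic odds * prior odds, assuming the acoustic
    probability was computed under a neutral (50/50) prior.
    """
    a = acoustic_p_t(vot_ms)
    num = a * lexical_prior_t
    return num / (num + (1.0 - a) * (1.0 - lexical_prior_t))


if __name__ == "__main__":
    for vot in (0, 35, 80):  # clear /d/, ambiguous, clear /t/
        ask = perceived_p_t(vot, lexical_prior_t=0.8)  # frame __ask favors /t/
        ash = perceived_p_t(vot, lexical_prior_t=0.2)  # frame __ash favors /d/
        print(f"VOT {vot:>2} ms: P(/t/) in __ask = {ask:.3f}, in __ash = {ash:.3f}")
```

In this toy model the ambiguous token is pulled toward whichever sound makes a real word, while clear tokens at either end of the continuum barely move, which mirrors Ganong’s finding that word frames mattered only near the category boundary.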

phoneme restoration effect An effect in which a non-speech sound that shares certain acoustic properties with a speech sound is heard as both a non-speech and speech sound, when embedded within a word that leads to a strong expectation for that particular speech sound.

The phoneme restoration effect In this example, you’ll hear an audio clip illustrating the phoneme restoration effect, in which knowledge of a word allows the hearer to “fill in” a missing sound in the speech stream.

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-7-web-activity-4

Integrating cues from other perceptual domains

You may have noticed, when trying to talk to someone at a loud party, that it’s much easier to have a conversation if you’re standing face to face and can see each other’s face, lips, and tongue. This suggests that, even without special training in lip reading, your knowledge of how sounds are produced in the mouth can help you interpret the acoustic input.

There is, in fact, very clear evidence that visual information about a sound’s articulation melds together with acoustic cues to affect the interpretation of speech. This integration becomes most obvious when the two sources of information clash, pushing the system into an interesting auditory illusion known as the McGurk effect. When people see a video of a person uttering the syllable ga, but the video is accompanied by an audio recording of the syllable ba, there’s a tendency to split the difference and perceive it as the syllable da, a sound that is produced somewhere between ba, which occurs at the front of the mouth, and ga, which is pronounced at the back, toward the throat. This finding is a nice, sturdy experimental effect. It can be seen even when subjects know about and anticipate the effect; it occurs with either words or non-words (Dekle et al., 1992); and it even occurs when blindfolded subjects feel a person’s lips moving to say ba while hearing recordings of the syllable ga (Fowler & Dekle, 1991).

McGurk effect An illusion in which the mismatch between auditory information and visual information pertaining to a sound’s articulation results in altered perception of that sound; for example, when people hear an audio recording of a person uttering the syllable ba while viewing a video of the speaker uttering ga, they often perceive the syllable as da.

The McGurk effect offers yet more strong evidence that there is more to our interpretation of speech sounds than merely processing the acoustic signal. What we ultimately “hear” is the culmination of many different sources of information and the computations we’ve performed over them. In fact, the abstract thing that we “hear” may actually be quite separate from the sensory inputs that feed into it, as suggested by an intriguing study by Uri Hasson and colleagues (2007). Their study relied on the repetition suppression phenomenon you read about in Section 7.1, in which neural activity in relevant brain regions becomes muted after multiple repetitions of the same stimuli. (In that section, you read about a study by Emily Myers and her colleagues, who found that certain neural regions responded to abstract rather than acoustic representations of sounds, treating variants of the same phonemic category as the “same” even though they were acoustically different.) Hasson and colleagues used repetition suppression to examine whether some brain regions equate a “ta” percept that is based on a McGurk illusion—that is, based on a mismatch between an auditory “pa” and a visual “ka”—with a “ta” percept that results from accurately perceiving an auditory “ta” with visual cues aligned, that is, showing the articulation of “ta.” In other words, are some areas of the brain so convinced by the McGurk illusion that they ignore the auditory and visual sensory information that jointly lead to it?

The McGurk effect In this exercise, you’ll see a demonstration of the McGurk effect, in which the perceptual system is forced to resolve conflicting cues coming from the auditory and visual streams.

https://oup-arc.com/access/content/sedivy-2e-student-resources/sedivy2e-chapter-7-web-activity-5

They found such an effect in two brain regions, the left inferior frontal gyrus (IFG) and the left planum polare (PP). (Note that the left IFG is also the area in which Myers and her colleagues found evidence for abstract sound representations; the left PP is known to be involved in auditory processing.) In contrast, a distinct area—the transverse temporal gyrus (TTG), which processes detailed acoustic cues—showed repetition suppression only when the exact auditory input was repeated, regardless of whether the perceived sound was the same or not. That is, in the TTG, repetition suppression was found when auditory “pa” combined with visual “pa” were followed by auditory “pa” combined with visual “ka,” even though the second instance was perceived by the participants as “ta” and not “pa.”

7.2 Questions to Contemplate

1. What factors might shift which acoustic cues a listener pays most attention to when identifying speech sounds?

2. In addition to mapping the appropriately weighted acoustic cues onto speech sounds, what other knowledge would you need to build into an automatic speech recognition (ASR) system in order to accurately mimic speech perception as it occurs in humans?

7.3 Adapting to a Variety of Talkers

As you’ve seen throughout this chapter, sound categories aren’t neatly carved at the joints. They can’t be unambiguously located at specific points along a single acoustic dimension. Instead, they emerge out of an assortment of imperfect, partly overlapping, sometimes contradictory acoustic cues melded together with knowledge from various other sources. It’s a miracle we can get our smartphones to understand us in the best of circumstances.

The picture we’ve painted is still too simple, though. There’s a whole other layer of complexity we need to add: systematic variation across speakers. What makes this source of variation so interesting is that it is in fact systematic. Differences across talkers don’t just add random noise to the acoustic signal. Instead, the variation is conditioned by certain variables; sometimes it’s the unique voice and speaking style of a specific person, but other predictable sources of variation include the age and gender of the talker (which reflect physical differences in the talker’s vocal tract), the talker’s native language, the city or area they live in, or even (to some extent) their political orientation (see Box 7.5). As listeners, we can identify these sources of variation: we can recognize the voices of individual people, guess their mother tongue or the place they grew up, and infer their age and gender. But can we also use this information to solve the problem of speech perception? That is, can we use our knowledge about the talker—or about groups of similar talkers—to more confidently map certain acoustic cues onto sound categories?

Travel to a non-English-speaking country can result in some unsettling cultural experiences, even if you spend much of your time watching TV in your hotel room. It may not have occurred to you to wonder what Captain Kirk would sound like in Slovakian, but—there it is. Such late-night viewing of dubbed programs often arouses my scientific curiosity: How do Slovakian viewers cope with the fact that when they hear Captain Kirk saying the Slovakian version of “Beam me up, Scotty,” his English-moving mouth appears to be saying something that (to them) means “I’d drink some coffee.” Do they listen to Star Trek episodes at a higher volume than their American counterparts to compensate for the fact that they can’t trust the information in the visual channel? Do they learn to re-weight their cues when watching TV to suppress the misleading visual information? If you were to administer a test as the credits were rolling, would you find a temporarily diminished McGurk effect among the viewers of dubbed programs?

While the citizens of many countries are forced to listen to bad dubbing of foreign shows (or read subtitles), Germany takes its dubbing very seriously. According to the Goethe Institute, dubbing became a major enterprise in Germany after World War II, when almost all films screened in German cinemas were foreign (mainly American, French, or British) at a time when the population had negative reactions to hearing these languages, given that they represented the native tongues of their recent foes. To make these films more palatable, they were dubbed into German, giving rise to a highly developed industry that is now a source of national pride. The ultimate goal for dubbing directors and actors is to produce a version of a film or program that does not appear to be dubbed. Doing so is incredibly difficult. As evident from psycholinguistic experiments, we naturally integrate visual information about how a sound is articulated with the incoming acoustic information, which is why watching most dubbed programs feels so, well, unnatural. This imposes a stringent condition on dubbing that translators of print never have to worry about: how to match up not just the subtle meaning of a translation with its original, but also the articulatory properties of the sounds involved. Very often, the two will clash, leading to difficult choices. For example, suppose an English-speaking character has just uttered the word “you” in a line of dialogue. The German scriptwriter has two possible translation options: the informal du, which is pronounced in a very similar way to “you,” and the formal Sie, in which the vowel requires spreading the lips rather than rounding them, a visually obvious clash. But what if the character is speaking to a high-status person he’s just met? A German speaker would never say “du” in this situation.

While it’s very hard to achieve dubbing perfection to the point that the dubbing goes unnoticed, it’s possible, with some effort, to achieve impressive results. This is because, while visual information about pronunciation clearly plays a role in the understanding of speech, its role is quite incomplete. Subtle tongue gestures are often only partially visible behind the teeth and lips; for example, try visually distinguishing the syllables sa, ta, da, and na. And a number of important sound contrasts are not visible on the face at all, such as voicing contrasts, which depend on the vibration of the vocal folds tucked away from view. This gives dubbing artists a fair bit of room in which to maneuver. While this is welcome news for the dubbing industry, it also makes it apparent why “lip-reading” as practiced by people who are deaf is more like “lip-guessing.” The information that is visually available is far less detailed and constraining than the auditory information, forcing the lip-reader to rely heavily on the context, their knowledge base, and lots of fast guessing to fill in the gaps. It’s an uncertain and exhausting process, typically conducted in a non-native language. If you’re a hearing person, this might be a useful analogy: take some ASL courses, then try understanding what someone is signing based on a video in which the hands are blurred out much of the time.

The challenges of lip-reading apparently provided the inspiration for the humorous “Bad Lip Reading” series of videos on YouTube. In an interview with Rolling Stone magazine, the anonymous creator of these videos revealed that his fascination with the topic began when he observed his mother learning to lip-read after losing her hearing in her 40s. The videos cleverly exploit the ample gap between visual and auditory information. By taking footage of TV shows, sports events, or political news and dubbing absurd words over the lip movements of the speakers or singers, the creator treats his public to comedic gems, such as Presidents Trump and Obama exchanging insults during the Trump inauguration of 2017, or a deeply odd episode of the hit series Stranger Things in which Mike and Nancy’s prim mother announces perkily over dinner, “I have a lovely tattoo.”

Have you ever made a snap judgment about someone’s political views based on their clothing (tailored suit, or t-shirt and cargo pants) or their drink order (whatever’s on tap, or 12-year-old Scotch)? It’s no secret among ethnographers that people often tailor their appearance and consumption habits in a way that signals their identification with a certain social group or ideology. The same seems to be true of accents.

The connection between social identity and accent was first noticed by linguist Bill Labov (1972). While visiting the island of Martha’s Vineyard in Massachusetts, Labov noticed that many of the islanders had a unique way of saying words like “rice” and “sight”: they pronounced them higher in the mouth, not quite “roice” and “soight,” but somewhat in that direction. But locals varied quite a bit in terms of how they pronounced their vowels, so Labov set about making painstaking recordings of people from all over the island. He found that the raised “ay” sound was more concentrated in some groups than in others and identified a profile of the typical “ay” raiser. Raising was most prevalent in the fishing community of Chilmark, particularly among fishermen, many of whom were keen on preserving a fading way of life. They looked askance at the “summer people” and newcomers who traipsed around the island as if they owned it. When Labov asked people directly about how proud they felt of their local heritage, and how resentful they were of summer visitors like himself, he found that people who most valued the traditional island lifestyle were the ones who raised their vowels the most. High schoolers who planned to come back to the island after going to college raised their vowels more than those who thought they would build a life away from the island. Most residents of Martha’s Vineyard were not consciously aware that they produced different vowels than the mainlanders, even though they had a general sense that their accent was different from the mainland accent. They subconsciously used their vowels as a subtle badge of identity.

More recently, Labov (2010) has tracked a linguistic change that’s occurring in the northern region of the U.S. clustering around the Great Lakes. This dialect region, called the Inland North, runs from just west of Albany to Milwaukee, loops down to St. Louis, and traces a line to the south of Chicago, Toledo, and Cleveland. Thirty-four million speakers in this region are in the midst of a modern-day rearrangement of their vowel system. Labov thinks it started in the early 1800s, when the linguistic ancestors of this new dialect began to use a distinct pronunciation for the vowel in “man,” such that it leaned toward “mee-an.” This eventually triggered a free-for-all game of musical chairs involving vowels. The empty slot left by this first vowel shift was eventually filled by the “o” sound, so that “pod” came to be pronounced like “pad” used to be; then “desk” ended up being pronounced like the former “dusk,” “head” like “had,” “bus” like “boss,” and “bit” like erstwhile “bet.”

Genuine misunderstandings arise when speakers of this dialect encounter listeners who are not familiar with it. Labov describes the following situation, reconstructed from an incident reported in the Philadelphia Inquirer:

Gas station manager: It looks like a bomb on my bathroom floor.

Dispatcher: I’m going to get somebody. (That somebody included the fire department.)

Manager: The fire department?

Dispatcher: Well yes, that’s standard procedure on a bomb call.

Manager: Oh no, ma’am, I wouldn’t be anywhere near a bomb. I said I have a bum on the bathroom floor.

Eight firefighters, three sheriff’s deputies, and the York County emergency preparedness director showed up at the gas station to escort the homeless transient out.

One of the puzzles about this vowel shift is why it has spread through an area of 88,000 square miles only to stop cold south of Cleveland and west of Milwaukee. Labov points out that the residents of the Inland North have long-standing differences with their neighbors to the south, who speak what’s known as the Midland dialect. The two groups originated from distinct groups of settlers; the Inland Northerners migrated west from New England, whereas the Midlanders originated in Pennsylvania via the Appalachian region. Historically, the two settlement streams have held sharply diverging political views and voting habits, with the Northerners generally being more liberal. Labov suggests that it’s these deep-seated political disagreements that create an invisible borderline barring the encroachment of Northern Cities Vowels. When he looked at the relationship between voting patterns by county over the three previous presidential elections and the degree to which speakers in these counties shifted their vowels, he found a tight correlation between the two.

Do vowel-shifters sound more liberal to modern ears? Yes, at least to some extent. Labov had students in Bloomington, Indiana, listen to a vowel-shifting speaker from Detroit and a non-vowel-shifter from Indianapolis. The students rated the two speakers as equal in probable intelligence, education, and trustworthiness. But they did think the vowel-shifting speaker was more likely to be in favor of gun control and affirmative action.

Are we moving toward an era where Americans will speak discernibly “red” or “blue” accents? It’s hard to say, but linguists like Bill Labov have shown that American dialects have been pulling apart from each other, with different dialectal regions diverging over time rather than converging—yet one more polarizing development that is making conversations between fellow Americans increasingly challenging.

Evidence for adaptation

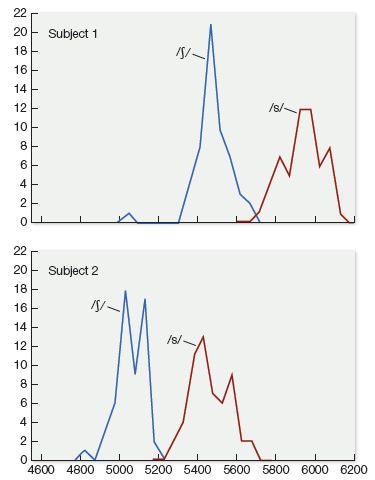

Variation across talkers is significant enough to pose a real challenge for speech perception. For example, Figure 7.9 shows the distribution of /s/ and /ʃ/ (as in the words sip versus ship) along one of the important acoustic cues that distinguish these two sounds, for two different talkers. As you can see, the two talkers’ distributions are quite different from each other, with one talker’s /ʃ/ sounds overlapping heavily with the other talker’s /s/ sounds. How do listeners cope with such variation? Do they base their sound categories on the average distributions of all the talkers they’ve ever heard? If so, talkers who are outliers might be very hard to understand. Or do listeners learn the various speech quirks of individual talkers and somehow adjust for them?

Figure 7.9 Distribution of frication frequency centroids, an important cue for distinguishing between /s/ and /ʃ/, from two different talkers; note the considerable overlap between the sound /ʃ/ for subject ACY and the sound /s/ for subject IAF. (From Newman et al., 2001, J. Acoust. Soc. Am. 109, 1181.)

People do seem to tune their perception to individual talkers. This was observed by speech scientists Lynne Nygaard and David Pisoni (1998), who trained listeners on sentences spoken by 10 different talkers over a period of 3 days, encouraging listeners to learn to label the individual voices by their assigned names. At the end of the 3-day period, some listeners heard and transcribed new sentences, recorded by the same 10 talkers and mixed with white noise, while other listeners were tested on the same sentences (also mixed with noise) spoken by a completely new set of talkers. Those who transcribed the speech of the familiar talkers performed better than those tested on the new, unfamiliar voices, and the more noise was mixed into the signal, the more listeners benefited from being familiar with the voices.

These results show that speech perception gets easier as a result of experience with individual voices, especially under challenging listening conditions, suggesting that you’ll have an easier time conversing at a loud party with someone you know than someone you’ve just met. But Nygaard and Pisoni’s results don’t tell us how or why. Does familiarity help simply because it sharpens our expectations of the talker’s general voice characteristics (such as pitch range, intonation patterns, and the like), thereby reducing the overall mental load of processing these cues? Or does it have a much more precise effect, helping us to tune sound categories based on that specific talker’s unique way of pronouncing them?

Researchers at Work 7.1 describes an experiment by Tanya Kraljic and Arthur Samuel (2007) that was designed to test this question more precisely. This study showed that listeners were able to learn to categorize an identical ambiguous sound (halfway between /s/ and /ʃ/) differently, based on their previous experience with a talker’s pronunciation of these categories. What’s more, this type of adaptation is no fleeting thing. Frank Eisner and James McQueen (2008) found that it persisted for at least 12 hours after listeners were trained on a mere 4-minute sample of speech.

The same mechanism of tuning specific sound categories seems to drive adaptation to unfamiliar accents, even though the speech of someone who speaks English with a marked foreign accent may reflect a number of very deep differences in the organization of sound categories, and not just one or two noticeable quirks. In a study by Eva Reinisch and Lori Holt (2014), listeners heard training words in which sounds that were ambiguous between /s/ and /f/ were spliced into the speech of a talker who had a noticeable Dutch accent. (As in other similar experiments, such as the one by Kraljic and Samuel, these ambiguous sounds were spliced into words that identified the sound either as /s/, as in harness, or as /f/, as in belief.) Listeners adjusted their categories based on the speech sample they’d heard and, moreover, carried these adjustments over into their perception of speech produced by a different talker who also had a Dutch accent. So, despite the fact that the Dutch speakers differed from the average English speaker on many dimensions, listeners were able to zero in on this systematic difference and generalize across accented talkers. Note, however, that the ambiguous /s/-/f/ sound was an artificial manipulation in this experiment: it doesn’t reflect an actual feature of a Dutch accent, but it allowed the researchers to extend a finding that was well established in studies of adaptation to individual talkers to the broader context of adaptation to foreign accents. Reassuringly, similar effects of listener adaptation have been found for real features of accents produced naturally by speakers, such as the tendency of people who speak English with a Dutch or Mandarin accent to pronounce voiced sounds at the ends of words as voiceless, so that seed sounds similar to seat (Eisner et al., 2013; Xie et al., 2017).

Source: Experiment 2 in T. Kraljic & A. G. Samuel (2007). Perceptual adjustments to multiple speakers. Journal of Memory and Language 56, 1–15.

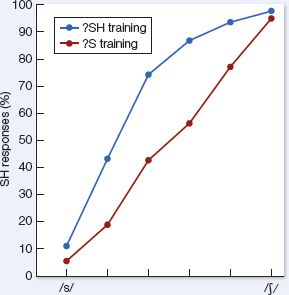

Question: Do listeners learn to adjust phonemic boundaries in a way that takes into account the pronunciations of individual talkers?

Hypothesis: Participants who are exposed to an ambiguous sound halfway between /s/ and /ʃ/ that functions as /s/ for one talker but /ʃ/ for a second talker will shift their phonemic boundaries accordingly, so that ambiguous sounds are more likely to be categorized as /s/ for the first talker but /ʃ/ for the second.

Test: Forty-eight adults with normal hearing who were native speakers of English participated in a two-phase experiment. In the exposure phase, participants listened to 100 words and 100 non-words and, for each item, were asked to decide whether it was a real word or not by pressing one of two buttons. Among the real words, 20 key items contained the /s/ phoneme (e.g., hallucinate) and 20 contained the /ʃ/ phoneme (e.g., negotiate); the other words were distractors. Half of the items, including the key items, were spoken by a male voice and the other half by a female voice, so that each participant heard 10 /s/ words and 10 /ʃ/ words spoken by the male and 10 /s/ words and 10 /ʃ/ words spoken by the female. Participants were randomly assigned to one of two groups. For one group, all the /s/ words spoken by the male contained a clear, typical example of /s/, whereas all the /ʃ/ words contained a spliced-in ambiguous sound halfway between /s/ and /ʃ/. Conversely, the female voice produced all of the /s/ words with the (same) ambiguous sound, but all of the /ʃ/ words with a clear, typical example of /ʃ/. The situation was reversed for the second group of participants, so that the male voice uttered the ambiguous sound only in the /s/ words (with typical /ʃ/ sounds in the /ʃ/ words), whereas the female voice uttered the ambiguous sound only in the /ʃ/ words (with typical /s/ sounds in the /s/ words).

If listeners adapt to individual talkers’ pronunciations rather than simply averaging acoustic information across talkers, then they should carve up the sound space differently for each voice. This was tested during the categorization phase: all participants heard both the male and the female voice produce six stimulus items on a continuum from /asi/ to /aʃi/, with the items representing equal steps along this continuum. They had to indicate whether they thought each item contained the sound /s/ (by pressing a button labeled “S”) or the /ʃ/ sound (by pressing a button labeled “SH”). The order of the male and female voices was counterbalanced across participants.

Results: Performance on the categorization task depended on what participants heard in the exposure phase. Figure 7.10 shows the percentage of categorizations as /ʃ/, with the data collapsed across male and female voices and exposure groups. The graph shows that participants were more likely to categorize an intermediate sound as /ʃ/ for a particular voice if the ambiguous sound had appeared in /ʃ/ words uttered by that same talker. For example, those who had heard the female voice utter the ambiguous sound in /ʃ/ words (but not in /s/ words) during the exposure phase were more likely to say that the intermediate examples of the stimuli were /ʃ/ sounds than those who had heard the female voice utter the ambiguous sound in /s/ words. This occurred despite the fact that the participants had heard an equal number of ambiguous sounds in /s/ words uttered by the male voice, a fact that they appeared to disregard when making categorization judgments about the female voice.
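One common way to describe categorization data like these is a logistic psychometric function, in which the category boundary is the point on the continuum where “S” and “SH” responses are equally likely. The Python sketch below is only an illustration: the boundary and slope values are invented rather than taken from Kraljic and Samuel’s analysis, and the function name `p_sh` is hypothetical. It shows what a talker-specific boundary shift looks like: the same intermediate step draws more “SH” responses when a talker’s boundary sits lower on the continuum.

```python
import math

# Hypothetical illustration: these boundary and slope values are invented,
# not estimates from Kraljic and Samuel (2007).
STEPS = range(1, 7)  # six equal steps, from /asi/ (step 1) to /aʃi/ (step 6)


def p_sh(step: float, boundary: float, slope: float = 2.0) -> float:
    """Logistic probability of an "SH" response at a given continuum step.

    `boundary` is the step at which "S" and "SH" are equally likely;
    `slope` controls how sharply responses switch around the boundary.
    """
    return 1.0 / (1.0 + math.exp(-slope * (step - boundary)))


# Assumed talker-specific boundaries. A lower boundary means more of the
# continuum is heard as /ʃ/ for that talker.
boundaries = {
    "?SH training": 3.0,  # ambiguous sound heard in this talker's /ʃ/ words
    "?S training": 4.0,   # ambiguous sound heard in this talker's /s/ words
}

for condition, b in boundaries.items():
    curve = [round(100 * p_sh(s, b)) for s in STEPS]
    print(f"{condition:13s} % SH by step: {curve}")
```

In this sketch the endpoints stay near 0% and 100% in both conditions; the talker-specific difference shows up mainly at the ambiguous middle steps, which is the qualitative pattern the Results describe.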

Conclusions: Under at least some conditions, adult listeners can shift a phonemic boundary to take into account the speech patterns of individual speakers, even when presented with conflicting speech patterns by a different talker. When native speakers of English heard a specific talker produce a sound that was ambiguous between /s/ and /ʃ/ in words that revealed the sound’s phonemic function, their categorization of sounds along the /s/-/ʃ/ continuum reflected a bias toward the phonemic structure apparent for that talker, even though they heard words from a different talker that showed exactly the opposite bias. This adaptation occurred after only ten words from that talker in which the phonemic status of the ambiguous sound was clear.

Questions for further research

1. Would similar adaptation effects be found for other phonemic contrasts? (In fact, Kraljic and Samuel conducted a version of this experiment, their Experiment 1, reported in the same article, which did not show talker-specific adaptation for the /t/-/d/ contrast.)

2. Would listeners show evidence of adapting to specific talkers if their identity were signaled in some way other than gender—for example, for two male voices of different ages, or two female voices with different accents?

3. Does this adaptation effect depend on having very extensive experience with a language? For example, would it occur if, instead of testing native English listeners, we tested listeners of other languages who had learned English fairly recently?

Figure 7.10 The percentage of “SH” categorizations of stimuli along the /s/-/ʃ/ continuum for words produced by the same voice in the exposure phase. For example, for judgments made about the male voice, the cases labeled as “?SH training” refer to judgments made by participants who heard the male voice produce the ambiguous sound in /ʃ/ words, but not in /s/ words, whereas the “?S training” cases refer to judgments made by participants who heard the male voice produce the ambiguous sound in /s/ words. The data here are collapsed across categorization judgments for both male and female voices. (From Kraljic & Samuel, 2007, J. Mem. Lang. 56, 1.)

Relationships between talker variables and acoustic cues

To recap the story so far, this chapter began by highlighting the fact that we carve a graded, multidimensional sound space into a small number of sound categories. At some level, we treat variable instances of the same category as equivalent, blurring their acoustic details. But some portion of the perceptual system remains sensitive to these acoustic details. Without this sensitivity, we probably couldn’t make subtle adjustments to our sound categories based on exposure to individual talkers or groups of talkers whose speech varies systematically.

Researchers now have solid evidence that such adjustments can be made, in some cases very quickly and with lasting effect. But they don’t yet have a full picture of the whole range of possible adjustments. Matters are complicated by the fact that listeners don’t always respond to talker-specific manipulations in the lab by shifting their categories in a way that mirrors the experimental stimuli. For example, Kraljic and Samuel (2007) found that although listeners adjusted the /s/-/ʃ/ border in a way that reflected the speech of individual talkers, they did not make talker-specific adjustments to the VOT boundary between /d/ and /t/. Similarly, Reinisch and Holt (2014) found that listeners extended their new categories from one Dutch-accented female speaker to another female speaker, but did not generalize when the new talker was a male who spoke with a Dutch accent. Why do some adaptations occur more readily than others?

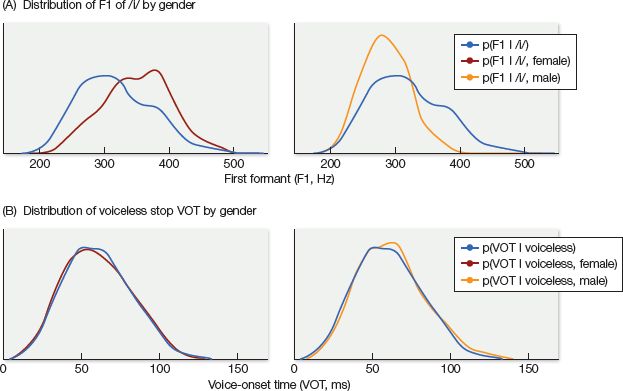

Some researchers argue that the answer to this question lies, at least in part, in the structure that is intrinsic to speech itself. That is, some acoustic cues are very closely tied to the identities of speakers, whereas others are not. Figure 7.11 shows the distributions of two very different cues: one that’s relevant for identifying the vowel /i/ as in beet, and another (VOT) that’s used to identify voiceless stops like /t/. The distribution averaged across all talkers is contrasted with the distributions for male and female talkers. As you can see, the cue in Figure 7.11A is distributed very differently for male versus female speakers; in this case, knowing the gender of the speaker would be very useful for interpreting the cue, and a listener would do well to be cautious about generalizing category structure from a male speaker to a female, or vice versa. In contrast, the VOT cue depicted in Figure 7.11B does not vary much by gender at all, so it wouldn’t be especially useful for listeners to link this cue with gender. (In fact, for native speakers of English, VOT turns out to depend more on how fast someone is speaking than on who they are.) So, if listeners are optimally sensitive to the structure inherent in speech, they should pay attention to certain aspects of a talker’s identity when interpreting some cues, but disregard talker variables for other cues.

Figure 7.11 (A) Gender-specific distributions (red and yellow lines) of the first vowel formant (F1) for the vowel /i/ are strikingly different from overall average distributions (blue lines). (B) Gender-specific distributions of VOT for voiceless stops diverge very little from the overall distribution. This makes gender informative for interpreting vowel formants, but not for interpreting VOT. (From Kleinschmidt, 2018, PsyArXiv.com/a4ktn/.)
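The captions for Figures 7.11 and 7.12 describe Kleinschmidt’s (2018) analysis in terms of how far group-specific distributions diverge from the overall distribution. The Python sketch below shows one standard way to put a number on that idea, the Kullback-Leibler (KL) divergence, under assumptions of our own: each gender’s cue values are treated as normally distributed, the overall distribution is approximated by a single normal with the same mean and variance, and all means and standard deviations are invented. It is not a reconstruction of Kleinschmidt’s exact analysis, and the helper names are hypothetical.

```python
import math


def kl_normal(mu_p, sd_p, mu_q, sd_q):
    """KL(P || Q) in nats for two univariate normal distributions P and Q."""
    return (math.log(sd_q / sd_p)
            + (sd_p ** 2 + (mu_p - mu_q) ** 2) / (2 * sd_q ** 2)
            - 0.5)


def overall_normal(groups):
    """Moment-matched normal approximation to an equal-weight mix of groups.

    The true mixture is not normal; matching its mean and variance is a
    simplifying assumption made for this sketch.
    """
    n = len(groups)
    mean = sum(mu for mu, _ in groups) / n
    second_moment = sum(sd ** 2 + mu ** 2 for mu, sd in groups) / n
    return mean, math.sqrt(second_moment - mean ** 2)


def average_divergence(by_group):
    """Average KL divergence of each group's distribution from the overall one."""
    groups = list(by_group.values())
    mu_all, sd_all = overall_normal(groups)
    return sum(kl_normal(mu, sd, mu_all, sd_all) for mu, sd in groups) / len(groups)


# Invented (mean, SD) values, NOT the data behind Figure 7.11; they only
# mimic its qualitative pattern.
cues = {
    "F1 of /i/ (Hz)": {"male": (300, 25), "female": (400, 30)},
    "VOT of voiceless stops (ms)": {"male": (70, 15), "female": (74, 15)},
}

for cue, by_gender in cues.items():
    print(f"{cue}: {average_divergence(by_gender):.3f} nats")
```

A larger value means that knowing the talker’s gender tells a listener more about where that cue’s values are likely to fall. With these made-up numbers, F1 comes out far more gender-informative than VOT, matching the contrast between panels A and B. The same calculation could in principle be run with dialect groups in place of gender.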

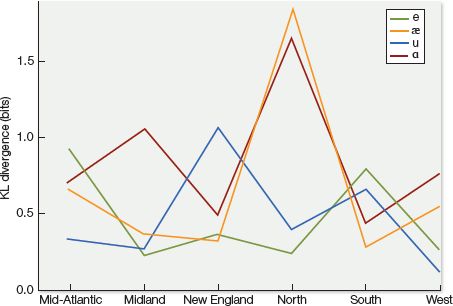

The relationship between talker variables and acoustic cues can be quite complicated: there are several potentially relevant variables, and the relationship between a cue and a certain talker variable could be specific to an individual sound. For example, Figure 7.12 shows that it would be extremely useful to know that a speech sample comes from the Northern dialect in the United States if you’re interpreting the vowel formant cues associated with the vowels in cat and Bob, but much less useful for categorizing the vowels in hit or boot. On the other hand, your interpretation of boot (but not of cat) would be significantly enhanced by knowing that a talker has a New England accent.

Figure 7.12 Degree of acoustic divergence for each vowel by dialect, based on first and second vowel formants (important cues for vowel identification). This graph shows that dialect distinguishes some vowel formant distributions more than others. In particular, the pronunciations of /æ/ as in cat and /ɑ/ as in father by Northern talkers diverge more than any other vowel/dialect combination. The five most distinct vowel/dialect combinations are shown here. (From Kleinschmidt, 2018, PsyArXiv.com/a4ktn/.)

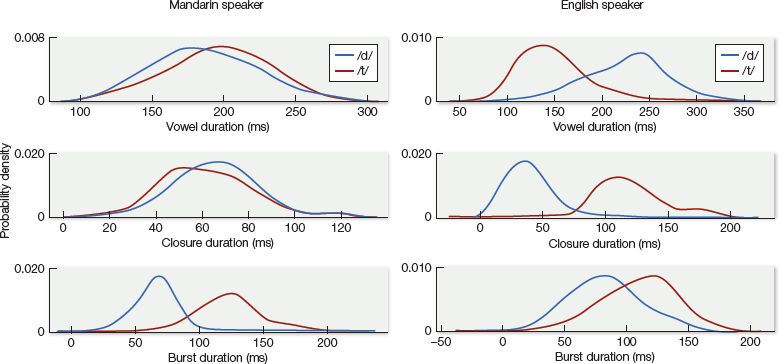

Complicating matters even further is that, as discussed in Section 7.2, a single sound dimension (such as voicing) is often defined not by a single cue but by a number of different cues that have to be appropriately weighted. To adapt to new talkers, listeners may have to learn to map a whole new set of cue values onto a category; beyond that, they may also have to learn how to re-weight the cues. This was the case when English-speaking listeners successfully adapted to Mandarin-accented speech in a study led by Xin Xie (2017). In this study, listeners had to adjust their sound categories to reflect the fact that the talker produced voiced sounds like /d/ at the ends of words with a VOT that resembled a native English /t/. Aside from VOT, English speakers use other cues to signal voicing at the ends of words. These include the length of the preceding vowel (longer in cad than in cat) and closure duration, that is, the amount of time that the articulators are fully closed (longer in cat than in cad). But the Mandarin-accented talker did not use either of these as a reliable cue for distinguishing between voiced and voiceless sounds. Instead, he relied on burst duration (the duration of the burst of noise that follows the release of the articulators when a stop is produced; see Figure 7.13). English listeners who heard the Mandarin-accented talker (rather than the same words spoken by the native English speaker) came to rely more heavily on burst duration as a cue when deciding whether a word ended in /d/ or /t/. After just 60 relevant words from this talker, listeners had picked up on the fact that burst duration was an especially informative acoustic cue, and they assigned it greater weight accordingly.

Figure 7.13 Distributions showing the reliance by a Mandarin speaker (left panel) and a native English speaker (right panel) on various acoustic cues (duration of preceding vowel, closure duration and burst duration) when pronouncing 60 English words ending in /d/ or /t/. (From Xie et al., 2017, J. Exp. Psych.: Hum. Percep. Perform. 43, 206.)
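To make the idea of cue re-weighting concrete, here is a Python sketch of an idealized listener who weights each cue by how well it separates a talker’s /d/ and /t/ productions, measured as d′ (the difference between the category means divided by their pooled standard deviation). If cues are independent and normally distributed with equal variance in both categories, an ideal observer’s weight on each standardized cue is proportional to d′. Real cues are correlated, and the durations below are invented, so this illustrates the logic of re-weighting rather than Xie and colleagues’ analysis; the function names are hypothetical.

```python
import statistics


def d_prime(voiced, voiceless):
    """Separation of /d/ and /t/ tokens on one cue, in pooled-SD units."""
    pooled_sd = ((statistics.variance(voiced) + statistics.variance(voiceless)) / 2) ** 0.5
    return abs(statistics.mean(voiced) - statistics.mean(voiceless)) / pooled_sd


def relative_weights(tokens):
    """Normalize per-cue d' values so that the weights sum to 1."""
    dps = {cue: d_prime(voiced, voiceless) for cue, (voiced, voiceless) in tokens.items()}
    total = sum(dps.values())
    return {cue: dp / total for cue, dp in dps.items()}


# Invented durations in ms (NOT data from Xie et al., 2017) for a hypothetical
# talker who marks word-final voicing mainly with burst duration.
# Each entry is (/d/ tokens, /t/ tokens); only the size of the separation
# matters for the weights, not its direction.
accented_talker = {
    "vowel duration": ([180, 195, 170, 205, 185], [175, 200, 190, 180, 195]),
    "closure duration": ([60, 75, 65, 80, 70], [70, 65, 75, 62, 78]),
    "burst duration": ([35, 40, 38, 42, 36], [15, 18, 12, 20, 16]),
}

for cue, weight in relative_weights(accented_talker).items():
    print(f"{cue:16s} relative weight: {weight:.2f}")
```

For this made-up talker, vowel and closure duration barely separate /d/ from /t/, so nearly all of the weight shifts to burst duration. A listener tracking the same statistics for a native English talker, whose vowel and closure durations do differ by category, would spread the weight more evenly.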

The role of a listener’s perceptual history

To begin my Ph.D. studies, I moved to Rochester, New York, from Ottawa, Canada. I became friends with a fellow graduate student who had moved from California. Amused by my Canadian accent, he would snicker when I said certain words like about and shout. This puzzled me, because I didn’t think I sounded any different from him—how could he notice my accent (to the point of amusement) if I couldn’t hear his? After a few months in Rochester, I went back to Ottawa to visit my family, and was shocked to discover that they had all sprouted Canadian accents in my absence. For the first time, I noticed the difference between their vowels and my Californian friend’s.

This story suggests two things about speech perception: first, that different listeners with different perceptual histories can carve up phonetic space very differently from each other, with some differences more apparent to one person than another; and second, that the same listener’s perceptual organization can change over time, in response to changes in their phonetic environment. This makes perfect sense if, as suggested earlier, listeners are constantly learning about the structure that lies beneath the surface of the speech sounds they hear. Because I was exposed (through mass media’s broadcasting of a culturally dominant accent) to Californian vowels much more than my friend was exposed to Canadian ones, I had developed a sound category that easily embraced both, whereas my friend perceived the Canadian vowels as weird outliers. And the distinctiveness of the Canadian vowels became apparent to me once they were no longer a steady part of my auditory diet.